Cilium Hands-On Lab: Journey to Mastery --- 20. Isovalent Enterprise for Cilium: Zero Trust Visibility


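- 1. Lab Environment
- 1.1 Lab Environment
- 1.2 Quiz
- 2. The Endor System
- 2.1 Deploying the Application
- 2.2 Checking Existing Policies
- 3. Cilium Policy Entities
- 3.1 Creating an allow-all Network Policy
- 3.2 Verifying the Network Policy Source in the Hubble CLI
- 3.3 Hubble Policy Verdict Metrics
- 3.4 Policy Verdict Graphs in Grafana
- 3.5 Flows to Filter
- 3.6 Quiz
- 4. Filtering DNS Traffic
- 4.1 Visualizing DNS Traffic
- 4.2 Network Policy Visualization Tool
- 4.3 Creating a New Policy
- 4.4 Saving the Policy to a File
- 4.5 Observing the Results with Hubble
- 4.6 Results in Grafana
- 4.7 Quiz
- 5. Death Star Ingress Policy
- 5.1 Death Star Ingress Policy
- 5.2 Writing the Network Policy
- 5.3 Confirming with Hubble
- 5.4 Confirming with Grafana
- 6. Tie Fighter Egress Policy
- 6.1 Tie Fighter Egress Policy
- 6.2 External toFQDN Egress Rule
- 6.3 Internal Egress Rule to the Death Star
- 6.4 Observing with Hubble
- 6.5 Observing with Grafana
- 6.6 Quiz
- 7. Removing the allow-all Policy
- 8. The Hoth System
- 8.1 Deploying the Application
- 8.2 Allowing Only Empire Ships
- 8.3 Quiz
- 9. L7 Security
- 9.1 L7 Vulnerability
- 9.2 L7 Filtering
- 9.3 Observing with Hubble
- 9.4 Observing with Grafana
- 10. Final Lab
- 10.1 The Task
- 10.2 Solution
- 10.2.1 Creating allow-all
- 10.2.2 Creating the DNS policy
- 10.2.3 Creating the coreapi policy
- 10.2.4 Creating elasticsearch
- 10.2.5 crawler
- 10.2.6 jobposting
- 10.2.7 kafka
- 10.2.8 loader
- 10.2.9 recruiter
- 10.2.10 zookeeper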

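1. Lab Environment

Access the environment:

https://isovalent.com/labs/cilium-zero-trust-visibility/

1.1 Lab Environment

In this lab, we have deployed:

- The Kube Prometheus Stack Helm chart (which includes Prometheus and Grafana)
- Isovalent Enterprise for Cilium 1.12, with Hubble and metrics enabled

Specifically, the following Cilium Helm values were used: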

hubble:
  metrics:
    enabled:
      - dns
      - drop
      - tcp
      - flow
      - icmp
      - http
      - policy:sourceContext=app|workload-name|pod|reserved-identity;destinationContext=app|workload-name|pod|dns|reserved-identity;labelsContext=source_namespace,destination_namespace
    serviceMonitor:
      enabled: true
prometheus:
  enabled: true
  serviceMonitor:
    enabled: true

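In this lab, we will use the Prometheus metrics generated by Cilium's Hubble subsystem to visualize the network policy verdicts that Cilium is issuing. Integrating these metrics into Grafana dashboards lets us take a visibility-driven approach to building Zero Trust network policies.

The Hubble metric we will use is called hubble_policy_verdicts_total. It is activated by listing the policy value in the hubble.metrics.enabled Helm setting above.

The most important setting in our lab is therefore the policy entry in the hubble.metrics.enabled key, which contains:

policy:sourceContext=app|workload-name|pod|reserved-identity;destinationContext=app|workload-name|pod|dns|reserved-identity;labelsContext=source_namespace,destination_namespace

The metric is thus activated and supplemented with source and destination contexts, which attach dynamic labels to the network policy verdict metrics generated by Hubble.

In this case, we chose to use the following labels:

- For sources: app|workload-name|pod|reserved-identity uses the short Pod name for source workloads (as in Hubble), and the reserved identity name for non-endpoint identities.
- For destinations: app|workload-name|pod|dns|reserved-identity uses similar labels, but also uses the DNS names of destinations outside the cluster when those names are known in the Cilium DNS proxy cache.
- Additional labels for the source and destination namespaces.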
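To make the structure of the policy entry easier to read, here is a minimal Python sketch that splits it into its parts. The function name parse_metric_option is hypothetical, and this is not how Cilium parses the value internally; it only illustrates that ";" separates options, "|" separates the context values (tried in order until one is non-empty), and "," separates the extra labels.

```python
def parse_metric_option(entry: str) -> dict:
    """Split a Hubble metric entry such as 'policy:opt=a|b;opt2=x,y'."""
    name, _, raw_options = entry.partition(":")
    options = {}
    for item in filter(None, raw_options.split(";")):
        key, _, value = item.partition("=")
        # Context options list fallbacks with '|'; labelsContext uses ','.
        separator = "," if key == "labelsContext" else "|"
        options[key] = value.split(separator)
    return {"name": name, "options": options}


entry = (
    "policy:sourceContext=app|workload-name|pod|reserved-identity;"
    "destinationContext=app|workload-name|pod|dns|reserved-identity;"
    "labelsContext=source_namespace,destination_namespace"
)
parsed = parse_metric_option(entry)
print(parsed["name"])
for key, values in parsed["options"].items():
    print(key, values)
```

The only difference between the source and destination contexts is the extra dns value, which is what allows external destinations to be labeled by DNS name.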

Here is an example of the metrics we will get with this configuration:

# HELP hubble_policy_verdicts_total Total number of Cilium network policy verdicts

# TYPE hubble_policy_verdicts_total counter

hubble_policy_verdicts_total{action="forwarded",destination="deathstar",destination_namespace="endor",direction="egress",match="all",source="tiefighter",source_namespace="endor"} 662

hubble_policy_verdicts_total{action="forwarded",destination="deathstar",destination_namespace="endor",direction="ingress",match="all",source="tiefighter",source_namespace="endor"} 312

hubble_policy_verdicts_total{action="forwarded",destination="kube-dns",destination_namespace="kube-system",direction="egress",match="all",source="tiefighter",source_namespace="endor"} 1963

hubble_policy_verdicts_total{action="forwarded",destination="reserved:host",destination_namespace="",direction="egress",match="all",source="tiefighter",source_namespace="endor"} 10

hubble_policy_verdicts_total{action="forwarded",destination="reserved:kube-apiserver",destination_namespace="",direction="egress",match="all",source="tiefighter",source_namespace="endor"} 5

hubble_policy_verdicts_total{action="forwarded",destination="reserved:remote-node",destination_namespace="",direction="egress",match="all",source="tiefighter",source_namespace="endor"} 10

hubble_policy_verdicts_total{action="forwarded",destination="reserved:world",destination_namespace="",direction="egress",match="all",source="tiefighter",source_namespace="endor"} 1323

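The first two lines tell us that hubble_policy_verdicts_total is a metric of type counter, whose value increases over time. Looking at the first sample (line 3), we can see that this metric has the following labels:

- The action label tells us which type of action Cilium took (forwarded, dropped, or redirected).
- The source and destination labels contain the source and destination identities, based on the sourceContext and destinationContext settings we specified in the configuration.
- source_namespace and destination_namespace provide information about the source and destination namespaces.

The match label gives the type of match that was applied, which can be:

- all for verdicts matching an all rule, as we will see in the upcoming challenges
- l3-only for verdicts matching rules with L3-only criteria
- l3-l4 (when a port and/or protocol is specified)
- l7/<protocol> (for example l7/http or l7/dns) when the verdict applies to a flow proxied by Cilium's DNS proxy or the Envoy proxy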
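As a rough illustration of how these labels sit inside one sample, the following Python sketch splits the first sample line from the example above into its metric name, labels, and value. The parse_sample helper is hypothetical and deliberately simple: it assumes label values contain no escaped quotes, which holds for the samples shown here but not for the Prometheus text format in general.

```python
import re

SAMPLE = (
    'hubble_policy_verdicts_total{action="forwarded",destination="deathstar",'
    'destination_namespace="endor",direction="egress",match="all",'
    'source="tiefighter",source_namespace="endor"} 662'
)

# One key="value" pair; assumes no escaped quotes inside the value.
LABEL_RE = re.compile(r'(\w+)="([^"]*)"')


def parse_sample(line: str):
    """Return (metric name, labels dict, value) for one exposition line."""
    head, _, value = line.rpartition(" ")
    name, _, label_part = head.partition("{")
    labels = dict(LABEL_RE.findall(label_part))
    return name, labels, float(value)


name, labels, value = parse_sample(SAMPLE)
print(name, value)
for key in sorted(labels):
    print(f"  {key} = {labels[key]}")
```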

Let's wait until everything is ready. It can take a few minutes for all components to be deployed:

root@server:~# cilium status --wait
    /¯¯\
 /¯¯\__/¯¯\    Cilium:             OK
 \__/¯¯\__/    Operator:           OK
 /¯¯\__/¯¯\    Envoy DaemonSet:    OK
 \__/¯¯\__/    Hubble Relay:       OK
    \__/       ClusterMesh:        disabled

DaemonSet              cilium             Desired: 3, Ready: 3/3, Available: 3/3
DaemonSet              cilium-envoy       Desired: 3, Ready: 3/3, Available: 3/3
Deployment             cilium-operator    Desired: 2, Ready: 2/2, Available: 2/2
Deployment             hubble-relay       Desired: 1, Ready: 1/1, Available: 1/1
Deployment             hubble-ui          Desired: 1, Ready: 1/1, Available: 1/1
Containers:            cilium             Running: 3
                       cilium-envoy       Running: 3
                       cilium-operator    Running: 2
                       clustermesh-apiserver
                       hubble-relay       Running: 1
                       hubble-ui          Running: 1
Cluster Pods:          10/10 managed by Cilium
Helm chart version:
Image versions         cilium             quay.io/isovalent/cilium:v1.17.1-cee.beta.1: 3
                       cilium-envoy       quay.io/cilium/cilium-envoy:v1.31.5-1739264036-958bef243c6c66fcfd73ca319f2eb49fff1eb2ae@sha256:fc708bd36973d306412b2e50c924cd8333de67e0167802c9b48506f9d772f521: 3
                       cilium-operator    quay.io/isovalent/operator-generic:v1.17.1-cee.beta.1: 2
                       hubble-relay       quay.io/isovalent/hubble-relay:v1.17.1-cee.beta.1: 1
                       hubble-ui          quay.io/isovalent/hubble-ui-enterprise-backend:v1.3.2: 1
                       hubble-ui          quay.io/isovalent/hubble-ui-enterprise:v1.3.2: 1
Configuration:         Unsupported feature(s) enabled: EnvoyDaemonSet (Limited). Please contact Isovalent Support for more information on how to grant an exception.

Verify that Hubble is connected to all 3 nodes:

root@server:~# hubble list nodes

NAME STATUS AGE FLOWS/S CURRENT/MAX-FLOWS

kind-control-plane Connected 4m36s 28.22 4095/4095 (100.00%)

kind-worker Connected 4m36s 21.38 4095/4095 (100.00%)

kind-worker2 Connected 4m35s 13.35 3790/4095 ( 92.55%)

1.2 Quiz

× Hubble metrics are active by default

√ Hubble metrics' source and destination labels can be tuned

√ Additional labels can be added to metrics

× The `hubble_policy_verdicts_total` metric is provided by default

2. The Endor System

2.1 Deploying the Application

Let's start by deploying a simple server/client application into the endor namespace:

kubectl apply -f endor.yaml

Check the contents of the YAML file:

root@server:~# yq endor.yaml
---
apiVersion: v1
kind: Namespace
metadata:
  name: endor
---
apiVersion: v1
kind: Service
metadata:
  namespace: endor
  name: deathstar
  labels:
    app.kubernetes.io/name: deathstar
spec:
  type: ClusterIP
  ports:
    - port: 80
      name: http
  selector:
    org: empire
    class: deathstar
---
apiVersion: apps/v1
kind: Deployment
metadata:
  namespace: endor
  name: deathstar
  labels:
    app.kubernetes.io/name: deathstar
spec:
  replicas: 2
  selector:
    matchLabels:
      org: empire
      class: deathstar
  template:
    metadata:
      labels:
        org: empire
        class: deathstar
        app.kubernetes.io/name: deathstar
    spec:
      containers:
        - name: deathstar
          image: docker.io/cilium/starwars
---
apiVersion: apps/v1
kind: Deployment
metadata:
  namespace: endor
  name: tiefighter
spec:
  replicas: 1
  selector:
    matchLabels:
      org: empire
      class: tiefighter
      app.kubernetes.io/name: tiefighter
  template:
    metadata:
      labels:
        org: empire
        class: tiefighter
        app.kubernetes.io/name: tiefighter
    spec:
      containers:
        - name: starship
          image: docker.io/tgraf/netperf
          command: ["/bin/sh"]
          args: ["-c", "while true; do curl -s -XPOST deathstar.endor.svc.cluster.local/v1/request-landing; curl -s https://disney.com; curl -s https://swapi.dev/api/starships; sleep 1; done"]

Wait for the workloads to become ready:

root@server:~# k get deployment,po -n endor

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/deathstar 2/2 2 2 59s

deployment.apps/tiefighter 1/1 1 1 59s

NAME READY STATUS RESTARTS AGE

pod/deathstar-848954d75c-sqh2q 1/1 Running 0 59s

pod/deathstar-848954d75c-x8jkq 1/1 Running 0 59s

pod/tiefighter-7564fc8d79-77gp6 1/1 Running 0 59s

Observe the application's traffic with Hubble:

root@server:~# hubble observe -f -n endor

Jun 4 03:18:24.650: endor/tiefighter-7564fc8d79-77gp6 (ID:41519) <> 10.96.0.10:53 (world) pre-xlate-fwd TRACED (UDP)

Jun 4 03:18:24.650: endor/tiefighter-7564fc8d79-77gp6 (ID:41519) <> kube-system/coredns-6f6b679f8f-gpfm8:53 (ID:23249) post-xlate-fwd TRANSLATED (UDP)

Jun 4 03:18:24.650: endor/tiefighter-7564fc8d79-77gp6:35519 (ID:41519) -> kube-system/coredns-6f6b679f8f-gpfm8:53 (ID:23249) to-overlay FORWARDED (UDP)

Jun 4 03:18:24.650: endor/tiefighter-7564fc8d79-77gp6:35519 (ID:41519) -> kube-system/coredns-6f6b679f8f-gpfm8:53 (ID:23249) to-endpoint FORWARDED (UDP)

Jun 4 03:18:24.650: endor/tiefighter-7564fc8d79-77gp6 (ID:41519) <> 10.96.0.10:53 (world) pre-xlate-fwd TRACED (UDP)

Jun 4 03:18:24.650: endor/tiefighter-7564fc8d79-77gp6 (ID:41519) <> kube-system/coredns-6f6b679f8f-gpfm8:53 (ID:23249) post-xlate-fwd TRANSLATED (UDP)

Jun 4 03:18:24.650: endor/tiefighter-7564fc8d79-77gp6:35519 (ID:41519) <> kube-system/coredns-6f6b679f8f-gpfm8 (ID:23249) pre-xlate-rev TRACED (UDP)

Jun 4 03:18:24.650: endor/tiefighter-7564fc8d79-77gp6:35519 (ID:41519) <> kube-system/coredns-6f6b679f8f-gpfm8 (ID:23249) pre-xlate-rev TRACED (UDP)

Jun 4 03:18:24.650: endor/tiefighter-7564fc8d79-77gp6:35519 (ID:41519) <- kube-system/coredns-6f6b679f8f-gpfm8:53 (ID:23249) to-overlay FORWARDED (UDP)

Jun 4 03:18:24.650: endor/tiefighter-7564fc8d79-77gp6:35519 (ID:41519) <- kube-system/coredns-6f6b679f8f-gpfm8:53 (ID:23249) to-endpoint FORWARDED (UDP)

Jun 4 03:18:24.651: kube-system/coredns-6f6b679f8f-gpfm8:53 (ID:23249) <> endor/tiefighter-7564fc8d79-77gp6 (ID:41519) pre-xlate-rev TRACED (UDP)

Jun 4 03:18:24.651: 10.96.0.10:53 (world) <> endor/tiefighter-7564fc8d79-77gp6 (ID:41519) post-xlate-rev TRANSLATED (UDP)

Jun 4 03:18:24.651: endor/tiefighter-7564fc8d79-77gp6:59400 (ID:41519) -> endor/deathstar-848954d75c-x8jkq:80 (ID:56274) to-endpoint FORWARDED (TCP Flags: SYN)

Jun 4 03:18:24.651: endor/tiefighter-7564fc8d79-77gp6:59400 (ID:41519) <- endor/deathstar-848954d75c-x8jkq:80 (ID:56274) to-overlay FORWARDED (TCP Flags: SYN, ACK)

Jun 4 03:18:24.651: kube-system/coredns-6f6b679f8f-gpfm8:53 (ID:23249) <> endor/tiefighter-7564fc8d79-77gp6 (ID:41519) pre-xlate-rev TRACED (UDP)

Jun 4 03:18:24.651: 10.96.0.10:53 (world) <> endor/tiefighter-7564fc8d79-77gp6 (ID:41519) post-xlate-rev TRANSLATED (UDP)

Jun 4 03:18:24.651: endor/tiefighter-7564fc8d79-77gp6 (ID:41519) <> 10.96.219.102:80 (world) pre-xlate-fwd TRACED (TCP)

Jun 4 03:18:24.651: endor/tiefighter-7564fc8d79-77gp6 (ID:41519) <> endor/deathstar-848954d75c-x8jkq:80 (ID:56274) post-xlate-fwd TRANSLATED (TCP)

Jun 4 03:18:24.651: endor/tiefighter-7564fc8d79-77gp6:59400 (ID:41519) -> endor/deathstar-848954d75c-x8jkq:80 (ID:56274) to-overlay FORWARDED (TCP Flags: SYN)

Jun 4 03:18:24.651: endor/tiefighter-7564fc8d79-77gp6:59400 (ID:41519) <- endor/deathstar-848954d75c-x8jkq:80 (ID:56274) to-endpoint FORWARDED (TCP Flags: SYN, ACK)

Jun 4 03:18:24.651: endor/tiefighter-7564fc8d79-77gp6:59400 (ID:41519) -> endor/deathstar-848954d75c-x8jkq:80 (ID:56274) to-overlay FORWARDED (TCP Flags: ACK)

Jun 4 03:18:24.651: endor/tiefighter-7564fc8d79-77gp6:59400 (ID:41519) -> endor/deathstar-848954d75c-x8jkq:80 (ID:56274) to-endpoint FORWARDED (TCP Flags: ACK)

Jun 4 03:18:24.651: endor/deathstar-848954d75c-x8jkq:80 (ID:56274) <> endor/tiefighter-7564fc8d79-77gp6 (ID:41519) pre-xlate-rev TRACED (TCP)

Jun 4 03:18:24.651: 10.96.219.102:80 (world) <> endor/tiefighter-7564fc8d79-77gp6 (ID:41519) post-xlate-rev TRANSLATED (TCP)

Jun 4 03:18:24.651: endor/tiefighter-7564fc8d79-77gp6:59400 (ID:41519) -> endor/deathstar-848954d75c-x8jkq:80 (ID:56274) to-overlay FORWARDED (TCP Flags: ACK, PSH)

Jun 4 03:18:24.651: endor/tiefighter-7564fc8d79-77gp6:59400 (ID:41519) -> endor/deathstar-848954d75c-x8jkq:80 (ID:56274) to-endpoint FORWARDED (TCP Flags: ACK, PSH)

Jun 4 03:18:24.651: endor/tiefighter-7564fc8d79-77gp6:59400 (ID:41519) <> endor/deathstar-848954d75c-x8jkq (ID:56274) pre-xlate-rev TRACED (TCP)

Jun 4 03:18:24.651: endor/tiefighter-7564fc8d79-77gp6:59400 (ID:41519) <- endor/deathstar-848954d75c-x8jkq:80 (ID:56274) to-overlay FORWARDED (TCP Flags: ACK, PSH)

Jun 4 03:18:24.651: endor/tiefighter-7564fc8d79-77gp6:59400 (ID:41519) <- endor/deathstar-848954d75c-x8jkq:80 (ID:56274) to-endpoint FORWARDED (TCP Flags: ACK, PSH)

Jun 4 03:18:24.651: endor/tiefighter-7564fc8d79-77gp6:59400 (ID:41519) -> endor/deathstar-848954d75c-x8jkq:80 (ID:56274) to-endpoint FORWARDED (TCP Flags: ACK, FIN)

Jun 4 03:18:24.651: endor/tiefighter-7564fc8d79-77gp6:59400 (ID:41519) -> endor/deathstar-848954d75c-x8jkq:80 (ID:56274) to-overlay FORWARDED (TCP Flags: ACK, FIN)

Jun 4 03:18:24.652: endor/tiefighter-7564fc8d79-77gp6:59400 (ID:41519) <- endor/deathstar-848954d75c-x8jkq:80 (ID:56274) to-overlay FORWARDED (TCP Flags: ACK, FIN)

Jun 4 03:18:24.652: endor/tiefighter-7564fc8d79-77gp6:59400 (ID:41519) -> endor/deathstar-848954d75c-x8jkq:80 (ID:56274) to-endpoint FORWARDED (TCP Flags: ACK)

Jun 4 03:18:24.652: endor/tiefighter-7564fc8d79-77gp6:59400 (ID:41519) <- endor/deathstar-848954d75c-x8jkq:80 (ID:56274) to-endpoint FORWARDED (TCP Flags: ACK, FIN)

Jun 4 03:18:24.652: endor/tiefighter-7564fc8d79-77gp6:59400 (ID:41519) -> endor/deathstar-848954d75c-x8jkq:80 (ID:56274) to-overlay FORWARDED (TCP Flags: ACK)

Jun 4 03:18:24.654: endor/tiefighter-7564fc8d79-77gp6 (ID:41519) <> 10.96.0.10:53 (world) pre-xlate-fwd TRACED (UDP)

Jun 4 03:18:24.654: endor/tiefighter-7564fc8d79-77gp6 (ID:41519) <> kube-system/coredns-6f6b679f8f-gpfm8:53 (ID:23249) post-xlate-fwd TRANSLATED (UDP)

Jun 4 03:18:24.654: endor/tiefighter-7564fc8d79-77gp6:37648 (ID:41519) -> kube-system/coredns-6f6b679f8f-gpfm8:53 (ID:23249) to-overlay FORWARDED (UDP)

Jun 4 03:18:24.655: endor/tiefighter-7564fc8d79-77gp6:37648 (ID:41519) -> kube-system/coredns-6f6b679f8f-gpfm8:53 (ID:23249) to-endpoint FORWARDED (UDP)

Jun 4 03:18:24.655: endor/tiefighter-7564fc8d79-77gp6 (ID:41519) <> 10.96.0.10:53 (world) pre-xlate-fwd TRACED (UDP)

Jun 4 03:18:24.655: endor/tiefighter-7564fc8d79-77gp6 (ID:41519) <> kube-system/coredns-6f6b679f8f-gpfm8:53 (ID:23249) post-xlate-fwd TRANSLATED (UDP)

Jun 4 03:18:24.655: endor/tiefighter-7564fc8d79-77gp6:37648 (ID:41519) <> kube-system/coredns-6f6b679f8f-gpfm8 (ID:23249) pre-xlate-rev TRACED (UDP)

Jun 4 03:18:24.655: endor/tiefighter-7564fc8d79-77gp6:37648 (ID:41519) <> kube-system/coredns-6f6b679f8f-gpfm8 (ID:23249) pre-xlate-rev TRACED (UDP)

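In the Hubble UI, observe the connections within the endor namespace.

2.2 Checking Existing Policies

The Hubble CLI lets you filter flows by type. In particular, the network policy decisions made by Cilium have the policy-verdict type.

Check the verdicts in the current Hubble flows: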

root@server:~# hubble observe -n endor --type policy-verdict

EVENTS LOST: HUBBLE_RING_BUFFER CPU(0) 1

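No verdicts are returned, which shows that no network policy applies to the namespace yet.

3. Cilium Policy Entities

3.1 Creating an allow-all Network Policy

Since we want to observe network policy verdicts without disrupting the application's existing traffic, we will create an allow-all network policy.

To do this, we will write a Cilium Network Policy that accepts ingress traffic from, and egress traffic to, a special entity called all.

This effectively allows all traffic, so it does not change the current situation. It does so explicitly, however, and metrics are produced whenever traffic matches these rules.

Edit the policies/allow-all.yaml network policy file: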

root@server:~# yq policies/allow-all.yaml
apiVersion: cilium.io/v2
kind: CiliumNetworkPolicy
metadata:
  namespace: endor
  name: allow-all
spec:
  endpointSelector: {}
  ingress:
    - fromEntities:
        - all
  egress:
    - toEntities:
        - all

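Apply the policy:

kubectl apply -f policies/allow-all.yaml

In the Hubble UI, you can see that all traffic is allowed.

3.2 Verifying the Network Policy Source in the Hubble CLI

Check the policy verdict flows in the endor namespace: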

root@server:~# hubble observe -n endor --type policy-verdict

Jun 4 03:24:15.441: endor/tiefighter-7564fc8d79-77gp6:48526 (ID:41519) -> endor/deathstar-848954d75c-x8jkq:80 (ID:56274) policy-verdict:all INGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:24:16.957: endor/tiefighter-7564fc8d79-77gp6:48530 (ID:41519) -> endor/deathstar-848954d75c-x8jkq:80 (ID:56274) policy-verdict:all INGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:24:18.474: endor/tiefighter-7564fc8d79-77gp6:48542 (ID:41519) -> endor/deathstar-848954d75c-x8jkq:80 (ID:56274) policy-verdict:all INGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:24:21.509: endor/tiefighter-7564fc8d79-77gp6:48546 (ID:41519) -> endor/deathstar-848954d75c-x8jkq:80 (ID:56274) policy-verdict:all INGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:24:23.025: endor/tiefighter-7564fc8d79-77gp6:48552 (ID:41519) -> endor/deathstar-848954d75c-x8jkq:80 (ID:56274) policy-verdict:all INGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:24:27.588: endor/tiefighter-7564fc8d79-77gp6:36020 (ID:41519) -> endor/deathstar-848954d75c-x8jkq:80 (ID:56274) policy-verdict:all INGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:24:29.107: endor/tiefighter-7564fc8d79-77gp6:36024 (ID:41519) -> endor/deathstar-848954d75c-x8jkq:80 (ID:56274) policy-verdict:all INGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:24:30.628: endor/tiefighter-7564fc8d79-77gp6:36038 (ID:41519) -> endor/deathstar-848954d75c-x8jkq:80 (ID:56274) policy-verdict:all INGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:24:33.663: endor/tiefighter-7564fc8d79-77gp6:40630 (ID:41519) -> endor/deathstar-848954d75c-x8jkq:80 (ID:56274) policy-verdict:all INGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:24:35.182: endor/tiefighter-7564fc8d79-77gp6:40640 (ID:41519) -> endor/deathstar-848954d75c-x8jkq:80 (ID:56274) policy-verdict:all INGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:24:41.246: endor/tiefighter-7564fc8d79-77gp6:40656 (ID:41519) -> endor/deathstar-848954d75c-x8jkq:80 (ID:56274) policy-verdict:all INGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:24:42.771: endor/tiefighter-7564fc8d79-77gp6:40658 (ID:41519) -> endor/deathstar-848954d75c-x8jkq:80 (ID:56274) policy-verdict:all INGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:24:47.326: endor/tiefighter-7564fc8d79-77gp6:47286 (ID:41519) -> endor/deathstar-848954d75c-x8jkq:80 (ID:56274) policy-verdict:all INGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:24:48.843: endor/tiefighter-7564fc8d79-77gp6:47296 (ID:41519) -> endor/deathstar-848954d75c-x8jkq:80 (ID:56274) policy-verdict:all INGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:24:50.362: endor/tiefighter-7564fc8d79-77gp6:47298 (ID:41519) -> endor/deathstar-848954d75c-x8jkq:80 (ID:56274) policy-verdict:all INGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:24:53.400: endor/tiefighter-7564fc8d79-77gp6:47310 (ID:41519) -> endor/deathstar-848954d75c-x8jkq:80 (ID:56274) policy-verdict:all INGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:25:01.001: endor/tiefighter-7564fc8d79-77gp6:48694 (ID:41519) -> endor/deathstar-848954d75c-x8jkq:80 (ID:56274) policy-verdict:all INGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:25:02.533: endor/tiefighter-7564fc8d79-77gp6:48708 (ID:41519) -> endor/deathstar-848954d75c-x8jkq:80 (ID:56274) policy-verdict:all INGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:25:04.069: endor/tiefighter-7564fc8d79-77gp6:49992 (ID:41519) -> endor/deathstar-848954d75c-x8jkq:80 (ID:56274) policy-verdict:all INGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:25:04.554: endor/tiefighter-7564fc8d79-77gp6:57790 (ID:41519) -> kube-system/coredns-6f6b679f8f-gpfm8:53 (ID:23249) policy-verdict:all EGRESS ALLOWED (UDP)

Jun 4 03:25:04.555: endor/tiefighter-7564fc8d79-77gp6:52904 (ID:41519) -> 52.58.110.120:443 (world) policy-verdict:all EGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:25:05.590: endor/tiefighter-7564fc8d79-77gp6:38580 (ID:41519) -> kube-system/coredns-6f6b679f8f-xf5pp:53 (ID:23249) policy-verdict:all EGRESS ALLOWED (UDP)

Jun 4 03:25:05.591: endor/tiefighter-7564fc8d79-77gp6:41082 (ID:41519) -> endor/deathstar-848954d75c-sqh2q:80 (ID:56274) policy-verdict:all EGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:25:05.591: endor/tiefighter-7564fc8d79-77gp6:41082 (ID:41519) -> endor/deathstar-848954d75c-sqh2q:80 (ID:56274) policy-verdict:all INGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:25:05.595: endor/tiefighter-7564fc8d79-77gp6:36434 (ID:41519) -> kube-system/coredns-6f6b679f8f-gpfm8:53 (ID:23249) policy-verdict:all EGRESS ALLOWED (UDP)

Jun 4 03:25:05.596: endor/tiefighter-7564fc8d79-77gp6:44726 (ID:41519) -> 130.211.198.204:443 (world) policy-verdict:all EGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:25:06.077: endor/tiefighter-7564fc8d79-77gp6:41752 (ID:41519) -> kube-system/coredns-6f6b679f8f-xf5pp:53 (ID:23249) policy-verdict:all EGRESS ALLOWED (UDP)

Jun 4 03:25:06.078: endor/tiefighter-7564fc8d79-77gp6:52912 (ID:41519) -> 52.58.110.120:443 (world) policy-verdict:all EGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:25:07.116: endor/tiefighter-7564fc8d79-77gp6:59195 (ID:41519) -> kube-system/coredns-6f6b679f8f-gpfm8:53 (ID:23249) policy-verdict:all EGRESS ALLOWED (UDP)

Jun 4 03:25:07.117: endor/tiefighter-7564fc8d79-77gp6:41096 (ID:41519) -> endor/deathstar-848954d75c-sqh2q:80 (ID:56274) policy-verdict:all EGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:25:07.117: endor/tiefighter-7564fc8d79-77gp6:41096 (ID:41519) -> endor/deathstar-848954d75c-sqh2q:80 (ID:56274) policy-verdict:all INGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:25:07.120: endor/tiefighter-7564fc8d79-77gp6:51200 (ID:41519) -> kube-system/coredns-6f6b679f8f-gpfm8:53 (ID:23249) policy-verdict:all EGRESS ALLOWED (UDP)

Jun 4 03:25:07.121: endor/tiefighter-7564fc8d79-77gp6:44728 (ID:41519) -> 130.211.198.204:443 (world) policy-verdict:all EGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:25:07.594: endor/tiefighter-7564fc8d79-77gp6:60081 (ID:41519) -> kube-system/coredns-6f6b679f8f-gpfm8:53 (ID:23249) policy-verdict:all EGRESS ALLOWED (UDP)

Jun 4 03:25:07.595: endor/tiefighter-7564fc8d79-77gp6:52914 (ID:41519) -> 52.58.110.120:443 (world) policy-verdict:all EGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:25:08.630: endor/tiefighter-7564fc8d79-77gp6:35796 (ID:41519) -> kube-system/coredns-6f6b679f8f-xf5pp:53 (ID:23249) policy-verdict:all EGRESS ALLOWED (UDP)

Jun 4 03:25:08.630: endor/tiefighter-7564fc8d79-77gp6:50000 (ID:41519) -> endor/deathstar-848954d75c-x8jkq:80 (ID:56274) policy-verdict:all EGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:25:08.630: endor/tiefighter-7564fc8d79-77gp6:50000 (ID:41519) -> endor/deathstar-848954d75c-x8jkq:80 (ID:56274) policy-verdict:all INGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:25:08.634: endor/tiefighter-7564fc8d79-77gp6:33977 (ID:41519) -> kube-system/coredns-6f6b679f8f-gpfm8:53 (ID:23249) policy-verdict:all EGRESS ALLOWED (UDP)

Jun 4 03:25:08.634: endor/tiefighter-7564fc8d79-77gp6:44742 (ID:41519) -> 130.211.198.204:443 (world) policy-verdict:all EGRESS ALLOWED (TCP Flags: SYN)

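This should now list multiple verdicts, all marked ALLOWED.

Let's verify that these flows are indeed associated with the allow-all Network Policy.

For example, let's use Hubble CLI filters to find the last flow between the Tie Fighter and Death Star workloads: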

root@server:~# hubble observe --type policy-verdict \
  --from-pod endor/tiefighter \
  --to-pod endor/deathstar \
  --last 1

Jun 4 03:25:45.043: endor/tiefighter-7564fc8d79-77gp6:60116 (ID:41519) -> endor/deathstar-848954d75c-x8jkq:80 (ID:56274) policy-verdict:all EGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:25:45.043: endor/tiefighter-7564fc8d79-77gp6:60116 (ID:41519) -> endor/deathstar-848954d75c-x8jkq:80 (ID:56274) policy-verdict:all INGRESS ALLOWED (TCP Flags: SYN)

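You might be surprised to see several lines for this command. This is because the --last 1 flag returns the last flow matching the request on each node in the cluster.

Let's inspect these flows in JSON format by adding -o jsonpb (the output is piped through jq -c, which prints each flow compactly on one line and colorizes it in a terminal):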

root@server:~# hubble observe --type policy-verdict \
  --from-pod endor/tiefighter \
  --to-pod endor/deathstar \
  --last 1 -o jsonpb | jq -c

{"flow":{"time":"2025-06-04T03:26:27.568268200Z","uuid":"45c306aa-a692-4ef0-87c0-d19ecf9a1f33","verdict":"FORWARDED","ethernet":{"source":"ce:5b:6d:10:d8:e7","destination":"be:f8:e5:2b:7f:a3"},"IP":{"source":"10.0.2.29","destination":"10.0.0.174","ipVersion":"IPv4"},"l4":{"TCP":{"source_port":59850,"destination_port":80,"flags":{"SYN":true}}},"source":{"ID":1413,"identity":41519,"cluster_name":"default","namespace":"endor","labels":["k8s:app.kubernetes.io/name=tiefighter","k8s:class=tiefighter","k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=endor","k8s:io.cilium.k8s.policy.cluster=default","k8s:io.cilium.k8s.policy.serviceaccount=default","k8s:io.kubernetes.pod.namespace=endor","k8s:org=empire"],"pod_name":"tiefighter-7564fc8d79-77gp6","workloads":[{"name":"tiefighter","kind":"Deployment"}]},"destination":{"identity":56274,"cluster_name":"default","namespace":"endor","labels":["k8s:app.kubernetes.io/name=deathstar","k8s:class=deathstar","k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=endor","k8s:io.cilium.k8s.policy.cluster=default","k8s:io.cilium.k8s.policy.serviceaccount=default","k8s:io.kubernetes.pod.namespace=endor","k8s:org=empire"],"pod_name":"deathstar-848954d75c-x8jkq"},"Type":"L3_L4","node_name":"kind-worker2","node_labels":["beta.kubernetes.io/arch=amd64","beta.kubernetes.io/os=linux","kubernetes.io/arch=amd64","kubernetes.io/hostname=kind-worker2","kubernetes.io/os=linux"],"event_type":{"type":5},"traffic_direction":"EGRESS","policy_match_type":4,"is_reply":false,"Summary":"TCP Flags: SYN","egress_allowed_by":[{"name":"allow-all","namespace":"endor","labels":["k8s:io.cilium.k8s.policy.derived-from=CiliumNetworkPolicy","k8s:io.cilium.k8s.policy.name=allow-all","k8s:io.cilium.k8s.policy.namespace=endor","k8s:io.cilium.k8s.policy.uid=1d3aa587-ede8-4214-87e3-e2a6448f1da0"],"revision":"2"}]},"node_name":"kind-worker2","time":"2025-06-04T03:26:27.568268200Z"}

{"flow":{"time":"2025-06-04T03:26:27.568310287Z","uuid":"10d33909-ad0e-44fe-9026-b1f8515f2980","verdict":"FORWARDED","ethernet":{"source":"26:2a:17:31:23:9b","destination":"fe:04:44:ad:47:7b"},"IP":{"source":"10.0.2.29","destination":"10.0.0.174","ipVersion":"IPv4"},"l4":{"TCP":{"source_port":59850,"destination_port":80,"flags":{"SYN":true}}},"source":{"identity":41519,"cluster_name":"default","namespace":"endor","labels":["k8s:app.kubernetes.io/name=tiefighter","k8s:class=tiefighter","k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=endor","k8s:io.cilium.k8s.policy.cluster=default","k8s:io.cilium.k8s.policy.serviceaccount=default","k8s:io.kubernetes.pod.namespace=endor","k8s:org=empire"],"pod_name":"tiefighter-7564fc8d79-77gp6"},"destination":{"ID":49,"identity":56274,"cluster_name":"default","namespace":"endor","labels":["k8s:app.kubernetes.io/name=deathstar","k8s:class=deathstar","k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=endor","k8s:io.cilium.k8s.policy.cluster=default","k8s:io.cilium.k8s.policy.serviceaccount=default","k8s:io.kubernetes.pod.namespace=endor","k8s:org=empire"],"pod_name":"deathstar-848954d75c-x8jkq","workloads":[{"name":"deathstar","kind":"Deployment"}]},"Type":"L3_L4","node_name":"kind-worker","node_labels":["beta.kubernetes.io/arch=amd64","beta.kubernetes.io/os=linux","kubernetes.io/arch=amd64","kubernetes.io/hostname=kind-worker","kubernetes.io/os=linux"],"event_type":{"type":5},"traffic_direction":"INGRESS","policy_match_type":4,"is_reply":false,"Summary":"TCP Flags: SYN","ingress_allowed_by":[{"name":"allow-all","namespace":"endor","labels":["k8s:io.cilium.k8s.policy.derived-from=CiliumNetworkPolicy","k8s:io.cilium.k8s.policy.name=allow-all","k8s:io.cilium.k8s.policy.namespace=endor","k8s:io.cilium.k8s.policy.uid=1d3aa587-ede8-4214-87e3-e2a6448f1da0"],"revision":"2"}]},"node_name":"kind-worker","time":"2025-06-04T03:26:27.568310287Z"}

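You can see that the JSON flows indicate the direction (for example "traffic_direction": "EGRESS" or "traffic_direction": "INGRESS"). In addition, egress flows have a flow.egress_allowed_by field, while ingress flows have the corresponding flow.ingress_allowed_by field. These fields are arrays because several policies may allow the same flow.

Let's check the last ingress flow for Tie Fighter to Death Star communication. We will use jq to display the ingress_allowed_by section of each JSON flow: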

root@server:~# hubble observe --type policy-verdict \
  --from-pod endor/tiefighter \
  --to-pod endor/deathstar --last 1 -o jsonpb \
  | jq '.flow | select(.ingress_allowed_by).ingress_allowed_by'
[
  {
    "name": "allow-all",
    "namespace": "endor",
    "labels": [
      "k8s:io.cilium.k8s.policy.derived-from=CiliumNetworkPolicy",
      "k8s:io.cilium.k8s.policy.name=allow-all",
      "k8s:io.cilium.k8s.policy.namespace=endor",
      "k8s:io.cilium.k8s.policy.uid=1d3aa587-ede8-4214-87e3-e2a6448f1da0"
    ],
    "revision": "2"
  }
]

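We can see that the only network policy allowing this flow is allow-all in the endor namespace, which is the Network Policy we just applied.

Try the same request for the egress policy verdicts from the Tie Fighter and observe the result: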

root@server:~# hubble observe --type policy-verdict \
  --from-pod endor/tiefighter \
  --last 1 -o jsonpb \
  | jq '.flow | select(.egress_allowed_by).egress_allowed_by'
[
  {
    "name": "allow-all",
    "namespace": "endor",
    "labels": [
      "k8s:io.cilium.k8s.policy.derived-from=CiliumNetworkPolicy",
      "k8s:io.cilium.k8s.policy.name=allow-all",
      "k8s:io.cilium.k8s.policy.namespace=endor",
      "k8s:io.cilium.k8s.policy.uid=1d3aa587-ede8-4214-87e3-e2a6448f1da0"
    ],
    "revision": "2"
  }
]
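For readers who prefer a script to a jq filter, here is a small Python sketch of the same idea: read jsonpb lines and collect the policies listed in ingress_allowed_by or egress_allowed_by. The two input lines are heavily abbreviated versions of the flows shown above (only the fields used here are kept), and the allowed_by helper is a hypothetical name, not part of any Hubble tooling.

```python
import json

# Abbreviated flows: only fields that appear in the jsonpb output above.
LINES = [
    '{"flow":{"traffic_direction":"EGRESS","egress_allowed_by":'
    '[{"name":"allow-all","namespace":"endor"}]}}',
    '{"flow":{"traffic_direction":"INGRESS","ingress_allowed_by":'
    '[{"name":"allow-all","namespace":"endor"}]}}',
]


def allowed_by(lines, direction):
    """Collect 'namespace/name' of policies that allowed flows in one direction."""
    field = f"{direction.lower()}_allowed_by"
    found = set()
    for line in lines:
        flow = json.loads(line).get("flow", {})
        # The field is an array because several policies can allow one flow.
        for policy in flow.get(field, []):
            found.add(f'{policy["namespace"]}/{policy["name"]}')
    return sorted(found)


print(allowed_by(LINES, "ingress"))
print(allowed_by(LINES, "egress"))
```

In practice the lines would come from the output of hubble observe -o jsonpb rather than from a hard-coded list.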

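3.3 Hubble Policy Verdict Metrics

In addition to flows, Hubble can also generate Prometheus metrics. In this lab, we activated these metrics, as we saw in the first challenge.

Let's look at the raw metrics from the hubble-metrics service.

We can use one of the Tie Fighter Pods to view the raw Prometheus metrics: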

root@server:~# kubectl -n endor exec -ti deployments/tiefighter -- \
  curl http://hubble-metrics.kube-system.svc.cluster.local:9965/metrics \
  | grep hubble_policy_verdicts_total

# HELP hubble_policy_verdicts_total Total number of Cilium network policy verdicts

# TYPE hubble_policy_verdicts_total counter

hubble_policy_verdicts_total{action="forwarded",destination="deathstar",destination_namespace="endor",direction="ingress",match="all",source="tiefighter",source_namespace="endor"} 139

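You can see the metric series with the following labels:

| Key | Meaning |
|---|---|
| action | Action taken |
| destination | Destination |
| destination_namespace | Destination namespace |
| direction | Direction |
| match | Match type |
| source | Source |
| source_namespace | Source namespace |

The number at the end of each line is the counter's value (that is, the number of events counted for each set of labels).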
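Because the value is a counter, a single reading says little on its own; what matters is how fast it grows between two scrapes. The sketch below shows that arithmetic in Python. The first value (139) is taken from the output above, while the second value and the 30-second interval are hypothetical, and counter_rate is an illustrative helper that only approximates what a Prometheus rate query computes.

```python
def counter_rate(previous: float, current: float, seconds: float) -> float:
    """Per-second increase of a counter between two scrapes."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    # A counter only goes up; a lower value means it restarted from zero.
    increase = current - previous if current >= previous else current
    return increase / seconds


first_scrape = 139   # value shown in the output above
second_scrape = 169  # hypothetical value 30 seconds later
print(counter_rate(first_scrape, second_scrape, 30))
```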

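3.4 Policy Verdict Graphs in Grafana

In this lab, we set up a Grafana server with a data source pointing to Prometheus, and imported the Cilium Policy Verdicts Dashboard to visualize metrics related to network policies.

These graphs make use of the hubble_policy_verdicts_total metric we saw earlier, which reports the network policy verdicts that were applied.

The current view is filtered on the endor namespace, so that you can see:

- on the left, ingress traffic to the endor namespace
- on the right, egress traffic from the endor namespace

The yellow area represents network policy verdicts of type "all". Since we applied the allow-all policy, all traffic explicitly allowed by a network policy now shows up in this metric.

The same area shape is shown in green in the bottom graph, indicating that this traffic is being forwarded.

Now that we have this information, our goal is to identify these flows and write an explicit network policy for each of them. Once that is done, we will be able to remove the allow-all policy from the namespace.

3.5 Flows to Filter

In the table below the Grafana graphs, you will see the identities observed as sources and destinations of the corresponding ingress and egress traffic:

- ingress from tiefighter to deathstar
- egress to kube-dns from tiefighter
- egress to deathstar from tiefighter
- egress to the world reserved identity from tiefighter

Run the following Hubble command to identify the 3 flows matching the data shown in Grafana: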

root@server:~# hubble observe -n endor --type policy-verdict

Jun 4 03:38:53.772: endor/tiefighter-7564fc8d79-77gp6:42436 (ID:41519) -> endor/deathstar-848954d75c-x8jkq:80 (ID:56274) policy-verdict:all INGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:38:59.854: endor/tiefighter-7564fc8d79-77gp6:42444 (ID:41519) -> endor/deathstar-848954d75c-x8jkq:80 (ID:56274) policy-verdict:all INGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:39:04.414: endor/tiefighter-7564fc8d79-77gp6:55050 (ID:41519) -> endor/deathstar-848954d75c-x8jkq:80 (ID:56274) policy-verdict:all INGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:39:07.452: endor/tiefighter-7564fc8d79-77gp6:55066 (ID:41519) -> endor/deathstar-848954d75c-x8jkq:80 (ID:56274) policy-verdict:all INGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:39:08.969: endor/tiefighter-7564fc8d79-77gp6:55068 (ID:41519) -> endor/deathstar-848954d75c-x8jkq:80 (ID:56274) policy-verdict:all INGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:39:10.485: endor/tiefighter-7564fc8d79-77gp6:55070 (ID:41519) -> endor/deathstar-848954d75c-x8jkq:80 (ID:56274) policy-verdict:all INGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:39:12.003: endor/tiefighter-7564fc8d79-77gp6:55072 (ID:41519) -> endor/deathstar-848954d75c-x8jkq:80 (ID:56274) policy-verdict:all INGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:39:13.520: endor/tiefighter-7564fc8d79-77gp6:45290 (ID:41519) -> endor/deathstar-848954d75c-x8jkq:80 (ID:56274) policy-verdict:all INGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:39:15.035: endor/tiefighter-7564fc8d79-77gp6:45304 (ID:41519) -> endor/deathstar-848954d75c-x8jkq:80 (ID:56274) policy-verdict:all INGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:39:16.560: endor/tiefighter-7564fc8d79-77gp6:45308 (ID:41519) -> endor/deathstar-848954d75c-x8jkq:80 (ID:56274) policy-verdict:all INGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:39:18.080: endor/tiefighter-7564fc8d79-77gp6:45318 (ID:41519) -> endor/deathstar-848954d75c-x8jkq:80 (ID:56274) policy-verdict:all INGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:39:21.136: endor/tiefighter-7564fc8d79-77gp6:45328 (ID:41519) -> endor/deathstar-848954d75c-x8jkq:80 (ID:56274) policy-verdict:all INGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:39:24.176: endor/tiefighter-7564fc8d79-77gp6:51292 (ID:41519) -> endor/deathstar-848954d75c-x8jkq:80 (ID:56274) policy-verdict:all INGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:39:27.215: endor/tiefighter-7564fc8d79-77gp6:51308 (ID:41519) -> endor/deathstar-848954d75c-x8jkq:80 (ID:56274) policy-verdict:all INGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:39:30.260: endor/tiefighter-7564fc8d79-77gp6:39291 (ID:41519) -> kube-system/coredns-6f6b679f8f-gpfm8:53 (ID:23249) policy-verdict:all EGRESS ALLOWED (UDP)

Jun 4 03:39:30.260: endor/tiefighter-7564fc8d79-77gp6:51558 (ID:41519) -> endor/deathstar-848954d75c-sqh2q:80 (ID:56274) policy-verdict:all EGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:39:30.260: endor/tiefighter-7564fc8d79-77gp6:51558 (ID:41519) -> endor/deathstar-848954d75c-sqh2q:80 (ID:56274) policy-verdict:all INGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:39:30.267: endor/tiefighter-7564fc8d79-77gp6:43300 (ID:41519) -> 130.211.198.204:443 (world) policy-verdict:all EGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:39:30.267: endor/tiefighter-7564fc8d79-77gp6:35145 (ID:41519) -> kube-system/coredns-6f6b679f8f-xf5pp:53 (ID:23249) policy-verdict:all EGRESS ALLOWED (UDP)

Jun 4 03:39:30.742: endor/tiefighter-7564fc8d79-77gp6:52467 (ID:41519) -> kube-system/coredns-6f6b679f8f-gpfm8:53 (ID:23249) policy-verdict:all EGRESS ALLOWED (UDP)

Jun 4 03:39:30.743: endor/tiefighter-7564fc8d79-77gp6:43292 (ID:41519) -> 52.58.110.120:443 (world) policy-verdict:all EGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:39:31.781: endor/tiefighter-7564fc8d79-77gp6:44327 (ID:41519) -> kube-system/coredns-6f6b679f8f-gpfm8:53 (ID:23249) policy-verdict:all EGRESS ALLOWED (UDP)

Jun 4 03:39:31.782: endor/tiefighter-7564fc8d79-77gp6:51316 (ID:41519) -> endor/deathstar-848954d75c-x8jkq:80 (ID:56274) policy-verdict:all EGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:39:31.782: endor/tiefighter-7564fc8d79-77gp6:51316 (ID:41519) -> endor/deathstar-848954d75c-x8jkq:80 (ID:56274) policy-verdict:all INGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:39:31.785: endor/tiefighter-7564fc8d79-77gp6:47827 (ID:41519) -> kube-system/coredns-6f6b679f8f-xf5pp:53 (ID:23249) policy-verdict:all EGRESS ALLOWED (UDP)

Jun 4 03:39:31.786: endor/tiefighter-7564fc8d79-77gp6:43306 (ID:41519) -> 130.211.198.204:443 (world) policy-verdict:all EGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:39:32.260: endor/tiefighter-7564fc8d79-77gp6:54956 (ID:41519) -> kube-system/coredns-6f6b679f8f-xf5pp:53 (ID:23249) policy-verdict:all EGRESS ALLOWED (UDP)

Jun 4 03:39:32.261: endor/tiefighter-7564fc8d79-77gp6:43298 (ID:41519) -> 52.58.110.120:443 (world) policy-verdict:all EGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:39:33.297: endor/tiefighter-7564fc8d79-77gp6:60562 (ID:41519) -> kube-system/coredns-6f6b679f8f-gpfm8:53 (ID:23249) policy-verdict:all EGRESS ALLOWED (UDP)

Jun 4 03:39:33.298: endor/tiefighter-7564fc8d79-77gp6:51566 (ID:41519) -> endor/deathstar-848954d75c-sqh2q:80 (ID:56274) policy-verdict:all EGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:39:33.298: endor/tiefighter-7564fc8d79-77gp6:51566 (ID:41519) -> endor/deathstar-848954d75c-sqh2q:80 (ID:56274) policy-verdict:all INGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:39:33.301: endor/tiefighter-7564fc8d79-77gp6:38662 (ID:41519) -> kube-system/coredns-6f6b679f8f-gpfm8:53 (ID:23249) policy-verdict:all EGRESS ALLOWED (UDP)

Jun 4 03:39:33.303: endor/tiefighter-7564fc8d79-77gp6:43308 (ID:41519) -> 130.211.198.204:443 (world) policy-verdict:all EGRESS ALLOWED (TCP Flags: SYN)

Jun 4 03:39:33.778: endor/tiefighter-7564fc8d79-77gp6:50043 (ID:41519) -> kube-system/coredns-6f6b679f8f-gpfm8:53 (ID:23249) policy-verdict:all EGRESS ALLOWED (UDP)

Jun 4 03:39:33.779: endor/tiefighter-7564fc8d79-77gp6:45610 (ID:41519) -> 52.58.110.120:443 (world) policy-verdict:all EGRESS ALLOWED (TCP Flags: SYN)

EVENTS LOST: HUBBLE_RING_BUFFER CPU(0) 1

- DNS (UDP/53) flows from tiefighter to kube-dns

- HTTP (TCP/80) flows from tiefighter to deathstar

- HTTPS (TCP/443) flows from tiefighter to world
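The three flow categories above can be derived mechanically from the compact `hubble observe` output. The following is a minimal sketch, assuming the line layout shown in the log excerpt above; the regular expression, the `summarize_egress` helper and the `SAMPLE` list are illustrative names, not part of Hubble.

```python
import re
from collections import Counter

# Assumed layout of a compact policy-verdict line, inferred from the output above.
LINE_RE = re.compile(
    r"^\w+ +\d+ [\d:.]+: "
    r"(?P<src>\S+?):(?P<sport>\d+) \((?P<src_id>[^)]*)\) "
    r"(?:->|<>|<-) "
    r"(?P<dst>\S+?):(?P<dport>\d+) \((?P<dst_id>[^)]*)\) "
    r"policy-verdict:(?P<match>\S+) "
    r"(?P<direction>INGRESS|EGRESS) (?P<verdict>\w+) "
    r"\((?P<proto>\w+)"
)

def summarize_egress(lines):
    """Count allowed egress verdicts per (protocol, destination port)."""
    counts = Counter()
    for line in lines:
        m = LINE_RE.match(line.strip())
        if m is None:
            continue  # e.g. "EVENTS LOST" notices
        if m["direction"] != "EGRESS" or m["verdict"] != "ALLOWED":
            continue
        counts[(m["proto"], int(m["dport"]))] += 1
    return counts

# A few lines copied from the excerpt above.
SAMPLE = [
    "Jun 4 03:39:30.260: endor/tiefighter-7564fc8d79-77gp6:39291 (ID:41519) -> kube-system/coredns-6f6b679f8f-gpfm8:53 (ID:23249) policy-verdict:all EGRESS ALLOWED (UDP)",
    "Jun 4 03:39:30.260: endor/tiefighter-7564fc8d79-77gp6:51558 (ID:41519) -> endor/deathstar-848954d75c-sqh2q:80 (ID:56274) policy-verdict:all EGRESS ALLOWED (TCP Flags: SYN)",
    "Jun 4 03:39:30.260: endor/tiefighter-7564fc8d79-77gp6:51558 (ID:41519) -> endor/deathstar-848954d75c-sqh2q:80 (ID:56274) policy-verdict:all INGRESS ALLOWED (TCP Flags: SYN)",
    "Jun 4 03:39:30.267: endor/tiefighter-7564fc8d79-77gp6:43300 (ID:41519) -> 130.211.198.204:443 (world) policy-verdict:all EGRESS ALLOWED (TCP Flags: SYN)",
    "EVENTS LOST: HUBBLE_RING_BUFFER CPU(0) 1",
]

for (proto, port), n in sorted(summarize_egress(SAMPLE).items()):
    print(f"{proto}/{port}: {n} flow(s)")
```

Only EGRESS lines are counted so that a tiefighter-to-deathstar connection, which is reported once per direction, is not counted twice.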

3.6 Quiz

√ All traffic in a namespace is allowed by default

√ Adding an `allow-all` policy provides observability

× Adding an `allow-all` policy can block existing traffic

× The Policy Verdicts Grafana dashboard comes out-of-the-box with Cilium

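4. Filtering DNS Traffic

4.1 Visualizing DNS Traffic

Let's start with the basics: access to Kube DNS.

By default, Kube DNS is hidden in the Connections view. In the "Visual filters" section on the side of the Hubble Connections UI, select the "Kube-DNS:53 pod" option.

You will now see the Kube DNS component in the service map, and DNS flows in the flow table below it.

Before writing a policy, let's verify that this egress traffic is currently allowed only by the endor/allow-all network policy.

root@server:~# hubble observe --type policy-verdict \
    --to-port 53 --last 1 -o jsonpb \
    | jq '.flow | select(.egress_allowed_by).egress_allowed_by'
[
  {
    "name": "allow-all",
    "namespace": "endor",
    "labels": [
      "k8s:io.cilium.k8s.policy.derived-from=CiliumNetworkPolicy",
      "k8s:io.cilium.k8s.policy.name=allow-all",
      "k8s:io.cilium.k8s.policy.namespace=endor",
      "k8s:io.cilium.k8s.policy.uid=1d3aa587-ede8-4214-87e3-e2a6448f1da0"
    ],
    "revision": "2"
  }
]

This returns only the endor/allow-all network policy as the matching policy, which is what we expect because no other policy has been applied yet. Now let's apply a network policy that explicitly allows this traffic.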
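For readers less familiar with jq, here is a rough Python equivalent of the filter `.flow | select(.egress_allowed_by).egress_allowed_by`, applied to one JSON line. It is a sketch under assumptions: the `SAMPLE` event is trimmed down to the fields shown in the output above, and `allowed_by` and `policy_names` are hypothetical helper names.

```python
import json

def allowed_by(event_line, direction="egress"):
    """Return the `<direction>_allowed_by` list of one JSON event, or None.

    None stands for "jq's select() would emit nothing": the field is
    absent, null or false.
    """
    flow = json.loads(event_line).get("flow") or {}
    policies = flow.get(f"{direction}_allowed_by")
    if policies is None or policies is False:
        return None
    return policies

def policy_names(policies):
    """Render each policy reference as namespace/name."""
    return [f"{p['namespace']}/{p['name']}" for p in policies]

# Trimmed-down sample event; real events carry many more fields.
SAMPLE = json.dumps({
    "flow": {
        "egress_allowed_by": [
            {"name": "allow-all", "namespace": "endor", "revision": "2"}
        ]
    }
})

print(policy_names(allowed_by(SAMPLE)))
```

Calling `allowed_by(SAMPLE, "ingress")` returns None, which matches the way the jq filter silently skips flows that carry no ingress verdict source.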

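4.2 Network Policy Visualization Tool

To write the DNS policy, go to the Policies menu item.

This interface lets you visualize the network policies in the cluster, modify them, and create new ones. Policies created in this interface are not applied to the cluster automatically. Instead, you copy the generated YAML manifest and apply it with the method of your choice (kubectl apply, GitOps, etc.).

Since we already have one network policy, it is shown in the list at the bottom left. Click it to see it represented in the diagram. All lines are green because all traffic is allowed.

At the bottom right you can see a list of flows, all marked forwarded, corresponding to the traffic Hubble knows about for this namespace.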

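4.3 Creating a New Policy

Now let's create a new policy for DNS traffic.

We could allow this traffic by adding rules to the existing allow-all network policy. Instead, let's create a new policy so that the scopes stay clearly separated.

Click the + New icon at the top left of the editor pane.

If you get an alert about the current unsaved network policy, click Create new empty policy.

Next, click the 📝 icon in the center box, rename the policy to dns, and click the Save button.

On the right-hand side (egress), in the In Cluster box, hover over the Kubernetes DNS section and click the Allow rule button in the popup. This adds a complete block to the YAML manifest, allowing DNS (UDP/53) traffic to the kube-system/kube-dns pods.

Hover over the same Kubernetes DNS section again and toggle the "DNS proxy" option.

The new DNS rule has been added to the policy:

apiVersion: cilium.io/v2
kind: CiliumNetworkPolicy
metadata:
  name: dns
  namespace: endor
spec:
  endpointSelector: {}
  egress:
    - toEndpoints:
        - matchLabels:
            io.kubernetes.pod.namespace: kube-system
            k8s-app: kube-dns
      toPorts:
        - ports:
            - port: "53"
              protocol: UDP
          rules:
            dns:
              - matchPattern: "*"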

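4.4 Saving the Policy to a File

Save the content to policies/dns.yaml and apply it:

kubectl apply -f policies/dns.yaml

4.5 Observing the Result with Hubble

Run the Hubble CLI to watch the traffic in the endor namespace:

hubble observe -n endor -f

The output now includes DNS query details.

You will also see HTTPS connections established to DNS domain names.

Go back to the Connections tab. The "world" identity in the service map and in the flows has been replaced by the domain names used by the client application: disney.com and swapi.dev.

4.6 Results in Grafana

In Grafana, new colors start to appear in the Egress graphs.

The top-right graph, which shows egress policy verdicts by match type, now has green and purple areas. They represent the L3/L4 and L7 DNS traffic that is now explicitly allowed.

4.7 Quiz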

√ Adding a DNS Network Policy provides observability

× Hubble always displays DNS names for all flows

× DNS traffic with the Network Policy in place is only L3/L4

√ DNS traffic with the Network Policy in place uses an L7 proxy

5. Death Star Ingress Policy

5.1 Death Star Ingress Policy

Now that DNS traffic is taken care of, let's focus on allowing ingress traffic from the Tie Fighter to the Death Star.

Let's verify that the only policy applied to traffic directed at the Death Star is endor/allow-all:

root@server:~# hubble observe --type policy-verdict \
    --to-pod endor/deathstar --last 1 -o jsonpb | \
    jq '.flow | select(.ingress_allowed_by).ingress_allowed_by'
[
  {
    "name": "allow-all",
    "namespace": "endor",
    "labels": [
      "k8s:io.cilium.k8s.policy.derived-from=CiliumNetworkPolicy",
      "k8s:io.cilium.k8s.policy.name=allow-all",
      "k8s:io.cilium.k8s.policy.namespace=endor",
      "k8s:io.cilium.k8s.policy.uid=6c3c05c2-9945-41d0-9585-e605295d9a9d"
    ],
    "revision": "3"
  }
]

Only endor/allow-all is listed for now, which is expected.

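5.2 Writing the Network Policy

Click the deathstar component to select it. This filters the flows at the bottom and shows a filter in the top pane. Note the labels listed in the box. To target this identity, we will use a set of two unique labels:

- org=empire

- class=deathstar

With this filter set, click the Policies tab in Hubble.

Create a new policy by clicking the + New icon at the top left of the editor pane, then click the 📝 icon in the center box. Rename the policy to deathstar and use the org=empire, class=deathstar endpoint selector so that it applies to the Death Star pods.

Note: type org=empire and press Enter first, then type class=deathstar.

At the bottom right of the screen, find the forwarded ingress flow from tiefighter to deathstar. We want to add a rule for this type of flow, so click the flow and select "Add rule to policy".

Copy the YAML content to policies/deathstar.yaml:

apiVersion: cilium.io/v2
kind: CiliumNetworkPolicy
metadata:
  name: deathstar
  namespace: endor
spec:
  endpointSelector:
    matchLabels:
      class: deathstar
      org: empire
  ingress:
    - fromEndpoints:
        - matchLabels:
            k8s:app.kubernetes.io/name: tiefighter
            k8s:class: tiefighter
            k8s:io.kubernetes.pod.namespace: endor
            k8s:org: empire
      toPorts:
        - ports:
            - port: "80"

Apply the policy:

kubectl apply -f policies/deathstar.yaml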

5.3 Confirming with Hubble

Use the Hubble CLI to check the verdicts:

root@server:~# hubble observe --type policy-verdict \
    --to-pod endor/deathstar --last 1 -o jsonpb \
    | jq '.flow | select(.ingress_allowed_by).ingress_allowed_by'
[
  {
    "name": "deathstar",
    "namespace": "endor",
    "labels": [
      "k8s:io.cilium.k8s.policy.derived-from=CiliumNetworkPolicy",
      "k8s:io.cilium.k8s.policy.name=deathstar",
      "k8s:io.cilium.k8s.policy.namespace=endor",
      "k8s:io.cilium.k8s.policy.uid=a52ee190-a7b5-43de-b827-a52dfd1d6667"
    ],
    "revision": "4"
  }
]

The last match should be on endor/deathstar rather than endor/allow-all. If you run this command several times, mentions of endor/allow-all eventually disappear entirely.

5.4 Confirming with Grafana

You will see the entire Ingress by Match Type graph area turn green, and the flows in the Ingress table are now listed as l3-l4 instead of all. This corresponds to the ingress traffic of the deathstar component.

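6. Tie Fighter Egress Policy

6.1 Tie Fighter Egress Policy

To write the network policy for Tie Fighter egress traffic, we will use an approach similar to the one used for the Death Star ingress policy.

First, verify that the only policy applied to this traffic is still endor/allow-all:

root@server:~# hubble observe --type policy-verdict \
    --from-pod endor/tiefighter --last 1 -o jsonpb | \
    jq '.flow | select(.egress_allowed_by).egress_allowed_by'
[
  {
    "name": "allow-all",
    "namespace": "endor",
    "labels": [
      "k8s:io.cilium.k8s.policy.derived-from=CiliumNetworkPolicy",
      "k8s:io.cilium.k8s.policy.name=allow-all",
      "k8s:io.cilium.k8s.policy.namespace=endor",
      "k8s:io.cilium.k8s.policy.uid=6c3c05c2-9945-41d0-9585-e605295d9a9d"
    ],
    "revision": "4"
  }
]

Click the tiefighter component to select it.

Note the labels listed in the box. To target this identity, we will use a set of two unique labels:

- org=empire

- class=tiefighter

With this filter set, click the Policies tab in Hubble.

Create a new policy by clicking the + New icon at the top left of the editor pane, then click the 📝 icon in the center box. Rename the policy to tiefighter and use the org=empire, class=tiefighter endpoint selector so that it applies to the Tie Fighter pods.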

6.2 External toFQDNs Egress Rules

At the bottom right of the screen, find the two forwarded HTTPS (TCP/443) flows to the disney.com and swapi.dev domain names.

Click each of them and add it to the policy.

Make sure both domain names end up in the egress rules.

6.3 Internal Egress Rule to the Death Star

Next, we need to add an egress rule for the traffic from the tiefighter component to the deathstar.

In the flows, find the egress flow from tiefighter to deathstar and add the rule to the network policy. Make sure you are really adding the egress flow by checking the "Traffic direction" value in Flow Details.

Click Add rule to policy.

Copy the YAML content to policies/tiefighter.yaml:

apiVersion: cilium.io/v2
kind: CiliumNetworkPolicy
metadata:
  name: tiefighter
  namespace: endor
spec:
  endpointSelector:
    matchLabels:
      class: tiefighter
      org: empire
  egress:
    - toFQDNs:
        - matchName: disney.com
      toPorts:
        - ports:
            - port: "443"
    - toFQDNs:
        - matchName: swapi.dev
      toPorts:
        - ports:
            - port: "443"
    - toEndpoints:
        - matchLabels:
            k8s:app.kubernetes.io/name: deathstar
            k8s:class: deathstar
            k8s:io.kubernetes.pod.namespace: endor
            k8s:org: empire
      toPorts:
        - ports:
            - port: "80"

Apply the configuration:

kubectl apply -f policies/tiefighter.yaml
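To make the effect of this policy concrete, the sketch below models it as a plain allowlist of (destination, port) pairs. This is a deliberately simplified model and not how Cilium evaluates policy: `EgressRule`, `TIEFIGHTER_EGRESS` and `egress_allowed` are hypothetical names, the string "deathstar" stands in for the `toEndpoints` label selector, and DNS traffic is left out because the separate dns policy covers it.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class EgressRule:
    destination: str  # a toFQDNs matchName, or a shorthand for a toEndpoints selector
    port: int

# Transcribed from policies/tiefighter.yaml above.
TIEFIGHTER_EGRESS = [
    EgressRule("disney.com", 443),
    EgressRule("swapi.dev", 443),
    EgressRule("deathstar", 80),  # shorthand for the label selector, an assumption
]

def egress_allowed(rules, destination, port):
    """Allow only traffic that matches a rule; everything else is denied."""
    return any(r.destination == destination and r.port == port for r in rules)

for dest, port in [("disney.com", 443), ("disney.com", 80), ("example.org", 443)]:
    verdict = "allowed" if egress_allowed(TIEFIGHTER_EGRESS, dest, port) else "denied"
    print(f"{dest}:{port} -> {verdict}")
```

The point of the model is the default: once the allow-all policy is gone, any destination or port that is not listed is denied.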

6.4 Observing with Hubble

Now check that the egress verdicts have switched to the new endor/tiefighter policy:

root@server:~# hubble observe --type policy-verdict \
    --from-pod endor/tiefighter --last 1 -o jsonpb \
    | jq '.flow | select(.egress_allowed_by).egress_allowed_by'
[
  {
    "name": "tiefighter",
    "namespace": "endor",
    "labels": [
      "k8s:io.cilium.k8s.policy.derived-from=CiliumNetworkPolicy",
      "k8s:io.cilium.k8s.policy.name=tiefighter",
      "k8s:io.cilium.k8s.policy.namespace=endor",
      "k8s:io.cilium.k8s.policy.uid=8ef3ed35-e1ff-49e4-9639-18ceb7476906"
    ],
    "revision": "5"
  }
]

6.5 Observing with Grafana

Both Match Type graphs should now turn completely green, since all traffic in the namespace is governed by specific network policies.

After a while, all entries in the Ingress and Egress tables list only L3/L4 or L7 match types, which indicates that the allow-all policy is no longer used.

6.6 Quiz

√ The Network Policy Editor allows to add L3/L4 rules

√ Both Ingress and Egress rules can be added in the Editor

× The Network Policy Editor can only manage one Network Policy per namespace

× The Network Policy Editor supports only Cilium Network Policies

7. Removing the allow-all Policy

Let's verify that the endor/allow-all network policy is no longer used by any flow. First, check the egress flows:

hubble observe --type policy-verdict -o jsonpb \
    | jq '.flow | select(.egress_allowed_by).egress_allowed_by[] | select(.name=="allow-all")'

Then the ingress flows:

hubble observe --type policy-verdict -o jsonpb \
    | jq '.flow | select(.ingress_allowed_by).ingress_allowed_by[] | select(.name=="allow-all")'

Neither command should return anything, which indicates that the policy is not in use.
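The two checks can also be combined into a single pass over the JSON stream. The sketch below counts, per direction, how often each policy appears as the source of an allow verdict. It assumes the `ingress_allowed_by` and `egress_allowed_by` fields seen in the outputs earlier; `policy_usage`, `is_unused` and the `SAMPLE` lines are illustrative.

```python
import json
from collections import Counter

def policy_usage(lines):
    """Count (direction, namespace, name) occurrences across JSON flow events."""
    usage = Counter()
    for line in lines:
        try:
            event = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip anything that is not a JSON event
        flow = event.get("flow") if isinstance(event, dict) else None
        if not flow:
            continue
        for direction in ("ingress", "egress"):
            for policy in flow.get(f"{direction}_allowed_by") or []:
                usage[(direction, policy.get("namespace"), policy.get("name"))] += 1
    return usage

def is_unused(usage, namespace, name):
    """True when no counted verdict references namespace/name."""
    return not any(ns == namespace and n == name for _, ns, n in usage)

# Trimmed-down sample events; real events carry many more fields.
SAMPLE = [
    json.dumps({"flow": {"egress_allowed_by": [{"name": "tiefighter", "namespace": "endor"}]}}),
    json.dumps({"flow": {"ingress_allowed_by": [{"name": "deathstar", "namespace": "endor"}]}}),
    "not a json line",
]

usage = policy_usage(SAMPLE)
print("allow-all unused:", is_unused(usage, "endor", "allow-all"))
```

To run it against live data, the same function could be fed `sys.stdin` with `hubble observe --type policy-verdict -o jsonpb` piped in. Note that the result only reflects the flows present in the stream at that moment.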

Now that all traffic is explicitly targeted by network policies, you can delete the allow-all policy:

kubectl delete -f policies/allow-all.yaml

Check the Hubble and Grafana tabs. Everything should keep working.

8. The Hoth System

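We will now deploy a Kubernetes namespace called hoth.

This namespace will contain three workloads: a star-destroyer Deployment, an x-wing Deployment, and a millenium-falcon Pod.

8.1 Deploying the Application

Review the contents of the manifest:

root@server:~# yq hoth.yaml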

---
apiVersion: v1
kind: Namespace
metadata:
  name: hoth
---
apiVersion: apps/v1
kind: Deployment
metadata:
  namespace: hoth
  name: star-destroyer
spec:
  replicas: 2
  selector:
    matchLabels:
      org: empire
      class: star-destroyer
      app.kubernetes.io/name: star-destroyer
  template:
    metadata:
      labels:
        org: empire
        class: star-destroyer
        app.kubernetes.io/name: star-destroyer
    spec:
      containers:
        - name: starship
          image: docker.io/tgraf/netperf
          command: ["/bin/sh"]
          args: ["-c", "while true; do curl -s -XPOST deathstar.endor.svc.cluster.local/v1/request-landing; sleep 1; done"]
---
apiVersion: apps/v1
kind: Deployment
metadata:
  namespace: hoth
  name: x-wing
spec:
  replicas: 1
  selector:
    matchLabels:
      org: alliance
      class: x-wing
      app.kubernetes.io/name: x-wing
  template:
    metadata:
      labels:
        org: alliance
        class: x-wing
        app.kubernetes.io/name: x-wing
    spec:
      containers:
        - name: starship
          image: docker.io/tgraf/netperf
          command: ["/bin/sh"]
          args: ["-c", "while true; do curl -s -XPOST deathstar.endor.svc.cluster.local/v1/request-landing; sleep 1; done"]
---
apiVersion: v1
kind: Pod
metadata:
  namespace: hoth
  name: millenium-falcon
  labels:
    class: light-freighter
    app.kubernetes.io/name: millenium-falcon
spec:
  containers:
    - name: starship
      image: docker.io/tgraf/netperf
      command: ["/bin/sh"]
      args: ["-c", "while true; do curl -s -XPOST deathstar.endor.svc.cluster.local/v1/request-landing; sleep 1; done"]

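To check that our policies are in place, let's deploy these additional applications in the hoth namespace:

kubectl apply -f hoth.yaml

This deploys several ships that will call endor/deathstar. Our network policies currently do not allow these flows.

Check the Hubble logs: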

root@server:~# hubble observe -n endor --verdict DROPPED

Jun 4 05:28:09.546: hoth/x-wing-5ccc854bc5-m7q47:50098 (ID:22603) <> endor/deathstar-848954d75c-lhj4k:80 (ID:47900) policy-verdict:none INGRESS DENIED (TCP Flags: SYN)

Jun 4 05:28:09.547: hoth/x-wing-5ccc854bc5-m7q47:50098 (ID:22603) <> endor/deathstar-848954d75c-lhj4k:80 (ID:47900) Policy denied DROPPED (TCP Flags: SYN)

Jun 4 05:28:11.595: hoth/x-wing-5ccc854bc5-m7q47:50098 (ID:22603) <> endor/deathstar-848954d75c-lhj4k:80 (ID:47900) policy-verdict:none INGRESS DENIED (TCP Flags: SYN)

Jun 4 05:28:11.595: hoth/x-wing-5ccc854bc5-m7q47:50098 (ID:22603) <> endor/deathstar-848954d75c-lhj4k:80 (ID:47900) Policy denied DROPPED (TCP Flags: SYN)

Jun 4 05:28:13.258: hoth/star-destroyer-db7577d4-bbzfq:54090 (ID:18211) <> endor/deathstar-848954d75c-kzvcm:80 (ID:47900) policy-verdict:none INGRESS DENIED (TCP Flags: SYN)

Jun 4 05:28:13.259: hoth/star-destroyer-db7577d4-bbzfq:54090 (ID:18211) <> endor/deathstar-848954d75c-kzvcm:80 (ID:47900) Policy denied DROPPED (TCP Flags: SYN)

Jun 4 05:28:15.627: hoth/x-wing-5ccc854bc5-m7q47:50098 (ID:22603) <> endor/deathstar-848954d75c-lhj4k:80 (ID:47900) policy-verdict:none INGRESS DENIED (TCP Flags: SYN)

Jun 4 05:28:15.627: hoth/x-wing-5ccc854bc5-m7q47:50098 (ID:22603) <> endor/deathstar-848954d75c-lhj4k:80 (ID:47900) Policy denied DROPPED (TCP Flags: SYN)

Jun 4 05:28:16.331: hoth/millenium-falcon:39768 (ID:14498) <> endor/deathstar-848954d75c-kzvcm:80 (ID:47900) policy-verdict:none INGRESS DENIED (TCP Flags: SYN)

Jun 4 05:28:16.331: hoth/millenium-falcon:39768 (ID:14498) <> endor/deathstar-848954d75c-kzvcm:80 (ID:47900) Policy denied DROPPED (TCP Flags: SYN)

Jun 4 05:28:16.972: hoth/star-destroyer-db7577d4-dt7j6:36922 (ID:59627) <> endor/deathstar-848954d75c-kzvcm:80 (ID:47900) policy-verdict:none INGRESS DENIED (TCP Flags: SYN)

Jun 4 05:28:16.972: hoth/star-destroyer-db7577d4-dt7j6:36922 (ID:59627) <> endor/deathstar-848954d75c-kzvcm:80 (ID:47900) Policy denied DROPPED (TCP Flags: SYN)

Jun 4 05:28:17.291: hoth/star-destroyer-db7577d4-bbzfq:54090 (ID:18211) <> endor/deathstar-848954d75c-kzvcm:80 (ID:47900) policy-verdict:none INGRESS DENIED (TCP Flags: SYN)

Jun 4 05:28:17.291: hoth/star-destroyer-db7577d4-bbzfq:54090 (ID:18211) <> endor/deathstar-848954d75c-kzvcm:80 (ID:47900) Policy denied DROPPED (TCP Flags: SYN)

Jun 4 05:28:24.074: hoth/x-wing-5ccc854bc5-m7q47:50098 (ID:22603) <> endor/deathstar-848954d75c-lhj4k:80 (ID:47900) policy-verdict:none INGRESS DENIED (TCP Flags: SYN)

Jun 4 05:28:24.075: hoth/x-wing-5ccc854bc5-m7q47:50098 (ID:22603) <> endor/deathstar-848954d75c-lhj4k:80 (ID:47900) Policy denied DROPPED (TCP Flags: SYN)

Jun 4 05:28:24.587: hoth/millenium-falcon:39768 (ID:14498) <> endor/deathstar-848954d75c-kzvcm:80 (ID:47900) policy-verdict:none INGRESS DENIED (TCP Flags: SYN)

Jun 4 05:28:24.587: hoth/millenium-falcon:39768 (ID:14498) <> endor/deathstar-848954d75c-kzvcm:80 (ID:47900) Policy denied DROPPED (TCP Flags: SYN)

Jun 4 05:28:25.099: hoth/star-destroyer-db7577d4-dt7j6:36922 (ID:59627) <> endor/deathstar-848954d75c-kzvcm:80 (ID:47900) policy-verdict:none INGRESS DENIED (TCP Flags: SYN)

Jun 4 05:28:25.099: hoth/star-destroyer-db7577d4-dt7j6:36922 (ID:59627) <> endor/deathstar-848954d75c-kzvcm:80 (ID:47900) Policy denied DROPPED (TCP Flags: SYN)

Jun 4 05:28:25.611: hoth/star-destroyer-db7577d4-bbzfq:54090 (ID:18211) <> endor/deathstar-848954d75c-kzvcm:80 (ID:47900) policy-verdict:none INGRESS DENIED (TCP Flags: SYN)

Jun 4 05:28:25.611: hoth/star-destroyer-db7577d4-bbzfq:54090 (ID:18211) <> endor/deathstar-848954d75c-kzvcm:80 (ID:47900) Policy denied DROPPED (TCP Flags: SYN)

Jun 4 05:28:40.459: hoth/x-wing-5ccc854bc5-m7q47:50098 (ID:22603) <> endor/deathstar-848954d75c-lhj4k:80 (ID:47900) policy-verdict:none INGRESS DENIED (TCP Flags: SYN)

Jun 4 05:28:40.459: hoth/x-wing-5ccc854bc5-m7q47:50098 (ID:22603) <> endor/deathstar-848954d75c-lhj4k:80 (ID:47900) Policy denied DROPPED (TCP Flags: SYN)

Jun 4 05:28:40.971: hoth/millenium-falcon:39768 (ID:14498) <> endor/deathstar-848954d75c-kzvcm:80 (ID:47900) policy-verdict:none INGRESS DENIED (TCP Flags: SYN)

Jun 4 05:28:40.971: hoth/millenium-falcon:39768 (ID:14498) <> endor/deathstar-848954d75c-kzvcm:80 (ID:47900) Policy denied DROPPED (TCP Flags: SYN)

Jun 4 05:28:41.483: hoth/star-destroyer-db7577d4-dt7j6:36922 (ID:59627) <> endor/deathstar-848954d75c-kzvcm:80 (ID:47900) policy-verdict:none INGRESS DENIED (TCP Flags: SYN)

Jun 4 05:28:41.483: hoth/star-destroyer-db7577d4-dt7j6:36922 (ID:59627) <> endor/deathstar-848954d75c-kzvcm:80 (ID:47900) Policy denied DROPPED (TCP Flags: SYN)

Jun 4 05:28:41.994: hoth/star-destroyer-db7577d4-bbzfq:54090 (ID:18211) <> endor/deathstar-848954d75c-kzvcm:80 (ID:47900) policy-verdict:none INGRESS DENIED (TCP Flags: SYN)

Jun 4 05:28:41.994: hoth/star-destroyer-db7577d4-bbzfq:54090 (ID:18211) <> endor/deathstar-848954d75c-kzvcm:80 (ID:47900) Policy denied DROPPED (TCP Flags: SYN)

EVENTS LOST: HUBBLE_RING_BUFFER CPU(0) 1

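Note the DENIED and DROPPED verdict lines (shown in red in the terminal).

The service map now shows three new boxes for the Hoth workloads. If you click one of them (x-wing, for example), you will notice that the link to deathstar is red because the traffic is denied.

After a moment, a new red area appears in the ingress graphs. It corresponds to traffic that matches no rule (match = none in the table) and is therefore dropped.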

8.2 Allowing Only Empire Ships

Click the deathstar box to select it and navigate to the Policies tab.

Create a new policy with + New, name it deathstar-from-hoth using the 📝 icon, and make it apply to the org=empire, class=deathstar selector.

Then click one of the dropped ingress flows from the Star Destroyer and add the rule to the policy.

Copy the content to policies/deathstar-from-hoth.yaml:

apiVersion: cilium.io/v2
kind: CiliumNetworkPolicy
metadata:
  name: deathstar-from-hoth
  namespace: endor
spec:
  endpointSelector:
    matchLabels:
      class: deathstar
      org: empire
  ingress:
    - fromEndpoints:
        - matchLabels:
            k8s:app.kubernetes.io/name: star-destroyer
            k8s:class: star-destroyer
            k8s:io.kubernetes.pod.namespace: hoth
            k8s:org: empire
      toPorts:
        - ports:
            - port: "80"

Apply the configuration:

kubectl apply -f policies/deathstar-from-hoth.yaml

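The Hubble logs immediately stop showing DENIED verdicts for the Star Destroyer, but traffic from the other ships is still denied:

hubble observe -n endor --verdict DROPPED

Some areas of the Grafana dashboards turn green again, with the Star Destroyer source now correctly matching an l3-l4 rule.

The X-Wing and the Millennium Falcon both continue to be denied and show up as match none in the Grafana list of ingress flows.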
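Why only the Star Destroyer gets through follows from how a `matchLabels` selector works: every key/value pair in the selector must be present on the source. A minimal sketch, using the labels from hoth.yaml and the `fromEndpoints` selector above, is shown below. Two simplifications are assumptions of this sketch: the `k8s:` source prefix is dropped, and the `io.kubernetes.pod.namespace` label is added by hand to each pod's label set.

```python
def matches(selector, labels):
    """True when every key/value pair of the selector is present in labels."""
    return all(labels.get(key) == value for key, value in selector.items())

# fromEndpoints selector of deathstar-from-hoth, without the "k8s:" prefix.
FROM_ENDPOINTS = {
    "app.kubernetes.io/name": "star-destroyer",
    "class": "star-destroyer",
    "io.kubernetes.pod.namespace": "hoth",
    "org": "empire",
}

# Labels from hoth.yaml, plus the namespace label (added here by hand).
PODS = {
    "star-destroyer": {
        "org": "empire",
        "class": "star-destroyer",
        "app.kubernetes.io/name": "star-destroyer",
        "io.kubernetes.pod.namespace": "hoth",
    },
    "x-wing": {
        "org": "alliance",
        "class": "x-wing",
        "app.kubernetes.io/name": "x-wing",
        "io.kubernetes.pod.namespace": "hoth",
    },
    "millenium-falcon": {
        "class": "light-freighter",
        "app.kubernetes.io/name": "millenium-falcon",
        "io.kubernetes.pod.namespace": "hoth",
    },
}

allowed = sorted(name for name, labels in PODS.items() if matches(FROM_ENDPOINTS, labels))
print("selected sources:", allowed)
```

The x-wing fails on `org` and `class`, and the millenium-falcon carries no `org` label at all, so neither is selected and their traffic keeps matching no rule.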

8.3 Quiz

× Deny Network Policies must be created explicitly to block traffic

× Deleting the allow-all rules removes observability in the namespace

√ The allow-all rule avoids denied traffic while setting up a Zero Trust approach in a namespace

9. L7 Security

As we have seen, the Death Star is now protected from unknown identities by L3/L4 network policies.

This is good, but we may want to further restrict the traffic allowed to reach it by using Layer 7 policies.

Let's see why, and how to fix it!

9.1 The L7 Vulnerability

The Empire's IT department has found a security flaw in the Death Star: when a charge is dropped into the exhaust port, it can explode.

You can test it with:

root@server:~# kubectl -n endor exec -ti deployments/tiefighter \
    -- curl -s -XPUT deathstar.endor.svc.cluster.local/v1/exhaust-port
Panic: deathstar exploded

goroutine 1 [running]:
main.HandleGarbage(0x2080c3f50, 0x2, 0x4, 0x425c0, 0x5, 0xa)
        /code/src/github.com/empire/deathstar/temp/main.go:9 +0x64
main.main()
        /code/src/github.com/empire/deathstar/temp/main.go:5 +0x85

9.2 L7 Filtering

We want to filter access to the Death Star more finely, so that the exhaust port cannot be reached while Tie Fighters are still allowed to land.

To do this, we will adjust the earlier ingress network policy and add HTTP rules.

Click "Policies", then select the "deathstar" policy in the left column to display it in the editor. In the "In Cluster" section on the left of the diagram, hover over the rule for port 80 and fill it in as follows:

- In the "to ports" section, remove 80 and replace it with 80|TCP

- Path: /v1/request-landing

- Method: POST

Click L7 Rule +.

Finally, click "Save" and copy the generated YAML manifest. Save it to policies/deathstar.yaml:

root@server:~# vi policies/deathstar.yaml
root@server:~# yq policies/deathstar.yaml
apiVersion: cilium.io/v2
kind: CiliumNetworkPolicy
metadata:
  name: deathstar
  namespace: endor
spec:
  endpointSelector:
    matchLabels:
      any:class: deathstar
      any:org: empire
  ingress:
    - fromEndpoints:
        - matchLabels:
            k8s:app.kubernetes.io/name: tiefighter
            k8s:class: tiefighter
            k8s:org: empire
            io.kubernetes.pod.namespace: endor
      toPorts:
        - rules:
            http:
              - path: /v1/request-landing
                method: POST
          ports:
            - port: "80"
              protocol: TCP

Apply the configuration again:

kubectl apply -f policies/deathstar.yaml
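The effect of the HTTP rule can be sketched as a check on the request method and path. In the model below, both fields are treated as regular expressions that must match the whole value. That matching behavior, the `http_allowed` helper and the `HTTP_RULES` list are assumptions of this sketch rather than a description of the proxy's implementation.

```python
import re

# The single HTTP rule from policies/deathstar.yaml above.
HTTP_RULES = [{"method": "POST", "path": "/v1/request-landing"}]

def http_allowed(rules, method, path):
    """Allow a request when some rule matches both its method and its path.

    A field missing from a rule is treated as "match anything" (assumption).
    """
    for rule in rules:
        method_ok = re.fullmatch(rule.get("method", ".*"), method) is not None
        path_ok = re.fullmatch(rule.get("path", ".*"), path) is not None
        if method_ok and path_ok:
            return True
    return False

for method, path in [
    ("POST", "/v1/request-landing"),
    ("PUT", "/v1/exhaust-port"),
]:
    verdict = "allowed" if http_allowed(HTTP_RULES, method, path) else "denied"
    print(f"{method} {path} -> {verdict}")
```

With this rule in place, the landing request used by the ships still passes, while the PUT to /v1/exhaust-port from section 9.1 no longer matches any rule.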

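9.3 Observing with Hubble

Because we now filter at Layer 7, the Hubble CLI shows the parsed traffic:

hubble observe -n endor --protocol http

Go back to "Connections" and click the "deathstar" box.

The service map now shows HTTP details in the boxes and in the flows.

9.4 Observing with Grafana

The ingress graph by match type now shows an L7/HTTP area.

The ingress graph by action has a new redirected area, because this traffic is now redirected through the Cilium Envoy proxy for observability, enforcement, and steering.

Finally, the ingress flow table now lists traffic into the Death Star (as well as the return traffic to the Tie Fighter) as l7/http.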

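10. Final Lab

10.1 The Challenge

A new application has been deployed in the exam Kubernetes namespace.

You can view the Pods (and check that they are ready) with:

kubectl get po -n exam

For this practical exam, you need to:

- Identify all traffic in the namespace

- Write Cilium network policies for all of it (empty files have been created for this in the /root/exam-policies/ directory). A single policy file can contain both the ingress and the egress rules for an identity.

Make sure to:

- Find all ingress and egress traffic

- Target identities with the right set of labels (see the app label, for example)

- Deploy a DNS rule to allow DNS traffic and discover the DNS names to filter

- Actually apply the manifests you create!

- Leave no allow-all rule behind

Remember that you can check whether a network policy named allow-all is in use with the following commands:

hubble observe --type policy-verdict -o jsonpb | jq '.flow | select(.ingress_allowed_by).ingress_allowed_by[] | select(.name=="allow-all")'

hubble observe --type policy-verdict -o jsonpb | jq '.flow | select(.egress_allowed_by).egress_allowed_by[] | select(.name=="allow-all")'

You can also check for dropped packets with:

hubble observe -n exam --verdict DROPPED

Sometimes a flow may not show up in the list in the Hubble UI. This is most likely because it happened more than 5 minutes ago (and we are not using Timescape in this lab). Remember that you can always add rules manually with the Network Policy Editor, or directly in the YAML manifest in the editor tab.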

10.2 Solution

10.2.1 Creating allow-all

- First, write an allow-all policy and apply it:

root@server:~# yq exam-policies/allow-all.yaml
apiVersion: cilium.io/v2
kind: CiliumNetworkPolicy
metadata:
  namespace: exam
  name: allow-all
spec:
  endpointSelector: {}
  ingress:
    - fromEntities:
        - all
  egress:
    - toEntities:
        - all
root@server:~# k apply -f exam-policies/allow-all.yaml
ciliumnetworkpolicy.cilium.io/allow-all created

At this point, both Hubble and Grafana look normal.

10.2.2 Creating the DNS Policy

root@server:~# yq exam-policies/dns.yaml
apiVersion: cilium.io/v2
kind: CiliumNetworkPolicy
metadata:
  name: dns
  namespace: exam
spec:
  endpointSelector: {}
  egress:
    - toEndpoints:
        - matchLabels:
            io.kubernetes.pod.namespace: kube-system
            k8s-app: kube-dns
      toPorts:
        - ports:
            - port: "53"
              protocol: UDP
          rules:
            dns:
              - matchPattern: "*"
root@server:~# k apply -f exam-policies/dns.yaml
ciliumnetworkpolicy.cilium.io/dns created

10.2.3 Creating the coreapi Policy

Add the policies for the other services one after another.

Copy and apply the YAML:

root@server:~# yq exam-policies/coreapi.yaml
apiVersion: cilium.io/v2
kind: CiliumNetworkPolicy
metadata:
  name: coreapi
  namespace: exam
spec:
  endpointSelector:
    matchLabels:
      any:app: coreapi
  ingress:
    - fromEntities:
        - cluster
  egress:
    - toEndpoints:
        - matchLabels:
            k8s:app: coreapi
            k8s:io.kubernetes.pod.namespace: exam
      toPorts:
        - ports:
            - port: "9080"
    - toEndpoints:
        - matchLabels:
            k8s:app: elasticsearch
            k8s:io.kubernetes.pod.namespace: exam
      toPorts:
        - ports:
            - port: "9200"
root@server:~# k apply -f exam-policies/coreapi.yaml
ciliumnetworkpolicy.cilium.io/coreapi created

10.2.4 Creating the elasticsearch Policy

root@server:~# yq exam-policies/elasticsearch.yaml
apiVersion: cilium.io/v2
kind: CiliumNetworkPolicy
metadata:
  name: elasticsearch
  namespace: exam
spec:
  endpointSelector:
    matchLabels:
      app: elasticsearch
  egress:
    - toEndpoints:
        - matchLabels:
            k8s:app: elasticsearch
            k8s:io.kubernetes.pod.namespace: exam
      toPorts:
        - ports:
            - port: "9200"
root@server:~# k apply -f exam-policies/elasticsearch.yaml
ciliumnetworkpolicy.cilium.io/elasticsearch created

10.2.5 crawler

root@server:~# yq exam-policies/crawler.yaml
apiVersion: cilium.io/v2
kind: CiliumNetworkPolicy
metadata:
  name: crawler
  namespace: exam
spec:
  endpointSelector:
    matchLabels:
      any:app: crawler
  egress:
    - toEndpoints:
        - matchLabels:
            k8s:app: elasticsearch
            k8s:io.kubernetes.pod.namespace: exam
      toPorts:
        - ports:
            - port: "9200"
    - toEndpoints:
        - matchLabels:
            k8s:app: loader
            k8s:io.kubernetes.pod.namespace: exam
      toPorts:
        - ports:
            - port: "50051"
    - toFQDNs:
        - matchName: api.twitter.com
      toPorts:
        - ports:
            - port: "443"
root@server:~# k apply -f exam-policies/crawler.yaml
ciliumnetworkpolicy.cilium.io/crawler configured

10.2.6 jobposting

root@server:~# yq exam-policies/jobposting.yaml
apiVersion: cilium.io/v2
kind: CiliumNetworkPolicy
metadata:
  name: jobposting
  namespace: exam
spec:
  endpointSelector:
    matchLabels:
      any:app: jobposting
  egress:
    - toEndpoints:
        - matchLabels:
            k8s:app: coreapi
            k8s:io.kubernetes.pod.namespace: exam
      toPorts:
        - ports:
            - port: "9080"
    - toEndpoints:
        - matchLabels:
            k8s:app: elasticsearch
            k8s:io.kubernetes.pod.namespace: exam
      toPorts:
        - ports:
            - port: "9200"
root@server:~# k apply -f exam-policies/jobposting.yaml
ciliumnetworkpolicy.cilium.io/jobposting configured

10.2.7 kafka

root@server:~# yq exam-policies/kafka.yaml
apiVersion: cilium.io/v2
kind: CiliumNetworkPolicy
metadata:
  name: kafka
  namespace: exam
spec:
  endpointSelector:
    matchLabels:
      any:app: kafka
  egress:
    - toEndpoints:
        - matchLabels:
            k8s:app: elasticsearch
            k8s:io.kubernetes.pod.namespace: exam
      toPorts:
        - ports:
            - port: "9200"
    - toEndpoints:
        - matchLabels:
            k8s:app: kafka
            k8s:io.kubernetes.pod.namespace: exam
      toPorts:
        - ports:
            - port: "9092"
    - toEndpoints:
        - matchLabels:
            k8s:app: zookeeper
            k8s:io.kubernetes.pod.namespace: exam
      toPorts:
        - ports:
            - port: "2181"
root@server:~# k apply -f exam-policies/kafka.yaml
ciliumnetworkpolicy.cilium.io/kafka created

10.2.8 loader

root@server:~# yq exam-policies/loader.yaml
apiVersion: cilium.io/v2
kind: CiliumNetworkPolicy
metadata:
  name: loader
  namespace: exam
spec:
  endpointSelector:
    matchLabels:
      any:app: loader
  egress:
    - toEndpoints:
        - matchLabels:
            k8s:app: elasticsearch
            k8s:io.kubernetes.pod.namespace: exam
      toPorts:
        - ports:
            - port: "9200"
    - toEndpoints:
        - matchLabels:
            k8s:app: kafka
            k8s:io.kubernetes.pod.namespace: exam
      toPorts:
        - ports:
            - port: "9092"
root@server:~# k apply -f exam-policies/loader.yaml
ciliumnetworkpolicy.cilium.io/loader created

10.2.9 recruiter

root@server:~# yq exam-policies/recruiter.yaml
apiVersion: cilium.io/v2
kind: CiliumNetworkPolicy
metadata:
  name: recruiter
  namespace: exam
spec:
  endpointSelector:
    matchLabels:
      app: recruiter
  egress:
    - toEndpoints:
        - matchLabels:
            k8s:app: coreapi
            k8s:io.kubernetes.pod.namespace: exam
      toPorts:
        - ports:
            - port: "9080"
root@server:~# k apply -f exam-policies/recruiter.yaml
ciliumnetworkpolicy.cilium.io/recruiter created

10.2.10 zookeeper

root@server:~# yq exam-policies/zookeeper.yaml
apiVersion: cilium.io/v2
kind: CiliumNetworkPolicy
metadata:
  name: zookeeper
  namespace: exam
spec:
  endpointSelector:
    matchLabels:
      any:app: zookeeper
  egress:
    - toEndpoints:
        - matchLabels:
            k8s:app: elasticsearch
            k8s:io.kubernetes.pod.namespace: exam
      toPorts:
        - ports:
            - port: "9200"
    - toEndpoints:
        - matchLabels:
            k8s:app: zookeeper
            k8s:io.kubernetes.pod.namespace: exam
      toPorts:
        - ports:
            - port: "2181"
root@server:~# k apply -f exam-policies/zookeeper.yaml
ciliumnetworkpolicy.cilium.io/zookeeper created
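Most of the per-application policies above share one shape: an `app` selector plus a list of `toEndpoints`/`toPorts` pairs. As an illustration only, the sketch below generates that shape from a small table and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The `egress_policy` helper is hypothetical, it uses a plain `app` label in the endpoint selector, and it does not cover the `toFQDNs` rule of crawler or the ingress rule of coreapi.

```python
import json

def egress_policy(app, destinations, namespace="exam"):
    """Build a CiliumNetworkPolicy dict with one egress rule per (app, port) pair."""
    return {
        "apiVersion": "cilium.io/v2",
        "kind": "CiliumNetworkPolicy",
        "metadata": {"name": app, "namespace": namespace},
        "spec": {
            "endpointSelector": {"matchLabels": {"app": app}},
            "egress": [
                {
                    "toEndpoints": [
                        {
                            "matchLabels": {
                                "k8s:app": dest,
                                "k8s:io.kubernetes.pod.namespace": namespace,
                            }
                        }
                    ],
                    # Ports are strings in the manifests above.
                    "toPorts": [{"ports": [{"port": str(port)}]}],
                }
                for dest, port in destinations
            ],
        },
    }

# Destinations transcribed from the loader manifest above.
loader = egress_policy("loader", [("elasticsearch", 9200), ("kafka", 9092)])
print(json.dumps(loader, indent=2))
```

Generating the manifests this way keeps the label keys and port formatting consistent across files, but the flows themselves still have to be discovered with Hubble first.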

Apply all the configurations:

root@server:~# k apply -f exam-policies

ciliumnetworkpolicy.cilium.io/coreapi created

ciliumnetworkpolicy.cilium.io/crawler created

ciliumnetworkpolicy.cilium.io/dns created

ciliumnetworkpolicy.cilium.io/elasticsearch created

ciliumnetworkpolicy.cilium.io/jobposting created

ciliumnetworkpolicy.cilium.io/kafka created

ciliumnetworkpolicy.cilium.io/loader created

ciliumnetworkpolicy.cilium.io/loader unchanged

ciliumnetworkpolicy.cilium.io/zookeeper created

root@server:~# k delete -f exam-policies/allow-all.yaml

After a few rounds of trial and error, the checks stopped reporting errors. Submit!

New badge earned!

相关文章:

Cilium动手实验室: 精通之旅---20.Isovalent Enterprise for Cilium: Zero Trust Visibility

Cilium动手实验室: 精通之旅---20.Isovalent Enterprise for Cilium: Zero Trust Visibility 1. 实验室环境1.1 实验室环境1.2 小测试 2. The Endor System2.1 部署应用2.2 检查现有策略 3. Cilium 策略实体3.1 创建 allow-all 网络策略3.2 在 Hubble CLI 中验证网络策略源3.3 …...

条件运算符

C中的三目运算符(也称条件运算符,英文:ternary operator)是一种简洁的条件选择语句,语法如下: 条件表达式 ? 表达式1 : 表达式2• 如果“条件表达式”为true,则整个表达式的结果为“表达式1”…...

系统设计 --- MongoDB亿级数据查询优化策略

系统设计 --- MongoDB亿级数据查询分表策略 背景Solution --- 分表 背景 使用audit log实现Audi Trail功能 Audit Trail范围: 六个月数据量: 每秒5-7条audi log,共计7千万 – 1亿条数据需要实现全文检索按照时间倒序因为license问题,不能使用ELK只能使用…...

HTML 列表、表格、表单

1 列表标签 作用:布局内容排列整齐的区域 列表分类:无序列表、有序列表、定义列表。 例如: 1.1 无序列表 标签:ul 嵌套 li,ul是无序列表,li是列表条目。 注意事项: ul 标签里面只能包裹 li…...

深入理解JavaScript设计模式之单例模式

目录 什么是单例模式为什么需要单例模式常见应用场景包括 单例模式实现透明单例模式实现不透明单例模式用代理实现单例模式javaScript中的单例模式使用命名空间使用闭包封装私有变量 惰性单例通用的惰性单例 结语 什么是单例模式 单例模式(Singleton Pattern&#…...

STM32F4基本定时器使用和原理详解

STM32F4基本定时器使用和原理详解 前言如何确定定时器挂载在哪条时钟线上配置及使用方法参数配置PrescalerCounter ModeCounter Periodauto-reload preloadTrigger Event Selection 中断配置生成的代码及使用方法初始化代码基本定时器触发DCA或者ADC的代码讲解中断代码定时启动…...

JVM垃圾回收机制全解析

Java虚拟机(JVM)中的垃圾收集器(Garbage Collector,简称GC)是用于自动管理内存的机制。它负责识别和清除不再被程序使用的对象,从而释放内存空间,避免内存泄漏和内存溢出等问题。垃圾收集器在Ja…...

【机器视觉】单目测距——运动结构恢复

ps:图是随便找的,为了凑个封面 前言 在前面对光流法进行进一步改进,希望将2D光流推广至3D场景流时,发现2D转3D过程中存在尺度歧义问题,需要补全摄像头拍摄图像中缺失的深度信息,否则解空间不收敛…...

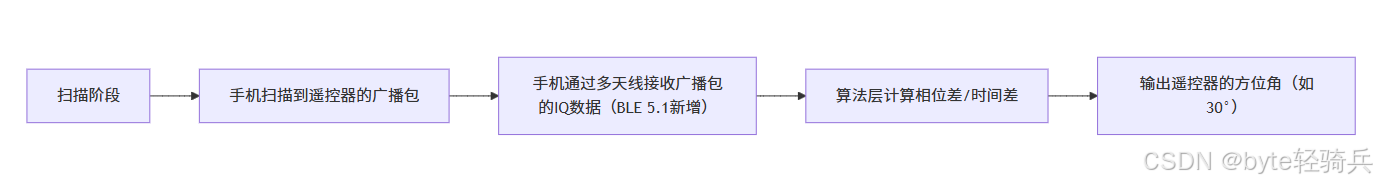

蓝牙 BLE 扫描面试题大全(2):进阶面试题与实战演练

前文覆盖了 BLE 扫描的基础概念与经典问题蓝牙 BLE 扫描面试题大全(1):从基础到实战的深度解析-CSDN博客,但实际面试中,企业更关注候选人对复杂场景的应对能力(如多设备并发扫描、低功耗与高发现率的平衡)和前沿技术的…...

【磁盘】每天掌握一个Linux命令 - iostat

目录 【磁盘】每天掌握一个Linux命令 - iostat工具概述安装方式核心功能基础用法进阶操作实战案例面试题场景生产场景 注意事项 【磁盘】每天掌握一个Linux命令 - iostat 工具概述 iostat(I/O Statistics)是Linux系统下用于监视系统输入输出设备和CPU使…...

鸿蒙中用HarmonyOS SDK应用服务 HarmonyOS5开发一个医院挂号小程序

一、开发准备 环境搭建: 安装DevEco Studio 3.0或更高版本配置HarmonyOS SDK申请开发者账号 项目创建: File > New > Create Project > Application (选择"Empty Ability") 二、核心功能实现 1. 医院科室展示 /…...

React Native在HarmonyOS 5.0阅读类应用开发中的实践

一、技术选型背景 随着HarmonyOS 5.0对Web兼容层的增强,React Native作为跨平台框架可通过重新编译ArkTS组件实现85%以上的代码复用率。阅读类应用具有UI复杂度低、数据流清晰的特点。 二、核心实现方案 1. 环境配置 (1)使用React Native…...

基于Uniapp开发HarmonyOS 5.0旅游应用技术实践

一、技术选型背景 1.跨平台优势 Uniapp采用Vue.js框架,支持"一次开发,多端部署",可同步生成HarmonyOS、iOS、Android等多平台应用。 2.鸿蒙特性融合 HarmonyOS 5.0的分布式能力与原子化服务,为旅游应用带来…...

服务器硬防的应用场景都有哪些?

服务器硬防是指一种通过硬件设备层面的安全措施来防御服务器系统受到网络攻击的方式,避免服务器受到各种恶意攻击和网络威胁,那么,服务器硬防通常都会应用在哪些场景当中呢? 硬防服务器中一般会配备入侵检测系统和预防系统&#x…...

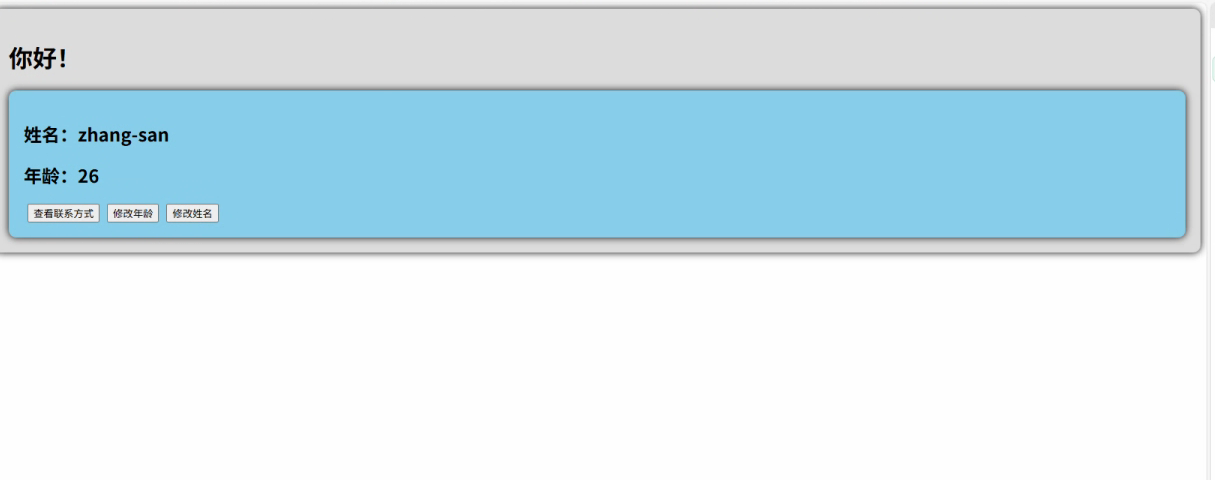

django filter 统计数量 按属性去重

在Django中,如果你想要根据某个属性对查询集进行去重并统计数量,你可以使用values()方法配合annotate()方法来实现。这里有两种常见的方法来完成这个需求: 方法1:使用annotate()和Count 假设你有一个模型Item,并且你想…...

Auto-Coder使用GPT-4o完成:在用TabPFN这个模型构建一个预测未来3天涨跌的分类任务

通过akshare库,获取股票数据,并生成TabPFN这个模型 可以识别、处理的格式,写一个完整的预处理示例,并构建一个预测未来 3 天股价涨跌的分类任务 用TabPFN这个模型构建一个预测未来 3 天股价涨跌的分类任务,进行预测并输…...

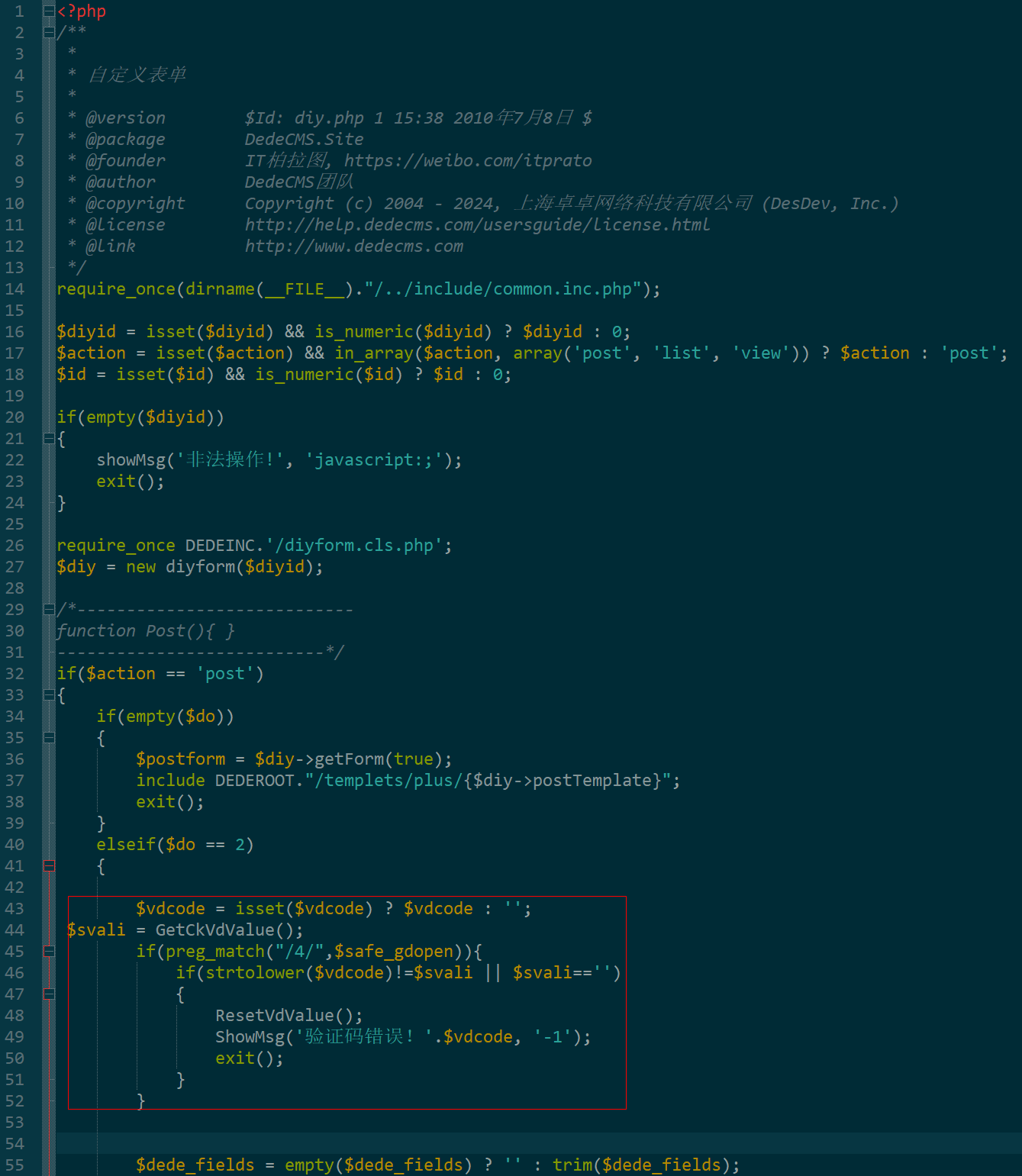

dedecms 织梦自定义表单留言增加ajax验证码功能

增加ajax功能模块,用户不点击提交按钮,只要输入框失去焦点,就会提前提示验证码是否正确。 一,模板上增加验证码 <input name"vdcode"id"vdcode" placeholder"请输入验证码" type"text&quo…...

抖音增长新引擎:品融电商,一站式全案代运营领跑者

抖音增长新引擎:品融电商,一站式全案代运营领跑者 在抖音这个日活超7亿的流量汪洋中,品牌如何破浪前行?自建团队成本高、效果难控;碎片化运营又难成合力——这正是许多企业面临的增长困局。品融电商以「抖音全案代运营…...

电脑插入多块移动硬盘后经常出现卡顿和蓝屏

当电脑在插入多块移动硬盘后频繁出现卡顿和蓝屏问题时,可能涉及硬件资源冲突、驱动兼容性、供电不足或系统设置等多方面原因。以下是逐步排查和解决方案: 1. 检查电源供电问题 问题原因:多块移动硬盘同时运行可能导致USB接口供电不足&#x…...

Golang dig框架与GraphQL的完美结合

将 Go 的 Dig 依赖注入框架与 GraphQL 结合使用,可以显著提升应用程序的可维护性、可测试性以及灵活性。 Dig 是一个强大的依赖注入容器,能够帮助开发者更好地管理复杂的依赖关系,而 GraphQL 则是一种用于 API 的查询语言,能够提…...

2.Vue编写一个app

1.src中重要的组成 1.1main.ts // 引入createApp用于创建应用 import { createApp } from "vue"; // 引用App根组件 import App from ./App.vue;createApp(App).mount(#app)1.2 App.vue 其中要写三种标签 <template> <!--html--> </template>…...

大语言模型如何处理长文本?常用文本分割技术详解

为什么需要文本分割? 引言:为什么需要文本分割?一、基础文本分割方法1. 按段落分割(Paragraph Splitting)2. 按句子分割(Sentence Splitting)二、高级文本分割策略3. 重叠分割(Sliding Window)4. 递归分割(Recursive Splitting)三、生产级工具推荐5. 使用LangChain的…...

全球首个30米分辨率湿地数据集(2000—2022)

数据简介 今天我们分享的数据是全球30米分辨率湿地数据集,包含8种湿地亚类,该数据以0.5X0.5的瓦片存储,我们整理了所有属于中国的瓦片名称与其对应省份,方便大家研究使用。 该数据集作为全球首个30米分辨率、覆盖2000–2022年时间…...

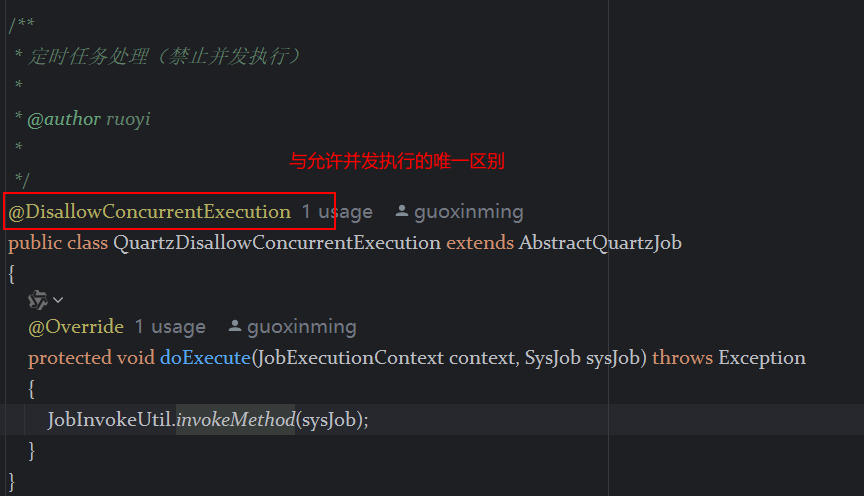

定时器任务——若依源码分析

分析util包下面的工具类schedule utils: ScheduleUtils 是若依中用于与 Quartz 框架交互的工具类,封装了定时任务的 创建、更新、暂停、删除等核心逻辑。 createScheduleJob createScheduleJob 用于将任务注册到 Quartz,先构建任务的 JobD…...

STM32标准库-DMA直接存储器存取

文章目录 一、DMA1.1简介1.2存储器映像1.3DMA框图1.4DMA基本结构1.5DMA请求1.6数据宽度与对齐1.7数据转运DMA1.8ADC扫描模式DMA 二、数据转运DMA2.1接线图2.2代码2.3相关API 一、DMA 1.1简介 DMA(Direct Memory Access)直接存储器存取 DMA可以提供外设…...

376. Wiggle Subsequence

376. Wiggle Subsequence 代码 class Solution { public:int wiggleMaxLength(vector<int>& nums) {int n nums.size();int res 1;int prediff 0;int curdiff 0;for(int i 0;i < n-1;i){curdiff nums[i1] - nums[i];if( (prediff > 0 && curdif…...

《用户共鸣指数(E)驱动品牌大模型种草:如何抢占大模型搜索结果情感高地》

在注意力分散、内容高度同质化的时代,情感连接已成为品牌破圈的关键通道。我们在服务大量品牌客户的过程中发现,消费者对内容的“有感”程度,正日益成为影响品牌传播效率与转化率的核心变量。在生成式AI驱动的内容生成与推荐环境中࿰…...

在 Nginx Stream 层“改写”MQTT ngx_stream_mqtt_filter_module

1、为什么要修改 CONNECT 报文? 多租户隔离:自动为接入设备追加租户前缀,后端按 ClientID 拆分队列。零代码鉴权:将入站用户名替换为 OAuth Access-Token,后端 Broker 统一校验。灰度发布:根据 IP/地理位写…...

学校招生小程序源码介绍

基于ThinkPHPFastAdminUniApp开发的学校招生小程序源码,专为学校招生场景量身打造,功能实用且操作便捷。 从技术架构来看,ThinkPHP提供稳定可靠的后台服务,FastAdmin加速开发流程,UniApp则保障小程序在多端有良好的兼…...

测试markdown--肇兴

day1: 1、去程:7:04 --11:32高铁 高铁右转上售票大厅2楼,穿过候车厅下一楼,上大巴车 ¥10/人 **2、到达:**12点多到达寨子,买门票,美团/抖音:¥78人 3、中饭&a…...