系统学习Linux-ELK日志收集系统

ELK日志收集系统集群实验

实验环境

| 角色 | 主机名 | IP | 接口 |

| httpd | 192.168.31.50 | ens33 | |

| node1 | 192.168.31.51 | ens33 | |

| noed2 | 192.168.31.53 | ens33 | |

环境配置

设置各个主机的ip地址为拓扑中的静态ip,并修改主机名

#httpd

[root@localhost ~]# hostnamectl set-hostname httpd

[root@localhost ~]# bash

[root@httpd ~]# #node1

[root@localhost ~]# hostnamectl set-hostname node1

[root@localhost ~]# bash

[root@node1 ~]# vim /etc/hosts

192.168.31.51 node1

192.168.31.53 node2#node2

[root@localhost ~]# hostnamectl set-hostname node2

[root@localhost ~]# bash

[root@node2 ~]# vim /etc/hosts

192.168.31.51 node1

192.168.31.53 node2安装elasticsearch

#node1

[root@node1 ~]# ls

elk软件包 公共 模板 视频 图片 文档 下载 音乐 桌面

[root@node1 ~]# mv elk软件包 elk

[root@node1 ~]# ls

elk 公共 模板 视频 图片 文档 下载 音乐 桌面

[root@node1 ~]# cd elk

[root@node1 elk]# ls

elasticsearch-5.5.0.rpm kibana-5.5.1-x86_64.rpm node-v8.2.1.tar.gz

elasticsearch-head.tar.gz logstash-5.5.1.rpm phantomjs-2.1.1-linux-x86_64.tar.bz2

[root@node1 elk]# rpm -ivh elasticsearch-5.5.0.rpm

警告:elasticsearch-5.5.0.rpm: 头V4 RSA/SHA512 Signature, 密钥 ID d88e42b4: NOKEY

准备中... ################################# [100%]

Creating elasticsearch group... OK

Creating elasticsearch user... OK

正在升级/安装...1:elasticsearch-0:5.5.0-1 ################################# [100%]

### NOT starting on installation, please execute the following statements to configure elasticsearch service to start automatically using systemdsudo systemctl daemon-reloadsudo systemctl enable elasticsearch.service

### You can start elasticsearch service by executingsudo systemctl start elasticsearch.service#node2

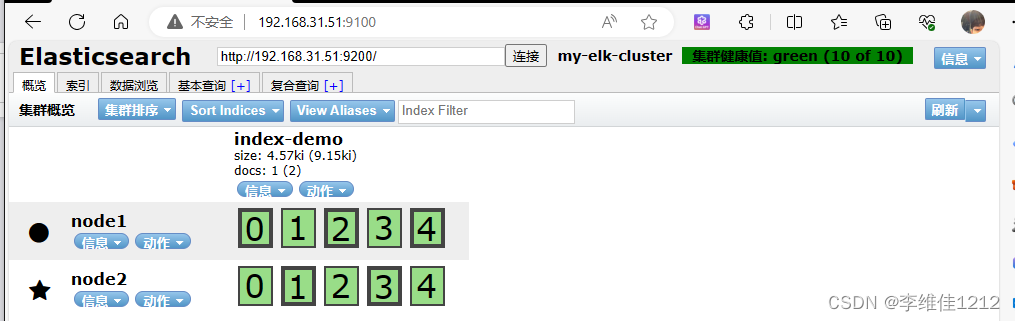

配置

node1

vim /etc/elasticsearch/elasticsearch.yml

17 cluster.name: my-elk-cluster //集群名称 23 node.name: node1 //节点名字33 path.data: /var/lib/elasticsearch //数据存放路径37 path.logs: /var/log/elasticsearch/ //日志存放路径43 bootstrap.memory_lock: false //在启动的时候不锁定内存55 network.host: 0.0.0.0 //提供服务绑定的IP地址,0.0.0.0代表所有地址59 http.port: 9200 //侦听端口为920068 discovery.zen.ping.unicast.hosts: ["node1", "node2"] //群集发现通过单播实现

node2

17 cluster.name: my-elk-cluster //集群名称 23 node.name: node1 //节点名字33 path.data: /var/lib/elasticsearch //数据存放路径37 path.logs: /var/log/elasticsearch/ //日志存放路径43 bootstrap.memory_lock: false //在启动的时候不锁定内存55 network.host: 0.0.0.0 //提供服务绑定的IP地址,0.0.0.0代表所有地址59 http.port: 9200 //侦听端口为920068 discovery.zen.ping.unicast.hosts: ["node1", "node2"] //群集发现通过单播实现

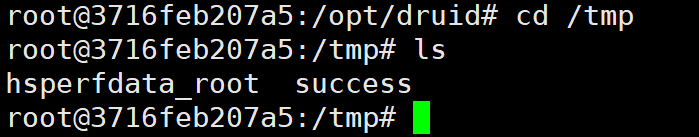

在node1安装-elasticsearch-head插件

移动到elk文件夹

#安装插件编译很慢

[root@node1 ~]# cd elk/

[root@node1 elk]# ls

elasticsearch-5.5.0.rpm kibana-5.5.1-x86_64.rpm phantomjs-2.1.1-linux-x86_64.tar.bz2

elasticsearch-head.tar.gz logstash-5.5.1.rpm node-v8.2.1.tar.gz

[root@node1 elk]# tar xf node-v8.2.1.tar.gz

[root@node1 elk]# cd node-v8.2.1/

[root@node1 node-v8.2.1]# ./configure && make && make install

[root@node1 elk]# cd ~/elk

[root@node1 elk]# tar xf phantomjs-2.1.1-linux-x86_64.tar.bz2

[root@node1 elk]# cd phantomjs-2.1.1-linux-x86_64/bin/

[root@node1 bin]# ls

phantomjs

[root@node1 bin]# cp phantomjs /usr/local/bin/

[root@node1 bin]# cd ~/elk/

[root@node1 elk]# tar xf elasticsearch-head.tar.gz

[root@node1 elk]# cd elasticsearch-head/

[root@node1 elasticsearch-head]# npm install

npm WARN deprecated fsevents@1.2.13: The v1 package contains DANGEROUS / INSECURE binaries. Upgrade to safe fsevents v2

npm WARN optional SKIPPING OPTIONAL DEPENDENCY: fsevents@^1.0.0 (node_modules/karma/node_modules/chokidar/node_modules/fsevents):

npm WARN notsup SKIPPING OPTIONAL DEPENDENCY: Unsupported platform for fsevents@1.2.13: wanted {"os":"darwin","arch":"any"} (current: {"os":"linux","arch":"x64"})

npm WARN elasticsearch-head@0.0.0 license should be a valid SPDX license expressionup to date in 3.536s

修改elasticsearch配置文件

[root@node1 ~]# vim /etc/elasticsearch/elasticsearch.yml 84 # ---------------------------------- Various -----------------------------------85 #86 # Require explicit names when deleting indices:87 #88 #action.destructive_requires_name: true89 http.cors.enabled: true //开启跨域访问支持,默认为false90 http.cors.allow-origin:"*" //跨域访问允许的域名地址

[root@node1 ~]# systemctl restart elasticsearch.service #启动elasticsearch-head

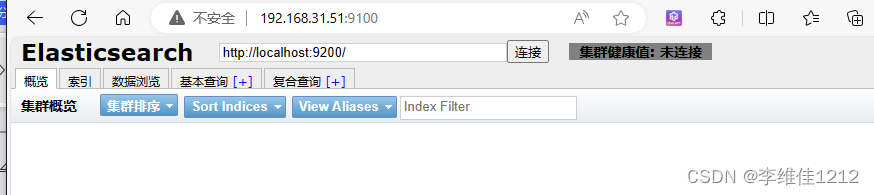

cd /root/elk/elasticsearch-head

npm run start &

#查看监听

netstat -anput | grep :9100

#访问

http://192.168.31.51:9100

node1服务器安装logstash

[root@node1 elk]# rpm -ivh logstash-5.5.1.rpm

警告:logstash-5.5.1.rpm: 头V4 RSA/SHA512 Signature, 密钥 ID d88e42b4: NOKEY

准备中... ################################# [100%]软件包 logstash-1:5.5.1-1.noarch 已经安装

#开启并创建一个软连接

[root@node1 elk]# systemctl start logstash.service

[root@node1 elk]# In -s /usr/share/logstash/bin/logstash /usr/local/bin/

#测试1

[root@node1 elk]# logstash -e 'input{ stdin{} }output { stdout{} }'

ERROR StatusLogger No log4j2 configuration file found. Using default configuration: logging only errors to the console.

WARNING: Could not find logstash.yml which is typically located in $LS_HOME/config or /etc/logstash. You can specify the path using --path.settings. Continuing using the defaults

Could not find log4j2 configuration at path //usr/share/logstash/config/log4j2.properties. Using default config which logs to console

16:03:50.250 [main] INFO logstash.setting.writabledirectory - Creating directory {:setting=>"path.queue", :path=>"/usr/share/logstash/data/queue"}

16:03:50.256 [main] INFO logstash.setting.writabledirectory - Creating directory {:setting=>"path.dead_letter_queue", :path=>"/usr/share/logstash/data/dead_letter_queue"}

16:03:50.330 [LogStash::Runner] INFO logstash.agent - No persistent UUID file found. Generating new UUID {:uuid=>"9ba08544-a7a7-4706-a3cd-2e2ca163548d", :path=>"/usr/share/logstash/data/uuid"}

16:03:50.584 [[main]-pipeline-manager] INFO logstash.pipeline - Starting pipeline {"id"=>"main", "pipeline.workers"=>2, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>5, "pipeline.max_inflight"=>250}

16:03:50.739 [[main]-pipeline-manager] INFO logstash.pipeline - Pipeline main started

The stdin plugin is now waiting for input:

16:03:50.893 [Api Webserver] INFO logstash.agent - Successfully started Logstash API endpoint {:port=>9600}

^C16:04:32.838 [SIGINT handler] WARN logstash.runner - SIGINT received. Shutting down the agent.

16:04:32.855 [LogStash::Runner] WARN logstash.agent - stopping pipeline {:id=>"main"}

#测试2

[root@node1 elk]# logstash -e 'input { stdin{} } output { stdout{ codec=>rubydebug }}'

ERROR StatusLogger No log4j2 configuration file found. Using default configuration: logging only errors to the console.

WARNING: Could not find logstash.yml which is typically located in $LS_HOME/config or /etc/logstash. You can specify the path using --path.settings. Continuing using the defaults

Could not find log4j2 configuration at path //usr/share/logstash/config/log4j2.properties. Using default config which logs to console

16:46:23.975 [[main]-pipeline-manager] INFO logstash.pipeline - Starting pipeline {"id"=>"main", "pipeline.workers"=>2, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>5, "pipeline.max_inflight"=>250}

The stdin plugin is now waiting for input:

16:46:24.014 [[main]-pipeline-manager] INFO logstash.pipeline - Pipeline main started

16:46:24.081 [Api Webserver] INFO logstash.agent - Successfully started Logstash API endpoint {:port=>9600}

^C16:46:29.970 [SIGINT handler] WARN logstash.runner - SIGINT received. Shutting down the agent.

16:46:29.975 [LogStash::Runner] WARN logstash.agent - stopping pipeline {:id=>"main"}

#测试3

16:46:29.975 [LogStash::Runner] WARN logstash.agent - stopping pipeline {:id=>"main"}

[root@node1 elk]# logstash -e 'input { stdin{} } output { elasticsearch{ hosts=>["192.168.31.51:9200"]} }'

ERROR StatusLogger No log4j2 configuration file found. Using default configuration: logging only errors to the console.

WARNING: Could not find logstash.yml which is typically located in $LS_HOME/config or /etc/logstash. You can specify the path using --path.settings. Continuing using the defaults

Could not find log4j2 configuration at path //usr/share/logstash/config/log4j2.properties. Using default config which logs to console

16:46:55.951 [[main]-pipeline-manager] INFO logstash.outputs.elasticsearch - Elasticsearch pool URLs updated {:changes=>{:removed=>[], :added=>[http://192.168.31.51:9200/]}}

16:46:55.955 [[main]-pipeline-manager] INFO logstash.outputs.elasticsearch - Running health check to see if an Elasticsearch connection is working {:healthcheck_url=>http://192.168.31.51:9200/, :path=>"/"}

16:46:56.049 [[main]-pipeline-manager] WARN logstash.outputs.elasticsearch - Restored connection to ES instance {:url=>#<Java::JavaNet::URI:0x3a106333>}

16:46:56.068 [[main]-pipeline-manager] INFO logstash.outputs.elasticsearch - Using mapping template from {:path=>nil}

16:46:56.204 [[main]-pipeline-manager] INFO logstash.outputs.elasticsearch - Attempting to install template {:manage_template=>{"template"=>"logstash-*", "version"=>50001, "settings"=>{"index.refresh_interval"=>"5s"}, "mappings"=>{"_default_"=>{"_all"=>{"enabled"=>true, "norms"=>false}, "dynamic_templates"=>[{"message_field"=>{"path_match"=>"message", "match_mapping_type"=>"string", "mapping"=>{"type"=>"text", "norms"=>false}}}, {"string_fields"=>{"match"=>"*", "match_mapping_type"=>"string", "mapping"=>{"type"=>"text", "norms"=>false, "fields"=>{"keyword"=>{"type"=>"keyword", "ignore_above"=>256}}}}}], "properties"=>{"@timestamp"=>{"type"=>"date", "include_in_all"=>false}, "@version"=>{"type"=>"keyword", "include_in_all"=>false}, "geoip"=>{"dynamic"=>true, "properties"=>{"ip"=>{"type"=>"ip"}, "location"=>{"type"=>"geo_point"}, "latitude"=>{"type"=>"half_float"}, "longitude"=>{"type"=>"half_float"}}}}}}}}

16:46:56.233 [[main]-pipeline-manager] INFO logstash.outputs.elasticsearch - Installing elasticsearch template to _template/logstash

16:46:56.429 [[main]-pipeline-manager] INFO logstash.outputs.elasticsearch - New Elasticsearch output {:class=>"LogStash::Outputs::ElasticSearch", :hosts=>[#<Java::JavaNet::URI:0x19aeba5c>]}

16:46:56.432 [[main]-pipeline-manager] INFO logstash.pipeline - Starting pipeline {"id"=>"main", "pipeline.workers"=>2, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>5, "pipeline.max_inflight"=>250}

16:46:56.461 [[main]-pipeline-manager] INFO logstash.pipeline - Pipeline main started

The stdin plugin is now waiting for input:

16:46:56.561 [Api Webserver] INFO logstash.agent - Successfully started Logstash API endpoint {:port=>9600}

^C16:46:57.638 [SIGINT handler] WARN logstash.runner - SIGINT received. Shutting down the agent.

16:46:57.658 [LogStash::Runner] WARN logstash.agent - stopping pipeline {:id=>"main"}

logstash日志收集文件格式(默认存储在/etc/logstash/conf.d)

Logstash配置文件基本由三部分组成:input、output以及 filter(根据需要)。标准的配置文件格式如下:

input (...) 输入

filter {...} 过滤

output {...} 输出

在每个部分中,也可以指定多个访问方式。例如,若要指定两个日志来源文件,则格式如下:

input {

file{path =>"/var/log/messages" type =>"syslog"}

file { path =>"/var/log/apache/access.log" type =>"apache"}

}

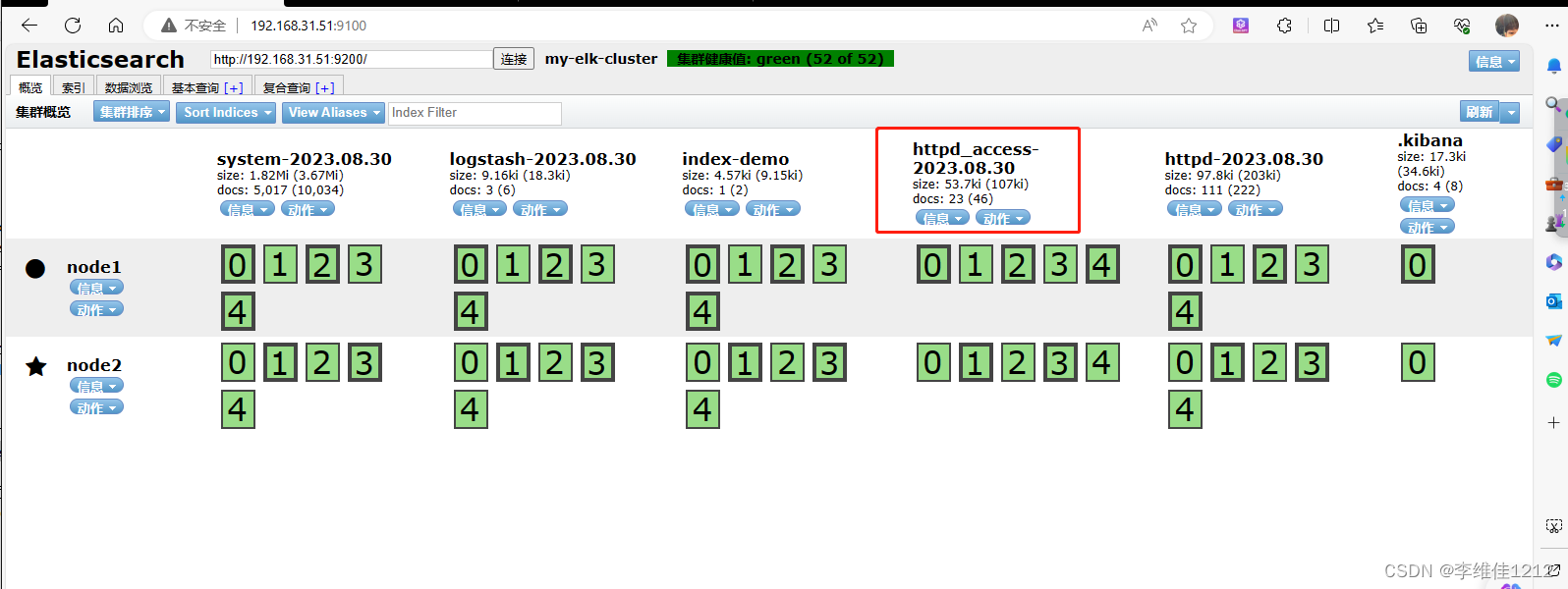

案例:通过logstash收集系统信息日志

[root@node1 conf.d]# chmod o+r /var/log/messages

[root@node1 conf.d]# vim /etc/logstash/conf.d/system.conf

input {

file{

path => "/var/log/messages"

type => "system"

start_position => "beginning"

}

}

output {

elasticsearch{

hosts =>["192.168.31.51:9200"]

index => "system-%{+YYYY.MM.dd}"

}

}

[root@node1 conf.d]# systemctl restart logstash.service

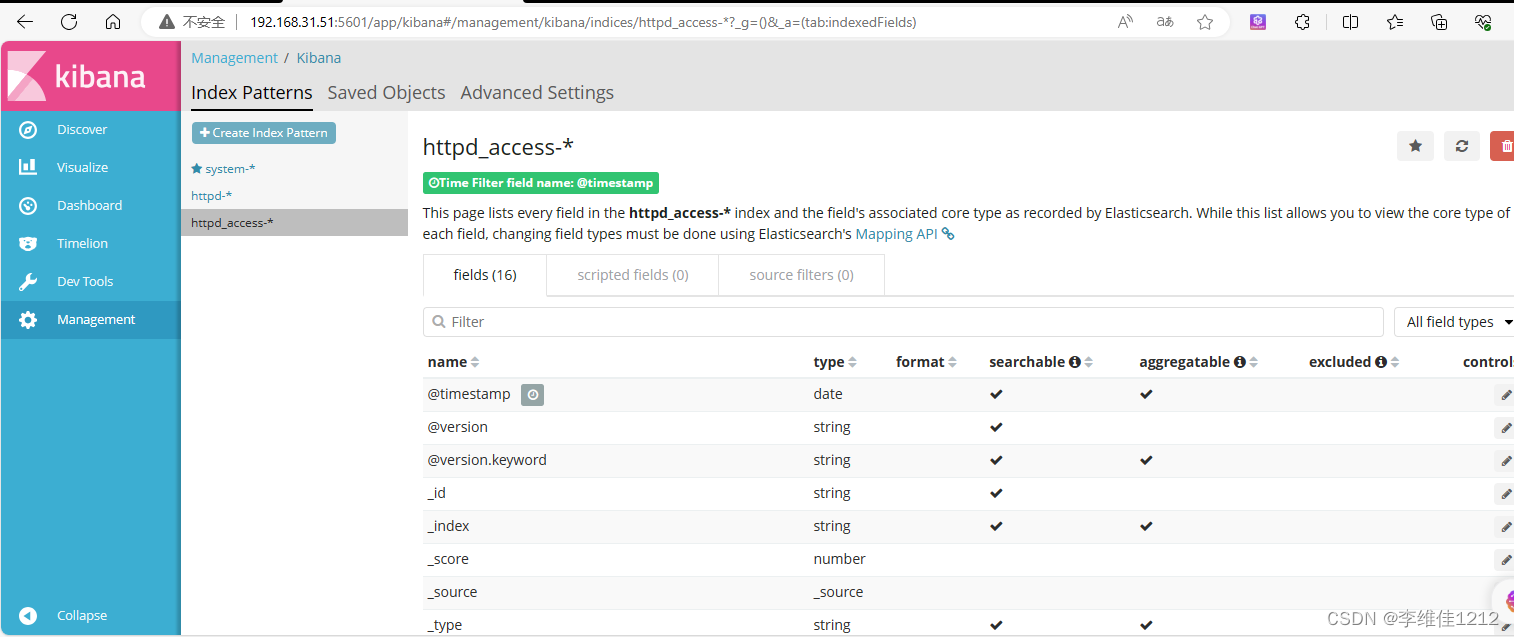

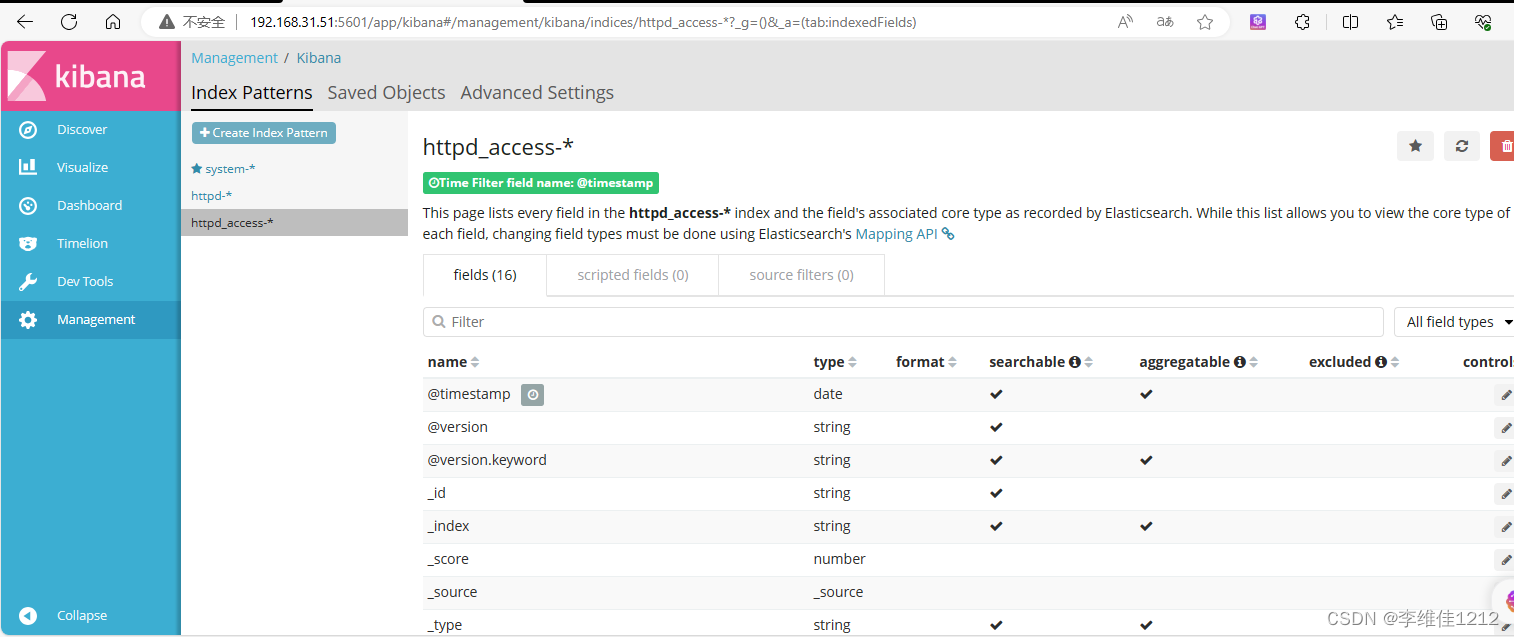

node1节点安装kibana

cd ~/elk

[root@node1 elk]# rpm -ivh kibana-5.5.1-x86_64.rpm

警告:kibana-5.5.1-x86_64.rpm: 头V4 RSA/SHA512 Signature, 密钥 ID d88e42b4: NOKEY

准备中... ################################# [100%]

正在升级/安装...1:kibana-5.5.1-1 ################################# [100%]

[root@node1 elk]# vim /etc/kibana/kibana.yml 2 server.port: 5601 //Kibana打开的端口7 server.host: "0.0.0.0" //Kibana侦听的地址21 elasticsearch.url: "http://192.168.31.51:9200" //和Elasticsearch 建立连接30 kibana.index: ".kibana" //在Elasticsearch中添加.kibana索引

[root@node1 elk]# systemctl start kibana.service 访问kibana

首次访问需要添加索引,我们添加前面已经添加过的索引:system-*

企业案例

收集nginx访问日志信息

在httpd服务器上安装logstash,参数上述安装过程

logstash在httpd服务器上作为agent(代理),不需要启动

编写httpd日志收集配置文件

[root@httpd ]# yum install -y httpd

[root@httpd ]# systemctl start httpd

[root@httpd ]# systemctl start logstash

[root@httpd ]# vim /etc/logstash/conf.d/httpd.conf

input {file {path => "/var/log/httpd/access_log"type => "access"start_position => "beginning"}

}

output {elasticsearch {hosts => ["192.168.31.51:9200"]index => "httpd-%{+YYYY.MM.dd}"}

}

[root@httpd ]# logstash -f /etc/logstash/conf.d/httpd.conf

OpenJDK 64-Bit Server VM warning: If the number of processors is expected to increase from one, then you should configure the number of parallel GC threads appropriately using -XX:ParallelGCThreads=N

ERROR StatusLogger No log4j2 configuration file found. Using default configuration: logging only errors to the console.

WARNING: Could not find logstash.yml which is typically located in $LS_HOME/config or /etc/logstash. You can specify the path using --path.settings. Continuing using the defaults

Could not find log4j2 configuration at path //usr/share/logstash/config/log4j2.properties. Using default config which logs to console

21:29:34.272 [[main]-pipeline-manager] INFO logstash.outputs.elasticsearch - Elasticsearch pool URLs updated {:changes=>{:removed=>[], :added=>[http://192.168.31.51:9200/]}}

21:29:34.275 [[main]-pipeline-manager] INFO logstash.outputs.elasticsearch - Running health check to see if an Elasticsearch connection is working {:healthcheck_url=>http://192.168.31.51:9200/, :path=>"/"}

21:29:34.400 [[main]-pipeline-manager] WARN logstash.outputs.elasticsearch - Restored connection to ES instance {:url=>#<Java::JavaNet::URI:0x1c254b0a>}

21:29:34.423 [[main]-pipeline-manager] INFO logstash.outputs.elasticsearch - Using mapping template from {:path=>nil}

21:29:34.579 [[main]-pipeline-manager] INFO logstash.outputs.elasticsearch - Attempting to install template {:manage_template=>{"template"=>"logstash-*", "version"=>50001, "settings"=>{"index.refresh_interval"=>"5s"}, "mappings"=>{"_default_"=>{"_all"=>{"enabled"=>true, "norms"=>false}, "dynamic_templates"=>[{"message_field"=>{"path_match"=>"message", "match_mapping_type"=>"string", "mapping"=>{"type"=>"text", "norms"=>false}}}, {"string_fields"=>{"match"=>"*", "match_mapping_type"=>"string", "mapping"=>{"type"=>"text", "norms"=>false, "fields"=>{"keyword"=>{"type"=>"keyword", "ignore_above"=>256}}}}}], "properties"=>{"@timestamp"=>{"type"=>"date", "include_in_all"=>false}, "@version"=>{"type"=>"keyword", "include_in_all"=>false}, "geoip"=>{"dynamic"=>true, "properties"=>{"ip"=>{"type"=>"ip"}, "location"=>{"type"=>"geo_point"}, "latitude"=>{"type"=>"half_float"}, "longitude"=>{"type"=>"half_float"}}}}}}}}

21:29:34.585 [[main]-pipeline-manager] INFO logstash.outputs.elasticsearch - New Elasticsearch output {:class=>"LogStash::Outputs::ElasticSearch", :hosts=>[#<Java::JavaNet::URI:0x3b483278>]}

21:29:34.588 [[main]-pipeline-manager] INFO logstash.pipeline - Starting pipeline {"id"=>"main", "pipeline.workers"=>1, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>5, "pipeline.max_inflight"=>125}

21:29:34.845 [[main]-pipeline-manager] INFO logstash.pipeline - Pipeline main started

21:29:34.921 [Api Webserver] INFO logstash.agent - Successfully started Logstash API endpoint {:port=>9600}

wwwww

w

w

相关文章:

系统学习Linux-ELK日志收集系统

ELK日志收集系统集群实验 实验环境 角色主机名IP接口httpd192.168.31.50ens33node1192.168.31.51ens33noed2192.168.31.53ens33 环境配置 设置各个主机的ip地址为拓扑中的静态ip,并修改主机名 #httpd [rootlocalhost ~]# hostnamectl set-hostname httpd [root…...

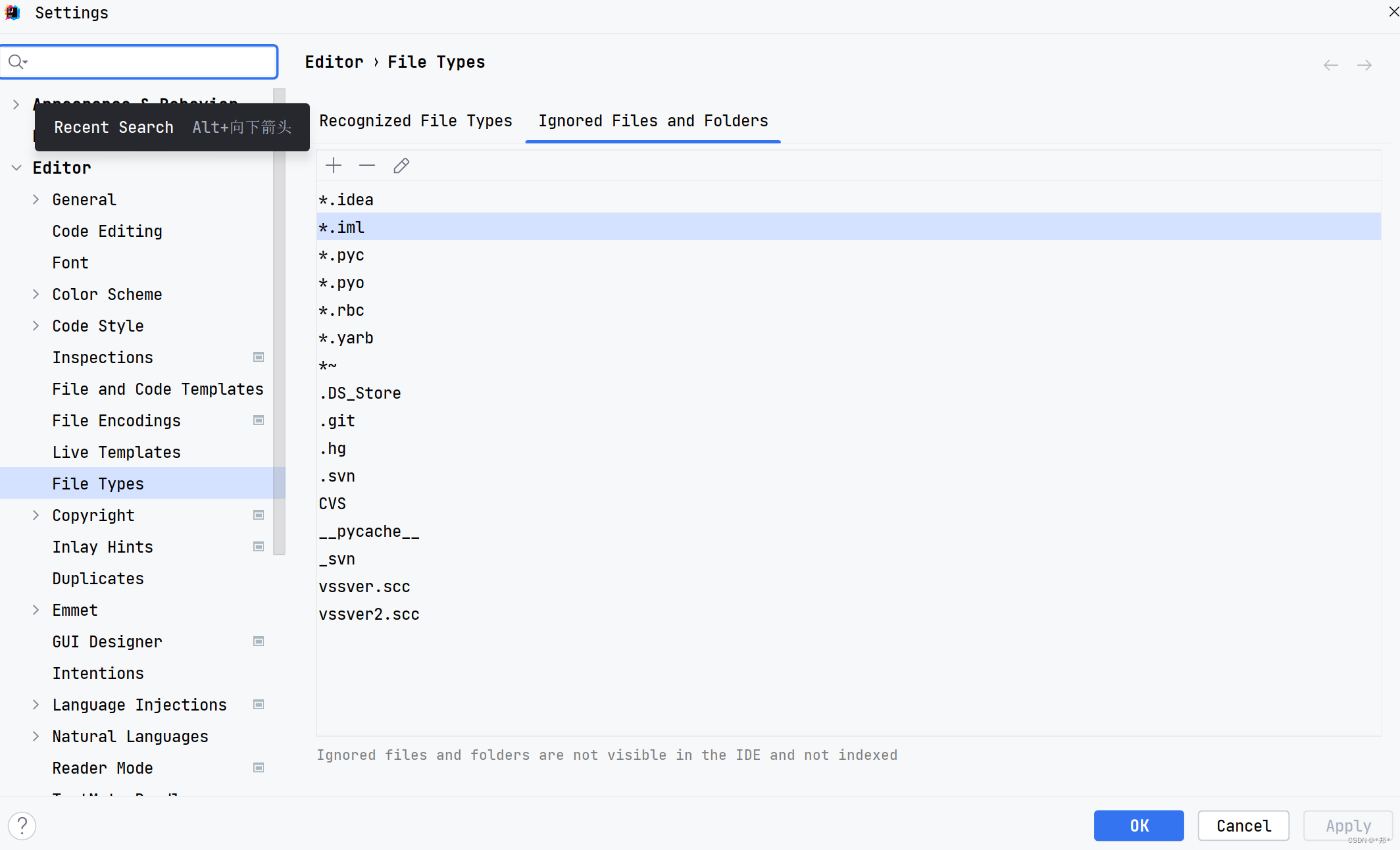

IDEA2023隐藏.idea和.iml文件

IDEA2023隐藏.idea和.iml文件 1. 打开file -> setting,快捷键CtrlAlts2. Editor -> File types3. 点击右侧Ignore files and folders一栏4. 添加需要忽略的文件5. 最重要一步 IDEA新建项目会自动生成一个.idea文件夹和.iml文件,开发中不需要对这两个文件修改&…...

【深入浅出C#】章节 9: C#高级主题:反射和动态编程

反射和动态编程是C#和其他现代编程语言中重要的高级主题,它们具有以下重要性: 灵活性和扩展性:反射允许程序在运行时动态地获取和操作类型信息、成员和对象实例,这使得程序更加灵活和具有扩展性。动态编程则使得程序能够根据运行…...

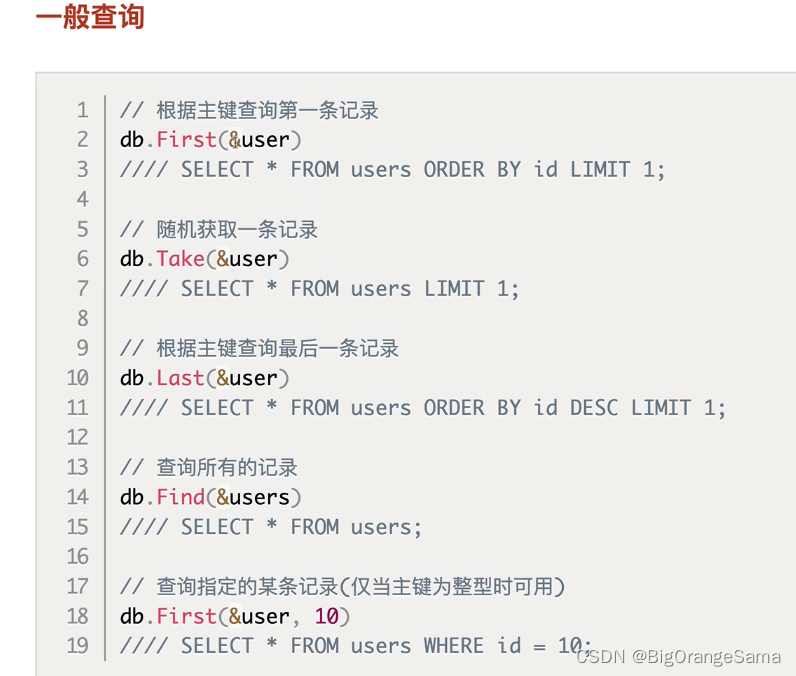

Gorm简单了解

GORM 指南 | GORM - The fantastic ORM library for Golang, aims to be developer friendly. 04_GORM查询操作_哔哩哔哩_bilibili 前置: db调用操作语句中间加debug()可以显示对应的sql语句 1.Gorm模型定义(理解重点ÿ…...

第一百三十三回 StreamProvier

文章目录 概念介绍使用方法示例代码 我们在上一章回中介绍了通道相关的内容,本章回中将介绍 StreamProvider组件.闲话休提,让我们一起Talk Flutter吧。 概念介绍 在Flutter中Stream是经常使用的组件,对该组件的监听可以StremBuilder&#x…...

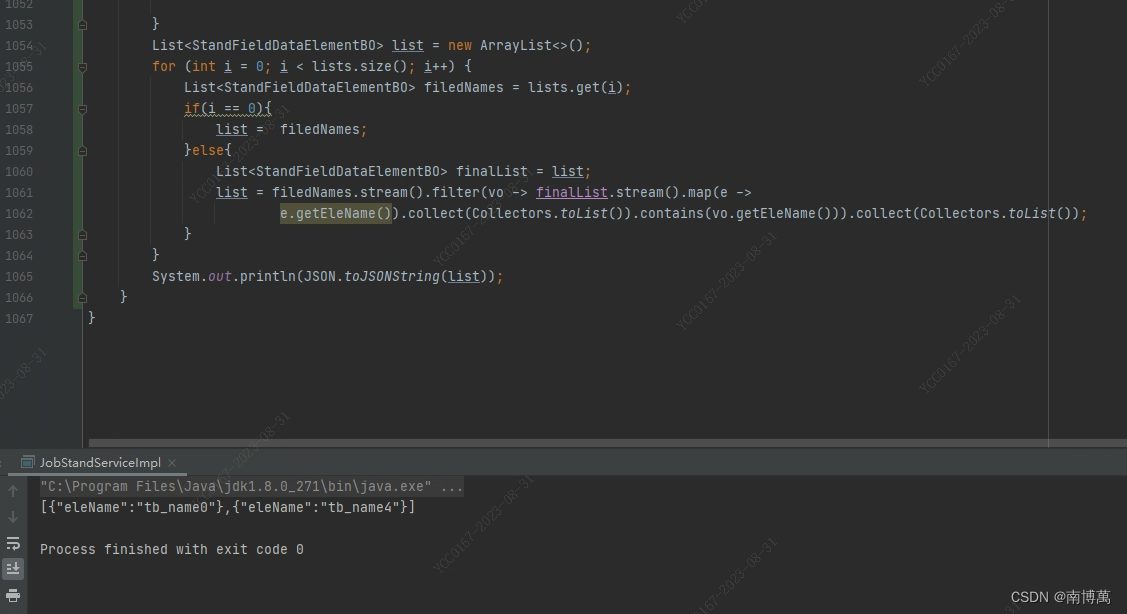

java 多个list取交集

java 多个list集合根据某个字段取出交集 模拟多个list集合,如下图 如果只有一个集合那么交集就是当前集合,如果有多个集合,那么第一个集合当做目标集合,在通过目标集合去和剩下的集合比较,取出相同的值,运…...

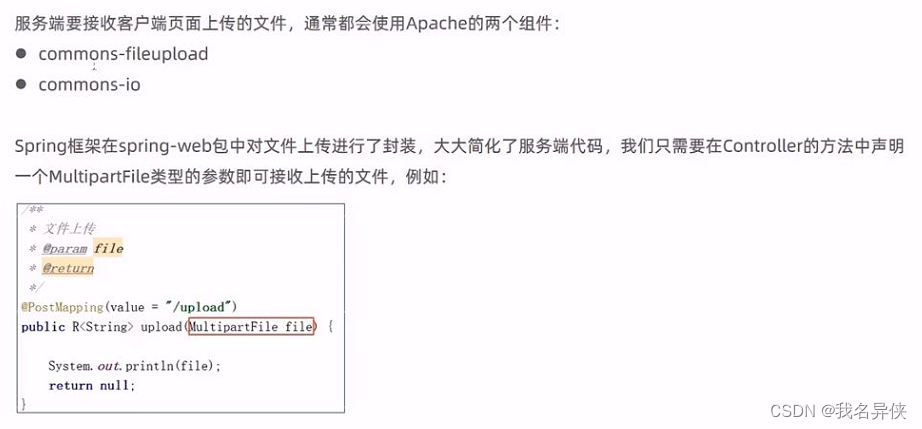

文件上传与下载

文章目录 1. 前端要求2. 后端要求 1. 前端要求 //采用post方法提交文件 method"post" //采用enctype属性 enctype"" //type属性要求 type"file"2. 后端要求 package com.itheima.reggie.controller;import com.itheima.reggie.common.R; impo…...

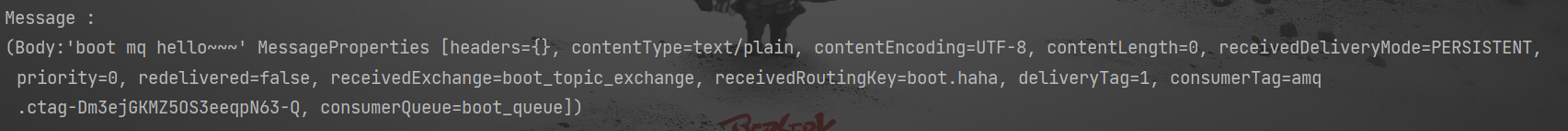

SpringBoot 整合 RabbitMQ

1. 创建 SpringBoot 工程 把版本改为 2.7.14 引入这两个依赖: <dependency><groupId>org.springframework.boot</groupId><artifactId>spring-boot-starter-amqp</artifactId></dependency><dependency><groupId>org.springfr…...

气象科普丨气象站的分类与应用

气象站是一种用于收集、分析和处理气象数据的设备。根据不同的应用场景和监测需求,气象站可以分为以下几类: 一、农业气象站 农业气象站是专门为农业生产服务的气象站,主要监测土壤温度、土壤湿度等参数,为农业生产提供科学依据…...

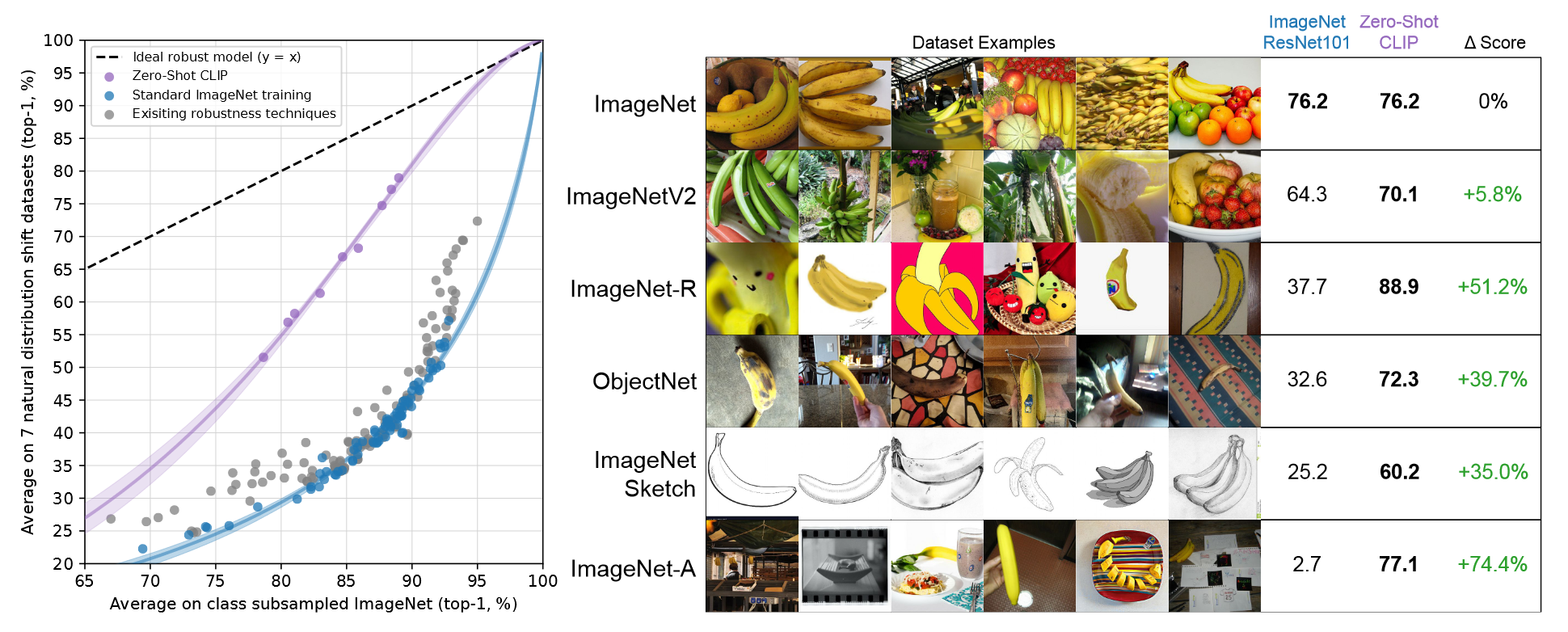

【论文精读】Learning Transferable Visual Models From Natural Language Supervision

Learning Transferable Visual Models From Natural Language Supervision 前言Abstract1. Introduction and Motivating Work2. Approach2.1. Creating a Sufficiently Large Dataset2.2. Selecting an Efficient Pre-Training Method2.3. Choosing and Scaling a Model2.4. P…...

缓存和分布式锁笔记

缓存 开发中,凡是放入缓存中的数据都应该指定过期时间,使其可以在系统即使没有主动更新数据也能自动触发数据加载进缓存的流程。避免业务崩溃导致的数据永久不一致 问题。 redis作为缓存使用redisTemplate操作redis 分布式锁的原理和使用 分布式加锁&…...

Antd)

React笔记(七)Antd

一、登录功能 首先要使用antd,要先下载 yarn add antd 登录页面关键代码 import React from react /*1、如果要在react中完成样式隔离,需要如下操作1)命名一个xx.module.scss webpack要求2) 在需要的组件中通过ES6方式进行导入&#x…...

无涯教程-Android - RadioButton函数

RadioButton有两种状态:选中或未选中,这允许用户从一组中选择一个选项。 Radio Button 示例 本示例将带您完成一些简单的步骤,以展示如何使用Linear Layout和RadioButton创建自己的Android应用程序。 以下是修改后的主要Activity文件 src/MainActivity.java 的内容。 packa…...

kafka如何避免消费组重平衡

目录 前言: 协调者 重平衡的影响 避免重平衡 重平衡发生的场景 参考资料 前言: Rebalance 就是让一个 Consumer Group 下所有的 Consumer 实例就如何消费订阅主题的所有分区达成共识的过程。在 Rebalance 过程中,所有 Consumer 实例…...

浅谈一下企业信息化管理

企业信息化管理 企业信息化是指将企业的生产过程,物料,事务,财务,销售等业务过程数字化,通过各种信息系统网络价格成新的信息资源,提供给各层次的人们东西观察各类动态业务中的一切信息,以便于…...

北京APP外包开发团队人员构成

下面是一个标准的APP开发团队构成,但具体的人员规模和角色可能会根据项目的规模和需求进行调整。例如,一些小型项目或初创公司可能将一些角色合并,或者聘请外包团队来完成部分工作。北京木奇移动技术有限公司,专业的软件外包开发公…...

Node基础and包管理工具

Node基础 fs 模块 fs 全称为 file system,称之为 文件系统,是 Node.js 中的 内置模块,可以对计算机中的磁盘进行操作。 本章节会介绍如下几个操作: 1. 文件写入 2. 文件读取 3. 文件移动与重命名 4. 文件删除 5. 文件夹操作 6. …...

【python使用 Pillow 库】缩小|放大图片

当我们处理图像时,有时候需要调整图像的大小以适应特定的需求。本文将介绍如何使用 Python 的 PIL 库(Pillow)来调整图像的大小,并保存调整后的图像。 环境准备 在开始之前,我们需要安装 Pillow 库。可以使用以下命令…...

解决Ubuntu 或Debian apt-get IPv6问题:如何设置仅使用IPv4

文章目录 解决Ubuntu 或Debian apt-get IPv6问题:如何设置仅使用IPv4 解决Ubuntu 或Debian apt-get IPv6问题:如何设置仅使用IPv4 背景: 在Ubuntu 22.04(包括 20.04 18.04 等版本) 或 Debian (10、11、12)系统中,当你使用apt up…...

Xubuntu16.04系统中解决无法识别exFAT格式的U盘

问题描述 将exFAT格式的U盘插入到Xubuntu16.04系统中,发现系统可以识别到此U盘,但是打不开,查询后发现需要安装exfat-utils库才行。 解决方案: 1.设备有网络的情况下 apt-get install exfat-utils直接安装exfat-utils库即可 2.设备…...

在HarmonyOS ArkTS ArkUI-X 5.0及以上版本中,手势开发全攻略:

在 HarmonyOS 应用开发中,手势交互是连接用户与设备的核心纽带。ArkTS 框架提供了丰富的手势处理能力,既支持点击、长按、拖拽等基础单一手势的精细控制,也能通过多种绑定策略解决父子组件的手势竞争问题。本文将结合官方开发文档,…...

Java如何权衡是使用无序的数组还是有序的数组

在 Java 中,选择有序数组还是无序数组取决于具体场景的性能需求与操作特点。以下是关键权衡因素及决策指南: ⚖️ 核心权衡维度 维度有序数组无序数组查询性能二分查找 O(log n) ✅线性扫描 O(n) ❌插入/删除需移位维护顺序 O(n) ❌直接操作尾部 O(1) ✅内存开销与无序数组相…...

(二)TensorRT-LLM | 模型导出(v0.20.0rc3)

0. 概述 上一节 对安装和使用有个基本介绍。根据这个 issue 的描述,后续 TensorRT-LLM 团队可能更专注于更新和维护 pytorch backend。但 tensorrt backend 作为先前一直开发的工作,其中包含了大量可以学习的地方。本文主要看看它导出模型的部分&#x…...

关于iview组件中使用 table , 绑定序号分页后序号从1开始的解决方案

问题描述:iview使用table 中type: "index",分页之后 ,索引还是从1开始,试过绑定后台返回数据的id, 这种方法可行,就是后台返回数据的每个页面id都不完全是按照从1开始的升序,因此百度了下,找到了…...

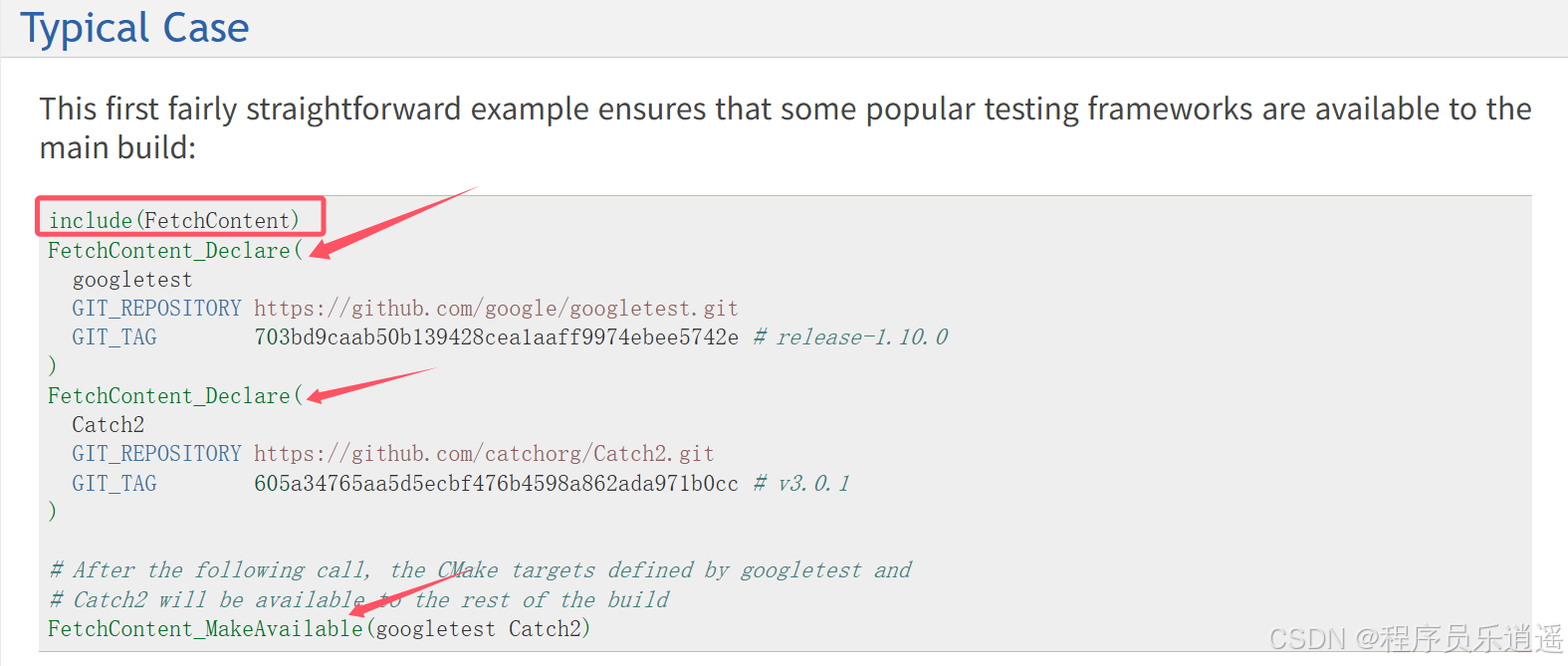

CMake 从 GitHub 下载第三方库并使用

有时我们希望直接使用 GitHub 上的开源库,而不想手动下载、编译和安装。 可以利用 CMake 提供的 FetchContent 模块来实现自动下载、构建和链接第三方库。 FetchContent 命令官方文档✅ 示例代码 我们将以 fmt 这个流行的格式化库为例,演示如何: 使用 FetchContent 从 GitH…...

AI病理诊断七剑下天山,医疗未来触手可及

一、病理诊断困局:刀尖上的医学艺术 1.1 金标准背后的隐痛 病理诊断被誉为"诊断的诊断",医生需通过显微镜观察组织切片,在细胞迷宫中捕捉癌变信号。某省病理质控报告显示,基层医院误诊率达12%-15%,专家会诊…...

多光源(Multiple Lights))

C++.OpenGL (14/64)多光源(Multiple Lights)

多光源(Multiple Lights) 多光源渲染技术概览 #mermaid-svg-3L5e5gGn76TNh7Lq {font-family:"trebuchet ms",verdana,arial,sans-serif;font-size:16px;fill:#333;}#mermaid-svg-3L5e5gGn76TNh7Lq .error-icon{fill:#552222;}#mermaid-svg-3L5e5gGn76TNh7Lq .erro…...

深度剖析 DeepSeek 开源模型部署与应用:策略、权衡与未来走向

在人工智能技术呈指数级发展的当下,大模型已然成为推动各行业变革的核心驱动力。DeepSeek 开源模型以其卓越的性能和灵活的开源特性,吸引了众多企业与开发者的目光。如何高效且合理地部署与运用 DeepSeek 模型,成为释放其巨大潜力的关键所在&…...

CVE-2023-25194源码分析与漏洞复现(Kafka JNDI注入)

漏洞概述 漏洞名称:Apache Kafka Connect JNDI注入导致的远程代码执行漏洞 CVE编号:CVE-2023-25194 CVSS评分:8.8 影响版本:Apache Kafka 2.3.0 - 3.3.2 修复版本:≥ 3.4.0 漏洞类型:反序列化导致的远程代…...

如何使用CodeRider插件在IDEA中生成代码

一、环境搭建与插件安装 1.1 环境准备 名称要求说明操作系统Windows 11JetBrains IDEIntelliJ IDEA 2025.1.1.1 (Community Edition)硬件配置推荐16GB内存50GB磁盘空间 1.2 插件安装流程 步骤1:市场安装 打开IDEA,进入File → Settings → Plugins搜…...