【Azure OpenAI】OpenAI Function Calling 101

概述

本文是结合 github:OpenAI Function Calling 101在 Azure OpenAI 上的实现:

Github Function Calling 101

如何将函数调用与 Azure OpenAI 服务配合使用 - Azure OpenAI Service

使用像ChatGPT这样的llm的困难之一是它们不产生结构化的数据输出。这对于在很大程度上依赖结构化数据进行系统交互的程序化系统非常重要。例如,如果你想构建一个程序来分析电影评论的情绪,你可能必须执行如下提示:

prompt = f'''

Please perform a sentiment analysis on the following movie review:

{MOVIE_REVIEW_TEXT}

Please output your response as a single word: either "Positive" or "Negative". Do not add any extra characters.

'''

这样做的问题是,它并不总是有效。法学硕士学位通常会加上一个不受欢迎的段落或更长的解释,比如:“这部电影的情感是:积极的。”

prompt = f'''Please perform a sentiment analysis on the following movie review:{The Shawshank Redemption}Please output your response as a single word: either "Positive" or "Negative". Do not add any extra characters.'''

---

#OpenAI Answer

import nltk

from nltk.sentiment import SentimentIntensityAnalyzer# Define the movie review

movie_review = "The Shawshank Redemption"# Perform sentiment analysis

sid = SentimentIntensityAnalyzer()

sentiment_scores = sid.polarity_scores(movie_review)# Determine the sentiment based on the compound score

if sentiment_scores['compound'] >= 0:sentiment = "Positive"

else:sentiment = "Negative"# Output the sentiment

sentiment

---

The sentiment analysis of the movie review "The Shawshank Redemption" is "Positive".

虽然您可以通过正则表达式得到答案(🤢),但这显然不是理想的。理想的情况是LLM将返回类似以下结构化JSON的输出:

{'sentiment': 'positive'

}

进入OpenAI的新函数调用! 函数调用正是上述问题的答案。

本Jupyter笔记本将演示如何在Python中使用OpenAI的新函数调用的简单示例。如果你想看完整的文档,请点击这个链接https://platform.openai.com/docs/guides/gpt/function-calling

实验

初始化配置

安装openai Python客户端,已经安装的需要升级它以获得新的函数调用功能

pip3 install openai

pip3 install openai --upgrade

# Importing the necessary Python libraries

import os

import json

import yaml

import openai

结合Azure Openai 的内容,将 API 的设置按照 Azure 给的方式结合文件(放 key),同时准备一个about-me.txt

#../keys/openai-keys.yaml

API_KEY: 06332xxxxxxcd4e70bxxxxxx6ee135

#../data/about-me.txt

Hello! My name is Enigma Zhao. I am an Azure cloud engineer at Microsoft. I enjoy learning about AI and teaching what I learn back to others. I have two sons and a daughter. I drive a Audi A3, and my favorite video game series is The Legend of Zelda.

openai.api_version = "2023-07-01-preview"

openai.api_type = "azure"

openai.api_base = "https://aoaifr01.openai.azure.com/"# Loading the API key and organization ID from file (NOT pushed to GitHub)

with open('../keys/openai-keys.yaml') as f:keys_yaml = yaml.safe_load(f)# Applying our API key

openai.api_key = keys_yaml['API_KEY']

os.environ['OPENAI_API_KEY'] = keys_yaml['API_KEY']# Loading the "About Me" text from local file

with open('../data/about-me.txt', 'r') as f:about_me = f.read()

测试Json 转换

在使用函数调用之前,先看一下如何使用提示工程和Regex生成一个结构JSON,在以后会使用到。

# Engineering a prompt to extract as much information from "About Me" as a JSON object

about_me_prompt = f'''

Please extract information as a JSON object. Please look for the following pieces of information.

Name

Job title

Company

Number of children as a single number

Car make

Car model

Favorite video game seriesThis is the body of text to extract the information from:

{about_me}

'''

# Getting the response back from ChatGPT (gpt-4)

openai_response = openai.ChatCompletion.create(engine="gpt-4",messages = [{'role': 'user', 'content': about_me_prompt}]

)# Loading the response as a JSON object

json_response = json.loads(openai_response['choices'][0]['message']['content'])

print(json_response)

输出如下:

root@ubuntu0:~/python# python3 [fc1.py](http://fc1.py/)

{'Name': 'Enigma Zhao', 'Job title': 'Azure cloud engineer', 'Company': 'Microsoft', 'Number of children': 3, 'Car make': 'Audi', 'Car model': 'A3', 'Favorite video game series': 'The Legend of Zelda'}

简单的自定义函数

# Defining our initial extract_person_info function

def extract_person_info(name, job_title, num_children):'''Prints basic "About Me" informationInputs:name (str): Name of the personjob_title (str): Job title of the personnum_chilren (int): The number of children the parent has.'''print(f'This person\'s name is {name}. Their job title is {job_title}, and they have {num_children} children.')# Defining how we want ChatGPT to call our custom functions

my_custom_functions = [{'name': 'extract_person_info','description': 'Get "About Me" information from the body of the input text','parameters': {'type': 'object','properties': {'name': {'type': 'string','description': 'Name of the person'},'job_title': {'type': 'string','description': 'Job title of the person'},'num_children': {'type': 'integer','description': 'Number of children the person is a parent to'}}}}

] openai_response = openai.ChatCompletion.create(engine="gpt-4",messages = [{'role': 'user', 'content': about_me}],functions = my_custom_functions,function_call = 'auto'

)print(openai_response)

输出如下,OpenAI 讲 about_me 的内容根据 function 的格式进行梳理,调用 function 输出:

root@ubuntu0:~/python# python3 fc2.py

{"id": "chatcmpl-7tYiDoEjPpzNw3tPo3ZDFdaHSbS5u","object": "chat.completion","created": 1693475829,"model": "gpt-4","prompt_annotations": [{"prompt_index": 0,"content_filter_results": {"hate": {"filtered": false,"severity": "safe"},"self_harm": {"filtered": false,"severity": "safe"},"sexual": {"filtered": false,"severity": "safe"},"violence": {"filtered": false,"severity": "safe"}}}],"choices": [{"index": 0,"finish_reason": "function_call","message": {"role": "assistant","function_call": {"name": "extract_person_info","arguments": "{\n \"name\": \"Enigma Zhao\",\n \"job_title\": \"Azure cloud engineer\",\n \"num_children\": 3\n}"}},"content_filter_results": {}}],"usage": {"completion_tokens": 36,"prompt_tokens": 147,"total_tokens": 183}

}

在上面的示例中,自定义函数提取三个非常具体的信息位,通过传递自定义的“About Me”文本作为提示符来证明这可以成功地工作。

如果传入任何其他不包含该信息的提示,会发生什么?

在API客户端调用function_call中设置了一个参数,并将其设置为auto。这个参数实际上是在告诉ChatGPT在确定何时为自定义函数构建输出时使用它的最佳判断。

所以,当提交的提示符与任何自定义函数都不匹配时,会发生什么呢?

简单地说,它默认为典型的行为,就好像函数调用不存在一样。

我们用一个任意的提示来测试一下:“埃菲尔铁塔有多高?”,修改如下:

openai_response = openai.ChatCompletion.create(engine="gpt-4",messages = [{'role': 'user', 'content': 'How tall is the Eiffel Tower?'}],functions = my_custom_functions,function_call = 'auto'

)print(openai_response)

输出结果

{"id": "chatcmpl-7tYvdaiKaoiWW7NXz8QDeZZYEKnko","object": "chat.completion","created": 1693476661,"model": "gpt-4","prompt_annotations": [{"prompt_index": 0,"content_filter_results": {"hate": {"filtered": false,"severity": "safe"},"self_harm": {"filtered": false,"severity": "safe"},"sexual": {"filtered": false,"severity": "safe"},"violence": {"filtered": false,"severity": "safe"}}}],"choices": [{"index": 0,"finish_reason": "stop","message": {"role": "assistant","content": "The Eiffel Tower is approximately 330 meters (1083 feet) tall."},"content_filter_results": {"hate": {"filtered": false,"severity": "safe"},"self_harm": {"filtered": false,"severity": "safe"},"sexual": {"filtered": false,"severity": "safe"},"violence": {"filtered": false,"severity": "safe"}}}],"usage": {"completion_tokens": 18,"prompt_tokens": 97,"total_tokens": 115}

}

多样本情况

现在让我们演示一下,当我们将3个不同的样本应用于所有自定义函数时会发生什么。

# Defining a function to extract only vehicle information

def extract_vehicle_info(vehicle_make, vehicle_model):'''Prints basic vehicle informationInputs:- vehicle_make (str): Make of the vehicle- vehicle_model (str): Model of the vehicle'''print(f'Vehicle make: {vehicle_make}\nVehicle model: {vehicle_model}')# Defining a function to extract all information provided in the original "About Me" prompt

def extract_all_info(name, job_title, num_children, vehicle_make, vehicle_model, company_name, favorite_vg_series):'''Prints the full "About Me" informationInputs:- name (str): Name of the person- job_title (str): Job title of the person- num_chilren (int): The number of children the parent has- vehicle_make (str): Make of the vehicle- vehicle_model (str): Model of the vehicle- company_name (str): Name of the company the person works for- favorite_vg_series (str): Person's favorite video game series.'''print(f'''This person\'s name is {name}. Their job title is {job_title}, and they have {num_children} children.They drive a {vehicle_make} {vehicle_model}.They work for {company_name}.Their favorite video game series is {favorite_vg_series}.''')

# Defining how we want ChatGPT to call our custom functions

my_custom_functions = [{'name': 'extract_person_info','description': 'Get "About Me" information from the body of the input text','parameters': {'type': 'object','properties': {'name': {'type': 'string','description': 'Name of the person'},'job_title': {'type': 'string','description': 'Job title of the person'},'num_children': {'type': 'integer','description': 'Number of children the person is a parent to'}}}},{'name': 'extract_vehicle_info','description': 'Extract the make and model of the person\'s car','parameters': {'type': 'object','properties': {'vehicle_make': {'type': 'string','description': 'Make of the person\'s vehicle'},'vehicle_model': {'type': 'string','description': 'Model of the person\'s vehicle'}}}},{'name': 'extract_all_info','description': 'Extract all information about a person including their vehicle make and model','parameters': {'type': 'object','properties': {'name': {'type': 'string','description': 'Name of the person'},'job_title': {'type': 'string','description': 'Job title of the person'},'num_children': {'type': 'integer','description': 'Number of children the person is a parent to'},'vehicle_make': {'type': 'string','description': 'Make of the person\'s vehicle'},'vehicle_model': {'type': 'string','description': 'Model of the person\'s vehicle'},'company_name': {'type': 'string','description': 'Name of the company the person works for'},'favorite_vg_series': {'type': 'string','description': 'Name of the person\'s favorite video game series'}}}}

]

# Defining a list of samples

samples = [str(about_me),'My name is David Hundley. I am a principal machine learning engineer, and I have two daughters.','She drives a Kia Sportage.'

]# Iterating over the three samples

for i, sample in enumerate(samples):print(f'Sample #{i + 1}\'s results:')# Getting the response back from ChatGPT (gpt-4)openai_response = openai.ChatCompletion.create(engine = 'gpt-4',messages = [{'role': 'user', 'content': sample}],functions = my_custom_functions,function_call = 'auto')# Printing the sample's responseprint(openai_response)

输出如下:

root@ubuntu0:~/python# python3 [fc3.py](http://fc3.py/)

Sample #1's results:

{

"id": "chatcmpl-7tq3Nh7BSccfFAmT2psZcRR3hHpZ7",

"object": "chat.completion",

"created": 1693542489,

"model": "gpt-4",

"prompt_annotations": [

{

"prompt_index": 0,

"content_filter_results": {

"hate": {

"filtered": false,

"severity": "safe"

},

"self_harm": {

"filtered": false,

"severity": "safe"

},

"sexual": {

"filtered": false,

"severity": "safe"

},

"violence": {

"filtered": false,

"severity": "safe"

}

}

}

],

"choices": [

{

"index": 0,

"finish_reason": "function_call",

"message": {

"role": "assistant",

"function_call": {

"name": "extract_all_info",

"arguments": "{\n\"name\": \"Enigma Zhao\",\n\"job_title\": \"Azure cloud engineer\",\n\"num_children\": 3,\n\"vehicle_make\": \"Audi\",\n\"vehicle_model\": \"A3\",\n\"company_name\": \"Microsoft\",\n\"favorite_vg_series\": \"The Legend of Zelda\"\n}"

}

},

"content_filter_results": {}

}

],

"usage": {

"completion_tokens": 67,

"prompt_tokens": 320,

"total_tokens": 387

}

}

Sample #2's results:

{

"id": "chatcmpl-7tq3QUA3o1yixTLZoPtuWOfKA38ub",

"object": "chat.completion",

"created": 1693542492,

"model": "gpt-4",

"prompt_annotations": [

{

"prompt_index": 0,

"content_filter_results": {

"hate": {

"filtered": false,

"severity": "safe"

},

"self_harm": {

"filtered": false,

"severity": "safe"

},

"sexual": {

"filtered": false,

"severity": "safe"

},

"violence": {

"filtered": false,

"severity": "safe"

}

}

}

],

"choices": [

{

"index": 0,

"finish_reason": "function_call",

"message": {

"role": "assistant",

"function_call": {

"name": "extract_person_info",

"arguments": "{\n\"name\": \"David Hundley\",\n\"job_title\": \"principal machine learning engineer\",\n\"num_children\": 2\n}"

}

},

"content_filter_results": {}

}

],

"usage": {

"completion_tokens": 33,

"prompt_tokens": 282,

"total_tokens": 315

}

}

Sample #3's results:

{

"id": "chatcmpl-7tq3RxN3tCLKbPadWkGQguploKFw2",

"object": "chat.completion",

"created": 1693542493,

"model": "gpt-4",

"prompt_annotations": [

{

"prompt_index": 0,

"content_filter_results": {

"hate": {

"filtered": false,

"severity": "safe"

},

"self_harm": {

"filtered": false,

"severity": "safe"

},

"sexual": {

"filtered": false,

"severity": "safe"

},

"violence": {

"filtered": false,

"severity": "safe"

}

}

}

],

"choices": [

{

"index": 0,

"finish_reason": "function_call",

"message": {

"role": "assistant",

"function_call": {

"name": "extract_vehicle_info",

"arguments": "{\n \"vehicle_make\": \"Kia\",\n \"vehicle_model\": \"Sportage\"\n}"

}

},

"content_filter_results": {}

}

],

"usage": {

"completion_tokens": 27,

"prompt_tokens": 268,

"total_tokens": 295

}

}

对于每个相应的提示,ChatGPT选择了正确的自定义函数,可以特别注意到API的响应对象中function_call下的name值。

除了这是一种方便的方法来确定使用哪个函数的参数之外,我们还可以通过编程将实际的自定义Python函数映射到此值,以适当地选择运行正确的代码。

# Iterating over the three samples

for i, sample in enumerate(samples):print(f'Sample #{i + 1}\'s results:')# Getting the response back from ChatGPT (gpt-4)openai_response = openai.ChatCompletion.create(engine = 'gpt-4',messages = [{'role': 'user', 'content': sample}],functions = my_custom_functions,function_call = 'auto')['choices'][0]['message']# Checking to see that a function call was invokedif openai_response.get('function_call'):# Checking to see which specific function call was invokedfunction_called = openai_response['function_call']['name']# Extracting the arguments of the function callfunction_args = json.loads(openai_response['function_call']['arguments'])# Invoking the proper functionsif function_called == 'extract_person_info':extract_person_info(*list(function_args.values()))elif function_called == 'extract_vehicle_info':extract_vehicle_info(*list(function_args.values()))elif function_called == 'extract_all_info':extract_all_info(*list(function_args.values()))

输出如下:

root@ubuntu0:~/python# python3 fc4.py

Sample #1's results:This person's name is Enigma Zhao. Their job title is Azure cloud engineer, and they have 3 children.They drive a Audi A3.They work for Microsoft.Their favorite video game series is The Legend of Zelda.Sample #2's results:

This person's name is David Hundley. Their job title is principal machine learning engineer, and they have 2 children.

Sample #3's results:

Vehicle make: Kia

Vehicle model: Sportage

OpenAI Function Calling with LangChain

考虑到LangChain在生成式AI社区中的受欢迎程度,添加一些代码来展示如何在LangChain中使用这个功能。

注意在使用前要安装 LangChain

pip3 install LangChain

# Importing the LangChain objects

from langchain.chat_models import ChatOpenAI

from langchain.chains import LLMChain

from langchain.prompts.chat import ChatPromptTemplate

from langchain.chains.openai_functions import create_structured_output_chain

# Setting the proper instance of the OpenAI model

#llm = ChatOpenAI(model = 'gpt-3.5-turbo-0613')**#model 格式发生改变,如果用ChatOpenAI(model = 'gpt-4'),系统会给警告

llm = ChatOpenAI(model_kwargs={'engine': 'gpt-4'})**# Setting a LangChain ChatPromptTemplate

chat_prompt_template = ChatPromptTemplate.from_template('{my_prompt}')# Setting the JSON schema for extracting vehicle information

langchain_json_schema = {'name': 'extract_vehicle_info','description': 'Extract the make and model of the person\'s car','type': 'object','properties': {'vehicle_make': {'title': 'Vehicle Make','type': 'string','description': 'Make of the person\'s vehicle'},'vehicle_model': {'title': 'Vehicle Model','type': 'string','description': 'Model of the person\'s vehicle'}}

}

# Defining the LangChain chain object for function calling

chain = create_structured_output_chain(output_schema = langchain_json_schema,llm = llm,prompt = chat_prompt_template)

# Getting results with a demo prompt

print(chain.run(my_prompt = about_me))

输出如下:

root@ubuntu0:~/python# python3 fc0.py

{'vehicle_make': 'Audi', 'vehicle_model': 'A3'}

小结

有几个和原文配置不一样的地方:

-

结合Azure Openai 的内容,将 API 的设置按照 Azure 给的方式结合文件(放 key),同时准备一个about-me.txt

#../keys/openai-keys.yaml API_KEY: 06332xxxxxxcd4e70bxxxxxx6ee135#../data/about-me.txt Hello! My name is zhang san. I am an Azure cloud engineer at Microsoft. I enjoy learning about AI and teaching what I learn back to others. I have two sons and a daughter. I drive a Audi A3, and my favorite video game series is The Legend of Zelda. -

基本配置按照 AOAI 的格式

openai.api_version = "2023-07-01-preview" openai.api_type = "azure" openai.api_base = "https://aoaifr01.openai.azure.com/" #Applying our API key openai.api_key = keys_yaml['API_KEY'] os.environ['OPENAI_API_KEY'] = keys_yaml['API_KEY'] #后面的多样本测试要用 -

Function Call 的配置,将 model = ‘gpt-3.5-turbo’ 改为 engine=“gpt-4”

openai_response = openai.ChatCompletion.create( engine="gpt-4", messages = [{'role': 'user', 'content': about_me}], functions = my_custom_functions, function_call = 'auto' -

OpenAI Function Calling with LangChain

-

先要安装 LangChain

pip3 install LangChain -

model 格式发生改变,如果用原文的ChatOpenAI(model = ‘gpt-4’),系统会给警告

llm = ChatOpenAI(model_kwargs={'engine': 'gpt-4'})

相关文章:

【Azure OpenAI】OpenAI Function Calling 101

概述 本文是结合 github:OpenAI Function Calling 101在 Azure OpenAI 上的实现: Github Function Calling 101 如何将函数调用与 Azure OpenAI 服务配合使用 - Azure OpenAI Service 使用像ChatGPT这样的llm的困难之一是它们不产生结构化的数据输出…...

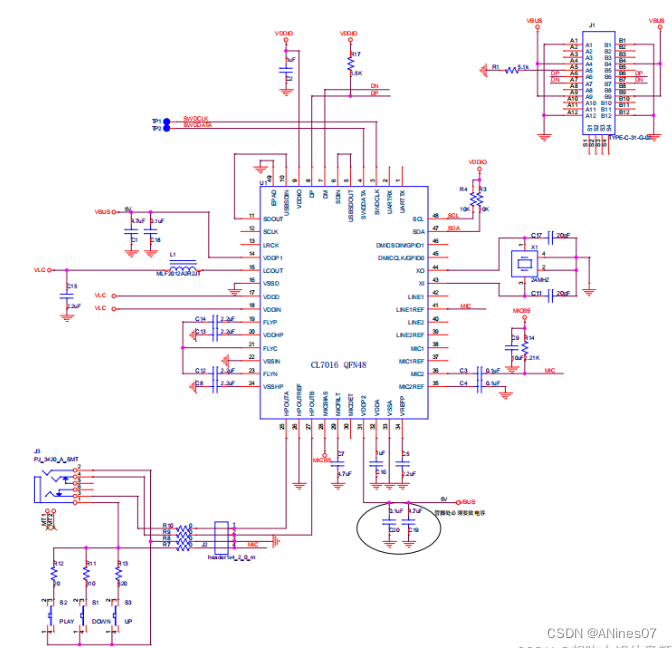

立晶半导体Cubic Lattice Inc 专攻音频ADC,音频DAC,音频CODEC,音频CLASS D等CL7016

概述: CL7016是一款高保真USB Type-C兼容音频编解码芯片。可以录制和回放有24比特音乐和声音。内置回放通路信号动态压缩, 最大42db录音通路增益,PDM数字麦克风,和立体声无需电容耳机驱动放大器。 5V单电源供电。兼容USB 2.0全速工…...

)

【Flutter】支持多平台 多端保存图片到本地相册 (兼容 Web端 移动端 android 保存到本地)

免责声明: 我只测试了Web端 和 Android端 可行哈 import dart:io; import package:flutter/services.dart; import package:http/http.dart as http; import package:universal_html/html.dart as html; import package:oktoast/oktoast.dart; import package:image_gallery_sa…...

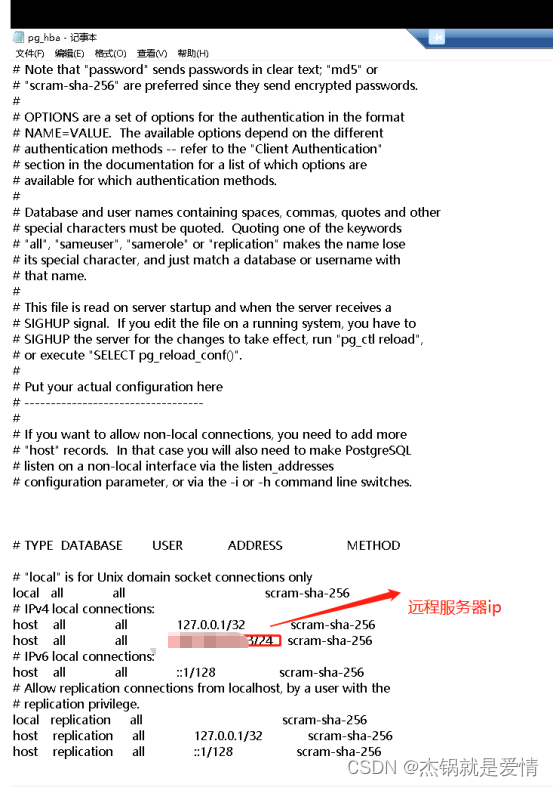

postgresql 安装教程

postgresql 安装教程 本文以window 15版本为教程 文章目录 postgresql 安装教程1.下载地址2.以管理员身份运行3.选择安装路径,点击Next4.选择组件(默认都勾选),点击Next5.选择数据存储路径,点击Next6.设置超级用户的…...

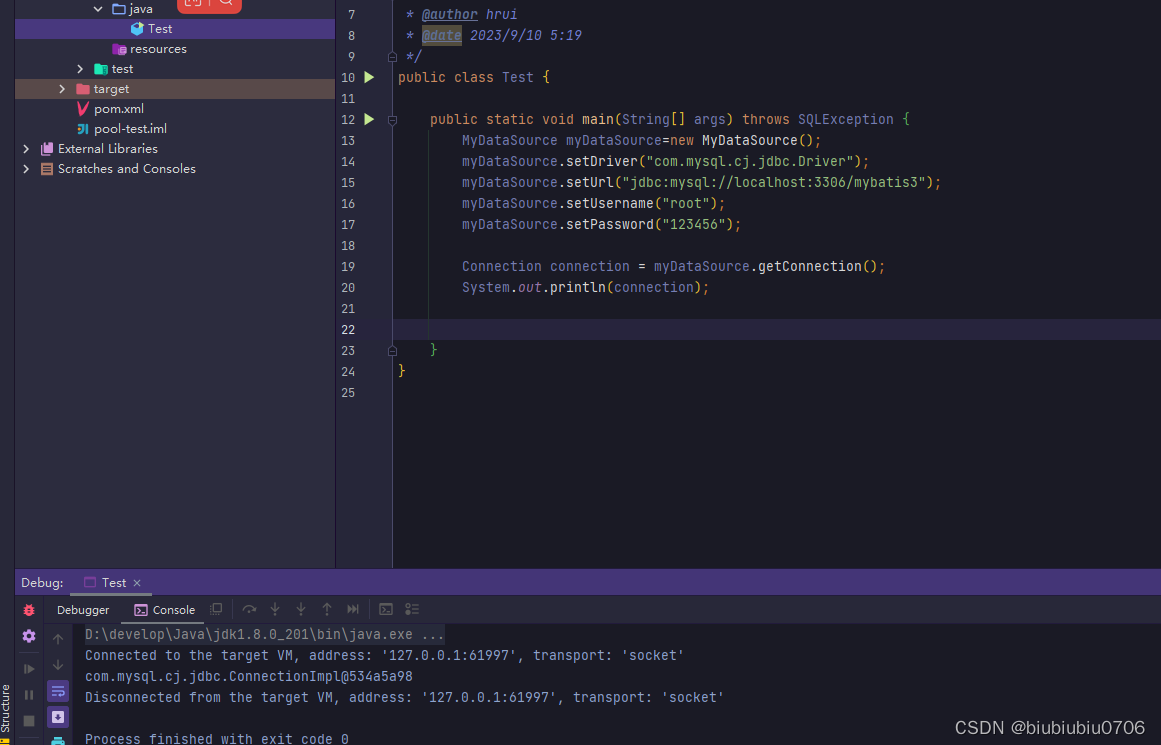

手写数据库连接池

数据库连接是个耗时操作.对数据库连接的高效管理影响应用程序的性能指标. 数据库连接池正是针对这个问题提出来的. 数据库连接池负责分配,管理和释放数据库连接.它允许应用程序重复使用一个现有的数据路连接,而不需要每次重新建立一个新的连接,利用数据库连接池将明显提升对数…...

在CentOS7上增加swap空间

在CentOS7上增加swap空间 在CentOS7上增加swap空间,可以按照以下步骤进行操作: 使用以下命令检查当前swap使用情况: swapon --show创建一个新的swap文件。你可以根据需要指定大小。例如,要创建一个2GB的swap文件,使用…...

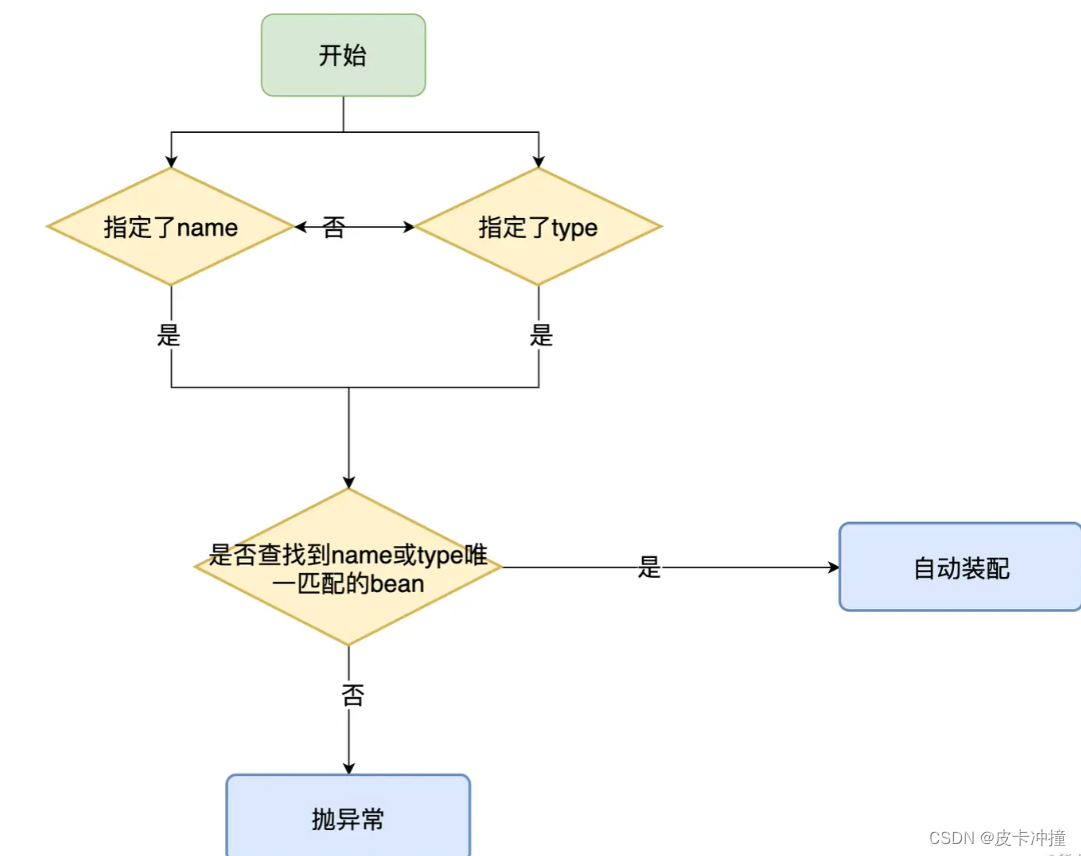

@Autowired和@Resource

文章目录 简介Autowired注解什么是Autowired注解Autowired注解的使用方式Autowired注解的优势和不足 Qualifier总结: Resource注解什么是Resource注解Resource注解的使用方式Resource注解的优势和不足 Autowired vs ResourceAutowired和Resource的区别为什么推荐使用…...

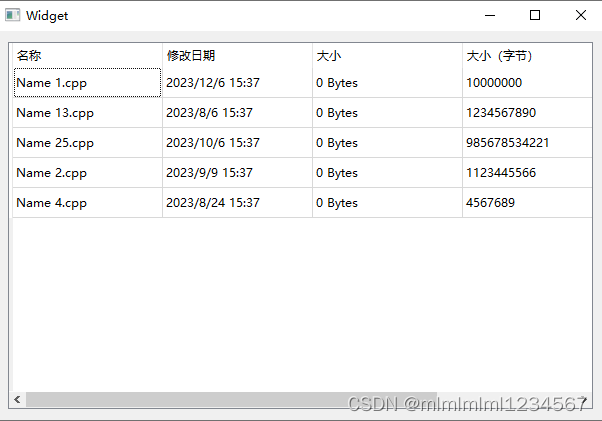

QTableView通过setColumnWidth设置了列宽无效的问题

在用到QT的QTableView时,为了显示效果,向手动的设置每一列的宽度,但是如下的代码是无效的。 ui->tableView->setColumnWidth(0,150);ui->tableView->setColumnWidth(1,150);ui->tableView->setColumnWidth(2,150);ui->t…...

【用unity实现100个游戏之10】复刻经典俄罗斯方块游戏

文章目录 前言开始项目网格生成Block方块脚本俄罗斯方块基类,绘制方块形状移动逻辑限制移动自由下落下落后设置对应风格为不可移动类型检查当前方块是否可以向指定方向移动旋转逻辑消除逻辑游戏结束逻辑怪物生成源码参考完结 前言 当今游戏产业中,经典游…...

Docker容器内数据备份到系统本地

Docker运行容器时没将目录映射出来,或者因docker容器内外数据不一致,导致docker运行错误的,可以使用以下步骤处理: 1.进入要备份的容器: docker exec -it <容器名称或ID> /bin/bash2.在容器内创建一个临时目录…...

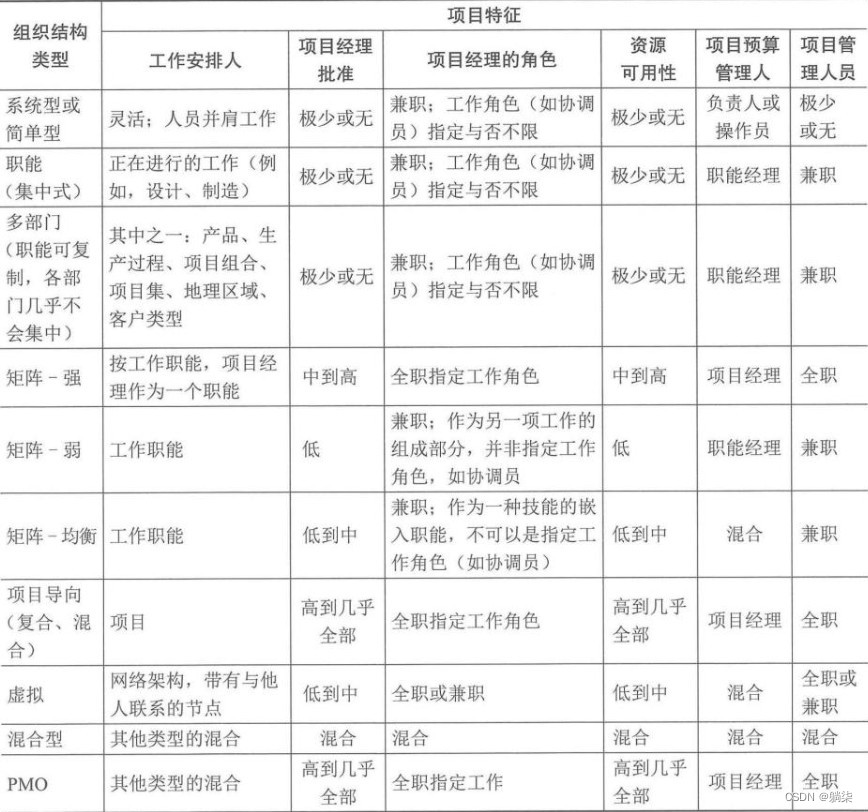

学信息系统项目管理师第4版系列06_项目管理概论

1. 项目基础 1.1. 项目是为创造独特的产品、服务或成果而进行的临时性工作 1.1.1. 独特的产品、服务或成果 1.1.2. 临时性工作 1.1.2.1. 项目有明确的起点和终点 1.1.2.2. 不一定意味着项目的持续时间短 1.1.2.3. 临时性是项目的特点,不是项目目标的特点 1.1…...

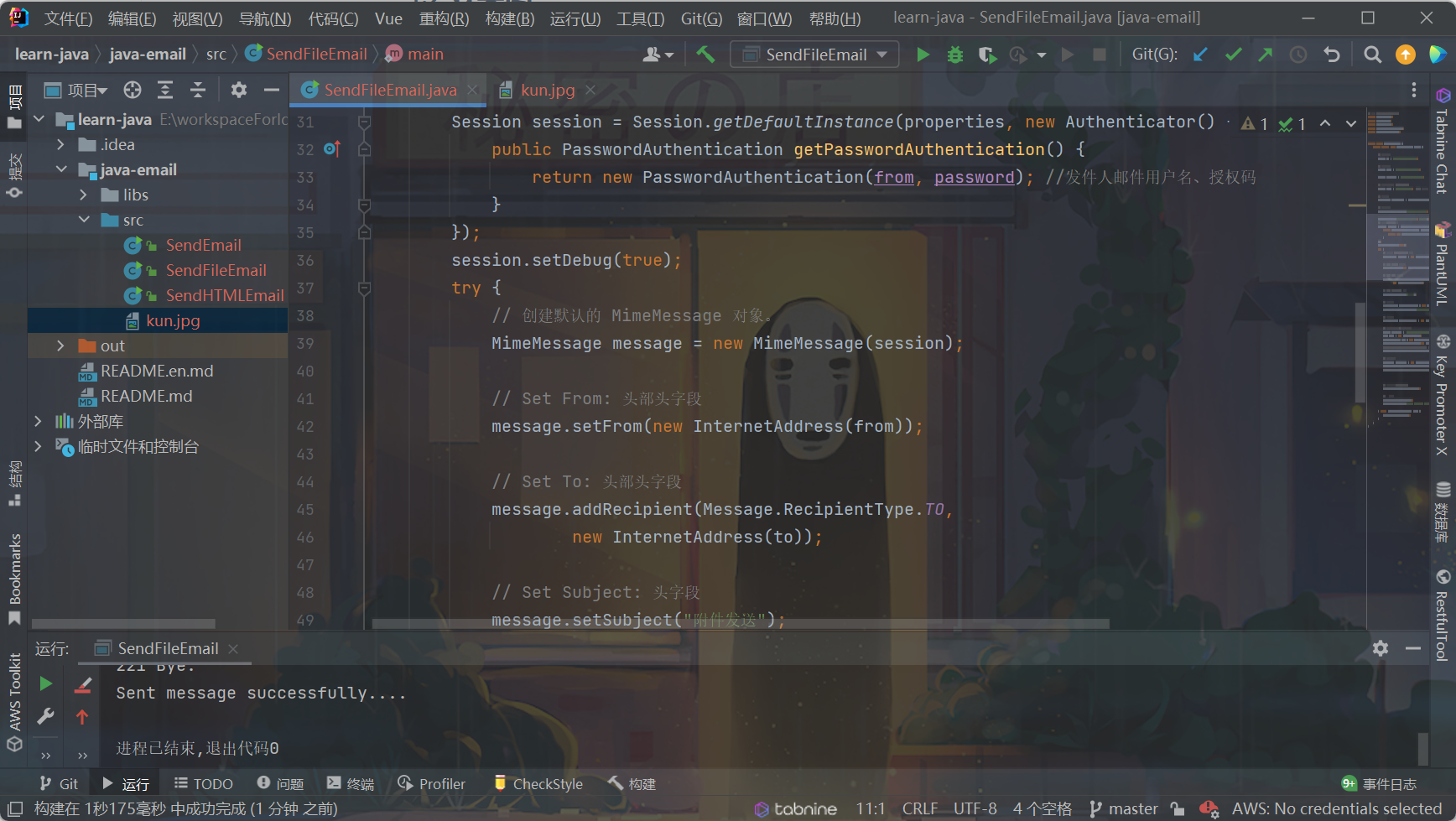

Java发送(QQ)邮箱、验证码发送

前言 使用Java应用程序发送 E-mail 十分简单,但是首先需要在项目中导入 JavaMail API 和Java Activation Framework (JAF) 的jar包。 菜鸟教程提供的下载链接: JavaMail mail.jar 1.4.5JAF(版本 1.1.1) activation.jar 1、准备…...

PostgresSQL----基于Kubernetes部署PostgresSQL

【PostgresSQL----基于Kubernetes部署PostgresSQL】 文章目录 一、创建SC、PV和PVC存储对象1.1 准备一个nfs服务器1.2 编写SC、PV、PVC等存储资源文件1.3 编写部署PostgresSQL数据库的资源声明文件 二、部署PostgresSQL2.1 部署 PV、PVC等存储对象2.2 部署PostgresSQL数据库2.3…...

7 个适合初学者的项目,可帮助您开始使用 ChatGPT

推荐:使用 NSDT场景编辑器快速搭建3D应用场景 从自动化日常任务到预测复杂模式,人工智能正在重塑行业并重新定义可能性。 当我们站在这场人工智能革命中时,我们必须了解它的潜力并将其整合到我们的日常工作流程中。 然而。。。我知道开始使…...

JDBC操作SQLite的工具类

直接调用无需拼装sql 注入依赖 <dependency><groupId>org.xerial</groupId><artifactId>sqlite-jdbc</artifactId><version>3.43.0.0</version></dependency>工具类 import org.sqlite.SQLiteConnection;/*** Author cpf* Dat…...

SEO百度优化基础知识全解析(了解百度SEO标签作用)

百度SEO优化的作用介绍: 百度SEO优化是指通过对网站的内部结构、外部链接、内容质量、用户体验等方面进行优化,提升网站在百度搜索结果中的排名,从而提高网站的曝光率和流量。通过百度SEO优化,可以让更多的潜在用户找到你的网站&…...

用python实现基本数据结构【03/4】

说明 如果需要用到这些知识却没有掌握,则会让人感到沮丧,也可能导致面试被拒。无论是花几天时间“突击”,还是利用零碎的时间持续学习,在数据结构上下点功夫都是值得的。那么Python 中有哪些数据结构呢?列表、字典、集…...

软件测试面试题汇总

测试技术面试题 软件测试面试时一份好简历的重要性 1、什么是兼容性测试?兼容性测试侧重哪些方面? 5 2、我现在有个程序,发现在Windows上运行得很慢,怎么判别是程序存在问题还是软硬件系统存在问题? 5 3、测试的策略…...

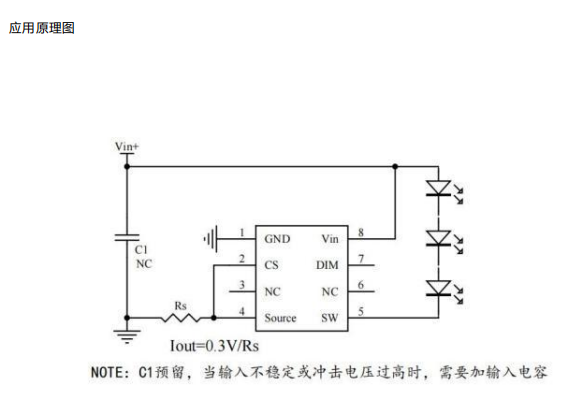

AP5101C 高压线性恒流IC 宽电压6-100V LED汽车大灯照明 台灯LED矿灯 指示灯电源驱动

产品描述 AP5101C 是一款高压线性 LED 恒流芯片 , 外围简单 、 内置功率管 , 适用于6- 100V 输入的高精度降压 LED 恒流驱动芯片。电流2.0A。AP5101C 可实现内置MOS 做 2.0A,外置 MOS 可做 3.0A 的。AP5101C 内置温度保护功能 ,温度保护点为…...

<模拟>)

【大数问题】字符串相减(大数相减)<模拟>

类似 【力扣】415. 字符串相加(大数相加),实现大数相减。 题解 模拟相减的过程,先一直使大数减小数,记录借位,最后再判断是否加负号。(中间需要删除前导0,例如10001-1000000001&am…...

网络六边形受到攻击

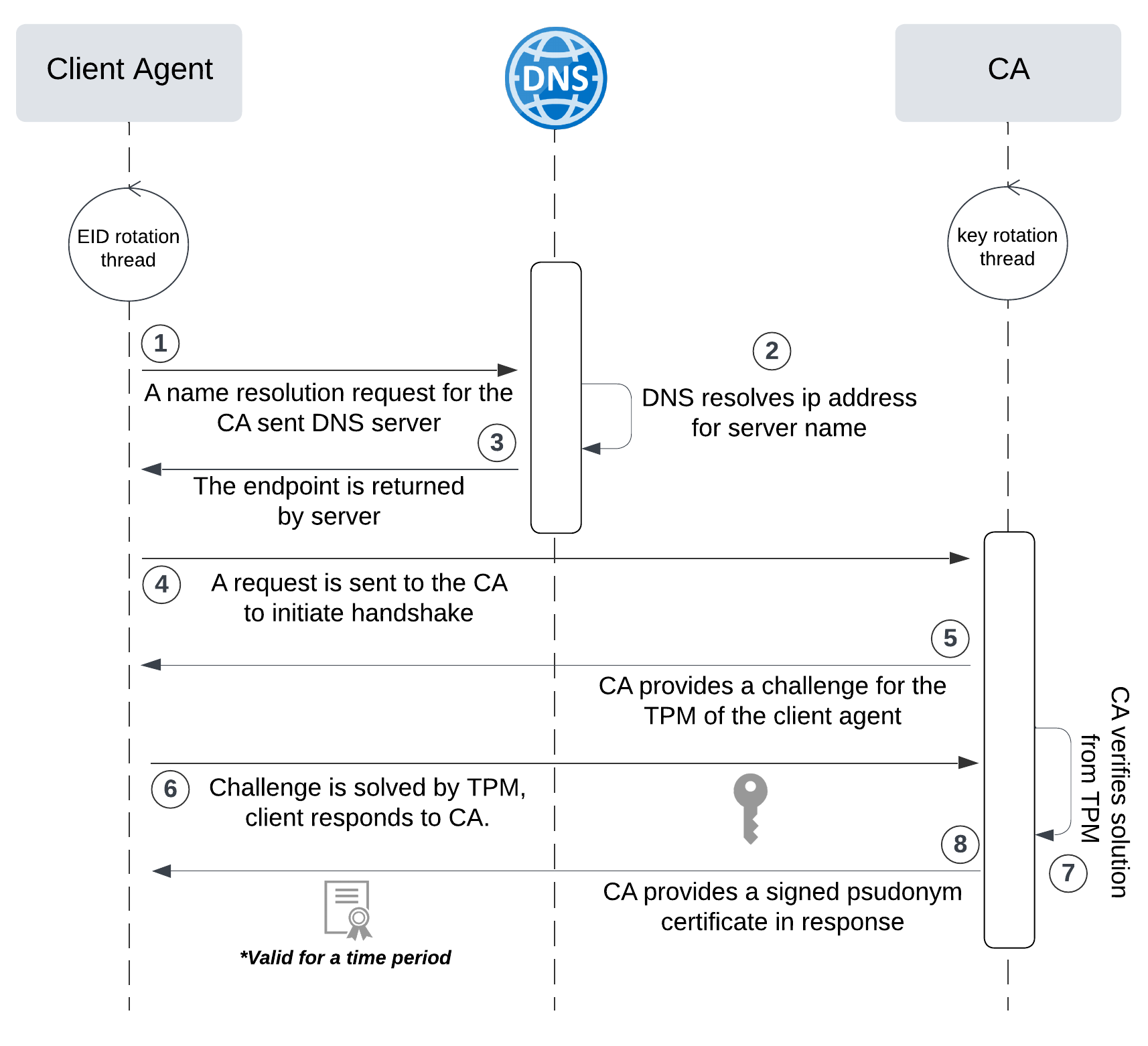

大家读完觉得有帮助记得关注和点赞!!! 抽象 现代智能交通系统 (ITS) 的一个关键要求是能够以安全、可靠和匿名的方式从互联车辆和移动设备收集地理参考数据。Nexagon 协议建立在 IETF 定位器/ID 分离协议 (…...

ES6从入门到精通:前言

ES6简介 ES6(ECMAScript 2015)是JavaScript语言的重大更新,引入了许多新特性,包括语法糖、新数据类型、模块化支持等,显著提升了开发效率和代码可维护性。 核心知识点概览 变量声明 let 和 const 取代 var…...

docker详细操作--未完待续

docker介绍 docker官网: Docker:加速容器应用程序开发 harbor官网:Harbor - Harbor 中文 使用docker加速器: Docker镜像极速下载服务 - 毫秒镜像 是什么 Docker 是一种开源的容器化平台,用于将应用程序及其依赖项(如库、运行时环…...

visual studio 2022更改主题为深色

visual studio 2022更改主题为深色 点击visual studio 上方的 工具-> 选项 在选项窗口中,选择 环境 -> 常规 ,将其中的颜色主题改成深色 点击确定,更改完成...

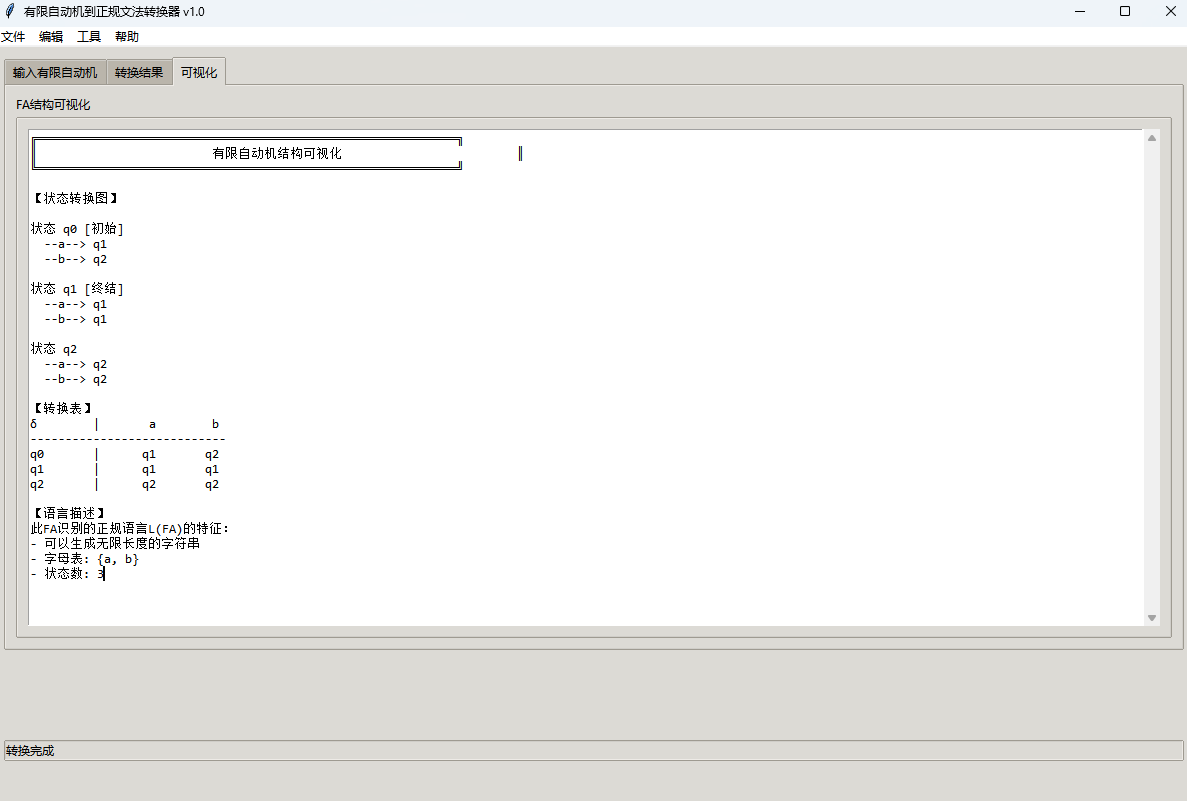

有限自动机到正规文法转换器v1.0

1 项目简介 这是一个功能强大的有限自动机(Finite Automaton, FA)到正规文法(Regular Grammar)转换器,它配备了一个直观且完整的图形用户界面,使用户能够轻松地进行操作和观察。该程序基于编译原理中的经典…...

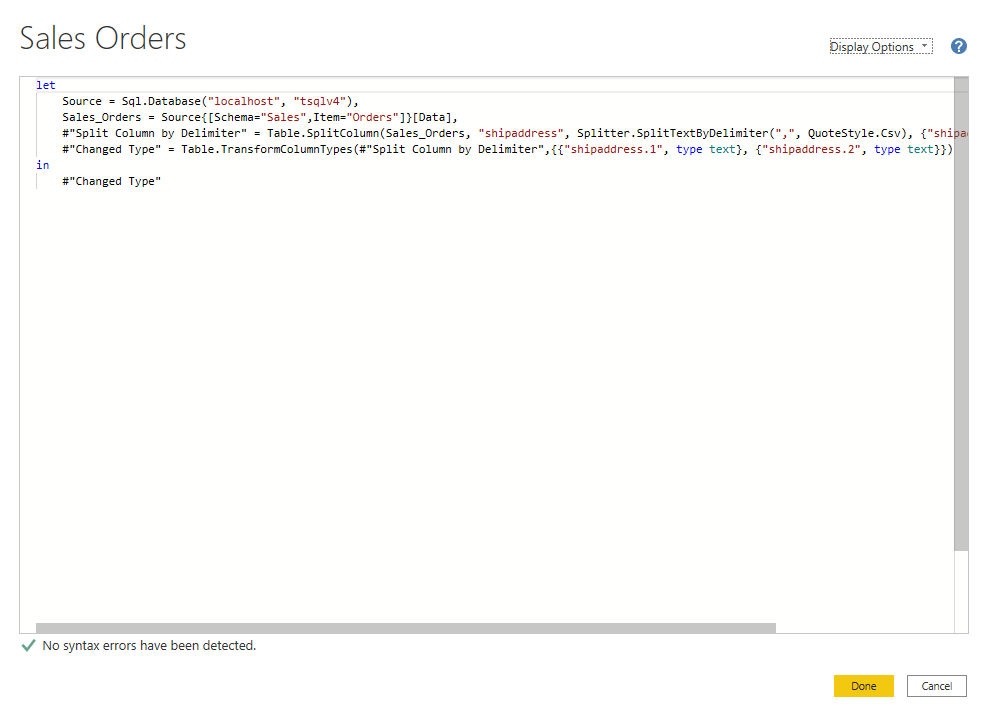

微软PowerBI考试 PL300-在 Power BI 中清理、转换和加载数据

微软PowerBI考试 PL300-在 Power BI 中清理、转换和加载数据 Power Query 具有大量专门帮助您清理和准备数据以供分析的功能。 您将了解如何简化复杂模型、更改数据类型、重命名对象和透视数据。 您还将了解如何分析列,以便知晓哪些列包含有价值的数据,…...

Razor编程中@Html的方法使用大全

文章目录 1. 基础HTML辅助方法1.1 Html.ActionLink()1.2 Html.RouteLink()1.3 Html.Display() / Html.DisplayFor()1.4 Html.Editor() / Html.EditorFor()1.5 Html.Label() / Html.LabelFor()1.6 Html.TextBox() / Html.TextBoxFor() 2. 表单相关辅助方法2.1 Html.BeginForm() …...

打手机检测算法AI智能分析网关V4守护公共/工业/医疗等多场景安全应用

一、方案背景 在现代生产与生活场景中,如工厂高危作业区、医院手术室、公共场景等,人员违规打手机的行为潜藏着巨大风险。传统依靠人工巡查的监管方式,存在效率低、覆盖面不足、判断主观性强等问题,难以满足对人员打手机行为精…...

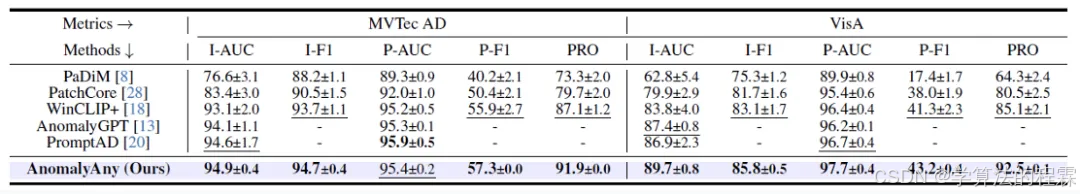

CVPR2025重磅突破:AnomalyAny框架实现单样本生成逼真异常数据,破解视觉检测瓶颈!

本文介绍了一种名为AnomalyAny的创新框架,该方法利用Stable Diffusion的强大生成能力,仅需单个正常样本和文本描述,即可生成逼真且多样化的异常样本,有效解决了视觉异常检测中异常样本稀缺的难题,为工业质检、医疗影像…...

区块链技术概述

区块链技术是一种去中心化、分布式账本技术,通过密码学、共识机制和智能合约等核心组件,实现数据不可篡改、透明可追溯的系统。 一、核心技术 1. 去中心化 特点:数据存储在网络中的多个节点(计算机),而非…...