One-Click Installation of a K8s Cluster with KubeSphere (Single Master Node), Personally Tested

1. Basic environment preparation

hostnamectl set-hostname master1 && bash # on 192.168.0.34

hostnamectl set-hostname node1 && bash # on 192.168.0.45

hostnamectl set-hostname node2 && bash # on 192.168.0.209

cat >> /etc/hosts << EOF

192.168.0.34 master1

192.168.0.45 node1

192.168.0.209 node2

EOF
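Because `cat >>` appends unconditionally, running the step above a second time duplicates the entries. A minimal sketch of an idempotent variant follows; the helper name `ensure_host_entry` and the `HOSTS_FILE` variable are illustrative assumptions and not part of the original procedure.

```shell
#!/bin/sh
# Sketch: append "IP NAME" to a hosts file only if NAME is not already mapped.
# HOSTS_FILE and ensure_host_entry are hypothetical names used for illustration.
HOSTS_FILE="${HOSTS_FILE:-/etc/hosts}"

ensure_host_entry() {
  he_ip="$1"
  he_name="$2"
  he_file="${3:-$HOSTS_FILE}"
  # Look for NAME as a whole field after the address, skipping comment lines.
  if awk -v n="$he_name" '
       !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
       END { exit !found }' "$he_file" 2>/dev/null; then
    return 0   # already present, nothing to do
  fi
  printf '%s %s\n' "$he_ip" "$he_name" >> "$he_file"
}

# Usage (as root, on every machine):
#   ensure_host_entry 192.168.0.34 master1
#   ensure_host_entry 192.168.0.45 node1
#   ensure_host_entry 192.168.0.209 node2
```

Calling the helper repeatedly leaves exactly one line per host name, so the step can be rerun safely.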

# Run on all machines

ssh-keygen -t rsa # press Enter at every prompt; do not set a passphrase

# Install the local SSH public key into the corresponding account on each remote host

for i in master1 node1 node2 ;do ssh-copy-id -i ~/.ssh/id_rsa.pub $i ;done
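Once the keys are copied, it can help to confirm that every host accepts a key-based login without prompting. The sketch below is one way to do that, under the assumption that the OpenSSH client is in use; `unreachable_hosts` and the `SSH_BIN` override are hypothetical names introduced only for illustration.

```shell
#!/bin/sh
# Sketch: report hosts that do not accept non-interactive (key-based) SSH logins.
# SSH_BIN is a hypothetical override hook for dry runs; it defaults to the real ssh client.
SSH_BIN="${SSH_BIN:-ssh}"

unreachable_hosts() {
  for uh_host in "$@"; do
    # BatchMode=yes makes ssh fail instead of asking for a password.
    "$SSH_BIN" -o BatchMode=yes -o ConnectTimeout=5 "$uh_host" true \
      </dev/null >/dev/null 2>&1 || echo "$uh_host"
  done
}

# Usage: unreachable_hosts master1 node1 node2
# Prints nothing when every host can be reached with the installed key.
```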

# Disable the firewall:

systemctl stop firewalld

systemctl disable firewalld

# Disable SELinux:

sed -i 's/enforcing/disabled/' /etc/selinux/config # permanent

setenforce 0 # temporary (until reboot)
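To confirm that the permanent change took effect, the configured mode can be read back from the file. This is a small sketch; `selinux_config_mode` is a hypothetical helper name, and the default path is the one used in the `sed` command above.

```shell
#!/bin/sh
# Sketch: print the mode set on the SELINUX= line of an SELinux config file.
# selinux_config_mode is a hypothetical helper name.
selinux_config_mode() {
  # Only the uncommented SELINUX= line matches; SELINUXTYPE= does not.
  awk -F= '/^SELINUX=/ { print $2 }' "${1:-/etc/selinux/config}"
}

# Usage: selinux_config_mode
# After the sed command above, this is expected to print "disabled".
```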

# Disable swap:

swapoff -a # temporary (until reboot)

sed -i 's/.*swap.*/#&/' /etc/fstab # permanent

# Time synchronization

yum install ntpdate -y

ntpdate time.windows.com

# Configure the yum repositories

yum -y install wget yum-utils # yum-utils provides yum-config-manager

sudo yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

rpm -ivh http://mirrors.aliyun.com/epel/epel-release-latest-7.noarch.rpm

# Install the dependency packages (the Kubernetes dependencies); install all of them

yum install -y ebtables socat ipset conntrack wget curl
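KubeKey's confirmation table later in this article lists these tools per node, so a quick local check before starting the installer can save a round trip. The following is a minimal sketch; `missing_tools` is a hypothetical helper name, and it assumes each package installs a command of the same name.

```shell
#!/bin/sh
# Sketch: print every command from the argument list that is not found on PATH.
# missing_tools is a hypothetical helper name.
missing_tools() {
  for mt_tool in "$@"; do
    command -v "$mt_tool" >/dev/null 2>&1 || echo "$mt_tool"
  done
}

# Usage: missing_tools ebtables socat ipset conntrack curl
# An empty result means all listed commands are available on this node.
```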

2. Install KubeSphere (run on the master node only)

export KKZONE=cn

curl -sfL https://get-kk.kubesphere.io | VERSION=v2.2.2 sh -

chmod +x kk

# Cluster configuration: create the configuration file config-sample.yaml

./kk create config --with-kubernetes v1.22.10 --with-kubesphere v3.3.0 # create the configuration file

[root@k8s-master ~]# ./kk create config --with-kubernetes v1.22.10 --with-kubesphere v3.3.0

Generate KubeKey config file successfully

[root@k8s-master ~]# ll

total 70340

-rw-r--r-- 1 root root 4773 Oct 22 18:29 config-sample.yaml

-rwxr-xr-x 1 1001 121 54910976 Jul 26 2022 kk

drwxr-xr-x 4 root root 4096 Oct 22 18:26 kubekey

-rw-r--r-- 1 root root 17102249 Oct 22 18:25 kubekey-v2.2.2-linux-amd64.tar.gz

[root@k8s-master ~]#

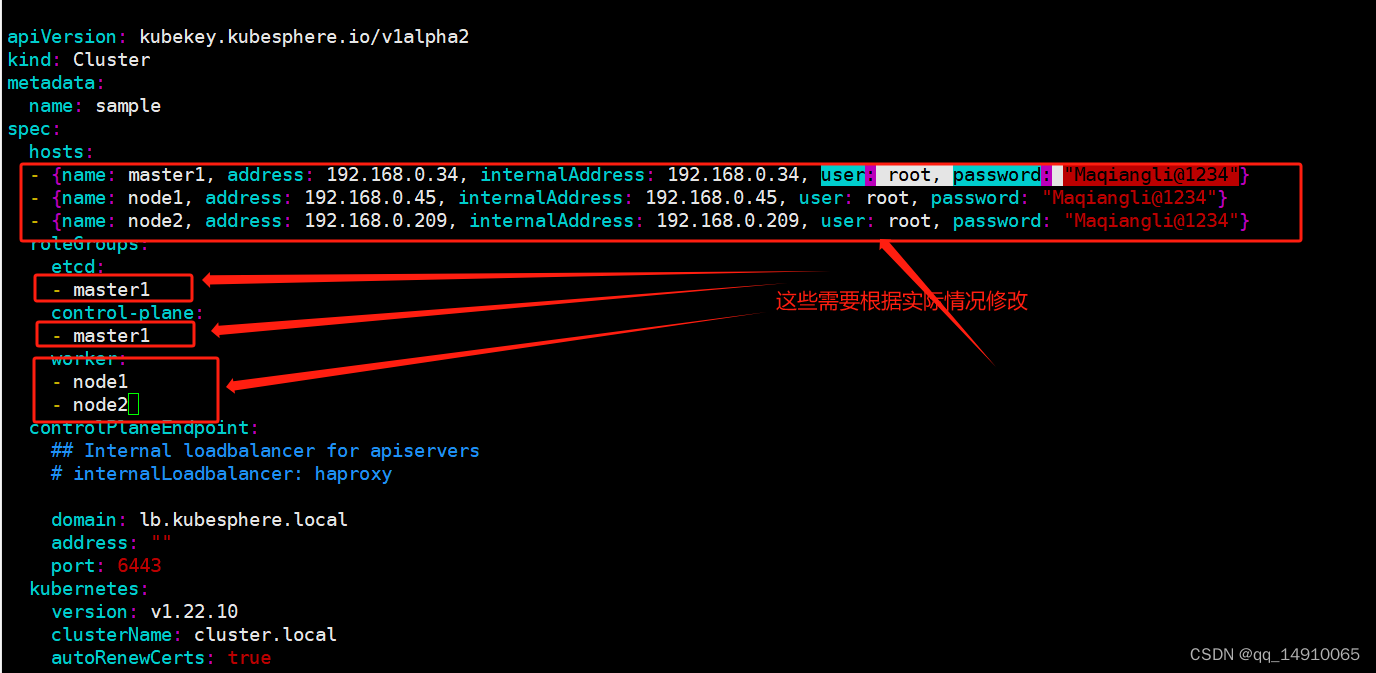

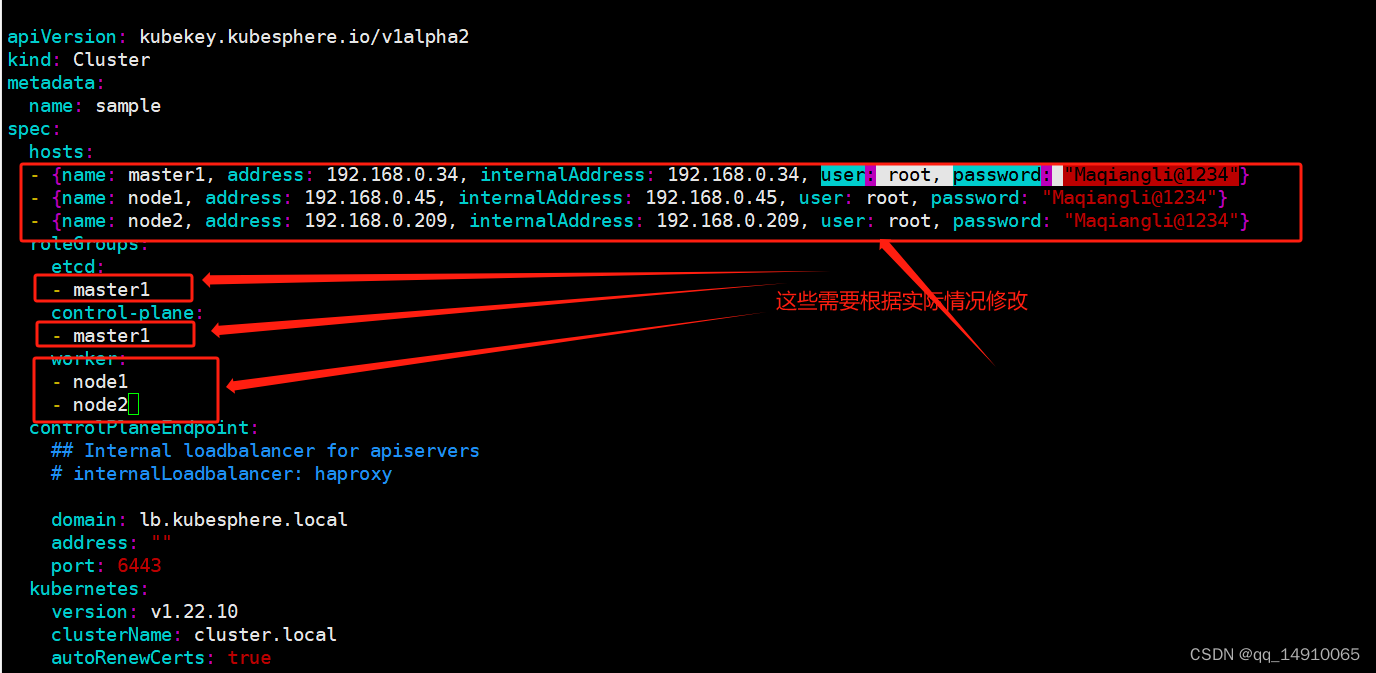

Edit config-sample.yaml
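The edited file itself is not reproduced in the text. In a KubeKey-generated configuration, each machine appears under `spec.hosts` as an entry containing `name: <hostname>`, so one simple sanity check after editing is to confirm that all three host names are present. The sketch below assumes that layout; `hosts_missing_from_config` is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: print host names that never appear as "name: <host>" in a KubeKey config file.
# hosts_missing_from_config is a hypothetical helper name.
hosts_missing_from_config() {
  hm_cfg="$1"
  shift
  for hm_host in "$@"; do
    # Require a delimiter after the name so that "node1" does not match "node10".
    grep -Eq "name:[[:space:]]*${hm_host}([,}[:space:]]|\$)" "$hm_cfg" || echo "$hm_host"
  done
}

# Usage: hosts_missing_from_config config-sample.yaml master1 node1 node2
# An empty result means every host name is mentioned in the file.
```

This only checks that the names are mentioned; it does not validate addresses, credentials, or role assignments.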

3. Install KubeSphere

# Run the installer with the configuration file

./kk create cluster -f config-sample.yaml

This is the complete installation log:

[root@master1 ~]# ./kk create cluster -f config-sample.yaml

 _   __      _          _   __

| | / /     | |        | | / /

| |/ / _   _| |__   ___| |/ /  ___ _   _

|    \| | | | '_ \ / _ \    \ / _ \ | | |

| |\  \ |_| | |_) |  __/ |\  \  __/ |_| |

\_| \_/\__,_|_.__/ \___\_| \_/\___|\__, |

                                    __/ |

                                   |___/

19:05:34 CST [GreetingsModule] Greetings

19:05:34 CST message: [master1]

Greetings, KubeKey!

19:05:34 CST message: [node2]

Greetings, KubeKey!

19:05:34 CST message: [node1]

Greetings, KubeKey!

19:05:34 CST success: [master1]

19:05:34 CST success: [node2]

19:05:34 CST success: [node1]

19:05:34 CST [NodePreCheckModule] A pre-check on nodes

19:05:35 CST success: [node2]

19:05:35 CST success: [master1]

19:05:35 CST success: [node1]

19:05:35 CST [ConfirmModule] Display confirmation form

+---------+------+------+---------+----------+-------+-------+---------+-----------+--------+--------+------------+------------+-------------+------------------+--------------+

| name | sudo | curl | openssl | ebtables | socat | ipset | ipvsadm | conntrack | chrony | docker | containerd | nfs client | ceph client | glusterfs client | time |

+---------+------+------+---------+----------+-------+-------+---------+-----------+--------+--------+------------+------------+-------------+------------------+--------------+

| master1 | y | y | y | y | y | y | | y | y | | | | | | CST 19:05:35 |

| node1 | y | y | y | y | y | y | | y | y | | | | | | CST 19:05:35 |

| node2 | y | y | y | y | y | y | | y | y | | | | | | CST 19:05:35 |

+---------+------+------+---------+----------+-------+-------+---------+-----------+--------+--------+------------+------------+-------------+------------------+--------------+

This is a simple check of your environment.

Before installation, ensure that your machines meet all requirements specified at

https://github.com/kubesphere/kubekey#requirements-and-recommendations

Continue this installation? [yes/no]: yes

19:05:37 CST success: [LocalHost]

19:05:37 CST [NodeBinariesModule] Download installation binaries

19:05:37 CST message: [localhost]

downloading amd64 kubeadm v1.22.10 ...

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 43.7M 100 43.7M 0 0 1026k 0 0:00:43 0:00:43 --:--:-- 1194k

19:06:21 CST message: [localhost]

downloading amd64 kubelet v1.22.10 ...

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 115M 100 115M 0 0 1024k 0 0:01:55 0:01:55 --:--:-- 1162k

19:08:18 CST message: [localhost]

downloading amd64 kubectl v1.22.10 ...

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 44.7M 100 44.7M 0 0 1031k 0 0:00:44 0:00:44 --:--:-- 1197k

19:09:02 CST message: [localhost]

downloading amd64 helm v3.6.3 ...

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 43.0M 100 43.0M 0 0 996k 0 0:00:44 0:00:44 --:--:-- 1024k

19:09:47 CST message: [localhost]

downloading amd64 kubecni v0.9.1 ...

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 37.9M 100 37.9M 0 0 1022k 0 0:00:37 0:00:37 --:--:-- 1173k

19:10:25 CST message: [localhost]

downloading amd64 crictl v1.24.0 ...

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 13.8M 100 13.8M 0 0 1049k 0 0:00:13 0:00:13 --:--:-- 1174k

19:10:38 CST message: [localhost]

downloading amd64 etcd v3.4.13 ...

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 16.5M 100 16.5M 0 0 1040k 0 0:00:16 0:00:16 --:--:-- 1142k

19:10:55 CST message: [localhost]

downloading amd64 docker 20.10.8 ...

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 58.1M 100 58.1M 0 0 24.8M 0 0:00:02 0:00:02 --:--:-- 24.8M

19:10:58 CST success: [LocalHost]

19:10:58 CST [ConfigureOSModule] Prepare to init OS

19:10:58 CST success: [node1]

19:10:58 CST success: [node2]

19:10:58 CST success: [master1]

19:10:58 CST [ConfigureOSModule] Generate init os script

19:10:58 CST success: [node1]

19:10:58 CST success: [node2]

19:10:58 CST success: [master1]

19:10:58 CST [ConfigureOSModule] Exec init os script

19:10:59 CST stdout: [node1]

setenforce: SELinux is disabled

Disabled

vm.swappiness = 1

net.core.somaxconn = 1024

net.ipv4.tcp_max_tw_buckets = 5000

net.ipv4.tcp_max_syn_backlog = 1024

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-arptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_local_reserved_ports = 30000-32767

vm.max_map_count = 262144

fs.inotify.max_user_instances = 524288

kernel.pid_max = 65535

19:10:59 CST stdout: [node2]

setenforce: SELinux is disabled

Disabled

vm.swappiness = 1

net.core.somaxconn = 1024

net.ipv4.tcp_max_tw_buckets = 5000

net.ipv4.tcp_max_syn_backlog = 1024

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-arptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_local_reserved_ports = 30000-32767

vm.max_map_count = 262144

fs.inotify.max_user_instances = 524288

kernel.pid_max = 65535

19:10:59 CST stdout: [master1]

setenforce: SELinux is disabled

Disabled

vm.swappiness = 1

net.core.somaxconn = 1024

net.ipv4.tcp_max_tw_buckets = 5000

net.ipv4.tcp_max_syn_backlog = 1024

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-arptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_local_reserved_ports = 30000-32767

vm.max_map_count = 262144

fs.inotify.max_user_instances = 524288

kernel.pid_max = 65535

19:10:59 CST success: [node1]

19:10:59 CST success: [node2]

19:10:59 CST success: [master1]

19:10:59 CST [ConfigureOSModule] configure the ntp server for each node

19:10:59 CST skipped: [node2]

19:10:59 CST skipped: [master1]

19:10:59 CST skipped: [node1]

19:10:59 CST [KubernetesStatusModule] Get kubernetes cluster status

19:10:59 CST success: [master1]

19:10:59 CST [InstallContainerModule] Sync docker binaries

19:11:03 CST success: [master1]

19:11:03 CST success: [node2]

19:11:03 CST success: [node1]

19:11:03 CST [InstallContainerModule] Generate docker service

19:11:04 CST success: [node1]

19:11:04 CST success: [node2]

19:11:04 CST success: [master1]

19:11:04 CST [InstallContainerModule] Generate docker config

19:11:04 CST success: [node1]

19:11:04 CST success: [master1]

19:11:04 CST success: [node2]

19:11:04 CST [InstallContainerModule] Enable docker

19:11:05 CST success: [node1]

19:11:05 CST success: [master1]

19:11:05 CST success: [node2]

19:11:05 CST [InstallContainerModule] Add auths to container runtime

19:11:05 CST skipped: [node2]

19:11:05 CST skipped: [node1]

19:11:05 CST skipped: [master1]

19:11:05 CST [PullModule] Start to pull images on all nodes

19:11:05 CST message: [node2]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/pause:3.5

19:11:05 CST message: [master1]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/pause:3.5

19:11:05 CST message: [node1]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/pause:3.5

19:11:07 CST message: [node2]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/kube-proxy:v1.22.10

19:11:07 CST message: [node1]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/kube-proxy:v1.22.10

19:11:07 CST message: [master1]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/kube-apiserver:v1.22.10

19:11:21 CST message: [node2]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/coredns:1.8.0

19:11:21 CST message: [node1]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/coredns:1.8.0

19:11:24 CST message: [master1]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/kube-controller-manager:v1.22.10

19:11:29 CST message: [node2]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/k8s-dns-node-cache:1.15.12

19:11:29 CST message: [node1]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/k8s-dns-node-cache:1.15.12

19:11:41 CST message: [master1]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/kube-scheduler:v1.22.10

19:11:42 CST message: [node1]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/kube-controllers:v3.23.2

19:11:49 CST message: [master1]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/kube-proxy:v1.22.10

19:11:58 CST message: [node1]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/cni:v3.23.2

19:12:04 CST message: [master1]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/coredns:1.8.0

19:12:12 CST message: [master1]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/k8s-dns-node-cache:1.15.12

19:12:26 CST message: [master1]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/kube-controllers:v3.23.2

19:12:42 CST message: [master1]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/cni:v3.23.2

19:12:42 CST message: [node1]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/node:v3.23.2

19:13:12 CST message: [node2]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/kube-controllers:v3.23.2

19:13:24 CST message: [node1]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/pod2daemon-flexvol:v3.23.2

19:13:25 CST message: [master1]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/node:v3.23.2

19:13:28 CST message: [node2]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/cni:v3.23.2

19:14:07 CST message: [master1]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/pod2daemon-flexvol:v3.23.2

19:14:13 CST message: [node2]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/node:v3.23.2

19:14:55 CST message: [node2]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/pod2daemon-flexvol:v3.23.2

19:14:59 CST success: [node1]

19:14:59 CST success: [master1]

19:14:59 CST success: [node2]

19:14:59 CST [ETCDPreCheckModule] Get etcd status

19:14:59 CST success: [master1]

19:14:59 CST [CertsModule] Fetch etcd certs

19:14:59 CST success: [master1]

19:14:59 CST [CertsModule] Generate etcd Certs

[certs] Generating "ca" certificate and key

[certs] admin-master1 serving cert is signed for DNS names [etcd etcd.kube-system etcd.kube-system.svc etcd.kube-system.svc.cluster.local lb.kubesphere.local localhost master1 node1 node2] and IPs [127.0.0.1 ::1 192.168.0.34 192.168.0.45 192.168.0.209]

[certs] member-master1 serving cert is signed for DNS names [etcd etcd.kube-system etcd.kube-system.svc etcd.kube-system.svc.cluster.local lb.kubesphere.local localhost master1 node1 node2] and IPs [127.0.0.1 ::1 192.168.0.34 192.168.0.45 192.168.0.209]

[certs] node-master1 serving cert is signed for DNS names [etcd etcd.kube-system etcd.kube-system.svc etcd.kube-system.svc.cluster.local lb.kubesphere.local localhost master1 node1 node2] and IPs [127.0.0.1 ::1 192.168.0.34 192.168.0.45 192.168.0.209]

19:15:00 CST success: [LocalHost]

19:15:00 CST [CertsModule] Synchronize certs file

19:15:01 CST success: [master1]

19:15:01 CST [CertsModule] Synchronize certs file to master

19:15:01 CST skipped: [master1]

19:15:01 CST [InstallETCDBinaryModule] Install etcd using binary

19:15:02 CST success: [master1]

19:15:02 CST [InstallETCDBinaryModule] Generate etcd service

19:15:02 CST success: [master1]

19:15:02 CST [InstallETCDBinaryModule] Generate access address

19:15:02 CST success: [master1]

19:15:02 CST [ETCDConfigureModule] Health check on exist etcd

19:15:02 CST skipped: [master1]

19:15:02 CST [ETCDConfigureModule] Generate etcd.env config on new etcd

19:15:02 CST success: [master1]

19:15:02 CST [ETCDConfigureModule] Refresh etcd.env config on all etcd

19:15:02 CST success: [master1]

19:15:02 CST [ETCDConfigureModule] Restart etcd

19:15:06 CST stdout: [master1]

Created symlink from /etc/systemd/system/multi-user.target.wants/etcd.service to /etc/systemd/system/etcd.service.

19:15:06 CST success: [master1]

19:15:06 CST [ETCDConfigureModule] Health check on all etcd

19:15:06 CST success: [master1]

19:15:06 CST [ETCDConfigureModule] Refresh etcd.env config to exist mode on all etcd

19:15:06 CST success: [master1]

19:15:06 CST [ETCDConfigureModule] Health check on all etcd

19:15:06 CST success: [master1]

19:15:06 CST [ETCDBackupModule] Backup etcd data regularly

19:15:06 CST success: [master1]

19:15:06 CST [ETCDBackupModule] Generate backup ETCD service

19:15:06 CST success: [master1]

19:15:06 CST [ETCDBackupModule] Generate backup ETCD timer

19:15:07 CST success: [master1]

19:15:07 CST [ETCDBackupModule] Enable backup etcd service

19:15:07 CST success: [master1]

19:15:07 CST [InstallKubeBinariesModule] Synchronize kubernetes binaries

19:15:15 CST success: [master1]

19:15:15 CST success: [node2]

19:15:15 CST success: [node1]

19:15:15 CST [InstallKubeBinariesModule] Synchronize kubelet

19:15:15 CST success: [node2]

19:15:15 CST success: [master1]

19:15:15 CST success: [node1]

19:15:15 CST [InstallKubeBinariesModule] Generate kubelet service

19:15:15 CST success: [node2]

19:15:15 CST success: [node1]

19:15:15 CST success: [master1]

19:15:15 CST [InstallKubeBinariesModule] Enable kubelet service

19:15:15 CST success: [node1]

19:15:15 CST success: [node2]

19:15:15 CST success: [master1]

19:15:15 CST [InstallKubeBinariesModule] Generate kubelet env

19:15:16 CST success: [master1]

19:15:16 CST success: [node2]

19:15:16 CST success: [node1]

19:15:16 CST [InitKubernetesModule] Generate kubeadm config

19:15:16 CST success: [master1]

19:15:16 CST [InitKubernetesModule] Init cluster using kubeadm

19:15:27 CST stdout: [master1]

W1022 19:15:16.479674 23830 utils.go:69] The recommended value for "clusterDNS" in "KubeletConfiguration" is: [10.233.0.10]; the provided value is: [169.254.25.10]

[init] Using Kubernetes version: v1.22.10

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local lb.kubesphere.local localhost master1 master1.cluster.local node1 node1.cluster.local node2 node2.cluster.local] and IPs [10.233.0.1 192.168.0.34 127.0.0.1 192.168.0.45 192.168.0.209]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] External etcd mode: Skipping etcd/ca certificate authority generation

[certs] External etcd mode: Skipping etcd/server certificate generation

[certs] External etcd mode: Skipping etcd/peer certificate generation

[certs] External etcd mode: Skipping etcd/healthcheck-client certificate generation

[certs] External etcd mode: Skipping apiserver-etcd-client certificate generation

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 7.002336 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.22" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node master1 as control-plane by adding the labels: [node-role.kubernetes.io/master(deprecated) node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node master1 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: 1zr4e1.dj4l7h0k6rblrzb7

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities

and service account keys on each node and then running the following as root:

kubeadm join lb.kubesphere.local:6443 --token 1zr4e1.dj4l7h0k6rblrzb7 \
--discovery-token-ca-cert-hash sha256:34a0b4f8551f17b5d032bd9d9d3dd1d553fbabc7f0ee09734085e5d531bd6418 \
--control-plane

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join lb.kubesphere.local:6443 --token 1zr4e1.dj4l7h0k6rblrzb7 \
--discovery-token-ca-cert-hash sha256:34a0b4f8551f17b5d032bd9d9d3dd1d553fbabc7f0ee09734085e5d531bd6418

19:15:27 CST success: [master1]

19:15:27 CST [InitKubernetesModule] Copy admin.conf to ~/.kube/config

19:15:27 CST success: [master1]

19:15:27 CST [InitKubernetesModule] Remove master taint

19:15:27 CST skipped: [master1]

19:15:27 CST [InitKubernetesModule] Add worker label

19:15:27 CST skipped: [master1]

19:15:27 CST [ClusterDNSModule] Generate coredns service

19:15:28 CST success: [master1]

19:15:28 CST [ClusterDNSModule] Override coredns service

19:15:28 CST stdout: [master1]

service "kube-dns" deleted

19:15:29 CST stdout: [master1]

service/coredns created

Warning: resource clusterroles/system:coredns is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.

clusterrole.rbac.authorization.k8s.io/system:coredns configured

19:15:29 CST success: [master1]

19:15:29 CST [ClusterDNSModule] Generate nodelocaldns

19:15:29 CST success: [master1]

19:15:29 CST [ClusterDNSModule] Deploy nodelocaldns

19:15:29 CST stdout: [master1]

serviceaccount/nodelocaldns created

daemonset.apps/nodelocaldns created

19:15:29 CST success: [master1]

19:15:29 CST [ClusterDNSModule] Generate nodelocaldns configmap

19:15:29 CST success: [master1]

19:15:29 CST [ClusterDNSModule] Apply nodelocaldns configmap

19:15:30 CST stdout: [master1]

configmap/nodelocaldns created

19:15:30 CST success: [master1]

19:15:30 CST [KubernetesStatusModule] Get kubernetes cluster status

19:15:30 CST stdout: [master1]

v1.22.10

19:15:30 CST stdout: [master1]

master1 v1.22.10 [map[address:192.168.0.34 type:InternalIP] map[address:master1 type:Hostname]]

19:15:30 CST stdout: [master1]

I1022 19:15:30.575041 25671 version.go:255] remote version is much newer: v1.28.2; falling back to: stable-1.22

[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[upload-certs] Using certificate key:

63ee559515c0d77547d1fcd24a58242a3199e88ed8a320321b05d81eb250274a

19:15:30 CST stdout: [master1]

secret/kubeadm-certs patched

19:15:31 CST stdout: [master1]

secret/kubeadm-certs patched

19:15:31 CST stdout: [master1]

secret/kubeadm-certs patched

19:15:31 CST stdout: [master1]

9h3nip.2552zirb9kdsggxw

19:15:31 CST success: [master1]

19:15:31 CST [JoinNodesModule] Generate kubeadm config

19:15:31 CST skipped: [master1]

19:15:31 CST success: [node1]

19:15:31 CST success: [node2]

19:15:31 CST [JoinNodesModule] Join control-plane node

19:15:31 CST skipped: [master1]

19:15:31 CST [JoinNodesModule] Join worker node

19:15:49 CST stdout: [node2]

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

W1022 19:15:44.065251 11754 utils.go:69] The recommended value for "clusterDNS" in "KubeletConfiguration" is: [10.233.0.10]; the provided value is: [169.254.25.10]

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

19:15:49 CST stdout: [node1]

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

W1022 19:15:44.065086 11775 utils.go:69] The recommended value for "clusterDNS" in "KubeletConfiguration" is: [10.233.0.10]; the provided value is: [169.254.25.10]

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

19:15:49 CST success: [node2]

19:15:49 CST success: [node1]

19:15:49 CST [JoinNodesModule] Copy admin.conf to ~/.kube/config

19:15:49 CST skipped: [master1]

19:15:49 CST [JoinNodesModule] Remove master taint

19:15:49 CST skipped: [master1]

19:15:49 CST [JoinNodesModule] Add worker label to master

19:15:49 CST skipped: [master1]

19:15:49 CST [JoinNodesModule] Synchronize kube config to worker

19:15:49 CST success: [node1]

19:15:49 CST success: [node2]

19:15:49 CST [JoinNodesModule] Add worker label to worker

19:15:50 CST stdout: [node1]

node/node1 labeled

19:15:50 CST stdout: [node2]

node/node2 labeled

19:15:50 CST success: [node1]

19:15:50 CST success: [node2]

19:15:50 CST [DeployNetworkPluginModule] Generate calico

19:15:50 CST success: [master1]

19:15:50 CST [DeployNetworkPluginModule] Deploy calico

19:15:51 CST stdout: [master1]

configmap/calico-config created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/caliconodestatuses.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipreservations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

daemonset.apps/calico-node created

serviceaccount/calico-node created

deployment.apps/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

poddisruptionbudget.policy/calico-kube-controllers created

19:15:51 CST success: [master1]

19:15:51 CST [ConfigureKubernetesModule] Configure kubernetes

19:15:51 CST success: [node2]

19:15:51 CST success: [master1]

19:15:51 CST success: [node1]

19:15:51 CST [ChownModule] Chown user $HOME/.kube dir

19:15:51 CST success: [node2]

19:15:51 CST success: [node1]

19:15:51 CST success: [master1]

19:15:51 CST [AutoRenewCertsModule] Generate k8s certs renew script

19:15:51 CST success: [master1]

19:15:51 CST [AutoRenewCertsModule] Generate k8s certs renew service

19:15:51 CST success: [master1]

19:15:51 CST [AutoRenewCertsModule] Generate k8s certs renew timer

19:15:52 CST success: [master1]

19:15:52 CST [AutoRenewCertsModule] Enable k8s certs renew service

19:15:52 CST success: [master1]

19:15:52 CST [SaveKubeConfigModule] Save kube config as a configmap

19:15:52 CST success: [LocalHost]

19:15:52 CST [AddonsModule] Install addons

19:15:52 CST success: [LocalHost]

19:15:52 CST [DeployStorageClassModule] Generate OpenEBS manifest

19:15:52 CST success: [master1]

19:15:52 CST [DeployStorageClassModule] Deploy OpenEBS as cluster default StorageClass

19:15:54 CST success: [master1]

19:15:54 CST [DeployKubeSphereModule] Generate KubeSphere ks-installer crd manifests

19:15:54 CST success: [master1]

19:15:54 CST [DeployKubeSphereModule] Apply ks-installer

19:15:56 CST stdout: [master1]

namespace/kubesphere-system created

serviceaccount/ks-installer created

customresourcedefinition.apiextensions.k8s.io/clusterconfigurations.installer.kubesphere.io created

clusterrole.rbac.authorization.k8s.io/ks-installer created

clusterrolebinding.rbac.authorization.k8s.io/ks-installer created

deployment.apps/ks-installer created

19:15:56 CST success: [master1]

19:15:56 CST [DeployKubeSphereModule] Add config to ks-installer manifests

19:15:56 CST success: [master1]

19:15:56 CST [DeployKubeSphereModule] Create the kubesphere namespace

19:15:57 CST success: [master1]

19:15:57 CST [DeployKubeSphereModule] Setup ks-installer config

19:15:58 CST stdout: [master1]

secret/kube-etcd-client-certs created

19:15:58 CST success: [master1]

19:15:58 CST [DeployKubeSphereModule] Apply ks-installer

19:15:58 CST stdout: [master1]

namespace/kubesphere-system unchanged

serviceaccount/ks-installer unchanged

customresourcedefinition.apiextensions.k8s.io/clusterconfigurations.installer.kubesphere.io unchanged

clusterrole.rbac.authorization.k8s.io/ks-installer unchanged

clusterrolebinding.rbac.authorization.k8s.io/ks-installer unchanged

deployment.apps/ks-installer unchanged

clusterconfiguration.installer.kubesphere.io/ks-installer created

19:15:58 CST success: [master1]

#####################################################

### Welcome to KubeSphere! ###

#####################################################

Console: http://192.168.0.34:30880

Account: admin

Password: P@88w0rd

NOTES:

1. After you log into the console, please check the monitoring status of service components in "Cluster Management". If any service is not ready, please wait patiently until all components are up and running.

2. Please change the default password after login.

#####################################################

https://kubesphere.io 2023-10-22 19:23:58

#####################################################

19:24:01 CST success: [master1]

19:24:01 CST Pipeline[CreateClusterPipeline] execute successfully

Installation is complete.

Please check the result using the command:

kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l 'app in (ks-install, ks-installer)' -o jsonpath='{.items[0].metadata.name}') -f

[root@master1 ~]#

[root@master1 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master1 Ready control-plane,master 12m v1.22.10

node1 Ready worker 12m v1.22.10

node2 Ready worker 12m v1.22.10

[root@master1 ~]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-69cfcfdf6c-98sh6 1/1 Running 1 (12m ago) 12m

calico-node-tk86b 1/1 Running 0 12m

calico-node-tsdll 1/1 Running 0 12m

calico-node-xj82j 1/1 Running 0 12m

coredns-5495dd7c88-j7tj2 1/1 Running 0 12m

coredns-5495dd7c88-l4lt9 1/1 Running 0 12m

kube-apiserver-master1 1/1 Running 0 13m

kube-controller-manager-master1 1/1 Running 0 13m

kube-proxy-n6sxv 1/1 Running 0 12m

kube-proxy-nzhx8 1/1 Running 0 12m

kube-proxy-wlb9d 1/1 Running 0 12m

kube-scheduler-master1 1/1 Running 0 13m

nodelocaldns-5jxcv 1/1 Running 0 12m

nodelocaldns-lrhg2 1/1 Running 0 12m

nodelocaldns-mcsdt 1/1 Running 0 12m

openebs-localpv-provisioner-6f8b56f75-q27h4 1/1 Running 0 12m

snapshot-controller-0 1/1 Running 0 11m

[root@master1 ~]# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-69cfcfdf6c-98sh6 1/1 Running 1 (12m ago) 12m

kube-system calico-node-tk86b 1/1 Running 0 12m

kube-system calico-node-tsdll 1/1 Running 0 12m

kube-system calico-node-xj82j 1/1 Running 0 12m

kube-system coredns-5495dd7c88-j7tj2 1/1 Running 0 13m

kube-system coredns-5495dd7c88-l4lt9 1/1 Running 0 13m

kube-system kube-apiserver-master1 1/1 Running 0 13m

kube-system kube-controller-manager-master1 1/1 Running 0 13m

kube-system kube-proxy-n6sxv 1/1 Running 0 13m

kube-system kube-proxy-nzhx8 1/1 Running 0 13m

kube-system kube-proxy-wlb9d 1/1 Running 0 13m

kube-system kube-scheduler-master1 1/1 Running 0 13m

kube-system nodelocaldns-5jxcv 1/1 Running 0 13m

kube-system nodelocaldns-lrhg2 1/1 Running 0 13m

kube-system nodelocaldns-mcsdt 1/1 Running 0 13m

kube-system openebs-localpv-provisioner-6f8b56f75-q27h4 1/1 Running 0 12m

kube-system snapshot-controller-0 1/1 Running 0 11m

kubesphere-controls-system default-http-backend-56d9d4fdf7-g4942 1/1 Running 0 10m

kubesphere-controls-system kubectl-admin-7685cdd85b-w9hzh 1/1 Running 0 5m30s

kubesphere-monitoring-system alertmanager-main-0 2/2 Running 0 8m2s

kubesphere-monitoring-system alertmanager-main-1 2/2 Running 0 8m1s

kubesphere-monitoring-system alertmanager-main-2 2/2 Running 0 8m

kubesphere-monitoring-system kube-state-metrics-89f49579b-xpwj7 3/3 Running 0 8m13s

kubesphere-monitoring-system node-exporter-45rb9 2/2 Running 0 8m16s

kubesphere-monitoring-system node-exporter-qkdxg 2/2 Running 0 8m15s

kubesphere-monitoring-system node-exporter-xq8zl 2/2 Running 0 8m15s

kubesphere-monitoring-system notification-manager-deployment-6ff7974fbd-5zmdh 2/2 Running 0 6m53s

kubesphere-monitoring-system notification-manager-deployment-6ff7974fbd-wq6bz 2/2 Running 0 6m52s

kubesphere-monitoring-system notification-manager-operator-58bc989b46-29jzz 2/2 Running 0 7m50s

kubesphere-monitoring-system prometheus-k8s-0 2/2 Running 0 8m1s

kubesphere-monitoring-system prometheus-k8s-1 2/2 Running 0 7m59s

kubesphere-monitoring-system prometheus-operator-fc9b55959-lzfph 2/2 Running 0 8m29s

kubesphere-system ks-apiserver-5db774f4f-v5bwh 1/1 Running 0 10m

kubesphere-system ks-console-64b56f967-bdlrx 1/1 Running 0 10m

kubesphere-system ks-controller-manager-7b5f77b47f-zgbgw 1/1 Running 0 10m

kubesphere-system ks-installer-9b4c69688-jl7xf 1/1 Running 0 12m

[root@master1 ~]#

4. View the KubeSphere login account and password

kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l 'app in (ks-install, ks-installer)' -o jsonpath='{.items[0].metadata.name}') -f # On success, the output includes the login account, password, and console IP

**************************************************

Collecting installation results ...

#####################################################

### Welcome to KubeSphere! ###

#####################################################

Console: http://192.168.0.34:30880

Account: admin

Password: P@88w0rd

For details on logging in and using the console, refer to the blog (starting from step 4).
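The installer log is long, so it can help to pull out only the login lines. The following is a minimal sketch, not part of the original procedure: the helper name login_info is hypothetical, and it assumes the log prints the `Console:`, `Account:` and `Password:` lines in the form shown above. It reuses the log command from this step without `-f` so that it exits instead of following the log.

```shell
# Hypothetical helper: keep only the login-related lines from installer log text on stdin.
login_info() {
  grep -E '^[[:space:]]*(Console|Account|Password):'
}

# Only query the cluster when kubectl is available on this machine.
if command -v kubectl >/dev/null 2>&1; then
  # Same pod lookup as the command in this step, but without -f (no follow).
  pod=$(kubectl get pod -n kubesphere-system \
    -l 'app in (ks-install, ks-installer)' \
    -o jsonpath='{.items[0].metadata.name}')
  kubectl logs -n kubesphere-system "$pod" | login_info
fi
```

If the installation has not finished yet, the filter simply prints nothing; rerun it once the welcome banner has appeared in the log.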

# Create Nginx in the Kubernetes cluster:

kubectl create deployment nginx --image=nginx

kubectl expose deployment nginx --port=80 --type=NodePort

kubectl scale deployment nginx --replicas=2 # scale the deployment to 2 pod replicas

kubectl get pod,svc -o wide

# Verified successfully in a browser
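The browser check can also be done from the command line. This is a minimal sketch under a few assumptions: the Service is named nginx as created above, 192.168.0.34 is the master1 address from /etc/hosts (any node IP should work for a NodePort Service), and the helper name nodeport_url is hypothetical.

```shell
# Hypothetical helper: build the URL for a NodePort service from a node IP and a port.
nodeport_url() {
  printf 'http://%s:%s\n' "$1" "$2"
}

# Only query the cluster when kubectl is available on this machine.
if command -v kubectl >/dev/null 2>&1; then
  # Read the NodePort that Kubernetes assigned to the nginx Service.
  port=$(kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}')
  # Request only the response headers and show the HTTP status line.
  curl -sI "$(nodeport_url 192.168.0.34 "$port")" | head -n 1
fi
```

A status line reporting HTTP 200 indicates that nginx is reachable through the NodePort.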

5. Delete the cluster and reinstall

./kk delete cluster

./kk delete cluster [-f config-sample.yaml] # advanced mode: delete using the configuration file

相关文章:

KubeSphere一键安装部署K8S集群(单master节点)-亲测过

1. 基础环境优化 hostnamectl set-hostname master1 && bash hostnamectl set-hostname node1 && bash hostnamectl set-hostname node2 && bashcat >> /etc/hosts << EOF 192.168.0.34 master1 192.168.0.45 node1 192.168.0.209…...

vue3 element-plus 组件table表格 勾选框回显(初始化默认回显)完整静态代码

<template><el-table ref"multipleTableRef" :data"tableData" style"width: 100%"><el-table-column type"selection" width"55" /><el-table-column label"时间" width"120">…...

Redis --- 安装教程

Redis--- 特性,使用场景,安装 安装教程在Ubuntu下安装在Centos7.6下安装Redis5 特性在内存中存储数据可编程的扩展能力持久化集群高可用快速 应用场景实时数据存储作为缓存或者Session存储消息队列 安装教程 🚀安装之前切换到root用户。 在…...

代码阅读:LanGCN

toc 1训练 1.1 进度条 import tqdm as tqdm for i, data in tqdm(enumerate(train_loader),disablehvd.rank()):1.2 多进程通信 多线程通信依靠共享内存实现,但是多进程通信就麻烦很多,因此可以采用mpi库,如果是在python中使用࿰…...

基于Java的校园餐厅订餐管理系统设计与实现(源码+lw+部署文档+讲解等)

文章目录 前言具体实现截图论文参考详细视频演示为什么选择我自己的网站自己的小程序(小蔡coding) 代码参考数据库参考源码获取 前言 💗博主介绍:✌全网粉丝10W,CSDN特邀作者、博客专家、CSDN新星计划导师、全栈领域优质创作者&am…...

使用C#和Flurl.Http库的下载器程序

根据您的要求,我为您编写了一个使用C#和Flurl.Http库的下载器程序,用于下载凤凰网的图片。以下是一个简单的示例代码: using System; using Flurl.Http;namespace DownloadImage {class Program{static void Main(string[] args){string url…...

面试经典150题——Day19

文章目录 一、题目二、题解 一、题目 58. Length of Last Word Given a string s consisting of words and spaces, return the length of the last word in the string. A word is a maximal substring consisting of non-space characters only. Example 1: Input: s “…...

on null)

TP6首页加载报错 Call to a member function run() on null

最近新接入一个二开的项目,tp6的项目内置的composer.json文件里引入的topthink框架包文件却是"topthink/framework": "5.0.*",导致了以下错误: 错误: Fatal error: Uncaught Error: Call to a member function run() o…...

洗车小程序源码:10个必备功能,提升洗车体验

作为洗车行业的专家,我们深知在如今数字化时代,拥有一款功能强大的洗车小程序是提升用户体验和业务发展的关键。本文将向您介绍洗车小程序源码中的10个必备功能,让您的洗车业务达到新的高度。 在线预约系统 通过洗车小程序源码,…...

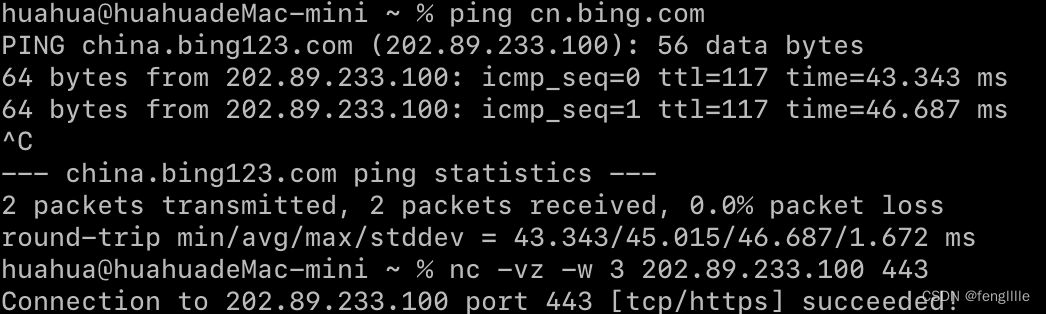

macOS telnet替代方式

前言 经过使用Linux,常常用Linux的telnet查看端口畅通,是否有防火墙,但是在mac上已经没有这个命令了,那么怎么使用这个命令或者有没有其他替代呢,win和linux是否可以使用相同的替代。macOS可以原生用nc命令替代&#…...

【leetcode】独特的电子邮件地址

题目描述 每个 有效电子邮件地址 都由一个 本地名 和一个 域名 组成,以 ‘’ 符号分隔。除小写字母之外,电子邮件地址还可以含有一个或多个 ‘.’ 或 ‘’ 。 例如,在 aliceleetcode.com中, alice 是 本地名 ,而 lee…...

解密Java中神奇的Synchronized关键字

文章目录 🎉 定义🎉 JDK6以前🎉 偏向锁和轻量级锁📝 偏向锁📝 轻量级锁📝 自旋锁📝 重量级锁🔥 1. 加锁🔥 2. 等待🔥 3. 撤销 🎉 锁优化…...

微信删除的好友还能找回来吗?盘点5种超实用的方法!

当我们在认识新的朋友时,都喜欢通过添加对方微信来保持日后的联系。但是有时候可能会由于没有及时备注而不小心误删了对方。想要将对方加回来,又找不到有效的方法。微信删除的好友还能找回来吗?当然可以了!但我们要根据实际情况选…...

Nmap 常用命令汇总

Nmap 命令格式 namp 【扫描类型】 选项 {目标ip 或 目标网络段} 根据扫描类型,可分成两类,一种是主机扫描类型,另一种是端口扫描类型 一、常用主机扫描: 扫描类型: -PR 对目标ip所在局域网进行扫描 (使…...

谷歌浏览器最新版和浏览器驱动下载地址

谷歌最新版和驱动下载地址...

[游戏开发][Unity]Unity运行时加载不在BuildSetting里的场景

从Assets开始路径要写全,需要.unity扩展名 如果路径写错了会报错 LoadSceneAsyncInPlayMode expects a valid full path. The provided path was Assets/Works/Resource/Scenes.unity string sceneFullPath "Assets/Works/Resource/Scenes/TestScene.unity…...

flutter开发实战-hero动画简单实现

flutter开发实战-hero动画简单实现 使用Flutter的Hero widget创建hero动画。 将hero从一个路由飞到另一个路由。 将hero 的形状从圆形转换为矩形,同时将其从一个路由飞到另一个路由的过程中进行动画处理。 Flutter Hero动画 Hero 指的是可以在路由(页面)之间“飞行”的 widge…...

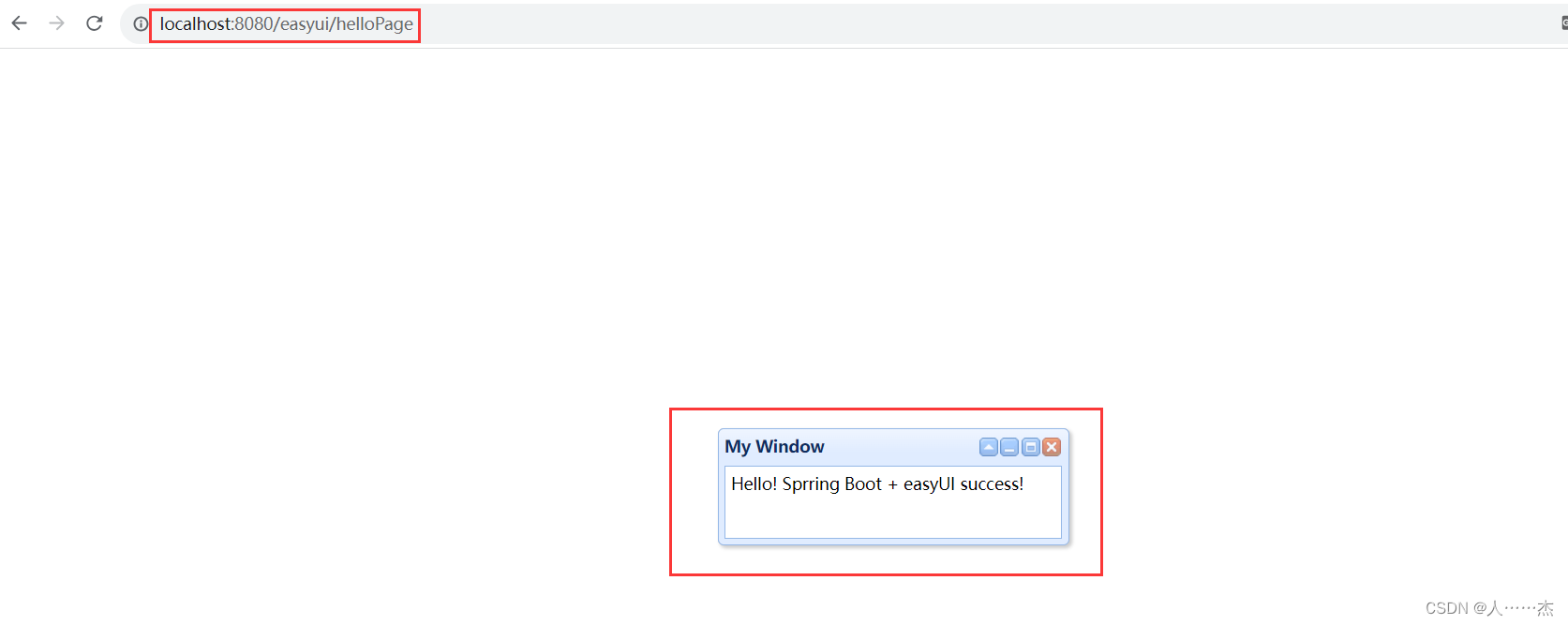

Spring Boot + EasyUI 创建第一个项目(一)

创建一个Spring Boot和EasyUI相结合的项目。 一、构建一个Spring Boot项目 Spring Boot之创建一个Spring Boot项目(一)-CSDN博客 二、配置Thymeleaf Spring Boot Thymeleaf(十一)_thymeleaf 设置字体_人……杰的博客-CSDN博客…...

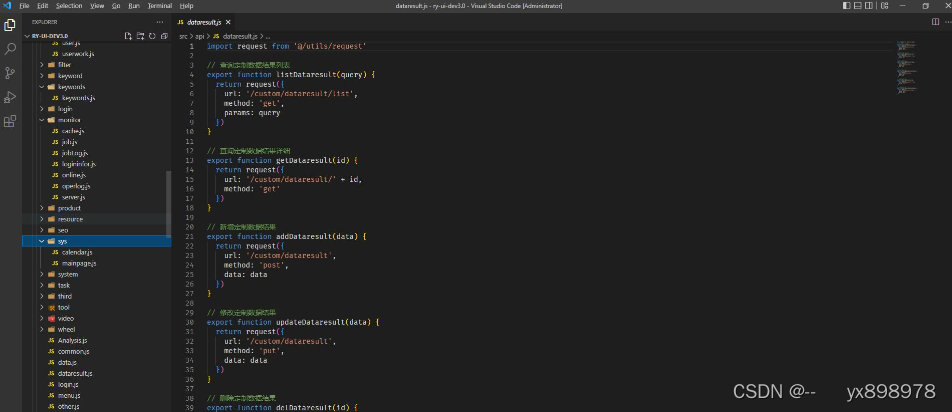

短视频矩阵系统源码/技术应用搭建

短视频矩阵系统开发围绕的开发核心维度: 1. 多账号原理开发维度 适用于多平台多账号管理,支持不同类型账号矩阵通过工具实现统一便捷式管理。(企业号,员工号,个人号) 2. 账号矩阵内容开发维护 利用账号矩…...

硬核子牙:我准备写一本《带你手写64位多核操作系统》的书!

哈喽,我是子牙,一个很卷的硬核男人 深入研究计算机底层、Windows内核、Linux内核、Hotspot源码……聚焦做那些大家想学没地方学的课程。为了保证课程质量及教学效果,一年磨一剑,三年先后做了三个课程:手写JVM、手写OS…...

SpringBoot-17-MyBatis动态SQL标签之常用标签

文章目录 1 代码1.1 实体User.java1.2 接口UserMapper.java1.3 映射UserMapper.xml1.3.1 标签if1.3.2 标签if和where1.3.3 标签choose和when和otherwise1.4 UserController.java2 常用动态SQL标签2.1 标签set2.1.1 UserMapper.java2.1.2 UserMapper.xml2.1.3 UserController.ja…...

内存分配函数malloc kmalloc vmalloc

内存分配函数malloc kmalloc vmalloc malloc实现步骤: 1)请求大小调整:首先,malloc 需要调整用户请求的大小,以适应内部数据结构(例如,可能需要存储额外的元数据)。通常,这包括对齐调整,确保分配的内存地址满足特定硬件要求(如对齐到8字节或16字节边界)。 2)空闲…...

Python爬虫实战:研究feedparser库相关技术

1. 引言 1.1 研究背景与意义 在当今信息爆炸的时代,互联网上存在着海量的信息资源。RSS(Really Simple Syndication)作为一种标准化的信息聚合技术,被广泛用于网站内容的发布和订阅。通过 RSS,用户可以方便地获取网站更新的内容,而无需频繁访问各个网站。 然而,互联网…...

【ROS】Nav2源码之nav2_behavior_tree-行为树节点列表

1、行为树节点分类 在 Nav2(Navigation2)的行为树框架中,行为树节点插件按照功能分为 Action(动作节点)、Condition(条件节点)、Control(控制节点) 和 Decorator(装饰节点) 四类。 1.1 动作节点 Action 执行具体的机器人操作或任务,直接与硬件、传感器或外部系统…...

数据链路层的主要功能是什么

数据链路层(OSI模型第2层)的核心功能是在相邻网络节点(如交换机、主机)间提供可靠的数据帧传输服务,主要职责包括: 🔑 核心功能详解: 帧封装与解封装 封装: 将网络层下发…...

Neo4j 集群管理:原理、技术与最佳实践深度解析

Neo4j 的集群技术是其企业级高可用性、可扩展性和容错能力的核心。通过深入分析官方文档,本文将系统阐述其集群管理的核心原理、关键技术、实用技巧和行业最佳实践。 Neo4j 的 Causal Clustering 架构提供了一个强大而灵活的基石,用于构建高可用、可扩展且一致的图数据库服务…...

解决本地部署 SmolVLM2 大语言模型运行 flash-attn 报错

出现的问题 安装 flash-attn 会一直卡在 build 那一步或者运行报错 解决办法 是因为你安装的 flash-attn 版本没有对应上,所以报错,到 https://github.com/Dao-AILab/flash-attention/releases 下载对应版本,cu、torch、cp 的版本一定要对…...

Ascend NPU上适配Step-Audio模型

1 概述 1.1 简述 Step-Audio 是业界首个集语音理解与生成控制一体化的产品级开源实时语音对话系统,支持多语言对话(如 中文,英文,日语),语音情感(如 开心,悲伤)&#x…...

使用Matplotlib创建炫酷的3D散点图:数据可视化的新维度

文章目录 基础实现代码代码解析进阶技巧1. 自定义点的大小和颜色2. 添加图例和样式美化3. 真实数据应用示例实用技巧与注意事项完整示例(带样式)应用场景在数据科学和可视化领域,三维图形能为我们提供更丰富的数据洞察。本文将手把手教你如何使用Python的Matplotlib库创建引…...

DAY 26 函数专题1

函数定义与参数知识点回顾:1. 函数的定义2. 变量作用域:局部变量和全局变量3. 函数的参数类型:位置参数、默认参数、不定参数4. 传递参数的手段:关键词参数5 题目1:计算圆的面积 任务: 编写一…...