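HBase Data Import and Export

- 1. Deploying HBase with Docker

- 2. Locating the hbase command

- 3. Working with HBase from the command line

- 3.1 HBase shell commands

- 3.2 Listing namespaces

- 3.3 Listing the tables in a namespace

- 3.4 Creating a namespace

- 3.5 Inspecting a table's schema

- 3.6 Creating a table

- 4. Exporting and importing HBase data

- 4.1 Exporting a table from HBase

- 4.2 Importing a table into HBase

- 5. Batch import and export with scripts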

1. Deploying HBase with Docker

This example uses an HBase image for the x86 architecture. The docker-compose file is as follows:

version: "3.1"

services:
  # Hbase
  hbase-server:
    image: harisekhon/hbase:latest
    container_name: hbase-server
    hostname: hbase-server
    restart: always
    ports:
      - 2181:2181
      - 9090:9090
      - 16000:16000
      - 16010:16010
      - 16020:16020
      - 16030:16030
    environment:
      - TZ=Asia/Shanghai
    volumes:
      - /data/docker-volume/hbase/data/:/hbase-data/
      - /data/docker-volume/hbase/logs/:/hbase/logs

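This publishes several of HBase's default ports. One caveat: different images may listen on different ports, so check before reusing a port list. When I used an ARM HBase image, the ports did not match, and the services that depended on HBase kept failing. The cause turned out to be wrong port mappings.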

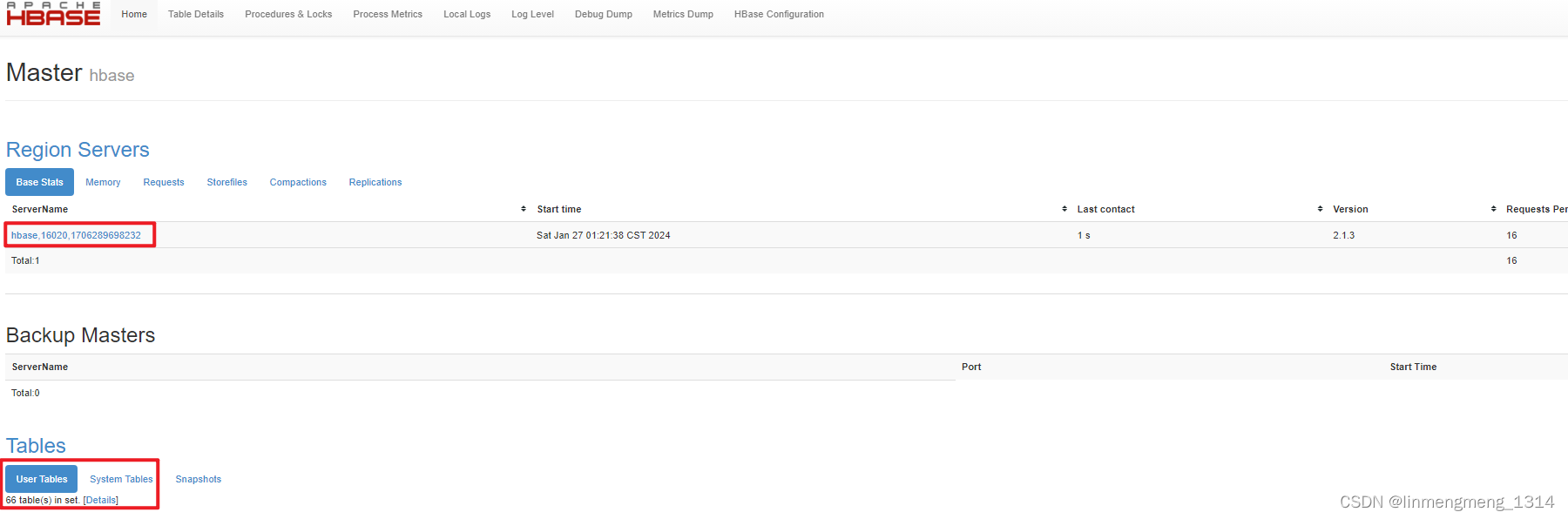

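After a successful deployment, the HBase Master page is available at: http://192.168.88.147:16010/master-status

If this page loads and shows HBase's basic information and its tables, HBase has started correctly.

The ServerName column shows an entry like hbase,16020,1706289698232. A ServerName has the form hostname,port,startcode, and the 16020 in the middle matches the port 16020 we published from the container.

For ARM, you can use this image instead: satoshiyamamoto/hbase:2.1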

  # Hbase
  hbase:
    image: satoshiyamamoto/hbase:2.1
    container_name: hbase
    hostname: hbase
    restart: always
    ports:
      - 2181:2181
      #- 9090:9090
      - 60000:60000
      - 60010:60010
      - 60020:60020
      - 60030:60030
    environment:
      - TZ=Asia/Shanghai
    volumes:
      #- /data/docker-volume/hbase/data/:/opt/hbase/old_data
      - /data/docker-volume/hbase/data/:/hbase-data/
      #- /data/docker-volume/hbase/hbase/:/opt/hbase
      #- /data/docker-volume/hbase/logs/:/opt/hbase/logs
    deploy:
      resources:
        limits:
          cpus: '4'
          memory: 8G

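The difference here is the published ports. At first, after switching images, I kept the original port mappings. Once deployed, the HBase Master page was reachable on the published port 60010, which is this image's counterpart of the Master status page on port 16010 in the x86 image.

What differs is the ServerName: it is no longer hbase,16020,1706289698232 but hbase,60020 followed by a string of digits. The trailing digits differing makes sense, since this is a different HBase environment, but the port in the middle changed too. I missed this at first and assumed upper-layer services only needed to reach port 2181, so the service kept failing to start. HBase clients use ZooKeeper only to locate the Master and RegionServers and then connect to them directly, so those ports must be reachable as well. After I changed the published ports to match the ports the image exposes, everything worked.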
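The port mismatch described above can be checked mechanically. The sketch below is only an illustration: the function names `server_port` and `port_is_published` are made up for this example, and the port list has to be copied by hand from your own docker-compose file. It pulls the port out of a ServerName and checks it against the published ports.

```shell
#!/usr/bin/env bash
# Extract the port from a ServerName of the form hostname,port,startcode.
server_port() {
  printf '%s\n' "$1" | cut -d, -f2
}

# Return 0 if the first argument appears among the remaining arguments
# (the ports published in docker-compose), 1 otherwise.
port_is_published() {
  local port="$1"
  shift
  local p
  for p in "$@"; do
    if [ "$p" = "$port" ]; then
      return 0
    fi
  done
  return 1
}

# Example: the x86 ServerName checked against the ARM image's port list.
port="$(server_port 'hbase,16020,1706289698232')"
if port_is_published "$port" 2181 60000 60010 60020 60030; then
  echo "port $port is published"
else
  echo "port $port is NOT published"
fi
```

Run against the ARM port list, the x86 ServerName reports that 16020 is not published, which is exactly the situation that made the dependent service fail.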

2. Locating the hbase command

Enter the container to work with the HBase shell: docker exec -it hbase-server bash

Run hbase with no arguments to check whether this image has it on the PATH:

bash-4.4# hbase
Usage: hbase [<options>] <command> [<args>]
Options:
  --config DIR          Configuration direction to use. Default: ./conf
  --hosts HOSTS         Override the list in 'regionservers' file
  --auth-as-server      Authenticate to ZooKeeper using servers configuration
  --internal-classpath  Skip attempting to use client facing jars (WARNING: unstable results between versions)

Commands:
Some commands take arguments. Pass no args or -h for usage.
  shell            Run the HBase shell
  hbck             Run the HBase 'fsck' tool. Defaults read-only hbck1.
                   Pass '-j /path/to/HBCK2.jar' to run hbase-2.x HBCK2.
  snapshot         Tool for managing snapshots
  wal              Write-ahead-log analyzer
  hfile            Store file analyzer
  zkcli            Run the ZooKeeper shell
  master           Run an HBase HMaster node
  regionserver     Run an HBase HRegionServer node
  zookeeper        Run a ZooKeeper server
  rest             Run an HBase REST server
  thrift           Run the HBase Thrift server
  thrift2          Run the HBase Thrift2 server
  clean            Run the HBase clean up script
  classpath        Dump hbase CLASSPATH
  mapredcp         Dump CLASSPATH entries required by mapreduce
  pe               Run PerformanceEvaluation
  ltt              Run LoadTestTool
  canary           Run the Canary tool
  version          Print the version
  regionsplitter   Run RegionSplitter tool
  rowcounter       Run RowCounter tool
  cellcounter      Run CellCounter tool
  pre-upgrade      Run Pre-Upgrade validator tool
  CLASSNAME        Run the class named CLASSNAME

bash-4.4#

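The image I use here has hbase on the PATH, so the hbase command can be run directly from the command line.

If it is not on the PATH, look for the directory that contains the hbase command. Check the root directory for anything HBase-related, or look under /opt.

The satoshiyamamoto/hbase:2.1 image, for example, does not put hbase on the PATH. HBase is installed under /opt/hbase, so you need to change to /opt/hbase/bin first to run hbase commands.

If hbase is not on the PATH, note this directory down. You will need it later when importing and exporting HBase data.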
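The lookup described above (try the PATH first, then known install directories) can be wrapped in a small helper. This is a sketch, not part of either image: `find_hbase` is a made-up name, and the candidate directories are simply the two locations that appear in this article.

```shell
#!/usr/bin/env bash
# Print the path of the hbase launcher.
# Prefers whatever is on PATH, then tries each directory given as an argument.
find_hbase() {
  if command -v hbase >/dev/null 2>&1; then
    command -v hbase
    return 0
  fi
  local dir
  for dir in "$@"; do
    if [ -x "$dir/hbase" ]; then
      printf '%s\n' "$dir/hbase"
      return 0
    fi
  done
  return 1
}

# Candidate directories are assumptions taken from the two images discussed here.
find_hbase /opt/hbase/bin /hbase/bin || echo "hbase not found" >&2
```

Storing the result once, for example `HBASE_BIN="$(find_hbase /opt/hbase/bin /hbase/bin)"`, avoids having to `cd` into the bin directory before each command.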

3. Working with HBase from the command line

3.1 HBase shell commands

The hbase shell command drops you straight into the HBase shell:

bash-4.4# hbase shell

2024-02-02 16:48:16,431 WARN [main] util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

HBase Shell

Use "help" to get list of supported commands.

Use "exit" to quit this interactive shell.

For Reference, please visit: http://hbase.apache.org/2.0/book.html#shell

Version 2.1.3, rda5ec9e4c06c537213883cca8f3cc9a7c19daf67, Mon Feb 11 15:45:33 CST 2019

Took 0.0052 seconds

hbase(main):001:0>

Run help to see usage information for the available commands:

hbase(main):001:0> help

HBase Shell, version 2.1.3, rda5ec9e4c06c537213883cca8f3cc9a7c19daf67, Mon Feb 11 15:45:33 CST 2019

Type 'help "COMMAND"', (e.g. 'help "get"' -- the quotes are necessary) for help on a specific command.

Commands are grouped. Type 'help "COMMAND_GROUP"', (e.g. 'help "general"') for help on a command group.

COMMAND GROUPS:
  Group name: general
  Commands: processlist, status, table_help, version, whoami

  Group name: ddl
  Commands: alter, alter_async, alter_status, clone_table_schema, create, describe, disable, disable_all, drop, drop_all, enable, enable_all, exists, get_table, is_disabled, is_enabled, list, list_regions, locate_region, show_filters

  Group name: namespace
  Commands: alter_namespace, create_namespace, describe_namespace, drop_namespace, list_namespace, list_namespace_tables

  Group name: dml
  Commands: append, count, delete, deleteall, get, get_counter, get_splits, incr, put, scan, truncate, truncate_preserve

  Group name: tools
  Commands: assign, balance_switch, balancer, balancer_enabled, catalogjanitor_enabled, catalogjanitor_run, catalogjanitor_switch, cleaner_chore_enabled, cleaner_chore_run, cleaner_chore_switch, clear_block_cache, clear_compaction_queues, clear_deadservers, close_region, compact, compact_rs, compaction_state, flush, is_in_maintenance_mode, list_deadservers, major_compact, merge_region, move, normalize, normalizer_enabled, normalizer_switch, split, splitormerge_enabled, splitormerge_switch, stop_master, stop_regionserver, trace, unassign, wal_roll, zk_dump

  Group name: replication
  Commands: add_peer, append_peer_exclude_namespaces, append_peer_exclude_tableCFs, append_peer_namespaces, append_peer_tableCFs, disable_peer, disable_table_replication, enable_peer, enable_table_replication, get_peer_config, list_peer_configs, list_peers, list_replicated_tables, remove_peer, remove_peer_exclude_namespaces, remove_peer_exclude_tableCFs, remove_peer_namespaces, remove_peer_tableCFs, set_peer_bandwidth, set_peer_exclude_namespaces, set_peer_exclude_tableCFs, set_peer_namespaces, set_peer_replicate_all, set_peer_serial, set_peer_tableCFs, show_peer_tableCFs, update_peer_config

  Group name: snapshots
  Commands: clone_snapshot, delete_all_snapshot, delete_snapshot, delete_table_snapshots, list_snapshots, list_table_snapshots, restore_snapshot, snapshot

  Group name: configuration
  Commands: update_all_config, update_config

  Group name: quotas
  Commands: list_quota_snapshots, list_quota_table_sizes, list_quotas, list_snapshot_sizes, set_quota

  Group name: security
  Commands: grant, list_security_capabilities, revoke, user_permission

  Group name: procedures
  Commands: list_locks, list_procedures

  Group name: visibility labels
  Commands: add_labels, clear_auths, get_auths, list_labels, set_auths, set_visibility

  Group name: rsgroup
  Commands: add_rsgroup, balance_rsgroup, get_rsgroup, get_server_rsgroup, get_table_rsgroup, list_rsgroups, move_namespaces_rsgroup, move_servers_namespaces_rsgroup, move_servers_rsgroup, move_servers_tables_rsgroup, move_tables_rsgroup, remove_rsgroup, remove_servers_rsgroup

SHELL USAGE:
Quote all names in HBase Shell such as table and column names. Commas delimit
command parameters. Type <RETURN> after entering a command to run it.
Dictionaries of configuration used in the creation and alteration of tables are
Ruby Hashes. They look like this:

  {'key1' => 'value1', 'key2' => 'value2', ...}

and are opened and closed with curley-braces. Key/values are delimited by the
'=>' character combination. Usually keys are predefined constants such as
NAME, VERSIONS, COMPRESSION, etc. Constants do not need to be quoted. Type
'Object.constants' to see a (messy) list of all constants in the environment.

If you are using binary keys or values and need to enter them in the shell, use
double-quote'd hexadecimal representation. For example:

  hbase> get 't1', "key\x03\x3f\xcd"
  hbase> get 't1', "key\003\023\011"
  hbase> put 't1', "test\xef\xff", 'f1:', "\x01\x33\x40"

The HBase shell is the (J)Ruby IRB with the above HBase-specific commands added.

For more on the HBase Shell, see http://hbase.apache.org/book.html

hbase(main):002:0>

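As you can see, the hbase shell supports all of the commands listed above.

To see help for a specific command or command group, run help followed by its quoted name, for example: help "general"

3.2 Listing namespaces

List namespaces: list_namespace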

hbase(main):002:0> list_namespace

NAMESPACE

default

hbase

hugegraph

3 row(s)

Took 0.6218 seconds

hbase(main):003:0>

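There are three namespaces here: default, hbase, and hugegraph. The default and hbase namespaces are built into HBase, while hugegraph already existed in this environment.

3.3 Listing the tables in a namespace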

hbase(main):003:0> list_namespace_tables 'hbase'

TABLE

meta

namespace

2 row(s)

Took 0.0330 seconds

=> ["meta", "namespace"]

hbase(main):004:0>

hbase(main):005:0* list_namespace_tables 'default'

TABLE

0 row(s)

Took 0.0067 seconds

=> []

hbase(main):004:0* list_namespace_tables 'hugegraph'

TABLE

c

el

g_ai

g_di

g_ei

g_fi

g_hi

g_ie

g_ii

g_li

g_oe

g_si

g_ui

g_v

g_vi

il

m

m_si

pk

s_ai

s_di

s_ei

s_fi

s_hi

s_ie

s_ii

s_li

s_oe

s_si

s_ui

s_v

s_vi

vl

33 row(s)

Took 0.0317 seconds

=> ["c", "el", "g_ai", "g_di", "g_ei", "g_fi", "g_hi", "g_ie", "g_ii", "g_li", "g_oe", "g_si", "g_ui", "g_v", "g_vi", "il", "m", "m_si", "pk", "s_ai", "s_di", "s_ei", "s_fi", "s_hi", "s_ie", "s_ii", "s_li", "s_oe", "s_si", "s_ui", "s_v", "s_vi", "vl"]

To get the names of all tables in a namespace, you can run this directly inside the container:

bash-4.4#

bash-4.4# echo "list_namespace_tables 'hugegraph'" | hbase shell | tail -n 2

2024-02-02 17:06:56,173 WARN [main] util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

["c", "el", "g_ai", "g_di", "g_ei", "g_fi", "g_hi", "g_ie", "g_ii", "g_li", "g_oe", "g_si", "g_ui", "g_v", "g_vi", "il", "m", "m_si", "pk", "s_ai", "s_di", "s_ei", "s_fi", "s_hi", "s_ie", "s_ii", "s_li", "s_oe", "s_si", "s_ui", "s_v", "s_vi", "vl"]

bash-4.4#

This will be useful later when we export data.
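For scripting, the Ruby-style array printed by list_namespace_tables is easier to loop over as one table name per line. The helper below is a minimal sketch of that conversion; `parse_table_list` is a made-up name, and it assumes table names contain no spaces, commas, quotes, or brackets, which holds for the names shown above.

```shell
#!/usr/bin/env bash
# Turn an array such as ["c", "el", "g_ai"] into one table name per line
# by deleting brackets, quotes, and spaces, then splitting on commas.
parse_table_list() {
  printf '%s\n' "$1" | tr -d '[]" ' | tr ',' '\n'
}

# Example with the first three names from the hugegraph namespace.
parse_table_list '["c", "el", "g_ai"]'
```

Inside the container, the argument could come from the pipeline shown earlier, for example by capturing the array line into a variable first. Which line of the output holds the array may vary between versions, so check the raw output before relying on `tail`.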

3.4 Creating a namespace

If you are already inside the hbase shell, use:

hbase> create_namespace 'hugegraph'

hbase> create_namespace 'hugegraph', {'PROPERTY_NAME'=>'PROPERTY_VALUE'}

If you are not inside the hbase shell:

bash-4.4# echo "create_namespace 'hugegraph'" | hbase shell

2024-02-03 15:21:26,299 WARN [main] util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

HBase Shell

Use "help" to get list of supported commands.

Use "exit" to quit this interactive shell.

For Reference, please visit: http://hbase.apache.org/2.0/book.html#shell

Version 2.1.3, rda5ec9e4c06c537213883cca8f3cc9a7c19daf67, Mon Feb 11 15:45:33 CST 2019

Took 0.0046 seconds

create_namespace 'hugegraph'

Took 0.7720 seconds

bash-4.4#

bash-4.4# echo "describe_namespace 'hugegraph'" | hbase shell

2024-02-03 15:21:45,832 WARN [main] util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

HBase Shell

Use "help" to get list of supported commands.

Use "exit" to quit this interactive shell.

For Reference, please visit: http://hbase.apache.org/2.0/book.html#shell

Version 2.1.3, rda5ec9e4c06c537213883cca8f3cc9a7c19daf67, Mon Feb 11 15:45:33 CST 2019

Took 0.0048 seconds

describe_namespace 'hugegraph'

DESCRIPTION

{NAME => 'hugegraph'}

Took 0.6109 seconds
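The "echo a command, pipe it into hbase shell" pattern used above can be wrapped in a one-line helper so that scripts do not repeat it. This is a sketch under assumptions: `hbase_run` and the `HBASE_BIN` variable are names invented for this example, with `HBASE_BIN` serving as an optional override for images where hbase is not on the PATH.

```shell
#!/usr/bin/env bash
# Send a single command to hbase shell without opening the interactive prompt.
# HBASE_BIN (hypothetical) may point at the launcher, e.g. /opt/hbase/bin/hbase;
# when unset, plain "hbase" from PATH is used.
hbase_run() {
  printf '%s\n' "$1" | "${HBASE_BIN:-hbase}" shell
}

# Usage inside the container (not executed here):
#   hbase_run "create_namespace 'hugegraph'"
#   hbase_run "describe_namespace 'hugegraph'"
```

The helper only forwards the command text, so quoting rules are the same as in the interactive shell: names stay in single quotes inside the double-quoted argument.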


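3.5 Inspecting a table's schema

If you have used other data stores such as MySQL, you know they have DDL for creating tables. HBase tables have a DDL-style definition too. Let's look at the schema of an existing table, for example the table c in the hugegraph namespace.

In the hbase shell, run: describe 'hugegraph:c' (describe can be abbreviated to desc)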

hbase(main):007:0> describe 'hugegraph:c'

Table hugegraph:c is ENABLED

hugegraph:c, {TABLE_ATTRIBUTES => {coprocessor$1 => '|org.apache.hadoop.hbase.coprocessor.AggregateImplementation|1073741823|'}

COLUMN FAMILIES DESCRIPTION

{NAME => 'f', VERSIONS => '1', EVICT_BLOCKS_ON_CLOSE => 'false', NEW_VERSION_BEHAVIOR => 'false', KEEP_DELETED_CELLS => 'FALSE', CACHE_DATA_ON_WRITE => 'false', DATA_BLOCK_ENCODING => 'NONE', TTL => 'FOREVER', MIN_VERSIONS => '0', REPLICATION_SCOPE => '0',BLOOMFILTER => 'ROW', CACHE_INDEX_ON_WRITE => 'false', IN_MEMORY => 'false', CACHE_BLOOMS_ON_WRITE => 'false', PREFETCH_BLOCKS_ON_OPEN => 'false', COMPRESSION => 'NONE', BLOCKCACHE => 'true', BLOCKSIZE => '65536'}

1 row(s)

Took 0.8352 seconds

hbase(main):008:0>

If no namespace is given, the table is looked up in the default namespace.

3.6 Creating a table

Create a table in the default namespace, or in the ns1 namespace:

hbase> create 't1', {NAME => 'f1'}, {NAME => 'f2'}, {NAME => 'f3'}

hbase> create 'ns1:t1', {NAME => 'f1', VERSIONS => 5}

In practice:

bash-4.4# cat test_schema.txt

create 'hugegraph:c', {NAME => 'f', VERSIONS => '1', EVICT_BLOCKS_ON_CLOSE => 'false', NEW_VERSION_BEHAVIOR => 'false', KEEP_DELETED_CELLS => 'FALSE', CACHE_DATA_ON_WRITE => 'false', DATA_BLOCK_ENCODING => 'NONE', TTL => 'FOREVER', MIN_VERSIONS => '0', REPLICATION_SCOPE => '0', BLOOMFILTER => 'ROW', CACHE_INDEX_ON_WRITE => 'false', IN_MEMORY => 'false', CACHE_BLOOMS_ON_WRITE => 'false', PREFETCH_BLOCKS_ON_OPEN => 'false', COMPRESSION => 'NONE', BLOCKCACHE => 'true', BLOCKSIZE => '65536'}

bash-4.4#

bash-4.4# cat test_schema.txt | hbase shell

2024-02-03 15:52:34,940 WARN [main] util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

HBase Shell

Use "help" to get list of supported commands.

Use "exit" to quit this interactive shell.

For Reference, please visit: http://hbase.apache.org/2.0/book.html#shell

Version 2.1.3, rda5ec9e4c06c537213883cca8f3cc9a7c19daf67, Mon Feb 11 15:45:33 CST 2019

Took 0.0057 seconds

create 'hugegraph:c', {NAME => 'f', VERSIONS => '1', EVICT_BLOCKS_ON_CLOSE => 'false', NEW_VERSION_BEHAVIOR => 'false', KEEP_DELETED_CELLS => 'FALSE', CACHE_DATA_ON_WRITE => 'false', DATA_BLOCK_ENCODING => 'NONE', TTL => 'FOREVER', MIN_VERSIONS => '0', REPLICATION_SCOPE => '0', BLOOMFILTER => 'ROW', CACHE_INDEX_ON_WRITE => 'false', IN_MEMORY => 'false', CACHE_BLOOMS_ON_WRITE => 'false', PREFETCH_BLOCKS_ON_OPEN => 'false', COMPRESSION => 'NONE', BLOCKCACHE => 'true', BLOCKSIZE => '65536'}

Created table hugegraph:c

Took 1.3900 seconds

Hbase::Table - hugegraph:c

bash-4.4#

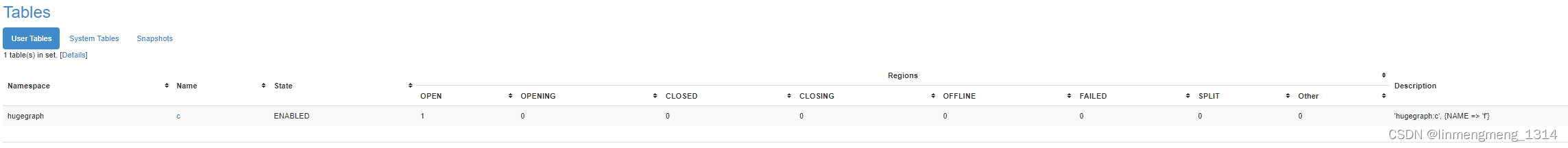

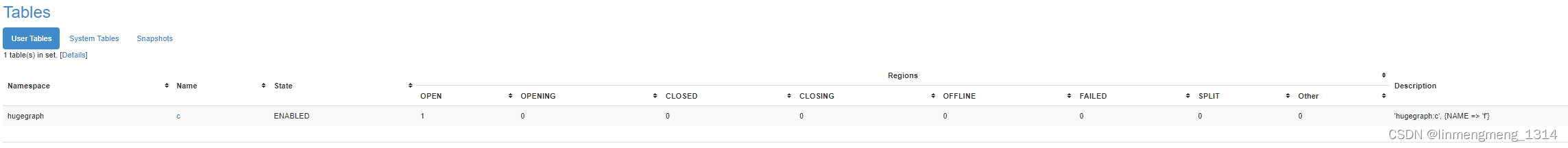

The newly created table now shows up on the HBase Master page.
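The create statement in test_schema.txt is the column-family line from describe with a create prefix in front of it. That step can be scripted. The sketch below is an illustration only: `describe_to_create` is a made-up name, and it deliberately copies just the lines that start with `{NAME =>`, so table-level attributes such as the coprocessor shown in the describe output are not carried over.

```shell
#!/usr/bin/env bash
# Read `describe` output on stdin and print a create statement for the same
# column families. Only lines beginning with "{NAME =>" are used.
describe_to_create() {
  local table="$1"
  local families
  # Join all column-family descriptor lines with commas.
  families="$(grep '^{NAME =>' | paste -sd, -)"
  if [ -z "$families" ]; then
    echo "no column families found for $table" >&2
    return 1
  fi
  printf "create '%s', %s\n" "$table" "$families"
}

# Example with a shortened, made-up descriptor line.
printf '%s\n' "COLUMN FAMILIES DESCRIPTION" "{NAME => 'f', VERSIONS => '1'}" \
  | describe_to_create 'hugegraph:c'
# prints: create 'hugegraph:c', {NAME => 'f', VERSIONS => '1'}
```

The printed statement can be saved to a file and piped into hbase shell in the same way as test_schema.txt.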

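4. Exporting and importing HBase data

4.1 Exporting a table from HBase

Once you know the name and namespace of the table to export, you can try the export.

Here the table is c and the namespace is hugegraph. Run this directly on the command line inside the container: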

hbase org.apache.hadoop.hbase.mapreduce.Export "hugegraph:c" file:///hbase/logs/export_data/data/hugegraph_c

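The last argument is the output directory, named here as namespace_table. Pay attention to the directory name: using hugegraph:c directly may cause exported data to go missing.

A colon is technically a legal character in Linux file names, but it is best avoided in file and directory names because it carries special meaning in many contexts, and a colon inside a path can lead to parsing errors or other unexpected results.

For example, the colon separates entries in lists such as PATH, separates host from path in arguments to tools like scp, and separates the scheme from the rest of a URI such as file:///... Since the export target is given as a URI, a colon inside a directory name may not be parsed the way you expect.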
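The naming rule above (replace the colon in namespace:table with an underscore) is easy to apply consistently with a helper. This is a sketch: `export_dir_name` and `export_command` are made-up names, and the base URI is an assumption that must point at a location the container can write to. The second function only prints the Export command so it can be reviewed before running.

```shell
#!/usr/bin/env bash
# Map namespace:table to a colon-free directory name, e.g. hugegraph:c -> hugegraph_c.
export_dir_name() {
  printf '%s\n' "$1" | tr ':' '_'
}

# Print (without running) the Export command for one table.
# $1 = qualified table name, $2 = base output URI (assumed writable by HBase).
export_command() {
  local table="$1"
  local base_uri="$2"
  printf 'hbase org.apache.hadoop.hbase.mapreduce.Export "%s" %s/%s\n' \
    "$table" "$base_uri" "$(export_dir_name "$table")"
}

export_command 'hugegraph:c' 'file:///hbase/logs/export_data/data'
```

For hugegraph:c and the base URI used in this article, the printed line matches the export command shown above.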

Export log:

bash-4.4# hbase org.apache.hadoop.hbase.mapreduce.Export "hugegraph:c" file:///hbase/logs/export_data/data/hugegraph_c

2024-02-03 16:05:54,134 INFO [main] mapreduce.ExportUtils: versions=1, starttime=0, endtime=9223372036854775807, keepDeletedCells=false, visibility labels=null

2024-02-03 16:05:54,458 WARN [main] util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2024-02-03 16:05:55,077 INFO [main] Configuration.deprecation: session.id is deprecated. Instead, use dfs.metrics.session-id

2024-02-03 16:05:55,078 INFO [main] jvm.JvmMetrics: Initializing JVM Metrics with processName=JobTracker, sessionId=

2024-02-03 16:05:55,394 INFO [ReadOnlyZKClient-localhost:2181@0x1a942c18] zookeeper.ZooKeeper: Client environment:zookeeper.version=3.4.10-39d3a4f269333c922ed3db283be479f9deacaa0f, built on 03/23/2017 10:13 GMT

2024-02-03 16:05:55,394 INFO [ReadOnlyZKClient-localhost:2181@0x1a942c18] zookeeper.ZooKeeper: Client environment:host.name=hbase

2024-02-03 16:05:55,394 INFO [ReadOnlyZKClient-localhost:2181@0x1a942c18] zookeeper.ZooKeeper: Client environment:java.version=1.8.0_191

2024-02-03 16:05:55,394 INFO [ReadOnlyZKClient-localhost:2181@0x1a942c18] zookeeper.ZooKeeper: Client environment:java.vendor=Oracle Corporation

2024-02-03 16:05:55,394 INFO [ReadOnlyZKClient-localhost:2181@0x1a942c18] zookeeper.ZooKeeper: Client environment:java.home=/usr/lib/jvm/java-1.8-openjdk/jre

2024-02-03 16:05:55,394 INFO [ReadOnlyZKClient-localhost:2181@0x1a942c18] zookeeper.ZooKeeper: l-4.0.23.Final.jar:/hbase/bin/../lib/org.eclipse.jdt.core-3.8.2.v20130121.jar:/hbase/bin/../lib/osgi-resource-locator-1.0.1.jar:/hbase/bin/../lib/paranamer-2.3.jar:/hbase/bin/../lib/protobuf-java-2.5.0.jar:/hbase/bin/../lib/snappy-java-1.0.5.jar:/hbase/bin/../lib/spymemcached-2.12.2.jar:/hbase/bin/../lib/validation-api-1.1.0.Final.jar:/hbase/bin/../lib/xmlenc-0.52.jar:/hbase/bin/../lib/xz-1.0.jar:/hbase/bin/../lib/zookeeper-3.4.10.jar:/hbase/bin/../lib/client-facing-thirdparty/audience-annotations-0.5.0.jar:/hbase/bin/../lib/client-facing-thirdparty/commons-logging-1.2.jar:/hbase/bin/../lib/client-facing-thirdparty/findbugs-annotations-1.3.9-1.jar:/hbase/bin/../lib/client-facing-thirdparty/htrace-core4-4.2.0-incubating.jar:/hbase/bin/../lib/client-facing-thirdparty/log4j-1.2.17.jar:/hbase/bin/../lib/client-facing-thirdparty/slf4j-api-1.7.25.jar:/hbase/bin/../lib/client-facing-thirdparty/htrace-core-3.1.0-incubating.jar:/hbase/bin/../lib/client-facing-thirdparty/slf4j-log4j12-1.7.25.jar

2024-02-03 16:05:55,395 INFO [ReadOnlyZKClient-localhost:2181@0x1a942c18] zookeeper.ZooKeeper: Client environment:java.library.path=/usr/lib/jvm/java-1.8-openjdk/jre/lib/amd64/server:/usr/lib/jvm/java-1.8-openjdk/jre/lib/amd64:/usr/lib/jvm/java-1.8-openjdk/jre/../lib/amd64:/usr/java/packages/lib/amd64:/usr/lib64:/lib64:/lib:/usr/lib

2024-02-03 16:05:55,395 INFO [ReadOnlyZKClient-localhost:2181@0x1a942c18] zookeeper.ZooKeeper: Client environment:java.io.tmpdir=/tmp

2024-02-03 16:05:55,395 INFO [ReadOnlyZKClient-localhost:2181@0x1a942c18] zookeeper.ZooKeeper: Client environment:java.compiler=<NA>

2024-02-03 16:05:55,395 INFO [ReadOnlyZKClient-localhost:2181@0x1a942c18] zookeeper.ZooKeeper: Client environment:os.name=Linux

2024-02-03 16:05:55,395 INFO [ReadOnlyZKClient-localhost:2181@0x1a942c18] zookeeper.ZooKeeper: Client environment:os.arch=amd64

2024-02-03 16:05:55,395 INFO [ReadOnlyZKClient-localhost:2181@0x1a942c18] zookeeper.ZooKeeper: Client environment:os.version=3.10.0-1160.el7.x86_64

2024-02-03 16:05:55,395 INFO [ReadOnlyZKClient-localhost:2181@0x1a942c18] zookeeper.ZooKeeper: Client environment:user.name=root

2024-02-03 16:05:55,395 INFO [ReadOnlyZKClient-localhost:2181@0x1a942c18] zookeeper.ZooKeeper: Client environment:user.home=/root

2024-02-03 16:05:55,395 INFO [ReadOnlyZKClient-localhost:2181@0x1a942c18] zookeeper.ZooKeeper: Client environment:user.dir=/

2024-02-03 16:05:55,398 INFO [ReadOnlyZKClient-localhost:2181@0x1a942c18] zookeeper.ZooKeeper: Initiating client connection, connectString=localhost:2181 sessionTimeout=90000 watcher=org.apache.hadoop.hbase.zookeeper.ReadOnlyZKClient$$Lambda$14/606431564@1c8ee286

2024-02-03 16:05:55,425 INFO [ReadOnlyZKClient-localhost:2181@0x1a942c18-SendThread(localhost:2181)] zookeeper.ClientCnxn: Opening socket connection to server localhost/127.0.0.1:2181. Will not attempt to authenticate using SASL (unknown error)

2024-02-03 16:05:55,442 INFO [ReadOnlyZKClient-localhost:2181@0x1a942c18-SendThread(localhost:2181)] zookeeper.ClientCnxn: Socket connection established to localhost/127.0.0.1:2181, initiating session

2024-02-03 16:05:55,470 INFO [ReadOnlyZKClient-localhost:2181@0x1a942c18-SendThread(localhost:2181)] zookeeper.ClientCnxn: Session establishment complete on server localhost/127.0.0.1:2181, sessionid = 0x18d46ca91220121, negotiated timeout = 90000

2024-02-03 16:05:55,695 INFO [main] mapreduce.RegionSizeCalculator: Calculating region sizes for table "hugegraph:c".

2024-02-03 16:05:56,383 INFO [ReadOnlyZKClient-localhost:2181@0x1a942c18] zookeeper.ZooKeeper: Session: 0x18d46ca91220121 closed

2024-02-03 16:05:56,385 INFO [ReadOnlyZKClient-localhost:2181@0x1a942c18-EventThread] zookeeper.ClientCnxn: EventThread shut down for session: 0x18d46ca91220121

2024-02-03 16:05:56,417 INFO [main] mapreduce.JobSubmitter: number of splits:1

2024-02-03 16:05:56,437 INFO [main] Configuration.deprecation: io.bytes.per.checksum is deprecated. Instead, use dfs.bytes-per-checksum

2024-02-03 16:05:56,545 INFO [main] mapreduce.JobSubmitter: Submitting tokens for job: job_local1007328070_0001

2024-02-03 16:05:56,907 INFO [main] mapred.LocalDistributedCacheManager: Creating symlink: /tmp/hadoop-root/mapred/local/1706947556667/hbase-hadoop-compat-2.1.3.jar <- //hbase-hadoop-compat-2.1.3.jar

2024-02-03 16:05:56,958 INFO [main] mapred.LocalDistributedCacheManager: Localized file:/hbase-2.1.3/lib/hbase-hadoop-compat-2.1.3.jar as file:/tmp/hadoop-root/mapred/local/1706947556667/hbase-hadoop-compat-2.1.3.jar

2024-02-03 16:05:57,243 INFO [main] mapred.LocalDistributedCacheManager: Creating symlink: /tmp/hadoop-root/mapred/local/1706947556668/commons-lang3-3.6.jar <- //commons-lang3-3.6.jar

2024-02-03 16:05:57,260 INFO [main] mapred.LocalDistributedCacheManager: Localized file:/hbase-2.1.3/lib/commons-lang3-3.6.jar as file:/tmp/hadoop-root/mapred/local/1706947556668/commons-lang3-3.6.jar

2024-02-03 16:05:57,295 INFO [main] mapred.LocalDistributedCacheManager: Creating symlink: /tmp/hadoop-root/mapred/local/1706947556669/hbase-shaded-miscellaneous-2.1.0.jar <- //hbase-shaded-miscellaneous-2.1.0.jar

2024-02-03 16:05:57,298 INFO [main] mapred.LocalDistributedCacheManager: Localized file:/hbase-2.1.3/lib/hbase-shaded-miscellaneous-2.1.0.jar as file:/tmp/hadoop-root/mapred/local/1706947556669/hbase-shaded-miscellaneous-2.1.0.jar

2024-02-03 16:05:57,298 INFO [main] mapred.LocalDistributedCacheManager: Creating symlink: /tmp/hadoop-root/mapred/local/1706947556670/jackson-annotations-2.9.2.jar <- //jackson-annotations-2.9.2.jar

2024-02-03 16:05:57,300 INFO [main] mapred.LocalDistributedCacheManager: Localized file:/hbase-2.1.3/lib/jackson-annotations-2.9.2.jar as file:/tmp/hadoop-root/mapred/local/1706947556670/jackson-annotations-2.9.2.jar

2024-02-03 16:05:57,300 INFO [main] mapred.LocalDistributedCacheManager: Creating symlink: /tmp/hadoop-root/mapred/local/1706947556671/hbase-metrics-api-2.1.3.jar <- //hbase-metrics-api-2.1.3.jar

2024-02-03 16:05:57,303 INFO [main] mapred.LocalDistributedCacheManager: Localized file:/hbase-2.1.3/lib/hbase-metrics-api-2.1.3.jar as file:/tmp/hadoop-root/mapred/local/1706947556671/hbase-metrics-api-2.1.3.jar

2024-02-03 16:05:57,303 INFO [main] mapred.LocalDistributedCacheManager: Creating symlink: /tmp/hadoop-root/mapred/local/1706947556672/zookeeper-3.4.10.jar <- //zookeeper-3.4.10.jar

2024-02-03 16:05:57,306 INFO [main] mapred.LocalDistributedCacheManager: Localized file:/hbase-2.1.3/lib/zookeeper-3.4.10.jar as file:/tmp/hadoop-root/mapred/local/1706947556672/zookeeper-3.4.10.jar

2024-02-03 16:05:57,306 INFO [main] mapred.LocalDistributedCacheManager: Creating symlink: /tmp/hadoop-root/mapred/local/1706947556673/metrics-core-3.2.1.jar <- //metrics-core-3.2.1.jar

2024-02-03 16:05:57,308 INFO [main] mapred.LocalDistributedCacheManager: Localized file:/hbase-2.1.3/lib/metrics-core-3.2.1.jar as file:/tmp/hadoop-root/mapred/local/1706947556673/metrics-core-3.2.1.jar

2024-02-03 16:05:57,308 INFO [main] mapred.LocalDistributedCacheManager: Creating symlink: /tmp/hadoop-root/mapred/local/1706947556674/hbase-protocol-2.1.3.jar <- //hbase-protocol-2.1.3.jar

2024-02-03 16:05:57,330 INFO [main] mapred.LocalDistributedCacheManager: Localized file:/hbase-2.1.3/lib/hbase-protocol-2.1.3.jar as file:/tmp/hadoop-root/mapred/local/1706947556674/hbase-protocol-2.1.3.jar

2024-02-03 16:05:57,330 INFO [main] mapred.LocalDistributedCacheManager: Creating symlink: /tmp/hadoop-root/mapred/local/1706947556675/hadoop-mapreduce-client-core-2.7.7.jar <- //hadoop-mapreduce-client-core-2.7.7.jar

2024-02-03 16:05:57,333 INFO [main] mapred.LocalDistributedCacheManager: Localized file:/hbase-2.1.3/lib/hadoop-mapreduce-client-core-2.7.7.jar as file:/tmp/hadoop-root/mapred/local/1706947556675/hadoop-mapreduce-client-core-2.7.7.jar

2024-02-03 16:05:57,333 INFO [main] mapred.LocalDistributedCacheManager: Creating symlink: /tmp/hadoop-root/mapred/local/1706947556676/hbase-metrics-2.1.3.jar <- //hbase-metrics-2.1.3.jar

2024-02-03 16:05:57,337 INFO [main] mapred.LocalDistributedCacheManager: Localized file:/hbase-2.1.3/lib/hbase-metrics-2.1.3.jar as file:/tmp/hadoop-root/mapred/local/1706947556676/hbase-metrics-2.1.3.jar

2024-02-03 16:05:57,337 INFO [main] mapred.LocalDistributedCacheManager: Creating symlink: /tmp/hadoop-root/mapred/local/1706947556677/protobuf-java-2.5.0.jar <- //protobuf-java-2.5.0.jar

2024-02-03 16:05:57,339 INFO [main] mapred.LocalDistributedCacheManager: Localized file:/hbase-2.1.3/lib/protobuf-java-2.5.0.jar as file:/tmp/hadoop-root/mapred/local/1706947556677/protobuf-java-2.5.0.jar

2024-02-03 16:05:57,339 INFO [main] mapred.LocalDistributedCacheManager: Creating symlink: /tmp/hadoop-root/mapred/local/1706947556678/hbase-mapreduce-2.1.3.jar <- //hbase-mapreduce-2.1.3.jar

2024-02-03 16:05:57,343 INFO [main] mapred.LocalDistributedCacheManager: Localized file:/hbase-2.1.3/lib/hbase-mapreduce-2.1.3.jar as file:/tmp/hadoop-root/mapred/local/1706947556678/hbase-mapreduce-2.1.3.jar

2024-02-03 16:05:57,343 INFO [main] mapred.LocalDistributedCacheManager: Creating symlink: /tmp/hadoop-root/mapred/local/1706947556679/hbase-shaded-protobuf-2.1.0.jar <- //hbase-shaded-protobuf-2.1.0.jar

2024-02-03 16:05:57,345 INFO [main] mapred.LocalDistributedCacheManager: Localized file:/hbase-2.1.3/lib/hbase-shaded-protobuf-2.1.0.jar as file:/tmp/hadoop-root/mapred/local/1706947556679/hbase-shaded-protobuf-2.1.0.jar

2024-02-03 16:05:57,345 INFO [main] mapred.LocalDistributedCacheManager: Creating symlink: /tmp/hadoop-root/mapred/local/1706947556680/hbase-protocol-shaded-2.1.3.jar <- //hbase-protocol-shaded-2.1.3.jar

2024-02-03 16:05:57,348 INFO [main] mapred.LocalDistributedCacheManager: Localized file:/hbase-2.1.3/lib/hbase-protocol-shaded-2.1.3.jar as file:/tmp/hadoop-root/mapred/local/1706947556680/hbase-protocol-shaded-2.1.3.jar

2024-02-03 16:05:57,348 INFO [main] mapred.LocalDistributedCacheManager: Creating symlink: /tmp/hadoop-root/mapred/local/1706947556681/hbase-server-2.1.3.jar <- //hbase-server-2.1.3.jar

2024-02-03 16:05:57,350 INFO [main] mapred.LocalDistributedCacheManager: Localized file:/hbase-2.1.3/lib/hbase-server-2.1.3.jar as file:/tmp/hadoop-root/mapred/local/1706947556681/hbase-server-2.1.3.jar

2024-02-03 16:05:57,350 INFO [main] mapred.LocalDistributedCacheManager: Creating symlink: /tmp/hadoop-root/mapred/local/1706947556682/hbase-client-2.1.3.jar <- //hbase-client-2.1.3.jar

2024-02-03 16:05:57,352 INFO [main] mapred.LocalDistributedCacheManager: Localized file:/hbase-2.1.3/lib/hbase-client-2.1.3.jar as file:/tmp/hadoop-root/mapred/local/1706947556682/hbase-client-2.1.3.jar

2024-02-03 16:05:57,353 INFO [main] mapred.LocalDistributedCacheManager: Creating symlink: /tmp/hadoop-root/mapred/local/1706947556683/jackson-databind-2.9.2.jar <- //jackson-databind-2.9.2.jar

2024-02-03 16:05:57,355 INFO [main] mapred.LocalDistributedCacheManager: Localized file:/hbase-2.1.3/lib/jackson-databind-2.9.2.jar as file:/tmp/hadoop-root/mapred/local/1706947556683/jackson-databind-2.9.2.jar

2024-02-03 16:05:57,355 INFO [main] mapred.LocalDistributedCacheManager: Creating symlink: /tmp/hadoop-root/mapred/local/1706947556684/hbase-hadoop2-compat-2.1.3.jar <- //hbase-hadoop2-compat-2.1.3.jar

2024-02-03 16:05:57,357 INFO [main] mapred.LocalDistributedCacheManager: Localized file:/hbase-2.1.3/lib/hbase-hadoop2-compat-2.1.3.jar as file:/tmp/hadoop-root/mapred/local/1706947556684/hbase-hadoop2-compat-2.1.3.jar

2024-02-03 16:05:57,357 INFO [main] mapred.LocalDistributedCacheManager: Creating symlink: /tmp/hadoop-root/mapred/local/1706947556685/hbase-shaded-netty-2.1.0.jar <- //hbase-shaded-netty-2.1.0.jar

2024-02-03 16:05:57,360 INFO [main] mapred.LocalDistributedCacheManager: Localized file:/hbase-2.1.3/lib/hbase-shaded-netty-2.1.0.jar as file:/tmp/hadoop-root/mapred/local/1706947556685/hbase-shaded-netty-2.1.0.jar

2024-02-03 16:05:57,360 INFO [main] mapred.LocalDistributedCacheManager: Creating symlink: /tmp/hadoop-root/mapred/local/1706947556686/jackson-core-2.9.2.jar <- //jackson-core-2.9.2.jar

2024-02-03 16:05:57,363 INFO [main] mapred.LocalDistributedCacheManager: Localized file:/hbase-2.1.3/lib/jackson-core-2.9.2.jar as file:/tmp/hadoop-root/mapred/local/1706947556686/jackson-core-2.9.2.jar

2024-02-03 16:05:57,363 INFO [main] mapred.LocalDistributedCacheManager: Creating symlink: /tmp/hadoop-root/mapred/local/1706947556687/htrace-core4-4.2.0-incubating.jar <- //htrace-core4-4.2.0-incubating.jar

2024-02-03 16:05:57,366 INFO [main] mapred.LocalDistributedCacheManager: Localized file:/hbase-2.1.3/lib/client-facing-thirdparty/htrace-core4-4.2.0-incubating.jar as file:/tmp/hadoop-root/mapred/local/1706947556687/htrace-core4-4.2.0-incubating.jar

2024-02-03 16:05:57,366 INFO [main] mapred.LocalDistributedCacheManager: Creating symlink: /tmp/hadoop-root/mapred/local/1706947556688/hbase-zookeeper-2.1.3.jar <- //hbase-zookeeper-2.1.3.jar

2024-02-03 16:05:57,369 INFO [main] mapred.LocalDistributedCacheManager: Localized file:/hbase-2.1.3/lib/hbase-zookeeper-2.1.3.jar as file:/tmp/hadoop-root/mapred/local/1706947556688/hbase-zookeeper-2.1.3.jar

2024-02-03 16:05:57,369 INFO [main] mapred.LocalDistributedCacheManager: Creating symlink: /tmp/hadoop-root/mapred/local/1706947556689/hadoop-common-2.7.7.jar <- //hadoop-common-2.7.7.jar

2024-02-03 16:05:57,371 INFO [main] mapred.LocalDistributedCacheManager: Localized file:/hbase-2.1.3/lib/hadoop-common-2.7.7.jar as file:/tmp/hadoop-root/mapred/local/1706947556689/hadoop-common-2.7.7.jar

2024-02-03 16:05:57,371 INFO [main] mapred.LocalDistributedCacheManager: Creating symlink: /tmp/hadoop-root/mapred/local/1706947556690/hbase-common-2.1.3.jar <- //hbase-common-2.1.3.jar

2024-02-03 16:05:57,374 INFO [main] mapred.LocalDistributedCacheManager: Localized file:/hbase-2.1.3/lib/hbase-common-2.1.3.jar as file:/tmp/hadoop-root/mapred/local/1706947556690/hbase-common-2.1.3.jar

2024-02-03 16:05:57,453 INFO [main] mapred.LocalDistributedCacheManager: file:/tmp/hadoop-root/mapred/local/1706947556667/hbase-hadoop-compat-2.1.3.jar

2024-02-03 16:05:57,454 INFO [main] mapred.LocalDistributedCacheManager: file:/tmp/hadoop-root/mapred/local/1706947556668/commons-lang3-3.6.jar

2024-02-03 16:05:57,454 INFO [main] mapred.LocalDistributedCacheManager: file:/tmp/hadoop-root/mapred/local/1706947556669/hbase-shaded-miscellaneous-2.1.0.jar

2024-02-03 16:05:57,454 INFO [main] mapred.LocalDistributedCacheManager: file:/tmp/hadoop-root/mapred/local/1706947556670/jackson-annotations-2.9.2.jar

2024-02-03 16:05:57,454 INFO [main] mapred.LocalDistributedCacheManager: file:/tmp/hadoop-root/mapred/local/1706947556671/hbase-metrics-api-2.1.3.jar

2024-02-03 16:05:57,454 INFO [main] mapred.LocalDistributedCacheManager: file:/tmp/hadoop-root/mapred/local/1706947556672/zookeeper-3.4.10.jar

2024-02-03 16:05:57,454 INFO [main] mapred.LocalDistributedCacheManager: file:/tmp/hadoop-root/mapred/local/1706947556673/metrics-core-3.2.1.jar

2024-02-03 16:05:57,454 INFO [main] mapred.LocalDistributedCacheManager: file:/tmp/hadoop-root/mapred/local/1706947556674/hbase-protocol-2.1.3.jar

2024-02-03 16:05:57,454 INFO [main] mapred.LocalDistributedCacheManager: file:/tmp/hadoop-root/mapred/local/1706947556675/hadoop-mapreduce-client-core-2.7.7.jar

2024-02-03 16:05:57,455 INFO [main] mapred.LocalDistributedCacheManager: file:/tmp/hadoop-root/mapred/local/1706947556676/hbase-metrics-2.1.3.jar

2024-02-03 16:05:57,455 INFO [main] mapred.LocalDistributedCacheManager: file:/tmp/hadoop-root/mapred/local/1706947556677/protobuf-java-2.5.0.jar

2024-02-03 16:05:57,455 INFO [main] mapred.LocalDistributedCacheManager: file:/tmp/hadoop-root/mapred/local/1706947556678/hbase-mapreduce-2.1.3.jar

2024-02-03 16:05:57,455 INFO [main] mapred.LocalDistributedCacheManager: file:/tmp/hadoop-root/mapred/local/1706947556679/hbase-shaded-protobuf-2.1.0.jar

2024-02-03 16:05:57,455 INFO [main] mapred.LocalDistributedCacheManager: file:/tmp/hadoop-root/mapred/local/1706947556680/hbase-protocol-shaded-2.1.3.jar

2024-02-03 16:05:57,455 INFO [main] mapred.LocalDistributedCacheManager: file:/tmp/hadoop-root/mapred/local/1706947556681/hbase-server-2.1.3.jar

2024-02-03 16:05:57,455 INFO [main] mapred.LocalDistributedCacheManager: file:/tmp/hadoop-root/mapred/local/1706947556682/hbase-client-2.1.3.jar

2024-02-03 16:05:57,455 INFO [main] mapred.LocalDistributedCacheManager: file:/tmp/hadoop-root/mapred/local/1706947556683/jackson-databind-2.9.2.jar

2024-02-03 16:05:57,455 INFO [main] mapred.LocalDistributedCacheManager: file:/tmp/hadoop-root/mapred/local/1706947556684/hbase-hadoop2-compat-2.1.3.jar

2024-02-03 16:05:57,455 INFO [main] mapred.LocalDistributedCacheManager: file:/tmp/hadoop-root/mapred/local/1706947556685/hbase-shaded-netty-2.1.0.jar

2024-02-03 16:05:57,455 INFO [main] mapred.LocalDistributedCacheManager: file:/tmp/hadoop-root/mapred/local/1706947556686/jackson-core-2.9.2.jar

2024-02-03 16:05:57,456 INFO [main] mapred.LocalDistributedCacheManager: file:/tmp/hadoop-root/mapred/local/1706947556687/htrace-core4-4.2.0-incubating.jar

2024-02-03 16:05:57,456 INFO [main] mapred.LocalDistributedCacheManager: file:/tmp/hadoop-root/mapred/local/1706947556688/hbase-zookeeper-2.1.3.jar

2024-02-03 16:05:57,456 INFO [main] mapred.LocalDistributedCacheManager: file:/tmp/hadoop-root/mapred/local/1706947556689/hadoop-common-2.7.7.jar

2024-02-03 16:05:57,456 INFO [main] mapred.LocalDistributedCacheManager: file:/tmp/hadoop-root/mapred/local/1706947556690/hbase-common-2.1.3.jar

2024-02-03 16:05:57,462 INFO [main] mapreduce.Job: The url to track the job: http://localhost:8080/

2024-02-03 16:05:57,463 INFO [main] mapreduce.Job: Running job: job_local1007328070_0001

2024-02-03 16:05:57,464 INFO [Thread-52] mapred.LocalJobRunner: OutputCommitter set in config null

2024-02-03 16:05:57,495 INFO [Thread-52] output.FileOutputCommitter: File Output Committer Algorithm version is 1

2024-02-03 16:05:57,497 INFO [Thread-52] mapred.LocalJobRunner: OutputCommitter is org.apache.hadoop.mapreduce.lib.output.FileOutputCommitter

2024-02-03 16:05:57,533 INFO [Thread-52] mapred.LocalJobRunner: Waiting for map tasks

2024-02-03 16:05:57,534 INFO [LocalJobRunner Map Task Executor #0] mapred.LocalJobRunner: Starting task: attempt_local1007328070_0001_m_000000_0

2024-02-03 16:05:57,569 INFO [LocalJobRunner Map Task Executor #0] output.FileOutputCommitter: File Output Committer Algorithm version is 1

2024-02-03 16:05:57,585 INFO [LocalJobRunner Map Task Executor #0] mapred.Task: Using ResourceCalculatorProcessTree : [ ]

2024-02-03 16:05:57,866 INFO [LocalJobRunner Map Task Executor #0] mapred.MapTask: Processing split: HBase table split(table name: hugegraph:c, scan: {"loadColumnFamiliesOnDemand":null,"startRow":"","stopRow":"","batch":-1,"cacheBlocks":false,"totalColumns":0,"maxResultSize":-1,"families":{},"caching":100,"maxVersions":1,"timeRange":[0,9223372036854775807]}, start row: , end row: , region location: hbase.aikg-net, encoded region name: 0f116ece5ff8d360ab7a4aabbbcefea5)

2024-02-03 16:05:57,874 INFO [ReadOnlyZKClient-localhost:2181@0x444af11a] zookeeper.ZooKeeper: Initiating client connection, connectString=localhost:2181 sessionTimeout=90000 watcher=org.apache.hadoop.hbase.zookeeper.ReadOnlyZKClient$$Lambda$14/606431564@1c8ee286

2024-02-03 16:05:57,877 INFO [ReadOnlyZKClient-localhost:2181@0x444af11a-SendThread(localhost:2181)] zookeeper.ClientCnxn: Opening socket connection to server localhost/127.0.0.1:2181. Will not attempt to authenticate using SASL (unknown error)

2024-02-03 16:05:57,878 INFO [ReadOnlyZKClient-localhost:2181@0x444af11a-SendThread(localhost:2181)] zookeeper.ClientCnxn: Socket connection established to localhost/127.0.0.1:2181, initiating session

2024-02-03 16:05:57,880 INFO [ReadOnlyZKClient-localhost:2181@0x444af11a-SendThread(localhost:2181)] zookeeper.ClientCnxn: Session establishment complete on server localhost/127.0.0.1:2181, sessionid = 0x18d46ca91220122, negotiated timeout = 90000

2024-02-03 16:05:57,883 INFO [LocalJobRunner Map Task Executor #0] mapreduce.TableInputFormatBase: Input split length: 0 bytes.

2024-02-03 16:05:57,972 INFO [LocalJobRunner Map Task Executor #0] mapred.LocalJobRunner:

2024-02-03 16:05:57,979 INFO [ReadOnlyZKClient-localhost:2181@0x444af11a] zookeeper.ZooKeeper: Session: 0x18d46ca91220122 closed

2024-02-03 16:05:57,980 INFO [ReadOnlyZKClient-localhost:2181@0x444af11a-EventThread] zookeeper.ClientCnxn: EventThread shut down for session: 0x18d46ca91220122

2024-02-03 16:05:57,981 INFO [LocalJobRunner Map Task Executor #0] mapred.Task: Task:attempt_local1007328070_0001_m_000000_0 is done. And is in the process of committing

2024-02-03 16:05:57,990 INFO [LocalJobRunner Map Task Executor #0] mapred.LocalJobRunner:

2024-02-03 16:05:57,991 INFO [LocalJobRunner Map Task Executor #0] mapred.Task: Task attempt_local1007328070_0001_m_000000_0 is allowed to commit now

2024-02-03 16:05:57,992 INFO [LocalJobRunner Map Task Executor #0] output.FileOutputCommitter: Saved output of task 'attempt_local1007328070_0001_m_000000_0' to file:/hbase/logs/export_data/data/hugegraph_c/_temporary/0/task_local1007328070_0001_m_000000

2024-02-03 16:05:57,993 INFO [LocalJobRunner Map Task Executor #0] mapred.LocalJobRunner: map

2024-02-03 16:05:57,993 INFO [LocalJobRunner Map Task Executor #0] mapred.Task: Task 'attempt_local1007328070_0001_m_000000_0' done.

2024-02-03 16:05:57,997 INFO [LocalJobRunner Map Task Executor #0] mapred.Task: Final Counters for attempt_local1007328070_0001_m_000000_0: Counters: 28
    File System Counters
        FILE: Number of bytes read=37598840
        FILE: Number of bytes written=38261949
        FILE: Number of read operations=0
        FILE: Number of large read operations=0
        FILE: Number of write operations=0
    Map-Reduce Framework
        Map input records=5
        Map output records=5
        Input split bytes=142
        Spilled Records=0
        Failed Shuffles=0
        Merged Map outputs=0
        GC time elapsed (ms)=0
        Total committed heap usage (bytes)=508887040
    HBase Counters
        BYTES_IN_REMOTE_RESULTS=0
        BYTES_IN_RESULTS=175
        MILLIS_BETWEEN_NEXTS=28
        NOT_SERVING_REGION_EXCEPTION=0
        NUM_SCANNER_RESTARTS=0
        NUM_SCAN_RESULTS_STALE=0
        REGIONS_SCANNED=1
        REMOTE_RPC_CALLS=0
        REMOTE_RPC_RETRIES=0
        ROWS_FILTERED=0
        ROWS_SCANNED=5
        RPC_CALLS=1
        RPC_RETRIES=0
    File Input Format Counters
        Bytes Read=0
    File Output Format Counters
        Bytes Written=366

2024-02-03 16:05:57,997 INFO [LocalJobRunner Map Task Executor #0] mapred.LocalJobRunner: Finishing task: attempt_local1007328070_0001_m_000000_0

2024-02-03 16:05:57,998 INFO [Thread-52] mapred.LocalJobRunner: map task executor complete.

2024-02-03 16:05:58,468 INFO [main] mapreduce.Job: Job job_local1007328070_0001 running in uber mode : false

2024-02-03 16:05:58,470 INFO [main] mapreduce.Job: map 100% reduce 0%

2024-02-03 16:05:58,473 INFO [main] mapreduce.Job: Job job_local1007328070_0001 completed successfully

2024-02-03 16:05:58,499 INFO [main] mapreduce.Job: Counters: 28
    File System Counters
        FILE: Number of bytes read=37598840
        FILE: Number of bytes written=38261949
        FILE: Number of read operations=0
        FILE: Number of large read operations=0
        FILE: Number of write operations=0
    Map-Reduce Framework
        Map input records=5
        Map output records=5
        Input split bytes=142
        Spilled Records=0
        Failed Shuffles=0
        Merged Map outputs=0
        GC time elapsed (ms)=0
        Total committed heap usage (bytes)=508887040
    HBase Counters
        BYTES_IN_REMOTE_RESULTS=0
        BYTES_IN_RESULTS=175
        MILLIS_BETWEEN_NEXTS=28
        NOT_SERVING_REGION_EXCEPTION=0
        NUM_SCANNER_RESTARTS=0
        NUM_SCAN_RESULTS_STALE=0
        REGIONS_SCANNED=1
        REMOTE_RPC_CALLS=0
        REMOTE_RPC_RETRIES=0
        ROWS_FILTERED=0
        ROWS_SCANNED=5
        RPC_CALLS=1
        RPC_RETRIES=0
    File Input Format Counters
        Bytes Read=0
    File Output Format Counters
        Bytes Written=366

bash-4.4#
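The counters at the end of the export log report ROWS_SCANNED=5 and Map output records=5, which is a quick way to see how many rows actually went into the export. If the job output is saved to a file, a counter can be pulled out with a few lines of shell. This is only a sketch: the function name counter_value and the idea of saving the log to a file (for example by appending `2>&1 | tee export.log` to the command) are my own assumptions, not something the HBase tooling provides.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the value of a MapReduce counter from a saved job log.
# Usage: counter_value NAME LOGFILE
# NAME is matched as a plain pattern, so pick a distinctive counter name
# such as ROWS_SCANNED or "Map output records".
counter_value() {
  local name="$1" logfile="$2"
  # The per-task summary and the job summary both list the counter;
  # keep the last occurrence, which is the job-level one.
  grep -o "${name}=[0-9]*" "$logfile" | tail -n 1 | cut -d= -f2
}
```

For example, `counter_value ROWS_SCANNED export.log` prints the scanned row count, and the same function can be pointed at the import log later to compare its Map input records value against it.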

The exported files (seen from the host, through the mounted logs directory):

[root@localhost export_data]# pwd

/data/docker-volume/hbase/logs/export_data

[root@localhost export_data]#

[root@localhost export_data]# cd data/

[root@localhost data]# ll

总用量 0

drwxr-xr-x. 2 root root 88 2月 3 16:05 hugegraph_c

[root@localhost data]#

[root@localhost data]# ll -h hugegraph_c/

总用量 4.0K

-rw-r--r--. 1 root root 354 2月 3 16:05 part-m-00000

-rw-r--r--. 1 root root 0 2月 3 16:05 _SUCCESS

[root@localhost data]#

The part-m-00000 file here is the one the import step will read.
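Before copying the folder anywhere, it can be worth checking that the export really finished: the job leaves an empty _SUCCESS marker next to the part-m-* files, as in the listing above. A minimal sketch of such a check follows; the function name export_looks_complete is hypothetical, and it only looks at file presence, not at the content.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if DIR looks like a finished Export output.
# Usage: export_looks_complete DIR
export_looks_complete() {
  local dir="$1" f
  # The MapReduce job writes an empty _SUCCESS marker when it completes.
  [ -f "$dir/_SUCCESS" ] || return 1
  # At least one non-empty part-m-* file should be present.
  for f in "$dir"/part-m-*; do
    [ -s "$f" ] && return 0
  done
  return 1
}
```

On the host from the listing above this would be called as `export_looks_complete /data/docker-volume/hbase/logs/export_data/data/hugegraph_c && echo ok`.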

4.2 Importing a table's data into HBase

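Copy the exported folder from above to the new environment and make sure HBase is already running there. Import writes into an existing table, so hugegraph:c has to be created in the new environment first. Then run the import: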

hbase org.apache.hadoop.hbase.mapreduce.Import "hugegraph:c" file:///hbase/logs/export_data/data/hugegraph_c

If the hbase command is not available directly on the command line in your image, use its absolute path instead, for example:

/opt/hbase/bin/hbase org.apache.hadoop.hbase.mapreduce.Import "hugegraph:c" file:///hbase/logs/export_data/data/hugegraph_c
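Since the two images put the hbase executable in different places, the choice between the bare command and the absolute path can be made by a small function. This is a sketch under assumptions: resolve_hbase is a name I made up, and /opt/hbase/bin/hbase is simply the path from the example above used as the default fallback.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the hbase executable to use.
# Prefers whatever is on PATH, then falls back to a fixed location
# (default /opt/hbase/bin/hbase, overridable through the first argument).
resolve_hbase() {
  local fallback="${1:-/opt/hbase/bin/hbase}"
  if command -v hbase >/dev/null 2>&1; then
    command -v hbase
  elif [ -x "$fallback" ]; then
    printf '%s\n' "$fallback"
  else
    echo "hbase executable not found" >&2
    return 1
  fi
}
```

The import then becomes `"$(resolve_hbase)" org.apache.hadoop.hbase.mapreduce.Import "hugegraph:c" file:///hbase/logs/export_data/data/hugegraph_c`, and the same line works in either container.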

Import log:

bash-4.4# hbase org.apache.hadoop.hbase.mapreduce.Import "hugegraph:c" file:///hbase/logs/export_data/data/hugegraph_c

2024-02-03 16:13:47,069 WARN [main] util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2024-02-03 16:13:47,442 INFO [main] mapreduce.Import: writing directly to table from Mapper.

2024-02-03 16:13:47,604 INFO [main] Configuration.deprecation: session.id is deprecated. Instead, use dfs.metrics.session-id

2024-02-03 16:13:47,605 INFO [main] jvm.JvmMetrics: Initializing JVM Metrics with processName=JobTracker, sessionId=

2024-02-03 16:13:47,738 INFO [ReadOnlyZKClient-localhost:2181@0x6bedbc4d] zookeeper.ZooKeeper: Client environment:zookeeper.version=3.4.10-39d3a4f269333c922ed3db283be479f9deacaa0f, built on 03/23/2017 10:13 GMT

2024-02-03 16:13:47,738 INFO [ReadOnlyZKClient-localhost:2181@0x6bedbc4d] zookeeper.ZooKeeper: Client environment:host.name=hbase-test

2024-02-03 16:13:47,738 INFO [ReadOnlyZKClient-localhost:2181@0x6bedbc4d] zookeeper.ZooKeeper: Client environment:java.version=1.8.0_191

2024-02-03 16:13:47,738 INFO [ReadOnlyZKClient-localhost:2181@0x6bedbc4d] zookeeper.ZooKeeper: Client environment:java.vendor=Oracle Corporation

2024-02-03 16:13:47,738 INFO [ReadOnlyZKClient-localhost:2181@0x6bedbc4d] zookeeper.ZooKeeper: Client environment:java.home=/usr/lib/jvm/java-1.8-openjdk/jre

2024-02-03 16:13:47,738 INFO [ReadOnlyZKClient-localhost:2181@0x6bedbc4d] zookeeper.ZooKeeper: l-4.0.23.Final.jar:/hbase/bin/../lib/org.eclipse.jdt.core-3.8.2.v20130121.jar:/hbase/bin/../lib/osgi-resource-locator-1.0.1.jar:/hbase/bin/../lib/paranamer-2.3.jar:/hbase/bin/../lib/protobuf-java-2.5.0.jar:/hbase/bin/../lib/snappy-java-1.0.5.jar:/hbase/bin/../lib/spymemcached-2.12.2.jar:/hbase/bin/../lib/validation-api-1.1.0.Final.jar:/hbase/bin/../lib/xmlenc-0.52.jar:/hbase/bin/../lib/xz-1.0.jar:/hbase/bin/../lib/zookeeper-3.4.10.jar:/hbase/bin/../lib/client-facing-thirdparty/audience-annotations-0.5.0.jar:/hbase/bin/../lib/client-facing-thirdparty/commons-logging-1.2.jar:/hbase/bin/../lib/client-facing-thirdparty/findbugs-annotations-1.3.9-1.jar:/hbase/bin/../lib/client-facing-thirdparty/htrace-core4-4.2.0-incubating.jar:/hbase/bin/../lib/client-facing-thirdparty/log4j-1.2.17.jar:/hbase/bin/../lib/client-facing-thirdparty/slf4j-api-1.7.25.jar:/hbase/bin/../lib/client-facing-thirdparty/htrace-core-3.1.0-incubating.jar:/hbase/bin/../lib/client-facing-thirdparty/slf4j-log4j12-1.7.25.jar

2024-02-03 16:13:47,739 INFO [ReadOnlyZKClient-localhost:2181@0x6bedbc4d] zookeeper.ZooKeeper: Client environment:java.library.path=/usr/lib/jvm/java-1.8-openjdk/jre/lib/amd64/server:/usr/lib/jvm/java-1.8-openjdk/jre/lib/amd64:/usr/lib/jvm/java-1.8-openjdk/jre/../lib/amd64:/usr/java/packages/lib/amd64:/usr/lib64:/lib64:/lib:/usr/lib

2024-02-03 16:13:47,739 INFO [ReadOnlyZKClient-localhost:2181@0x6bedbc4d] zookeeper.ZooKeeper: Client environment:java.io.tmpdir=/tmp

2024-02-03 16:13:47,739 INFO [ReadOnlyZKClient-localhost:2181@0x6bedbc4d] zookeeper.ZooKeeper: Client environment:java.compiler=<NA>

2024-02-03 16:13:47,739 INFO [ReadOnlyZKClient-localhost:2181@0x6bedbc4d] zookeeper.ZooKeeper: Client environment:os.name=Linux

2024-02-03 16:13:47,739 INFO [ReadOnlyZKClient-localhost:2181@0x6bedbc4d] zookeeper.ZooKeeper: Client environment:os.arch=amd64

2024-02-03 16:13:47,739 INFO [ReadOnlyZKClient-localhost:2181@0x6bedbc4d] zookeeper.ZooKeeper: Client environment:os.version=3.10.0-1160.el7.x86_64

2024-02-03 16:13:47,739 INFO [ReadOnlyZKClient-localhost:2181@0x6bedbc4d] zookeeper.ZooKeeper: Client environment:user.name=root

2024-02-03 16:13:47,739 INFO [ReadOnlyZKClient-localhost:2181@0x6bedbc4d] zookeeper.ZooKeeper: Client environment:user.home=/root

2024-02-03 16:13:47,739 INFO [ReadOnlyZKClient-localhost:2181@0x6bedbc4d] zookeeper.ZooKeeper: Client environment:user.dir=/hbase-2.1.3/logs/export_data

2024-02-03 16:13:47,741 INFO [ReadOnlyZKClient-localhost:2181@0x6bedbc4d] zookeeper.ZooKeeper: Initiating client connection, connectString=localhost:2181 sessionTimeout=90000 watcher=org.apache.hadoop.hbase.zookeeper.ReadOnlyZKClient$$Lambda$13/450416974@60e9aad6

2024-02-03 16:13:47,765 INFO [ReadOnlyZKClient-localhost:2181@0x6bedbc4d-SendThread(localhost:2181)] zookeeper.ClientCnxn: Opening socket connection to server localhost/127.0.0.1:2181. Will not attempt to authenticate using SASL (unknown error)

2024-02-03 16:13:47,777 INFO [ReadOnlyZKClient-localhost:2181@0x6bedbc4d-SendThread(localhost:2181)] zookeeper.ClientCnxn: Socket connection established to localhost/127.0.0.1:2181, initiating session

2024-02-03 16:13:47,796 INFO [ReadOnlyZKClient-localhost:2181@0x6bedbc4d-SendThread(localhost:2181)] zookeeper.ClientCnxn: Session establishment complete on server localhost/127.0.0.1:2181, sessionid = 0x18d68e7f0a80012, negotiated timeout = 90000

2024-02-03 16:13:48,880 INFO [main] input.FileInputFormat: Total input paths to process : 1

2024-02-03 16:13:48,914 INFO [main] mapreduce.JobSubmitter: number of splits:1

2024-02-03 16:13:49,036 INFO [main] mapreduce.JobSubmitter: Submitting tokens for job: job_local1318778997_0001

2024-02-03 16:13:49,412 INFO [main] mapred.LocalDistributedCacheManager: Creating symlink: /tmp/hadoop-root/mapred/local/1706948029145/hbase-hadoop-compat-2.1.3.jar <- /hbase-2.1.3/logs/export_data/hbase-hadoop-compat-2.1.3.jar

2024-02-03 16:13:49,496 INFO [main] mapred.LocalDistributedCacheManager: Localized file:/hbase-2.1.3/lib/hbase-hadoop-compat-2.1.3.jar as file:/tmp/hadoop-root/mapred/local/1706948029145/hbase-hadoop-compat-2.1.3.jar

2024-02-03 16:13:49,604 INFO [main] mapred.LocalDistributedCacheManager: Creating symlink: /tmp/hadoop-root/mapred/local/1706948029146/commons-lang3-3.6.jar <- /hbase-2.1.3/logs/export_data/commons-lang3-3.6.jar

2024-02-03 16:13:49,606 INFO [main] mapred.LocalDistributedCacheManager: Localized file:/hbase-2.1.3/lib/commons-lang3-3.6.jar as file:/tmp/hadoop-root/mapred/local/1706948029146/commons-lang3-3.6.jar

2024-02-03 16:13:49,610 INFO [main] mapred.LocalDistributedCacheManager: Creating symlink: /tmp/hadoop-root/mapred/local/1706948029147/hbase-shaded-miscellaneous-2.1.0.jar <- /hbase-2.1.3/logs/export_data/hbase-shaded-miscellaneous-2.1.0.jar

2024-02-03 16:13:49,630 INFO [main] mapred.LocalDistributedCacheManager: Localized file:/hbase-2.1.3/lib/hbase-shaded-miscellaneous-2.1.0.jar as file:/tmp/hadoop-root/mapred/local/1706948029147/hbase-shaded-miscellaneous-2.1.0.jar

2024-02-03 16:13:49,630 INFO [main] mapred.LocalDistributedCacheManager: Creating symlink: /tmp/hadoop-root/mapred/local/1706948029148/jackson-annotations-2.9.2.jar <- /hbase-2.1.3/logs/export_data/jackson-annotations-2.9.2.jar

2024-02-03 16:13:49,660 INFO [main] mapred.LocalDistributedCacheManager: Localized file:/hbase-2.1.3/lib/jackson-annotations-2.9.2.jar as file:/tmp/hadoop-root/mapred/local/1706948029148/jackson-annotations-2.9.2.jar

2024-02-03 16:13:49,694 INFO [main] mapred.LocalDistributedCacheManager: Creating symlink: /tmp/hadoop-root/mapred/local/1706948029149/hbase-metrics-api-2.1.3.jar <- /hbase-2.1.3/logs/export_data/hbase-metrics-api-2.1.3.jar

2024-02-03 16:13:49,714 INFO [main] mapred.LocalDistributedCacheManager: Localized file:/hbase-2.1.3/lib/hbase-metrics-api-2.1.3.jar as file:/tmp/hadoop-root/mapred/local/1706948029149/hbase-metrics-api-2.1.3.jar

2024-02-03 16:13:49,714 INFO [main] mapred.LocalDistributedCacheManager: Creating symlink: /tmp/hadoop-root/mapred/local/1706948029150/zookeeper-3.4.10.jar <- /hbase-2.1.3/logs/export_data/zookeeper-3.4.10.jar

2024-02-03 16:13:49,716 INFO [main] mapred.LocalDistributedCacheManager: Localized file:/hbase-2.1.3/lib/zookeeper-3.4.10.jar as file:/tmp/hadoop-root/mapred/local/1706948029150/zookeeper-3.4.10.jar

2024-02-03 16:13:49,717 INFO [main] mapred.LocalDistributedCacheManager: Creating symlink: /tmp/hadoop-root/mapred/local/1706948029151/metrics-core-3.2.1.jar <- /hbase-2.1.3/logs/export_data/metrics-core-3.2.1.jar

2024-02-03 16:13:49,723 INFO [main] mapred.LocalDistributedCacheManager: Localized file:/hbase-2.1.3/lib/metrics-core-3.2.1.jar as file:/tmp/hadoop-root/mapred/local/1706948029151/metrics-core-3.2.1.jar

2024-02-03 16:13:49,723 INFO [main] mapred.LocalDistributedCacheManager: Creating symlink: /tmp/hadoop-root/mapred/local/1706948029152/hbase-protocol-2.1.3.jar <- /hbase-2.1.3/logs/export_data/hbase-protocol-2.1.3.jar

2024-02-03 16:13:49,730 INFO [main] mapred.LocalDistributedCacheManager: Localized file:/hbase-2.1.3/lib/hbase-protocol-2.1.3.jar as file:/tmp/hadoop-root/mapred/local/1706948029152/hbase-protocol-2.1.3.jar

2024-02-03 16:13:49,730 INFO [main] mapred.LocalDistributedCacheManager: Creating symlink: /tmp/hadoop-root/mapred/local/1706948029153/hadoop-mapreduce-client-core-2.7.7.jar <- /hbase-2.1.3/logs/export_data/hadoop-mapreduce-client-core-2.7.7.jar

2024-02-03 16:13:49,735 INFO [main] mapred.LocalDistributedCacheManager: Localized file:/hbase-2.1.3/lib/hadoop-mapreduce-client-core-2.7.7.jar as file:/tmp/hadoop-root/mapred/local/1706948029153/hadoop-mapreduce-client-core-2.7.7.jar

2024-02-03 16:13:49,735 INFO [main] mapred.LocalDistributedCacheManager: Creating symlink: /tmp/hadoop-root/mapred/local/1706948029154/hbase-metrics-2.1.3.jar <- /hbase-2.1.3/logs/export_data/hbase-metrics-2.1.3.jar

2024-02-03 16:13:49,737 INFO [main] mapred.LocalDistributedCacheManager: Localized file:/hbase-2.1.3/lib/hbase-metrics-2.1.3.jar as file:/tmp/hadoop-root/mapred/local/1706948029154/hbase-metrics-2.1.3.jar

2024-02-03 16:13:49,737 INFO [main] mapred.LocalDistributedCacheManager: Creating symlink: /tmp/hadoop-root/mapred/local/1706948029155/protobuf-java-2.5.0.jar <- /hbase-2.1.3/logs/export_data/protobuf-java-2.5.0.jar

2024-02-03 16:13:49,755 INFO [main] mapred.LocalDistributedCacheManager: Localized file:/hbase-2.1.3/lib/protobuf-java-2.5.0.jar as file:/tmp/hadoop-root/mapred/local/1706948029155/protobuf-java-2.5.0.jar

2024-02-03 16:13:49,756 INFO [main] mapred.LocalDistributedCacheManager: Creating symlink: /tmp/hadoop-root/mapred/local/1706948029156/hbase-mapreduce-2.1.3.jar <- /hbase-2.1.3/logs/export_data/hbase-mapreduce-2.1.3.jar

2024-02-03 16:13:49,758 INFO [main] mapred.LocalDistributedCacheManager: Localized file:/hbase-2.1.3/lib/hbase-mapreduce-2.1.3.jar as file:/tmp/hadoop-root/mapred/local/1706948029156/hbase-mapreduce-2.1.3.jar

2024-02-03 16:13:49,758 INFO [main] mapred.LocalDistributedCacheManager: Creating symlink: /tmp/hadoop-root/mapred/local/1706948029157/hbase-shaded-protobuf-2.1.0.jar <- /hbase-2.1.3/logs/export_data/hbase-shaded-protobuf-2.1.0.jar

2024-02-03 16:13:49,760 INFO [main] mapred.LocalDistributedCacheManager: Localized file:/hbase-2.1.3/lib/hbase-shaded-protobuf-2.1.0.jar as file:/tmp/hadoop-root/mapred/local/1706948029157/hbase-shaded-protobuf-2.1.0.jar

2024-02-03 16:13:49,760 INFO [main] mapred.LocalDistributedCacheManager: Creating symlink: /tmp/hadoop-root/mapred/local/1706948029158/hbase-protocol-shaded-2.1.3.jar <- /hbase-2.1.3/logs/export_data/hbase-protocol-shaded-2.1.3.jar

2024-02-03 16:13:49,762 INFO [main] mapred.LocalDistributedCacheManager: Localized file:/hbase-2.1.3/lib/hbase-protocol-shaded-2.1.3.jar as file:/tmp/hadoop-root/mapred/local/1706948029158/hbase-protocol-shaded-2.1.3.jar

2024-02-03 16:13:49,762 INFO [main] mapred.LocalDistributedCacheManager: Creating symlink: /tmp/hadoop-root/mapred/local/1706948029159/hbase-server-2.1.3.jar <- /hbase-2.1.3/logs/export_data/hbase-server-2.1.3.jar

2024-02-03 16:13:49,764 INFO [main] mapred.LocalDistributedCacheManager: Localized file:/hbase-2.1.3/lib/hbase-server-2.1.3.jar as file:/tmp/hadoop-root/mapred/local/1706948029159/hbase-server-2.1.3.jar

2024-02-03 16:13:49,764 INFO [main] mapred.LocalDistributedCacheManager: Creating symlink: /tmp/hadoop-root/mapred/local/1706948029160/hbase-client-2.1.3.jar <- /hbase-2.1.3/logs/export_data/hbase-client-2.1.3.jar

2024-02-03 16:13:49,766 INFO [main] mapred.LocalDistributedCacheManager: Localized file:/hbase-2.1.3/lib/hbase-client-2.1.3.jar as file:/tmp/hadoop-root/mapred/local/1706948029160/hbase-client-2.1.3.jar

2024-02-03 16:13:49,766 INFO [main] mapred.LocalDistributedCacheManager: Creating symlink: /tmp/hadoop-root/mapred/local/1706948029161/jackson-databind-2.9.2.jar <- /hbase-2.1.3/logs/export_data/jackson-databind-2.9.2.jar

2024-02-03 16:13:49,768 INFO [main] mapred.LocalDistributedCacheManager: Localized file:/hbase-2.1.3/lib/jackson-databind-2.9.2.jar as file:/tmp/hadoop-root/mapred/local/1706948029161/jackson-databind-2.9.2.jar

2024-02-03 16:13:49,768 INFO [main] mapred.LocalDistributedCacheManager: Creating symlink: /tmp/hadoop-root/mapred/local/1706948029162/hbase-hadoop2-compat-2.1.3.jar <- /hbase-2.1.3/logs/export_data/hbase-hadoop2-compat-2.1.3.jar

2024-02-03 16:13:49,770 INFO [main] mapred.LocalDistributedCacheManager: Localized file:/hbase-2.1.3/lib/hbase-hadoop2-compat-2.1.3.jar as file:/tmp/hadoop-root/mapred/local/1706948029162/hbase-hadoop2-compat-2.1.3.jar

2024-02-03 16:13:49,770 INFO [main] mapred.LocalDistributedCacheManager: Creating symlink: /tmp/hadoop-root/mapred/local/1706948029163/hbase-shaded-netty-2.1.0.jar <- /hbase-2.1.3/logs/export_data/hbase-shaded-netty-2.1.0.jar

2024-02-03 16:13:49,772 INFO [main] mapred.LocalDistributedCacheManager: Localized file:/hbase-2.1.3/lib/hbase-shaded-netty-2.1.0.jar as file:/tmp/hadoop-root/mapred/local/1706948029163/hbase-shaded-netty-2.1.0.jar

2024-02-03 16:13:49,773 INFO [main] mapred.LocalDistributedCacheManager: Creating symlink: /tmp/hadoop-root/mapred/local/1706948029164/jackson-core-2.9.2.jar <- /hbase-2.1.3/logs/export_data/jackson-core-2.9.2.jar

2024-02-03 16:13:49,774 INFO [main] mapred.LocalDistributedCacheManager: Localized file:/hbase-2.1.3/lib/jackson-core-2.9.2.jar as file:/tmp/hadoop-root/mapred/local/1706948029164/jackson-core-2.9.2.jar

2024-02-03 16:13:49,775 INFO [main] mapred.LocalDistributedCacheManager: Creating symlink: /tmp/hadoop-root/mapred/local/1706948029165/htrace-core4-4.2.0-incubating.jar <- /hbase-2.1.3/logs/export_data/htrace-core4-4.2.0-incubating.jar

2024-02-03 16:13:49,776 INFO [main] mapred.LocalDistributedCacheManager: Localized file:/hbase-2.1.3/lib/client-facing-thirdparty/htrace-core4-4.2.0-incubating.jar as file:/tmp/hadoop-root/mapred/local/1706948029165/htrace-core4-4.2.0-incubating.jar

2024-02-03 16:13:49,776 INFO [main] mapred.LocalDistributedCacheManager: Creating symlink: /tmp/hadoop-root/mapred/local/1706948029166/hbase-zookeeper-2.1.3.jar <- /hbase-2.1.3/logs/export_data/hbase-zookeeper-2.1.3.jar

2024-02-03 16:13:49,778 INFO [main] mapred.LocalDistributedCacheManager: Localized file:/hbase-2.1.3/lib/hbase-zookeeper-2.1.3.jar as file:/tmp/hadoop-root/mapred/local/1706948029166/hbase-zookeeper-2.1.3.jar

2024-02-03 16:13:49,778 INFO [main] mapred.LocalDistributedCacheManager: Creating symlink: /tmp/hadoop-root/mapred/local/1706948029167/hadoop-common-2.7.7.jar <- /hbase-2.1.3/logs/export_data/hadoop-common-2.7.7.jar

2024-02-03 16:13:49,780 INFO [main] mapred.LocalDistributedCacheManager: Localized file:/hbase-2.1.3/lib/hadoop-common-2.7.7.jar as file:/tmp/hadoop-root/mapred/local/1706948029167/hadoop-common-2.7.7.jar

2024-02-03 16:13:49,780 INFO [main] mapred.LocalDistributedCacheManager: Creating symlink: /tmp/hadoop-root/mapred/local/1706948029168/hbase-common-2.1.3.jar <- /hbase-2.1.3/logs/export_data/hbase-common-2.1.3.jar

2024-02-03 16:13:49,782 INFO [main] mapred.LocalDistributedCacheManager: Localized file:/hbase-2.1.3/lib/hbase-common-2.1.3.jar as file:/tmp/hadoop-root/mapred/local/1706948029168/hbase-common-2.1.3.jar

2024-02-03 16:13:49,831 INFO [main] mapred.LocalDistributedCacheManager: file:/tmp/hadoop-root/mapred/local/1706948029145/hbase-hadoop-compat-2.1.3.jar

2024-02-03 16:13:49,831 INFO [main] mapred.LocalDistributedCacheManager: file:/tmp/hadoop-root/mapred/local/1706948029146/commons-lang3-3.6.jar

2024-02-03 16:13:49,831 INFO [main] mapred.LocalDistributedCacheManager: file:/tmp/hadoop-root/mapred/local/1706948029147/hbase-shaded-miscellaneous-2.1.0.jar

2024-02-03 16:13:49,831 INFO [main] mapred.LocalDistributedCacheManager: file:/tmp/hadoop-root/mapred/local/1706948029148/jackson-annotations-2.9.2.jar

2024-02-03 16:13:49,831 INFO [main] mapred.LocalDistributedCacheManager: file:/tmp/hadoop-root/mapred/local/1706948029149/hbase-metrics-api-2.1.3.jar

2024-02-03 16:13:49,831 INFO [main] mapred.LocalDistributedCacheManager: file:/tmp/hadoop-root/mapred/local/1706948029150/zookeeper-3.4.10.jar

2024-02-03 16:13:49,832 INFO [main] mapred.LocalDistributedCacheManager: file:/tmp/hadoop-root/mapred/local/1706948029151/metrics-core-3.2.1.jar

2024-02-03 16:13:49,832 INFO [main] mapred.LocalDistributedCacheManager: file:/tmp/hadoop-root/mapred/local/1706948029152/hbase-protocol-2.1.3.jar

2024-02-03 16:13:49,832 INFO [main] mapred.LocalDistributedCacheManager: file:/tmp/hadoop-root/mapred/local/1706948029153/hadoop-mapreduce-client-core-2.7.7.jar

2024-02-03 16:13:49,832 INFO [main] mapred.LocalDistributedCacheManager: file:/tmp/hadoop-root/mapred/local/1706948029154/hbase-metrics-2.1.3.jar

2024-02-03 16:13:49,832 INFO [main] mapred.LocalDistributedCacheManager: file:/tmp/hadoop-root/mapred/local/1706948029155/protobuf-java-2.5.0.jar

2024-02-03 16:13:49,832 INFO [main] mapred.LocalDistributedCacheManager: file:/tmp/hadoop-root/mapred/local/1706948029156/hbase-mapreduce-2.1.3.jar

2024-02-03 16:13:49,832 INFO [main] mapred.LocalDistributedCacheManager: file:/tmp/hadoop-root/mapred/local/1706948029157/hbase-shaded-protobuf-2.1.0.jar

2024-02-03 16:13:49,832 INFO [main] mapred.LocalDistributedCacheManager: file:/tmp/hadoop-root/mapred/local/1706948029158/hbase-protocol-shaded-2.1.3.jar

2024-02-03 16:13:49,832 INFO [main] mapred.LocalDistributedCacheManager: file:/tmp/hadoop-root/mapred/local/1706948029159/hbase-server-2.1.3.jar

2024-02-03 16:13:49,832 INFO [main] mapred.LocalDistributedCacheManager: file:/tmp/hadoop-root/mapred/local/1706948029160/hbase-client-2.1.3.jar

2024-02-03 16:13:49,832 INFO [main] mapred.LocalDistributedCacheManager: file:/tmp/hadoop-root/mapred/local/1706948029161/jackson-databind-2.9.2.jar

2024-02-03 16:13:49,833 INFO [main] mapred.LocalDistributedCacheManager: file:/tmp/hadoop-root/mapred/local/1706948029162/hbase-hadoop2-compat-2.1.3.jar

2024-02-03 16:13:49,833 INFO [main] mapred.LocalDistributedCacheManager: file:/tmp/hadoop-root/mapred/local/1706948029163/hbase-shaded-netty-2.1.0.jar

2024-02-03 16:13:49,833 INFO [main] mapred.LocalDistributedCacheManager: file:/tmp/hadoop-root/mapred/local/1706948029164/jackson-core-2.9.2.jar

2024-02-03 16:13:49,833 INFO [main] mapred.LocalDistributedCacheManager: file:/tmp/hadoop-root/mapred/local/1706948029165/htrace-core4-4.2.0-incubating.jar

2024-02-03 16:13:49,833 INFO [main] mapred.LocalDistributedCacheManager: file:/tmp/hadoop-root/mapred/local/1706948029166/hbase-zookeeper-2.1.3.jar

2024-02-03 16:13:49,833 INFO [main] mapred.LocalDistributedCacheManager: file:/tmp/hadoop-root/mapred/local/1706948029167/hadoop-common-2.7.7.jar

2024-02-03 16:13:49,833 INFO [main] mapred.LocalDistributedCacheManager: file:/tmp/hadoop-root/mapred/local/1706948029168/hbase-common-2.1.3.jar

2024-02-03 16:13:49,838 INFO [main] mapreduce.Job: The url to track the job: http://localhost:8080/

2024-02-03 16:13:49,839 INFO [main] mapreduce.Job: Running job: job_local1318778997_0001

2024-02-03 16:13:49,842 INFO [Thread-54] mapred.LocalJobRunner: OutputCommitter set in config null

2024-02-03 16:13:49,881 INFO [Thread-54] mapred.LocalJobRunner: OutputCommitter is org.apache.hadoop.hbase.mapreduce.TableOutputCommitter

2024-02-03 16:13:49,918 INFO [Thread-54] mapred.LocalJobRunner: Waiting for map tasks

2024-02-03 16:13:49,919 INFO [LocalJobRunner Map Task Executor #0] mapred.LocalJobRunner: Starting task: attempt_local1318778997_0001_m_000000_0

2024-02-03 16:13:49,980 INFO [LocalJobRunner Map Task Executor #0] mapred.Task: Using ResourceCalculatorProcessTree : [ ]

2024-02-03 16:13:49,986 INFO [LocalJobRunner Map Task Executor #0] mapred.MapTask: Processing split: file:/hbase/logs/export_data/data/hugegraph_c/part-m-00000:0+354

2024-02-03 16:13:49,997 INFO [ReadOnlyZKClient-localhost:2181@0x62cc5d7f] zookeeper.ZooKeeper: Initiating client connection, connectString=localhost:2181 sessionTimeout=90000 watcher=org.apache.hadoop.hbase.zookeeper.ReadOnlyZKClient$$Lambda$13/450416974@60e9aad6

2024-02-03 16:13:49,999 INFO [ReadOnlyZKClient-localhost:2181@0x62cc5d7f-SendThread(localhost:2181)] zookeeper.ClientCnxn: Opening socket connection to server localhost/127.0.0.1:2181. Will not attempt to authenticate using SASL (unknown error)

2024-02-03 16:13:49,999 INFO [ReadOnlyZKClient-localhost:2181@0x62cc5d7f-SendThread(localhost:2181)] zookeeper.ClientCnxn: Socket connection established to localhost/127.0.0.1:2181, initiating session

2024-02-03 16:13:50,003 INFO [ReadOnlyZKClient-localhost:2181@0x62cc5d7f-SendThread(localhost:2181)] zookeeper.ClientCnxn: Session establishment complete on server localhost/127.0.0.1:2181, sessionid = 0x18d68e7f0a80013, negotiated timeout = 90000

2024-02-03 16:13:50,012 INFO [LocalJobRunner Map Task Executor #0] mapreduce.TableOutputFormat: Created table instance for hugegraph:c

2024-02-03 16:13:50,032 INFO [LocalJobRunner Map Task Executor #0] mapreduce.Import: Setting up class org.apache.hadoop.hbase.mapreduce.Import$Importer mapper.

2024-02-03 16:13:50,032 INFO [LocalJobRunner Map Task Executor #0] mapreduce.Import: setting WAL durability to default.

2024-02-03 16:13:50,049 INFO [LocalJobRunner Map Task Executor #0] zookeeper.RecoverableZooKeeper: Process identifier=attempt_local1318778997_0001_m_000000_0 connecting to ZooKeeper ensemble=localhost:2181

2024-02-03 16:13:50,049 INFO [LocalJobRunner Map Task Executor #0] zookeeper.ZooKeeper: Initiating client connection, connectString=localhost:2181 sessionTimeout=90000 watcher=org.apache.hadoop.hbase.zookeeper.PendingWatcher@7761c0c5

2024-02-03 16:13:50,050 INFO [LocalJobRunner Map Task Executor #0-SendThread(localhost:2181)] zookeeper.ClientCnxn: Opening socket connection to server localhost/127.0.0.1:2181. Will not attempt to authenticate using SASL (unknown error)

2024-02-03 16:13:50,051 INFO [LocalJobRunner Map Task Executor #0-SendThread(localhost:2181)] zookeeper.ClientCnxn: Socket connection established to localhost/127.0.0.1:2181, initiating session

2024-02-03 16:13:50,054 INFO [LocalJobRunner Map Task Executor #0-SendThread(localhost:2181)] zookeeper.ClientCnxn: Session establishment complete on server localhost/127.0.0.1:2181, sessionid = 0x18d68e7f0a80014, negotiated timeout = 90000

2024-02-03 16:13:50,079 INFO [LocalJobRunner Map Task Executor #0] zookeeper.ZooKeeper: Session: 0x18d68e7f0a80014 closed

2024-02-03 16:13:50,081 INFO [LocalJobRunner Map Task Executor #0-EventThread] zookeeper.ClientCnxn: EventThread shut down for session: 0x18d68e7f0a80014

2024-02-03 16:13:50,098 INFO [LocalJobRunner Map Task Executor #0] mapred.LocalJobRunner:

2024-02-03 16:13:50,286 INFO [LocalJobRunner Map Task Executor #0] mapred.Task: Task:attempt_local1318778997_0001_m_000000_0 is done. And is in the process of committing

2024-02-03 16:13:50,287 INFO [ReadOnlyZKClient-localhost:2181@0x62cc5d7f] zookeeper.ZooKeeper: Session: 0x18d68e7f0a80013 closed

2024-02-03 16:13:50,288 INFO [ReadOnlyZKClient-localhost:2181@0x62cc5d7f-EventThread] zookeeper.ClientCnxn: EventThread shut down for session: 0x18d68e7f0a80013

2024-02-03 16:13:50,295 INFO [LocalJobRunner Map Task Executor #0] mapred.LocalJobRunner: map

2024-02-03 16:13:50,295 INFO [LocalJobRunner Map Task Executor #0] mapred.Task: Task 'attempt_local1318778997_0001_m_000000_0' done.

2024-02-03 16:13:50,299 INFO [LocalJobRunner Map Task Executor #0] mapred.Task: Final Counters for attempt_local1318778997_0001_m_000000_0: Counters: 15
    File System Counters
        FILE: Number of bytes read=37599184
        FILE: Number of bytes written=38260438
        FILE: Number of read operations=0
        FILE: Number of large read operations=0
        FILE: Number of write operations=0
    Map-Reduce Framework
        Map input records=5
        Map output records=5
        Input split bytes=123
        Spilled Records=0
        Failed Shuffles=0
        Merged Map outputs=0
        GC time elapsed (ms)=0
        Total committed heap usage (bytes)=129761280
    File Input Format Counters
        Bytes Read=366
    File Output Format Counters
        Bytes Written=0

2024-02-03 16:13:50,299 INFO [LocalJobRunner Map Task Executor #0] mapred.LocalJobRunner: Finishing task: attempt_local1318778997_0001_m_000000_0

2024-02-03 16:13:50,300 INFO [Thread-54] mapred.LocalJobRunner: map task executor complete.

2024-02-03 16:13:50,841 INFO [main] mapreduce.Job: Job job_local1318778997_0001 running in uber mode : false

2024-02-03 16:13:50,844 INFO [main] mapreduce.Job: map 100% reduce 0%

2024-02-03 16:13:50,846 INFO [main] mapreduce.Job: Job job_local1318778997_0001 completed successfully

2024-02-03 16:13:50,863 INFO [main] mapreduce.Job: Counters: 15
    File System Counters
        FILE: Number of bytes read=37599184
        FILE: Number of bytes written=38260438
        FILE: Number of read operations=0
        FILE: Number of large read operations=0
        FILE: Number of write operations=0
    Map-Reduce Framework
        Map input records=5
        Map output records=5
        Input split bytes=123
        Spilled Records=0
        Failed Shuffles=0
        Merged Map outputs=0
        GC time elapsed (ms)=0
        Total committed heap usage (bytes)=129761280
    File Input Format Counters
        Bytes Read=366
    File Output Format Counters
        Bytes Written=0

bash-4.4#

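If the import finishes without errors, it succeeded. If it reports that the namespace or the table does not exist, create the corresponding namespace and table first, then run the import again.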
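When many tables are missing on the target side, typing the create statements by hand gets tedious. The sketch below only prints HBase shell statements for review; it sends nothing to HBase. The function name build_create_statements and the column family name f are placeholders invented for this example. Take the real column families from describe on the source cluster.

```shell
#!/bin/sh
# Print HBase shell statements that create a namespace and its tables.
# Usage: build_create_statements <namespace> <column_family> <table>...
# The column family is a placeholder; use the one reported by
# `describe` on the source cluster.
build_create_statements() {
    ns="$1"
    cf="$2"
    shift 2
    printf "create_namespace '%s'\n" "$ns"
    for table in "$@"; do
        printf "create '%s:%s', '%s'\n" "$ns" "$table" "$cf"
    done
}

# Dry run: print the statements for three of the hugegraph tables.
build_create_statements hugegraph f c el g_ai
```

After reviewing the printed statements, they can be pasted into the HBase shell. If the namespace already exists, drop the create_namespace line, since creating it a second time is reported as an error.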

5. Batch import and export with scripts

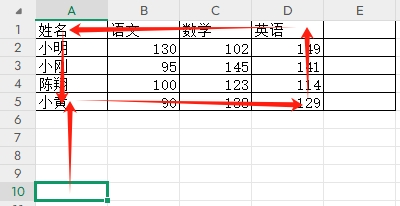

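First, confirm that the namespaces and table structures are identical in both environments. This is the precondition for everything that follows; without it, the import and export can fail in ways that are hard to diagnose.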
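One way to check part of this precondition is to save the table names from each environment into a text file, one name per line, and compare the two files. The following is a minimal sketch under that assumption. The function name missing_tables and the file names are hypothetical, and the check covers table names only, not column families or other table attributes.

```shell
#!/bin/sh
# Print tables that are in the source list but missing from the target list.
# Usage: missing_tables <source_list_file> <target_list_file>
# Each file holds one table name per line.
missing_tables() {
    src_sorted="$(mktemp)"
    dst_sorted="$(mktemp)"
    sort -u "$1" > "$src_sorted"
    sort -u "$2" > "$dst_sorted"
    # comm -23 keeps the lines that appear only in the first file.
    comm -23 "$src_sorted" "$dst_sorted"
    rm -f "$src_sorted" "$dst_sorted"
}

# Example call (hypothetical file names):
#   missing_tables source_tables.txt target_tables.txt
```

An empty result means every source table also exists on the target; any name that is printed has to be created there before the import.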

Next, get the tables to import. If there are many of them, run list_namespace_tables "hugegraph" to retrieve all the table names at once:

hbase(main):010:0* list_namespace_tables "hugegraph"

TABLE

c

el

g_ai

g_di

g_ei

g_fi

g_hi

g_ie

g_ii

g_li

g_oe

g_si

g_ui

g_v

g_vi

il

m

m_si

pk

s_ai

s_di

s_ei

s_fi

s_hi

s_ie

s_ii

s_li

s_oe

s_si

s_ui

s_v

s_vi

vl

33 row(s)

Took 0.0398 seconds

=> ["c", "el", "g_ai", "g_di", "g_ei", "g_fi", "g_hi", "g_ie", "g_ii", "g_li", "g_oe", "g_si", "g_ui", "g_v", "g_vi", "il", "m", "m_si", "pk", "s_ai", "s_di", "s_ei", "s_fi", "s_hi", "s_ie", "s_ii", "s_li", "s_oe", "s_si", "s_ui", "s_v", "s_vi", "vl"]

hbase(main):011:0>
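The last line of this output (the one starting with =>) already contains every table name, so it can be converted into a plain list instead of being copied by hand. The sketch below assumes the shell output was saved to a file; parse_table_list and the file names are names made up for this example.

```shell
#!/bin/sh
# Read HBase shell output on stdin and print one table name per line,
# taken from the last line of the form: => ["a", "b", ...]
parse_table_list() {
    grep '^=> \[' | tail -n 1 \
        | sed -e 's/^=> \[//' -e 's/]$//' -e 's/"//g' -e 's/, */,/g' \
        | tr ',' '\n'
}

# Example call (hypothetical file names):
#   parse_table_list < shell_output.txt > tables.txt
```

The resulting file has one table name per line, which is easier to loop over or compare than the bracketed list.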

Next, create a script named export_tables.sh that exports the tables listed above automatically. Its contents are as follows:

#!/bin/bash

# List parameter TABLES
TABLES='["c", "el", "g_ai", "g_di", "g_ei", "g_fi", "g_hi", "g_ie", "g_ii", "g_li", "g_oe", "g_si", "g_ui", "g_v", "g_vi", "il", "m", "m_si", "pk", "s_ai", "s_di", "s_ei", "s_fi", "s_hi", "s_ie", "s_ii", "s_li", "s_oe", "s_si", "s_ui", "s_v", "s_vi", "vl"]'

# Strip the square brackets and quotes from the list parameter
TABLES=${TABLES//[\[\]\"]/}

# Parse the list parameter into an array
IFS=', ' read -ra TABLES_ARRAY <<< "$TABLES"

# Loop over the list and build the export command for each table
for table in "${TABLES_ARRAY[@]}"; do
    export_cmd="hbase org.apache.hadoop.hbase.mapreduce.Export \"hugegraph:$table\" file:///hbase/logs/export_data/data/hugegraph_$table"
    echo "********** Executing command: $export_cmd **********"
    # Run the export command
    # eval "$export_cmd"
done

Do a trial run of the script first to check that the generated commands are correct. The output is as follows:

bash-4.4# bash export_tables.sh

********** Executing command: hbase org.apache.hadoop.hbase.mapreduce.Export "hugegraph:c" file:///hbase/logs/export_data/data/hugegraph_c **********

********** Executing command: hbase org.apache.hadoop.hbase.mapreduce.Export "hugegraph:el" file:///hbase/logs/export_data/data/hugegraph_el **********

********** Executing command: hbase org.apache.hadoop.hbase.mapreduce.Export "hugegraph:g_ai" file:///hbase/logs/export_data/data/hugegraph_g_ai **********

********** Executing command: hbase org.apache.hadoop.hbase.mapreduce.Export "hugegraph:g_di" file:///hbase/logs/export_data/data/hugegraph_g_di **********

********** Executing command: hbase org.apache.hadoop.hbase.mapreduce.Export "hugegraph:g_ei" file:///hbase/logs/export_data/data/hugegraph_g_ei **********

********** Executing command: hbase org.apache.hadoop.hbase.mapreduce.Export "hugegraph:g_fi" file:///hbase/logs/export_data/data/hugegraph_g_fi **********

********** Executing command: hbase org.apache.hadoop.hbase.mapreduce.Export "hugegraph:g_hi" file:///hbase/logs/export_data/data/hugegraph_g_hi **********

********** Executing command: hbase org.apache.hadoop.hbase.mapreduce.Export "hugegraph:g_ie" file:///hbase/logs/export_data/data/hugegraph_g_ie **********

********** Executing command: hbase org.apache.hadoop.hbase.mapreduce.Export "hugegraph:g_ii" file:///hbase/logs/export_data/data/hugegraph_g_ii **********

********** Executing command: hbase org.apache.hadoop.hbase.mapreduce.Export "hugegraph:g_li" file:///hbase/logs/export_data/data/hugegraph_g_li **********

********** Executing command: hbase org.apache.hadoop.hbase.mapreduce.Export "hugegraph:g_oe" file:///hbase/logs/export_data/data/hugegraph_g_oe **********

********** Executing command: hbase org.apache.hadoop.hbase.mapreduce.Export "hugegraph:g_si" file:///hbase/logs/export_data/data/hugegraph_g_si **********

********** Executing command: hbase org.apache.hadoop.hbase.mapreduce.Export "hugegraph:g_ui" file:///hbase/logs/export_data/data/hugegraph_g_ui **********

********** Executing command: hbase org.apache.hadoop.hbase.mapreduce.Export "hugegraph:g_v" file:///hbase/logs/export_data/data/hugegraph_g_v **********

********** Executing command: hbase org.apache.hadoop.hbase.mapreduce.Export "hugegraph:g_vi" file:///hbase/logs/export_data/data/hugegraph_g_vi **********

********** Executing command: hbase org.apache.hadoop.hbase.mapreduce.Export "hugegraph:il" file:///hbase/logs/export_data/data/hugegraph_il **********

********** Executing command: hbase org.apache.hadoop.hbase.mapreduce.Export "hugegraph:m" file:///hbase/logs/export_data/data/hugegraph_m **********

********** Executing command: hbase org.apache.hadoop.hbase.mapreduce.Export "hugegraph:m_si" file:///hbase/logs/export_data/data/hugegraph_m_si **********

********** Executing command: hbase org.apache.hadoop.hbase.mapreduce.Export "hugegraph:pk" file:///hbase/logs/export_data/data/hugegraph_pk **********

********** Executing command: hbase org.apache.hadoop.hbase.mapreduce.Export "hugegraph:s_ai" file:///hbase/logs/export_data/data/hugegraph_s_ai **********

********** Executing command: hbase org.apache.hadoop.hbase.mapreduce.Export "hugegraph:s_di" file:///hbase/logs/export_data/data/hugegraph_s_di **********

********** Executing command: hbase org.apache.hadoop.hbase.mapreduce.Export "hugegraph:s_ei" file:///hbase/logs/export_data/data/hugegraph_s_ei **********

********** Executing command: hbase org.apache.hadoop.hbase.mapreduce.Export "hugegraph:s_fi" file:///hbase/logs/export_data/data/hugegraph_s_fi **********

********** Executing command: hbase org.apache.hadoop.hbase.mapreduce.Export "hugegraph:s_hi" file:///hbase/logs/export_data/data/hugegraph_s_hi **********

********** Executing command: hbase org.apache.hadoop.hbase.mapreduce.Export "hugegraph:s_ie" file:///hbase/logs/export_data/data/hugegraph_s_ie **********

********** Executing command: hbase org.apache.hadoop.hbase.mapreduce.Export "hugegraph:s_ii" file:///hbase/logs/export_data/data/hugegraph_s_ii **********

********** Executing command: hbase org.apache.hadoop.hbase.mapreduce.Export "hugegraph:s_li" file:///hbase/logs/export_data/data/hugegraph_s_li **********

********** Executing command: hbase org.apache.hadoop.hbase.mapreduce.Export "hugegraph:s_oe" file:///hbase/logs/export_data/data/hugegraph_s_oe **********

********** Executing command: hbase org.apache.hadoop.hbase.mapreduce.Export "hugegraph:s_si" file:///hbase/logs/export_data/data/hugegraph_s_si **********

********** Executing command: hbase org.apache.hadoop.hbase.mapreduce.Export "hugegraph:s_ui" file:///hbase/logs/export_data/data/hugegraph_s_ui **********

********** Executing command: hbase org.apache.hadoop.hbase.mapreduce.Export "hugegraph:s_v" file:///hbase/logs/export_data/data/hugegraph_s_v **********

********** Executing command: hbase org.apache.hadoop.hbase.mapreduce.Export "hugegraph:s_vi" file:///hbase/logs/export_data/data/hugegraph_s_vi **********

********** Executing command: hbase org.apache.hadoop.hbase.mapreduce.Export "hugegraph:vl" file:///hbase/logs/export_data/data/hugegraph_vl **********

bash-4.4#

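Once the tables and the export directory look correct, uncomment the eval "$export_cmd" line and run the script again. The data of the tables above is then exported automatically.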
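With 33 tables, a single failed export is easy to miss in the log output. The following sketch is a variant that records which tables failed. It assumes the Export tool exits with a non-zero status on failure; run_for_tables, export_table and EXPORT_DIR are names introduced for this example, and the default directory is the one shown in the dry-run output above.

```shell
#!/bin/sh
# Run a command once per table and report the tables for which it failed.
# Usage: run_for_tables <command> <table>...
# <command> is called with the table name as its only argument.
run_for_tables() {
    cmd="$1"
    shift
    failed=""
    for table in "$@"; do
        if "$cmd" "$table"; then
            echo "OK: $table"
        else
            echo "FAILED: $table"
            failed="$failed $table"
        fi
    done
    if [ -n "$failed" ]; then
        echo "Tables that failed:$failed"
        return 1
    fi
    return 0
}

# Export directory; adjust it to your own mount point.
EXPORT_DIR="${EXPORT_DIR:-file:///hbase/logs/export_data/data}"

# Export a single table of the hugegraph namespace.
export_table() {
    hbase org.apache.hadoop.hbase.mapreduce.Export \
        "hugegraph:$1" "$EXPORT_DIR/hugegraph_$1"
}

# Example call:
#   run_for_tables export_table c el g_ai
```

Because the loop continues after a failure, one bad table does not block the rest, and the final summary lists exactly what has to be rerun.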

Batch import script: import_table_data.sh

#!/bin/bash

# List parameter TABLES
TABLES='["c", "el", "g_ai", "g_di", "g_ei", "g_fi", "g_hi", "g_ie", "g_ii", "g_li", "g_oe", "g_si", "g_ui", "g_v", "g_vi", "il", "m", "m_si", "pk", "s_ai", "s_di", "s_ei", "s_fi", "s_hi", "s_ie", "s_ii", "s_li", "s_oe", "s_si", "s_ui", "s_v", "s_vi", "vl"]'

# Strip the square brackets and quotes from the list parameter
TABLES=${TABLES//[\[\]\"]/}

# Parse the list parameter into an array
IFS=', ' read -ra TABLES_ARRAY <<< "$TABLES"

# Loop over the list and build the import command for each table
for table in "${TABLES_ARRAY[@]}"; do
    import_cmd="/opt/hbase/bin/hbase org.apache.hadoop.hbase.mapreduce.Import \"hugegraph:$table\" file:///hbase/logs/export_data/data/hugegraph_$table"
    echo " ****************** Executing command: $import_cmd ******************"
    # Run the import command
    # eval "$import_cmd"
done

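Run the import script (as with the export script, uncomment the eval "$import_cmd" line once the printed commands look correct). Import time depends directly on the data volume, so large tables take longer; just wait for the script to finish.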

If the script finishes without errors, the data import is complete.
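"No errors" can be backed up with a row count comparison. The sketch below assumes the row counts of each environment were written to a text file, one "table count" pair per line, for example collected by hand with the HBase shell count command. compare_counts and the file names are hypothetical, and the source file is assumed to be non-empty.

```shell
#!/bin/sh
# Compare two files of "<table> <row count>" lines and print differences.
# Usage: compare_counts <source_counts_file> <target_counts_file>
compare_counts() {
    awk '
        # First file: remember the source count of every table.
        NR == FNR { src[$1] = $2; next }
        # Second file: compare each target count with the source count.
        {
            seen[$1] = 1
            if (!($1 in src))
                print "only in target: " $1
            else if (src[$1] != $2)
                print "mismatch: " $1 " source=" src[$1] " target=" $2
        }
        # Tables that never showed up in the target file.
        END { for (t in src) if (!(t in seen)) print "only in source: " t }
    ' "$1" "$2"
}

# Example call (hypothetical file names):
#   compare_counts source_counts.txt target_counts.txt
```

No output means both files list the same tables with the same counts.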

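If the upper-layer application does not see the imported data right away, restart that service. If that still does not help, restart HBase as a last resort. For an HBase deployed with Docker, first make sure the HBase storage directory is mounted on the host; otherwise the imported data is lost again after the restart.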

相关文章:

HBase 数据导入导出

HBase 数据导入导出 1. 使用 Docker 部署 HBase2. HBase 命令查找3. 命令行操作 HBase3.1 HBase shell 命令3.2 查看命名空间3.3 查看命名空间下的表3.4 新建命名空间3.5 查看具体表结构3.6 创建表 4. HBase 数据导出、导入4.1 导出 HBase 中的某个表数据4.2 导入 HBase 中的某…...

(java版)排序算法----【冒泡,选择,插入,希尔,快速排序,归并排序,基数排序】超详细~~

目录 冒泡排序(BubbleSort): 代码详解: 冒泡排序的优化: 选择排序(SelectSort): 代码详解: 插入排序(InsertSort): 代码详解: 希尔排序(ShellSort): 法一…...

服务器托管的作用是什么?

服务器托管是将企业的服务器和相关设备托管到具有完善机房设施、高品质网络环境与运营经验的网络数据中心内,服务器托管在维护方面一般是由客户负责的,或者是由其他的授权人进行远程维护。 那服务器托管的作用都有哪些呢? 服务器托管不需要企…...

美团启动架构调整:聚力核心本地商业,提升科技与境外业务优先级

2月2日,美团CEO王兴发布内部邮件宣布新的组织架构调整。邮件显示,美团对核心本地商业相关多项业务进行了整合,并进一步提升了科技与国际化相关业务的优先级。 在核心本地商业上,美团对过去相对独立的事业群进行了整合。主要调整包…...

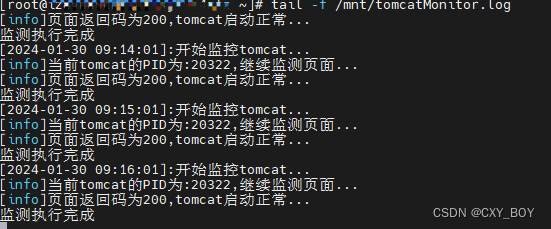

监测Tomcat项目宕机重启脚本(Linux)

1.准备好写好的脚本 #!/bin/sh # 获取tomcat的PID TOMCAT_PID$(ps -ef | grep tomcat | grep -v tomcatMonitor |grep -v grep | awk {print $2}) # tomcat的启动文件位置 START_TOMCAT/mnt/tomcat/bin/startup.sh # 需要监测的一个GET请求地址 MONITOR_URLhttp://localhost:…...

道可云元宇宙每日资讯|北京:推进元宇宙在智慧城市应用

道可云元宇宙每日简报(2024年2月2日)讯,今日元宇宙新鲜事有: 石狮市检察院“元宇宙智慧展馆”正式启用 为深入实施数字检察战略,主动探索元宇宙技术在未成年人检察、公益诉讼检察等方面的应用,打造集案件…...

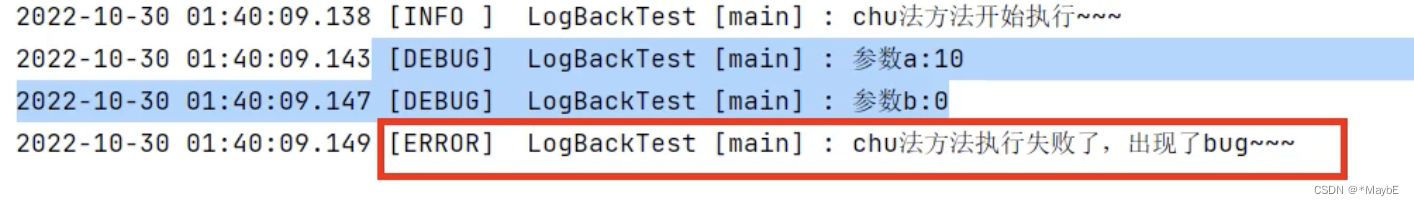

Logback学习

logback 1、logback介绍 Logback是由log4j创始人设计的另一个开源日志组件,性能比log4j要好。 lockback优点: 内核重写、测试充分、初始化内存加载更小,这一切让logback性能和log4j相比有诸多倍的提升。logback非常自然地直接实现了slf4j…...

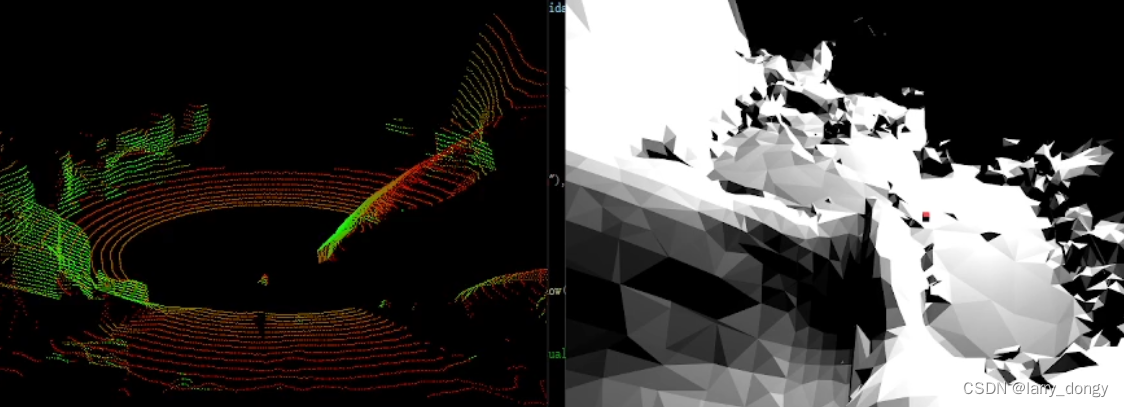

【Chrono Engine学习总结】2-可视化

由于Chrono的官方教程在一些细节方面解释的并不清楚,自己做了一些尝试,做学习总结。 0、基本概念 类型说明: Chrono的可视化包括两块:实时可视化,以及离线/后处理可视化。 其中,实时可视化,又…...

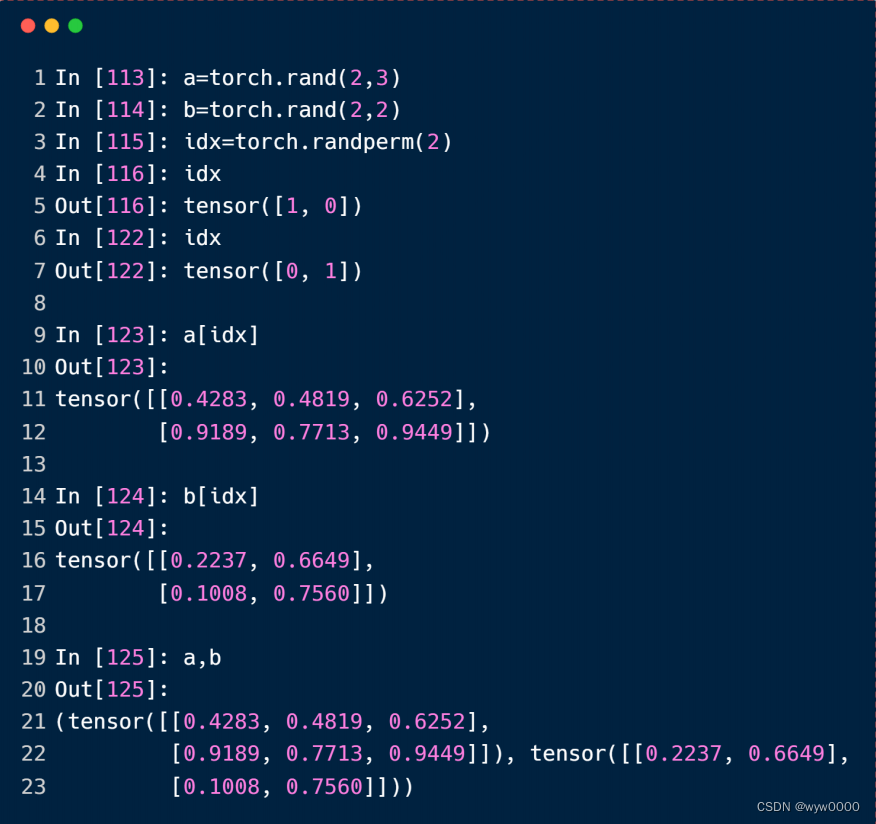

pytorch创建tensor

目录 1. 从numpy创建2. 从list创建3. 创建未初始化tensor4. 设置默认tensor创建类型5. rand/rand_like, randint6. randn生成正态分布随机数7. full8. arange/range9. linspace/logspace10. Ones/zeros/eye11. randperm 1. 从numpy创建 2. 从list创建 3. 创建未初始化tensor T…...

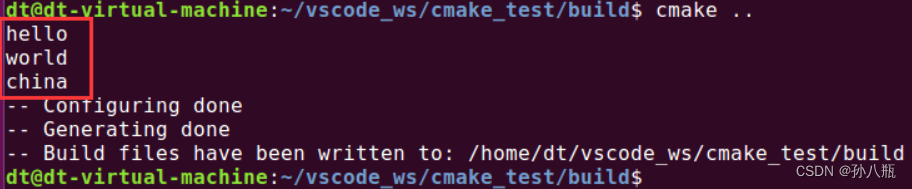

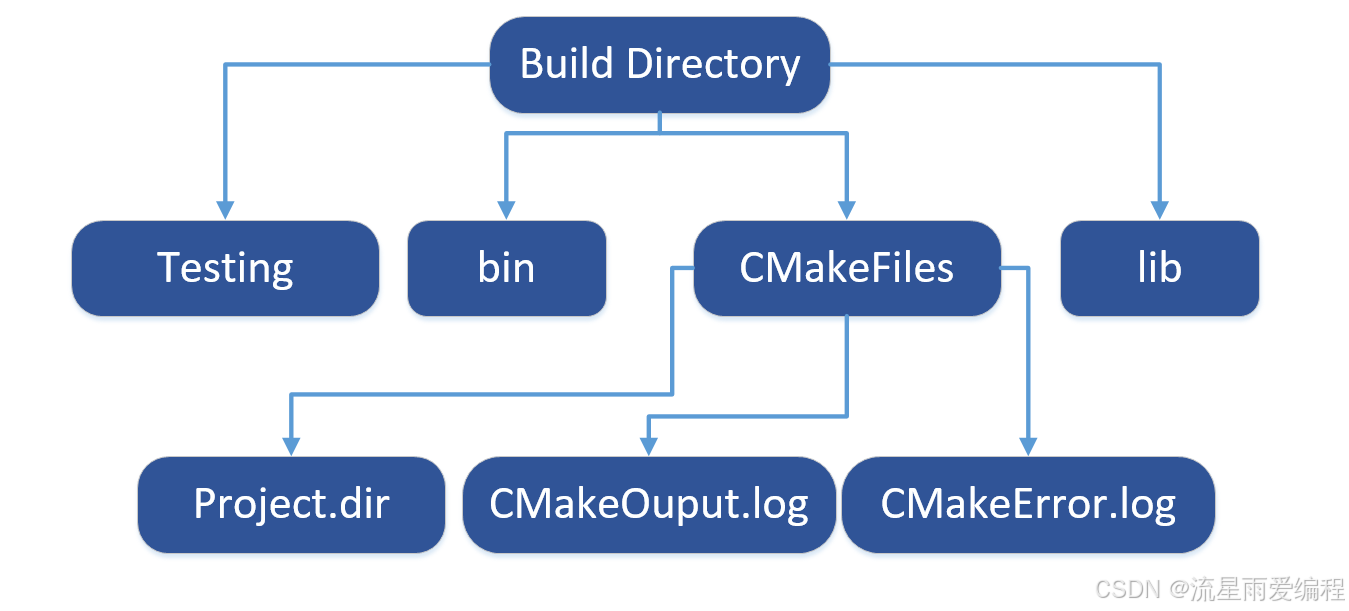

Cmake语法学习3:语法

1.双引号 1.1 命令参数 1)介绍 命令中多个参数之间使用空格进行分隔,而 cmake 会将双引号引起来的内容作为一个整体,当它当成一个参数,假如你的参数中有空格(空格是参数的一部分),那么就可以使…...

JavaScript 基础 - 第1天

介绍 掌握 JavaScript 的引入方式,初步认识 JavaScript 的作用 引入方式 JavaScript 程序不能独立运行,它需要被嵌入 HTML 中,然后浏览器才能执行 JavaScript 代码。通过 script 标签将 JavaScript 代码引入到 HTML 中,有两种方式…...

人口增长问题 T1063

#include<bits/stdc.h> using namespace std; int main(){int n;double x;cin>>x>>n;for(int i1;i<n;i){xx*1.001;}printf("%.4lf",x);return 0; }...

2024年Java算法面试题

2024年Java实战面试题(北京)_java 5 年 面试-CSDN博客 一、波菲那契递归 System.out.println("banc " banc(10)) public static int banc(int n){if( n0 ){return 0;}else if( n1 ){return 1;}else{return banc(n-1) banc(n-2);} } 二、冒…...

C#——三角形面积公式

已知三角形的三个边,求面积,可以使用海伦公式。 因此,可以执行得到三角形面积公式的计算方法代码如下: /** / <summary>* / 三角形面积公式* / </summary>* / <param name"a">边长a</param>*…...

tcpdump在手机上的使用

首先手机得root才可以,主要分析手机与手机的通信协议 我使用的是一加9pro, root方法参考一加全能盒子、一加全能工具箱官方网站——大侠阿木 (daxiaamu.com)https://optool.daxiaamu.com/index.php tcpdump,要安装在/data/local/tmp下要arm6…...

unity 导出H5

Unity 输出html5_mob649e8157aaee的技术博客_51CTO博客 Unity打包WebGL报Unable to parse Build/*.framework.js.gz This can happen if build compression was......._unable to load file build/out.framework.js.gz! che-CSDN博客...

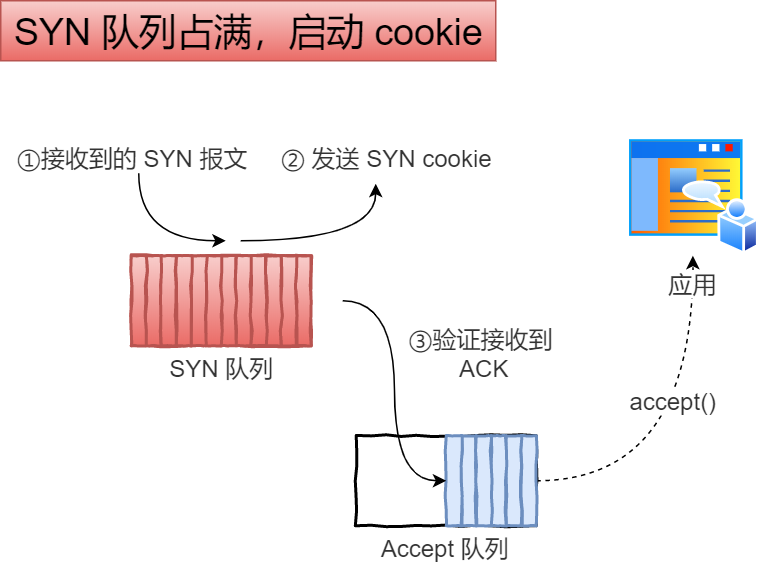

认识 SYN Flood 攻击

文章目录 1.什么是 SYN Flood 攻击?2.半连接与全连接队列3.如何防范 SYN Flood 攻击?增大半连接队列开启 SYN Cookie减少 SYNACK 重传次数 参考文献 1.什么是 SYN Flood 攻击? SYN Flood 是互联网上最原始、最经典的 DDoS(Distri…...

Node需要了解的知识

Node能执行javascript的原因。 浏览器之所以能执行Javascript代码,因为内部含有v8引擎。Node.js基于v8引擎封装,因此可以执行javascript代码。Node.js环境没有DOM和BOM。DOM能访问HTML所有的节点对象,BOM是浏览器对象。但是node中提供了cons…...

网络服务综合实验项目