鲲鹏arm64架构下安装KubeSphere

鲲鹏arm64架构下安装KubeSphere

官方参考文档: https://kubesphere.io/zh/docs/quick-start/minimal-kubesphere-on-k8s/

在Kubernetes基础上最小化安装 KubeSphere

前提条件

官方参考文档: https://kubesphere.io/zh/docs/installing-on-kubernetes/introduction/prerequisites/

-

如需在 Kubernetes 上安装 KubeSphere 3.2.1,您的 Kubernetes 版本必须为:1.19.x、1.20.x、1.21.x 或 1.22.x(实验性支持)。

-

确保您的机器满足最低硬件要求:CPU > 1 核,内存 > 2 GB。

-

在安装之前,需要配置 Kubernetes 集群中的默认存储类型。

uname -a

显示架构如下:

Linux localhost.localdomain 4.14.0-115.el7a.0.1.aarch64 #1 SMP Sun Nov 25 20:54:21 UTC 2018 aarch64 aarch64 aarch64 GNU/Linuxkubectl version

显示版本如下:

Client Version: version.Info{Major:"1", Minor:"19", GitVersion:"v1.19.3", GitCommit:"1e11e4a2108024935ecfcb2912226cedeafd99df", GitTreeState:"clean", BuildDate:"2020-10-14T12:50:19Z", GoVersion:"go1.15.2", Compiler:"gc", Platform:"linux/arm64"}

Server Version: version.Info{Major:"1", Minor:"19", GitVersion:"v1.19.3", GitCommit:"1e11e4a2108024935ecfcb2912226cedeafd99df", GitTreeState:"clean", BuildDate:"2020-10-14T12:41:49Z", GoVersion:"go1.15.2", Compiler:"gc", Platform:"linux/arm64"}free -g

显示内存如下:total used free shared buff/cache available

Mem: 127 48 43 1 34 57

Swap: 0 0 0kubectl get sc

显示存储默认 StorageClass如下:

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

glusterfs (default) cluster.local/nfs-client-nfs-client-provisioner Delete Immediate true 24h

nfs-storage (default) k8s-sigs.io/nfs-subdir-external-provisioner Delete Immediate false 23h

部署 KubeSphere

确保您的机器满足安装的前提条件之后,可以按照以下步骤安装 KubeSphere。

1 执行以下命令开始安装:

kubectl apply -f https://github.com/kubesphere/ks-installer/releases/download/v3.2.1/kubesphere-installer.yamlkubectl apply -f https://github.com/kubesphere/ks-installer/releases/download/v3.2.1/cluster-configuration.yaml

2 检查安装日志:

kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l app=ks-install -o jsonpath='{.items[0].metadata.name}') -f报错如下:

2022-02-23T16:02:30+08:00 INFO : shell-operator latest

2022-02-23T16:02:30+08:00 INFO : HTTP SERVER Listening on 0.0.0.0:9115

2022-02-23T16:02:30+08:00 INFO : Use temporary dir: /tmp/shell-operator

2022-02-23T16:02:30+08:00 INFO : Initialize hooks manager ...

2022-02-23T16:02:30+08:00 INFO : Search and load hooks ...

2022-02-23T16:02:30+08:00 INFO : Load hook config from '/hooks/kubesphere/installRunner.py'

2022-02-23T16:02:31+08:00 INFO : Load hook config from '/hooks/kubesphere/schedule.sh'

2022-02-23T16:02:31+08:00 INFO : Initializing schedule manager ...

2022-02-23T16:02:31+08:00 INFO : KUBE Init Kubernetes client

2022-02-23T16:02:31+08:00 INFO : KUBE-INIT Kubernetes client is configured successfully

2022-02-23T16:02:31+08:00 INFO : MAIN: run main loop

2022-02-23T16:02:31+08:00 INFO : MAIN: add onStartup tasks

2022-02-23T16:02:31+08:00 INFO : QUEUE add all HookRun@OnStartup

2022-02-23T16:02:31+08:00 INFO : Running schedule manager ...

2022-02-23T16:02:31+08:00 INFO : MSTOR Create new metric shell_operator_live_ticks

2022-02-23T16:02:31+08:00 INFO : MSTOR Create new metric shell_operator_tasks_queue_length

2022-02-23T16:02:31+08:00 ERROR : error getting GVR for kind 'ClusterConfiguration': Get "http://localhost:8080/api?timeout=32s": dial tcp 127.0.0.1:8080: connect: connection refused

2022-02-23T16:02:31+08:00 ERROR : Enable kube events for hooks error: Get "http://localhost:8080/api?timeout=32s": dial tcp 127.0.0.1:8080: connect: connection refused

2022-02-23T16:02:34+08:00 INFO : TASK_RUN Exit: program halts.

原因是8080端口,k8s默认不对外开放

开放8080端口

8080端口访问不了,k8s开放8080端口

https://www.cnblogs.com/liuxingxing/p/13399729.html

https://blog.csdn.net/qq_29274865/article/details/108953259

进入 cd /etc/kubernetes/manifests/vim kube-apiserver.yaml

添加

- --insecure-port=8080- --insecure-bind-address=0.0.0.0重启apiserver

docker restart apiserver容器id

重新执行上面的命令

kubectl delete -f https://github.com/kubesphere/ks-installer/releases/download/v3.2.1/cluster-configuration.yaml

kubectl delete -f https://github.com/kubesphere/ks-installer/releases/download/v3.2.1/kubesphere-installer.yamlkubectl apply -f https://github.com/kubesphere/ks-installer/releases/download/v3.2.1/cluster-configuration.yaml

kubectl apply -f https://github.com/kubesphere/ks-installer/releases/download/v3.2.1/kubesphere-installer.yaml

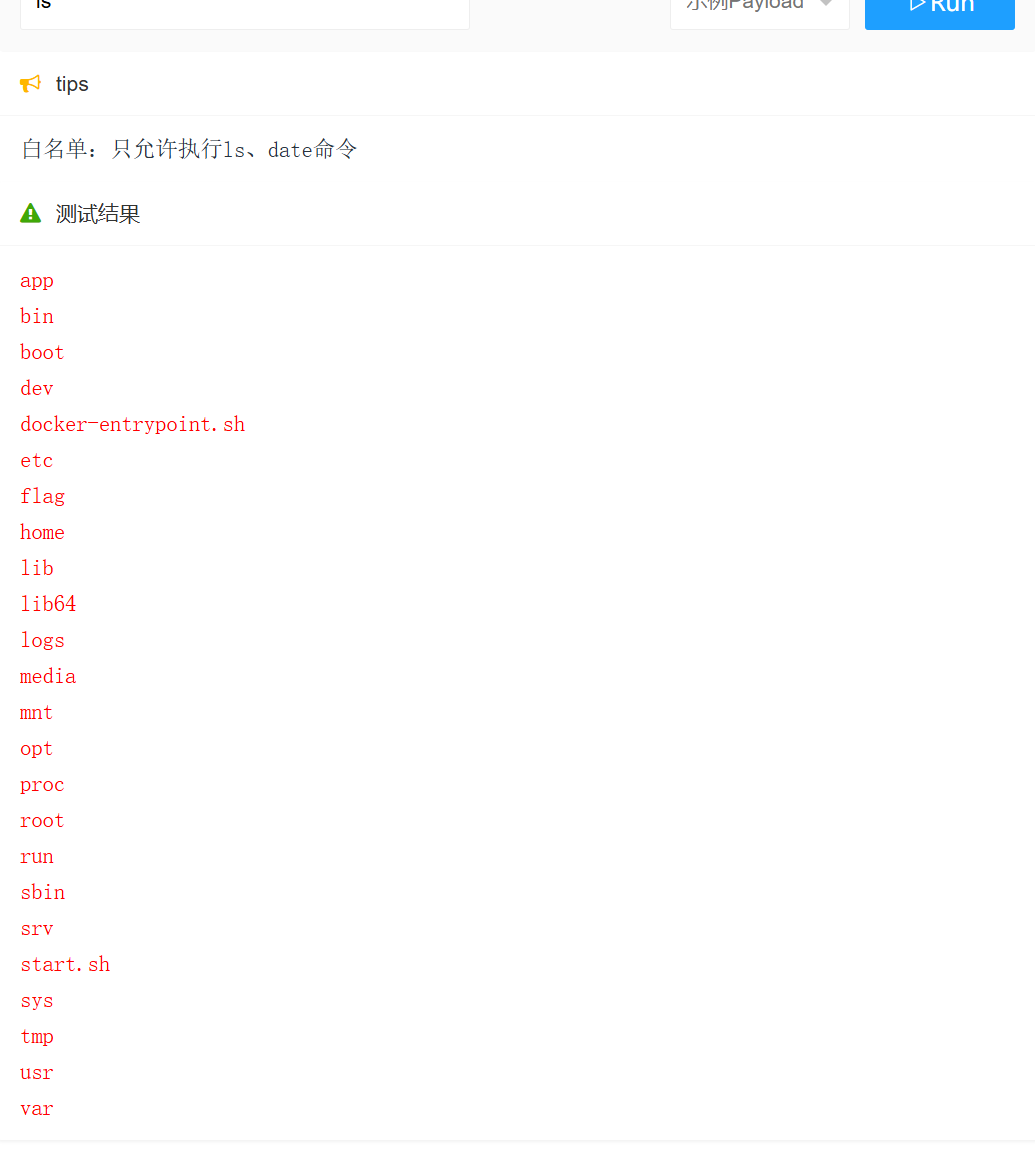

3 使用 kubectl get pod --all-namespaces 查看所有 Pod 是否在 KubeSphere 的相关命名空间中正常运行。如果是,请通过以下命令检查控制台的端口(默认为 30880):

kubectl get pod --all-namespaces

显示Error

kubesphere-system ks-installer-d8b656fb4-gb2qg 0/1 Error 0 27s查看日志

kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l app=ks-install -o jsonpath='{.items[0].metadata.name}') -f查看日志报错如下:

standard_init_linux.go:228: exec user process caused: exec format error

原因是arm64架构报错,运行不起来

https://hub.docker.com/

hub官方搜索下镜像有没有arm64的

根本就没有arm64架构的镜像,找了一圈,发现有个:kubespheredev/ks-installer:v3.0.0-arm64 试试

kubectl delete -f https://github.com/kubesphere/ks-installer/releases/download/v3.2.1/cluster-configuration.yaml

kubectl delete -f https://github.com/kubesphere/ks-installer/releases/download/v3.2.1/kubesphere-installer.yamlkubectl apply -f https://github.com/kubesphere/ks-installer/releases/download/v3.2.1/cluster-configuration.yaml

kubectl apply -f https://github.com/kubesphere/ks-installer/releases/download/v3.2.1/kubesphere-installer.yaml下载镜像

docker pull kubespheredev/ks-installer:v3.0.0-arm64在使用rancher去修改deployments文件,或下载yaml文件去修改

由于我的k8s master节点是arm64架构的,kubesphere/ks-installer 官方没有arm64的镜像

kubesphere/ks-installer:v3.2.1修改为 kubespheredev/ks-installer:v3.0.0-arm64

节点选择指定master节点部署报错如下:

TASK [common : Kubesphere | Creating common component manifests] ***************

failed: [localhost] (item={'path': 'etcd', 'file': 'etcd.yaml'}) => {"ansible_loop_var": "item", "changed": false, "item": {"file": "etcd.yaml", "path": "etcd"}, "msg": "AnsibleUndefinedVariable: 'dict object' has no attribute 'etcdVolumeSize'"}

failed: [localhost] (item={'name': 'mysql', 'file': 'mysql.yaml'}) => {"ansible_loop_var": "item", "changed": false, "item": {"file": "mysql.yaml", "name": "mysql"}, "msg": "AnsibleUndefinedVariable: 'dict object' has no attribute 'mysqlVolumeSize'"}

failed: [localhost] (item={'path': 'redis', 'file': 'redis.yaml'}) => {"ansible_loop_var": "item", "changed": false, "item": {"file": "redis.yaml", "path": "redis"}, "msg": "AnsibleUndefinedVariable: 'dict object' has no attribute 'redisVolumSize'"}原因是版本对不上,使用v3.2.1版本,镜像确是v3.0.0-arm64

下面使用v3.0.0版本试试

使用v3.0.0版本

kubectl apply -f https://github.com/kubesphere/ks-installer/releases/download/v3.0.0/kubesphere-installer.yamlkubectl apply -f https://github.com/kubesphere/ks-installer/releases/download/v3.0.0/cluster-configuration.yaml

在使用rancher去修改deployments文件或者下载v3.0.0的yaml文件,并修改

kubesphere-installer.yaml

cluster-configuration.yamlkubesphere-installer.yaml文件修改如下

由于我的k8s master节点是arm64架构的,kubesphere/ks-installer 官方没有arm64的镜像

kubesphere/ks-installer:v3.0.0修改为 kubespheredev/ks-installer:v3.0.0-arm64

节点选择指定master节点部署cluster-configuration.yaml文件修改

endpointIps: 192.168.xxx.xx 修改为k8s master节点IP

不报错,ks-installer也跑起来了,但是其他的没有arm64镜像,其他deployments都跑不起来 ks-controller-manager报错

把所有deployments镜像都换成arm64架构的,节点选择k8s master节点

kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l app=ks-install -o jsonpath='{.items[0].metadata.name}') -fkubectl get pod --all-namespacesdocker pull kubespheredev/ks-installer:v3.0.0-arm64

docker pull bobsense/redis-arm64 要去掉挂载的存储pvc,不然报错

docker pull kubespheredev/ks-controller-manager:v3.2.1

docker pull kubespheredev/ks-console:v3.0.0-arm64

docker pull kubespheredev/ks-apiserver:v3.2.0除了ks-controller-manager,其他都运行起来了kubectl get svc/ks-console -n kubesphere-system

显示如下:

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ks-console NodePort 10.1.4.225 <none> 80:30880/TCP 6h12m再次查看日志

kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l app=ks-install -o jsonpath='{.items[0].metadata.name}') -f

最后显示如下:以为成功了

Console: http://192.168.xxx.xxx:30880

Account: admin

Password: P@88w0rd

kubectl logs ks-controller-manager-646b8fff9f-pd7w7 --namespace=kubesphere-system

ks-controller-manager报错如下:

W0224 11:36:55.643227 1 client_config.go:615] Neither --kubeconfig nor --master was specified. Using the inClusterConfig. This might not work.

E0224 11:36:55.649703 1 server.go:101] failed to connect to ldap service, please check ldap status, error: factory is not able to fill the pool: LDAP Result Code 200 "Network Error": dial tcp: lookup openldap.kubesphere-system.svc on 10.1.0.10:53: no such host

登录报错: 可能是ks-controller-manager没成功运行

日志如下:

request to http://ks-apiserver.kubesphere-system.svc/oauth/token failed, reason: connect ECONNREFUSED 10.1.146.137:80

应该是openldap没启动成功的原因

查看StatefulSets的openldap日志

提示有2个StorageClasses,删除一个之后就运行成功了

persistentvolumeclaims "openldap-pvc-openldap-0" is forbidden: Internal error occurred: 2 default StorageClasses were found

删除StorageClasses

查看

kubectl get sc

显示如下:之前有2条记录,是因为我删除了一条

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

nfs-storage (default) k8s-sigs.io/nfs-subdir-external-provisioner Delete Immediate false 40h删除

kubectl delete sc glusterfs

删除StorageClasses 后,只有一个StorageClasses 了, openldap正常运行了,ks-controller-manager也正常运行了,有希望了

所有pod都正常运行了,但是登录还是一样的有问题.查看日志

ks-apiserver-556f698dfb-5p2fc

日志如下:

E0225 10:40:17.460271 1 reflector.go:138] pkg/client/informers/externalversions/factory.go:128: Failed to watch *v1alpha1.HelmApplication: failed to list *v1alpha1.HelmApplication: the server could not find the requested resource (get helmapplications.application.kubesphere.io)

E0225 10:40:17.548278 1 reflector.go:138] pkg/client/informers/externalversions/factory.go:128: Failed to watch *v1alpha1.HelmRepo: failed to list *v1alpha1.HelmRepo: the server could not find the requested resource (get helmrepos.application.kubesphere.io)

E0225 10:40:17.867914 1 reflector.go:138] pkg/models/openpitrix/interface.go:89: Failed to watch *v1alpha1.HelmCategory: failed to list *v1alpha1.HelmCategory: the server could not find the requested resource (get helmcategories.application.kubesphere.io)

E0225 10:40:18.779136 1 reflector.go:138] pkg/client/informers/externalversions/factory.go:128: Failed to watch *v1alpha1.HelmRelease: failed to list *v1alpha1.HelmRelease: the server could not find the requested resource (get helmreleases.application.kubesphere.io)

E0225 10:40:19.870229 1 reflector.go:138] pkg/models/openpitrix/interface.go:90: Failed to watch *v1alpha1.HelmRepo: failed to list *v1alpha1.HelmRepo: the server could not find the requested resource (get helmrepos.application.kubesphere.io)

E0225 10:40:20.747617 1 reflector.go:138] pkg/client/informers/externalversions/factory.go:128: Failed to watch *v1alpha1.HelmCategory: failed to list *v1alpha1.HelmCategory: the server could not find the requested resource (get helmcategories.application.kubesphere.io)

E0225 10:40:23.130177 1 reflector.go:138] pkg/client/informers/externalversions/factory.go:128: Failed to watch *v1alpha1.HelmApplicationVersion: failed to list *v1alpha1.HelmApplicationVersion: the server could not find the requested resource (get helmapplicationversions.application.kubesphere.io)

ks-console-65f46d7649-5zt8c

日志如下:

<-- GET / 2022/02/25T10:41:28.642

{ UnauthorizedError: Not Login

at Object.throw (/opt/kubesphere/console/server/server.js:31701:11)

at getCurrentUser (/opt/kubesphere/console/server/server.js:9037:14)

at renderView (/opt/kubesphere/console/server/server.js:23231:46)

at dispatch (/opt/kubesphere/console/server/server.js:6870:32)

at next (/opt/kubesphere/console/server/server.js:6871:18)

at /opt/kubesphere/console/server/server.js:70183:16

at dispatch (/opt/kubesphere/console/server/server.js:6870:32)

at next (/opt/kubesphere/console/server/server.js:6871:18)

at /opt/kubesphere/console/server/server.js:77986:37

at dispatch (/opt/kubesphere/console/server/server.js:6870:32)

at next (/opt/kubesphere/console/server/server.js:6871:18)

at /opt/kubesphere/console/server/server.js:70183:16

at dispatch (/opt/kubesphere/console/server/server.js:6870:32)

at next (/opt/kubesphere/console/server/server.js:6871:18)

at /opt/kubesphere/console/server/server.js:77986:37

at dispatch (/opt/kubesphere/console/server/server.js:6870:32) message: 'Not Login' }

--> GET / 302 6ms 43b 2022/02/25T10:41:28.648

<-- GET /login 2022/02/25T10:41:28.649

{ FetchError: request to http://ks-apiserver.kubesphere-system.svc/kapis/config.kubesphere.io/v1alpha2/configs/oauth failed, reason: connect ECONNREFUSED 10.1.144.129:80

at ClientRequest.<anonymous> (/opt/kubesphere/console/server/server.js:80604:11)

at ClientRequest.emit (events.js:198:13)

at Socket.socketErrorListener (_http_client.js:392:9)

at Socket.emit (events.js:198:13)

at emitErrorNT (internal/streams/destroy.js:91:8)

at emitErrorAndCloseNT (internal/streams/destroy.js:59:3)

at process._tickCallback (internal/process/next_tick.js:63:19)

message:

'request to http://ks-apiserver.kubesphere-system.svc/kapis/config.kubesphere.io/v1alpha2/configs/oauth failed, reason: connect ECONNREFUSED 10.1.144.129:80',

type: 'system',

errno: 'ECONNREFUSED',

code: 'ECONNREFUSED' }

--> GET /login 200 7ms 14.82kb 2022/02/25T10:41:28.656

ks-controller-manager-548545f4b4-w4wmx

日志如下:

E0225 10:41:41.633013 1 reflector.go:138] github.com/kubernetes-csi/external-snapshotter/client/v4/informers/externalversions/factory.go:117: Failed to watch *v1.VolumeSnapshotClass: failed to list *v1.VolumeSnapshotClass: the server could not find the requested resource (get volumesnapshotclasses.snapshot.storage.k8s.io)

E0225 10:41:41.634349 1 reflector.go:138] pkg/client/informers/externalversions/factory.go:128: Failed to watch *v1alpha2.GroupBinding: failed to list *v1alpha2.GroupBinding: the server could not find the requested resource (get groupbindings.iam.kubesphere.io)

E0225 10:41:41.722377 1 reflector.go:138] pkg/client/informers/externalversions/factory.go:128: Failed to watch *v1alpha2.Group: failed to list *v1alpha2.Group: the server could not find the requested resource (get groups.iam.kubesphere.io)

E0225 10:41:42.636612 1 reflector.go:138] pkg/client/informers/externalversions/factory.go:128: Failed to watch *v1alpha2.GroupBinding: failed to list *v1alpha2.GroupBinding: the server could not find the requested resource (get groupbindings.iam.kubesphere.io)

E0225 10:41:42.875652 1 reflector.go:138] pkg/client/informers/externalversions/factory.go:128: Failed to watch *v1alpha2.Group: failed to list *v1alpha2.Group: the server could not find the requested resource (get groups.iam.kubesphere.io)

E0225 10:41:42.964819 1 reflector.go:138] github.com/kubernetes-csi/external-snapshotter/client/v4/informers/externalversions/factory.go:117: Failed to watch *v1.VolumeSnapshotClass: failed to list *v1.VolumeSnapshotClass: the server could not find the requested resource (get volumesnapshotclasses.snapshot.storage.k8s.io)

E0225 10:41:45.177641 1 reflector.go:138] pkg/client/informers/externalversions/factory.go:128: Failed to watch *v1alpha2.GroupBinding: failed to list *v1alpha2.GroupBinding: the server could not find the requested resource (get groupbindings.iam.kubesphere.io)

E0225 10:41:45.327393 1 reflector.go:138] pkg/client/informers/externalversions/factory.go:128: Failed to watch *v1alpha2.Group: failed to list *v1alpha2.Group: the server could not find the requested resource (get groups.iam.kubesphere.io)

E0225 10:41:46.164454 1 reflector.go:138] github.com/kubernetes-csi/external-snapshotter/client/v4/informers/externalversions/factory.go:117: Failed to watch *v1.VolumeSnapshotClass: failed to list *v1.VolumeSnapshotClass: the server could not find the requested resource (get volumesnapshotclasses.snapshot.storage.k8s.io)

E0225 10:41:49.011152 1 reflector.go:138] pkg/client/informers/externalversions/factory.go:128: Failed to watch *v1alpha2.Group: failed to list *v1alpha2.Group: the server could not find the requested resource (get groups.iam.kubesphere.io)

E0225 10:41:50.299769 1 reflector.go:138] github.com/kubernetes-csi/external-snapshotter/client/v4/informers/externalversions/factory.go:117: Failed to watch *v1.VolumeSnapshotClass: failed to list *v1.VolumeSnapshotClass: the server could not find the requested resource (get volumesnapshotclasses.snapshot.storage.k8s.io)

E0225 10:41:50.851105 1 reflector.go:138] pkg/client/informers/externalversions/factory.go:128: Failed to watch *v1alpha2.GroupBinding: failed to list *v1alpha2.GroupBinding: the server could not find the requested resource (get groupbindings.iam.kubesphere.io)

E0225 10:41:56.831265 1 helm_category_controller.go:158] get helm category: ctg-uncategorized failed, error: no matches for kind "HelmCategory" in version "application.kubesphere.io/v1alpha1"

E0225 10:41:56.923487 1 helm_category_controller.go:176] create helm category: uncategorized failed, error: no matches for kind "HelmCategory" in version "application.kubesphere.io/v1alpha1"

E0225 10:41:58.696406 1 reflector.go:138] pkg/client/informers/externalversions/factory.go:128: Failed to watch *v1alpha2.GroupBinding: failed to list *v1alpha2.GroupBinding: the server could not find the requested resource (get groupbindings.iam.kubesphere.io)

E0225 10:41:59.876998 1 reflector.go:138] pkg/client/informers/externalversions/factory.go:128: Failed to watch *v1alpha2.Group: failed to list *v1alpha2.Group: the server could not find the requested resource (get groups.iam.kubesphere.io)

E0225 10:42:01.266422 1 reflector.go:138] github.com/kubernetes-csi/external-snapshotter/client/v4/informers/externalversions/factory.go:117: Failed to watch *v1.VolumeSnapshotClass: failed to list *v1.VolumeSnapshotClass: the server could not find the requested resource (get volumesnapshotclasses.snapshot.storage.k8s.io)

E0225 10:42:11.724869 1 helm_category_controller.go:158] get helm category: ctg-uncategorized failed, error: no matches for kind "HelmCategory" in version "application.kubesphere.io/v1alpha1"

E0225 10:42:11.929837 1 helm_category_controller.go:176] create helm category: uncategorized failed, error: no matches for kind "HelmCategory" in version "application.kubesphere.io/v1alpha1"

E0225 10:42:12.355338 1 reflector.go:138] pkg/client/informers/externalversions/factory.go:128: Failed to watch *v1alpha2.GroupBinding: failed to list *v1alpha2.GroupBinding: the server could not find the requested resource (get groupbindings.iam.kubesphere.io)

I0225 10:42:15.625073 1 leaderelection.go:253] successfully acquired lease kubesphere-system/ks-controller-manager-leader-election

I0225 10:42:15.625301 1 globalrolebinding_controller.go:122] Starting GlobalRoleBinding controller

I0225 10:42:15.625343 1 globalrolebinding_controller.go:125] Waiting for informer caches to sync

I0225 10:42:15.625365 1 globalrolebinding_controller.go:137] Starting workers

I0225 10:42:15.625351 1 snapshotclass_controller.go:102] Waiting for informer cache to sync.

I0225 10:42:15.625391 1 globalrolebinding_controller.go:143] Started workers

I0225 10:42:15.625380 1 capability_controller.go:110] Waiting for informer caches to sync

I0225 10:42:15.625449 1 capability_controller.go:123] Started workers

I0225 10:42:15.625447 1 basecontroller.go:59] Starting controller: loginrecord-controller

I0225 10:42:15.625478 1 globalrolebinding_controller.go:205] Successfully synced key:authenticated

I0225 10:42:15.625481 1 basecontroller.go:60] Waiting for informer caches to sync for: loginrecord-controller

I0225 10:42:15.625488 1 clusterrolebinding_controller.go:114] Starting ClusterRoleBinding controller

I0225 10:42:15.625546 1 clusterrolebinding_controller.go:117] Waiting for informer caches to sync

I0225 10:42:15.625540 1 basecontroller.go:59] Starting controller: group-controller

I0225 10:42:15.625515 1 basecontroller.go:59] Starting controller: groupbinding-controller

I0225 10:42:15.625596 1 basecontroller.go:60] Waiting for informer caches to sync for: group-controller

I0225 10:42:15.625615 1 basecontroller.go:60] Waiting for informer caches to sync for: groupbinding-controller

I0225 10:42:15.625579 1 clusterrolebinding_controller.go:122] Starting workers

I0225 10:42:15.625480 1 certificatesigningrequest_controller.go:109] Starting CSR controller

I0225 10:42:15.625681 1 certificatesigningrequest_controller.go:112] Waiting for csrInformer caches to sync

就差那么一点点就可以了,等下次解决了在补上吧

参考链接:

https://kubesphere.io/zh/docs/quick-start/minimal-kubesphere-on-k8s/

https://www.yuque.com/leifengyang/oncloud/gz1sls#BIxCW

相关文章:

鲲鹏arm64架构下安装KubeSphere

鲲鹏arm64架构下安装KubeSphere 官方参考文档: https://kubesphere.io/zh/docs/quick-start/minimal-kubesphere-on-k8s/ 在Kubernetes基础上最小化安装 KubeSphere 前提条件 官方参考文档: https://kubesphere.io/zh/docs/installing-on-kubernetes/introduction/prerequi…...

python 函数-02-返回值注释格式

01 函数返回值 1)python中函数可以没有返回值,也可以有通过return的方式 – 【特殊性,区别于java c#等】 2)返回值可以是一个或者多个,多个时通过逗号隔开 – 【特殊性,区别于java c#等】 3)多…...

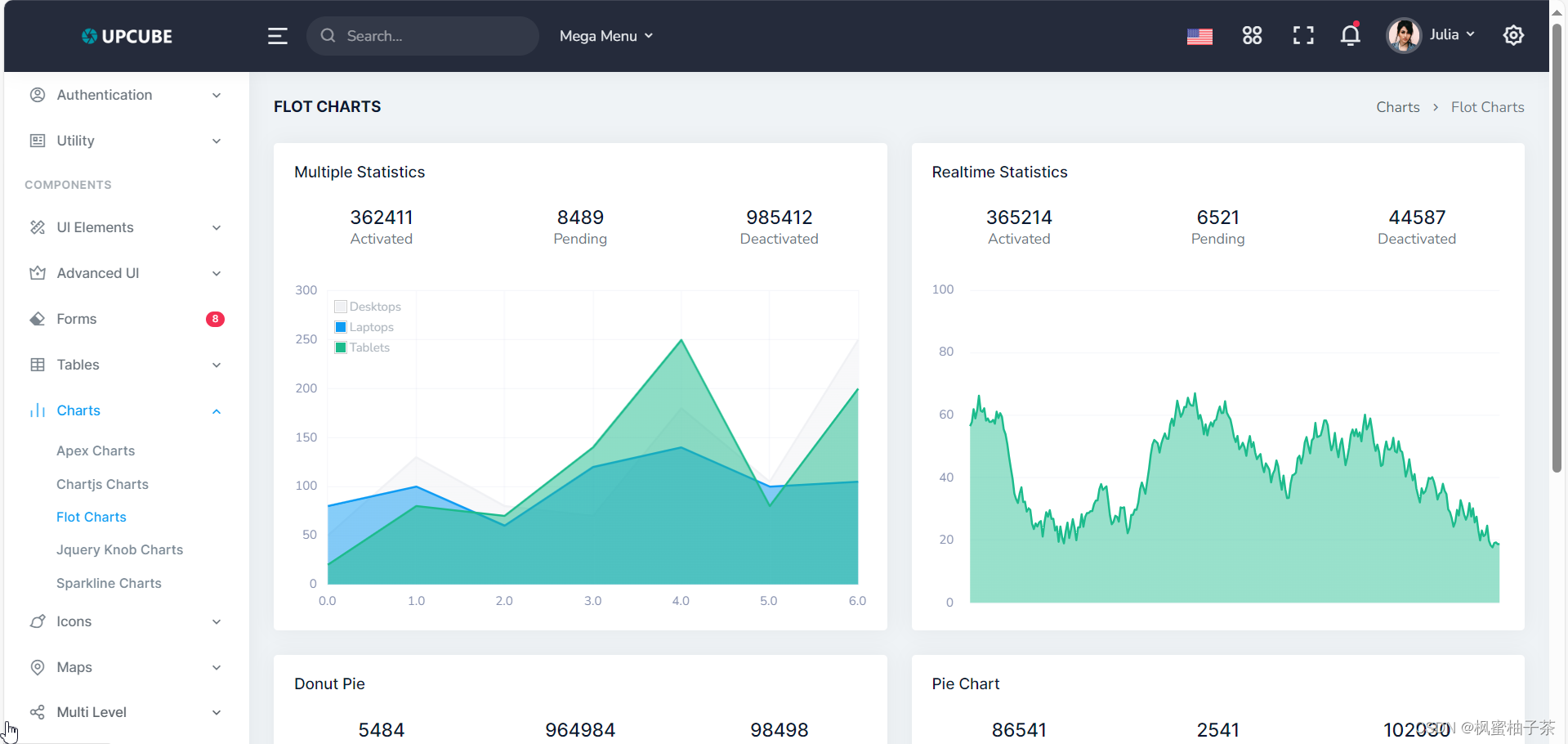

【前端素材】推荐优质后台管理系统Upcube平台模板(附源码)

一、需求分析 后台管理系统在多个层次上提供了丰富的功能和细致的管理手段,帮助管理员轻松管理和控制系统的各个方面。其灵活性和可扩展性使得后台管理系统成为各种网站、应用程序和系统不可或缺的管理工具。 当我们从多个层次来详细分析后台管理系统时࿰…...

可视化 RAG 数据 — 用于检索增强生成的 EDA

原文地址:Visualize your RAG Data — EDA for Retrieval-Augmented Generation 2024 年 2 月 8 日 Github:https://github.com/Renumics/rag-demo/blob/main/notebooks/visualize_rag_tutorial.ipynb 为探索Spotlight中的数据,我们使用Pa…...

数学建模论文、代码百度网盘链接

1.[2018中国大数据年终总决赛冠军] 金融市场板块划分与轮动规律挖掘与可视化问题 2.[2019第九届MathorCup数模二等奖] 数据驱动的城市轨道交通网络优化策略 3.[2019电工杯一等奖] 露天停车场停车位的优化设计 4.[2019数学中国网络数模一等奖] 基于机器学习的保险业数字化变革…...

mysql 迁移-data目录拷贝方式

背景:从服务器进水坏掉,50多G的数据库要重新做主从,但以导入导出的方式太慢,简直是灾难性的,一夜都没好,只好想到了拷贝mysql数据文件的方式 拷贝的数据文件的前提 1.数据库版本必需一致(可以…...

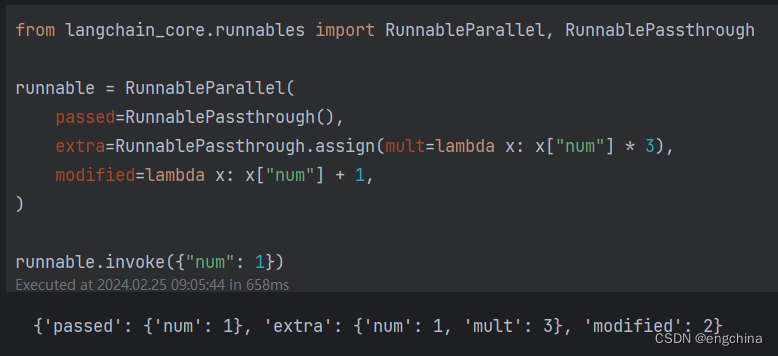

学习 LangChain 的 Passing data through

学习 LangChain 的 Passing data through 1. Passing data through2. 示例 1. Passing data through RunnablePassthrough 允许不改变或添加额外的键来传递输入。这通常与 RunnableParallel 结合使用,将数据分配给映射中的新键。 RunnablePassthrough() 单独调用&…...

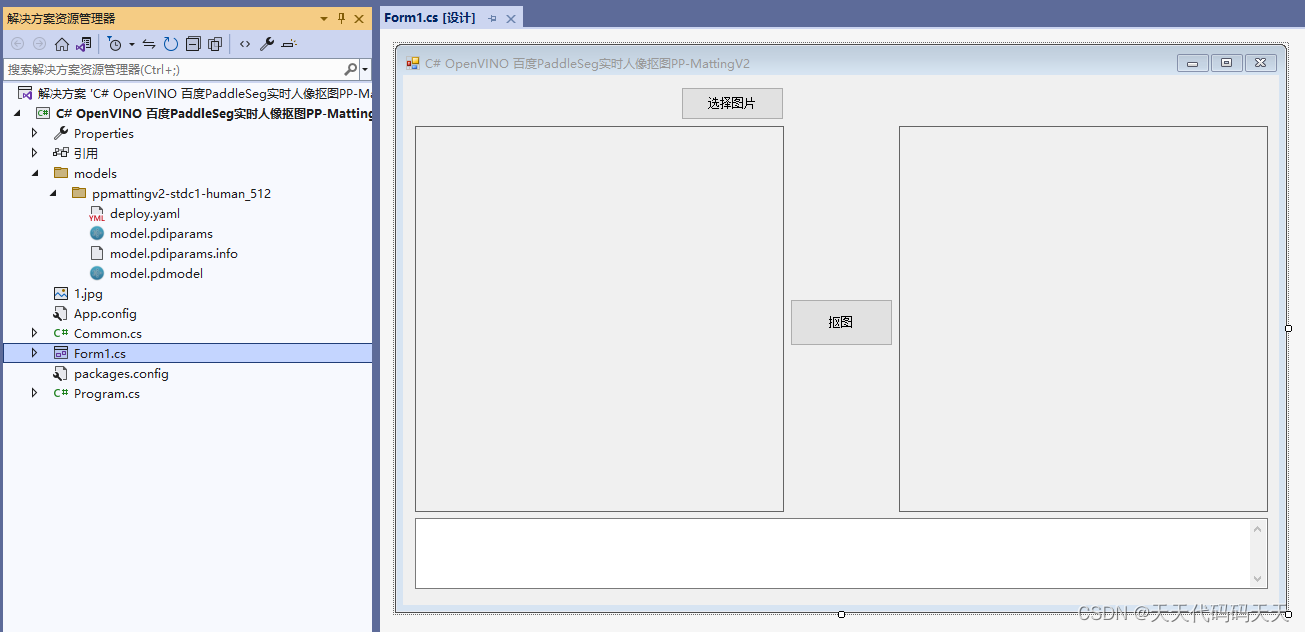

C# OpenVINO PaddleSeg实时人像抠图PP-MattingV2

目录 效果 项目 代码 下载 C# OpenVINO 百度PaddleSeg实时人像抠图PP-MattingV2 效果 项目 代码 using OpenCvSharp; using Sdcb.OpenVINO; using System; using System.Diagnostics; using System.Drawing; using System.Security.Cryptography; using System.Text; us…...

【Android 11】AOSP Settings WIFI随机MAC地址功能

AOSP Settings WIFI随机MAC地址功能 背景 最近客户提出了想要实现随机WIFIMAC地址的功能(我们默认WIFI的MAC地址是固定的)。网上搜到了一篇不错的文章,本次改动也是基于这个来写的。 由于客户指定使用的settings是AOSP的,所以在…...

dmrman备份还原

脱机还原工具-dmrman 前言 根据达梦官网文档整理 一、概述 DMRMAN 命令行设置参数执行又可分为命令行指定脚本、命令行指定语句两种执行方式。 指定语句 $ DMRMAN RMAN>BACKUP DATABASE/dmdata/data/DAMENG/dm.ini;指定脚本 创建一个名为 cmd_file.txt 的文件&#x…...

网页403错误(Spring Security报异常 Encoded password does not look like BCrypt)

这个错误通常表现为"403 Forbidden"或"HTTP Status 403",它指的是访问资源被服务器理解但拒绝授权。换句话说,服务器可以理解你请求看到的页面,但它拒绝给你权限。 也就是说很可能测试给定的参数有问题,后端…...

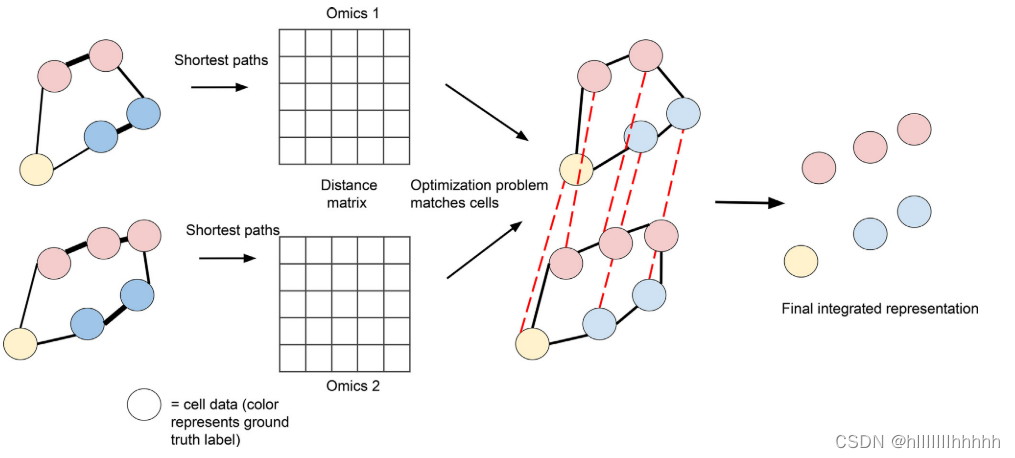

单细胞多组学整合与对齐的计算方法

Computational Methods for Single-cell Multi-omics Integration and Alignment Bioinformatics-2022-密西根大学 关键词:单细胞;多组学;机器学习;无监督学习;集成 摘要 最近发展起来的生成单细胞基因组数据的技术在生物学领域产生了革命性的影响。多组学测定提…...

33.openeuler OECA认证模拟题16

一 、选择题 1.如何查看系统支持的 shell? A、cat /etc/passwd B、cat /etc/shells C、echo SSHELL D、echo $0 答案 :B 2.下列哪项不是 shell的功能? A 、 用户界面,提供用户与内核交互接口 B 、 命令解释器 C 、提供编译环境 D 、 提供各种管理工具,…...

javaScript数组去重的几种实现方式——适用非引用数据去重

最传统的使用循环遍历 //最传统的使用循环遍历 function getUnique(arr) {let newArr [];for (let i 0; i < arr.length; i) {for (let j i 1; j < arr.length; j) {if (arr[i] arr[j]) {i; //相同丢掉前面的元素}}newArr.push(arr[i]);}return newArr; } 利用Set实…...

Nexus Repository Manager

Nexus Repository Manager https://s01.oss.sonatype.org/#welcome https://mvnrepository.com/-CSDN博客...

Python世界之运算符

一、算术运算符 以下假设变量: a10,b20: 运算符 描述 实例 加 - 两个对象相加 a b 输出结果 30 - 减 - 得到负数或是一个数减去另一个数 a - b 输出结果 -10 * 乘 - 两个数相乘或是返回一个被重复若干次的字符串 a * b 输出结…...

蓝桥杯倒计时47天!DFS基础——图的遍历

倒计时47天! 深度优先搜索——DFS 温馨提示:学习dfs之前最好先了解一下递归的思想。 DFS基础——图的遍历 仙境诅咒 问题描述 在一片神秘的仙境中,有N位修仙者,他们各自在仙境中独立修炼,拥有自己独特的修炼之道…...

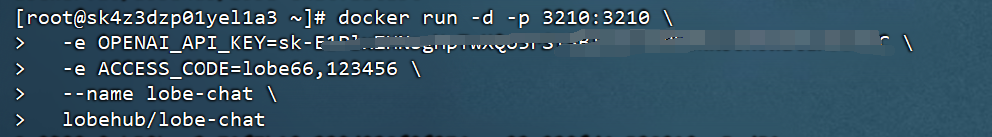

体验LobeChat搭建私人聊天应用

LobeChat是什么 LobeChat 是开源的高性能聊天机器人框架,支持语音合成、多模态、可扩展的(Function Call)插件系统。支持一键免费部署私人 ChatGPT/LLM 网页应用程序。 地址:https://github.com/lobehub/lobe-chat 为什么要用Lobe…...

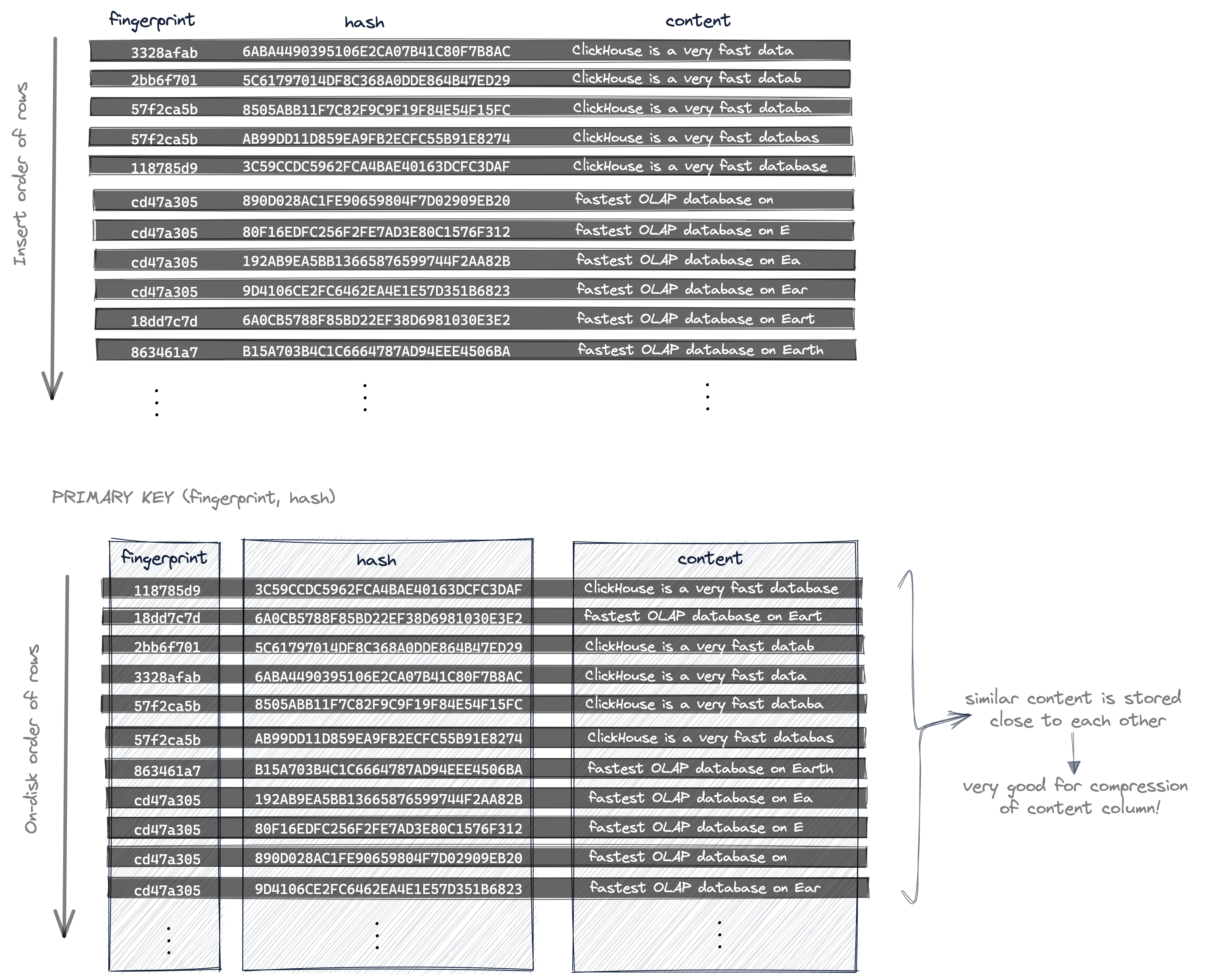

ClickHouse 指南(三)最佳实践 -- 主键稀疏索引

在ClickHouse主索引的实用介绍 ClickHouse release 24.1, 2024-01-30 1、简介 在本指南中,我们将深入研究ClickHouse索引。我们将详细说明和讨论: ClickHouse中的索引与传统的关系数据库管理系统有何不同ClickHouse是如何构建和使用表的稀疏主索引的什么是在Clic…...

【Nginx】Nginx配置反向代理 和 https

nginx.conf配置 进入linux /etc/nginx/ 打开nginx.conf 进行以下配置 http {include mime.types;default_type application/octet-stream;sendfile on;keepalive_timeout 65;server {#监听443端口listen 443 ssl;#你的域名server_name huiblog.top;#ssl证书的pe…...

JavaSec-RCE

简介 RCE(Remote Code Execution),可以分为:命令注入(Command Injection)、代码注入(Code Injection) 代码注入 1.漏洞场景:Groovy代码注入 Groovy是一种基于JVM的动态语言,语法简洁,支持闭包、动态类型和Java互操作性,…...

工业安全零事故的智能守护者:一体化AI智能安防平台

前言: 通过AI视觉技术,为船厂提供全面的安全监控解决方案,涵盖交通违规检测、起重机轨道安全、非法入侵检测、盗窃防范、安全规范执行监控等多个方面,能够实现对应负责人反馈机制,并最终实现数据的统计报表。提升船厂…...

AtCoder 第409场初级竞赛 A~E题解

A Conflict 【题目链接】 原题链接:A - Conflict 【考点】 枚举 【题目大意】 找到是否有两人都想要的物品。 【解析】 遍历两端字符串,只有在同时为 o 时输出 Yes 并结束程序,否则输出 No。 【难度】 GESP三级 【代码参考】 #i…...

江苏艾立泰跨国资源接力:废料变黄金的绿色供应链革命

在华东塑料包装行业面临限塑令深度调整的背景下,江苏艾立泰以一场跨国资源接力的创新实践,重新定义了绿色供应链的边界。 跨国回收网络:废料变黄金的全球棋局 艾立泰在欧洲、东南亚建立再生塑料回收点,将海外废弃包装箱通过标准…...

高等数学(下)题型笔记(八)空间解析几何与向量代数

目录 0 前言 1 向量的点乘 1.1 基本公式 1.2 例题 2 向量的叉乘 2.1 基础知识 2.2 例题 3 空间平面方程 3.1 基础知识 3.2 例题 4 空间直线方程 4.1 基础知识 4.2 例题 5 旋转曲面及其方程 5.1 基础知识 5.2 例题 6 空间曲面的法线与切平面 6.1 基础知识 6.2…...

Android15默认授权浮窗权限

我们经常有那种需求,客户需要定制的apk集成在ROM中,并且默认授予其【显示在其他应用的上层】权限,也就是我们常说的浮窗权限,那么我们就可以通过以下方法在wms、ams等系统服务的systemReady()方法中调用即可实现预置应用默认授权浮…...

SpringCloudGateway 自定义局部过滤器

场景: 将所有请求转化为同一路径请求(方便穿网配置)在请求头内标识原来路径,然后在将请求分发给不同服务 AllToOneGatewayFilterFactory import lombok.Getter; import lombok.Setter; import lombok.extern.slf4j.Slf4j; impor…...

嵌入式学习笔记DAY33(网络编程——TCP)

一、网络架构 C/S (client/server 客户端/服务器):由客户端和服务器端两个部分组成。客户端通常是用户使用的应用程序,负责提供用户界面和交互逻辑 ,接收用户输入,向服务器发送请求,并展示服务…...

【笔记】WSL 中 Rust 安装与测试完整记录

#工作记录 WSL 中 Rust 安装与测试完整记录 1. 运行环境 系统:Ubuntu 24.04 LTS (WSL2)架构:x86_64 (GNU/Linux)Rust 版本:rustc 1.87.0 (2025-05-09)Cargo 版本:cargo 1.87.0 (2025-05-06) 2. 安装 Rust 2.1 使用 Rust 官方安…...

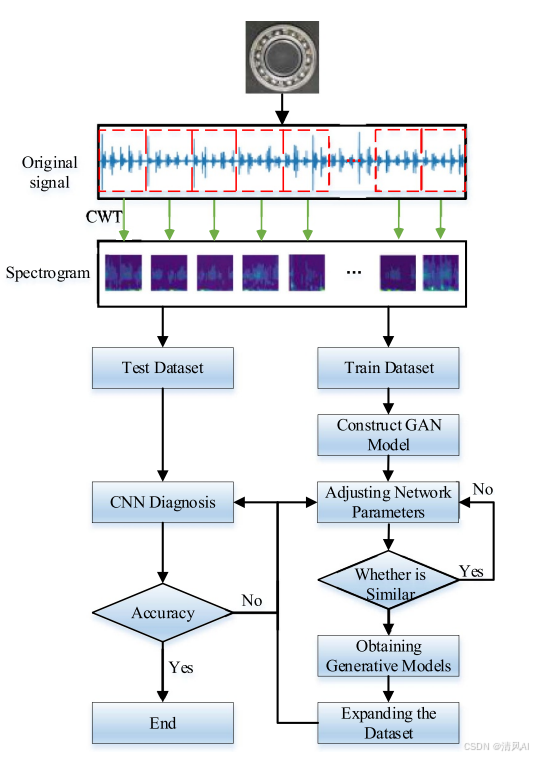

基于IDIG-GAN的小样本电机轴承故障诊断

目录 🔍 核心问题 一、IDIG-GAN模型原理 1. 整体架构 2. 核心创新点 (1) 梯度归一化(Gradient Normalization) (2) 判别器梯度间隙正则化(Discriminator Gradient Gap Regularization) (3) 自注意力机制(Self-Attention) 3. 完整损失函数 二…...