昇思MindSpore学习笔记6-05计算机视觉--SSD目标检测

摘要:

记录MindSpore AI框架使用SSD目标检测算法对图像内容识别的过程、步骤和方法。包括环境准备、下载数据集、数据采样、数据集加载和预处理、构建模型、损失函数、模型训练、模型评估等。

一、概念

1.模型简介

SSD目标检测算法

Single Shot MultiBox Detector

使用Nvidia Titan X在VOC 2007测试集上

输入尺寸300x300的网络

达到74.3%mAP(mean Average Precision)以及59FPS;

输入尺寸512x512的网络

达到了76.9%mAP

超越当时最强的Faster RCNN(73.2%mAP)

SSD目标检测主流算法分成可以两个类型:

two-stage方法:RCNN系列

通过算法产生候选框,然后再对这些候选框进行分类和回归。

one-stage方法:YOLO和SSD

直接通过主干网络给出类别位置信息,不需要区域生成。

SSD是单阶段的目标检测算法

卷积神经网络提取特征

取不同的特征层进行检测输出

多尺度检测方法。

检测特征层使用3 × 3卷积

通道变换

anchor策略

预设不同长宽比例的anchor

每个输出特征层预测多个检测框(4或者6)

浅层用于检测小目标

深层用于检测大目标

SSD框架图:

。

2.模型结构

SSD基础模型为VGG16

新增卷积层获得更多特征图用于检测

SSD网络结构图。

上层是SSD模型

多尺度特征图做检测

下层是YOLO模型

两种单阶段目标检测算法的比较:

SSD卷积提取特征

检测网络3 ×× 3卷积得到输出

卷积通道数=(anchor数量*(类别数量+4))

anchor数量

类别数量

SSD与YOLO的不同

SSD 通过卷积得到最后的边界框

YOLO通过全连接得到一维向量

拆解向量得到最终的检测框

3.模型特点

(1)多尺度检测

SSD使用多个特征层

特征层的尺寸分别是

38 × 38

19 × 19

10 × 10

5 × 5

3 × 3

1 × 1

大尺度特征图检测小物体

小尺度特征图检测大物体

(2)卷积检测

SSD采用卷积提取不同特征图的检测结果

m × n × p形状特征图采用3 × 3 × p小卷积核得到检测值

(3)预设anchor

SSD预设边界框anchor

预测框尺寸anchor指导微调

二、环境准备

%%capture captured_output

# 实验环境已经预装了mindspore==2.2.14,如需更换mindspore版本,可更改下面mindspore的版本号

!pip uninstall mindspore -y

!pip install -i https://pypi.mirrors.ustc.edu.cn/simple mindspore==2.2.14

# 查看当前 mindspore 版本

!pip show mindspore输出:

Name: mindspore

Version: 2.2.14

Summary: MindSpore is a new open source deep learning training/inference framework that could be used for mobile, edge and cloud scenarios.

Home-page: https://www.mindspore.cn

Author: The MindSpore Authors

Author-email: contact@mindspore.cn

License: Apache 2.0

Location: /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages

Requires: asttokens, astunparse, numpy, packaging, pillow, protobuf, psutil, scipy

Required-by: 安装实验所需模块:

mindspore、download、pycocotools、opencv-python

!pip install -i https://pypi.mirrors.ustc.edu.cn/simple pycocotools==2.0.7 输出:

Looking in indexes: https://pypi.mirrors.ustc.edu.cn/simple

Collecting pycocotools==2.0.7Downloading https://mirrors.bfsu.edu.cn/pypi/web/packages/19/93/5aaec888e3aa4d05b3a1472f331b83f7dc684d9a6b2645709d8f3352ba00/pycocotools-2.0.7-cp39-cp39-manylinux_2_17_aarch64.manylinux2014_aarch64.whl (419 kB)━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 419.9/419.9 kB 18.7 MB/s eta 0:00:00

Requirement already satisfied: matplotlib>=2.1.0 in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from pycocotools==2.0.7) (3.9.0)

Requirement already satisfied: numpy in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from pycocotools==2.0.7) (1.26.4)

Requirement already satisfied: contourpy>=1.0.1 in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from matplotlib>=2.1.0->pycocotools==2.0.7) (1.2.1)

Requirement already satisfied: cycler>=0.10 in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from matplotlib>=2.1.0->pycocotools==2.0.7) (0.12.1)

Requirement already satisfied: fonttools>=4.22.0 in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from matplotlib>=2.1.0->pycocotools==2.0.7) (4.53.0)

Requirement already satisfied: kiwisolver>=1.3.1 in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from matplotlib>=2.1.0->pycocotools==2.0.7) (1.4.5)

Requirement already satisfied: packaging>=20.0 in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from matplotlib>=2.1.0->pycocotools==2.0.7) (23.2)

Requirement already satisfied: pillow>=8 in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from matplotlib>=2.1.0->pycocotools==2.0.7) (10.3.0)

Requirement already satisfied: pyparsing>=2.3.1 in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from matplotlib>=2.1.0->pycocotools==2.0.7) (3.1.2)

Requirement already satisfied: python-dateutil>=2.7 in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from matplotlib>=2.1.0->pycocotools==2.0.7) (2.9.0.post0)

Requirement already satisfied: importlib-resources>=3.2.0 in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from matplotlib>=2.1.0->pycocotools==2.0.7) (6.4.0)

Requirement already satisfied: zipp>=3.1.0 in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from importlib-resources>=3.2.0->matplotlib>=2.1.0->pycocotools==2.0.7) (3.17.0)

Requirement already satisfied: six>=1.5 in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from python-dateutil>=2.7->matplotlib>=2.1.0->pycocotools==2.0.7) (1.16.0)

Installing collected packages: pycocotools

Successfully installed pycocotools-2.0.7

[notice] A new release of pip is available: 24.1 -> 24.1.1[notice] To update, run: python -m pip install --upgrade pip三、数据准备与处理

1.下载数据集

所用数据集COCO 2017

为了方便先转换为MindRecord格式

减少磁盘IO、网络IO开销

获得更好的使用体验和性能提升

下载MindRecord格式COCO数据集

下载

解压

from download import download

dataset_url = "https://mindspore-website.obs.cn-north-4.myhuaweicloud.com/notebook/datasets/ssd_datasets.zip"

path = "./"

path = download(dataset_url, path, kind="zip", replace=True)输出:

Downloading data from https://mindspore-website.obs.cn-north-4.myhuaweicloud.com/notebook/datasets/ssd_datasets.zip (16.0 MB)file_sizes: 100%|███████████████████████████| 16.8M/16.8M [00:00<00:00, 129MB/s]

Extracting zip file...

Successfully downloaded / unzipped to ./定义数据处理:

coco_root = "./datasets/"

anno_json = "./datasets/annotations/instances_val2017.json"

train_cls = ['background', 'person', 'bicycle', 'car', 'motorcycle', 'airplane', 'bus','train', 'truck', 'boat', 'traffic light', 'fire hydrant','stop sign', 'parking meter', 'bench', 'bird', 'cat', 'dog','horse', 'sheep', 'cow', 'elephant', 'bear', 'zebra','giraffe', 'backpack', 'umbrella', 'handbag', 'tie','suitcase', 'frisbee', 'skis', 'snowboard', 'sports ball','kite', 'baseball bat', 'baseball glove', 'skateboard','surfboard', 'tennis racket', 'bottle', 'wine glass', 'cup','fork', 'knife', 'spoon', 'bowl', 'banana', 'apple','sandwich', 'orange', 'broccoli', 'carrot', 'hot dog', 'pizza','donut', 'cake', 'chair', 'couch', 'potted plant', 'bed','dining table', 'toilet', 'tv', 'laptop', 'mouse', 'remote','keyboard', 'cell phone', 'microwave', 'oven', 'toaster', 'sink','refrigerator', 'book', 'clock', 'vase', 'scissors','teddy bear', 'hair drier', 'toothbrush']

train_cls_dict = {}

for i, cls in enumerate(train_cls):train_cls_dict[cls] = i2.数据采样

为了使模型适应各种输入对象大小和形状

SSD算法通过以下选项之一随机采样训练图像:

使用整个原始输入图像

采样一个区域

采样区域和原始图片最小的交并比重叠为0.1,0.3,0.5,0.7或0.9

随机采样一个区域

采样区域大小

原始图像大小的[0.3,1]

长宽比在1/2和2之间

如果真实标签框中心在采样区域内

保留两者重叠部分作为新图片的真实标注框。

固定各采样区域大小

0.5概率水平翻转

import cv2

import numpy as npdef _rand(a=0., b=1.):return np.random.rand() * (b - a) + adef intersect(box_a, box_b):"""Compute the intersect of two sets of boxes."""max_yx = np.minimum(box_a[:, 2:4], box_b[2:4])min_yx = np.maximum(box_a[:, :2], box_b[:2])inter = np.clip((max_yx - min_yx), a_min=0, a_max=np.inf)return inter[:, 0] * inter[:, 1]def jaccard_numpy(box_a, box_b):"""Compute the jaccard overlap of two sets of boxes."""inter = intersect(box_a, box_b)area_a = ((box_a[:, 2] - box_a[:, 0]) *(box_a[:, 3] - box_a[:, 1]))area_b = ((box_b[2] - box_b[0]) *(box_b[3] - box_b[1]))union = area_a + area_b - interreturn inter / uniondef random_sample_crop(image, boxes):"""Crop images and boxes randomly."""height, width, _ = image.shapemin_iou = np.random.choice([None, 0.1, 0.3, 0.5, 0.7, 0.9])if min_iou is None:return image, boxesfor _ in range(50):image_t = imagew = _rand(0.3, 1.0) * widthh = _rand(0.3, 1.0) * height# aspect ratio constraint b/t .5 & 2if h / w < 0.5 or h / w > 2:continueleft = _rand() * (width - w)top = _rand() * (height - h)rect = np.array([int(top), int(left), int(top + h), int(left + w)])overlap = jaccard_numpy(boxes, rect)# dropout some boxesdrop_mask = overlap > 0if not drop_mask.any():continueif overlap[drop_mask].min() < min_iou and overlap[drop_mask].max() > (min_iou + 0.2):continueimage_t = image_t[rect[0]:rect[2], rect[1]:rect[3], :]centers = (boxes[:, :2] + boxes[:, 2:4]) / 2.0m1 = (rect[0] < centers[:, 0]) * (rect[1] < centers[:, 1])m2 = (rect[2] > centers[:, 0]) * (rect[3] > centers[:, 1])# mask in that both m1 and m2 are truemask = m1 * m2 * drop_mask# have any valid boxes? try again if notif not mask.any():continue# take only matching gt boxesboxes_t = boxes[mask, :].copy()boxes_t[:, :2] = np.maximum(boxes_t[:, :2], rect[:2])boxes_t[:, :2] -= rect[:2]boxes_t[:, 2:4] = np.minimum(boxes_t[:, 2:4], rect[2:4])boxes_t[:, 2:4] -= rect[:2]return image_t, boxes_treturn image, boxesdef ssd_bboxes_encode(boxes):"""Labels anchors with ground truth inputs."""def jaccard_with_anchors(bbox):"""Compute jaccard score a box and the anchors."""# Intersection bbox and volume.ymin = np.maximum(y1, bbox[0])xmin = np.maximum(x1, bbox[1])ymax = np.minimum(y2, bbox[2])xmax = np.minimum(x2, bbox[3])w = np.maximum(xmax - xmin, 0.)h = np.maximum(ymax - ymin, 0.)# Volumes.inter_vol = h * wunion_vol = vol_anchors + (bbox[2] - bbox[0]) * (bbox[3] - bbox[1]) - inter_voljaccard = inter_vol / union_volreturn np.squeeze(jaccard)pre_scores = np.zeros((8732), dtype=np.float32)t_boxes = np.zeros((8732, 4), dtype=np.float32)t_label = np.zeros((8732), dtype=np.int64)for bbox in boxes:label = int(bbox[4])scores = jaccard_with_anchors(bbox)idx = np.argmax(scores)scores[idx] = 2.0mask = (scores > matching_threshold)mask = mask & (scores > pre_scores)pre_scores = np.maximum(pre_scores, scores * mask)t_label = mask * label + (1 - mask) * t_labelfor i in range(4):t_boxes[:, i] = mask * bbox[i] + (1 - mask) * t_boxes[:, i]index = np.nonzero(t_label)# Transform to tlbr.bboxes = np.zeros((8732, 4), dtype=np.float32)bboxes[:, [0, 1]] = (t_boxes[:, [0, 1]] + t_boxes[:, [2, 3]]) / 2bboxes[:, [2, 3]] = t_boxes[:, [2, 3]] - t_boxes[:, [0, 1]]# Encode features.bboxes_t = bboxes[index]default_boxes_t = default_boxes[index]bboxes_t[:, :2] = (bboxes_t[:, :2] - default_boxes_t[:, :2]) / (default_boxes_t[:, 2:] * 0.1)tmp = np.maximum(bboxes_t[:, 2:4] / default_boxes_t[:, 2:4], 0.000001)bboxes_t[:, 2:4] = np.log(tmp) / 0.2bboxes[index] = bboxes_tnum_match = np.array([len(np.nonzero(t_label)[0])], dtype=np.int32)return bboxes, t_label.astype(np.int32), num_matchdef preprocess_fn(img_id, image, box, is_training):"""Preprocess function for dataset."""cv2.setNumThreads(2)def _infer_data(image, input_shape):img_h, img_w, _ = image.shapeinput_h, input_w = input_shapeimage = cv2.resize(image, (input_w, input_h))# When the channels of image is 1if len(image.shape) == 2:image = np.expand_dims(image, axis=-1)image = np.concatenate([image, image, image], axis=-1)return img_id, image, np.array((img_h, img_w), np.float32)def _data_aug(image, box, is_training, image_size=(300, 300)):ih, iw, _ = image.shapeh, w = image_sizeif not is_training:return _infer_data(image, image_size)# Random cropbox = box.astype(np.float32)image, box = random_sample_crop(image, box)ih, iw, _ = image.shape# Resize imageimage = cv2.resize(image, (w, h))# Flip image or notflip = _rand() < .5if flip:image = cv2.flip(image, 1, dst=None)# When the channels of image is 1if len(image.shape) == 2:image = np.expand_dims(image, axis=-1)image = np.concatenate([image, image, image], axis=-1)box[:, [0, 2]] = box[:, [0, 2]] / ihbox[:, [1, 3]] = box[:, [1, 3]] / iwif flip:box[:, [1, 3]] = 1 - box[:, [3, 1]]box, label, num_match = ssd_bboxes_encode(box)return image, box, label, num_matchreturn _data_aug(image, box, is_training, image_size=[300, 300])3.数据集创建

from mindspore import Tensor

from mindspore.dataset import MindDataset

from mindspore.dataset.vision import Decode, HWC2CHW, Normalize, RandomColorAdjustdef create_ssd_dataset(mindrecord_file, batch_size=32, device_num=1, rank=0,is_training=True, num_parallel_workers=1, use_multiprocessing=True):"""Create SSD dataset with MindDataset."""dataset = MindDataset(mindrecord_file, columns_list=["img_id", "image", "annotation"], num_shards=device_num,shard_id=rank, num_parallel_workers=num_parallel_workers, shuffle=is_training)decode = Decode()dataset = dataset.map(operations=decode, input_columns=["image"])change_swap_op = HWC2CHW()# Computed from random subset of ImageNet training imagesnormalize_op = Normalize(mean=[0.485 * 255, 0.456 * 255, 0.406 * 255],std=[0.229 * 255, 0.224 * 255, 0.225 * 255])color_adjust_op = RandomColorAdjust(brightness=0.4, contrast=0.4, saturation=0.4)compose_map_func = (lambda img_id, image, annotation: preprocess_fn(img_id, image, annotation, is_training))if is_training:output_columns = ["image", "box", "label", "num_match"]trans = [color_adjust_op, normalize_op, change_swap_op]else:output_columns = ["img_id", "image", "image_shape"]trans = [normalize_op, change_swap_op]dataset = dataset.map(operations=compose_map_func, input_columns=["img_id", "image", "annotation"],output_columns=output_columns, python_multiprocessing=use_multiprocessing,num_parallel_workers=num_parallel_workers)dataset = dataset.map(operations=trans, input_columns=["image"], python_multiprocessing=use_multiprocessing,num_parallel_workers=num_parallel_workers)dataset = dataset.batch(batch_size, drop_remainder=True)return dataset四、模型构建

SSD网络结构:

VGG16 Base Layer

Extra Feature Layer

Detection Layer

NMS

Anchor

VGG16 Base Layer(Backbone Layer)

![]()

输入图像预处理

固定大小300×300

VGG16网络前13个卷积层

VGG16全连接层

fc6转换成3 × 3卷积层block6

block6使用空洞卷积

空洞数为6

padding为6

增加感受范围

参数量不变

特征图尺寸不变

fc7转换成1 × 1卷积层block7

Extra Feature Layer

SSD增加4个深度卷积层block8-11

提取更高层语义信息

从block7输入特征图尺寸19×19

block8

通道数为512

输出特征图尺寸10×10

block9

通道数为256

输出特征图尺寸5×5

block10

通道数为256

输出特征图尺寸3×3

block11

通道数为256

输出特征图的尺寸1×1

为了降低参数量?【没理解】

使用1×1卷积

降低通道数为该层输出通道数的一半

3×3卷积

提取特征

Anchor

SSD采用PriorBox生成区域。

PriorBox固定大小宽高

先验兴趣区域

利用一个阶段完成分类与回归

大量密集PriorBox检测整幅图像

PriorBox位置表示形式(cx,cy,w,h)

中心点坐标和框的宽、高

转换为百分比形式

PriorBox生成规则

6个检测目标特征层

不同特征层PriorBox尺寸scale大小不一样

最低层scale=0.1

最高层scale=0.95

其他层计算公式:

某特征层scale一定,长宽比ratio不同

长和宽的计算公式:

ratio=1时,与下个特征层PriorBox有特定scale

计算公式:

每个特征层的每个点按上述规则生成PriorBox

(cx,cy)当前点的中心点

每个特征层都生成大量密集的PriorBox,如下图:

SSD使用第4、7、8、9、10和11这6个卷积层得到的特征图

6个层的特征图尺寸越来越小

对应的感受范围越来越大

6个特征图上的每一个点分别对应4、6、6、6、4、4个PriorBox。

某特征图上一点根据下采样率可以得到原图的坐标

以该坐标为中心生成4个或6个不同大小的PriorBox

利用特征图的特征预测每个PriorBox对应类别与位置的预测量

共有600个PriorBox。

定义MultiBox类

生成多个预测框

Detection Layer

SSD模型

共有6个预测特征图

其中一个尺寸为m*n,

通道为p的预测特征图,

每个像素点会产生k个anchor

每个anchor对应c个类别和4个回归偏移量

使用(4+c)k个尺寸为3x3

通道为p的卷积核对该预测特征图进行卷积操作

得到尺寸为m*n,通道为(4+c)m*k的输出特征图

包含预测特征图上每个anchor的回归偏移量和各类别概率分数

尺寸为m*n的预测特征图

产生(4+c)k*m*n个结果

cls分支的输出通道数为k*class_num

loc分支的输出通道数为k*4

from mindspore import nndef _make_layer(channels):in_channels = channels[0]layers = []for out_channels in channels[1:]:layers.append(nn.Conv2d(in_channels=in_channels, out_channels=out_channels, kernel_size=3))layers.append(nn.ReLU())in_channels = out_channelsreturn nn.SequentialCell(layers)class Vgg16(nn.Cell):"""VGG16 module."""def __init__(self):super(Vgg16, self).__init__()self.b1 = _make_layer([3, 64, 64])self.b2 = _make_layer([64, 128, 128])self.b3 = _make_layer([128, 256, 256, 256])self.b4 = _make_layer([256, 512, 512, 512])self.b5 = _make_layer([512, 512, 512, 512])self.m1 = nn.MaxPool2d(kernel_size=2, stride=2, pad_mode='SAME')self.m2 = nn.MaxPool2d(kernel_size=2, stride=2, pad_mode='SAME')self.m3 = nn.MaxPool2d(kernel_size=2, stride=2, pad_mode='SAME')self.m4 = nn.MaxPool2d(kernel_size=2, stride=2, pad_mode='SAME')self.m5 = nn.MaxPool2d(kernel_size=3, stride=1, pad_mode='SAME')def construct(self, x):# block1x = self.b1(x)x = self.m1(x)# block2x = self.b2(x)x = self.m2(x)# block3x = self.b3(x)x = self.m3(x)# block4x = self.b4(x)block4 = xx = self.m4(x)# block5x = self.b5(x)x = self.m5(x)return block4, ximport mindspore as ms

import mindspore.nn as nn

import mindspore.ops as opsdef _last_conv2d(in_channel, out_channel, kernel_size=3, stride=1, pad_mod='same', pad=0):in_channels = in_channelout_channels = in_channeldepthwise_conv = nn.Conv2d(in_channels, out_channels, kernel_size, stride, pad_mode='same',padding=pad, group=in_channels)conv = nn.Conv2d(in_channel, out_channel, kernel_size=1, stride=1, padding=0, pad_mode='same', has_bias=True)bn = nn.BatchNorm2d(in_channel, eps=1e-3, momentum=0.97,gamma_init=1, beta_init=0, moving_mean_init=0, moving_var_init=1)return nn.SequentialCell([depthwise_conv, bn, nn.ReLU6(), conv])class FlattenConcat(nn.Cell):"""FlattenConcat module."""def __init__(self):super(FlattenConcat, self).__init__()self.num_ssd_boxes = 8732def construct(self, inputs):output = ()batch_size = ops.shape(inputs[0])[0]for x in inputs:x = ops.transpose(x, (0, 2, 3, 1))output += (ops.reshape(x, (batch_size, -1)),)res = ops.concat(output, axis=1)return ops.reshape(res, (batch_size, self.num_ssd_boxes, -1))class MultiBox(nn.Cell):"""Multibox conv layers. Each multibox layer contains class conf scores and localization predictions."""def __init__(self):super(MultiBox, self).__init__()num_classes = 81out_channels = [512, 1024, 512, 256, 256, 256]num_default = [4, 6, 6, 6, 4, 4]loc_layers = []cls_layers = []for k, out_channel in enumerate(out_channels):loc_layers += [_last_conv2d(out_channel, 4 * num_default[k],kernel_size=3, stride=1, pad_mod='same', pad=0)]cls_layers += [_last_conv2d(out_channel, num_classes * num_default[k],kernel_size=3, stride=1, pad_mod='same', pad=0)]self.multi_loc_layers = nn.CellList(loc_layers)self.multi_cls_layers = nn.CellList(cls_layers)self.flatten_concat = FlattenConcat()def construct(self, inputs):loc_outputs = ()cls_outputs = ()for i in range(len(self.multi_loc_layers)):loc_outputs += (self.multi_loc_layers[i](inputs[i]),)cls_outputs += (self.multi_cls_layers[i](inputs[i]),)return self.flatten_concat(loc_outputs), self.flatten_concat(cls_outputs)class SSD300Vgg16(nn.Cell):"""SSD300Vgg16 module."""def __init__(self):super(SSD300Vgg16, self).__init__()# VGG16 backbone: block1~5self.backbone = Vgg16()# SSD blocks: block6~7self.b6_1 = nn.Conv2d(in_channels=512, out_channels=1024, kernel_size=3, padding=6, dilation=6, pad_mode='pad')self.b6_2 = nn.Dropout(p=0.5)self.b7_1 = nn.Conv2d(in_channels=1024, out_channels=1024, kernel_size=1)self.b7_2 = nn.Dropout(p=0.5)# Extra Feature Layers: block8~11self.b8_1 = nn.Conv2d(in_channels=1024, out_channels=256, kernel_size=1, padding=1, pad_mode='pad')self.b8_2 = nn.Conv2d(in_channels=256, out_channels=512, kernel_size=3, stride=2, pad_mode='valid')self.b9_1 = nn.Conv2d(in_channels=512, out_channels=128, kernel_size=1, padding=1, pad_mode='pad')self.b9_2 = nn.Conv2d(in_channels=128, out_channels=256, kernel_size=3, stride=2, pad_mode='valid')self.b10_1 = nn.Conv2d(in_channels=256, out_channels=128, kernel_size=1)self.b10_2 = nn.Conv2d(in_channels=128, out_channels=256, kernel_size=3, pad_mode='valid')self.b11_1 = nn.Conv2d(in_channels=256, out_channels=128, kernel_size=1)self.b11_2 = nn.Conv2d(in_channels=128, out_channels=256, kernel_size=3, pad_mode='valid')# boxesself.multi_box = MultiBox()def construct(self, x):# VGG16 backbone: block1~5block4, x = self.backbone(x)# SSD blocks: block6~7x = self.b6_1(x) # 1024x = self.b6_2(x)x = self.b7_1(x) # 1024x = self.b7_2(x)block7 = x# Extra Feature Layers: block8~11x = self.b8_1(x) # 256x = self.b8_2(x) # 512block8 = xx = self.b9_1(x) # 128x = self.b9_2(x) # 256block9 = xx = self.b10_1(x) # 128x = self.b10_2(x) # 256block10 = xx = self.b11_1(x) # 128x = self.b11_2(x) # 256block11 = x# boxesmulti_feature = (block4, block7, block8, block9, block10, block11)pred_loc, pred_label = self.multi_box(multi_feature)if not self.training:pred_label = ops.sigmoid(pred_label)pred_loc = pred_loc.astype(ms.float32)pred_label = pred_label.astype(ms.float32)return pred_loc, pred_label五、损失函数

SSD算法目标函数分为两部分:

预选框与目标类别的置信度误差(confidence loss, conf)

位置误差(locatization loss, loc)

N 先验框的正样本数量;

c 类别置信度预测值;

l 先验框对应边界框的位置预测值;

g ground truth的位置参数;

α confidence loss和location loss之间的调整比例,默认为1。

1.对于位置损失函数

针对所有的正样本

采用 Smooth L1 Loss

encode 之后的位置信息

2.对于置信度损失函数

置信度损失是多类置信度(c)上的softmax损失

def class_loss(logits, label):"""Calculate category losses."""label = ops.one_hot(label, ops.shape(logits)[-1], Tensor(1.0, ms.float32), Tensor(0.0, ms.float32))weight = ops.ones_like(logits)pos_weight = ops.ones_like(logits)sigmiod_cross_entropy = ops.binary_cross_entropy_with_logits(logits, label, weight.astype(ms.float32), pos_weight.astype(ms.float32))sigmoid = ops.sigmoid(logits)label = label.astype(ms.float32)p_t = label * sigmoid + (1 - label) * (1 - sigmoid)modulating_factor = ops.pow(1 - p_t, 2.0)alpha_weight_factor = label * 0.75 + (1 - label) * (1 - 0.75)focal_loss = modulating_factor * alpha_weight_factor * sigmiod_cross_entropyreturn focal_loss六、Metrics

非极大值抑制(NMS)

输入图片要求输出框时,用NMS过滤重叠度较大的预测框。

非极大值抑制流程:

根据置信度得分排序

选择置信度最高的边界框

加到最终输出列表

从边界框列表中删除

计算所有边界框的面积

计算置信度最高的边界框与其它候选框的IoU

删除IoU大于阈值的边界框

重复上述过程,直至边界框列表为空

import json

from pycocotools.coco import COCO

from pycocotools.cocoeval import COCOevaldef apply_eval(eval_param_dict):net = eval_param_dict["net"]net.set_train(False)ds = eval_param_dict["dataset"]anno_json = eval_param_dict["anno_json"]coco_metrics = COCOMetrics(anno_json=anno_json,classes=train_cls,num_classes=81,max_boxes=100,nms_threshold=0.6,min_score=0.1)for data in ds.create_dict_iterator(output_numpy=True, num_epochs=1):img_id = data['img_id']img_np = data['image']image_shape = data['image_shape']output = net(Tensor(img_np))for batch_idx in range(img_np.shape[0]):pred_batch = {"boxes": output[0].asnumpy()[batch_idx],"box_scores": output[1].asnumpy()[batch_idx],"img_id": int(np.squeeze(img_id[batch_idx])),"image_shape": image_shape[batch_idx]}coco_metrics.update(pred_batch)eval_metrics = coco_metrics.get_metrics()return eval_metricsdef apply_nms(all_boxes, all_scores, thres, max_boxes):"""Apply NMS to bboxes."""y1 = all_boxes[:, 0]x1 = all_boxes[:, 1]y2 = all_boxes[:, 2]x2 = all_boxes[:, 3]areas = (x2 - x1 + 1) * (y2 - y1 + 1)order = all_scores.argsort()[::-1]keep = []while order.size > 0:i = order[0]keep.append(i)if len(keep) >= max_boxes:breakxx1 = np.maximum(x1[i], x1[order[1:]])yy1 = np.maximum(y1[i], y1[order[1:]])xx2 = np.minimum(x2[i], x2[order[1:]])yy2 = np.minimum(y2[i], y2[order[1:]])w = np.maximum(0.0, xx2 - xx1 + 1)h = np.maximum(0.0, yy2 - yy1 + 1)inter = w * hovr = inter / (areas[i] + areas[order[1:]] - inter)inds = np.where(ovr <= thres)[0]order = order[inds + 1]return keepclass COCOMetrics:"""Calculate mAP of predicted bboxes."""def __init__(self, anno_json, classes, num_classes, min_score, nms_threshold, max_boxes):self.num_classes = num_classesself.classes = classesself.min_score = min_scoreself.nms_threshold = nms_thresholdself.max_boxes = max_boxesself.val_cls_dict = {i: cls for i, cls in enumerate(classes)}self.coco_gt = COCO(anno_json)cat_ids = self.coco_gt.loadCats(self.coco_gt.getCatIds())self.class_dict = {cat['name']: cat['id'] for cat in cat_ids}self.predictions = []self.img_ids = []def update(self, batch):pred_boxes = batch['boxes']box_scores = batch['box_scores']img_id = batch['img_id']h, w = batch['image_shape']final_boxes = []final_label = []final_score = []self.img_ids.append(img_id)for c in range(1, self.num_classes):class_box_scores = box_scores[:, c]score_mask = class_box_scores > self.min_scoreclass_box_scores = class_box_scores[score_mask]class_boxes = pred_boxes[score_mask] * [h, w, h, w]if score_mask.any():nms_index = apply_nms(class_boxes, class_box_scores, self.nms_threshold, self.max_boxes)class_boxes = class_boxes[nms_index]class_box_scores = class_box_scores[nms_index]final_boxes += class_boxes.tolist()final_score += class_box_scores.tolist()final_label += [self.class_dict[self.val_cls_dict[c]]] * len(class_box_scores)for loc, label, score in zip(final_boxes, final_label, final_score):res = {}res['image_id'] = img_idres['bbox'] = [loc[1], loc[0], loc[3] - loc[1], loc[2] - loc[0]]res['score'] = scoreres['category_id'] = labelself.predictions.append(res)def get_metrics(self):with open('predictions.json', 'w') as f:json.dump(self.predictions, f)coco_dt = self.coco_gt.loadRes('predictions.json')E = COCOeval(self.coco_gt, coco_dt, iouType='bbox')E.params.imgIds = self.img_idsE.evaluate()E.accumulate()E.summarize()return E.stats[0]class SsdInferWithDecoder(nn.Cell):"""

SSD Infer wrapper to decode the bbox locations."""def __init__(self, network, default_boxes, ckpt_path):super(SsdInferWithDecoder, self).__init__()param_dict = ms.load_checkpoint(ckpt_path)ms.load_param_into_net(network, param_dict)self.network = networkself.default_boxes = default_boxesself.prior_scaling_xy = 0.1self.prior_scaling_wh = 0.2def construct(self, x):pred_loc, pred_label = self.network(x)default_bbox_xy = self.default_boxes[..., :2]default_bbox_wh = self.default_boxes[..., 2:]pred_xy = pred_loc[..., :2] * self.prior_scaling_xy * default_bbox_wh + default_bbox_xypred_wh = ops.exp(pred_loc[..., 2:] * self.prior_scaling_wh) * default_bbox_whpred_xy_0 = pred_xy - pred_wh / 2.0pred_xy_1 = pred_xy + pred_wh / 2.0pred_xy = ops.concat((pred_xy_0, pred_xy_1), -1)pred_xy = ops.maximum(pred_xy, 0)pred_xy = ops.minimum(pred_xy, 1)return pred_xy, pred_label七、训练过程

1.先验框匹配

确定训练图片中ground truth(真实目标)匹配的先验框

用先验框对应边界框来预测

SSD先验框与ground truth的匹配原则主要有两点:

最大IOU匹配原则

正样本:图片中每个ground truth IOU最大的先验框为匹配先验框

负样本:未能与任何ground truth匹配的先验框,只能与背景匹配

IOU大于阈值(一般是0.5)匹配原则

保证正负样本尽量平衡,比例接近1:3

负样本抽样

按照置信度误差降序排列(预测背景的置信度越小,误差越大)

选取误差较大的top-k作为训练的负样本

某个gt可以和多个prior匹配

每个prior只能和一个gt进行匹配。

多个gt和某个prior的IOU均大于阈值

prior只与IOU最大的匹配。

训练中 prior boxes 和 ground truth boxes 匹配的基本思路:

每个prior box回归到ground truth box

调控回归过程需要损失层计算真实值和预测值之间的误差

指导学习走向

2.损失函数

损失函数:位置损失函数和置信度损失函数的加权和。

3.数据增强

使用之前定义的数据增强方式,对创建好的数据进行数据增强。

模型训练

模型训练epoch次数为60

create_ssd_dataset类创建训练集和验证集

batch_size大小为5

图像尺寸统一调整为300×300

损失函数使用位置损失函数和置信度损失函数的加权和

优化器使用Momentum

初始学习率为0.001

回调函数使用LossMonitor和TimeMonitor

监控每epoch训练

损失值Loss的变化情况

每个epoch的运行时间

每个step的运行时间

每训练10个epoch保存一次模型

import math

import itertools as it

from mindspore import set_seed

class GeneratDefaultBoxes():"""Generate Default boxes for SSD, follows the order of (W, H, archor_sizes).`self.default_boxes` has a shape of [archor_sizes, H, W, 4], the last dimension is [y, x, h, w].`self.default_boxes_tlbr` has a shape as `self.default_boxes`, the last dimension is [y1, x1, y2, x2]."""

def __init__(self):fk = 300 / np.array([8, 16, 32, 64, 100, 300])scale_rate = (0.95 - 0.1) / (len([4, 6, 6, 6, 4, 4]) - 1)scales = [0.1 + scale_rate * i for i in range(len([4, 6, 6, 6, 4, 4]))] + [1.0]self.default_boxes = []for idex, feature_size in enumerate([38, 19, 10, 5, 3, 1]):sk1 = scales[idex]sk2 = scales[idex + 1]sk3 = math.sqrt(sk1 * sk2)if idex == 0 and not [[2], [2, 3], [2, 3], [2, 3], [2], [2]][idex]:w, h = sk1 * math.sqrt(2), sk1 / math.sqrt(2)all_sizes = [(0.1, 0.1), (w, h), (h, w)]else:all_sizes = [(sk1, sk1)]for aspect_ratio in [[2], [2, 3], [2, 3], [2, 3], [2], [2]][idex]:w, h = sk1 * math.sqrt(aspect_ratio), sk1 / math.sqrt(aspect_ratio)all_sizes.append((w, h))all_sizes.append((h, w))all_sizes.append((sk3, sk3))

assert len(all_sizes) == [4, 6, 6, 6, 4, 4][idex]

for i, j in it.product(range(feature_size), repeat=2):for w, h in all_sizes:cx, cy = (j + 0.5) / fk[idex], (i + 0.5) / fk[idex]self.default_boxes.append([cy, cx, h, w])

def to_tlbr(cy, cx, h, w):return cy - h / 2, cx - w / 2, cy + h / 2, cx + w / 2

# For IoU calculationself.default_boxes_tlbr = np.array(tuple(to_tlbr(*i) for i in self.default_boxes), dtype='float32')self.default_boxes = np.array(self.default_boxes, dtype='float32')

default_boxes_tlbr = GeneratDefaultBoxes().default_boxes_tlbr

default_boxes = GeneratDefaultBoxes().default_boxes

y1, x1, y2, x2 = np.split(default_boxes_tlbr[:, :4], 4, axis=-1)

vol_anchors = (x2 - x1) * (y2 - y1)

matching_threshold = 0.5from mindspore.common.initializer import initializer, TruncatedNormal

def init_net_param(network, initialize_mode='TruncatedNormal'):"""Init the parameters in net."""params = network.trainable_params()for p in params:if 'beta' not in p.name and 'gamma' not in p.name and 'bias' not in p.name:if initialize_mode == 'TruncatedNormal':p.set_data(initializer(TruncatedNormal(0.02), p.data.shape, p.data.dtype))else:p.set_data(initialize_mode, p.data.shape, p.data.dtype)

def get_lr(global_step, lr_init, lr_end, lr_max, warmup_epochs, total_epochs, steps_per_epoch):""" generate learning rate array"""lr_each_step = []total_steps = steps_per_epoch * total_epochswarmup_steps = steps_per_epoch * warmup_epochsfor i in range(total_steps):if i < warmup_steps:lr = lr_init + (lr_max - lr_init) * i / warmup_stepselse:lr = lr_end + (lr_max - lr_end) * (1. + math.cos(math.pi * (i - warmup_steps) / (total_steps - warmup_steps))) / 2.if lr < 0.0:lr = 0.0lr_each_step.append(lr)

current_step = global_steplr_each_step = np.array(lr_each_step).astype(np.float32)learning_rate = lr_each_step[current_step:]

return learning_rateimport mindspore.dataset as ds

ds.config.set_enable_shared_mem(False)import time

from mindspore.amp import DynamicLossScaler

set_seed(1)

# load data

mindrecord_dir = "./datasets/MindRecord_COCO"

mindrecord_file = "./datasets/MindRecord_COCO/ssd.mindrecord0"

dataset = create_ssd_dataset(mindrecord_file, batch_size=5, rank=0, use_multiprocessing=True)

dataset_size = dataset.get_dataset_size()

image, get_loc, gt_label, num_matched_boxes = next(dataset.create_tuple_iterator())

# Network definition and initialization

network = SSD300Vgg16()

init_net_param(network)

# Define the learning rate

lr = Tensor(get_lr(global_step=0 * dataset_size,lr_init=0.001, lr_end=0.001 * 0.05, lr_max=0.05,warmup_epochs=2, total_epochs=60, steps_per_epoch=dataset_size))

# Define the optimizer

opt = nn.Momentum(filter(lambda x: x.requires_grad, network.get_parameters()), lr,0.9, 0.00015, float(1024))

# Define the forward procedure

def forward_fn(x, gt_loc, gt_label, num_matched_boxes):pred_loc, pred_label = network(x)mask = ops.less(0, gt_label).astype(ms.float32)num_matched_boxes = ops.sum(num_matched_boxes.astype(ms.float32))

# Positioning lossmask_loc = ops.tile(ops.expand_dims(mask, -1), (1, 1, 4))smooth_l1 = nn.SmoothL1Loss()(pred_loc, gt_loc) * mask_locloss_loc = ops.sum(ops.sum(smooth_l1, -1), -1)

# Category lossloss_cls = class_loss(pred_label, gt_label)loss_cls = ops.sum(loss_cls, (1, 2))

return ops.sum((loss_cls + loss_loc) / num_matched_boxes)

grad_fn = ms.value_and_grad(forward_fn, None, opt.parameters, has_aux=False)

loss_scaler = DynamicLossScaler(1024, 2, 1000)

# Gradient updates

def train_step(x, gt_loc, gt_label, num_matched_boxes):loss, grads = grad_fn(x, gt_loc, gt_label, num_matched_boxes)opt(grads)return loss

print("=================== Starting Training =====================")

for epoch in range(60):network.set_train(True)begin_time = time.time()for step, (image, get_loc, gt_label, num_matched_boxes) in enumerate(dataset.create_tuple_iterator()):loss = train_step(image, get_loc, gt_label, num_matched_boxes)end_time = time.time()times = end_time - begin_timeprint(f"Epoch:[{int(epoch + 1)}/{int(60)}], "f"loss:{loss} , "f"time:{times}s ")

ms.save_checkpoint(network, "ssd-60_9.ckpt")

print("=================== Training Success =====================")输出:

=================== Starting Training =====================

Epoch:[1/60], loss:1084.1499 , time:260.8889214992523s

Epoch:[2/60], loss:1074.2556 , time:1.5645153522491455s

Epoch:[3/60], loss:1056.8948 , time:1.5849218368530273s

Epoch:[4/60], loss:1038.404 , time:1.5757107734680176s

Epoch:[5/60], loss:1019.4508 , time:1.591012716293335s

......

Epoch:[55/60], loss:188.63403 , time:1.6473157405853271s

Epoch:[56/60], loss:188.51494 , time:1.6453087329864502s

Epoch:[57/60], loss:188.44801 , time:1.7012412548065186s

Epoch:[58/60], loss:188.40457 , time:1.639800786972046s

Epoch:[59/60], loss:188.38773 , time:1.6424283981323242s

Epoch:[60/60], loss:188.37619 , time:1.656235933303833s

=================== Training Success ======================================== Training Success =====================

八、评估

自定义eval_net()类评估训练模型

调用SsdInferWithDecoder类返回预测的坐标及标签

计算不同IoU阈值、area和maxDets设置下的

Average Precision(AP)

Average Recall(AR)

COCOMetrics类计算mAP

模型在测试集上的评估指标:

1.精确率(AP)和召回率(AR)的解释

TP:IoU>阈值检测框的数量(同一Ground Truth只计算一次)。

FP:IoU<=阈值检测框的数量,或同一个GT多余检测框的数量。

FN:没有检测到的GT的数量。

2.精确率(AP)和召回率(AR)的公式

精确率(Average Precision,AP):

TP 正样本预测正确的结果

FP 正样本预测错误的结果

【需确认】召回率(Average Recall,AR):

TP 正样本预测正确的结果

FN 正样本预测错误的和

反映出来的是预测结果中的漏检率。

3.输出指标

(1)类别AP的平均值mAP(mean Average Precision)

(2)iou取0.5的mAP值

voc的评判标准

(3)评判较为严格的mAP值

反应算法框的位置精准程度

(4)中间几个数为物体大小的mAP值

AR

maxDets=10/100的mAR值

反应检出率

两者接近,说明这个数据集不用检测100个框

可以提高性能

mindrecord_file = "./datasets/MindRecord_COCO/ssd_eval.mindrecord0"

def ssd_eval(dataset_path, ckpt_path, anno_json):"""SSD evaluation."""batch_size = 1ds = create_ssd_dataset(dataset_path, batch_size=batch_size,is_training=False, use_multiprocessing=False)

network = SSD300Vgg16()print("Load Checkpoint!")net = SsdInferWithDecoder(network, Tensor(default_boxes), ckpt_path)

net.set_train(False)total = ds.get_dataset_size() * batch_sizeprint("\n========================================\n")print("total images num: ", total)eval_param_dict = {"net": net, "dataset": ds, "anno_json": anno_json}mAP = apply_eval(eval_param_dict)print("\n========================================\n")print(f"mAP: {mAP}")

def eval_net():print("Start Eval!")ssd_eval(mindrecord_file, "./ssd-60_9.ckpt", anno_json)

eval_net()输出:

Start Eval!

Load Checkpoint!========================================total images num: 9

loading annotations into memory...

Done (t=0.00s)

creating index...

index created!

Loading and preparing results...

DONE (t=1.15s)

creating index...

index created!

Running per image evaluation...

Evaluate annotation type *bbox*

DONE (t=1.26s).

Accumulating evaluation results...

DONE (t=0.36s).Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.008Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.016Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=100 ] = 0.001Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.000Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.006Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.027Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 1 ] = 0.021Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 10 ] = 0.041Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.071Average Recall (AR) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.000Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.063Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.303========================================mAP: 0.007956423581575582相关文章:

昇思MindSpore学习笔记6-05计算机视觉--SSD目标检测

摘要: 记录MindSpore AI框架使用SSD目标检测算法对图像内容识别的过程、步骤和方法。包括环境准备、下载数据集、数据采样、数据集加载和预处理、构建模型、损失函数、模型训练、模型评估等。 一、概念 1.模型简介 SSD目标检测算法 Single Shot MultiBox Detecto…...

vb.netcad二开自学笔记9:界面之ribbon

一个成熟的软件怎么能没有ribbon呢,在前面的框架基础上再加个命令AddRibbon <CommandMethod("AddRibbon")> Public Sub AddRibbon() Dim ribbonControl As RibbonControl ComponentManager.Ribbon Dim tab As RibbonTab New RibbonTab() tab.Tit…...

学习笔记——动态路由——OSPF链路状态通告(LSA)

十、OSPF链路状态通告(LSA) 1、链路状态通告简介 (1)LAS概述 链路状态通告(Link State Advertisement,LSA)是路由器之间链路状态信息的载体。LSA是LSDB的最小组成单位,LSDB由一条条LSA构成的。是OSPF中计算路由的重要依据。 LSA用于向其它邻接OSPF路…...

模拟防止重复提交

gitee地址(需要自取)AopProxy重复提交: 防止重复提交 (gitee.com) RestController public class SubmissionController {Autowiredprivate SubmissionService submissionService;private static Jedis jedis new Jedis("localhost",6379);pr…...

C++:strcut与class的区别

在C中,struct和class在语法上非常相似,但它们之间确实存在一些关键的差异,这些差异主要体现在成员的默认访问权限和继承的默认方式上。然而,从更广泛的角度来看,它们都可以用来定义自定义数据类型,包含数据…...

科研绘图系列:R语言两组数据散点分布图(scatter plot)

介绍 展示两组数据的散点分布图是一种图形化表示方法,用于显示两个变量之间的关系。在散点图中,每个点代表一个数据点,其x坐标对应于第一组数据的值,y坐标对应于第二组数据的值。以下是散点图可以展示的一些结果: 线性关系:如果两组数据之间存在线性关系,散点图将显示出…...

【EasyExcel】根据单元格内容自动调整列宽

1.自定义Excel列宽样式策略类 import com.alibaba.excel.enums.CellDataTypeEnum; import com.alibaba.excel.metadata.Head; import com.alibaba.excel.metadata.data.WriteCellData; import com.alibaba.excel.write.metadata.holder.WriteSheetHolder; import com.alibaba.e…...

半月内笔者暂不写时评文

今晨,笔者在刚恢复的《新浪微博》发布消息表态如下:“要开会了!今起,半月内笔者暂不写敏感时评文,不让自媒体网管感到压力,也是张驰有度、识时务者为俊杰之正常选择。野钓去也。” 截图:来源笔者…...

Python面试题:如何在 Python 中解析 XML 文件?

在 Python 中解析 XML 文件可以使用内置的 xml.etree.ElementTree 模块。以下是一个示例,展示了如何使用这个模块解析 XML 文件: 读取 XML 文件: import xml.etree.ElementTree as ET# 读取 XML 文件 tree ET.parse(example.xml) root tr…...

3033.修改矩阵

1.题目描述 给你一个下标从 0 开始、大小为 m x n 的整数矩阵 matrix ,新建一个下标从 0 开始、名为 answer 的矩阵。使 answer 与 matrix 相等,接着将其中每个值为 -1 的元素替换为所在列的 最大 元素。 返回矩阵 answer 。 示例 1: 输入&am…...

解决MCM功率电源模块EMC的关键

对MCM功率电源而言,由于其工作在几百kHz的高频开关状态,故易成为干扰源。电磁兼容性EMC(Electro Magnetic Compatibility),是指设备或系统在其电磁环境中符合要求运行并不对其环境中的任何设备产生无法忍受的电磁干扰的…...

在conda的环境中安装Jupyter及其他软件包

Pytorch版本、安装和检验 大多数软件包都是随Anaconda安装的,也可以根据需要手动安装一些其他软件包。 目录 创建虚拟环境 进入虚拟环境 安装Jupyter notebook 安装matplotlib 安装 pandas 创建虚拟环境 基于conda包的环境创建、激活、管理与删除http://t.cs…...

spark中的floor函数

在Spark中,floor函数是一种数学函数,用于返回不大于给定数值的最大整数。具体作用如下: 1. 数值操作: floor函数会将每个元素向下取整到最接近的整数。例如,对于浮点数或双精度数值,它会返回不大于该数值的…...

最简单的Docker离线安装教程

最简单的Docker离线安装教程 方式一 RPM 包方式1. 在线下载 RPM 包2. 将 RPM 包拷贝到安装机器3. 安装4. 启动 方式二 二进制安装方式(推荐)1. 下载包2. 将包进行解压授权3. 注册 systemd4. 自启和启动 一直以来在线安装 docker 到服务器上是非常方便的&…...

如何在 Python 中创建一个类似于 MS 计算器的 GUI 计算器

问题背景 假设我们需要创建一个类似于微软计算器的 GUI 计算器。这个计算器应该具有以下功能: 能够显示第一个输入的数字。当按下运算符时,输入框仍显示第一个数字。当按下第二个数字时,第一个数字被替换。 解决方案 为了解决这个问题&am…...

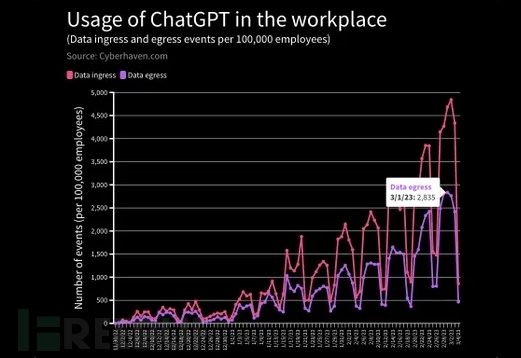

警惕:与ChatGPT共享业务数据可能十分危险

您已经在使用ChatGPT了吗?或者您正在考虑使用它来简化操作或改善客户服务?虽然ChatGPT提供了许多好处,但重要的是,您要意识到与ChatGPT这样的人工智能工具共享敏感业务数据相关的安全风险。下面,我们概述了一些关键问题…...

基于MacOS系统Sonoma 14.5的SSH服务禁止密码登录

基于系统Sonoma 14.5,不同系统有所差异。 修改sshd_config文件 sudo vim /etc/ssh/sshd_config找到以下两行取消注释,修改值为 no PasswordAuthentication no KbdInteractiveAuthentication no重启sshd服务 # 关闭服务 sudo launchctl unload -w /System…...

深入理解MySQL中的EXPLAIN及type列

在MySQL中,EXPLAIN是一个强大的工具,它可以帮助我们理解SQL查询的执行计划。通过使用EXPLAIN,我们可以获取到查询的详细信息,包括如何执行查询,以及查询的各个部分如何连接在一起。在本篇博客中,我们将重点…...

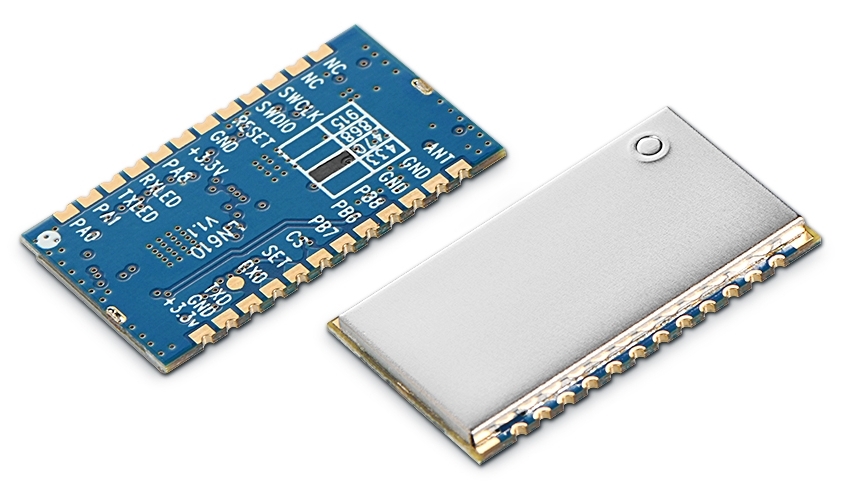

LoRaWAN网络协议Class A/Class B/Class C三种工作模式说明

LoRaWAN是一种专为广域物联网设计的低功耗广域网络协议。它特别适用于物联网(IoT)设备,可以在低数据速率下进行长距离通信。LoRaWAN 网络由多个组成部分构成,其中包括节点(终端设备)、网关和网络服务器。Lo…...

ITSS服务经理:WAVE SUMMIT深度学习开发者大会2024在北京召开

在6月28日,由深度学习技术及应用国家工程研究中心主导的WAVE SUMMIT深度学习开发者大会2024于北京隆重举行。 此次盛会由百度飞桨和文心大模型联袂承办。 在大会上,百度震撼发布文心大模型4.0 Turbo版本,并宣布其API接口将向广大开发者开放…...

观成科技:隐蔽隧道工具Ligolo-ng加密流量分析

1.工具介绍 Ligolo-ng是一款由go编写的高效隧道工具,该工具基于TUN接口实现其功能,利用反向TCP/TLS连接建立一条隐蔽的通信信道,支持使用Let’s Encrypt自动生成证书。Ligolo-ng的通信隐蔽性体现在其支持多种连接方式,适应复杂网…...

conda相比python好处

Conda 作为 Python 的环境和包管理工具,相比原生 Python 生态(如 pip 虚拟环境)有许多独特优势,尤其在多项目管理、依赖处理和跨平台兼容性等方面表现更优。以下是 Conda 的核心好处: 一、一站式环境管理:…...

Ubuntu系统下交叉编译openssl

一、参考资料 OpenSSL&&libcurl库的交叉编译 - hesetone - 博客园 二、准备工作 1. 编译环境 宿主机:Ubuntu 20.04.6 LTSHost:ARM32位交叉编译器:arm-linux-gnueabihf-gcc-11.1.0 2. 设置交叉编译工具链 在交叉编译之前&#x…...

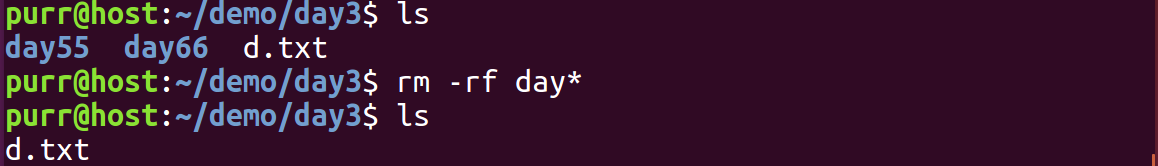

Linux 文件类型,目录与路径,文件与目录管理

文件类型 后面的字符表示文件类型标志 普通文件:-(纯文本文件,二进制文件,数据格式文件) 如文本文件、图片、程序文件等。 目录文件:d(directory) 用来存放其他文件或子目录。 设备…...

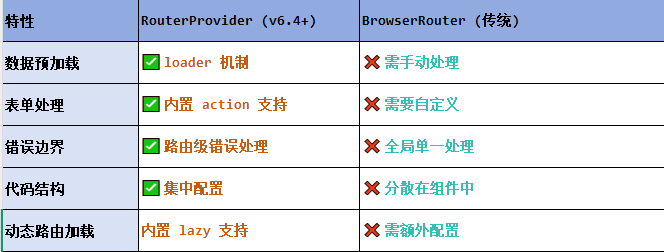

React第五十七节 Router中RouterProvider使用详解及注意事项

前言 在 React Router v6.4 中,RouterProvider 是一个核心组件,用于提供基于数据路由(data routers)的新型路由方案。 它替代了传统的 <BrowserRouter>,支持更强大的数据加载和操作功能(如 loader 和…...

解锁数据库简洁之道:FastAPI与SQLModel实战指南

在构建现代Web应用程序时,与数据库的交互无疑是核心环节。虽然传统的数据库操作方式(如直接编写SQL语句与psycopg2交互)赋予了我们精细的控制权,但在面对日益复杂的业务逻辑和快速迭代的需求时,这种方式的开发效率和可…...

Linux-07 ubuntu 的 chrome 启动不了

文章目录 问题原因解决步骤一、卸载旧版chrome二、重新安装chorme三、启动不了,报错如下四、启动不了,解决如下 总结 问题原因 在应用中可以看到chrome,但是打不开(说明:原来的ubuntu系统出问题了,这个是备用的硬盘&a…...

【C语言练习】080. 使用C语言实现简单的数据库操作

080. 使用C语言实现简单的数据库操作 080. 使用C语言实现简单的数据库操作使用原生APIODBC接口第三方库ORM框架文件模拟1. 安装SQLite2. 示例代码:使用SQLite创建数据库、表和插入数据3. 编译和运行4. 示例运行输出:5. 注意事项6. 总结080. 使用C语言实现简单的数据库操作 在…...

AI编程--插件对比分析:CodeRider、GitHub Copilot及其他

AI编程插件对比分析:CodeRider、GitHub Copilot及其他 随着人工智能技术的快速发展,AI编程插件已成为提升开发者生产力的重要工具。CodeRider和GitHub Copilot作为市场上的领先者,分别以其独特的特性和生态系统吸引了大量开发者。本文将从功…...

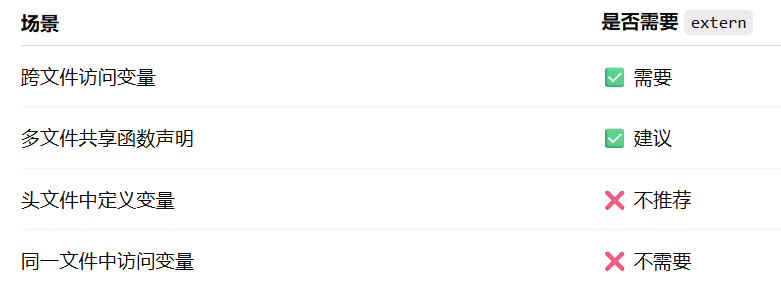

深入解析C++中的extern关键字:跨文件共享变量与函数的终极指南

🚀 C extern 关键字深度解析:跨文件编程的终极指南 📅 更新时间:2025年6月5日 🏷️ 标签:C | extern关键字 | 多文件编程 | 链接与声明 | 现代C 文章目录 前言🔥一、extern 是什么?&…...