[Dissecting the Network Architecture] backbone, ccff

backbone

CCFF

I don't know yet how the layers are connected; so far I only know what each layer is (its parameter name and shape).

backbone

- backbone.backbone.conv1.weight torch.Size([64, 3, 7, 7])

- backbone.backbone.layer1.0.conv1.weight torch.Size([64, 64, 1, 1])

- backbone.backbone.layer1.0.conv2.weight torch.Size([64, 64, 3, 3])

- backbone.backbone.layer1.0.conv3.weight torch.Size([256, 64, 1, 1])

- backbone.backbone.layer1.0.downsample.0.weight torch.Size([256, 64, 1, 1])

- backbone.backbone.layer1.1.conv1.weight torch.Size([64, 256, 1, 1])

- backbone.backbone.layer1.1.conv2.weight torch.Size([64, 64, 3, 3])

- backbone.backbone.layer1.1.conv3.weight torch.Size([256, 64, 1, 1])

- backbone.backbone.layer1.2.conv1.weight torch.Size([64, 256, 1, 1])

- backbone.backbone.layer1.2.conv2.weight torch.Size([64, 64, 3, 3])

- backbone.backbone.layer1.2.conv3.weight torch.Size([256, 64, 1, 1])

- backbone.backbone.layer2.0.conv1.weight torch.Size([128, 256, 1, 1])

- backbone.backbone.layer2.0.conv2.weight torch.Size([128, 128, 3, 3])

- backbone.backbone.layer2.0.conv3.weight torch.Size([512, 128, 1, 1])

- backbone.backbone.layer2.0.downsample.0.weight torch.Size([512, 256, 1, 1])

- backbone.backbone.layer2.1.conv1.weight torch.Size([128, 512, 1, 1])

- backbone.backbone.layer2.1.conv2.weight torch.Size([128, 128, 3, 3])

- backbone.backbone.layer2.1.conv3.weight torch.Size([512, 128, 1, 1])

- backbone.backbone.layer2.2.conv1.weight torch.Size([128, 512, 1, 1])

- backbone.backbone.layer2.2.conv2.weight torch.Size([128, 128, 3, 3])

- backbone.backbone.layer2.2.conv3.weight torch.Size([512, 128, 1, 1])

- backbone.backbone.layer2.3.conv1.weight torch.Size([128, 512, 1, 1])

- backbone.backbone.layer2.3.conv2.weight torch.Size([128, 128, 3, 3])

- backbone.backbone.layer2.3.conv3.weight torch.Size([512, 128, 1, 1])

- backbone.backbone.layer3.0.conv1.weight torch.Size([256, 512, 1, 1])

- backbone.backbone.layer3.0.conv2.weight torch.Size([256, 256, 3, 3])

- backbone.backbone.layer3.0.conv3.weight torch.Size([1024, 256, 1, 1])

- backbone.backbone.layer3.0.downsample.0.weight torch.Size([1024, 512, 1, 1])

- backbone.backbone.layer3.1.conv1.weight torch.Size([256, 1024, 1, 1])

- backbone.backbone.layer3.1.conv2.weight torch.Size([256, 256, 3, 3])

- backbone.backbone.layer3.1.conv3.weight torch.Size([1024, 256, 1, 1])

- backbone.backbone.layer3.2.conv1.weight torch.Size([256, 1024, 1, 1])

- backbone.backbone.layer3.2.conv2.weight torch.Size([256, 256, 3, 3])

- backbone.backbone.layer3.2.conv3.weight torch.Size([1024, 256, 1, 1])

- backbone.backbone.layer3.3.conv1.weight torch.Size([256, 1024, 1, 1])

- backbone.backbone.layer3.3.conv2.weight torch.Size([256, 256, 3, 3])

- backbone.backbone.layer3.3.conv3.weight torch.Size([1024, 256, 1, 1])

- backbone.backbone.layer3.4.conv1.weight torch.Size([256, 1024, 1, 1])

- backbone.backbone.layer3.4.conv2.weight torch.Size([256, 256, 3, 3])

- backbone.backbone.layer3.4.conv3.weight torch.Size([1024, 256, 1, 1])

- backbone.backbone.layer3.5.conv1.weight torch.Size([256, 1024, 1, 1])

- backbone.backbone.layer3.5.conv2.weight torch.Size([256, 256, 3, 3])

- backbone.backbone.layer3.5.conv3.weight torch.Size([1024, 256, 1, 1])

- backbone.backbone.layer4.0.conv1.weight torch.Size([512, 1024, 1, 1])

- backbone.backbone.layer4.0.conv2.weight torch.Size([512, 512, 3, 3])

- backbone.backbone.layer4.0.conv3.weight torch.Size([2048, 512, 1, 1])

- backbone.backbone.layer4.0.downsample.0.weight torch.Size([2048, 1024, 1, 1])

- backbone.backbone.layer4.1.conv1.weight torch.Size([512, 2048, 1, 1])

- backbone.backbone.layer4.1.conv2.weight torch.Size([512, 512, 3, 3])

- backbone.backbone.layer4.1.conv3.weight torch.Size([2048, 512, 1, 1])

- backbone.backbone.layer4.2.conv1.weight torch.Size([512, 2048, 1, 1])

- backbone.backbone.layer4.2.conv2.weight torch.Size([512, 512, 3, 3])

- backbone.backbone.layer4.2.conv3.weight torch.Size([2048, 512, 1, 1])

- backbone.backbone.fc.weight torch.Size([1000, 2048])

- backbone.backbone.fc.bias torch.Size([1000])
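The backbone names and shapes follow the standard ResNet-50 bottleneck layout: stages of 3, 4, 6 and 3 blocks, a 1×1 → 3×3 → 1×1 conv sequence in each block, and a `downsample` 1×1 conv only in the first block of each stage. The minimal sketch below (plain Python, no PyTorch needed; `STAGES` and `backbone_shapes` are hypothetical names) regenerates the 55 listed entries from that layout and sums their sizes. No BatchNorm parameters appear in the listing: the sum, 25,503,912, is exactly torchvision's ResNet-50 count of 25,557,032 minus its 53,120 BatchNorm affine parameters. That is consistent with (but does not prove) the backbone using frozen BatchNorm whose values are stored as buffers rather than parameters. The ImageNet `fc` layer is listed too, although a counting model presumably does not use its logits.

```python
from math import prod

# (planes, number of blocks) per stage, read off the listing above.
# Each bottleneck's last 1x1 conv expands to planes * 4 channels.
STAGES = [(64, 3), (128, 4), (256, 6), (512, 3)]
EXPANSION = 4


def backbone_shapes():
    """Rebuild the listed conv/fc shapes (the 'backbone.backbone.' prefix is dropped)."""
    shapes = {"conv1.weight": (64, 3, 7, 7)}
    in_ch = 64
    for stage, (planes, blocks) in enumerate(STAGES, start=1):
        out_ch = planes * EXPANSION
        for b in range(blocks):
            prefix = f"layer{stage}.{b}"
            shapes[f"{prefix}.conv1.weight"] = (planes, in_ch, 1, 1)
            shapes[f"{prefix}.conv2.weight"] = (planes, planes, 3, 3)
            shapes[f"{prefix}.conv3.weight"] = (out_ch, planes, 1, 1)
            if b == 0:
                # The first block changes the channel count (and, from layer2 on,
                # the resolution), so its shortcut needs a 1x1 projection.
                shapes[f"{prefix}.downsample.0.weight"] = (out_ch, in_ch, 1, 1)
            in_ch = out_ch
    shapes["fc.weight"] = (1000, in_ch)
    shapes["fc.bias"] = (1000,)
    return shapes


shapes = backbone_shapes()
total = sum(prod(s) for s in shapes.values())
print(len(shapes), total)  # prints: 55 25503912
```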

ccff

- ccff.conv1.conv.weight torch.Size([3584, 3584, 1, 1])

- ccff.conv1.norm.weight torch.Size([3584])

- ccff.conv1.norm.bias torch.Size([3584])

- ccff.conv2.conv.weight torch.Size([3584, 3584, 1, 1])

- ccff.conv2.norm.weight torch.Size([3584])

- ccff.conv2.norm.bias torch.Size([3584])

- ccff.bottlenecks.0.conv1.conv.weight torch.Size([3584, 3584, 3, 3])

- ccff.bottlenecks.0.conv1.norm.weight torch.Size([3584])

- ccff.bottlenecks.0.conv1.norm.bias torch.Size([3584])

- ccff.bottlenecks.0.conv2.conv.weight torch.Size([3584, 3584, 1, 1])

- ccff.bottlenecks.0.conv2.norm.weight torch.Size([3584])

- ccff.bottlenecks.0.conv2.norm.bias torch.Size([3584])

- ccff.bottlenecks.1.conv1.conv.weight torch.Size([3584, 3584, 3, 3])

- ccff.bottlenecks.1.conv1.norm.weight torch.Size([3584])

- ccff.bottlenecks.1.conv1.norm.bias torch.Size([3584])

- ccff.bottlenecks.1.conv2.conv.weight torch.Size([3584, 3584, 1, 1])

- ccff.bottlenecks.1.conv2.norm.weight torch.Size([3584])

- ccff.bottlenecks.1.conv2.norm.bias torch.Size([3584])

- ccff.bottlenecks.2.conv1.conv.weight torch.Size([3584, 3584, 3, 3])

- ccff.bottlenecks.2.conv1.norm.weight torch.Size([3584])

- ccff.bottlenecks.2.conv1.norm.bias torch.Size([3584])

- ccff.bottlenecks.2.conv2.conv.weight torch.Size([3584, 3584, 1, 1])

- ccff.bottlenecks.2.conv2.norm.weight torch.Size([3584])

- ccff.bottlenecks.2.conv2.norm.bias torch.Size([3584])
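Two observations help explain the CCFF listing. First, 3584 = 512 + 1024 + 2048, the output widths of layer2, layer3 and layer4, which suggests (an inference from the shapes, not something the listing states) that CCFF operates on the channel-wise concatenation of those three stages. Second, the naming (`conv1`, `conv2`, and `bottlenecks.N.conv1/conv2`, each a `conv` + `norm` pair with one 3×3 and one 1×1 conv per bottleneck) resembles the RepVGG-style CSP block used for cross-scale feature fusion in RT-DETR; that resemblance is a guess from the names only. Because every conv keeps all 3584 channels, the cost grows with the square of the width: one 3×3 conv alone holds 3584 × 3584 × 9 ≈ 115.6 M weights. The sketch below (hypothetical helper `conv_norm`) recomputes the module total from the listed shapes and the share taken by the three 3×3 convs.

```python
from math import prod

# Output channels of ResNet-50 layer2, layer3 and layer4 (from the backbone listing).
LAYER_CHANNELS = {"layer2": 512, "layer3": 1024, "layer4": 2048}
C = sum(LAYER_CHANNELS.values())  # 3584


def conv_norm(k):
    """Parameters of a bias-free k x k conv (C -> C) followed by a norm with weight and bias."""
    return prod((C, C, k, k)) + 2 * C


ccff = {"conv1": conv_norm(1), "conv2": conv_norm(1)}
for i in range(3):
    # Each bottleneck pairs a 3x3 conv_norm with a 1x1 conv_norm, as listed.
    ccff[f"bottlenecks.{i}"] = conv_norm(3) + conv_norm(1)

total = sum(ccff.values())
share_3x3 = 3 * prod((C, C, 3, 3)) / total
print(C, total, round(share_3x3, 3))  # prints: 3584 411099136 0.844
```

Since the conv weights scale with C², a hypothetical variant with a narrower hidden width (say 1792) would need roughly a quarter of these weights.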

input_proj

- input_proj.weight torch.Size([256, 3584, 1, 1])

- input_proj.bias torch.Size([256])
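`input_proj` is a 1×1 convolution with bias that maps the 3584-channel feature map down to 256 channels, matching the width of the encoder listed next. A 1×1 conv is the same linear layer applied independently at every pixel, so its parameter count is 256 × 3584 + 256 = 917,760. The toy sketch below spells that out in pure Python with tiny, made-up sizes; `conv1x1` and `conv1x1_params` are hypothetical names.

```python
def conv1x1(x, weight, bias):
    """Apply a 1x1 convolution to one image.

    x:      C_in channels, each an H x W nested list.
    weight: C_out x C_in matrix (a [C_out, C_in, 1, 1] kernel with the 1x1 dims dropped).
    bias:   C_out values.
    Each output pixel depends only on the input pixel at the same position:
    out[o][i][j] = bias[o] + sum_c weight[o][c] * x[c][i][j]
    """
    c_in, h, w = len(x), len(x[0]), len(x[0][0])
    return [
        [
            [bias[o] + sum(weight[o][c] * x[c][i][j] for c in range(c_in)) for j in range(w)]
            for i in range(h)
        ]
        for o in range(len(weight))
    ]


def conv1x1_params(c_in, c_out):
    # weight [c_out, c_in, 1, 1] plus bias [c_out]
    return c_out * c_in + c_out


# Toy example: 6 input channels -> 2 output channels on a 3 x 4 map.
C_IN, C_OUT, H, W = 6, 2, 3, 4
x = [[[c + i + j for j in range(W)] for i in range(H)] for c in range(C_IN)]
weight = [[1] * C_IN, list(range(C_IN))]
bias = [0.5, -1.0]
out = conv1x1(x, weight, bias)
print(len(out), len(out[0]), len(out[0][0]))  # prints: 2 3 4
print(conv1x1_params(3584, 256))              # prints: 917760
```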

encoder

- encoder.layers.0.norm1.weight torch.Size([256])

- encoder.layers.0.norm1.bias torch.Size([256])

- encoder.layers.0.norm2.weight torch.Size([256])

- encoder.layers.0.norm2.bias torch.Size([256])

- encoder.layers.0.self_attn.in_proj_weight torch.Size([768, 256])

- encoder.layers.0.self_attn.in_proj_bias torch.Size([768])

- encoder.layers.0.self_attn.out_proj.weight torch.Size([256, 256])

- encoder.layers.0.self_attn.out_proj.bias torch.Size([256])

- encoder.layers.0.mlp.linear1.weight torch.Size([2048, 256])

- encoder.layers.0.mlp.linear1.bias torch.Size([2048])

- encoder.layers.0.mlp.linear2.weight torch.Size([256, 2048])

- encoder.layers.0.mlp.linear2.bias torch.Size([256])

- encoder.layers.1.norm1.weight torch.Size([256])

- encoder.layers.1.norm1.bias torch.Size([256])

- encoder.layers.1.norm2.weight torch.Size([256])

- encoder.layers.1.norm2.bias torch.Size([256])

- encoder.layers.1.self_attn.in_proj_weight torch.Size([768, 256])

- encoder.layers.1.self_attn.in_proj_bias torch.Size([768])

- encoder.layers.1.self_attn.out_proj.weight torch.Size([256, 256])

- encoder.layers.1.self_attn.out_proj.bias torch.Size([256])

- encoder.layers.1.mlp.linear1.weight torch.Size([2048, 256])

- encoder.layers.1.mlp.linear1.bias torch.Size([2048])

- encoder.layers.1.mlp.linear2.weight torch.Size([256, 2048])

- encoder.layers.1.mlp.linear2.bias torch.Size([256])

- encoder.layers.2.norm1.weight torch.Size([256])

- encoder.layers.2.norm1.bias torch.Size([256])

- encoder.layers.2.norm2.weight torch.Size([256])

- encoder.layers.2.norm2.bias torch.Size([256])

- encoder.layers.2.self_attn.in_proj_weight torch.Size([768, 256])

- encoder.layers.2.self_attn.in_proj_bias torch.Size([768])

- encoder.layers.2.self_attn.out_proj.weight torch.Size([256, 256])

- encoder.layers.2.self_attn.out_proj.bias torch.Size([256])

- encoder.layers.2.mlp.linear1.weight torch.Size([2048, 256])

- encoder.layers.2.mlp.linear1.bias torch.Size([2048])

- encoder.layers.2.mlp.linear2.weight torch.Size([256, 2048])

- encoder.layers.2.mlp.linear2.bias torch.Size([256])

- encoder.norm.weight torch.Size([256])

- encoder.norm.bias torch.Size([256])
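Each encoder layer has the shapes of a standard transformer encoder layer with model width 256 and MLP width 2048. The `self_attn.in_proj_weight` of shape [768, 256] follows the naming of PyTorch's `nn.MultiheadAttention`, which packs the query, key and value projections into one [3 × 256, 256] matrix (rows 0 to 255 for Q, 256 to 511 for K, 512 to 767 for V); presumably that module is used here. The number of heads cannot be read from the weights, because splitting into heads is only a reshape. The sketch below (hypothetical helpers `encoder_layer_shapes` and `split_packed_qkv`) unpacks a packed matrix and counts one layer: 1,315,072 parameters, or 3,945,728 for three layers plus the final `encoder.norm`.

```python
from math import prod

E, FF, NUM_LAYERS = 256, 2048, 3


def encoder_layer_shapes(e=E, ff=FF):
    """Shapes of one encoder layer, copied from the listing (prefix 'encoder.layers.N.' dropped)."""
    return {
        "norm1.weight": (e,), "norm1.bias": (e,),
        "norm2.weight": (e,), "norm2.bias": (e,),
        "self_attn.in_proj_weight": (3 * e, e), "self_attn.in_proj_bias": (3 * e,),
        "self_attn.out_proj.weight": (e, e), "self_attn.out_proj.bias": (e,),
        "mlp.linear1.weight": (ff, e), "mlp.linear1.bias": (ff,),
        "mlp.linear2.weight": (e, ff), "mlp.linear2.bias": (e,),
    }


def split_packed_qkv(in_proj_weight):
    """Split a packed [3E, E] matrix (a list of rows) into W_q, W_k, W_v, each [E, E]."""
    e = len(in_proj_weight) // 3
    return in_proj_weight[:e], in_proj_weight[e:2 * e], in_proj_weight[2 * e:]


per_layer = sum(prod(s) for s in encoder_layer_shapes().values())
encoder_total = NUM_LAYERS * per_layer + 2 * E  # plus encoder.norm weight and bias
print(per_layer, encoder_total)  # prints: 1315072 3945728

# Toy check with E = 2: six packed rows split into three 2 x 2 blocks.
packed = [[2 * r, 2 * r + 1] for r in range(6)]
w_q, w_k, w_v = split_packed_qkv(packed)
```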

ope

- ope.iterative_adaptation.layers.0.norm1.weight torch.Size([256])

- ope.iterative_adaptation.layers.0.norm1.bias torch.Size([256])

- ope.iterative_adaptation.layers.0.norm2.weight torch.Size([256])

- ope.iterative_adaptation.layers.0.norm2.bias torch.Size([256])

- ope.iterative_adaptation.layers.0.norm3.weight torch.Size([256])

- ope.iterative_adaptation.layers.0.norm3.bias torch.Size([256])

- ope.iterative_adaptation.layers.0.self_attn.in_proj_weight torch.Size([768, 256])

- ope.iterative_adaptation.layers.0.self_attn.in_proj_bias torch.Size([768])

- ope.iterative_adaptation.layers.0.self_attn.out_proj.weight torch.Size([256, 256])

- ope.iterative_adaptation.layers.0.self_attn.out_proj.bias torch.Size([256])

- ope.iterative_adaptation.layers.0.enc_dec_attn.in_proj_weight torch.Size([768, 256])

- ope.iterative_adaptation.layers.0.enc_dec_attn.in_proj_bias torch.Size([768])

- ope.iterative_adaptation.layers.0.enc_dec_attn.out_proj.weight torch.Size([256, 256])

- ope.iterative_adaptation.layers.0.enc_dec_attn.out_proj.bias torch.Size([256])

- ope.iterative_adaptation.layers.0.mlp.linear1.weight torch.Size([2048, 256])

- ope.iterative_adaptation.layers.0.mlp.linear1.bias torch.Size([2048])

- ope.iterative_adaptation.layers.0.mlp.linear2.weight torch.Size([256, 2048])

- ope.iterative_adaptation.layers.0.mlp.linear2.bias torch.Size([256])

- ope.iterative_adaptation.layers.1.norm1.weight torch.Size([256])

- ope.iterative_adaptation.layers.1.norm1.bias torch.Size([256])

- ope.iterative_adaptation.layers.1.norm2.weight torch.Size([256])

- ope.iterative_adaptation.layers.1.norm2.bias torch.Size([256])

- ope.iterative_adaptation.layers.1.norm3.weight torch.Size([256])

- ope.iterative_adaptation.layers.1.norm3.bias torch.Size([256])

- ope.iterative_adaptation.layers.1.self_attn.in_proj_weight torch.Size([768, 256])

- ope.iterative_adaptation.layers.1.self_attn.in_proj_bias torch.Size([768])

- ope.iterative_adaptation.layers.1.self_attn.out_proj.weight torch.Size([256, 256])

- ope.iterative_adaptation.layers.1.self_attn.out_proj.bias torch.Size([256])

- ope.iterative_adaptation.layers.1.enc_dec_attn.in_proj_weight torch.Size([768, 256])

- ope.iterative_adaptation.layers.1.enc_dec_attn.in_proj_bias torch.Size([768])

- ope.iterative_adaptation.layers.1.enc_dec_attn.out_proj.weight torch.Size([256, 256])

- ope.iterative_adaptation.layers.1.enc_dec_attn.out_proj.bias torch.Size([256])

- ope.iterative_adaptation.layers.1.mlp.linear1.weight torch.Size([2048, 256])

- ope.iterative_adaptation.layers.1.mlp.linear1.bias torch.Size([2048])

- ope.iterative_adaptation.layers.1.mlp.linear2.weight torch.Size([256, 2048])

- ope.iterative_adaptation.layers.1.mlp.linear2.bias torch.Size([256])

- ope.iterative_adaptation.layers.2.norm1.weight torch.Size([256])

- ope.iterative_adaptation.layers.2.norm1.bias torch.Size([256])

- ope.iterative_adaptation.layers.2.norm2.weight torch.Size([256])

- ope.iterative_adaptation.layers.2.norm2.bias torch.Size([256])

- ope.iterative_adaptation.layers.2.norm3.weight torch.Size([256])

- ope.iterative_adaptation.layers.2.norm3.bias torch.Size([256])

- ope.iterative_adaptation.layers.2.self_attn.in_proj_weight torch.Size([768, 256])

- ope.iterative_adaptation.layers.2.self_attn.in_proj_bias torch.Size([768])

- ope.iterative_adaptation.layers.2.self_attn.out_proj.weight torch.Size([256, 256])

- ope.iterative_adaptation.layers.2.self_attn.out_proj.bias torch.Size([256])

- ope.iterative_adaptation.layers.2.enc_dec_attn.in_proj_weight torch.Size([768, 256])

- ope.iterative_adaptation.layers.2.enc_dec_attn.in_proj_bias torch.Size([768])

- ope.iterative_adaptation.layers.2.enc_dec_attn.out_proj.weight torch.Size([256, 256])

- ope.iterative_adaptation.layers.2.enc_dec_attn.out_proj.bias torch.Size([256])

- ope.iterative_adaptation.layers.2.mlp.linear1.weight torch.Size([2048, 256])

- ope.iterative_adaptation.layers.2.mlp.linear1.bias torch.Size([2048])

- ope.iterative_adaptation.layers.2.mlp.linear2.weight torch.Size([256, 2048])

- ope.iterative_adaptation.layers.2.mlp.linear2.bias torch.Size([256])

- ope.iterative_adaptation.norm.weight torch.Size([256])

- ope.iterative_adaptation.norm.bias torch.Size([256])
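Each `ope.iterative_adaptation` layer has the shapes of a transformer decoder layer: three norms, a self-attention, an `enc_dec_attn` cross-attention, and the same 256 → 2048 → 256 MLP as the encoder. Compared with an encoder layer it adds exactly one attention block (263,168 parameters) and one norm (512), for 1,578,752 per layer. Judging by the names, the cross-attention lets a set of query vectors (presumably the object prototypes that OPE adapts) read from the encoder's image features; that reading is an inference, since the listing does not show the data flow. The sketch below illustrates what a cross-attention computes, simplified to a single head with no biases and no output projection; `cross_attention`, `matvec`, `softmax` and `decoder_layer_params` are hypothetical names.

```python
import math


def matvec(w, v):
    return [sum(wi * vi for wi, vi in zip(row, v)) for row in w]


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]


def cross_attention(queries, memory, wq, wk, wv):
    """Single-head scaled dot-product cross-attention.

    queries: list of d-dim vectors (e.g. prototypes).
    memory:  list of d-dim vectors (e.g. flattened image features).
    wq, wk, wv: d x d projection matrices.
    """
    d = len(wq)
    keys = [matvec(wk, m) for m in memory]
    values = [matvec(wv, m) for m in memory]
    outputs = []
    for q in queries:
        qp = matvec(wq, q)
        scores = [sum(a * b for a, b in zip(qp, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Each output is a convex combination of the value vectors.
        outputs.append([sum(w * v[i] for w, v in zip(weights, values)) for i in range(d)])
    return outputs


def decoder_layer_params(e=256, ff=2048):
    attn = (3 * e * e + 3 * e) + (e * e + e)  # packed in_proj + out_proj
    mlp = (ff * e + ff) + (e * ff + e)
    return 3 * 2 * e + 2 * attn + mlp  # three norms, two attentions, one MLP


identity = [[1.0, 0.0], [0.0, 1.0]]
out = cross_attention([[1.0, 0.0]], [[1.0, 0.0], [0.0, 1.0]], identity, identity, identity)
print(decoder_layer_params())  # prints: 1578752
```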

ope.shape_or_objectness

- ope.shape_or_objectness.0.weight torch.Size([64, 2])

- ope.shape_or_objectness.0.bias torch.Size([64])

- ope.shape_or_objectness.2.weight torch.Size([256, 64])

- ope.shape_or_objectness.2.bias torch.Size([256])

- ope.shape_or_objectness.4.weight torch.Size([2304, 256])

- ope.shape_or_objectness.4.bias torch.Size([2304])
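`ope.shape_or_objectness` is a three-layer MLP, 2 → 64 → 256 → 2304. The missing indices 1 and 3 in the names point to parameter-free modules between the linear layers of an `nn.Sequential`, most likely activations. A 2-dimensional input fits a box's width and height, and 2304 = 256 × 3 × 3, so a plausible reading (an assumption the weights alone cannot confirm) is that each exemplar box's size is turned into a 3 × 3 kernel with 256 channels, matching the encoder width. The sketch below (hypothetical helpers `mlp_params`, `widths_chain`, `reshape_to_kernels`) checks that the widths chain, counts the 608,960 parameters, and shows the row-major reshape of a flat 2304-vector into [256][3][3], the element order `torch.reshape` uses for a contiguous tensor.

```python
# [out, in] shapes of the Linear layers at Sequential indices 0, 2 and 4
LINEAR_SHAPES = [(64, 2), (256, 64), (2304, 256)]
EMB_DIM, KERNEL = 256, 3  # hypothesis: 2304 = 256 channels x 3 x 3


def mlp_params(shapes):
    return sum(out * inp + out for out, inp in shapes)  # weight + bias per layer


def widths_chain(shapes):
    """True if each layer's output width equals the next layer's input width."""
    return all(a[0] == b[1] for a, b in zip(shapes, shapes[1:]))


def reshape_to_kernels(flat, channels=EMB_DIM, k=KERNEL):
    """Row-major reshape of a flat list into [channels][k][k]."""
    if len(flat) != channels * k * k:
        raise ValueError("size mismatch")
    step = k * k
    return [
        [flat[c * step + r * k: c * step + (r + 1) * k] for r in range(k)]
        for c in range(channels)
    ]


print(widths_chain(LINEAR_SHAPES), mlp_params(LINEAR_SHAPES))  # prints: True 608960
kernels = reshape_to_kernels(list(range(EMB_DIM * KERNEL * KERNEL)))
print(kernels[1])  # prints: [[9, 10, 11], [12, 13, 14], [15, 16, 17]]
```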

Regression head

- regression_head.regressor.0.layer.0.weight torch.Size([128, 256, 3, 3])

- regression_head.regressor.0.layer.0.bias torch.Size([128])

- regression_head.regressor.1.layer.0.weight torch.Size([64, 128, 3, 3])

- regression_head.regressor.1.layer.0.bias torch.Size([64])

- regression_head.regressor.2.layer.0.weight torch.Size([32, 64, 3, 3])

- regression_head.regressor.2.layer.0.bias torch.Size([32])

- regression_head.regressor.3.weight torch.Size([1, 32, 1, 1])

- regression_head.regressor.3.bias torch.Size([1])
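The regression head is a chain of three 3×3 convs (256 → 128 → 64 → 32) and a final 1×1 conv down to a single channel, i.e. one density value per pixel; every conv has a bias, giving 387,329 parameters. The `.layer.0.` in the names suggests that each of the first three blocks wraps its conv in a small `nn.Sequential` that may also hold parameter-free modules such as an activation or an upsampling step. The trace below *assumes* each of those blocks upsamples by 2 and that the 3×3 convs are padded to keep the size; under that assumption, three blocks would bring a 1/8-resolution feature map back to input resolution. The listing confirms neither assumption, and `head_params` and `trace` are hypothetical names.

```python
# (out_channels, in_channels, kernel_size) of each conv in regression_head.regressor
CONVS = [(128, 256, 3), (64, 128, 3), (32, 64, 3), (1, 32, 1)]


def head_params(convs):
    # weight out*in*k*k plus bias out, for every conv
    return sum(o * i * k * k + o for o, i, k in convs)


def trace(h, w, convs, upsample_blocks=3, scale=2):
    """Trace (C, H, W) through the head.

    ASSUMPTION: the first `upsample_blocks` convs are each followed by upsampling
    by `scale`, and 3x3 convs use padding 1 (size-preserving). Neither is visible
    in the weight listing, because padding and upsampling add no parameters.
    """
    c = convs[0][1]
    sizes = [(c, h, w)]
    for idx, (o, i, _k) in enumerate(convs):
        assert i == c, "channel chain must connect"
        c = o
        if idx < upsample_blocks:
            h, w = h * scale, w * scale
        sizes.append((c, h, w))
    return sizes


print(head_params(CONVS))        # prints: 387329
print(trace(64, 64, CONVS)[-1])  # prints: (1, 512, 512)
```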

Auxiliary heads

- aux_heads.0.regressor.0.layer.0.weight torch.Size([128, 256, 3, 3])

- aux_heads.0.regressor.0.layer.0.bias torch.Size([128])

- aux_heads.0.regressor.1.layer.0.weight torch.Size([64, 128, 3, 3])

- aux_heads.0.regressor.1.layer.0.bias torch.Size([64])

- aux_heads.0.regressor.2.layer.0.weight torch.Size([32, 64, 3, 3])

- aux_heads.0.regressor.2.layer.0.bias torch.Size([32])

- aux_heads.0.regressor.3.weight torch.Size([1, 32, 1, 1])

- aux_heads.0.regressor.3.bias torch.Size([1])

- aux_heads.1.regressor.0.layer.0.weight torch.Size([128, 256, 3, 3])

- aux_heads.1.regressor.0.layer.0.bias torch.Size([128])

- aux_heads.1.regressor.1.layer.0.weight torch.Size([64, 128, 3, 3])

- aux_heads.1.regressor.1.layer.0.bias torch.Size([64])

- aux_heads.1.regressor.2.layer.0.weight torch.Size([32, 64, 3, 3])

- aux_heads.1.regressor.2.layer.0.bias torch.Size([32])

- aux_heads.1.regressor.3.weight torch.Size([1, 32, 1, 1])

- aux_heads.1.regressor.3.bias torch.Size([1])
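The two auxiliary heads have exactly the regression head's architecture (774,658 parameters together), each with its own weights. Having two of them next to a three-layer `iterative_adaptation` fits a common deep-supervision pattern: the outputs of the first two iterations feed the auxiliary heads, the last feeds the main head, and every resulting density map is supervised during training. That pairing is an inference from the counts, not something the listing shows. The sketch below only makes the hypothetical pairing and a hypothetical loss sum explicit; `assign_heads`, `deep_supervision_loss` and the `aux_weight` knob are illustrative names.

```python
def assign_heads(num_iterations=3):
    """Hypothetical pairing: iteration t -> aux_heads.t, last iteration -> regression_head."""
    names = [f"aux_heads.{t}" for t in range(num_iterations - 1)]
    names.append("regression_head")
    return list(enumerate(names))


def deep_supervision_loss(per_head_losses, aux_weight=1.0):
    """Main-head loss plus weighted auxiliary losses (aux_weight is illustrative)."""
    *aux_losses, main_loss = per_head_losses
    return main_loss + aux_weight * sum(aux_losses)


# One head: 3x3 convs 256->128->64->32, then a 1x1 conv 32->1, all with biases.
HEAD_PARAMS = 387_329
print(assign_heads())
print(2 * HEAD_PARAMS)  # prints: 774658
print(deep_supervision_loss([1.0, 2.0, 3.0], aux_weight=0.5))  # prints: 4.5
```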

Total number of parameters in LOCA: 447974251

Total number of parameters in CCFF: 411099136 (this single module is huge: about 91.8% of LOCA's total)
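The two totals can be reconciled with the listing. Summing the listed shapes module by module reproduces LOCA's 447,974,251 exactly, which also indicates that the listing contains every parameter (so the backbone's BatchNorm layers contribute none). CCFF alone accounts for about 91.8% of the model, more than 16 times the ResNet-50 backbone. The compact sketch below counts parameters from layer sizes with small hypothetical helpers (`conv`, `linear`, `norm`, `attn`, `mlp`, `resnet50_without_bn`).

```python
def conv(o, i, k, bias=True):
    return o * i * k * k + (o if bias else 0)


def linear(o, i):
    return o * i + o  # weight + bias


def norm(c):
    return 2 * c  # weight + bias


def attn(e):
    return linear(3 * e, e) + linear(e, e)  # packed in_proj + out_proj


def mlp(e, ff):
    return linear(ff, e) + linear(e, ff)


def resnet50_without_bn():
    total = conv(64, 3, 7, bias=False)
    in_ch = 64
    for planes, blocks in [(64, 3), (128, 4), (256, 6), (512, 3)]:
        out_ch = 4 * planes
        for b in range(blocks):
            total += conv(planes, in_ch, 1, False) + conv(planes, planes, 3, False)
            total += conv(out_ch, planes, 1, False)
            if b == 0:
                total += conv(out_ch, in_ch, 1, False)  # downsample.0
            in_ch = out_ch
    return total + linear(1000, 2048)  # fc


C, E, FF = 3584, 256, 2048
head = conv(128, 256, 3) + conv(64, 128, 3) + conv(32, 64, 3) + conv(1, 32, 1)
modules = {
    "backbone": resnet50_without_bn(),
    "ccff": 2 * (conv(C, C, 1, False) + norm(C))
    + 3 * (conv(C, C, 3, False) + conv(C, C, 1, False) + 2 * norm(C)),
    "input_proj": conv(E, C, 1),
    "encoder": 3 * (2 * norm(E) + attn(E) + mlp(E, FF)) + norm(E),
    "ope.iterative_adaptation": 3 * (3 * norm(E) + 2 * attn(E) + mlp(E, FF)) + norm(E),
    "ope.shape_or_objectness": linear(64, 2) + linear(E, 64) + linear(9 * E, E),
    "regression_head": head,
    "aux_heads": 2 * head,
}
total = sum(modules.values())
ccff_share = modules["ccff"] / total
print(total)                # prints: 447974251
print(f"{ccff_share:.1%}")  # prints: 91.8%
```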

相关文章:

【扒网络架构】backbone、ccff

backbone CCFF 还不知道网络连接方式,只是知道了每一层 backbone backbone.backbone.conv1.weight torch.Size([64, 3, 7, 7])backbone.backbone.layer1.0.conv1.weight torch.Size([64, 64, 1, 1])backbone.backbone.layer1.0.conv2.weight torch.Size([64, 64,…...

linux进程

exit()函数正常结束进程 man ps aux 是在使用 ps 命令时常用的一个选项组合,用于显示系统中所有进程的详细信息。aux 不是 ps 命令的一个正式选项,而是三个选项的组合:a, u, 和 x。这三个选项分别代表不同的含义&#…...

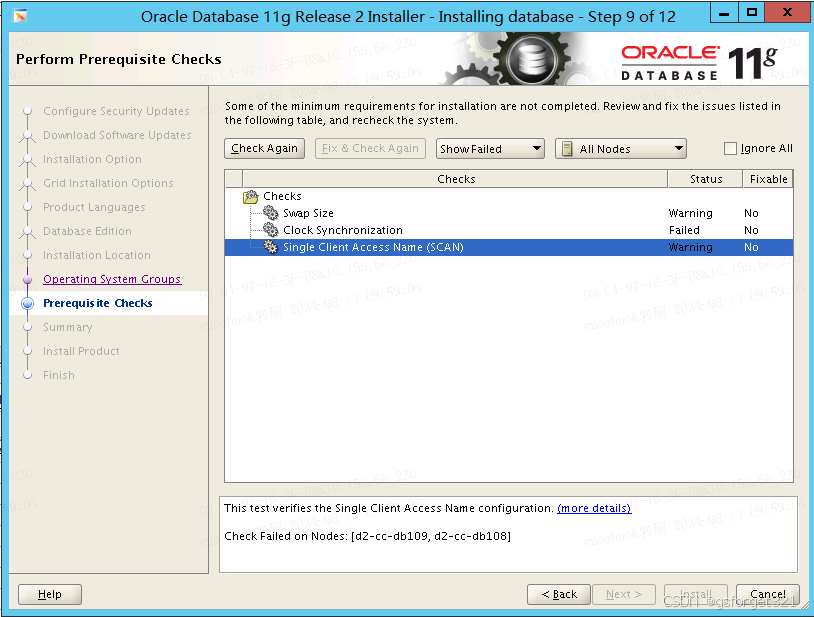

PRVF-4037 : CRS is not installed on any of the nodes

描述:公司要求替换centos,重新安装ORACLE LINUX RAC的数据库做备库,到时候切换成主库,安装Linux7GRID 19C 11G Oracle,顺利安装grid 19c,安装11G数据库软件的时候检测报如题错误:**PRVF-4037 …...

整理 酷炫 Flutter 开源UI框架 FAB

flutter_villains 灵活且易于使用的页面转换。 项目地址:https://github.com/Norbert515/flutter_villains 项目Demo:https://download.csdn.net/download/qq_36040764/89631324...

Unity 编写自己的aar库,接收Android广播(broadcastReceiver)并传递到Unity

编写本文是因为找了很多文章,都比较片段,不容易理解,对于Android新手来说理解起来不友好。我这里写了一个针对比较小白的文章,希望有所帮助。 Android端 首先还是先来写Android端,我们新建一个Android空项目…...

Mysql cast函数、cast用法、字符串转数字、字符串转日期、数据类型转换

文章目录 一、语法二、示例2.1、复杂示例 三、cast与convert的区别 CAST 函数是 SQL 中的一种类型转换函数,它用于将一个数据类型转换为另一个数据类型,这篇文章主要介绍了Mysql中Cast()函数的用法,需要的朋友可以参考下。 Mysql提供了两种将值转换成指…...

微信小程序开发之组件复用机制

新建复用文件,另外需要注册 behavior 例如: 在behavior.js文件中写入方法,并向外暴露出去 写法一: module.exportsBehavior({data: {num: 1},lifetimes: {created() {console.log(1);}} })写法二: const behavior …...

数据结构--线性表

数据结构分类 集合 线性结构(一对一) 树形结构(一对多) 图结构(多对多) 数据结构三要素 1、逻辑结构 2、数据的运算 3、存储结构(物理结构) 线性表分类 1、顺序表 2、链表 3、栈 4、队列 5、串 线性表--顺序表 顺序表的特点 顺序表的删除和插入…...

深入探针:PHP与DTrace的动态追踪艺术

标题:深入探针:PHP与DTrace的动态追踪艺术 在高性能的PHP应用开发中,深入理解代码的执行流程和性能瓶颈是至关重要的。DTrace,作为一种强大的动态追踪工具,为开发者提供了对PHP脚本运行时行为的深入洞察。本文将详细介…...

黑龙江日报报道第5届中国计算机应用技术大赛,赛氪提供赛事支持

2024年7月17日,黑龙江日报、极光新闻对在哈尔滨市举办的第5届中国计算机应用技术大赛全国总决赛进行了深入报道。此次大赛由中国计算机学会主办,中国计算机学会计算机应用专业委员会与赛氪网共同承办,吸引了来自全国各地的顶尖技术团队和选手…...

【计算机网络】LVS四层负载均衡器

https://mobian.blog.csdn.net/article/details/141093263 https://blog.csdn.net/weixin_42175752/article/details/139966198 《高并发的哲学原理》 (基本来自本书) 《亿级流量系统架构设计与实战》 LVS 章文嵩博士创造 LVS(IPVS) 章⽂嵩发…...

)

Java 守护线程练习 (2024.8.12)

DaemonExercise package DaemonExercise20240812;public class DaemonExercise {public static void main(String[] args) {// 守护线程// 当普通线程执行完毕之后,守护线程没有继续执行的必要,所以说会逐步关闭(并非瞬间关闭)//…...

C#小桌面程序调试出错,如何解决??

🏆本文收录于《CSDN问答解惑-专业版》专栏,主要记录项目实战过程中的Bug之前因后果及提供真实有效的解决方案,希望能够助你一臂之力,帮你早日登顶实现财富自由🚀;同时,欢迎大家关注&&收…...

Seatunnel Mysql数据同步到Mysql

环境 mysql-connector-java-8.0.28.jar、connector-cdc-mysql 配置 env {# You can set SeaTunnel environment configuration hereexecution.parallelism 2job.mode "STREAMING"# 10秒检查一次,可以适当加大这个值checkpoint.interval 10000#execu…...

Java Web —— 第五天(请求响应1)

postman Postman是一款功能强大的网页调试与发送网页HTTP请求的Chrome插件 作用:常用于进行接口测试 简单参数 原始方式 在原始的web程序中,获取请求参数,需要通过HttpServletRequest 对象手动获 http://localhost:8080/simpleParam?nameTom&a…...

【LLMOps】手摸手教你把 Dify 接入微信生态

作者:韩方圆 "Dify on WeChat"开源项目作者 概述 微信作为最热门即时通信软件,拥有巨大的流量。 微信友好的聊天窗口是天然的AI应用LUI(Language User Interface)/CUI(Conversation User Interface)。 微信不仅有个人微信,同时提供…...

Ftrans文件摆渡方案:重塑文件传输与管控的科技先锋

一、哪些行业会用到文件摆渡相关方案 文件摆渡相关的产品和方案通常用于需要在不同的网络、安全域、网段之间传输数据的场景,主要是一些有核心数据需要保护的行业,做了网络隔离和划分。以下是一些应用比较普遍的行业: 金融行业:…...

LaTeX中的除号表示方法详解

/除号 LaTeX中的除号表示方法详解1. 使用斜杠 / 表示除号优点缺点 2. 使用 \frac{} 表示分数形式的除法优点缺点 3. 使用 \div 表示标准除号优点缺点 4. 使用 \over 表示分数形式的除法优点缺点 5. 使用 \dfrac{} 和 \tfrac{} 表示大型和小型分数优点缺点 总结 LaTeX中的除号表…...

DID、DID文档、VC、VP分别是什么 有什么关系

DID(去中心化身份) 定义:DID 是一种去中心化的唯一标识符,用于表示个体、组织或设备的身份。DID 不依赖于中央管理机构,而是由去中心化网络(如区块链)生成和管理。 用途:DID 允许用…...

网络安全应急响应

前言\n在网络安全领域,有一句广为人知的话:“没有绝对的安全”。这意味着任何系统都有可能被攻破。安全攻击的发生并不可怕,可怕的是从头到尾都毫无察觉。当系统遭遇攻击时,企业的安全人员需要立即进行应急响应,以将影…...

日语AI面试高效通关秘籍:专业解读与青柚面试智能助攻

在如今就业市场竞争日益激烈的背景下,越来越多的求职者将目光投向了日本及中日双语岗位。但是,一场日语面试往往让许多人感到步履维艰。你是否也曾因为面试官抛出的“刁钻问题”而心生畏惧?面对生疏的日语交流环境,即便提前恶补了…...

关于iview组件中使用 table , 绑定序号分页后序号从1开始的解决方案

问题描述:iview使用table 中type: "index",分页之后 ,索引还是从1开始,试过绑定后台返回数据的id, 这种方法可行,就是后台返回数据的每个页面id都不完全是按照从1开始的升序,因此百度了下,找到了…...

关于 WASM:1. WASM 基础原理

一、WASM 简介 1.1 WebAssembly 是什么? WebAssembly(WASM) 是一种能在现代浏览器中高效运行的二进制指令格式,它不是传统的编程语言,而是一种 低级字节码格式,可由高级语言(如 C、C、Rust&am…...

JavaScript基础-API 和 Web API

在学习JavaScript的过程中,理解API(应用程序接口)和Web API的概念及其应用是非常重要的。这些工具极大地扩展了JavaScript的功能,使得开发者能够创建出功能丰富、交互性强的Web应用程序。本文将深入探讨JavaScript中的API与Web AP…...

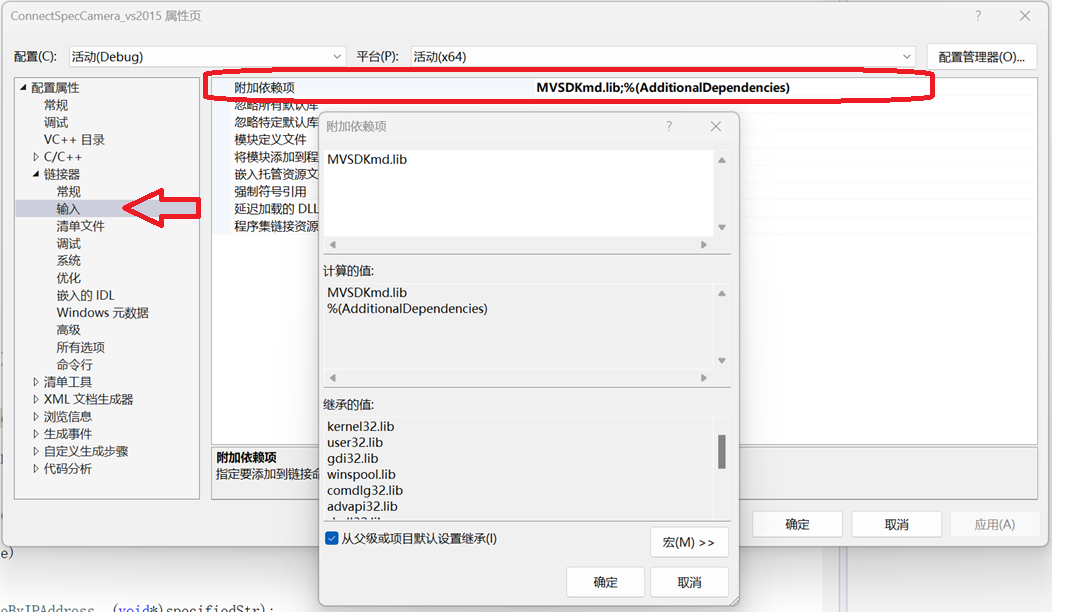

C/C++ 中附加包含目录、附加库目录与附加依赖项详解

在 C/C 编程的编译和链接过程中,附加包含目录、附加库目录和附加依赖项是三个至关重要的设置,它们相互配合,确保程序能够正确引用外部资源并顺利构建。虽然在学习过程中,这些概念容易让人混淆,但深入理解它们的作用和联…...

云原生周刊:k0s 成为 CNCF 沙箱项目

开源项目推荐 HAMi HAMi(原名 k8s‑vGPU‑scheduler)是一款 CNCF Sandbox 级别的开源 K8s 中间件,通过虚拟化 GPU/NPU 等异构设备并支持内存、计算核心时间片隔离及共享调度,为容器提供统一接口,实现细粒度资源配额…...

es6+和css3新增的特性有哪些

一:ECMAScript 新特性(ES6) ES6 (2015) - 革命性更新 1,记住的方法,从一个方法里面用到了哪些技术 1,let /const块级作用域声明2,**默认参数**:函数参数可以设置默认值。3&#x…...

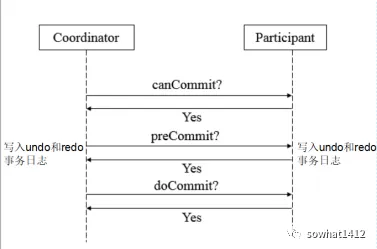

解析两阶段提交与三阶段提交的核心差异及MySQL实现方案

引言 在分布式系统的事务处理中,如何保障跨节点数据操作的一致性始终是核心挑战。经典的两阶段提交协议(2PC)通过准备阶段与提交阶段的协调机制,以同步决策模式确保事务原子性。其改进版本三阶段提交协议(3PC…...

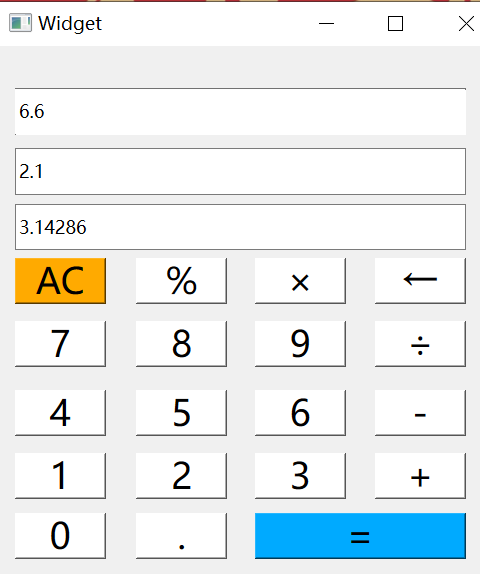

6.9-QT模拟计算器

源码: 头文件: widget.h #ifndef WIDGET_H #define WIDGET_H#include <QWidget> #include <QMouseEvent>QT_BEGIN_NAMESPACE namespace Ui { class Widget; } QT_END_NAMESPACEclass Widget : public QWidget {Q_OBJECTpublic:Widget(QWidget *parent nullptr);…...

【PX4飞控】mavros gps相关话题分析,经纬度海拔获取方法,卫星数锁定状态获取方法

使用 ROS1-Noetic 和 mavros v1.20.1, 携带经纬度海拔的话题主要有三个: /mavros/global_position/raw/fix/mavros/gpsstatus/gps1/raw/mavros/global_position/global 查看 mavros 源码,来分析他们的发布过程。发现前两个话题都对应了同一…...