[llama_factory] qwen2_vl training and batch inference

LLaMA-Factory config file for training

File: examples/train_lora/qwen2vl_lora_sft.yaml

### model

model_name_or_path: qwen2_vl/model_72b

trust_remote_code: true

### method

stage: sft

do_train: true

finetuning_type: lora

lora_target: all

### dataset

dataset: car_item # video: mllm_video_demo

template: qwen2_vl

cutoff_len: 2048

max_samples: 1000

overwrite_cache: true

preprocessing_num_workers: 16

### output

output_dir: saves/qwen2_vl-72b/lora/sft

logging_steps: 10

save_steps: 500

plot_loss: true

overwrite_output_dir: true

### train

per_device_train_batch_size: 1

gradient_accumulation_steps: 1

learning_rate: 1.0e-4

num_train_epochs: 25.0

lr_scheduler_type: cosine

warmup_ratio: 0.1

bf16: True

ddp_timeout: 180000000

deepspeed: examples/deepspeed/ds_z3_config.json

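# Pitfall: the default config file does not set the deepspeed parameter, so every GPU loads its own full copy of the 72B model in parallel and runs out of memory. After reading up on this, I set a DeepSpeed distributed-training config. There are five to choose from: for a large model pick the one that uses the least GPU memory; for a 7B model ds_z0_config.json is enough.

# ds_z0_config.json ds_z2_config.json ds_z2_offload_config.json ds_z3_config.json ds_z3_offload_config.json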

### eval

val_size: 0.1

per_device_eval_batch_size: 1

eval_strategy: steps

eval_steps: 500
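As a rough sanity check on the config above, the following sketch works out how many samples and optimizer steps these values imply. The GPU count (`num_gpus=8`) is an assumption that does not come from the original setup, and the split and rounding rules are simplified approximations, not LLaMA-Factory's exact logic.

```python
import math

def schedule(max_samples, val_size, per_device_bs, grad_accum,
             num_gpus, epochs, warmup_ratio):
    """Approximate the dataset split and step counts implied by the config."""
    eval_samples = int(max_samples * val_size)       # held out by val_size
    train_samples = max_samples - eval_samples
    effective_bs = per_device_bs * grad_accum * num_gpus
    steps_per_epoch = math.ceil(train_samples / effective_bs)
    total_steps = int(steps_per_epoch * epochs)
    warmup_steps = math.ceil(total_steps * warmup_ratio)
    return {
        "train_samples": train_samples,
        "eval_samples": eval_samples,
        "effective_batch_size": effective_bs,
        "steps_per_epoch": steps_per_epoch,
        "total_steps": total_steps,
        "warmup_steps": warmup_steps,
    }

# Values taken from the YAML above; num_gpus=8 is an assumed cluster size.
plan = schedule(max_samples=1000, val_size=0.1, per_device_bs=1,
                grad_accum=1, num_gpus=8, epochs=25.0, warmup_ratio=0.1)
for name, value in plan.items():
    print(f"{name}: {value}")
```

Under these assumptions the run has about 2825 optimizer steps, so save_steps: 500 and eval_steps: 500 would each fire roughly five times.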

llamafactory-cli train examples/train_lora/qwen2vl_lora_sft.yaml
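The pitfall noted in the config comment can be put into numbers. The sketch below is a back-of-the-envelope estimate that counts only bf16 weights (2 bytes per parameter) and ignores activations, LoRA optimizer state and framework overhead. The 8-GPU count is an assumption. Without parameter partitioning (ZeRO stage 0 or 2) every rank holds the full weights; ZeRO stage 3 shards them across ranks.

```python
def weight_gib_per_gpu(num_params, num_gpus, shard_params, bytes_per_param=2):
    """Estimate GiB of model weights held by each GPU (weights only)."""
    total_bytes = num_params * bytes_per_param
    per_gpu_bytes = total_bytes / num_gpus if shard_params else total_bytes
    return per_gpu_bytes / 2**30

# Assumed setup: 8 GPUs, bf16 weights.
replicated_72b = weight_gib_per_gpu(72e9, 8, shard_params=False)  # no ZeRO-3
sharded_72b = weight_gib_per_gpu(72e9, 8, shard_params=True)      # ZeRO-3
replicated_7b = weight_gib_per_gpu(7e9, 8, shard_params=False)

print(f"72B replicated: {replicated_72b:.1f} GiB per GPU")
print(f"72B ZeRO-3:     {sharded_72b:.1f} GiB per GPU")
print(f"7B replicated:  {replicated_7b:.1f} GiB per GPU")
```

A full 72B copy in bf16 is about 134 GiB, more than a single 80 GB card can hold, which matches the OOM described above. A 7B copy is about 13 GiB, which is why ds_z0_config.json is sufficient there.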

Batch inference

File: examples/train_lora/qwen2vl_lora_sft.yaml

### model

model_name_or_path: qwen2_vl/model_7b

trust_remote_code: true

# method

stage: sft

do_train: false

do_predict: true

predict_with_generate: true

finetuning_type: full

# dataset

eval_dataset: car_item # change to your test set

template: qwen2_vl

cutoff_len: 2048

max_samples: 200

overwrite_cache: true

preprocessing_num_workers: 16

# output

output_dir: saves/qwen2_vl-7b/lora/sft-infer-1 # change to your output path

logging_steps: 1

overwrite_output_dir: true

# eval

per_device_eval_batch_size: 4

# generation

max_new_tokens: 128

temperature: 0.1

top_k: 1
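With top_k: 1, sampling is effectively greedy: only the highest-scoring token survives the filter, so the temperature value no longer changes which token is picked. The standalone sketch below illustrates this in plain Python. It is not the transformers implementation, and the logits are made-up numbers.

```python
import math
import random

def sample_token(logits, temperature, top_k, rng):
    """Temperature-scale the logits, keep the top_k tokens, then sample one."""
    scaled = [x / temperature for x in logits]
    # Indices of the top_k highest-scoring tokens.
    keep = sorted(range(len(scaled)), key=lambda i: scaled[i], reverse=True)[:top_k]
    # Softmax weights over the kept tokens (shifted by the max for stability).
    peak = max(scaled[i] for i in keep)
    weights = [math.exp(scaled[i] - peak) for i in keep]
    return rng.choices(keep, weights=weights, k=1)[0]

logits = [1.2, 3.4, 0.5, 3.1]   # hypothetical scores for four tokens
rng = random.Random(0)

picks_low_temp = {sample_token(logits, 0.1, 1, rng) for _ in range(100)}
picks_high_temp = {sample_token(logits, 5.0, 1, rng) for _ in range(100)}
print(picks_low_temp, picks_high_temp)  # both contain only index 1
```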

```bash
llamafactory-cli train examples/train_lora/qwen2vl_lora_sft.yaml
```
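After the prediction run finishes, LLaMA-Factory typically writes the generations to a generated_predictions.jsonl file under output_dir. The reader below is a minimal sketch. The field names `predict` and `label` are assumptions that may differ between versions, so inspect one line of your own file first. `exact_match_rate` is a hypothetical helper, not part of LLaMA-Factory, and the demo rows are invented.

```python
import json
import tempfile
from pathlib import Path

def load_predictions(path):
    """Read a JSON Lines file into a list of dicts, skipping blank lines."""
    rows = []
    with Path(path).open(encoding="utf-8") as f:
        for line in f:
            line = line.strip()
            if line:
                rows.append(json.loads(line))
    return rows

def exact_match_rate(rows, pred_key="predict", label_key="label"):
    """Fraction of rows whose prediction equals the label after trimming."""
    if not rows:
        return 0.0
    hits = sum(r[pred_key].strip() == r[label_key].strip() for r in rows)
    return hits / len(rows)

# Demo on a tiny invented file; point load_predictions at your own output_dir instead.
with tempfile.TemporaryDirectory() as tmp:
    demo_path = Path(tmp) / "generated_predictions.jsonl"
    demo_path.write_text(
        '{"predict": "SUV", "label": "SUV"}\n'
        '{"predict": "sedan", "label": "SUV"}\n',
        encoding="utf-8",
    )
    demo_rows = load_predictions(demo_path)
    demo_rate = exact_match_rate(demo_rows)

print(demo_rate)  # 0.5
```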

# Summary

In short, if training runs out of GPU memory, configure distributed training with DeepSpeed.

For inference, set eval_dataset and the eval-related parameters.

The dataset also needs preprocessing: convert it to the standard LLaMA-Factory data format and register it in data/dataset_info.json:

{"identity": {"file_name": "identity.json"},"alpaca_en_demo": {"file_name": "alpaca_en_demo.json"},"alpaca_zh_demo": {"file_name": "alpaca_zh_demo.json"},"glaive_toolcall_en_demo": {"file_name": "glaive_toolcall_en_demo.json","formatting": "sharegpt","columns": {"messages": "conversations","tools": "tools"}},"glaive_toolcall_zh_demo": {"file_name": "glaive_toolcall_zh_demo.json","formatting": "sharegpt","columns": {"messages": "conversations","tools": "tools"}},"car_item": {"file_name": "car_item/train.json","columns": {"images": "image","prompt": "instruction","query": "input","response": "output"}},"mllm_demo": {"file_name": "mllm_demo.json","formatting": "sharegpt","columns": {"messages": "messages","images": "images"},"tags": {"role_tag": "role","content_tag": "content","user_tag": "user","assistant_tag": "assistant"}},"mllm_video_demo": {"file_name": "mllm_video_demo.json","formatting": "sharegpt","columns": {"messages": "messages","videos": "videos"},"tags": {"role_tag": "role","content_tag": "content","user_tag": "user","assistant_tag": "assistant"}},"alpaca_en": {"hf_hub_url": "llamafactory/alpaca_en","ms_hub_url": "llamafactory/alpaca_en","om_hub_url": "HaM/alpaca_en"},"alpaca_zh": {"hf_hub_url": "llamafactory/alpaca_zh","ms_hub_url": "llamafactory/alpaca_zh"},"alpaca_gpt4_en": {"hf_hub_url": "llamafactory/alpaca_gpt4_en","ms_hub_url": "llamafactory/alpaca_gpt4_en"},"alpaca_gpt4_zh": {"hf_hub_url": "llamafactory/alpaca_gpt4_zh","ms_hub_url": "llamafactory/alpaca_gpt4_zh","om_hub_url": "State_Cloud/alpaca-gpt4-data-zh"},"glaive_toolcall_en": {"hf_hub_url": "llamafactory/glaive_toolcall_en","formatting": "sharegpt","columns": {"messages": "conversations","tools": "tools"}},"glaive_toolcall_zh": {"hf_hub_url": "llamafactory/glaive_toolcall_zh","formatting": "sharegpt","columns": {"messages": "conversations","tools": "tools"}},"lima": {"hf_hub_url": "llamafactory/lima","formatting": "sharegpt"},"guanaco": {"hf_hub_url": 
"JosephusCheung/GuanacoDataset","ms_hub_url": "AI-ModelScope/GuanacoDataset"},"belle_2m": {"hf_hub_url": "BelleGroup/train_2M_CN","ms_hub_url": "AI-ModelScope/train_2M_CN"},"belle_1m": {"hf_hub_url": "BelleGroup/train_1M_CN","ms_hub_url": "AI-ModelScope/train_1M_CN"},"belle_0.5m": {"hf_hub_url": "BelleGroup/train_0.5M_CN","ms_hub_url": "AI-ModelScope/train_0.5M_CN"},"belle_dialog": {"hf_hub_url": "BelleGroup/generated_chat_0.4M","ms_hub_url": "AI-ModelScope/generated_chat_0.4M"},"belle_math": {"hf_hub_url": "BelleGroup/school_math_0.25M","ms_hub_url": "AI-ModelScope/school_math_0.25M"},"belle_multiturn": {"script_url": "belle_multiturn","formatting": "sharegpt"},"ultra_chat": {"script_url": "ultra_chat","formatting": "sharegpt"},"open_platypus": {"hf_hub_url": "garage-bAInd/Open-Platypus","ms_hub_url": "AI-ModelScope/Open-Platypus"},"codealpaca": {"hf_hub_url": "sahil2801/CodeAlpaca-20k","ms_hub_url": "AI-ModelScope/CodeAlpaca-20k"},"alpaca_cot": {"hf_hub_url": "QingyiSi/Alpaca-CoT","ms_hub_url": "AI-ModelScope/Alpaca-CoT"},"openorca": {"hf_hub_url": "Open-Orca/OpenOrca","ms_hub_url": "AI-ModelScope/OpenOrca","columns": {"prompt": "question","response": "response","system": "system_prompt"}},"slimorca": {"hf_hub_url": "Open-Orca/SlimOrca","formatting": "sharegpt"},"mathinstruct": {"hf_hub_url": "TIGER-Lab/MathInstruct","ms_hub_url": "AI-ModelScope/MathInstruct","columns": {"prompt": "instruction","response": "output"}},"firefly": {"hf_hub_url": "YeungNLP/firefly-train-1.1M","columns": {"prompt": "input","response": "target"}},"wikiqa": {"hf_hub_url": "wiki_qa","columns": {"prompt": "question","response": "answer"}},"webqa": {"hf_hub_url": "suolyer/webqa","ms_hub_url": "AI-ModelScope/webqa","columns": {"prompt": "input","response": "output"}},"webnovel": {"hf_hub_url": "zxbsmk/webnovel_cn","ms_hub_url": "AI-ModelScope/webnovel_cn"},"nectar_sft": {"hf_hub_url": "AstraMindAI/SFT-Nectar","ms_hub_url": "AI-ModelScope/SFT-Nectar"},"deepctrl": {"ms_hub_url": 
"deepctrl/deepctrl-sft-data"},"adgen_train": {"hf_hub_url": "HasturOfficial/adgen","ms_hub_url": "AI-ModelScope/adgen","split": "train","columns": {"prompt": "content","response": "summary"}},"adgen_eval": {"hf_hub_url": "HasturOfficial/adgen","ms_hub_url": "AI-ModelScope/adgen","split": "validation","columns": {"prompt": "content","response": "summary"}},"sharegpt_hyper": {"hf_hub_url": "totally-not-an-llm/sharegpt-hyperfiltered-3k","formatting": "sharegpt"},"sharegpt4": {"hf_hub_url": "shibing624/sharegpt_gpt4","ms_hub_url": "AI-ModelScope/sharegpt_gpt4","formatting": "sharegpt"},"ultrachat_200k": {"hf_hub_url": "HuggingFaceH4/ultrachat_200k","ms_hub_url": "AI-ModelScope/ultrachat_200k","formatting": "sharegpt","columns": {"messages": "messages"},"tags": {"role_tag": "role","content_tag": "content","user_tag": "user","assistant_tag": "assistant"}},"agent_instruct": {"hf_hub_url": "THUDM/AgentInstruct","ms_hub_url": "ZhipuAI/AgentInstruct","formatting": "sharegpt"},"lmsys_chat": {"hf_hub_url": "lmsys/lmsys-chat-1m","ms_hub_url": "AI-ModelScope/lmsys-chat-1m","formatting": "sharegpt","columns": {"messages": "conversation"},"tags": {"role_tag": "role","content_tag": "content","user_tag": "human","assistant_tag": "assistant"}},"evol_instruct": {"hf_hub_url": "WizardLM/WizardLM_evol_instruct_V2_196k","ms_hub_url": "AI-ModelScope/WizardLM_evol_instruct_V2_196k","formatting": "sharegpt"},"glaive_toolcall_100k": {"hf_hub_url": "hiyouga/glaive-function-calling-v2-sharegpt","formatting": "sharegpt","columns": {"messages": "conversations","tools": "tools"}},"cosmopedia": {"hf_hub_url": "HuggingFaceTB/cosmopedia","columns": {"prompt": "prompt","response": "text"}},"stem_zh": {"hf_hub_url": "hfl/stem_zh_instruction"},"ruozhiba_gpt4": {"hf_hub_url": "hfl/ruozhiba_gpt4_turbo"},"neo_sft": {"hf_hub_url": "m-a-p/neo_sft_phase2","formatting": "sharegpt"},"magpie_pro_300k": {"hf_hub_url": "Magpie-Align/Magpie-Pro-300K-Filtered","formatting": "sharegpt"},"magpie_ultra": 
{"hf_hub_url": "argilla/magpie-ultra-v0.1","columns": {"prompt": "instruction","response": "response"}},"web_instruct": {"hf_hub_url": "TIGER-Lab/WebInstructSub","columns": {"prompt": "question","response": "answer"}},"openo1_sft": {"hf_hub_url": "llamafactory/OpenO1-SFT","ms_hub_url": "llamafactory/OpenO1-SFT","columns": {"prompt": "prompt","response": "response"}},"llava_1k_en": {"hf_hub_url": "BUAADreamer/llava-en-zh-2k","subset": "en","formatting": "sharegpt","columns": {"messages": "messages","images": "images"},"tags": {"role_tag": "role","content_tag": "content","user_tag": "user","assistant_tag": "assistant"}},"llava_1k_zh": {"hf_hub_url": "BUAADreamer/llava-en-zh-2k","subset": "zh","formatting": "sharegpt","columns": {"messages": "messages","images": "images"},"tags": {"role_tag": "role","content_tag": "content","user_tag": "user","assistant_tag": "assistant"}},"llava_150k_en": {"hf_hub_url": "BUAADreamer/llava-en-zh-300k","subset": "en","formatting": "sharegpt","columns": {"messages": "messages","images": "images"},"tags": {"role_tag": "role","content_tag": "content","user_tag": "user","assistant_tag": "assistant"}},"llava_150k_zh": {"hf_hub_url": "BUAADreamer/llava-en-zh-300k","subset": "zh","formatting": "sharegpt","columns": {"messages": "messages","images": "images"},"tags": {"role_tag": "role","content_tag": "content","user_tag": "user","assistant_tag": "assistant"}},"pokemon_cap": {"hf_hub_url": "llamafactory/pokemon-gpt4o-captions","formatting": "sharegpt","columns": {"messages": "conversations","images": "images"}},"mllm_pt_demo": {"hf_hub_url": "BUAADreamer/mllm_pt_demo","formatting": "sharegpt","columns": {"messages": "messages","images": "images"},"tags": {"role_tag": "role","content_tag": "content","user_tag": "user","assistant_tag": "assistant"}},"oasst_de": {"hf_hub_url": "mayflowergmbh/oasst_de"},"dolly_15k_de": {"hf_hub_url": "mayflowergmbh/dolly-15k_de"},"alpaca-gpt4_de": {"hf_hub_url": 
"mayflowergmbh/alpaca-gpt4_de"},"openschnabeltier_de": {"hf_hub_url": "mayflowergmbh/openschnabeltier_de"},"evol_instruct_de": {"hf_hub_url": "mayflowergmbh/evol-instruct_de"},"dolphin_de": {"hf_hub_url": "mayflowergmbh/dolphin_de"},"booksum_de": {"hf_hub_url": "mayflowergmbh/booksum_de"},"airoboros_de": {"hf_hub_url": "mayflowergmbh/airoboros-3.0_de"},"ultrachat_de": {"hf_hub_url": "mayflowergmbh/ultra-chat_de"},"dpo_en_demo": {"file_name": "dpo_en_demo.json","ranking": true,"formatting": "sharegpt","columns": {"messages": "conversations","chosen": "chosen","rejected": "rejected"}},"dpo_zh_demo": {"file_name": "dpo_zh_demo.json","ranking": true,"formatting": "sharegpt","columns": {"messages": "conversations","chosen": "chosen","rejected": "rejected"}},"dpo_mix_en": {"hf_hub_url": "llamafactory/DPO-En-Zh-20k","subset": "en","ranking": true,"formatting": "sharegpt","columns": {"messages": "conversations","chosen": "chosen","rejected": "rejected"}},"dpo_mix_zh": {"hf_hub_url": "llamafactory/DPO-En-Zh-20k","subset": "zh","ranking": true,"formatting": "sharegpt","columns": {"messages": "conversations","chosen": "chosen","rejected": "rejected"}},"ultrafeedback": {"hf_hub_url": "llamafactory/ultrafeedback_binarized","ms_hub_url": "llamafactory/ultrafeedback_binarized","ranking": true,"columns": {"prompt": "instruction","chosen": "chosen","rejected": "rejected"}},"rlhf_v": {"hf_hub_url": "llamafactory/RLHF-V","ranking": true,"formatting": "sharegpt","columns": {"messages": "conversations","chosen": "chosen","rejected": "rejected","images": "images"}},"vlfeedback": {"hf_hub_url": "Zhihui/VLFeedback","ranking": true,"formatting": "sharegpt","columns": {"messages": "conversations","chosen": "chosen","rejected": "rejected","images": "images"}},"orca_pairs": {"hf_hub_url": "Intel/orca_dpo_pairs","ranking": true,"columns": {"prompt": "question","chosen": "chosen","rejected": "rejected","system": "system"}},"hh_rlhf_en": {"script_url": "hh_rlhf_en","ranking": true,"columns": 
{"prompt": "instruction","chosen": "chosen","rejected": "rejected","history": "history"}},"nectar_rm": {"hf_hub_url": "AstraMindAI/RLAIF-Nectar","ms_hub_url": "AI-ModelScope/RLAIF-Nectar","ranking": true},"orca_dpo_de": {"hf_hub_url": "mayflowergmbh/intel_orca_dpo_pairs_de","ranking": true},"kto_en_demo": {"file_name": "kto_en_demo.json","formatting": "sharegpt","columns": {"messages": "messages","kto_tag": "label"},"tags": {"role_tag": "role","content_tag": "content","user_tag": "user","assistant_tag": "assistant"}},"kto_mix_en": {"hf_hub_url": "argilla/kto-mix-15k","formatting": "sharegpt","columns": {"messages": "completion","kto_tag": "label"},"tags": {"role_tag": "role","content_tag": "content","user_tag": "user","assistant_tag": "assistant"}},"ultrafeedback_kto": {"hf_hub_url": "argilla/ultrafeedback-binarized-preferences-cleaned-kto","ms_hub_url": "AI-ModelScope/ultrafeedback-binarized-preferences-cleaned-kto","columns": {"prompt": "prompt","response": "completion","kto_tag": "label"}},"wiki_demo": {"file_name": "wiki_demo.txt","columns": {"prompt": "text"}},"c4_demo": {"file_name": "c4_demo.json","columns": {"prompt": "text"}},"refinedweb": {"hf_hub_url": "tiiuae/falcon-refinedweb","columns": {"prompt": "content"}},"redpajama_v2": {"hf_hub_url": "togethercomputer/RedPajama-Data-V2","columns": {"prompt": "raw_content"},"subset": "default"},"wikipedia_en": {"hf_hub_url": "olm/olm-wikipedia-20221220","ms_hub_url": "AI-ModelScope/olm-wikipedia-20221220","columns": {"prompt": "text"}},"wikipedia_zh": {"hf_hub_url": "pleisto/wikipedia-cn-20230720-filtered","ms_hub_url": "AI-ModelScope/wikipedia-cn-20230720-filtered","columns": {"prompt": "completion"}},"pile": {"hf_hub_url": "monology/pile-uncopyrighted","ms_hub_url": "AI-ModelScope/pile","columns": {"prompt": "text"}},"skypile": {"hf_hub_url": "Skywork/SkyPile-150B","ms_hub_url": "AI-ModelScope/SkyPile-150B","columns": {"prompt": "text"}},"fineweb": {"hf_hub_url": "HuggingFaceFW/fineweb","columns": {"prompt": 
"text"}},"fineweb_edu": {"hf_hub_url": "HuggingFaceFW/fineweb-edu","columns": {"prompt": "text"}},"the_stack": {"hf_hub_url": "bigcode/the-stack","ms_hub_url": "AI-ModelScope/the-stack","columns": {"prompt": "content"}},"starcoder_python": {"hf_hub_url": "bigcode/starcoderdata","ms_hub_url": "AI-ModelScope/starcoderdata","columns": {"prompt": "content"},"folder": "python"}

}
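The car_item entry above maps LLaMA-Factory's logical columns to the keys used in car_item/train.json: images to image, prompt to instruction, query to input, response to output. A minimal sketch of producing records in that shape follows. The sample text, the image path and both helper functions are hypothetical. The `<image>` placeholder in the instruction follows the convention of the bundled multimodal demo data and should be treated as an assumption for your version.

```python
import json

# Column mapping copied from the car_item entry in data/dataset_info.json.
CAR_ITEM_COLUMNS = {
    "images": "image",
    "prompt": "instruction",
    "query": "input",
    "response": "output",
}

def make_record(image_path, instruction, answer, extra_input=""):
    """Build one training sample using the keys named in the mapping."""
    return {
        "image": [image_path],        # assumed: a list of image paths
        "instruction": instruction,
        "input": extra_input,
        "output": answer,
    }

def missing_keys(record, columns=CAR_ITEM_COLUMNS):
    """Return the mapped keys that a record does not contain."""
    return [key for key in columns.values() if key not in record]

# Invented sample; replace with your own data.
records = [
    make_record("car_item/images/0001.jpg",
                "<image>Which car part is shown in this picture?",
                "front bumper"),
]
problems = [missing_keys(r) for r in records]
train_json = json.dumps(records, ensure_ascii=False, indent=2)
print(train_json)
```

Writing `train_json` to data/car_item/train.json would give a file whose keys line up with the registered columns.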

相关文章:

【llama_factory】qwen2_vl训练与批量推理

训练llama factory配置文件 文件:examples/train_lora/qwen2vl_lora_sft.yaml ### model model_name_or_path: qwen2_vl/model_72b trust_remote_code: true### method stage: sft do_train: true finetuning_type: lora lora_target: all### dataset dataset: ca…...

wpa_cli命令使用记录

wpa_cli可以用于查询当前状态、更改配置、触发事件和请求交互式用户输入。具体来说,它可以显示当前的认证状态、选择的安全模式、dot11和dot1x MIB等,并可以配置一些变量,如EAPOL状态机参数。此外,wpa_cli还可以触发重新关联和IEE…...

【Uniapp-Vue3】页面生命周期onLoad和onReady

一、onLoad函数 onLoad在页面载入时触发,多用于页面跳转时进行参数传递。 我们在跳转的时候传递参数name和age: 接受参数: import {onLoad} from "dcloudio/uni-app"; onLoad((e)>{...}) 二、onReady函数 页面生命周期函数中的onReady其…...

《C++11》并发库:简介与应用

在C11之前,C并没有提供原生的并发支持。开发者通常需要依赖于操作系统的API(如Windows的CreateThread或POSIX的pthread_create)或者第三方库(如Boost.Thread)来创建和管理线程。这些方式存在以下几个问题: …...

LeetCode - #183 Swift 实现查询未下订单的客户

网罗开发 (小红书、快手、视频号同名) 大家好,我是 展菲,目前在上市企业从事人工智能项目研发管理工作,平时热衷于分享各种编程领域的软硬技能知识以及前沿技术,包括iOS、前端、Harmony OS、Java、Python等…...

HTML拖拽功能(纯html5+JS实现)

1、HTML拖拽--单元行拖动 <!DOCTYPE html> <html lang"en"><head><meta charset"UTF-8"><meta name"viewport" content"widthdevice-width, initial-scale1.0"><title>Document</title><…...

mysql 等保处理,设置wait_timeout引发的问题

👨⚕ 主页: gis分享者 👨⚕ 感谢各位大佬 点赞👍 收藏⭐ 留言📝 加关注✅! 👨⚕ 收录于专栏:运维工程师 文章目录 前言问题处理 前言 系统部署完成后,客户需要做二级等保&…...

7.STM32F407ZGT6-RTC

参考: 1.正点原子 前言: RTC实时时钟是很基本的外设,用来记录绝对时间。做个总结,达到: 1.学习RTC的原理和概念。 2.通过STM32CubeMX快速配置RTC。 27.1 RTC 时钟简介 STM32F407 的实时时钟(RTC…...

重写(补充)

大家好,今天我们把剩下一点重写内容说完,来看。 [重写的设计规则] 对于已经投入使用的类,尽量不要进行修政 ,最好的方式是:重新定义一个新的类,来重复利用其中共性的内容 我们不该在原来的类上进行修改,因为原来的类,可能还有用…...

30分钟内搭建一个全能轻量级springboot 3.4 + 脚手架 <3>5分钟集成好druid并使用druid自带监控工具监控sql请求

快速导航 快速导航 <1> 5分钟快速创建一个springboot web项目 <2> 5分钟集成好最新版本的开源swagger ui,并使用ui操作调用接口 <3> 5分钟集成好druid并使用druid自带监控工具监控sql请求 <4> 5分钟集成好mybatisplus并使用mybatisplus g…...

【C#深度学习之路】如何使用C#实现Yolo8/11 Segment 全尺寸模型的训练和推理

【C#深度学习之路】如何使用C#实现Yolo8/11 Segment 全尺寸模型的训练和推理 项目背景项目实现推理过程训练过程 项目展望写在最后项目下载链接 本文为原创文章,若需要转载,请注明出处。 原文地址:https://blog.csdn.net/qq_30270773/article…...

Oracle 分区索引简介

目录 一. 什么是分区索引二. 分区索引的种类2.1 局部分区索引(Local Partitioned Index)2.2 全局分区索引(Global Partitioned Index) 三. 分区索引的创建四. 分区索引查看4.1 USER_IND_COLUMNS 表4.2 USER_INDEXES 表 五. 分区索…...

【科技赋能未来】NDT2025第三届新能源数字科技大会全面启动!

随着我国碳达峰目标、碳中和目标的提出,以及经济社会的发展进步,以风电、光伏发电为代表的新能源行业迎来巨大发展机遇,成为未来绿色经济发展的主要趋势和方向。 此外,数字化技术的不断发展和创新,其在新能源领域的应…...

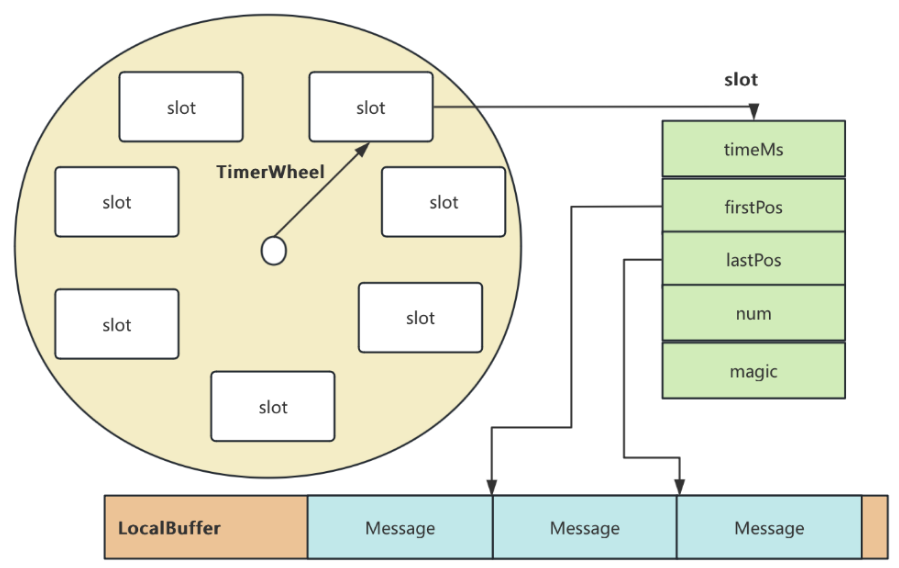

Broker收到消息之后如何存储

1.前言 此文章是在儒猿课程中的学习笔记,感兴趣的想看原来的课程可以去咨询儒猿课堂《从0开始带你成为RocketMQ高手》,我本人觉得这个作者还是不错,都是从场景来进行分析,感觉还是挺适合我这种小白的。这块主要都是我自己的学习笔…...

Mysql--实战篇--SQL优化(查询优化器,常用的SQL优化方法,执行计划EXPLAIN,Mysql性能调优,慢日志开启和分析等)

一、查询优化 1、查询优化器 (Query Optimizer) MySQL查询优化器(Query Optimizer)是MySQL数据库管理系统中的一个关键组件,负责分析和选择最有效的执行计划来执行SQL查询。查询优化器的目标是尽可能减少查询的执行时间和资源消耗ÿ…...

BERT与CNN结合实现糖尿病相关医学问题多分类模型

完整源码项目包获取→点击文章末尾名片! 使用HuggingFace开发的Transformers库,使用BERT模型实现中文文本分类(二分类或多分类) 首先直接利用transformer.models.bert.BertForSequenceClassification()实现文本分类 然后手动实现B…...

rabbitmqp安装延迟队列

在RabbitMQ中,延迟队列是一种特殊的队列类型。当消息被发送到此类队列后,不会立即投递给消费者,而是会等待预设的一段时间,待延迟期满后才进行投递。这种队列在多种场景下都极具价值,比如可用于处理需要在特定时间触发…...

深入探讨DICOM医学影像中的MPPS服务及其具体实现

深入探讨DICOM医学影像中的MPPS服务及其具体实现 1. 引言 在医疗影像的管理和传输过程中,DICOM(数字影像和通信医学)标准发挥着至关重要的作用。除了DICOM影像的存储和传输(如影像存储SCP和影像传输SCP),…...

集合帖:区间问题

一、AcWing 803:区间合并 (1)题目来源:https://www.acwing.com/problem/content/805/ (2)算法代码:https://blog.csdn.net/hnjzsyjyj/article/details/145067059 #include <bits/stdc.h>…...

C#,入门教程(27)——应用程序(Application)的基础知识

上一篇: C#,入门教程(26)——数据的基本概念与使用方法https://blog.csdn.net/beijinghorn/article/details/124952589 一、什么是应用程序 Application? 应用程序是编程的结果。一般把代码经过编译(等)过程&#…...

结构体的进阶应用)

基于算法竞赛的c++编程(28)结构体的进阶应用

结构体的嵌套与复杂数据组织 在C中,结构体可以嵌套使用,形成更复杂的数据结构。例如,可以通过嵌套结构体描述多层级数据关系: struct Address {string city;string street;int zipCode; };struct Employee {string name;int id;…...

RocketMQ延迟消息机制

两种延迟消息 RocketMQ中提供了两种延迟消息机制 指定固定的延迟级别 通过在Message中设定一个MessageDelayLevel参数,对应18个预设的延迟级别指定时间点的延迟级别 通过在Message中设定一个DeliverTimeMS指定一个Long类型表示的具体时间点。到了时间点后…...

Linux相关概念和易错知识点(42)(TCP的连接管理、可靠性、面临复杂网络的处理)

目录 1.TCP的连接管理机制(1)三次握手①握手过程②对握手过程的理解 (2)四次挥手(3)握手和挥手的触发(4)状态切换①挥手过程中状态的切换②握手过程中状态的切换 2.TCP的可靠性&…...

1688商品列表API与其他数据源的对接思路

将1688商品列表API与其他数据源对接时,需结合业务场景设计数据流转链路,重点关注数据格式兼容性、接口调用频率控制及数据一致性维护。以下是具体对接思路及关键技术点: 一、核心对接场景与目标 商品数据同步 场景:将1688商品信息…...

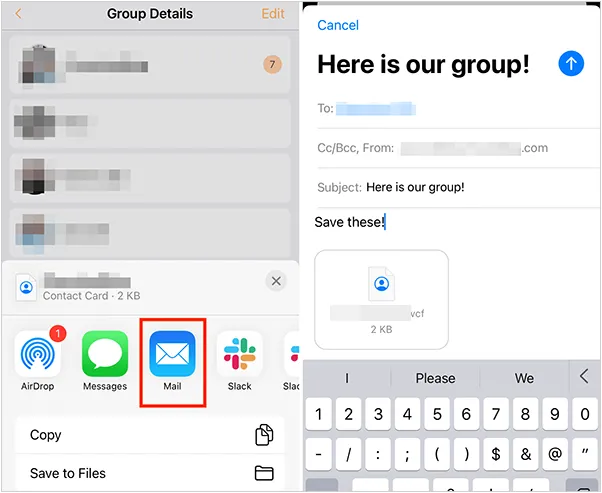

如何将联系人从 iPhone 转移到 Android

从 iPhone 换到 Android 手机时,你可能需要保留重要的数据,例如通讯录。好在,将通讯录从 iPhone 转移到 Android 手机非常简单,你可以从本文中学习 6 种可靠的方法,确保随时保持连接,不错过任何信息。 第 1…...

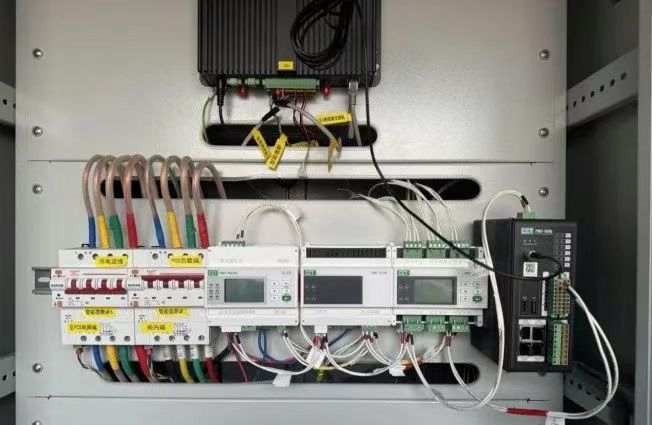

IT供电系统绝缘监测及故障定位解决方案

随着新能源的快速发展,光伏电站、储能系统及充电设备已广泛应用于现代能源网络。在光伏领域,IT供电系统凭借其持续供电性好、安全性高等优势成为光伏首选,但在长期运行中,例如老化、潮湿、隐裂、机械损伤等问题会影响光伏板绝缘层…...

【Java学习笔记】BigInteger 和 BigDecimal 类

BigInteger 和 BigDecimal 类 二者共有的常见方法 方法功能add加subtract减multiply乘divide除 注意点:传参类型必须是类对象 一、BigInteger 1. 作用:适合保存比较大的整型数 2. 使用说明 创建BigInteger对象 传入字符串 3. 代码示例 import j…...

2025季度云服务器排行榜

在全球云服务器市场,各厂商的排名和地位并非一成不变,而是由其独特的优势、战略布局和市场适应性共同决定的。以下是根据2025年市场趋势,对主要云服务器厂商在排行榜中占据重要位置的原因和优势进行深度分析: 一、全球“三巨头”…...

return this;返回的是谁

一个审批系统的示例来演示责任链模式的实现。假设公司需要处理不同金额的采购申请,不同级别的经理有不同的审批权限: // 抽象处理者:审批者 abstract class Approver {protected Approver successor; // 下一个处理者// 设置下一个处理者pub…...

IP如何挑?2025年海外专线IP如何购买?

你花了时间和预算买了IP,结果IP质量不佳,项目效率低下不说,还可能带来莫名的网络问题,是不是太闹心了?尤其是在面对海外专线IP时,到底怎么才能买到适合自己的呢?所以,挑IP绝对是个技…...