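Annotating Pose Keypoints in Video with MediaPipe (Basic and Advanced Versions)

Preface

Sign-language video recognition broadly follows one of two approaches: feed the raw video directly into a network, or first extract keypoints and feed those into the network. This article covers the second approach: how to use MediaPipe to annotate keypoints on sign-language videos.

Code

If you just want the code, here it is. You will need to set up the environment yourself (the scripts import cv2, numpy and mediapipe); I no longer remember the exact versions I used.

Basic code

This part implements the main functionality. The later versions are modifications of it.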

```python
import os

import cv2
import numpy as np
import mediapipe as mp

# Keypoint selection
filtered_hand = list(range(21))            # all 21 hand landmarks
filtered_pose = [11, 12, 13, 14, 15, 16]   # keep only shoulders, elbows and wrists

HAND_NUM = len(filtered_hand)
POSE_NUM = len(filtered_pose)

# Initialize the MediaPipe models
mp_hands = mp.solutions.hands
mp_pose = mp.solutions.pose

hands = mp_hands.Hands(
    static_image_mode=False,
    max_num_hands=2,
    # Too high: uncertain detections are thrown away. A low value detects more
    # (low works well when the background has no distractions).
    min_detection_confidence=0.1,
    # Too high: tracking is abandoned easily. A low value gives better continuity.
    min_tracking_confidence=0.1,
)

pose = mp_pose.Pose(
    static_image_mode=False,
    model_complexity=1,
    min_detection_confidence=0.7,
    min_tracking_confidence=0.5,
)


def get_frame_landmarks(frame):
    """Return the keypoints of one RGB frame as a (HAND_NUM * 2 + POSE_NUM, 3) array."""
    all_landmarks = np.full((HAND_NUM * 2 + POSE_NUM, 3), np.nan)  # NaN = not detected

    # Hands and pose run one after the other (not in threads) so the data stays reliable.
    results_hands = hands.process(frame)
    if results_hands.multi_hand_landmarks:
        for i, hand_landmarks in enumerate(results_hands.multi_hand_landmarks[:2]):  # at most two hands
            hand_type = results_hands.multi_handedness[i].classification[0].index
            points = np.array([(lm.x, lm.y, lm.z) for lm in hand_landmarks.landmark])
            if hand_type == 0:  # stored as the right hand
                all_landmarks[:HAND_NUM] = points
            else:               # stored as the left hand
                all_landmarks[HAND_NUM:HAND_NUM * 2] = points

    results_pose = pose.process(frame)
    if results_pose.pose_landmarks:
        pose_points = np.array([(lm.x, lm.y, lm.z) for lm in results_pose.pose_landmarks.landmark])
        all_landmarks[HAND_NUM * 2:HAND_NUM * 2 + POSE_NUM] = pose_points[filtered_pose]

    return all_landmarks


def get_video_landmarks(video_path, start_frame=0, end_frame=-1):
    """Return the keypoints of every frame in which something was detected.

    start_frame and end_frame are 0-based frame indices. Frames with no detection
    at all are skipped, so row k of the result is not guaranteed to be frame k.
    """
    cap = cv2.VideoCapture(video_path)
    if not cap.isOpened():
        print(f"Cannot open video file: {video_path}")
        return np.empty((0, HAND_NUM * 2 + POSE_NUM, 3))

    total_frames = int(cap.get(cv2.CAP_PROP_FRAME_COUNT))
    if end_frame < 0 or end_frame > total_frames:
        end_frame = total_frames

    valid_frames = []
    frame_index = 0
    while cap.isOpened():
        ret, frame = cap.read()
        if not ret or frame_index > end_frame:
            break
        if frame_index >= start_frame:
            frame_rgb = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)  # OpenCV reads BGR
            landmarks = get_frame_landmarks(frame_rgb)
            # Keep the frame only if at least one keypoint was detected
            if not np.all(np.isnan(landmarks)):
                valid_frames.append(landmarks)
            else:
                print(f"Frame {frame_index}: no keypoints detected")
        frame_index += 1
    cap.release()

    if not valid_frames:
        print("Warning: no keypoints detected in the whole video")
        return np.empty((0, HAND_NUM * 2 + POSE_NUM, 3))
    return np.stack(valid_frames)


def draw_landmarks(video_path, output_path, landmarks):
    """Write a copy of the video with the keypoints drawn on it."""
    cap = cv2.VideoCapture(video_path)
    if not cap.isOpened():
        print(f"Cannot open video file: {video_path}")
        return

    fps = int(cap.get(cv2.CAP_PROP_FPS))
    width = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH))
    height = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))
    fourcc = cv2.VideoWriter_fourcc(*'mp4v')
    out = cv2.VideoWriter(output_path, fourcc, fps, (width, height))

    landmark_index = 0
    while cap.isOpened():
        ret, frame = cap.read()
        if not ret:
            break
        if landmark_index < len(landmarks):
            for i, (x, y, _) in enumerate(landmarks[landmark_index]):
                if not np.isnan(x) and not np.isnan(y):
                    px, py = int(x * width), int(y * height)
                    # BGR colors: right hand green, left hand red, body blue
                    color = (0, 255, 0) if i < HAND_NUM else \
                            (0, 0, 255) if i < HAND_NUM * 2 else \
                            (255, 0, 0)
                    cv2.circle(frame, (px, py), 4, color, -1)
            landmark_index += 1
        out.write(frame)

    cap.release()
    out.release()


# Process every video in the input directory
video_root = "./doc/补充版/正式数据集/"
output_root = "./doc/save/"
# Create the output sub-directories up front; np.save and VideoWriter do not create them.
os.makedirs(os.path.join(output_root, "npy"), exist_ok=True)
os.makedirs(os.path.join(output_root, "MP4"), exist_ok=True)

for video_name in os.listdir(video_root):
    if not video_name.endswith(('.mp4', '.avi', '.mov')):
        continue

    video_path = os.path.join(video_root, video_name)
    print(f"\nProcessing video: {video_name}")

    # Extract the keypoints
    landmarks = get_video_landmarks(video_path)
    print(f"Got keypoints for {len(landmarks)} frames")

    # Save them as an .npy file
    base_name = os.path.splitext(video_name)[0]
    np.save(os.path.join(output_root, "npy", f"{base_name}.npy"), landmarks)

    # Render a video with the keypoints drawn on it
    output_video = os.path.join(output_root, "MP4", f"{base_name}_landmarks.mp4")
    draw_landmarks(video_path, output_video, landmarks)

print("All videos processed!")
```

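Usage is simple: set video_root to the directory that contains the videos and output_root to the directory for the results, and the script is ready to run.

Setup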

```python
# Keypoint selection
filtered_hand = list(range(21))            # all 21 hand landmarks
filtered_pose = [11, 12, 13, 14, 15, 16]   # keep only shoulders, elbows and wrists

HAND_NUM = len(filtered_hand)
POSE_NUM = len(filtered_pose)
```

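This is where you choose the keypoints you need. A hand normally has 21 landmarks; for the pose and face models you can decide which landmarks to keep. The number assigned to each landmark can be looked up online.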
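To make the resulting array layout concrete, here is a minimal sketch that maps a row index of the per-frame keypoint array back to a readable name. The names for indices 11 to 16 follow the MediaPipe Pose landmark numbering; the helper `describe_row` and the dictionary `POSE_NAMES` are hypothetical names introduced only for this illustration.

```python
# Layout assumed from the article's code: rows 0-20 right hand, 21-41 left hand, 42-47 pose.
filtered_hand = list(range(21))
filtered_pose = [11, 12, 13, 14, 15, 16]
HAND_NUM = len(filtered_hand)
POSE_NUM = len(filtered_pose)

# MediaPipe Pose names for the six kept landmarks (illustrative lookup table).
POSE_NAMES = {
    11: "left_shoulder", 12: "right_shoulder",
    13: "left_elbow", 14: "right_elbow",
    15: "left_wrist", 16: "right_wrist",
}


def describe_row(row):
    """Return a readable label for one row of the (HAND_NUM * 2 + POSE_NUM, 3) array."""
    if not 0 <= row < HAND_NUM * 2 + POSE_NUM:
        raise IndexError(f"row {row} is outside the keypoint array")
    if row < HAND_NUM:
        return f"right_hand[{row}]"
    if row < HAND_NUM * 2:
        return f"left_hand[{row - HAND_NUM}]"
    return "pose:" + POSE_NAMES[filtered_pose[row - HAND_NUM * 2]]


for row in (0, 20, 21, 42, 47):
    print(row, describe_row(row))
```

If you change filtered_pose, the table needs matching entries for the new indices.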

```python
# Initialize the MediaPipe models
mp_hands = mp.solutions.hands
mp_pose = mp.solutions.pose

hands = mp_hands.Hands(
    static_image_mode=False,
    max_num_hands=2,
    # Too high: uncertain detections are thrown away. A low value detects more
    # (low works well when the background has no distractions).
    min_detection_confidence=0.1,
    # Too high: tracking is abandoned easily. A low value gives better continuity.
    min_tracking_confidence=0.1,
)

pose = mp_pose.Pose(
    static_image_mode=False,
    model_complexity=1,
    min_detection_confidence=0.7,
    min_tracking_confidence=0.5,
)
```

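Parameter tuning: the hand model and the pose model each have their own parameters. static_image_mode says whether the input is a set of independent images; False means it is not, which is what I use because my input is video. For video there is also min_tracking_confidence, the tracking threshold; still images have no temporal continuity, so that parameter does not apply to them. max_num_hands is the maximum number of hands to detect. The comments above explain how I tune the two confidence thresholds. The pose parameters work in much the same way; look up the differences if you need them.

Function walkthrough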

```python
def get_frame_landmarks(frame):
    """Return the keypoints of one RGB frame as a (HAND_NUM * 2 + POSE_NUM, 3) array."""
    all_landmarks = np.full((HAND_NUM * 2 + POSE_NUM, 3), np.nan)  # NaN = not detected

    # Hands and pose run one after the other (not in threads) so the data stays reliable.
    results_hands = hands.process(frame)
    if results_hands.multi_hand_landmarks:
        for i, hand_landmarks in enumerate(results_hands.multi_hand_landmarks[:2]):  # at most two hands
            hand_type = results_hands.multi_handedness[i].classification[0].index
            points = np.array([(lm.x, lm.y, lm.z) for lm in hand_landmarks.landmark])
            if hand_type == 0:  # stored as the right hand
                all_landmarks[:HAND_NUM] = points
            else:               # stored as the left hand
                all_landmarks[HAND_NUM:HAND_NUM * 2] = points

    results_pose = pose.process(frame)
    if results_pose.pose_landmarks:
        pose_points = np.array([(lm.x, lm.y, lm.z) for lm in results_pose.pose_landmarks.landmark])
        all_landmarks[HAND_NUM * 2:HAND_NUM * 2 + POSE_NUM] = pose_points[filtered_pose]

    return all_landmarks
```

This function handles a single frame. It first reserves a NumPy array with one slot per keypoint, filled entirely with NaN. It then runs hand detection and pose detection separately and writes whatever was detected into that array, which becomes the keypoint array it returns.

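```python
def get_video_landmarks(video_path, start_frame=0, end_frame=-1):
    """Return the keypoints of every frame in which something was detected.

    start_frame and end_frame are 0-based frame indices. Frames with no detection
    at all are skipped, so row k of the result is not guaranteed to be frame k.
    """
    cap = cv2.VideoCapture(video_path)
    if not cap.isOpened():
        print(f"Cannot open video file: {video_path}")
        return np.empty((0, HAND_NUM * 2 + POSE_NUM, 3))

    total_frames = int(cap.get(cv2.CAP_PROP_FRAME_COUNT))
    if end_frame < 0 or end_frame > total_frames:
        end_frame = total_frames

    valid_frames = []
    frame_index = 0
    while cap.isOpened():
        ret, frame = cap.read()
        if not ret or frame_index > end_frame:
            break
        if frame_index >= start_frame:
            frame_rgb = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)  # OpenCV reads BGR
            landmarks = get_frame_landmarks(frame_rgb)
            # Keep the frame only if at least one keypoint was detected
            if not np.all(np.isnan(landmarks)):
                valid_frames.append(landmarks)
            else:
                print(f"Frame {frame_index}: no keypoints detected")
        frame_index += 1
    cap.release()

    if not valid_frames:
        print("Warning: no keypoints detected in the whole video")
        return np.empty((0, HAND_NUM * 2 + POSE_NUM, 3))
    return np.stack(valid_frames)
```

This function runs keypoint detection over a whole video. It reads every frame, converts it from BGR to RGB, passes it to the single-frame function, and stacks the per-frame results into the array it returns.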

```python
def draw_landmarks(video_path, output_path, landmarks):
    """Write a copy of the video with the keypoints drawn on it."""
    cap = cv2.VideoCapture(video_path)
    if not cap.isOpened():
        print(f"Cannot open video file: {video_path}")
        return

    fps = int(cap.get(cv2.CAP_PROP_FPS))
    width = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH))
    height = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))
    fourcc = cv2.VideoWriter_fourcc(*'mp4v')
    out = cv2.VideoWriter(output_path, fourcc, fps, (width, height))

    landmark_index = 0
    while cap.isOpened():
        ret, frame = cap.read()
        if not ret:
            break
        if landmark_index < len(landmarks):
            for i, (x, y, _) in enumerate(landmarks[landmark_index]):
                if not np.isnan(x) and not np.isnan(y):
                    px, py = int(x * width), int(y * height)
                    # BGR colors: right hand green, left hand red, body blue
                    color = (0, 255, 0) if i < HAND_NUM else \
                            (0, 0, 255) if i < HAND_NUM * 2 else \
                            (255, 0, 0)
                    cv2.circle(frame, (px, py), 4, color, -1)
            landmark_index += 1
        out.write(frame)

    cap.release()
    out.release()
```

This function draws the results. It takes the video path, the output path and the detected keypoint array, reads the video, draws a filled circle at every keypoint of every frame, and writes each frame to the output file.
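The drawing step depends on one small conversion: the keypoints are stored as normalized coordinates, and they are scaled by the frame width and height to get pixel positions. A minimal sketch of that step in isolation, using a hypothetical helper `to_pixel` that is not part of the original code:

```python
import math


def to_pixel(x, y, width, height):
    """Convert normalized (x, y) to integer pixel coordinates, or None if undetected."""
    if math.isnan(x) or math.isnan(y):
        return None  # NaN marks a keypoint that was not detected
    # Values slightly outside [0, 1] map to points just outside the image.
    return int(x * width), int(y * height)


print(to_pixel(0.5, 0.25, 640, 480))
print(to_pixel(math.nan, 0.25, 640, 480))
```

Returning None for missing points keeps the NaN check in one place instead of repeating it before every drawing call.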

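Advanced version with logging

This version builds on the basic one: besides drawing each keypoint as a dot, it also connects the keypoints with lines.

It also adds a log that analyzes the processed video stream. When the current frame is missing keypoints (typically because one hand was not detected), the frame is counted as a dropped frame. The script counts the dropped frames and saves the 2 frames before and the 3 frames after each dropped frame as images for the record.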
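The "2 before, 3 after" rule needs a little care, because the three later frames have not been read yet at the moment a drop is detected. A minimal sketch of the index bookkeeping, independent of OpenCV: a short buffer covers the earlier frames and a "save until" marker covers the later ones. The function name `frames_to_save` and its parameters are assumptions made for this illustration.

```python
from collections import deque


def frames_to_save(dropped_flags, before=2, after=3):
    """Return the sorted frame indices to save, given one dropped/not-dropped flag per frame."""
    recent = deque(maxlen=before + 1)  # the current frame and the `before` frames preceding it
    save_until = -1                    # frames up to this index follow a drop and are saved too
    saved = set()
    for idx, dropped in enumerate(dropped_flags):
        recent.append(idx)
        if dropped:
            saved.update(recent)       # the dropped frame plus the frames just before it
            save_until = idx + after   # remember to save the next `after` frames
        elif idx <= save_until:
            saved.add(idx)             # a frame that follows a recent drop
    return sorted(saved)


flags = [False] * 10
flags[5] = True
print(frames_to_save(flags))
```

In a real pipeline the buffer would hold the annotated frame images rather than bare indices, and saving would mean writing them to disk.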

```python
import os

import cv2
import numpy as np
import mediapipe as mp

# Keypoint selection
filtered_hand = list(range(21))            # all 21 hand landmarks
filtered_pose = [11, 12, 13, 14, 15, 16]   # keep only shoulders, elbows and wrists

HAND_NUM = len(filtered_hand)
POSE_NUM = len(filtered_pose)

# Initialize the MediaPipe models
mp_hands = mp.solutions.hands
mp_pose = mp.solutions.pose

hands = mp_hands.Hands(
    static_image_mode=False,
    max_num_hands=2,
    min_detection_confidence=0.1,  # low: detect as much as possible
    min_tracking_confidence=0.1,   # low: better continuity
)

pose = mp_pose.Pose(
    static_image_mode=False,
    model_complexity=1,
    min_detection_confidence=0.7,
    min_tracking_confidence=0.5,
)

# Connections between the 21 hand landmarks
HAND_CONNECTIONS = [
    (0, 1), (1, 2), (2, 3), (3, 4),          # thumb
    (0, 5), (5, 6), (6, 7), (7, 8),          # index finger
    (0, 9), (9, 10), (10, 11), (11, 12),     # middle finger
    (0, 13), (13, 14), (14, 15), (15, 16),   # ring finger
    (0, 17), (17, 18), (18, 19), (19, 20),   # little finger
]

# Torso and arm connections (11-16: shoulders, elbows, wrists)
POSE_CONNECTIONS = [
    (11, 12),            # shoulder to shoulder
    (11, 13), (13, 15),  # left arm
    (12, 14), (14, 16),  # right arm
]


def get_frame_landmarks(frame):
    """Return the keypoints of one RGB frame as a (HAND_NUM * 2 + POSE_NUM, 3) array."""
    all_landmarks = np.full((HAND_NUM * 2 + POSE_NUM, 3), np.nan)  # NaN = not detected

    results_hands = hands.process(frame)
    if results_hands.multi_hand_landmarks:
        for i, hand_landmarks in enumerate(results_hands.multi_hand_landmarks[:2]):  # at most two hands
            hand_type = results_hands.multi_handedness[i].classification[0].index
            points = np.array([(lm.x, lm.y, lm.z) for lm in hand_landmarks.landmark])
            if hand_type == 0:  # stored as the right hand
                all_landmarks[:HAND_NUM] = points
            else:               # stored as the left hand
                all_landmarks[HAND_NUM:HAND_NUM * 2] = points

    results_pose = pose.process(frame)
    if results_pose.pose_landmarks:
        pose_points = np.array([(lm.x, lm.y, lm.z) for lm in results_pose.pose_landmarks.landmark])
        all_landmarks[HAND_NUM * 2:HAND_NUM * 2 + POSE_NUM] = pose_points[filtered_pose]

    return all_landmarks


def get_video_landmarks(video_path, start_frame=0, end_frame=-1):
    """Extract keypoints with strict frame alignment and dropped-frame statistics."""
    output_dir = "./doc/save_log/log"
    video_name = os.path.splitext(os.path.basename(video_path))[0]
    output_root = os.path.join(output_dir, video_name)
    os.makedirs(output_root, exist_ok=True)
    log_file_path = os.path.join(output_root, f"{video_name}.txt")
    num_points = HAND_NUM * 2 + POSE_NUM

    def frame_path(idx):
        return os.path.join(output_root, f"frame_{idx:04d}_near_nan.png")

    with open(log_file_path, 'w', encoding='utf-8') as log_file:
        cap = cv2.VideoCapture(video_path)
        if not cap.isOpened():
            print(f"Cannot open video file: {video_path}", file=log_file)
            return np.empty((0, num_points, 3))

        total_frames = int(cap.get(cv2.CAP_PROP_FRAME_COUNT))
        if end_frame < 0 or end_frame >= total_frames:
            end_frame = total_frames - 1  # frame indices are 0-based

        # Pre-allocate an all-NaN array so that row k is always frame start_frame + k
        results = np.full((end_frame - start_frame + 1, num_points, 3), np.nan)
        missing_frames = []
        frame_index = 0
        results_index = 0
        frame_buffer = []   # annotated copies of the current frame and the 2 before it
        save_until = -1     # after a drop, keep saving frames up to this index
        width = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH))
        height = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))

        while cap.isOpened():
            ret, frame = cap.read()
            if not ret or frame_index > end_frame:
                break
            if start_frame <= frame_index <= end_frame:
                frame_rgb = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
                landmarks = get_frame_landmarks(frame_rgb)

                # Annotate the frame, then keep a copy in the buffer
                only_draw_landmarks(frame, landmarks, width, height)
                frame_buffer.append((frame_index, frame.copy()))
                frame_buffer = frame_buffer[-3:]  # bounded, so memory does not grow with the video

                # Check that the number of keypoints is as expected
                if landmarks.shape[0] == num_points:
                    valid_points = np.sum(~np.isnan(landmarks[:, :2]))
                    results[results_index] = landmarks
                    if valid_points != 2 * num_points:
                        # Save this frame and the 2 before it now, the next 3 as they arrive
                        for buf_idx, buf_frame in frame_buffer:
                            cv2.imwrite(frame_path(buf_idx), buf_frame)
                        save_until = min(frame_index + 3, end_frame)
                        missing_frames.append(frame_index)
                        print(f"Dropped frame {frame_index}: too few valid values "
                              f"({valid_points}/{2 * num_points})", file=log_file)
                    elif frame_index <= save_until:
                        cv2.imwrite(frame_path(frame_index), frame)
                else:
                    missing_frames.append(frame_index)
                    print(f"Dropped frame {frame_index}: unexpected keypoint count "
                          f"({landmarks.shape[0]} != {num_points})", file=log_file)
                results_index += 1
            frame_index += 1
        cap.release()

        # Summary report
        total_processed = end_frame - start_frame + 1
        print("\nKeypoint detection report:", file=log_file)
        print(f"Frame range: {start_frame}-{end_frame} ({total_processed} frames)", file=log_file)
        print(f"Good frames: {total_processed - len(missing_frames)}", file=log_file)
        print(f"Dropped frames: {len(missing_frames)}", file=log_file)
        if missing_frames:
            print("Dropped at: " + ", ".join(map(str, missing_frames)), file=log_file)
            print(f"Drop rate: {len(missing_frames) / total_processed:.1%}", file=log_file)

    return results


def only_draw_landmarks(frame, landmarks, width, height):
    """Draw keypoints and connecting lines onto one frame, in place."""

    def draw_line(a, b, color):
        x1, y1, _ = landmarks[a]
        x2, y2, _ = landmarks[b]
        if not np.isnan([x1, y1, x2, y2]).any():
            pt1 = (int(x1 * width), int(y1 * height))
            pt2 = (int(x2 * width), int(y2 * height))
            cv2.line(frame, pt1, pt2, color, 2)

    # Keypoints (BGR): right hand green (0-20), left hand red (21-41), body blue (42+)
    for i, (x, y, _) in enumerate(landmarks):
        if not np.isnan(x) and not np.isnan(y):
            color = (0, 255, 0) if i < HAND_NUM else \
                    (0, 0, 255) if i < HAND_NUM * 2 else \
                    (255, 0, 0)
            cv2.circle(frame, (int(x * width), int(y * height)), 4, color, -1)

    # Hand lines: right hand uses rows 0-20, left hand rows 21-41
    for a, b in HAND_CONNECTIONS:
        draw_line(a, b, (0, 255, 0))
        draw_line(a + HAND_NUM, b + HAND_NUM, (0, 0, 255))

    # Body lines: only between landmarks that are in filtered_pose;
    # body rows start at 2 * HAND_NUM
    for a, b in POSE_CONNECTIONS:
        if a in filtered_pose and b in filtered_pose:
            draw_line(2 * HAND_NUM + filtered_pose.index(a),
                      2 * HAND_NUM + filtered_pose.index(b), (255, 0, 0))


def draw_landmarks(video_path, output_path, landmarks):
    """Write a copy of the video with keypoints and connecting lines drawn on it."""
    cap = cv2.VideoCapture(video_path)
    if not cap.isOpened():
        print(f"Cannot open video file: {video_path}")
        return

    fps = int(cap.get(cv2.CAP_PROP_FPS))
    width = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH))
    height = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))
    fourcc = cv2.VideoWriter_fourcc(*'mp4v')
    out = cv2.VideoWriter(output_path, fourcc, fps, (width, height))

    landmark_index = 0
    while cap.isOpened():
        ret, frame = cap.read()
        if not ret:
            break
        if landmark_index < len(landmarks):
            only_draw_landmarks(frame, landmarks[landmark_index], width, height)
            landmark_index += 1
        out.write(frame)

    cap.release()
    out.release()


# Process every video in the input directory
video_root = "./doc/补充版/正式数据集/"
output_root = "./doc/try_log/"
os.makedirs(os.path.join(output_root, "npy"), exist_ok=True)
os.makedirs(os.path.join(output_root, "MP4"), exist_ok=True)

for video_name in os.listdir(video_root):
    if not video_name.endswith(('.mp4', '.avi', '.mov')):
        continue

    video_path = os.path.join(video_root, video_name)
    print(f"\nProcessing video: {video_name}")

    # Extract the keypoints
    landmarks = get_video_landmarks(video_path)
    print(f"Got keypoints for {len(landmarks)} frames")

    # Save them as an .npy file
    base_name = os.path.splitext(video_name)[0]
    np.save(os.path.join(output_root, "npy", f"{base_name}.npy"), landmarks)

    # Render a video with the keypoints drawn on it
    output_video = os.path.join(output_root, "MP4", f"{base_name}_landmarks.mp4")
    draw_landmarks(video_path, output_video, landmarks)

print("All videos processed!")
```

Function walkthrough

```python
def get_video_landmarks(video_path, start_frame=0, end_frame=-1):
    """Extract keypoints with strict frame alignment and dropped-frame statistics."""
    output_dir = "./doc/save_log/log"
    video_name = os.path.splitext(os.path.basename(video_path))[0]
    output_root = os.path.join(output_dir, video_name)
    os.makedirs(output_root, exist_ok=True)
    log_file_path = os.path.join(output_root, f"{video_name}.txt")
    num_points = HAND_NUM * 2 + POSE_NUM

    def frame_path(idx):
        return os.path.join(output_root, f"frame_{idx:04d}_near_nan.png")

    with open(log_file_path, 'w', encoding='utf-8') as log_file:
        cap = cv2.VideoCapture(video_path)
        if not cap.isOpened():
            print(f"Cannot open video file: {video_path}", file=log_file)
            return np.empty((0, num_points, 3))

        total_frames = int(cap.get(cv2.CAP_PROP_FRAME_COUNT))
        if end_frame < 0 or end_frame >= total_frames:
            end_frame = total_frames - 1  # frame indices are 0-based

        # Pre-allocate an all-NaN array so that row k is always frame start_frame + k
        results = np.full((end_frame - start_frame + 1, num_points, 3), np.nan)
        missing_frames = []
        frame_index = 0
        results_index = 0
        frame_buffer = []   # annotated copies of the current frame and the 2 before it
        save_until = -1     # after a drop, keep saving frames up to this index
        width = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH))
        height = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))

        while cap.isOpened():
            ret, frame = cap.read()
            if not ret or frame_index > end_frame:
                break
            if start_frame <= frame_index <= end_frame:
                frame_rgb = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
                landmarks = get_frame_landmarks(frame_rgb)

                # Annotate the frame, then keep a copy in the buffer
                only_draw_landmarks(frame, landmarks, width, height)
                frame_buffer.append((frame_index, frame.copy()))
                frame_buffer = frame_buffer[-3:]  # bounded, so memory does not grow with the video

                # Check that the number of keypoints is as expected
                if landmarks.shape[0] == num_points:
                    valid_points = np.sum(~np.isnan(landmarks[:, :2]))
                    results[results_index] = landmarks
                    if valid_points != 2 * num_points:
                        # Save this frame and the 2 before it now, the next 3 as they arrive
                        for buf_idx, buf_frame in frame_buffer:
                            cv2.imwrite(frame_path(buf_idx), buf_frame)
                        save_until = min(frame_index + 3, end_frame)
                        missing_frames.append(frame_index)
                        print(f"Dropped frame {frame_index}: too few valid values "
                              f"({valid_points}/{2 * num_points})", file=log_file)
                    elif frame_index <= save_until:
                        cv2.imwrite(frame_path(frame_index), frame)
                else:
                    missing_frames.append(frame_index)
                    print(f"Dropped frame {frame_index}: unexpected keypoint count "
                          f"({landmarks.shape[0]} != {num_points})", file=log_file)
                results_index += 1
            frame_index += 1
        cap.release()

        # Summary report
        total_processed = end_frame - start_frame + 1
        print("\nKeypoint detection report:", file=log_file)
        print(f"Frame range: {start_frame}-{end_frame} ({total_processed} frames)", file=log_file)
        print(f"Good frames: {total_processed - len(missing_frames)}", file=log_file)
        print(f"Dropped frames: {len(missing_frames)}", file=log_file)
        if missing_frames:
            print("Dropped at: " + ", ".join(map(str, missing_frames)), file=log_file)
            print(f"Drop rate: {len(missing_frames) / total_processed:.1%}", file=log_file)

    return results
```

A few words on this function, since it changed the most. It adds frame_buffer to cache recent frames so that the frames I want to record can be retrieved later. Before a frame is cached, only_draw_landmarks draws the keypoints onto it; that function only annotates the image and does not save anything. As a result, the saved images show clearly where the problem occurred.

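The key check is if valid_points != 2 * (HAND_NUM * 2 + POSE_NUM). It counts valid values: a keypoint whose coordinates are NaN is not valid, and the factor of 2 is there because each point contributes two values, x and y. When there are too few valid values, the frame is written to the log and the images are saved; at the end a summary report is written as well.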
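As a worked example of that arithmetic: with 21 + 21 + 6 = 48 keypoints, a fully detected frame has 2 × 48 = 96 valid values, and a frame that lost one hand has 96 − 2 × 21 = 54. The sketch below reproduces the count with plain Python lists instead of NumPy so it runs on its own; `count_valid_xy` is a hypothetical helper, not a function from the original code.

```python
import math

HAND_NUM = 21
POSE_NUM = 6
NUM_POINTS = HAND_NUM * 2 + POSE_NUM


def count_valid_xy(landmarks):
    """Count the x and y values that are not NaN (z is ignored, as in the original check)."""
    return sum(1 for x, y, _ in landmarks for v in (x, y) if not math.isnan(v))


detected = (0.5, 0.5, 0.0)
missing = (math.nan, math.nan, math.nan)

full_frame = [detected] * NUM_POINTS
one_hand_lost = [missing] * HAND_NUM + [detected] * (HAND_NUM + POSE_NUM)

for name, frame in (("full", full_frame), ("one hand lost", one_hand_lost)):
    valid = count_valid_xy(frame)
    print(f"{name}: {valid}/{2 * NUM_POINTS} valid, dropped={valid != 2 * NUM_POINTS}")
```

Because the test is "not equal to the full count", a frame is flagged even if only a single keypoint is missing.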

Advanced version (Kalman filter)

```python
class Kalman1D:
    """Scalar Kalman filter with a constant-position model."""

    def __init__(self):
        self.x = 0        # state estimate
        self.P = 1        # estimate variance
        self.F = 1        # state transition
        self.H = 1        # observation model
        self.R = 0.01     # measurement noise
        self.Q = 0.001    # process noise
        self.initiated = False

    def update(self, measurement):
        if not self.initiated:
            self.x = measurement
            self.initiated = True
        # Predict
        self.x = self.F * self.x
        self.P = self.F * self.P * self.F + self.Q
        # Update
        K = self.P * self.H / (self.H * self.P * self.H + self.R)
        self.x += K * (measurement - self.H * self.x)
        self.P = (1 - K * self.H) * self.P
        return self.x


def init_kalman_filters(num_points):
    # One filter per coordinate (x, y, z) of every keypoint
    return [[Kalman1D() for _ in range(3)] for _ in range(num_points)]


def get_video_landmarks(video_path, start_frame=0, end_frame=-1):
    """Same as the logging version, with each coordinate smoothed by a Kalman filter."""
    output_dir = "./doc/save_log/log"
    video_name = os.path.splitext(os.path.basename(video_path))[0]
    output_root = os.path.join(output_dir, video_name)
    os.makedirs(output_root, exist_ok=True)
    log_file_path = os.path.join(output_root, f"{video_name}.txt")
    num_points = HAND_NUM * 2 + POSE_NUM
    filters = init_kalman_filters(num_points)

    def frame_path(idx):
        return os.path.join(output_root, f"frame_{idx:04d}_near_nan.png")

    with open(log_file_path, 'w', encoding='utf-8') as log_file:
        cap = cv2.VideoCapture(video_path)
        if not cap.isOpened():
            print(f"Cannot open video file: {video_path}", file=log_file)
            return np.empty((0, num_points, 3))

        total_frames = int(cap.get(cv2.CAP_PROP_FRAME_COUNT))
        if end_frame < 0 or end_frame >= total_frames:
            end_frame = total_frames - 1  # frame indices are 0-based

        results = np.full((end_frame - start_frame + 1, num_points, 3), np.nan)
        missing_frames = []
        frame_index = 0
        results_index = 0
        frame_buffer = []   # annotated copies of the current frame and the 2 before it
        save_until = -1     # after a drop, keep saving frames up to this index
        width = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH))
        height = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))

        while cap.isOpened():
            ret, frame = cap.read()
            if not ret or frame_index > end_frame:
                break
            if start_frame <= frame_index <= end_frame:
                frame_rgb = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
                landmarks = get_frame_landmarks(frame_rgb)

                # Apply the Kalman filter to every detected coordinate
                for i, (x, y, z) in enumerate(landmarks):
                    for j, val in enumerate([x, y, z]):
                        if not np.isnan(val):
                            landmarks[i][j] = filters[i][j].update(val)

                only_draw_landmarks(frame, landmarks, width, height)
                frame_buffer.append((frame_index, frame.copy()))
                frame_buffer = frame_buffer[-3:]

                if landmarks.shape[0] == num_points:
                    valid_points = np.sum(~np.isnan(landmarks[:, :2]))
                    results[results_index] = landmarks
                    if valid_points != 2 * num_points:
                        for buf_idx, buf_frame in frame_buffer:
                            cv2.imwrite(frame_path(buf_idx), buf_frame)
                        save_until = min(frame_index + 3, end_frame)
                        missing_frames.append(frame_index)
                        print(f"Dropped frame {frame_index}: too few valid values "
                              f"({valid_points}/{2 * num_points})", file=log_file)
                    elif frame_index <= save_until:
                        cv2.imwrite(frame_path(frame_index), frame)
                else:
                    missing_frames.append(frame_index)
                    print(f"Dropped frame {frame_index}: unexpected keypoint count "
                          f"({landmarks.shape[0]} != {num_points})", file=log_file)
                results_index += 1
            frame_index += 1
        cap.release()

        total_processed = end_frame - start_frame + 1
        print("\nKeypoint detection report:", file=log_file)
        print(f"Frame range: {start_frame}-{end_frame} ({total_processed} frames)", file=log_file)
        print(f"Good frames: {total_processed - len(missing_frames)}", file=log_file)
        print(f"Dropped frames: {len(missing_frames)}", file=log_file)
        if missing_frames:
            print("Dropped at: " + ", ".join(map(str, missing_frames)), file=log_file)
            print(f"Drop rate: {len(missing_frames) / total_processed:.1%}", file=log_file)

    return results
```

The rest of the code is the same as above, so I will not repeat it. With R = 0.01 the detected keypoints visibly lag behind the video, while with R = 0.00001 the result is almost the same as using no Kalman filter at all. That is what I observed on my dataset; the filter may well help on other datasets.
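One way to see why R has this effect is to look at the gain K that the scalar filter settles into. With F = H = 1, the filter behaves like exponential smoothing with weight K, and for a point moving at constant speed the estimate trails by roughly (1 − K) / K frames. The sketch below iterates the same predict/update equations as Kalman1D with Q = 0.001; the function names are hypothetical, and the lag formula is the textbook result for exponential smoothing of a ramp rather than something measured on the author's data.

```python
def steady_state_gain(q, r, steps=1000):
    """Iterate the scalar predict/update equations (F = H = 1) and return the final gain K."""
    p = 1.0
    k = 0.0
    for _ in range(steps):
        p = p + q            # predict: variance grows by the process noise
        k = p / (p + r)      # Kalman gain
        p = (1.0 - k) * p    # update: variance shrinks after the measurement
    return k


def ramp_lag_frames(k):
    """Steady-state lag, in frames, when tracking a value that changes at constant speed."""
    return (1.0 - k) / k


for r in (0.01, 0.00001):
    k = steady_state_gain(q=0.001, r=r)
    print(f"R={r}: K={k:.3f}, lag={ramp_lag_frames(k):.2f} frames")
```

Under these assumptions the gain comes out near 0.27 for R = 0.01 (a lag of almost three frames) and near 0.99 for R = 0.00001 (the filter passes the measurement through almost unchanged), which is consistent with the behavior described above.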

Advanced version (Kalman filter with velocity)

```python
class Kalman1D_Velocity:
    """Kalman filter for one coordinate with a constant-velocity model."""

    def __init__(self):
        self.x = np.array([[0.], [0.]])      # state: [position, velocity]
        self.P = np.eye(2)                   # state covariance
        self.F = np.array([[1., 1.],
                           [0., 1.]])        # state transition
        self.H = np.array([[1., 0.]])        # observation matrix
        self.R = np.array([[0.01]])          # measurement noise
        self.Q = np.array([[0.001, 0.],
                           [0., 0.001]])     # process noise
        self.initiated = False

    def predict(self):
        self.x = np.dot(self.F, self.x)
        self.P = np.dot(self.F, np.dot(self.P, self.F.T)) + self.Q
        return self.x[0, 0]

    def update(self, measurement):
        if not self.initiated:
            self.x[0, 0] = measurement
            self.x[1, 0] = 0.0
            self.initiated = True
            return measurement
        # Predict
        self.predict()
        # Update
        S = np.dot(self.H, np.dot(self.P, self.H.T)) + self.R
        K = np.dot(np.dot(self.P, self.H.T), np.linalg.inv(S))
        z = np.array([[measurement]])
        y = z - np.dot(self.H, self.x)
        self.x = self.x + np.dot(K, y)
        self.P = self.P - np.dot(K, np.dot(self.H, self.P))
        return self.x[0, 0]

    def update_or_predict(self, measurement):
        if np.isnan(measurement):
            if not self.initiated:
                # Nothing seen yet: stay NaN instead of predicting from the zero state
                return np.nan
            return self.predict()
        return self.update(measurement)


def init_kalman_filters(num_points):
    # One filter per coordinate (x, y, z) of every keypoint
    return [[Kalman1D_Velocity() for _ in range(3)] for _ in range(num_points)]


def get_video_landmarks(video_path, start_frame=0, end_frame=-1):
    """Same as the logging version; missing coordinates are filled by prediction."""
    output_dir = "./doc/save_log/log"
    video_name = os.path.splitext(os.path.basename(video_path))[0]
    output_root = os.path.join(output_dir, video_name)
    os.makedirs(output_root, exist_ok=True)
    log_file_path = os.path.join(output_root, f"{video_name}.txt")
    num_points = HAND_NUM * 2 + POSE_NUM
    filters = init_kalman_filters(num_points)

    def frame_path(idx):
        return os.path.join(output_root, f"frame_{idx:04d}_near_nan.png")

    with open(log_file_path, 'w', encoding='utf-8') as log_file:
        cap = cv2.VideoCapture(video_path)
        if not cap.isOpened():
            print(f"Cannot open video file: {video_path}", file=log_file)
            return np.empty((0, num_points, 3))

        total_frames = int(cap.get(cv2.CAP_PROP_FRAME_COUNT))
        if end_frame < 0 or end_frame >= total_frames:
            end_frame = total_frames - 1  # frame indices are 0-based

        results = np.full((end_frame - start_frame + 1, num_points, 3), np.nan)
        missing_frames = []
        frame_index = 0
        results_index = 0
        frame_buffer = []   # annotated copies of the current frame and the 2 before it
        save_until = -1     # after a drop, keep saving frames up to this index
        width = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH))
        height = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))

        while cap.isOpened():
            ret, frame = cap.read()
            if not ret or frame_index > end_frame:
                break
            if start_frame <= frame_index <= end_frame:
                frame_rgb = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
                landmarks = get_frame_landmarks(frame_rgb)

                # Apply the Kalman filter; NaN values are replaced by predictions
                for i, (x, y, z) in enumerate(landmarks):
                    for j, val in enumerate([x, y, z]):
                        landmarks[i][j] = filters[i][j].update_or_predict(val)

                only_draw_landmarks(frame, landmarks, width, height)
                frame_buffer.append((frame_index, frame.copy()))
                frame_buffer = frame_buffer[-3:]

                if landmarks.shape[0] == num_points:
                    valid_points = np.sum(~np.isnan(landmarks[:, :2]))
                    results[results_index] = landmarks
                    if valid_points != 2 * num_points:
                        for buf_idx, buf_frame in frame_buffer:
                            cv2.imwrite(frame_path(buf_idx), buf_frame)
                        save_until = min(frame_index + 3, end_frame)
                        missing_frames.append(frame_index)
                        print(f"Dropped frame {frame_index}: too few valid values "
                              f"({valid_points}/{2 * num_points})", file=log_file)
                    elif frame_index <= save_until:
                        cv2.imwrite(frame_path(frame_index), frame)
                else:
                    missing_frames.append(frame_index)
                    print(f"Dropped frame {frame_index}: unexpected keypoint count "
                          f"({landmarks.shape[0]} != {num_points})", file=log_file)
                results_index += 1
            frame_index += 1
        cap.release()

        total_processed = end_frame - start_frame + 1
        print("\nKeypoint detection report:", file=log_file)
        print(f"Frame range: {start_frame}-{end_frame} ({total_processed} frames)", file=log_file)
        print(f"Good frames: {total_processed - len(missing_frames)}", file=log_file)
        print(f"Dropped frames: {len(missing_frames)}", file=log_file)
        if missing_frames:
            print("Dropped at: " + ", ".join(map(str, missing_frames)), file=log_file)
            print(f"Drop rate: {len(missing_frames) / total_processed:.1%}", file=log_file)

    return results
```

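This version does not lag nearly as much, but the keypoints tend to drift a little. Sometimes the result is slightly better than with no filter.

Summary

Basic version: the overall pipeline is fairly clear. The videos in my dataset have simple backgrounds, so false detections are rare, and I therefore set the thresholds very low. Even so, frames are still dropped for reasons I do not yet understand; this needs further investigation.

Advanced version: only after adding the log did I realize that dropped frames and false detections are fairly serious; going through the video frame by frame reveals many of them. Neither the plain Kalman filter nor the velocity Kalman filter seems to handle them well.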
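Part of the drifting feeling may come from the prediction step: whenever a coordinate is missing, the velocity model keeps extrapolating along the last estimated velocity for as long as the gap lasts. One possible mitigation, shown here only as a sketch, is to cap how many consecutive frames may be filled by prediction and report NaN after that. `GapLimiter` and `max_gap` are hypothetical names, and `LinearExtrapolator` is a deliberately simple stand-in for the velocity filter (it is not a Kalman filter) so that the example runs without NumPy.

```python
import math


class LinearExtrapolator:
    """Stand-in for a velocity filter: keeps the last position and per-frame velocity."""

    def __init__(self):
        self.pos = math.nan
        self.vel = 0.0

    def update(self, z):
        if not math.isnan(self.pos):
            self.vel = z - self.pos  # velocity estimated from the last step
        self.pos = z
        return self.pos

    def predict(self):
        self.pos += self.vel         # extrapolate one frame ahead
        return self.pos


class GapLimiter:
    """Wrap any filter with update(z) and predict(); stop predicting after max_gap misses."""

    def __init__(self, flt, max_gap=5):
        self.flt = flt
        self.max_gap = max_gap
        self.gap = 0  # number of consecutive missing measurements

    def step(self, z):
        if not math.isnan(z):
            self.gap = 0
            return self.flt.update(z)
        self.gap += 1
        if self.gap > self.max_gap:
            return math.nan  # gap too long: report the point as missing
        return self.flt.predict()


limited = GapLimiter(LinearExtrapolator(), max_gap=2)
track = [limited.step(z) for z in [0.0, 1.0, 2.0, math.nan, math.nan, math.nan]]
print(track)
```

With a cap like this, short gaps are still bridged, while long gaps show up as NaN again and are therefore counted by the dropped-frame log.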

相关文章:

mediapipe标注视频姿态关键点(基础版加进阶版)

前言 手语视频流的识别有两种大的分类,一种是直接将视频输入进网络,一种是识别了关键点之后再进入网络。所以这篇文章我就要来讲讲如何用mediapipe对手语视频进行关键点标注。 代码 需要直接使用代码的,我就放这里了。环境自己配置一下吧&…...

PCtoLCD2002如何制作6*8字符

如何不把“等比缩放”前的打勾取消,则无法修改为对应英文字符为6*8。 取消之后就可以更改了!...

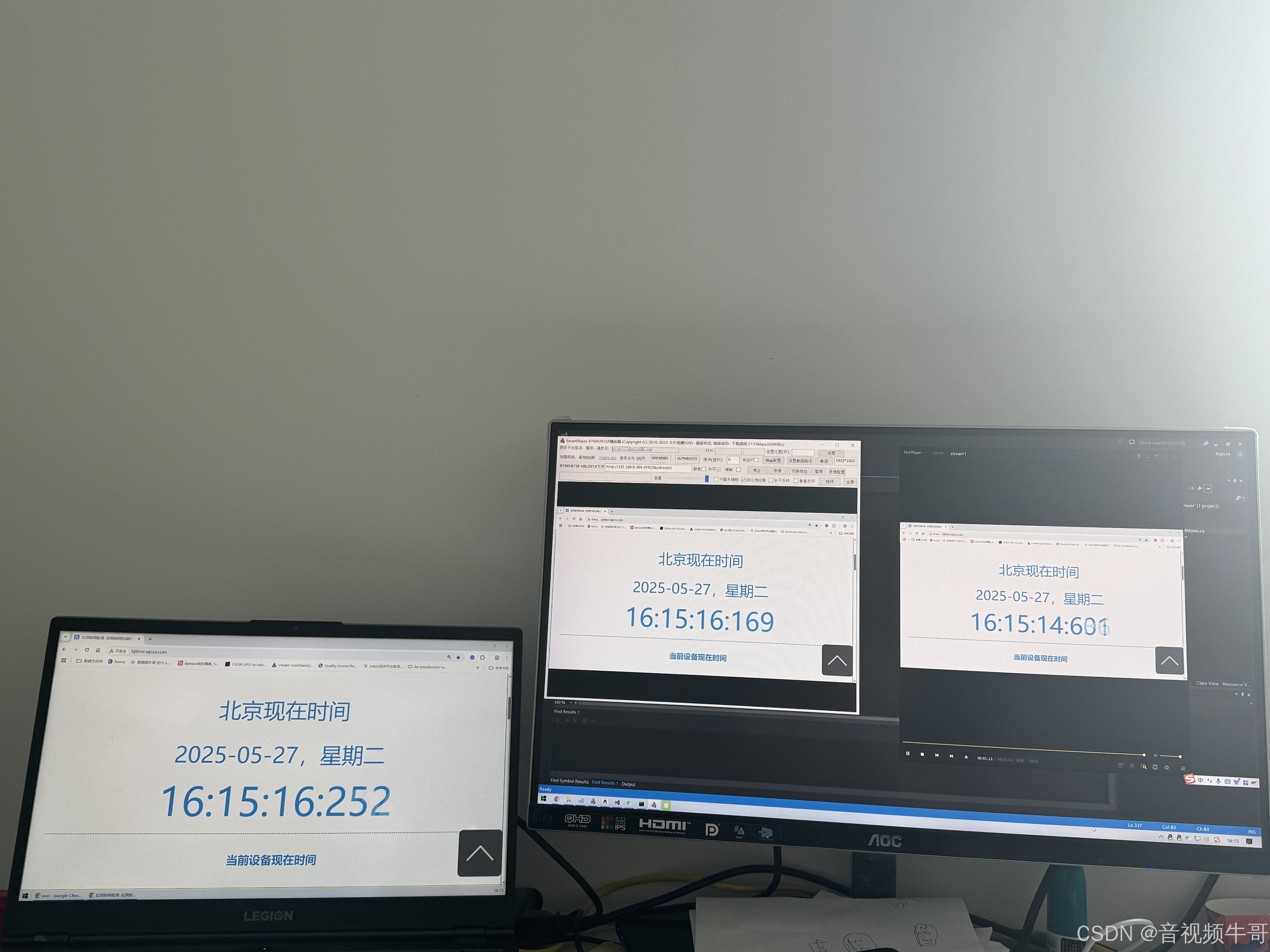

SmartPlayer与VLC播放RTMP:深度对比分析延迟、稳定性与功能

随着音视频直播技术的发展,RTMP(实时消息传输协议)成为了广泛应用于实时直播、在线教育、视频会议等领域的重要协议。为了确保优质的观看体验,RTMP播放器的选择至关重要。大牛直播SDK的SmartPlayer和VLC都是在行业中广受欢迎的播放…...

Qt QPaintEvent绘图事件painter使用指南

绘制需在paintEvent函数中实现 用图片形象理解 如果加了刷子再用笔就相当于用笔画过的区域用刷子走 防雷达: 源文件 #include "widget.h" #include "ui_widget.h" #include <QDebug> #include <QPainter> Widget::Widget(QWidget…...

伪创新-《软件方法》全流程引领AI-第1章 04

《软件方法》全流程引领AI-第1章 ABCD工作流-01 对PlantUML们的评价-《软件方法》全流程引领AI-第1章 02 AI辅助的建模步骤-《软件方法》全流程引领AI-第1章 03 第1章 ABCD工作流 1.5 警惕和揭秘伪创新 初中数学里要学习全等三角形、相似三角形、SSS、SAS……,到…...

win11如何重启

在 Windows 11 中重启电脑有多种方法,以下是其中几种常见方法: 开始菜单重启: 点击屏幕左下角的“开始”按钮(Windows 图标)。 在开始菜单中,点击“电源”图标。 选择“重启”选项。 使用快捷键…...

【iOS】 锁

iOS 锁 文章目录 iOS 锁前言线程安全锁互斥锁pthread_mutexsynchronized (互斥递归锁)synchronized问题:小结 NSLockNSRecursiveLockNSConditionNSConditionLock 自旋锁OSSpinLock(已弃用)atomicatomic修饰的属性绝对安全吗?os_unfair_lock 读写锁互斥锁和自旋锁的对比 小结使…...

uni-app学习笔记十五-vue3页面生命周期(一)

页面生命周期概览 vue3页面生命周期如下图所示: onLoad 此时页面还未显示,没有开始进入的转场动画,页面dom还不存在。 所以这里不能直接操作dom(可以修改data,因为vue框架会等待dom准备后再更新界面)&am…...

Flink核心概念小结

文章目录 前言引言数据流API基于POJO的数据流基本源流配置示例基本流接收器数据管道与ETL(提取、转换、加载)一对一映射构建面向流映射的构建键控流进行分组运算RichFlatMapFunction对于流的状态管理连接流的使用流式分析水位的基本概念和示例侧道输入的基本概念和示例Process …...

《软件工程》第 14 章 - 持续集成

在软件工程的开发流程中,持续集成是保障代码质量与开发效率的关键环节。本章将围绕持续集成的各个方面展开详细讲解,结合 Java 代码示例与可视化图表,帮助读者深入理解并实践相关知识。 14.1 持续集成概述 14.1.1 持续集成的相关概念 持续集…...

大模型 Agent 中的通用 MCP 机制详解

1. 引言 大模型(Large Language Model,LLM)技术的迅猛发展催生了一类全新的应用范式:LLM Agent(大模型 Agent)。简单来说,Agent 是基于大模型的自治智能体,它不仅能理解和生成自然语言,还能通过调用工具与环境交互,从而自主地完成复杂任务。ChatGPT 的出现让人们看到…...

Navicat 17 SQL 预览时表名异常右键表名,点击设计表->SQL预览->另存为的SQL预览时,表名都是 Untitled。

🧑💻 用户 Navicat 17 SQL 预览时表名异常右键表名,点击设计表->SQL预览->另存为的SQL预览时,表名都是 Untitled。 🧑🔧 官方技术中心 了解到您的问题,这个显示是正常的,…...

Orpheus-TTS:AI文本转语音,免费好用的TTS系统

名人说:博观而约取,厚积而薄发。——苏轼《稼说送张琥》 创作者:Code_流苏(CSDN)(一个喜欢古诗词和编程的Coder😊) 目录 一、Orpheus-TTS:重新定义语音合成的标准1. 什么是Orpheus-TTSÿ…...

Python爬虫实战:研究Goose框架相关技术

一、引言 随着互联网的迅速发展,网络上的信息量呈爆炸式增长。从海量的网页中提取有价值的信息成为一项重要的技术。网络爬虫作为一种自动获取网页内容的程序,在信息收集、数据挖掘、搜索引擎等领域有着广泛的应用。本文将详细介绍如何使用 Python 的 Goose 框架构建一个完整…...

webpack优化方法

以下是Webpack优化的系统性策略,涵盖构建速度、输出体积、缓存优化等多个维度,配置示例和原理分析: 一、构建速度优化 1. 缩小文件搜索范围 module.exports {resolve: {// 明确第三方模块的路径modules: [path.resolve(node_modules)],// …...

STM32 Keil工程搭建 (手动搭建)流程 2025年5月27日07:42:09

STM32 Keil工程搭建 (手动搭建)流程 觉得麻烦跳转到最底部看总配置图 1.获取官方标准外设函数库 内部结构如下: 文件夹功能分别为 图标(用不上)库函数(重点) Libraries/ ├── CMSIS/ # ARM Cortex-M Microcontroller Software Interface Standard…...

MyBatis 框架使用与 Spring 集成时的使用

MyBatis 创建项目mybatis项目,首先需要使用maven导入mybatis库 poml.xml <?xml version"1.0" encoding"UTF-8"?> <project xmlns"http://maven.apache.org/POM/4.0.0"xmlns:xsi"http://www.w3.org/2001/XMLSchema…...

OpenGL Chan视频学习-7 Writing a Shader inOpenGL

bilibili视频链接: 【最好的OpenGL教程之一】https://www.bilibili.com/video/BV1MJ411u7Bc?p5&vd_source44b77bde056381262ee55e448b9b1973 函数网站: docs.gl 说明: 1.之后就不再整理具体函数了,网站直接翻译会更直观也会…...

顶会新方向:卡尔曼滤波+目标检测

卡尔曼虑波+目标检测创新结合,新作准确率突破100%! 一个有前景且好发论文的方向:卡尔曼滤波+目标检测! 这种创新结合,得到学术界的广泛认可,多篇成果陆续登上顶会顶刊。例如无人机竞速系统 Swift,登上nat…...

数据库相关问题

1.保留字 1.1错误案例(2025/5/27) 报错: java.sql.SQLSyntaxErrorException: You have an error in your SQL syntax; check the manual that corresponds to your MySQL server version for the right syntax to use near condition, sell…...

一起学数据结构和算法(二)| 数组(线性结构)

数组(Array) 数组是最基础的数据结构,在内存中连续存储,支持随机访问。适用于需要频繁按索引访问元素的场景。 简介 数组是一种线性结构,将相同类型的元素存储在连续的内存空间中。每个元素通过其索引值(数…...

Linux基本指令篇 —— touch指令

touch是Linux和Unix系统中一个非常基础但实用的命令,主要用于操作文件的时间戳和创建空文件。下面我将详细介绍这个命令的用法和功能。 目录 一、基本功能 1. 创建空文件 2. 同时创建多个文件 3. 创建带有空格的文件名(需要使用引号) 二、…...

【后端高阶面经:消息队列篇】23、Kafka延迟消息:实现高并发场景下的延迟任务处理

一、延迟消息的核心价值与Kafka的局限性 在分布式系统中,延迟消息是实现异步延迟任务的核心能力,广泛应用于订单超时取消、库存自动释放、消息重试等场景。 然而,Apache Kafka作为高吞吐的分布式消息队列,原生并不支持延迟消息功能,需通过业务层或中间层逻辑实现。 1.1…...

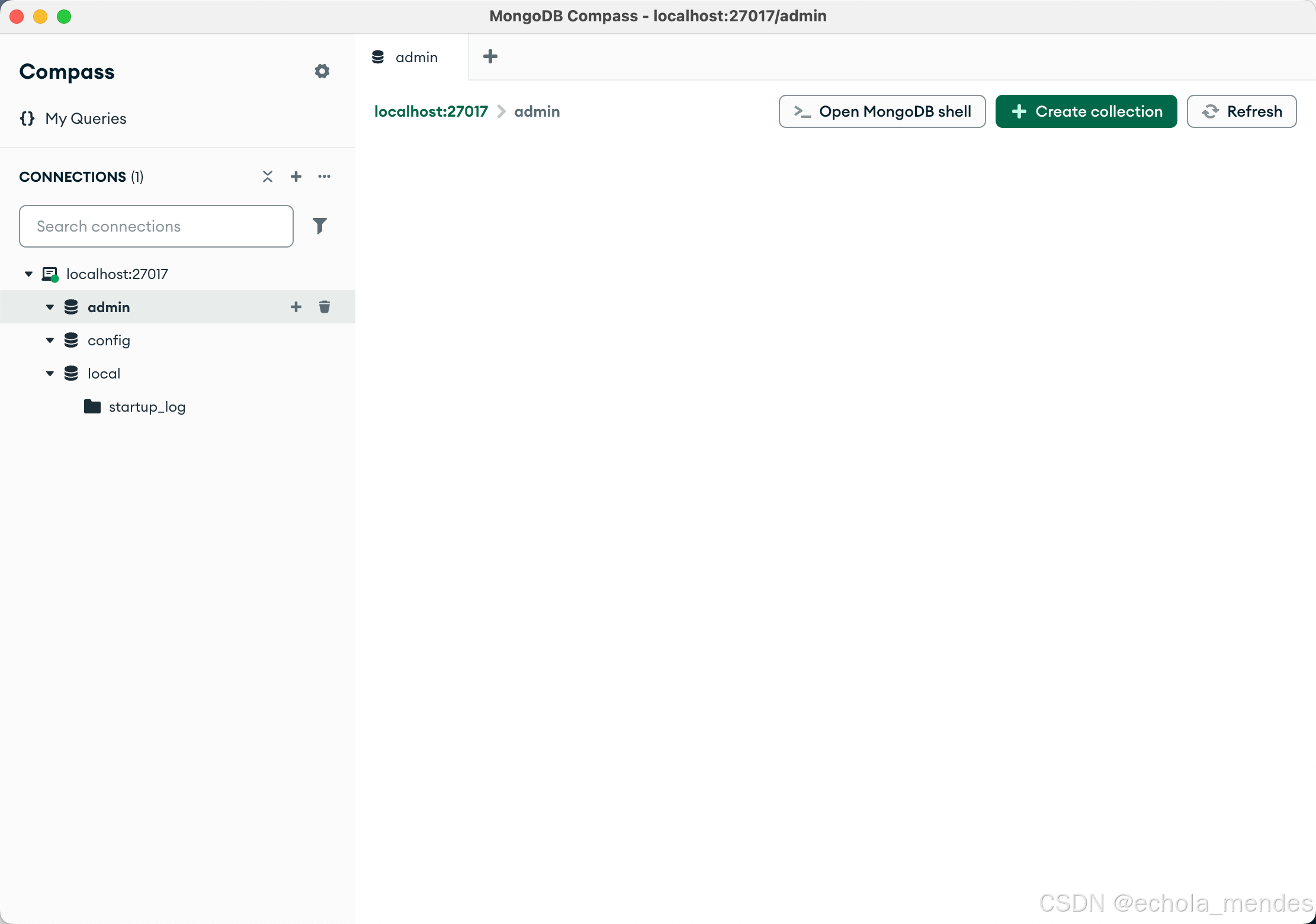

Mac安装MongoDB数据库以及MongoDB Compass可视化连接工具

目录 一、安装 MongoDB 社区版 1、下载 MongoDB 2、配置环境变量 3、配置数据和日志目录 4、启动MongoDB服务 5、使用配置文件启动 6、验证服务运行 二、MongoDB可视化工具MongoDB Compass 一、安装 MongoDB 社区版 1、下载 MongoDB 大家可以直接在官方文档下安装Mo…...

城市地下“隐形卫士”:激光甲烷传感器如何保障燃气安全?

城市“生命线”面临的安全挑战 城市地下管网如同人体的“血管”和“神经”,承载着燃气、供水、电力、通信等重要功能,一旦发生泄漏或爆炸,将严重影响城市运行和居民安全。然而,由于管线老化、违规施工、监管困难等问题࿰…...

MySQL推出全新Hypergraph优化器,正式进军OLAP领域!

在刚刚过去的 MySQL Summit 2025 大会上,Oracle 发布了一个用于 MySQL 的全新 Hypergraph(超图)优化器,能够为复杂的多表查询生成更好的执行计划,从而优化查询性能。 这个功能目前只在 MySQL HeatWave 云数据库中提供&…...

飞牛fnNAS手机相册备份及AI搜图

目录 一、相册安装应用 二、手机开启自动备份 三、开始备份 四、照片检索 五、AI搜图设置 六、AI搜图测试 七、照片传递 现代的手机,已经成为我们最亲密的“伙伴”。自从手机拍照性能提升后,手机已经完全取代了简单的卡片相机,而且与入门级“单反”相机发起了挑战。在…...

消费类,小家电产品如何做Type-C PD快充快速充电

随着快充技术的快速发展现在市场上的产品接口都在逐渐转为Type-C接口,Type-C可以支持最大20V100W的功率。未来Type-C大概会变成最通用的接口,而你的产品却还是还在用其他的接口必然会被淘汰, 而要使小家电用到PD快充,就需要使用到Type-C快充诱…...

连接表、视图和存储过程

1. 视图 1.1. 视图的概念 视图(View):虚拟表,本身不存储数据,而是封装了一个 SQL 查询的结果集。 用途: 只显示部分数据,提高数据访问的安全性。简化复杂查询,提高复用性和可维护…...

人工智能赋能教育:重塑学习生态,开启智慧未来

在科技浪潮风起云涌的当下,人工智能(AI)如同一颗璀璨的新星,正以前所未有的速度和深度融入社会生活的各个领域。教育,作为塑造未来、传承文明的核心领域,自然也未能置身事外。人工智能与教育的结合…...