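FFmpeg Comprehensive Application Examples (5): Merging Multiple Video Streams (Linux Version)

This article is meant to make it easy to build and run the multi-video merging demo on Linux.

Original article: FFmpeg Comprehensive Application Examples (5): Merging Multiple Video Streams

Environment: Ubuntu 20.04 with FFmpeg 4.4 (ffmpeg-4.4-x86_64)

Build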

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/home/workspace/dengzr/linux-x64/lib:/home/workspace/dengzr/ffmpeg-4.4-x86_64/lib/

g++ -o video_combine video_combine.cpp -I /home/workspace/dengzr/ffmpeg-4.4-x86_64/include/ -L /home/workspace/dengzr/ffmpeg-4.4-x86_64/lib/ -Wl,-Bstatic -Wl,-Bdynamic -lpthread -Wl,-Bstatic -Wl,-Bdynamic -lstdc++ -Wl,-Bstatic -Wl,-Bdynamic -ldl -Wl,-Bstatic -Wl,-Bdynamic -lavcodec -lavformat -lswscale -lavutil -lavfilter -lfdk-aac -lvpx -lpostproc -lavfilter -lm -lva -lOpenCL -lmfx -lstdc++ -ldl -lavformat -lm -lz -lavcodec -lvpx -lm -lvpx -lm -lvpx -lm -lvpx -lm -lz -lfdk-aac -lvorbis -lvorbisenc -lx264 -lpthread -lm -ldl -lx265 -lva -lmfx -lstdc++ -ldl -lpostproc -lm -lswscale -lm -lswresample -lm -lavutil -lva-drm -lva -lva-x11 -lva -lm -ldrm -lmfx -lstdc++ -ldl -lOpenCL -lva -lX11 -lXext -lva -ldrm -lm -lpthread -lz -Wl,-Bstatic -Wl,-Bdynamic -lavcodec -lavformat -lswscale -lavutil -lavfilter -lfdk-aac -lvpx -lpostproc -lOpenCL -lmfx -lstdc++ -ldl -lvorbis -lvorbisenc -lx264 -lx265 -lswresample -lva-drm -lva-x11 -lX11 -lXext -Wl,-Bstatic -Wl,-Bdynamic -L /home/workspace/dengzr/ffmpeg-4.4-x86_64/lib/ -L /home/workspace/dengzr/linux-x64/lib -I /home/workspace/dengzr/linux-x64/include/ -lSDL2

Code modified for Linux

/**
 * 张晖 Hui Zhang
 * zhanghuicuc@gmail.com
 * Communication University of China / Digital TV Technology
 *
 * This program merges multiple video inputs into one split-screen output
 * and can apply a different video filter to each input.
 * Audio merging is not supported yet.
 */
#include <stdio.h>
#include <string.h> /* strcat */
#include <math.h>   /* sqrt */

extern "C"
{
#include "libavutil/opt.h"
#include "libavutil/time.h"
#include "libavutil/mathematics.h"
#include "libavcodec/avcodec.h"
#include "libavformat/avformat.h"
// #include "libavfilter/avfiltergraph.h"  /* header not shipped with FFmpeg 4.4 */
#include "libavfilter/buffersink.h"
#include "libavfilter/buffersrc.h"
};

// #include <crtdbg.h>

/*
FIX: H.264 in some container formats (FLV, MP4, MKV etc.) needs the
"h264_mp4toannexb" bitstream filter (BSF)
  * Add SPS, PPS in front of IDR frame
  * Add start code ("0,0,0,1") in front of NALU
H.264 in some containers (MPEG2TS) doesn't need this BSF.
*/
//'1': Use H.264 Bitstream Filter
#define USE_H264BSF 0

/*
FIX: AAC in some container formats (FLV, MP4, MKV etc.) needs the
"aac_adtstoasc" bitstream filter (BSF)
*/
//'1': Use AAC Bitstream Filter
#define USE_AACBSF 0

typedef enum {
    VFX_NULL = 0,
    VFX_EDGE = 1,
    VFX_NEGATE = 2
} VFX;

typedef enum {
    AFX_NULL = 0,
} AFX;

AVBitStreamFilterContext *aacbsfc = NULL;
AVBitStreamFilterContext *h264bsfc = NULL;

//multiple input
static AVFormatContext **ifmt_ctx;
AVFrame **frame = NULL;
//single output
static AVFormatContext *ofmt_ctx;

typedef struct FilteringContext {
    AVFilterContext *buffersink_ctx;
    AVFilterContext **buffersrc_ctx;
    AVFilterGraph *filter_graph;
} FilteringContext;
static FilteringContext *filter_ctx;

typedef struct InputFile {
    const char *filenames;
    /*
     * position index
     * 0 - 1 - 2
     * 3 - 4 - 5
     * 6 - 7 - 8
     * ...
     */
    uint32_t video_idx;
    //scale level, 0 means keep the same
    //uint32_t video_expand;
    uint32_t video_effect;
    uint32_t audio_effect;
} InputFile;
InputFile *inputfiles;

typedef struct GlobalContext {
    //always be a square, such as 2x2, 3x3
    uint32_t grid_num;
    uint32_t video_num;
    uint32_t enc_width;
    uint32_t enc_height;
    uint32_t enc_bit_rate;
    InputFile *input_file;
    const char *outfilename;
} GlobalContext;
GlobalContext *global_ctx;

static int global_ctx_config()

{
    int i;
    if (global_ctx->grid_num < global_ctx->video_num) {
        av_log(NULL, AV_LOG_ERROR, "Setting a wrong grid_num %d \t The grid_num is smaller than video_num!! \n", global_ctx->grid_num);
        global_ctx->grid_num = global_ctx->video_num;
        //global_ctx->stride = sqrt((double)global_ctx->grid_num);
        av_log(NULL, AV_LOG_ERROR, "Automatically change the grid_num to be same as video_num!! \n");
    }
    //global_ctx->stride = sqrt((double)global_ctx->grid_num);
    for (i = 0; i < global_ctx->video_num; i++) {
        if (global_ctx->input_file[i].video_idx >= global_ctx->grid_num) {
            av_log(NULL, AV_LOG_ERROR, "Invalid video_inx value in the No.%d input\n", global_ctx->input_file[i].video_idx);
            return -1;
        }
    }
    return 0;
}

static int open_input_file(InputFile *input_file)

{
    int ret;
    unsigned int i;
    unsigned int j;
    ifmt_ctx = (AVFormatContext**)av_malloc((global_ctx->video_num)*sizeof(AVFormatContext*));
    for (i = 0; i < global_ctx->video_num; i++) {
        *(ifmt_ctx + i) = NULL;
        if ((ret = avformat_open_input((ifmt_ctx + i), input_file[i].filenames, NULL, NULL)) < 0) {
            av_log(NULL, AV_LOG_ERROR, "Cannot open input file\n");
            return ret;
        }
        if ((ret = avformat_find_stream_info(ifmt_ctx[i], NULL)) < 0) {
            av_log(NULL, AV_LOG_ERROR, "Cannot find stream information\n");
            return ret;
        }
        for (j = 0; j < ifmt_ctx[i]->nb_streams; j++) {
            AVStream *stream;
            AVCodecContext *codec_ctx;
            stream = ifmt_ctx[i]->streams[j];
            codec_ctx = stream->codec;
            if (codec_ctx->codec_type == AVMEDIA_TYPE_VIDEO
                || codec_ctx->codec_type == AVMEDIA_TYPE_AUDIO) {
                /* Open decoder */
                ret = avcodec_open2(codec_ctx,
                    avcodec_find_decoder(codec_ctx->codec_id), NULL);
                if (ret < 0) {
                    av_log(NULL, AV_LOG_ERROR, "Failed to open decoder for stream #%u\n", i);
                    return ret;
                }
            }
        }
        av_dump_format(ifmt_ctx[i], 0, input_file[i].filenames, 0);
    }
    return 0;
}

static int open_output_file(const char *filename)

{
    AVStream *out_stream;
    AVStream *in_stream;
    AVCodecContext *dec_ctx, *enc_ctx;
    AVCodec *encoder;
    int ret;
    unsigned int i;
    ofmt_ctx = NULL;
    avformat_alloc_output_context2(&ofmt_ctx, NULL, "flv", filename);
    if (!ofmt_ctx) {
        av_log(NULL, AV_LOG_ERROR, "Could not create output context\n");
        return AVERROR_UNKNOWN;
    }
    for (i = 0; i < ifmt_ctx[0]->nb_streams; i++) {
        out_stream = avformat_new_stream(ofmt_ctx, NULL);
        if (!out_stream) {
            av_log(NULL, AV_LOG_ERROR, "Failed allocating output stream\n");
            return AVERROR_UNKNOWN;
        }
        in_stream = ifmt_ctx[0]->streams[i];
        out_stream->time_base = in_stream->time_base;
        dec_ctx = in_stream->codec;
        enc_ctx = out_stream->codec;
        if (dec_ctx->codec_type == AVMEDIA_TYPE_VIDEO
            || dec_ctx->codec_type == AVMEDIA_TYPE_AUDIO) {
            /* In this example, we transcode to same properties (picture size,
             * sample rate etc.). These properties can be changed for output
             * streams easily using filters */
            if (dec_ctx->codec_type == AVMEDIA_TYPE_VIDEO) {
                /* in this example, we choose transcoding to same codec */
                encoder = avcodec_find_encoder(AV_CODEC_ID_H264);
                enc_ctx->height = global_ctx->enc_height;
                enc_ctx->width = global_ctx->enc_width;
                enc_ctx->sample_aspect_ratio = dec_ctx->sample_aspect_ratio;
                /* take first format from list of supported formats */
                enc_ctx->pix_fmt = encoder->pix_fmts[0];
                enc_ctx->me_range = 16;
                enc_ctx->max_qdiff = 4;
                enc_ctx->bit_rate = global_ctx->enc_bit_rate;
                enc_ctx->qcompress = 0.6;
                /* video time_base can be set to whatever is handy and supported by encoder */
                enc_ctx->time_base.num = 1;
                enc_ctx->time_base.den = 25;
                enc_ctx->gop_size = 250;
                enc_ctx->max_b_frames = 3;
                AVDictionary *d = NULL;
                char *k = av_strdup("preset");    // if your strings are already allocated,
                char *v = av_strdup("ultrafast"); // you can avoid copying them like this
                av_dict_set(&d, k, v, AV_DICT_DONT_STRDUP_KEY | AV_DICT_DONT_STRDUP_VAL);
                ret = avcodec_open2(enc_ctx, encoder, &d);
            }
            else {
                encoder = avcodec_find_encoder(AV_CODEC_ID_AAC);
                enc_ctx->sample_rate = dec_ctx->sample_rate;
                enc_ctx->channel_layout = dec_ctx->channel_layout;
                enc_ctx->channels = av_get_channel_layout_nb_channels(enc_ctx->channel_layout);
                /* take first format from list of supported formats */
                enc_ctx->sample_fmt = encoder->sample_fmts[0];
                AVRational time_base = { 1, enc_ctx->sample_rate };
                enc_ctx->time_base = time_base;
                ret = avcodec_open2(enc_ctx, encoder, NULL);
            }
            if (ret < 0) {
                av_log(NULL, AV_LOG_ERROR, "Cannot open video encoder for stream #%u\n", i);
                return ret;
            }
        }
        else if (dec_ctx->codec_type == AVMEDIA_TYPE_UNKNOWN) {
            av_log(NULL, AV_LOG_FATAL, "Elementary stream #%d is of unknown type, cannot proceed\n", i);
            return AVERROR_INVALIDDATA;
        }
        else {
            /* if this stream must be remuxed */
            ret = avcodec_copy_context(ofmt_ctx->streams[i]->codec,
                ifmt_ctx[0]->streams[i]->codec);
            if (ret < 0) {
                av_log(NULL, AV_LOG_ERROR, "Copying stream context failed\n");
                return ret;
            }
        }
        if (ofmt_ctx->oformat->flags & AVFMT_GLOBALHEADER)
            enc_ctx->flags |= AV_CODEC_FLAG_GLOBAL_HEADER;
    }
    av_dump_format(ofmt_ctx, 0, filename, 1);
    if (!(ofmt_ctx->oformat->flags & AVFMT_NOFILE)) {
        ret = avio_open(&ofmt_ctx->pb, filename, AVIO_FLAG_WRITE);
        if (ret < 0) {
            av_log(NULL, AV_LOG_ERROR, "Could not open output file '%s'", filename);
            return ret;
        }
    }
    /* init muxer, write output file header */
    ret = avformat_write_header(ofmt_ctx, NULL);
    if (ret < 0) {
        av_log(NULL, AV_LOG_ERROR, "Error occurred when opening output file\n");
        return ret;
    }
#if USE_H264BSF
    h264bsfc = av_bitstream_filter_init("h264_mp4toannexb");
#endif
#if USE_AACBSF
    aacbsfc = av_bitstream_filter_init("aac_adtstoasc");
#endif
    return 0;
}

static int init_filter(FilteringContext* fctx, AVCodecContext **dec_ctx, AVCodecContext *enc_ctx, const char *filter_spec)

{
    char args[512];
    char pad_name[10];
    int ret = 0;
    int i;
    AVFilter **buffersrc = (AVFilter**)av_malloc(global_ctx->video_num*sizeof(AVFilter*));
    AVFilter *buffersink = NULL;
    AVFilterContext **buffersrc_ctx = (AVFilterContext**)av_malloc(global_ctx->video_num*sizeof(AVFilterContext*));
    AVFilterContext *buffersink_ctx = NULL;
    AVFilterInOut **outputs = (AVFilterInOut**)av_malloc(global_ctx->video_num*sizeof(AVFilterInOut*));
    AVFilterInOut *inputs = avfilter_inout_alloc();
    AVFilterGraph *filter_graph = avfilter_graph_alloc();
    for (i = 0; i < global_ctx->video_num; i++) {
        buffersrc[i] = NULL;
        buffersrc_ctx[i] = NULL;
        outputs[i] = avfilter_inout_alloc();
    }
    if (!outputs || !inputs || !filter_graph) {
        ret = AVERROR(ENOMEM);
        goto end;
    }
    if (dec_ctx[0]->codec_type == AVMEDIA_TYPE_VIDEO) {
        for (i = 0; i < global_ctx->video_num; i++) {
            buffersrc[i] = (AVFilter *)avfilter_get_by_name("buffer");
        }
        buffersink = (AVFilter *)avfilter_get_by_name("buffersink");
        if (!buffersrc || !buffersink) {
            av_log(NULL, AV_LOG_ERROR, "filtering source or sink element not found\n");
            ret = AVERROR_UNKNOWN;
            goto end;
        }
        for (i = 0; i < global_ctx->video_num; i++) {
            snprintf(args, sizeof(args),
                "video_size=%dx%d:pix_fmt=%d:time_base=%d/%d:pixel_aspect=%d/%d",
                dec_ctx[i]->width, dec_ctx[i]->height, dec_ctx[i]->pix_fmt,
                dec_ctx[i]->time_base.num, dec_ctx[i]->time_base.den,
                dec_ctx[i]->sample_aspect_ratio.num,
                dec_ctx[i]->sample_aspect_ratio.den);
            snprintf(pad_name, sizeof(pad_name), "in%d", i);
            ret = avfilter_graph_create_filter(&(buffersrc_ctx[i]), buffersrc[i], pad_name,
                args, NULL, filter_graph);
            if (ret < 0) {
                av_log(NULL, AV_LOG_ERROR, "Cannot create buffer source\n");
                goto end;
            }
        }
        ret = avfilter_graph_create_filter(&buffersink_ctx, buffersink, "out",
            NULL, NULL, filter_graph);
        if (ret < 0) {
            av_log(NULL, AV_LOG_ERROR, "Cannot create buffer sink\n");
            goto end;
        }
        ret = av_opt_set_bin(buffersink_ctx, "pix_fmts",
            (uint8_t*)&enc_ctx->pix_fmt, sizeof(enc_ctx->pix_fmt),
            AV_OPT_SEARCH_CHILDREN);
        if (ret < 0) {
            av_log(NULL, AV_LOG_ERROR, "Cannot set output pixel format\n");
            goto end;
        }
    }
    else if (dec_ctx[0]->codec_type == AVMEDIA_TYPE_AUDIO) {
        for (i = 0; i < global_ctx->video_num; i++) {
            buffersrc[i] = (AVFilter *)avfilter_get_by_name("abuffer");
        }
        buffersink = (AVFilter *)avfilter_get_by_name("abuffersink");
        if (!buffersrc || !buffersink) {
            av_log(NULL, AV_LOG_ERROR, "filtering source or sink element not found\n");
            ret = AVERROR_UNKNOWN;
            goto end;
        }
        for (i = 0; i < global_ctx->video_num; i++) {
            if (!dec_ctx[i]->channel_layout)
                dec_ctx[i]->channel_layout =
                    av_get_default_channel_layout(dec_ctx[i]->channels);
            /* %I64x is an MSVC-only format specifier; use %llx on Linux */
            snprintf(args, sizeof(args),
                "time_base=%d/%d:sample_rate=%d:sample_fmt=%s:channel_layout=0x%llx",
                dec_ctx[i]->time_base.num, dec_ctx[i]->time_base.den, dec_ctx[i]->sample_rate,
                av_get_sample_fmt_name(dec_ctx[i]->sample_fmt),
                (unsigned long long)dec_ctx[i]->channel_layout);
            snprintf(pad_name, sizeof(pad_name), "in%d", i);
            ret = avfilter_graph_create_filter(&(buffersrc_ctx[i]), buffersrc[i], pad_name,
                args, NULL, filter_graph);
            if (ret < 0) {
                av_log(NULL, AV_LOG_ERROR, "Cannot create buffer source\n");
                goto end;
            }
        }
        if (ret < 0) {
            av_log(NULL, AV_LOG_ERROR, "Cannot create audio buffer source\n");
            goto end;
        }
        ret = avfilter_graph_create_filter(&buffersink_ctx, buffersink, "out",
            NULL, NULL, filter_graph);
        if (ret < 0) {
            av_log(NULL, AV_LOG_ERROR, "Cannot create audio buffer sink\n");
            goto end;
        }
        ret = av_opt_set_bin(buffersink_ctx, "sample_fmts",
            (uint8_t*)&enc_ctx->sample_fmt, sizeof(enc_ctx->sample_fmt),
            AV_OPT_SEARCH_CHILDREN);
        if (ret < 0) {
            av_log(NULL, AV_LOG_ERROR, "Cannot set output sample format\n");
            goto end;
        }
        ret = av_opt_set_bin(buffersink_ctx, "channel_layouts",
            (uint8_t*)&enc_ctx->channel_layout,
            sizeof(enc_ctx->channel_layout), AV_OPT_SEARCH_CHILDREN);
        if (ret < 0) {
            av_log(NULL, AV_LOG_ERROR, "Cannot set output channel layout\n");
            goto end;
        }
        ret = av_opt_set_bin(buffersink_ctx, "sample_rates",
            (uint8_t*)&enc_ctx->sample_rate, sizeof(enc_ctx->sample_rate),
            AV_OPT_SEARCH_CHILDREN);
        if (ret < 0) {
            av_log(NULL, AV_LOG_ERROR, "Cannot set output sample rate\n");
            goto end;
        }
    }
    /* Endpoints for the filter graph. */
    for (i = 0; i < global_ctx->video_num; i++) {
        snprintf(pad_name, sizeof(pad_name), "in%d", i);
        outputs[i]->name = av_strdup(pad_name);
        outputs[i]->filter_ctx = buffersrc_ctx[i];
        outputs[i]->pad_idx = 0;
        if (i == global_ctx->video_num - 1)
            outputs[i]->next = NULL;
        else
            outputs[i]->next = outputs[i + 1];
    }
    inputs->name = av_strdup("out");
    inputs->filter_ctx = buffersink_ctx;
    inputs->pad_idx = 0;
    inputs->next = NULL;
    if (!outputs[0]->name || !inputs->name) {
        ret = AVERROR(ENOMEM);
        goto end;
    }
    if ((ret = avfilter_graph_parse_ptr(filter_graph, filter_spec,
        &inputs, outputs, NULL)) < 0)
        goto end;
    if ((ret = avfilter_graph_config(filter_graph, NULL)) < 0)
        goto end;
    /* Fill FilteringContext */
    fctx->buffersrc_ctx = buffersrc_ctx;
    fctx->buffersink_ctx = buffersink_ctx;
    fctx->filter_graph = filter_graph;

end:
    avfilter_inout_free(&inputs);
    av_free(buffersrc);
    // av_free(buffersrc_ctx);
    avfilter_inout_free(outputs);
    av_free(outputs);
    return ret;
}

static int init_spec_filter(void)

{
    char filter_spec[512];
    char spec_temp[128];
    unsigned int i;
    unsigned int j;
    unsigned int k;
    unsigned int x_coor;
    unsigned int y_coor;
    AVCodecContext** dec_ctx_array;
    int stream_num = ifmt_ctx[0]->nb_streams;
    int stride = (int)sqrt((long double)global_ctx->grid_num);
    int ret;
    filter_ctx = (FilteringContext *)av_malloc_array(stream_num, sizeof(*filter_ctx));
    /* array of pointers, so the element size is sizeof(AVCodecContext*) */
    dec_ctx_array = (AVCodecContext**)av_malloc(global_ctx->video_num*sizeof(AVCodecContext*));
    if (!filter_ctx || !dec_ctx_array)
        return AVERROR(ENOMEM);
    for (i = 0; i < stream_num; i++) {
        filter_ctx[i].buffersrc_ctx = NULL;
        filter_ctx[i].buffersink_ctx = NULL;
        filter_ctx[i].filter_graph = NULL;
        for (j = 0; j < global_ctx->video_num; j++)
            dec_ctx_array[j] = ifmt_ctx[j]->streams[i]->codec;
        if (!(ifmt_ctx[0]->streams[i]->codec->codec_type == AVMEDIA_TYPE_AUDIO
            || ifmt_ctx[0]->streams[i]->codec->codec_type == AVMEDIA_TYPE_VIDEO))
            continue;
        if (ifmt_ctx[0]->streams[i]->codec->codec_type == AVMEDIA_TYPE_VIDEO) {
            if (global_ctx->grid_num == 1)
                snprintf(filter_spec, sizeof(filter_spec), "null");
            else {
                /* black canvas of the output size, labelled [x0] */
                snprintf(filter_spec, sizeof(filter_spec), "color=c=black@1:s=%dx%d[x0];", global_ctx->enc_width, global_ctx->enc_height);
                k = 1;
                for (j = 0; j < global_ctx->video_num; j++) {
                    /* optional per-input effect: [inJ] -> [ineJ] */
                    switch (global_ctx->input_file[j].video_effect) {
                    case VFX_NULL:
                        snprintf(spec_temp, sizeof(spec_temp), "[in%d]null[ine%d];", j, j);
                        strcat(filter_spec, spec_temp);
                        break;
                    case VFX_EDGE:
                        snprintf(spec_temp, sizeof(spec_temp), "[in%d]edgedetect[ine%d];", j, j);
                        strcat(filter_spec, spec_temp);
                        break;
                    case VFX_NEGATE:
                        snprintf(spec_temp, sizeof(spec_temp), "[in%d]negate[ine%d];", j, j);
                        strcat(filter_spec, spec_temp);
                        break;
                    }
                    /* grid cell (column, row) derived from the position index */
                    x_coor = global_ctx->input_file[j].video_idx % stride;
                    y_coor = global_ctx->input_file[j].video_idx / stride;
                    /* scale to one cell, then overlay onto the running canvas */
                    snprintf(spec_temp, sizeof(spec_temp), "[ine%d]scale=w=%d:h=%d[inn%d];[x%d][inn%d]overlay=%d*%d/%d:%d*%d/%d[x%d];", j, global_ctx->enc_width / stride, global_ctx->enc_height / stride, j, k - 1, j, global_ctx->enc_width, x_coor, stride, global_ctx->enc_height, y_coor, stride, k);
                    k++;
                    strcat(filter_spec, spec_temp);
                }
                snprintf(spec_temp, sizeof(spec_temp), "[x%d]null[out]", k - 1);
                strcat(filter_spec, spec_temp);
            }
        }
        else {
            if (global_ctx->video_num == 1)
                snprintf(filter_spec, sizeof(filter_spec), "anull");
            else {
                snprintf(filter_spec, sizeof(filter_spec), "");
                for (j = 0; j < global_ctx->video_num; j++) {
                    snprintf(spec_temp, sizeof(spec_temp), "[in%d]", j);
                    strcat(filter_spec, spec_temp);
                }
                snprintf(spec_temp, sizeof(spec_temp), "amix=inputs=%d[out]", global_ctx->video_num);
                strcat(filter_spec, spec_temp);
            }
        }
        ret = init_filter(&filter_ctx[i], dec_ctx_array,
            ofmt_ctx->streams[i]->codec, filter_spec);
        if (ret)
            return ret;
    }
    av_free(dec_ctx_array);
    return 0;
}

int videocombine(GlobalContext* video_ctx)

{
    int ret;
    int tmp = 0;
    int got_frame_num = 0;
    unsigned int stream_index;
    AVPacket packet;
    AVPacket enc_pkt;
    AVFrame* picref;
    enum AVMediaType mediatype;
    int read_frame_done = 0;
    int flush_now = 0;
    int framecnt = 0;
    int i, j;
    int got_frame = 0; /* initialized so it is never read before a decode call sets it */
    int enc_got_frame = 0;
    int(*dec_func)(AVCodecContext *, AVFrame *, int *, const AVPacket *);
    int(*enc_func)(AVCodecContext *, AVPacket *, const AVFrame *, int *);
    global_ctx = video_ctx;
    global_ctx_config();
    frame = (AVFrame**)av_malloc(global_ctx->video_num*sizeof(AVFrame*));
    picref = av_frame_alloc();
    av_register_all();
    avfilter_register_all();
    if ((ret = open_input_file(global_ctx->input_file)) < 0)
        goto end;
    if ((ret = open_output_file(global_ctx->outfilename)) < 0)
        goto end;
    if ((ret = init_spec_filter()) < 0)
        goto end;
    while (1) {
        /* read and decode one frame from each input, then feed the filter graph */
        for (i = 0; i < global_ctx->video_num; i++) {
            if (read_frame_done < 0) {
                flush_now = 1;
                goto flush;
            }
            while ((read_frame_done = av_read_frame(ifmt_ctx[i], &packet)) >= 0) {
                stream_index = packet.stream_index;
                mediatype = ifmt_ctx[i]->streams[stream_index]->codec->codec_type;
                if (mediatype == AVMEDIA_TYPE_VIDEO || mediatype == AVMEDIA_TYPE_AUDIO) {
                    frame[i] = av_frame_alloc();
                    if (!(frame[i])) {
                        ret = AVERROR(ENOMEM);
                        goto end;
                    }
                    av_packet_rescale_ts(&packet,
                        ifmt_ctx[i]->streams[stream_index]->time_base,
                        ifmt_ctx[i]->streams[stream_index]->codec->time_base);
                    dec_func = (mediatype == AVMEDIA_TYPE_VIDEO) ? avcodec_decode_video2 : avcodec_decode_audio4;
                    ret = dec_func(ifmt_ctx[i]->streams[stream_index]->codec, frame[i], &got_frame, &packet);
                    if (ret < 0) {
                        av_frame_free(&frame[i]);
                        av_log(NULL, AV_LOG_ERROR, "Decoding failed\n");
                        goto end;
                    }
                    if (got_frame) {
                        frame[i]->pts = av_frame_get_best_effort_timestamp(frame[i]);
                        ret = av_buffersrc_add_frame(filter_ctx[stream_index].buffersrc_ctx[i], frame[i]);
                        if (ret < 0) {
                            av_log(NULL, AV_LOG_ERROR, "Error while feeding the filtergraph\n");
                            goto end;
                        }
                    }
                    else {
                        av_frame_free(&(frame[i]));
                    }
                }
                av_free_packet(&packet);
                if (got_frame) {
                    got_frame = 0;
                    break;
                }
            }
        }
        /* pull merged frames from the filter graph, encode and mux them */
        while (1) {
            ret = av_buffersink_get_frame_flags(filter_ctx[stream_index].buffersink_ctx, picref, 0);
            if (ret == AVERROR(EAGAIN) || ret == AVERROR_EOF) {
                ret = 0;
                break;
            }
            if (ret < 0)
                goto end;
            if (picref) {
                enc_pkt.data = NULL;
                enc_pkt.size = 0;
                av_init_packet(&enc_pkt);
                enc_func = (mediatype == AVMEDIA_TYPE_VIDEO) ? avcodec_encode_video2 : avcodec_encode_audio2;
                ret = enc_func(ofmt_ctx->streams[stream_index]->codec, &enc_pkt,
                    picref, &enc_got_frame);
                if (ret < 0) {
                    av_log(NULL, AV_LOG_ERROR, "Encoding failed\n");
                    goto end;
                }
                if (enc_got_frame == 1) {
                    framecnt++;
                    enc_pkt.stream_index = stream_index;
                    //Write PTS
                    AVRational time_base = ofmt_ctx->streams[stream_index]->time_base; //{ 1, 1000 };
                    AVRational r_framerate1 = ifmt_ctx[0]->streams[stream_index]->r_frame_rate; // { 50, 2 };
                    AVRational time_base_q = { 1, AV_TIME_BASE };
                    //Duration between 2 frames (us)
                    int64_t calc_duration = (double)(AV_TIME_BASE)*(1 / av_q2d(r_framerate1)); // internal timestamp
                    //Parameters
                    //enc_pkt.pts = (double)(framecnt*calc_duration)*(double)(av_q2d(time_base_q)) / (double)(av_q2d(time_base));
                    enc_pkt.pts = av_rescale_q(framecnt*calc_duration, time_base_q, time_base);
                    enc_pkt.dts = enc_pkt.pts;
                    enc_pkt.duration = av_rescale_q(calc_duration, time_base_q, time_base); //(double)(calc_duration)*(double)(av_q2d(time_base_q)) / (double)(av_q2d(time_base));
                    enc_pkt.pos = -1;
#if USE_H264BSF
                    av_bitstream_filter_filter(h264bsfc, in_stream->codec, NULL, &pkt.data, &pkt.size, pkt.data, pkt.size, 0);
#endif
#if USE_AACBSF
                    av_bitstream_filter_filter(aacbsfc, out_stream->codec, NULL, &pkt.data, &pkt.size, pkt.data, pkt.size, 0);
#endif
                    ret = av_interleaved_write_frame(ofmt_ctx, &enc_pkt);
                    av_log(NULL, AV_LOG_INFO, "write frame %d\n", framecnt);
                    av_free_packet(&enc_pkt);
                }
                av_frame_unref(picref);
            }
        }
    }

flush:
    /* flush filters and encoders */
    for (i = 0; i < ifmt_ctx[0]->nb_streams; i++) {
        stream_index = i;
        /* flush encoder */
        if (!(ofmt_ctx->streams[stream_index]->codec->codec->capabilities &
            AV_CODEC_CAP_DELAY))
            return 0;
        while (1) {
            enc_pkt.data = NULL;
            enc_pkt.size = 0;
            av_init_packet(&enc_pkt);
            enc_func = (ifmt_ctx[0]->streams[stream_index]->codec->codec_type == AVMEDIA_TYPE_VIDEO) ?
                avcodec_encode_video2 : avcodec_encode_audio2;
            ret = enc_func(ofmt_ctx->streams[stream_index]->codec, &enc_pkt,
                NULL, &enc_got_frame);
            av_frame_free(NULL);
            if (ret < 0) {
                av_log(NULL, AV_LOG_ERROR, "Encoding failed\n");
                goto end;
            }
            if (!enc_got_frame) {
                ret = 0;
                break;
            }
            printf("Flush Encoder: Succeed to encode 1 frame!\tsize:%5d\n", enc_pkt.size);
            //Write PTS
            AVRational time_base = ofmt_ctx->streams[stream_index]->time_base; //{ 1, 1000 };
            AVRational r_framerate1 = ifmt_ctx[0]->streams[stream_index]->r_frame_rate; // { 50, 2 };
            AVRational time_base_q = { 1, AV_TIME_BASE };
            //Duration between 2 frames (us)
            int64_t calc_duration = (double)(AV_TIME_BASE)*(1 / av_q2d(r_framerate1)); // internal timestamp
            //Parameters
            enc_pkt.pts = av_rescale_q(framecnt*calc_duration, time_base_q, time_base);
            enc_pkt.dts = enc_pkt.pts;
            enc_pkt.duration = av_rescale_q(calc_duration, time_base_q, time_base);
            /* copy packet */
            //Convert PTS/DTS
            enc_pkt.pos = -1;
            framecnt++;
            ofmt_ctx->duration = enc_pkt.duration * framecnt;
            /* mux encoded frame */
            ret = av_interleaved_write_frame(ofmt_ctx, &enc_pkt);
        }
    }
    av_write_trailer(ofmt_ctx);
#if USE_H264BSF
    av_bitstream_filter_close(h264bsfc);
#endif
#if USE_AACBSF
    av_bitstream_filter_close(aacbsfc);
#endif

end:
    av_free_packet(&packet);
    for (i = 0; i < global_ctx->video_num; i++) {
        av_frame_free(&(frame[i]));
        for (j = 0; j < ofmt_ctx->nb_streams; j++) {
            avcodec_close(ifmt_ctx[i]->streams[j]->codec);
        }
    }
    av_free(frame);
    av_free(picref);
    for (i = 0; i < ofmt_ctx->nb_streams; i++) {
        if (ofmt_ctx && ofmt_ctx->nb_streams > i && ofmt_ctx->streams[i] && ofmt_ctx->streams[i]->codec)
            avcodec_close(ofmt_ctx->streams[i]->codec);
        av_free(filter_ctx[i].buffersrc_ctx);
        if (filter_ctx && filter_ctx[i].filter_graph)
            avfilter_graph_free(&filter_ctx[i].filter_graph);
    }
    av_free(filter_ctx);
    for (i = 0; i < global_ctx->video_num; i++)
        avformat_close_input(&(ifmt_ctx[i]));
    if (ofmt_ctx && !(ofmt_ctx->oformat->flags & AVFMT_NOFILE))
        avio_close(ofmt_ctx->pb);
    avformat_free_context(ofmt_ctx);
    av_free(ifmt_ctx);
    if (ret < 0)
        av_log(NULL, AV_LOG_ERROR, "Error occurred\n");
    return (ret ? 1 : 0);
}

int main(int argc, char **argv)

{
    //test 2x2
    inputfiles = (InputFile*)av_malloc_array(4, sizeof(InputFile));
    if (!inputfiles)
        return AVERROR(ENOMEM);
    inputfiles[0].filenames = "in1.flv";
    //inputfiles[0].video_expand = 0;
    inputfiles[0].video_idx = 0;
    inputfiles[0].video_effect = VFX_EDGE;
    inputfiles[0].audio_effect = AFX_NULL;
    inputfiles[1].filenames = "in2.flv";
    //inputfiles[1].video_expand = 0;
    inputfiles[1].video_idx = 1;
    inputfiles[1].video_effect = VFX_NULL;
    inputfiles[1].audio_effect = AFX_NULL;
    inputfiles[2].filenames = "in3.flv";
    //inputfiles[2].video_expand = 0;
    inputfiles[2].video_idx = 2;
    inputfiles[2].video_effect = VFX_NULL;
    inputfiles[2].audio_effect = AFX_NULL;
    inputfiles[3].filenames = "in4.flv";
    //inputfiles[3].video_expand = 0;
    inputfiles[3].video_idx = 3;
    inputfiles[3].video_effect = VFX_NEGATE;
    inputfiles[3].audio_effect = AFX_NULL;
    global_ctx = (GlobalContext*)av_malloc(sizeof(GlobalContext));
    global_ctx->video_num = 4;
    global_ctx->grid_num = 4;
    global_ctx->enc_bit_rate = 500000;
    global_ctx->enc_height = 360;
    global_ctx->enc_width = 640;
    global_ctx->outfilename = "combined.flv";
    global_ctx->input_file = inputfiles;
    return videocombine(global_ctx);
}

——多路视频合并(Linux版本))

ffmpeg综合应用示例(五)——多路视频合并(Linux版本)

本文的目的为方便Linux下编译运行多路视频合成Demo 原文:ffmpeg综合应用示例(五)——多路视频合并 Ubuntu 20.04 ffmpeg version ffmpeg-4.4-x86_64 编译 export LD_LIBRARY_PATH$LD_LIBRARY_PATH:/home/workspace/dengzr/linux-x64/lib…...

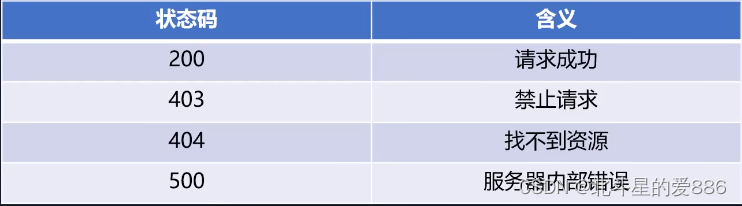

Node.js-http模块服务端请求与响应操作,请求报文与响应报文

简单案例创建HTTP服务端: // 导入 http 模块 const http require("http"); // 创建服务对象 const server http.createServer((request, response) > {// 设置编码格式,解决中文乱码问题response.setHeader("content-type", &…...

除了PS,还有那些软件可以打开PSD文件

设计师在交接文件时,会看到各种格式的扩展文件,不同的格式需要不同的软件来运行。大多数人都听说过流行的文件格式PSD,因为它是最常用的图片格式之一,还有JPG、PNG等。然而,与JPG和PNG不同的是,PSD格式文件…...

uniapp h5支付宝支付后端返回Form表单,前端如何处理

提示:文章写完后,目录可以自动生成,如何生成可参考右边的帮助文档 文章目录 前言1.调取接口拿到后端返回的form表单 前言 uniapp h5 支付宝支付,后端返回一串form表单,前端如何拿到支付串并且调用支付 1.调取接口拿到…...

【华秋干货铺】PCB布线技巧升级:高速信号篇

如下表所示,接口信号能工作在8Gbps及以上速率,由于速率很高,PCB布线设计要求会更严格,在前几篇关于PCB布线内容的基础上,还需要根据本篇内容的要求来进行PCB布线设计。 高速信号布线时尽量少打孔换层,换层优…...

c#:ObservableCollection<T>的用法

1.说明: ObservableCollection:表示一个动态数据收集,该集合在添加或删除项或刷新整个列表时提供通知。 2.使用: 首先声明一个类 public ObservableCollection ProItems;//具体情况具体写对应的信息 表格DataGrid案例ÿ…...

)

Linux 端口号占用如何处理(使用命令处理)

查看被占用端口号 sudo netstat -tlnp 端口号 示例: sudo netstat -tlnp 3380杀死进程 sudo kill 进程Id sudo kill 11032...

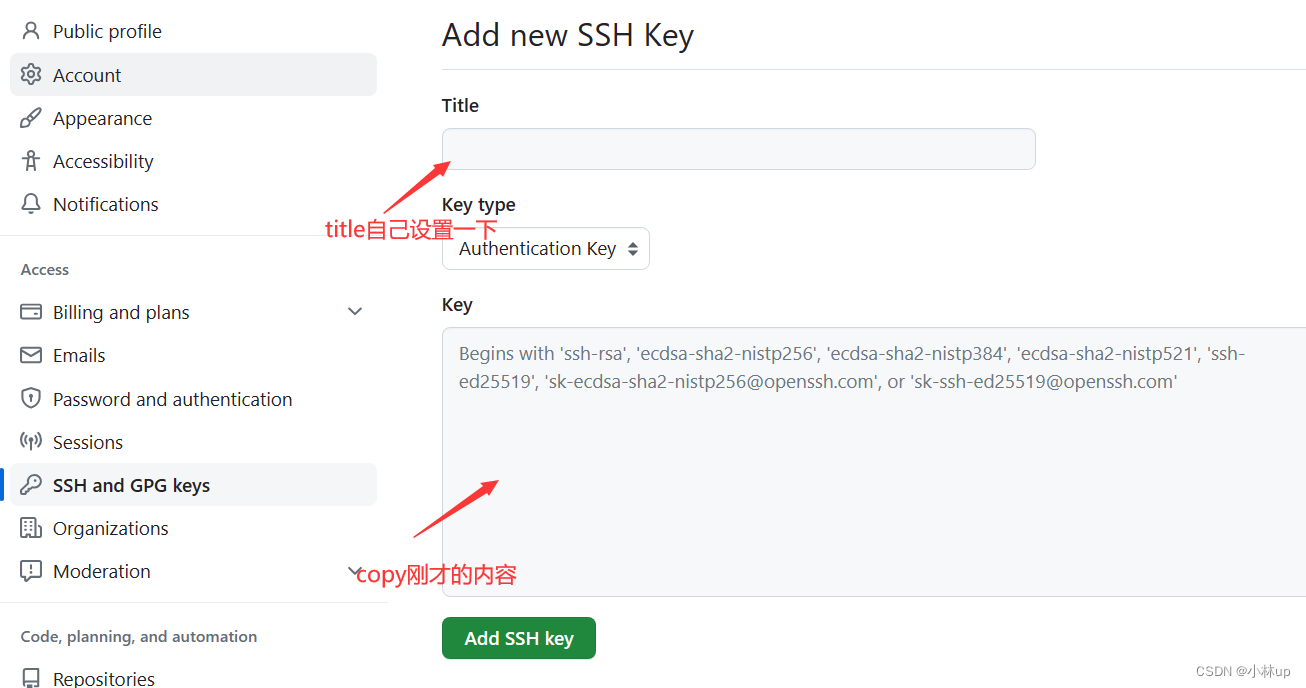

ubuntu git操作记录设置ssh key

用到的命令: 安装git sudo apt-get install git配置git用户和邮箱 git config --global user.name “用户名” git config --global user.email “邮箱地址”安装ssh sudo apt-get install ssh然后查看安装状态: ps -e | grep sshd4. 查看有无ssh k…...

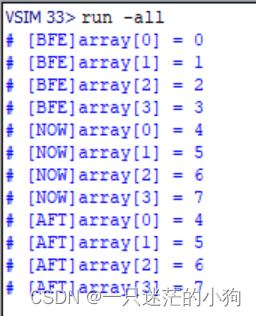

SystemVerilog数组参数传递及引用方法总结

一、将常数数组传递给task/function 如下面的程序,将一个常数数组传递给function module my_array_test();function array_test(int array[4]);foreach(array[i]) begin$display("array[%0d] %0d", i, array[i]);endendfunctioninitial beginarray_tes…...

Shell脚本学习-While循环1

当型循环和直到型循环: 循环语句常用于重复执行一条指令或一组指令,直到条件不满足时停止。 在企业实际应用中,常用于守护进程或者持续运行的程序。 while语法结构: while 条件 do指令... done while循环语句对后面的条件表达…...

docker for Windows, WSL2 ,Hyper-v的关系

Hyper-v Hyper-V是由微软开发的一种虚拟化技术和虚拟机管理器。它允许在Windows操作系统上创建和运行多个虚拟机实例,每个虚拟机可以运行独立的操作系统和应用程序。属于硬件虚拟化。 WSL2 WSL2在技术上与WSL有很大的不同。在WSL2中,Windows 10引入了…...

SAS-数据集SQL水平合并

一、SQL水平合并基本语法 sql的合并有两步,step1:进行笛卡尔乘积运算,第一个表的每一行合并第二个表的每一行,即表a有3行,表b有3行,则合并后3*39行。笛卡尔过程包含源数据的所有列,相同列名会合…...

企业既要用u盘又要防止u盘泄密怎么办?

企业在日常生产生活过程中,使用u盘交换数据是最企业最常用也是最便携的方式,但是在使用u盘的同时,也给企业的数据保密工作带来了很大的挑战,往往很多情况下企业的是通过u盘进行数据泄漏的。很多企业采用一刀切的方式,直…...

汉明距离,两个整数之间的 汉明距离 指的是这两个数字对应二进制位不同的位置的数目。

题记: 两个整数之间的 汉明距离 指的是这两个数字对应二进制位不同的位置的数目。 给你两个整数 x 和 y,计算并返回它们之间的汉明距离。 示例 1: 输入:x 1, y 4 输出:2 解释: 1 (0 0 0 1) 4 (0 1 0 0…...

Android 之 使用 Camera 拍照

本节引言 本节给大家带来的是Android中Camera的使用,简单点说就是拍照咯,无非两种: 1.调用系统自带相机拍照,然后获取拍照后的图片 2.要么自己写个拍照页面 本节我们来写两个简单的例子体验下上面的这两种情况~ 1.调用系统自带…...

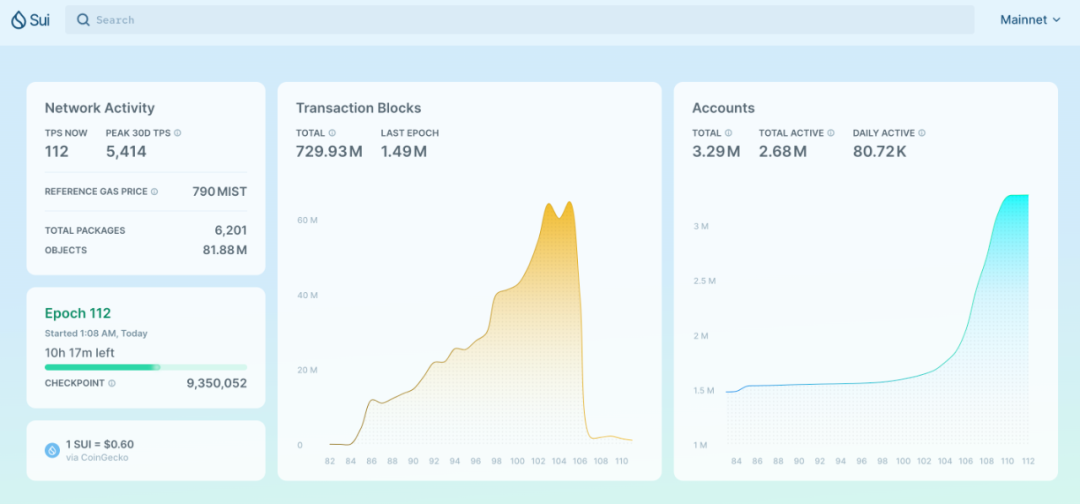

盘点7月Sui生态发展,了解Sui的近期成长历程!

自5月Sui主网上线三个月以来,7月是Sui网络进行最多次重要更新的一个月,Sui网络和生态正呈指数形式不断向上发展。为吸引更多的项目或开发者加入生态构建以及活跃用户参与生态,Sui基金会推出了Builder House、黑客松、Bullshark Quests、NFT再…...

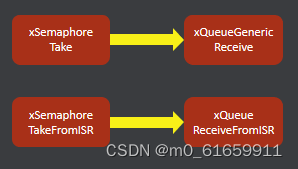

6.物联网操作系统信号量

一。信号量的概念与应用 信号量定义 FreeRTOS信号量介绍 FreeRTOS信号量工作原理 1.信号量的定义 多任务环境下使用,用来协调多个任务正确合理使用临界资源。 2.FreeRTOS信号量介绍 Semaphore包括Binary,Count,Mutex; Mutex包…...

《向量数据库指南》——使用Milvus Cloud操作员安装Milvus Cloud独立版

Milvus cloud操作员HelmDocker Compose Milvus cloud Operator是一种解决方案,帮助您在目标Kubernetes(K8s)集群上部署和管理完整的Milvus cloud服务堆栈。该堆栈包含所有Milvus cloud组件和相关依赖项,如etcd、Pulsar和MinIO。本主题介绍如何使用Milvus cloud Operator安…...

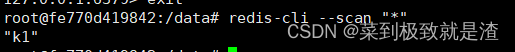

Redis的基础知识

目录 一、什么是Redis 二、关于Redis的一些基本知识 (1)set命令 (2)get命令 三、Redis中的一些常用命令 (1)keys (2)exists (3)type (4…...

Sorting Layer与Order in Layer

就像是两个数相比,比如34与26,Sorting Layer决定的是十位,而Order in Layer决定的是个位,如果Sorting Layer的级别比较高,则可以忽略Order in Layer的比较,当比较的二者的Sorting Layer级别相同,…...

SciencePlots——绘制论文中的图片

文章目录 安装一、风格二、1 资源 安装 # 安装最新版 pip install githttps://github.com/garrettj403/SciencePlots.git# 安装稳定版 pip install SciencePlots一、风格 简单好用的深度学习论文绘图专用工具包–Science Plot 二、 1 资源 论文绘图神器来了:一行…...

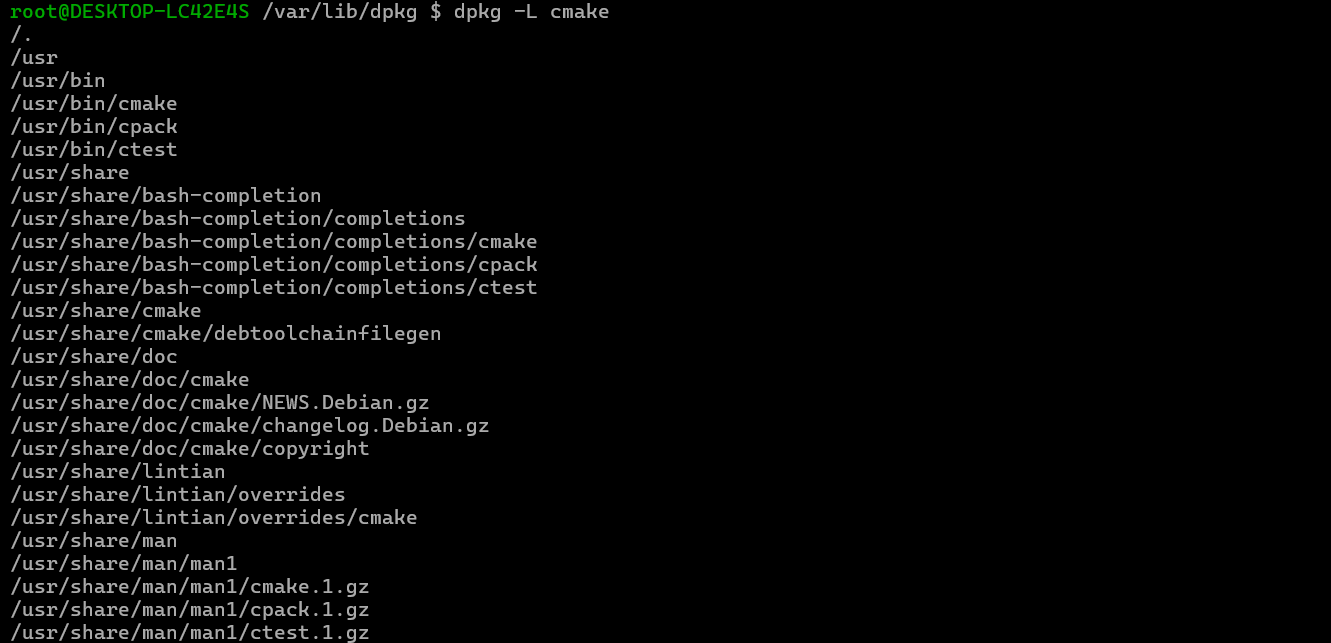

Debian系统简介

目录 Debian系统介绍 Debian版本介绍 Debian软件源介绍 软件包管理工具dpkg dpkg核心指令详解 安装软件包 卸载软件包 查询软件包状态 验证软件包完整性 手动处理依赖关系 dpkg vs apt Debian系统介绍 Debian 和 Ubuntu 都是基于 Debian内核 的 Linux 发行版ÿ…...

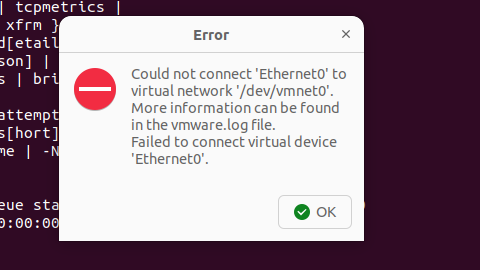

解决Ubuntu22.04 VMware失败的问题 ubuntu入门之二十八

现象1 打开VMware失败 Ubuntu升级之后打开VMware上报需要安装vmmon和vmnet,点击确认后如下提示 最终上报fail 解决方法 内核升级导致,需要在新内核下重新下载编译安装 查看版本 $ vmware -v VMware Workstation 17.5.1 build-23298084$ lsb_release…...

蓝桥杯 2024 15届国赛 A组 儿童节快乐

P10576 [蓝桥杯 2024 国 A] 儿童节快乐 题目描述 五彩斑斓的气球在蓝天下悠然飘荡,轻快的音乐在耳边持续回荡,小朋友们手牵着手一同畅快欢笑。在这样一片安乐祥和的氛围下,六一来了。 今天是六一儿童节,小蓝老师为了让大家在节…...

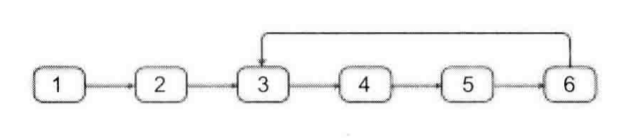

剑指offer20_链表中环的入口节点

链表中环的入口节点 给定一个链表,若其中包含环,则输出环的入口节点。 若其中不包含环,则输出null。 数据范围 节点 val 值取值范围 [ 1 , 1000 ] [1,1000] [1,1000]。 节点 val 值各不相同。 链表长度 [ 0 , 500 ] [0,500] [0,500]。 …...

【算法训练营Day07】字符串part1

文章目录 反转字符串反转字符串II替换数字 反转字符串 题目链接:344. 反转字符串 双指针法,两个指针的元素直接调转即可 class Solution {public void reverseString(char[] s) {int head 0;int end s.length - 1;while(head < end) {char temp …...

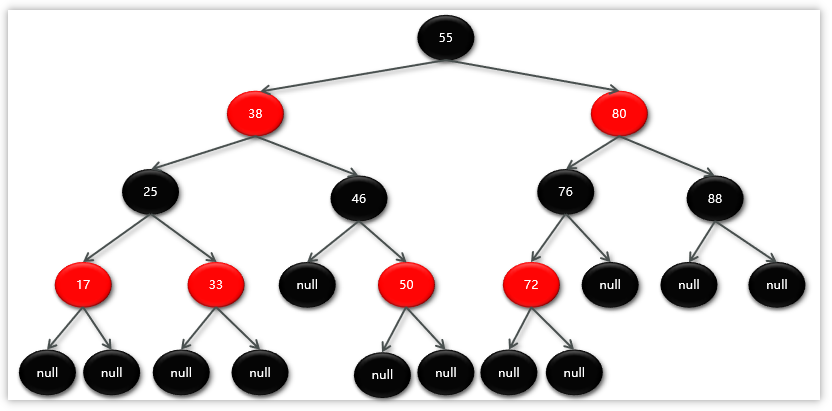

Map相关知识

数据结构 二叉树 二叉树,顾名思义,每个节点最多有两个“叉”,也就是两个子节点,分别是左子 节点和右子节点。不过,二叉树并不要求每个节点都有两个子节点,有的节点只 有左子节点,有的节点只有…...

A2A JS SDK 完整教程:快速入门指南

目录 什么是 A2A JS SDK?A2A JS 安装与设置A2A JS 核心概念创建你的第一个 A2A JS 代理A2A JS 服务端开发A2A JS 客户端使用A2A JS 高级特性A2A JS 最佳实践A2A JS 故障排除 什么是 A2A JS SDK? A2A JS SDK 是一个专为 JavaScript/TypeScript 开发者设计的强大库ÿ…...

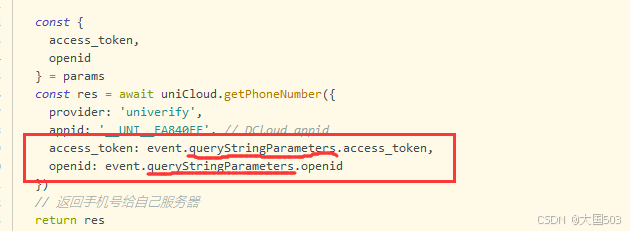

uniapp手机号一键登录保姆级教程(包含前端和后端)

目录 前置条件创建uniapp项目并关联uniClound云空间开启一键登录模块并开通一键登录服务编写云函数并上传部署获取手机号流程(第一种) 前端直接调用云函数获取手机号(第三种)后台调用云函数获取手机号 错误码常见问题 前置条件 手机安装有sim卡手机开启…...

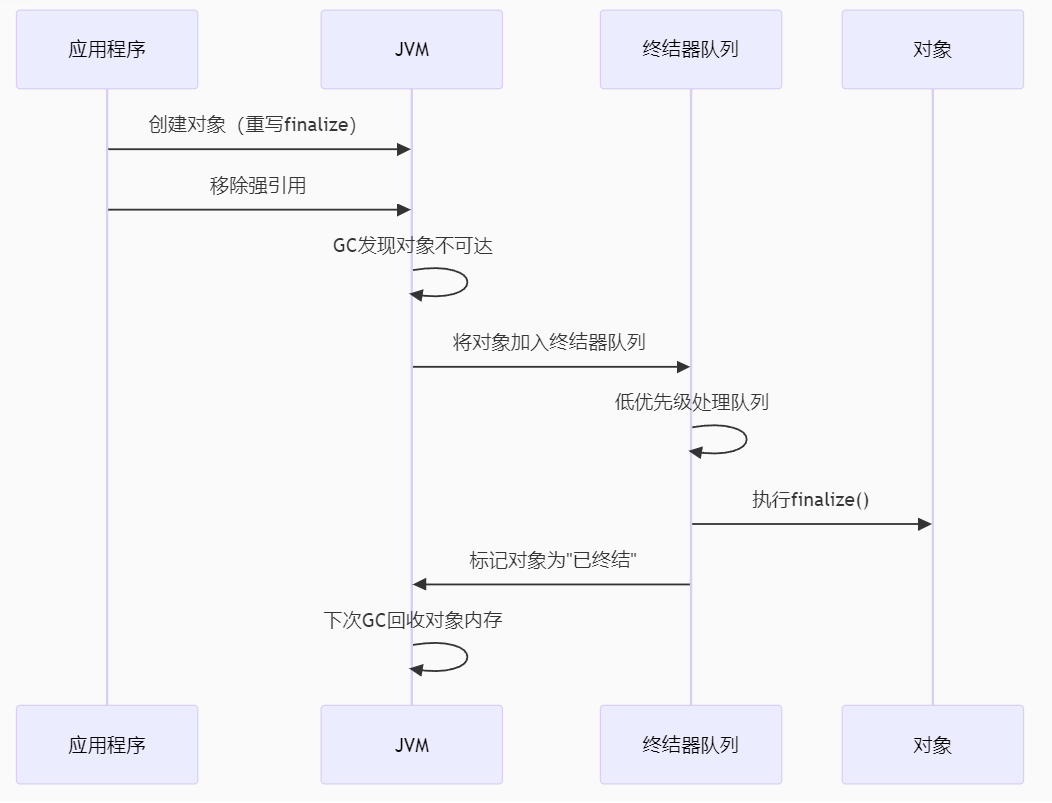

【 java 虚拟机知识 第一篇 】

目录 1.内存模型 1.1.JVM内存模型的介绍 1.2.堆和栈的区别 1.3.栈的存储细节 1.4.堆的部分 1.5.程序计数器的作用 1.6.方法区的内容 1.7.字符串池 1.8.引用类型 1.9.内存泄漏与内存溢出 1.10.会出现内存溢出的结构 1.内存模型 1.1.JVM内存模型的介绍 内存模型主要分…...