Spark 管理和更新Hadoop token 流程

Hadoop Token 管理

- AM 通过 kerberos authentication

- AM 获取 Yarn 和 HDFS Token

- AM send tokens to containers

- Containers load tokens

Enable debug message

log4j.logger.org.apache.hadoop.security=DEBUG

AM Generate tokens

Logs:

23/09/07 22:38:50,375 INFO [main] security.HadoopDelegationTokenManager:57 : Attempting to login to KDC using principal: hadoop_user@PROD.COM, keytab: /home/hadoop_user/hadoop_user.keytab

23/09/07 22:38:50,381 DEBUG [main] security.UserGroupInformation:246 : Hadoop login

23/09/07 22:38:50,381 DEBUG [main] security.UserGroupInformation:192 : hadoop login commit

23/09/07 22:38:50,382 DEBUG [main] security.UserGroupInformation:200 : Using kerberos user: hadoop_user@PROD.COM

23/09/07 22:38:50,382 DEBUG [main] security.UserGroupInformation:218 : Using user: "hadoop_user@PROD.COM" with name: hadoop_user@PROD.COM

23/09/07 22:38:50,382 DEBUG [main] security.UserGroupInformation:230 : User entry: "hadoop_user@PROD.COM"

23/09/07 22:38:50,382 INFO [main] security.HadoopDelegationTokenManager:57 : Successfully logged into KDC.

23/09/07 22:38:51,247 INFO [main] security.HadoopFSDelegationTokenProvider:57 : getting token for: DFS[DFSClient[clientName=DFSClient_NONMAPREDUCE_-113291108_1, ugi=hadoop_user@PROD.COM (auth:KERBEROS)]] with renewer rm/hadoop-rm-1.vip.hadoop.COM@PROD.COM

23/09/07 22:38:51,391 DEBUG [main] security.SaslRpcClient:493 : Sending sasl message state: NEGOTIATE23/09/07 22:38:51,398 DEBUG [main] security.SaslRpcClient:288 : Get token info proto:interface org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolPB info:@org.apache.hadoop.security.token.TokenInfo(value=class org.apache.hadoop.hdfs.security.token.delegation.DelegationTokenSelector)

23/09/07 22:38:51,399 DEBUG [main] security.SaslRpcClient:241 : tokens aren't supported for this protocol or user doesn't have one

23/09/07 22:38:51,399 DEBUG [main] security.SaslRpcClient:313 : Get kerberos info proto:interface org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolPB info:@org.apache.hadoop.security.KerberosInfo(clientPrincipal=, serverPrincipal=dfs.namenode.kerberos.principal)

23/09/07 22:38:51,420 DEBUG [main] security.SaslRpcClient:260 : RPC Server's Kerberos principal name for protocol=org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolPB is nn/hadoop-nn-2.vip.hadoop.COM@PROD.COM

23/09/07 22:38:51,421 DEBUG [main] security.SaslRpcClient:271 : Creating SASL GSSAPI(KERBEROS) client to authenticate to service at hadoop-nn-2.vip.hadoop.COM

23/09/07 22:38:51,425 DEBUG [main] security.SaslRpcClient:194 : Use KERBEROS authentication for protocol ClientNamenodeProtocolPB

23/09/07 22:38:51,441 DEBUG [main] security.SaslRpcClient:493 : Sending sasl message state: INITIATE

23/09/07 22:38:51,506 INFO [main] security.HadoopFSDelegationTokenProvider:57 : getting token for: DFS[DFSClient[clientName=DFSClient_NONMAPREDUCE_-113291108_1, ugi=hadoop_user@PROD.COM (auth:KERBEROS)]] with renewer hadoop_user@PROD.COM

23/09/07 22:38:52,807 INFO [main] security.HadoopDelegationTokenManager:57 : Scheduling renewal in 18.0 h.

23/09/07 22:38:52,809 INFO [main] security.HadoopDelegationTokenManager:57 : Updating delegation tokens.

23/09/07 22:38:52,833 INFO [main] deploy.SparkHadoopUtil:57 : Updating delegation tokens for current user.

23/09/07 22:38:52,858 INFO [dispatcher-CoarseGrainedScheduler] deploy.SparkHadoopUtil:57 : Updating delegation tokens for current user.

23/09/07 22:38:53,119 DEBUG [main] security.SaslRpcClient:288 : Get token info proto:interface org.apache.hadoop.yarn.api.ApplicationClientProtocolPB info:org.apache.hadoop.yarn.security.client.ClientRMSecurityInfo$2@48f2054d

23/09/07 22:38:53,120 DEBUG [main] security.SaslRpcClient:241 : tokens aren't supported for this protocol or user doesn't have one

23/09/07 22:38:53,121 DEBUG [main] security.SaslRpcClient:313 : Get kerberos info proto:interface org.apache.hadoop.yarn.api.ApplicationClientProtocolPB info:org.apache.hadoop.yarn.security.client.ClientRMSecurityInfo$1@6ce26986

23/09/07 22:38:53,124 DEBUG [main] security.SaslRpcClient:343 : getting serverKey: yarn.resourcemanager.principal conf value: rm/_HOST@PROD.COM principal: rm/hadoop-rm-1.hadoop-rm-rm.hm-prod.svc.35.tess.io@PROD.COM

23/09/07 22:38:53,124 DEBUG [main] security.SaslRpcClient:260 : RPC Server's Kerberos principal name for protocol=org.apache.hadoop.yarn.api.ApplicationClientProtocolPB is rm/hadoop-rm-1.hadoop-rm-rm.hm-prod.svc.35.tess.io@PROD.COM

23/09/07 22:38:53,124 DEBUG [main] security.SaslRpcClient:271 : Creating SASL GSSAPI(KERBEROS) client to authenticate to service at hadoop-rm-1.hadoop-rm-rm.hm-prod.svc.35.tess.io

23/09/07 22:38:53,125 DEBUG [main] security.SaslRpcClient:194 : Use KERBEROS authentication for protocol ApplicationClientProtocolPB

23/09/07 22:38:53,131 DEBUG [main] security.SaslRpcClient:493 : Sending sasl message state: INITIATE

23/09/07 22:38:53,182 DEBUG [main] token.Token:260 : Cloned private token Kind: HDFS_DELEGATION_TOKEN, Service: hadoop-nn-1.vip.hadoop.COM:8020, Ident: (token for hadoop_user: HDFS_DELEGATION_TOKEN owner=hadoop_user@PROD.COM, renewer=yarn, realUser=, issueDate=1694151531461, maxDate=1694756331461, sequenceNumber=1007863, masterKeyId=7139) from Kind: HDFS_DELEGATION_TOKEN, Service: ha-hdfs:hadoop, Ident: (token for hadoop_user: HDFS_DELEGATION_TOKEN owner=hadoop_user@PROD.COM, renewer=yarn, realUser=, issueDate=1694151531461, maxDate=1694756331461, sequenceNumber=1007863, masterKeyId=7139)

23/09/07 22:38:53,182 DEBUG [main] token.Token:260 : Cloned private token Kind: HDFS_DELEGATION_TOKEN, Service: hadoop-nn-2.vip.hadoop.COM:8020, Ident: (token for hadoop_user: HDFS_DELEGATION_TOKEN owner=hadoop_user@PROD.COM, renewer=yarn, realUser=, issueDate=1694151531461, maxDate=1694756331461, sequenceNumber=1007863, masterKeyId=7139) from Kind: HDFS_DELEGATION_TOKEN, Service: ha-hdfs:hadoop, Ident: (token for hadoop_user: HDFS_DELEGATION_TOKEN owner=hadoop_user@PROD.COM, renewer=yarn, realUser=, issueDate=1694151531461, maxDate=1694756331461, sequenceNumber=1007863, masterKeyId=7139)

23/09/07 22:38:53,182 DEBUG [main] token.Token:260 : Cloned private token Kind: HDFS_DELEGATION_TOKEN, Service: hadoop-nn-3.vip.hadoop.COM:8020, Ident: (token for hadoop_user: HDFS_DELEGATION_TOKEN owner=hadoop_user@PROD.COM, renewer=yarn, realUser=, issueDate=1694151531461, maxDate=1694756331461, sequenceNumber=1007863, masterKeyId=7139) from Kind: HDFS_DELEGATION_TOKEN, Service: ha-hdfs:hadoop, Ident: (token for hadoop_user: HDFS_DELEGATION_TOKEN owner=hadoop_user@PROD.COM, renewer=yarn, realUser=, issueDate=1694151531461, maxDate=1694756331461, sequenceNumber=1007863, masterKeyId=7139)

CoarseGrainedSchedulerBackend

启动 token manager

override def start(): Unit = {if (UserGroupInformation.isSecurityEnabled()) {delegationTokenManager = createTokenManager()delegationTokenManager.foreach { dtm =>val ugi = UserGroupInformation.getCurrentUser()val tokens = if (dtm.renewalEnabled) {dtm.start()} else {val creds = ugi.getCredentials()dtm.obtainDelegationTokens(creds)if (creds.numberOfTokens() > 0 || creds.numberOfSecretKeys() > 0) {SparkHadoopUtil.get.serialize(creds)} else {null}}if (tokens != null) {updateDelegationTokens(tokens)}}}}HadoopDelegationTokenManager

定时 Refresh tokens

def start(): Array[Byte] = {require(renewalEnabled, "Token renewal must be enabled to start the renewer.")require(schedulerRef != null, "Token renewal requires a scheduler endpoint.")renewalExecutor =ThreadUtils.newDaemonSingleThreadScheduledExecutor("Credential Renewal Thread")val ugi = UserGroupInformation.getCurrentUser()if (ugi.isFromKeytab()) {// In Hadoop 2.x, renewal of the keytab-based login seems to be automatic, but in Hadoop 3.x,// it is configurable (see hadoop.kerberos.keytab.login.autorenewal.enabled, added in// HADOOP-9567). This task will make sure that the user stays logged in regardless of that// configuration's value. Note that checkTGTAndReloginFromKeytab() is a no-op if the TGT does// not need to be renewed yet.val tgtRenewalTask = new Runnable() {override def run(): Unit = {try {ugi.checkTGTAndReloginFromKeytab()} catch {case e: Throwable =>logWarning("Failed to renew TGT from keytab file", e)}}}val tgtRenewalPeriod = sparkConf.get(KERBEROS_RELOGIN_PERIOD)renewalExecutor.scheduleAtFixedRate(tgtRenewalTask, tgtRenewalPeriod, tgtRenewalPeriod,TimeUnit.SECONDS)}updateTokensTask()}private def updateTokensTask(): Array[Byte] = {try {val freshUGI = doLogin()val creds = obtainTokensAndScheduleRenewal(freshUGI)val tokens = SparkHadoopUtil.get.serialize(creds)logInfo("Updating delegation tokens.")schedulerRef.send(UpdateDelegationTokens(tokens))tokens} catch {case _: InterruptedException =>// Ignore, may happen if shutting down.nullcase e: Exception =>val delay = TimeUnit.SECONDS.toMillis(sparkConf.get(CREDENTIALS_RENEWAL_RETRY_WAIT))logWarning(s"Failed to update tokens, will try again in ${UIUtils.formatDuration(delay)}!" +" If this happens too often tasks will fail.", e)scheduleRenewal(delay)null}}

CoarseGrainedSchedulerBackend

case UpdateDelegationTokens(newDelegationTokens) =>updateDelegationTokens(newDelegationTokens)

Container 启动 Load token

23/09/07 23:41:56,279 DEBUG [main] security.UserGroupInformation:246 : Hadoop login

23/09/07 23:41:56,281 DEBUG [main] security.UserGroupInformation:192 : hadoop login commit

23/09/07 23:41:56,284 DEBUG [main] security.UserGroupInformation:214 : Using local user: UnixPrincipal: hadoop_user

23/09/07 23:41:56,285 DEBUG [main] security.UserGroupInformation:218 : Using user: "UnixPrincipal: hadoop_user" with name: hadoop_user

23/09/07 23:41:56,285 DEBUG [main] security.UserGroupInformation:230 : User entry: "hadoop_user"

23/09/07 23:41:56,285 DEBUG [main] security.UserGroupInformation:741 : Reading credentials from location /hadoop/1/yarn/local/usercache/hadoop_user/appcache/application_1684894519955_69959/container_e2311_1684894519955_69959_01_000021/container_tokens

23/09/07 23:41:56,303 DEBUG [main] security.UserGroupInformation:746 : Loaded 7 tokens from /hadoop/1/yarn/local/usercache/hadoop_user/appcache/application_1684894519955_69959/container_e2311_1684894519955_69959_01_000021/container_tokens

23/09/07 23:41:56,304 DEBUG [main] security.UserGroupInformation:787 : UGI loginUser: hadoop_user (auth:SIMPLE)23/09/07 23:44:54,825 DEBUG [Executor 1 task launch worker for task 1785, task 1757.0 in stage 8.0 of app application_1684894519955_69959] security.SaslRpcClient:284 : Get token info proto:interface org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolPB info:@org.apache.hadoop.security.token.TokenInfo(value=class org.apache.hadoop.hdfs.security.token.delegation.DelegationTokenSelector)

23/09/07 23:44:54,831 DEBUG [Executor 1 task launch worker for task 1785, task 1757.0 in stage 8.0 of app application_1684894519955_69959] security.SaslRpcClient:267 : Creating SASL DIGEST-MD5(TOKEN) client to authenticate to service at default

23/09/07 23:44:54,833 DEBUG [Executor 1 task launch worker for task 1785, task 1757.0 in stage 8.0 of app application_1684894519955_69959] security.SaslRpcClient:190 : Use TOKEN authentication for protocol ClientNamenodeProtocolPB

23/09/07 23:44:54,836 DEBUG [Executor 1 task launch worker for task 1785, task 1757.0 in stage 8.0 of app application_1684894519955_69959] security.SaslRpcClient:690 : SASL client callback: setting username: ABZiX2Nhcm1lbEBQUk9ELkVCQVkuQ09NBHlhcm4AigGKc4ZbmYoBipeS35mMAUIqhY4Ggg==

23/09/07 23:44:54,836 DEBUG [Executor 1 task launch worker for task 1785, task 1757.0 in stage 8.0 of app application_1684894519955_69959] security.SaslRpcClient:695 : SASL client callback: setting userPassword

23/09/07 23:44:54,836 DEBUG [Executor 1 task launch worker for task 1785, task 1757.0 in stage 8.0 of app application_1684894519955_69959] security.SaslRpcClient:700 : SASL client callback: setting realm: default

Spark AM 和 Executor 更新收到的 tokens

case UpdateDelegationTokens(tokenBytes) =>logInfo(s"Received tokens of ${tokenBytes.length} bytes")SparkHadoopUtil.get.addDelegationTokens(tokenBytes, env.conf)..... UserGroupInformation.getCurrentUser.addCredentials(creds)

相关文章:

Spark 管理和更新Hadoop token 流程

Hadoop Token 管理 AM 通过 kerberos authenticationAM 获取 Yarn 和 HDFS TokenAM send tokens to containersContainers load tokens Enable debug message log4j.logger.org.apache.hadoop.securityDEBUG AM Generate tokens Logs: 23/09/07 22:38:50,375 INFO [main]…...

Android文件关联

用户需求:Android在系统文件夹找到一个文件想发送自己开发的app进行处理该怎么办? 这时候可以采用两个Activity,一个Activity用作Launcher,一个用于处理发送的文件;具体Activity intent-filter该怎么写了?可以参考下面的代码: <intent-filter><action androi…...

java操作adb查看apk安装包包名【搬代码】

Testpublic static void findadb() throws InterruptedException {String apkip"E:\\需求\\2023\\gql_1.0.1.apk";String findname1"cmd /c cd E:\\appium\\android-sdk\\build-tools\\27.0.2";//没有进到这里String s1 Cmd.exeCmd(findname1);System.out…...

【JAVA】Object类与抽象类

作者主页:paper jie_的博客 本文作者:大家好,我是paper jie,感谢你阅读本文,欢迎一建三连哦。 本文录入于《JAVASE语法系列》专栏,本专栏是针对于大学生,编程小白精心打造的。笔者用重金(时间和…...

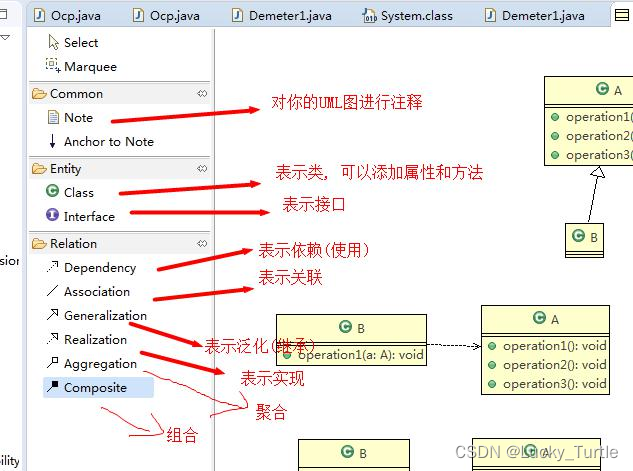

【设计模式】二、UML 类图概述

文章目录 常见含义含义依赖关系(Dependence)泛化关系(Generalization)实现关系(Implementation)关联关系(Association)聚合关系(Aggregation)组合关系&#x…...

百望云亮相服贸会 重磅发布业财税融Copilot

小望小望,我要一杯拿铁! 好的,已下单成功,请问要开具发票嘛? 在获得确认的指令后, 百小望AI智能助手 按用户要求成功开具了一张电子发票! 这是2023年服贸会国家会议中心成果发布现场&#x…...

带配置项注释)

vue 项目代码混淆配置(自定义插件适用)带配置项注释

文章目录 vue 项目代码混淆配置(自定义插件适用)带配置项注释一、概要二、混淆步骤1. 引入混淆插件2. 添加混淆配置3. 执行代码混淆 vue 项目代码混淆配置(自定义插件适用)带配置项注释 一、概要 本文章适用 vue-cli3/webpack4 …...

手写Spring:第7章-实现应用上下文

文章目录 一、目标:实现应用上下文二、设计:实现应用上下文三、实现:实现应用上下文3.1 工程结构3.2 Spring应用上下文和Bean对象扩展类图3.3 对象工厂和对象扩展接口3.3.1 对象工厂扩展接口3.3.2 对象扩展接口 3.4 定义应用上下文3.4.1 定义…...

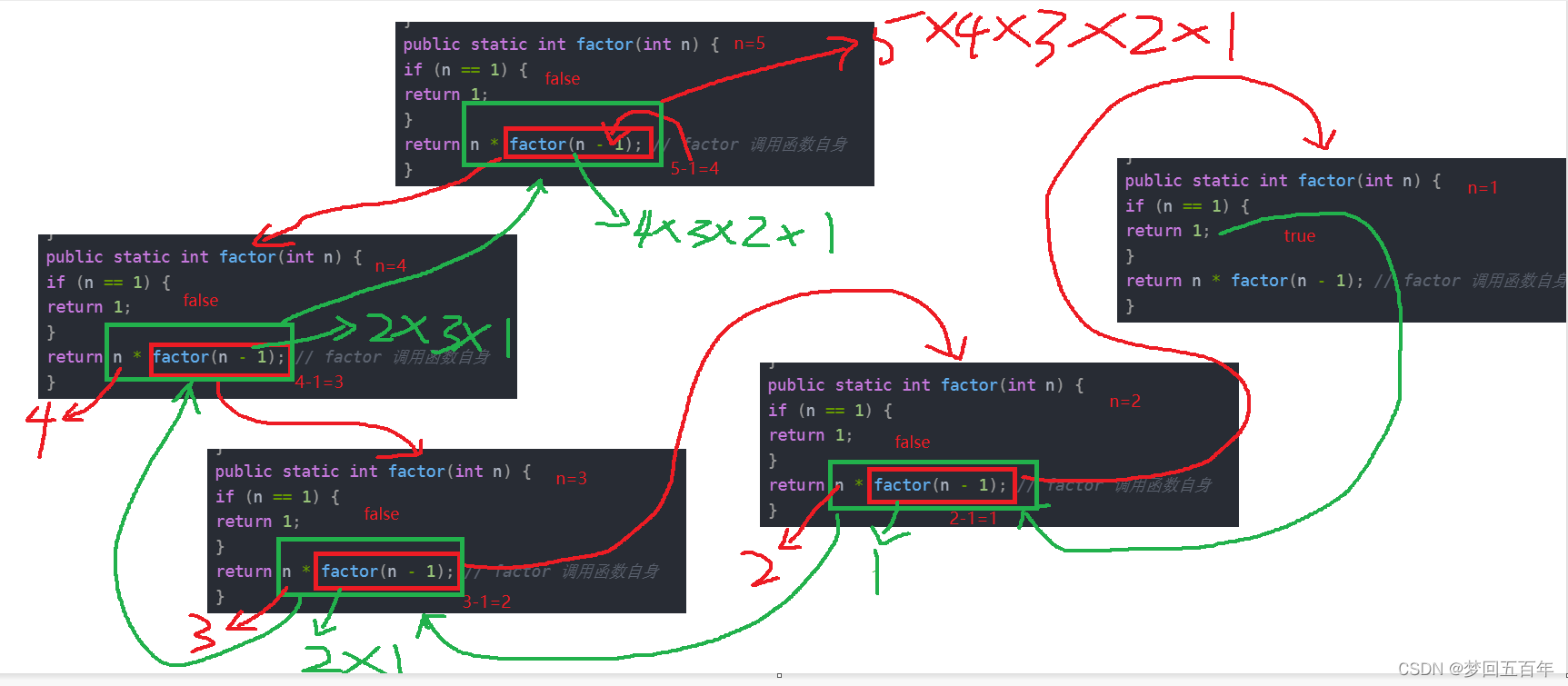

Java(三)逻辑控制(if....else,循环语句)与方法

逻辑控制(if....else,循环语句)与方法 四、逻辑控制1.if...else(常用)1.1表达格式(三种) 2.switch...case(用的少)2.1表达式 3.while(常用)3.1语法格式3.2关键字beak:3.3关键字 continue: 4.for…...

)

通过API接口实现数据实时更新的方案(InsCode AI 创作助手)

要实现实时数据更新,需要采用轮询或者长连接两种方式。 1. 轮询方式 轮询方式指的是客户端定时向服务器请求数据的方式,通过一定的时间间隔去请求最新数据。具体的实现方法包括: 客户端定时向服务器发送请求,获取最新数据&…...

分类预测 | MATLAB实现PCA-GRU(主成分门控循环单元)分类预测

分类预测 | MATLAB实现PCA-GRU(主成分门控循环单元)分类预测 目录 分类预测 | MATLAB实现PCA-GRU(主成分门控循环单元)分类预测预测效果基本介绍程序设计参考资料致谢 预测效果 基本介绍 Matlab实现基于PCA-GRU主成分分析-门控循环单元多输入分类预测(完整程序和数据…...

el-dialog无法关闭

代码如下,:visible.sync"result2DeptVisible"来控制dialog的隐显问题,但当点击关闭的时候 ,无法关闭!! <el-dialog :visible.sync"result2DeptVisible" class"el-dialog-view">&…...

)

MATLAB算法实战应用案例精讲-【大模型】LLM算法(最终篇)

目录 前言 知识储备 1).通讯原语操作: 2).并行计算技术: 算法原理...

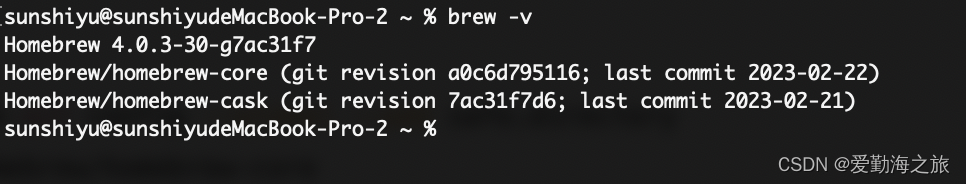

Mac brew -v 报错 fatal: detected dubious ownership in repository

Mac 电脑查询 brew版本时报错,如下错误: Last login: Fri Sep 8 14:56:21 on ttys021 sunshiyusunshiyudeMacBook-Pro-2 ~ % brew -v Homebrew 4.0.3-30-g7ac31f7 fatal: detected dubious ownership in repository at /usr/local/Homebrew/Library/Ta…...

Docker镜像、容器、仓库及数据管理

使用Docker镜像 获取镜像 使用docker pull命令,使用docker search命令可以搜索远端仓库中共享的镜像。 运行容器 使用docker run [OPTIONS] IMAGE [COMMAND] [ARG...]命令,如:docker run --name ubuntu_test --rm -it ubuntu:test /bin/b…...

Java的选择排序、冒泡排序、插入排序

不爱生姜不吃醋 如果本文有什么错误的话欢迎在评论区中指正 与其明天开始,不如现在行动! 文章目录 🌴前言🌴一、选择排序1.原理2.时间复杂度3.代码实现 🌴二、冒泡排序1. 原理2. 时间复杂度3.代码实现 🌴三…...

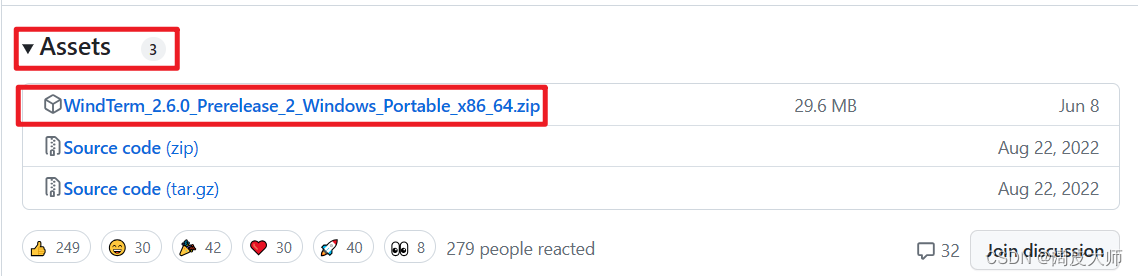

Vagrant + VirtualBox + CentOS7 + WindTerm 5分钟搭建本地linux开发环境

1、准备阶段 将环境搭建所需要的工具和文件下载好(页面找不到可参考Tips部分) Vagrant 版本:vagrant_2.2.18_x86_64.msi 链接:https://developer.hashicorp.com/vagrant/downloads VirtualBox 版本:VirtualBox-6.1.46…...

关于Ajax

1.Ajax 异步 JavaScript 和 XML, 或 Ajax 本身不是一种技术,而是一种将一些现有技术结合起来使用的方法,包括:HTML 或 XHTML、CSS、JavaScript、DOM、XML、XSLT、以及最重要的 XMLHttpRequest 对象。当使用结合了这些技术的 Aja…...

打开转盘锁 -- BFS

打开转盘锁 这里提供两种实现,单向BFS和双向BFS。 class OpenLock:"""752. 打开转盘锁https://leetcode.cn/problems/open-the-lock/"""def solution(self, deadends: List[str], target: str) -> int:"""单向BFS:…...

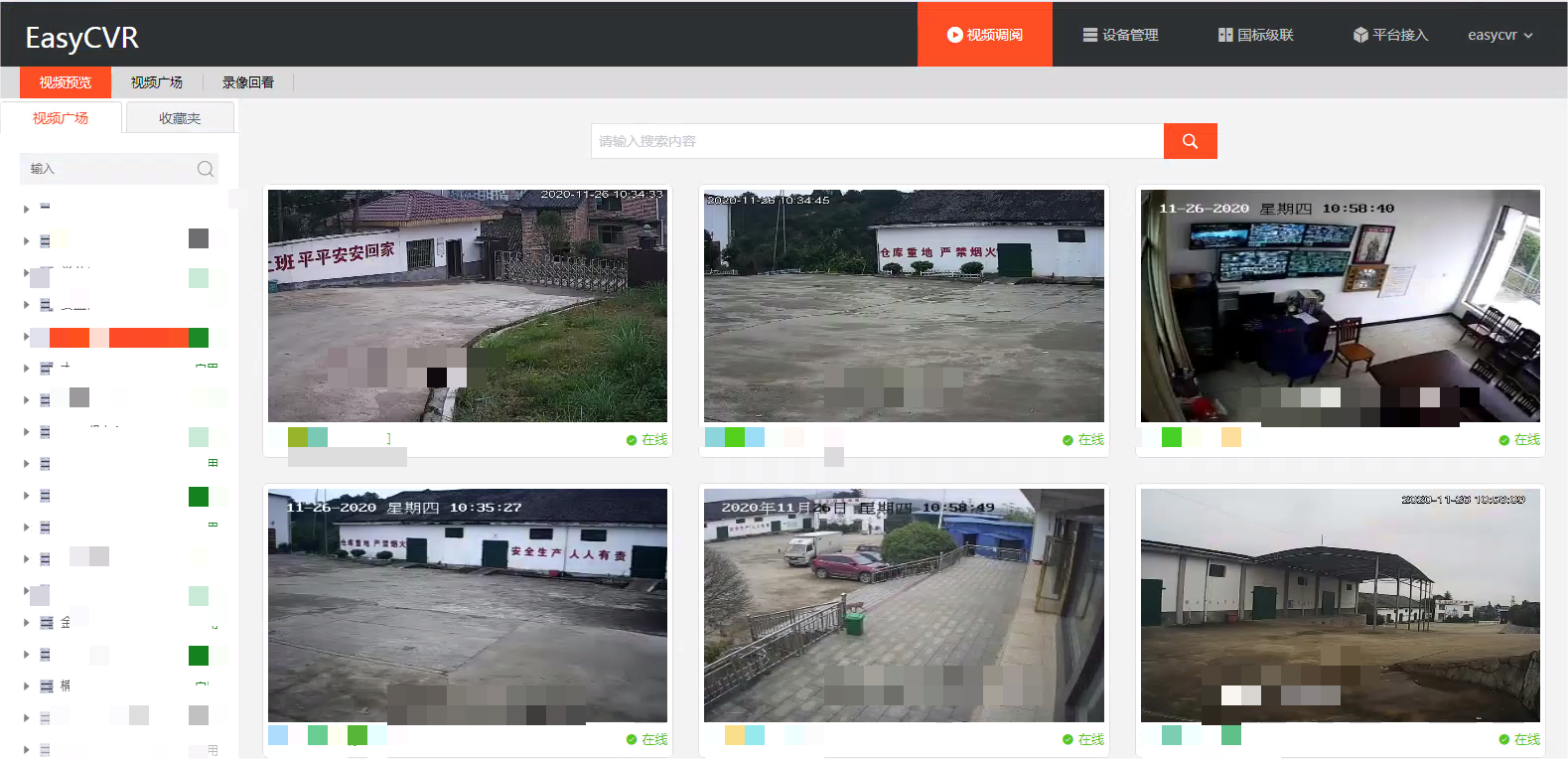

国标EHOME视频平台EasyCVR视频融合平台助力地下停车场安全

EasyCVR能在复杂的网络环境中,将分散的各类视频资源进行统一汇聚、整合、集中管理,实现视频资源的鉴权管理、按需调阅、全网分发、云存储、智能分析等,视频智能分析平台EasyCVR融合性强、开放度高、部署轻快,在智慧工地、智慧园区…...

KubeSphere 容器平台高可用:环境搭建与可视化操作指南

Linux_k8s篇 欢迎来到Linux的世界,看笔记好好学多敲多打,每个人都是大神! 题目:KubeSphere 容器平台高可用:环境搭建与可视化操作指南 版本号: 1.0,0 作者: 老王要学习 日期: 2025.06.05 适用环境: Ubuntu22 文档说…...

深入剖析AI大模型:大模型时代的 Prompt 工程全解析

今天聊的内容,我认为是AI开发里面非常重要的内容。它在AI开发里无处不在,当你对 AI 助手说 "用李白的风格写一首关于人工智能的诗",或者让翻译模型 "将这段合同翻译成商务日语" 时,输入的这句话就是 Prompt。…...

智慧医疗能源事业线深度画像分析(上)

引言 医疗行业作为现代社会的关键基础设施,其能源消耗与环境影响正日益受到关注。随着全球"双碳"目标的推进和可持续发展理念的深入,智慧医疗能源事业线应运而生,致力于通过创新技术与管理方案,重构医疗领域的能源使用模式。这一事业线融合了能源管理、可持续发…...

微信小程序之bind和catch

这两个呢,都是绑定事件用的,具体使用有些小区别。 官方文档: 事件冒泡处理不同 bind:绑定的事件会向上冒泡,即触发当前组件的事件后,还会继续触发父组件的相同事件。例如,有一个子视图绑定了b…...

CVPR 2025 MIMO: 支持视觉指代和像素grounding 的医学视觉语言模型

CVPR 2025 | MIMO:支持视觉指代和像素对齐的医学视觉语言模型 论文信息 标题:MIMO: A medical vision language model with visual referring multimodal input and pixel grounding multimodal output作者:Yanyuan Chen, Dexuan Xu, Yu Hu…...

)

进程地址空间(比特课总结)

一、进程地址空间 1. 环境变量 1 )⽤户级环境变量与系统级环境变量 全局属性:环境变量具有全局属性,会被⼦进程继承。例如当bash启动⼦进程时,环 境变量会⾃动传递给⼦进程。 本地变量限制:本地变量只在当前进程(ba…...

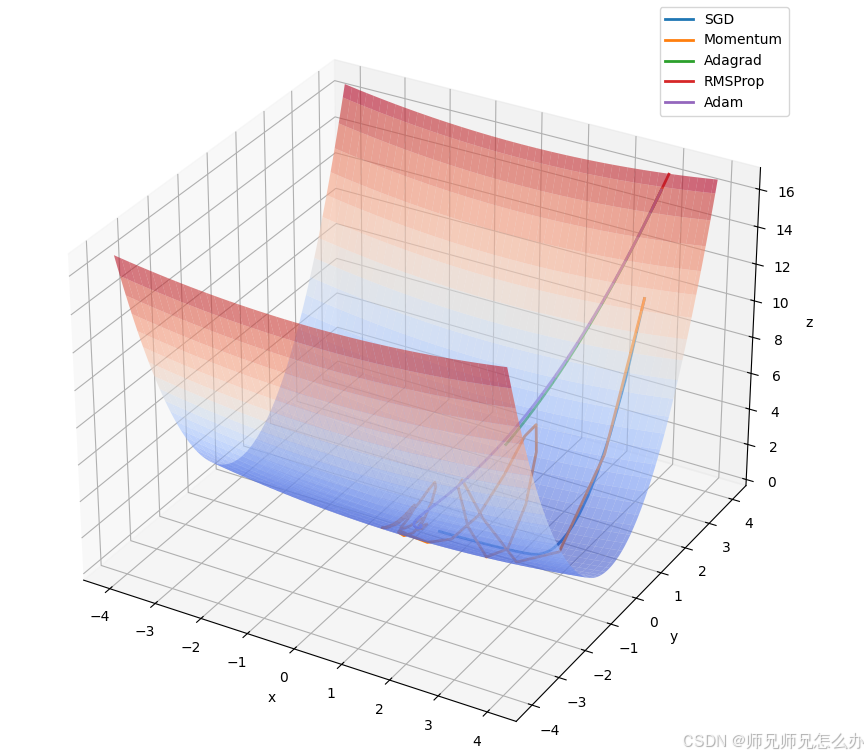

【人工智能】神经网络的优化器optimizer(二):Adagrad自适应学习率优化器

一.自适应梯度算法Adagrad概述 Adagrad(Adaptive Gradient Algorithm)是一种自适应学习率的优化算法,由Duchi等人在2011年提出。其核心思想是针对不同参数自动调整学习率,适合处理稀疏数据和不同参数梯度差异较大的场景。Adagrad通…...

QMC5883L的驱动

简介 本篇文章的代码已经上传到了github上面,开源代码 作为一个电子罗盘模块,我们可以通过I2C从中获取偏航角yaw,相对于六轴陀螺仪的yaw,qmc5883l几乎不会零飘并且成本较低。 参考资料 QMC5883L磁场传感器驱动 QMC5883L磁力计…...

【JVM】- 内存结构

引言 JVM:Java Virtual Machine 定义:Java虚拟机,Java二进制字节码的运行环境好处: 一次编写,到处运行自动内存管理,垃圾回收的功能数组下标越界检查(会抛异常,不会覆盖到其他代码…...

【android bluetooth 框架分析 04】【bt-framework 层详解 1】【BluetoothProperties介绍】

1. BluetoothProperties介绍 libsysprop/srcs/android/sysprop/BluetoothProperties.sysprop BluetoothProperties.sysprop 是 Android AOSP 中的一种 系统属性定义文件(System Property Definition File),用于声明和管理 Bluetooth 模块相…...