The recommended way to deploy a Kubernetes cluster from binaries

Software versions:

| Software | Version |
|---|---|
| containerd | v1.6.5 |
| etcd | v3.5.0 |
| kubernetes | v1.24.0 |

1. System Environment

1.1 Environment preparation

| Role | IP | Services |
|---|---|---|
| k8s-master01 | 192.168.10.10 | etcd, containerd, kube-apiserver, kube-scheduler, kube-controller-manager, kubelet, kube-proxy |
| k8s-node01 | to be added later | etcd, containerd, kubelet, kube-proxy |
| k8s-node02 | to be added later | etcd, containerd, kubelet, kube-proxy |

1.2 Environment initialization

# Disable the firewall and SELinux
systemctl stop firewalld
systemctl disable firewalld
sed -i 's/enforcing/disabled/' /etc/selinux/config
setenforce 0

# Disable swap
swapoff -a
sed -ri 's/.*swap.*/#&/' /etc/fstab

# Load br_netfilter so that bridged traffic reaches iptables; modprobe loads it explicitly, and lsmod | grep br_netfilter shows whether it is loaded
sudo modprobe br_netfilter
cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf
br_netfilter
EOF

# Pass bridged IPv4 and IPv6 traffic to the iptables chains
cat > /etc/sysctl.d/k8s.conf << EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
sysctl --system

# Time synchronization
yum install ntpdate -y
ntpdate time.windows.com
clock -w

# Install ipvs
yum install ipset ipvsadm -y
cat > /etc/sysconfig/modules/ipvs.modules << EOF
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_sh
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- nf_conntrack_ipv4
EOF
chmod +x /etc/sysconfig/modules/ipvs.modules
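Before moving on, it can help to confirm that the initialization steps took effect. The following is a minimal sketch and not part of the original procedure: `sysctl_file_has` and `check_node_prereqs` are hypothetical helper names, and the checks assume the file paths used in this section.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if FILE contains a "KEY = VALUE" line.
sysctl_file_has() {
  local file=$1 key=$2 value=$3
  awk -F= -v k="$key" -v v="$value" '
    { gsub(/[[:space:]]/, "", $1); gsub(/[[:space:]]/, "", $2) }
    $1 == k && $2 == v { found = 1 }
    END { exit !found }
  ' "$file"
}

# Hypothetical helper: report prerequisites from section 1.2 that are not met.
check_node_prereqs() {
  local rc=0
  # swapon --show prints nothing when no swap device is active
  if [ -n "$(swapon --show 2>/dev/null)" ]; then
    echo "swap is still enabled"; rc=1
  fi
  if ! lsmod | grep -q '^br_netfilter'; then
    echo "br_netfilter is not loaded"; rc=1
  fi
  if ! sysctl_file_has /etc/sysctl.d/k8s.conf net.bridge.bridge-nf-call-iptables 1; then
    echo "net.bridge.bridge-nf-call-iptables is not set to 1"; rc=1
  fi
  return "$rc"
}
```

Running `check_node_prereqs && echo "node looks ready"` on the node prints one line per unmet prerequisite.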

1.3 Directory initialization

Working directories (the yaml directory is used later for the bootstrap and Calico manifests):

mkdir -p /usr/local/k8s-install/{cert,tools,yaml}
mkdir -p /etc/kubernetes/{pki,manifests}

etcd directories:

mkdir -p /opt/etcd/{bin,ssl,cfg,data,wal}

2. Software Download

curl -L https://github.com/cloudflare/cfssl/releases/download/v1.5.0/cfssl_1.5.0_linux_amd64 -o cfssl

chmod +x cfssl

curl -L https://github.com/cloudflare/cfssl/releases/download/v1.5.0/cfssljson_1.5.0_linux_amd64 -o cfssljson

chmod +x cfssljson

curl -L https://github.com/cloudflare/cfssl/releases/download/v1.5.0/cfssl-certinfo_1.5.0_linux_amd64 -o cfssl-certinfo

chmod +x cfssl-certinfo

mv {cfssl,cfssljson,cfssl-certinfo} /usr/local/bin

kubernetes:

Download the Kubernetes server binaries:

cd /usr/local/k8s-install/tools
wget -c https://dl.k8s.io/v1.24.0/kubernetes-server-linux-amd64.tar.gz
tar -zxf kubernetes-server-linux-amd64.tar.gz
cd kubernetes/server/bin
cp {kube-apiserver,kube-controller-manager,kube-scheduler,kubelet,kube-proxy,kubectl} /usr/local/bin

3. Generating Certificates

3.1 Certificate signing configuration

cat > /usr/local/k8s-install/cert/ca-config.json <<END
{
  "signing": {
    "default": {"expiry": "876000h"},
    "profiles": {
      "kubernetes": {
        "usages": ["signing", "key encipherment", "server auth", "client auth"],
        "expiry": "876000h"
      },
      "etcd": {
        "usages": ["signing", "key encipherment", "server auth", "client auth"],
        "expiry": "876000h"
      }
    }
  }
}
END
cp /usr/local/k8s-install/cert/ca-config.json /etc/kubernetes

3.2 etcd CA certificate

rm -rf /usr/local/k8s-install/cert/etcd/
mkdir /usr/local/k8s-install/cert/etcd/
cd /usr/local/k8s-install/cert/etcd/
cat > etcd-ca-csr.json <<END
{
  "CN": "etcd",
  "key": {"algo": "rsa", "size": 2048},
  "names": [{"C": "CN", "ST": "Beijing", "L": "Beijing", "O": "etcd", "OU": "etcd"}],
  "ca": {"expiry": "87600h"}
}
END
cfssl gencert -initca etcd-ca-csr.json | cfssljson -bare ca -
cp {ca.pem,ca-key.pem} /opt/etcd/ssl/

3.3 Issuing the etcd certificate

cd /usr/local/k8s-install/cert/etcd
cat > etcd-csr.json << EOF
{
  "CN": "etcd",
  "hosts": ["127.0.0.1", "k8s-master01", "192.168.10.10"],
  "key": {"algo": "rsa", "size": 2048},
  "names": [{"C": "CN", "L": "BeiJing", "ST": "BeiJing", "O": "Kubernetes", "OU": "etcd"}]
}
EOF
cfssl gencert -ca=/usr/local/k8s-install/cert/etcd/ca.pem -ca-key=/usr/local/k8s-install/cert/etcd/ca-key.pem -config=/usr/local/k8s-install/cert/ca-config.json -profile=etcd etcd-csr.json | cfssljson -bare etcd
cp {etcd-key.pem,etcd.pem} /opt/etcd/ssl/

3.4 Kubernetes CA certificate

cat > /usr/local/k8s-install/cert/ca-csr.json <<END
{
  "CN": "kubernetes",
  "key": {"algo": "rsa", "size": 2048},
  "names": [{"C": "CN", "ST": "Beijing", "L": "Beijing", "O": "Kubernetes", "OU": "Kubernetes"}]
}
END
cd /usr/local/k8s-install/cert
cfssl gencert -initca ca-csr.json | cfssljson -bare ca
cp {ca.pem,ca-key.pem} /etc/kubernetes/pki

3.5 Issuing the apiserver certificate

rm -rf /usr/local/k8s-install/cert/apiserver
mkdir /usr/local/k8s-install/cert/apiserver
cd /usr/local/k8s-install/cert/apiserver
cat > server-csr.json << END
{
  "CN": "kubernetes",
  "hosts": [
    "127.0.0.1",
    "10.0.0.1",
    "192.168.10.10",
    "k8s-master01",
    "kubernetes",
    "kubernetes.default",
    "kubernetes.default.svc",
    "kubernetes.default.svc.cluster",
    "kubernetes.default.svc.cluster.local"
  ],
  "key": {"algo": "rsa", "size": 2048},
  "names": [{"C": "CN", "ST": "Beijing", "L": "Beijing", "O": "Kubernetes", "OU": "Kubernetes"}]
}
END
cfssl gencert -ca=/etc/kubernetes/pki/ca.pem -ca-key=/etc/kubernetes/pki/ca-key.pem --config=/usr/local/k8s-install/cert/ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server
cp -r {server.pem,server-key.pem} /etc/kubernetes/pki

3.6 Issuing the front-proxy certificates

Official documentation: Configure the Aggregation Layer | Kubernetes

Generate the CA certificate:

rm -rf /usr/local/k8s-install/cert/front-proxy
mkdir /usr/local/k8s-install/cert/front-proxy
cd /usr/local/k8s-install/cert/front-proxy
cat > front-proxy-ca-csr.json << EOF
{
  "CN": "kubernetes",
  "key": {"algo": "rsa", "size": 2048}
}
EOF
cfssl gencert -initca front-proxy-ca-csr.json | cfssljson -bare front-proxy-ca

Issue the client certificate:

cd /usr/local/k8s-install/cert/front-proxy
cat > front-proxy-client-csr.json << EOF
{
  "CN": "front-proxy-client",
  "key": {"algo": "rsa", "size": 2048}
}
EOF
cfssl gencert -ca=front-proxy-ca.pem -ca-key=front-proxy-ca-key.pem -config=/usr/local/k8s-install/cert/ca-config.json -profile=kubernetes front-proxy-client-csr.json | cfssljson -bare front-proxy-client
cp {front-proxy-ca.pem,front-proxy-ca-key.pem,front-proxy-client-key.pem,front-proxy-client.pem} /etc/kubernetes/pki

3.7 Issuing the kube-controller-manager certificate

rm -rf /usr/local/k8s-install/cert/kube-controller-manager
mkdir /usr/local/k8s-install/cert/kube-controller-manager
cd /usr/local/k8s-install/cert/kube-controller-manager
cat > kube-controller-manager-csr.json << EOF
{
  "CN": "system:kube-controller-manager",
  "key": {"algo": "rsa", "size": 2048},
  "names": [{"C": "CN", "L": "BeiJing", "ST": "BeiJing", "O": "system:masters", "OU": "Kubernetes"}]
}
EOF
cfssl gencert -ca=/etc/kubernetes/pki/ca.pem -ca-key=/etc/kubernetes/pki/ca-key.pem -config=/usr/local/k8s-install/cert/ca-config.json -profile=kubernetes kube-controller-manager-csr.json | cfssljson -bare kube-controller-manager
cp {kube-controller-manager.pem,kube-controller-manager-key.pem} /etc/kubernetes/pki

3.8 Issuing the scheduler certificate

rm -rf /usr/local/k8s-install/cert/kube-scheduler
mkdir /usr/local/k8s-install/cert/kube-scheduler
cd /usr/local/k8s-install/cert/kube-scheduler
cat > kube-scheduler-csr.json << EOF
{
  "CN": "system:kube-scheduler",
  "key": {"algo": "rsa", "size": 2048},
  "names": [{"C": "CN", "L": "BeiJing", "ST": "BeiJing", "O": "system:kube-scheduler", "OU": "Kubernetes"}]
}
EOF
cfssl gencert -ca=/etc/kubernetes/pki/ca.pem -ca-key=/etc/kubernetes/pki/ca-key.pem -config=/usr/local/k8s-install/cert/ca-config.json -profile=kubernetes kube-scheduler-csr.json | cfssljson -bare kube-scheduler
cp {kube-scheduler.pem,kube-scheduler-key.pem} /etc/kubernetes/pki

3.9 Issuing the admin certificate

rm -rf /usr/local/k8s-install/cert/admin
mkdir /usr/local/k8s-install/cert/admin
cd /usr/local/k8s-install/cert/admin
cat > admin-csr.json <<EOF
{
  "CN": "admin",
  "key": {"algo": "rsa", "size": 2048},
  "names": [{"C": "CN", "L": "BeiJing", "ST": "BeiJing", "O": "system:masters", "OU": "Kubernetes"}]
}
EOF
cfssl gencert -ca=/etc/kubernetes/pki/ca.pem -ca-key=/etc/kubernetes/pki/ca-key.pem -config=/etc/kubernetes/ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin
cp {admin.pem,admin-key.pem} /etc/kubernetes/pki

3.10 Generating the ServiceAccount key pair

ServiceAccount tokens are signed with the private key generated here (sa.key) and verified with the matching public key (sa.pub).

mkdir -p /usr/local/k8s-install/cert/sa
cd /usr/local/k8s-install/cert/sa
openssl genrsa -out sa.key 2048
openssl rsa -in sa.key -pubout -out sa.pub
cp {sa.key,sa.pub} /etc/kubernetes/pki

3.11 Issuing the kube-proxy certificate

rm -rf /usr/local/k8s-install/cert/kube-proxy
mkdir /usr/local/k8s-install/cert/kube-proxy
cd /usr/local/k8s-install/cert/kube-proxy
cat > kube-proxy-csr.json << END
{
  "CN": "system:kube-proxy",
  "hosts": [],
  "key": {"algo": "rsa", "size": 2048},
  "names": [{"C": "CN", "ST": "Beijing", "L": "Beijing", "O": "Kubernetes", "OU": "System"}]
}
END
cfssl gencert -ca=/etc/kubernetes/pki/ca.pem -ca-key=/etc/kubernetes/pki/ca-key.pem --config=/etc/kubernetes/ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy
cp -r {kube-proxy.pem,kube-proxy-key.pem} /etc/kubernetes/pki
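The client certificate requests in sections 3.7 to 3.11 differ mainly in their CN and O fields. As a sketch of how that repetition could be factored out, the helper below prints a CSR document for a given CN and O. The name `make_csr_json` is hypothetical and not part of cfssl; the fixed C/ST/L values are copied from this article's examples.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a cfssl CSR JSON document.
# Usage: make_csr_json CN O [OU]
make_csr_json() {
  local cn=$1 org=$2 ou=${3:-Kubernetes}
  cat <<EOF
{
  "CN": "${cn}",
  "key": { "algo": "rsa", "size": 2048 },
  "names": [
    { "C": "CN", "ST": "Beijing", "L": "Beijing", "O": "${org}", "OU": "${ou}" }
  ]
}
EOF
}
```

For example, `make_csr_json system:kube-scheduler system:kube-scheduler > kube-scheduler-csr.json` would produce an input equivalent to the one in section 3.8, which can then be passed to `cfssl gencert` as shown above.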

4. Deploying containerd

# Install containerd
wget -c https://github.com/containerd/containerd/releases/download/v1.6.5/cri-containerd-cni-1.6.5-linux-amd64.tar.gz
tar -zxf cri-containerd-cni-1.6.5-linux-amd64.tar.gz -C /

# Install libseccomp
wget http://rpmfind.net/linux/centos/8-stream/BaseOS/x86_64/os/Packages/libseccomp-2.5.1-1.el8.x86_64.rpm
rpm -ivh libseccomp-2.5.1-1.el8.x86_64.rpm

# Start now and enable at boot
systemctl enable containerd --now

5. Deploying etcd

5.1 Installation

wget -c https://github.com/etcd-io/etcd/releases/download/v3.5.0/etcd-v3.5.0-linux-amd64.tar.gz
tar -zxf etcd-v3.5.0-linux-amd64.tar.gz
cd etcd-v3.5.0-linux-amd64
mv {etcd,etcdctl,etcdutl} /opt/etcd/bin/

# Environment variable
echo "PATH=\$PATH:/opt/etcd/bin" >> /etc/profile
source /etc/profile

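5.2 Certificates

The certificate files were already prepared in section 3.3.

5.3 Configuration file

Configuration file: /opt/etcd/cfg/etcd.yml (the path loaded by the systemd unit in 5.4). Reference sample: etcd/etcd.conf.yml.sample at main · etcd-io/etcd · GitHub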

On 192.168.10.10:

name: "etcd-1"
data-dir: "/opt/etcd/data"
wal-dir: "/opt/etcd/wal"
# Comma-separated list of URLs to listen on for peer traffic.
listen-peer-urls: https://192.168.10.10:2380
# Comma-separated list of URLs to listen on for client traffic.
listen-client-urls: https://192.168.10.10:2379
# Comma-separated list of this member's peer URLs to advertise to the rest of the cluster.
initial-advertise-peer-urls: https://192.168.10.10:2380
# Comma-separated list of this member's client URLs to advertise to clients.
advertise-client-urls: https://192.168.10.10:2379
# Initial cluster configuration for bootstrapping.
initial-cluster: 'etcd-1=https://192.168.10.10:2380,etcd-2=https://192.168.66.41:2380,etcd-3=https://192.168.66.42:2380'
# Initial cluster token for the etcd cluster during bootstrap.
initial-cluster-token: 'etcd-cluster'
# Initial cluster state ('new' or 'existing').
initial-cluster-state: 'new'
client-transport-security:
  # Path to the client server TLS cert file.
  cert-file: /opt/etcd/ssl/etcd.pem
  # Path to the client server TLS key file.
  key-file: /opt/etcd/ssl/etcd-key.pem
  # Path to the client server TLS trusted CA cert file.
  trusted-ca-file: /opt/etcd/ssl/ca.pem
peer-transport-security:
  # Path to the peer server TLS cert file.
  cert-file: /opt/etcd/ssl/etcd.pem
  # Path to the peer server TLS key file.
  key-file: /opt/etcd/ssl/etcd-key.pem
  # Path to the peer server TLS trusted CA cert file.
  trusted-ca-file: /opt/etcd/ssl/ca.pem

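On 192.168.66.41 (only the settings that differ from etcd-1 are shown):

name: "etcd-2"
# Comma-separated list of URLs to listen on for peer traffic.
listen-peer-urls: https://192.168.66.41:2380
# Comma-separated list of URLs to listen on for client traffic.
listen-client-urls: https://192.168.66.41:2379
# Comma-separated list of this member's peer URLs to advertise to the rest of the cluster.
initial-advertise-peer-urls: https://192.168.66.41:2380
# Comma-separated list of this member's client URLs to advertise to clients.
advertise-client-urls: https://192.168.66.41:2379

On 192.168.66.42 (the member name must be unique and match the initial-cluster entry, so it is etcd-3 here):

name: "etcd-3"
# Comma-separated list of URLs to listen on for peer traffic.
listen-peer-urls: https://192.168.66.42:2380
# Comma-separated list of URLs to listen on for client traffic.
listen-client-urls: https://192.168.66.42:2379
# Comma-separated list of this member's peer URLs to advertise to the rest of the cluster.
initial-advertise-peer-urls: https://192.168.66.42:2380
# Comma-separated list of this member's client URLs to advertise to clients.
advertise-client-urls: https://192.168.66.42:2379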
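Because the `initial-cluster` value must list every member under exactly the name set in that member's own `name` field, it may be safer to generate it than to type it. A minimal sketch, assuming peers use HTTPS on port 2380 as in the configuration above; `build_initial_cluster` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: join NAME=IP pairs into an etcd initial-cluster value.
# Assumes every peer listens on https://IP:2380, as in this article.
build_initial_cluster() {
  local out="" pair name ip
  for pair in "$@"; do
    name=${pair%%=*}   # text before the first "="
    ip=${pair#*=}      # text after the first "="
    out+="${out:+,}${name}=https://${ip}:2380"
  done
  printf '%s\n' "$out"
}
```

Calling `build_initial_cluster etcd-1=192.168.10.10 etcd-2=192.168.66.41 etcd-3=192.168.66.42` prints the same string used for `initial-cluster` in the etcd-1 configuration.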

5.4 Service configuration

cat > /usr/lib/systemd/system/etcd.service << EOF
[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target

[Service]
Type=notify
ExecStart=/opt/etcd/bin/etcd --config-file /opt/etcd/cfg/etcd.yml
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

5.5 Start the service

systemctl enable etcd --now

5.6 Checking cluster status

[root@k8s-master01 k8s]# etcdctl --cacert=/opt/etcd/ssl/ca.pem --cert=/opt/etcd/ssl/etcd.pem --key=/opt/etcd/ssl/etcd-key.pem --endpoints="https://192.168.10.10:2379,https://192.168.66.41:2379,https://192.168.66.42:2379" endpoint status --write-out=table
+------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
| ENDPOINT | ID | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS |
+------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
| https://192.168.10.10:2379 | 1f46bee47a4f04aa | 3.5.0 | 20 kB | false | false | 15 | 68 | 68 | |
| https://192.168.66.41:2379 | b3e5838df5f510 | 3.5.0 | 20 kB | false | false | 15 | 68 | 68 | |
| https://192.168.66.42:2379 | a437554da4f2a14c | 3.5.0 | 25 kB | true | false | 15 | 68 | 68 | |
+------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

6. User Environment Configuration

rm -rf /root/.kube
mkdir /root/.kube
KUBE_CONFIG="/root/.kube/config"
KUBE_APISERVER="https://192.168.10.10:6443"

# Set the cluster parameters
kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/pki/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=${KUBE_CONFIG}

# Set the client authentication parameters
kubectl config set-credentials kubernetes-admin \
  --client-certificate=/etc/kubernetes/pki/admin.pem \
  --client-key=/etc/kubernetes/pki/admin-key.pem \
  --embed-certs=true \
  --kubeconfig=${KUBE_CONFIG}

# Set the context parameters
kubectl config set-context kubernetes-admin@kubernetes \
  --cluster=kubernetes \
  --user=kubernetes-admin \
  --kubeconfig=${KUBE_CONFIG}

# Set the default context
kubectl config use-context kubernetes-admin@kubernetes --kubeconfig=${KUBE_CONFIG}
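The same four `kubectl config` steps (cluster, credentials, context, default context) are repeated for the controller manager and scheduler in sections 8.1 and 9.1. A sketch of a wrapper is shown below; `write_kubeconfig` is a hypothetical function name, and it assumes the CA path and the `kubernetes` cluster name used throughout this article.

```shell
#!/usr/bin/env bash
# Hypothetical helper wrapping the four kubectl config steps for a
# certificate-based user.
# Usage: write_kubeconfig FILE SERVER USER CERT KEY
write_kubeconfig() {
  local file=$1 server=$2 user=$3 cert=$4 key=$5
  kubectl config set-cluster kubernetes \
    --certificate-authority=/etc/kubernetes/pki/ca.pem \
    --embed-certs=true \
    --server="$server" \
    --kubeconfig="$file" &&
  kubectl config set-credentials "$user" \
    --client-certificate="$cert" \
    --client-key="$key" \
    --embed-certs=true \
    --kubeconfig="$file" &&
  kubectl config set-context "${user}@kubernetes" \
    --cluster=kubernetes \
    --user="$user" \
    --kubeconfig="$file" &&
  kubectl config use-context "${user}@kubernetes" --kubeconfig="$file"
}
```

With it, the admin kubeconfig above would be written by `write_kubeconfig /root/.kube/config https://192.168.10.10:6443 kubernetes-admin /etc/kubernetes/pki/admin.pem /etc/kubernetes/pki/admin-key.pem`. Token-based users such as the bootstrap user in section 10 need a different `set-credentials` call and are not covered by this sketch.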

7. Deploying kube-apiserver

7.1 kube-apiserver service configuration

cat > /usr/lib/systemd/system/kube-apiserver.service << EOF

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

[Service]

ExecStart=/usr/local/bin/kube-apiserver --v=2 \

--logtostderr=true \

--advertise-address=192.168.10.10 \

--bind-address=192.168.10.10 \

--service-node-port-range=30000-32767 \

--allow-privileged=true \

--authorization-mode=RBAC,Node \

--client-ca-file=/etc/kubernetes/pki/ca.pem \

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,NodeRestriction,ResourceQuota \

--enable-bootstrap-token-auth=true \

--etcd-cafile=/opt/etcd/ssl/ca.pem \

--etcd-certfile=/opt/etcd/ssl/etcd.pem \

--etcd-keyfile=/opt/etcd/ssl/etcd-key.pem \

--etcd-servers=https://192.168.10.10:12379,https://192.168.10.10:22379,https://192.168.10.10:32379 \

--kubelet-client-certificate=/etc/kubernetes/pki/server.pem \

--kubelet-client-key=/etc/kubernetes/pki/server-key.pem \

--kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \

--proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.pem \

--proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client-key.pem \

--requestheader-allowed-names=front-proxy-client \

--requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem \

--requestheader-extra-headers-prefix=X-Remote-Extra- \

--requestheader-group-headers=X-Remote-Group \

--requestheader-username-headers=X-Remote-User \

--secure-port=6443 \

--service-account-issuer=https://kubernetes.default.svc.cluster.local \

--service-account-signing-key-file=/etc/kubernetes/pki/sa.key \

--service-account-key-file=/etc/kubernetes/pki/sa.pub \

--service-cluster-ip-range=10.0.0.0/24 \

--tls-cert-file=/etc/kubernetes/pki/server.pem \

--tls-private-key-file=/etc/kubernetes/pki/server-key.pem \

--runtime-config=settings.k8s.io/v1alpha1=true

Restart=on-failure

RestartSec=10s

LimitNOFILE=65535

[Install]

WantedBy=multi-user.target

EOF

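Notes:

--logtostderr: write logs to standard error
--v: log verbosity level
--log-dir: log directory
--etcd-servers: etcd cluster endpoints. The unit file above uses ports 12379, 22379 and 32379 on 192.168.10.10, whereas section 5.3 configures etcd on port 2379; set this flag to the client URLs your etcd members actually listen on
--bind-address: listen address
--secure-port: HTTPS port
--advertise-address: address advertised to the cluster
--allow-privileged: allow privileged containers
--service-cluster-ip-range: virtual IP range for Services
--enable-admission-plugins: admission control plugins
--authorization-mode: authorization modes; enables RBAC and Node authorization
--enable-bootstrap-token-auth: enable the TLS bootstrap mechanism
--token-auth-file: bootstrap token file
--service-account-issuer: identifier of the service account token issuer
--service-node-port-range: default port range allocated to NodePort Services
--kubelet-client-xxx: client certificate the apiserver uses to access the kubelet
--tls-xxx-file: apiserver HTTPS certificate
--etcd-xxxfile: certificates for connecting to the etcd cluster
--audit-log-xxx: audit log settings

If kube-proxy is not running on the host that runs the API server, the following kube-apiserver flag must also be set:

--enable-aggregator-routing=true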

7.2 Enable and start the service

systemctl daemon-reload

systemctl enable kube-apiserver --now

8. Deploying kube-controller-manager

8.1 kubeconfig

rm -rf /etc/kubernetes/controller-manager.conf
KUBE_CONFIG="/etc/kubernetes/controller-manager.conf"
KUBE_APISERVER="https://192.168.10.10:6443"

# Set the cluster entry
kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/pki/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=${KUBE_CONFIG}

# Set the context entry
kubectl config set-context system:kube-controller-manager@kubernetes \
  --cluster=kubernetes \
  --user=system:kube-controller-manager \
  --kubeconfig=${KUBE_CONFIG}

# Set the user credentials
kubectl config set-credentials system:kube-controller-manager \
  --client-certificate=/etc/kubernetes/pki/kube-controller-manager.pem \
  --client-key=/etc/kubernetes/pki/kube-controller-manager-key.pem \
  --embed-certs=true \
  --kubeconfig=${KUBE_CONFIG}

kubectl config use-context system:kube-controller-manager@kubernetes --kubeconfig=${KUBE_CONFIG}

8.2 Service configuration

cat > /usr/lib/systemd/system/kube-controller-manager.service << END

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

[Service]

ExecStart=/usr/local/bin/kube-controller-manager \

--v=2 \

--logtostderr=true \

--bind-address=127.0.0.1 \

--root-ca-file=/etc/kubernetes/pki/ca.pem \

--cluster-signing-cert-file=/etc/kubernetes/pki/ca.pem \

--cluster-signing-key-file=/etc/kubernetes/pki/ca-key.pem \

--service-account-private-key-file=/etc/kubernetes/pki/sa.key \

--kubeconfig=/etc/kubernetes/controller-manager.conf \

--leader-elect=true \

--use-service-account-credentials=true \

--node-monitor-grace-period=40s \

--node-monitor-period=5s \

--pod-eviction-timeout=2m0s \

--controllers=*,bootstrapsigner,tokencleaner \

--allocate-node-cidrs=true \

--cluster-cidr=10.244.0.0/16 \

--requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem \

--node-cidr-mask-size=24

Restart=always

RestartSec=10s

[Install]

WantedBy=multi-user.target

END

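| Flag | Description |
|---|---|
| --cluster-cidr string | CIDR range for Pods in the cluster. Requires --allocate-node-cidrs to be true. |
| --service-cluster-ip-range | CIDR range for Services in the cluster. Requires --allocate-node-cidrs to be true. |
| -v, --v int | Log verbosity level. |
| --kubeconfig | Kubernetes authentication (kubeconfig) file |
| --leader-elect | Automatic leader election when several instances of the component run (HA) |
| --cluster-signing-cert-file, --cluster-signing-key-file | CA used to issue kubelet certificates automatically; must be the same CA the apiserver uses |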

8.3 Enable and start the service

systemctl daemon-reload

systemctl enable kube-controller-manager --now

9. Deploying kube-scheduler

9.1 kubeconfig

rm -rf /etc/kubernetes/kube-scheduler.conf
KUBE_CONFIG="/etc/kubernetes/kube-scheduler.conf"
KUBE_APISERVER="https://192.168.10.10:6443"

kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/pki/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=${KUBE_CONFIG}

kubectl config set-credentials system:kube-scheduler \
  --client-certificate=/etc/kubernetes/pki/kube-scheduler.pem \
  --client-key=/etc/kubernetes/pki/kube-scheduler-key.pem \
  --embed-certs=true \
  --kubeconfig=${KUBE_CONFIG}

kubectl config set-context system:kube-scheduler@kubernetes \
  --cluster=kubernetes \
  --user=system:kube-scheduler \
  --kubeconfig=${KUBE_CONFIG}

kubectl config use-context system:kube-scheduler@kubernetes --kubeconfig=${KUBE_CONFIG}

9.2 Service configuration

cat > /usr/lib/systemd/system/kube-scheduler.service << END

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

[Service]

ExecStart=/usr/local/bin/kube-scheduler \

--v=2 \

--logtostderr=true \

--bind-address=127.0.0.1 \

--leader-elect=true \

--kubeconfig=/etc/kubernetes/kube-scheduler.conf

Restart=always

RestartSec=10s

[Install]

WantedBy=multi-user.target

END

9.3 Enable and start the service

systemctl daemon-reload
systemctl enable kube-scheduler --now

10. TLS Bootstrapping Configuration

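Enabling TLS bootstrapping: once the master's apiserver has TLS authentication enabled, the kubelet and kube-proxy on every node must present a valid certificate signed by the CA in order to talk to kube-apiserver. With many nodes, issuing these client certificates by hand is a lot of work and makes scaling the cluster more complicated. To simplify the process, Kubernetes provides TLS bootstrapping to issue client certificates automatically: the kubelet connects as a low-privilege user and requests a certificate through the apiserver, and the certificate is signed dynamically by the cluster (by kube-controller-manager, using the CA passed in --cluster-signing-cert-file and --cluster-signing-key-file in section 8.2).

This approach is therefore strongly recommended on nodes. It is currently used mainly for the kubelet; kube-proxy still uses credentials that we issue centrally.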

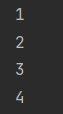

TLS bootstrapping workflow:

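A bootstrap token has the form <token-id>.<token-secret>, where the ID is 6 characters and the secret is 16 characters, all from [a-z0-9].

# 16 random bytes give 32 hex characters, but only 16 characters are needed
head -c 16 /dev/urandom | od -An -t x | tr -d ' '
# Example output: 737b177d9823531a433e368fcdb16f5f

# 8 random bytes give exactly 16 hex characters
head -c 8 /dev/urandom | od -An -t x | tr -d ' '
# Example output: d683399b7a553977

The 16-character value used in the rest of this section:

[root@k8s-master01 ~]# head -c 8 /dev/urandom | od -An -t x | tr -d ' '
271c0f7e7a3bd6cd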
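Bootstrap tokens follow the format `[a-z0-9]{6}.[a-z0-9]{16}`, so both halves can be produced with the same `od` technique and checked before use. The sketch below is illustrative only; `rand_hex`, `gen_bootstrap_token` and `is_bootstrap_token` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Print N random bytes as lowercase hex (2*N characters, no whitespace).
rand_hex() {
  head -c "$1" /dev/urandom | od -An -tx1 | tr -d ' \n'
}

# Print a token as <6-char id>.<16-char secret>.
gen_bootstrap_token() {
  printf '%s.%s\n' "$(rand_hex 3)" "$(rand_hex 8)"
}

# Succeed if the argument matches the bootstrap token format.
is_bootstrap_token() {
  printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}
```

A possible use is `TOKEN_FULL=$(gen_bootstrap_token)`, followed by `TOKEN_ID=${TOKEN_FULL%%.*}` and `TOKEN=${TOKEN_FULL#*.}` to obtain the two variables used below.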

Create the kubelet bootstrap kubeconfig:

KUBE_CONFIG="/etc/kubernetes/bootstrap-kubelet.conf"
KUBE_APISERVER="https://192.168.10.10:6443"
TOKEN_ID="2678ad"
TOKEN="271c0f7e7a3bd6cd"

kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/pki/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=${KUBE_CONFIG}

kubectl config set-credentials kubelet-bootstrap \
  --token=${TOKEN_ID}.${TOKEN} \
  --kubeconfig=${KUBE_CONFIG}

kubectl config set-context kubelet-bootstrap@kubernetes \
  --cluster=kubernetes \
  --user=kubelet-bootstrap \
  --kubeconfig=${KUBE_CONFIG}

kubectl config use-context kubelet-bootstrap@kubernetes --kubeconfig=${KUBE_CONFIG}

Create the cluster bootstrap permissions manifest:

TOKEN_ID="2678ad"
TOKEN="271c0f7e7a3bd6cd"
mkdir -p /usr/local/k8s-install/yaml
cat > /usr/local/k8s-install/yaml/bootstrap.secret.yaml << END
apiVersion: v1
kind: Secret
metadata:
  name: bootstrap-token-${TOKEN_ID}
  namespace: kube-system
type: bootstrap.kubernetes.io/token
stringData:
  description: "The default bootstrap token generated by 'kubelet '."
  token-id: "${TOKEN_ID}"
  token-secret: "${TOKEN}"
  usage-bootstrap-authentication: "true"
  usage-bootstrap-signing: "true"
  auth-extra-groups: system:bootstrappers:default-node-token,system:bootstrappers:worker,system:bootstrappers:ingress
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubelet-bootstrap
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:node-bootstrapper
subjects:
  - apiGroup: rbac.authorization.k8s.io
    kind: Group
    name: system:bootstrappers:default-node-token
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: node-autoapprove-bootstrap
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:certificates.k8s.io:certificatesigningrequests:nodeclient
subjects:
  - apiGroup: rbac.authorization.k8s.io
    kind: Group
    name: system:bootstrappers:default-node-token
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: node-autoapprove-certificate-rotation
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:certificates.k8s.io:certificatesigningrequests:selfnodeclient
subjects:
  - apiGroup: rbac.authorization.k8s.io
    kind: Group
    name: system:nodes
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:kube-apiserver-to-kubelet
rules:
  - apiGroups:
      - ""
    resources:
      - nodes/proxy
      - nodes/stats
      - nodes/log
      - nodes/spec
      - nodes/metrics
    verbs:
      - "*"
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: system:kube-apiserver
  namespace: ""
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:kube-apiserver-to-kubelet
subjects:
  - apiGroup: rbac.authorization.k8s.io
    kind: User
    name: kube-apiserver
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: system:kubernetes
  namespace: ""
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:kube-apiserver-to-kubelet
subjects:
  - apiGroup: rbac.authorization.k8s.io
    kind: User
    name: kubernetes
END
kubectl create -f /usr/local/k8s-install/yaml/bootstrap.secret.yaml

11. Deploying kubelet

11.1 KubeletConfiguration

x509 reference: https://kubernetes.io/search/?q=x509

cat > /etc/kubernetes/kubelet-conf.yml <<END

apiVersion: kubelet.config.k8s.io/v1beta1

kind: KubeletConfiguration

address: 0.0.0.0

port: 10250

readOnlyPort: 10255

authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 2m0s
    enabled: true
  x509:
    clientCAFile: /etc/kubernetes/pki/ca.pem
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 5m0s
    cacheUnauthorizedTTL: 30s

cgroupDriver: systemd

cgroupsPerQOS: true

clusterDNS:

- 10.0.0.2

clusterDomain: cluster.local

featureGates:
  IPv6DualStack: true

containerLogMaxFiles: 5

containerLogMaxSize: 10Mi

contentType: application/vnd.kubernetes.protobuf

cpuCFSQuota: true

cpuManagerPolicy: none

cpuManagerReconcilePeriod: 10s

enableControllerAttachDetach: true

enableDebuggingHandlers: true

enforceNodeAllocatable:

- pods

eventBurst: 10

eventRecordQPS: 5

evictionHard:
  imagefs.available: 15%
  memory.available: 100Mi
  nodefs.available: 10%
  nodefs.inodesFree: 5%

evictionPressureTransitionPeriod: 5m0s

failSwapOn: true

fileCheckFrequency: 20s

hairpinMode: promiscuous-bridge

healthzBindAddress: 127.0.0.1

healthzPort: 10248

httpCheckFrequency: 20s

imageGCHighThresholdPercent: 85

imageGCLowThresholdPercent: 80

imageMinimumGCAge: 2m0s

iptablesDropBit: 15

iptablesMasqueradeBit: 14

kubeAPIBurst: 10

kubeAPIQPS: 5

makeIPTablesUtilChains: true

maxOpenFiles: 1000000

maxPods: 110

nodeStatusUpdateFrequency: 10s

oomScoreAdj: -999

podPidsLimit: -1

registryBurst: 10

registryPullQPS: 5

resolvConf: /etc/resolv.conf

rotateCertificates: true

runtimeRequestTimeout: 2m0s

serializeImagePulls: true

staticPodPath: /etc/kubernetes/manifests

streamingConnectionIdleTimeout: 4h0m0s

syncFrequency: 1m0s

volumeStatsAggPeriod: 1m0s
END

11.2 Service configuration

cat > /usr/lib/systemd/system/kubelet.service <<END
[Unit]
Description=Kubernetes Kubelet
Documentation=https://github.com/kubernetes/kubernetes
After=containerd.service
Requires=containerd.service

[Service]
ExecStart=/usr/local/bin/kubelet \
  --bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf \
  --kubeconfig=/etc/kubernetes/kubelet.conf \
  --config=/etc/kubernetes/kubelet-conf.yml \
  --container-runtime=remote \
  --container-runtime-endpoint=unix:///run/containerd/containerd.sock \
  --node-labels=node.kubernetes.io/node=
Restart=always
StartLimitInterval=0
RestartSec=10

[Install]
WantedBy=multi-user.target
END

11.3 Enable and start the service

systemctl daemon-reload
systemctl enable kubelet --now

11.4 Approving the kubelet certificate request and joining the cluster (not needed here, because the bindings created in section 10 approve it automatically)

# List kubelet certificate requests
[root@k8s-master01 k8s-install]# kubectl get csr
NAME AGE SIGNERNAME REQUESTOR REQUESTEDDURATION CONDITION
node-csr-NrSNw-Gx8kR7VerABxUgHoM1mu71VbB8x598UXWOwM0 4m12s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap <none> Pending

# Approve the request
[root@k8s-master01 k8s-install]# kubectl certificate approve node-csr-NrSNw-Gx8kR7VerABxUgHoM1mu71VbB8x598UXWOwM0
certificatesigningrequest.certificates.k8s.io/node-csr-NrSNw-Gx8kR7VerABxUgHoM1mu71VbB8x598UXWOwM0 approved

# List nodes
[root@k8s-master01 k8s-install]# kubectl get node
NAME STATUS ROLES AGE VERSION
node01 Ready <none> 19s v1.24.0
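If automatic approval is not configured and several requests are waiting, the names of the pending ones can be extracted from the `kubectl get csr` table. A minimal sketch, assuming the default table output shown above, where CONDITION is the last column; `pending_csrs` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get csr` table output on stdin and
# print the names of requests whose CONDITION (last column) is Pending.
pending_csrs() {
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}
```

The names could then be passed on for approval, for example with `kubectl get csr | pending_csrs | xargs -r kubectl certificate approve` (the `-r` flag is a GNU xargs option that skips the command when there is no input). Review the list before approving in any environment where untrusted nodes could submit requests.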

12. Deploying kube-proxy

12.1 Create the ServiceAccount

Important: as of Kubernetes v1.24.0, creating a ServiceAccount no longer generates a token Secret automatically, so the Secret has to be created manually.

# Create the kube-proxy ServiceAccount
kubectl -n kube-system create serviceaccount kube-proxy

cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: Secret
type: kubernetes.io/service-account-token
metadata:
  name: kube-proxy-token
  namespace: kube-system
  annotations:
    kubernetes.io/service-account.name: "kube-proxy"
EOF

# Create the role binding
kubectl create clusterrolebinding system:kube-proxy \
  --clusterrole system:node-proxier \
  --serviceaccount kube-system:kube-proxy

12.2 kubeconfig

JWT_TOKEN=$(kubectl -n kube-system get secret/kube-proxy-token --output=jsonpath='{.data.token}' | base64 -d)
KUBE_CONFIG="/etc/kubernetes/kube-proxy.conf"
KUBE_APISERVER="https://192.168.10.10:6443"

kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/pki/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=${KUBE_CONFIG}

kubectl config set-credentials kubernetes \
  --token=${JWT_TOKEN} \
  --kubeconfig=${KUBE_CONFIG}

kubectl config set-context kubernetes \
  --cluster=kubernetes \
  --user=kubernetes \
  --kubeconfig=${KUBE_CONFIG}

kubectl config use-context kubernetes \
  --kubeconfig=${KUBE_CONFIG}

12.3 kube-proxy YAML configuration

cat > /etc/kubernetes/kube-proxy.yaml <<END

apiVersion: kubeproxy.config.k8s.io/v1alpha1

bindAddress: 0.0.0.0

clientConnection:
  acceptContentTypes: ""
  burst: 10
  contentType: application/vnd.kubernetes.protobuf
  kubeconfig: /etc/kubernetes/kube-proxy.conf
  qps: 5

clusterCIDR: 10.244.0.0/16

configSyncPeriod: 15m0s

conntrack:
  maxPerCore: 32768
  min: 131072
  tcpCloseWaitTimeout: 1h0m0s
  tcpEstablishedTimeout: 24h0m0s

enableProfiling: false

healthzBindAddress: 0.0.0.0:10256

hostnameOverride: ""

iptables:
  masqueradeAll: false
  masqueradeBit: 14
  minSyncPeriod: 0s
  syncPeriod: 30s
ipvs:
  minSyncPeriod: 5s
  scheduler: "rr"
  syncPeriod: 30s

kind: KubeProxyConfiguration

metricsBindAddress: 127.0.0.1:10249

mode: "ipvs"

nodePortAddresses: null

oomScoreAdj: -999

portRange: ""

udpIdleTimeout: 250ms

END

12.4 Service configuration

cat > /usr/lib/systemd/system/kube-proxy.service <<END
[Unit]
Description=Kubernetes Kube Proxy
Documentation=https://github.com/kubernetes/kubernetes
After=network.target

[Service]
ExecStart=/usr/local/bin/kube-proxy \
  --config=/etc/kubernetes/kube-proxy.yaml \
  --v=2
Restart=always
RestartSec=10s

[Install]
WantedBy=multi-user.target
END

12.5 Enable and start the service

systemctl daemon-reload

systemctl enable kube-proxy --now

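13. Deploying the CNI Network

13.1 Download and install

cd /usr/local/k8s-install/yaml
wget https://docs.projectcalico.org/manifests/calico.yaml --no-check-certificate

# Before applying, edit calico.yaml and set CALICO_IPV4POOL_CIDR to 10.244.0.0/16 (the --cluster-cidr value from section 8.2)
kubectl apply -f calico.yaml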
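The CALICO_IPV4POOL_CIDR change can be made with `sed` before the manifest is applied. The sketch below is an assumption-laden example rather than a verified step: it assumes the downloaded manifest still ships the setting as two commented lines (`# - name: CALICO_IPV4POOL_CIDR` followed by `#   value: "192.168.0.0/16"`), which may differ between Calico releases, and it relies on GNU sed's `-i` flag. `set_calico_pool_cidr` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: uncomment CALICO_IPV4POOL_CIDR in a Calico manifest
# and set its value. Assumes the commented default shown in the lead-in.
set_calico_pool_cidr() {
  local file=$1 cidr=$2
  sed -E -i \
    -e 's|^([[:space:]]*)# (- name: CALICO_IPV4POOL_CIDR)$|\1\2|' \
    -e "s|^([[:space:]]*)#   value: \"192\.168\.0\.0/16\"\$|\1  value: \"${cidr}\"|" \
    "$file"
}
```

Usage would be `set_calico_pool_cidr calico.yaml 10.244.0.0/16`, followed by `grep -A1 CALICO_IPV4POOL_CIDR calico.yaml` to confirm the result before running `kubectl apply`.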

13.2 Check the result

kubectl get pods -n kube-system
kubectl get node

14. Deploying CoreDNS

14.1 Keep the configuration consistent

The earlier sections set the following.

In /usr/lib/systemd/system/kube-apiserver.service:

--service-cluster-ip-range=10.0.0.0/24

In /etc/kubernetes/kubelet-conf.yml:

clusterDNS:
- 10.0.0.2
clusterDomain: cluster.local
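The addresses above are related: 10.0.0.1, which is listed in the apiserver certificate hosts in section 3.5 and appears as the `kubernetes` Service in section 16.2, is the first address of the Service range, and the DNS address 10.0.0.2 is the second. A small sketch of that arithmetic follows; `nth_ip` is a hypothetical helper, and it assumes the CIDR is written with its network address, as it is here.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the Nth address of an IPv4 CIDR, counted from
# the network address. Assumes the part before "/" is the network address.
nth_ip() {
  local cidr=$1 n=$2 a b c d
  IFS=. read -r a b c d <<< "${cidr%/*}"
  local base=$(( (a << 24) | (b << 16) | (c << 8) | d ))
  local ip=$(( base + n ))
  printf '%d.%d.%d.%d\n' \
    $(( (ip >> 24) & 255 )) $(( (ip >> 16) & 255 )) \
    $(( (ip >> 8) & 255 )) $(( ip & 255 ))
}

nth_ip 10.0.0.0/24 1   # prints 10.0.0.1 (the kubernetes Service address)
nth_ip 10.0.0.0/24 2   # prints 10.0.0.2 (the clusterDNS address used here)
```

If a different `--service-cluster-ip-range` is chosen, the certificate hosts, `clusterDNS` and the CoreDNS `-i` argument all need to change with it.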

Install CoreDNS (because of the settings above, the deploy script is run with -r 10.0.0.0/24, -i 10.0.0.2 and the domain cluster.local):

# jq is required by the deploy script
yum install -y epel-release
yum install -y jq

cd /usr/local/k8s-install/tools/
yum install git -y
git clone https://github.com/coredns/deployment.git
cd deployment/kubernetes
./deploy.sh -r 10.0.0.0/24 -i 10.0.0.2 -d cluster.local > coredns.yaml
kubectl create -f coredns.yaml

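15. Adding Worker Nodes

Copy the kubelet and kube-proxy configuration and certificates to the new node. This deployment runs on physical hardware and currently has only one server, so this section will be completed in a later update.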

十六、部署nginx测试部署

16.1 部署nginx

kubectl create deployment nginx --image=nginx

kubectl expose deployment nginx --port=80 --type=NodePort

16.2 查看

[root@k8s-master01 kubernetes]# kubectl get pod,svc -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/nginx-8f458dc5b-f7cnd 1/1 Running 0 3m48s 172.16.32.130 k8s-master01 <none> <none>NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/kubernetes ClusterIP 10.0.0.1 <none> 443/TCP 114m <none>

service/nginx NodePort 10.0.0.47 <none> 80:30824/TCP 3m47s app=nginx[root@k8s-master01 kubernetes]# ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 172.16.32.128:30824 rr-> 172.16.32.130:80 Masq 1 0 0

TCP 192.168.10.10:30824 rr-> 172.16.32.130:80 Masq 1 0 1

TCP 192.168.100.101:30824 rr-> 172.16.32.130:80 Masq 1 0 0

TCP 10.0.0.1:443 rr-> 192.168.10.10:6443 Masq 1 6 0

TCP 10.0.0.2:53 rr-> 172.16.32.129:53 Masq 1 0 0

TCP 10.0.0.2:9153 rr-> 172.16.32.129:9153 Masq 1 0 0

TCP 10.0.0.47:80 rr-> 172.16.32.130:80 Masq 1 0 0

TCP 10.88.0.1:30824 rr-> 172.16.32.130:80 Masq 1 0 0

UDP 10.0.0.2:53 rr-> 172.16.32.129:53 Masq 1 0 0

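Forwarding analysis: a request to the NodePort on the host address is forwarded by IPVS to the nginx pod:

192.168.10.10:30824 -> 172.16.32.130:80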
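The same mapping can be pulled out of the `ipvsadm -Ln` listing mechanically. The following is a sketch using a hypothetical helper `ipvs_backends`, which prints the real servers listed under a given virtual service. It assumes the usual ipvsadm layout, in which each virtual service line starts with the protocol and each real server line starts with `->`. The sample input reuses two entries from the listing above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `ipvsadm -Ln` output on stdin and print the real
# servers listed under the virtual service $1 (ADDRESS:PORT).
ipvs_backends() {
  awk -v vs="$1" '
    $1 == "TCP" || $1 == "UDP" { in_vs = ($2 == vs); next }  # virtual service line
    in_vs && $1 == "->"        { print $2 }                  # real server line
  '
}

# Two entries from the listing above.
ipvs_backends 192.168.10.10:30824 <<'EOF'
TCP  192.168.10.10:30824 rr
  -> 172.16.32.130:80             Masq    1      0          1
TCP  10.0.0.1:443 rr
  -> 192.168.10.10:6443           Masq    1      6          0
EOF
```

On the master this would be run as `ipvsadm -Ln | ipvs_backends 192.168.10.10:30824`. If the same address and port exist for both TCP and UDP, the backends of both are printed.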

16.3 HTTP access

[root@k8s-master01 kubernetes]# curl http://192.168.10.10:30824/

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

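Note: many articles online are copied from one another and may never have been verified. I therefore worked through this deployment myself and recorded the steps only after verifying them, in the hope that sharing them helps anyone who needs them.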


相关文章:

二进制部署kubernetes集群的推荐方式

软件版本: 软件版本containerdv1.6.5etcdv3.5.0kubernetesv1.24.0 一、系统环境 1.1 环境准备 角色IP服务k8s-master01192.168.10.10etcd、containerd、kube-apiserver、kube-scheduler、kube-controller-manager、kubele、kube-proxyk8s-node01后续etcd、conta…...

智能矩阵,引领商业新纪元!拓世方案:打破线上线下界限,开启无限营销可能!

在科技赋能商业大潮中,一切行业都在经历巨大变革,传统的营销策略被彻底改变,催生着无数企业去打造横跨线上线下、多维度、全方位的矩阵营销帝国。无数的成功案例已经告诉我们,营销不再只是宣传,而是建立品牌与消费者之…...

)

ADB原理(第四篇:聊聊adb shell ps与adb shell ps有无双引号的区别)

前言 对于经常使用adb的同学,不可避免的一定会这样用adb,比如我们想在手机里执行ps命令,于是在命令行中写下如下代码: adb shell ps -ef 或者 adb shell "ps -ef" 两种方式都可以使用,你喜欢用哪个呢&#…...

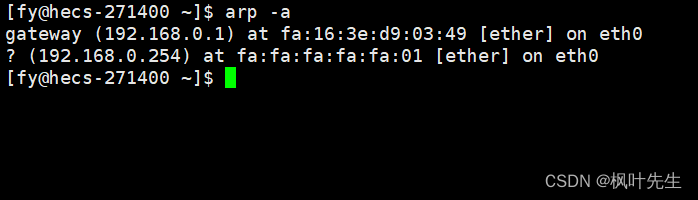

「网络编程」数据链路层协议_ 以太网协议学习

「前言」文章内容是数据链路层以太网协议的讲解。 「归属专栏」网络编程 「主页链接」个人主页 「笔者」枫叶先生(fy) 目录 一、以太网协议简介二、以太网帧格式(报头)三、MTU对上层协议的影响四、ARP协议4.1 ARP协议的作用4.2 ARP协议报头 一、以太网协…...

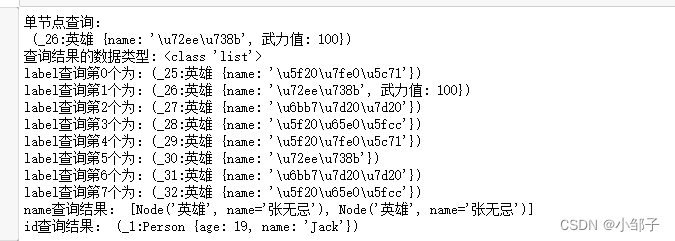

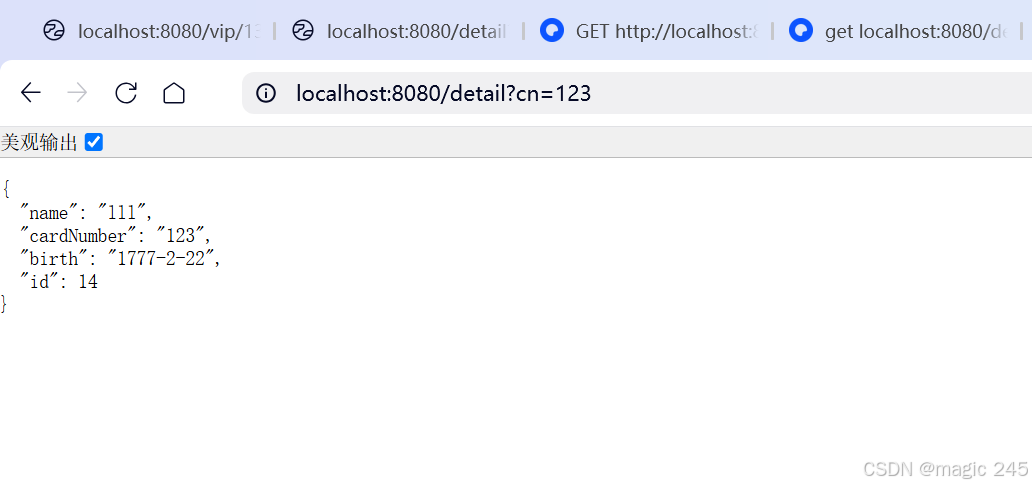

通过python操作neo4j

在neo4j中创建结点和关系 创建结点 创建电影结点 例如:创建一个Movie结点,这个结点上带有三个属性{title:‘The Matrix’, released:1999, tagline:‘Welcome to the Real World’} CREATE (TheMatrix:Movie {title:The Matrix, released:1999, tagl…...

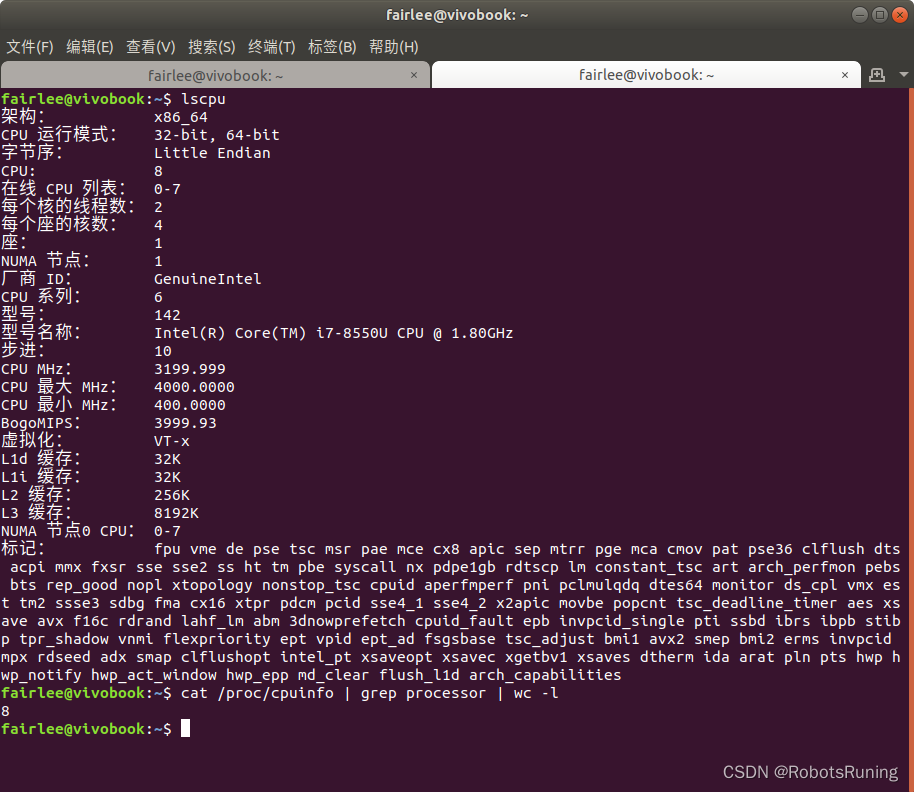

Ubuntu中查看电脑有多少个核——lscpu

1. 使用lscpu命令: 打开终端并输入以下命令: lscpu你会看到与CPU相关的详细信息。查找"CPU(s)"这一行来看总的核心数。另外,“Core(s) per socket”表示每个插槽或每个物理CPU的核数,“Socket(s)”表示物理CPU的数量。将这两个值相乘即得到总…...

)

Python学习笔记第七十二天(Matplotlib imread)

Python学习笔记第七十二天 Matplotlib imread读取图像数据修改图像裁剪图像图像颜色 后记 Matplotlib imread imread() 方法是 Matplotlib 库中的一个函数,用于从图像文件中读取图像数据。 imread() 方法返回一个 numpy.ndarray 对象,其形状是 (nrows,…...

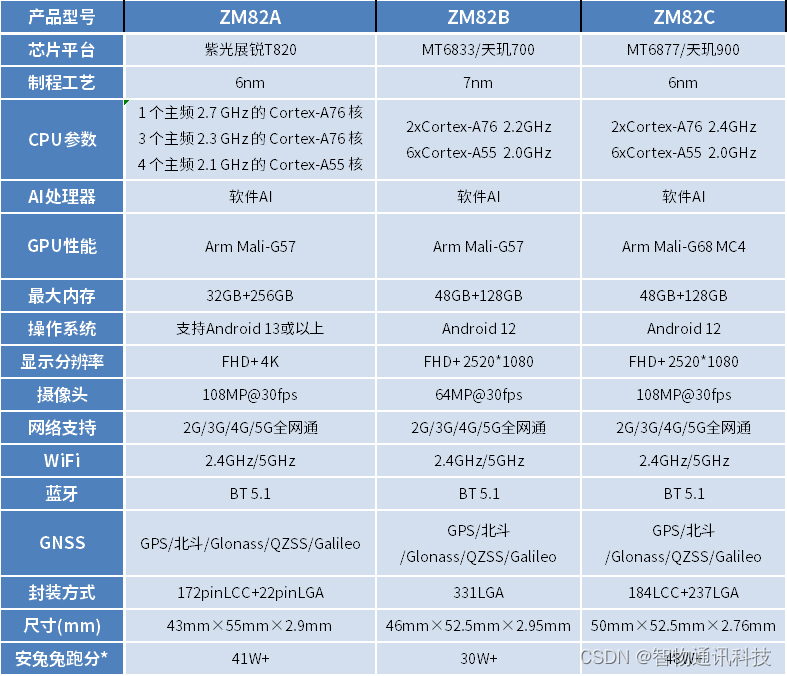

安卓核心板_天玑700、天玑720、天玑900_5G模块规格参数

5G安卓核心板是采用新一代蜂窝移动通信技术的重要设备。它支持万物互联、生活云端化和智能交互的特性。5G技术使得各类智能硬件始终处于联网状态,而物联网则成为5G发展的主要动力。物联网通过传感器、无线网络和射频识别等技术,实现了物体之间的互联。而…...

CS224W2.2——传统基于特征的方法(边层级特征)

在这篇中,我们介绍了链接预测的重要任务,以及如何提取链接级特征来更好地解决这类问题。这在我们需要预测缺失的边或预测将来会出现的边的情况下很有用。我们将讨论的链路级功能包括基于距离的功能,以及本地和全局邻域重叠。 文章目录 1. 边层…...

python—openpyxl操作excel详解

前言 openpyxl属于第三方模块,在python中用来处理excel文件。 可以对excel进行的操作有:读写、修改、调整样式及插入图片等。 但只能用来处理【 .xlsx】 后缀的excel文件。 使用前需要先安装,安装方法: pip install openpyxl…...

汽车行驶性能的主观评价方法(2)-驾驶员的任务

人(驾驶员)-车辆-环境闭环控制系统 驾驶过程中,驾驶员承担着操纵车辆和控制车辆的任务。驾驶员在不知不觉中接受了大量光学、声学和动力学信息并予以评价,同时不断地通过理论值和实际值的比较来完成控制作用(图 2.1&a…...

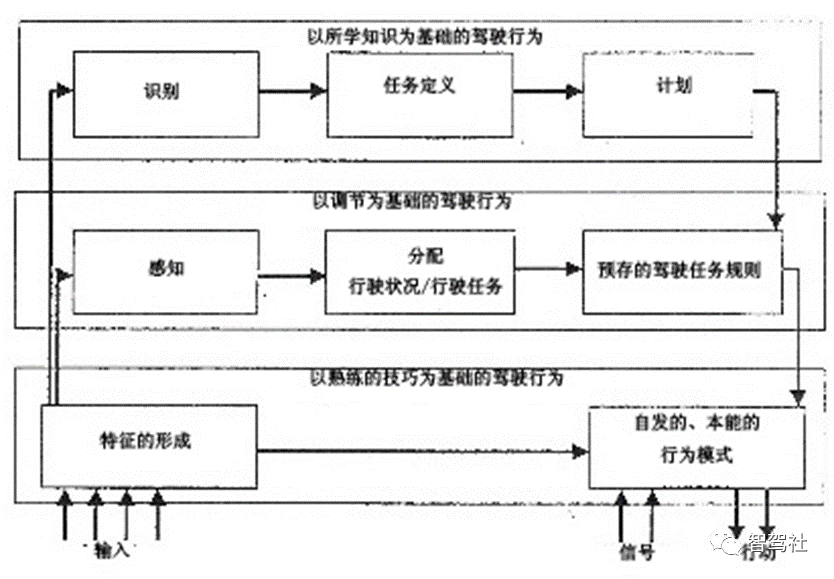

server2012 通过防火墙开启局域网内限定IP进行远程桌面连接

我这里需要被远程桌面的电脑系统版本为windows server2012 1、打开允许远程连接设置 2、开启防火墙 3、设置允许“远程桌面应用”通过防火墙 勾选”远程桌面“ 3、入站规则设置 高级设置→入站规则→远程桌面-用户模式(TCP-In) 进入远程桌面属性的作用域——>远程IP地址—…...

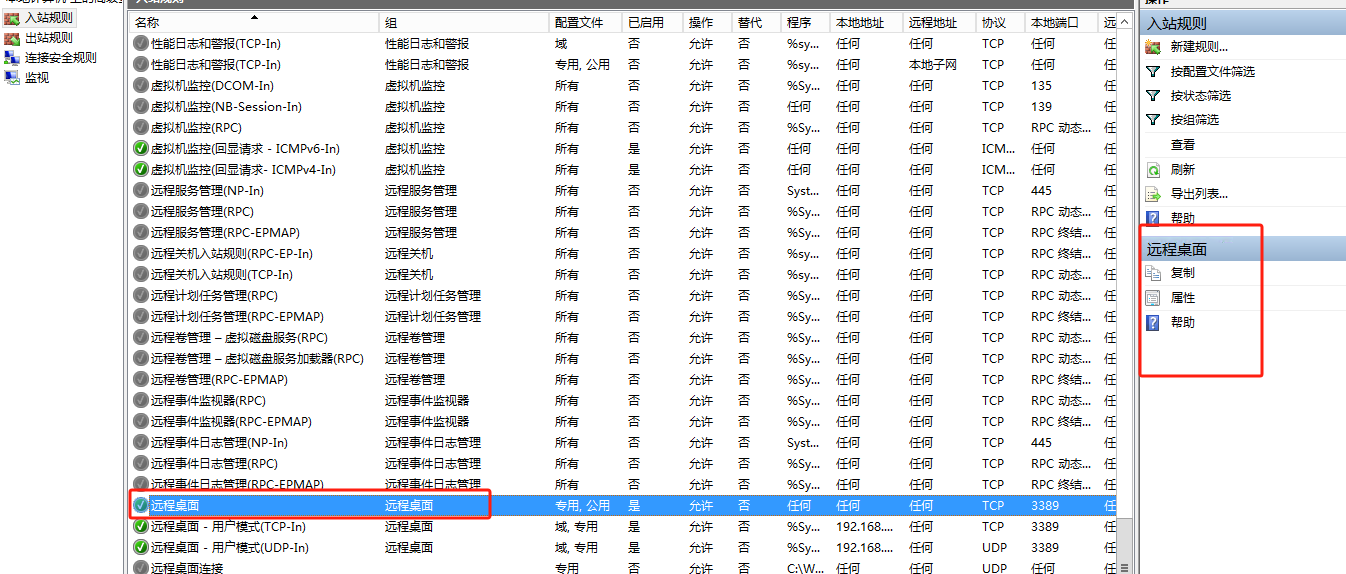

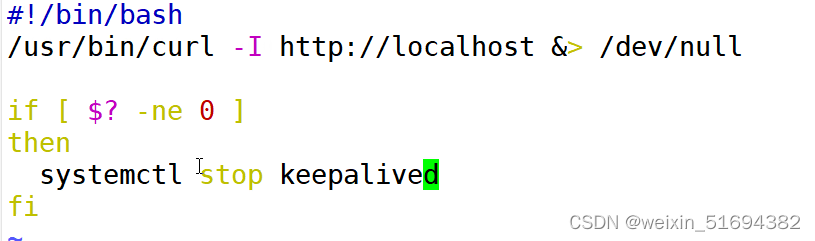

lvs+keepalived: 高可用集群

lvskeepalived: 高可用集群 keepalived为lvs应运而生的高可用服务。lvs的调度器无法做高可用,于是keepalived软件。实现的是调度器的高可用。 但是:keepalived不是专门为集群服务的,也可以做其他服务器的高可用。 lvs的高可用集群…...

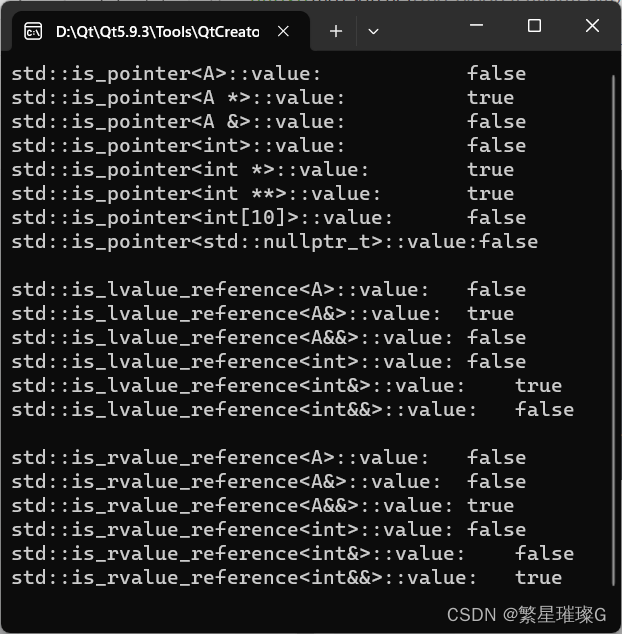

C++标准模板(STL)- 类型支持 (类型特性,is_pointer,is_lvalue_reference,is_rvalue_reference)

类型特性 类型特性定义一个编译时基于模板的结构,以查询或修改类型的属性。 试图特化定义于 <type_traits> 头文件的模板导致未定义行为,除了 std::common_type 可依照其所描述特化。 定义于<type_traits>头文件的模板可以用不完整类型实…...

C++——类和对象(上)

1.面向过程和面向对象初步认识 C语言是面向过程的,关注的是过程,分析出求解问题的步骤,通过函数调用逐步解决问题。 例如手洗衣服 C是基于面向对象的,关注的是对象,将一件事情拆分成不同的对象,靠对象之间…...

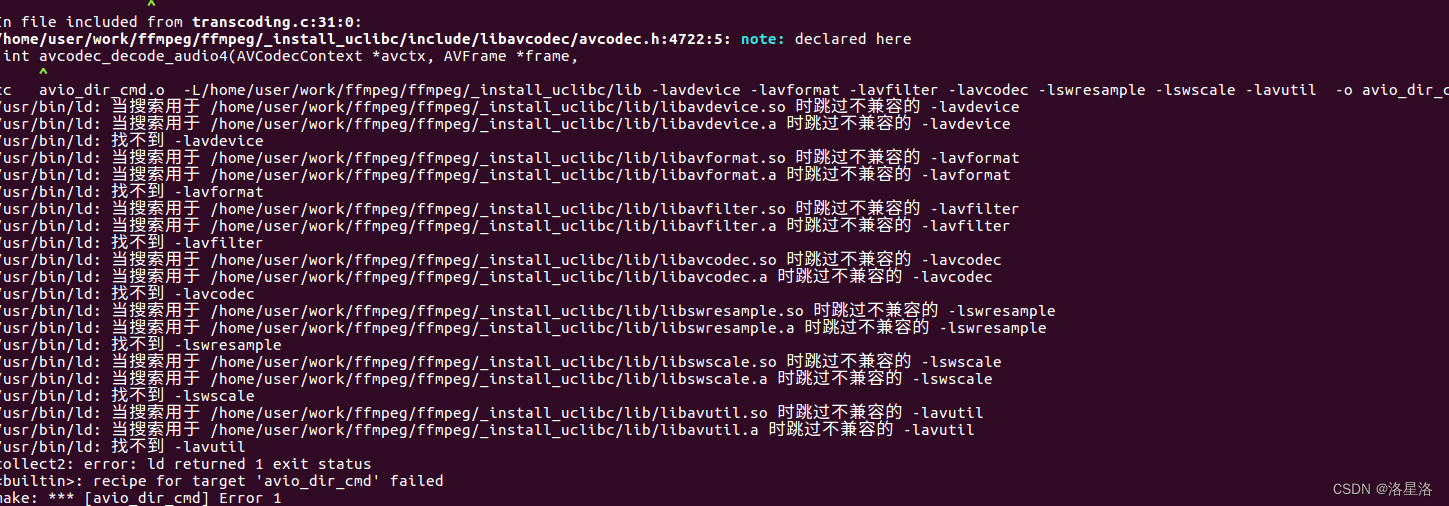

ffmpeg中examples编译报不兼容错误解决办法

ffmpeg中examples编译报不兼容错误解决办法 参考examples下的README可知,编译之前需要设置 PKG_CONFIG_PATH路径。 export PKG_CONFIG_PATH/home/user/work/ffmpeg/ffmpeg/_install_uclibc/lib/pkgconfig之后执行make出现如下错误: 基本都是由于库的版…...

图形旋转、镜像、缩放)

Python与CAD系列基础篇(十一)图形旋转、镜像、缩放

目录 0 简述1 图形旋转2 图形镜像3 图形缩放0 简述 本篇详细介绍使用①通过pyautocad连接AutoCAD进行处理②通过ezdxf处理dxf格式文件进行图形旋转、镜像、缩放的方法。 1 图形旋转 pyautocad方式 from pyautocad import Autocad, APoint, aDouble import mathacad = Autoca…...

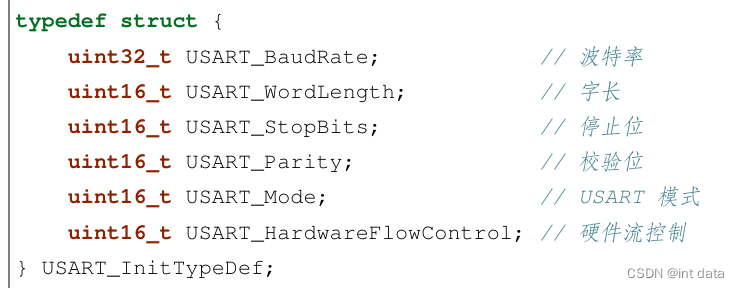

STM32串口通信

数据通信的基础概念 在单片机的应用中,数据通信是必不可少的一部分,比如:单片机和上位机、单片机和外 围器件之间,它们都有数据通信的需求。由于设备之间的电气特性、传输速率、可靠性要求各 不相同,于是就有了各种通信…...

Kafka笔记

一、Kafka 概述 1.1.定义 传统定义:Kafka 是一个分布式的基于发布/订阅模式的消息队列,主要用于大数据实时处理领域。最新定义:Kafka 是一个开源的分布式事件流平台,被数千家公司用于高性能数据管道、流分析、数据集成和关键任务…...

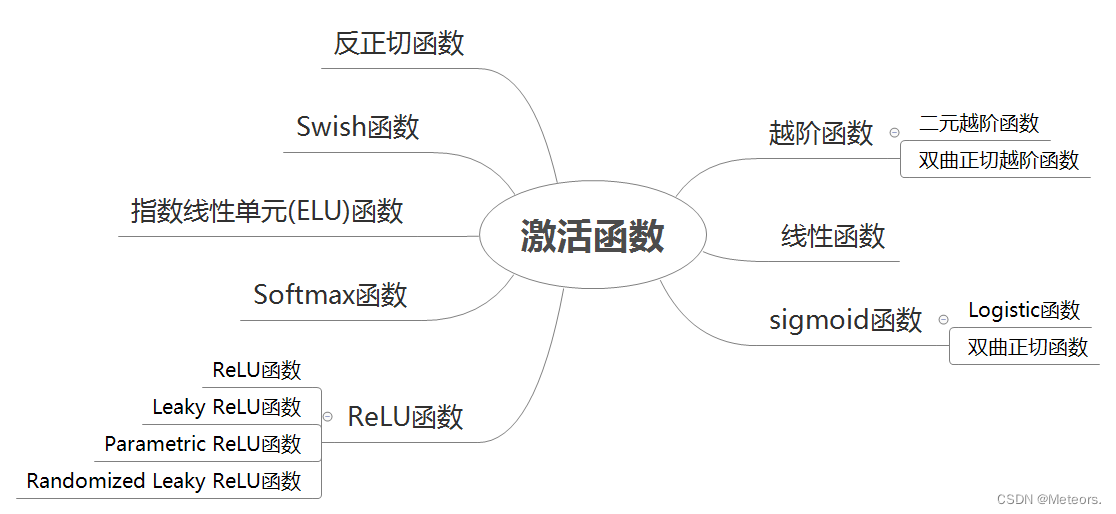

【1.2】神经网络:神经元与激活函数

✅作者简介:大家好,我是 Meteors., 向往着更加简洁高效的代码写法与编程方式,持续分享Java技术内容。 🍎个人主页:Meteors.的博客 💞当前专栏: 神经网络(随缘更新) ✨特色…...

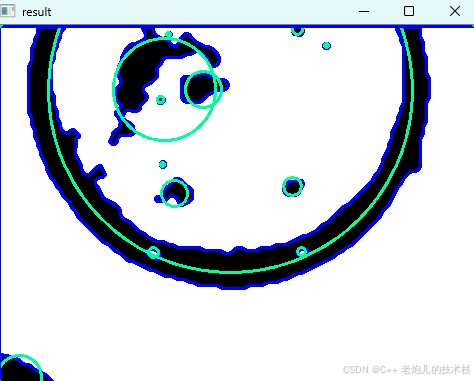

利用最小二乘法找圆心和半径

#include <iostream> #include <vector> #include <cmath> #include <Eigen/Dense> // 需安装Eigen库用于矩阵运算 // 定义点结构 struct Point { double x, y; Point(double x_, double y_) : x(x_), y(y_) {} }; // 最小二乘法求圆心和半径 …...

vscode里如何用git

打开vs终端执行如下: 1 初始化 Git 仓库(如果尚未初始化) git init 2 添加文件到 Git 仓库 git add . 3 使用 git commit 命令来提交你的更改。确保在提交时加上一个有用的消息。 git commit -m "备注信息" 4 …...

Lombok 的 @Data 注解失效,未生成 getter/setter 方法引发的HTTP 406 错误

HTTP 状态码 406 (Not Acceptable) 和 500 (Internal Server Error) 是两类完全不同的错误,它们的含义、原因和解决方法都有显著区别。以下是详细对比: 1. HTTP 406 (Not Acceptable) 含义: 客户端请求的内容类型与服务器支持的内容类型不匹…...

关于nvm与node.js

1 安装nvm 安装过程中手动修改 nvm的安装路径, 以及修改 通过nvm安装node后正在使用的node的存放目录【这句话可能难以理解,但接着往下看你就了然了】 2 修改nvm中settings.txt文件配置 nvm安装成功后,通常在该文件中会出现以下配置&…...

【解密LSTM、GRU如何解决传统RNN梯度消失问题】

解密LSTM与GRU:如何让RNN变得更聪明? 在深度学习的世界里,循环神经网络(RNN)以其卓越的序列数据处理能力广泛应用于自然语言处理、时间序列预测等领域。然而,传统RNN存在的一个严重问题——梯度消失&#…...

渲染学进阶内容——模型

最近在写模组的时候发现渲染器里面离不开模型的定义,在渲染的第二篇文章中简单的讲解了一下关于模型部分的内容,其实不管是方块还是方块实体,都离不开模型的内容 🧱 一、CubeListBuilder 功能解析 CubeListBuilder 是 Minecraft Java 版模型系统的核心构建器,用于动态创…...

Java面试专项一-准备篇

一、企业简历筛选规则 一般企业的简历筛选流程:首先由HR先筛选一部分简历后,在将简历给到对应的项目负责人后再进行下一步的操作。 HR如何筛选简历 例如:Boss直聘(招聘方平台) 直接按照条件进行筛选 例如:…...

Java 二维码

Java 二维码 **技术:**谷歌 ZXing 实现 首先添加依赖 <!-- 二维码依赖 --><dependency><groupId>com.google.zxing</groupId><artifactId>core</artifactId><version>3.5.1</version></dependency><de…...

React---day11

14.4 react-redux第三方库 提供connect、thunk之类的函数 以获取一个banner数据为例子 store: 我们在使用异步的时候理应是要使用中间件的,但是configureStore 已经自动集成了 redux-thunk,注意action里面要返回函数 import { configureS…...

多光源(Multiple Lights))

C++.OpenGL (14/64)多光源(Multiple Lights)

多光源(Multiple Lights) 多光源渲染技术概览 #mermaid-svg-3L5e5gGn76TNh7Lq {font-family:"trebuchet ms",verdana,arial,sans-serif;font-size:16px;fill:#333;}#mermaid-svg-3L5e5gGn76TNh7Lq .error-icon{fill:#552222;}#mermaid-svg-3L5e5gGn76TNh7Lq .erro…...