工程(十四)——ubuntu20.04 PL-VINS

博主创建了一个科研互助群Q:772356582,欢迎大家加入讨论。这是一个科研互助群,主要围绕机器人,无人驾驶,无人机方面的感知定位,决策规划,以及论文发表经验,以方便大家很好很快的科研,少走弯路。欢迎在群里积极提问与回答,相互交流共同学习。

一、简介

PL-VINS是基于最先进的基于点的VINS- mono,开发的一种基于点和线特征的实时、高效优化的单目VINS方法。原始的 PL-VINS 是在ubuntu18.04基于opencv3去开发的。源码的下载地址:https://github.com/cnqiangfu/PL-VINS

我的配置:ubuntu20.04+opencv4.2+eigen3.3.7

更改好的代码如下,可直接用,需要修改mage_node_b.cpp的main函数第一行的地址

huashu996/Ubuntu20.04PL_VINS · GitHub

二、编译

如果你使用官方的代码,编译时候可能会遇到如下问题

mkdir -p ~/catkin_plvins/src

cd catkin_plvins/src //进入创建的catkin_plvins/src文件夹下

catkin_init_workspace 进行空间创建cd ~/catkin_plvins //在文件夹catkin_plvins下建立终端输入

catkin_make //终端输入

source devel/setup.bash

echo $ROS_PACKAGE_PATH//将代码下载到src目录下 或者执行下面代码

cd ~/catkin_plvins/src

git clone https://github.com/cnqiangfu/PL-VINS.git

//编译

cd .. //回到文件夹catkin_plvins

catkin_make

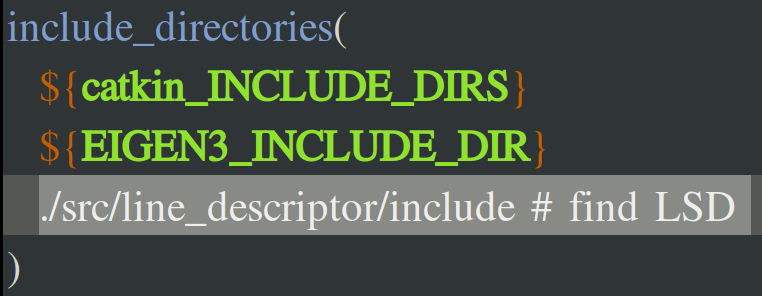

source devel/setup.bash- 将feature_tracker里面的Cmakelist修改一下:主要修改以下2处的路径

- 打开catkin_plvins/src/PL-VINS/image_node_b文件下的CMakeLists.txt添加

set(CMAKE_CXX_STANDARD 14)如果你配置正确,应该能够编译顺利通过

三、opencv4适配

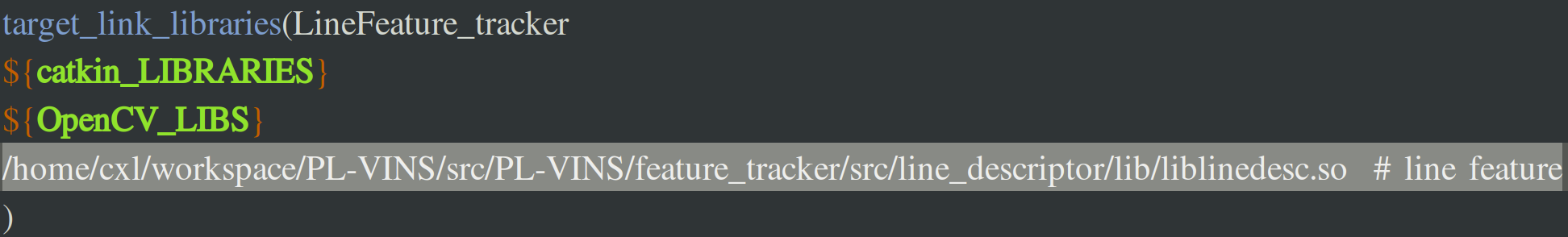

但运行往往会出现如下图问题,对于每个问题我们一次解决

- [image_node_b-7] 挂掉的原因是linefeature_tracker_node中只发布了归一化坐标,没有发布linefeature的startpoint和endpoint的像素坐标。

1.mage_node_b.cpp的main函数第一行的地址,修改原绝对地址。

2.加入project函数

void project(cv::Point2f& pt, cv::Mat const& k)

{pt.x=k.at<float>(0,0)*pt.x+k.at<float>(0,2);pt.y=k.at<float>(1,1)*pt.y+k.at<float>(1,2);

}3.替换

将下面两行

cv::Point startPoint = cv::Point(line_feature_msg->channels[3].values[i], line_feature_msg->channels[4].values[i]);

cv::Point endPoint = cv::Point(line_feature_msg->channels[5].values[i], line_feature_msg->channels[6].values[i]);

替换

cv::Point2f startPoint = cv::Point2f(line_feature_msg->points[i].x,line_feature_msg->points[i].y );project (startPoint,K_);

cv::Point2f endPoint = cv::Point2f(line_feature_msg->channels[1].values[i],line_feature_msg->channels[2].values[i]);- [linefeature_tracker-4]

此问题由于opencv和cv_bridge冲突的问题,因为在ubuntu20.04 cv_bridge是4,如果你使用opencv3那么将产生冲突,如果使用opencv4跑,代码本身又不支持opencv4所以需要更改。相应的解决方式也有两种:

1)把vins-mono代码全部改成opencv4的版本 或者 2)把cv_bridge改成opencv3版本。参考如下博客修改

【精选】Ubuntu20.04下成功运行VINS-mono_ubuntu vinsmono-CSDN博客

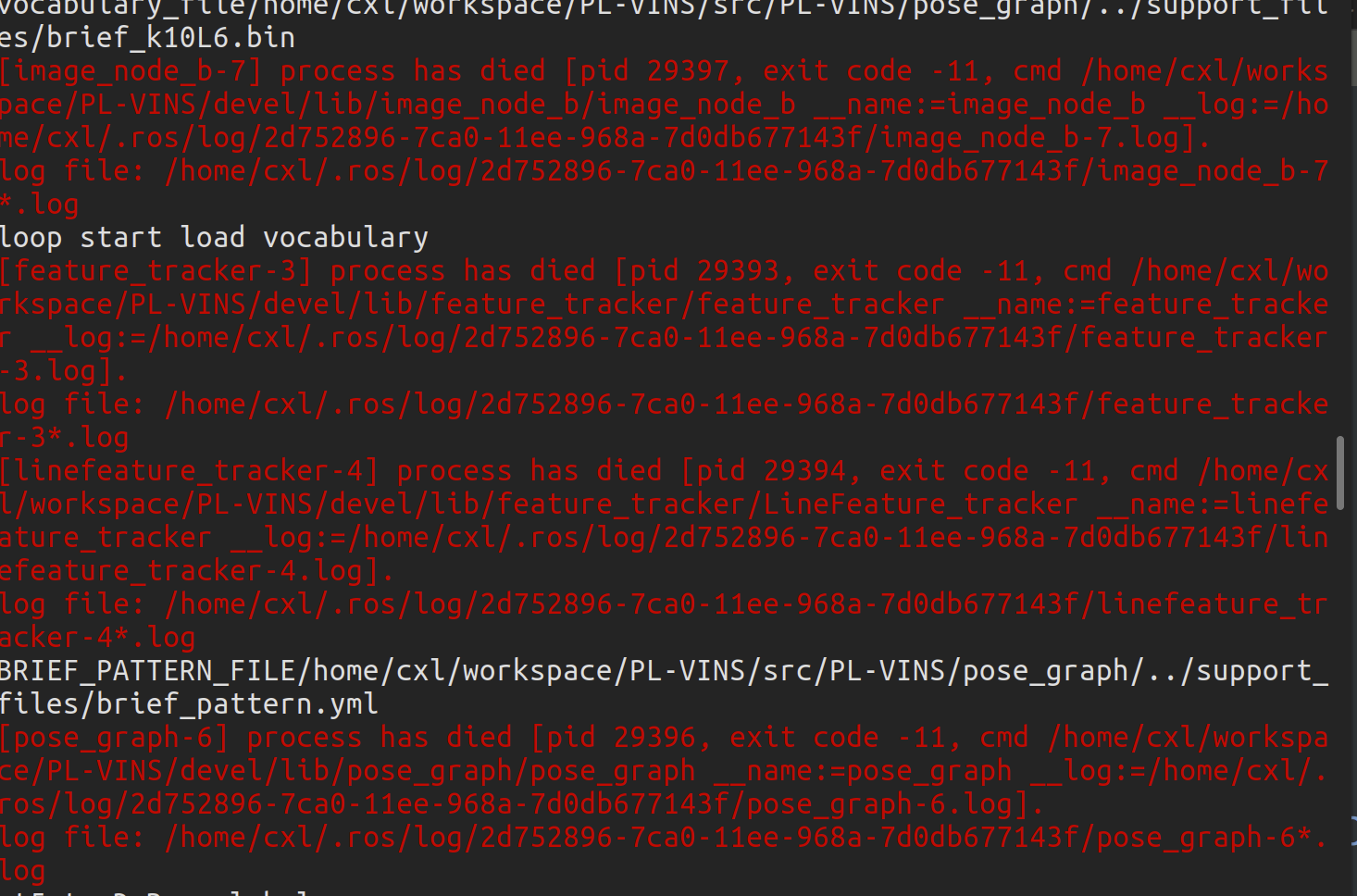

1.将所有包含opencv的Cmakelists.txt中opencv引入都换成4,如下

2.更新头文件使使用opencv4

(1)将camera_model包改成兼容opencv4在camera_model包中的头文件Chessboard.h中添加

#include <opencv2/imgproc/types_c.h>

#include <opencv2/calib3d/calib3d_c.h>

在CameraCalibration.h中添加

#include <opencv2/imgproc/types_c.h>

#include <opencv2/imgproc/imgproc_c.h>(2)将包中所有报错的头文件

#include <opencv/cv.h>

#include <opencv/highgui.h>

替换为

#include <opencv2/highgui.hpp>

#include <opencv2/cvconfig.h>

3.加入线特征检测函数

imgproc.hpp关于线特征的部分由于opencv4权限的问题无法使用了,这里只需要自己定义一个相同功能的头文件去把opencv3中的实现拷贝过去。在路径

PL-VINS/src/PL-VINS/feature_tracker/src/line_descriptor/src

创建一个my_lsd.hpp

//

// Created by fs on 2021/11/3.

// This file is my_lsd.hpp

//

//#include "opencv2/../../src/precomp.hpp"

#include "opencv2/imgproc.hpp"

//#include "opencv2/core/private.hpp"

#include <vector>#define M_3_2_PI (3 * CV_PI) / 2 // 3/2 pi

#define M_2__PI (2 * CV_PI) // 2 pi#ifndef M_LN10

#define M_LN10 2.30258509299404568402

#endif#define NOTDEF double(-1024.0) // Label for pixels with undefined gradient.#define NOTUSED 0 // Label for pixels not used in yet.

#define USED 1 // Label for pixels already used in detection.#define RELATIVE_ERROR_FACTOR 100.0const double DEG_TO_RADS = CV_PI / 180;#define log_gamma(x) ((x)>15.0?log_gamma_windschitl(x):log_gamma_lanczos(x))struct edge

{cv::Point p;bool taken;

};inline double distSq(const double x1, const double y1,const double x2, const double y2)

{return (x2 - x1)*(x2 - x1) + (y2 - y1)*(y2 - y1);

}inline double dist(const double x1, const double y1,const double x2, const double y2)

{return sqrt(distSq(x1, y1, x2, y2));

}// Signed angle difference

inline double angle_diff_signed(const double& a, const double& b)

{double diff = a - b;while(diff <= -CV_PI) diff += M_2__PI;while(diff > CV_PI) diff -= M_2__PI;return diff;

}// Absolute value angle difference

inline double angle_diff(const double& a, const double& b)

{return std::fabs(angle_diff_signed(a, b));

}// Compare doubles by relative error.

inline bool double_equal(const double& a, const double& b)

{// trivial caseif(a == b) return true;double abs_diff = fabs(a - b);double aa = fabs(a);double bb = fabs(b);double abs_max = (aa > bb)? aa : bb;if(abs_max < DBL_MIN) abs_max = DBL_MIN;return (abs_diff / abs_max) <= (RELATIVE_ERROR_FACTOR * DBL_EPSILON);

}inline bool AsmallerB_XoverY(const edge& a, const edge& b)

{if (a.p.x == b.p.x) return a.p.y < b.p.y;else return a.p.x < b.p.x;

}/*** Computes the natural logarithm of the absolute value of* the gamma function of x using Windschitl method.* See http://www.rskey.org/gamma.htm*/

inline double log_gamma_windschitl(const double& x)

{return 0.918938533204673 + (x-0.5)*log(x) - x+ 0.5*x*log(x*sinh(1/x) + 1/(810.0*pow(x, 6.0)));

}/*** Computes the natural logarithm of the absolute value of* the gamma function of x using the Lanczos approximation.* See http://www.rskey.org/gamma.htm*/

inline double log_gamma_lanczos(const double& x)

{static double q[7] = { 75122.6331530, 80916.6278952, 36308.2951477,8687.24529705, 1168.92649479, 83.8676043424,2.50662827511 };double a = (x + 0.5) * log(x + 5.5) - (x + 5.5);double b = 0;for(int n = 0; n < 7; ++n){a -= log(x + double(n));b += q[n] * pow(x, double(n));}return a + log(b);

}

///namespace cv {class myLineSegmentDetectorImpl CV_FINAL : public LineSegmentDetector{public:/*** Create a LineSegmentDetectorImpl object. Specifying scale, number of subdivisions for the image, should the lines be refined and other constants as follows:** @param _refine How should the lines found be refined?* LSD_REFINE_NONE - No refinement applied.* LSD_REFINE_STD - Standard refinement is applied. E.g. breaking arches into smaller line approximations.* LSD_REFINE_ADV - Advanced refinement. Number of false alarms is calculated,* lines are refined through increase of precision, decrement in size, etc.* @param _scale The scale of the image that will be used to find the lines. Range (0..1].* @param _sigma_scale Sigma for Gaussian filter is computed as sigma = _sigma_scale/_scale.* @param _quant Bound to the quantization error on the gradient norm.* @param _ang_th Gradient angle tolerance in degrees.* @param _log_eps Detection threshold: -log10(NFA) > _log_eps* @param _density_th Minimal density of aligned region points in rectangle.* @param _n_bins Number of bins in pseudo-ordering of gradient modulus.*/myLineSegmentDetectorImpl(int _refine = LSD_REFINE_STD, double _scale = 0.8,double _sigma_scale = 0.6, double _quant = 2.0, double _ang_th = 22.5,double _log_eps = 0, double _density_th = 0.7, int _n_bins = 1024);/*** Detect lines in the input image.** @param _image A grayscale(CV_8UC1) input image.* If only a roi needs to be selected, use* lsd_ptr->detect(image(roi), ..., lines);* lines += Scalar(roi.x, roi.y, roi.x, roi.y);* @param _lines Return: A vector of Vec4i or Vec4f elements specifying the beginning and ending point of a line.* Where Vec4i/Vec4f is (x1, y1, x2, y2), point 1 is the start, point 2 - end.* Returned lines are strictly oriented depending on the gradient.* @param width Return: Vector of widths of the regions, where the lines are found. E.g. Width of line.* @param prec Return: Vector of precisions with which the lines are found.* @param nfa Return: Vector containing number of false alarms in the line region, with precision of 10%.* The bigger the value, logarithmically better the detection.* * -1 corresponds to 10 mean false alarms* * 0 corresponds to 1 mean false alarm* * 1 corresponds to 0.1 mean false alarms* This vector will be calculated _only_ when the objects type is REFINE_ADV*/void detect(InputArray _image, OutputArray _lines,OutputArray width = noArray(), OutputArray prec = noArray(),OutputArray nfa = noArray()) CV_OVERRIDE;/*** Draw lines on the given canvas.** @param image The image, where lines will be drawn.* Should have the size of the image, where the lines were found* @param lines The lines that need to be drawn*/void drawSegments(InputOutputArray _image, InputArray lines) CV_OVERRIDE;/*** Draw both vectors on the image canvas. Uses blue for lines 1 and red for lines 2.** @param size The size of the image, where lines1 and lines2 were found.* @param lines1 The first lines that need to be drawn. Color - Blue.* @param lines2 The second lines that need to be drawn. Color - Red.* @param image An optional image, where lines will be drawn.* Should have the size of the image, where the lines were found* @return The number of mismatching pixels between lines1 and lines2.*/int compareSegments(const Size& size, InputArray lines1, InputArray lines2, InputOutputArray _image = noArray()) CV_OVERRIDE;private:Mat image;Mat scaled_image;Mat_<double> angles; // in radsMat_<double> modgrad;Mat_<uchar> used;int img_width;int img_height;double LOG_NT;bool w_needed;bool p_needed;bool n_needed;const double SCALE;const int doRefine;const double SIGMA_SCALE;const double QUANT;const double ANG_TH;const double LOG_EPS;const double DENSITY_TH;const int N_BINS;struct RegionPoint {int x;int y;uchar* used;double angle;double modgrad;};struct normPoint{Point2i p;int norm;};std::vector<normPoint> ordered_points;struct rect{double x1, y1, x2, y2; // first and second point of the line segmentdouble width; // rectangle widthdouble x, y; // center of the rectangledouble theta; // angledouble dx,dy; // (dx,dy) is vector oriented as the line segmentdouble prec; // tolerance angledouble p; // probability of a point with angle within 'prec'};myLineSegmentDetectorImpl& operator= (const myLineSegmentDetectorImpl&); // to quiet MSVC/*** Detect lines in the whole input image.** @param lines Return: A vector of Vec4f elements specifying the beginning and ending point of a line.* Where Vec4f is (x1, y1, x2, y2), point 1 is the start, point 2 - end.* Returned lines are strictly oriented depending on the gradient.* @param widths Return: Vector of widths of the regions, where the lines are found. E.g. Width of line.* @param precisions Return: Vector of precisions with which the lines are found.* @param nfas Return: Vector containing number of false alarms in the line region, with precision of 10%.* The bigger the value, logarithmically better the detection.* * -1 corresponds to 10 mean false alarms* * 0 corresponds to 1 mean false alarm* * 1 corresponds to 0.1 mean false alarms*/void flsd(std::vector<Vec4f>& lines,std::vector<double>& widths, std::vector<double>& precisions,std::vector<double>& nfas);/*** Finds the angles and the gradients of the image. Generates a list of pseudo ordered points.** @param threshold The minimum value of the angle that is considered defined, otherwise NOTDEF* @param n_bins The number of bins with which gradients are ordered by, using bucket sort.* @param ordered_points Return: Vector of coordinate points that are pseudo ordered by magnitude.* Pixels would be ordered by norm value, up to a precision given by max_grad/n_bins.*/void ll_angle(const double& threshold, const unsigned int& n_bins);/*** Grow a region starting from point s with a defined precision,* returning the containing points size and the angle of the gradients.** @param s Starting point for the region.* @param reg Return: Vector of points, that are part of the region* @param reg_angle Return: The mean angle of the region.* @param prec The precision by which each region angle should be aligned to the mean.*/void region_grow(const Point2i& s, std::vector<RegionPoint>& reg,double& reg_angle, const double& prec);/*** Finds the bounding rotated rectangle of a region.** @param reg The region of points, from which the rectangle to be constructed from.* @param reg_angle The mean angle of the region.* @param prec The precision by which points were found.* @param p Probability of a point with angle within 'prec'.* @param rec Return: The generated rectangle.*/void region2rect(const std::vector<RegionPoint>& reg, const double reg_angle,const double prec, const double p, rect& rec) const;/*** Compute region's angle as the principal inertia axis of the region.* @return Regions angle.*/double get_theta(const std::vector<RegionPoint>& reg, const double& x,const double& y, const double& reg_angle, const double& prec) const;/*** An estimation of the angle tolerance is performed by the standard deviation of the angle at points* near the region's starting point. Then, a new region is grown starting from the same point, but using the* estimated angle tolerance. If this fails to produce a rectangle with the right density of region points,* 'reduce_region_radius' is called to try to satisfy this condition.*/bool refine(std::vector<RegionPoint>& reg, double reg_angle,const double prec, double p, rect& rec, const double& density_th);/*** Reduce the region size, by elimination the points far from the starting point, until that leads to* rectangle with the right density of region points or to discard the region if too small.*/bool reduce_region_radius(std::vector<RegionPoint>& reg, double reg_angle,const double prec, double p, rect& rec, double density, const double& density_th);/*** Try some rectangles variations to improve NFA value. Only if the rectangle is not meaningful (i.e., log_nfa <= log_eps).* @return The new NFA value.*/double rect_improve(rect& rec) const;/*** Calculates the number of correctly aligned points within the rectangle.* @return The new NFA value.*/double rect_nfa(const rect& rec) const;/*** Computes the NFA values based on the total number of points, points that agree.* n, k, p are the binomial parameters.* @return The new NFA value.*/double nfa(const int& n, const int& k, const double& p) const;/*** Is the point at place 'address' aligned to angle theta, up to precision 'prec'?* @return Whether the point is aligned.*/bool isAligned(int x, int y, const double& theta, const double& prec) const;public:// Compare normstatic inline bool compare_norm( const normPoint& n1, const normPoint& n2 ){return (n1.norm > n2.norm);}};CV_EXPORTS Ptr<LineSegmentDetector> createLineSegmentDetector(int _refine, double _scale, double _sigma_scale, double _quant, double _ang_th,double _log_eps, double _density_th, int _n_bins){return makePtr<myLineSegmentDetectorImpl>(_refine, _scale, _sigma_scale, _quant, _ang_th,_log_eps, _density_th, _n_bins);}myLineSegmentDetectorImpl::myLineSegmentDetectorImpl(int _refine, double _scale, double _sigma_scale, double _quant,double _ang_th, double _log_eps, double _density_th, int _n_bins):img_width(0), img_height(0), LOG_NT(0), w_needed(false), p_needed(false), n_needed(false),SCALE(_scale), doRefine(_refine), SIGMA_SCALE(_sigma_scale), QUANT(_quant),ANG_TH(_ang_th), LOG_EPS(_log_eps), DENSITY_TH(_density_th), N_BINS(_n_bins){CV_Assert(_scale > 0 && _sigma_scale > 0 && _quant >= 0 &&_ang_th > 0 && _ang_th < 180 && _density_th >= 0 && _density_th < 1 &&_n_bins > 0);

// CV_UNUSED(_refine); CV_UNUSED(_log_eps);

// CV_Error(Error::StsNotImplemented, "Implementation has been removed due original code license issues");}void myLineSegmentDetectorImpl::detect(InputArray _image, OutputArray _lines,OutputArray _width, OutputArray _prec, OutputArray _nfa){// CV_INSTRUMENT_REGION();image = _image.getMat();CV_Assert(!image.empty() && image.type() == CV_8UC1);std::vector<Vec4f> lines;std::vector<double> w, p, n;w_needed = _width.needed();p_needed = _prec.needed();if (doRefine < LSD_REFINE_ADV)n_needed = false;elsen_needed = _nfa.needed();flsd(lines, w, p, n);Mat(lines).copyTo(_lines);if(w_needed) Mat(w).copyTo(_width);if(p_needed) Mat(p).copyTo(_prec);if(n_needed) Mat(n).copyTo(_nfa);// Clear used structuresordered_points.clear();// CV_UNUSED(_image); CV_UNUSED(_lines);

// CV_UNUSED(_width); CV_UNUSED(_prec); CV_UNUSED(_nfa);

// CV_Error(Error::StsNotImplemented, "Implementation has been removed due original code license issues");}void myLineSegmentDetectorImpl::drawSegments(InputOutputArray _image, InputArray lines){// CV_INSTRUMENT_REGION();CV_Assert(!_image.empty() && (_image.channels() == 1 || _image.channels() == 3));if (_image.channels() == 1){cvtColor(_image, _image, COLOR_GRAY2BGR);}Mat _lines = lines.getMat();const int N = _lines.checkVector(4);CV_Assert(_lines.depth() == CV_32F || _lines.depth() == CV_32S);// Draw segmentsif (_lines.depth() == CV_32F){for (int i = 0; i < N; ++i){const Vec4f& v = _lines.at<Vec4f>(i);const Point2f b(v[0], v[1]);const Point2f e(v[2], v[3]);line(_image, b, e, Scalar(0, 0, 255), 1);}}else{for (int i = 0; i < N; ++i){const Vec4i& v = _lines.at<Vec4i>(i);const Point2i b(v[0], v[1]);const Point2i e(v[2], v[3]);line(_image, b, e, Scalar(0, 0, 255), 1);}}}int myLineSegmentDetectorImpl::compareSegments(const Size& size, InputArray lines1, InputArray lines2, InputOutputArray _image){// CV_INSTRUMENT_REGION();Size sz = size;if (_image.needed() && _image.size() != size) sz = _image.size();CV_Assert(!sz.empty());Mat_<uchar> I1 = Mat_<uchar>::zeros(sz);Mat_<uchar> I2 = Mat_<uchar>::zeros(sz);Mat _lines1 = lines1.getMat();Mat _lines2 = lines2.getMat();const int N1 = _lines1.checkVector(4);const int N2 = _lines2.checkVector(4);CV_Assert(_lines1.depth() == CV_32F || _lines1.depth() == CV_32S);CV_Assert(_lines2.depth() == CV_32F || _lines2.depth() == CV_32S);if (_lines1.depth() == CV_32S)_lines1.convertTo(_lines1, CV_32F);if (_lines2.depth() == CV_32S)_lines2.convertTo(_lines2, CV_32F);// Draw segmentsfor(int i = 0; i < N1; ++i){const Point2f b(_lines1.at<Vec4f>(i)[0], _lines1.at<Vec4f>(i)[1]);const Point2f e(_lines1.at<Vec4f>(i)[2], _lines1.at<Vec4f>(i)[3]);line(I1, b, e, Scalar::all(255), 1);}for(int i = 0; i < N2; ++i){const Point2f b(_lines2.at<Vec4f>(i)[0], _lines2.at<Vec4f>(i)[1]);const Point2f e(_lines2.at<Vec4f>(i)[2], _lines2.at<Vec4f>(i)[3]);line(I2, b, e, Scalar::all(255), 1);}// Count the pixels that don't agreeMat Ixor;bitwise_xor(I1, I2, Ixor);int N = countNonZero(Ixor);if (_image.needed()){CV_Assert(_image.channels() == 3);Mat img = _image.getMatRef();CV_Assert(img.isContinuous() && I1.isContinuous() && I2.isContinuous());for (unsigned int i = 0; i < I1.total(); ++i){uchar i1 = I1.ptr()[i];uchar i2 = I2.ptr()[i];if (i1 || i2){unsigned int base_idx = i * 3;if (i1) img.ptr()[base_idx] = 255;else img.ptr()[base_idx] = 0;img.ptr()[base_idx + 1] = 0;if (i2) img.ptr()[base_idx + 2] = 255;else img.ptr()[base_idx + 2] = 0;}}}return N;}void myLineSegmentDetectorImpl::flsd(std::vector<Vec4f>& lines,std::vector<double>& widths, std::vector<double>& precisions,std::vector<double>& nfas){// Angle toleranceconst double prec = CV_PI * ANG_TH / 180;const double p = ANG_TH / 180;const double rho = QUANT / sin(prec); // gradient magnitude thresholdif(SCALE != 1){Mat gaussian_img;const double sigma = (SCALE < 1)?(SIGMA_SCALE / SCALE):(SIGMA_SCALE);const double sprec = 3;const unsigned int h = (unsigned int)(ceil(sigma * sqrt(2 * sprec * log(10.0))));Size ksize(1 + 2 * h, 1 + 2 * h); // kernel sizeGaussianBlur(image, gaussian_img, ksize, sigma);// Scale image to needed sizeresize(gaussian_img, scaled_image, Size(), SCALE, SCALE, INTER_LINEAR_EXACT);ll_angle(rho, N_BINS);}else{scaled_image = image;ll_angle(rho, N_BINS);}LOG_NT = 5 * (log10(double(img_width)) + log10(double(img_height))) / 2 + log10(11.0);const size_t min_reg_size = size_t(-LOG_NT/log10(p)); // minimal number of points in region that can give a meaningful event// // Initialize region only when needed// Mat region = Mat::zeros(scaled_image.size(), CV_8UC1);used = Mat_<uchar>::zeros(scaled_image.size()); // zeros = NOTUSEDstd::vector<RegionPoint> reg;// Search for line segmentsfor(size_t i = 0, points_size = ordered_points.size(); i < points_size; ++i){const Point2i& point = ordered_points[i].p;if((used.at<uchar>(point) == NOTUSED) && (angles.at<double>(point) != NOTDEF)){double reg_angle;region_grow(ordered_points[i].p, reg, reg_angle, prec);// Ignore small regionsif(reg.size() < min_reg_size) { continue; }// Construct rectangular approximation for the regionrect rec;region2rect(reg, reg_angle, prec, p, rec);double log_nfa = -1;if(doRefine > LSD_REFINE_NONE){// At least REFINE_STANDARD lvl.if(!refine(reg, reg_angle, prec, p, rec, DENSITY_TH)) { continue; }if(doRefine >= LSD_REFINE_ADV){// Compute NFAlog_nfa = rect_improve(rec);if(log_nfa <= LOG_EPS) { continue; }}}// Found new line// Add the offsetrec.x1 += 0.5; rec.y1 += 0.5;rec.x2 += 0.5; rec.y2 += 0.5;// scale the result values if a sub-sampling was performedif(SCALE != 1){rec.x1 /= SCALE; rec.y1 /= SCALE;rec.x2 /= SCALE; rec.y2 /= SCALE;rec.width /= SCALE;}//Store the relevant datalines.push_back(Vec4f(float(rec.x1), float(rec.y1), float(rec.x2), float(rec.y2)));if(w_needed) widths.push_back(rec.width);if(p_needed) precisions.push_back(rec.p);if(n_needed && doRefine >= LSD_REFINE_ADV) nfas.push_back(log_nfa);}}}void myLineSegmentDetectorImpl::ll_angle(const double& threshold,const unsigned int& n_bins){//Initialize dataangles = Mat_<double>(scaled_image.size());modgrad = Mat_<double>(scaled_image.size());img_width = scaled_image.cols;img_height = scaled_image.rows;// Undefined the down and right boundariesangles.row(img_height - 1).setTo(NOTDEF);angles.col(img_width - 1).setTo(NOTDEF);// Computing gradient for remaining pixelsdouble max_grad = -1;for(int y = 0; y < img_height - 1; ++y){const uchar* scaled_image_row = scaled_image.ptr<uchar>(y);const uchar* next_scaled_image_row = scaled_image.ptr<uchar>(y+1);double* angles_row = angles.ptr<double>(y);double* modgrad_row = modgrad.ptr<double>(y);for(int x = 0; x < img_width-1; ++x){int DA = next_scaled_image_row[x + 1] - scaled_image_row[x];int BC = scaled_image_row[x + 1] - next_scaled_image_row[x];int gx = DA + BC; // gradient x componentint gy = DA - BC; // gradient y componentdouble norm = std::sqrt((gx * gx + gy * gy) / 4.0); // gradient normmodgrad_row[x] = norm; // store gradientif (norm <= threshold) // norm too small, gradient no defined{angles_row[x] = NOTDEF;}else{angles_row[x] = fastAtan2(float(gx), float(-gy)) * DEG_TO_RADS; // gradient angle computationif (norm > max_grad) { max_grad = norm; }}}}// Compute histogram of gradient valuesdouble bin_coef = (max_grad > 0) ? double(n_bins - 1) / max_grad : 0; // If all image is smooth, max_grad <= 0for(int y = 0; y < img_height - 1; ++y){const double* modgrad_row = modgrad.ptr<double>(y);for(int x = 0; x < img_width - 1; ++x){normPoint _point;int i = int(modgrad_row[x] * bin_coef);_point.p = Point(x, y);_point.norm = i;ordered_points.push_back(_point);}}// Sortstd::sort(ordered_points.begin(), ordered_points.end(), compare_norm);}void myLineSegmentDetectorImpl::region_grow(const Point2i& s, std::vector<RegionPoint>& reg,double& reg_angle, const double& prec){reg.clear();// Point to this regionRegionPoint seed;seed.x = s.x;seed.y = s.y;seed.used = &used.at<uchar>(s);reg_angle = angles.at<double>(s);seed.angle = reg_angle;seed.modgrad = modgrad.at<double>(s);reg.push_back(seed);float sumdx = float(std::cos(reg_angle));float sumdy = float(std::sin(reg_angle));*seed.used = USED;//Try neighboring regionsfor (size_t i = 0;i<reg.size();i++){const RegionPoint& rpoint = reg[i];int xx_min = std::max(rpoint.x - 1, 0), xx_max = std::min(rpoint.x + 1, img_width - 1);int yy_min = std::max(rpoint.y - 1, 0), yy_max = std::min(rpoint.y + 1, img_height - 1);for(int yy = yy_min; yy <= yy_max; ++yy){uchar* used_row = used.ptr<uchar>(yy);const double* angles_row = angles.ptr<double>(yy);const double* modgrad_row = modgrad.ptr<double>(yy);for(int xx = xx_min; xx <= xx_max; ++xx){uchar& is_used = used_row[xx];if(is_used != USED &&(isAligned(xx, yy, reg_angle, prec))){const double& angle = angles_row[xx];// Add pointis_used = USED;RegionPoint region_point;region_point.x = xx;region_point.y = yy;region_point.used = &is_used;region_point.modgrad = modgrad_row[xx];region_point.angle = angle;reg.push_back(region_point);// Update region's anglesumdx += cos(float(angle));sumdy += sin(float(angle));// reg_angle is used in the isAligned, so it needs to be updates?reg_angle = fastAtan2(sumdy, sumdx) * DEG_TO_RADS;}}}}}void myLineSegmentDetectorImpl::region2rect(const std::vector<RegionPoint>& reg,const double reg_angle, const double prec, const double p, rect& rec) const{double x = 0, y = 0, sum = 0;for(size_t i = 0; i < reg.size(); ++i){const RegionPoint& pnt = reg[i];const double& weight = pnt.modgrad;x += double(pnt.x) * weight;y += double(pnt.y) * weight;sum += weight;}// Weighted sum must differ from 0CV_Assert(sum > 0);x /= sum;y /= sum;double theta = get_theta(reg, x, y, reg_angle, prec);// Find length and widthdouble dx = cos(theta);double dy = sin(theta);double l_min = 0, l_max = 0, w_min = 0, w_max = 0;for(size_t i = 0; i < reg.size(); ++i){double regdx = double(reg[i].x) - x;double regdy = double(reg[i].y) - y;double l = regdx * dx + regdy * dy;double w = -regdx * dy + regdy * dx;if(l > l_max) l_max = l;else if(l < l_min) l_min = l;if(w > w_max) w_max = w;else if(w < w_min) w_min = w;}// Store valuesrec.x1 = x + l_min * dx;rec.y1 = y + l_min * dy;rec.x2 = x + l_max * dx;rec.y2 = y + l_max * dy;rec.width = w_max - w_min;rec.x = x;rec.y = y;rec.theta = theta;rec.dx = dx;rec.dy = dy;rec.prec = prec;rec.p = p;// Min width of 1 pixelif(rec.width < 1.0) rec.width = 1.0;}double myLineSegmentDetectorImpl::get_theta(const std::vector<RegionPoint>& reg, const double& x,const double& y, const double& reg_angle, const double& prec) const{double Ixx = 0.0;double Iyy = 0.0;double Ixy = 0.0;// Compute inertia matrixfor(size_t i = 0; i < reg.size(); ++i){const double& regx = reg[i].x;const double& regy = reg[i].y;const double& weight = reg[i].modgrad;double dx = regx - x;double dy = regy - y;Ixx += dy * dy * weight;Iyy += dx * dx * weight;Ixy -= dx * dy * weight;}// Check if inertia matrix is nullCV_Assert(!(double_equal(Ixx, 0) && double_equal(Iyy, 0) && double_equal(Ixy, 0)));// Compute smallest eigenvaluedouble lambda = 0.5 * (Ixx + Iyy - sqrt((Ixx - Iyy) * (Ixx - Iyy) + 4.0 * Ixy * Ixy));// Compute angledouble theta = (fabs(Ixx)>fabs(Iyy))?double(fastAtan2(float(lambda - Ixx), float(Ixy))):double(fastAtan2(float(Ixy), float(lambda - Iyy))); // in degstheta *= DEG_TO_RADS;// Correct angle by 180 deg if necessaryif(angle_diff(theta, reg_angle) > prec) { theta += CV_PI; }return theta;}bool myLineSegmentDetectorImpl::refine(std::vector<RegionPoint>& reg, double reg_angle,const double prec, double p, rect& rec, const double& density_th){double density = double(reg.size()) / (dist(rec.x1, rec.y1, rec.x2, rec.y2) * rec.width);if (density >= density_th) { return true; }// Try to reduce angle tolerancedouble xc = double(reg[0].x);double yc = double(reg[0].y);const double& ang_c = reg[0].angle;double sum = 0, s_sum = 0;int n = 0;for (size_t i = 0; i < reg.size(); ++i){*(reg[i].used) = NOTUSED;if (dist(xc, yc, reg[i].x, reg[i].y) < rec.width){const double& angle = reg[i].angle;double ang_d = angle_diff_signed(angle, ang_c);sum += ang_d;s_sum += ang_d * ang_d;++n;}}CV_Assert(n > 0);double mean_angle = sum / double(n);// 2 * standard deviationdouble tau = 2.0 * sqrt((s_sum - 2.0 * mean_angle * sum) / double(n) + mean_angle * mean_angle);// Try new regionregion_grow(Point(reg[0].x, reg[0].y), reg, reg_angle, tau);if (reg.size() < 2) { return false; }region2rect(reg, reg_angle, prec, p, rec);density = double(reg.size()) / (dist(rec.x1, rec.y1, rec.x2, rec.y2) * rec.width);if (density < density_th){return reduce_region_radius(reg, reg_angle, prec, p, rec, density, density_th);}else{return true;}}bool myLineSegmentDetectorImpl::reduce_region_radius(std::vector<RegionPoint>& reg, double reg_angle,const double prec, double p, rect& rec, double density, const double& density_th){// Compute region's radiusdouble xc = double(reg[0].x);double yc = double(reg[0].y);double radSq1 = distSq(xc, yc, rec.x1, rec.y1);double radSq2 = distSq(xc, yc, rec.x2, rec.y2);double radSq = radSq1 > radSq2 ? radSq1 : radSq2;while(density < density_th){radSq *= 0.75*0.75; // Reduce region's radius to 75% of its value// Remove points from the region and update 'used' mapfor (size_t i = 0; i < reg.size(); ++i){if(distSq(xc, yc, double(reg[i].x), double(reg[i].y)) > radSq){// Remove point from the region*(reg[i].used) = NOTUSED;std::swap(reg[i], reg[reg.size() - 1]);reg.pop_back();--i; // To avoid skipping one point}}if(reg.size() < 2) { return false; }// Re-compute rectangleregion2rect(reg ,reg_angle, prec, p, rec);// Re-compute region points densitydensity = double(reg.size()) /(dist(rec.x1, rec.y1, rec.x2, rec.y2) * rec.width);}return true;}double myLineSegmentDetectorImpl::rect_improve(rect& rec) const{double delta = 0.5;double delta_2 = delta / 2.0;double log_nfa = rect_nfa(rec);if(log_nfa > LOG_EPS) return log_nfa; // Good rectangle// Try to improve// Finer precisionrect r = rect(rec); // Copyfor(int n = 0; n < 5; ++n){r.p /= 2;r.prec = r.p * CV_PI;double log_nfa_new = rect_nfa(r);if(log_nfa_new > log_nfa){log_nfa = log_nfa_new;rec = rect(r);}}if(log_nfa > LOG_EPS) return log_nfa;// Try to reduce widthr = rect(rec);for(unsigned int n = 0; n < 5; ++n){if((r.width - delta) >= 0.5){r.width -= delta;double log_nfa_new = rect_nfa(r);if(log_nfa_new > log_nfa){rec = rect(r);log_nfa = log_nfa_new;}}}if(log_nfa > LOG_EPS) return log_nfa;// Try to reduce one side of rectangler = rect(rec);for(unsigned int n = 0; n < 5; ++n){if((r.width - delta) >= 0.5){r.x1 += -r.dy * delta_2;r.y1 += r.dx * delta_2;r.x2 += -r.dy * delta_2;r.y2 += r.dx * delta_2;r.width -= delta;double log_nfa_new = rect_nfa(r);if(log_nfa_new > log_nfa){rec = rect(r);log_nfa = log_nfa_new;}}}if(log_nfa > LOG_EPS) return log_nfa;// Try to reduce other side of rectangler = rect(rec);for(unsigned int n = 0; n < 5; ++n){if((r.width - delta) >= 0.5){r.x1 -= -r.dy * delta_2;r.y1 -= r.dx * delta_2;r.x2 -= -r.dy * delta_2;r.y2 -= r.dx * delta_2;r.width -= delta;double log_nfa_new = rect_nfa(r);if(log_nfa_new > log_nfa){rec = rect(r);log_nfa = log_nfa_new;}}}if(log_nfa > LOG_EPS) return log_nfa;// Try finer precisionr = rect(rec);for(unsigned int n = 0; n < 5; ++n){if((r.width - delta) >= 0.5){r.p /= 2;r.prec = r.p * CV_PI;double log_nfa_new = rect_nfa(r);if(log_nfa_new > log_nfa){rec = rect(r);log_nfa = log_nfa_new;}}}return log_nfa;}double myLineSegmentDetectorImpl::rect_nfa(const rect& rec) const{int total_pts = 0, alg_pts = 0;double half_width = rec.width / 2.0;double dyhw = rec.dy * half_width;double dxhw = rec.dx * half_width;edge ordered_x[4];edge* min_y = &ordered_x[0];edge* max_y = &ordered_x[0]; // Will be used for loop rangeordered_x[0].p.x = int(rec.x1 - dyhw); ordered_x[0].p.y = int(rec.y1 + dxhw); ordered_x[0].taken = false;ordered_x[1].p.x = int(rec.x2 - dyhw); ordered_x[1].p.y = int(rec.y2 + dxhw); ordered_x[1].taken = false;ordered_x[2].p.x = int(rec.x2 + dyhw); ordered_x[2].p.y = int(rec.y2 - dxhw); ordered_x[2].taken = false;ordered_x[3].p.x = int(rec.x1 + dyhw); ordered_x[3].p.y = int(rec.y1 - dxhw); ordered_x[3].taken = false;std::sort(ordered_x, ordered_x + 4, AsmallerB_XoverY);// Find min y. And mark as taken. find max y.for(unsigned int i = 1; i < 4; ++i){if(min_y->p.y > ordered_x[i].p.y) {min_y = &ordered_x[i]; }if(max_y->p.y < ordered_x[i].p.y) {max_y = &ordered_x[i]; }}min_y->taken = true;// Find leftmost untaken point;edge* leftmost = 0;for(unsigned int i = 0; i < 4; ++i){if(!ordered_x[i].taken){if(!leftmost) // if uninitialized{leftmost = &ordered_x[i];}else if (leftmost->p.x > ordered_x[i].p.x){leftmost = &ordered_x[i];}}}CV_Assert(leftmost != NULL);leftmost->taken = true;// Find rightmost untaken point;edge* rightmost = 0;for(unsigned int i = 0; i < 4; ++i){if(!ordered_x[i].taken){if(!rightmost) // if uninitialized{rightmost = &ordered_x[i];}else if (rightmost->p.x < ordered_x[i].p.x){rightmost = &ordered_x[i];}}}CV_Assert(rightmost != NULL);rightmost->taken = true;// Find last untaken point;edge* tailp = 0;for(unsigned int i = 0; i < 4; ++i){if(!ordered_x[i].taken){if(!tailp) // if uninitialized{tailp = &ordered_x[i];}else if (tailp->p.x > ordered_x[i].p.x){tailp = &ordered_x[i];}}}CV_Assert(tailp != NULL);tailp->taken = true;double flstep = (min_y->p.y != leftmost->p.y) ?(min_y->p.x - leftmost->p.x) / (min_y->p.y - leftmost->p.y) : 0; //first left stepdouble slstep = (leftmost->p.y != tailp->p.x) ?(leftmost->p.x - tailp->p.x) / (leftmost->p.y - tailp->p.x) : 0; //second left stepdouble frstep = (min_y->p.y != rightmost->p.y) ?(min_y->p.x - rightmost->p.x) / (min_y->p.y - rightmost->p.y) : 0; //first right stepdouble srstep = (rightmost->p.y != tailp->p.x) ?(rightmost->p.x - tailp->p.x) / (rightmost->p.y - tailp->p.x) : 0; //second right stepdouble lstep = flstep, rstep = frstep;double left_x = min_y->p.x, right_x = min_y->p.x;// Loop around all points in the region and count those that are aligned.int min_iter = min_y->p.y;int max_iter = max_y->p.y;for(int y = min_iter; y <= max_iter; ++y){if (y < 0 || y >= img_height) continue;for(int x = int(left_x); x <= int(right_x); ++x){if (x < 0 || x >= img_width) continue;++total_pts;if(isAligned(x, y, rec.theta, rec.prec)){++alg_pts;}}if(y >= leftmost->p.y) { lstep = slstep; }if(y >= rightmost->p.y) { rstep = srstep; }left_x += lstep;right_x += rstep;}return nfa(total_pts, alg_pts, rec.p);}double myLineSegmentDetectorImpl::nfa(const int& n, const int& k, const double& p) const{// Trivial casesif(n == 0 || k == 0) { return -LOG_NT; }if(n == k) { return -LOG_NT - double(n) * log10(p); }double p_term = p / (1 - p);double log1term = (double(n) + 1) - log_gamma(double(k) + 1)- log_gamma(double(n-k) + 1)+ double(k) * log(p) + double(n-k) * log(1.0 - p);double term = exp(log1term);if(double_equal(term, 0)){if(k > n * p) return -log1term / M_LN10 - LOG_NT;else return -LOG_NT;}// Compute more terms if neededdouble bin_tail = term;double tolerance = 0.1; // an error of 10% in the result is acceptedfor(int i = k + 1; i <= n; ++i){double bin_term = double(n - i + 1) / double(i);double mult_term = bin_term * p_term;term *= mult_term;bin_tail += term;if(bin_term < 1){double err = term * ((1 - pow(mult_term, double(n-i+1))) / (1 - mult_term) - 1);if(err < tolerance * fabs(-log10(bin_tail) - LOG_NT) * bin_tail) break;}}return -log10(bin_tail) - LOG_NT;}inline bool myLineSegmentDetectorImpl::isAligned(int x, int y, const double& theta, const double& prec) const{if(x < 0 || y < 0 || x >= angles.cols || y >= angles.rows) { return false; }const double& a = angles.at<double>(y, x);if(a == NOTDEF) { return false; }// It is assumed that 'theta' and 'a' are in the range [-pi,pi]double n_theta = theta - a;if(n_theta < 0) { n_theta = -n_theta; }if(n_theta > M_3_2_PI){n_theta -= M_2__PI;if(n_theta < 0) n_theta = -n_theta;}return n_theta <= prec;}} // namespace cv

在LSDDetector_custom.cpp文件中引用头文件

#include "my_lsd.hpp"再次编译运行

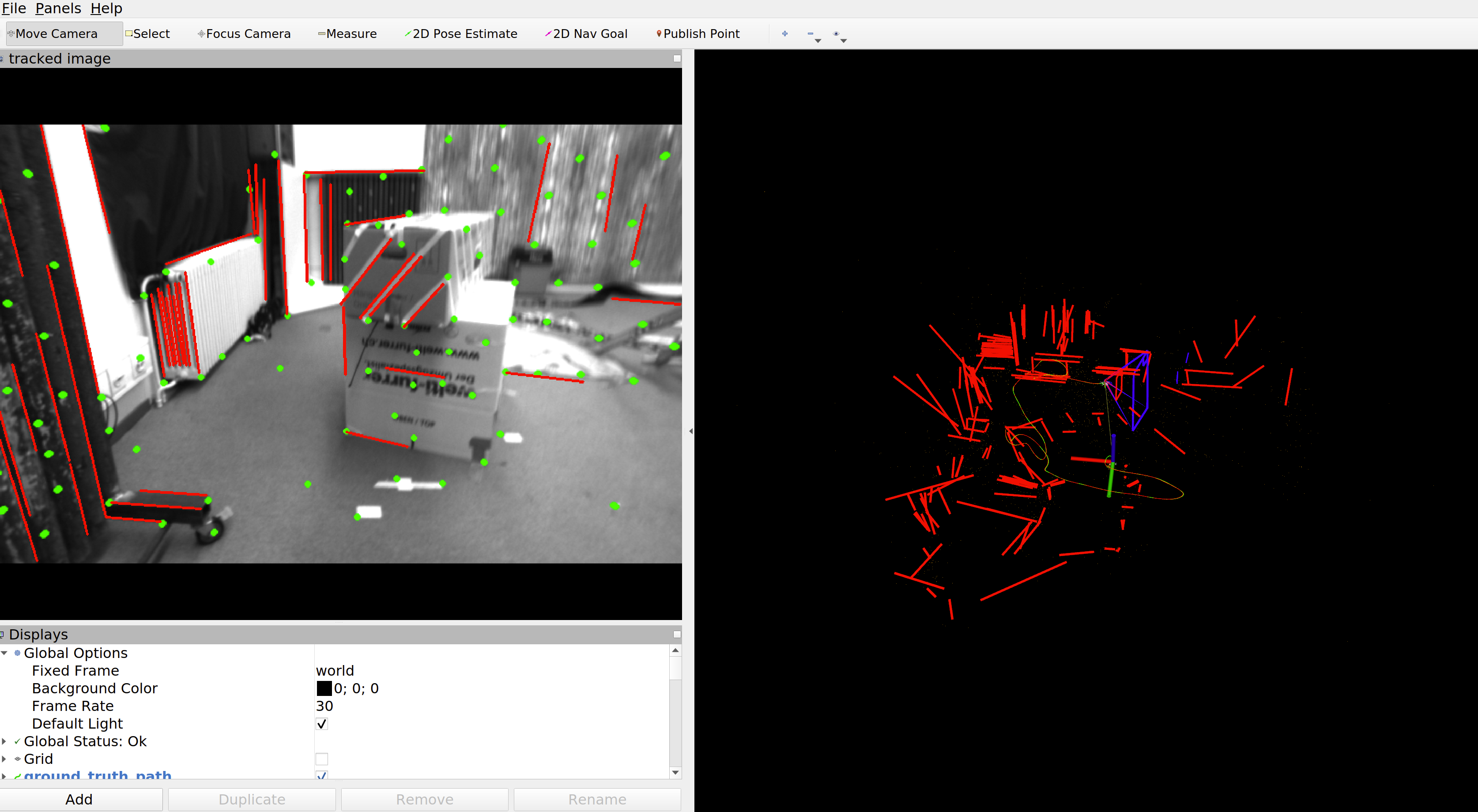

四、运行

source devel/setup.bash

roslaunch plvins_estimator plvins_show_linepoint.launch

rosbag play MH_05_difficult.bag注意:我们此时需要需要将src/PL-VINSvins_estimator/launch/下的plvins-show-linepoint.launch改为plvins_show_linepoint.launch(注意是下划线)文件名

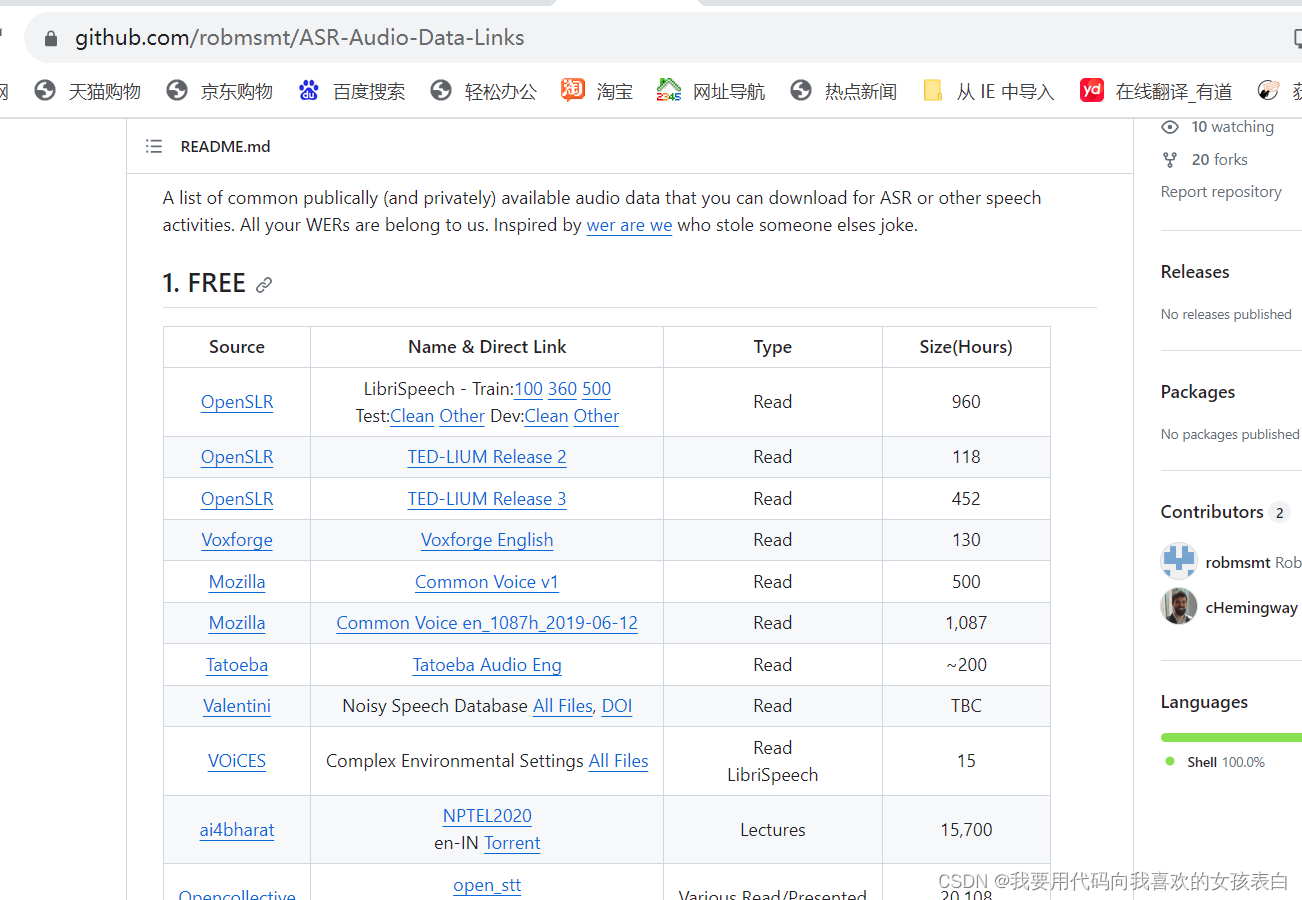

数据集下载地址如下:

kmavvisualinertialdatasets – ASL Datasets

博主github仓库中的代码全部都修改完毕,适用ubuntu20.04 opencv4

相关文章:

工程(十四)——ubuntu20.04 PL-VINS

博主创建了一个科研互助群Q:772356582,欢迎大家加入讨论。这是一个科研互助群,主要围绕机器人,无人驾驶,无人机方面的感知定位,决策规划,以及论文发表经验,以方便大家很好很快的科研…...

C复习-结构struct+bit field+union

参考: 里科《C和指针》 结构的声明 struct {int a;char b; } x; struct {int a;char b; } y[20], *z;// 会报错,因为z和x虽然都没有名字,但是并不是一个东西 z &x;struct SIMPLE {int a;char b; }; // 这样就对了,因为名字匹…...

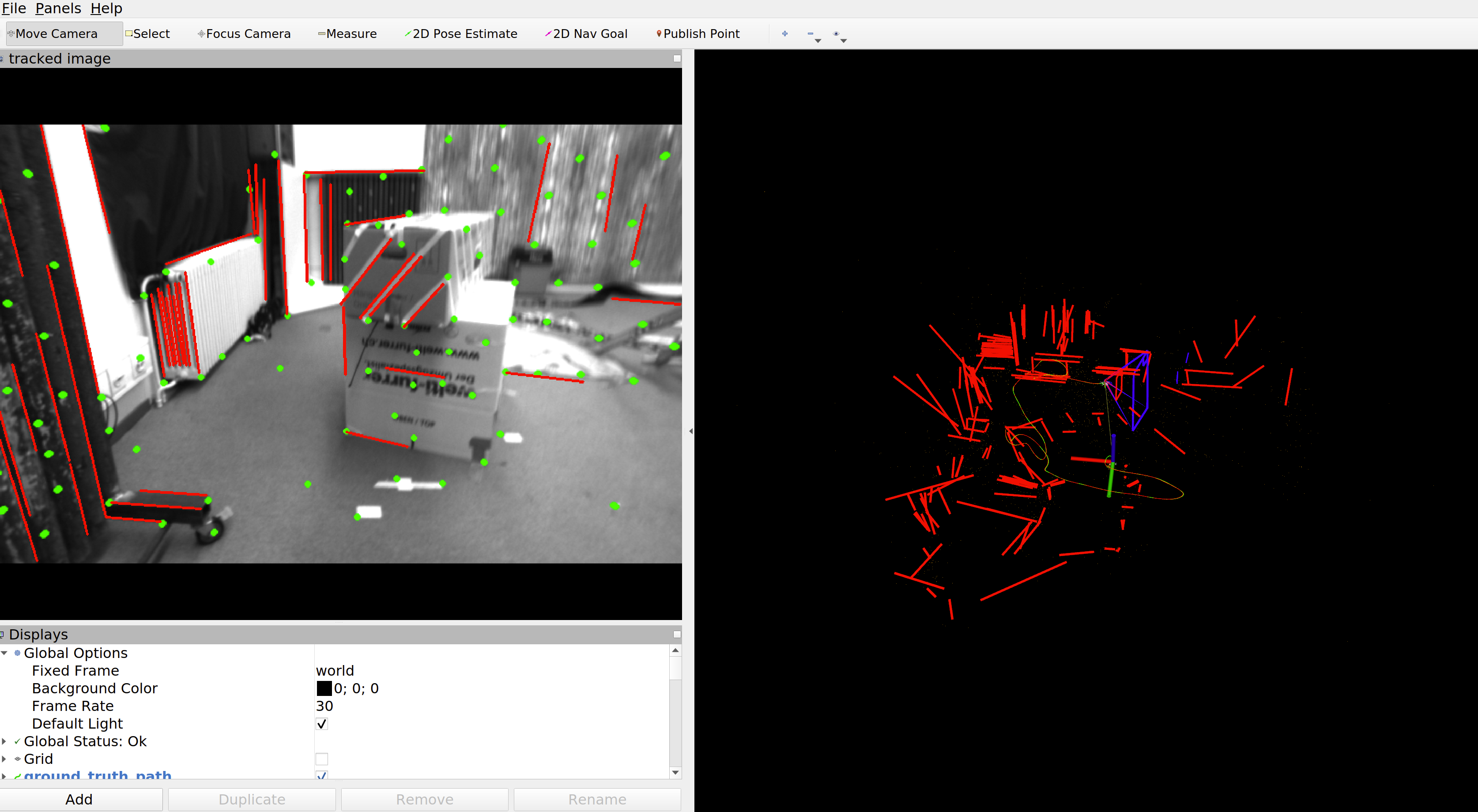

1 快速了解Paimon数据湖核心原理及架构

1.1 什么是Apache Paimon Apache Paimon的前身属于Flink的子项目:Flink Table Store。 目前业内主流的数据湖存储项目都是面向批处理场景设计的,在数据更新处理时效上无法满足流式数据湖的需求,因此Flink社区在2022年的时候内部孵化了 …...

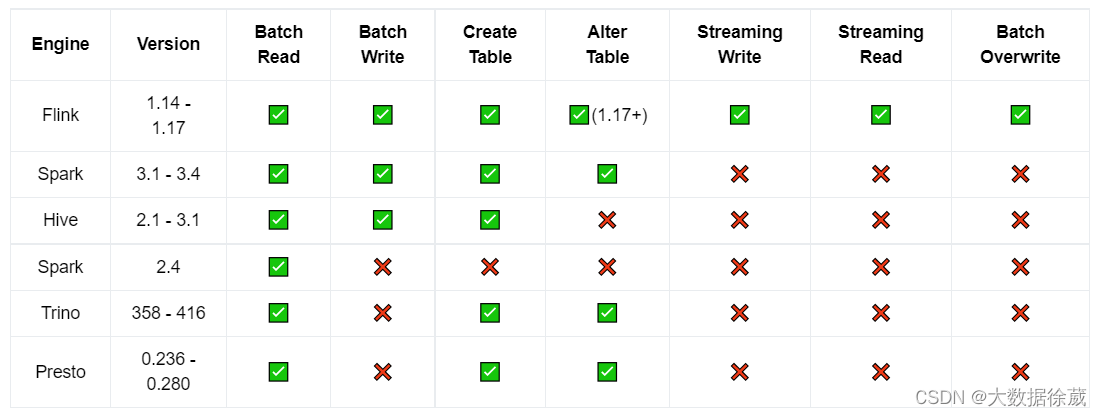

chrome v3开发插件实现所有网站允许跨域

场景: chrome 插件 升级到v3后,原来修改请求响应都变成异步,即无法同步拦截来修改请求响应。 在v3中也不支持修改请求响应内容。 问题:如何在chrome v3中允许其他网站跨域呢。 方式一:禁用chrome跨域,禁…...

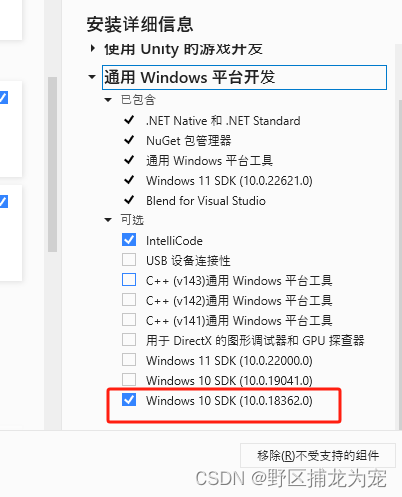

unity Holoens2开发,使用Vuforia识别实体或图片 触发交互

建议:先看官方文档 我使用的utniy 版本:Unity 2021.3.6f1 官方建议:混合现实工具包简介 - 设置项目并使用手势交互 - Training | Microsoft Learn 配置了正确工具的 Windows 10 或 11 电脑Windows 10 SDK 10.0.18362.0 或更高版本安装了 U…...

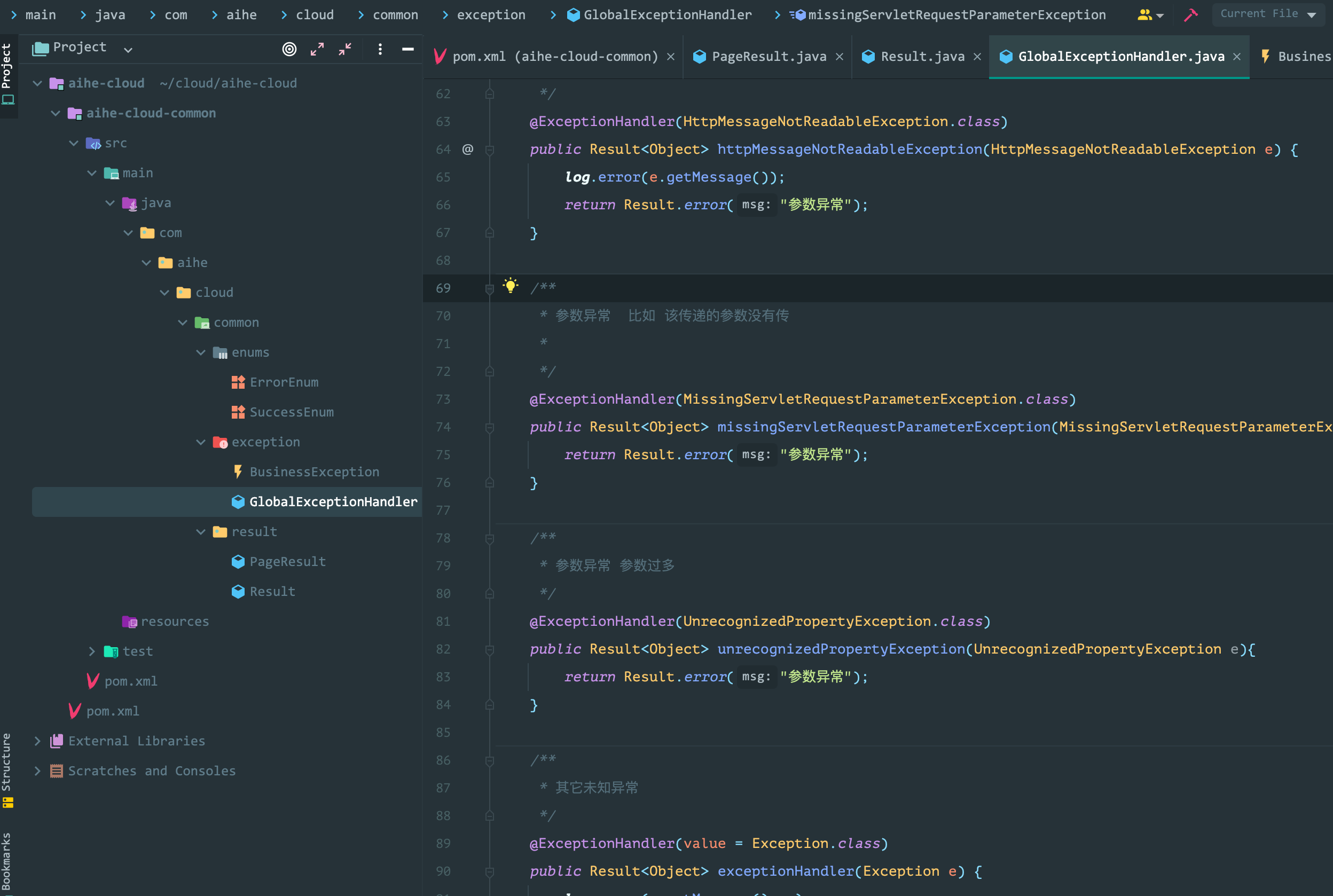

从零开始搭建微服务(一)

构建项目父工程 添加公共核心模块 安装nacos 安装nacos nacos 文档地址: https://nacos.io/zh-cn/docs/what-is-nacos.html 本文使用版本2.2.2 下载地址:https://github.com/alibaba/nacos/archive/refs/tags/2.2.2.zip 使用nacos 我们下载是源代码 解…...

一种可以实现安全便捷文件摆渡的跨网文件安全交换软件

为了保护数据的安全性和完整性,很多企业都采用了内外网物理隔离的方式,防止核心数据泄露或被恶意篡改。然而,这也给企业内部或与外部合作伙伴之间的文件交换带来了很多不便和挑战。如何在保证数据安全的前提下,实现跨网文件的快速…...

『 MySQL数据库 』数据库基础之表的基本操作

文章目录 创建表🗡查看表🗡✒ 查看表内所有信息(描述\表结构等)✒ 根据条件查看表内数据✒ 查看表的具体详细信息: 修改表🗡✒ 修改表名:✒ 修改表的存储引擎、编码集(字符集和校验集):✒ 表内插入数据:insert into✒ 在表中新添一个字段(列)…...

如何提高40%的Docker构建时间

1. 背景 在产品开发中构建docker镜像,随着时间的推移,会变得越来越大,构建时间也越来越长。我的目标是构建时间不超过 5 分钟。 2. 遵循Dockerfile的最佳实践 我们首先确保Dockerfile文件遵循Docker官方的最佳实践,具体做法有: 尽量使用官方的基础镜像,Docker推荐使用…...

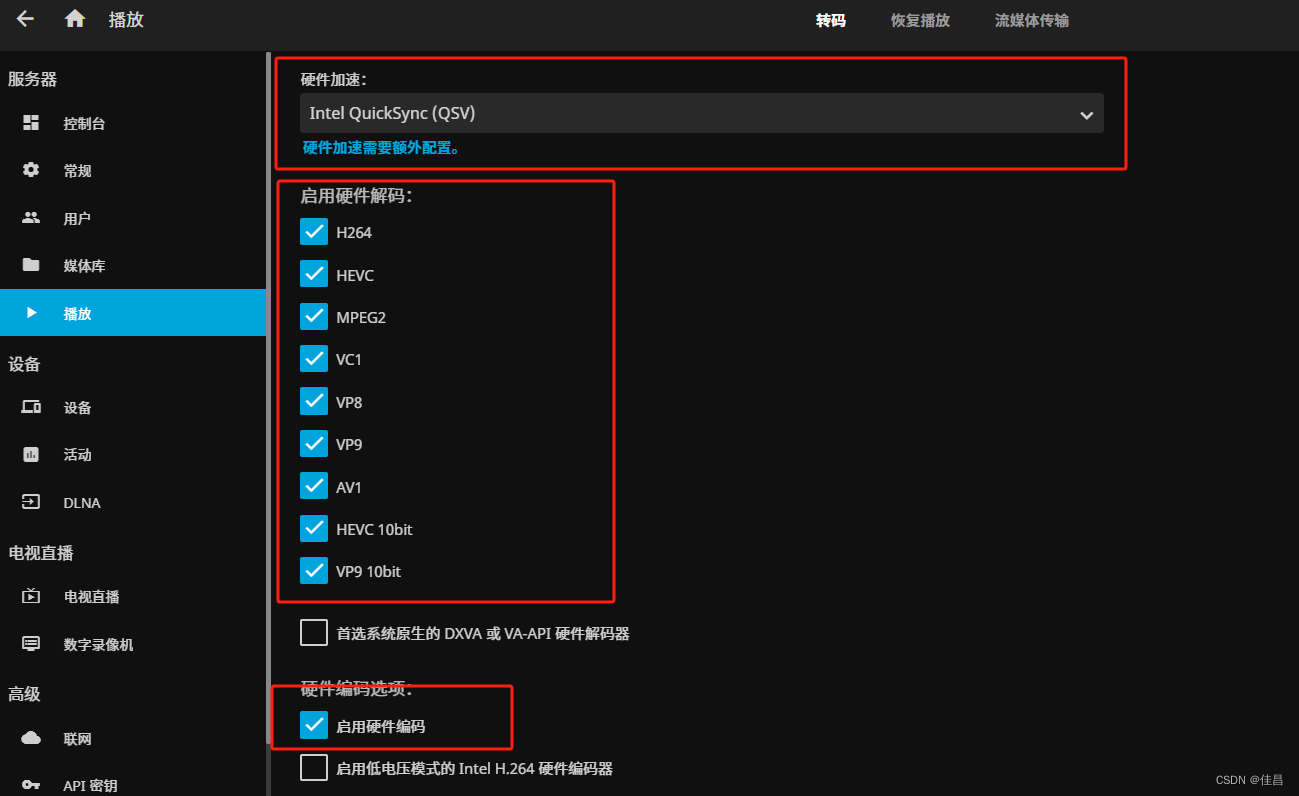

真正解决jellyfin硬解码转码

前段时间入手一个DS423集成显卡UHD600,搭了一个jellyfin,发现网上关于硬解码的教程基本都存在问题,没有真正解决我的硬解码问题。经过一系列分析修改,最终实现硬解码。先贴效果图: 下载安装jellyfin这里就不叙述&#…...

声音训练数据集哪里找?中文、英文

一般找数据集的都是需要训练底膜的,大家git上找的开源项目大多是预训练模型。预训练就是别人已经训练好的底膜,你在他的基础上进行调整。而我们训练如果他这个模型不理想是需要训练底膜的。 找的方式是从git开源上找 中文 推荐MockingBird,…...

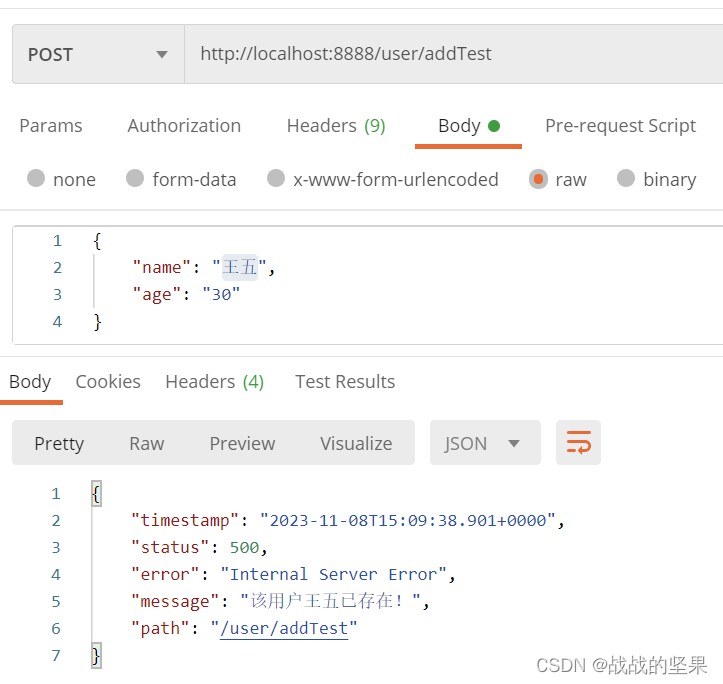

springboot中如何同时操作同一功能

问题描述 测试阶段,由于存在某一功能的同时操作,该功能还是入库逻辑,此时若不进行处理,会造成插入表中多条重复数据,为此该问题需要修复。 解决办法 在接口开始进行对是否存在某个key值的判断,若不存在&…...

YOLOWeeds: 用于棉花生产系统中多类杂草检测的 YOLO 目标检测器的新基准

YOLOWeeds: A novel benchmark of YOLO object detectors for multi-class weed detection in cotton production systems 摘要1、介绍2、总结 摘要 过度依赖除草剂控制杂草,加速了杂草的抗除草剂进化,引起了对环境、食品安全和人类健康的日益关注。自动…...

Vue3:自定义图标选择器(包含 SVG 图标封装)

文章目录 一、准备工作(在 Vue3 中使用 SVG)二、封装 SVG三、封装图标选择器四、Demo 效果预览: 一、准备工作(在 Vue3 中使用 SVG) 本文参考:https://blog.csdn.net/houtengyang/article/details/1290431…...

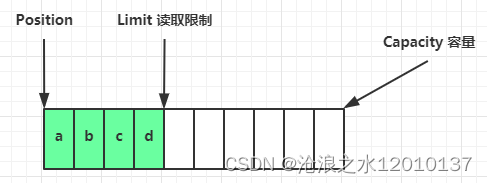

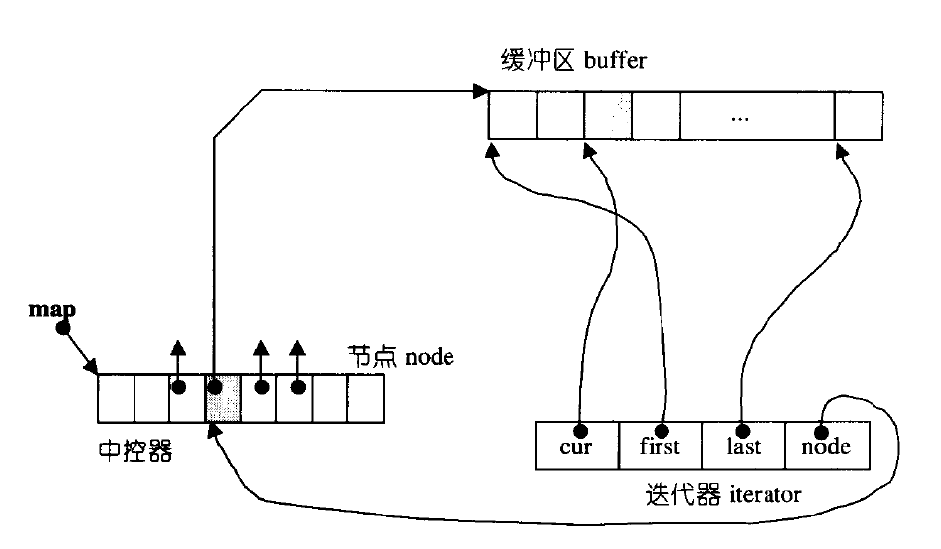

NIO讲解

一:什么是NIO? 二:NIO三大组件 1. channel channel 有一点类似于 stream,它就是读写数据的双向通道,可以从 channel 将数据读入 buffer,也可以将 buffer 的数据写入 channel,而之前的 stream 要么是输入…...

react中jest配置,解决node_modules报错esm无法解析的问题

重点关注: transformIgnorePatterns: [ "/node_modules/(?!(?:jmfe)/)", ], moduleNameMapper: { "\\.(css|less|scss|sss|styl)$": "jest-css-modules" } 并安装jest-css-modules(npm i --save-dev jest-css-mo…...

Qt6,使用 UI 界面完成命令执行自动化的设计

一、需要完成的功能 在子对话框(CmdChildQt)中,点击 “执行” 按钮,将多个命令行指令,依次输入到父对话框(CmdQt)的编辑框中并且执行,要求如下: 在前一个命令执行完成后&…...

Apache Maven;会话技术

Apache Maven是一个项目管理和构建工具,它基于项目对象模型(POM)的概念,通过一小段描述信息来管理项目的构建、报告和文档。 Maven模型有: 项目对象模型、依赖管理模型、插件 会话技术: 会话:…...

Azure - 机器学习:使用自动化机器学习训练计算机视觉模型的数据架构

目录 一、用于训练的数据架构图像分类(二进制/多类)多标签图像分类对象检测实例分段 二、用于推理的数据格式输入格式输出格式图像分类多标签图像分类对象检测实例分段 了解如何设置Azure中 JSONL 文件格式,以便在训练和推理期间在计算机视觉…...

【C++】stack | queue | priority_queue | deque

一、stack栈 介绍 1.栈是一种特殊的线性表,其元素遵循“后进先出”的原则,即仅允许在在表的一端进行插入、删除操作,这一模式被称为“后进先出”或LIFO(last in fisrt out)。 2.从底层实现来看,stack是作…...

无法与IP建立连接,未能下载VSCode服务器

如题,在远程连接服务器的时候突然遇到了这个提示。 查阅了一圈,发现是VSCode版本自动更新惹的祸!!! 在VSCode的帮助->关于这里发现前几天VSCode自动更新了,我的版本号变成了1.100.3 才导致了远程连接出…...

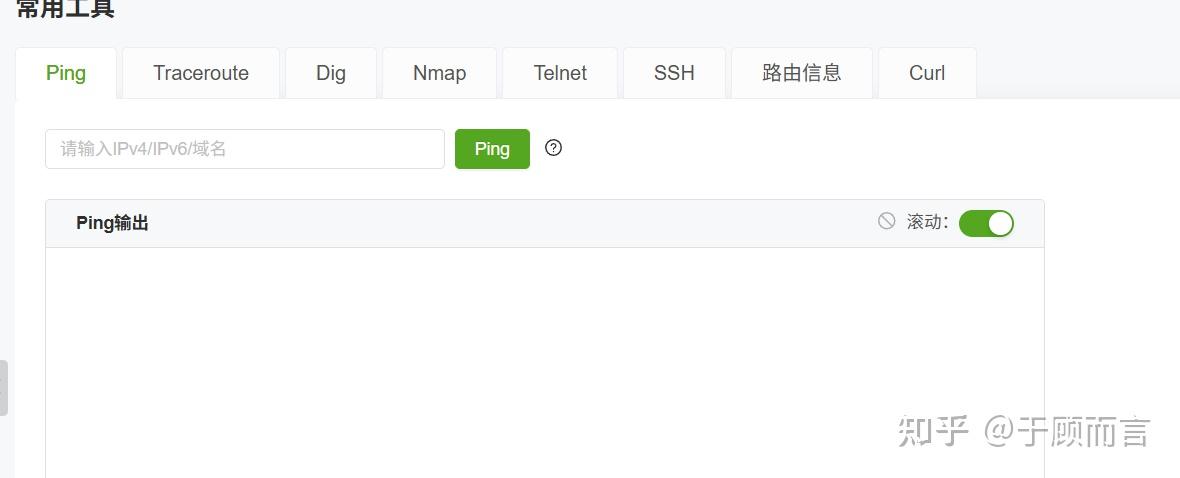

【网络安全产品大调研系列】2. 体验漏洞扫描

前言 2023 年漏洞扫描服务市场规模预计为 3.06(十亿美元)。漏洞扫描服务市场行业预计将从 2024 年的 3.48(十亿美元)增长到 2032 年的 9.54(十亿美元)。预测期内漏洞扫描服务市场 CAGR(增长率&…...

论文浅尝 | 基于判别指令微调生成式大语言模型的知识图谱补全方法(ISWC2024)

笔记整理:刘治强,浙江大学硕士生,研究方向为知识图谱表示学习,大语言模型 论文链接:http://arxiv.org/abs/2407.16127 发表会议:ISWC 2024 1. 动机 传统的知识图谱补全(KGC)模型通过…...

基于Docker Compose部署Java微服务项目

一. 创建根项目 根项目(父项目)主要用于依赖管理 一些需要注意的点: 打包方式需要为 pom<modules>里需要注册子模块不要引入maven的打包插件,否则打包时会出问题 <?xml version"1.0" encoding"UTF-8…...

Axios请求超时重发机制

Axios 超时重新请求实现方案 在 Axios 中实现超时重新请求可以通过以下几种方式: 1. 使用拦截器实现自动重试 import axios from axios;// 创建axios实例 const instance axios.create();// 设置超时时间 instance.defaults.timeout 5000;// 最大重试次数 cons…...

工业自动化时代的精准装配革新:迁移科技3D视觉系统如何重塑机器人定位装配

AI3D视觉的工业赋能者 迁移科技成立于2017年,作为行业领先的3D工业相机及视觉系统供应商,累计完成数亿元融资。其核心技术覆盖硬件设计、算法优化及软件集成,通过稳定、易用、高回报的AI3D视觉系统,为汽车、新能源、金属制造等行…...

招商蛇口 | 执笔CID,启幕低密生活新境

作为中国城市生长的力量,招商蛇口以“美好生活承载者”为使命,深耕全球111座城市,以央企担当匠造时代理想人居。从深圳湾的开拓基因到西安高新CID的战略落子,招商蛇口始终与城市发展同频共振,以建筑诠释对土地与生活的…...

LLMs 系列实操科普(1)

写在前面: 本期内容我们继续 Andrej Karpathy 的《How I use LLMs》讲座内容,原视频时长 ~130 分钟,以实操演示主流的一些 LLMs 的使用,由于涉及到实操,实际上并不适合以文字整理,但还是决定尽量整理一份笔…...

【LeetCode】算法详解#6 ---除自身以外数组的乘积

1.题目介绍 给定一个整数数组 nums,返回 数组 answer ,其中 answer[i] 等于 nums 中除 nums[i] 之外其余各元素的乘积 。 题目数据 保证 数组 nums之中任意元素的全部前缀元素和后缀的乘积都在 32 位 整数范围内。 请 不要使用除法,且在 O…...

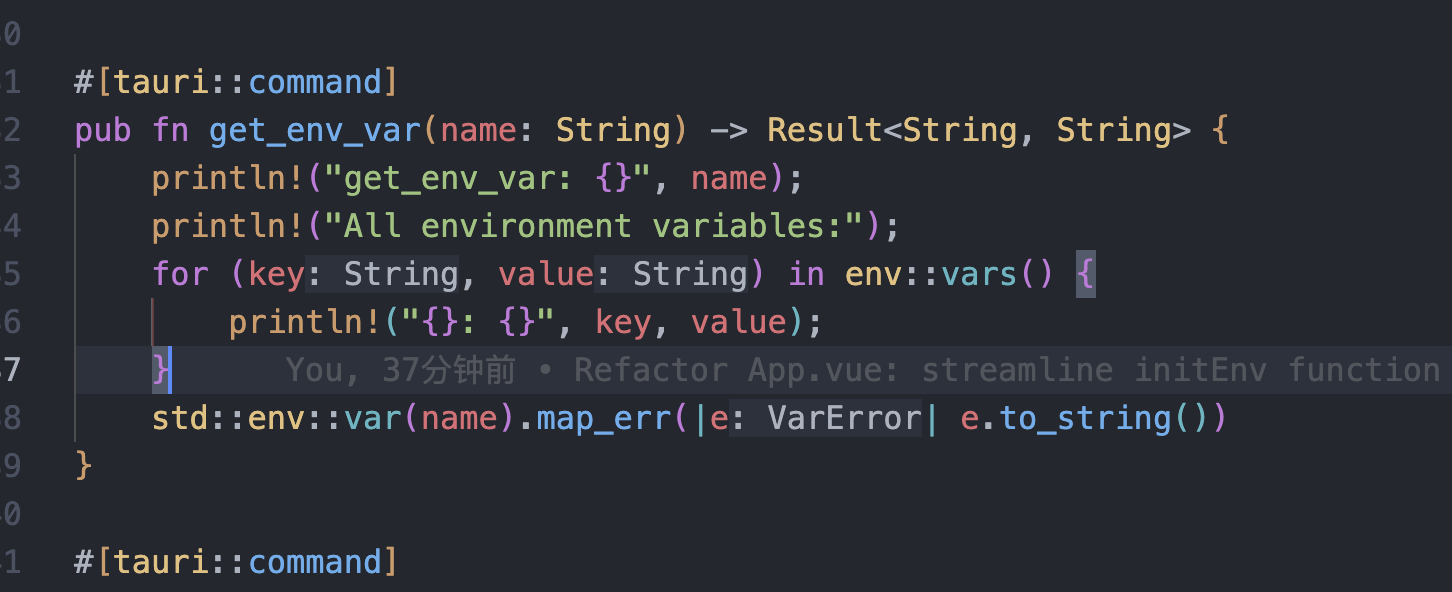

tauri项目,如何在rust端读取电脑环境变量

如果想在前端通过调用来获取环境变量的值,可以通过标准的依赖: std::env::var(name).ok() 想在前端通过调用来获取,可以写一个command函数: #[tauri::command] pub fn get_env_var(name: String) -> Result<String, Stri…...