Hudi入门

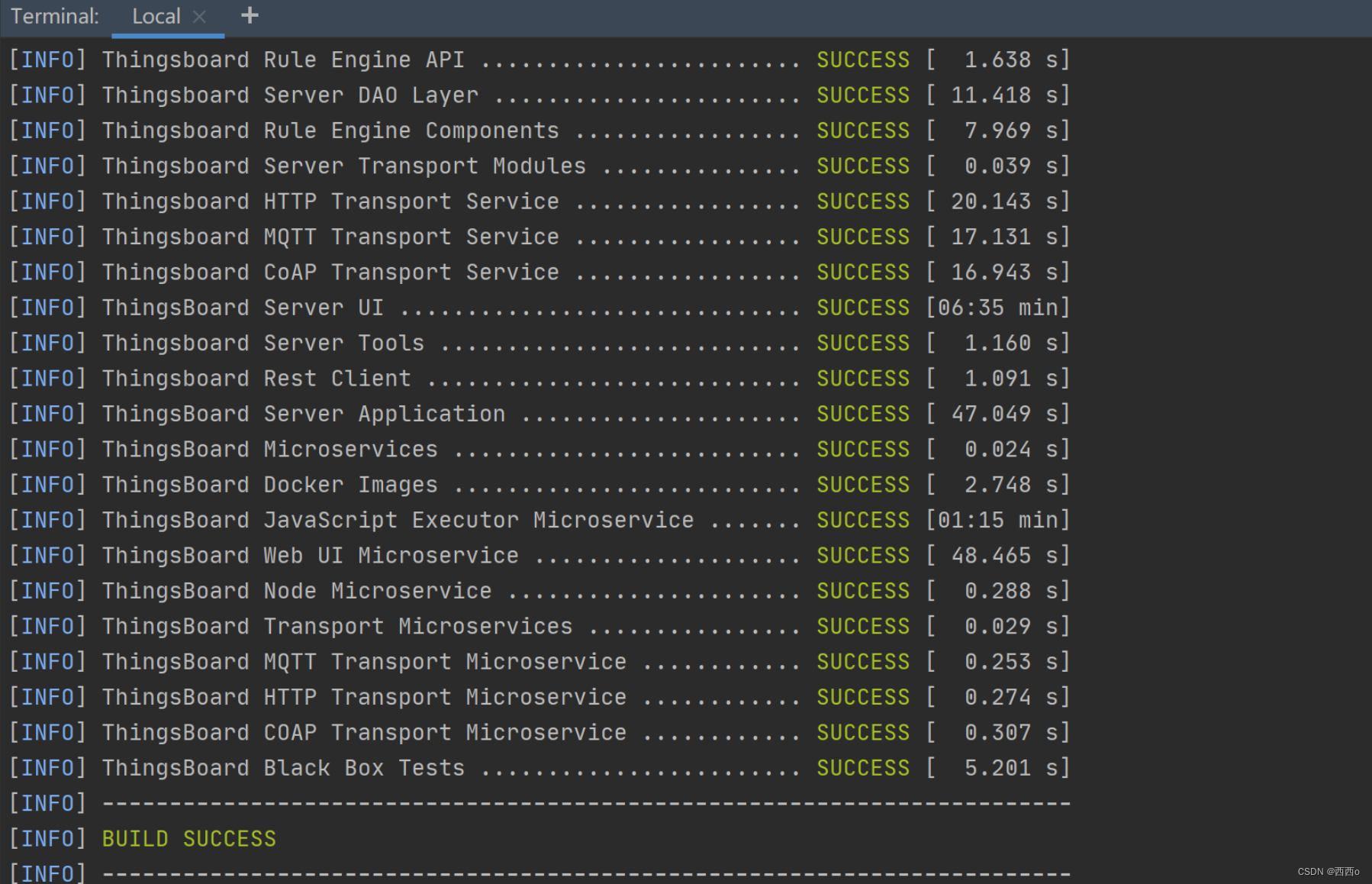

一、Hudi编译安装

1.下载

https://archive.apache.org/dist/hudi/0.9.0/hudi-0.9.0.src.tgz

2.maven编译

mvn clean install -DskipTests -Dscala2.12 -Dspark3

3.配置spark与hudi依赖包

[root@master hudi-spark-jars]# ll

total 37876

-rw-r--r-- 1 root root 38615211 Oct 27 16:13 hudi-spark3-bundle_2.12-0.9.0.jar

-rw-r--r-- 1 root root 161826 Oct 27 16:13 spark-avro_2.12-3.0.1.jar

-rw-r--r-- 1 root root 2777 Oct 27 16:13 spark_unused-1.0.0.jar

二、Hudi基础使用

1.启动cli

[root@master hudi-cli]# hudi-cli.sh

2.启动spark-shell添加hudi-jars

spark-shell \

--master local[2] \

--jars /usr/local/src/hudi/hudi-spark-jars/hudi-spark3-bundle_2.12-0.9.0.jar,/usr/local/src/hudi/hudi-spark-jars/spark-avro_2.12-3.0.1.jar,/usr/local/src/hudi/hudi-spark-jars/spark_unused-1.0.0.jar \

--conf "spark.serializer=org.apache.spark.serializer.KryoSerializer"

3.模拟产生数据

import org.apache.hudi.QuickstartUtils._

import scala.collection.JavaConversions._

import org.apache.spark.sql.SaveMode._

import org.apache.hudi.DataSourceReadOptions._

import org.apache.hudi.DataSourceWriteOptions._

import org.apache.hudi.config.HoodieWriteConfig._val tableName="hudi_trips_cow"

val basePath="hdfs://master:9000/hudi-warehouse/hudi_trips_cow"val dataGen=new DataGeneratorval inserts=convertToStringList(dataGen.generateInserts(10))val df=spark.read.json(spark.sparkContext.parallelize(inserts,2))df.printSchema()

-----------------------------------------------------------------------------------------

root|-- begin_lat: double (nullable = true)|-- begin_lon: double (nullable = true)|-- driver: string (nullable = true)|-- end_lat: double (nullable = true)|-- end_lon: double (nullable = true)|-- fare: double (nullable = true)|-- partitionpath: string (nullable = true)|-- rider: string (nullable = true)|-- ts: long (nullable = true)|-- uuid: string (nullable = true)

-----------------------------------------------------------------------------------------df.select("rider","begin_lat","begin_lon","driver","fare","uuid","ts").show(10,truncate=false)

4.保存到hudi表

df.write.mode(Overwrite).format("hudi").options(getQuickstartWriteConfigs).option(PRECOMBINE_FIELD_OPT_KEY, "ts").option(RECORDKEY_FIELD_OPT_KEY, "uuid").option(PARTITIONPATH_FIELD_OPT_KEY, "partitionpath").option(TABLE_NAME, tableName).save(basePath)

5.查询hudi数据

val tripsSnapshotDF = spark.read.format("hudi").load("hdfs://master:9000/hudi-warehouse/hudi_trips_cow" + "/*/*/*/*")tripsSnapshotDF.printSchema()

-----------------------------------------------------------------------------------------

root|-- _hoodie_commit_time: string (nullable = true) --提交数据的提交时间 |-- _hoodie_commit_seqno: string (nullable = true) --提交数据的编号 |-- _hoodie_record_key: string (nullable = true) --提交数据的key |-- _hoodie_partition_path: string (nullable = true) --提交数据的存储路径|-- _hoodie_file_name: string (nullable = true) --提交数据的所在文件名称|-- begin_lat: double (nullable = true)|-- begin_lon: double (nullable = true)|-- driver: string (nullable = true)|-- end_lat: double (nullable = true)|-- end_lon: double (nullable = true)|-- fare: double (nullable = true)|-- partitionpath: string (nullable = true)|-- rider: string (nullable = true)|-- ts: long (nullable = true)|-- uuid: string (nullable = true)

-----------------------------------------------------------------------------------------

6.注册为临时视图

tripsSnapshotDF.createOrReplaceTempView("hudi_trips_snapshot")

7.查询任务

乘车费用 大于 20 信息数据

scala> spark.sql("select fare, begin_lon, begin_lat, ts from hudi_trips_snapshot where fare > 20.0").show()

+------------------+-------------------+-------------------+-------------+

| fare| begin_lon| begin_lat| ts|

+------------------+-------------------+-------------------+-------------+

| 33.92216483948643| 0.9694586417848392| 0.1856488085068272|1698046206939|

| 93.56018115236618|0.14285051259466197|0.21624150367601136|1698296387405|

| 64.27696295884016| 0.4923479652912024| 0.5731835407930634|1697991665477|

| 27.79478688582596| 0.6273212202489661|0.11488393157088261|1697865605719|

| 43.4923811219014| 0.8779402295427752| 0.6100070562136587|1698233221527|

| 66.62084366450246|0.03844104444445928| 0.0750588760043035|1697912700216|

|34.158284716382845|0.46157858450465483| 0.4726905879569653|1697805433844|

| 41.06290929046368| 0.8192868687714224| 0.651058505660742|1698234304674|

+------------------+-------------------+-------------------+-------------+

选取字段查询数据

spark.sql("select _hoodie_commit_time, _hoodie_record_key, _hoodie_partition_path, rider, driver, fare from hudi_trips_snapshot").show()

8.表数据结构

.hoodie文件

.hoodie 文件:由于CRUD的零散性,每一次的操作都会生成一个文件,这些小文件越来越多后,会严重影响HDFS的性能,Hudi设计了一套文件合并机制。 .hoodie文件夹中存放了对应的文件合并操作相关的日志文件。Hudi把随着时间流逝,对表的一系列CRUD操作叫做Timeline。Timeline中某一次的操作,叫做Instant。Instant包含以下信息:Instant Action,记录本次操作是一次数据提交(COMMITS),还是文件合并(COMPACTION),或者是文件清理(CLEANS);Instant Time,本次操作发生的时间;State,操作的状态,发起(REQUESTED),进行中(INFLIGHT),还是已完成(COMPLETED);

amricas和asia文件

amricas和asia相关的路径是实际的数据文件,按分区存储,分区的路径key是可以指定的。

三、基于IDEA使用Hudi

maven项目xml

主语scala版本相对应,否则会报错Exception in thread "main" java.lang.NoSuchMethodError: scala.Product.$init$

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0"xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd"><modelVersion>4.0.0</modelVersion><groupId>cn.saddam.hudi</groupId><artifactId>Hudi-Learning</artifactId><version>1.0.0</version><repositories><repository><id>aliyun</id><url>http://maven.aliyun.com/nexus/content/groups/public/</url></repository><repository><id>cloudera</id><url>https://repository.cloudera.com/artifactory/cloudera-repos/</url></repository><repository><id>jboss</id><url>http://repository.jboss.com/nexus/content/groups/public</url></repository>

</repositories><properties>

<scala.version>2.12.1</scala.version>

<scala.binary.version>2.12</scala.binary.version>

<spark.version>3.1.1</spark.version>

<hadoop.version>3.2.1</hadoop.version>

<hudi.version>0.9.0</hudi.version>

</properties><dependencies>

<!-- 依赖Scala语言 -->

<dependency><groupId>org.scala-lang</groupId><artifactId>scala-library</artifactId><version>2.12.1</version>

</dependency>

<!-- Spark Core 依赖 -->

<dependency><groupId>org.apache.spark</groupId><artifactId>spark-core_2.12</artifactId><version>3.1.1</version>

</dependency>

<!-- Spark SQL 依赖 -->

<dependency><groupId>org.apache.spark</groupId><artifactId>spark-sql_2.12</artifactId><version>3.1.1</version>

</dependency><!-- Hadoop Client 依赖 -->

<dependency><groupId>org.apache.hadoop</groupId><artifactId>hadoop-client</artifactId><version>${hadoop.version}</version>

</dependency><!-- hudi-spark3 -->

<dependency><groupId>org.apache.hudi</groupId><artifactId>hudi-spark3-bundle_2.12</artifactId><version>${hudi.version}</version>

</dependency>

<dependency><groupId>org.apache.spark</groupId><artifactId>spark-avro_2.12</artifactId><version>3.1.1</version>

</dependency></dependencies><build>

<outputDirectory>target/classes</outputDirectory>

<testOutputDirectory>target/test-classes</testOutputDirectory>

<resources><resource><directory>${project.basedir}/src/main/resources</directory></resource>

</resources>

<!-- Maven 编译的插件 -->

<plugins><plugin><groupId>org.apache.maven.plugins</groupId><artifactId>maven-compiler-plugin</artifactId><version>3.0</version><configuration><source>1.8</source><target>1.8</target><encoding>UTF-8</encoding></configuration></plugin><plugin><groupId>net.alchim31.maven</groupId><artifactId>scala-maven-plugin</artifactId><version>3.2.0</version><executions><execution><goals><goal>compile</goal><goal>testCompile</goal></goals></execution></executions></plugin>

</plugins></build>

</project>

1.main方法

object HudiSparkDemo {def main(args: Array[String]): Unit = {System.setProperty("HADOOP_USER_NAME", "root")val spark=SparkSession.builder().appName(this.getClass.getSimpleName.stripSuffix("$")).master("local[2]")// 设置序列化方式:Kryo.config("spark.serializer", "org.apache.spark.serializer.KryoSerializer").getOrCreate()import spark.implicits._//表名称val tableName: String = "tbl_trips_cow"//表存储路径val tablePath: String = "hdfs://192.168.184.135:9000/hudi-warehouse/hudi_trips_cow"// 构建数据生成器,为例模拟产生插入和更新数据import org.apache.hudi.QuickstartUtils._//TODO 任务一:模拟数据,插入Hudi表,采用COW模式//insertData(spark, tableName, tablePath)//TODO 任务二:快照方式查询(Snapshot Query)数据,采用DSL方式//queryData(spark, tablePath)queryDataByTime(spark, tablePath)//Thread.sleep(10000)//TODO 任务三:更新(Update)数据//val dataGen: DataGenerator = new DataGenerator()//insertData(spark, tableName, tablePath, dataGen)//updateData(spark, tableName, tablePath, dataGen)//TODO 任务四:增量查询(Incremental Query)数据,采用SQL方式//incrementalQueryData(spark, tablePath)//TODO 任务五:删除(Delete)数据//deleteData(spark, tableName, tablePath)// 应用结束,关闭资源spark.stop()}

2.模拟数据

在编写代码过程中,指定数据写入到HDFS路径时***直接写“/xxdir”***不要写“hdfs://mycluster/xxdir”,后期会报错“java.lang.IllegalArgumentException: Not in marker dir. Marker Path=hdfs://mycluster/hudi_data/.hoodie.temp/2022xxxxxxxxxx/default/c4b854e7-51d3-4a14-9b7e-54e2e88a9701-0_0-22-22_20220509164730.parquet.marker.CREATE, Expected Marker Root=/hudi_data/.hoodie/.temp/2022xxxxxxxxxx”,可以将对应的hdfs-site.xml、core-site.xml放在resources目录下,直接会找HDFS路径。

/*** 官方案例:模拟产生数据,插入Hudi表,表的类型COW*/def insertData(spark: SparkSession, table: String, path: String): Unit = {import spark.implicits._// TODO: a. 模拟乘车数据import org.apache.hudi.QuickstartUtils._val dataGen: DataGenerator = new DataGenerator()val inserts: util.List[String] = convertToStringList(dataGen.generateInserts(100))import scala.collection.JavaConverters._val insertDF: DataFrame = spark.read.json(spark.sparkContext.parallelize(inserts.asScala, 2).toDS())//insertDF.printSchema()//insertDF.show(10, truncate = false)// TODO: b. 插入数据至Hudi表import org.apache.hudi.DataSourceWriteOptions._import org.apache.hudi.config.HoodieWriteConfig._insertDF.write.mode(SaveMode.Append).format("hudi") // 指定数据源为Hudi.option("hoodie.insert.shuffle.parallelism", "2").option("hoodie.upsert.shuffle.parallelism", "2")// Hudi 表的属性设置.option(PRECOMBINE_FIELD.key(), "ts").option(RECORDKEY_FIELD.key(), "uuid").option(PARTITIONPATH_FIELD.key(), "partitionpath").option(TBL_NAME.key(), table).save(path)}

2.查询数据

def queryData(spark: SparkSession, path: String): Unit = {import spark.implicits._val tripsDF: DataFrame = spark.read.format("hudi").load(path)//tripsDF.printSchema()//tripsDF.show(10, truncate = false)// 查询费用大于20,小于50的乘车数据tripsDF.filter($"fare" >= 20 && $"fare" <= 50).select($"driver", $"rider", $"fare", $"begin_lat", $"begin_lon", $"partitionpath", $"_hoodie_commit_time").orderBy($"fare".desc, $"_hoodie_commit_time".desc).show(20, truncate = false)}

通过时间查询数据

def queryDataByTime(spark: SparkSession, path: String):Unit ={import org.apache.spark.sql.functions._// 方式一:指定字符串,格式 yyyyMMddHHmmssval df1 = spark.read.format("hudi").option("as.of.instant", "20231027172433").load(path).sort(col("_hoodie_commit_time").desc)df1.printSchema()df1.show(5,false)// 方式二:指定字符串,格式yyyy-MM-dd HH:mm:ssval df2 = spark.read.format("hudi").option("as.of.instant", "2023-10-27 17:24:33").load(path).sort(col("_hoodie_commit_time").desc)df2.printSchema()df2.show(5,false)}

3.更新数据

/*** 重新覆盖插入数据,然后更新*/def insertData2(spark: SparkSession, table: String, path: String, dataGen: DataGenerator): Unit = {import spark.implicits._// TODO: a. 模拟乘车数据import org.apache.hudi.QuickstartUtils._val inserts = convertToStringList(dataGen.generateInserts(100))import scala.collection.JavaConverters._val insertDF: DataFrame = spark.read.json(spark.sparkContext.parallelize(inserts.asScala, 2).toDS())//insertDF.printSchema()//insertDF.show(10, truncate = false)// TODO: b. 插入数据至Hudi表import org.apache.hudi.DataSourceWriteOptions._import org.apache.hudi.config.HoodieWriteConfig._insertDF.write.mode(SaveMode.Ignore).format("hudi") // 指定数据源为Hudi.option("hoodie.insert.shuffle.parallelism", "2").option("hoodie.upsert.shuffle.parallelism", "2")// Hudi 表的属性设置.option(PRECOMBINE_FIELD.key(), "ts").option(RECORDKEY_FIELD.key(), "uuid").option(PARTITIONPATH_FIELD.key(), "partitionpath").option(TBL_NAME.key(), table).save(path)}/*** 官方案例:更新Hudi数据,运行程序时,必须要求与插入数据使用同一个DataGenerator对象,更新数据Key是存在的*/def updateData(spark: SparkSession, table: String, path: String, dataGen: DataGenerator): Unit = {import spark.implicits._// TODO: a、模拟产生更新数据import org.apache.hudi.QuickstartUtils._import scala.collection.JavaConverters._val updates = convertToStringList(dataGen.generateUpdates(100))//更新val updateDF = spark.read.json(spark.sparkContext.parallelize(updates.asScala, 2).toDS())// TODO: b、更新数据至Hudi表import org.apache.hudi.DataSourceWriteOptions._import org.apache.hudi.config.HoodieWriteConfig._updateDF.write.mode(SaveMode.Append).format("hudi").option("hoodie.insert.shuffle.parallelism", "2").option("hoodie.upsert.shuffle.parallelism", "2").option(PRECOMBINE_FIELD.key(), "ts").option(RECORDKEY_FIELD.key(), "uuid").option(PARTITIONPATH_FIELD.key(), "partitionpath").option(TBL_NAME.key(), table).save(path)}

4.删除数据

/*** 官方案例:删除Hudi表数据,依据主键UUID进行删除,如果是分区表,指定分区路径*/

def deleteData(spark: SparkSession, table: String, path: String): Unit = {import spark.implicits._// TODO: a. 加载Hudi表数据,获取条目数val tripsDF: DataFrame = spark.read.format("hudi").load(path)println(s"Count = ${tripsDF.count()}")// TODO: b. 模拟要删除的数据val dataframe: DataFrame = tripsDF.select($"uuid", $"partitionpath").limit(2)import org.apache.hudi.QuickstartUtils._val dataGen: DataGenerator = new DataGenerator()val deletes = dataGen.generateDeletes(dataframe.collectAsList())import scala.collection.JavaConverters._val deleteDF = spark.read.json(spark.sparkContext.parallelize(deletes.asScala, 2))// TODO: c. 保存数据至Hudi表,设置操作类型为:DELETEimport org.apache.hudi.DataSourceWriteOptions._import org.apache.hudi.config.HoodieWriteConfig._deleteDF.write.mode(SaveMode.Append).format("hudi").option("hoodie.insert.shuffle.parallelism", "2").option("hoodie.upsert.shuffle.parallelism", "2")// 设置数据操作类型为delete,默认值为upsert.option(OPERATION.key(), "delete").option(PRECOMBINE_FIELD.key(), "ts").option(RECORDKEY_FIELD.key(), "uuid").option(PARTITIONPATH_FIELD.key(), "partitionpath").option(TBL_NAME.key(), table).save(path)// TODO: d. 再次加载Hudi表数据,统计条目数,查看是否减少2条val hudiDF: DataFrame = spark.read.format("hudi").load(path)println(s"Delete After Count = ${hudiDF.count()}")

}

知乎案例

https://www.zhihu.com/question/479484283/answer/2519394483

四、Spark滴滴运营数据分析

hive

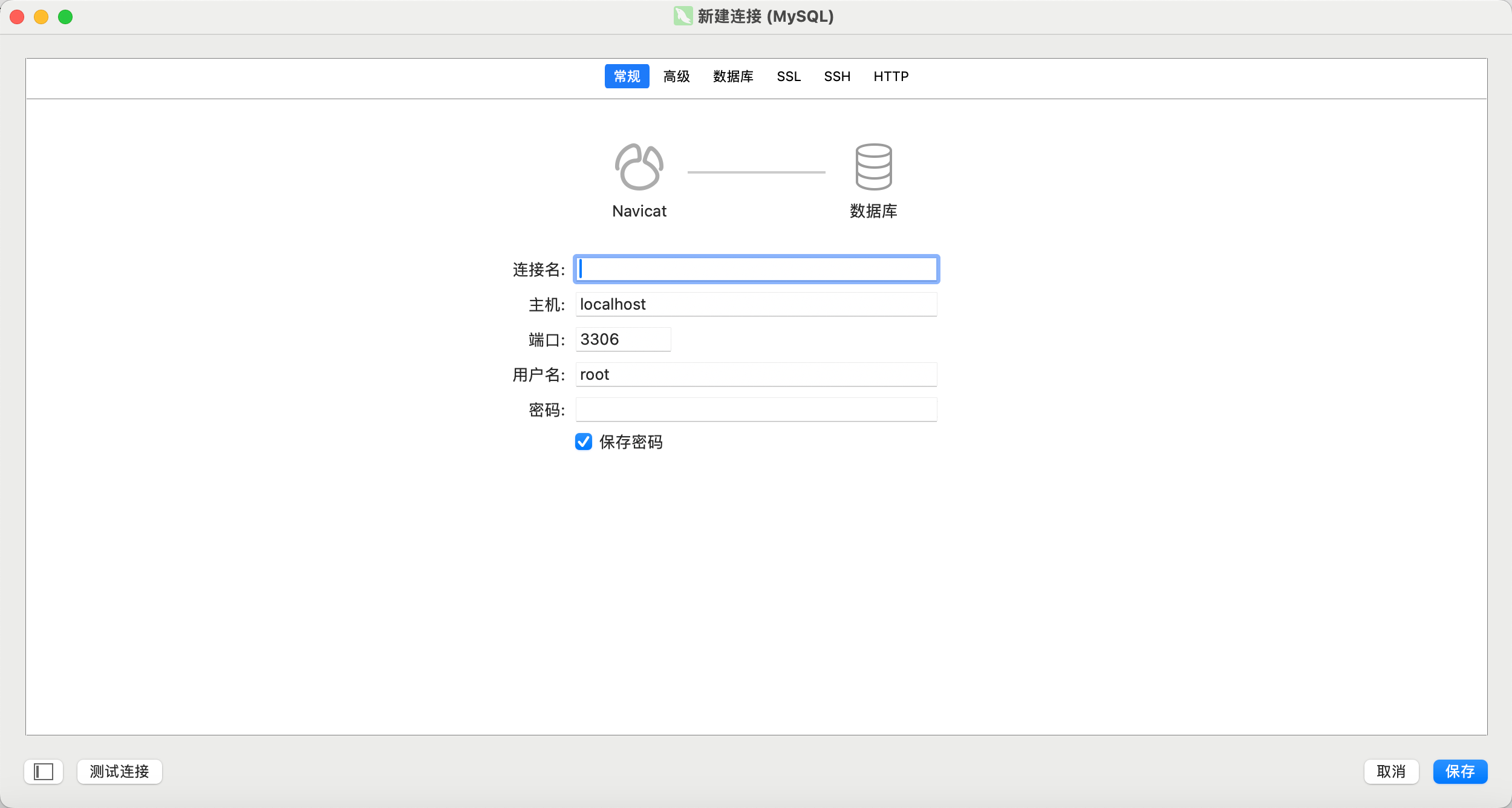

配置文件

<?xml version="1.0" encoding="UTF-8" standalone="no"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?><configuration>

<property><name>hive.metastore.warehouse.dir</name><value>/user/hive/warehouse</value></property><property><name>javax.jdo.option.ConnectionDriverName</name><value>com.mysql.jdbc.Driver</value></property><property><name>javax.jdo.option.ConnectionURL</name><value>jdbc:mysql://master:3306/hive?createDatabaseIfNotExist=true&useSSL=false</value></property><property><name>javax.jdo.option.ConnectionUserName</name><value>root</value></property><property><name>javax.jdo.option.ConnectionPassword</name><value>xxxxxx</value></property>

<property><name>hive.metastore.schema.verification</name><value>false</value>

</property>

<property><name>hive.server2.thrift.bind.host</name><value>master</value>

</property>

<property><name>hive.metastore.uris</name><value>thrift://master:9083</value>

</property>

<property><name>hive.mapred.mode</name><value>strict</value></property><property><name>hive.exec.mode.local.auto</name><value>true</value></property><property><name>hive.fetch.task.conversion</name><value>more</value></property><property><name>hive.server2.thrift.client.user</name><value>root</value></property><property><name>hive.server2.thrift.client.password</name><value>32419</value></property><property><name>hive.metastore.event.db.notification.api.auth</name><value>false</value>

</property>

</configuration>脚本

start-beeline.sh

#!/bin/bash/usr/local/src/hive/bin/beeline -u jdbc:hive2://master:10000 -n root -p xxxxxx

start-hiveserver2.sh

#!/bin/sh HIVE_HOME=/usr/local/src/hiveEXEC_CMD=hiveserver2## 启动服务的时间

DATE_STR=`/bin/date '+%Y%m%d%H%M%S'`

# 日志文件名称(包含存储路径)

# HIVE_LOG=${HIVE_HOME}/logs/${EXEC_CMD}-${DATE_STR}.log

HIVE_LOG=${HIVE_HOME}/logs/${EXEC_CMD}.log# 创建日志目录

/usr/bin/mkdir -p ${HIVE_HOME}/logs

## 启动服务

/usr/bin/nohup ${HIVE_HOME}/bin/hive --service ${EXEC_CMD} > ${HIVE_LOG} 2>&1 &

start-metastore.sh

#!/bin/sh HIVE_HOME=/usr/local/src/hiveEXEC_CMD=metastore## 启动服务的时间

DATE_STR=`/bin/date '+%Y%m%d%H%M%S'`

# 日志文件名称(包含存储路径)

HIVE_LOG=${HIVE_HOME}/logs/${EXEC_CMD}-${DATE_STR}.log# 创建日志目录

/usr/bin/mkdir -p ${HIVE_HOME}/logs

## 启动服务

/usr/bin/nohup ${HIVE_HOME}/bin/hive --service ${EXEC_CMD} > ${HIVE_LOG} 2>&1 &

数据字段介绍

Spark读取数据并加载至Hudi

SparkUtils

package cn.saddam.hudi.spark.didiimport org.apache.spark.sql.SparkSession/*** SparkSQL操作数据(加载读取和保存写入)时工具类,比如获取SparkSession实例对象等*/

object SparkUtils {/*** 构建SparkSession实例对象,默认情况下本地模式运行*/def createSparkSession(clazz: Class[_], master: String = "local[4]", partitions: Int = 4): SparkSession ={SparkSession.builder().appName(clazz.getSimpleName.stripSuffix("$")).master(master).config("spark.serializer", "org.apache.spark.serializer.KryoSerializer").config("spark.sql.shuffle.partitions", partitions).getOrCreate()}def main(args: Array[String]): Unit = {val spark=createSparkSession(this.getClass)print(spark)Thread.sleep(1000000)spark.stop()}

}

readCsvFile

/*** 读取CSV格式文本文件数据,封装到DataFrame数据集*/def readCsvFile(spark: SparkSession, path: String): DataFrame = {spark.read// 设置分隔符为逗号.option("sep", "\\t")// 文件首行为列名称.option("header", "true")// 依据数值自动推断数据类型.option("inferSchema", "true")// 指定文件路径.csv(path)}

process

/*** 对滴滴出行海口数据进行ETL转换操作:指定ts和partitionpath 列*/def process(dataframe: DataFrame): DataFrame = {dataframe// 添加分区列:三级分区 -> yyyy/MM/dd.withColumn("partitionpath", // 列名称concat_ws("-", col("year"), col("month"), col("day")))// 删除列:year, month, day.drop("year", "month", "day")// 添加timestamp列,作为Hudi表记录数据与合并时字段,使用发车时间.withColumn("ts",unix_timestamp(col("departure_time"), "yyyy-MM-dd HH:mm:ss"))}

saveToHudi

/*** 将数据集DataFrame保存值Hudi表中,表的类型:COW*/def saveToHudi(dataframe: DataFrame, table: String, path: String): Unit = {// 导入包import org.apache.hudi.DataSourceWriteOptions._import org.apache.hudi.config.HoodieWriteConfig._// 保存数据dataframe.write.mode(SaveMode.Overwrite).format("hudi") // 指定数据源为Hudi.option("hoodie.insert.shuffle.parallelism", "2").option("hoodie.upsert.shuffle.parallelism", "2")// Hudi 表的属性设置.option(PRECOMBINE_FIELD.key(), "ts").option(RECORDKEY_FIELD.key(), "order_id").option(PARTITIONPATH_FIELD.key(), "partitionpath")// 表的名称和路径.option(TBL_NAME.key(), table).save(path)}

main方法

System.setProperty("HADOOP_USER_NAME", "root")// 滴滴数据路径(file意思为本读文件系统)val datasPath: String = "file:/F:\\A-大数据学习\\Hudi\\Hudi-Learning\\datas\\DiDi\\dwv_order_make_haikou_1.txt"// Hudi中表的属性val hudiTableName: String = "tbl_didi_haikou"val hudiTablePath: String = "/hudi-warehouse/tbl_didi_haikou"def main(args: Array[String]): Unit = {//TODO step1. 构建SparkSession实例对象(集成Hudi和HDFS)val spark: SparkSession = SparkUtils.createSparkSession(this.getClass)import spark.implicits._//TODO step2. 加载本地CSV文件格式滴滴出行数据val didiDF: DataFrame = readCsvFile(spark, datasPath)//didiDF.printSchema()//didiDF.show(10, truncate = false)//TODO step3. 滴滴出行数据ETL处理并保存至Hudi表val etlDF: DataFrame = process(didiDF)//etlDF.printSchema()//etlDF.show(10, truncate = false)//TODO stpe4. 保存转换后数据至Hudi表saveToHudi(etlDF, hudiTableName, hudiTablePath)// stpe5. 应用结束,关闭资源spark.stop()}

Spark加载Hudi数据并需求统计

从Hudi表加载数据

/*** 从Hudi表加载数据,指定数据存在路径*/def readFromHudi(spark: SparkSession, hudiTablePath: String): DataFrame ={// a. 指定路径,加载数据,封装至DataFrameval didiDF = spark.read.format("hudi").load(hudiTablePath)// b. 选择字段didiDF.select("order_id", "product_id","type", "traffic_type", "pre_total_fee","start_dest_distance", "departure_time")}

订单类型统计

/*** 订单类型统计,字段:product_id* 对海口市滴滴出行数据,按照订单类型统计,* 使用字段:product_id,其中值【1滴滴专车, 2滴滴企业专车, 3滴滴快车, 4滴滴企业快车】*/def reportProduct(dataframe: DataFrame) = {// a. 按照产品线ID分组统计val reportDF: DataFrame = dataframe.groupBy("product_id").count()// b. 自定义UDF函数,转换名称val to_name =udf(// 1滴滴专车, 2滴滴企业专车, 3滴滴快车, 4滴滴企业快车(productId: Int) => {productId match {case 1 => "滴滴专车"case 2 => "滴滴企业专车"case 3 => "滴滴快车"case 4 => "滴滴企业快车"}})// c. 转换名称,应用函数val resultDF: DataFrame = reportDF.select(to_name(col("product_id")).as("order_type"),col("count").as("total"))

// resultDF.printSchema()

// resultDF.show(10, truncate = false)resultDF.write.format("jdbc").option("driver","com.mysql.jdbc.Driver").option("url", "jdbc:mysql://192.168.184.135:3306/Hudi_DiDi?createDatabaseIfNotExist=true&characterEncoding=utf8&useSSL=false").option("dbtable", "reportProduct").option("user", "root").option("password", "xxxxxx").save()}

订单时效性统计

/*** 订单时效性统计,字段:type*/def reportType(dataframe: DataFrame): DataFrame = {// a. 按照产品线ID分组统计val reportDF: DataFrame = dataframe.groupBy("type").count()// b. 自定义UDF函数,转换名称val to_name = udf(// 0实时,1预约(realtimeType: Int) => {realtimeType match {case 0 => "实时"case 1 => "预约"}})// c. 转换名称,应用函数val resultDF: DataFrame = reportDF.select(to_name(col("type")).as("order_realtime"),col("count").as("total"))

// resultDF.printSchema()

// resultDF.show(10, truncate = false)resultDF}

交通类型统计

/*** 交通类型统计,字段:traffic_type*/def reportTraffic(dataframe: DataFrame): DataFrame = {// a. 按照产品线ID分组统计val reportDF: DataFrame = dataframe.groupBy("traffic_type").count()// b. 自定义UDF函数,转换名称val to_name = udf(// 1企业时租,2企业接机套餐,3企业送机套餐,4拼车,5接机,6送机,302跨城拼车(trafficType: Int) => {trafficType match {case 0 => "普通散客"case 1 => "企业时租"case 2 => "企业接机套餐"case 3 => "企业送机套餐"case 4 => "拼车"case 5 => "接机"case 6 => "送机"case 302 => "跨城拼车"case _ => "未知"}})// c. 转换名称,应用函数val resultDF: DataFrame = reportDF.select(to_name(col("traffic_type")).as("traffic_type"), //col("count").as("total") //)

// resultDF.printSchema()

// resultDF.show(10, truncate = false)resultDF}

订单价格统计

/*** 订单价格统计,将价格分阶段统计,字段:pre_total_fee*/def reportPrice(dataframe: DataFrame): DataFrame = {val resultDF: DataFrame = dataframe.agg(// 价格:0 ~ 15sum(when(col("pre_total_fee").between(0, 15), 1).otherwise(0)).as("0~15"),// 价格:16 ~ 30sum(when(col("pre_total_fee").between(16, 30), 1).otherwise(0)).as("16~30"),// 价格:31 ~ 50sum(when(col("pre_total_fee").between(31, 50), 1).otherwise(0)).as("31~50"),// 价格:50 ~ 100sum(when(col("pre_total_fee").between(51, 100), 1).otherwise(0)).as("51~100"),// 价格:100+sum(when(col("pre_total_fee").gt(100), 1).otherwise(0)).as("100+"))// resultDF.printSchema()

// resultDF.show(10, truncate = false)resultDF}

订单距离统计

/*** 订单距离统计,将价格分阶段统计,字段:start_dest_distance*/def reportDistance(dataframe: DataFrame): DataFrame = {val resultDF: DataFrame = dataframe.agg(// 价格:0 ~ 15sum(when(col("start_dest_distance").between(0, 10000), 1).otherwise(0)).as("0~10km"),// 价格:16 ~ 30sum(when(col("start_dest_distance").between(10001, 20000), 1).otherwise(0)).as("10~20km"),// 价格:31 ~ 50sum(when(col("start_dest_distance").between(200001, 30000), 1).otherwise(0)).as("20~30km"),// 价格:50 ~ 100sum(when(col("start_dest_distance").between(30001, 5000), 1).otherwise(0)).as("30~50km"),// 价格:100+sum(when(col("start_dest_distance").gt(50000), 1).otherwise(0)).as("50+km"))// resultDF.printSchema()

// resultDF.show(10, truncate = false)resultDF}

订单星期分组统计

/*** 订单星期分组统计,字段:departure_time*/def reportWeek(dataframe: DataFrame): DataFrame = {// a. 自定义UDF函数,转换日期为星期val to_week: UserDefinedFunction = udf(// 0实时,1预约(dateStr: String) => {val format: FastDateFormat = FastDateFormat.getInstance("yyyy-MM-dd")val calendar: Calendar = Calendar.getInstance()val date: Date = format.parse(dateStr)calendar.setTime(date)val dayWeek: String = calendar.get(Calendar.DAY_OF_WEEK) match {case 1 => "星期日"case 2 => "星期一"case 3 => "星期二"case 4 => "星期三"case 5 => "星期四"case 6 => "星期五"case 7 => "星期六"}// 返回星期dayWeek})// b. 转换日期为星期,并分组和统计val resultDF: DataFrame = dataframe.select(to_week(col("departure_time")).as("week")).groupBy(col("week")).count().select(col("week"), col("count").as("total") //)

// resultDF.printSchema()

// resultDF.show(10, truncate = false)resultDF}

main方法

// Hudi中表的属性

val hudiTablePath: String = "/hudi-warehouse/tbl_didi_haikou"def main(args: Array[String]): Unit = {//TODO step1. 构建SparkSession实例对象(集成Hudi和HDFS)val spark: SparkSession = SparkUtils.createSparkSession(this.getClass, partitions = 8)import spark.implicits._//TODO step2. 依据指定字段从Hudi表中加载数据val hudiDF: DataFrame = readFromHudi(spark, hudiTablePath)//hudiDF.printSchema()//hudiDF.show(false)//TODO step3. 按照业务指标进行数据统计分析// 指标1:订单类型统计

// reportProduct(hudiDF)

// SparkUtils.saveToMysql(spark,reportType(hudiDF),"reportProduct")// 指标2:订单时效统计

// reportType(hudiDF).show(false)

// SparkUtils.saveToMysql(spark,reportType(hudiDF),"reportType")// 指标3:交通类型统计

// reportTraffic(hudiDF)SparkUtils.saveToMysql(spark,reportTraffic(hudiDF),"reportTraffic")// 指标4:订单价格统计

// reportPrice(hudiDF)SparkUtils.saveToMysql(spark,reportPrice(hudiDF),"reportPrice")// 指标5:订单距离统计

// reportDistance(hudiDF)SparkUtils.saveToMysql(spark,reportDistance(hudiDF),"reportDistance")// 指标6:日期类型:星期,进行统计

// reportWeek(hudiDF)SparkUtils.saveToMysql(spark,reportWeek(hudiDF),"reportWeek")//TODO step4. 应用结束关闭资源spark.stop()}

五、Hive滴滴运营数据分析

Idea连接hive

启动metastore和hiveserver2和beeline2-master-hiverootxxxxxxjdbc:hive2://192.168.184.135:10000

hive加载数据

# 1. 创建数据库

create database db_hudi# 2. 使用数据库

use db_hudi# 3. 创建外部表

CREATE EXTERNAL TABLE db_hudi.tbl_hudi_didi(

order_id bigint ,

product_id int ,

city_id int ,

district int ,

county int ,

type int ,

combo_type int ,

traffic_type int ,

passenger_count int ,

driver_product_id int ,

start_dest_distance int ,

arrive_time string ,

departure_time string ,

pre_total_fee double ,

normal_time string ,

bubble_trace_id string ,

product_1level int ,

dest_lng double ,

dest_lat double ,

starting_lng double ,

starting_lat double ,

partitionpath string ,

ts bigint

)

PARTITIONED BY (date_str string)

ROW FORMAT SERDE

'org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe'

STORED AS INPUTFORMAT

'org.apache.hudi.hadoop.HoodieParquetInputFormat'

OUTPUTFORMAT

'org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat'

LOCATION

'/hudi-warehouse/tbl_didi_haikou'# 5. 添加分区

alter table db_hudi.tbl_hudi_didi add if not exists partition(date_str='2017-5-22') location '/hudi-warehouse/tbl_didi_haikou/2017-5-22' ;

alter table db_hudi.tbl_hudi_didi add if not exists partition(date_str='2017-5-23') location '/hudi-warehouse/tbl_didi_haikou/2017-5-23' ;

alter table db_hudi.tbl_hudi_didi add if not exists partition(date_str='2017-5-24') location '/hudi-warehouse/tbl_didi_haikou/2017-5-24' ;

alter table db_hudi.tbl_hudi_didi add if not exists partition(date_str='2017-5-25') location '/hudi-warehouse/tbl_didi_haikou/2017-5-25' ;

alter table db_hudi.tbl_hudi_didi add if not exists partition(date_str='2017-5-26') location '/hudi-warehouse/tbl_didi_haikou/2017-5-26' ;

alter table db_hudi.tbl_hudi_didi add if not exists partition(date_str='2017-5-27') location '/hudi-warehouse/tbl_didi_haikou/2017-5-27' ;

alter table db_hudi.tbl_hudi_didi add if not exists partition(date_str='2017-5-28') location '/hudi-warehouse/tbl_didi_haikou/2017-5-28' ;

alter table db_hudi.tbl_hudi_didi add if not exists partition(date_str='2017-5-29') location '/hudi-warehouse/tbl_didi_haikou/2017-5-29' ;

alter table db_hudi.tbl_hudi_didi add if not exists partition(date_str='2017-5-30') location '/hudi-warehouse/tbl_didi_haikou/2017-5-30' ;

alter table db_hudi.tbl_hudi_didi add if not exists partition(date_str='2017-5-31') location '/hudi-warehouse/tbl_didi_haikou/2017-5-31' ;

alter table db_hudi.tbl_hudi_didi add if not exists partition(date_str='2017-6-1') location '/hudi-warehouse/tbl_didi_haikou/2017-6-1' ;

alter table db_hudi.tbl_hudi_didi add if not exists partition(date_str='2017-6-2') location '/hudi-warehouse/tbl_didi_haikou/2017-6-2' ;

alter table db_hudi.tbl_hudi_didi add if not exists partition(date_str='2017-6-3') location '/hudi-warehouse/tbl_didi_haikou/2017-6-3' ;

alter table db_hudi.tbl_hudi_didi add if not exists partition(date_str='2017-6-4') location '/hudi-warehouse/tbl_didi_haikou/2017-6-4' ;

alter table db_hudi.tbl_hudi_didi add if not exists partition(date_str='2017-6-5') location '/hudi-warehouse/tbl_didi_haikou/2017-6-5' ;

alter table db_hudi.tbl_hudi_didi add if not exists partition(date_str='2017-6-6') location '/hudi-warehouse/tbl_didi_haikou/2017-6-6' ;

alter table db_hudi.tbl_hudi_didi add if not exists partition(date_str='2017-6-7') location '/hudi-warehouse/tbl_didi_haikou/2017-6-7' ;

alter table db_hudi.tbl_hudi_didi add if not exists partition(date_str='2017-6-8') location '/hudi-warehouse/tbl_didi_haikou/2017-6-8' ;

alter table db_hudi.tbl_hudi_didi add if not exists partition(date_str='2017-6-9') location '/hudi-warehouse/tbl_didi_haikou/2017-6-9' ;

alter table db_hudi.tbl_hudi_didi add if not exists partition(date_str='2017-6-10') location '/hudi-warehouse/tbl_didi_haikou/2017-6-10' ;# 设置非严格模式

set hive.mapred.mode = nonstrict ;# SQL查询前10条数据

select order_id, product_id, type, traffic_type, pre_total_fee, start_dest_distance, departure_time from db_hudi.tbl_hudi_didi limit 10 ;

HiveQL 分析

SparkSQL连接Hudi 把hudi-spark3-bundle_2.12-0.9.0.jar拷贝到spark/jars

spark-sql \

--conf 'spark.serializer=org.apache.spark.serializer.KryoSerializer' \

--conf 'spark.sql.extensions=org.apache.spark.sql.hudi.HoodieSparkSessionExtension' \

--conf 'spark.sql.catalog.spark_catalog=org.apache.spark.sql.hudi.catalog.HoodieCatalog'

指标一:订单类型统计

WITH tmp AS (SELECT product_id, COUNT(1) AS total FROM db_hudi.tbl_hudi_didi GROUP BY product_id

)

SELECT CASE product_idWHEN 1 THEN "滴滴专车"WHEN 2 THEN "滴滴企业专车"WHEN 3 THEN "滴滴快车"WHEN 4 THEN "滴滴企业快车"END AS order_type,total

FROM tmp ;滴滴专车 15615

滴滴快车 1298383

Time taken: 2.721 seconds, Fetched 2 row(s)

指标二:订单时效性统计

WITH tmp AS (SELECT type AS order_realtime, COUNT(1) AS total FROM db_hudi.tbl_hudi_didi GROUP BY type

)

SELECT CASE order_realtimeWHEN 0 THEN "实时"WHEN 1 THEN "预约"END AS order_realtime,total

FROM tmp ;预约 28488

实时 1285510

Time taken: 1.001 seconds, Fetched 2 row(s)

指标三:订单交通类型统计

WITH tmp AS (SELECT traffic_type, COUNT(1) AS total FROM db_hudi.tbl_hudi_didi GROUP BY traffic_type

)

SELECT CASE traffic_typeWHEN 0 THEN "普通散客" WHEN 1 THEN "企业时租"WHEN 2 THEN "企业接机套餐"WHEN 3 THEN "企业送机套餐"WHEN 4 THEN "拼车"WHEN 5 THEN "接机"WHEN 6 THEN "送机"WHEN 302 THEN "跨城拼车"ELSE "未知"END AS traffic_type,total

FROM tmp ;送机 37469

接机 19694

普通散客 1256835

Time taken: 1.115 seconds, Fetched 3 row(s)

指标四:订单价格统计

SELECT SUM(CASE WHEN pre_total_fee BETWEEN 1 AND 15 THEN 1 ELSE 0 END) AS 0_15,SUM(CASE WHEN pre_total_fee BETWEEN 16 AND 30 THEN 1 ELSE 0 END) AS 16_30,SUM(CASE WHEN pre_total_fee BETWEEN 31 AND 50 THEN 1 ELSE 0 END) AS 31_150,SUM(CASE WHEN pre_total_fee BETWEEN 51 AND 100 THEN 1 ELSE 0 END) AS 51_100,SUM(CASE WHEN pre_total_fee > 100 THEN 1 ELSE 0 END) AS 100_

FROM db_hudi.tbl_hudi_didi;

六、Spark结构化流写入Hudi

启动zookeeper

--单机版本(此用)--

[root@node1 conf]# mv zoo_sample.cfg zoo.cfg

[root@node1 conf]# vim zoo.cfg修改内容:dataDir=/export/server/zookeeper/datas

[root@node1 conf]# mkdir -p /export/server/zookeeper/datas#启动zookeeper

[root@master ~]# zkServer.sh start

JMX enabled by default

Using config: /usr/local/src/zookeeper/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED#查看zookeeper状态信息

[root@master kafka]# zkServer.sh status

JMX enabled by default

Using config: /usr/local/src/zookeeper/bin/../conf/zoo.cfg

Mode: standalone--分布式版本--

[root@node1 conf]# vim zoo.cfg修改内容:dataDir=/export/server/zookeeper/datasserver.0=master:2888:3888server.1=slave1:2888:3888server.2=slave2:2888:3888

启动kafka

zookeeper.connect=192.168.184.135:2181/kafka

创建topic要加上/kafka --zookeeper master:2181/kafka

#server.properties修改

listeners=PLAINTEXT://192.168.184.135:9092

log.dirs=/usr/local/src/kafka/kafka-logs

zookeeper.connect=192.168.184.135:2181/kafka#启动kafka

kafka-server-start.sh /usr/local/src/kafka/config/server.properties#查看所有topic

kafka-topics.sh --list --zookeeper master:2181/kafka#创建topic

kafka-topics.sh --create --zookeeper master:2181/kafka --replication-factor 1 --partitions 1 --topic order_topic#删除topic

kafka-topics.sh --delete --zookeeper master:2181/kafka --topic order_topic

kafka tool工具

chroot path /kafka对应zookeeper连接地址后2181/kafka

订单数据模拟生成器

package cn.saddam.hudi.spark_streamingimport java.util.Propertiesimport org.apache.commons.lang3.time.FastDateFormat

import org.apache.kafka.clients.producer.{KafkaProducer, ProducerRecord}

import org.apache.kafka.common.serialization.StringSerializer

import org.json4s.jackson.Jsonimport scala.util.Random/*** 订单实体类(Case Class)** @param orderId 订单ID* @param userId 用户ID* @param orderTime 订单日期时间* @param ip 下单IP地址* @param orderMoney 订单金额* @param orderStatus 订单状态*/

case class OrderRecord(orderId: String,userId: String,orderTime: String,ip: String,orderMoney: Double,orderStatus: Int)/*** 模拟生产订单数据,发送到Kafka Topic中* Topic中每条数据Message类型为String,以JSON格式数据发送* 数据转换:* 将Order类实例对象转换为JSON格式字符串数据(可以使用json4s类库)*/

object MockOrderProducer {def main(args: Array[String]): Unit = {var producer: KafkaProducer[String, String] = nulltry {// 1. Kafka Client Producer 配置信息val props = new Properties()props.put("bootstrap.servers", "192.168.184.135:9092")props.put("acks", "1")props.put("retries", "3")props.put("key.serializer", classOf[StringSerializer].getName)props.put("value.serializer", classOf[StringSerializer].getName)// 2. 创建KafkaProducer对象,传入配置信息producer = new KafkaProducer[String, String](props)// 随机数实例对象val random: Random = new Random()// 订单状态:订单打开 0,订单取消 1,订单关闭 2,订单完成 3val allStatus = Array(0, 1, 2, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0)while (true) {// 每次循环 模拟产生的订单数目

// val batchNumber: Int = random.nextInt(1) + 1val batchNumber: Int = random.nextInt(1) + 20(1 to batchNumber).foreach { number =>val currentTime: Long = System.currentTimeMillis()val orderId: String = s"${getDate(currentTime)}%06d".format(number)val userId: String = s"${1 + random.nextInt(5)}%08d".format(random.nextInt(1000))val orderTime: String = getDate(currentTime, format = "yyyy-MM-dd HH:mm:ss.SSS")val orderMoney: String = s"${5 + random.nextInt(500)}.%02d".format(random.nextInt(100))val orderStatus: Int = allStatus(random.nextInt(allStatus.length))// 3. 订单记录数据val orderRecord: OrderRecord = OrderRecord(orderId, userId, orderTime, getRandomIp, orderMoney.toDouble, orderStatus)// 转换为JSON格式数据val orderJson = new Json(org.json4s.DefaultFormats).write(orderRecord)println(orderJson)// 4. 构建ProducerRecord对象val record = new ProducerRecord[String, String]("order-topic", orderId, orderJson)// 5. 发送数据:def send(messages: KeyedMessage[K,V]*), 将数据发送到Topicproducer.send(record)}

// Thread.sleep(random.nextInt(500) + 5000)Thread.sleep(random.nextInt(500))}} catch {case e: Exception => e.printStackTrace()} finally {if (null != producer) producer.close()}}/** =================获取当前时间================= */def getDate(time: Long, format: String = "yyyyMMddHHmmssSSS"): String = {val fastFormat: FastDateFormat = FastDateFormat.getInstance(format)val formatDate: String = fastFormat.format(time) // 格式化日期formatDate}/** ================= 获取随机IP地址 ================= */def getRandomIp: String = {// ip范围val range: Array[(Int, Int)] = Array((607649792, 608174079), //36.56.0.0-36.63.255.255(1038614528, 1039007743), //61.232.0.0-61.237.255.255(1783627776, 1784676351), //106.80.0.0-106.95.255.255(2035023872, 2035154943), //121.76.0.0-121.77.255.255(2078801920, 2079064063), //123.232.0.0-123.235.255.255(-1950089216, -1948778497), //139.196.0.0-139.215.255.255(-1425539072, -1425014785), //171.8.0.0-171.15.255.255(-1236271104, -1235419137), //182.80.0.0-182.92.255.255(-770113536, -768606209), //210.25.0.0-210.47.255.255(-569376768, -564133889) //222.16.0.0-222.95.255.255)// 随机数:IP地址范围下标val random = new Random()val index = random.nextInt(10)val ipNumber: Int = range(index)._1 + random.nextInt(range(index)._2 - range(index)._1)// 转换Int类型IP地址为IPv4格式number2IpString(ipNumber)}/** =================将Int类型IPv4地址转换为字符串类型================= */def number2IpString(ip: Int): String = {val buffer: Array[Int] = new Array[Int](4)buffer(0) = (ip >> 24) & 0xffbuffer(1) = (ip >> 16) & 0xffbuffer(2) = (ip >> 8) & 0xffbuffer(3) = ip & 0xff// 返回IPv4地址buffer.mkString(".")}

}

结构化流实时从Kafka消费数据

package cn.saddam.hudi.spark_streamingimport cn.saddam.hudi.spark.didi.SparkUtils

import org.apache.spark.sql._

import org.apache.spark.sql.functions._

import org.apache.spark.sql.streaming.OutputMode/*

基于StructuredStreaming结构化流实时从Kafka消费数据,经过ETL转换后,存储至Hudi表

*/

object HudiStructuredDemo {def main(args: Array[String]): Unit = {System.setProperty("HADOOP_USER_NAME", "root")//TODO step1、构建SparkSession实例对象val spark=SparkUtils.createSparkSession(this.getClass)//TODO step2、从Kafka实时消费数据val kafkaStreamDF: DataFrame =readFromKafka(spark,"order-topic")//TODO step3、提取数据,转换数据类型val streamDF: DataFrame = process(kafkaStreamDF)//TODO step4、保存数据至Hudi表中:COW(写入时拷贝)和MOR(读取时保存)saveToHudi(streamDF)//TODO step5、流式应用启动以后,等待终止spark.streams.active.foreach(query => println(s"Query: ${query.name} is Running ............."))spark.streams.awaitAnyTermination()}/*** 指定Kafka Topic名称,实时消费数据*/def readFromKafka(spark: SparkSession, topicName: String) = {spark.readStream.format("kafka").option("kafka.bootstrap.servers", "192.168.184.135:9092").option("subscribe", topicName).option("startingOffsets", "latest").option("maxOffsetsPerTrigger", 100000).option("failOnDataLoss", "false").load()}/*** 对Kafka获取数据,进行转换操作,获取所有字段的值,转换为String,以便保存Hudi表*/def process(streamDF: DataFrame) = {/* 从Kafka消费数据后,字段信息如key -> binary,value -> binarytopic -> string, partition -> int, offset -> longtimestamp -> long, timestampType -> int*/streamDF// 选择字段,转换类型为String.selectExpr("CAST(key AS STRING) order_id", //"CAST(value AS STRING) message", //"topic", "partition", "offset", "timestamp"//)// 解析Message,提取字段内置.withColumn("user_id", get_json_object(col("message"), "$.userId")).withColumn("order_time", get_json_object(col("message"), "$.orderTime")).withColumn("ip", get_json_object(col("message"), "$.ip")).withColumn("order_money", get_json_object(col("message"), "$.orderMoney")).withColumn("order_status", get_json_object(col("message"), "$.orderStatus"))// 删除Message列.drop(col("message"))// 转换订单日期时间格式为Long类型,作为Hudi表中合并数据字段.withColumn("ts", to_timestamp(col("order_time"), "yyyy-MM-dd HH:mm:ss.SSSS"))// 订单日期时间提取分区日期:yyyyMMdd.withColumn("day", substring(col("order_time"), 0, 10))}/*** 将流式数据集DataFrame保存至Hudi表,分别表类型:COW和MOR*/def saveToHudi(streamDF: DataFrame): Unit = {streamDF.writeStream.outputMode(OutputMode.Append()).queryName("query-hudi-streaming")// 针对每微批次数据保存.foreachBatch((batchDF: Dataset[Row], batchId: Long) => {println(s"============== BatchId: ${batchId} start ==============")writeHudiMor(batchDF) // TODO:表的类型MOR}).option("checkpointLocation", "/datas/hudi-spark/struct-ckpt-100").start()}/*** 将数据集DataFrame保存到Hudi表中,表的类型:MOR(读取时合并)*/def writeHudiMor(dataframe: DataFrame): Unit = {import org.apache.hudi.DataSourceWriteOptions._import org.apache.hudi.config.HoodieWriteConfig._import org.apache.hudi.keygen.constant.KeyGeneratorOptions._dataframe.write.format("hudi").mode(SaveMode.Append)// 表的名称.option(TBL_NAME.key, "tbl_kafka_mor")// 设置表的类型.option(TABLE_TYPE.key(), "MERGE_ON_READ")// 每条数据主键字段名称.option(RECORDKEY_FIELD_NAME.key(), "order_id")// 数据合并时,依据时间字段.option(PRECOMBINE_FIELD_NAME.key(), "ts")// 分区字段名称.option(PARTITIONPATH_FIELD_NAME.key(), "day")// 分区值对应目录格式,是否与Hive分区策略一致.option(HIVE_STYLE_PARTITIONING_ENABLE.key(), "true")// 插入数据,产生shuffle时,分区数目.option("hoodie.insert.shuffle.parallelism", "2").option("hoodie.upsert.shuffle.parallelism", "2")// 表数据存储路径.save("/hudi-warehouse/tbl_order_mor")}

}

订单数据查询分析(spark-shell)

//启动spark-shell

spark-shell \

--master local[2] \

--jars /usr/local/src/hudi/hudi-spark-jars/hudi-spark3-bundle_2.12-0.9.0.jar,/usr/local/src/hudi/hudi-spark-jars/spark-avro_2.12-3.0.1.jar,/usr/local/src/hudi/hudi-spark-jars/spark_unused-1.0.0.jar \

--conf "spark.serializer=org.apache.spark.serializer.KryoSerializer"//指定Hudi表数据存储目录,加载数据

val ordersDF = spark.read.format("hudi").load("/hudi-warehouse/tbl_order_mor/day=2023-11-02")//查看Schema信息

ordersDF.printSchema()//查看订单表前10条数据,选择订单相关字段

ordersDF.select("order_id", "user_id", "order_time", "ip", "order_money", "order_status", "day").show(false)//查看数据总条目数

ordersDF.count()//注册临时视图

ordersDF.createOrReplaceTempView("view_tmp_orders")//交易订单数据基本聚合统计:最大金额max、最小金额min、平均金额avg

spark.sql("""with tmp AS (SELECT CAST(order_money AS DOUBLE) FROM view_tmp_orders WHERE order_status = '0')select max(order_money) as max_money, min(order_money) as min_money, round(avg(order_money), 2) as avg_money from tmp

""").show()

+---------+---------+---------+

|max_money|min_money|avg_money|

+---------+---------+---------+

| 504.97| 5.05| 255.95|

+---------+---------+---------+DeltaStreamer 工具类

七、Hudi集成SparkSQL

启动spark-sql

spark-sql \

--master local[2] \

--jars /usr/local/src/hudi/hudi-spark-jars/hudi-spark3-bundle_2.12-0.9.0.jar,/usr/local/src/hudi/hudi-spark-jars/spark-avro_2.12-3.0.1.jar,/usr/local/src/hudi/hudi-spark-jars/spark_unused-1.0.0.jar \

--conf 'spark.serializer=org.apache.spark.serializer.KryoSerializer' \

--conf 'spark.sql.extensions=org.apache.spark.sql.hudi.HoodieSparkSessionExtension'#Hudi默认upsert/insert/delete的并发度是1500,对于演示小规模数据集设置更小的并发度。

set hoodie.upsert.shuffle.parallelism = 1;

set hoodie.insert.shuffle.parallelism = 1;

set hoodie.delete.shuffle.parallelism = 1;#设置不同步Hudi表元数据

set hoodie.datasource.meta.sync.enable=false;

创建表

--编写DDL语句,创建Hudi表,表的类型:MOR和分区表,主键为id,分区字段为dt,合并字段默认为ts。

create table test_hudi_table (id int,name string,price double,ts long,dt string

) using hudipartitioned by (dt)options (primaryKey = 'id',type = 'mor')

location 'hdfs://192.168.184.135:9000/hudi-warehouse/test2_hudi_table' ;--创建Hudi表后查看创建的Hudi表

show create table test_hudi_table; CREATE TABLE `default`.`test_hudi_table` (`_hoodie_commit_time` STRING,`_hoodie_commit_seqno` STRING,`_hoodie_record_key` STRING,`_hoodie_partition_path` STRING,`_hoodie_file_name` STRING,`id` INT,`name` STRING,`price` DOUBLE,`ts` BIGINT,`dt` STRING)

USING hudi

OPTIONS (`type` 'mor',`primaryKey` 'id')

PARTITIONED BY (dt)

LOCATION 'hdfs://192.168.184.135:9000/hudi-warehouse/test_hudi_table'Time taken: 0.217 seconds, Fetched 1 row(s)

插入数据

java.lang.NoSuchMethodError: org.apache.spark.sql.catalyst.expressions.Alias.<init>(Lorg/apache/spark/sql/catalyst/expressions/Expression;Ljava/lang/String;Lorg/apache/spark/sql/catalyst/expressions/ExprId;Lscala/collection/Seq;Lscala/Option;)V

insert into test_hudi_table select 1 as id, 'hudi' as name, 10 as price, 1000 as ts, '2021-11-01' as dt;insert into test_hudi_table select 2 as id, 'spark' as name, 20 as price, 1100 as ts, '2021-11-01' as dt;insert into test_hudi_table select 3 as id, 'flink' as name, 30 as price, 1200 as ts, '2021-11-01' as dt;insert into test_hudi_table select 4 as id, 'sql' as name, 40 as price, 1400 as ts, '2021-11-01' as dt;

查询数据

--使用SQL查询Hudi表数据,全表扫描查询

select * from test_hudi_table ;--查看表中字段结构,使用DESC语句

desc test_hudi_table ;--指定查询字段,查询表中前几天数据

SELECT _hoodie_record_key,_hoodie_partition_path, id, name, price, ts, dt FROM test_hudi_table ;

更新数据

--使用DELETE语句,将id=1的记录删除,命令如下

delete from test_hudi_table where id = 1 ;--再次查询Hudi表数据,查看数据是否更新

SELECT COUNT(1) AS total from test_hudi_table WHERE id = 1;

DDL创建表

在spark-sql中编写DDL语句,创建Hudi表数据,核心三个属性参数

核心参数

Hudi表类型

创建COW类型Hudi表

创建MOR类型Hudi表

options (primaryKey = 'id',type = 'mor')

管理表与外部表

创建表时,指定location存储路径,表就是外部表

创建表时设置为分区表

支持使用CTAS

在实际应用使用时,合理选择创建表的方式,建议创建外部及分区表,便于数据管理和安全。

DDL-DML-DQL-DCL区别

一、DQL

DQL(data Query Language) 数据查询语言

就是我们最经常用到的 SELECT(查)语句 。主要用来对数据库中的数据进行查询操作。

二、DML

DML(data manipulation language)数据操纵语言:

就是我们最经常用到的 INSERT(增)、DELETE(删)、UPDATE(改)。主要用来对数据库重表的数据进行一些增删改操作。三、DDL

DDL(data definition language)数据库定义语言:

就是我们在创建表的时候用到的一些sql,比如说:CREATE、ALTER、DROP等。主要是用在定义或改变表的结构,数据类型,表之间的链接和约束等初始化工作上。四、DCL

DCL(Data Control Language)数据库控制语言:

是用来设置或更改数据库用户或角色权限的语句,包括(grant(授予权限),deny(拒绝权限),revoke(收回权限)等)语句。这个比较少用到。

MergeInto 语句

Merge Into Insert

--当不满足条件时(关联条件不匹配),插入数据到Hudi表中

merge into test_hudi_table as t0

using (select 1 as id, 'hadoop' as name, 1 as price, 9000 as ts, '2021-11-02' as dt

) as s0

on t0.id = s0.id

when not matched then insert * ;

Merge Into Update

--当满足条件时(关联条件匹配),对数据进行更新操作

merge into test_hudi_table as t0

using (select 1 as id, 'hadoop3' as name, 1000 as price, 9999 as ts, '2021-11-02' as dt

) as s0

on t0.id = s0.id

when matched then update set *

Merge Into Delete

--当满足条件时(关联条件匹配),对数据进行删除操作

merge into test_hudi_table t0

using (select 1 as s_id, 'hadoop3' as s_name, 8888 as s_price, 9999 as s_ts, '2021-11-02' as dt

) s0

on t0.id = s0.s_id

when matched and s_ts = 9999 then delete

八、Hudi集成Flink

[flink学习之sql-client之踩坑记录_flink sql-client_cclovezbf的博客-CSDN博客](https://blog.csdn.net/cclovezbf/article/details/127887149)

安装Flink 1.12

使用Flink 1.12版本,部署Flink Standalone集群模式,启动服务,步骤如下

step1、下载安装包https://archive.apache.org/dist/flink/flink-1.12.2/step2、上传软件包step3、解压step5、添加hadoop依赖jar包

往Flink中的lib目录里添加两个jar包:

flink-shaded-hadoop-3-uber-3.1.1.7.2.1.0-327-9.0.jar

commons-cli-1.4.jar集群--添加完后,将lib目录分发给其他虚拟机。虚拟机上也需要添加上面两个jar包下载仓库分别是:

https://mvnrepository.com/artifact/org.apache.flink/flink-shaded-hadoop-3-uber/3.1.1.7.2.1.0-327-9.0

https://mvnrepository.com/artifact/commons-cli/commons-cli/1.4cd flink/libflink-shaded-hadoop-2-uber-2.7.5-10.0.jar

启动Flink

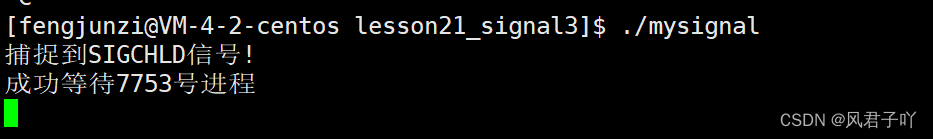

start-cluster.sh[root@master lib]# jps

53121 StandaloneSessionClusterEntrypoint

3218 DataNode

2979 NameNode

53622 Jps

53401 TaskManagerRunner

28107 QuorumPeerMain

5918 RunJarstop-cluster.sh

词频统计WordCount

flink run /usr/local/src/flink/examples/batch/WordCount.jar

java.lang.NoSuchMethodError: org.apache.commons.cli.Option.builder(Ljava/lang/String;)Lorg/apache/commons/cli/Option$Builder; 解决:flink/lib 下添加commons-cli-1.4.jar

Flink快速入门

环境准备

Jar包和配置文件

hudi-flink-bundle_2.12-0.9.0.jar

[root@master target]# cp hudi-flink-bundle_2.12-0.9.0.jar /usr/local/src/flink/lib

[root@master target]# pwd

/usr/local/src/hudi/packaging/hudi-flink-bundle/target

flink-conf.yaml

接下来使用Flink SQL Client提供SQL命令行与Hudi集成,需要启动Flink Standalone集群,其中需要修改配置文件【$FLINK_HOME/conf/flink-conf.yaml】,TaskManager分配Slots数目为4。taskmanager.numberOfTaskSlots: 4修改后重启flink

第一步、启动HDFS集群

[root@master ~]# hadoop-daemon.sh start namenode

[root@master ~]# hadoop-daemon.sh start datanode

第二步、启动Flink 集群

由于Flink需要连接HDFS文件系统,所以先设置HADOOP_CLASSPATH变量,再启动Standalone集群服务。

export HADOOP_CLASSPATH=`$HADOOP_HOME/bin/hadoop classpath`#启动flink

start-cluster.sh

第三步、启动Flink SQL Cli

embedded:嵌入式方式

#启动flink-sql客户端

sql-client.sh embedded shell#在SQL Cli设置分析结果展示模式为tableau:

set execution.result-mode=tableau;Flink SQL> set execution.result-mode=tableau;

[INFO] Session property has been set.-------------------------------------exit报错---------------------------------------------

Flink SQL> exit;

[INFO] Exiting Flink SQL CLI Client...Shutting down the session...

done.

Exception in thread "Thread-6" java.lang.IllegalStateException: Trying to access closed classloader. Please check if you store classloaders directly or indirectly in static fields.解决办法: 在 flink 配置文件里 flink-conf.yaml设置

classloader.check-leaked-classloader: false

SQL Cli-tableau模式

set execution.result-mode=tableau;

创建表并插入数据

创建表

创建表:t1,数据存储到Hudi表中,底层HDFS存储,表的类型:MOR

CREATE TABLE t1(uuid VARCHAR(20), name VARCHAR(10),age INT,ts TIMESTAMP(3),`partition` VARCHAR(20)

)

PARTITIONED BY (`partition`)

WITH ('connector' = 'hudi','path' = 'hdfs://192.168.184.135:9000/hudi-warehouse/hudi-t1','write.tasks' = '1','compaction.tasks' = '1', 'table.type' = 'MERGE_ON_READ','hive-conf-dir' = '/usr/hdp/3.1.5.0-152/hive/conf'

);show tables;--查看表及结构

desc t1;Flink SQL> desc t1;

+-----------+--------------+------+-----+--------+-----------+

| name | type | null | key | extras | watermark |

+-----------+--------------+------+-----+--------+-----------+

| uuid | VARCHAR(20) | true | | | |

| name | VARCHAR(10) | true | | | |

| age | INT | true | | | |

| ts | TIMESTAMP(3) | true | | | |

| partition | VARCHAR(20) | true | | | |

+-----------+--------------+------+-----+--------+-----------+

5 rows in set插入数据

t1中插入数据,其中t1表为分区表,字段名称:**partition**,插入数据时字段值有:【**part1、part2、part3和part4**】

INSERT INTO t1 VALUES('id1','Danny',23,TIMESTAMP '1970-01-01 00:00:01','par1'),

('id2','Stephen',33,TIMESTAMP '1970-01-01 00:00:02','par1'),

('id3','Julian',53,TIMESTAMP '1970-01-01 00:00:03','par2'),

('id4','Fabian',31,TIMESTAMP '1970-01-01 00:00:04','par2'),

('id5','Sophia',18,TIMESTAMP '1970-01-01 00:00:05','par3'),

('id6','Emma',20,TIMESTAMP '1970-01-01 00:00:06','par3'),

('id7','Bob',44,TIMESTAMP '1970-01-01 00:00:07','par4'),

('id8','Han',56,TIMESTAMP '1970-01-01 00:00:08','par4');

批量插入报错:org.apache.flink.runtime.JobException: Recovery is suppressed by NoRestartBackoffTimeStrategy 'dfs.client.block.write.replace-datanode-on-failure.policy' in its configuration.

hdfs-site.xml插入<property><name>dfs.client.block.write.replace-datanode-on-failure.enable</name>

<value>true</value>

</property><property>

<name>dfs.client.block.write.replace-datanode-on-failure.policy</name>

<value>NEVER</value>

</property>

查询数据

select * from t1;select * from t1 where `partition` = 'par1' ;

更新数据

更新数据用insert--将id1的年龄更新为30岁

Flink SQL> select uuid,name,age from t1 where uuid='id1';

+-----+----------------------+----------------------+-------------+

| +/- | uuid | name | age |

+-----+----------------------+----------------------+-------------+

| + | id1 | Danny | 27 |

+-----+----------------------+----------------------+-------------+Flink SQL> insert into t1 values ('id1','Danny',30,TIMESTAMP '1970-01-01 00:00:01','par1');Flink SQL> select uuid,name,age from t1 where uuid='id1';

+-----+----------------------+----------------------+-------------+

| +/- | uuid | name | age |

+-----+----------------------+----------------------+-------------+

| + | id1 | Danny | 30 |

+-----+----------------------+----------------------+-------------+

Received a total of 1 rows

流式查询SteamingQuery

Flink插入Hudi表数据时,支持以流的方式加载数据,增量查询分析

创建表

流式表

CREATE TABLE t2(uuid VARCHAR(20), name VARCHAR(10),age INT,ts TIMESTAMP(3),`partition` VARCHAR(20)

)

PARTITIONED BY (`partition`)

WITH ('connector' = 'hudi','path' = 'hdfs://192.168.184.135:9000/hudi-warehouse/hudi-t1','table.type' = 'MERGE_ON_READ','read.tasks' = '1', 'read.streaming.enabled' = 'true','read.streaming.start-commit' = '20210316134557','read.streaming.check-interval' = '4' );--核心参数选项说明:

read.streaming.enabled 设置为 true,表明通过 streaming 的方式读取表数据;

read.streaming.check-interval 指定了 source 监控新的 commits 的间隔为 4s;

table.type 设置表类型为 MERGE_ON_READ;

插入数据

重新打开一个终端,然后创建一个表非流式表,path与之前的地址一样,然后新的终端中插入新的数据id9,之前创建的t2表会流式插入新的数据

CREATE TABLE t1(uuid VARCHAR(20), name VARCHAR(10),age INT,ts TIMESTAMP(3),`partition` VARCHAR(20)

)

PARTITIONED BY (`partition`)

WITH ('connector' = 'hudi','path' = 'hdfs://192.168.184.135:9000/hudi-warehouse/hudi-t1','write.tasks' = '1','compaction.tasks' = '1', 'table.type' = 'MERGE_ON_READ'

);insert into t1 values ('id9','test',27,TIMESTAMP '1970-01-01 00:00:01','par5');insert into t1 values ('id10','saddam',23,TIMESTAMP '2023-11-05 23:07:01','par5');

Flink SQL Writer

Flink SQL集成Kafka

第一步、创建Topic

#启动zookeeper

[root@master ~]# zkServer.sh start#启动kafka

kafka-server-start.sh /usr/local/src/kafka/config/server.properties#创建topic:flink-topic

kafka-topics.sh --create --zookeeper master:2181/kafka --replication-factor 1 --partitions 1 --topic flink-topic#工具创建

.....

第二步、启动HDFS集群

start-dfs.sh

第三步、启动Flink 集群

export HADOOP_CLASSPATH=`$HADOOP_HOME/bin/hadoop classpath`start-cluster.sh

第四步、启动Flink SQL Cli

采用指定参数【-j xx.jar】方式加载hudi-flink集成包

sql-client.sh embedded -j /usr/local/src/flink/flink-Jars/flink-sql-connector-kafka_2.12-1.12.2.jar shellset execution.result-mode=tableau;

第五步、创建表,映射到Kafka Topic

其中Kafka Topic中数据是CSV文件格式,有三个字段:user_id、item_id、behavior,从Kafka消费数据时,设置从最新偏移量开始

CREATE TABLE tbl_kafka (`user_id` BIGINT,`item_id` BIGINT,`behavior` STRING

) WITH ('connector' = 'kafka','topic' = 'flink-topic','properties.bootstrap.servers' = '192.168.184.135:9092','properties.group.id' = 'test-group-10001','scan.startup.mode' = 'latest-offset','format' = 'csv'

);

第六步、实时向Topic发送数据,并在FlinkSQL查询

首先,在FlinkSQL页面,执行SELECT查询语句

Flink SQL> select * from tbl_kafka;

其次,通过Kafka Console Producer向Topic发送数据

-- 生产者发送数据

kafka-console-producer.sh --broker-list 192.168.184.135:9092 --topic flink-topic

/*

1001,90001,click

1001,90001,browser

1001,90001,click

1002,90002,click

1002,90003,click

1003,90001,order

1004,90001,order

*/

Flink SQL写入Hudi-IDEAJava开发

Maven开发pom文件

<!-- Flink Client --><dependency><groupId>org.apache.flink</groupId><artifactId>flink-clients_${scala.binary.version}</artifactId><version>${flink.version}</version></dependency><dependency><groupId>org.apache.flink</groupId><artifactId>flink-java</artifactId><version>${flink.version}</version></dependency><dependency><groupId>org.apache.flink</groupId><artifactId>flink-streaming-java_${scala.binary.version}</artifactId><version>${flink.version}</version></dependency><dependency><groupId>org.apache.flink</groupId><artifactId>flink-runtime-web_${scala.binary.version}</artifactId><version>${flink.version}</version></dependency><!-- Flink Table API & SQL --><dependency><groupId>org.apache.flink</groupId><artifactId>flink-table-common</artifactId><version>${flink.version}</version></dependency><dependency><groupId>org.apache.flink</groupId><artifactId>flink-table-planner-blink_${scala.binary.version}</artifactId><version>${flink.version}</version></dependency><dependency><groupId>org.apache.flink</groupId><artifactId>flink-table-api-java-bridge_${scala.binary.version}</artifactId><version>${flink.version}</version></dependency><dependency><groupId>org.apache.flink</groupId><artifactId>flink-connector-kafka_${scala.binary.version}</artifactId><version>${flink.version}</version></dependency><dependency><groupId>org.apache.flink</groupId><artifactId>flink-json</artifactId><version>${flink.version}</version></dependency><dependency><groupId>org.apache.hudi</groupId><artifactId>hudi-flink-bundle_${scala.binary.version}</artifactId><version>0.9.0</version></dependency><dependency><groupId>org.apache.flink</groupId><artifactId>flink-shaded-hadoop-2-uber</artifactId><version>2.7.5-10.0</version></dependency><!-- MySQL/FastJson/lombok --><dependency><groupId>mysql</groupId><artifactId>mysql-connector-java</artifactId><version>5.1.32</version></dependency><dependency><groupId>com.alibaba</groupId><artifactId>fastjson</artifactId><version>1.2.68</version></dependency><dependency><groupId>org.projectlombok</groupId><artifactId>lombok</artifactId><version>1.18.12</version></dependency><!-- slf4j及log4j --><dependency><groupId>org.slf4j</groupId><artifactId>slf4j-log4j12</artifactId><version>1.7.7</version><scope>runtime</scope></dependency><dependency><groupId>log4j</groupId><artifactId>log4j</artifactId><version>1.2.17</version><scope>runtime</scope></dependency>

消费Kafka数据

启动zookeeper,kafka,然后启动数据模拟生成器,再运行FlinkSQLKafakDemo

package flink_kafka_hudi;import org.apache.flink.table.api.EnvironmentSettings;

import org.apache.flink.table.api.Table;

import org.apache.flink.table.api.TableEnvironment;

import static org.apache.flink.table.api.Expressions.*;/*** 基于Flink SQL Connector实现:实时消费Topic中数据,转换处理后,实时存储Hudi表中*/

public class FlinkSQLKafakDemo {public static void main(String[] args) {//TODO 1-获取表执行环境EnvironmentSettings settings = EnvironmentSettings.newInstance().inStreamingMode().build();TableEnvironment tableEnv = TableEnvironment.create(settings) ;//TODO 2-创建输入表, 从Kafka消费数据tableEnv.executeSql("CREATE TABLE order_kafka_source (\n" +" orderId STRING,\n" +" userId STRING,\n" +" orderTime STRING,\n" +" ip STRING,\n" +" orderMoney DOUBLE,\n" +" orderStatus INT\n" +") WITH (\n" +" 'connector' = 'kafka',\n" +" 'topic' = 'order-topic',\n" +" 'properties.bootstrap.servers' = '192.168.184.135:9092',\n" +" 'properties.group.id' = 'gid-1001',\n" +" 'scan.startup.mode' = 'latest-offset',\n" +" 'format' = 'json',\n" +" 'json.fail-on-missing-field' = 'false',\n" +" 'json.ignore-parse-errors' = 'true'\n" +")");//TODO 3-数据转换:提取订单时间中订单日期,作为Hudi表分区字段值Table etlTable = tableEnv.from("order_kafka_source").addColumns($("orderTime").substring(0, 10).as("partition_day")).addColumns($("orderId").substring(0, 17).as("ts"));tableEnv.createTemporaryView("view_order", etlTable);//TODO 4-查询数据tableEnv.executeSql("SELECT * FROM view_order").print();}

}

Flink写入hudi并读取

启动数据生成器用kafka消费

存入hudi

package flink_kafka_hudi;import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.table.api.EnvironmentSettings;

import org.apache.flink.table.api.Table;

import org.apache.flink.table.api.TableEnvironment;

import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;import static org.apache.flink.table.api.Expressions.$;/*** 基于Flink SQL Connector实现:实时消费Topic中数据,转换处理后,实时存储到Hudi表中*/

public class FlinkSQLHudiDemo {public static void main(String[] args) {// 1-获取表执行环境StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();// TODO: 由于增量将数据写入到Hudi表,所以需要启动Flink Checkpoint检查点env.setParallelism(1);env.enableCheckpointing(5000) ;EnvironmentSettings settings = EnvironmentSettings.newInstance().inStreamingMode() // 设置流式模式.build();StreamTableEnvironment tableEnv = StreamTableEnvironment.create(env, settings);// 2-创建输入表,TODO:从Kafka消费数据tableEnv.executeSql("CREATE TABLE order_kafka_source (\n" +" orderId STRING,\n" +" userId STRING,\n" +" orderTime STRING,\n" +" ip STRING,\n" +" orderMoney DOUBLE,\n" +" orderStatus INT\n" +") WITH (\n" +" 'connector' = 'kafka',\n" +" 'topic' = 'order-topic',\n" +" 'properties.bootstrap.servers' = '192.168.184.135:9092',\n" +" 'properties.group.id' = 'gid-1002',\n" +" 'scan.startup.mode' = 'latest-offset',\n" +" 'format' = 'json',\n" +" 'json.fail-on-missing-field' = 'false',\n" +" 'json.ignore-parse-errors' = 'true'\n" +")");// 3-转换数据:可以使用SQL,也可以时Table APITable etlTable = tableEnv.from("order_kafka_source")// 添加字段:Hudi表数据合并字段,时间戳, "orderId": "20211122103434136000001" -> 20211122103434136.addColumns($("orderId").substring(0, 17).as("ts"))// 添加字段:Hudi表分区字段, "orderTime": "2021-11-22 10:34:34.136" -> 021-11-22.addColumns($("orderTime").substring(0, 10).as("partition_day"));tableEnv.createTemporaryView("view_order", etlTable);// 4-创建输出表,TODO: 关联到Hudi表,指定Hudi表名称,存储路径,字段名称等等信息tableEnv.executeSql("CREATE TABLE order_hudi_sink (\n" +" orderId STRING PRIMARY KEY NOT ENFORCED,\n" +" userId STRING,\n" +" orderTime STRING,\n" +" ip STRING,\n" +" orderMoney DOUBLE,\n" +" orderStatus INT,\n" +" ts STRING,\n" +" partition_day STRING\n" +")\n" +"PARTITIONED BY (partition_day)\n" +"WITH (\n" +" 'connector' = 'hudi',\n" +" 'path' = 'hdfs://192.168.184.135:9000/hudi-warehouse/flink_hudi_order',\n" +" 'table.type' = 'MERGE_ON_READ',\n" +" 'write.operation' = 'upsert',\n" +" 'hoodie.datasource.write.recordkey.field'= 'orderId',\n" +" 'write.precombine.field' = 'ts',\n" +" 'write.tasks'= '1'\n" +")");// 5-通过子查询方式,将数据写入输出表tableEnv.executeSql("INSERT INTO order_hudi_sink\n" +"SELECT\n" +" orderId, userId, orderTime, ip, orderMoney, orderStatus, ts, partition_day\n" +"FROM view_order");}}

读取hudi

package flink_kafka_hudi;import org.apache.flink.table.api.EnvironmentSettings;

import org.apache.flink.table.api.TableEnvironment;/*** 基于Flink SQL Connector实现:从Hudi表中加载数据,编写SQL查询*/

public class FlinkSQLReadDemo {public static void main(String[] args) {// 1-获取表执行环境EnvironmentSettings settings = EnvironmentSettings.newInstance().inStreamingMode().build();TableEnvironment tableEnv = TableEnvironment.create(settings) ;// 2-创建输入表,TODO:加载Hudi表数据tableEnv.executeSql("CREATE TABLE order_hudi(\n" +" orderId STRING PRIMARY KEY NOT ENFORCED,\n" +" userId STRING,\n" +" orderTime STRING,\n" +" ip STRING,\n" +" orderMoney DOUBLE,\n" +" orderStatus INT,\n" +" ts STRING,\n" +" partition_day STRING\n" +")\n" +"PARTITIONED BY (partition_day)\n" +"WITH (\n" +" 'connector' = 'hudi',\n" +" 'path' = 'hdfs://192.168.184.135:9000/hudi-warehouse/flink_hudi_order',\n" +" 'table.type' = 'MERGE_ON_READ',\n" +" 'read.streaming.enabled' = 'true',\n" +" 'read.streaming.check-interval' = '4'\n" +")");// 3-执行查询语句,读取流式读取Hudi表数据tableEnv.executeSql("SELECT orderId, userId, orderTime, ip, orderMoney, orderStatus, ts, partition_day FROM order_hudi").print() ;}}

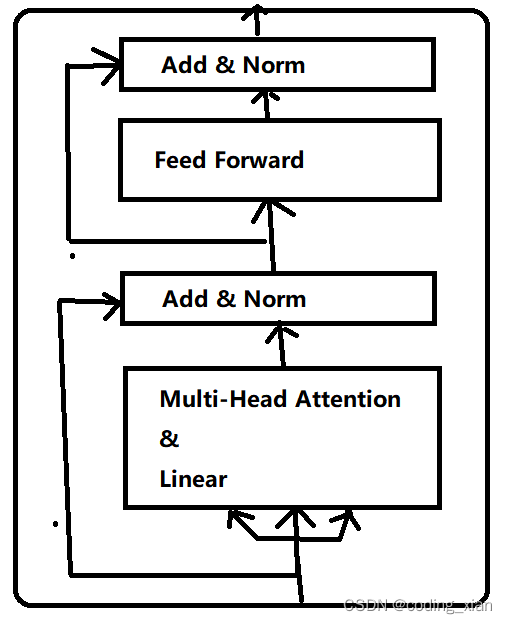

基于Flink实时增量入湖流程图

Flink SQL写入Hudi-FlinkSQL开发

集成环境

#修改$FLINK_HOME/conf/flink-conf.yaml文件

jobmanager.rpc.address: node1.itcast.cn

jobmanager.memory.process.size: 1024m

taskmanager.memory.process.size: 2048m

taskmanager.numberOfTaskSlots: 4classloader.check-leaked-classloader: false

classloader.resolve-order: parent-firstexecution.checkpointing.interval: 3000

state.backend: rocksdb

state.checkpoints.dir: hdfs://master:9000/flink/flink-checkpoints

state.savepoints.dir: hdfs://master:9000/flink/flink-savepoints

state.backend.incremental: true#jar包

将Hudi与Flink集成jar包及其他相关jar包,放置到$FLINK_HOME/lib目录

hudi-flink-bundle_2.12-0.9.0.jar

flink-sql-connector-kafka_2.12-1.12.2.jar

flink-shaded-hadoop-3-uber-3.1.1.7.2.9.0-173-9.0.jar#启动Standalone集群

export HADOOP_CLASSPATH=`/usr/local/src/hadoop/bin/hadoop classpath`

start-cluster.sh#启动SQL Client,最好再次指定Hudi集成jar包

sql-client.sh embedded -j /usr/local/src/flink/lib/hudi-flink-bundle_2.12-0.9.0.jar shell#设置属性

set execution.result-mode=tableau;

set execution.checkpointing.interval=3sec;

执行SQL

首先创建输入表:从Kafka消费数据,其次编写SQL提取字段值,再创建输出表:将数据保存值Hudi表中,最后编写SQL查询Hudi表数据。

第1步、创建输入表,关联Kafka Topic

-- 输入表:Kafka Source

CREATE TABLE order_kafka_source (orderId STRING,userId STRING,orderTime STRING,ip STRING,orderMoney DOUBLE,orderStatus INT

) WITH ('connector' = 'kafka','topic' = 'order-topic','properties.bootstrap.servers' = '192.168.184.135:9092','properties.group.id' = 'gid-1001','scan.startup.mode' = 'latest-offset','format' = 'json','json.fail-on-missing-field' = 'false','json.ignore-parse-errors' = 'true'

);SELECT orderId, userId, orderTime, ip, orderMoney, orderStatus FROM order_kafka_source ;

第2步、处理获取Kafka消息数据,提取字段值

SELECT orderId, userId, orderTime, ip, orderMoney, orderStatus, substring(orderId, 0, 17) AS ts, substring(orderTime, 0, 10) AS partition_day

FROM order_kafka_source ;

第3步、创建输出表,保存数据至Hudi表,设置相关属性

-- 输出表:Hudi Sink

CREATE TABLE order_hudi_sink (orderId STRING PRIMARY KEY NOT ENFORCED,userId STRING,orderTime STRING,ip STRING,orderMoney DOUBLE,orderStatus INT,ts STRING,partition_day STRING

)

PARTITIONED BY (partition_day)

WITH ('connector' = 'hudi','path' = 'hdfs://192.168.184.135:9000/hudi-warehouse/order_hudi_sink','table.type' = 'MERGE_ON_READ','write.operation' = 'upsert','hoodie.datasource.write.recordkey.field'= 'orderId','write.precombine.field' = 'ts','write.tasks'= '1','compaction.tasks' = '1', 'compaction.async.enabled' = 'true', 'compaction.trigger.strategy' = 'num_commits', 'compaction.delta_commits' = '1'

);

第4步、使用INSERT INTO语句,将数据保存Hudi表

-- 子查询插入INSERT ... SELECT ...

INSERT INTO order_hudi_sink

SELECTorderId, userId, orderTime, ip, orderMoney, orderStatus,substring(orderId, 0, 17) AS ts, substring(orderTime, 0, 10) AS partition_day

FROM order_kafka_source ;

Flink CDC Hudi

CDC的全称是Change data Capture,即变更数据捕获,主要面向数据库的变更,是是数据库领域非常常见的技术,主要用于捕获数据库的一些变更,然后可以把变更数据发送到下游。

流程图

环境准备

#修改Hudi集成flink和Hive编译依赖版本配置

原因:现在版本Hudi,在编译的时候本身默认已经集成的flink-SQL-connector-hive的包,会和Flink lib包下的flink-SQL-connector-hive冲突。所以,编译的过程中只修改hive编译版本。文件:hudi-0.9.0/packaging/hudi-flink-bundle/pom.xml<hive.version>3.1.2</hive.version> #hive版本修改为自己的版本然后进入hudi-0.9.0/packaging/hudi-flink-bundle/ 再编译Hudi源码:

mvn clean install -DskipTests -Drat.skip=true -Dscala-2.12 -Dspark3 -Pflink-bundle-shade-hive3#将Flink CDC MySQL对应jar包,放到$FLINK_HOME/lib目录中

flink-sql-connector-mysql-cdc-1.3.0.jar#hive 需要用来读hudi数据,放到$HIVE_HOME/lib目录中

hudi-hadoop-mr-bundle-0.9.0.jar#flink 用来写入和读取数据,将其拷贝至$FLINK_HOME/lib目录中,如果以前有同名jar包,先删除再拷贝。

hudi-flink-bundle_2.12-0.9.0.jar#启动

dfs

zk

kafka

flink

metastore

hiveserver2

创建 MySQL 表

首先开启MySQL数据库binlog日志,再重启MySQL数据库服务,最后创建表。

第一步、开启MySQL binlog日志

[root@node1 ~]# vim /etc/my.cnf

在[mysqld]下面添加内容:server-id=2

log-bin=mysql-bin

binlog_format=row

expire_logs_days=15

binlog_row_image=full

第二步、重启MySQL Server

service mysqld restart

第三步、在MySQL数据库,创建表

-- MySQL 数据库创建表

create database test;

create table test.tbl_users(id bigint auto_increment primary key,name varchar(20) null,birthday timestamp default CURRENT_TIMESTAMP not null,ts timestamp default CURRENT_TIMESTAMP not null

);

创建 CDC 表

先启动HDFS服务、Hive MetaStore和HiveServer2服务和Flink Standalone集群,再运行SQL Client,最后创建表关联MySQL表,采用MySQL CDC方式。

启动相关服务

#启动HDFS服务,分别启动NameNode和DataNode

hadoop-daemon.sh start namenode

hadoop-daemon.sh start datanode#启动Hive服务:元数据MetaStore和HiveServer2

hive/bin/start-metastore.sh

hive/bin/start-hiveserver2.sh#启动Flink Standalone集群

export HADOOP_CLASSPATH=`/usr/local/src/hadoop/bin/hadoop classpath`

start-cluster.sh#启动SQL Client客户端

sql-client.sh embedded -j /usr/local/src/flink/lib/hudi-flink-bundle_2.12-0.9.0.jar shell

设置属性:

set execution.result-mode=tableau;

set execution.checkpointing.interval=3sec;

创建输入表,关联MySQL表,采用MySQL CDC 关联

-- Flink SQL Client创建表

CREATE TABLE users_source_mysql (id BIGINT PRIMARY KEY NOT ENFORCED,name STRING,birthday TIMESTAMP(3),ts TIMESTAMP(3)

) WITH (

'connector' = 'mysql-cdc',

'hostname' = '192.168.184.135',

'port' = '3306',

'username' = 'root',

'password' = 'xxxxxx',

'server-time-zone' = 'Asia/Shanghai',

'debezium.snapshot.mode' = 'initial',

'database-name' = 'test',

'table-name' = 'tbl_users'

);

开启MySQL Client客户端,执行DML语句,插入数据

insert into test.tbl_users (name) values ('zhangsan')

insert into test.tbl_users (name) values ('lisi');

insert into test.tbl_users (name) values ('wangwu');

insert into test.tbl_users (name) values ('laoda');

insert into test.tbl_users (name) values ('laoer');

查询CDC表数据

-- 查询数据

select * from users_source_mysql;

创建视图

创建一个临时视图,增加分区列part,方便后续同步hive分区表。

-- 创建一个临时视图,增加分区列 方便后续同步hive分区表

create view view_users_cdc

AS

SELECT *, DATE_FORMAT(birthday, 'yyyyMMdd') as part FROM users_source_mysql;select * from view_users_cdc;

创建 Hudi 表

创建 CDC Hudi Sink表,并自动同步hive分区表

CREATE TABLE users_sink_hudi_hive(

id bigint ,

name string,

birthday TIMESTAMP(3),

ts TIMESTAMP(3),

part VARCHAR(20),

primary key(id) not enforced

)

PARTITIONED BY (part)

with(

'connector'='hudi',

'path'= 'hdfs://192.168.184.135:9000/hudi-warehouse/users_sink_hudi_hive',

'table.type'= 'MERGE_ON_READ',

'hoodie.datasource.write.recordkey.field'= 'id',

'write.precombine.field'= 'ts',

'write.tasks'= '1',

'write.rate.limit'= '2000',

'compaction.tasks'= '1',

'compaction.async.enabled'= 'true',

'compaction.trigger.strategy'= 'num_commits',

'compaction.delta_commits'= '1',

'changelog.enabled'= 'true',

'read.streaming.enabled'= 'true',

'read.streaming.check-interval'= '3',

'hive_sync.enable'= 'true',

'hive_sync.mode'= 'hms',

'hive_sync.metastore.uris'= 'thrift://192.168.184.135:9083',

'hive_sync.jdbc_url'= 'jdbc:hive2://192.168.184.135:10000',

'hive_sync.table'= 'users_sink_hudi_hive',

'hive_sync.db'= 'default',

'hive_sync.username'= 'root',

'hive_sync.password'= 'xxxxxx',

'hive_sync.support_timestamp'= 'true'

);此处Hudi表类型:MOR,Merge on Read (读时合并),快照查询+增量查询+读取优化查询(近实时)。使用列式存储(parquet)+行式文件(arvo)组合存储数据。更新记录到增量文件中,然后进行同步或异步压缩来生成新版本的列式文件。

数据写入Hudi表

编写INSERT语句,从视图中查询数据,再写入Hudi表中

insert into users_sink_hudi_hive select id, name, birthday, ts, part from view_users_cdc;

Hive 表查询

需要引入hudi-hadoop-mr-bundle-0.9.0.jar包,放到$HIVE_HOME/lib下

--启动Hive中beeline客户端,连接HiveServer2服务 已自动生产hudi MOR模式的2张表:users_sink_hudi_hive_ro,ro 表全称 read oprimized table,对于 MOR 表同步的 xxx_ro 表,只暴露压缩后的 parquet。其查询方式和COW表类似。设置完 hiveInputFormat 之后 和普通的 Hive 表一样查询即可;users_sink_hudi_hive_rt,rt表示增量视图,主要针对增量查询的rt表;ro表只能查parquet文件数据, rt表 parquet文件数据和log文件数据都可查;

查看自动生成表users_sink_hudi_hive_ro结构

show create table users_sink_hudi_hive_ro;

查看自动生成表的分区信息

show partitions users_sink_hudi_hive_ro ;

show partitions users_sink_hudi_hive_rt ;

查询Hive 分区表数据

set hive.exec.mode.local.auto=true;

set hive.input.format = org.apache.hudi.hadoop.hive.HoodieCombineHiveInputFormat;

set hive.mapred.mode=nonstrict ;select id, name, birthday, ts, `part` from users_sink_hudi_hive_ro;

指定分区字段过滤,查询数据

select name, ts from users_sink_hudi_hive_ro where part ='20231110';

select name, ts from users_sink_hudi_hive_rt where part ='20231110';

Hudi Client操作Hudi表

进入Hudi客户端命令行:hudi/hudi-cli/hudi-cli.sh

连接Hudi表,查看表信息

connect --path hdfs://192.168.184.135:9000/hudi-warehouse/users_sink_hudi_hive

查看Hudi compactions 计划

compactions show all

查看Hudi commit信息

commits show --sortBy "CommitTime"

help

hudi:users_sink_hudi_hive->help

2023-11-10 21:13:57,140 INFO core.SimpleParser: * ! - Allows execution of operating sy

* // - Inline comment markers (start of line only)

* ; - Inline comment markers (start of line only)

* bootstrap index showmapping - Show bootstrap index mapping

* bootstrap index showpartitions - Show bootstrap indexed partitions

* bootstrap run - Run a bootstrap action for current Hudi table

* clean showpartitions - Show partition level details of a clean

* cleans refresh - Refresh table metadata

* cleans run - run clean

* cleans show - Show the cleans

* clear - Clears the console

* cls - Clears the console

* clustering run - Run Clustering

* clustering schedule - Schedule Clustering

* commit rollback - Rollback a commit

* commits compare - Compare commits with another Hoodie table

* commit show_write_stats - Show write stats of a commit

* commit showfiles - Show file level details of a commit

* commit showpartitions - Show partition level details of a commit

* commits refresh - Refresh table metadata

* commits show - Show the commits

* commits showarchived - Show the archived commits

* commits sync - Compare commits with another Hoodie table

* compaction repair - Renames the files to make them consistent with the timeline as d when compaction unschedule fails partially.

* compaction run - Run Compaction for given instant time

* compaction schedule - Schedule Compaction

* compaction show - Shows compaction details for a specific compaction instant

* compaction showarchived - Shows compaction details for a specific compaction instant

* compactions show all - Shows all compactions that are in active timeline

* compactions showarchived - Shows compaction details for specified time window

* compaction unschedule - Unschedule Compaction

* compaction unscheduleFileId - UnSchedule Compaction for a fileId

* compaction validate - Validate Compaction

* connect - Connect to a hoodie table

* create - Create a hoodie table if not present

* date - Displays the local date and time

* desc - Describe Hoodie Table properties

* downgrade table - Downgrades a table

* exit - Exits the shell

* export instants - Export Instants and their metadata from the Timeline

* fetch table schema - Fetches latest table schema

* hdfsparquetimport - Imports Parquet table to a hoodie table

* help - List all commands usage

* metadata create - Create the Metadata Table if it does not exist

* metadata delete - Remove the Metadata Table

* metadata init - Update the metadata table from commits since the creation

* metadata list-files - Print a list of all files in a partition from the metadata

* metadata list-partitions - Print a list of all partitions from the metadata

* metadata refresh - Refresh table metadata

* metadata set - Set options for Metadata Table

* metadata stats - Print stats about the metadata

* quit - Exits the shell

* refresh - Refresh table metadata

* repair addpartitionmeta - Add partition metadata to a table, if not present

* repair corrupted clean files - repair corrupted clean files

* repair deduplicate - De-duplicate a partition path contains duplicates & produce rep

* repair overwrite-hoodie-props - Overwrite hoodie.properties with provided file. Riskon!

* savepoint create - Savepoint a commit

* savepoint delete - Delete the savepoint

* savepoint rollback - Savepoint a commit

* savepoints refresh - Refresh table metadata

* savepoints show - Show the savepoints

* script - Parses the specified resource file and executes its commands

* set - Set spark launcher env to cli

* show archived commits - Read commits from archived files and show details

* show archived commit stats - Read commits from archived files and show details

* show env - Show spark launcher env by key

* show envs all - Show spark launcher envs

* show fsview all - Show entire file-system view

* show fsview latest - Show latest file-system view

* show logfile metadata - Read commit metadata from log files

* show logfile records - Read records from log files

* show rollback - Show details of a rollback instant

* show rollbacks - List all rollback instants

* stats filesizes - File Sizes. Display summary stats on sizes of files

* stats wa - Write Amplification. Ratio of how many records were upserted to how many

* sync validate - Validate the sync by counting the number of records

* system properties - Shows the shell's properties

* temp_delete - Delete view name

* temp_query - query against created temp view

* temp delete - Delete view name

* temp query - query against created temp view

* temps_show - Show all views name

* temps show - Show all views name

* upgrade table - Upgrades a table

* utils loadClass - Load a class

* version - Displays shell version

九、Hudi案例实战一

七陌社交是一家专门做客服系统的公司, 传智教育是基于七陌社交构建客服系统,每天都有非常多的的用户进行聊天, 传智教育目前想要对这些聊天记录进行存储, 同时还需要对每天的消息量进行实时统计分析, 请您来设计如何实现数据的存储以及实时的数据统计分析工作。

需求如下:

1) 选择合理的存储容器进行数据存储, 并让其支持基本数据查询工作

2) 进行实时统计消息总量

3) 进行实时统计各个地区收 发 消息的总量

4) 进行实时统计每一位客户发送和接收消息数量

1、案例架构

实时采集七陌用户聊天信息数据,存储消息队列Kafka,再实时将数据处理转换,将其消息存储Hudi表中,最终使用Hive和Spark业务指标统计,基于FanBI可视化报表展示。

1、Apache Flume:分布式实时日志数据采集框架

由于业务端数据在不断的在往一个目录下进行生产, 我们需要实时的进行数据采集, 而flume就是一个专门用于数据采集工具,比如就可以监控某个目录下文件, 一旦有新的文件产生即可立即采集。2、Apache Kafka:分布式消息队列

Flume 采集过程中, 如果消息非常的快, Flume也会高效的将数据进行采集, 那么就需要一个能够快速承载数据容器, 而且后续还要对数据进行相关处理转换操作, 此时可以将flume采集过来的数据写入到Kafka中,进行消息数据传输,而Kafka也是整个集团中心所有业务线统一使用的消息系统, 用来对接后续的业务(离线或者实时)。3、Apache Spark:分布式内存计算引擎,离线和流式数据分析处理

整个七陌社交案例, 需要进行实时采集,那么此时也就意味着数据来一条就需要处理一条, 来一条处理一条, 此时就需要一些流式处理的框架,Structured Streaming或者Flink均可。

此外,七陌案例中,对每日用户消息数据按照业务指标分析,最终存储MySQL数据库中,选择SparkSQL。4、Apache Hudi:数据湖框架

七陌用户聊天消息数据,最终存储到Hudi表(底层存储:HDFS分布式文件系统),统一管理数据文件,后期与Spark和Hive集成,进行业务指标分析。5、Apache Hive:大数据数仓框架

与Hudi表集成,对七陌聊天数据进行分析,直接编写SQL即可。6、MySQL:关系型数据库

将业务指标分析结果存储在MySQL数据库中,后期便于指标报表展示。7、FineBI:报表工具

帆软公司的一款商业图表工具, 让图表制作更加简单

2、业务数据

用户聊天数据以文本格式存储日志文件中,包含20个字段,下图所示 各个字段之间分割符号为:**\001**

3、数据生成

运行jar包:7Mo_DataGen.jar,指定参数信息,模拟生成用户聊天信息数据,写入日志文件

第一步、创建原始文件目录

mkdir -p /usr/local/src/datas/7mo_init

第二步、上传模拟数据程序

#7mo_init目录下

7Mo_DataGen.jar

7Mo_Data.xlsx

第三步、创建模拟数据目录

mkdir -p /usr/local/src/datas/7mo_data

touch MOMO_DATA.dat #注意权限 需要写入这个文件

第四步、运行程序生成数据

# 1. 语法

java -jar /usr/local/src/datas/7mo_init/7Mo_DataGen.jar 原始数据路径 模拟数据路径 随机产生数据间隔ms时间# 2. 测试:每500ms生成一条数据

java -jar /usr/local/src/datas/7mo_init/7Mo_DataGen.jar \

/usr/local/src/datas/7mo_init/7Mo_Data.xlsx \

/usr/local/src/datas/7mo_data \

500

第五步、查看产生数据

[root@master 7mo_data]# pwd

/usr/local/src/datas/7mo_data

[root@master 7mo_data]# head -3 MOMO_DATA.dat

4、七陌数据采集

Apache Flume 是什么