超参数调优-通用深度学习篇(上)

文章目录

- 深度学习超参数调优

- 网格搜索

- 示例一:网格搜索回归模型超参数

- 示例二:Keras网格搜索

- 随机搜索

- 贝叶斯搜索

- 超参数调优框架

- Optuna深度学习超参数优化框架

- nvidia nemo大模型超参数优化框架

参数调整理论: 黑盒优化:超参数优化算法最新进展总结

- 均为转载,联系侵删

深度学习超参数调优

- pytorch 网格搜索LSTM最优参数 python网格搜索优化参数

- Keras深度学习超参数优化官方手册

- Keras深度学习超参数优化手册-CSDN博客版

- 超参数搜索不够高效?这几大策略了解一下

- 使用贝叶斯优化进行深度神经网络超参数优化

网格搜索

示例一:网格搜索回归模型超参数

# grid search cnn for airline passengers

from math import sqrt

from numpy import array, mean

from pandas import DataFrame, concat, read_csv

from sklearn.metrics import mean_squared_error

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense, Flatten, Conv1D, MaxPooling1D# split a univariate dataset into train/test sets

def train_test_split(data, n_test):return data[:-n_test], data[-n_test:]# transform list into supervised learning format

def series_to_supervised(data, n_in=1, n_out=1):df = DataFrame(data)cols = list()# input sequence (t-n, ... t-1)for i in range(n_in, 0, -1):cols.append(df.shift(i))# forecast sequence (t, t+1, ... t+n)for i in range(0, n_out):cols.append(df.shift(-i))# put it all togetheragg = concat(cols, axis=1)# drop rows with NaN valuesagg.dropna(inplace=True)return agg.values# root mean squared error or rmse

def measure_rmse(actual, predicted):return sqrt(mean_squared_error(actual, predicted))# difference dataset

def difference(data, order):return [data[i] - data[i - order] for i in range(order, len(data))]# fit a model

def model_fit(train, config):# unpack confign_input, n_filters, n_kernel, n_epochs, n_batch, n_diff = config# prepare dataif n_diff > 0:train = difference(train, n_diff)# transform series into supervised formatdata = series_to_supervised(train, n_in=n_input)# separate inputs and outputstrain_x, train_y = data[:, :-1], data[:, -1]# reshape input data into [samples, timesteps, features]n_features = 1train_x = train_x.reshape((train_x.shape[0], train_x.shape[1], n_features))# define modelmodel = Sequential()model.add(Conv1D(filters=n_filters, kernel_size=n_kernel, activation='relu', input_shape=(n_input, n_features)))model.add(MaxPooling1D(pool_size=2))model.add(Flatten())model.add(Dense(1))model.compile(loss='mse', optimizer='adam')# fitmodel.fit(train_x, train_y, epochs=n_epochs, batch_size=n_batch, verbose=0)return model# forecast with the fit model

def model_predict(model, history, config):# unpack confign_input, _, _, _, _, n_diff = config# prepare datacorrection = 0.0if n_diff > 0:correction = history[-n_diff]history = difference(history, n_diff)x_input = array(history[-n_input:]).reshape((1, n_input, 1))# forecastyhat = model.predict(x_input, verbose=0)return correction + yhat[0]# walk-forward validation for univariate data

def walk_forward_validation(data, n_test, cfg):predictions = list()# split datasettrain, test = train_test_split(data, n_test)# fit modelmodel = model_fit(train, cfg)# seed history with training datasethistory = [x for x in train]# step over each time-step in the test setfor i in range(len(test)):# fit model and make forecast for historyyhat = model_predict(model, history, cfg)# store forecast in list of predictionspredictions.append(yhat)# add actual observation to history for the next loophistory.append(test[i])# estimate prediction errorerror = measure_rmse(test, predictions)print(' > %.3f' % error)return error# score a model, return None on failure

def repeat_evaluate(data, config, n_test, n_repeats=10):# convert config to a keykey = str(config)# fit and evaluate the model n timesscores = [walk_forward_validation(data, n_test, config) for _ in range(n_repeats)]# summarize scoreresult = mean(scores)print('> Model[%s] %.3f' % (key, result))return (key, result)# grid search configs

def grid_search(data, cfg_list, n_test):# evaluate configsscores = [repeat_evaluate(data, cfg, n_test) for cfg in cfg_list]# sort configs by error, ascscores.sort(key=lambda tup: tup[1])return scores# create a list of configs to try

def model_configs():# define scope of configsn_input = [12]n_filters = [64]n_kernels = [3, 5]n_epochs = [100]n_batch = [1, 150]n_diff = [0, 12]# create configsconfigs = list()for a in n_input:for b in n_filters:for c in n_kernels:for d in n_epochs:for e in n_batch:for f in n_diff:cfg = [a, b, c, d, e, f]configs.append(cfg)print('Total configs: %d' % len(configs))return configs# define dataset

# 下载数据集:https://raw.githubusercontent.com/jbrownlee/Datasets/master/airline-passengers.csv

series = read_csv('airline-passengers.csv', header=0, index_col=0)

data = series.values

# data split

n_test = 12

# model configs

cfg_list = model_configs()

# grid search

scores = grid_search(data, cfg_list, n_test)

print('done')

# list top 10 configs

for cfg, error in scores[:3]:print(cfg, error)

示例二:Keras网格搜索

"""

调整batch size和epochs

"""# Use scikit-learn to grid search the batch size and epochs

import numpy as np

import tensorflow as tf

from sklearn.model_selection import GridSearchCV

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense

from scikeras.wrappers import KerasClassifier

# Function to create model, required for KerasClassifier

def create_model():# create modelmodel = Sequential()model.add(Dense(12, input_shape=(8,), activation='relu'))model.add(Dense(1, activation='sigmoid'))# Compile modelmodel.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])return model

# fix random seed for reproducibility

seed = 7

tf.random.set_seed(seed)

# load dataset

dataset = np.loadtxt("pima-indians-diabetes.csv", delimiter=",")

# split into input (X) and output (Y) variables

X = dataset[:,0:8]

Y = dataset[:,8]

# create model

model = KerasClassifier(model=create_model, verbose=0)

# define the grid search parameters

batch_size = [10, 20, 40, 60, 80, 100]

epochs = [10, 50, 100]

param_grid = dict(batch_size=batch_size, epochs=epochs)

grid = GridSearchCV(estimator=model, param_grid=param_grid, n_jobs=-1, cv=3)

grid_result = grid.fit(X, Y)

# summarize results

print("Best: %f using %s" % (grid_result.best_score_, grid_result.best_params_))

means = grid_result.cv_results_['mean_test_score']

stds = grid_result.cv_results_['std_test_score']

params = grid_result.cv_results_['params']

for mean, stdev, param in zip(means, stds, params):print("%f (%f) with: %r" % (mean, stdev, param))

"""

更多参考:https://machinelearningmastery.com/grid-search-hyperparameters-deep-learning-models-python-keras/

"""

随机搜索

# Load the dataset

X, Y = load_dataset()# Create model for KerasClassifier

def create_model(hparams1=dvalue,hparams2=dvalue,...hparamsn=dvalue):# Model definition...model = KerasClassifier(build_fn=create_model) # Specify parameters and distributions to sample from

hparams1 = randint(1, 100)

hparams2 = ['elu', 'relu', ...]

...

hparamsn = uniform(0, 1)# Prepare the Dict for the Search

param_dist = dict(hparams1=hparams1, hparams2=hparams2, ...hparamsn=hparamsn)# Search in action!

n_iter_search = 16 # Number of parameter settings that are sampled.

random_search = RandomizedSearchCV(estimator=model, param_distributions=param_dist,n_iter=n_iter_search,n_jobs=, cv=, verbose=)

random_search.fit(X, Y)# Show the results

print("Best: %f using %s" % (random_search.best_score_, random_search.best_params_))

means = random_search.cv_results_['mean_test_score']

stds = random_search.cv_results_['std_test_score']

params = random_search.cv_results_['params']

for mean, stdev, param in zip(means, stds, params):print("%f (%f) with: %r" % (mean, stdev, param))

贝叶斯搜索

"""

准备数据

"""

(train_images, train_labels), (test_images, test_labels) = fashion_mnist.load_data()# split into train, validation and test sets

train_x, val_x, train_y, val_y = train_test_split(train_images, train_labels, stratify=train_labels, random_state=48, test_size=0.05)

(test_x, test_y)=(test_images, test_labels)# normalize pixels to range 0-1

train_x = train_x / 255.0

val_x = val_x / 255.0

test_x = test_x / 255.0#one-hot encode target variable

train_y = to_categorical(train_y)

val_y = to_categorical(val_y)

test_y = to_categorical(test_y)# pip3 install keras-tuner

"""

调整获取最优参数(MLP版)

"""

model = Sequential()model.add(Dense(units = hp.Int('dense-bot', min_value=50, max_value=350, step=50), input_shape=(784,), activation='relu'))for i in range(hp.Int('num_dense_layers', 1, 2)):model.add(Dense(units=hp.Int('dense_' + str(i), min_value=50, max_value=100, step=25), activation='relu'))model.add(Dropout(hp.Choice('dropout_'+ str(i), values=[0.0, 0.1, 0.2])))model.add(Dense(10,activation="softmax"))hp_optimizer=hp.Choice('Optimizer', values=['Adam', 'SGD'])if hp_optimizer == 'Adam':hp_learning_rate = hp.Choice('learning_rate', values=[1e-1, 1e-2, 1e-3])

elif hp_optimizer == 'SGD':hp_learning_rate = hp.Choice('learning_rate', values=[1e-1, 1e-2, 1e-3])nesterov=Truemomentum=0.9

model.compile(optimizer = hp_optimizer, loss='categorical_crossentropy', metrics=['accuracy'])tuner_mlp = kt.tuners.BayesianOptimization(model,seed=random_seed,objective='val_loss',max_trials=30,directory='.',project_name='tuning-mlp')

tuner_mlp.search(train_x, train_y, epochs=50, batch_size=32, validation_data=(dev_x, dev_y), callbacks=callback)

best_mlp_hyperparameters = tuner_mlp.get_best_hyperparameters(1)[0]

print("Best Hyper-parameters")

# best_mlp_hyperparameters.values

"""

使用最优参数来训练模型

"""

model_mlp = Sequential()model_mlp.add(Dense(best_mlp_hyperparameters['dense-bot'], input_shape=(784,), activation='relu'))for i in range(best_mlp_hyperparameters['num_dense_layers']):model_mlp.add(Dense(units=best_mlp_hyperparameters['dense_' +str(i)], activation='relu'))model_mlp.add(Dropout(rate=best_mlp_hyperparameters['dropout_' +str(i)]))model_mlp.add(Dense(10,activation="softmax"))model_mlp.compile(optimizer=best_mlp_hyperparameters['Optimizer'], loss='categorical_crossentropy',metrics=['accuracy'])

history_mlp= model_mlp.fit(train_x, train_y, epochs=100, batch_size=32, validation_data=(dev_x, dev_y), callbacks=callback)

# model_mlp=tuner_mlp.hypermodel.build(best_mlp_hyperparameters)

# history_mlp=model_mlp.fit(train_x, train_y, epochs=100, batch_size=32, validation_data=(dev_x, dev_y), callbacks=callback)

"""

效果测试

"""

mlp_test_loss, mlp_test_acc = model_mlp.evaluate(test_x, test_y, verbose=2)

print('\nTest accuracy:', mlp_test_acc)

# Test accuracy: 0.8823"""

CNN版

"""

"""

基线模型

"""

model_cnn = Sequential()

model_cnn.add(Conv2D(32, (3, 3), activation='relu', input_shape=(28, 28, 1)))

model_cnn.add(MaxPooling2D((2, 2)))

model_cnn.add(Flatten())

model_cnn.add(Dense(100, activation='relu'))

model_cnn.add(Dense(10, activation='softmax'))

model_cnn.compile(optimizer="adam", loss='categorical_crossentropy', metrics=['accuracy'])

"""

贝叶斯搜索超参数

"""

model = Sequential()model = Sequential()

model.add(Input(shape=(28, 28, 1)))for i in range(hp.Int('num_blocks', 1, 2)):hp_padding=hp.Choice('padding_'+ str(i), values=['valid', 'same'])hp_filters=hp.Choice('filters_'+ str(i), values=[32, 64])model.add(Conv2D(hp_filters, (3, 3), padding=hp_padding, activation='relu', kernel_initializer='he_uniform', input_shape=(28, 28, 1)))model.add(MaxPooling2D((2, 2)))model.add(Dropout(hp.Choice('dropout_'+ str(i), values=[0.0, 0.1, 0.2])))model.add(Flatten())hp_units = hp.Int('units', min_value=25, max_value=150, step=25)

model.add(Dense(hp_units, activation='relu', kernel_initializer='he_uniform'))model.add(Dense(10,activation="softmax"))hp_learning_rate = hp.Choice('learning_rate', values=[1e-2, 1e-3])

hp_optimizer=hp.Choice('Optimizer', values=['Adam', 'SGD'])if hp_optimizer == 'Adam':hp_learning_rate = hp.Choice('learning_rate', values=[1e-2, 1e-3])

elif hp_optimizer == 'SGD':hp_learning_rate = hp.Choice('learning_rate', values=[1e-2, 1e-3])nesterov=Truemomentum=0.9

model.compile( optimizer=hp_optimizer,loss='categorical_crossentropy', metrics=['accuracy'])tuner_cnn = kt.tuners.BayesianOptimization(model,objective='val_loss',max_trials=100,directory='.',project_name='tuning-cnn')

"""

采用最佳超参数训练模型

"""

model_cnn = Sequential()model_cnn.add(Input(shape=(28, 28, 1)))for i in range(best_cnn_hyperparameters['num_blocks']):hp_padding=best_cnn_hyperparameters['padding_'+ str(i)]hp_filters=best_cnn_hyperparameters['filters_'+ str(i)]model_cnn.add(Conv2D(hp_filters, (3, 3), padding=hp_padding, activation='relu', kernel_initializer='he_uniform', input_shape=(28, 28, 1)))model_cnn.add(MaxPooling2D((2, 2)))model_cnn.add(Dropout(best_cnn_hyperparameters['dropout_'+ str(i)]))model_cnn.add(Flatten())

model_cnn.add(Dense(best_cnn_hyperparameters['units'], activation='relu', kernel_initializer='he_uniform'))model_cnn.add(Dense(10,activation="softmax"))model_cnn.compile(optimizer=best_cnn_hyperparameters['Optimizer'], loss='categorical_crossentropy', metrics=['accuracy'])

print(model_cnn.summary())history_cnn= model_cnn.fit(train_x, train_y, epochs=50, batch_size=32, validation_data=(dev_x, dev_y), callbacks=callback)

cnn_test_loss, cnn_test_acc = model_cnn.evaluate(test_x, test_y, verbose=2)

print('\nTest accuracy:', cnn_test_acc)# Test accuracy: 0.92

超参数调优框架

- Optuna-深度学习-超参数优化

- nvidia nemo-大模型训练优化自动超参数搜索分析

- https://github.com/NVIDIA/NeMo-Framework-Launcher

Optuna深度学习超参数优化框架

import os

import optuna

import plotly

from optuna.trial import TrialState

import torch

import torch.nn as nn

import torch.nn.functional as F

import torch.optim as optim

import torch.utils.data

from torchvision import datasets

from torchvision import transforms

from optuna.visualization import plot_optimization_history

from optuna.visualization import plot_param_importances

from optuna.visualization import plot_slice

from optuna.visualization import plot_intermediate_values

from optuna.visualization import plot_parallel_coordinate# 下述代码指定了SGDClassifier分类器的参数:alpha、max_iter 的搜索空间、损失函数loss的搜索空间。

def objective(trial):iris = sklearn.datasets.load_iris()classes = list(set(iris.target))train_x, valid_x, train_y, valid_y = sklearn.model_selection.train_test_split(iris.data, iris.target, test_size=0.25, random_state=0)#指定参数搜索空间alpha = trial.suggest_loguniform('alpha', 1e-5, 1e-1)max_iter = trial.suggest_int('max_iter',64,192,step=64)loss = trial.suggest_categorical('loss',['hinge','log','perceptron'])clf = sklearn.linear_model.SGDClassifier(alpha=alpha,max_iter=max_iter)# 下述代码指定了学习率learning_rate、优化器optimizer、神经元个数n_uint 的搜索空间。

def objective(trial):params = {'learning_rate': trial.suggest_loguniform('learning_rate', 1e-5, 1e-1),'optimizer': trial.suggest_categorical("optimizer", ["Adam", "RMSprop", "SGD"]),'n_unit': trial.suggest_int("n_unit", 4, 18)}model = build_model(params)accuracy = train_and_evaluate(params, model)return accuracy# 记录超参数训练过程

def objective(trial):iris = sklearn.datasets.load_iris()classes = list(set(iris.target))train_x, valid_x, train_y, valid_y = sklearn.model_selection.train_test_split(iris.data, iris.target, test_size=0.25, random_state=0)alpha = trial.suggest_loguniform('alpha', 1e-5, 1e-1)max_iter = trial.suggest_int('max_iter',64,192,step=64)loss = trial.suggest_categorical('loss',['hinge','log','perceptron'])clf = sklearn.linear_model.SGDClassifier(alpha=alpha,max_iter=max_iter)for step in range(100):clf.partial_fit(train_x, train_y, classes=classes)intermediate_value = 1.0 - clf.score(valid_x, valid_y)trial.report(intermediate_value, step)if trial.should_prune():raise optuna.TrialPruned()return 1.0 - clf.score(valid_x, valid_y)# 创建优化过程

def objective(trial):iris = sklearn.datasets.load_iris()classes = list(set(iris.target))train_x, valid_x, train_y, valid_y = sklearn.model_selection.train_test_split(iris.data, iris.target, test_size=0.25, random_state=0)alpha = trial.suggest_loguniform('alpha', 1e-5, 1e-1)max_iter = trial.suggest_int('max_iter',64,192,step=64)loss = trial.suggest_categorical('loss',['hinge','log','perceptron'])clf = sklearn.linear_model.SGDClassifier(alpha=alpha,max_iter=max_iter)for step in range(100):clf.partial_fit(train_x, train_y, classes=classes)intermediate_value = 1.0 - clf.score(valid_x, valid_y)trial.report(intermediate_value, step)if trial.should_prune():raise optuna.TrialPruned()return 1.0 - clf.score(valid_x, valid_y)study = optuna.create_study(storage='path',study_name='first',pruner=optuna.pruners.MedianPruner())

#study = optuna.study.load_study('first','path')

study.optimize(objective, n_trials=20)

print("Study statistics: ")

print(" Number of finished trials: ", len(study.trials))

print(" Number of pruned trials: ", len(pruned_trials))

print(" Number of complete trials: ", len(complete_trials))

print("Best trial:")

trial = study.best_trial

print(" Value: ", trial.value)

print(" Params: ")

for key, value in trial.params.items():print("{}:{}".format(key, value))# 可视化搜索结果

optuna.visualization.plot_contour(study)#若不行,请尝试:

vis_path = r'result-vis/'

graph_cout = optuna.visualization.plot_contour(study,params=['n_layers','lr'])

plotly.offline.plot(graph_cout,filename=vis_path+'graph_cout.html')plot_optimization_history(study)#若不行,请尝试:

vis_path = r'result-vis/'

history = plot_optimization_history(study)

plotly.offline.plot(history,filename=vis_path+'history.html')plot_intermediate_values(study)#若不行,请尝试:

vis_path = r'result-vis/'

intermed = plot_intermediate_values(study)

plotly.offline.plot(intermed,filename=vis_path+'intermed.html')plot_slice(study, params=['alpha','max_iter','loss'])#若不行,请尝试:

vis_path = r'result-vis/'

slices = plot_slice(study)

plotly.offline.plot(slices,filename=vis_path+'slices.html')plot_parallel_coordinate(study,params=['alpha','max_iter','loss'])#若不行,请尝试:

vis_path = r'result-vis/'

paraller = plot_parallel_coordinate(study)

plotly.offline.plot(paraller,filename=vis_path+'paraller.html')

nvidia nemo大模型超参数优化框架

- 用户手册:nvidia nemo用户手册

相关文章:

)

超参数调优-通用深度学习篇(上)

文章目录 深度学习超参数调优网格搜索示例一:网格搜索回归模型超参数示例二:Keras网格搜索 随机搜索贝叶斯搜索 超参数调优框架Optuna深度学习超参数优化框架nvidia nemo大模型超参数优化框架 参数调整理论: 黑盒优化:超参数优化…...

小程序中data-xx是用方式

data-sts"3" 是微信小程序中的一种数据绑定语法,用于在 WXML(小程序模板)中将自定义的数据绑定到页面元素上。让我详细解释一下: data-xx 的作用: data-xx 允许你在页面元素上自定义属性,以便在事…...

【2024德国工作】外国人在德国找工作是什么体验?

挺难的,德语应该是所有中国人的难点。大部分中国人进德国公司要么是做中国业务相关,要么是做技术领域的工程师。先讲讲人在中国怎么找德国的工作,顺便延申下,德国工作的真实体验,最后聊聊在今年的德国工作签证申请条件…...

Unity中获取数据的方法

Input和GetComponent 一、Input 1、Input类: 用于处理用户输入(如键盘、鼠标、触摸等)的静态类 2、作用: 允许你检查用户的输入状态。如某个键是否被按下,鼠标的位置,触摸的坐标等 3、实例 (1) 键盘…...

Java的死锁问题

Java中的死锁问题是指两个或多个线程互相持有对方所需的资源,导致它们在等待对方释放资源时永久地阻塞的情况。 死锁产生条件 死锁发生通常需要满足以下四个必要条件: 互斥条件:至少有一个资源是只能被一个线程持有的,如果其他…...

Unity 公用函数整理【二】

1、在规定时间时间内将一个值变化到另一个值,使用Mathf.Lerp实现 private float timer;[Tooltip("当前温度")]private float curTemp;[Tooltip("开始温度")]private float startTemp 20;private float maxTemp 100;/// <summary>/// 升…...

千年古城的味蕾传奇-平凉锅盔

在甘肃平凉这片古老而神秘的土地上,有一种美食历经岁月的洗礼,依然散发着独特的魅力,那便是平凉锅盔。平凉锅盔,那可是甘肃平凉的一张美食名片。它外表金黄,厚实饱满,就像一轮散发着诱人香气的金黄月亮。甘…...

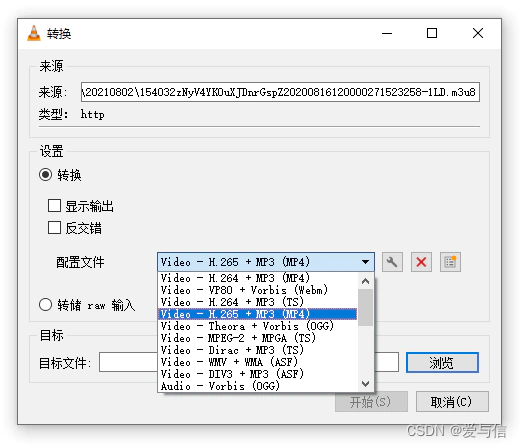

微信小程序视频如何下载

一、工具准备 1、抓包工具Fiddler Download Fiddler Web Debugging Tool for Free by Telerik 2、VLC media player Download official VLC media player for Windows - VideoLAN 3、微信PC端 微信 Windows 版 二、开始抓包 1、打开Fiddler工具,设置修改如下…...

SVN 安装教程

SVN 安装教程 SVN(Subversion)是一个开源的版本控制系统,广泛用于软件开发和文档管理。本文将详细介绍如何在不同的操作系统上安装SVN,包括Windows、macOS和Linux。 Windows系统上的SVN安装 1. 下载SVN 访问SVN官方网站或Visu…...

HTML静态网页成品作业(HTML+CSS)—— 家乡山西介绍网页(3个页面)

🎉不定期分享源码,关注不丢失哦 文章目录 一、作品介绍二、作品演示三、代码目录四、网站代码HTML部分代码 五、源码获取 一、作品介绍 🏷️本套采用HTMLCSS,未使用Javacsript代码,共有6个页面。 二、作品演示 三、代…...

:定理6的补充证明及三道循环置换例题)

【抽代复习笔记】20-群(十四):定理6的补充证明及三道循环置换例题

例1:找出S3中所有不能和(123)交换的元。 解:因为 (123)(1) (1)(123) (123),(123)(132) (132)(123) (1),所以(1)、(132)和(123)均可以交换; 而(12)(123) (23),(123)(12) (13),故 (12)(12…...

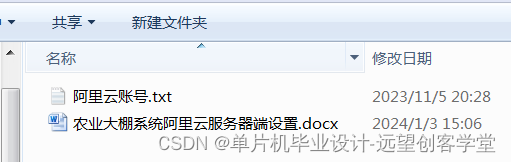

【单片机毕业设计选题24018】-基于STM32和阿里云的农业大棚系统

系统功能: 系统分为手动和自动模式,上电默认为自动模式,自动模式下系统根据采集到的传感器值 自动控制,温度过低后自动开启加热,湿度过高后自动开启通风,光照过低后自动开启补 光,水位过低后自动开启水泵…...

【计算机毕业设计】206校园顺路代送微信小程序

🙊作者简介:拥有多年开发工作经验,分享技术代码帮助学生学习,独立完成自己的项目或者毕业设计。 代码可以私聊博主获取。🌹赠送计算机毕业设计600个选题excel文件,帮助大学选题。赠送开题报告模板ÿ…...

9、PHP 实现调整数组顺序使奇数位于偶数前面

题目: 调整数组顺序使奇数位于偶数前面 描述: 输入一个整数数组,实现一个函数来调整该数组中数字的顺序,使得所有的奇数位于数组的前半部分, 所有的偶数位于位于数组的后半部分,并保证奇数和奇数ÿ…...

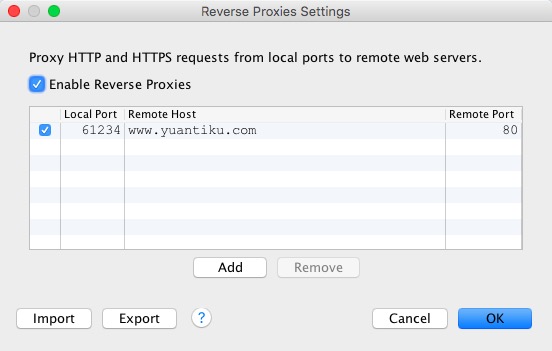

iOS开发工具-网络封包分析工具Charles

一、Charles简介 Charles 是在 Mac 下常用的网络封包截取工具,在做 移动开发时,我们为了调试与服务器端的网络通讯协议,常常需要截取网络封包来分析。 Charles 通过将自己设置成系统的网络访问代理服务器,使得所有的网络访问请求…...

7、PHP 实现矩形覆盖

题目: 矩形覆盖 描述: 我们可以用21的小矩形横着或者竖着去覆盖更大的矩形。 请问用n个21的小矩形无重叠地覆盖一个2*n的大矩形,总共有多少种方法? <?php function rectCover($number) {$prePreNum 1;$preNum 2;$temp 0;i…...

鸿蒙开发通信与连接:【@ohos.wifiext (WLAN)】

WLAN 说明: 本模块首批接口从API version 8开始支持。后续版本的新增接口,采用上角标单独标记接口的起始版本。 该文档中的接口只供非通用类型产品使用,如路由器等,对于常规类型产品,不应该使用这些接口。 导入模块 …...

Ps:脚本事件管理器

Ps菜单:文件/脚本/脚本事件管理器 Scripts/Script Events Manager 脚本事件管理器 Script Events Manager允许用户将特定的事件(如打开、存储或导出文件)与 JavaScript 脚本或 Photoshop 动作关联起来,以便在这些事件发生时自动触…...

redis哨兵模式下业务代码连接实现

目录 一:背景 二:实现过程 三:总结 一:背景 在哨兵模式下,真实的redis服务地址由一个固定ip转变为可以变化的ip,这样我们业务代码在连接redis的时候,就需要判断哪个主redis服务地址,哪个是从…...

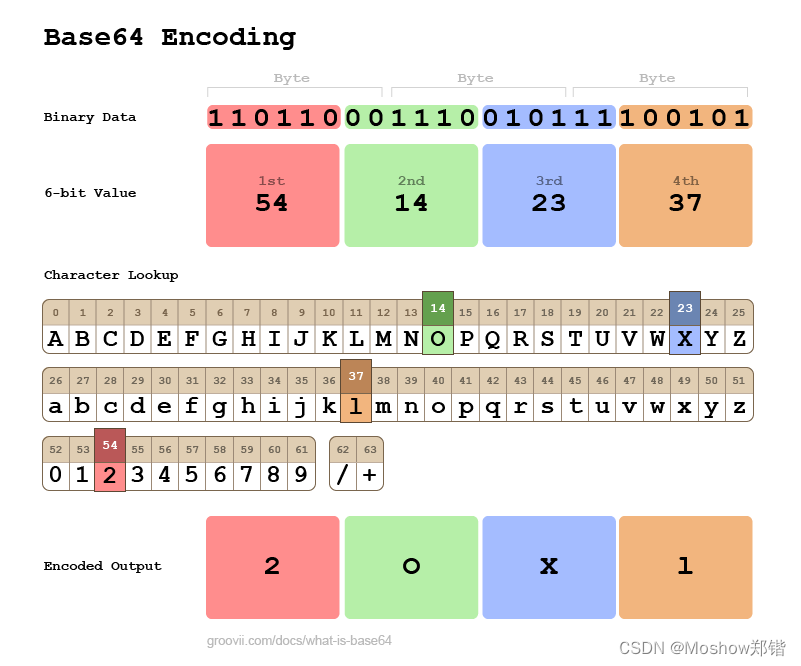

Java中将文件转换为Base64编码的字节码

在Java中,将文件转换为Base64编码的字节码通常涉及以下步骤: 读取文件内容到字节数组。使用java.util.Base64类对字节数组进行编码。 下面是一个简单的Java示例代码,演示如何实现这个过程: import java.io.File; import java.io…...

[特殊字符] 智能合约中的数据是如何在区块链中保持一致的?

🧠 智能合约中的数据是如何在区块链中保持一致的? 为什么所有区块链节点都能得出相同结果?合约调用这么复杂,状态真能保持一致吗?本篇带你从底层视角理解“状态一致性”的真相。 一、智能合约的数据存储在哪里…...

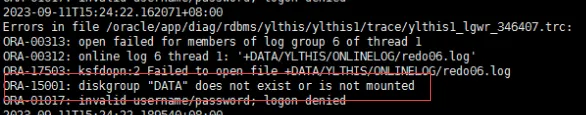

19c补丁后oracle属主变化,导致不能识别磁盘组

补丁后服务器重启,数据库再次无法启动 ORA01017: invalid username/password; logon denied Oracle 19c 在打上 19.23 或以上补丁版本后,存在与用户组权限相关的问题。具体表现为,Oracle 实例的运行用户(oracle)和集…...

【android bluetooth 框架分析 04】【bt-framework 层详解 1】【BluetoothProperties介绍】

1. BluetoothProperties介绍 libsysprop/srcs/android/sysprop/BluetoothProperties.sysprop BluetoothProperties.sysprop 是 Android AOSP 中的一种 系统属性定义文件(System Property Definition File),用于声明和管理 Bluetooth 模块相…...

DBAPI如何优雅的获取单条数据

API如何优雅的获取单条数据 案例一 对于查询类API,查询的是单条数据,比如根据主键ID查询用户信息,sql如下: select id, name, age from user where id #{id}API默认返回的数据格式是多条的,如下: {&qu…...

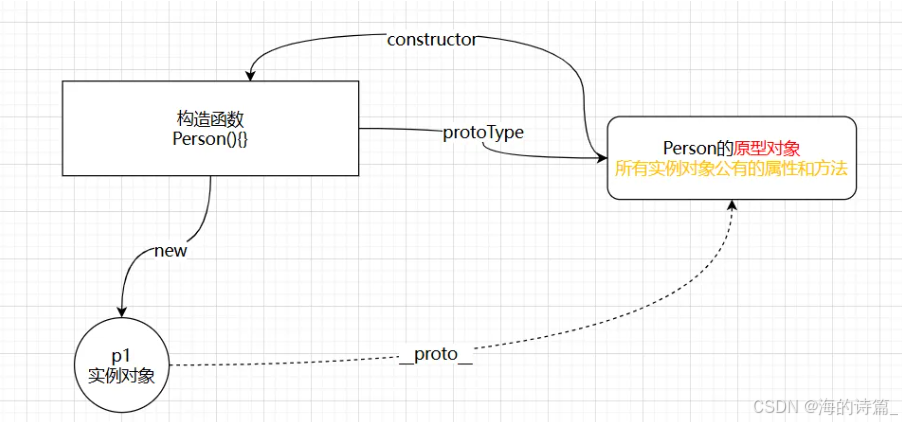

前端开发面试题总结-JavaScript篇(一)

文章目录 JavaScript高频问答一、作用域与闭包1.什么是闭包(Closure)?闭包有什么应用场景和潜在问题?2.解释 JavaScript 的作用域链(Scope Chain) 二、原型与继承3.原型链是什么?如何实现继承&a…...

Mac下Android Studio扫描根目录卡死问题记录

环境信息 操作系统: macOS 15.5 (Apple M2芯片)Android Studio版本: Meerkat Feature Drop | 2024.3.2 Patch 1 (Build #AI-243.26053.27.2432.13536105, 2025年5月22日构建) 问题现象 在项目开发过程中,提示一个依赖外部头文件的cpp源文件需要同步,点…...

企业如何增强终端安全?

在数字化转型加速的今天,企业的业务运行越来越依赖于终端设备。从员工的笔记本电脑、智能手机,到工厂里的物联网设备、智能传感器,这些终端构成了企业与外部世界连接的 “神经末梢”。然而,随着远程办公的常态化和设备接入的爆炸式…...

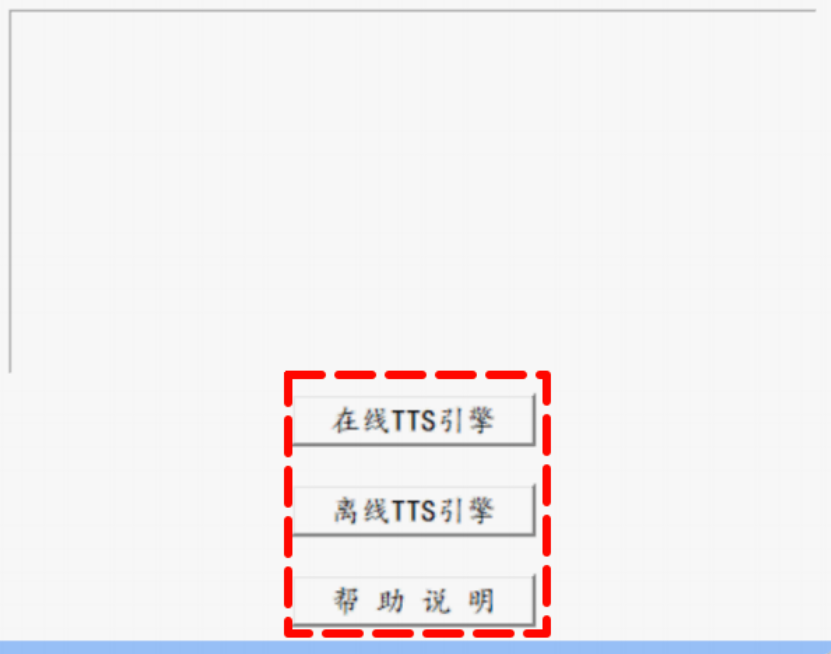

听写流程自动化实践,轻量级教育辅助

随着智能教育工具的发展,越来越多的传统学习方式正在被数字化、自动化所优化。听写作为语文、英语等学科中重要的基础训练形式,也迎来了更高效的解决方案。 这是一款轻量但功能强大的听写辅助工具。它是基于本地词库与可选在线语音引擎构建,…...

Java数值运算常见陷阱与规避方法

整数除法中的舍入问题 问题现象 当开发者预期进行浮点除法却误用整数除法时,会出现小数部分被截断的情况。典型错误模式如下: void process(int value) {double half = value / 2; // 整数除法导致截断// 使用half变量 }此时...

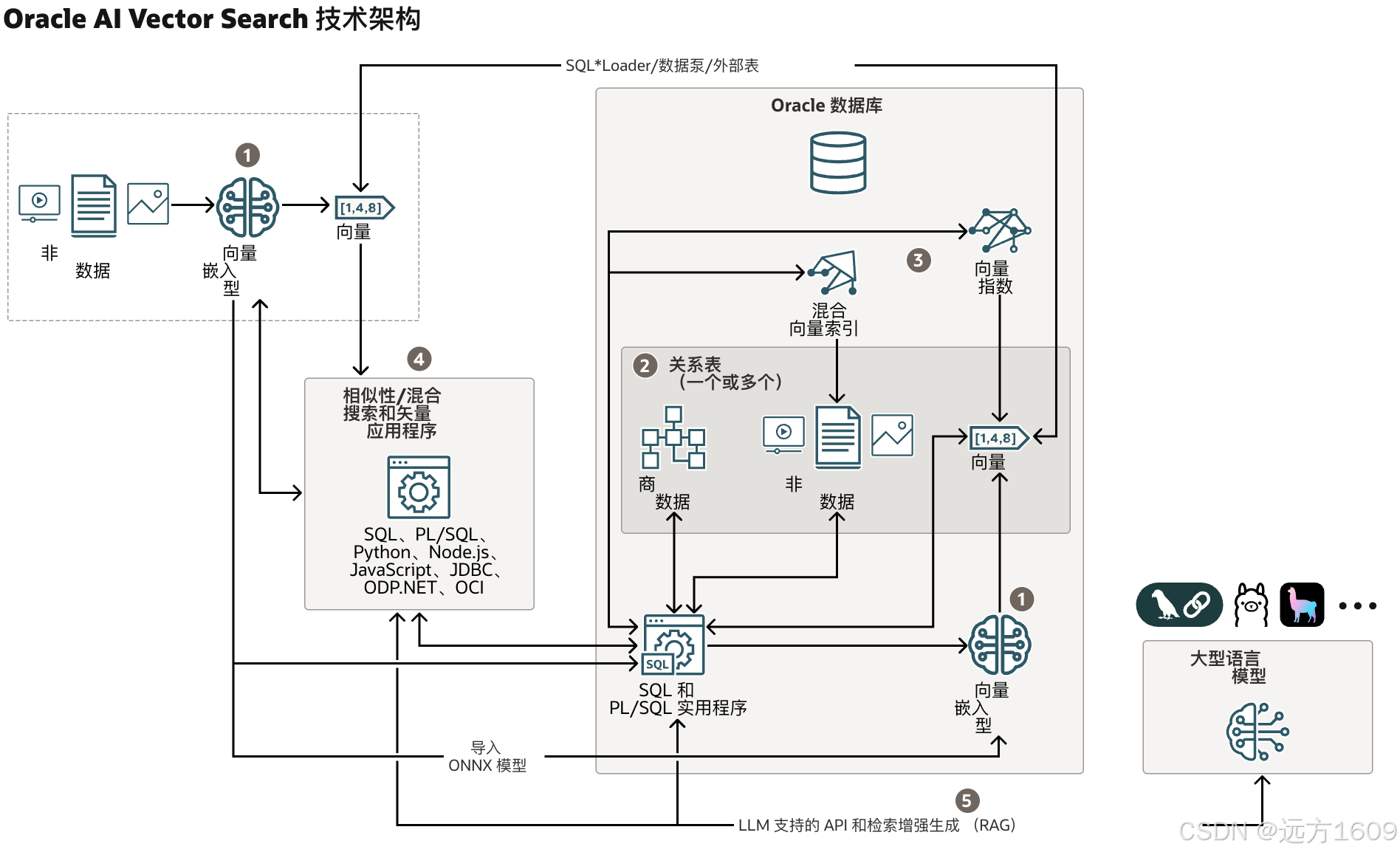

9-Oracle 23 ai Vector Search 特性 知识准备

很多小伙伴是不是参加了 免费认证课程(限时至2025/5/15) Oracle AI Vector Search 1Z0-184-25考试,都顺利拿到certified了没。 各行各业的AI 大模型的到来,传统的数据库中的SQL还能不能打,结构化和非结构的话数据如何和…...