pyflink 安装和测试

FPY Warning!

安装 apache-Flink

# pip install apache-Flink -i https://pypi.tuna.tsinghua.edu.cn/simple/

Looking in indexes: https://pypi.tuna.tsinghua.edu.cn/simple/

Collecting apache-FlinkDownloading https://pypi.tuna.tsinghua.edu.cn/packages/7f/a3/ad50270f0f9b7738922a170c47ec18061224a5afa0864e8749d27b1d5501/apache_flink-1.20.0-cp38-cp38-manylinux1_x86_64.whl (6.8 MB)|████████████████████████████████| 6.8 MB 2.3 MB/s eta 0:00:01

Collecting avro-python3!=1.9.2,>=1.8.1Downloading https://pypi.tuna.tsinghua.edu.cn/packages/cc/97/7a6970380ca8db9139a3cc0b0e3e0dd3e4bc584fb3644e1d06e71e1a55f0/avro-python3-1.10.2.tar.gz (38 kB)

Collecting ruamel.yaml>=0.18.4Downloading https://pypi.tuna.tsinghua.edu.cn/packages/73/67/8ece580cc363331d9a53055130f86b096bf16e38156e33b1d3014fffda6b/ruamel.yaml-0.18.6-py3-none-any.whl (117 kB)|████████████████████████████████| 117 kB 10.1 MB/s eta 0:00:01

Requirement already satisfied: python-dateutil<3,>=2.8.0 in /usr/local/lib/python3.8/dist-packages (from apache-Flink) (2.8.2)

Collecting numpy>=1.22.4Downloading https://pypi.tuna.tsinghua.edu.cn/packages/98/5d/5738903efe0ecb73e51eb44feafba32bdba2081263d40c5043568ff60faf/numpy-1.24.4-cp38-cp38-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (17.3 MB)|████████████████████████████████| 17.3 MB 2.3 MB/s eta 0:00:01

Requirement already satisfied: pytz>=2018.3 in /usr/local/lib/python3.8/dist-packages (from apache-Flink) (2022.2.1)

Collecting pemja==0.4.1; platform_system != "Windows"Downloading https://pypi.tuna.tsinghua.edu.cn/packages/23/4c/422c99d5d2b823309888714185dc4467a935a25fb1e265c604855d0ea4b9/pemja-0.4.1-cp38-cp38-manylinux1_x86_64.whl (325 kB)|████████████████████████████████| 325 kB 9.8 MB/s eta 0:00:01

Collecting py4j==0.10.9.7Downloading https://pypi.tuna.tsinghua.edu.cn/packages/10/30/a58b32568f1623aaad7db22aa9eafc4c6c194b429ff35bdc55ca2726da47/py4j-0.10.9.7-py2.py3-none-any.whl (200 kB)|████████████████████████████████| 200 kB 10.0 MB/s eta 0:00:01

Collecting protobuf>=3.19.0Downloading https://pypi.tuna.tsinghua.edu.cn/packages/c0/be/bac52549cab1aaab112d380b3f2a80a348ba7083a80bf4ff4be4fb5a6729/protobuf-5.28.1-cp38-abi3-manylinux2014_x86_64.whl (316 kB)|████████████████████████████████| 316 kB 10.0 MB/s eta 0:00:01

Collecting apache-beam<2.49.0,>=2.43.0Downloading https://pypi.tuna.tsinghua.edu.cn/packages/9d/1b/59d9717241170b707fbdd82fa74a676260e7fa03fecfa7fafd58f0c178e1/apache_beam-2.48.0-cp38-cp38-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (14.8 MB)|████████████████████████████████| 14.8 MB 2.2 MB/s eta 0:00:01

Collecting requests>=2.26.0Downloading https://pypi.tuna.tsinghua.edu.cn/packages/f9/9b/335f9764261e915ed497fcdeb11df5dfd6f7bf257d4a6a2a686d80da4d54/requests-2.32.3-py3-none-any.whl (64 kB)|████████████████████████████████| 64 kB 8.1 MB/s eta 0:00:01

Collecting cloudpickle>=2.2.0Downloading https://pypi.tuna.tsinghua.edu.cn/packages/96/43/dae06432d0c4b1dc9e9149ad37b4ca8384cf6eb7700cd9215b177b914f0a/cloudpickle-3.0.0-py3-none-any.whl (20 kB)

Collecting apache-flink-libraries<1.20.1,>=1.20.0Downloading https://pypi.tuna.tsinghua.edu.cn/packages/1f/31/80cfa34b6a53a5c19d66faf3e7d29cb0689a69c128a381e584863eb9669a/apache-flink-libraries-1.20.0.tar.gz (231.5 MB)|████████████████████████████████| 231.5 MB 84 kB/s eta 0:00:01

Collecting fastavro!=1.8.0,>=1.1.0Downloading https://pypi.tuna.tsinghua.edu.cn/packages/3a/6a/1998c619c6e59a35d0a5df49681f0cdfb6dbbeabb0d24d26e938143c655f/fastavro-1.9.7-cp38-cp38-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (3.2 MB)|████████████████████████████████| 3.2 MB 450 kB/s eta 0:00:01

Collecting pyarrow>=5.0.0Downloading https://pypi.tuna.tsinghua.edu.cn/packages/3f/08/bc497130789833de09e345e3ce4647e3ce86517c4f70f2144f0367ca378b/pyarrow-17.0.0-cp38-cp38-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (40.0 MB)|████████████████████████████████| 40.0 MB 1.3 MB/s eta 0:00:01

Collecting pandas>=1.3.0Downloading https://pypi.tuna.tsinghua.edu.cn/packages/f8/7f/5b047effafbdd34e52c9e2d7e44f729a0655efafb22198c45cf692cdc157/pandas-2.0.3-cp38-cp38-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (12.4 MB)|████████████████████████████████| 12.4 MB 1.2 MB/s eta 0:00:01

Collecting httplib2>=0.19.0Downloading https://pypi.tuna.tsinghua.edu.cn/packages/a8/6c/d2fbdaaa5959339d53ba38e94c123e4e84b8fbc4b84beb0e70d7c1608486/httplib2-0.22.0-py3-none-any.whl (96 kB)|████████████████████████████████| 96 kB 2.9 MB/s eta 0:00:011

Collecting ruamel.yaml.clib>=0.2.7; platform_python_implementation == "CPython" and python_version < "3.13"Downloading https://pypi.tuna.tsinghua.edu.cn/packages/22/fa/b2a8fd49c92693e9b9b6b11eef4c2a8aedaca2b521ab3e020aa4778efc23/ruamel.yaml.clib-0.2.8-cp38-cp38-manylinux_2_5_x86_64.manylinux1_x86_64.whl (596 kB)|████████████████████████████████| 596 kB 2.6 MB/s eta 0:00:01

Requirement already satisfied: six>=1.5 in /usr/lib/python3/dist-packages (from python-dateutil<3,>=2.8.0->apache-Flink) (1.14.0)

Collecting find-libpythonDownloading https://pypi.tuna.tsinghua.edu.cn/packages/1d/89/6b4624122d5c61a86e8aebcebd377866338b705ce4f115c45b046dc09b99/find_libpython-0.4.0-py3-none-any.whl (8.7 kB)

Collecting proto-plus<2,>=1.7.1Downloading https://pypi.tuna.tsinghua.edu.cn/packages/7c/6f/db31f0711c0402aa477257205ce7d29e86a75cb52cd19f7afb585f75cda0/proto_plus-1.24.0-py3-none-any.whl (50 kB)|████████████████████████████████| 50 kB 3.5 MB/s eta 0:00:011

Collecting grpcio!=1.48.0,<2,>=1.33.1Downloading https://pypi.tuna.tsinghua.edu.cn/packages/ae/44/f8975d2719dbf58d4a036f936b6c2adeddc7d2a10c2f7ca6ea87ab4c5086/grpcio-1.66.1-cp38-cp38-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (5.8 MB)|████████████████████████████████| 5.8 MB 1.3 MB/s eta 0:00:01

Collecting fasteners<1.0,>=0.3Downloading https://pypi.tuna.tsinghua.edu.cn/packages/61/bf/fd60001b3abc5222d8eaa4a204cd8c0ae78e75adc688f33ce4bf25b7fafa/fasteners-0.19-py3-none-any.whl (18 kB)

Collecting crcmod<2.0,>=1.7Downloading https://pypi.tuna.tsinghua.edu.cn/packages/6b/b0/e595ce2a2527e169c3bcd6c33d2473c1918e0b7f6826a043ca1245dd4e5b/crcmod-1.7.tar.gz (89 kB)|████████████████████████████████| 89 kB 5.2 MB/s eta 0:00:011

Requirement already satisfied: typing-extensions>=3.7.0 in /usr/local/lib/python3.8/dist-packages (from apache-beam<2.49.0,>=2.43.0->apache-Flink) (4.3.0)

Collecting pydot<2,>=1.2.0Downloading https://pypi.tuna.tsinghua.edu.cn/packages/ea/76/75b1bb82e9bad3e3d656556eaa353d8cd17c4254393b08ec9786ac8ed273/pydot-1.4.2-py2.py3-none-any.whl (21 kB)

Requirement already satisfied: regex>=2020.6.8 in /usr/local/lib/python3.8/dist-packages (from apache-beam<2.49.0,>=2.43.0->apache-Flink) (2022.10.31)

Collecting hdfs<3.0.0,>=2.1.0Downloading https://pypi.tuna.tsinghua.edu.cn/packages/29/c7/1be559eb10cb7cac0d26373f18656c8037553619ddd4098e50b04ea8b4ab/hdfs-2.7.3.tar.gz (43 kB)|████████████████████████████████| 43 kB 5.2 MB/s eta 0:00:011

Collecting orjson<4.0Downloading https://pypi.tuna.tsinghua.edu.cn/packages/25/13/a66f4873ed57832aab57dd8b49c91c4c22b35fb1fa0d1dce3bf8928f2fe0/orjson-3.10.7-cp38-cp38-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (141 kB)|████████████████████████████████| 141 kB 4.8 MB/s eta 0:00:01

Collecting objsize<0.7.0,>=0.6.1Downloading https://pypi.tuna.tsinghua.edu.cn/packages/ab/37/e5765c22a491e1cd23fb83059f73e478a2c45a464b2d61c98ef5a8d0681c/objsize-0.6.1-py3-none-any.whl (9.3 kB)

Collecting zstandard<1,>=0.18.0Downloading https://pypi.tuna.tsinghua.edu.cn/packages/1c/4b/be9f3f9ed33ff4d5e578cf167c16ac1d8542232d5e4831c49b615b5918a6/zstandard-0.23.0-cp38-cp38-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (5.4 MB)|████████████████████████████████| 5.4 MB 1.3 MB/s eta 0:00:01

Collecting pymongo<5.0.0,>=3.8.0Downloading https://pypi.tuna.tsinghua.edu.cn/packages/2a/72/77445354da27437534ee674faf55a2ef4bfc6ed9b28cbe743d6e7e4c2c61/pymongo-4.8.0-cp38-cp38-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (685 kB)|████████████████████████████████| 685 kB 5.0 MB/s eta 0:00:01

Collecting dill<0.3.2,>=0.3.1.1Downloading https://pypi.tuna.tsinghua.edu.cn/packages/c7/11/345f3173809cea7f1a193bfbf02403fff250a3360e0e118a1630985e547d/dill-0.3.1.1.tar.gz (151 kB)|████████████████████████████████| 151 kB 4.9 MB/s eta 0:00:01

Requirement already satisfied: idna<4,>=2.5 in /usr/lib/python3/dist-packages (from requests>=2.26.0->apache-Flink) (2.8)

Requirement already satisfied: urllib3<3,>=1.21.1 in /usr/lib/python3/dist-packages (from requests>=2.26.0->apache-Flink) (1.25.8)

Requirement already satisfied: certifi>=2017.4.17 in /usr/lib/python3/dist-packages (from requests>=2.26.0->apache-Flink) (2019.11.28)

Collecting charset-normalizer<4,>=2Downloading https://pypi.tuna.tsinghua.edu.cn/packages/3d/09/d82fe4a34c5f0585f9ea1df090e2a71eb9bb1e469723053e1ee9f57c16f3/charset_normalizer-3.3.2-cp38-cp38-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (141 kB)|████████████████████████████████| 141 kB 4.9 MB/s eta 0:00:01

Collecting tzdata>=2022.1Downloading https://pypi.tuna.tsinghua.edu.cn/packages/65/58/f9c9e6be752e9fcb8b6a0ee9fb87e6e7a1f6bcab2cdc73f02bb7ba91ada0/tzdata-2024.1-py2.py3-none-any.whl (345 kB)|████████████████████████████████| 345 kB 4.9 MB/s eta 0:00:01

Requirement already satisfied: pyparsing!=3.0.0,!=3.0.1,!=3.0.2,!=3.0.3,<4,>=2.4.2; python_version > "3.0" in /usr/local/lib/python3.8/dist-packages (from httplib2>=0.19.0->apache-Flink) (3.0.9)

Collecting docoptDownloading https://pypi.tuna.tsinghua.edu.cn/packages/a2/55/8f8cab2afd404cf578136ef2cc5dfb50baa1761b68c9da1fb1e4eed343c9/docopt-0.6.2.tar.gz (25 kB)

Collecting dnspython<3.0.0,>=1.16.0Downloading https://pypi.tuna.tsinghua.edu.cn/packages/87/a1/8c5287991ddb8d3e4662f71356d9656d91ab3a36618c3dd11b280df0d255/dnspython-2.6.1-py3-none-any.whl (307 kB)|████████████████████████████████| 307 kB 4.9 MB/s eta 0:00:01

Building wheels for collected packages: avro-python3, apache-flink-libraries, crcmod, hdfs, dill, docoptBuilding wheel for avro-python3 (setup.py) ... doneCreated wheel for avro-python3: filename=avro_python3-1.10.2-py3-none-any.whl size=44009 sha256=b7630bedfef2c8bd38772ef6e0f6f62520cfc6ee7fbc554f7b1b7ae64eb2229eStored in directory: /root/.cache/pip/wheels/6b/28/4f/3f68740c0cd12549e2ba9fcfad15913841346bc927af36903eBuilding wheel for apache-flink-libraries (setup.py) ... doneCreated wheel for apache-flink-libraries: filename=apache_flink_libraries-1.20.0-py2.py3-none-any.whl size=231628008 sha256=2a433e291e2192fa73efb6780bb4753f8c9ecddf7a33036afd02583a42bbc424Stored in directory: /root/.cache/pip/wheels/1d/6c/ea/da3305a119e44581bd4c0c0e92a376fd0615426227a071894dBuilding wheel for crcmod (setup.py) ... doneCreated wheel for crcmod: filename=crcmod-1.7-cp38-cp38-linux_x86_64.whl size=35981 sha256=60feb55c2aec53cb53b2c76013f7b37680467cb7c24e3e9e63e2ddcca37960c7Stored in directory: /root/.cache/pip/wheels/ee/bf/82/ac509f3b383e310a168c1da020cbc62d98c03a6c7c74babc63Building wheel for hdfs (setup.py) ... doneCreated wheel for hdfs: filename=hdfs-2.7.3-py3-none-any.whl size=34321 sha256=52a3f2b630c182293adaa98f1d54c73d6543155a6c8b536b723ba81bcd2c0c7cStored in directory: /root/.cache/pip/wheels/71/d9/87/dc2129ee8e18b4b82cfc6be6dbba6f5b7091a45cfa53b5855dBuilding wheel for dill (setup.py) ... doneCreated wheel for dill: filename=dill-0.3.1.1-py3-none-any.whl size=78530 sha256=8cb55478bc96ba4978d75fcc54972b21f8334d990691267a53e684c2c89eb1e9Stored in directory: /root/.cache/pip/wheels/8b/d5/ad/893b2f2db5de6f4c281a9d16abeb0618f5249620f845a857b4Building wheel for docopt (setup.py) ... doneCreated wheel for docopt: filename=docopt-0.6.2-py2.py3-none-any.whl size=13704 sha256=bf6de70865de15338a3da5fb54a47de343b677743fa989a2289bbf6557330136Stored in directory: /root/.cache/pip/wheels/93/51/4b/6e0f7cba524fbe1e9e973f4bc9be5f3ab1e38346d7d63505f4

Successfully built avro-python3 apache-flink-libraries crcmod hdfs dill docopt

ERROR: apache-beam 2.48.0 has requirement cloudpickle~=2.2.1, but you'll have cloudpickle 3.0.0 which is incompatible.

ERROR: apache-beam 2.48.0 has requirement protobuf<4.24.0,>=3.20.3, but you'll have protobuf 5.28.1 which is incompatible.

ERROR: apache-beam 2.48.0 has requirement pyarrow<12.0.0,>=3.0.0, but you'll have pyarrow 17.0.0 which is incompatible.

Installing collected packages: avro-python3, ruamel.yaml.clib, ruamel.yaml, numpy, find-libpython, pemja, py4j, protobuf, cloudpickle, proto-plus, grpcio, fastavro, fasteners, crcmod, charset-normalizer, requests, pyarrow, pydot, docopt, hdfs, orjson, objsize, zstandard, dnspython, pymongo, httplib2, dill, apache-beam, apache-flink-libraries, tzdata, pandas, apache-FlinkAttempting uninstall: numpyFound existing installation: numpy 1.21.6Uninstalling numpy-1.21.6:Successfully uninstalled numpy-1.21.6Attempting uninstall: requestsFound existing installation: requests 2.22.0Not uninstalling requests at /usr/lib/python3/dist-packages, outside environment /usrCan't uninstall 'requests'. No files were found to uninstall.Attempting uninstall: dillFound existing installation: dill 0.3.7Uninstalling dill-0.3.7:Successfully uninstalled dill-0.3.7Attempting uninstall: pandasFound existing installation: pandas 1.2.0Uninstalling pandas-1.2.0:Successfully uninstalled pandas-1.2.0

Successfully installed apache-Flink-1.20.0 apache-beam-2.48.0 apache-flink-libraries-1.20.0 avro-python3-1.10.2 charset-normalizer-3.3.2 cloudpickle-3.0.0 crcmod-1.7 dill-0.3.1.1 dnspython-2.6.1 docopt-0.6.2 fastavro-1.9.7 fasteners-0.19 find-libpython-0.4.0 grpcio-1.66.1 hdfs-2.7.3 httplib2-0.22.0 numpy-1.24.4 objsize-0.6.1 orjson-3.10.7 pandas-2.0.3 pemja-0.4.1 proto-plus-1.24.0 protobuf-5.28.1 py4j-0.10.9.7 pyarrow-17.0.0 pydot-1.4.2 pymongo-4.8.0 requests-2.32.3 ruamel.yaml-0.18.6 ruamel.yaml.clib-0.2.8 tzdata-2024.1 zstandard-0.23.0

pip 安装后自动会把 flink 也装上

# find / -name flink 2>/dev/null

/usr/local/lib/python3.8/dist-packages/apache_beam/examples/flink

/usr/local/lib/python3.8/dist-packages/apache_beam/io/flink

/usr/local/lib/python3.8/dist-packages/pyflink/bin/flink

/usr/local/lib/python3.8/dist-packages/pyflink/bin/flink 就是 flink 可执行文件

# /usr/local/lib/python3.8/dist-packages/pyflink/bin/flink run -hAction "run" compiles and runs a program.Syntax: run [OPTIONS] <jar-file> <arguments>"run" action options:-c,--class <classname> Class with the program entrypoint ("main()" method). Onlyneeded if the JAR file does notspecify the class in itsmanifest.-C,--classpath <url> Adds a URL to each user codeclassloader on all nodes in thecluster. The paths must specifya protocol (e.g. file://) and beaccessible on all nodes (e.g. bymeans of a NFS share). You canuse this option multiple timesfor specifying more than oneURL. The protocol must besupported by the {@linkjava.net.URLClassLoader}.-cm,--claimMode <arg> Defines how should we restorefrom the given savepoint.Supported options: [claim -claim ownership of the savepointand delete once it is subsumed,no_claim (default) - do notclaim ownership, the firstcheckpoint will not reuse anyfiles from the restored one,legacy (deprecated) - the oldbehaviour, do not assumeownership of the savepointfiles, but can reuse some sharedfiles.-d,--detached If present, runs the job indetached mode-n,--allowNonRestoredState Allow to skip savepoint statethat cannot be restored. Youneed to allow this if youremoved an operator from yourprogram that was part of theprogram when the savepoint wastriggered.-p,--parallelism <parallelism> The parallelism with which torun the program. Optional flagto override the default valuespecified in the configuration.-py,--python <pythonFile> Python script with the programentry point. The dependentresources can be configured withthe `--pyFiles` option.-pyarch,--pyArchives <arg> Add python archive files forjob. The archive files will beextracted to the workingdirectory of python UDF worker.For each archive file, a targetdirectory be specified. If thetarget directory name isspecified, the archive file willbe extracted to a directory withthe specified name. Otherwise,the archive file will beextracted to a directory withthe same name of the archivefile. The files uploaded viathis option are accessible viarelative path. '#' could be usedas the separator of the archivefile path and the targetdirectory name. Comma (',')could be used as the separatorto specify multiple archivefiles. This option can be usedto upload the virtualenvironment, the data files usedin Python UDF (e.g.,--pyArchivesfile:///tmp/py37.zip,file:///tmp/data.zip#data --pyExecutablepy37.zip/py37/bin/python). Thedata files could be accessed inPython UDF, e.g.: f =open('data/data.txt', 'r').-pyclientexec,--pyClientExecutable <arg> The path of the Pythoninterpreter used to launch thePython process when submittingthe Python jobs via "flink run"or compiling the Java/Scala jobscontaining Python UDFs.-pyexec,--pyExecutable <arg> Specify the path of the pythoninterpreter used to execute thepython UDF worker (e.g.:--pyExecutable/usr/local/bin/python3). Thepython UDF worker depends onPython 3.8+, Apache Beam(version == 2.43.0), Pip(version >= 20.3) and SetupTools(version >= 37.0.0). Pleaseensure that the specifiedenvironment meets the aboverequirements.-pyfs,--pyFiles <pythonFiles> Attach custom files for job. Thestandard resource file suffixessuch as .py/.egg/.zip/.whl ordirectory are all supported.These files will be added to thePYTHONPATH of both the localclient and the remote python UDFworker. Files suffixed with .zipwill be extracted and added toPYTHONPATH. Comma (',') could beused as the separator to specifymultiple files (e.g., --pyFilesfile:///tmp/myresource.zip,hdfs:///$namenode_address/myresource2.zip).-pym,--pyModule <pythonModule> Python module with the programentry point. This option must beused in conjunction with`--pyFiles`.-pypath,--pyPythonPath <arg> Specify the path of the pythoninstallation in workernodes.(e.g.: --pyPythonPath/python/lib64/python3.7/).Usercan specify multiple paths usingthe separator ":".-pyreq,--pyRequirements <arg> Specify a requirements.txt filewhich defines the third-partydependencies. These dependencieswill be installed and added tothe PYTHONPATH of the python UDFworker. A directory whichcontains the installationpackages of these dependenciescould be specified optionally.Use '#' as the separator if theoptional parameter exists (e.g.,--pyRequirementsfile:///tmp/requirements.txt#file:///tmp/cached_dir).-rm,--restoreMode <arg> This option is deprecated,please use 'claimMode' instead.-s,--fromSavepoint <savepointPath> Path to a savepoint to restorethe job from (for examplehdfs:///flink/savepoint-1537).-sae,--shutdownOnAttachedExit If the job is submitted inattached mode, perform abest-effort cluster shutdownwhen the CLI is terminatedabruptly, e.g., in response to auser interrupt, such as typingCtrl + C.Options for Generic CLI mode:-D <property=value> Allows specifying multiple generic configurationoptions. The available options can be found athttps://nightlies.apache.org/flink/flink-docs-stable/ops/config.html-e,--executor <arg> DEPRECATED: Please use the -t option instead which isalso available with the "Application Mode".The name of the executor to be used for executing thegiven job, which is equivalent to the"execution.target" config option. The currentlyavailable executors are: "remote", "local","kubernetes-session", "yarn-per-job" (deprecated),"yarn-session".-t,--target <arg> The deployment target for the given application,which is equivalent to the "execution.target" configoption. For the "run" action the currently availabletargets are: "remote", "local", "kubernetes-session","yarn-per-job" (deprecated), "yarn-session". For the"run-application" action the currently availabletargets are: "kubernetes-application".Options for yarn-cluster mode:-m,--jobmanager <arg> Set to yarn-cluster to use YARN executionmode.-yid,--yarnapplicationId <arg> Attach to running YARN session-z,--zookeeperNamespace <arg> Namespace to create the Zookeepersub-paths for high availability modeOptions for default mode:-D <property=value> Allows specifying multiple genericconfiguration options. The availableoptions can be found athttps://nightlies.apache.org/flink/flink-docs-stable/ops/config.html-m,--jobmanager <arg> Address of the JobManager to which toconnect. Use this flag to connect to adifferent JobManager than the one specifiedin the configuration. Attention: Thisoption is respected only if thehigh-availability configuration is NONE.-z,--zookeeperNamespace <arg> Namespace to create the Zookeeper sub-pathsfor high availability modeflink run 发送作业

!cd /usr/local/lib/python3.8/dist-packages/pyflink/ && ./bin/flink run \--jobmanager <<jobmanagerHost>>:8081 \--python examples/table/word_count.py

如果你的执行环境没有python或者安装的是python3,会报错:Cannot run program "python": error=2, No such file or directory

org.apache.flink.client.program.ProgramAbortException: java.io.IOException: Cannot run program "python": error=2, No such file or directoryat org.apache.flink.client.python.PythonDriver.main(PythonDriver.java:134)at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)at java.lang.reflect.Method.invoke(Method.java:498)at org.apache.flink.client.program.PackagedProgram.callMainMethod(PackagedProgram.java:356)at org.apache.flink.client.program.PackagedProgram.invokeInteractiveModeForExecution(PackagedProgram.java:223)at org.apache.flink.client.ClientUtils.executeProgram(ClientUtils.java:113)at org.apache.flink.client.cli.CliFrontend.executeProgram(CliFrontend.java:1026)at org.apache.flink.client.cli.CliFrontend.run(CliFrontend.java:247)at org.apache.flink.client.cli.CliFrontend.parseAndRun(CliFrontend.java:1270)at org.apache.flink.client.cli.CliFrontend.lambda$mainInternal$10(CliFrontend.java:1367)at org.apache.flink.runtime.security.contexts.NoOpSecurityContext.runSecured(NoOpSecurityContext.java:28)at org.apache.flink.client.cli.CliFrontend.mainInternal(CliFrontend.java:1367)at org.apache.flink.client.cli.CliFrontend.main(CliFrontend.java:1335)

Caused by: java.io.IOException: Cannot run program "python": error=2, No such file or directoryat java.lang.ProcessBuilder.start(ProcessBuilder.java:1048)at org.apache.flink.client.python.PythonEnvUtils.startPythonProcess(PythonEnvUtils.java:378)at org.apache.flink.client.python.PythonEnvUtils.launchPy4jPythonClient(PythonEnvUtils.java:492)at org.apache.flink.client.python.PythonDriver.main(PythonDriver.java:92)... 14 more

Caused by: java.io.IOException: error=2, No such file or directoryat java.lang.UNIXProcess.forkAndExec(Native Method)at java.lang.UNIXProcess.<init>(UNIXProcess.java:247)at java.lang.ProcessImpl.start(ProcessImpl.java:134)at java.lang.ProcessBuilder.start(ProcessBuilder.java:1029)... 17 more

略施小计,创建软链接指向现有python3即可

!ln -s /usr/bin/python3 /usr/bin/python

在此执行,job被提交到flink集群

相关文章:

pyflink 安装和测试

FPY Warning! 安装 apache-Flink # pip install apache-Flink -i https://pypi.tuna.tsinghua.edu.cn/simple/ Looking in indexes: https://pypi.tuna.tsinghua.edu.cn/simple/ Collecting apache-FlinkDownloading https://pypi.tuna.tsinghua.edu.cn/packages/7f/a3/ad502…...

《网络故障处理案例:公司网络突然中断》

网络故障处理案例:公司网络突然中断 一、故障背景 某工作日上午,一家拥有 500 名员工的公司突然出现整个网络中断的情况。员工们无法访问互联网、内部服务器和共享文件,严重影响了工作效率。 二、故障现象 1. 所有员工的电脑…...

JavaSE:9、数组

1、一维数组 初始化 import com.test.*;public class Main {public static void main(String [] argv){int a[]{1,2};int b[]new int[]{1,0,2};// int b[]new int[3]{1,2,3}; ERROR 要么指定长度要么大括号里初始化数据算长度int[] c{1,2};int d[]new int[10];} }基本类型…...

【裸机装机系列】2.kali(ubuntu)-裸机安装kali并进行磁盘分区-2024.9最新

【前言】 2024年为什么弃用ubuntu,请参考我写的另一篇博文:为什么不用ubuntu,而选择基于debian的kali操作系统-2024.9最新 【镜像下载】 1、镜像下载地址 https://www.kali.org/get-kali/选择installer-image,进入界面下载相应的ISO文件 我…...

解决:Vue 中 debugger 不生效

目录 1,问题2,解决2.1,修改 webpack 配置2.2,修改浏览器设置 1,问题 在 Vue 项目中,可以使用 debugger 在浏览器中开启调试。但有时却不生效。 2,解决 2.1,修改 webpack 配置 通…...

Mac笔记本上查看/user/目录下的文件的几种方法

在Mac笔记本上查看/user/下的文件,可以通过多种方法实现。以下是一些常见的方法: 一、使用Finder 打开Finder:点击Dock栏中的Finder图标,或者使用快捷键Command F。 导航到用户目录: 在Finder的菜单栏中࿰…...

工程师 - ACPI和ACPICA的区别

ACPI(高级配置和电源接口)和 ACPICA(ACPI 组件架构)密切相关,但在系统电源管理和配置方面却有不同的作用。以下是它们的区别: ACPI(高级配置和电源接口) - 定义: ACPI 是…...

一文快速上手-create-vue脚手架

文章目录 初识 create-vuecreate-vue新建项目Vue.js 3 项目目录结构项目的运行和打包vite.config.js文件解析其他:webpack和Vite的区别 初识 create-vue create-vue类似于Vue CLI脚手架,可以快速创建vuejs 3项目,create-vue基于Vite。Vite支…...

rcs文件和登录部分与密码解析)

笔记整理—内核!启动!—kernel部分(7)rcs文件和登录部分与密码解析

该文件的位置在/etc/init.d/rcs,前文说过这个是一个配置文件,最开始的地方首先就是PATH相关的用export导出相关的PATH做环境变量,将可执行路径导为PATH执行时就不用写全路径了,该位置的PATH路径导出了/bin、/sbin、/usr/bin、/usr…...

)

朴素贝叶斯 (Naive Bayes)

朴素贝叶斯 (Naive Bayes) 通俗易懂算法 朴素贝叶斯(Naive Bayes)是一种基于概率统计的分类算法。它的核心思想是通过特征的条件独立性假设来简化计算复杂度,将复杂的联合概率分布分解为特征的独立概率分布之积。 基本思想 朴素贝叶斯基于…...

高德2.0 多边形覆盖物无法选中编辑

多边形覆盖物无法选中编辑。先检查一下数据的类型得是<number[]>,里面是字符串的虽然显示没问题,但是不能选中编辑。 (在项目中排查了加载时机,事件监听…等等种种原因,就是没发现问题。突然想到可能是数据就有问题…...

时序最佳入门代码|基于pytorch的LSTM天气预测及数据分析

前言 在本篇文章,我们基于pytorch框架,构造了LSTM模型进行天气预测,并对数据进行了可视化分析,非常值得入门学习。该数据集提供了2013年1月1日至2017年4月24日在印度德里市的数据。其中包含的4个参数是平均温度(meant…...

85-MySQL怎么判断要不要加索引

在MySQL中,决定是否为表中的列添加索引通常基于查询性能的考量。以下是一些常见的情况和策略: 查询频繁且对性能有影响的列:如果某个列经常用于查询条件,且没有创建索引,查询性能可能会下降。 在WHERE、JOIN和ORDER B…...

)

车载软件架构 --- SOA设计与应用(中)

我是穿拖鞋的汉子,魔都中坚持长期主义的汽车电子工程师。 老规矩,分享一段喜欢的文字,避免自己成为高知识低文化的工程师: 屏蔽力是信息过载时代一个人的特殊竞争力,任何消耗你的人和事,多看一眼都是你的不对。非必要不费力证明自己,无利益不试图说服别人,是精神上的节…...

MATLAB求解微分方程和微分方程组的详细分析

目录 引言 微分方程的定义 MATLAB求解常微分方程 参数分析: MATLAB求解偏微分方程 刚性和非刚性问题 总结 引言 微分方程在物理、工程、经济和生物等多个领域有着广泛的应用。它们用于描述系统中变量与其导数之间的关系,通过这些方程可以解释和预…...

Sybase「退役」在即,某公共卫生机构如何实现 SAP Sybase 到 PostgreSQL 的持续、无缝数据迁移?

使用 TapData,化繁为简,摆脱手动搭建、维护数据管道的诸多烦扰,轻量替代 OGG, Kettle 等同步工具,以及基于 Kafka 的 ETL 解决方案,「CDC 流处理 数据集成」组合拳,加速仓内数据流转,帮助企业…...

如何通过Chrome浏览器轻松获取视频网站的TS文件

在当今这个信息爆炸的时代,视频内容成为了我们获取知识和娱乐的重要方式。然而,许多视频网站出于版权保护等原因,往往限制用户直接下载视频。今天,我将教你如何利用Chrome浏览器轻松下载视频网站的TS文件,甚至批量下载…...

Linux下进程间的通信--共享内存

共享内存概述: 共享内存是进程间通信的一种方式,它允许两个或多个进程共享一个给定的存储区。共享内存是最快的一种IPC形式,因为它允许进程直接对内存进行读写操作,而不需要数据在进程之间复制。 共享内存是进程间通信ÿ…...

Big Data 流处理框架 Flink

Big Data 流处理框架 Flink 什么是 FlinkFlink 的主要特性典型应用场景 Amazon Elastic MapReduce (EMR) VS Flink架构和运行时环境实时处理能力开发和编程模型操作和管理应用场景总结 Flink 支持的数据源Flink 如何消费 AWS SQS 数据源自定义 Source FunctionFlink Connector …...

校园水电费管理微信小程序的设计与实现+ssm(lw+演示+源码+运行)

校园水电费管理小程序 摘 要 随着社会的发展,社会的方方面面都在利用信息化时代的优势。互联网的优势和普及使得各种系统的开发成为必需。 本文以实际运用为开发背景,运用软件工程原理和开发方法,它主要是采用java语言技术和mysql数据库来…...

地震勘探——干扰波识别、井中地震时距曲线特点

目录 干扰波识别反射波地震勘探的干扰波 井中地震时距曲线特点 干扰波识别 有效波:可以用来解决所提出的地质任务的波;干扰波:所有妨碍辨认、追踪有效波的其他波。 地震勘探中,有效波和干扰波是相对的。例如,在反射波…...

智慧医疗能源事业线深度画像分析(上)

引言 医疗行业作为现代社会的关键基础设施,其能源消耗与环境影响正日益受到关注。随着全球"双碳"目标的推进和可持续发展理念的深入,智慧医疗能源事业线应运而生,致力于通过创新技术与管理方案,重构医疗领域的能源使用模式。这一事业线融合了能源管理、可持续发…...

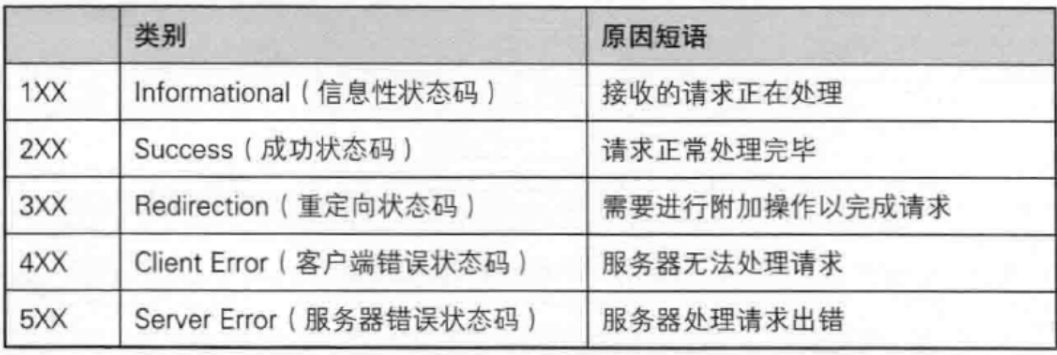

【JavaEE】-- HTTP

1. HTTP是什么? HTTP(全称为"超文本传输协议")是一种应用非常广泛的应用层协议,HTTP是基于TCP协议的一种应用层协议。 应用层协议:是计算机网络协议栈中最高层的协议,它定义了运行在不同主机上…...

MongoDB学习和应用(高效的非关系型数据库)

一丶 MongoDB简介 对于社交类软件的功能,我们需要对它的功能特点进行分析: 数据量会随着用户数增大而增大读多写少价值较低非好友看不到其动态信息地理位置的查询… 针对以上特点进行分析各大存储工具: mysql:关系型数据库&am…...

中南大学无人机智能体的全面评估!BEDI:用于评估无人机上具身智能体的综合性基准测试

作者:Mingning Guo, Mengwei Wu, Jiarun He, Shaoxian Li, Haifeng Li, Chao Tao单位:中南大学地球科学与信息物理学院论文标题:BEDI: A Comprehensive Benchmark for Evaluating Embodied Agents on UAVs论文链接:https://arxiv.…...

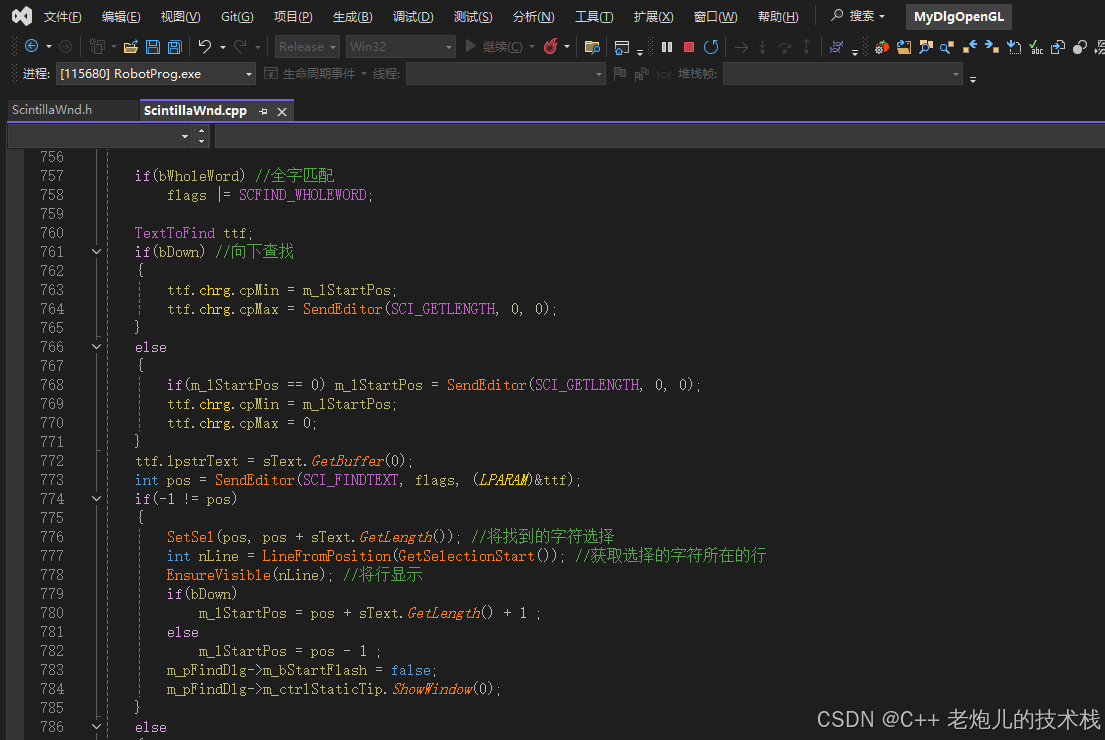

visual studio 2022更改主题为深色

visual studio 2022更改主题为深色 点击visual studio 上方的 工具-> 选项 在选项窗口中,选择 环境 -> 常规 ,将其中的颜色主题改成深色 点击确定,更改完成...

AtCoder 第409场初级竞赛 A~E题解

A Conflict 【题目链接】 原题链接:A - Conflict 【考点】 枚举 【题目大意】 找到是否有两人都想要的物品。 【解析】 遍历两端字符串,只有在同时为 o 时输出 Yes 并结束程序,否则输出 No。 【难度】 GESP三级 【代码参考】 #i…...

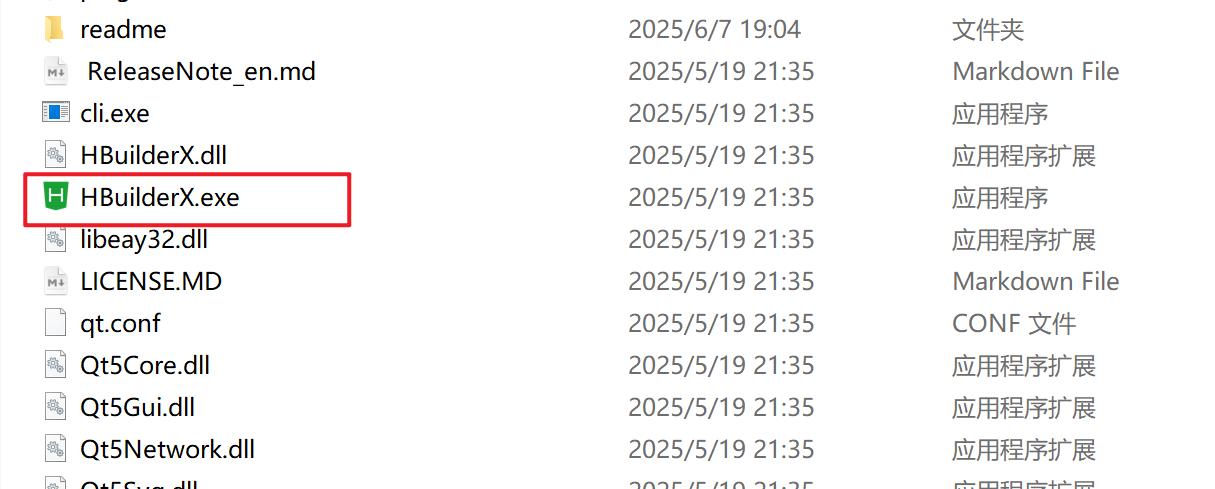

HBuilderX安装(uni-app和小程序开发)

下载HBuilderX 访问官方网站:https://www.dcloud.io/hbuilderx.html 根据您的操作系统选择合适版本: Windows版(推荐下载标准版) Windows系统安装步骤 运行安装程序: 双击下载的.exe安装文件 如果出现安全提示&…...

数据库分批入库

今天在工作中,遇到一个问题,就是分批查询的时候,由于批次过大导致出现了一些问题,一下是问题描述和解决方案: 示例: // 假设已有数据列表 dataList 和 PreparedStatement pstmt int batchSize 1000; // …...

可以参考以下方法:)

根据万维钢·精英日课6的内容,使用AI(2025)可以参考以下方法:

根据万维钢精英日课6的内容,使用AI(2025)可以参考以下方法: 四个洞见 模型已经比人聪明:以ChatGPT o3为代表的AI非常强大,能运用高级理论解释道理、引用最新学术论文,生成对顶尖科学家都有用的…...