开源模型应用落地-glm模型小试-glm-4-9b-chat-vLLM集成(四)

一、前言

GLM-4是智谱AI团队于2024年1月16日发布的基座大模型,旨在自动理解和规划用户的复杂指令,并能调用网页浏览器。其功能包括数据分析、图表创建、PPT生成等,支持128K的上下文窗口,使其在长文本处理和精度召回方面表现优异,且在中文对齐能力上超过GPT-4。与之前的GLM系列产品相比,GLM-4在各项性能上提高了60%,并且在指令跟随和多模态功能上有显著强化,适合于多种应用场景。尽管在某些领域仍逊于国际一流模型,GLM-4的中文处理能力使其在国内大模型中占据领先地位。该模型的研发历程自2020年始,经过多次迭代和改进,最终构建出这一高性能的AI系统。

在开源模型应用落地-glm模型小试-glm-4-9b-chat-快速体验(一)已经掌握了glm-4-9b-chat的基本入门。

在开源模型应用落地-glm模型小试-glm-4-9b-chat-批量推理(二)已经掌握了glm-4-9b-chat的批量推理。

在开源模型应用落地-glm模型小试-glm-4-9b-chat-Gradio集成(三)已经掌握了如何集成Gradio进行页面交互。

本篇将介绍如何集成vLLM进行推理加速。

二、术语

2.1.GLM-4-9B

是智谱 AI 推出的一个开源预训练模型,属于 GLM-4 系列。它于 2024 年 6 月 6 日发布,专为满足高效能语言理解和生成任务而设计,并支持最高 1M(约两百万字)的上下文输入。该模型拥有更强的基础能力,支持26种语言,并且在多模态能力上首次实现了显著进展。

GLM-4-9B的基础能力包括:

- 中英文综合性能提升 40%,在特别的中文对齐能力、指令遵从和工程代码等任务中显著增强

- 较 Llama 3 8B 的性能提升,尤其在数学问题解决和代码编写等复杂任务中表现优越

- 增强的函数调用能力,提升了 40% 的性能

- 支持多轮对话,还支持网页浏览、代码执行、自定义工具调用等高级功能,能够快速处理大量信息并给出高质量的回答

2.2.GLM-4-9B-Chat

是智谱 AI 在 GLM-4-9B 系列中推出的对话版本模型。它设计用于处理多轮对话,并具有一些高级功能,使其在自然语言处理任务中更加高效和灵活。

2.3.vLLM

vLLM是一个开源的大模型推理加速框架,通过PagedAttention高效地管理attention中缓存的张量,实现了比HuggingFace Transformers高14-24倍的吞吐量。

三、前置条件

3.1.基础环境及前置条件

1. 操作系统:centos7

2. NVIDIA Tesla V100 32GB CUDA Version: 12.2

3.最低硬件要求

3.2.下载模型

huggingface:

https://huggingface.co/THUDM/glm-4-9b-chat/tree/main

ModelScope:

魔搭社区

使用git-lfs方式下载示例:

3.3.创建虚拟环境

conda create --name glm4 python=3.10

conda activate glm43.4.安装依赖库

pip install torch>=2.5.0

pip install torchvision>=0.20.0

pip install transformers>=4.46.0

pip install huggingface-hub>=0.25.1

pip install sentencepiece>=0.2.0

pip install jinja2>=3.1.4

pip install pydantic>=2.9.2

pip install timm>=1.0.9

pip install tiktoken>=0.7.0

pip install numpy==1.26.4

pip install accelerate>=1.0.1

pip install sentence_transformers>=3.1.1

pip install openai>=1.51.0

pip install einops>=0.8.0

pip install pillow>=10.4.0

pip install sse-starlette>=2.1.3

pip install bitsandbytes>=0.43.3# using with VLLM Framework

pip install vllm>=0.6.3四、技术实现

4.1.vLLM服务端实现

# -*- coding: utf-8 -*-

import time

from asyncio.log import logger

import re

import uvicorn

import gc

import json

import torch

import random

import string

from vllm import SamplingParams, AsyncEngineArgs, AsyncLLMEngine

from fastapi import FastAPI, HTTPException, Response

from fastapi.middleware.cors import CORSMiddleware

from contextlib import asynccontextmanager

from typing import List, Literal, Optional, Union

from pydantic import BaseModel, Field

from transformers import AutoTokenizer, LogitsProcessor

from sse_starlette.sse import EventSourceResponseEventSourceResponse.DEFAULT_PING_INTERVAL = 1000MAX_MODEL_LENGTH = 8192@asynccontextmanager

async def lifespan(app: FastAPI):yieldif torch.cuda.is_available():torch.cuda.empty_cache()torch.cuda.ipc_collect()app = FastAPI(lifespan=lifespan)app.add_middleware(CORSMiddleware,allow_origins=["*"],allow_credentials=True,allow_methods=["*"],allow_headers=["*"],

)def generate_id(prefix: str, k=29) -> str:suffix = ''.join(random.choices(string.ascii_letters + string.digits, k=k))return f"{prefix}{suffix}"class ModelCard(BaseModel):id: str = ""object: str = "model"created: int = Field(default_factory=lambda: int(time.time()))owned_by: str = "owner"root: Optional[str] = Noneparent: Optional[str] = Nonepermission: Optional[list] = Noneclass ModelList(BaseModel):object: str = "list"data: List[ModelCard] = ["glm-4"]class FunctionCall(BaseModel):name: Optional[str] = Nonearguments: Optional[str] = Noneclass ChoiceDeltaToolCallFunction(BaseModel):name: Optional[str] = Nonearguments: Optional[str] = Noneclass UsageInfo(BaseModel):prompt_tokens: int = 0total_tokens: int = 0completion_tokens: Optional[int] = 0class ChatCompletionMessageToolCall(BaseModel):index: Optional[int] = 0id: Optional[str] = Nonefunction: FunctionCalltype: Optional[Literal["function"]] = 'function'class ChatMessage(BaseModel):# “function” 字段解释:# 使用较老的OpenAI API版本需要注意在这里添加 function 字段并在 process_messages函数中添加相应角色转换逻辑为 observationrole: Literal["user", "assistant", "system", "tool"]content: Optional[str] = Nonefunction_call: Optional[ChoiceDeltaToolCallFunction] = Nonetool_calls: Optional[List[ChatCompletionMessageToolCall]] = Noneclass DeltaMessage(BaseModel):role: Optional[Literal["user", "assistant", "system"]] = Nonecontent: Optional[str] = Nonefunction_call: Optional[ChoiceDeltaToolCallFunction] = Nonetool_calls: Optional[List[ChatCompletionMessageToolCall]] = Noneclass ChatCompletionResponseChoice(BaseModel):index: intmessage: ChatMessagefinish_reason: Literal["stop", "length", "tool_calls"]class ChatCompletionResponseStreamChoice(BaseModel):delta: DeltaMessagefinish_reason: Optional[Literal["stop", "length", "tool_calls"]]index: intclass ChatCompletionResponse(BaseModel):model: strid: Optional[str] = Field(default_factory=lambda: generate_id('chatcmpl-', 29))object: Literal["chat.completion", "chat.completion.chunk"]choices: List[Union[ChatCompletionResponseChoice, ChatCompletionResponseStreamChoice]]created: Optional[int] = Field(default_factory=lambda: int(time.time()))system_fingerprint: Optional[str] = Field(default_factory=lambda: generate_id('fp_', 9))usage: Optional[UsageInfo] = Noneclass ChatCompletionRequest(BaseModel):model: strmessages: List[ChatMessage]temperature: Optional[float] = 0.8top_p: Optional[float] = 0.8max_tokens: Optional[int] = Nonestream: Optional[bool] = Falsetools: Optional[Union[dict, List[dict]]] = Nonetool_choice: Optional[Union[str, dict]] = Nonerepetition_penalty: Optional[float] = 1.1class InvalidScoreLogitsProcessor(LogitsProcessor):def __call__(self, input_ids: torch.LongTensor, scores: torch.FloatTensor) -> torch.FloatTensor:if torch.isnan(scores).any() or torch.isinf(scores).any():scores.zero_()scores[..., 5] = 5e4return scoresdef process_response(output: str, tools: dict | List[dict] = None, use_tool: bool = False) -> Union[str, dict]:lines = output.strip().split("\n")arguments_json = Nonespecial_tools = ["cogview", "simple_browser"]tools = {tool['function']['name'] for tool in tools} if tools else {}if len(lines) >= 2 and lines[1].startswith("{"):function_name = lines[0].strip()arguments = "\n".join(lines[1:]).strip()if function_name in tools or function_name in special_tools:try:arguments_json = json.loads(arguments)is_tool_call = Trueexcept json.JSONDecodeError:is_tool_call = function_name in special_toolsif is_tool_call and use_tool:content = {"name": function_name,"arguments": json.dumps(arguments_json if isinstance(arguments_json, dict) else arguments,ensure_ascii=False)}if function_name == "simple_browser":search_pattern = re.compile(r'search\("(.+?)"\s*,\s*recency_days\s*=\s*(\d+)\)')match = search_pattern.match(arguments)if match:content["arguments"] = json.dumps({"query": match.group(1),"recency_days": int(match.group(2))}, ensure_ascii=False)elif function_name == "cogview":content["arguments"] = json.dumps({"prompt": arguments}, ensure_ascii=False)return contentreturn output.strip()@torch.inference_mode()

async def generate_stream_glm4(params):messages = params["messages"]tools = params["tools"]tool_choice = params["tool_choice"]temperature = float(params.get("temperature", 1.0))repetition_penalty = float(params.get("repetition_penalty", 1.0))top_p = float(params.get("top_p", 1.0))max_new_tokens = int(params.get("max_tokens", 8192))messages = process_messages(messages, tools=tools, tool_choice=tool_choice)inputs = tokenizer.apply_chat_template(messages, add_generation_prompt=True, tokenize=False)params_dict = {"n": 1,"best_of": 1,"presence_penalty": 1.0,"frequency_penalty": 0.0,"temperature": temperature,"top_p": top_p,"top_k": -1,"repetition_penalty": repetition_penalty,"stop_token_ids": [151329, 151336, 151338],"ignore_eos": False,"max_tokens": max_new_tokens,"logprobs": None,"prompt_logprobs": None,"skip_special_tokens": True,}sampling_params = SamplingParams(**params_dict)async for output in engine.generate(prompt=inputs, sampling_params=sampling_params, request_id=f"{time.time()}"):output_len = len(output.outputs[0].token_ids)input_len = len(output.prompt_token_ids)ret = {"text": output.outputs[0].text,"usage": {"prompt_tokens": input_len,"completion_tokens": output_len,"total_tokens": output_len + input_len},"finish_reason": output.outputs[0].finish_reason,}yield retgc.collect()torch.cuda.empty_cache()def process_messages(messages, tools=None, tool_choice="none"):_messages = messagesprocessed_messages = []msg_has_sys = Falsedef filter_tools(tool_choice, tools):function_name = tool_choice.get('function', {}).get('name', None)if not function_name:return []filtered_tools = [tool for tool in toolsif tool.get('function', {}).get('name') == function_name]return filtered_toolsif tool_choice != "none":if isinstance(tool_choice, dict):tools = filter_tools(tool_choice, tools)if tools:processed_messages.append({"role": "system","content": None,"tools": tools})msg_has_sys = Trueif isinstance(tool_choice, dict) and tools:processed_messages.append({"role": "assistant","metadata": tool_choice["function"]["name"],"content": ""})for m in _messages:role, content, func_call = m.role, m.content, m.function_calltool_calls = getattr(m, 'tool_calls', None)if role == "function":processed_messages.append({"role": "observation","content": content})elif role == "tool":processed_messages.append({"role": "observation","content": content,"function_call": True})elif role == "assistant":if tool_calls:for tool_call in tool_calls:processed_messages.append({"role": "assistant","metadata": tool_call.function.name,"content": tool_call.function.arguments})else:for response in content.split("\n"):if "\n" in response:metadata, sub_content = response.split("\n", maxsplit=1)else:metadata, sub_content = "", responseprocessed_messages.append({"role": role,"metadata": metadata,"content": sub_content.strip()})else:if role == "system" and msg_has_sys:msg_has_sys = Falsecontinueprocessed_messages.append({"role": role, "content": content})if not tools or tool_choice == "none":for m in _messages:if m.role == 'system':processed_messages.insert(0, {"role": m.role, "content": m.content})breakreturn processed_messages@app.get("/health")

async def health() -> Response:"""Health check."""return Response(status_code=200)@app.get("/v1/models", response_model=ModelList)

async def list_models():model_card = ModelCard(id="glm-4")return ModelList(data=[model_card])@app.post("/v1/chat/completions", response_model=ChatCompletionResponse)

async def create_chat_completion(request: ChatCompletionRequest):if len(request.messages) < 1 or request.messages[-1].role == "assistant":raise HTTPException(status_code=400, detail="Invalid request")gen_params = dict(messages=request.messages,temperature=request.temperature,top_p=request.top_p,max_tokens=request.max_tokens or 1024,echo=False,stream=request.stream,repetition_penalty=request.repetition_penalty,tools=request.tools,tool_choice=request.tool_choice,)logger.debug(f"==== request ====\n{gen_params}")if request.stream:predict_stream_generator = predict_stream(request.model, gen_params)output = await anext(predict_stream_generator)if output:return EventSourceResponse(predict_stream_generator, media_type="text/event-stream")logger.debug(f"First result output:\n{output}")function_call = Noneif output and request.tools:try:function_call = process_response(output, request.tools, use_tool=True)except:logger.warning("Failed to parse tool call")if isinstance(function_call, dict):function_call = ChoiceDeltaToolCallFunction(**function_call)generate = parse_output_text(request.model, output, function_call=function_call)return EventSourceResponse(generate, media_type="text/event-stream")else:return EventSourceResponse(predict_stream_generator, media_type="text/event-stream")response = ""async for response in generate_stream_glm4(gen_params):passif response["text"].startswith("\n"):response["text"] = response["text"][1:]response["text"] = response["text"].strip()usage = UsageInfo()function_call, finish_reason = None, "stop"tool_calls = Noneif request.tools:try:function_call = process_response(response["text"], request.tools, use_tool=True)except Exception as e:logger.warning(f"Failed to parse tool call: {e}")if isinstance(function_call, dict):finish_reason = "tool_calls"function_call_response = ChoiceDeltaToolCallFunction(**function_call)function_call_instance = FunctionCall(name=function_call_response.name,arguments=function_call_response.arguments)tool_calls = [ChatCompletionMessageToolCall(id=generate_id('call_', 24),function=function_call_instance,type="function")]message = ChatMessage(role="assistant",content=None if tool_calls else response["text"],function_call=None,tool_calls=tool_calls,)logger.debug(f"==== message ====\n{message}")choice_data = ChatCompletionResponseChoice(index=0,message=message,finish_reason=finish_reason,)task_usage = UsageInfo.model_validate(response["usage"])for usage_key, usage_value in task_usage.model_dump().items():setattr(usage, usage_key, getattr(usage, usage_key) + usage_value)return ChatCompletionResponse(model=request.model,choices=[choice_data],object="chat.completion",usage=usage)async def predict_stream(model_id, gen_params):output = ""is_function_call = Falsehas_send_first_chunk = Falsecreated_time = int(time.time())function_name = Noneresponse_id = generate_id('chatcmpl-', 29)system_fingerprint = generate_id('fp_', 9)tools = {tool['function']['name'] for tool in gen_params['tools']} if gen_params['tools'] else {}delta_text = ""async for new_response in generate_stream_glm4(gen_params):decoded_unicode = new_response["text"]delta_text += decoded_unicode[len(output):]output = decoded_unicodelines = output.strip().split("\n")# 检查是否为工具# 这是一个简单的工具比较函数,不能保证拦截所有非工具输出的结果,比如参数未对齐等特殊情况。##TODO 如果你希望做更多处理,可以在这里进行逻辑完善。if not is_function_call and len(lines) >= 2:first_line = lines[0].strip()if first_line in tools:is_function_call = Truefunction_name = first_linedelta_text = lines[1]# 工具调用返回if is_function_call:if not has_send_first_chunk:function_call = {"name": function_name, "arguments": ""}tool_call = ChatCompletionMessageToolCall(index=0,id=generate_id('call_', 24),function=FunctionCall(**function_call),type="function")message = DeltaMessage(content=None,role="assistant",function_call=None,tool_calls=[tool_call])choice_data = ChatCompletionResponseStreamChoice(index=0,delta=message,finish_reason=None)chunk = ChatCompletionResponse(model=model_id,id=response_id,choices=[choice_data],created=created_time,system_fingerprint=system_fingerprint,object="chat.completion.chunk")yield ""yield chunk.model_dump_json(exclude_unset=True)has_send_first_chunk = Truefunction_call = {"name": None, "arguments": delta_text}delta_text = ""tool_call = ChatCompletionMessageToolCall(index=0,id=None,function=FunctionCall(**function_call),type="function")message = DeltaMessage(content=None,role=None,function_call=None,tool_calls=[tool_call])choice_data = ChatCompletionResponseStreamChoice(index=0,delta=message,finish_reason=None)chunk = ChatCompletionResponse(model=model_id,id=response_id,choices=[choice_data],created=created_time,system_fingerprint=system_fingerprint,object="chat.completion.chunk")yield chunk.model_dump_json(exclude_unset=True)# 用户请求了 Function Call 但是框架还没确定是否为Function Callelif (gen_params["tools"] and gen_params["tool_choice"] != "none") or is_function_call:continue# 常规返回else:finish_reason = new_response.get("finish_reason", None)if not has_send_first_chunk:message = DeltaMessage(content="",role="assistant",function_call=None,)choice_data = ChatCompletionResponseStreamChoice(index=0,delta=message,finish_reason=finish_reason)chunk = ChatCompletionResponse(model=model_id,id=response_id,choices=[choice_data],created=created_time,system_fingerprint=system_fingerprint,object="chat.completion.chunk")yield chunk.model_dump_json(exclude_unset=True)has_send_first_chunk = Truemessage = DeltaMessage(content=delta_text,role="assistant",function_call=None,)delta_text = ""choice_data = ChatCompletionResponseStreamChoice(index=0,delta=message,finish_reason=finish_reason)chunk = ChatCompletionResponse(model=model_id,id=response_id,choices=[choice_data],created=created_time,system_fingerprint=system_fingerprint,object="chat.completion.chunk")yield chunk.model_dump_json(exclude_unset=True)# 工具调用需要额外返回一个字段以对齐 OpenAI 接口if is_function_call:yield ChatCompletionResponse(model=model_id,id=response_id,system_fingerprint=system_fingerprint,choices=[ChatCompletionResponseStreamChoice(index=0,delta=DeltaMessage(content=None,role=None,function_call=None,),finish_reason="tool_calls")],created=created_time,object="chat.completion.chunk",usage=None).model_dump_json(exclude_unset=True)elif delta_text != "":message = DeltaMessage(content="",role="assistant",function_call=None,)choice_data = ChatCompletionResponseStreamChoice(index=0,delta=message,finish_reason=None)chunk = ChatCompletionResponse(model=model_id,id=response_id,choices=[choice_data],created=created_time,system_fingerprint=system_fingerprint,object="chat.completion.chunk")yield chunk.model_dump_json(exclude_unset=True)finish_reason = 'stop'message = DeltaMessage(content=delta_text,role="assistant",function_call=None,)delta_text = ""choice_data = ChatCompletionResponseStreamChoice(index=0,delta=message,finish_reason=finish_reason)chunk = ChatCompletionResponse(model=model_id,id=response_id,choices=[choice_data],created=created_time,system_fingerprint=system_fingerprint,object="chat.completion.chunk")yield chunk.model_dump_json(exclude_unset=True)yield '[DONE]'else:yield '[DONE]'async def parse_output_text(model_id: str, value: str, function_call: ChoiceDeltaToolCallFunction = None):delta = DeltaMessage(role="assistant", content=value)if function_call is not None:delta.function_call = function_callchoice_data = ChatCompletionResponseStreamChoice(index=0,delta=delta,finish_reason=None)chunk = ChatCompletionResponse(model=model_id,choices=[choice_data],object="chat.completion.chunk")yield "{}".format(chunk.model_dump_json(exclude_unset=True))yield '[DONE]'if __name__ == "__main__":MODEL_PATH = "/data/model/glm-4-9b-chat"tensor_parallel_size = 1tokenizer = AutoTokenizer.from_pretrained(MODEL_PATH, trust_remote_code=True)engine_args = AsyncEngineArgs(model=MODEL_PATH,tokenizer=MODEL_PATH,# 如果你有多张显卡,可以在这里设置成你的显卡数量tensor_parallel_size=tensor_parallel_size,dtype=torch.float16,trust_remote_code=True,# 占用显存的比例,请根据你的显卡显存大小设置合适的值,例如,如果你的显卡有80G,您只想使用24G,请按照24/80=0.3设置gpu_memory_utilization=0.9,enforce_eager=True,worker_use_ray=False,disable_log_requests=True,max_model_len=MAX_MODEL_LENGTH,)engine = AsyncLLMEngine.from_engine_args(engine_args)uvicorn.run(app, host='0.0.0.0', port=8000, workers=1)4.2.vLLM服务端启动

(glm4) [root@gpu test]# python -u glm_server.py

WARNING 11-06 12:11:19 config.py:1668] Casting torch.bfloat16 to torch.float16.

WARNING 11-06 12:11:23 config.py:395] To see benefits of async output processing, enable CUDA graph. Since, enforce-eager is enabled, async output processor cannot be used

INFO 11-06 12:11:23 llm_engine.py:237] Initializing an LLM engine (v0.6.3.post1) with config: model='/data/model/glm-4-9b-chat', speculative_config=None, tokenizer='/data/model/glm-4-9b-chat', skip_tokenizer_init=False, tokenizer_mode=auto, revision=None, override_neuron_config=None, rope_scaling=None, rope_theta=None, tokenizer_revision=None, trust_remote_code=True, dtype=torch.float16, max_seq_len=8192, download_dir=None, load_format=LoadFormat.AUTO, tensor_parallel_size=1, pipeline_parallel_size=1, disable_custom_all_reduce=False, quantization=None, enforce_eager=True, kv_cache_dtype=auto, quantization_param_path=None, device_config=cuda, decoding_config=DecodingConfig(guided_decoding_backend='outlines'), observability_config=ObservabilityConfig(otlp_traces_endpoint=None, collect_model_forward_time=False, collect_model_execute_time=False), seed=0, served_model_name=/data/model/glm-4-9b-chat, num_scheduler_steps=1, chunked_prefill_enabled=False multi_step_stream_outputs=True, enable_prefix_caching=False, use_async_output_proc=False, use_cached_outputs=False, mm_processor_kwargs=None)

WARNING 11-06 12:11:24 tokenizer.py:169] Using a slow tokenizer. This might cause a significant slowdown. Consider using a fast tokenizer instead.

INFO 11-06 12:11:24 selector.py:224] Cannot use FlashAttention-2 backend for Volta and Turing GPUs.

INFO 11-06 12:11:24 selector.py:115] Using XFormers backend.

/usr/local/miniconda3/envs/glm4/lib/python3.10/site-packages/xformers/ops/fmha/flash.py:211: FutureWarning: `torch.library.impl_abstract` was renamed to `torch.library.register_fake`. Please use that instead; we will remove `torch.library.impl_abstract` in a future version of PyTorch.@torch.library.impl_abstract("xformers_flash::flash_fwd")

/usr/local/miniconda3/envs/glm4/lib/python3.10/site-packages/xformers/ops/fmha/flash.py:344: FutureWarning: `torch.library.impl_abstract` was renamed to `torch.library.register_fake`. Please use that instead; we will remove `torch.library.impl_abstract` in a future version of PyTorch.@torch.library.impl_abstract("xformers_flash::flash_bwd")

INFO 11-06 12:11:25 model_runner.py:1056] Starting to load model /data/model/glm-4-9b-chat...

INFO 11-06 12:11:25 selector.py:224] Cannot use FlashAttention-2 backend for Volta and Turing GPUs.

INFO 11-06 12:11:25 selector.py:115] Using XFormers backend.

Loading safetensors checkpoint shards: 0% Completed | 0/10 [00:00<?, ?it/s]

Loading safetensors checkpoint shards: 10% Completed | 1/10 [00:00<00:08, 1.01it/s]

Loading safetensors checkpoint shards: 20% Completed | 2/10 [00:01<00:07, 1.13it/s]

Loading safetensors checkpoint shards: 30% Completed | 3/10 [00:02<00:06, 1.14it/s]

Loading safetensors checkpoint shards: 40% Completed | 4/10 [00:03<00:05, 1.15it/s]

Loading safetensors checkpoint shards: 50% Completed | 5/10 [00:04<00:04, 1.18it/s]

Loading safetensors checkpoint shards: 60% Completed | 6/10 [00:05<00:03, 1.08it/s]

Loading safetensors checkpoint shards: 70% Completed | 7/10 [00:06<00:02, 1.07it/s]

Loading safetensors checkpoint shards: 80% Completed | 8/10 [00:07<00:01, 1.13it/s]

Loading safetensors checkpoint shards: 90% Completed | 9/10 [00:08<00:00, 1.10it/s]

Loading safetensors checkpoint shards: 100% Completed | 10/10 [00:08<00:00, 1.10it/s]

Loading safetensors checkpoint shards: 100% Completed | 10/10 [00:08<00:00, 1.11it/s]INFO 11-06 12:11:35 model_runner.py:1067] Loading model weights took 17.5635 GB

INFO 11-06 12:11:37 gpu_executor.py:122] # GPU blocks: 12600, # CPU blocks: 6553

INFO 11-06 12:11:37 gpu_executor.py:126] Maximum concurrency for 8192 tokens per request: 24.61x

INFO: Started server process [1627618]

INFO: Waiting for application startup.

INFO: Application startup complete.

INFO: Uvicorn running on http://0.0.0.0:8000 (Press CTRL+C to quit)

4.3.客户端实现

# -*- coding: utf-8 -*-

from openai import OpenAIbase_url = "http://127.0.0.1:8000/v1/"

client = OpenAI(api_key="EMPTY", base_url=base_url)

MODEL_PATH = "/data/model/glm-4-9b-chat"def chat(use_stream=False):messages = [{"role": "system","content": "你是一名专业的导游。",},{"role": "user","content": "请推荐一些广州特色的景点?",}]response = client.chat.completions.create(model=MODEL_PATH,messages=messages,stream=use_stream,max_tokens=8192,temperature=0.4,presence_penalty=1.2,top_p=0.9,)if response:if use_stream:for chunk in response:msg = chunk.choices[0].delta.contentprint(msg,end='',flush=True)else:print(response)else:print("Error:", response.status_code)if __name__ == "__main__":chat(use_stream=True)

4.4.客户端调用

(glm4) [root@gpu test]# python -u glm_client.py 当然可以!广州是中国广东省的省会,历史悠久,文化底蕴深厚,同时也是一座现代化的大都市。以下是一些广州的特色景点推荐:1. **白云山** - 广州著名的风景区,有“羊城第一秀”之称。山上空气清新,景色优美,是登山和观赏广州市区全景的好地方。2. **珠江夜游** - 乘坐游船在珠江上欣赏两岸的夜景,可以看到广州塔、海心沙等著名地标,以及璀璨的灯光秀。3. **长隆旅游度假区** - 包括长隆野生动物世界、长隆水上乐园、长隆国际大马戏等多个主题公园,适合家庭游玩。4. **陈家祠** - 又称陈氏书院,是一座典型的岭南传统建筑,以其精美的木雕、石雕和砖雕闻名。5. **越秀公园** - 公园内有五羊雕像,是广州的象征之一。还有中山纪念碑、镇海楼等历史遗迹。6. **北京路步行街** - 这里集合了购物、餐饮、娱乐于一体,是一条充满活力的商业街区。7. **上下九步行街** - 这条古老的街道以骑楼建筑为特色,两旁有许多老字号商店和小吃店,是体验广州传统文化的好去处。8. **广州塔(小蛮腰)** - 作为广州的地标性建筑,游客可以从这里俯瞰整个城市的壮丽景观。9. **南越王宫博物馆** - 展示了两千多年前南越国的历史文化,馆内有一座复原的宫殿模型。10. **荔湾湖公园** - 一个集自然风光与人文景观于一体的公园,湖水清澈,环境宜人。11. **广州动物园** - 是中国最大的城市动物园之一,拥有多种珍稀动物。12. **广州艺术博物院** - 收藏了大量珍贵的艺术品和历史文物,是了解广东乃至华南地区文化艺术的重要场所。这些景点不仅展示了广州的自然美景,也体现了其丰富的文化遗产和现代都市的风貌。希望您在广州旅行时能有一个愉快的体验!相关文章:

开源模型应用落地-glm模型小试-glm-4-9b-chat-vLLM集成(四)

一、前言 GLM-4是智谱AI团队于2024年1月16日发布的基座大模型,旨在自动理解和规划用户的复杂指令,并能调用网页浏览器。其功能包括数据分析、图表创建、PPT生成等,支持128K的上下文窗口,使其在长文本处理和精度召回方面表现优异&a…...

.net为什么要在单独的项目中定义扩展方法?C#

使用 扩展方法(Extension Methods) 和创建 扩展类(Extension Class) 在 C# 中有几个特定的目的,主要是为了提高代码的可扩展性、灵活性和可读性。让我们来详细解释这些概念以及为什么扩展类需要是静态的。 为什么使用…...

动态规划 —— dp 问题-打家劫舍II

1.打家劫舍II 题目链接: 213. 打家劫舍 II - 力扣(LeetCode)https://leetcode.cn/problems/house-robber-ii/ 2. 题目解析 通过分类讨论,将环形问题转换为两个线性的“打家劫舍|” 当偷第一个位置的时候,rob1在&#…...

Java基础-组件及事件处理(上)

(创作不易,感谢有你,你的支持,就是我前行的最大动力,如果看完对你有帮助,请留下您的足迹) 目录 Swing 概述 MVC 架构 Swing 特点 控件 SWING UI 元素 JFrame SWING 容器 说明 常用方法 示例&a…...

Python实例:爱心代码

前言 在编程的奇妙世界里,代码不仅仅是冰冷的指令集合,它还可以成为表达情感、传递温暖的独特方式。今天,我们将一同探索用 Python 语言绘制爱心的神奇之旅。 爱心,这个象征着爱与温暖的符号,一直以来都在人类的情感世界中占据着特殊的地位。而通过 Python 的强大功能,…...

图解大模型训练系列:序列并行3,Ring Attention

在序列并行系列中,我们将详细介绍下面四种常用的框架/方法: Megatron Sequence Parallelism:本质是想通过降低单卡激活值大小的方式,尽可能多保存激活值,少做重计算,以此提升整体训练速度,一般…...

pyspark基础准备

1.前言介绍 学习目标:了解什么是Speak、PySpark,了解为什么学习PySpark,了解课程是如何和大数据开发方向进行衔接 使用pyspark库所写出来的代码,既可以在电脑上简单运行,进行数据分析处理,又可以把代码无缝…...

Netty报错

问题:因客户反馈Netty版本低,影响性能,建议提升。于是,我将所有Netty版本从4.1.82.Final到4.1.114.Final后,报下面的错误,java.lang.NoClassDefFoundError: io/netty/util/Recycler$EnhancedHandle…...

Kafka 之顺序消息

前言: 在分布式消息系统中,消息的顺序性是一个重要的问题,也是一个常见的业务场景,那 Kafka 作为一个高性能的分布式消息中间件,又是如何实现顺序消息的呢?本篇我们将对 Kafka 的顺序消息展开讨论。 Kafk…...

Kafka 之批量消息发送消费

前言: 前面我们分享了 Kafka 的一些基础知识,以及 Spring Boot 集成 Kafka 完成消息发送消费,本篇我们来分享一下 Kafka 的批量消息发送消费。 Kafka 系列文章传送门 Kafka 简介及核心概念讲解 Spring Boot 整合 Kafka 详解 Kafka Kafka…...

【大数据学习 | kafka】kafka的偏移量管理

1. 偏移量的概念 消费者在消费数据的时候需要将消费的记录存储到一个位置,防止因为消费者程序宕机而引起断点消费数据丢失问题,下一次可以按照相应的位置从kafka中找寻数据,这个消费位置记录称之为偏移量offset。 kafka0.9以前版本将偏移量信…...

实景三维赋能森林防灭火指挥调度智慧化

森林防灭火工作是保护森林资源和生态环境的重要任务。随着信息技术的发展,实景三维技术在森林防灭火指挥调度中的应用日益广泛,为提升防灭火工作的效率和效果提供了有力支持。 一、森林防灭火面临的挑战 森林火灾具有突发性强、破坏性大、蔓延速度快、…...

【C++课程学习】:string的模拟实现

🎁个人主页:我们的五年 🔍系列专栏:C课程学习 🎉欢迎大家点赞👍评论📝收藏⭐文章 目录 一.string的主体框架: 二.string的分析: 🍔构造函数和析构函数&a…...

Linux(VMware + CentOS )设置固定ip

需求:设置ip为 192.168.88.130 先关闭虚拟机 启动虚拟机 查看当前自动获取的ip 使用 FinalShell 通过 ssh 服务远程登录系统,更换到 root 用户 修改ip配置文件 vim /etc/sysconfig/network-scripts/ifcfg-ens33 重启网卡 systemctl restart network …...

安卓 android studio各版本下载地址(官方)

https://developer.android.google.cn/studio/archive 别用中文,右上角的语言切换成英文...

如何在一个 Docker 容器中运行多个进程 ?

在容器化的世界里,Docker 彻底改变了开发人员构建、发布和运行应用程序的方式。Docker 容器封装了运行应用程序所需的所有依赖项,使其易于跨不同环境一致地部署。然而,在单个 Docker 容器中管理多个进程可能具有挑战性,这就是 Sup…...

poetry 配置多个cuda环境心得

操作系统:ubuntu22.04 LTS python版本:3.12.7 最近学习了用poetry配置python虚拟环境,当为不同的项目配置cuda时,会遇到不同的项目使用的cuda版本不一致的情况。 像torch 这样的库,它们会对cuda-toolkit有依赖&…...

网络编程入门

目录 1.网络编程入门 1.1 网络编程概述【理解】 1.2 网络编程三要素【理解】 1.3 IP地址【理解】 1.4InetAddress【应用】 1.5端口和协议【理解】 2.UDP通信程序 2.1 UDP发送数据【应用】 2.2UDP接收数据【应用】 2.3UDP通信程序练习【应用】 3.TCP通信程序 3.1TCP…...

Linux-socket详解

Linux-socket详解_socket linux-CSDN博客...

)

SQL Server 2022安装要求(硬件、软件、操作系统等)

SQL Server 2022安装要求 1、硬件要求2、软件要求3、操作系统支持4、Server Core 支持5、跨语言支持6、磁盘空间要求 1、硬件要求 以下内存和处理器要求适用于所有版本的 SQL Server: 组件要求存储SQL Server 要求最少 6 GB 的可用硬盘驱动器空间。 磁盘空间要求随…...

BCS 2025|百度副总裁陈洋:智能体在安全领域的应用实践

6月5日,2025全球数字经济大会数字安全主论坛暨北京网络安全大会在国家会议中心隆重开幕。百度副总裁陈洋受邀出席,并作《智能体在安全领域的应用实践》主题演讲,分享了在智能体在安全领域的突破性实践。他指出,百度通过将安全能力…...

【python异步多线程】异步多线程爬虫代码示例

claude生成的python多线程、异步代码示例,模拟20个网页的爬取,每个网页假设要0.5-2秒完成。 代码 Python多线程爬虫教程 核心概念 多线程:允许程序同时执行多个任务,提高IO密集型任务(如网络请求)的效率…...

QT: `long long` 类型转换为 `QString` 2025.6.5

在 Qt 中,将 long long 类型转换为 QString 可以通过以下两种常用方法实现: 方法 1:使用 QString::number() 直接调用 QString 的静态方法 number(),将数值转换为字符串: long long value 1234567890123456789LL; …...

《C++ 模板》

目录 函数模板 类模板 非类型模板参数 模板特化 函数模板特化 类模板的特化 模板,就像一个模具,里面可以将不同类型的材料做成一个形状,其分为函数模板和类模板。 函数模板 函数模板可以简化函数重载的代码。格式:templa…...

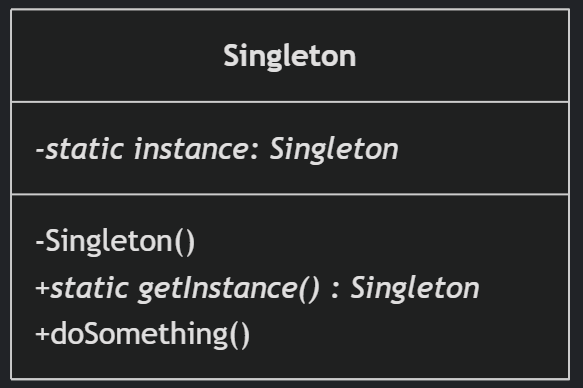

(一)单例模式

一、前言 单例模式属于六大创建型模式,即在软件设计过程中,主要关注创建对象的结果,并不关心创建对象的过程及细节。创建型设计模式将类对象的实例化过程进行抽象化接口设计,从而隐藏了类对象的实例是如何被创建的,封装了软件系统使用的具体对象类型。 六大创建型模式包括…...

零知开源——STM32F103RBT6驱动 ICM20948 九轴传感器及 vofa + 上位机可视化教程

STM32F1 本教程使用零知标准板(STM32F103RBT6)通过I2C驱动ICM20948九轴传感器,实现姿态解算,并通过串口将数据实时发送至VOFA上位机进行3D可视化。代码基于开源库修改优化,适合嵌入式及物联网开发者。在基础驱动上新增…...

API网关Kong的鉴权与限流:高并发场景下的核心实践

🔥「炎码工坊」技术弹药已装填! 点击关注 → 解锁工业级干货【工具实测|项目避坑|源码燃烧指南】 引言 在微服务架构中,API网关承担着流量调度、安全防护和协议转换的核心职责。作为云原生时代的代表性网关,Kong凭借其插件化架构…...

水泥厂自动化升级利器:Devicenet转Modbus rtu协议转换网关

在水泥厂的生产流程中,工业自动化网关起着至关重要的作用,尤其是JH-DVN-RTU疆鸿智能Devicenet转Modbus rtu协议转换网关,为水泥厂实现高效生产与精准控制提供了有力支持。 水泥厂设备众多,其中不少设备采用Devicenet协议。Devicen…...

阿里云Ubuntu 22.04 64位搭建Flask流程(亲测)

cd /home 进入home盘 安装虚拟环境: 1、安装virtualenv pip install virtualenv 2.创建新的虚拟环境: virtualenv myenv 3、激活虚拟环境(激活环境可以在当前环境下安装包) source myenv/bin/activate 此时,终端…...

[USACO23FEB] Bakery S

题目描述 Bessie 开了一家面包店! 在她的面包店里,Bessie 有一个烤箱,可以在 t C t_C tC 的时间内生产一块饼干或在 t M t_M tM 单位时间内生产一块松糕。 ( 1 ≤ t C , t M ≤ 10 9 ) (1 \le t_C,t_M \le 10^9) (1≤tC,tM≤109)。由于空间…...