RT-DETR融合GhostModel V3及相关改进思路

RT-DETR使用教程: RT-DETR使用教程

RT-DETR改进汇总贴:RT-DETR更新汇总贴

《GhostNetV3: Exploring the Training Strategies for Compact Models》

一、 模块介绍

论文链接:https://arxiv.org/pdf/2404.11202v1

代码链接:https://github.com/huawei-noah/Efficient-AI-Backbones/....

论文速览:

小型神经网络专为边缘设备上的应用程序而设计,具有更快的推理速度和适中的性能。然而,目前紧凑模型的训练策略借鉴了传统模型的训练策略,忽略了它们在模型容量上的差异,从而可能阻碍紧凑模型的性能。在本文中,通过系统地研究不同训练成分的影响,我们引入了一种针对紧凑模型的强大训练策略。我们发现,适当的重新参数化和知识蒸馏设计对于训练高性能紧凑模型至关重要,而一些常用的用于训练常规模型的数据增强,如 Mixup 和 CutMix,会导致性能变差。我们在 ImageNet-1K 数据集上的实验表明,我们对紧凑模型的专门训练策略适用于各种架构,包括 GhostNetV2、MobileNetV2 和 ShuffleNetV2。具体来说,配备我们的策略,GhostNetV3 1.3 × 在移动设备上仅以 269M FLOPs和 14.46ms 的延迟实现了 79.1% 的顶级准确率,大大超过了通常训练的同类产品。此外,我们的观察还可以扩展到对象检测场景。

总结:Ghost Net V3。

二、 加入到RT-DETR中

2.1 创建脚本文件

首先在ultralytics->nn路径下创建blocks.py脚本,用于存放模块代码。

2.2 复制代码

复制代码粘到刚刚创建的blocks.py脚本中,如下图所示:

import torch

import torch.nn as nn

import torch.nn.functional as F

import mathfrom typing import Tupledef _make_divisible(v, divisor, min_value=None):"""This function is taken from the original tf repo.It ensures that all layers have a channel number that is divisible by 8It can be seen here:https://github.com/tensorflow/models/blob/master/research/slim/nets/mobilenet/mobilenet.py"""if min_value is None:min_value = divisornew_v = max(min_value, int(v + divisor / 2) // divisor * divisor)# Make sure that round down does not go down by more than 10%.if new_v < 0.9 * v:new_v += divisorreturn new_vdef hard_sigmoid(x, inplace: bool = False):if inplace:return x.add_(3.).clamp_(0., 6.).div_(6.)else:return F.relu6(x + 3.) / 6.class SqueezeExcite(nn.Module):def __init__(self, in_chs, se_ratio=0.25, reduced_base_chs=None,act_layer=nn.ReLU, gate_fn=hard_sigmoid, divisor=4, **_):super(SqueezeExcite, self).__init__()self.gate_fn = gate_fnreduced_chs = _make_divisible((reduced_base_chs or in_chs) * se_ratio, divisor)self.avg_pool = nn.AdaptiveAvgPool2d(1)self.conv_reduce = nn.Conv2d(in_chs, reduced_chs, 1, bias=True)self.act1 = act_layer(inplace=True)self.conv_expand = nn.Conv2d(reduced_chs, in_chs, 1, bias=True)def forward(self, x):x_se = self.avg_pool(x)x_se = self.conv_reduce(x_se)x_se = self.act1(x_se)x_se = self.conv_expand(x_se)x = x * self.gate_fn(x_se)return xclass ConvBnAct(nn.Module):def __init__(self, in_chs, out_chs, kernel_size,stride=1, act_layer=nn.ReLU):super(ConvBnAct, self).__init__()self.conv = nn.Conv2d(in_chs, out_chs, kernel_size, stride, kernel_size // 2, bias=False)self.bn1 = nn.BatchNorm2d(out_chs)self.act1 = act_layer(inplace=True)def forward(self, x):x = self.conv(x)x = self.bn1(x)x = self.act1(x)return xdef gcd(a, b):if a < b:a, b = b, awhile (a % b != 0):c = a % ba = bb = creturn bdef MyNorm(dim):return nn.GroupNorm(1, dim)class GhostModuleV3(nn.Module):def __init__(self, inp, oup, kernel_size=1, ratio=2, dw_size=3, stride=1, relu=True, mode='ori', args=None):super(GhostModuleV3, self).__init__()# self.args=args# mode = 'ori_shortcut_mul_conv15'self.mode = modeself.gate_loc = 'before'self.inter_mode = 'nearest'self.scale = 1.0self.infer_mode = Falseself.num_conv_branches = 3self.dconv_scale = Trueself.gate_fn = nn.Sigmoid()# if args.gate_fn=='hard_sigmoid':# self.gate_fn=hard_sigmoid# elif args.gate_fn=='sigmoid':# self.gate_fn=nn.Sigmoid()# elif args.gate_fn=='relu':# self.gate_fn=nn.ReLU()# elif args.gate_fn=='clip':# self.gate_fn=myclip# elif args.gate_fn=='tanh':# self.gate_fn=nn.Tanh()if self.mode in ['ori']:self.oup = oupinit_channels = math.ceil(oup / ratio)new_channels = init_channels * (ratio - 1)if self.infer_mode:self.primary_conv = nn.Sequential(nn.Conv2d(inp, init_channels, kernel_size, stride, kernel_size // 2, bias=False),nn.BatchNorm2d(init_channels),nn.ReLU(inplace=True) if relu else nn.Sequential(),)self.cheap_operation = nn.Sequential(nn.Conv2d(init_channels, new_channels, dw_size, 1, dw_size // 2, groups=init_channels, bias=False),nn.BatchNorm2d(new_channels),nn.ReLU(inplace=True) if relu else nn.Sequential(),)else:self.primary_rpr_skip = nn.BatchNorm2d(inp) \if inp == init_channels and stride == 1 else Noneprimary_rpr_conv = list()for _ in range(self.num_conv_branches):primary_rpr_conv.append(self._conv_bn(inp, init_channels, kernel_size, stride, kernel_size // 2, bias=False))self.primary_rpr_conv = nn.ModuleList(primary_rpr_conv)# Re-parameterizable scale branchself.primary_rpr_scale = Noneif kernel_size > 1:self.primary_rpr_scale = self._conv_bn(inp, init_channels, 1, 1, 0, bias=False)self.primary_activation = nn.ReLU(inplace=True) if relu else Noneself.cheap_rpr_skip = nn.BatchNorm2d(init_channels) \if init_channels == new_channels else Nonecheap_rpr_conv = list()for _ in range(self.num_conv_branches):cheap_rpr_conv.append(self._conv_bn(init_channels, new_channels, dw_size, 1, dw_size // 2, groups=init_channels,bias=False))self.cheap_rpr_conv = nn.ModuleList(cheap_rpr_conv)# Re-parameterizable scale branchself.cheap_rpr_scale = Noneif dw_size > 1:self.cheap_rpr_scale = self._conv_bn(init_channels, new_channels, 1, 1, 0, groups=init_channels,bias=False)self.cheap_activation = nn.ReLU(inplace=True) if relu else Noneself.in_channels = init_channelsself.groups = init_channelsself.kernel_size = dw_sizeelif self.mode in ['ori_shortcut_mul_conv15']:self.oup = oupinit_channels = math.ceil(oup / ratio)new_channels = init_channels * (ratio - 1)self.short_conv = nn.Sequential(nn.Conv2d(inp, oup, kernel_size, stride, kernel_size // 2, bias=False),nn.BatchNorm2d(oup),nn.Conv2d(oup, oup, kernel_size=(1, 5), stride=1, padding=(0, 2), groups=oup, bias=False),nn.BatchNorm2d(oup),nn.Conv2d(oup, oup, kernel_size=(5, 1), stride=1, padding=(2, 0), groups=oup, bias=False),nn.BatchNorm2d(oup),)if self.infer_mode:self.primary_conv = nn.Sequential(nn.Conv2d(inp, init_channels, kernel_size, stride, kernel_size // 2, bias=False),nn.BatchNorm2d(init_channels),nn.ReLU(inplace=True) if relu else nn.Sequential(),)self.cheap_operation = nn.Sequential(nn.Conv2d(init_channels, new_channels, dw_size, 1, dw_size // 2, groups=init_channels, bias=False),nn.BatchNorm2d(new_channels),nn.ReLU(inplace=True) if relu else nn.Sequential(),)else:self.primary_rpr_skip = nn.BatchNorm2d(inp) \if inp == init_channels and stride == 1 else Noneprimary_rpr_conv = list()for _ in range(self.num_conv_branches):primary_rpr_conv.append(self._conv_bn(inp, init_channels, kernel_size, stride, kernel_size // 2, bias=False))self.primary_rpr_conv = nn.ModuleList(primary_rpr_conv)# Re-parameterizable scale branchself.primary_rpr_scale = Noneif kernel_size > 1:self.primary_rpr_scale = self._conv_bn(inp, init_channels, 1, 1, 0, bias=False)self.primary_activation = nn.ReLU(inplace=True) if relu else Noneself.cheap_rpr_skip = nn.BatchNorm2d(init_channels) \if init_channels == new_channels else Nonecheap_rpr_conv = list()for _ in range(self.num_conv_branches):cheap_rpr_conv.append(self._conv_bn(init_channels, new_channels, dw_size, 1, dw_size // 2, groups=init_channels,bias=False))self.cheap_rpr_conv = nn.ModuleList(cheap_rpr_conv)# Re-parameterizable scale branchself.cheap_rpr_scale = Noneif dw_size > 1:self.cheap_rpr_scale = self._conv_bn(init_channels, new_channels, 1, 1, 0, groups=init_channels,bias=False)self.cheap_activation = nn.ReLU(inplace=True) if relu else Noneself.in_channels = init_channelsself.groups = init_channelsself.kernel_size = dw_sizedef forward(self, x):if self.mode in ['ori']:if self.infer_mode:x1 = self.primary_conv(x)x2 = self.cheap_operation(x1)else:identity_out = 0if self.primary_rpr_skip is not None:identity_out = self.primary_rpr_skip(x)scale_out = 0if self.primary_rpr_scale is not None and self.dconv_scale:scale_out = self.primary_rpr_scale(x)x1 = scale_out + identity_outfor ix in range(self.num_conv_branches):x1 += self.primary_rpr_conv[ix](x)if self.primary_activation is not None:x1 = self.primary_activation(x1)cheap_identity_out = 0if self.cheap_rpr_skip is not None:cheap_identity_out = self.cheap_rpr_skip(x1)cheap_scale_out = 0if self.cheap_rpr_scale is not None and self.dconv_scale:cheap_scale_out = self.cheap_rpr_scale(x1)x2 = cheap_scale_out + cheap_identity_outfor ix in range(self.num_conv_branches):x2 += self.cheap_rpr_conv[ix](x1)if self.cheap_activation is not None:x2 = self.cheap_activation(x2)out = torch.cat([x1, x2], dim=1)return outelif self.mode in ['ori_shortcut_mul_conv15']:res = self.short_conv(F.avg_pool2d(x, kernel_size=2, stride=2))if self.infer_mode:x1 = self.primary_conv(x)x2 = self.cheap_operation(x1)else:identity_out = 0if self.primary_rpr_skip is not None:identity_out = self.primary_rpr_skip(x)scale_out = 0if self.primary_rpr_scale is not None and self.dconv_scale:scale_out = self.primary_rpr_scale(x)x1 = scale_out + identity_outfor ix in range(self.num_conv_branches):x1 += self.primary_rpr_conv[ix](x)if self.primary_activation is not None:x1 = self.primary_activation(x1)cheap_identity_out = 0if self.cheap_rpr_skip is not None:cheap_identity_out = self.cheap_rpr_skip(x1)cheap_scale_out = 0if self.cheap_rpr_scale is not None and self.dconv_scale:cheap_scale_out = self.cheap_rpr_scale(x1)x2 = cheap_scale_out + cheap_identity_outfor ix in range(self.num_conv_branches):x2 += self.cheap_rpr_conv[ix](x1)if self.cheap_activation is not None:x2 = self.cheap_activation(x2)out = torch.cat([x1, x2], dim=1)if self.gate_loc == 'before':return out[:, :self.oup, :, :] * F.interpolate(self.gate_fn(res / self.scale), size=out.shape[-2:],mode=self.inter_mode) # 'nearest'# return out*F.interpolate(self.gate_fn(res/self.scale),size=out.shape[-1].item(),mode=self.inter_mode) # 'nearest'else:return out[:, :self.oup, :, :] * self.gate_fn(F.interpolate(res, size=out.shape[-2:], mode=self.inter_mode))# return out*self.gate_fn(F.interpolate(res,size=out.shape[-1],mode=self.inter_mode))def reparameterize(self):""" Following works like `RepVGG: Making VGG-style ConvNets Great Again` -https://arxiv.org/pdf/2101.03697.pdf. We re-parameterize multi-branchedarchitecture used at training time to obtain a plain CNN-like structurefor inference."""if self.infer_mode:returnprimary_kernel, primary_bias = self._get_kernel_bias_primary()self.primary_conv = nn.Conv2d(in_channels=self.primary_rpr_conv[0].conv.in_channels,out_channels=self.primary_rpr_conv[0].conv.out_channels,kernel_size=self.primary_rpr_conv[0].conv.kernel_size,stride=self.primary_rpr_conv[0].conv.stride,padding=self.primary_rpr_conv[0].conv.padding,dilation=self.primary_rpr_conv[0].conv.dilation,groups=self.primary_rpr_conv[0].conv.groups,bias=True)self.primary_conv.weight.data = primary_kernelself.primary_conv.bias.data = primary_biasself.primary_conv = nn.Sequential(self.primary_conv,self.primary_activation if self.primary_activation is not None else nn.Sequential())cheap_kernel, cheap_bias = self._get_kernel_bias_cheap()self.cheap_operation = nn.Conv2d(in_channels=self.cheap_rpr_conv[0].conv.in_channels,out_channels=self.cheap_rpr_conv[0].conv.out_channels,kernel_size=self.cheap_rpr_conv[0].conv.kernel_size,stride=self.cheap_rpr_conv[0].conv.stride,padding=self.cheap_rpr_conv[0].conv.padding,dilation=self.cheap_rpr_conv[0].conv.dilation,groups=self.cheap_rpr_conv[0].conv.groups,bias=True)self.cheap_operation.weight.data = cheap_kernelself.cheap_operation.bias.data = cheap_biasself.cheap_operation = nn.Sequential(self.cheap_operation,self.cheap_activation if self.cheap_activation is not None else nn.Sequential())# Delete un-used branchesfor para in self.parameters():para.detach_()if hasattr(self, 'primary_rpr_conv'):self.__delattr__('primary_rpr_conv')if hasattr(self, 'primary_rpr_scale'):self.__delattr__('primary_rpr_scale')if hasattr(self, 'primary_rpr_skip'):self.__delattr__('primary_rpr_skip')if hasattr(self, 'cheap_rpr_conv'):self.__delattr__('cheap_rpr_conv')if hasattr(self, 'cheap_rpr_scale'):self.__delattr__('cheap_rpr_scale')if hasattr(self, 'cheap_rpr_skip'):self.__delattr__('cheap_rpr_skip')self.infer_mode = Truedef _get_kernel_bias_primary(self) -> Tuple[torch.Tensor, torch.Tensor]:""" Method to obtain re-parameterized kernel and bias.Reference: https://github.com/DingXiaoH/RepVGG/blob/main/repvgg.py#L83:return: Tuple of (kernel, bias) after fusing branches."""# get weights and bias of scale branchkernel_scale = 0bias_scale = 0if self.primary_rpr_scale is not None:kernel_scale, bias_scale = self._fuse_bn_tensor(self.primary_rpr_scale)# Pad scale branch kernel to match conv branch kernel size.pad = self.kernel_size // 2kernel_scale = torch.nn.functional.pad(kernel_scale,[pad, pad, pad, pad])# get weights and bias of skip branchkernel_identity = 0bias_identity = 0if self.primary_rpr_skip is not None:kernel_identity, bias_identity = self._fuse_bn_tensor(self.primary_rpr_skip)# get weights and bias of conv brancheskernel_conv = 0bias_conv = 0for ix in range(self.num_conv_branches):_kernel, _bias = self._fuse_bn_tensor(self.primary_rpr_conv[ix])kernel_conv += _kernelbias_conv += _biaskernel_final = kernel_conv + kernel_scale + kernel_identitybias_final = bias_conv + bias_scale + bias_identityreturn kernel_final, bias_finaldef _get_kernel_bias_cheap(self) -> Tuple[torch.Tensor, torch.Tensor]:""" Method to obtain re-parameterized kernel and bias.Reference: https://github.com/DingXiaoH/RepVGG/blob/main/repvgg.py#L83:return: Tuple of (kernel, bias) after fusing branches."""# get weights and bias of scale branchkernel_scale = 0bias_scale = 0if self.cheap_rpr_scale is not None:kernel_scale, bias_scale = self._fuse_bn_tensor(self.cheap_rpr_scale)# Pad scale branch kernel to match conv branch kernel size.pad = self.kernel_size // 2kernel_scale = torch.nn.functional.pad(kernel_scale,[pad, pad, pad, pad])# get weights and bias of skip branchkernel_identity = 0bias_identity = 0if self.cheap_rpr_skip is not None:kernel_identity, bias_identity = self._fuse_bn_tensor(self.cheap_rpr_skip)# get weights and bias of conv brancheskernel_conv = 0bias_conv = 0for ix in range(self.num_conv_branches):_kernel, _bias = self._fuse_bn_tensor(self.cheap_rpr_conv[ix])kernel_conv += _kernelbias_conv += _biaskernel_final = kernel_conv + kernel_scale + kernel_identitybias_final = bias_conv + bias_scale + bias_identityreturn kernel_final, bias_finaldef _fuse_bn_tensor(self, branch) -> Tuple[torch.Tensor, torch.Tensor]:""" Method to fuse batchnorm layer with preceeding conv layer.Reference: https://github.com/DingXiaoH/RepVGG/blob/main/repvgg.py#L95:param branch::return: Tuple of (kernel, bias) after fusing batchnorm."""if isinstance(branch, nn.Sequential):kernel = branch.conv.weightrunning_mean = branch.bn.running_meanrunning_var = branch.bn.running_vargamma = branch.bn.weightbeta = branch.bn.biaseps = branch.bn.epselse:assert isinstance(branch, nn.BatchNorm2d)if not hasattr(self, 'id_tensor'):input_dim = self.in_channels // self.groupskernel_value = torch.zeros((self.in_channels,input_dim,self.kernel_size,self.kernel_size),dtype=branch.weight.dtype,device=branch.weight.device)for i in range(self.in_channels):kernel_value[i, i % input_dim,self.kernel_size // 2,self.kernel_size // 2] = 1self.id_tensor = kernel_valuekernel = self.id_tensorrunning_mean = branch.running_meanrunning_var = branch.running_vargamma = branch.weightbeta = branch.biaseps = branch.epsstd = (running_var + eps).sqrt()t = (gamma / std).reshape(-1, 1, 1, 1)return kernel * t, beta - running_mean * gamma / stddef _conv_bn(self, in_channels, out_channels, kernel_size, stride, padding, groups=1, bias=False):""" Helper method to construct conv-batchnorm layers.:param kernel_size: Size of the convolution kernel.:param padding: Zero-padding size.:return: Conv-BN module."""mod_list = nn.Sequential()mod_list.add_module('conv', nn.Conv2d(in_channels=in_channels,out_channels=out_channels,kernel_size=kernel_size,stride=stride,padding=padding,groups=groups,bias=bias))mod_list.add_module('bn', nn.BatchNorm2d(out_channels))return mod_list

2.3 更改task.py文件

打开ultralytics->nn->modules->task.py,在脚本空白处导入函数。

from ultralytics.nn.blocks import *

之后找到模型解析函数parse_model(约在tasks.py脚本中940行左右位置,可能因代码版本不同变动),在该函数的最后一个else分支上面增加相关解析代码。

elif m is GhostModuleV3:c2 = args[0]args = [ch[f], *args]

2.4 更改yaml文件

yam文件解读:YOLO系列 “.yaml“文件解读_yolo yaml文件-CSDN博客

打开更改ultralytics/cfg/models/rt-detr路径下的rtdetr-l.yaml文件,替换原有模块。(放在该位置仅能插入该模块,具体效果未知。博主精力有限,仅完成与其他模块二次创新融合的测试,结构图见文末,代码见群文件更新。)

# Ultralytics YOLO 🚀, AGPL-3.0 license

# RT-DETR-l object detection model with P3-P5 outputs. For details see https://docs.ultralytics.com/models/rtdetr# Parameters

nc: 80 # number of classes

scales: # model compound scaling constants, i.e. 'model=yolov8n-cls.yaml' will call yolov8-cls.yaml with scale 'n'# [depth, width, max_channels]l: [1.00, 1.00, 1024]backbone:# [from, repeats, module, args]- [-1, 1, HGStem, [32, 48]] # 0-P2/4- [-1, 6, HGBlock, [48, 128, 3]] # stage 1- [-1, 1, DWConv, [128, 3, 2, 1, False]] # 2-P3/8- [-1, 6, HGBlock, [96, 512, 3]] # stage 2- [-1, 1, DWConv, [512, 3, 2, 1, False]] # 4-P3/16- [-1, 2, GhostModuleV3, [512, 3]] # cm, c2, k, light, shortcut- [-1, 6, HGBlock, [192, 1024, 5, True, True]]- [-1, 6, HGBlock, [192, 1024, 5, True, True]] # stage 3- [-1, 1, DWConv, [1024, 3, 2, 1, False]] # 8-P4/32- [-1, 6, HGBlock, [384, 2048, 5, True, False]] # stage 4head:- [-1, 1, Conv, [256, 1, 1, None, 1, 1, False]] # 10 input_proj.2- [-1, 1, AIFI, [1024, 8]]- [-1, 1, Conv, [256, 1, 1]] # 12, Y5, lateral_convs.0- [-1, 1, nn.Upsample, [None, 2, "nearest"]]- [7, 1, Conv, [256, 1, 1, None, 1, 1, False]] # 14 input_proj.1- [[-2, -1], 1, Concat, [1]]- [-1, 3, RepC3, [256]] # 16, fpn_blocks.0- [-1, 1, Conv, [256, 1, 1]] # 17, Y4, lateral_convs.1- [-1, 1, nn.Upsample, [None, 2, "nearest"]]- [3, 1, Conv, [256, 1, 1, None, 1, 1, False]] # 19 input_proj.0- [[-2, -1], 1, Concat, [1]] # cat backbone P4- [-1, 3, RepC3, [256]] # X3 (21), fpn_blocks.1- [-1, 1, Conv, [256, 3, 2]] # 22, downsample_convs.0- [[-1, 17], 1, Concat, [1]] # cat Y4- [-1, 3, RepC3, [256]] # F4 (24), pan_blocks.0- [-1, 1, Conv, [256, 3, 2]] # 25, downsample_convs.1- [[-1, 12], 1, Concat, [1]] # cat Y5- [-1, 3, RepC3, [256]] # F5 (27), pan_blocks.1- [[21, 24, 27], 1, RTDETRDecoder, [nc]] # Detect(P3, P4, P5)

2.5 修改train.py文件

创建Train_RT脚本用于训练。

from ultralytics.models import RTDETR

import os

os.environ['KMP_DUPLICATE_LIB_OK'] = 'True'if __name__ == '__main__':model = RTDETR(model='ultralytics/cfg/models/rt-detr/rtdetr-l.yaml')# model.load('yolov8n.pt')model.train(data='./data.yaml', epochs=2, batch=1, device='0', imgsz=640, workers=2, cache=False,amp=True, mosaic=False, project='runs/train', name='exp')

在train.py脚本中填入修改好的yaml路径,运行即可训。

三、相关改进思路(2024/11/23日群文件)

该模块可如图加入到HGBlock、RepNCSPELAN4、RepC3等模块中,代码见群文件,结构如图。自研模块与该模块融合代码及yaml文件见群文件。

⭐另外,融合上百种改进模块的YOLO项目仅79.9(含百种改进的v9),RTDETR79.9,含高性能自研模型,更易发论文,代码每周更新,欢迎点击下方小卡片加我了解。⭐

⭐⭐平均每个文章对应4-6个二创及自研融合模块⭐⭐

相关文章:

RT-DETR融合GhostModel V3及相关改进思路

RT-DETR使用教程: RT-DETR使用教程 RT-DETR改进汇总贴:RT-DETR更新汇总贴 《GhostNetV3: Exploring the Training Strategies for Compact Models》 一、 模块介绍 论文链接:https://arxiv.org/pdf/2404.11202v1 代码链接:https:…...

JVM有哪些垃圾回收器

Serial垃圾回收器:单线程收集器,适用于客户端模式下的小型应用。 使用复制算法回收新生代,使用标记-整理算法回收老年代。 在进行垃圾回收时,会停止所有用户线程(Stop-The-World, STW)。Serial Old垃圾回收…...

EWM 打印

目录 1 简介 2 后台配置 3 主数据 4 业务操作 1 简介 打印即输出管理(output management)利用“条件表”那一套理论实现。而当打印跟 EWM 集成到一起时,也需要利用 PPF(Post Processing Framework)那一套理论。而…...

前端文件优化

一、图片优化 计算图片大小 对于一张100*100像素的图片来说,图像上有 10000 个像素点,如果每个像素的值是 RGBA 存储的话,那么也就是说每个像素有 4 个通道,每个通道 1 个字节(8 位 1个字 节)࿰…...

电脑怎么自动切换IP地址

在现代网络环境中,电脑自动切换IP地址的需求日益增多。无论是出于网络安全、隐私保护,还是为了绕过地域限制,自动切换IP地址都成为了许多用户关注的焦点。本文将详细介绍几种实现电脑自动切换IP地址的方法,以满足不同用户的需求。…...

hbase集成phoenix

1.环境 环境准备 三台节点zookeeper三节点hadoop三节点hbase三节点 2.pheonix集成 官网下载地址,需挂梯子,使用官网推荐的对应hbase版本即可 https://phoenix.apache.org/download.html下载及解压 wget https://dlcdn.apache.org/phoenix/phoenix-…...

单片机智能家居火灾环境安全检测

目录 前言 一、本设计主要实现哪些很“开门”功能? 二、电路设计原理图 电路图采用Altium Designer进行设计: 三、实物设计图 四、程序源代码设计 五、获取资料内容 前言 在现代社会,火灾安全始终是人们关注的重点问题。随着科技的不…...

Git_2024/11/16

文章目录 前言Git是什么核心概念工作流程常见术语解读Git的优势 Git与SVN对比SVNGit总结 Git配置流程及指令环境配置获取Git仓库本地初始化远程克隆 工作目录、暂存区、版本库文件的两种状态本地仓库操作远程仓库操作Git分支Git标签IntelliJ IDEA使用Git回滚代码 GitHub配置流程…...

Java基础夯实——2.1Java常见的线程创建方式

在 Java 中,线程是实现并发编程的核心。主要有以下三种: 继承 Thread 类实现 Runnable 接口实现 Callable 接口并结合 Future 使用 1. 继承 Thread 类 继承 Thread 类是创建线程的最简单方式之一。通过扩展 Thread 类并重写其 run 方法,可…...

【Docker容器】一、一文了解docker

1、什么是docker? 1.1 docker概念 Docker是一种容器化平台,通过使用容器技术,Docker允许开发人员将应用程序和其依赖项打包到一个独立的、可移植的容器中。每个容器具有自己的文件系统、环境变量和资源隔离,从而使应用程序可以在…...

Spring:IOC实例化对象bean的方式

对象已经能交给Spring的IOC容器来创建了,但是容器是如何来创建对象的呢? 就需要研究下bean的实例化过程,在这块内容中主要解决两部分内容,分别是 bean是如何创建的实例化bean的三种方式,构造方法,静态工厂和实例工厂 在讲解这…...

)

深入解析生成对抗网络(GAN)

1. 引言 背景介绍 在过去的几十年中,深度学习在计算机视觉、自然语言处理和语音识别等领域取得了巨大的突破。然而,如何让机器生成高质量、逼真的数据一直是人工智能领域的挑战。传统的生成模型,如变分自编码器(VAE)…...

curl命令提交大json

有个客户需要提交一个4M左右的pdf,接口里传的是pdf字节流base64编码后的字符串。 直接curl -XPOST -d json串 api接口会报 参数过长报错Argument list too long 网上搜了下解决方案把json串放到文本里然后通过json.txt引入参数 这一试不要紧,差点儿导致…...

以太坊拥堵扩展解决方案Arbitrum

Arbitrum是一种用于以太坊的Layer 2(L2)扩展解决方案,以下是详细介绍: 1. 背景和基本原理 背景介绍:随着以太坊网络的发展,交易拥堵和高Gas费用的问题逐渐凸显。为了解决这些问题,Layer 2扩展…...

Kafka新节点加入集群操作指南

一、环境准备 1. Java环境安装 # 安装JDK apt-get update apt-get install openjdk-8-jdk -y2. 下载并解压 wget https://archive.apache.org/dist/kafka/2.8.1/kafka_2.13-2.8.1.tgz tar xf kafka_2.13-2.8.1.tgz mv kafka_2.13-2.8.1 kafka二、配置环境变量 1. 创建kafka…...

【Android compose原创组件】在Compose里面实现内容不满一屏也可以触发边界阻尼效果的一种可用方法

创意背景 在安卓 View 传统命令式开发里面提供了非常多稳定美观体验好的组件,但是目前Compose还未有可用的组件,比如View中可以使用 coordinatorlayout 的滚动效果可以实现局部(即使内容不满一屏也可以触发滚动边界阻尼效果)&…...

介绍一下struct(c基础)

struct 是命名结构体的,可以看成集合。不同元素即是表达一个对象的不同方面属性。 格式 struct stu (一种标识符) { //命名不可初始化 [元素类型] 元素名; char 元素1[n]; int 元素2; int 元素3; __________ int 元素n; }; struct stu {//…...

模型压缩——基于粒度剪枝

1.引言 模型剪枝本质上是一种利用稀疏性来减少模型大小和计算量,从而提高训练和推理效率的技术。它为何会有效呢? 理论依据:有研究发现,在许多深度神经网络中,大部分参数是接近于0的,这些参数对模型最终的…...

IntelliJ IDEA 2023.2x——图文配置

IntelliJ IDEA 2023.2——配置说明 界面如下图所示 : 绿泡泡查找 “码猿趣事” 查找【idea99】 IntelliJ IDEA 的官方下载地址 IntelliJ IDEA 官网下载地址 一路上NEXT 到结尾: 继续NEXT 下一步:...

SpringBoot(5)-SpringSecurity

目录 一、是什么 二、实战测试 2.1 认识 2.2 认证和授权 2.3 权限控制和注销 2.4 记住我 一、是什么 Spring Security是一个框架,侧重于为java应用程序提供身份验证和授权。 Web应用的安全性主要分为两个部分: 认证(Authentication&…...

在软件开发中正确使用MySQL日期时间类型的深度解析

在日常软件开发场景中,时间信息的存储是底层且核心的需求。从金融交易的精确记账时间、用户操作的行为日志,到供应链系统的物流节点时间戳,时间数据的准确性直接决定业务逻辑的可靠性。MySQL作为主流关系型数据库,其日期时间类型的…...

C++_核心编程_多态案例二-制作饮品

#include <iostream> #include <string> using namespace std;/*制作饮品的大致流程为:煮水 - 冲泡 - 倒入杯中 - 加入辅料 利用多态技术实现本案例,提供抽象制作饮品基类,提供子类制作咖啡和茶叶*//*基类*/ class AbstractDr…...

云启出海,智联未来|阿里云网络「企业出海」系列客户沙龙上海站圆满落地

借阿里云中企出海大会的东风,以**「云启出海,智联未来|打造安全可靠的出海云网络引擎」为主题的阿里云企业出海客户沙龙云网络&安全专场于5.28日下午在上海顺利举办,现场吸引了来自携程、小红书、米哈游、哔哩哔哩、波克城市、…...

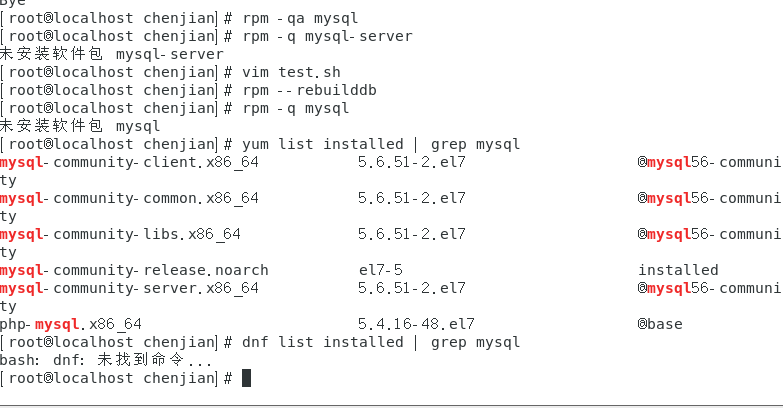

mysql已经安装,但是通过rpm -q 没有找mysql相关的已安装包

文章目录 现象:mysql已经安装,但是通过rpm -q 没有找mysql相关的已安装包遇到 rpm 命令找不到已经安装的 MySQL 包时,可能是因为以下几个原因:1.MySQL 不是通过 RPM 包安装的2.RPM 数据库损坏3.使用了不同的包名或路径4.使用其他包…...

【SSH疑难排查】轻松解决新版OpenSSH连接旧服务器的“no matching...“系列算法协商失败问题

【SSH疑难排查】轻松解决新版OpenSSH连接旧服务器的"no matching..."系列算法协商失败问题 摘要: 近期,在使用较新版本的OpenSSH客户端连接老旧SSH服务器时,会遇到 "no matching key exchange method found", "n…...

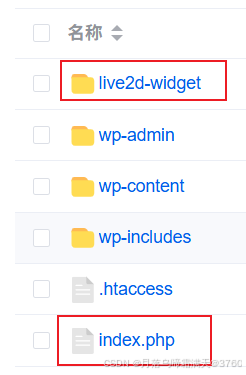

给网站添加live2d看板娘

给网站添加live2d看板娘 参考文献: stevenjoezhang/live2d-widget: 把萌萌哒的看板娘抱回家 (ノ≧∇≦)ノ | Live2D widget for web platformEikanya/Live2d-model: Live2d model collectionzenghongtu/live2d-model-assets 前言 网站环境如下,文章也主…...

Qt 事件处理中 return 的深入解析

Qt 事件处理中 return 的深入解析 在 Qt 事件处理中,return 语句的使用是另一个关键概念,它与 event->accept()/event->ignore() 密切相关但作用不同。让我们详细分析一下它们之间的关系和工作原理。 核心区别:不同层级的事件处理 方…...

HTML前端开发:JavaScript 获取元素方法详解

作为前端开发者,高效获取 DOM 元素是必备技能。以下是 JS 中核心的获取元素方法,分为两大系列: 一、getElementBy... 系列 传统方法,直接通过 DOM 接口访问,返回动态集合(元素变化会实时更新)。…...

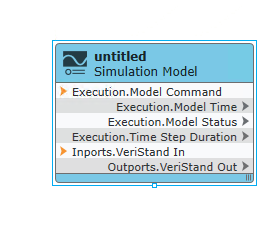

【Veristand】Veristand环境安装教程-Linux RT / Windows

首先声明,此教程是针对Simulink编译模型并导入Veristand中编写的,同时需要注意的是老用户编译可能用的是Veristand Model Framework,那个是历史版本,且NI不会再维护,新版本编译支持为VeriStand Model Generation Suppo…...

云安全与网络安全:核心区别与协同作用解析

在数字化转型的浪潮中,云安全与网络安全作为信息安全的两大支柱,常被混淆但本质不同。本文将从概念、责任分工、技术手段、威胁类型等维度深入解析两者的差异,并探讨它们的协同作用。 一、核心区别 定义与范围 网络安全:聚焦于保…...