k8s helm部署kafka集群(KRaft模式)——筑梦之路

添加helm仓库

helm repo add bitnami "https://helm-charts.itboon.top/bitnami" --force-update

helm repo add grafana "https://helm-charts.itboon.top/grafana" --force-update

helm repo add prometheus-community "https://helm-charts.itboon.top/prometheus-community" --force-update

helm repo add ingress-nginx "https://helm-charts.itboon.top/ingress-nginx" --force-update

helm repo update搜索kafka版本

# helm search repo bitnami/kafka -l

NAME CHART VERSION APP VERSION DESCRIPTION

bitnami2/kafka 31.1.1 3.9.0

bitnami2/kafka 31.0.0 3.9.0

bitnami2/kafka 30.1.8 3.8.1

bitnami2/kafka 30.1.4 3.8.0

bitnami2/kafka 30.0.5 3.8.0

bitnami2/kafka 29.3.13 3.7.1

bitnami2/kafka 29.3.4 3.7.0

bitnami2/kafka 29.2.0 3.7.0

bitnami2/kafka 28.1.1 3.7.0

bitnami2/kafka 28.0.0 3.7.0

bitnami2/kafka 26.11.4 3.6.1

bitnami2/kafka 26.8.3 3.6.1编辑kafka.yaml

image:registry: docker.iorepository: bitnami/kafkatag: 3.9.0-debian-12-r4

listeners:client:protocol: PLAINTEXT #关闭访问认证controller:protocol: PLAINTEXT #关闭访问认证interbroker:protocol: PLAINTEXT #关闭访问认证external:protocol: PLAINTEXT #关闭访问认证

controller:replicaCount: 3 #副本数controllerOnly: false #controller+broker共用模式heapOpts: -Xmx4096m -Xms2048m #KAFKA JVMresources:limits:cpu: 4 memory: 8Girequests:cpu: 500mmemory: 512Miaffinity: #仅部署在master节点,不限制可删除nodeAffinity:requiredDuringSchedulingIgnoredDuringExecution:nodeSelectorTerms:- matchExpressions:- key: node-role.kubernetes.io/masteroperator: Exists- matchExpressions:- key: node-role.kubernetes.io/control-planeoperator: Existstolerations: #仅部署在master节点,不限制可删除- operator: Existseffect: NoSchedule- operator: Existseffect: NoExecutepersistence:storageClass: "local-path" #存储卷类型size: 10Gi #每个pod的存储大小

externalAccess:enabled: true #开启外部访问controller:service:type: NodePort #使用NodePort方式nodePorts:- 30091 #对外端口- 30092 #对外端口- 30093 #对外端口useHostIPs: true #使用宿主机IP

安装部署

helm install kafka bitnami/kafka -f kafka.yaml --dry-runhelm install kafka bitnami-china/kafka -f kafka.yaml内部访问

kafka-controller-headless.default:9092kafka-controller-0.kafka-controller-headless.default:9092

kafka-controller-1.kafka-controller-headless.default:9092

kafka-controller-2.kafka-controller-headless.default:9092外部访问

# node ip +设置的nodeport端口,注意端口对应的节点的ip

192.168.100.110:30091

192.168.100.111:30092

192.168.100.112:30093# 从pod的配置中查找外部访问信息

kubectl exec -it kafka-controller-0 -- cat /opt/bitnami/kafka/config/server.properties | grep advertised.listeners测试验证

# 创建一个podkubectl run kafka-client --restart='Never' --image bitnami/kafka:3.9.0-debian-12-r4 --namespace default --command -- sleep infinity# 进入pod生产消息

kubectl exec --tty -i kafka-client --namespace default -- bash

kafka-console-producer.sh \--broker-list kafka-controller-0.kafka-controller-headless.default.svc.cluster.local:9092,kafka-controller-1.kafka-controller-headless.default.svc.cluster.local:9092,kafka-controller-2.kafka-controller-headless.default.svc.cluster.local:9092 \--topic test# 进入pod消费消息

kubectl exec --tty -i kafka-client --namespace default -- bash

kafka-console-consumer.sh \--bootstrap-server kafka.default.svc.cluster.local:9092 \--topic test \--from-beginning仅供参考

所有yaml文件

---

# Source: kafka/templates/networkpolicy.yaml

kind: NetworkPolicy

apiVersion: networking.k8s.io/v1

metadata:name: kafkanamespace: "default"labels:app.kubernetes.io/instance: kafkaapp.kubernetes.io/managed-by: Helmapp.kubernetes.io/name: kafkaapp.kubernetes.io/version: 3.9.0helm.sh/chart: kafka-31.1.1

spec:podSelector:matchLabels:app.kubernetes.io/instance: kafkaapp.kubernetes.io/name: kafkapolicyTypes:- Ingress- Egressegress:- {}ingress:# Allow client connections- ports:- port: 9092- port: 9094- port: 9093- port: 9095

---

# Source: kafka/templates/broker/pdb.yaml

apiVersion: policy/v1

kind: PodDisruptionBudget

metadata:name: kafka-brokernamespace: "default"labels:app.kubernetes.io/instance: kafkaapp.kubernetes.io/managed-by: Helmapp.kubernetes.io/name: kafkaapp.kubernetes.io/version: 3.9.0helm.sh/chart: kafka-31.1.1app.kubernetes.io/component: brokerapp.kubernetes.io/part-of: kafka

spec:maxUnavailable: 1selector:matchLabels:app.kubernetes.io/instance: kafkaapp.kubernetes.io/name: kafkaapp.kubernetes.io/component: brokerapp.kubernetes.io/part-of: kafka

---

# Source: kafka/templates/controller-eligible/pdb.yaml

apiVersion: policy/v1

kind: PodDisruptionBudget

metadata:name: kafka-controllernamespace: "default"labels:app.kubernetes.io/instance: kafkaapp.kubernetes.io/managed-by: Helmapp.kubernetes.io/name: kafkaapp.kubernetes.io/version: 3.9.0helm.sh/chart: kafka-31.1.1app.kubernetes.io/component: controller-eligibleapp.kubernetes.io/part-of: kafka

spec:maxUnavailable: 1selector:matchLabels:app.kubernetes.io/instance: kafkaapp.kubernetes.io/name: kafkaapp.kubernetes.io/component: controller-eligibleapp.kubernetes.io/part-of: kafka

---

# Source: kafka/templates/provisioning/serviceaccount.yaml

apiVersion: v1

kind: ServiceAccount

metadata:name: kafka-provisioningnamespace: "default"labels:app.kubernetes.io/instance: kafkaapp.kubernetes.io/managed-by: Helmapp.kubernetes.io/name: kafkaapp.kubernetes.io/version: 3.9.0helm.sh/chart: kafka-31.1.1

automountServiceAccountToken: false

---

# Source: kafka/templates/rbac/serviceaccount.yaml

apiVersion: v1

kind: ServiceAccount

metadata:name: kafkanamespace: "default"labels:app.kubernetes.io/instance: kafkaapp.kubernetes.io/managed-by: Helmapp.kubernetes.io/name: kafkaapp.kubernetes.io/version: 3.9.0helm.sh/chart: kafka-31.1.1app.kubernetes.io/component: kafka

automountServiceAccountToken: false

---

# Source: kafka/templates/secrets.yaml

apiVersion: v1

kind: Secret

metadata:name: kafka-kraft-cluster-idnamespace: "default"labels:app.kubernetes.io/instance: kafkaapp.kubernetes.io/managed-by: Helmapp.kubernetes.io/name: kafkaapp.kubernetes.io/version: 3.9.0helm.sh/chart: kafka-31.1.1

type: Opaque

data:kraft-cluster-id: "eDJrTHBicnVhQ1ZIUExEVU5BZVMxUA=="

---

# Source: kafka/templates/controller-eligible/configmap.yaml

apiVersion: v1

kind: ConfigMap

metadata:name: kafka-controller-configurationnamespace: "default"labels:app.kubernetes.io/instance: kafkaapp.kubernetes.io/managed-by: Helmapp.kubernetes.io/name: kafkaapp.kubernetes.io/version: 3.9.0helm.sh/chart: kafka-31.1.1app.kubernetes.io/component: controller-eligibleapp.kubernetes.io/part-of: kafka

data:server.properties: |-# Listeners configurationlisteners=CLIENT://:9092,INTERNAL://:9094,EXTERNAL://:9095,CONTROLLER://:9093advertised.listeners=CLIENT://advertised-address-placeholder:9092,INTERNAL://advertised-address-placeholder:9094listener.security.protocol.map=CLIENT:PLAINTEXT,INTERNAL:PLAINTEXT,CONTROLLER:PLAINTEXT,EXTERNAL:PLAINTEXT# KRaft process rolesprocess.roles=controller,broker#node.id=controller.listener.names=CONTROLLERcontroller.quorum.voters=0@kafka-controller-0.kafka-controller-headless.default.svc.cluster.local:9093,1@kafka-controller-1.kafka-controller-headless.default.svc.cluster.local:9093,2@kafka-controller-2.kafka-controller-headless.default.svc.cluster.local:9093# Kafka data logs directorylog.dir=/bitnami/kafka/data# Kafka application logs directorylogs.dir=/opt/bitnami/kafka/logs# Common Kafka Configuration# Interbroker configurationinter.broker.listener.name=INTERNAL# Custom Kafka Configuration

---

# Source: kafka/templates/scripts-configmap.yaml

apiVersion: v1

kind: ConfigMap

metadata:name: kafka-scriptsnamespace: "default"labels:app.kubernetes.io/instance: kafkaapp.kubernetes.io/managed-by: Helmapp.kubernetes.io/name: kafkaapp.kubernetes.io/version: 3.9.0helm.sh/chart: kafka-31.1.1

data:kafka-init.sh: |-#!/bin/bashset -o errexitset -o nounsetset -o pipefailerror(){local message="${1:?missing message}"echo "ERROR: ${message}"exit 1}retry_while() {local -r cmd="${1:?cmd is missing}"local -r retries="${2:-12}"local -r sleep_time="${3:-5}"local return_value=1read -r -a command <<< "$cmd"for ((i = 1 ; i <= retries ; i+=1 )); do"${command[@]}" && return_value=0 && breaksleep "$sleep_time"donereturn $return_value}replace_in_file() {local filename="${1:?filename is required}"local match_regex="${2:?match regex is required}"local substitute_regex="${3:?substitute regex is required}"local posix_regex=${4:-true}local result# We should avoid using 'sed in-place' substitutions# 1) They are not compatible with files mounted from ConfigMap(s)# 2) We found incompatibility issues with Debian10 and "in-place" substitutionslocal -r del=$'\001' # Use a non-printable character as a 'sed' delimiter to avoid issuesif [[ $posix_regex = true ]]; thenresult="$(sed -E "s${del}${match_regex}${del}${substitute_regex}${del}g" "$filename")"elseresult="$(sed "s${del}${match_regex}${del}${substitute_regex}${del}g" "$filename")"fiecho "$result" > "$filename"}kafka_conf_set() {local file="${1:?missing file}"local key="${2:?missing key}"local value="${3:?missing value}"# Check if the value was set beforeif grep -q "^[#\\s]*$key\s*=.*" "$file"; then# Update the existing keyreplace_in_file "$file" "^[#\\s]*${key}\s*=.*" "${key}=${value}" falseelse# Add a new keyprintf '\n%s=%s' "$key" "$value" >>"$file"fi}replace_placeholder() {local placeholder="${1:?missing placeholder value}"local password="${2:?missing password value}"local -r del=$'\001' # Use a non-printable character as a 'sed' delimiter to avoid issues with delimiter symbols in sed stringsed -i "s${del}$placeholder${del}$password${del}g" "$KAFKA_CONFIG_FILE"}append_file_to_kafka_conf() {local file="${1:?missing source file}"local conf="${2:?missing kafka conf file}"cat "$1" >> "$2"}configure_external_access() {# Configure external hostnameif [[ -f "/shared/external-host.txt" ]]; thenhost=$(cat "/shared/external-host.txt")elif [[ -n "${EXTERNAL_ACCESS_HOST:-}" ]]; thenhost="$EXTERNAL_ACCESS_HOST"elif [[ -n "${EXTERNAL_ACCESS_HOSTS_LIST:-}" ]]; thenread -r -a hosts <<<"$(tr ',' ' ' <<<"${EXTERNAL_ACCESS_HOSTS_LIST}")"host="${hosts[$POD_ID]}"elif [[ "$EXTERNAL_ACCESS_HOST_USE_PUBLIC_IP" =~ ^(yes|true)$ ]]; thenhost=$(curl -s https://ipinfo.io/ip)elseerror "External access hostname not provided"fi# Configure external portif [[ -f "/shared/external-port.txt" ]]; thenport=$(cat "/shared/external-port.txt")elif [[ -n "${EXTERNAL_ACCESS_PORT:-}" ]]; thenif [[ "${EXTERNAL_ACCESS_PORT_AUTOINCREMENT:-}" =~ ^(yes|true)$ ]]; thenport="$((EXTERNAL_ACCESS_PORT + POD_ID))"elseport="$EXTERNAL_ACCESS_PORT"fielif [[ -n "${EXTERNAL_ACCESS_PORTS_LIST:-}" ]]; thenread -r -a ports <<<"$(tr ',' ' ' <<<"${EXTERNAL_ACCESS_PORTS_LIST}")"port="${ports[$POD_ID]}"elseerror "External access port not provided"fi# Configure Kafka advertised listenerssed -i -E "s|^(advertised\.listeners=\S+)$|\1,EXTERNAL://${host}:${port}|" "$KAFKA_CONFIG_FILE"}export KAFKA_CONFIG_FILE=/config/server.propertiescp /configmaps/server.properties $KAFKA_CONFIG_FILE# Get pod ID and role, last and second last fields in the pod name respectivelyPOD_ID=$(echo "$MY_POD_NAME" | rev | cut -d'-' -f 1 | rev)POD_ROLE=$(echo "$MY_POD_NAME" | rev | cut -d'-' -f 2 | rev)# Configure node.id and/or broker.idif [[ -f "/bitnami/kafka/data/meta.properties" ]]; thenif grep -q "broker.id" /bitnami/kafka/data/meta.properties; thenID="$(grep "broker.id" /bitnami/kafka/data/meta.properties | awk -F '=' '{print $2}')"kafka_conf_set "$KAFKA_CONFIG_FILE" "node.id" "$ID"elseID="$(grep "node.id" /bitnami/kafka/data/meta.properties | awk -F '=' '{print $2}')"kafka_conf_set "$KAFKA_CONFIG_FILE" "node.id" "$ID"fielseID=$((POD_ID + KAFKA_MIN_ID))kafka_conf_set "$KAFKA_CONFIG_FILE" "node.id" "$ID"fireplace_placeholder "advertised-address-placeholder" "${MY_POD_NAME}.kafka-${POD_ROLE}-headless.default.svc.cluster.local"if [[ "${EXTERNAL_ACCESS_ENABLED:-false}" =~ ^(yes|true)$ ]]; thenconfigure_external_accessfiif [ -f /secret-config/server-secret.properties ]; thenappend_file_to_kafka_conf /secret-config/server-secret.properties $KAFKA_CONFIG_FILEfi

---

# Source: kafka/templates/controller-eligible/svc-external-access.yaml

apiVersion: v1

kind: Service

metadata:name: kafka-controller-0-externalnamespace: "default"labels:app.kubernetes.io/instance: kafkaapp.kubernetes.io/managed-by: Helmapp.kubernetes.io/name: kafkaapp.kubernetes.io/version: 3.9.0helm.sh/chart: kafka-31.1.1app.kubernetes.io/component: kafkapod: kafka-controller-0

spec:type: NodePortpublishNotReadyAddresses: falseports:- name: tcp-kafkaport: 9094nodePort: 30091targetPort: externalselector:app.kubernetes.io/instance: kafkaapp.kubernetes.io/name: kafkaapp.kubernetes.io/part-of: kafkaapp.kubernetes.io/component: controller-eligiblestatefulset.kubernetes.io/pod-name: kafka-controller-0

---

# Source: kafka/templates/controller-eligible/svc-external-access.yaml

apiVersion: v1

kind: Service

metadata:name: kafka-controller-1-externalnamespace: "default"labels:app.kubernetes.io/instance: kafkaapp.kubernetes.io/managed-by: Helmapp.kubernetes.io/name: kafkaapp.kubernetes.io/version: 3.9.0helm.sh/chart: kafka-31.1.1app.kubernetes.io/component: kafkapod: kafka-controller-1

spec:type: NodePortpublishNotReadyAddresses: falseports:- name: tcp-kafkaport: 9094nodePort: 30092targetPort: externalselector:app.kubernetes.io/instance: kafkaapp.kubernetes.io/name: kafkaapp.kubernetes.io/part-of: kafkaapp.kubernetes.io/component: controller-eligiblestatefulset.kubernetes.io/pod-name: kafka-controller-1

---

# Source: kafka/templates/controller-eligible/svc-external-access.yaml

apiVersion: v1

kind: Service

metadata:name: kafka-controller-2-externalnamespace: "default"labels:app.kubernetes.io/instance: kafkaapp.kubernetes.io/managed-by: Helmapp.kubernetes.io/name: kafkaapp.kubernetes.io/version: 3.9.0helm.sh/chart: kafka-31.1.1app.kubernetes.io/component: kafkapod: kafka-controller-2

spec:type: NodePortpublishNotReadyAddresses: falseports:- name: tcp-kafkaport: 9094nodePort: 30093targetPort: externalselector:app.kubernetes.io/instance: kafkaapp.kubernetes.io/name: kafkaapp.kubernetes.io/part-of: kafkaapp.kubernetes.io/component: controller-eligiblestatefulset.kubernetes.io/pod-name: kafka-controller-2

---

# Source: kafka/templates/controller-eligible/svc-headless.yaml

apiVersion: v1

kind: Service

metadata:name: kafka-controller-headlessnamespace: "default"labels:app.kubernetes.io/instance: kafkaapp.kubernetes.io/managed-by: Helmapp.kubernetes.io/name: kafkaapp.kubernetes.io/version: 3.9.0helm.sh/chart: kafka-31.1.1app.kubernetes.io/component: controller-eligibleapp.kubernetes.io/part-of: kafka

spec:type: ClusterIPclusterIP: NonepublishNotReadyAddresses: trueports:- name: tcp-interbrokerport: 9094protocol: TCPtargetPort: interbroker- name: tcp-clientport: 9092protocol: TCPtargetPort: client- name: tcp-controllerprotocol: TCPport: 9093targetPort: controllerselector:app.kubernetes.io/instance: kafkaapp.kubernetes.io/name: kafkaapp.kubernetes.io/component: controller-eligibleapp.kubernetes.io/part-of: kafka

---

# Source: kafka/templates/svc.yaml

apiVersion: v1

kind: Service

metadata:name: kafkanamespace: "default"labels:app.kubernetes.io/instance: kafkaapp.kubernetes.io/managed-by: Helmapp.kubernetes.io/name: kafkaapp.kubernetes.io/version: 3.9.0helm.sh/chart: kafka-31.1.1app.kubernetes.io/component: kafka

spec:type: ClusterIPsessionAffinity: Noneports:- name: tcp-clientport: 9092protocol: TCPtargetPort: clientnodePort: null- name: tcp-externalport: 9095protocol: TCPtargetPort: externalselector:app.kubernetes.io/instance: kafkaapp.kubernetes.io/name: kafkaapp.kubernetes.io/part-of: kafka

---

# Source: kafka/templates/controller-eligible/statefulset.yaml

apiVersion: apps/v1

kind: StatefulSet

metadata:name: kafka-controllernamespace: "default"labels:app.kubernetes.io/instance: kafkaapp.kubernetes.io/managed-by: Helmapp.kubernetes.io/name: kafkaapp.kubernetes.io/version: 3.9.0helm.sh/chart: kafka-31.1.1app.kubernetes.io/component: controller-eligibleapp.kubernetes.io/part-of: kafka

spec:podManagementPolicy: Parallelreplicas: 3selector:matchLabels:app.kubernetes.io/instance: kafkaapp.kubernetes.io/name: kafkaapp.kubernetes.io/component: controller-eligibleapp.kubernetes.io/part-of: kafkaserviceName: kafka-controller-headlessupdateStrategy:type: RollingUpdatetemplate:metadata:labels:app.kubernetes.io/instance: kafkaapp.kubernetes.io/managed-by: Helmapp.kubernetes.io/name: kafkaapp.kubernetes.io/version: 3.9.0helm.sh/chart: kafka-31.1.1app.kubernetes.io/component: controller-eligibleapp.kubernetes.io/part-of: kafkaannotations:checksum/configuration: 84a30ef8698d80825ae7ffe45fae93a0d18c8861e2dfc64b4b809aa92065dfffspec:automountServiceAccountToken: falsehostNetwork: falsehostIPC: falseaffinity:podAffinity:podAntiAffinity:preferredDuringSchedulingIgnoredDuringExecution:- podAffinityTerm:labelSelector:matchLabels:app.kubernetes.io/instance: kafkaapp.kubernetes.io/name: kafkaapp.kubernetes.io/component: controller-eligibletopologyKey: kubernetes.io/hostnameweight: 1nodeAffinity:securityContext:fsGroup: 1001fsGroupChangePolicy: AlwaysseccompProfile:type: RuntimeDefaultsupplementalGroups: []sysctls: []serviceAccountName: kafkaenableServiceLinks: trueinitContainers:- name: kafka-initimage: docker.io/bitnami/kafka:3.9.0-debian-12-r4imagePullPolicy: IfNotPresentsecurityContext:allowPrivilegeEscalation: falsecapabilities:drop:- ALLreadOnlyRootFilesystem: truerunAsGroup: 1001runAsNonRoot: truerunAsUser: 1001seLinuxOptions: {}resources:limits: {}requests: {} command:- /bin/bashargs:- -ec- |/scripts/kafka-init.shenv:- name: BITNAMI_DEBUGvalue: "false"- name: MY_POD_NAMEvalueFrom:fieldRef:fieldPath: metadata.name- name: KAFKA_VOLUME_DIRvalue: "/bitnami/kafka"- name: KAFKA_MIN_IDvalue: "0"- name: EXTERNAL_ACCESS_ENABLEDvalue: "true"- name: HOST_IPvalueFrom:fieldRef:fieldPath: status.hostIP- name: EXTERNAL_ACCESS_HOSTvalue: "$(HOST_IP)"- name: EXTERNAL_ACCESS_PORTS_LISTvalue: "30091,30092,30093"volumeMounts:- name: datamountPath: /bitnami/kafka- name: kafka-configmountPath: /config- name: kafka-configmapsmountPath: /configmaps- name: kafka-secret-configmountPath: /secret-config- name: scriptsmountPath: /scripts- name: tmpmountPath: /tmpcontainers:- name: kafkaimage: docker.io/bitnami/kafka:3.9.0-debian-12-r4imagePullPolicy: "IfNotPresent"securityContext:allowPrivilegeEscalation: falsecapabilities:drop:- ALLreadOnlyRootFilesystem: truerunAsGroup: 1001runAsNonRoot: truerunAsUser: 1001seLinuxOptions: {}env:- name: BITNAMI_DEBUGvalue: "false"- name: KAFKA_HEAP_OPTSvalue: "-Xmx4096m -Xms2048m"- name: KAFKA_KRAFT_CLUSTER_IDvalueFrom:secretKeyRef:name: kafka-kraft-cluster-idkey: kraft-cluster-idports:- name: controllercontainerPort: 9093- name: clientcontainerPort: 9092- name: interbrokercontainerPort: 9094- name: externalcontainerPort: 9095livenessProbe:failureThreshold: 3initialDelaySeconds: 10periodSeconds: 10successThreshold: 1timeoutSeconds: 5exec:command:- pgrep- -f- kafkareadinessProbe:failureThreshold: 6initialDelaySeconds: 5periodSeconds: 10successThreshold: 1timeoutSeconds: 5tcpSocket:port: "controller"resources:limits:cpu: 4memory: 8Girequests:cpu: 500mmemory: 512MivolumeMounts:- name: datamountPath: /bitnami/kafka- name: logsmountPath: /opt/bitnami/kafka/logs- name: kafka-configmountPath: /opt/bitnami/kafka/config/server.propertiessubPath: server.properties- name: tmpmountPath: /tmpvolumes:- name: kafka-configmapsconfigMap:name: kafka-controller-configuration- name: kafka-secret-configemptyDir: {}- name: kafka-configemptyDir: {}- name: tmpemptyDir: {}- name: scriptsconfigMap:name: kafka-scriptsdefaultMode: 493- name: logsemptyDir: {}volumeClaimTemplates:- apiVersion: v1kind: PersistentVolumeClaimmetadata:name: dataspec:accessModes:- "ReadWriteOnce"resources:requests:storage: "10Gi"storageClassName: "local-path"

相关文章:

——筑梦之路)

k8s helm部署kafka集群(KRaft模式)——筑梦之路

添加helm仓库 helm repo add bitnami "https://helm-charts.itboon.top/bitnami" --force-update helm repo add grafana "https://helm-charts.itboon.top/grafana" --force-update helm repo add prometheus-community "https://helm-charts.itboo…...

unity action委托举例

using System; using UnityEngine; public class DelegateExample : MonoBehaviour { void Start() { // 创建委托实例并添加方法 Action myAction Method1; myAction Method2; myAction Method3; // 调用委托,会依次执…...

conda 批量安装requirements.txt文件

conda 批量安装requirements.txt文件中包含的组件依赖 conda install --yes --file requirements.txt #这种执行方式,一遇到安装不上就整体停止不会继续下面的包安装。 下面这条命令能解决上面出现的不执行后续包的问题,需要在CMD窗口执行: 点…...

Flutter:封装一个自用的bottom_picker选择器

效果图:单列选择器 使用bottom_picker: ^2.9.0实现,单列选择器,官方文档 pubspec.yaml # 底部选择 bottom_picker: ^2.9.0picker_utils.dart AppTheme:自定义的颜色 TextWidget.body Text() <Widget>[].toRow Row()下边代…...

Group3r:一款针对活动目录组策略安全的漏洞检测工具

关于Group3r Group3r是一款针对活动目录组策略安全的漏洞检测工具,可以帮助广大安全研究人员迅速枚举目标AD组策略中的相关配置,并识别其中的潜在安全威胁。 Group3r专为红蓝队研究人员和渗透测试人员设计,该工具可以通过将 LDAP 与域控制器…...

支持向量机算法(一):像讲故事一样讲明白它的原理及实现奥秘

1、支持向量机算法介绍 支持向量机(Support Vector Machine,SVM)是一种基于统计学习理论的模式识别方法, 属于有监督学习模型,主要用于解决数据分类问题。SVM将每个样本数据表示为空间中的点,使不同类别的…...

力扣-数组-35 搜索插入位置

解析 时间复杂度要求,所以使用二分的思想,漏掉了很多问题,这里记录 在left-right1时,已经找到了插入位置,但是没有赋值,然后break,所以导致一直死循环。 if(right - left 1){result right;b…...

List ---- 模拟实现LIST功能的发现

目录 listlist概念 list 中的迭代器list迭代器知识const迭代器写法list访问自定义类型 附录代码 list list概念 list是可以在常数范围内在任意位置进行插入和删除的序列式容器,并且该容器可以前后双向迭代。list的底层是双向链表结构,双向链表中每个元素…...

HashMap和HashTable区别问题

并发:hashMap线程不安全,hashTable线程安全,底层在put操作的方法上加了synchronized 初始化:hashTable初始容量为11,hashmap初始容量为16 阔容因子:阔容因子都是0.75 扩容比例: 补充 hashMap…...

mysql -> 达梦数据迁移(mbp大小写问题兼容)

安装 注意后面初始化需要忽略大小写 初始化程序启动路径 F:\dmdbms\tool dbca.exe 创建表空间,用户,模式 管理工具启动路径 F:\dmdbms\tool manager.exe 创建表空间 创建用户 创建同名模式,指定模式拥有者TEST dts 工具数据迁移 mysql -&g…...

leetcode热门100题1-4

第一天 两数之和 //暴力枚举 class Solution { public:vector<int> twoSum(vector<int>& nums, int target) {int n nums.size();for (int i 0; i < n; i) {for (int j i 1; j < n; j) {if (nums[i] nums[j] target) {return {i, j};}}}return {…...

作业:IO:day2

题目一 第一步:创建一个 struct Student 类型的数组 arr[3],初始化该数组中3个学生的属性 第二步:编写一个叫做save的函数,功能为 将数组arr中的3个学生的所有信息,保存到文件中去,使用fread实现fwrite 第三步…...

UVM: TLM机制

topic overview 不建议的方法:假如没有TLM TLM TLM 1.0 整个TLM机制下,底层逻辑离不开动作发起者和被动接受者这个底层的模型基础,但实际上,在验证环境中,任何一个组件,都有可能成为动作的发起者࿰…...

flink的EventTime和Watermark

时间机制 Flink中的时间机制主要用在判断是否触发时间窗口window的计算。 在Flink中有三种时间概念:ProcessTime、IngestionTime、EventTime。 ProcessTime:是在数据抵达算子产生的时间(Flink默认使用ProcessTime) IngestionT…...

arcgis的合并、相交、融合、裁剪、联合、标识操作的区别和使用

1、相交 需要输入两个面要素,最终得到的是两个输入面要素相交部分的结果面要素。 2、合并 合并能将两个单独存放的两个要素类的内容,汇集到一个要素类里面。 3、融合 融合能将一个要素类内的所有元素融合成一个整体。 4、裁剪 裁剪需要输入两个面要…...

【Leetcode 热题 100】20. 有效的括号

问题背景 给定一个只包括 ‘(’,‘)’,‘{’,‘}’,‘[’,‘]’ 的字符串 s s s,判断字符串是否有效。 有效字符串需满足: 左括号必须用相同类型的右括号闭合。左括号必须以正确的顺序闭合。每…...

比较procfs 、 sysctl和Netlink

procfs 文件系统和 sysctl 的使用: procfs 文件系统(/proc) procfs 文件系统是 Linux 内核向用户空间暴露内核数据结构以及配置信息的一种方式。`procfs` 的挂载点是 /proc 目录,这个目录中的文件和目录呈现内核的运行状况和配置信息。通过读写这些文件,可以查看和控制内…...

Leetcode 3413. Maximum Coins From K Consecutive Bags

Leetcode 3413. Maximum Coins From K Consecutive Bags 1. 解题思路2. 代码实现 题目链接:3413. Maximum Coins From K Consecutive Bags 1. 解题思路 这一题的话思路上整体上就是一个遍历,显然,要获得最大的coin,其选取的范围…...

MakeFile使用指南

文章目录 1. MakeFile 的作用2. 背景知识说明2.1 程序的编译与链接2.2 常见代码的文档结构 3. MakeFile 的内容4. Makefile的基本语法5. 变量定义5.1 一般变量赋值语法5.2 自动化变量 6. 通配符 参考: Makefile教程:Makefile文件编写1天入门 Makefile由浅…...

矩阵碰一碰发视频的视频剪辑功能源码搭建,支持OEM

在短视频创作与传播领域,矩阵碰一碰发视频结合视频剪辑功能,为用户带来了高效且富有创意的内容产出方式。这一功能允许用户通过碰一碰 NFC 设备触发视频分享,并在分享前对视频进行个性化剪辑。以下将详细阐述该功能的源码搭建过程。 一、技术…...

手游刚开服就被攻击怎么办?如何防御DDoS?

开服初期是手游最脆弱的阶段,极易成为DDoS攻击的目标。一旦遭遇攻击,可能导致服务器瘫痪、玩家流失,甚至造成巨大经济损失。本文为开发者提供一套简洁有效的应急与防御方案,帮助快速应对并构建长期防护体系。 一、遭遇攻击的紧急应…...

日语AI面试高效通关秘籍:专业解读与青柚面试智能助攻

在如今就业市场竞争日益激烈的背景下,越来越多的求职者将目光投向了日本及中日双语岗位。但是,一场日语面试往往让许多人感到步履维艰。你是否也曾因为面试官抛出的“刁钻问题”而心生畏惧?面对生疏的日语交流环境,即便提前恶补了…...

Linux链表操作全解析

Linux C语言链表深度解析与实战技巧 一、链表基础概念与内核链表优势1.1 为什么使用链表?1.2 Linux 内核链表与用户态链表的区别 二、内核链表结构与宏解析常用宏/函数 三、内核链表的优点四、用户态链表示例五、双向循环链表在内核中的实现优势5.1 插入效率5.2 安全…...

反向工程与模型迁移:打造未来商品详情API的可持续创新体系

在电商行业蓬勃发展的当下,商品详情API作为连接电商平台与开发者、商家及用户的关键纽带,其重要性日益凸显。传统商品详情API主要聚焦于商品基本信息(如名称、价格、库存等)的获取与展示,已难以满足市场对个性化、智能…...

【Java学习笔记】Arrays类

Arrays 类 1. 导入包:import java.util.Arrays 2. 常用方法一览表 方法描述Arrays.toString()返回数组的字符串形式Arrays.sort()排序(自然排序和定制排序)Arrays.binarySearch()通过二分搜索法进行查找(前提:数组是…...

Java如何权衡是使用无序的数组还是有序的数组

在 Java 中,选择有序数组还是无序数组取决于具体场景的性能需求与操作特点。以下是关键权衡因素及决策指南: ⚖️ 核心权衡维度 维度有序数组无序数组查询性能二分查找 O(log n) ✅线性扫描 O(n) ❌插入/删除需移位维护顺序 O(n) ❌直接操作尾部 O(1) ✅内存开销与无序数组相…...

生成 Git SSH 证书

🔑 1. 生成 SSH 密钥对 在终端(Windows 使用 Git Bash,Mac/Linux 使用 Terminal)执行命令: ssh-keygen -t rsa -b 4096 -C "your_emailexample.com" 参数说明: -t rsa&#x…...

SpringCloudGateway 自定义局部过滤器

场景: 将所有请求转化为同一路径请求(方便穿网配置)在请求头内标识原来路径,然后在将请求分发给不同服务 AllToOneGatewayFilterFactory import lombok.Getter; import lombok.Setter; import lombok.extern.slf4j.Slf4j; impor…...

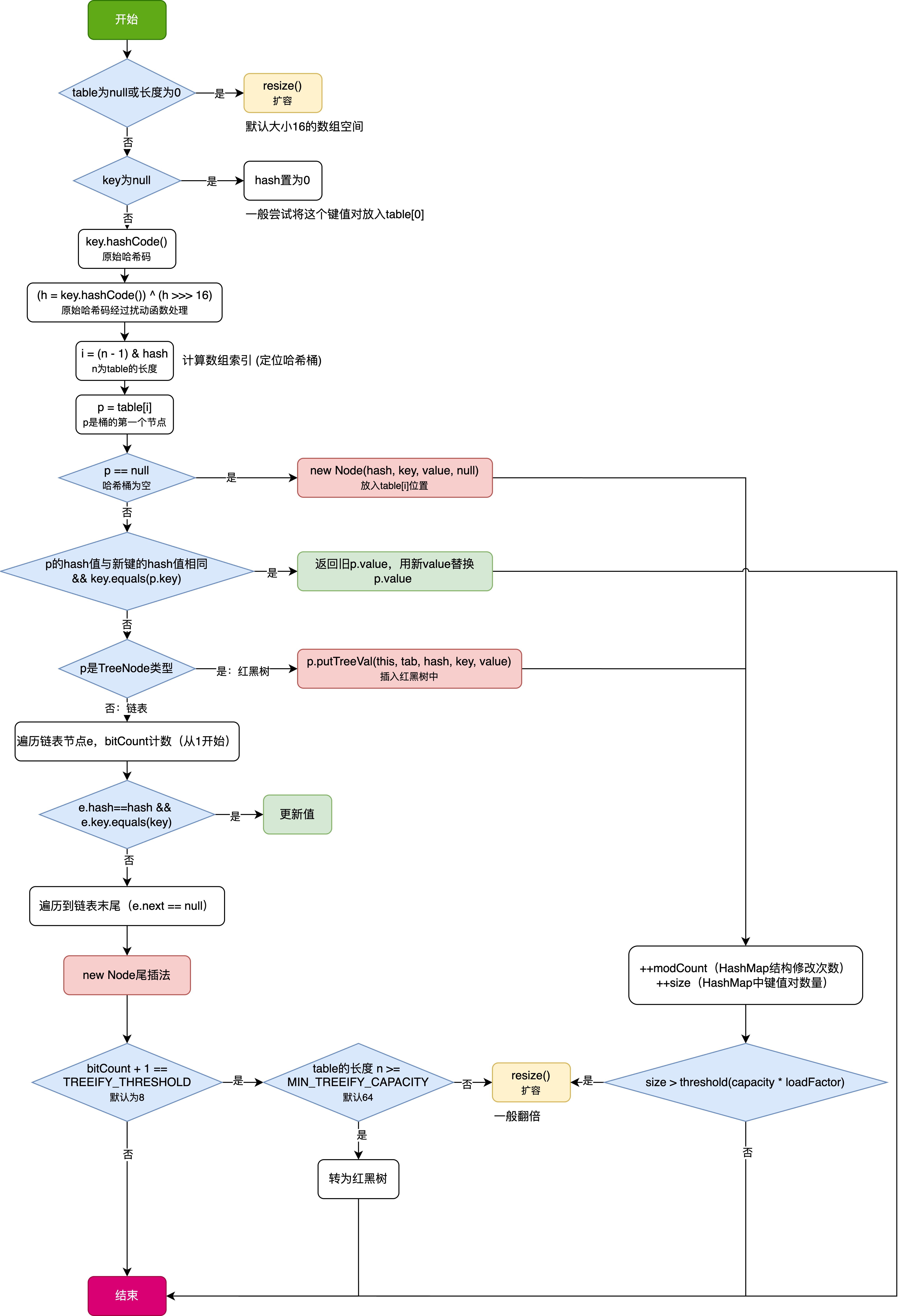

HashMap中的put方法执行流程(流程图)

1 put操作整体流程 HashMap 的 put 操作是其最核心的功能之一。在 JDK 1.8 及以后版本中,其主要逻辑封装在 putVal 这个内部方法中。整个过程大致如下: 初始判断与哈希计算: 首先,putVal 方法会检查当前的 table(也就…...

)

C#学习第29天:表达式树(Expression Trees)

目录 什么是表达式树? 核心概念 1.表达式树的构建 2. 表达式树与Lambda表达式 3.解析和访问表达式树 4.动态条件查询 表达式树的优势 1.动态构建查询 2.LINQ 提供程序支持: 3.性能优化 4.元数据处理 5.代码转换和重写 适用场景 代码复杂性…...