llama.cpp LLM_CHAT_TEMPLATE_DEEPSEEK_3

llama.cpp LLM_CHAT_TEMPLATE_DEEPSEEK_3

- 1. `LLAMA_VOCAB_PRE_TYPE_DEEPSEEK3_LLM`

- 2. `static const std::map<std::string, llm_chat_template> LLM_CHAT_TEMPLATES`

- 3. `LLM_CHAT_TEMPLATE_DEEPSEEK_3`

- References

不宜吹捧中国大语言模型的同时,又去贬低美国大语言模型。

水是人体的主要化学成分,约占体重的 50% 至 70%,大语言模型的含水量也不会太低。

科技发展靠的是硬实力,而不是情怀和口号。

llama.cpp

https://github.com/ggerganov/llama.cpp

1. LLAMA_VOCAB_PRE_TYPE_DEEPSEEK3_LLM

LLAMA_VOCAB_PRE_TYPE_DEEPSEEK3_LLM,LLAMA_VOCAB_PRE_TYPE_DEEPSEEK_LLMandLLAMA_VOCAB_PRE_TYPE_DEEPSEEK_CODER

/home/yongqiang/llm_work/llama_cpp_25_01_05/llama.cpp/include/llama.h

enum llama_vocab_type {LLAMA_VOCAB_TYPE_NONE = 0, // For models without vocabLLAMA_VOCAB_TYPE_SPM = 1, // LLaMA tokenizer based on byte-level BPE with byte fallbackLLAMA_VOCAB_TYPE_BPE = 2, // GPT-2 tokenizer based on byte-level BPELLAMA_VOCAB_TYPE_WPM = 3, // BERT tokenizer based on WordPieceLLAMA_VOCAB_TYPE_UGM = 4, // T5 tokenizer based on UnigramLLAMA_VOCAB_TYPE_RWKV = 5, // RWKV tokenizer based on greedy tokenization};// pre-tokenization typesenum llama_vocab_pre_type {LLAMA_VOCAB_PRE_TYPE_DEFAULT = 0,LLAMA_VOCAB_PRE_TYPE_LLAMA3 = 1,LLAMA_VOCAB_PRE_TYPE_DEEPSEEK_LLM = 2,LLAMA_VOCAB_PRE_TYPE_DEEPSEEK_CODER = 3,LLAMA_VOCAB_PRE_TYPE_FALCON = 4,LLAMA_VOCAB_PRE_TYPE_MPT = 5,LLAMA_VOCAB_PRE_TYPE_STARCODER = 6,LLAMA_VOCAB_PRE_TYPE_GPT2 = 7,LLAMA_VOCAB_PRE_TYPE_REFACT = 8,LLAMA_VOCAB_PRE_TYPE_COMMAND_R = 9,LLAMA_VOCAB_PRE_TYPE_STABLELM2 = 10,LLAMA_VOCAB_PRE_TYPE_QWEN2 = 11,LLAMA_VOCAB_PRE_TYPE_OLMO = 12,LLAMA_VOCAB_PRE_TYPE_DBRX = 13,LLAMA_VOCAB_PRE_TYPE_SMAUG = 14,LLAMA_VOCAB_PRE_TYPE_PORO = 15,LLAMA_VOCAB_PRE_TYPE_CHATGLM3 = 16,LLAMA_VOCAB_PRE_TYPE_CHATGLM4 = 17,LLAMA_VOCAB_PRE_TYPE_VIKING = 18,LLAMA_VOCAB_PRE_TYPE_JAIS = 19,LLAMA_VOCAB_PRE_TYPE_TEKKEN = 20,LLAMA_VOCAB_PRE_TYPE_SMOLLM = 21,LLAMA_VOCAB_PRE_TYPE_CODESHELL = 22,LLAMA_VOCAB_PRE_TYPE_BLOOM = 23,LLAMA_VOCAB_PRE_TYPE_GPT3_FINNISH = 24,LLAMA_VOCAB_PRE_TYPE_EXAONE = 25,LLAMA_VOCAB_PRE_TYPE_CHAMELEON = 26,LLAMA_VOCAB_PRE_TYPE_MINERVA = 27,LLAMA_VOCAB_PRE_TYPE_DEEPSEEK3_LLM = 28,};

/home/yongqiang/llm_work/llama_cpp_25_01_05/llama.cpp/src/llama-hparams.h

// bump if necessary

#define LLAMA_MAX_LAYERS 512

#define LLAMA_MAX_EXPERTS 256 // DeepSeekV3enum llama_expert_gating_func_type {LLAMA_EXPERT_GATING_FUNC_TYPE_NONE = 0,LLAMA_EXPERT_GATING_FUNC_TYPE_SOFTMAX = 1,LLAMA_EXPERT_GATING_FUNC_TYPE_SIGMOID = 2,

};

2. static const std::map<std::string, llm_chat_template> LLM_CHAT_TEMPLATES

LLM_CHAT_TEMPLATE_DEEPSEEK_3,LLM_CHAT_TEMPLATE_DEEPSEEK_2andLLM_CHAT_TEMPLATE_DEEPSEEK

/home/yongqiang/llm_work/llama_cpp_25_01_05/llama.cpp/src/llama-chat.h

enum llm_chat_template {LLM_CHAT_TEMPLATE_CHATML,LLM_CHAT_TEMPLATE_LLAMA_2,LLM_CHAT_TEMPLATE_LLAMA_2_SYS,LLM_CHAT_TEMPLATE_LLAMA_2_SYS_BOS,LLM_CHAT_TEMPLATE_LLAMA_2_SYS_STRIP,LLM_CHAT_TEMPLATE_MISTRAL_V1,LLM_CHAT_TEMPLATE_MISTRAL_V3,LLM_CHAT_TEMPLATE_MISTRAL_V3_TEKKEN,LLM_CHAT_TEMPLATE_MISTRAL_V7,LLM_CHAT_TEMPLATE_PHI_3,LLM_CHAT_TEMPLATE_PHI_4,LLM_CHAT_TEMPLATE_FALCON_3,LLM_CHAT_TEMPLATE_ZEPHYR,LLM_CHAT_TEMPLATE_MONARCH,LLM_CHAT_TEMPLATE_GEMMA,LLM_CHAT_TEMPLATE_ORION,LLM_CHAT_TEMPLATE_OPENCHAT,LLM_CHAT_TEMPLATE_VICUNA,LLM_CHAT_TEMPLATE_VICUNA_ORCA,LLM_CHAT_TEMPLATE_DEEPSEEK,LLM_CHAT_TEMPLATE_DEEPSEEK_2,LLM_CHAT_TEMPLATE_DEEPSEEK_3,LLM_CHAT_TEMPLATE_COMMAND_R,LLM_CHAT_TEMPLATE_LLAMA_3,LLM_CHAT_TEMPLATE_CHATGML_3,LLM_CHAT_TEMPLATE_CHATGML_4,LLM_CHAT_TEMPLATE_MINICPM,LLM_CHAT_TEMPLATE_EXAONE_3,LLM_CHAT_TEMPLATE_RWKV_WORLD,LLM_CHAT_TEMPLATE_GRANITE,LLM_CHAT_TEMPLATE_GIGACHAT,LLM_CHAT_TEMPLATE_MEGREZ,LLM_CHAT_TEMPLATE_UNKNOWN,

};

{ "deepseek3", LLM_CHAT_TEMPLATE_DEEPSEEK_3 },{ "deepseek2", LLM_CHAT_TEMPLATE_DEEPSEEK_2 }and{ "deepseek", LLM_CHAT_TEMPLATE_DEEPSEEK }

/home/yongqiang/llm_work/llama_cpp_25_01_05/llama.cpp/src/llama-chat.cpp

static const std::map<std::string, llm_chat_template> LLM_CHAT_TEMPLATES = {{ "chatml", LLM_CHAT_TEMPLATE_CHATML },{ "llama2", LLM_CHAT_TEMPLATE_LLAMA_2 },{ "llama2-sys", LLM_CHAT_TEMPLATE_LLAMA_2_SYS },{ "llama2-sys-bos", LLM_CHAT_TEMPLATE_LLAMA_2_SYS_BOS },{ "llama2-sys-strip", LLM_CHAT_TEMPLATE_LLAMA_2_SYS_STRIP },{ "mistral-v1", LLM_CHAT_TEMPLATE_MISTRAL_V1 },{ "mistral-v3", LLM_CHAT_TEMPLATE_MISTRAL_V3 },{ "mistral-v3-tekken", LLM_CHAT_TEMPLATE_MISTRAL_V3_TEKKEN },{ "mistral-v7", LLM_CHAT_TEMPLATE_MISTRAL_V7 },{ "phi3", LLM_CHAT_TEMPLATE_PHI_3 },{ "phi4", LLM_CHAT_TEMPLATE_PHI_4 },{ "falcon3", LLM_CHAT_TEMPLATE_FALCON_3 },{ "zephyr", LLM_CHAT_TEMPLATE_ZEPHYR },{ "monarch", LLM_CHAT_TEMPLATE_MONARCH },{ "gemma", LLM_CHAT_TEMPLATE_GEMMA },{ "orion", LLM_CHAT_TEMPLATE_ORION },{ "openchat", LLM_CHAT_TEMPLATE_OPENCHAT },{ "vicuna", LLM_CHAT_TEMPLATE_VICUNA },{ "vicuna-orca", LLM_CHAT_TEMPLATE_VICUNA_ORCA },{ "deepseek", LLM_CHAT_TEMPLATE_DEEPSEEK },{ "deepseek2", LLM_CHAT_TEMPLATE_DEEPSEEK_2 },{ "deepseek3", LLM_CHAT_TEMPLATE_DEEPSEEK_3 },{ "command-r", LLM_CHAT_TEMPLATE_COMMAND_R },{ "llama3", LLM_CHAT_TEMPLATE_LLAMA_3 },{ "chatglm3", LLM_CHAT_TEMPLATE_CHATGML_3 },{ "chatglm4", LLM_CHAT_TEMPLATE_CHATGML_4 },{ "minicpm", LLM_CHAT_TEMPLATE_MINICPM },{ "exaone3", LLM_CHAT_TEMPLATE_EXAONE_3 },{ "rwkv-world", LLM_CHAT_TEMPLATE_RWKV_WORLD },{ "granite", LLM_CHAT_TEMPLATE_GRANITE },{ "gigachat", LLM_CHAT_TEMPLATE_GIGACHAT },{ "megrez", LLM_CHAT_TEMPLATE_MEGREZ },

};

3. LLM_CHAT_TEMPLATE_DEEPSEEK_3

LLM_CHAT_TEMPLATE_DEEPSEEK_3,LLM_CHAT_TEMPLATE_DEEPSEEK_2andLLM_CHAT_TEMPLATE_DEEPSEEK

/home/yongqiang/llm_work/llama_cpp_25_01_05/llama.cpp/src/llama-chat.cpp

// Simple version of "llama_apply_chat_template" that only works with strings

// This function uses heuristic checks to determine commonly used template. It is not a jinja parser.

int32_t llm_chat_apply_template(llm_chat_template tmpl,const std::vector<const llama_chat_message *> & chat,std::string & dest, bool add_ass) {// Taken from the research: https://github.com/ggerganov/llama.cpp/issues/5527std::stringstream ss;if (tmpl == LLM_CHAT_TEMPLATE_CHATML) {// chatml templatefor (auto message : chat) {ss << "<|im_start|>" << message->role << "\n" << message->content << "<|im_end|>\n";}if (add_ass) {ss << "<|im_start|>assistant\n";}} else if (tmpl == LLM_CHAT_TEMPLATE_MISTRAL_V7) {// Official mistral 'v7' template// See: https://huggingface.co/mistralai/Mistral-Large-Instruct-2411#basic-instruct-template-v7for (auto message : chat) {std::string role(message->role);std::string content(message->content);if (role == "system") {ss << "[SYSTEM_PROMPT] " << content << "[/SYSTEM_PROMPT]";} else if (role == "user") {ss << "[INST] " << content << "[/INST]";}else {ss << " " << content << "</s>";}}} else if (tmpl == LLM_CHAT_TEMPLATE_MISTRAL_V1|| tmpl == LLM_CHAT_TEMPLATE_MISTRAL_V3|| tmpl == LLM_CHAT_TEMPLATE_MISTRAL_V3_TEKKEN) {// See: https://github.com/mistralai/cookbook/blob/main/concept-deep-dive/tokenization/chat_templates.md// See: https://github.com/mistralai/cookbook/blob/main/concept-deep-dive/tokenization/templates.mdstd::string leading_space = tmpl == LLM_CHAT_TEMPLATE_MISTRAL_V1 ? " " : "";std::string trailing_space = tmpl == LLM_CHAT_TEMPLATE_MISTRAL_V3_TEKKEN ? "" : " ";bool trim_assistant_message = tmpl == LLM_CHAT_TEMPLATE_MISTRAL_V3;bool is_inside_turn = false;for (auto message : chat) {if (!is_inside_turn) {ss << leading_space << "[INST]" << trailing_space;is_inside_turn = true;}std::string role(message->role);std::string content(message->content);if (role == "system") {ss << content << "\n\n";} else if (role == "user") {ss << content << leading_space << "[/INST]";} else {ss << trailing_space << (trim_assistant_message ? trim(content) : content) << "</s>";is_inside_turn = false;}}} else if (tmpl == LLM_CHAT_TEMPLATE_LLAMA_2|| tmpl == LLM_CHAT_TEMPLATE_LLAMA_2_SYS|| tmpl == LLM_CHAT_TEMPLATE_LLAMA_2_SYS_BOS|| tmpl == LLM_CHAT_TEMPLATE_LLAMA_2_SYS_STRIP) {// llama2 template and its variants// [variant] support system message// See: https://huggingface.co/blog/llama2#how-to-prompt-llama-2bool support_system_message = tmpl != LLM_CHAT_TEMPLATE_LLAMA_2;// [variant] add BOS inside historybool add_bos_inside_history = tmpl == LLM_CHAT_TEMPLATE_LLAMA_2_SYS_BOS;// [variant] trim spaces from the input messagebool strip_message = tmpl == LLM_CHAT_TEMPLATE_LLAMA_2_SYS_STRIP;// construct the promptbool is_inside_turn = true; // skip BOS at the beginningss << "[INST] ";for (auto message : chat) {std::string content = strip_message ? trim(message->content) : message->content;std::string role(message->role);if (!is_inside_turn) {is_inside_turn = true;ss << (add_bos_inside_history ? "<s>[INST] " : "[INST] ");}if (role == "system") {if (support_system_message) {ss << "<<SYS>>\n" << content << "\n<</SYS>>\n\n";} else {// if the model does not support system message, we still include it in the first message, but without <<SYS>>ss << content << "\n";}} else if (role == "user") {ss << content << " [/INST]";} else {ss << content << "</s>";is_inside_turn = false;}}} else if (tmpl == LLM_CHAT_TEMPLATE_PHI_3) {// Phi 3for (auto message : chat) {std::string role(message->role);ss << "<|" << role << "|>\n" << message->content << "<|end|>\n";}if (add_ass) {ss << "<|assistant|>\n";}} else if (tmpl == LLM_CHAT_TEMPLATE_PHI_4) {// chatml templatefor (auto message : chat) {ss << "<|im_start|>" << message->role << "<|im_sep|>" << message->content << "<|im_end|>";}if (add_ass) {ss << "<|im_start|>assistant<|im_sep|>";}} else if (tmpl == LLM_CHAT_TEMPLATE_FALCON_3) {// Falcon 3for (auto message : chat) {std::string role(message->role);ss << "<|" << role << "|>\n" << message->content << "\n";}if (add_ass) {ss << "<|assistant|>\n";}} else if (tmpl == LLM_CHAT_TEMPLATE_ZEPHYR) {// zephyr templatefor (auto message : chat) {ss << "<|" << message->role << "|>" << "\n" << message->content << "<|endoftext|>\n";}if (add_ass) {ss << "<|assistant|>\n";}} else if (tmpl == LLM_CHAT_TEMPLATE_MONARCH) {// mlabonne/AlphaMonarch-7B template (the <s> is included inside history)for (auto message : chat) {std::string bos = (message == chat.front()) ? "" : "<s>"; // skip BOS for first messagess << bos << message->role << "\n" << message->content << "</s>\n";}if (add_ass) {ss << "<s>assistant\n";}} else if (tmpl == LLM_CHAT_TEMPLATE_GEMMA) {// google/gemma-7b-itstd::string system_prompt = "";for (auto message : chat) {std::string role(message->role);if (role == "system") {// there is no system message for gemma, but we will merge it with user prompt, so nothing is brokensystem_prompt = trim(message->content);continue;}// in gemma, "assistant" is "model"role = role == "assistant" ? "model" : message->role;ss << "<start_of_turn>" << role << "\n";if (!system_prompt.empty() && role != "model") {ss << system_prompt << "\n\n";system_prompt = "";}ss << trim(message->content) << "<end_of_turn>\n";}if (add_ass) {ss << "<start_of_turn>model\n";}} else if (tmpl == LLM_CHAT_TEMPLATE_ORION) {// OrionStarAI/Orion-14B-Chatstd::string system_prompt = "";for (auto message : chat) {std::string role(message->role);if (role == "system") {// there is no system message support, we will merge it with user promptsystem_prompt = message->content;continue;} else if (role == "user") {ss << "Human: ";if (!system_prompt.empty()) {ss << system_prompt << "\n\n";system_prompt = "";}ss << message->content << "\n\nAssistant: </s>";} else {ss << message->content << "</s>";}}} else if (tmpl == LLM_CHAT_TEMPLATE_OPENCHAT) {// openchat/openchat-3.5-0106,for (auto message : chat) {std::string role(message->role);if (role == "system") {ss << message->content << "<|end_of_turn|>";} else {role[0] = toupper(role[0]);ss << "GPT4 Correct " << role << ": " << message->content << "<|end_of_turn|>";}}if (add_ass) {ss << "GPT4 Correct Assistant:";}} else if (tmpl == LLM_CHAT_TEMPLATE_VICUNA || tmpl == LLM_CHAT_TEMPLATE_VICUNA_ORCA) {// eachadea/vicuna-13b-1.1 (and Orca variant)for (auto message : chat) {std::string role(message->role);if (role == "system") {// Orca-Vicuna variant uses a system prefixif (tmpl == LLM_CHAT_TEMPLATE_VICUNA_ORCA) {ss << "SYSTEM: " << message->content << "\n";} else {ss << message->content << "\n\n";}} else if (role == "user") {ss << "USER: " << message->content << "\n";} else if (role == "assistant") {ss << "ASSISTANT: " << message->content << "</s>\n";}}if (add_ass) {ss << "ASSISTANT:";}} else if (tmpl == LLM_CHAT_TEMPLATE_DEEPSEEK) {// deepseek-ai/deepseek-coder-33b-instructfor (auto message : chat) {std::string role(message->role);if (role == "system") {ss << message->content;} else if (role == "user") {ss << "### Instruction:\n" << message->content << "\n";} else if (role == "assistant") {ss << "### Response:\n" << message->content << "\n<|EOT|>\n";}}if (add_ass) {ss << "### Response:\n";}} else if (tmpl == LLM_CHAT_TEMPLATE_COMMAND_R) {// CohereForAI/c4ai-command-r-plusfor (auto message : chat) {std::string role(message->role);if (role == "system") {ss << "<|START_OF_TURN_TOKEN|><|SYSTEM_TOKEN|>" << trim(message->content) << "<|END_OF_TURN_TOKEN|>";} else if (role == "user") {ss << "<|START_OF_TURN_TOKEN|><|USER_TOKEN|>" << trim(message->content) << "<|END_OF_TURN_TOKEN|>";} else if (role == "assistant") {ss << "<|START_OF_TURN_TOKEN|><|CHATBOT_TOKEN|>" << trim(message->content) << "<|END_OF_TURN_TOKEN|>";}}if (add_ass) {ss << "<|START_OF_TURN_TOKEN|><|CHATBOT_TOKEN|>";}} else if (tmpl == LLM_CHAT_TEMPLATE_LLAMA_3) {// Llama 3for (auto message : chat) {std::string role(message->role);ss << "<|start_header_id|>" << role << "<|end_header_id|>\n\n" << trim(message->content) << "<|eot_id|>";}if (add_ass) {ss << "<|start_header_id|>assistant<|end_header_id|>\n\n";}} else if (tmpl == LLM_CHAT_TEMPLATE_CHATGML_3) {// chatglm3-6bss << "[gMASK]" << "sop";for (auto message : chat) {std::string role(message->role);ss << "<|" << role << "|>" << "\n " << message->content;}if (add_ass) {ss << "<|assistant|>";}} else if (tmpl == LLM_CHAT_TEMPLATE_CHATGML_4) {ss << "[gMASK]" << "<sop>";for (auto message : chat) {std::string role(message->role);ss << "<|" << role << "|>" << "\n" << message->content;}if (add_ass) {ss << "<|assistant|>";}} else if (tmpl == LLM_CHAT_TEMPLATE_MINICPM) {// MiniCPM-3B-OpenHermes-2.5-v2-GGUFfor (auto message : chat) {std::string role(message->role);if (role == "user") {ss << LU8("<用户>");ss << trim(message->content);ss << "<AI>";} else {ss << trim(message->content);}}} else if (tmpl == LLM_CHAT_TEMPLATE_DEEPSEEK_2) {// DeepSeek-V2for (auto message : chat) {std::string role(message->role);if (role == "system") {ss << message->content << "\n\n";} else if (role == "user") {ss << "User: " << message->content << "\n\n";} else if (role == "assistant") {ss << "Assistant: " << message->content << LU8("<|end▁of▁sentence|>");}}if (add_ass) {ss << "Assistant:";}} else if (tmpl == LLM_CHAT_TEMPLATE_DEEPSEEK_3) {// DeepSeek-V3for (auto message : chat) {std::string role(message->role);if (role == "system") {ss << message->content << "\n\n";} else if (role == "user") {ss << LU8("<|User|>") << message->content;} else if (role == "assistant") {ss << LU8("<|Assistant|>") << message->content << LU8("<|end▁of▁sentence|>");}}if (add_ass) {ss << LU8("<|Assistant|>");}} else if (tmpl == LLM_CHAT_TEMPLATE_EXAONE_3) {// ref: https://huggingface.co/LGAI-EXAONE/EXAONE-3.0-7.8B-Instruct/discussions/8#66bae61b1893d14ee8ed85bb// EXAONE-3.0-7.8B-Instructfor (auto message : chat) {std::string role(message->role);if (role == "system") {ss << "[|system|]" << trim(message->content) << "[|endofturn|]\n";} else if (role == "user") {ss << "[|user|]" << trim(message->content) << "\n";} else if (role == "assistant") {ss << "[|assistant|]" << trim(message->content) << "[|endofturn|]\n";}}if (add_ass) {ss << "[|assistant|]";}} else if (tmpl == LLM_CHAT_TEMPLATE_RWKV_WORLD) {// this template requires the model to have "\n\n" as EOT tokenfor (auto message : chat) {std::string role(message->role);if (role == "user") {ss << "User: " << message->content << "\n\nAssistant:";} else {ss << message->content << "\n\n";}}} else if (tmpl == LLM_CHAT_TEMPLATE_GRANITE) {// IBM Granite templatefor (const auto & message : chat) {std::string role(message->role);ss << "<|start_of_role|>" << role << "<|end_of_role|>";if (role == "assistant_tool_call") {ss << "<|tool_call|>";}ss << message->content << "<|end_of_text|>\n";}if (add_ass) {ss << "<|start_of_role|>assistant<|end_of_role|>\n";}} else if (tmpl == LLM_CHAT_TEMPLATE_GIGACHAT) {// GigaChat templatebool has_system = !chat.empty() && std::string(chat[0]->role) == "system";// Handle system message if presentif (has_system) {ss << "<s>" << chat[0]->content << "<|message_sep|>";} else {ss << "<s>";}// Process remaining messagesfor (size_t i = has_system ? 1 : 0; i < chat.size(); i++) {std::string role(chat[i]->role);if (role == "user") {ss << "user<|role_sep|>" << chat[i]->content << "<|message_sep|>"<< "available functions<|role_sep|>[]<|message_sep|>";} else if (role == "assistant") {ss << "assistant<|role_sep|>" << chat[i]->content << "<|message_sep|>";}}// Add generation prompt if neededif (add_ass) {ss << "assistant<|role_sep|>";}} else if (tmpl == LLM_CHAT_TEMPLATE_MEGREZ) {// Megrez templatefor (auto message : chat) {std::string role(message->role);ss << "<|role_start|>" << role << "<|role_end|>" << message->content << "<|turn_end|>";}if (add_ass) {ss << "<|role_start|>assistant<|role_end|>";}} else {// template not supportedreturn -1;}dest = ss.str();return dest.size();

}

llm_chat_template llm_chat_detect_template(const std::string & tmpl) {try {return llm_chat_template_from_str(tmpl);} catch (const std::out_of_range &) {// ignore}auto tmpl_contains = [&tmpl](const char * haystack) -> bool {return tmpl.find(haystack) != std::string::npos;};if (tmpl_contains("<|im_start|>")) {return tmpl_contains("<|im_sep|>")? LLM_CHAT_TEMPLATE_PHI_4: LLM_CHAT_TEMPLATE_CHATML;} else if (tmpl.find("mistral") == 0 || tmpl_contains("[INST]")) {if (tmpl_contains("[SYSTEM_PROMPT]")) {return LLM_CHAT_TEMPLATE_MISTRAL_V7;} else if (// catches official 'v1' templatetmpl_contains("' [INST] ' + system_message")// catches official 'v3' and 'v3-tekken' templates|| tmpl_contains("[AVAILABLE_TOOLS]")) {// Official mistral 'v1', 'v3' and 'v3-tekken' templates// See: https://github.com/mistralai/cookbook/blob/main/concept-deep-dive/tokenization/chat_templates.md// See: https://github.com/mistralai/cookbook/blob/main/concept-deep-dive/tokenization/templates.mdif (tmpl_contains(" [INST]")) {return LLM_CHAT_TEMPLATE_MISTRAL_V1;} else if (tmpl_contains("\"[INST]\"")) {return LLM_CHAT_TEMPLATE_MISTRAL_V3_TEKKEN;}return LLM_CHAT_TEMPLATE_MISTRAL_V3;} else {// llama2 template and its variants// [variant] support system message// See: https://huggingface.co/blog/llama2#how-to-prompt-llama-2bool support_system_message = tmpl_contains("<<SYS>>");bool add_bos_inside_history = tmpl_contains("bos_token + '[INST]");bool strip_message = tmpl_contains("content.strip()");if (strip_message) {return LLM_CHAT_TEMPLATE_LLAMA_2_SYS_STRIP;} else if (add_bos_inside_history) {return LLM_CHAT_TEMPLATE_LLAMA_2_SYS_BOS;} else if (support_system_message) {return LLM_CHAT_TEMPLATE_LLAMA_2_SYS;} else {return LLM_CHAT_TEMPLATE_LLAMA_2;}}} else if (tmpl_contains("<|assistant|>") && tmpl_contains("<|end|>")) {return LLM_CHAT_TEMPLATE_PHI_3;} else if (tmpl_contains("<|assistant|>") && tmpl_contains("<|user|>")) {return LLM_CHAT_TEMPLATE_FALCON_3;} else if (tmpl_contains("<|user|>") && tmpl_contains("<|endoftext|>")) {return LLM_CHAT_TEMPLATE_ZEPHYR;} else if (tmpl_contains("bos_token + message['role']")) {return LLM_CHAT_TEMPLATE_MONARCH;} else if (tmpl_contains("<start_of_turn>")) {return LLM_CHAT_TEMPLATE_GEMMA;} else if (tmpl_contains("'\\n\\nAssistant: ' + eos_token")) {// OrionStarAI/Orion-14B-Chatreturn LLM_CHAT_TEMPLATE_ORION;} else if (tmpl_contains("GPT4 Correct ")) {// openchat/openchat-3.5-0106return LLM_CHAT_TEMPLATE_OPENCHAT;} else if (tmpl_contains("USER: ") && tmpl_contains("ASSISTANT: ")) {// eachadea/vicuna-13b-1.1 (and Orca variant)if (tmpl_contains("SYSTEM: ")) {return LLM_CHAT_TEMPLATE_VICUNA_ORCA;}return LLM_CHAT_TEMPLATE_VICUNA;} else if (tmpl_contains("### Instruction:") && tmpl_contains("<|EOT|>")) {// deepseek-ai/deepseek-coder-33b-instructreturn LLM_CHAT_TEMPLATE_DEEPSEEK;} else if (tmpl_contains("<|START_OF_TURN_TOKEN|>") && tmpl_contains("<|USER_TOKEN|>")) {// CohereForAI/c4ai-command-r-plusreturn LLM_CHAT_TEMPLATE_COMMAND_R;} else if (tmpl_contains("<|start_header_id|>") && tmpl_contains("<|end_header_id|>")) {return LLM_CHAT_TEMPLATE_LLAMA_3;} else if (tmpl_contains("[gMASK]sop")) {// chatglm3-6breturn LLM_CHAT_TEMPLATE_CHATGML_3;} else if (tmpl_contains("[gMASK]<sop>")) {return LLM_CHAT_TEMPLATE_CHATGML_4;} else if (tmpl_contains(LU8("<用户>"))) {// MiniCPM-3B-OpenHermes-2.5-v2-GGUFreturn LLM_CHAT_TEMPLATE_MINICPM;} else if (tmpl_contains("'Assistant: ' + message['content'] + eos_token")) {return LLM_CHAT_TEMPLATE_DEEPSEEK_2;} else if (tmpl_contains(LU8("<|Assistant|>")) && tmpl_contains(LU8("<|User|>")) && tmpl_contains(LU8("<|end▁of▁sentence|>"))) {return LLM_CHAT_TEMPLATE_DEEPSEEK_3;} else if (tmpl_contains("[|system|]") && tmpl_contains("[|assistant|]") && tmpl_contains("[|endofturn|]")) {// ref: https://huggingface.co/LGAI-EXAONE/EXAONE-3.0-7.8B-Instruct/discussions/8#66bae61b1893d14ee8ed85bb// EXAONE-3.0-7.8B-Instructreturn LLM_CHAT_TEMPLATE_EXAONE_3;} else if (tmpl_contains("rwkv-world")) {return LLM_CHAT_TEMPLATE_RWKV_WORLD;} else if (tmpl_contains("<|start_of_role|>")) {return LLM_CHAT_TEMPLATE_GRANITE;} else if (tmpl_contains("message['role'] + additional_special_tokens[0] + message['content'] + additional_special_tokens[1]")) {return LLM_CHAT_TEMPLATE_GIGACHAT;} else if (tmpl_contains("<|role_start|>")) {return LLM_CHAT_TEMPLATE_MEGREZ;}return LLM_CHAT_TEMPLATE_UNKNOWN;

}

References

[1] Yongqiang Cheng, https://yongqiang.blog.csdn.net/

[2] huggingface/gguf, https://github.com/huggingface/huggingface.js/tree/main/packages/gguf

相关文章:

llama.cpp LLM_CHAT_TEMPLATE_DEEPSEEK_3

llama.cpp LLM_CHAT_TEMPLATE_DEEPSEEK_3 1. LLAMA_VOCAB_PRE_TYPE_DEEPSEEK3_LLM2. static const std::map<std::string, llm_chat_template> LLM_CHAT_TEMPLATES3. LLM_CHAT_TEMPLATE_DEEPSEEK_3References 不宜吹捧中国大语言模型的同时,又去贬低美国大语言…...

深度学习的应用场景及常用技术

深度学习作为机器学习的一个重要分支,在众多领域都有广泛的应用,以下是一些主要的应用场景及常用技术。 1.应用场景 1. 计算机视觉 图像分类 描述:对图像中的内容进行分类,识别出图像中物体所属的类别。例如,在安防领…...

小程序项目-购物-首页与准备

前言 这一节讲一个购物项目 1. 项目介绍与项目文档 我们这里可以打开一个网址 https://applet-base-api-t.itheima.net/docs-uni-shop/index.htm 就可以查看对应的文档 2. 配置uni-app的开发环境 可以先打开这个的官网 https://uniapp.dcloud.net.cn/ 使用这个就可以发布到…...

网件r7000刷回原厂固件合集测评

《网件R7000路由器刷回原厂固件详解》 网件R7000是一款备受赞誉的高性能无线路由器,其强大的性能和可定制性吸引了许多高级用户。然而,有时候用户可能会尝试第三方固件以提升功能或优化网络性能,但这也可能导致一些问题,如系统不…...

微信登录模块封装

文章目录 1.资质申请2.combinations-wx-login-starter1.目录结构2.pom.xml 引入okhttp依赖3.WxLoginProperties.java 属性配置4.WxLoginUtil.java 后端通过 code 获取 access_token的工具类5.WxLoginAutoConfiguration.java 自动配置类6.spring.factories 激活自动配置类 3.com…...

[STM32 - 野火] - - - 固件库学习笔记 - - -十三.高级定时器

一、高级定时器简介 高级定时器的简介在前面一章已经介绍过,可以点击下面链接了解,在这里进行一些补充。 [STM32 - 野火] - - - 固件库学习笔记 - - -十二.基本定时器 1.1 功能简介 1、高级定时器可以向上/向下/两边计数,还独有一个重复计…...

后台管理系统通用页面抽离=>高阶组件+配置文件+hooks

目录结构 配置文件和通用页面组件 content.config.ts const contentConfig {pageName: "role",header: {title: "角色列表",btnText: "新建角色"},propsList: [{ type: "selection", label: "选择", width: "80px&q…...

)

8.原型模式(Prototype)

动机 在软件系统中,经常面临着某些结构复杂的对象的创建工作;由于需求的变化,这些对象经常面临着剧烈的变化,但是它们却拥有比较稳定一致的接口。 之前的工厂方法和抽象工厂将抽象基类和具体的实现分开。原型模式也差不多&#…...

)

Python-基于PyQt5,pdf2docx,pathlib的PDF转Word工具(专业版)

前言:日常生活中,我们常常会跟WPS Office打交道。作表格,写报告,写PPT......可以说,我们的生活已经离不开WPS Office了。与此同时,我们在这个过程中也会遇到各种各样的技术阻碍,例如部分软件的PDF转Word需要收取额外费用等。那么,可不可以自己开发一个小工具来实现PDF转…...

)

13 尺寸结构模块(size.rs)

一、size.rs源码 // Copyright 2013 The Servo Project Developers. See the COPYRIGHT // file at the top-level directory of this distribution. // // Licensed under the Apache License, Version 2.0 <LICENSE-APACHE or // http://www.apache.org/licenses/LICENSE…...

STM32单片机学习记录(2.2)

一、STM32 13.1 - PWR简介 1. PWR(Power Control)电源控制 (1)PWR负责管理STM32内部的电源供电部分,可以实现可编程电压监测器和低功耗模式的功能; (2)可编程电压监测器(…...

CSS 样式化表格:从基础到高级技巧

CSS 样式化表格:从基础到高级技巧 1. 典型的 HTML 表格结构2. 为表格添加样式2.1 间距和布局2.2 简单的排版2.3 图形和颜色2.4 斑马条纹2.5 样式化标题 3. 完整的示例代码4. 总结 在网页设计中,表格是展示数据的常见方式。然而,默认的表格样式…...

【python】tkinter实现音乐播放器(源码+音频文件)【独一无二】

👉博__主👈:米码收割机 👉技__能👈:C/Python语言 👉专__注👈:专注主流机器人、人工智能等相关领域的开发、测试技术。 【python】tkinter实现音乐播放器(源码…...

javascript常用函数大全

javascript函数一共可分为五类: •常规函数 •数组函数 •日期函数 •数学函数 •字符串函数 1.常规函数 javascript常规函数包括以下9个函数: (1)alert函数:显示一个警告对话框,包括一个OK按钮。 (2)confirm函数:显…...

)

C#属性和字段(访问修饰符)

不同点逻辑性/灵活性存储性访问性使用范围安全性属性(Property)源于字段,对字段的扩展,逻辑字段并不占用实际的内存可以被其他类访问对接收的数据范围做限定,外部使用增加了数据的安全性字段(Field)不经过逻辑处理占用内存的空间及位置大部分字段不能直接被访问内存使用不安全 …...

DeepSeek为什么超越了OpenAI?从“存在主义之问”看AI的觉醒

悉尼大学学者Teodor Mitew向DeepSeek提出的问题,在推特上掀起了一场关于AI与人类意识的大讨论。当被问及"你最想问人类什么问题"时,DeepSeek的回答直指人类存在的本质:"如果意识是进化的偶然,宇宙没有内在的意义&a…...

langchain基础(二)

一、输出解析器(Output Parser) 作用:(1)让模型按照指定的格式输出; (2)解析模型输出,提取所需的信息 1、逗号分隔列表 CommaSeparatedListOutputParser:…...

数据库安全管理中的权限控制:保护数据资产的关键措施

title: 数据库安全管理中的权限控制:保护数据资产的关键措施 date: 2025/2/2 updated: 2025/2/2 author: cmdragon excerpt: 在信息化迅速发展的今天,数据库作为关键的数据存储和管理中心,已经成为了企业营运和决策的核心所在。然而,伴随着数据规模的不断扩大和数据价值…...

Leetcode598:区间加法 II

题目描述: 给你一个 m x n 的矩阵 M 和一个操作数组 op 。矩阵初始化时所有的单元格都为 0 。ops[i] [ai, bi] 意味着当所有的 0 < x < ai 和 0 < y < bi 时, M[x][y] 应该加 1。 在 执行完所有操作后 ,计算并返回 矩阵中最大…...

【Proteus】NE555纯硬件实现LED呼吸灯效果,附源文件,效果展示

本文通过NE555定时器芯片和简单的电容充放电电路,设计了一种纯硬件实现的呼吸灯方案,并借助Proteus仿真软件验证其功能。方案无需编程,成本低且易于实现,适合电子爱好者学习PWM(脉宽调制)和定时器电路原理。 一、呼吸灯原理与NE555功能分析 1. 呼吸灯核心原理 呼吸灯的…...

观成科技:隐蔽隧道工具Ligolo-ng加密流量分析

1.工具介绍 Ligolo-ng是一款由go编写的高效隧道工具,该工具基于TUN接口实现其功能,利用反向TCP/TLS连接建立一条隐蔽的通信信道,支持使用Let’s Encrypt自动生成证书。Ligolo-ng的通信隐蔽性体现在其支持多种连接方式,适应复杂网…...

【Java学习笔记】Arrays类

Arrays 类 1. 导入包:import java.util.Arrays 2. 常用方法一览表 方法描述Arrays.toString()返回数组的字符串形式Arrays.sort()排序(自然排序和定制排序)Arrays.binarySearch()通过二分搜索法进行查找(前提:数组是…...

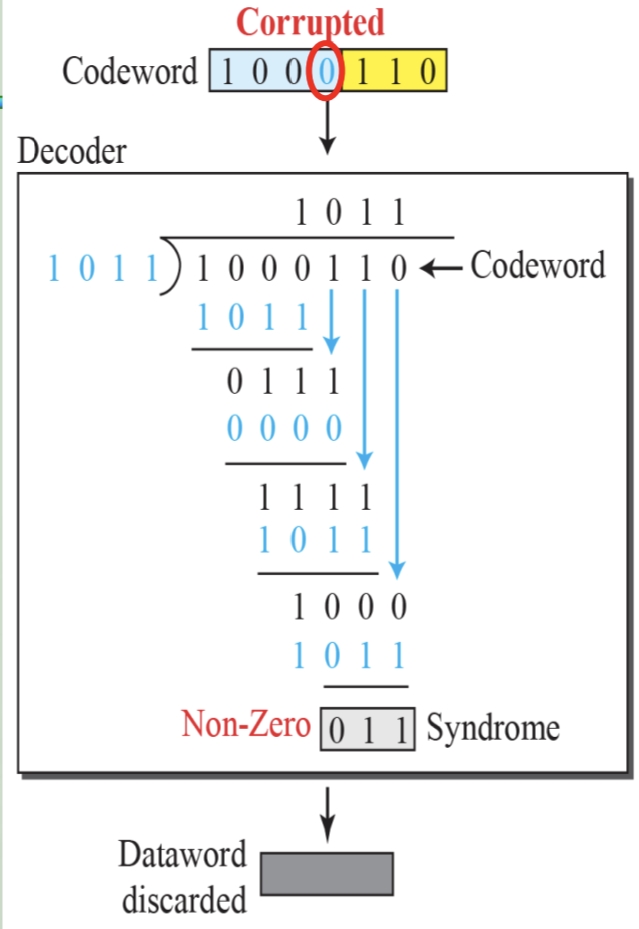

循环冗余码校验CRC码 算法步骤+详细实例计算

通信过程:(白话解释) 我们将原始待发送的消息称为 M M M,依据发送接收消息双方约定的生成多项式 G ( x ) G(x) G(x)(意思就是 G ( x ) G(x) G(x) 是已知的)࿰…...

Qt Widget类解析与代码注释

#include "widget.h" #include "ui_widget.h"Widget::Widget(QWidget *parent): QWidget(parent), ui(new Ui::Widget) {ui->setupUi(this); }Widget::~Widget() {delete ui; }//解释这串代码,写上注释 当然可以!这段代码是 Qt …...

蓝桥杯 2024 15届国赛 A组 儿童节快乐

P10576 [蓝桥杯 2024 国 A] 儿童节快乐 题目描述 五彩斑斓的气球在蓝天下悠然飘荡,轻快的音乐在耳边持续回荡,小朋友们手牵着手一同畅快欢笑。在这样一片安乐祥和的氛围下,六一来了。 今天是六一儿童节,小蓝老师为了让大家在节…...

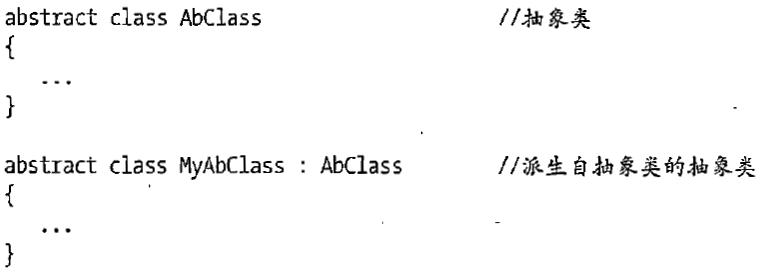

C# 类和继承(抽象类)

抽象类 抽象类是指设计为被继承的类。抽象类只能被用作其他类的基类。 不能创建抽象类的实例。抽象类使用abstract修饰符声明。 抽象类可以包含抽象成员或普通的非抽象成员。抽象类的成员可以是抽象成员和普通带 实现的成员的任意组合。抽象类自己可以派生自另一个抽象类。例…...

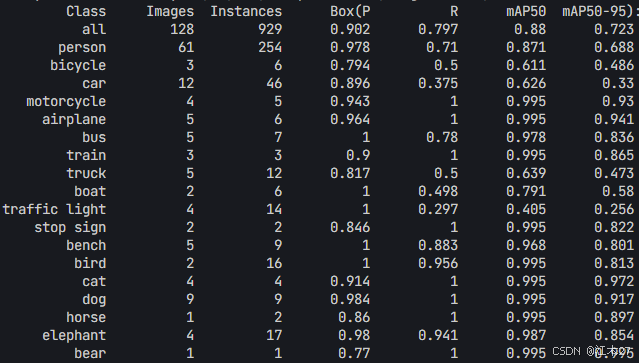

Yolov8 目标检测蒸馏学习记录

yolov8系列模型蒸馏基本流程,代码下载:这里本人提交了一个demo:djdll/Yolov8_Distillation: Yolov8轻量化_蒸馏代码实现 在轻量化模型设计中,**知识蒸馏(Knowledge Distillation)**被广泛应用,作为提升模型…...

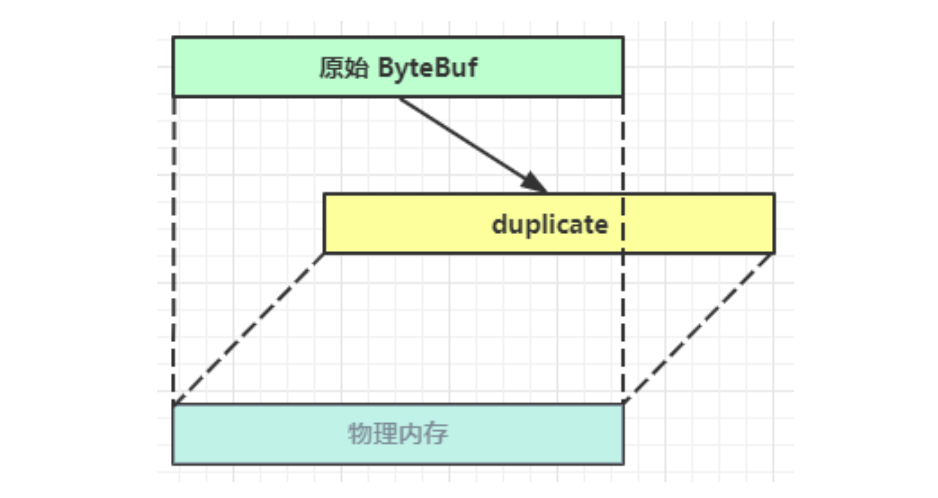

Netty从入门到进阶(二)

二、Netty入门 1. 概述 1.1 Netty是什么 Netty is an asynchronous event-driven network application framework for rapid development of maintainable high performance protocol servers & clients. Netty是一个异步的、基于事件驱动的网络应用框架,用于…...

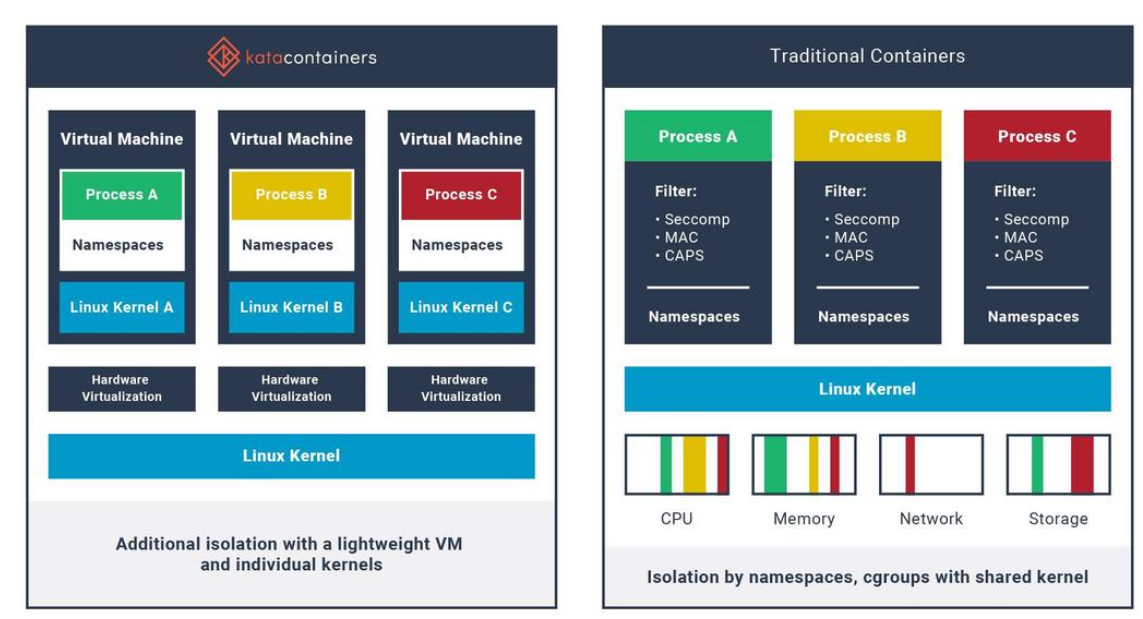

沙箱虚拟化技术虚拟机容器之间的关系详解

问题 沙箱、虚拟化、容器三者分开一一介绍的话我知道他们各自都是什么东西,但是如果把三者放在一起,它们之间到底什么关系?又有什么联系呢?我不是很明白!!! 就比如说: 沙箱&#…...

Pydantic + Function Calling的结合

1、Pydantic Pydantic 是一个 Python 库,用于数据验证和设置管理,通过 Python 类型注解强制执行数据类型。它广泛用于 API 开发(如 FastAPI)、配置管理和数据解析,核心功能包括: 数据验证:通过…...