Building a k8s 1.32 Cluster on Ubuntu 24.04

k8s 1.32

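- Environment initialization

- Install containerd

- Install runc

- Install the CNI plugins

- Deploy the k8s cluster

- Install crictl

- Deploy the cluster with kubeadm

- Join nodes to the cluster

- Deploy the Calico network

- Configure the Dashboard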

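This lab builds a k8s 1.32 cluster on Ubuntu 24.04 virtual machines created in VMware. The architecture is one master and one worker, the container runtime is containerd, and the network plugin is Calico.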

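Note: all packages uploaded in this lab are placed in /root by default. The images and packages used here can be downloaded from the Baidu Netdisk share below.

Files shared via Baidu Netdisk: k8s-1.32.1

Link: https://pan.baidu.com/s/1qlPUNOMu1OWZlNRN7M7RSw?pwd=6kf8 Extraction code: 6kf8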


| Node | IP |
|---|---|
| master | 192.168.200.160 |
| worker01 | 192.168.200.161 |

Official website

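The version deployed here is 1.32, the latest release at the time of writing. It introduces several important features and enhancements that improve cluster performance, manageability, and reliability. The main highlights:

- Dynamic Resource Allocation (DRA) enhancements: DRA is a cluster-level API for requesting and sharing resources between Pods and containers. Version 1.32 brings several DRA enhancements that make resource allocation more efficient and flexible, especially for AI/ML workloads that rely on specialized hardware such as GPUs.

- Graceful shutdown for Windows nodes: previously, Kubernetes supported graceful node shutdown only on Linux. In 1.32 the kubelet extends this support to Windows nodes. During shutdown, Pods go through the proper lifecycle events and terminate gracefully, so workloads can move off the node without disruption.

- New status endpoints for core components: kube-scheduler, kube-controller-manager and other core components gain two HTTP endpoints, /statusz and /flagz. These make it easier to collect detailed health and configuration information and to troubleshoot problems.

- Asynchronous preemption in the scheduler: a high-priority Pod can obtain the resources it needs by evicting lower-priority Pods in parallel. This minimizes scheduling latency for the other Pods in the cluster.

- Automatic deletion of PersistentVolumeClaims (PVCs) created by StatefulSets: this feature is stable in 1.32. It simplifies storage management for stateful workloads and reduces the risk of orphaned resources.

- Improved OpenTelemetry trace export in the kubelet: the way the kubelet generates and exports OpenTelemetry traces has been overhauled. This makes kubelet-related issues easier to monitor, detect, and resolve.

- Other enhancements and deprecations:

  - Anonymous authentication for configured endpoints: anonymous requests can be limited to explicitly configured endpoints, which improves cluster security and flexibility.

  - Recovery from volume expansion failure: after a failed expansion, the expansion can be retried with a smaller size, reducing the risk of data loss or corruption.

  - API removal: the flowcontrol.apiserver.k8s.io/v1beta3 API for FlowSchema and PriorityLevelConfiguration has been removed. Users should migrate to the flowcontrol.apiserver.k8s.io/v1 API, which has been available since 1.29.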

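Environment initialization

- Initialize the cluster nodes: hostnames, /etc/hosts mappings, passwordless SSH, time synchronization, swap, and kernel networking settings.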

vim init.sh

#!/bin/bash
# Node information: "IP hostname user"
NODES=("192.168.200.160 master root" "192.168.200.161 worker01 root")
# Password of the nodes (all nodes share the same password by default)
HOST_PASS="000000"
# Node that acts as the time synchronization source
TIME_SERVER=master
# Subnet allowed to synchronize time from the source
TIME_SERVER_IP=192.168.200.0/24

# Login banner
cat > /etc/motd <<EOF
#################################
#        Welcome to k8s         #
#################################
EOF

# Set the hostname of the current node
for node in "${NODES[@]}"; do
    ip=$(echo "$node" | awk '{print $1}')
    hostname=$(echo "$node" | awk '{print $2}')
    # Current node's IP and hostname
    current_ip=$(hostname -I | awk '{print $1}')
    current_hostname=$(hostname)
    # Rename only the node whose IP matches and whose hostname differs
    if [[ "$current_ip" == "$ip" && "$current_hostname" != "$hostname" ]]; then
        echo "Updating hostname to $hostname on $current_ip..."
        hostnamectl set-hostname "$hostname"
        if [ $? -eq 0 ]; then
            echo "Hostname updated successfully."
        else
            echo "Failed to update hostname."
        fi
        break
    fi
done

# Add every node to /etc/hosts
for node in "${NODES[@]}"; do
    ip=$(echo "$node" | awk '{print $1}')
    hostname=$(echo "$node" | awk '{print $2}')
    # Skip entries that already exist
    if grep -q "$ip $hostname" /etc/hosts; then
        echo "Host entry for $hostname already exists in /etc/hosts."
    else
        sudo sh -c "echo '$ip $hostname' >> /etc/hosts"
        echo "Added host entry for $hostname in /etc/hosts."
    fi
done

# Generate an SSH key pair if none exists
if [[ ! -s ~/.ssh/id_rsa.pub ]]; then
    ssh-keygen -t rsa -N '' -f ~/.ssh/id_rsa -q -b 2048
fi

# Install sshpass if it is missing
if ! which sshpass &> /dev/null; then
    echo "sshpass is not installed, installing it..."
    sudo apt-get install -y sshpass
fi

# Set up passwordless SSH to every node
for node in "${NODES[@]}"; do
    ip=$(echo "$node" | awk '{print $1}')
    hostname=$(echo "$node" | awk '{print $2}')
    user=$(echo "$node" | awk '{print $3}')
    # sshpass supplies the password; StrictHostKeyChecking=no accepts the host key automatically
    sshpass -p "$HOST_PASS" ssh-copy-id -o StrictHostKeyChecking=no -i /root/.ssh/id_rsa.pub "$user@$hostname"
done

# Time synchronization
apt install -y chrony
# Comment out the default pool sources on every node
sed -i 's/^pool /#pool /' /etc/chrony/chrony.conf
if [[ "$(hostname)" == "$TIME_SERVER" ]]; then
    # This node is the time source: serve its local clock to the subnet
    echo "allow $TIME_SERVER_IP" >> /etc/chrony/chrony.conf
    echo "local stratum 10" >> /etc/chrony/chrony.conf
else
    # Other nodes synchronize from the time source
    echo "pool $TIME_SERVER iburst maxsources 2" >> /etc/chrony/chrony.conf
fi
# Restart and enable chrony
systemctl daemon-reload
systemctl restart chrony
systemctl enable chrony

# Disable swap now and across reboots
swapoff -a
sed -i 's/.*swap.*/#&/' /etc/fstab

# Configure the host for Kubernetes networking and container bridge networking
cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf
overlay
br_netfilter
EOF
sudo modprobe overlay
sudo modprobe br_netfilter
cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.ipv4.ip_forward = 1
EOF
sudo sysctl --system

echo "###############################################################"
echo "############ k8s cluster initialization succeeded #############"
echo "###############################################################"

- Run on both nodes:

bash init.sh
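After init.sh has run on both nodes, a quick check can confirm the kernel settings it wrote. The bash sketch below is illustrative. check_k8s_sysctl is a hypothetical helper, not part of any tool. It reads key = value lines from a sysctl file and reports each of the three required keys that is unset or not 1. Swap is checked separately, because swapon --show prints nothing when no swap is active.

```bash
# Hypothetical helper: check that a sysctl config file sets the three keys
# Kubernetes networking needs to 1. Prints each problem; returns 1 if any.
check_k8s_sysctl() {
    local file="$1" key value rc=0
    for key in net.bridge.bridge-nf-call-iptables \
               net.bridge.bridge-nf-call-ip6tables \
               net.ipv4.ip_forward; do
        # Strip whitespace, split on "=", keep the value from the last matching line
        value=$(awk -F'=' -v k="$key" '{ gsub(/[[:space:]]/, "") } $1 == k { v = $2 } END { print v }' "$file")
        if [[ "$value" != "1" ]]; then
            echo "$key is '${value:-unset}', expected 1"
            rc=1
        fi
    done
    return "$rc"
}

# Example on a node:
#   check_k8s_sysctl /etc/sysctl.d/k8s.conf && [ -z "$(swapon --show)" ] && echo "prerequisites OK"
```

The file only records what will be applied at boot. sysctl -n net.ipv4.ip_forward shows the live value.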

Install containerd

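containerd is an industry-standard container runtime with an emphasis on simplicity, robustness, and portability. It runs as a daemon on Linux and Windows and manages the complete container lifecycle of its host system: image transfer and storage, container execution and supervision, low-level storage, network attachments, and more. The latest release is fine.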

Download the package from GitHub
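Before uploading, it may be worth verifying the download. GitHub release pages for these projects typically publish a checksum next to each tarball; for containerd this is a .sha256sum file. Assuming you have that expected value, the sketch below compares it with the file's actual SHA-256 using coreutils sha256sum. verify_sha256 is a hypothetical helper name.

```bash
# Hypothetical helper: compare a file's SHA-256 digest with an expected value.
# Returns 0 on a match; on a mismatch prints both digests to stderr and returns 1.
verify_sha256() {
    local file="$1" expected="${2,,}" actual   # ${2,,} lowercases the expected hash
    actual=$(sha256sum "$file" | awk '{print $1}')
    if [[ "$actual" == "$expected" ]]; then
        echo "OK: $file"
        return 0
    fi
    echo "MISMATCH: $file" >&2
    echo "  expected: $expected" >&2
    echo "  actual:   $actual" >&2
    return 1
}

# Example (checksum file name assumed):
#   verify_sha256 containerd-2.0.2-linux-amd64.tar.gz \
#       "$(awk '{print $1}' containerd-2.0.2-linux-amd64.tar.gz.sha256sum)"
```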

- Upload the tarball, then configure:

vim ctr.sh

#!/bin/bash
tar -zxf containerd-2.0.2-linux-amd64.tar.gz -C /usr/local/
# Create the systemd unit file
cat > /etc/systemd/system/containerd.service <<eof
# Copyright The containerd Authors.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

[Unit]
Description=containerd container runtime
Documentation=https://containerd.io
After=network.target local-fs.target

[Service]
#uncomment to enable the experimental sbservice (sandboxed) version of containerd/cri integration
#Environment="ENABLE_CRI_SANDBOXES=sandboxed"
ExecStartPre=-/sbin/modprobe overlay
ExecStart=/usr/local/bin/containerd

Type=notify
Delegate=yes
KillMode=process
Restart=always
RestartSec=5
# Having non-zero Limit*s causes performance problems due to accounting overhead
# in the kernel. We recommend using cgroups to do container-local accounting.
LimitNPROC=infinity
LimitCORE=infinity
LimitNOFILE=infinity
# Comment TasksMax if your systemd version does not supports it.
# Only systemd 226 and above support this version.
TasksMax=infinity
OOMScoreAdjust=-999

[Install]
WantedBy=multi-user.target
eof
# Reload systemd and start containerd now and at boot
systemctl daemon-reload
systemctl enable --now containerd
# Check the version and generate the default configuration file
ctr version
mkdir -p /etc/containerd
containerd config default > /etc/containerd/config.toml
systemctl restart containerd

- Run on both nodes:

bash ctr.sh

- Verify:

root@master:~# ctr version

Client:
  Version:  v2.0.2
  Revision: c507a0257ea6462fbd6f5ba4f5c74facb04021f4
  Go version: go1.23.4

Server:
  Version:  v2.0.2
  Revision: c507a0257ea6462fbd6f5ba4f5c74facb04021f4
  UUID: cbb1f812-d4da-4db7-982e-6d14100b1872

root@master:~# systemctl status containerd

● containerd.service - containerd container runtime
     Loaded: loaded (/etc/systemd/system/containerd.service; enabled; preset: enabled)
     Active: active (running) since Sat 2025-02-08 06:13:07 UTC; 56s ago
       Docs: https://containerd.io
   Main PID: 12066 (containerd)
      Tasks: 8
     Memory: 14.5M (peak: 15.0M)
        CPU: 121ms
     CGroup: /system.slice/containerd.service
             └─12066 /usr/local/bin/containerd

Feb 08 06:13:07 master containerd[12066]: time="2025-02-08T06:13:07.665875531Z" level=info msg="Start recovering state"

Feb 08 06:13:07 master containerd[12066]: time="2025-02-08T06:13:07.665955208Z" level=info msg="Start event monitor"

Feb 08 06:13:07 master containerd[12066]: time="2025-02-08T06:13:07.665966555Z" level=info msg="Start cni network conf >

Feb 08 06:13:07 master containerd[12066]: time="2025-02-08T06:13:07.665972388Z" level=info msg="Start streaming server"

Feb 08 06:13:07 master containerd[12066]: time="2025-02-08T06:13:07.665985026Z" level=info msg="Registered namespace \">

Feb 08 06:13:07 master containerd[12066]: time="2025-02-08T06:13:07.665992443Z" level=info msg="runtime interface start>

Feb 08 06:13:07 master containerd[12066]: time="2025-02-08T06:13:07.665997209Z" level=info msg="starting plugins..."

Feb 08 06:13:07 master containerd[12066]: time="2025-02-08T06:13:07.666004445Z" level=info msg="Synchronizing NRI (plug>

Feb 08 06:13:07 master containerd[12066]: time="2025-02-08T06:13:07.666080260Z" level=info msg="containerd successfully>

Feb 08 06:13:07 master systemd[1]: Started containerd.service - containerd container runtime.

Install runc

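runc is a CLI tool for spawning and running containers on Linux according to the OCI specification. The latest release is fine.

Download the package from GitHub

- Upload it to both nodes and install: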

install -m 755 runc.amd64 /usr/local/sbin/runc

- Verify:

root@master:~# runc -v

runc version 1.2.4

commit: v1.2.4-0-g6c52b3fc

spec: 1.2.0

go: go1.22.10

libseccomp: 2.5.5
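When these installs are scripted, it can help to check that a binary meets a minimum version before moving on. Below is a minimal bash sketch, assuming GNU sort -V (version sort) from coreutils. version_ge and parse_runc_version are hypothetical names. The 1.2.0 threshold in the example is illustrative, not a requirement stated by runc or Kubernetes.

```bash
# Hypothetical helper: succeed if version $1 >= version $2 (a leading "v" is ignored).
version_ge() {
    local a="${1#v}" b="${2#v}"
    # sort -V orders versions naturally; if $2 sorts first (or ties), then $1 >= $2
    [[ "$(printf '%s\n%s\n' "$a" "$b" | sort -V | head -n1)" == "$b" ]]
}

# Hypothetical filter: extract "X.Y.Z" from `runc -v` output read on stdin.
parse_runc_version() {
    awk '/^runc version/ { print $3; exit }'
}

# Example: version_ge "$(runc -v | parse_runc_version)" 1.2.0 && echo "runc is new enough"
```

Version sort handles multi-digit components correctly: 1.10.0 sorts after 1.2.4, which a plain string comparison would get wrong.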

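Install the CNI plugins

The Container Network Interface (CNI) provides network resources to containers. Through the CNI interface, Kubernetes can support different network environments. The latest release is fine.

Download the package from GitHub

- Upload it to the nodes, then configure: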

vim cni.sh

#!/bin/bash
mkdir -p /opt/cni/bin
tar -zxf cni-plugins-linux-amd64-v1.6.2.tgz -C /opt/cni/bin
# Point containerd at the registry host configuration directory (certs.d)
sed -i 's/config_path\ =.*/config_path = \"\/etc\/containerd\/certs.d\"/g' /etc/containerd/config.toml
mkdir -p /etc/containerd/certs.d/docker.io
# Configure registry mirrors and sources for docker.io
cat > /etc/containerd/certs.d/docker.io/hosts.toml << EOF
server = "https://docker.io"

# Alibaba Cloud registry mirror (pull only)
[host."https://o90diikg.mirror.aliyuncs.com"]
  capabilities = ["pull", "resolve"]

# Official Docker Hub (pull + push)
[host."https://registry-1.docker.io"]
  capabilities = ["pull", "push", "resolve"]

# Tencent Cloud registry (pull + push)
[host."https://registry-mirrors.yunyuan.co"]
  capabilities = ["pull", "push", "resolve"]
  skip_verify = true  # skip certificate verification if the registry uses a self-signed certificate
EOF

- Set the cgroup driver with SystemdCgroup = true so that containerd uses systemd to manage cgroups, matching the cgroup driver Kubernetes uses by default.

Official configuration reference

vim /etc/containerd/config.toml

[plugins.'io.containerd.cri.v1.runtime'.containerd.runtimes]
  [plugins.'io.containerd.cri.v1.runtime'.containerd.runtimes.runc]
    runtime_type = 'io.containerd.runc.v2'
    runtime_path = ''
    pod_annotations = []
    container_annotations = []
    privileged_without_host_devices = false
    privileged_without_host_devices_all_devices_allowed = false
    base_runtime_spec = ''
    cni_conf_dir = ''
    cni_max_conf_num = 0
    snapshotter = ''
    sandboxer = 'podsandbox'
    io_type = ''

    [plugins.'io.containerd.cri.v1.runtime'.containerd.runtimes.runc.options]
      SystemdCgroup = true  # added: belongs in the runc options table

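- Note: the pause image address added here must match the name and tag of the image pulled from Alibaba Cloud later. Otherwise, cluster initialization fails with an image pull error.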

[plugins.'io.containerd.grpc.v1.cri']
  disable_tcp_service = true
  stream_server_address = '127.0.0.1'
  stream_server_port = '0'
  stream_idle_timeout = '4h0m0s'
  enable_tls_streaming = false
  sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.10"  # added

[plugins.'io.containerd.cri.v1.images'.pinned_images]
  sandbox = 'registry.aliyuncs.com/google_containers/pause:3.10'  # changed

systemctl daemon-reload ; systemctl restart containerd

- Run on both nodes:

bash cni.sh
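The manual config.toml edits can also be applied with sed, which helps when repeating them on several nodes. This is a sketch under stated assumptions, not the method used above. It assumes the file already contains a SystemdCgroup = ... line and a sandbox = '...' line (under pinned_images) and rewrites both in place. If the SystemdCgroup key is absent from your generated config, you still have to add it by hand. patch_containerd_config is a hypothetical name.

```bash
# Hypothetical helper: apply the two containerd config.toml changes with sed.
#   $1 = path to config.toml, $2 = pause image to pin as the sandbox image
patch_containerd_config() {
    local cfg="$1" pause_image="$2"
    # Flip any existing SystemdCgroup setting to true, keeping its indentation
    sed -i -E 's/^([[:space:]]*)SystemdCgroup = .*/\1SystemdCgroup = true/' "$cfg"
    # Rewrite the pinned sandbox image; "sandbox =" does not match "sandboxer =" or "sandbox_image ="
    sed -i -E "s|^([[:space:]]*)sandbox = .*|\1sandbox = '${pause_image}'|" "$cfg"
    # Succeed only if the cgroup setting is now present
    grep -Eq '^[[:space:]]*SystemdCgroup = true' "$cfg"
}

# Example on each node:
#   patch_containerd_config /etc/containerd/config.toml \
#       registry.aliyuncs.com/google_containers/pause:3.10 && systemctl restart containerd
```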

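Deploy the k8s cluster

Install crictl

crictl is a CLI and validation tool for the kubelet Container Runtime Interface (CRI). The latest release is fine.

Download the package from GitHub

- Upload it to the nodes, then configure: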

vim cri.sh

#!/bin/bash
tar -zxf crictl-v1.32.0-linux-amd64.tar.gz -C /usr/local/bin/
# Overwrite (not append) so rerunning the script does not duplicate keys
cat > /etc/crictl.yaml << EOF
runtime-endpoint: unix:///var/run/containerd/containerd.sock
image-endpoint: unix:///var/run/containerd/containerd.sock
timeout: 10
debug: true
EOF
systemctl daemon-reload; systemctl restart containerd

- Run on both nodes:

bash cri.sh

- Verify:

root@master:~# crictl -v

crictl version v1.32.0

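Deploy the cluster with kubeadm

Official k8s site: it is blocked by the firewall in mainland China, so packages cannot be downloaded from it.

Use the Alibaba Cloud mirror to get the required packages and images instead (official guide).

- Run on both nodes: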

apt update && apt-get install -y apt-transport-https

curl -fsSL https://mirrors.aliyun.com/kubernetes-new/core/stable/v1.32/deb/Release.key |gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg

echo "deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://mirrors.aliyun.com/kubernetes-new/core/stable/v1.32/deb/ /" |tee /etc/apt/sources.list.d/kubernetes.list

apt update

- Install kubeadm, kubelet and kubectl (version 1.32.1):

apt install -y kubelet=1.32.1-1.1 kubeadm=1.32.1-1.1 kubectl=1.32.1-1.1
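kubelet, kubeadm and kubectl should stay on matching versions. For that reason the official kubeadm installation guide also holds them with apt-mark hold, so a routine apt upgrade does not move them. The sketch below builds the pinned package list for a given version. The -1.1 Debian revision is copied from the command above and is only an assumption for other releases; apt-cache madison kubeadm lists what the mirror actually offers. Function names are hypothetical.

```bash
# Hypothetical helper: print "pkg=version-revision" for the three Kubernetes packages.
#   $1 = Kubernetes version, e.g. 1.32.1; $2 = Debian revision (assumed default: 1.1)
k8s_pkg_args() {
    local version="${1#v}" revision="${2:-1.1}" pkg
    for pkg in kubelet kubeadm kubectl; do
        printf '%s=%s-%s\n' "$pkg" "$version" "$revision"
    done
}

# Hypothetical helper: the vMAJOR.MINOR channel used in the repository URL (e.g. v1.32).
k8s_repo_channel() {
    local version="${1#v}"
    echo "v${version%.*}"
}

# Example on each node:
#   apt install -y $(k8s_pkg_args 1.32.1)
#   apt-mark hold kubelet kubeadm kubectl
```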

- Verify:

root@master:~# kubeadm version

kubeadm version: &version.Info{Major:"1", Minor:"32", GitVersion:"v1.32.1", GitCommit:"e9c9be4007d1664e68796af02b8978640d2c1b26", GitTreeState:"clean", BuildDate:"2025-01-15T14:39:14Z", GoVersion:"go1.23.4", Compiler:"gc", Platform:"linux/amd64"}

root@master:~# kubectl version --client

Client Version: v1.32.1

Kustomize Version: v5.5.0

root@master:~#

- On the master node, generate the default configuration file and edit it:

root@master:~# kubeadm config print init-defaults > kubeadm.yaml

root@master:~# vim kubeadm.yaml

apiVersion: kubeadm.k8s.io/v1beta4
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.200.160   # change to the master's IP
  bindPort: 6443
nodeRegistration:
  criSocket: unix:///var/run/containerd/containerd.sock   # Unix domain socket; differs per container runtime
  imagePullPolicy: IfNotPresent
  imagePullSerial: true
  name: master   # change to the control-plane node's hostname
  taints: null
timeouts:
  controlPlaneComponentHealthCheck: 4m0s
  discovery: 5m0s
  etcdAPICall: 2m0s
  kubeletHealthCheck: 4m0s
  kubernetesAPICall: 1m0s
  tlsBootstrap: 5m0s
  upgradeManifests: 5m0s
---
apiServer: {}
apiVersion: kubeadm.k8s.io/v1beta4
caCertificateValidityPeriod: 87600h0m0s
certificateValidityPeriod: 8760h0m0s
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns: {}
encryptionAlgorithm: RSA-2048
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.aliyuncs.com/google_containers   # change to the Alibaba Cloud registry
kind: ClusterConfiguration
kubernetesVersion: 1.32.1   # set to the version actually installed
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
  podSubnet: 10.244.0.0/16   # pod CIDR: add this line; if omitted, the network plugin's CIDR is used
proxy: {}
scheduler: {}
# Added: set the kubelet cgroup driver to systemd
---
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
cgroupDriver: systemd
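podSubnet must not overlap serviceSubnet or the node network (192.168.200.0/24 in this lab). Calico's default pool, 192.168.0.0/16, contains this lab's node subnet, which is one reason to set podSubnet explicitly. Below is a pure-bash IPv4 sketch of the overlap test; the helper names are hypothetical. Two CIDR blocks overlap exactly when their network addresses agree on the shorter of the two prefixes.

```bash
# Hypothetical helper: convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
    local a b c d
    IFS=. read -r a b c d <<< "$1"
    echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Hypothetical helper: succeed (return 0) if CIDR $1 and CIDR $2 share any address.
cidr_overlap() {
    local net1="${1%/*}" len1="${1#*/}" net2="${2%/*}" len2="${2#*/}"
    # Compare both network addresses under the shorter (less specific) prefix
    local len=$(( len1 < len2 ? len1 : len2 ))
    local mask=$(( len == 0 ? 0 : (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
    (( ($(ip_to_int "$net1") & mask) == ($(ip_to_int "$net2") & mask) ))
}

# Example:
#   cidr_overlap 10.244.0.0/16 192.168.200.0/24 && echo "podSubnet overlaps the node network"
#   cidr_overlap 10.244.0.0/16 10.96.0.0/12 && echo "podSubnet overlaps serviceSubnet"
```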

- Pull the images:

kubeadm config images pull --image-repository=registry.aliyuncs.com/google_containers --kubernetes-version=v1.32.1

root@master:~# kubeadm config images pull --image-repository=registry.aliyuncs.com/google_containers --kubernetes-version=v1.32.1

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-apiserver:v1.32.1

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-controller-manager:v1.32.1

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-scheduler:v1.32.1

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-proxy:v1.32.1

[config/images] Pulled registry.aliyuncs.com/google_containers/coredns:v1.11.3

[config/images] Pulled registry.aliyuncs.com/google_containers/pause:3.10

[config/images] Pulled registry.aliyuncs.com/google_containers/etcd:3.5.16-0
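To confirm that every image kubeadm needs is present in containerd's k8s.io namespace, including the pause image referenced in config.toml, the two lists can be diffed. This sketch assumes kubeadm config images list --config kubeadm.yaml and ctr -n k8s.io images ls -q as the two inputs. missing_images and check_images are hypothetical names.

```bash
# Hypothetical helper: print refs listed in $1 (required) that are absent from $2 (present).
missing_images() {
    # comm -23 keeps lines that appear only in the first sorted input
    comm -23 <(sort -u "$1") <(sort -u "$2")
}

# Hypothetical wrapper: report the result and return 1 if anything is missing.
check_images() {
    local missing
    missing=$(missing_images "$1" "$2")
    if [[ -n "$missing" ]]; then
        printf 'Missing images:\n%s\n' "$missing"
        return 1
    fi
    echo "All required images are present."
}

# Example on the master:
#   check_images <(kubeadm config images list --config kubeadm.yaml) <(ctr -n k8s.io images ls -q)
```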

- Initialize the cluster with kubeadm:

kubeadm init --config kubeadm.yaml

root@master:~# kubeadm init --config kubeadm.yaml

[init] Using Kubernetes version: v1.32.1

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action beforehand using 'kubeadm config images pull'

W0209 04:05:45.955712 49522 checks.go:846] detected that the sandbox image "" of the container runtime is inconsistent with that used by kubeadm.It is recommended to use "registry.aliyuncs.com/google_containers/pause:3.10" as the CRI sandbox image.

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local master] and IPs [10.96.0.1 192.168.200.160]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [localhost master] and IPs [192.168.200.160 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [localhost master] and IPs [192.168.200.160 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "super-admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests"

[kubelet-check] Waiting for a healthy kubelet at http://127.0.0.1:10248/healthz. This can take up to 4m0s

[kubelet-check] The kubelet is healthy after 503.750966ms

[api-check] Waiting for a healthy API server. This can take up to 4m0s

[api-check] The API server is healthy after 6.002633068s

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node master as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node master as control-plane by adding the taints [node-role.kubernetes.io/control-plane:NoSchedule]

[bootstrap-token] Using token: abcdef.0123456789abcdef

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

  export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.200.160:6443 --token abcdef.0123456789abcdef \
	--discovery-token-ca-cert-hash sha256:fdd4e72c72ad3cb70675d5004cbc193650cfd919621758b74430261d694c628d

root@master:~#

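Note: if initialization fails, check the containerd and kubelet logs (for example with journalctl -u kubelet), then reset the cluster and initialize it again. If you deploy a different version than this post, make sure the pause image address in the containerd configuration file (registry.aliyuncs.com/google_containers/pause:3.10 here) matches the one kubeadm uses!

Official troubleshooting guide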

kubeadm reset

rm -rf /etc/kubernetes/*

- Configure access to the k8s cluster (the commands are printed in the init output):

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

root@master:~# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master NotReady control-plane 11m v1.32.1

Join nodes to the cluster

Run the join command from the init output on the worker node:

kubeadm join 192.168.200.160:6443 --token abcdef.0123456789abcdef \
  --discovery-token-ca-cert-hash sha256:fdd4e72c72ad3cb70675d5004cbc193650cfd919621758b74430261d694c628d

root@worker01:~# kubeadm join 192.168.200.160:6443 --token abcdef.0123456789abcdef \
  --discovery-token-ca-cert-hash sha256:fdd4e72c72ad3cb70675d5004cbc193650cfd919621758b74430261d694c628d

[preflight] Running pre-flight checks

[preflight] Reading configuration from the "kubeadm-config" ConfigMap in namespace "kube-system"...

[preflight] Use 'kubeadm init phase upload-config --config your-config.yaml' to re-upload it.

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-check] Waiting for a healthy kubelet at http://127.0.0.1:10248/healthz. This can take up to 4m0s

[kubelet-check] The kubelet is healthy after 1.012874735s

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap

This node has joined the cluster:
* Certificate signing request was sent to apiserver and a response was received.
* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.
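The bootstrap token in kubeadm.yaml has ttl: 24h0m0s, so the printed join command stops working after a day. Running kubeadm token create --print-join-command on the master prints a fresh one. The --discovery-token-ca-cert-hash value can also be recomputed from the cluster CA with the openssl pipeline documented for kubeadm join. The sketch wraps that pipeline in a function. ca_cert_hash is a hypothetical name, and the sketch assumes an RSA CA key, matching encryptionAlgorithm: RSA-2048 above.

```bash
# Compute the value for --discovery-token-ca-cert-hash: the SHA-256 of the CA
# certificate's DER-encoded public key. Assumes an RSA key.
#   $1 = CA certificate path (default: /etc/kubernetes/pki/ca.crt)
ca_cert_hash() {
    local cert="${1:-/etc/kubernetes/pki/ca.crt}" hash
    hash=$(openssl x509 -pubkey -in "$cert" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //')
    echo "sha256:${hash}"
}

# Example on the master:
#   printf '%s\n' "--discovery-token-ca-cert-hash $(ca_cert_hash)"
```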

- Because no pod network has been deployed yet, CoreDNS cannot start and the nodes are NotReady:

root@master:~# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master NotReady control-plane 20h v1.32.1

worker01 NotReady <none> 20s v1.32.1

root@master:~# kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-6766b7b6bb-d6x7n 0/1 Pending 0 20h

kube-system coredns-6766b7b6bb-wpsq5 0/1 Pending 0 20h

kube-system etcd-master 1/1 Running 0 20h

kube-system kube-apiserver-master 1/1 Running 0 20h

kube-system kube-controller-manager-master 1/1 Running 1 20h

kube-system kube-proxy-bmfm5 1/1 Running 0 20h

kube-system kube-proxy-wctln 1/1 Running 0 4m41s

kube-system kube-scheduler-master 1/1 Running 0 20h
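To find the nodes that are still NotReady from a script, a one-line awk filter over kubectl get nodes --no-headers is enough. This sketch assumes the default column layout shown above (NAME STATUS ROLES AGE VERSION). not_ready_nodes is a hypothetical name. Cordoned nodes report Ready,SchedulingDisabled and are listed as well. If you only need to wait, kubectl wait --for=condition=Ready node --all --timeout=300s does the polling itself.

```bash
# Hypothetical filter: read `kubectl get nodes --no-headers` output on stdin and print
# the name of every node whose STATUS column is not exactly "Ready".
not_ready_nodes() {
    awk '$2 != "Ready" { print $1 }'
}

# Example: kubectl get nodes --no-headers | not_ready_nodes
```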

- Configure the worker node to access the cluster:

scp -r root@master:/etc/kubernetes/admin.conf /etc/kubernetes/admin.conf

echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> /etc/profile

source /etc/profile

root@worker01:~# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master NotReady control-plane 20h v1.32.1

worker01 NotReady <none> 6m56s v1.32.1

To remove a node:

First, stop the node from accepting new Pods and evict the Pods running on it with kubectl drain:

kubectl drain <节点名称> --ignore-daemonsets --delete-emptydir-data

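- Options:

  - --ignore-daemonsets: ignore Pods managed by DaemonSets

  - --delete-emptydir-data: continue even if Pods use emptyDir volumes; that data is deleted (if any)

  - --force: force the operation, including Pods that are not managed by a controller

Then delete the node: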

kubectl delete node <节点名称>

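Deploy the Calico network

Introduction on the official GitHub page

The Calico project is created and maintained by Tigera and is an open-source project with an active development and user community. Calico Open Source has grown into the most widely adopted container networking and security solution, powering more than 8 million nodes daily across 166 countries. The latest version is fine. There are two installation methods; pick one:

(1) Using the official Calico manifest

Suitable for standard environments: Calico runs as a DaemonSet and provides Pod networking, network policy, and related features.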

curl -O https://raw.githubusercontent.com/projectcalico/calico/v3.29.2/manifests/calico.yaml

vi calico.yaml

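- To customize the pod CIDR, uncomment the following entry and edit it.

If podSubnet was not specified when initializing the cluster, Calico's CIDR is used; if it was specified, the two values must match.

Keep the YAML indentation aligned.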

            - name: CALICO_IPV4POOL_CIDR
              value: "192.168.0.0/16"
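Because the two values must match whenever podSubnet was set, a consistency check before applying calico.yaml can save a redeploy. This is a line-based sketch, not a YAML parser. It assumes kubeadm.yaml has a podSubnet: line as above, and that the CALICO_IPV4POOL_CIDR entry has been uncommented with its value: on the next line. check_pod_cidr is a hypothetical name.

```bash
# Hypothetical check: compare kubeadm's podSubnet with Calico's CALICO_IPV4POOL_CIDR.
#   $1 = kubeadm.yaml, $2 = calico.yaml
check_pod_cidr() {
    local kubeadm_cidr calico_cidr
    # Second field of the "podSubnet:" line (a trailing "# comment" is ignored)
    kubeadm_cidr=$(awk '$1 == "podSubnet:" { print $2; exit }' "$1")
    # Uncommented "- name: CALICO_IPV4POOL_CIDR": read the next line, drop quotes, take the value
    calico_cidr=$(awk '$1 == "-" && $2 == "name:" && $3 == "CALICO_IPV4POOL_CIDR" {
                           getline; gsub(/"/, ""); print $2; exit }' "$2")
    if [[ -z "$kubeadm_cidr" || -z "$calico_cidr" ]]; then
        echo "podSubnet='${kubeadm_cidr}' CALICO_IPV4POOL_CIDR='${calico_cidr}' (one is unset)"
        return 0
    fi
    if [[ "$kubeadm_cidr" == "$calico_cidr" ]]; then
        echo "OK: both use $kubeadm_cidr"
    else
        echo "MISMATCH: kubeadm podSubnet=$kubeadm_cidr, Calico=$calico_cidr" >&2
        return 1
    fi
}

# Example on the master: check_pod_cidr kubeadm.yaml calico.yaml
```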

- Import the images Calico needs:

calico/node:v3.29.2

calico/kube-controllers:v3.29.2

calico/cni:v3.29.2

root@master:~# ctr -n k8s.io images import all_docker_images.tar

docker.io/calico/cni:v3.29.2 saved

docker.io/calico/node:v3.29.2 saved

docker.io/calico/kube controllers:v3.29. saved

application/vnd.oci.image.manifest.v1+json sha256:38f50349e2d40f94db2c7d7e3edf777a40b884a3903932c07872ead2a4898b85

application/vnd.oci.image.manifest.v1+json sha256:63bf93f2b3698ff702fa325d215f20a22d50a70d1da2a425b8ce1f9767a5e393

application/vnd.oci.image.manifest.v1+json sha256:27942e6de00c7642be5522f45ab3efe3436de8223fc72c4be1df5da7d88a3b22

Importing elapsed: 25.0s total: 0.0 B (0.0 B/s)
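calico-node runs as a DaemonSet on every node, so the worker needs these images too: import them there as well, or make them pullable from a registry. To see which images a manifest references before exporting or importing them, the sketch below collects the image: values. manifest_images is a hypothetical helper, and the matching is line-based.

```bash
# Hypothetical helper: list the unique container images referenced in a manifest.
manifest_images() {
    # Match "image: ref" and "- image: ref"; commented lines start with "#" and are skipped
    awk '$1 == "image:" { print $2 }
         $1 == "-" && $2 == "image:" { print $3 }' "$1" | tr -d '"' | sort -u
}

# Example: manifest_images calico.yaml
```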

- Apply the Calico manifest:

root@master:~# kubectl apply -f calico.yaml

poddisruptionbudget.policy/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

serviceaccount/calico-node created

serviceaccount/calico-cni-plugin created

configmap/calico-config created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgpfilters.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/caliconodestatuses.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipreservations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/tiers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/adminnetworkpolicies.policy.networking.k8s.io created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrole.rbac.authorization.k8s.io/calico-cni-plugin created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-cni-plugin created

daemonset.apps/calico-node created

deployment.apps/calico-kube-controllers created

- Check the nodes: they are now Ready, and the default pod network range is 172.17.0.0/16:

root@master:~# kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-77969b7d87-vbgzf 1/1 Running 0 46s

kube-system calico-node-52dd8 0/1 Running 0 46s

kube-system calico-node-d5zc2 1/1 Running 0 46s

kube-system coredns-6766b7b6bb-d6x7n 1/1 Running 0 26h

kube-system coredns-6766b7b6bb-wpsq5 1/1 Running 0 26h

kube-system etcd-master 1/1 Running 0 26h

kube-system kube-apiserver-master 1/1 Running 0 26h

kube-system kube-controller-manager-master 1/1 Running 1 26h

kube-system kube-proxy-bmfm5 1/1 Running 0 26h

kube-system kube-proxy-wctln 1/1 Running 0 5h37m

kube-system kube-scheduler-master 1/1 Running 0 26h

root@master:~# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready control-plane 26h v1.32.1

worker01 Ready <none> 5h37m v1.32.1

root@master:~# kubectl get ippool -o yaml | grep cidr
    cidr: 172.17.0.0/16

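(2) Installing Calico the way the Calico documentation recommends

Suitable for production: the Tigera Operator manages the Calico deployment centrally and provides automatic upgrades, configuration management, and more.

- Install the Tigera Calico operator and the custom resource definitions: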

curl -O https://raw.githubusercontent.com/projectcalico/calico/v3.29.2/manifests/tigera-operator.yaml

kubectl create -f tigera-operator.yaml

root@master:~# kubectl create -f tigera-operator.yaml

namespace/tigera-operator created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgpfilters.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/caliconodestatuses.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipreservations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/tiers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/adminnetworkpolicies.policy.networking.k8s.io created

customresourcedefinition.apiextensions.k8s.io/apiservers.operator.tigera.io created

customresourcedefinition.apiextensions.k8s.io/imagesets.operator.tigera.io created

customresourcedefinition.apiextensions.k8s.io/installations.operator.tigera.io created

customresourcedefinition.apiextensions.k8s.io/tigerastatuses.operator.tigera.io created

serviceaccount/tigera-operator created

clusterrole.rbac.authorization.k8s.io/tigera-operator created

clusterrolebinding.rbac.authorization.k8s.io/tigera-operator created

deployment.apps/tigera-operator created

- Install Calico by creating the necessary custom resources:

curl -O https://raw.githubusercontent.com/projectcalico/calico/v3.29.2/manifests/custom-resources.yaml

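- The pod CIDR can be set in this file. If it was not specified when initializing the cluster, Calico's CIDR is used; if it was specified, the two must match: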

cidr: 192.168.0.0/16
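If podSubnet was set in kubeadm.yaml, the cidr: value can be aligned with it before running kubectl create. Below is a sed sketch, assuming the file defines only the default IP pool, so every cidr: line should change. set_operator_cidr is a hypothetical name.

```bash
# Hypothetical helper: set every "cidr:" value in an operator custom-resources file.
#   $1 = file (e.g. custom-resources.yaml), $2 = new CIDR (e.g. 10.244.0.0/16)
set_operator_cidr() {
    local file="$1" cidr="$2"
    # Keep indentation; "#" is the sed delimiter because CIDRs contain "/"
    sed -i -E "s#^([[:space:]]*)cidr: .*#\1cidr: ${cidr}#" "$file"
    # Succeed only if the new value is now present
    grep -Eq "^[[:space:]]*cidr: ${cidr}\$" "$file"
}

# Example on the master: set_operator_cidr custom-resources.yaml 10.244.0.0/16
```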

kubectl create -f custom-resources.yaml

root@master:~# kubectl create -f custom-resources.yaml

installation.operator.tigera.io/default created

apiserver.operator.tigera.io/default created

root@master:~# kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

calico-apiserver calico-apiserver-6966f49c46-5jwmd 1/1 Running 0 31m

calico-apiserver calico-apiserver-6966f49c46-6rlgk 1/1 Running 0 31m

kube-system coredns-69767bd799-lhjr5 1/1 Running 0 5s

kube-system coredns-7c6d7f5fd8-ljgt4 1/1 Running 0 3m40s

kube-system etcd-master 1/1 Running 0 86m

kube-system kube-apiserver-master 1/1 Running 0 86m

kube-system kube-controller-manager-master 1/1 Running 0 86m

kube-system kube-proxy-c8frl 1/1 Running 0 86m

kube-system kube-proxy-rtwtr 1/1 Running 0 86m

kube-system kube-scheduler-master 1/1 Running 0 86m

tigera-operator tigera-operator-ccfc44587-7kgzp 1/1 Running 0 81m

- Set up kubectl command completion so that commands can be completed with Tab:

apt install -y bash-completion

source /usr/share/bash-completion/bash_completion

source <(kubectl completion bash)

echo "source <(kubectl completion bash)" >> ~/.bashrc
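Running the last echo a second time appends a duplicate line to ~/.bashrc. An idempotent append avoids that. append_once is a hypothetical helper.

```bash
# Hypothetical helper: append a line to a file only if that exact line is not already there.
append_once() {
    local line="$1" file="$2"
    touch "$file"
    # -x: whole-line match, -F: fixed string (so "<(" is not treated as a regex)
    grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Example: append_once 'source <(kubectl completion bash)' ~/.bashrc
```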

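Configure the Dashboard

Kubernetes Dashboard is a general-purpose web UI for Kubernetes clusters. It lets users manage and troubleshoot the applications running in the cluster, as well as manage the cluster itself.

Starting with version 7.0.0, Kubernetes Dashboard no longer supports manifest-based installation. Only Helm-based installation is supported, because it is faster and gives better control over all the dependencies needed to run Dashboard.

Official GitHub repository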

-

安装helm

Helm 是一款简化 Kubernetes 应用程序安装和管理的工具。可以将其视为 Kubernetes 的 apt/yum/homebrew

Download: official GitHub releases page

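Helm is installed here from the binary release; for other methods (install script, package managers, etc.) see the official Helm documentation.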

- Extract the archive and move the binary into place

tar -xf helm-v3.17.0-linux-amd64.tar.gz

mv linux-amd64/helm /usr/local/bin/helm

root@master:~# helm list

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION
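Before extracting, it is worth checking the tarball against its published checksum. A sketch under stated assumptions: the expected SHA-256 value is taken from the checksum file that the Helm release page links next to each tarball, and `install_helm` is a hypothetical helper, not part of Helm.

```shell
#!/usr/bin/env bash
# Hypothetical helper: verify a Helm release tarball, then install the binary.
install_helm() {
  local tarball="$1" expected="$2" dest="${3:-/usr/local/bin}"
  local actual workdir rc
  actual=$(sha256sum "$tarball" | awk '{print $1}')
  if [ "$actual" != "$expected" ]; then
    echo "checksum mismatch for $tarball" >&2
    return 1
  fi
  workdir=$(mktemp -d)
  # The linux-amd64 release tarball unpacks to linux-amd64/helm.
  tar -xzf "$tarball" -C "$workdir" \
    && install -m 0755 "$workdir/linux-amd64/helm" "$dest/helm"
  rc=$?
  rm -rf "$workdir"   # clean up the scratch directory either way
  return "$rc"
}
```

For example, `install_helm helm-v3.17.0-linux-amd64.tar.gz <sha256-from-release-page>` followed by `helm version` to confirm the client works.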

Two installation methods are given below; pick either one.

- First, manually import the images the chart needs

root@master:~# ctr -n k8s.io images import k8s_dashboard_7.10.4.tar

docker.io/kubernetesui/dashboard metrics saved

docker.io/kubernetesui/dashboard api:1.1 saved

docker.io/kubernetesui/dashboard web:1.6 saved

docker.io/kubernetesui/dashboard auth:1. saved

application/vnd.oci.image.manifest.v1+json sha256:0295030c88f838b4119dc12513131e81bfbdd1d04550049649750aec09bf45b4

application/vnd.oci.image.manifest.v1+json sha256:fde4440560bbdd2a5330e225e7adda111097b8a08e351da6e9b1c5d1bd67e0e4

application/vnd.oci.image.manifest.v1+json sha256:17979eb1f79ee098f0b2f663d2fb0bfc55002a2bcf07df87cd28d88a43e2fc3a

application/vnd.oci.image.manifest.v1+json sha256:22ab8b612555e4f76c8757a262b8e1398abc81522af7b0f6d9159e5aa68625c1

Importing elapsed: 10.9s total: 0.0 B (0.0 B/s)

root@master:~# ctr -n k8s.io images import kong-3.8.tar

docker.io/library/kong:3.8 saved

application/vnd.oci.image.manifest.v1+json sha256:d6eea46f36d363d1c27bb4b598a1cd3bbee30e79044c5e3717137bec84616d50

Importing elapsed: 7.4 s total: 0.0 B (0.0 B/s)
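containerd stores images per node, so if Dashboard pods may be scheduled on worker01, the same archives have to be imported there too. A minimal sketch that imports every `*.tar` in a directory (defaulting to `/root`, where this lab keeps uploaded packages); `import_images` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: import every *.tar image archive in a directory into
# containerd's "k8s.io" namespace, the namespace used by the kubelet's CRI.
import_images() {
  local dir="${1:-/root}" f count=0
  for f in "$dir"/*.tar; do
    [ -e "$f" ] || continue          # no match: the glob stays unexpanded
    echo "importing $f"
    ctr -n k8s.io images import "$f" || return 1
    count=$((count + 1))
  done
  echo "imported $count archive(s) from $dir"
}
```

Run `import_images /root` on every node, then `crictl images | grep -E 'kubernetesui|kong'` to confirm the images are visible to the kubelet.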

- Method 1: install Kubernetes Dashboard from the Helm repository (official documentation)

helm repo add kubernetes-dashboard https://kubernetes.github.io/dashboard/

root@master:~# helm upgrade --install kubernetes-dashboard kubernetes-dashboard/kubernetes-dashboard --create-namespace --namespace kubernetes-dashboard

Release "kubernetes-dashboard" does not exist. Installing it now.

NAME: kubernetes-dashboard

LAST DEPLOYED: Tue Feb 11 02:28:55 2025

NAMESPACE: kubernetes-dashboard

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

*************************************************************************************************

*** PLEASE BE PATIENT: Kubernetes Dashboard may need a few minutes to get up and become ready ***

*************************************************************************************************

Congratulations! You have just installed Kubernetes Dashboard in your cluster.

To access Dashboard run:

kubectl -n kubernetes-dashboard port-forward svc/kubernetes-dashboard-kong-proxy 8443:443

NOTE: In case port-forward command does not work, make sure that kong service name is correct.

Check the services in Kubernetes Dashboard namespace using:

kubectl -n kubernetes-dashboard get svc

Dashboard will be available at:

https://localhost:8443
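Without `--version`, Helm installs the latest chart, which may request image tags other than the ones imported above. A sketch that pins the chart to 7.10.4, the version shown by `helm list` later in this walkthrough; `install_dashboard` is a hypothetical wrapper.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: install (or upgrade) the Dashboard chart pinned to one version,
# so the chart references the same image tags that were imported beforehand.
install_dashboard() {
  local version="${1:-7.10.4}"
  helm repo add kubernetes-dashboard https://kubernetes.github.io/dashboard/ || return 1
  helm repo update kubernetes-dashboard || return 1
  helm upgrade --install kubernetes-dashboard kubernetes-dashboard/kubernetes-dashboard \
    --create-namespace --namespace kubernetes-dashboard --version "$version"
}
```

`helm upgrade --install` is idempotent: it installs the release if missing and upgrades it otherwise.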

- After installation, the Dashboard is reachable only from inside the cluster

root@master:~# kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-77969b7d87-jsndm 1/1 Running 0 112m

kube-system calico-node-smkh7 1/1 Running 0 112m

kube-system calico-node-tjpg8 1/1 Running 0 112m

kube-system coredns-69767bd799-lhjr5 1/1 Running 0 17h

kube-system coredns-69767bd799-tzd2g 1/1 Running 0 17h

kube-system etcd-master 1/1 Running 0 19h

kube-system kube-apiserver-master 1/1 Running 0 19h

kube-system kube-controller-manager-master 1/1 Running 2 (47m ago) 19h

kube-system kube-proxy-c8frl 1/1 Running 0 19h

kube-system kube-proxy-rtwtr 1/1 Running 0 19h

kube-system kube-scheduler-master 1/1 Running 2 (47m ago) 19h

kubernetes-dashboard kubernetes-dashboard-api-6d65fb674d-2gzcc 1/1 Running 0 2m7s

kubernetes-dashboard kubernetes-dashboard-auth-65569d7fdd-vbr54 1/1 Running 0 2m7s

kubernetes-dashboard kubernetes-dashboard-kong-79867c9c48-6fvhh 1/1 Running 0 2m7s

kubernetes-dashboard kubernetes-dashboard-metrics-scraper-55cc88cbcb-gpdkz 1/1 Running 0 2m7s

kubernetes-dashboard kubernetes-dashboard-web-8f95766b5-qhq4p 1/1 Running 0 2m7s

root@master:~# kubectl -n kubernetes-dashboard get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes-dashboard-api ClusterIP 10.105.81.232 <none> 8000/TCP 14s

kubernetes-dashboard-auth ClusterIP 10.108.168.165 <none> 8000/TCP 14s

kubernetes-dashboard-kong-proxy ClusterIP 10.109.133.99 <none> 443/TCP 14s

kubernetes-dashboard-metrics-scraper ClusterIP 10.104.172.95 <none> 8000/TCP 14s

kubernetes-dashboard-web ClusterIP 10.106.165.230 <none> 8000/TCP 14s

- Make it reachable from outside the cluster

root@master:~# kubectl -n kubernetes-dashboard patch svc kubernetes-dashboard-kong-proxy -p '{"spec": {"type": "NodePort"}}'

service/kubernetes-dashboard-kong-proxy patched

root@master:~# kubectl -n kubernetes-dashboard get svc kubernetes-dashboard-kong-proxy

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes-dashboard-kong-proxy NodePort 10.109.133.99 <none> 443:30741/TCP 7m20s

root@master:~#
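The assigned NodePort (30741 here) is random, so it helps to read it back instead of copying it from the table. A sketch using kubectl JSONPath filters; it assumes the first node's InternalIP is reachable from the browser (any node IP works for a NodePort), and `dashboard_url` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the external HTTPS URL of the Dashboard's Kong proxy.
dashboard_url() {
  local ns="${1:-kubernetes-dashboard}" svc="${2:-kubernetes-dashboard-kong-proxy}"
  local port ip
  # NodePort mapped to the Service's port 443.
  port=$(kubectl -n "$ns" get svc "$svc" -o jsonpath='{.spec.ports[?(@.port==443)].nodePort}') || return 1
  # InternalIP of the first node in the list.
  ip=$(kubectl get nodes -o jsonpath='{.items[0].status.addresses[?(@.type=="InternalIP")].address}') || return 1
  echo "https://${ip}:${port}"
}
```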

- To use a custom port, edit the Service (optional)

kubectl edit svc kubernetes-dashboard-kong-proxy -n kubernetes-dashboard
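A non-interactive alternative to `kubectl edit` is a JSON patch. This sketch assumes `ports[0]` is the 443 entry, which matches the single-port Service shown above (443:30741/TCP), and that the API server uses the default NodePort range 30000-32767; `set_nodeport` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set a fixed NodePort on the Kong proxy Service.
set_nodeport() {
  local port="$1" ns="${2:-kubernetes-dashboard}" svc="${3:-kubernetes-dashboard-kong-proxy}"
  # Reject anything outside the default --service-node-port-range.
  if ! [[ "$port" =~ ^[0-9]+$ ]] || [ "$port" -lt 30000 ] || [ "$port" -gt 32767 ]; then
    echo "port $port is outside the default NodePort range 30000-32767" >&2
    return 1
  fi
  # Replace the nodePort of the first port entry (assumed to be 443).
  kubectl -n "$ns" patch svc "$svc" --type=json \
    -p "[{\"op\":\"replace\",\"path\":\"/spec/ports/0/nodePort\",\"value\":${port}}]"
}
```

For example, `set_nodeport 30443`; the API server rejects the patch if that port is already taken by another Service.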

- Restart CoreDNS

root@master:~# kubectl -n kube-system delete pod -l k8s-app=kube-dns

pod "coredns-69767bd799-lhjr5" deleted

pod "coredns-69767bd799-tzd2g" deleted
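Deleting the pods by label works; a rollout restart does the same job but replaces the pods gradually according to the Deployment's rolling-update strategy instead of removing all of them at once. A sketch assuming the kubeadm default Deployment name `coredns` (consistent with the `coredns-69767bd799-*` pod names above); `restart_coredns` is a hypothetical wrapper.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: restart CoreDNS via its Deployment and wait for completion.
restart_coredns() {
  # Triggers a rolling replacement of the CoreDNS pods.
  kubectl -n kube-system rollout restart deployment/coredns || return 1
  # Block until the rollout finishes, or fail after the timeout.
  kubectl -n kube-system rollout status deployment/coredns --timeout=120s
}
```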

- Open the web UI at https://<node-IP>:30741

- Create a service account and generate a token to log in

root@master:~# kubectl create serviceaccount admin-user -n kubernetes-dashboard

serviceaccount/admin-user created

root@master:~# kubectl create clusterrolebinding admin-user --clusterrole=cluster-admin --serviceaccount=kubernetes-dashboard:admin-user

clusterrolebinding.rbac.authorization.k8s.io/admin-user created

root@master:~# kubectl -n kubernetes-dashboard create token admin-user

eyJhbGciOiJSUzI1NiIsImtpZCI6Ikd4Z2syd3psak1WTGhNYlFKVW5CY09DdFJPTnVPMHdFV1RUTzlOWFNkVDAifQ.eyJhdWQiOlsiaHR0cHM6Ly9rdWJlcm5ldGVzLmRlZmF1bHQuc3ZjLmNsdXN0ZXIubG9jYWwiXSwiZXhwIjoxNzM5MjQ1NjI3LCJpYXQiOjE3MzkyNDIwMjcsImlzcyI6Imh0dHBzOi8va3ViZXJuZXRlcy5kZWZhdWx0LnN2Yy5jbHVzdGVyLmxvY2FsIiwianRpIjoiM2M2N2VkNmYtNTJiNS00NGIxLTkwYzMtODAwYzU2Njk4MGIyIiwia3ViZXJuZXRlcy5pbyI6eyJuYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsInNlcnZpY2VhY2NvdW50Ijp7Im5hbWUiOiJhZG1pbi11c2VyIiwidWlkIjoiM2RmZGM2NGEtYWFmYy00ODIxLWE3M2EtMDUwZTA5NGZkYmU2In19LCJuYmYiOjE3MzkyNDIwMjcsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlcm5ldGVzLWRhc2hib2FyZDphZG1pbi11c2VyIn0.eSt6MaWzSIYjl8AdPq4ndP5kkG-W7IE1fIV1m_zUBmpWMXkyyqOiiF6qzqrNP-6AoHq0mlLgeDdL_bzhTTAr0OWakWswLNXxd_IfUjcbEYzWJen7wvIsMmHMYy7j3WU4yaLefX_TxWuhzCegVvnEPjn3prEl0qVGyqqr5cnRylkcZigucV3dp3-3tXetTm5TAsUAASb8ZVKD3Mdp5TJmebNrvJ0VqnyKENyZvp-vW6zpM4NTpKprQwRWnchGThLMJM0W_QDV4wp_oSKkNsZ9N6XLQHhfCPhTxGuoSKWBBfbJzLv6VWHMRlM1NnXcNhjepinE7weQLUZ6IS7vGBwDUg
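A token from `kubectl create token` is short-lived: in the token above, the `iat` and `exp` claims are 3600 seconds (one hour) apart. `kubectl create token` accepts `--duration` to request a longer lifetime; alternatively, a Secret of type `kubernetes.io/service-account-token` holds a token that stays valid until the Secret is deleted. A sketch of the latter, assuming the `admin-user` account created above; `create_admin_token_secret` is a hypothetical helper, and a non-expiring cluster-admin token should only be used in a lab like this one.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create a Secret-backed (non-expiring) token for a
# service account and print it.
create_admin_token_secret() {
  local ns="${1:-kubernetes-dashboard}" sa="${2:-admin-user}" token="" i
  kubectl apply -f - <<EOF || return 1
apiVersion: v1
kind: Secret
metadata:
  name: ${sa}
  namespace: ${ns}
  annotations:
    kubernetes.io/service-account.name: "${sa}"
type: kubernetes.io/service-account-token
EOF
  # The token controller fills .data.token asynchronously, so poll briefly.
  for i in 1 2 3 4 5; do
    token=$(kubectl -n "$ns" get secret "$sa" -o jsonpath='{.data.token}')
    [ -n "$token" ] && break
    sleep 1
  done
  [ -n "$token" ] || return 1
  # Secret data is base64-encoded.
  printf '%s' "$token" | base64 -d
}
```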

Note: to remove the Helm release and start over, use the following commands

root@master:~# helm list -n kubernetes-dashboard

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

kubernetes-dashboard kubernetes-dashboard 1 2025-02-11 02:28:55.751187857 +0000 UTC deployed kubernetes-dashboard-7.10.4

root@master:~# helm delete kubernetes-dashboard -n kubernetes-dashboard

release "kubernetes-dashboard" uninstalled
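`helm delete` (an alias of `helm uninstall`) removes only what the chart created. The ClusterRoleBinding and ServiceAccount created by hand with kubectl above remain, as does the namespace. A cleanup sketch; `cleanup_dashboard` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: remove the Dashboard release plus the objects created by hand.
cleanup_dashboard() {
  local ns="${1:-kubernetes-dashboard}"
  helm uninstall kubernetes-dashboard -n "$ns" || return 1
  # Created with kubectl, so not part of the Helm release.
  kubectl delete clusterrolebinding admin-user --ignore-not-found
  # Deleting the namespace also removes the admin-user ServiceAccount inside it.
  kubectl delete namespace "$ns" --ignore-not-found
}
```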

- Method 2: custom installation: download the chart and edit its values file

- See the official documentation for a description of the values parameters

tar -xf kubernetes-dashboard-7.10.4.tgz

vi kubernetes-dashboard/values.yaml

- Enable HTTP and external access

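kong:
  # ... (other kong settings unchanged)
  proxy:
    type: NodePort      # changed (default: ClusterIP)
    http:
      enabled: true     # enable HTTP access
      nodePort: 30080   # added
    tls:                # added; the Kong subchart configures the HTTPS listener under "tls"
      enabled: true     # added
      nodePort: 30443   # added: HTTPS node port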
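The same settings can be passed with `--set` instead of editing `values.yaml`. This sketch assumes the Kong subchart keys `kong.proxy.type`, `kong.proxy.http.*` and `kong.proxy.tls.*`; the unpacked chart itself can be fetched with `helm pull kubernetes-dashboard/kubernetes-dashboard --version 7.10.4`. `install_dashboard_nodeport` is a hypothetical wrapper.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: install the local chart with NodePort HTTP (30080) and HTTPS (30443).
install_dashboard_nodeport() {
  local ns="${1:-kube-dashboard}" chart="${2:-./kubernetes-dashboard}"
  helm upgrade --install kubernetes-dashboard "$chart" \
    --create-namespace --namespace "$ns" \
    --set kong.proxy.type=NodePort \
    --set kong.proxy.http.enabled=true \
    --set kong.proxy.http.nodePort=30080 \
    --set kong.proxy.tls.enabled=true \
    --set kong.proxy.tls.nodePort=30443
}
```

Running `helm template kubernetes-dashboard ./kubernetes-dashboard` with the same `--set` flags and grepping for `nodePort` previews the rendered Service before anything is installed.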

- Create the namespace

kubectl create ns kube-dashboard

- Install the local chart with Helm

root@master:~# helm install kubernetes-dashboard ./kubernetes-dashboard --namespace kube-dashboard

NAME: kubernetes-dashboard

LAST DEPLOYED: Tue Feb 11 03:16:02 2025

NAMESPACE: kube-dashboard

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

*************************************************************************************************

*** PLEASE BE PATIENT: Kubernetes Dashboard may need a few minutes to get up and become ready ***

*************************************************************************************************

Congratulations! You have just installed Kubernetes Dashboard in your cluster.

To access Dashboard run:

kubectl -n kube-dashboard port-forward svc/kubernetes-dashboard-kong-proxy 8443:443

NOTE: In case port-forward command does not work, make sure that kong service name is correct.

Check the services in Kubernetes Dashboard namespace using:

kubectl -n kube-dashboard get svc

Dashboard will be available at:

https://localhost:8443

root@master:~# kubectl get svc -n kube-dashboard

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes-dashboard-api ClusterIP 10.104.102.123 <none> 8000/TCP 3m29s

kubernetes-dashboard-auth ClusterIP 10.108.76.123 <none> 8000/TCP 3m29s

kubernetes-dashboard-kong-proxy NodePort 10.111.226.77 <none> 80:30080/TCP,443:30443/TCP 3m29s

kubernetes-dashboard-metrics-scraper ClusterIP 10.111.158.35 <none> 8000/TCP 3m29s

kubernetes-dashboard-web ClusterIP 10.110.180.200 <none> 8000/TCP

Access over HTTP (http://<node-IP>:30080)

Access over HTTPS (https://<node-IP>:30443)
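Both endpoints can be checked from the command line before opening a browser. A sketch assuming the proxy serves a self-signed certificate (hence `-k`, which skips certificate verification) and treating any 2xx/3xx status as reachable; `check_endpoint` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report the HTTP status of a URL; succeed on 2xx/3xx.
check_endpoint() {
  local url="$1" code
  # -s silences progress, -o discards the body, -w prints only the status code.
  code=$(curl -k -s -o /dev/null -w '%{http_code}' --max-time 5 "$url") || return 1
  echo "$url -> HTTP $code"
  [ "$code" -ge 200 ] && [ "$code" -lt 400 ]
}
```

For example, `check_endpoint http://192.168.200.160:30080` and `check_endpoint https://192.168.200.160:30443`.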

相关文章:

基于Ubuntu2404搭建k8s-1.31集群

k8s 1.31 环境初始化安装Container安装runc安装CNI插件部署k8s集群安装crictl使用kubeadm部署集群节点加入集群部署Calico网络配置dashboard 本实验基于VMware创建的Ubuntu2404虚拟机搭建k8s 1.31版本集群,架构为一主一从,容器运行时使用Container&#…...

Golang的图形编程应用案例

Golang的图形编程应用案例 一、Golang的图形编程概述 是一种高效、可靠且易于使用的编程语言,具有并发性和简洁性,因此在图形编程领域也有着广泛的应用。Golang的图形编程主要通过各种图形库来实现,其中最知名的是Go图形库(Ebiten…...

PostgreSQL 错误代码 23505 : ERROR: duplicate key value violates unique constraint

目录 1. 确认错误信息2. 检查数据3. 处理重复数据4. 检查唯一约束5. 添加唯一约束6. 使用事务处理并发操作7. 使用触发器8. 使用 ON CONFLICT 子句9. 重置序列10. 捕获异常并重试 错误代码 23505 是 PostgreSQL 中表示违反唯一约束(unique violation)的标…...

基于SpringBoot和PostGIS的省域“地理难抵点(最纵深处)”检索及可视化实践

目录 前言 1、研究背景 2、研究意义 一、研究目标 1、“地理难抵点”的概念 二、“难抵点”空间检索实现 1、数据获取与处理 2、计算流程 3、难抵点计算 4、WebGIS可视化 三、成果展示 1、华东地区 2、华南地区 3、华中地区 4、华北地区 5、西北地区 6、西南地…...

MySQL InnoDB引擎 MVCC

MVCC(Multi-Version Concurrency Control)即多版本并发控制,是 MySQL 的 InnoDB 存储引擎实现并发控制的一种重要技术。它在很多情况下避免了加锁操作,从而提高了数据库的并发性能。 一、原理 MVCC 的核心思想是通过保存数据在某…...

服务器使用centos7.9操作系统前需要做的准备工作

文章目录 前言1.操作记录 总结 前言 记录一下centos7.9操作系统的服务器在部署业务服务之前需要做的准备工作。 大家可以复制到自己的编辑器里面,有需求的注释一些步骤。 备注:有条件的项目推荐使用有长期支持的操作系统版本。 1.操作记录 # 更换阿里云…...

【Prometheus】prometheus结合cAdvisor监控docker容器运行状态,并且实现实时告警通知

✨✨ 欢迎大家来到景天科技苑✨✨ 🎈🎈 养成好习惯,先赞后看哦~🎈🎈 🏆 作者简介:景天科技苑 🏆《头衔》:大厂架构师,华为云开发者社区专家博主,阿里云开发者社区专家博主,CSDN全栈领域优质创作者,掘金优秀博主,51CTO博客专家等。 🏆《博客》:Python全…...

【Stable Diffusion模型测试】测试ControlNet,没有线稿图?

相信很多小伙伴跟我一样,在测试Stable Diffusion的Lora模型时,ControlNet没有可输入的线稿图,大家的第一反应就是百度搜,但是能从互联网上搜到的高质量线稿图,要么收费,要么质量很差。 现在都什么年代了&a…...

算法刷题-数组系列-卡码网.区间和

题目描述 给定一个整数数组 Array,请计算该数组在每个指定区间内元素的总和。 示例: 输入: 5 1 2 3 4 5 0 1 1 3 输出: 3 9 要点 本题目以ACM的形式输入输出,与力扣的形式不一样,考察头文件的书写、数据结构的书写、…...

Druid GetConnectionTimeoutException解决方案之一

> Druid版本:v1.2.18 最近项目中经常出现:com.alibaba.druid.pool.GetConnectionTimeoutException: wait millis 120000, active 0, maxActive 128, creating 0, createErrorCount 2,但是其他平台连接这个数据源正常的 于是做了一个实验复…...

【JavaScript爬虫记录】记录一下使用JavaScript爬取m4s流视频过程(内含ffmpeg合并)

前言 前段时间发现了一个很喜欢的视频,可惜网站不让下载,简单看了一下视频是被切片成m4s格式的流文件,初步想法是将所有的流文件下载下来然后使用ffmpeg合并成一个完整的mp4,于是写了一段脚本来实现一下,电脑没有配python环境,所以使用JavaScript实现,合并功能需要安装ffmpeg,…...

CSDN2024年度总结|乾坤未定你我皆是黑马|2025一起为了梦想奋斗加油少年!!!

CSDN2024年我的创作纪念日1024天|不忘初心|努力上进|积极向前 一、前言:二、2024个人成长经历:HarmonyOS鸿蒙应用生态构建与扩展——杭州站AGI创新工坊&神经网络大模型——杭州站 三、2024年度创作总结:2024创作数据总结:博客…...

【前端】 react项目使用bootstrap、useRef和useState之间的区别和应用

一、场景描述 我想写一个轮播图的程序,只是把bootstrap里面的轮播图拉过来就用上感觉不是很合适,然后我就想自己写自动轮播,因此,这篇文章里面只是自动轮播的部分,没有按键跟自动轮播的衔接部分。 Ps: 本文用的是函数…...

联想电脑如何进入BIOS?

打开设置 下滑找到更新与安全 点击恢复和立即重新启动 选择疑难解答 选择UEFI固件设置 然后如果有重启点击重启 重启开机时一直点击FNF10进入BIOS界面...

)

蓝桥杯单片机大模板(西风)

#include <REGX52.H> #include "Key.h" #include "Seg.h" //变量声明区 unsigned char Key_Val,Key_Down,Key_Old;//按键扫描专用变量 unsigned char Key_Slow_Down;//按键减速专用变量 10ms unsigned int Seg_Slow_Down;//按键扫描专用变量 500ms …...

20250213刷机飞凌的OK3588-C_Linux5.10.209+Qt5.15.10_用户资料_R1

20250213刷机飞凌的OK3588-C_Linux5.10.209Qt5.15.10_用户资料_R1 2025/2/13 15:10 缘起:OK3588-C_Linux5.10.66Qt5.15.2的R5都出来了。但是公司一直在R4上面开发的,不想动了。 不过我的原则,只要是有新的系统SDK/BSP放出来,都先在…...

2.13学习记录

web ezSSTI 根据题意,这题考察ssti漏洞,查询有关信息得知这是一种模版攻击漏洞。这种题目可以利用工具进行解决,用焚靖,这是一个针对CTF比赛中Jinja SSTI绕过WAF的全自动脚本 根据教程安装工具和对应的依赖就可以了这个脚本会自…...

【DeepSeek】Deepseek辅组编程-通过卫星轨道计算终端距离、相对速度和多普勒频移

引言 笔者在前面的文章中,介绍了基于卫星轨道参数如何计算终端和卫星的距离,相对速度和多普勒频移。 【一文读懂】卫星轨道的轨道参数(六根数)和位置速度矢量转换及其在终端距离、相对速度和多普勒频移计算中的应用 Matlab程序 …...

JavaEE架构

一.架构选型 1.VM架构 VM架构通常指的是虚拟机(Virtual Machine)的架构。虚拟机是一种软件实现的计算机系统,它模拟了物理计算机的功能,允许在单一物理硬件上运行多个操作系统实例。虚拟机架构主要包括以下几个关键组件ÿ…...

Docker 网络的几种常见类型

目录 Docker 网络类型 桥接网络(Bridge) 通俗解释 特点 使用场景 示例 主机网络(Host) 通俗解释 特点 使用场景 示例 None 网络 通俗解释 特点 使用场景 示例 Overlay 网络 通俗解释 特点 使用场景 示例 Ma…...

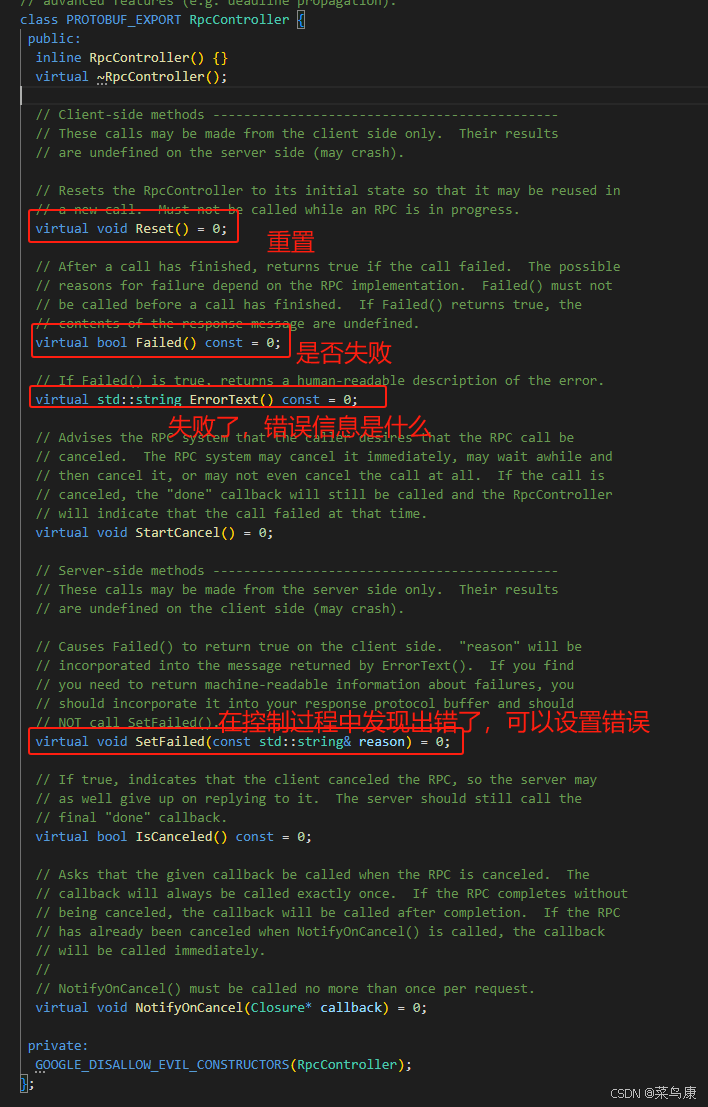

C++实现分布式网络通信框架RPC(3)--rpc调用端

目录 一、前言 二、UserServiceRpc_Stub 三、 CallMethod方法的重写 头文件 实现 四、rpc调用端的调用 实现 五、 google::protobuf::RpcController *controller 头文件 实现 六、总结 一、前言 在前边的文章中,我们已经大致实现了rpc服务端的各项功能代…...

反向工程与模型迁移:打造未来商品详情API的可持续创新体系

在电商行业蓬勃发展的当下,商品详情API作为连接电商平台与开发者、商家及用户的关键纽带,其重要性日益凸显。传统商品详情API主要聚焦于商品基本信息(如名称、价格、库存等)的获取与展示,已难以满足市场对个性化、智能…...

Java如何权衡是使用无序的数组还是有序的数组

在 Java 中,选择有序数组还是无序数组取决于具体场景的性能需求与操作特点。以下是关键权衡因素及决策指南: ⚖️ 核心权衡维度 维度有序数组无序数组查询性能二分查找 O(log n) ✅线性扫描 O(n) ❌插入/删除需移位维护顺序 O(n) ❌直接操作尾部 O(1) ✅内存开销与无序数组相…...

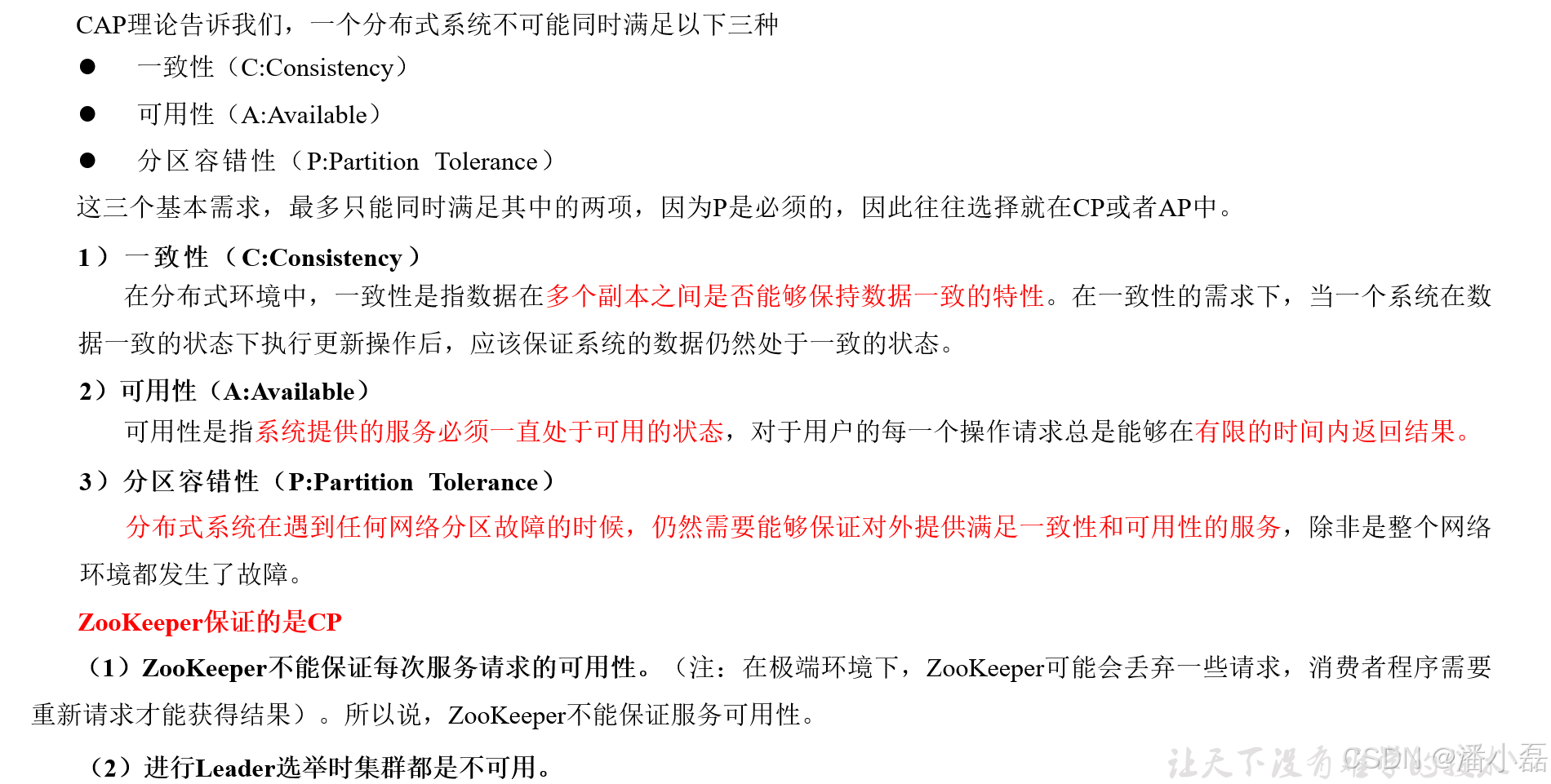

高频面试之3Zookeeper

高频面试之3Zookeeper 文章目录 高频面试之3Zookeeper3.1 常用命令3.2 选举机制3.3 Zookeeper符合法则中哪两个?3.4 Zookeeper脑裂3.5 Zookeeper用来干嘛了 3.1 常用命令 ls、get、create、delete、deleteall3.2 选举机制 半数机制(过半机制࿰…...

电脑插入多块移动硬盘后经常出现卡顿和蓝屏

当电脑在插入多块移动硬盘后频繁出现卡顿和蓝屏问题时,可能涉及硬件资源冲突、驱动兼容性、供电不足或系统设置等多方面原因。以下是逐步排查和解决方案: 1. 检查电源供电问题 问题原因:多块移动硬盘同时运行可能导致USB接口供电不足&#x…...

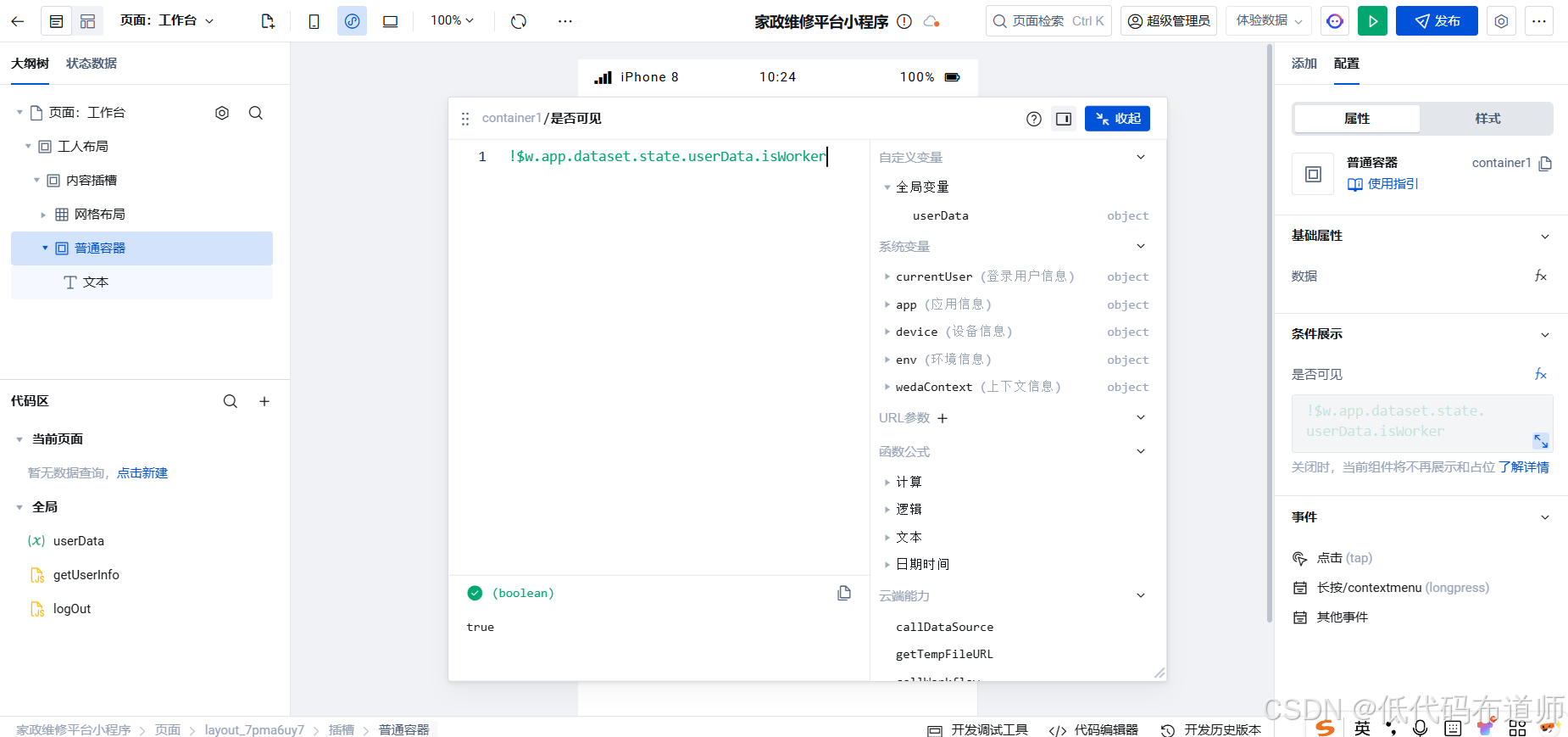

家政维修平台实战20:权限设计

目录 1 获取工人信息2 搭建工人入口3 权限判断总结 目前我们已经搭建好了基础的用户体系,主要是分成几个表,用户表我们是记录用户的基础信息,包括手机、昵称、头像。而工人和员工各有各的表。那么就有一个问题,不同的角色…...

spring:实例工厂方法获取bean

spring处理使用静态工厂方法获取bean实例,也可以通过实例工厂方法获取bean实例。 实例工厂方法步骤如下: 定义实例工厂类(Java代码),定义实例工厂(xml),定义调用实例工厂ÿ…...

ETLCloud可能遇到的问题有哪些?常见坑位解析

数据集成平台ETLCloud,主要用于支持数据的抽取(Extract)、转换(Transform)和加载(Load)过程。提供了一个简洁直观的界面,以便用户可以在不同的数据源之间轻松地进行数据迁移和转换。…...

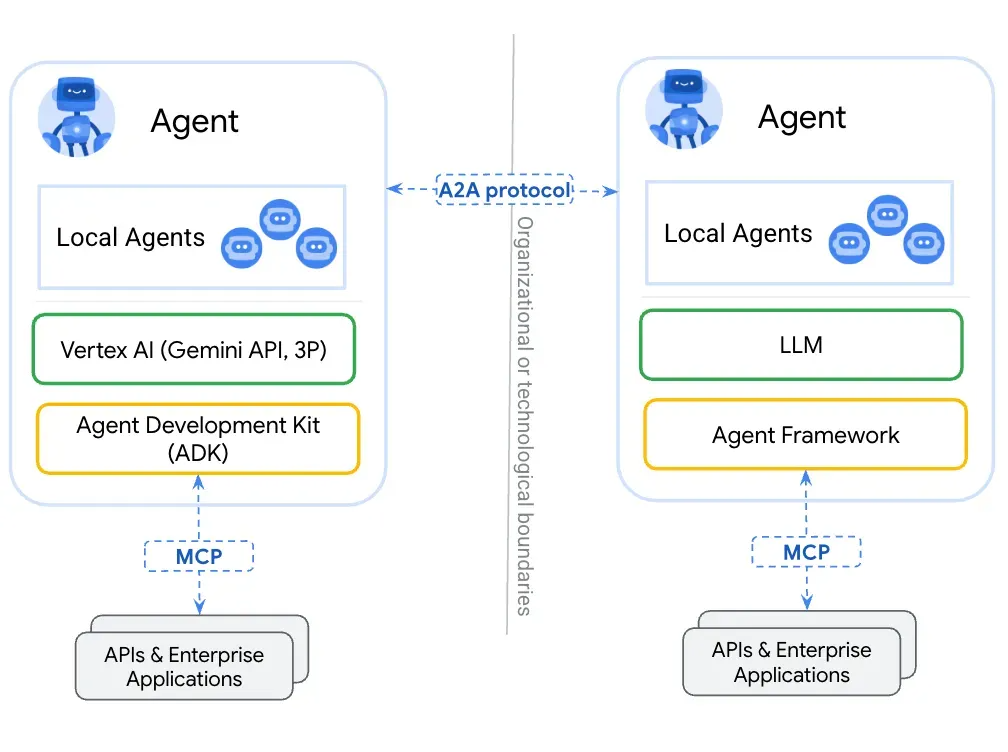

第一篇:Agent2Agent (A2A) 协议——协作式人工智能的黎明

AI 领域的快速发展正在催生一个新时代,智能代理(agents)不再是孤立的个体,而是能够像一个数字团队一样协作。然而,当前 AI 生态系统的碎片化阻碍了这一愿景的实现,导致了“AI 巴别塔问题”——不同代理之间…...

浅谈不同二分算法的查找情况

二分算法原理比较简单,但是实际的算法模板却有很多,这一切都源于二分查找问题中的复杂情况和二分算法的边界处理,以下是博主对一些二分算法查找的情况分析。 需要说明的是,以下二分算法都是基于有序序列为升序有序的情况…...