自然语言处理从入门到应用——LangChain:记忆(Memory)-[记忆的类型Ⅲ]

分类目录:《自然语言处理从入门到应用》总目录

对话令牌缓冲存储器ConversationTokenBufferMemory

ConversationTokenBufferMemory在内存中保留了最近的一些对话交互,并使用标记长度来确定何时刷新交互,而不是交互数量。

from langchain.memory import ConversationTokenBufferMemory

from langchain.llms import OpenAI

llm = OpenAI()

memory = ConversationTokenBufferMemory(llm=llm, max_token_limit=10)

memory.save_context({"input": "hi"}, {"output": "whats up"})

memory.save_context({"input": "not much you"}, {"output": "not much"})

memory.load_memory_variables({})

输出:

{‘history’: ‘Human: not much you\nAI: not much’}

我们还可以将历史记录作为消息列表获取,如果我们正在使用聊天模型,将非常有用:

memory = ConversationTokenBufferMemory(llm=llm, max_token_limit=10, return_messages=True)

memory.save_context({"input": "hi"}, {"output": "whats up"})

memory.save_context({"input": "not much you"}, {"output": "not much"})

在链式模型中的应用

让我们通过一个例子来演示如何在链式模型中使用它,同样设置verbose=True,以便我们可以看到提示信息。

from langchain.chains import ConversationChain

conversation_with_summary = ConversationChain(llm=llm, # We set a very low max_token_limit for the purposes of testing.memory=ConversationTokenBufferMemory(llm=OpenAI(), max_token_limit=60),verbose=True

)

conversation_with_summary.predict(input="Hi, what's up?")

日志输出:

> Entering new ConversationChain chain...

Prompt after formatting:

The following is a friendly conversation between a human and an AI. The AI is talkative and provides lots of specific details from its context. If the AI does not know the answer to a question, it truthfully says it does not know.Current conversation:Human: Hi, what's up?

AI:> Finished chain.

输出:

" Hi there! I'm doing great, just enjoying the day. How about you?"

输入:

conversation_with_summary.predict(input="Just working on writing some documentation!")

日志输出:

> Entering new ConversationChain chain...

Prompt after formatting:

The following is a friendly conversation between a human and an AI. The AI is talkative and provides lots of specific details from its context. If the AI does not know the answer to a question, it truthfully says it does not know.Current conversation:

Human: Hi, what's up?

AI: Hi there! I'm doing great, just enjoying the day. How about you?

Human: Just working on writing some documentation!

AI:> Finished chain.

输出:

' Sounds like a productive day! What kind of documentation are you writing?'

输入:

conversation_with_summary.predict(input="For LangChain! Have you heard of it?")

日志输出:

> Entering new ConversationChain chain...

Prompt after formatting:

The following is a friendly conversation between a human and an AI. The AI is talkative and provides lots of specific details from its context. If the AI does not know the answer to a question, it truthfully says it does not know.Current conversation:

Human: Hi, what's up?

AI: Hi there! I'm doing great, just enjoying the day. How about you?

Human: Just working on writing some documentation!

AI: Sounds like a productive day! What kind of documentation are you writing?

Human: For LangChain! Have you heard of it?

AI:> Finished chain.

输出:

" Yes, I have heard of LangChain! It is a decentralized language-learning platform that connects native speakers and learners in real time. Is that the documentation you're writing about?"

输入:

# 我们可以看到这里缓冲区被更新了

conversation_with_summary.predict(input="Haha nope, although a lot of people confuse it for that")

日志输出:

> Entering new ConversationChain chain...

Prompt after formatting:

The following is a friendly conversation between a human and an AI. The AI is talkative and provides lots of specific details from its context. If the AI does not know the answer to a question, it truthfully says it does not know.Current conversation:

Human: For LangChain! Have you heard of it?

AI: Yes, I have heard of LangChain! It is a decentralized language-learning platform that connects native speakers and learners in real time. Is that the documentation you're writing about?

Human: Haha nope, although a lot of people confuse it for that

AI:> Finished chain.

输出:

" Oh, I see. Is there another language learning platform you're referring to?"

基于向量存储的记忆VectorStoreRetrieverMemory

VectorStoreRetrieverMemory将内存存储在VectorDB中,并在每次调用时查询最重要的前 K K K个文档。与大多数其他Memory类不同,它不明确跟踪交互的顺序。在这种情况下,“文档”是先前的对话片段。这对于提及AI在对话中早些时候得知的相关信息非常有用。

from datetime import datetime

from langchain.embeddings.openai import OpenAIEmbeddings

from langchain.llms import OpenAI

from langchain.memory import VectorStoreRetrieverMemory

from langchain.chains import ConversationChain

from langchain.prompts import PromptTemplate

初始化VectorStore

根据我们选择的存储方式,此步骤可能会有所不同,我们可以查阅相关的VectorStore文档以获取更多详细信息。

import faissfrom langchain.docstore import InMemoryDocstore

from langchain.vectorstores import FAISSembedding_size = 1536 # Dimensions of the OpenAIEmbeddings

index = faiss.IndexFlatL2(embedding_size)

embedding_fn = OpenAIEmbeddings().embed_query

vectorstore = FAISS(embedding_fn, index, InMemoryDocstore({}), {})

创建VectorStoreRetrieverMemory

记忆对象是从VectorStoreRetriever实例化的。

# In actual usage, you would set `k` to be a higher value, but we use k=1 to show that the vector lookup still returns the semantically relevant information

retriever = vectorstore.as_retriever(search_kwargs=dict(k=1))

memory = VectorStoreRetrieverMemory(retriever=retriever)# When added to an agent, the memory object can save pertinent information from conversations or used tools

memory.save_context({"input": "My favorite food is pizza"}, {"output": "thats good to know"})

memory.save_context({"input": "My favorite sport is soccer"}, {"output": "..."})

memory.save_context({"input": "I don't the Celtics"}, {"output": "ok"}) #

# Notice the first result returned is the memory pertaining to tax help, which the language model deems more semantically relevant

# to a 1099 than the other documents, despite them both containing numbers.

print(memory.load_memory_variables({"prompt": "what sport should i watch?"})["history"])

输出:

input: My favorite sport is soccer

output: ...

在对话链中使用

让我们通过一个示例来演示,在此示例中我们继续设置verbose=True以便查看提示。

llm = OpenAI(temperature=0) # Can be any valid LLM

_DEFAULT_TEMPLATE = """The following is a friendly conversation between a human and an AI. The AI is talkative and provides lots of specific details from its context. If the AI does not know the answer to a question, it truthfully says it does not know.Relevant pieces of previous conversation:

{history}(You do not need to use these pieces of information if not relevant)Current conversation:

Human: {input}

AI:"""

PROMPT = PromptTemplate(input_variables=["history", "input"], template=_DEFAULT_TEMPLATE

)

conversation_with_summary = ConversationChain(llm=llm, prompt=PROMPT,# We set a very low max_token_limit for the purposes of testing.memory=memory,verbose=True

)

conversation_with_summary.predict(input="Hi, my name is Perry, what's up?")

日志输出:

> Entering new ConversationChain chain...

Prompt after formatting:

The following is a friendly conversation between a human and an AI. The AI is talkative and provides lots of specific details from its context. If the AI does not know the answer to a question, it truthfully says it does not know.Relevant pieces of previous conversation:

input: My favorite food is pizza

output: thats good to know(You do not need to use these pieces of information if not relevant)Current conversation:

Human: Hi, my name is Perry, what's up?

AI:> Finished chain.

输出:

" Hi Perry, I'm doing well. How about you?"

输入:

# Here, the basketball related content is surfaced

conversation_with_summary.predict(input="what's my favorite sport?")

日志输出:

> Entering new ConversationChain chain...

Prompt after formatting:

The following is a friendly conversation between a human and an AI. The AI is talkative and provides lots of specific details from its context. If the AI does not know the answer to a question, it truthfully says it does not know.Relevant pieces of previous conversation:

input: My favorite sport is soccer

output: ...(You do not need to use these pieces of information if not relevant)Current conversation:

Human: what's my favorite sport?

AI:> Finished chain.

输出:

' You told me earlier that your favorite sport is soccer.'

输入:

# Even though the language model is stateless, since relavent memory is fetched, it can "reason" about the time.

# Timestamping memories and data is useful in general to let the agent determine temporal relevance

conversation_with_summary.predict(input="Whats my favorite food")

日志输出:

> Entering new ConversationChain chain...

Prompt after formatting:

The following is a friendly conversation between a human and an AI. The AI is talkative and provides lots of specific details from its context. If the AI does not know the answer to a question, it truthfully says it does not know.Relevant pieces of previous conversation:

input: My favorite food is pizza

output: thats good to know(You do not need to use these pieces of information if not relevant)Current conversation:

Human: Whats my favorite food

AI:> Finished chain.

输出:

' You said your favorite food is pizza.'

输入:

# The memories from the conversation are automatically stored,

# since this query best matches the introduction chat above,

# the agent is able to 'remember' the user's name.

conversation_with_summary.predict(input="What's my name?")

日志输出:

> Entering new ConversationChain chain...

Prompt after formatting:

The following is a friendly conversation between a human and an AI. The AI is talkative and provides lots of specific details from its context. If the AI does not know the answer to a question, it truthfully says it does not know.Relevant pieces of previous conversation:

input: Hi, my name is Perry, what's up?

response: Hi Perry, I'm doing well. How about you?(You do not need to use these pieces of information if not relevant)Current conversation:

Human: What's my name?

AI:> Finished chain.

输出:

' Your name is Perry.'

参考文献:

[1] LangChain官方网站:https://www.langchain.com/

[2] LangChain 🦜️🔗 中文网,跟着LangChain一起学LLM/GPT开发:https://www.langchain.com.cn/

[3] LangChain中文网 - LangChain 是一个用于开发由语言模型驱动的应用程序的框架:http://www.cnlangchain.com/

相关文章:

-[记忆的类型Ⅲ])

自然语言处理从入门到应用——LangChain:记忆(Memory)-[记忆的类型Ⅲ]

分类目录:《自然语言处理从入门到应用》总目录 对话令牌缓冲存储器ConversationTokenBufferMemory ConversationTokenBufferMemory在内存中保留了最近的一些对话交互,并使用标记长度来确定何时刷新交互,而不是交互数量。 from langchain.me…...

【ARM 嵌入式 编译系列 10.3 -- GNU elfutils 工具小结】

文章目录 什么是 GNU elfutils?GNU elfutils 常用工具有哪些?objcopy 常用参数有哪些?GNU binutils和GNU elfutils区别是什么? 上篇文章:ARM 嵌入式 编译系列 10.2 – 符号表与可执行程序分离详细讲解 什么是 GNU elfu…...

黑马项目一阶段面试 项目介绍篇

我完成了一个外卖项目,名叫苍穹外卖,是跟着黑马程序员的课程来自己动手写的。 项目基本实现了外卖客户端、商家端的后端完整业务。 商家端分为员工管理、文件上传、菜品管理、分类管理、套餐管理、店铺营业状态、订单下单派送等的管理、数据统计等&…...

重构内置类Function原型上的call方法

重构内置类Function原型上的call方法 // > 重构内置类Function原型上的call方法 ~(function () {/*** call: 改变函数中的this指向* params* context 可以不传递,传递必须是引用类型的值,因为后面要给它加 fn 属性**/function myCall(context) {/…...

Nginx之lnmp架构

目录 一.什么是LNMP二.LNMP环境搭建1.Nginx的搭建2.安装php3.安装数据库4.测试Nginx与PHP的连接5.测试PHP连接数据库 一.什么是LNMP LNMP是一套技术的组合,Llinux,Nnginx,Mmysql,Pphp 首先Nginx服务是不能处理动态资源请求&…...

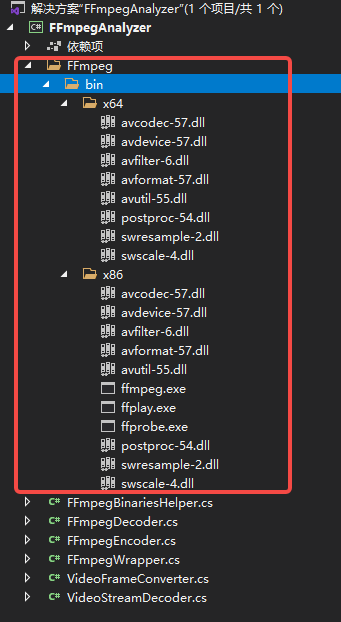

C# 使用FFmpeg.Autogen对byte[]进行编解码

C# 使用FFmpeg.Autogen对byte[]进行编解码,参考:https://github.com/vanjoge/CSharpVideoDemo 入口调用类: using System; using System.IO; using System.Drawing; using System.Runtime.InteropServices; using FFmpeg.AutoGen;namespace F…...

websocket是多线程的嘛

经过测试, onOpen事件的threadId和onMessage的threadId是不一样的,但是onMessage的threadId一直是同一个,就是说收消息的部分是单线程的,收到第一个Message后如果给它sleep较长时间,期间收到第二个,效果是它在排队&am…...

CentOS7.9 禁用22端口,使用其他端口替代

文章目录 业务场景操作步骤修改sshd配置文件修改SELinux开放给ssh使用的端口修改防火墙,开放新端口重启sshd生效 相关知识点介绍sshd服务SELinux服务firewall.service服务 业务场景 我们在某市实施交通信控平台项目,我们申请了一台服务器,用…...

2023国赛 高教社杯数学建模ABCDE题思路汇总分析

文章目录 0 赛题思路1 竞赛信息2 竞赛时间3 建模常见问题类型3.1 分类问题3.2 优化问题3.3 预测问题3.4 评价问题 4 建模资料 0 赛题思路 (赛题出来以后第一时间在CSDN分享) https://blog.csdn.net/dc_sinor?typeblog 1 竞赛信息 全国大学生数学建模…...

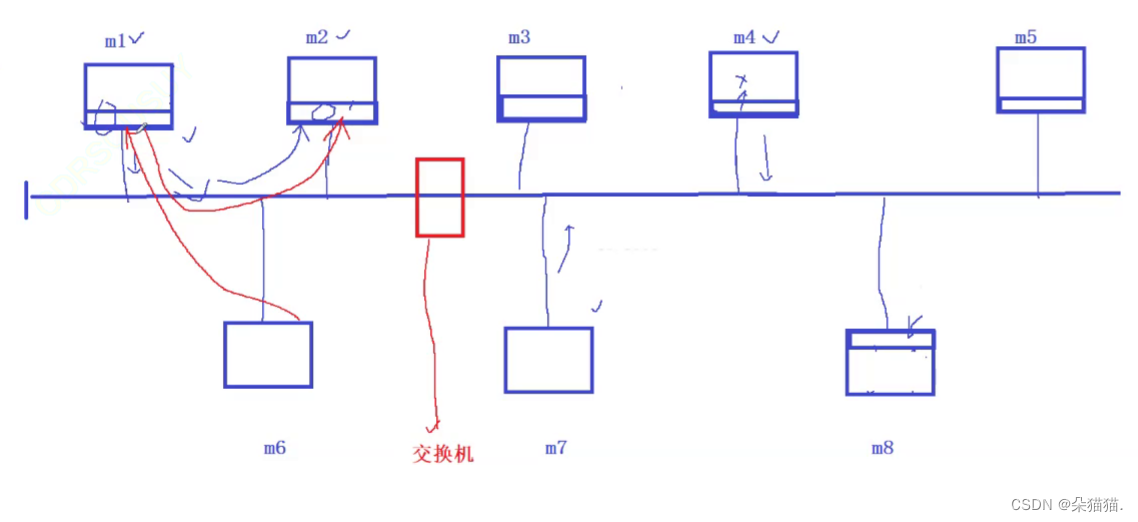

【网络层+数据链路层】深入理解IP协议和MAC帧协议的基本原理

文章目录 前言一、IP协议二、MAC帧协议 1.以太网2.以太网帧(MAC帧)格式报头3.基于协议讲解局域网转发的原理总结 前言 为什么经常将TCP/IP放在一起呢?这是因为IP层的核心工作就是通过IP地址来定位主机的,具有将一个数据报从A主机…...

银行家算法【学习算法】

银行家算法【学习算法】 前言版权推荐银行家算法7.避免死锁7.1 系统安全状态7.2 利用银行家算法避免死锁 Java算法实现代码结果 最后 前言 2023-8-14 18:18:01 以下内容源自《【学习算法】》 仅供学习交流使用 版权 禁止其他平台发布时删除以下此话 本文首次发布于CSDN平台…...

萤石直播以及回放的接入和销毁

以下基于vue项目 1.安装 npm i ezuikit-js 2、导入 main.js中 import EZUIKit from "ezuikit-js"; //导入萤石Vue.use(EZUIKit); 3、创建容器 <div class"video"><div id"video-container"></div><!-- <iframe :src…...

C语言易错知识点总结2

函数 第 1 题(单选题) 题目名称: 能把函数处理结果的二个数据返回给主调函数,在下面的方法中不正确的是:( ) 题目内容: A .return 这二个数 B .形参用数组 C .形参用二个指针 D .用…...

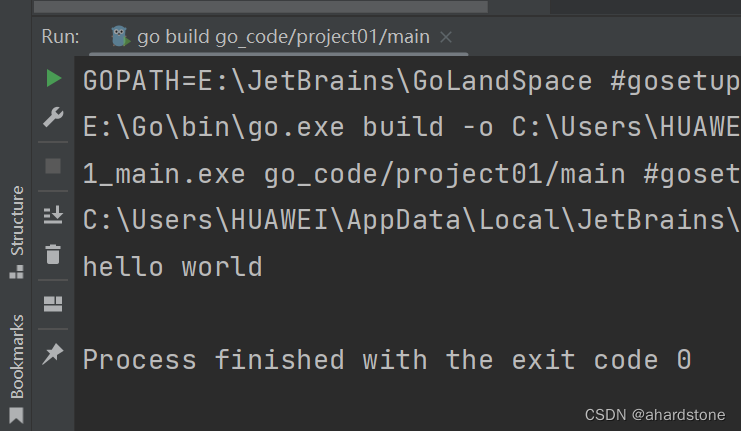

Go学习-Day1

Go学习-Day1 个人博客:CSDN博客 打卡。 Go语言的核心开发团队: Ken Thompson (C语言,B语言,Unix的发明者,牛人)Rob Pike(UTF-8发明人)Robert Griesemer(协助HotSpot编译器,Js引擎V8) Go语言有静态语言的…...

冠达管理:机构密集调研医药生物股 反腐政策影响受关注

进入8月,跟着反腐事件发酵,医药生物板块呈现震荡。与此一起,组织出资者对该板块上市公司也展开了密集调研。 到昨日,8月以来就有包含南微医学、百济神州、维力医疗、方盛制药等12家医药生物板块的上市公司接受组织调研,…...

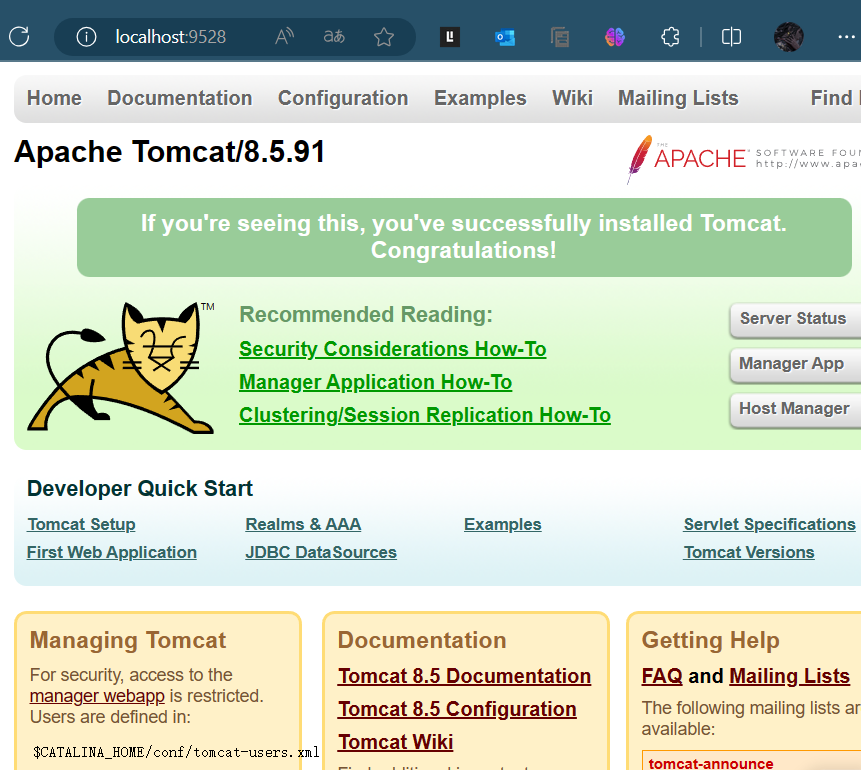

安装Tomac服务器——安装步骤以及易出现问题的解决方法

文章目录 前言 一、下载Tomcat及解压 1、选择下载版本(本文选择tomcat 8版本为例) 2、解压安装包 二、配置环境 1、在电脑搜索栏里面搜索环境变量即可 2、点击高级系统设置->环境变量->新建系统变量 1) 新建系统变量,变量名为…...

JVM 性能优化思路

点击下方关注我,然后右上角点击...“设为星标”,就能第一时间收到更新推送啦~~~ 一般在系统出现问题的时候,我们会考虑对 JVM 进行性能优化。优化思路就是根据问题的情况,结合工具进行问题排查,针对排查出来的可能问题…...

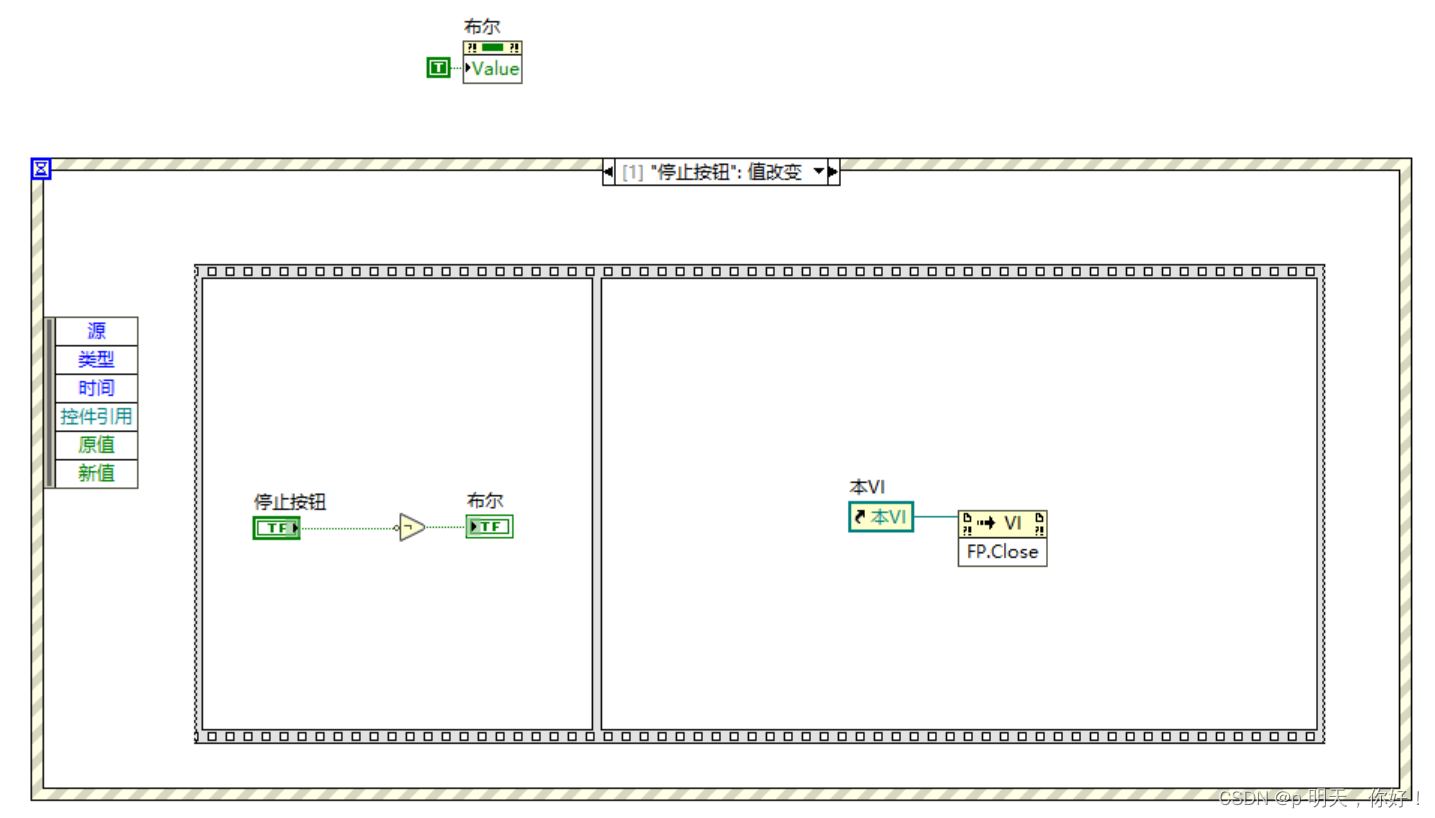

Labview解决“重置VI:xxx.vi”报错问题

文章目录 前言一、程序框图二、前面板三、问题描述四、解决办法 前言 在程序关闭前面板的时候小概率型出现了 重置VI:xxx.vi 这个报错,并且发现此时只能通过任务管理器杀掉 LabVIEW 进程才能退出,这里介绍一下解决方法。 一、程序框图 程序…...

场:郑州轻工业大学C-数位dp)

2023河南萌新联赛第(五)场:郑州轻工业大学C-数位dp

链接:登录—专业IT笔试面试备考平台_牛客网 给定一个正整数 n,你可以对 n 进行任意次(包括零次)如下操作: 选择 n 上的某一数位,将其删去,剩下的左右部分合并。例如 123,你可以选择…...

找不到mfc140u.dll怎么办?mfc140u.dll丢失怎样修复?简单三招搞定

最近我遇到了一个问题,发现我的电脑上出现了mfc140u.dll文件丢失的错误提示。这个错误导致一些应用程序无法正常运行,让我感到非常困扰。经过一番研究和尝试,我终于成功修复了这个问题,并从中总结出了一些心得。 mfc140u.dll丢失原…...

)

rknn优化教程(二)

文章目录 1. 前述2. 三方库的封装2.1 xrepo中的库2.2 xrepo之外的库2.2.1 opencv2.2.2 rknnrt2.2.3 spdlog 3. rknn_engine库 1. 前述 OK,开始写第二篇的内容了。这篇博客主要能写一下: 如何给一些三方库按照xmake方式进行封装,供调用如何按…...

云启出海,智联未来|阿里云网络「企业出海」系列客户沙龙上海站圆满落地

借阿里云中企出海大会的东风,以**「云启出海,智联未来|打造安全可靠的出海云网络引擎」为主题的阿里云企业出海客户沙龙云网络&安全专场于5.28日下午在上海顺利举办,现场吸引了来自携程、小红书、米哈游、哔哩哔哩、波克城市、…...

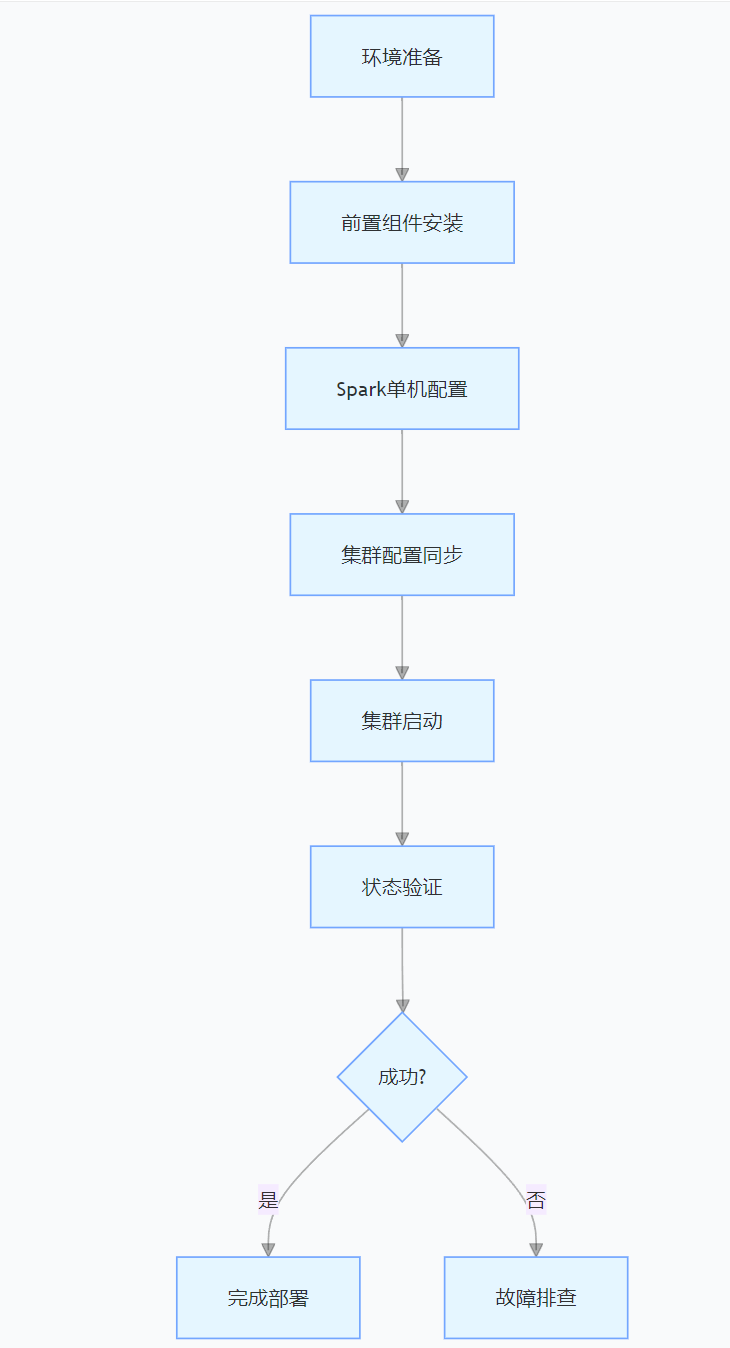

CentOS下的分布式内存计算Spark环境部署

一、Spark 核心架构与应用场景 1.1 分布式计算引擎的核心优势 Spark 是基于内存的分布式计算框架,相比 MapReduce 具有以下核心优势: 内存计算:数据可常驻内存,迭代计算性能提升 10-100 倍(文档段落:3-79…...

【论文笔记】若干矿井粉尘检测算法概述

总的来说,传统机器学习、传统机器学习与深度学习的结合、LSTM等算法所需要的数据集来源于矿井传感器测量的粉尘浓度,通过建立回归模型来预测未来矿井的粉尘浓度。传统机器学习算法性能易受数据中极端值的影响。YOLO等计算机视觉算法所需要的数据集来源于…...

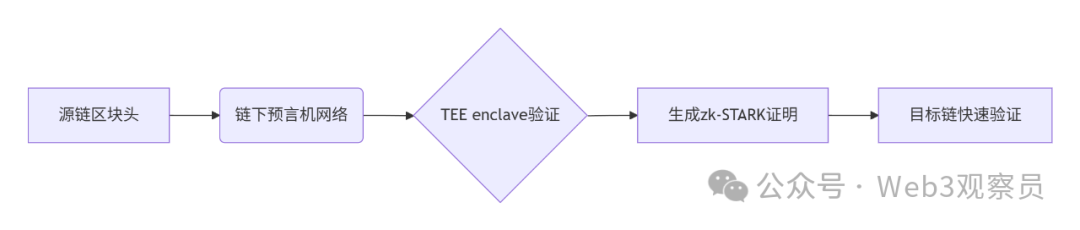

跨链模式:多链互操作架构与性能扩展方案

跨链模式:多链互操作架构与性能扩展方案 ——构建下一代区块链互联网的技术基石 一、跨链架构的核心范式演进 1. 分层协议栈:模块化解耦设计 现代跨链系统采用分层协议栈实现灵活扩展(H2Cross架构): 适配层…...

在WSL2的Ubuntu镜像中安装Docker

Docker官网链接: https://docs.docker.com/engine/install/ubuntu/ 1、运行以下命令卸载所有冲突的软件包: for pkg in docker.io docker-doc docker-compose docker-compose-v2 podman-docker containerd runc; do sudo apt-get remove $pkg; done2、设置Docker…...

稳定币的深度剖析与展望

一、引言 在当今数字化浪潮席卷全球的时代,加密货币作为一种新兴的金融现象,正以前所未有的速度改变着我们对传统货币和金融体系的认知。然而,加密货币市场的高度波动性却成为了其广泛应用和普及的一大障碍。在这样的背景下,稳定…...

的使用)

Go 并发编程基础:通道(Channel)的使用

在 Go 中,Channel 是 Goroutine 之间通信的核心机制。它提供了一个线程安全的通信方式,用于在多个 Goroutine 之间传递数据,从而实现高效的并发编程。 本章将介绍 Channel 的基本概念、用法、缓冲、关闭机制以及 select 的使用。 一、Channel…...

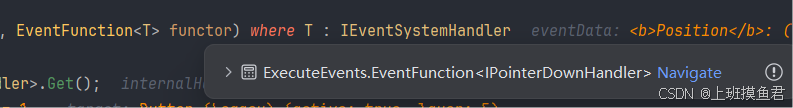

Unity UGUI Button事件流程

场景结构 测试代码 public class TestBtn : MonoBehaviour {void Start(){var btn GetComponent<Button>();btn.onClick.AddListener(OnClick);}private void OnClick(){Debug.Log("666");}}当添加事件时 // 实例化一个ButtonClickedEvent的事件 [Formerl…...

论文阅读笔记——Muffin: Testing Deep Learning Libraries via Neural Architecture Fuzzing

Muffin 论文 现有方法 CRADLE 和 LEMON,依赖模型推理阶段输出进行差分测试,但在训练阶段是不可行的,因为训练阶段直到最后才有固定输出,中间过程是不断变化的。API 库覆盖低,因为各个 API 都是在各种具体场景下使用。…...