Kafka生产者原理 kafka生产者发送流程 kafka消息发送到集群步骤 kafka如何发送消息 kafka详解

kafka尚硅谷视频:

10_尚硅谷_Kafka_生产者_原理_哔哩哔哩_bilibili

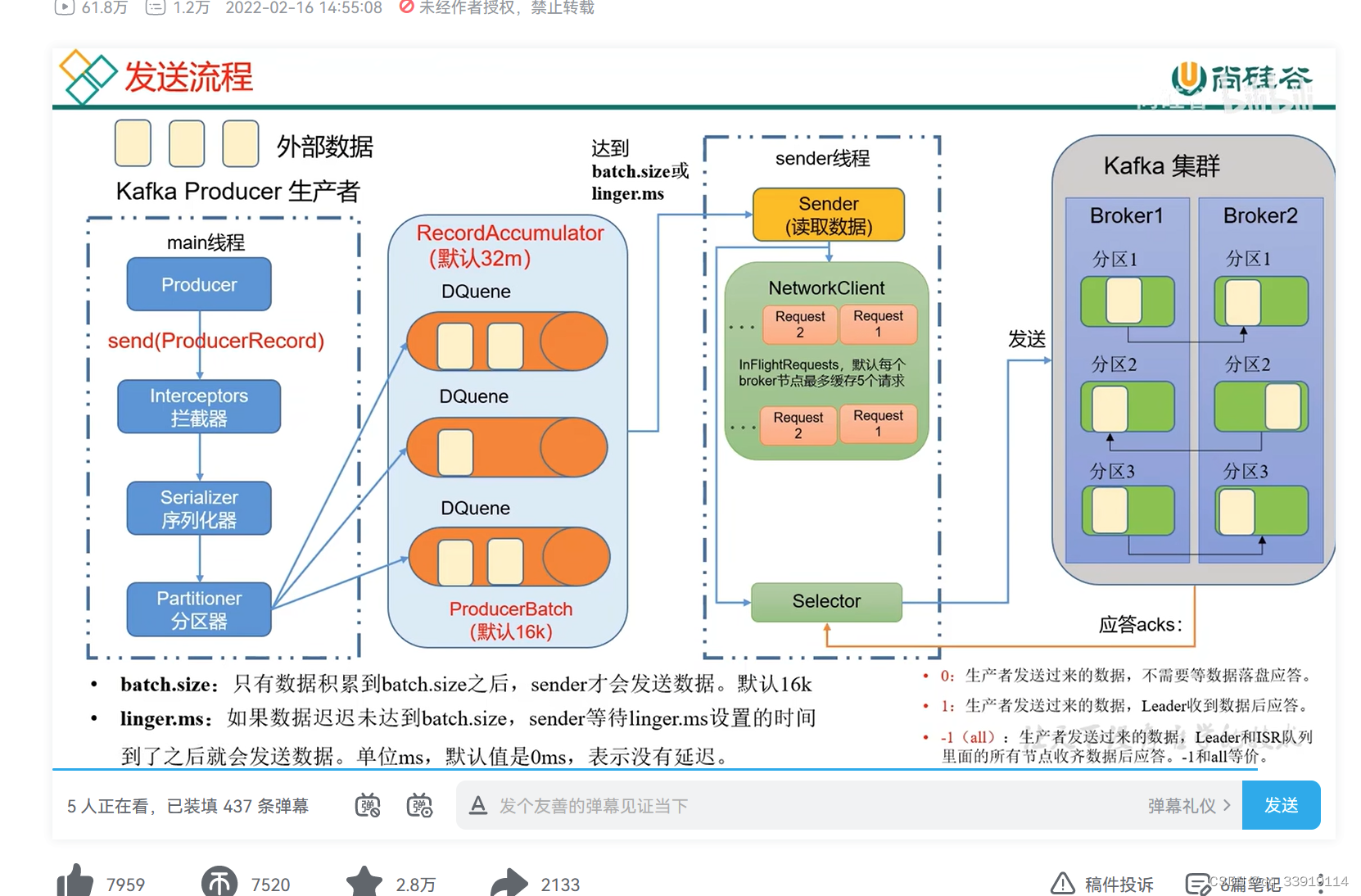

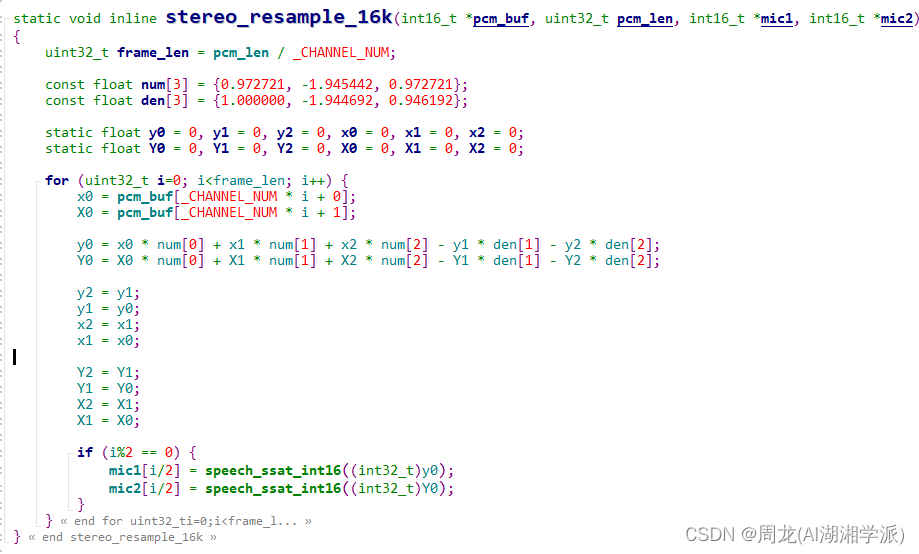

1. producer初始化:加载默认配置,以及配置的参数,开启网络线程

2. 拦截器拦截

3. 序列化器进行消息key, value序列化

4. 进行分区

5. kafka broker集群 获取metaData

6. 消息缓存到RecordAccumulator收集器,分配到该分区的DQueue(RecordBatch)

7. batch.size满了,或者linker.ms到达指定时间,唤醒sender线程, 实例化networkClient

RecordBatch ==>RequestClient 发送消息体,

8. 与分区相同broker建立网络连接,发送到对应broker

1. send()方法参数producerRecord对象:

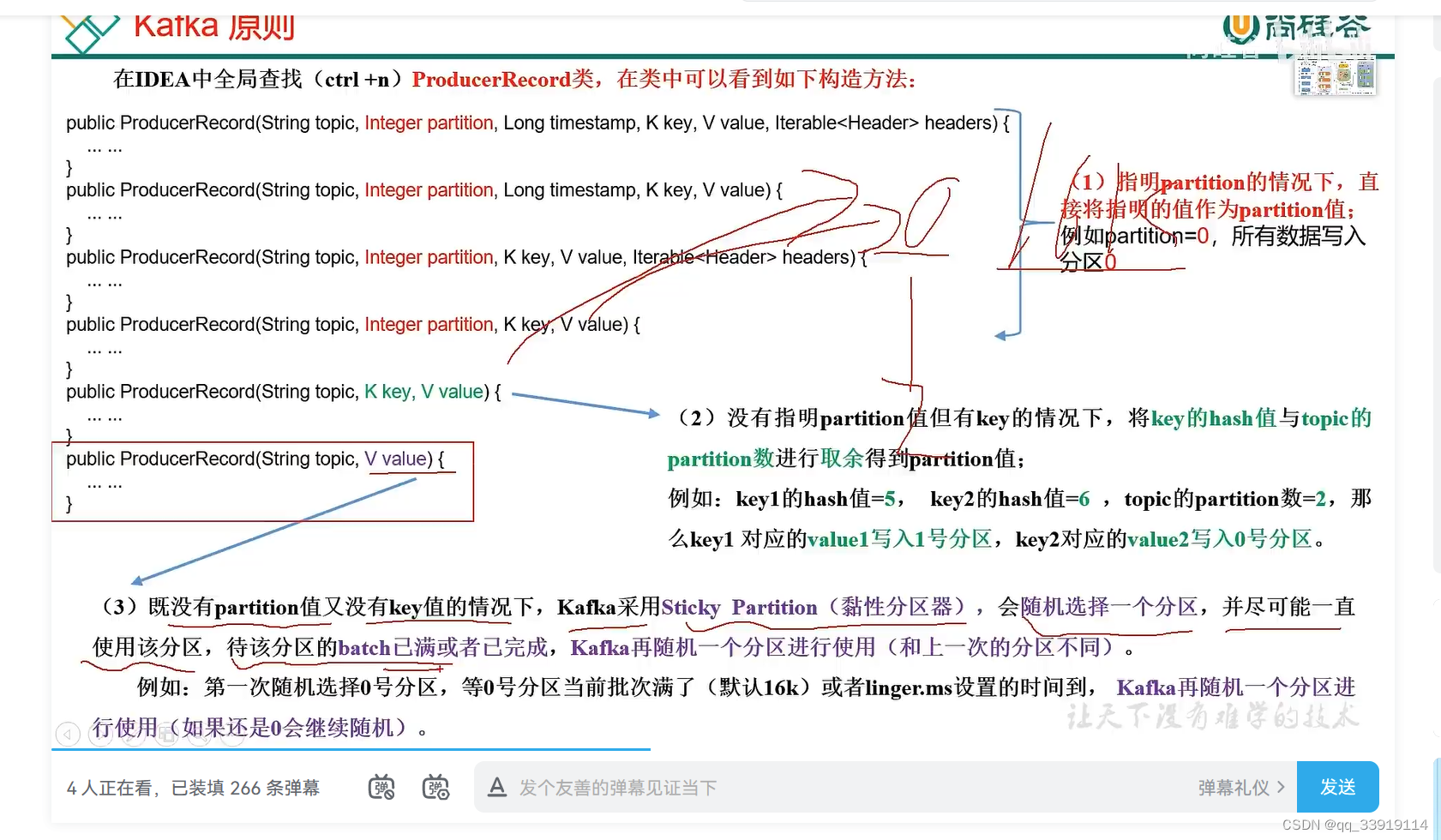

关于分区:

a.指定分区,则发送到该分区

b.不指定分区,k值没有传入,使用黏性分区(sticky partition)

第一次调用时随机生成一个整数(后面每次调用在这个整数上自增),将这个值与 topic 可用的 partition 总数取余得到 partition 值,也就是常说的 round-robin 算法

c.不指定分区,传入k值,k值先进行hash获取hashCodeValue, 再与topic下的分区数进行求模取余,进行分区。

如 k hash = 5 topic目前的分区数2 则 分区为:1

k hash =6 topic目前的分区数2 则 分区为:0

2. KafkaProducer 异步, 同步发送api:

异步发送:

producer.send(producerRecord对象);

同步发送则send()方法后面.get()

kafka 的send方法核心逻辑:

public Future<RecordMetadata> send(ProducerRecord<K, V> record) {return this.send(record, (Callback)null);}public Future<RecordMetadata> send(ProducerRecord<K, V> record, Callback callback) {// 拦截器集合。多个拦截对象循环遍历ProducerRecord<K, V> interceptedRecord = this.interceptors.onSend(record);return this.doSend(interceptedRecord, callback);}private Future<RecordMetadata> doSend(ProducerRecord<K, V> record, Callback callback) {TopicPartition tp = null;// 获取集群信息metadatatry {this.throwIfProducerClosed();long nowMs = this.time.milliseconds();ClusterAndWaitTime clusterAndWaitTime;try {clusterAndWaitTime = this.waitOnMetadata(record.topic(), record.partition(), nowMs, this.maxBlockTimeMs);} catch (KafkaException var22) {if (this.metadata.isClosed()) {throw new KafkaException("Producer closed while send in progress", var22);}throw var22;}nowMs += clusterAndWaitTime.waitedOnMetadataMs;long remainingWaitMs = Math.max(0L, this.maxBlockTimeMs - clusterAndWaitTime.waitedOnMetadataMs);Cluster cluster = clusterAndWaitTime.cluster;// 序列化器 key序列化byte[] serializedKey;try {serializedKey = this.keySerializer.serialize(record.topic(), record.headers(), record.key());} catch (ClassCastException var21) {throw new SerializationException("Can't convert key of class " + record.key().getClass().getName() + " to class " + this.producerConfig.getClass("key.serializer").getName() + " specified in key.serializer", var21);}// 序列化器 value序列化byte[] serializedValue;try {serializedValue = this.valueSerializer.serialize(record.topic(), record.headers(), record.value());} catch (ClassCastException var20) {throw new SerializationException("Can't convert value of class " + record.value().getClass().getName() + " to class " + this.producerConfig.getClass("value.serializer").getName() + " specified in value.serializer", var20);}// 分区int partition = this.partition(record, serializedKey, serializedValue, cluster);tp = new TopicPartition(record.topic(), partition);this.setReadOnly(record.headers());Header[] headers = record.headers().toArray();int serializedSize = AbstractRecords.estimateSizeInBytesUpperBound(this.apiVersions.maxUsableProduceMagic(), this.compressionType, serializedKey, serializedValue, headers);this.ensureValidRecordSize(serializedSize);long timestamp = record.timestamp() == null ? nowMs : record.timestamp();if (this.log.isTraceEnabled()) {this.log.trace("Attempting to append record {} with callback {} to topic {} partition {}", new Object[]{record, callback, record.topic(), partition});}Callback interceptCallback = new InterceptorCallback(callback, this.interceptors, tp);// RecordAccumulator.append() 添加数据转 ProducerBatchRecordAccumulator.RecordAppendResult result = this.accumulator.append(tp, timestamp, serializedKey, serializedValue, headers, interceptCallback, remainingWaitMs, true, nowMs);if (result.abortForNewBatch) {int prevPartition = partition;this.partitioner.onNewBatch(record.topic(), cluster, partition);partition = this.partition(record, serializedKey, serializedValue, cluster);tp = new TopicPartition(record.topic(), partition);if (this.log.isTraceEnabled()) {this.log.trace("Retrying append due to new batch creation for topic {} partition {}. The old partition was {}", new Object[]{record.topic(), partition, prevPartition});}interceptCallback = new InterceptorCallback(callback, this.interceptors, tp);result = this.accumulator.append(tp, timestamp, serializedKey, serializedValue, headers, interceptCallback, remainingWaitMs, false, nowMs);}if (this.transactionManager != null) {this.transactionManager.maybeAddPartition(tp);}// 判断是否满了,满了唤醒sender , sender继承了runnableif (result.batchIsFull || result.newBatchCreated) {this.log.trace("Waking up the sender since topic {} partition {} is either full or getting a new batch", record.topic(), partition);this.sender.wakeup();}return result.future;} catch (ApiException var23) {this.log.debug("Exception occurred during message send:", var23);if (tp == null) {tp = ProducerInterceptors.extractTopicPartition(record);}Callback interceptCallback = new InterceptorCallback(callback, this.interceptors, tp);interceptCallback.onCompletion((RecordMetadata)null, var23);this.errors.record();this.interceptors.onSendError(record, tp, var23);return new FutureFailure(var23);} catch (InterruptedException var24) {this.errors.record();this.interceptors.onSendError(record, tp, var24);throw new InterruptException(var24);} catch (KafkaException var25) {this.errors.record();this.interceptors.onSendError(record, tp, var25);throw var25;} catch (Exception var26) {this.interceptors.onSendError(record, tp, var26);throw var26;}}

Sender类 run()方法:

public void run() {this.log.debug("Starting Kafka producer I/O thread.");while(this.running) {try {this.runOnce();} catch (Exception var5) {this.log.error("Uncaught error in kafka producer I/O thread: ", var5);}}this.log.debug("Beginning shutdown of Kafka producer I/O thread, sending remaining records.");while(!this.forceClose && (this.accumulator.hasUndrained() || this.client.inFlightRequestCount() > 0 || this.hasPendingTransactionalRequests())) {try {this.runOnce();} catch (Exception var4) {this.log.error("Uncaught error in kafka producer I/O thread: ", var4);}}while(!this.forceClose && this.transactionManager != null && this.transactionManager.hasOngoingTransaction()) {if (!this.transactionManager.isCompleting()) {this.log.info("Aborting incomplete transaction due to shutdown");this.transactionManager.beginAbort();}try {this.runOnce();} catch (Exception var3) {this.log.error("Uncaught error in kafka producer I/O thread: ", var3);}}if (this.forceClose) {if (this.transactionManager != null) {this.log.debug("Aborting incomplete transactional requests due to forced shutdown");this.transactionManager.close();}this.log.debug("Aborting incomplete batches due to forced shutdown");this.accumulator.abortIncompleteBatches();}try {this.client.close();} catch (Exception var2) {this.log.error("Failed to close network client", var2);}this.log.debug("Shutdown of Kafka producer I/O thread has completed.");}void runOnce() {if (this.transactionManager != null) {try {this.transactionManager.maybeResolveSequences();if (this.transactionManager.hasFatalError()) {RuntimeException lastError = this.transactionManager.lastError();if (lastError != null) {this.maybeAbortBatches(lastError);}this.client.poll(this.retryBackoffMs, this.time.milliseconds());return;}this.transactionManager.bumpIdempotentEpochAndResetIdIfNeeded();if (this.maybeSendAndPollTransactionalRequest()) {return;}} catch (AuthenticationException var5) {this.log.trace("Authentication exception while processing transactional request", var5);this.transactionManager.authenticationFailed(var5);}}long currentTimeMs = this.time.milliseconds();// 发送数据long pollTimeout = this.sendProducerData(currentTimeMs);this.client.poll(pollTimeout, currentTimeMs);}sendProducerData() :

最终转换为ClientRequest对象

ClientRequest clientRequest = this.client.newClientRequest(nodeId, requestBuilder, now, acks != 0, this.requestTimeoutMs, callback);this.client.send(clientRequest, now);

private long sendProducerData(long now) {Cluster cluster = this.metadata.fetch();RecordAccumulator.ReadyCheckResult result = this.accumulator.ready(cluster, now);Iterator iter;if (!result.unknownLeaderTopics.isEmpty()) {iter = result.unknownLeaderTopics.iterator();while(iter.hasNext()) {String topic = (String)iter.next();this.metadata.add(topic, now);}this.log.debug("Requesting metadata update due to unknown leader topics from the batched records: {}", result.unknownLeaderTopics);this.metadata.requestUpdate();}iter = result.readyNodes.iterator();long notReadyTimeout = Long.MAX_VALUE;while(iter.hasNext()) {Node node = (Node)iter.next();if (!this.client.ready(node, now)) {iter.remove();notReadyTimeout = Math.min(notReadyTimeout, this.client.pollDelayMs(node, now));}}Map<Integer, List<ProducerBatch>> batches = this.accumulator.drain(cluster, result.readyNodes, this.maxRequestSize, now);this.addToInflightBatches(batches);List expiredBatches;Iterator var11;ProducerBatch expiredBatch;if (this.guaranteeMessageOrder) {Iterator var9 = batches.values().iterator();while(var9.hasNext()) {expiredBatches = (List)var9.next();var11 = expiredBatches.iterator();while(var11.hasNext()) {expiredBatch = (ProducerBatch)var11.next();this.accumulator.mutePartition(expiredBatch.topicPartition);}}}this.accumulator.resetNextBatchExpiryTime();List<ProducerBatch> expiredInflightBatches = this.getExpiredInflightBatches(now);expiredBatches = this.accumulator.expiredBatches(now);expiredBatches.addAll(expiredInflightBatches);if (!expiredBatches.isEmpty()) {this.log.trace("Expired {} batches in accumulator", expiredBatches.size());}var11 = expiredBatches.iterator();while(var11.hasNext()) {expiredBatch = (ProducerBatch)var11.next();String errorMessage = "Expiring " + expiredBatch.recordCount + " record(s) for " + expiredBatch.topicPartition + ":" + (now - expiredBatch.createdMs) + " ms has passed since batch creation";this.failBatch(expiredBatch, (RuntimeException)(new TimeoutException(errorMessage)), false);if (this.transactionManager != null && expiredBatch.inRetry()) {this.transactionManager.markSequenceUnresolved(expiredBatch);}}this.sensors.updateProduceRequestMetrics(batches);long pollTimeout = Math.min(result.nextReadyCheckDelayMs, notReadyTimeout);pollTimeout = Math.min(pollTimeout, this.accumulator.nextExpiryTimeMs() - now);pollTimeout = Math.max(pollTimeout, 0L);if (!result.readyNodes.isEmpty()) {this.log.trace("Nodes with data ready to send: {}", result.readyNodes);pollTimeout = 0L;}this.sendProduceRequests(batches, now);return pollTimeout;}private void sendProduceRequests(Map<Integer, List<ProducerBatch>> collated, long now) {Iterator var4 = collated.entrySet().iterator();while(var4.hasNext()) {Map.Entry<Integer, List<ProducerBatch>> entry = (Map.Entry)var4.next();this.sendProduceRequest(now, (Integer)entry.getKey(), this.acks, this.requestTimeoutMs, (List)entry.getValue());}}private void sendProduceRequest(long now, int destination, short acks, int timeout, List<ProducerBatch> batches) {if (!batches.isEmpty()) {Map<TopicPartition, ProducerBatch> recordsByPartition = new HashMap(batches.size());byte minUsedMagic = this.apiVersions.maxUsableProduceMagic();Iterator var9 = batches.iterator();while(var9.hasNext()) {ProducerBatch batch = (ProducerBatch)var9.next();if (batch.magic() < minUsedMagic) {minUsedMagic = batch.magic();}}ProduceRequestData.TopicProduceDataCollection tpd = new ProduceRequestData.TopicProduceDataCollection();Iterator var16 = batches.iterator();while(var16.hasNext()) {ProducerBatch batch = (ProducerBatch)var16.next();TopicPartition tp = batch.topicPartition;MemoryRecords records = batch.records();if (!records.hasMatchingMagic(minUsedMagic)) {records = (MemoryRecords)batch.records().downConvert(minUsedMagic, 0L, this.time).records();}ProduceRequestData.TopicProduceData tpData = tpd.find(tp.topic());if (tpData == null) {tpData = (new ProduceRequestData.TopicProduceData()).setName(tp.topic());tpd.add(tpData);}tpData.partitionData().add((new ProduceRequestData.PartitionProduceData()).setIndex(tp.partition()).setRecords(records));recordsByPartition.put(tp, batch);}String transactionalId = null;if (this.transactionManager != null && this.transactionManager.isTransactional()) {transactionalId = this.transactionManager.transactionalId();}ProduceRequest.Builder requestBuilder = ProduceRequest.forMagic(minUsedMagic, (new ProduceRequestData()).setAcks(acks).setTimeoutMs(timeout).setTransactionalId(transactionalId).setTopicData(tpd));RequestCompletionHandler callback = (response) -> {this.handleProduceResponse(response, recordsByPartition, this.time.milliseconds());};String nodeId = Integer.toString(destination);ClientRequest clientRequest = this.client.newClientRequest(nodeId, requestBuilder, now, acks != 0, this.requestTimeoutMs, callback);// this.client 为KafkaClient接口 实现类:NetworkClient对象this.client.send(clientRequest, now);this.log.trace("Sent produce request to {}: {}", nodeId, requestBuilder);}}NetworkClient send()方法:

public void send(ClientRequest request, long now) {this.doSend(request, false, now);}private void doSend(ClientRequest clientRequest, boolean isInternalRequest, long now) {this.ensureActive();String nodeId = clientRequest.destination();if (!isInternalRequest && !this.canSendRequest(nodeId, now)) {throw new IllegalStateException("Attempt to send a request to node " + nodeId + " which is not ready.");} else {AbstractRequest.Builder<?> builder = clientRequest.requestBuilder();try {NodeApiVersions versionInfo = this.apiVersions.get(nodeId);short version;if (versionInfo == null) {version = builder.latestAllowedVersion();if (this.discoverBrokerVersions && this.log.isTraceEnabled()) {this.log.trace("No version information found when sending {} with correlation id {} to node {}. Assuming version {}.", new Object[]{clientRequest.apiKey(), clientRequest.correlationId(), nodeId, version});}} else {version = versionInfo.latestUsableVersion(clientRequest.apiKey(), builder.oldestAllowedVersion(), builder.latestAllowedVersion());}this.doSend(clientRequest, isInternalRequest, now, builder.build(version));} catch (UnsupportedVersionException var9) {this.log.debug("Version mismatch when attempting to send {} with correlation id {} to {}", new Object[]{builder, clientRequest.correlationId(), clientRequest.destination(), var9});ClientResponse clientResponse = new ClientResponse(clientRequest.makeHeader(builder.latestAllowedVersion()), clientRequest.callback(), clientRequest.destination(), now, now, false, var9, (AuthenticationException)null, (AbstractResponse)null);if (!isInternalRequest) {this.abortedSends.add(clientResponse);} else if (clientRequest.apiKey() == ApiKeys.METADATA) {this.metadataUpdater.handleFailedRequest(now, Optional.of(var9));}}}}private void doSend(ClientRequest clientRequest, boolean isInternalRequest, long now, AbstractRequest request) {String destination = clientRequest.destination();RequestHeader header = clientRequest.makeHeader(request.version());if (this.log.isDebugEnabled()) {this.log.debug("Sending {} request with header {} and timeout {} to node {}: {}", new Object[]{clientRequest.apiKey(), header, clientRequest.requestTimeoutMs(), destination, request});}Send send = request.toSend(header);// clientRequest convert InFlightRequest 对象InFlightRequest inFlightRequest = new InFlightRequest(clientRequest, header, isInternalRequest, request, send, now);this.inFlightRequests.add(inFlightRequest);// nio channel。。。selector 发送消息信息//this.selector is Selectable interface KafkaChannel is implementthis.selector.send(new NetworkSend(clientRequest.destination(), send));}总结:直接阅读源码很快就能想明白kafka 生产者发送逻辑,kafka-client.jar。 核心==>

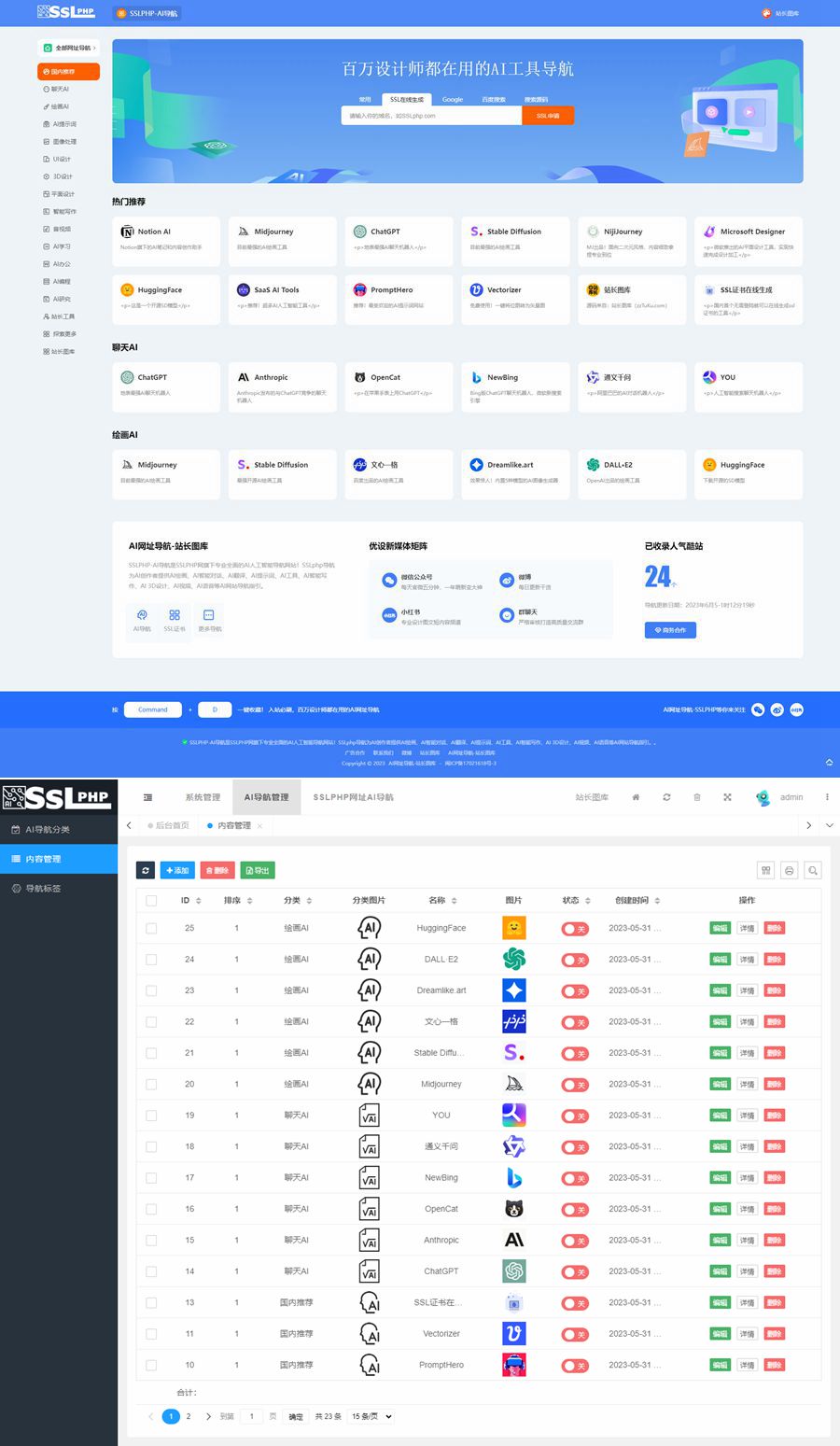

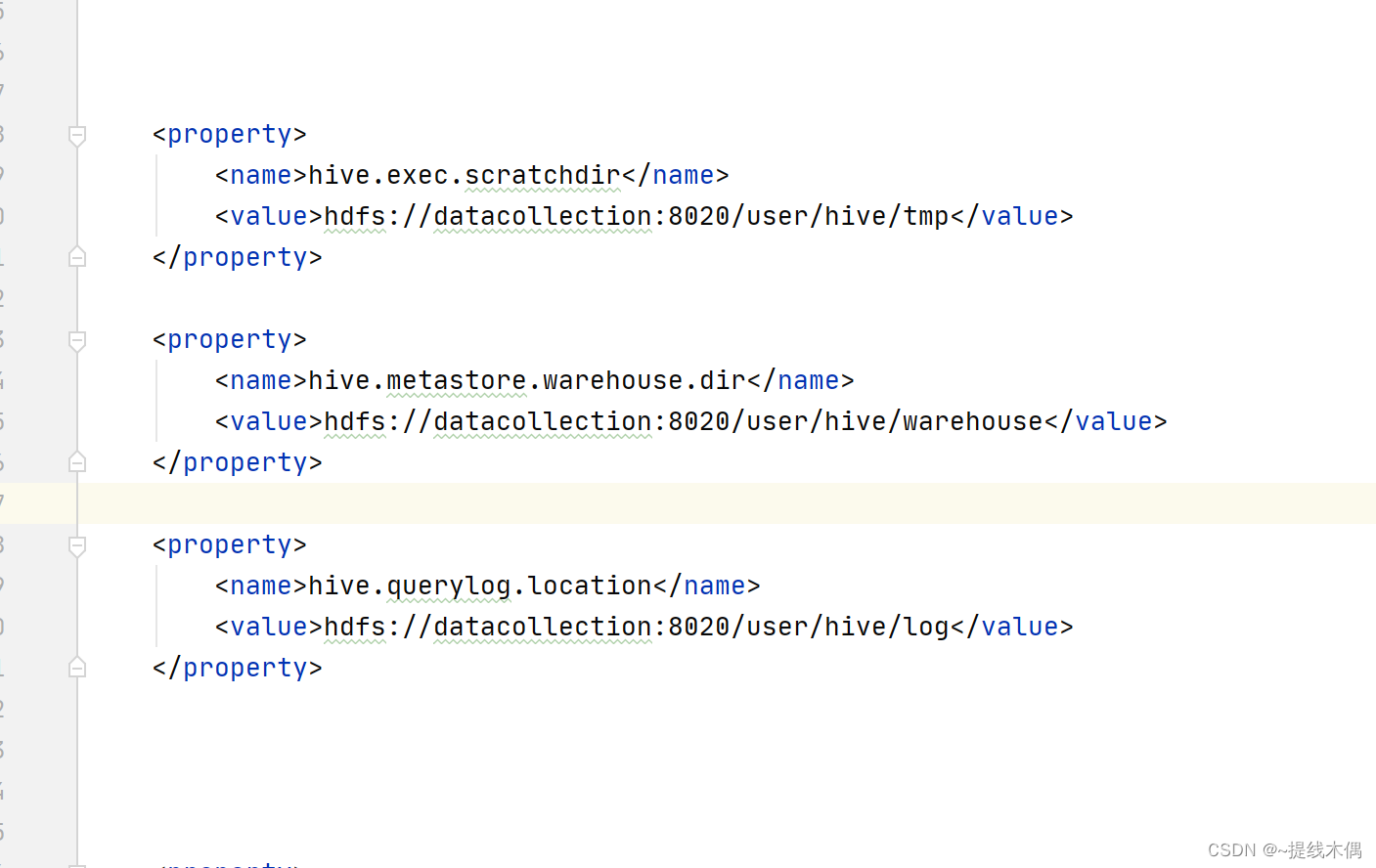

本文第一张图片

相关文章:

Kafka生产者原理 kafka生产者发送流程 kafka消息发送到集群步骤 kafka如何发送消息 kafka详解

kafka尚硅谷视频: 10_尚硅谷_Kafka_生产者_原理_哔哩哔哩_bilibili 1. producer初始化:加载默认配置,以及配置的参数,开启网络线程 2. 拦截器拦截 3. 序列化器进行消息key, value序列化 4. 进行分区 5. kafka broker集群 获取…...

uniapp打包)

Uniapp笔记(七)uniapp打包

一、项目打包 1、h5打包 登录dcloud账户,在manifest.json的基础配置选项中,点击重新获取uniapp应用标识APPID 在manifest.json的Web配置选项的运行的基础路径中输入./ 在菜单栏的发行栏目,点击网站-PC或手机H5 输入网站标题和网站域名&am…...

软考高级系统架构设计师系列论文七十六:论基于构件的软件开发

软考高级系统架构设计师系列论文七十六:论基于构件的软件开发 一、构件相关知识点二、摘要三、正文四、总结一、构件相关知识点 软考高级系统架构设计师系列之:面向构件的软件设计,构件平台与典型架构...

基于Thinkphp6框架全新UI的AI网址导航系统源码

2023全新UI的AI网址导航系统源码,基于thinkphp6框架开发的 AI 网址导航是一个非常实用的工具,它能够帮助用户方便地浏览和管理自己喜欢的网站。 相比于其他的 AI 网址导航,这个项目使用了更加友好和易用的 ThinkPHP 框架进行搭建,…...

Html 补充

accesskey 设置快捷键 Alt设定的键 <a href"https://blog.csdn.net/lcatake/article/details/131716967?spm1001.2014.3001.5501" target"_blank" accesskey"i">我的博客</a> contenteditable 使文本可编译 默认为false 对输入框无…...

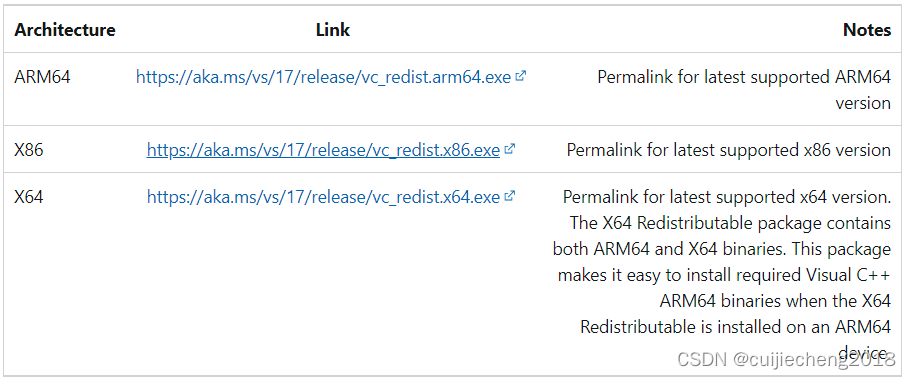

Visual Studio编译出来的程序无法在其它电脑上运行

在其它电脑(比如Windows Server 2012)上运行Visual Studio编译出来的应用程序,结果报错:“无法启动此程序,因为计算机中丢失VCRUNTIME140.dll。尝试重新安装该程序以解决此问题。” 解决方法: 属性 -> …...

习题练习 C语言(暑期第二弹)

编程能力小提升! 前言一、表达式判断二、Assii码的理解应用三、循环跳出判断四、数字在升序数组中出现的次数五、整数转换六、循环语句的应用七、函数调用八、两个数组的交集九、C语言基础十、图片整理十一、数组的引用十二、数组的引用十三、字符个数统计十四、多数…...

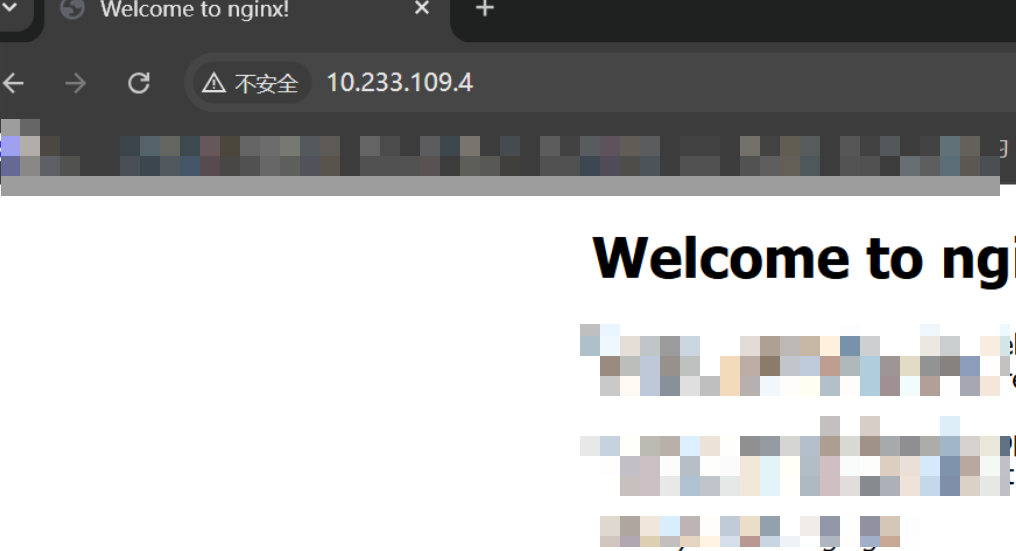

树莓派使用Nginx+cpolar内网穿透实现无公网IP访问内网本地站点

文章目录 1. Nginx安装2. 安装cpolar3.配置域名访问Nginx4. 固定域名访问5. 配置静态站点 安装 Nginx(发音为“engine-x”)可以将您的树莓派变成一个强大的 Web 服务器,可以用于托管网站或 Web 应用程序。相比其他 Web 服务器,Ngi…...

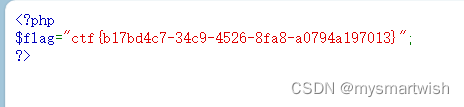

攻防世界-Web_php_unserialize

原题 解题思路 注释说了flag存在f14g.php中,但是在wakeup函数中,会把传入的文件名变成index.php。看wp知道,如果被反序列话的字符串其中对应的对象的属性个数发生变化时,会导致反序列化失败而同时使得__wakeup 失效(CV…...

云化背景下的接口测试覆盖率自动化检查

一、问题来源 在云化场景下,API的测试覆盖是一项重要评估与考察指标。除了开发者自测试外(UT),还可以利用云化测试平台、流水线等方法进行相关指标的检查与考核。利用这种方法既可以减轻开发者测试工作量,不必在本地做…...

QCC_BES 音频重采样算法实现

+V hezkz17进数字音频系统研究开发交流答疑群(课题组) 这段代码是一个用于将音频数据进行立体声重采样的函数。以下是对代码的解读: 函数接受以下参数: pcm_buf:16位有符号整型的音频缓冲区,存储了输入的音频数据。pcm_len:音频缓冲区的长度。mic1:16位有符号整型的音频…...

如何使用CSS实现一个3D旋转效果?

聚沙成塔每天进步一点点 ⭐ 专栏简介⭐ 3D效果实现⭐ 写在最后 ⭐ 专栏简介 前端入门之旅:探索Web开发的奇妙世界 记得点击上方或者右侧链接订阅本专栏哦 几何带你启航前端之旅 欢迎来到前端入门之旅!这个专栏是为那些对Web开发感兴趣、刚刚踏入前端领域…...

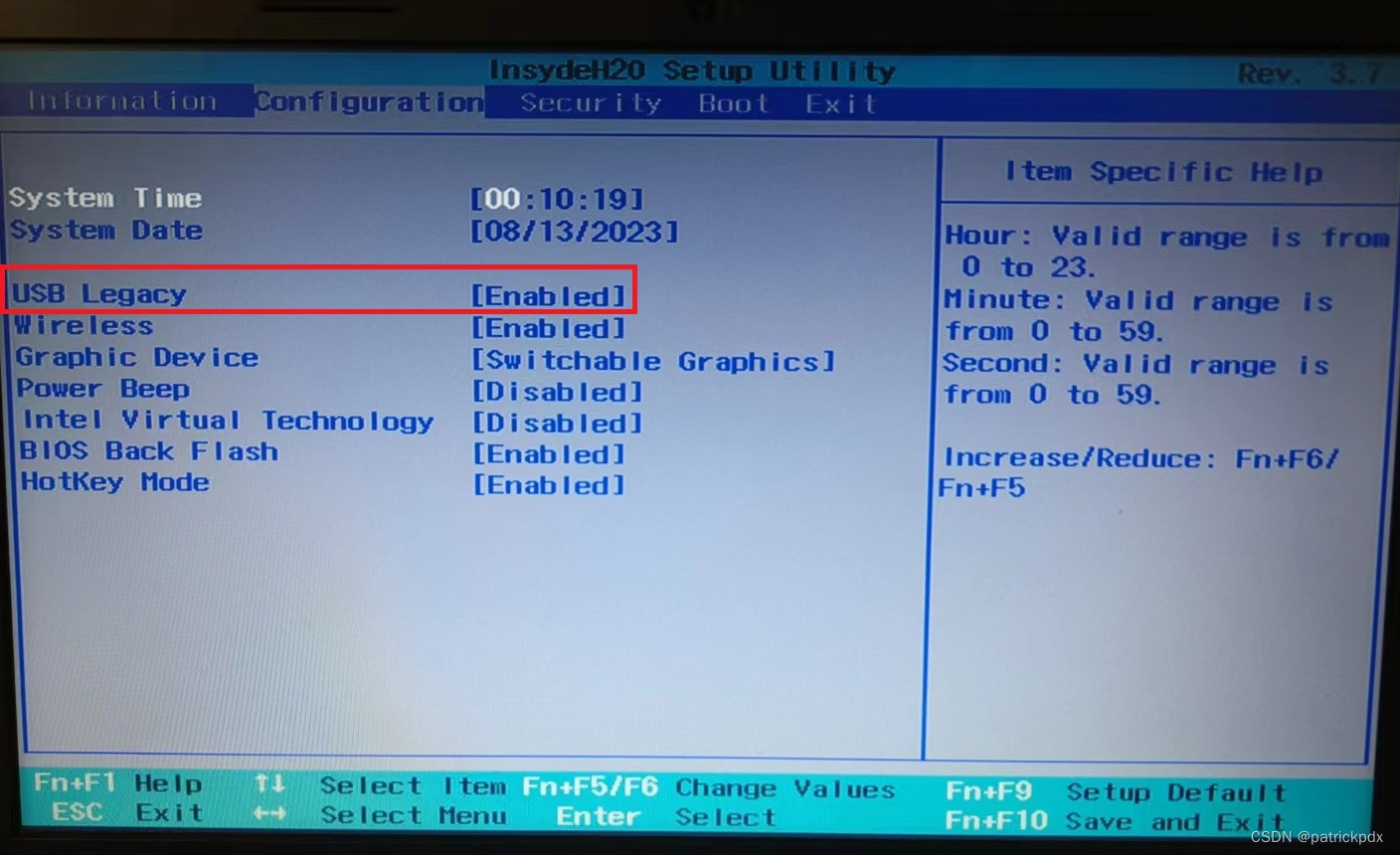

联想电脑装系统无法按F9后无法从系统盘启动的解决方案

开机时按F9发现没有加载系统盘. 打开BIOS设置界面,调整设置如下: BOOT MODE: Legacy Support.允许legacy方式boot. BOOT PRIORITY: Legacy First. Legacy方式作为首选的boot方式. USB BOOT: ENABLED. 允许以usb方式boot. Legacy: 这里设置legacy boot的优先级,…...

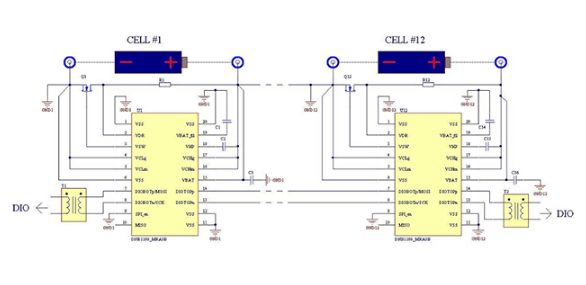

AMEYA360:大唐恩智浦电池管理芯片DNB1168-新能源汽车BMS系统的选择

DNB1168是一款全球独有的集成(EIS)交流阻抗谱监测功能的单电池监测芯片。该芯片通过车规级AEC-Q100和汽车行业最高功能安全等级ISO 26262:2018 ASIL-D双重认证。芯片?内部集成多种高精度电池参数监测,支持电压、温度、交流阻抗检…...

【Python进阶学习】【Excel读写】使用openpyxl写入xlsx文件

1、当前文件不存在指定的子文件夹则创建 2、文件存在追加写入 3、文件不存在创建文件并写入表头 # -*- coding: utf-8 -*- import openpyxl as xl import osdef write_excel_file(folder_path):if not os.path.exists(folder_path):os.makedirs(folder_path)result_path os.p…...

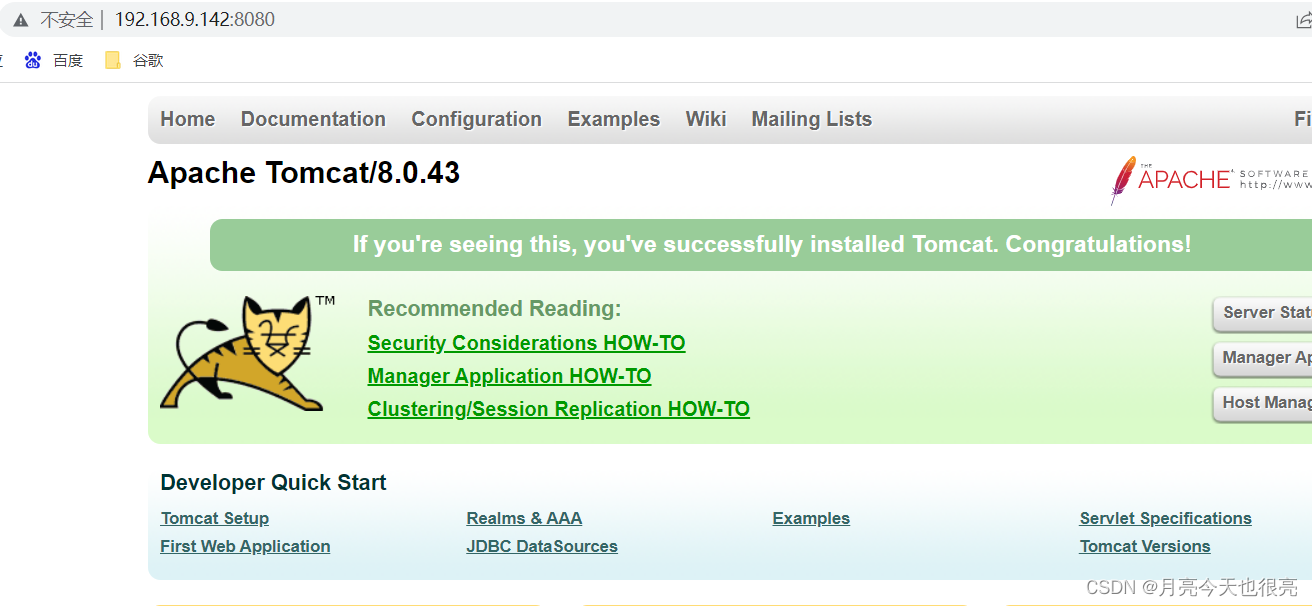

Docker(md版)

Docker 一、Docker二、更换apt源三、docker搭建四、停启管理五、配置加速器5.1、方法一5.2、方法二 六、使用docker运行漏洞靶场1、拉取tomcat8镜像2、拉取成功3、开启服务4、查看kali的IP地址5、访问靶场6、关闭漏洞靶场 七、vulapps靶场搭建 一、Docker Docker是一个开源的应…...

如何使用CSS实现一个无限循环滚动的图片轮播效果?

聚沙成塔每天进步一点点 ⭐ 专栏简介⭐HTML 结构⭐ CSS 样式⭐ JavaScript 控制⭐ 注意事项:⭐ 写在最后 ⭐ 专栏简介 前端入门之旅:探索Web开发的奇妙世界 记得点击上方或者右侧链接订阅本专栏哦 几何带你启航前端之旅 欢迎来到前端入门之旅࿰…...

你使用过WebSocket吗?

什么是WebSocket? WebSocket 是一种在单个 TCP 连接上进行全双工通信的协议,它的出现是为了解决 Web 应用中实时通信的需求。传统的 HTTP 协议是基于请求-响应模式的,即客户端发送请求,服务器响应请求,然后连接关闭。…...

Spark整合hive的时候出错

Spark整合hive的时候 连接Hdfs不从我hive所在的机器上找,而是去连接我的集群里的另外两台机器 但是我的集群没有开 所以下面就一直在retry 猜测: 出现这个错误的原因可能与core-site.xml和hdfs-site.xml有关,因为这里面配置了集群的nameno…...

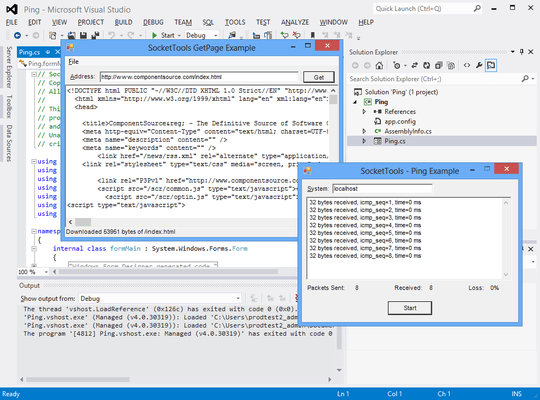

SocketTools.NET 11.0.2148.1554 Crack

添加新功能以简化使用 URL 建立 TCP 连接的过程。 2023 年 8 月 23 日 - 12:35新版本 特征 添加了“HttpGetTextEx”函数,该函数在返回字符串缓冲区中的文本内容时提供附加选项。添加了对“FileTransfer”.NET 类和 ActiveX 控件中的“GetText”和“PutText”方法的…...

:にする)

日语学习-日语知识点小记-构建基础-JLPT-N4阶段(33):にする

日语学习-日语知识点小记-构建基础-JLPT-N4阶段(33):にする 1、前言(1)情况说明(2)工程师的信仰2、知识点(1) にする1,接续:名词+にする2,接续:疑问词+にする3,(A)は(B)にする。(2)復習:(1)复习句子(2)ために & ように(3)そう(4)にする3、…...

FFmpeg 低延迟同屏方案

引言 在实时互动需求激增的当下,无论是在线教育中的师生同屏演示、远程办公的屏幕共享协作,还是游戏直播的画面实时传输,低延迟同屏已成为保障用户体验的核心指标。FFmpeg 作为一款功能强大的多媒体框架,凭借其灵活的编解码、数据…...

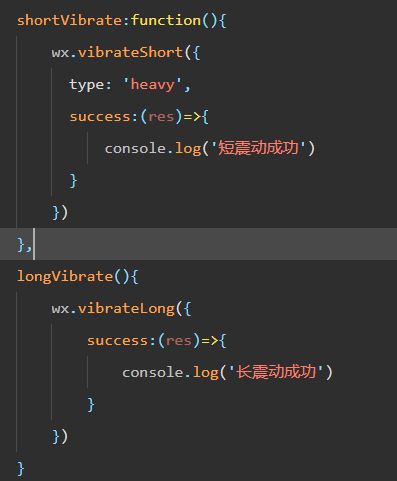

微信小程序 - 手机震动

一、界面 <button type"primary" bindtap"shortVibrate">短震动</button> <button type"primary" bindtap"longVibrate">长震动</button> 二、js逻辑代码 注:文档 https://developers.weixin.qq…...

k8s业务程序联调工具-KtConnect

概述 原理 工具作用是建立了一个从本地到集群的单向VPN,根据VPN原理,打通两个内网必然需要借助一个公共中继节点,ktconnect工具巧妙的利用k8s原生的portforward能力,简化了建立连接的过程,apiserver间接起到了中继节…...

laravel8+vue3.0+element-plus搭建方法

创建 laravel8 项目 composer create-project --prefer-dist laravel/laravel laravel8 8.* 安装 laravel/ui composer require laravel/ui 修改 package.json 文件 "devDependencies": {"vue/compiler-sfc": "^3.0.7","axios": …...

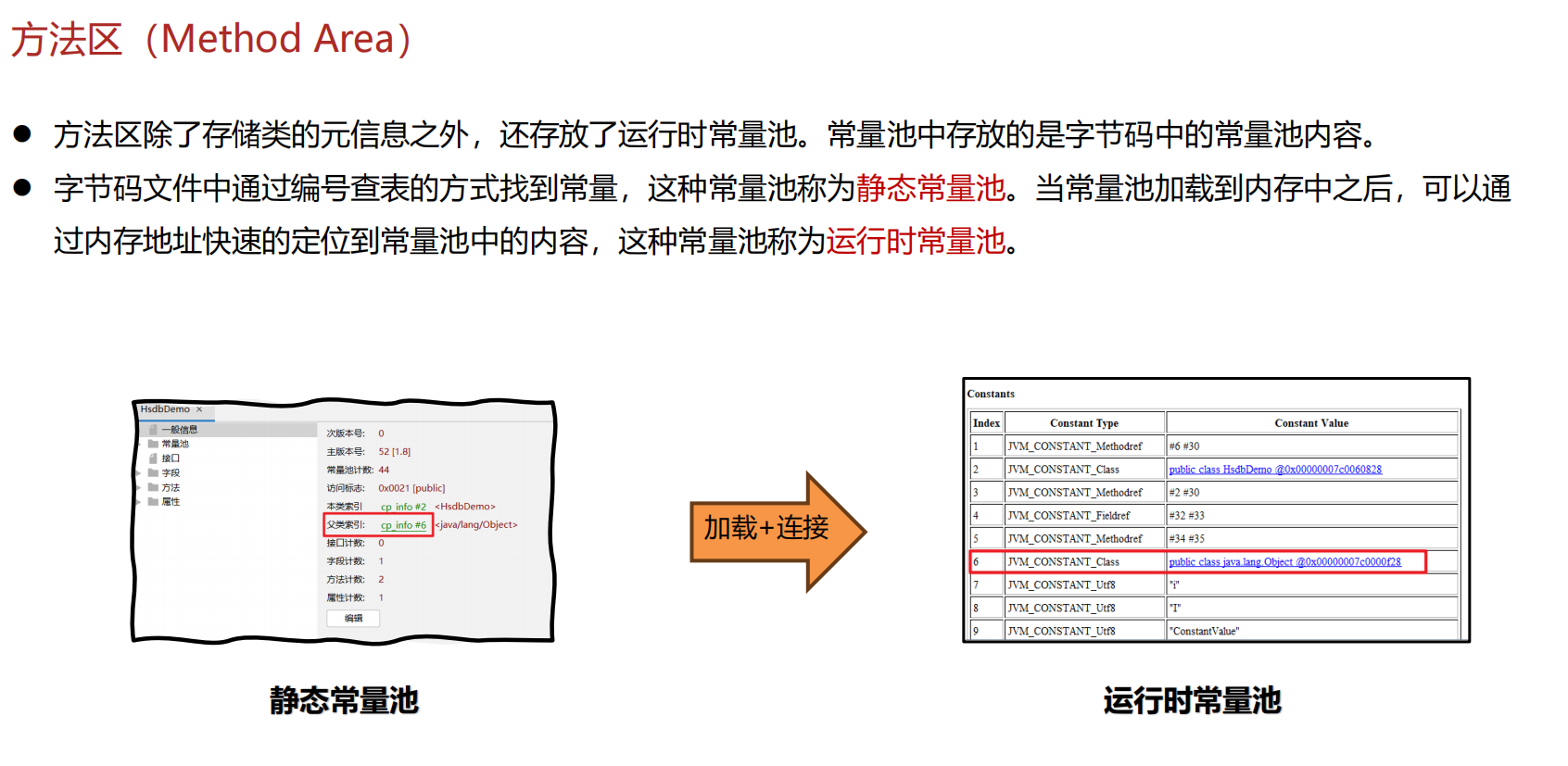

JVM 内存结构 详解

内存结构 运行时数据区: Java虚拟机在运行Java程序过程中管理的内存区域。 程序计数器: 线程私有,程序控制流的指示器,分支、循环、跳转、异常处理、线程恢复等基础功能都依赖这个计数器完成。 每个线程都有一个程序计数…...

TSN交换机正在重构工业网络,PROFINET和EtherCAT会被取代吗?

在工业自动化持续演进的今天,通信网络的角色正变得愈发关键。 2025年6月6日,为期三天的华南国际工业博览会在深圳国际会展中心(宝安)圆满落幕。作为国内工业通信领域的技术型企业,光路科技(Fiberroad&…...

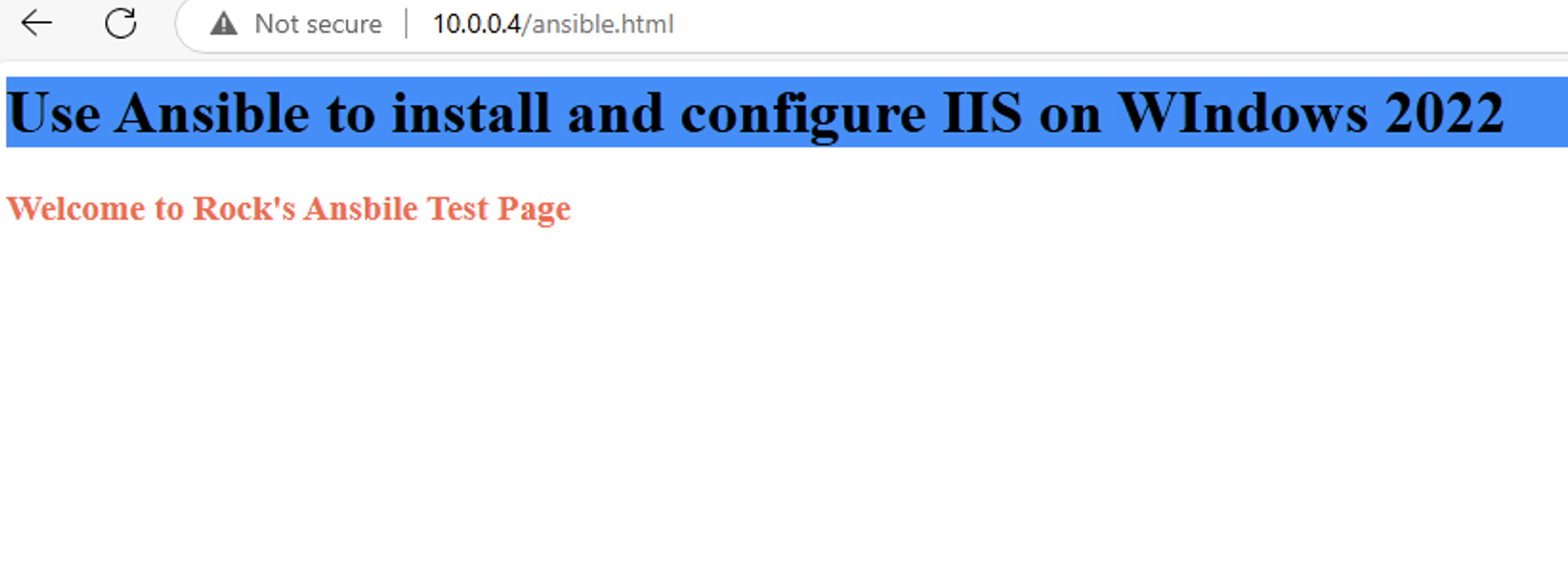

通过 Ansible 在 Windows 2022 上安装 IIS Web 服务器

拓扑结构 这是一个用于通过 Ansible 部署 IIS Web 服务器的实验室拓扑。 前提条件: 在被管理的节点上安装WinRm 准备一张自签名的证书 开放防火墙入站tcp 5985 5986端口 准备自签名证书 PS C:\Users\azureuser> $cert New-SelfSignedCertificate -DnsName &…...

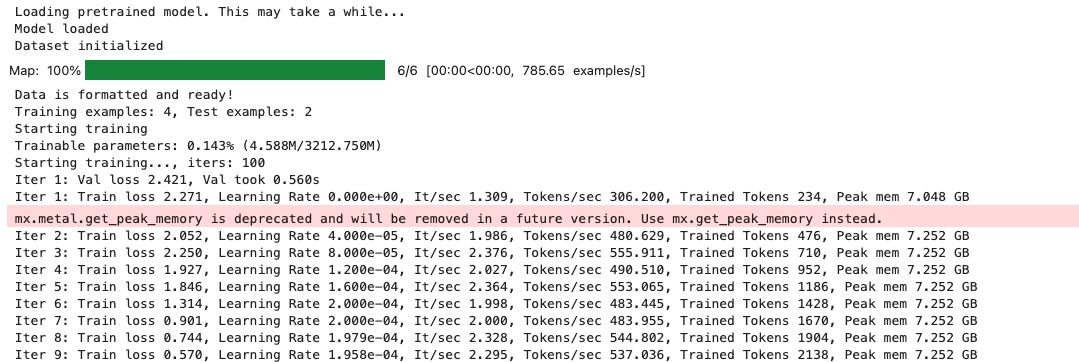

mac:大模型系列测试

0 MAC 前几天经过学生优惠以及国补17K入手了mac studio,然后这两天亲自测试其模型行运用能力如何,是否支持微调、推理速度等能力。下面进入正文。 1 mac 与 unsloth 按照下面的进行安装以及测试,是可以跑通文章里面的代码。训练速度也是很快的。 注意…...

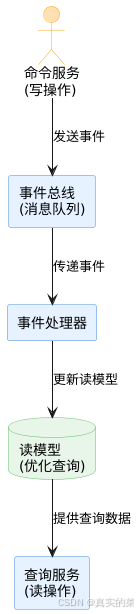

消息队列系统设计与实践全解析

文章目录 🚀 消息队列系统设计与实践全解析🔍 一、消息队列选型1.1 业务场景匹配矩阵1.2 吞吐量/延迟/可靠性权衡💡 权衡决策框架 1.3 运维复杂度评估🔧 运维成本降低策略 🏗️ 二、典型架构设计2.1 分布式事务最终一致…...