Data Analysis Assignment 4: Content Recommendation Based on User and Item Data

## Import the supporting libraries

import pandas as pd

import matplotlib.pyplot as plt

import sklearn.metrics as metrics

import numpy as np

from sklearn.neighbors import NearestNeighbors

from scipy.spatial.distance import correlation

from sklearn.metrics.pairwise import pairwise_distances

import ipywidgets as widgets

from IPython.display import display, clear_output

from contextlib import contextmanager

import warnings

warnings.filterwarnings('ignore')


import os, sys

import re

import seaborn as sns

## Load the datasets and check the shapes of the books, users and ratings data

books = pd.read_csv('F:\\data\\bleeding_data\\BX-Books.csv',sep=None,encoding="latin-1")

books.columns = ['ISBN', 'bookTitle', 'bookAuthor', 'yearOfPublication', 'publisher', 'imageUrlS', 'imageUrlM', 'imageUrlL']

users = pd.read_csv('F:\\data\\bleeding_data\\BX-Users.csv', sep=None, encoding="latin-1")

users.columns = ['userID', 'Location', 'Age']

ratings = pd.read_csv('F:\\data\\bleeding_data\\BX-Book-Ratings.csv', sep=None, encoding="latin-1")

ratings.columns = ['userID', 'ISBN', 'bookRating']

print (books.shape)

print (users.shape)

print (ratings.shape)

(271360, 8)

(278858, 3)

(1149780, 3)
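The loading step relies on `sep=None`, which makes pandas sniff the delimiter (the Book-Crossing files are semicolon-separated). The sketch below shows the same idea on a tiny in-memory sample made up for illustration, not on the real files. Passing `engine='python'` explicitly (only the Python parser can sniff delimiters) and reading `ISBN` as `str` so that leading zeros survive are assumptions of this sketch rather than part of the original code.

```python
import io

import pandas as pd

# Hypothetical two-row sample in the Book-Crossing layout (semicolon-separated, quoted)
sample = (
    '"ISBN";"Book-Title";"Year-Of-Publication"\n'
    '"0195153448";"Classical Mythology";"2002"\n'
    '"0002005018";"Clara Callan";"2001"\n'
)

# sep=None: the Python engine sniffs the delimiter from the first line
books_sample = pd.read_csv(
    io.StringIO(sample),
    sep=None,
    engine="python",
    dtype={"ISBN": str},  # keep ISBNs as text so leading zeros are not lost
)
books_sample.columns = ["ISBN", "bookTitle", "yearOfPublication"]
print(books_sample)
```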

## 1. Books dataset

books.head()

| | ISBN | bookTitle | bookAuthor | yearOfPublication | publisher | imageUrlS | imageUrlM | imageUrlL |
|---|---|---|---|---|---|---|---|---|
| 0 | 0195153448 | Classical Mythology | Mark P. O. Morford | 2002 | Oxford University Press | http://images.amazon.com/images/P/0195153448.0... | http://images.amazon.com/images/P/0195153448.0... | http://images.amazon.com/images/P/0195153448.0... |
| 1 | 0002005018 | Clara Callan | Richard Bruce Wright | 2001 | HarperFlamingo Canada | http://images.amazon.com/images/P/0002005018.0... | http://images.amazon.com/images/P/0002005018.0... | http://images.amazon.com/images/P/0002005018.0... |
| 2 | 0060973129 | Decision in Normandy | Carlo D'Este | 1991 | HarperPerennial | http://images.amazon.com/images/P/0060973129.0... | http://images.amazon.com/images/P/0060973129.0... | http://images.amazon.com/images/P/0060973129.0... |
| 3 | 0374157065 | Flu: The Story of the Great Influenza Pandemic... | Gina Bari Kolata | 1999 | Farrar Straus Giroux | http://images.amazon.com/images/P/0374157065.0... | http://images.amazon.com/images/P/0374157065.0... | http://images.amazon.com/images/P/0374157065.0... |
| 4 | 0393045218 | The Mummies of Urumchi | E. J. W. Barber | 1999 | W. W. Norton & Company | http://images.amazon.com/images/P/0393045218.0... | http://images.amazon.com/images/P/0393045218.0... | http://images.amazon.com/images/P/0393045218.0... |

## The image URLs are not needed for the analysis, so drop them

books.drop(['imageUrlS', 'imageUrlM', 'imageUrlL'],axis=1,inplace=True)

books.head()

| | ISBN | bookTitle | bookAuthor | yearOfPublication | publisher |
|---|---|---|---|---|---|
| 0 | 0195153448 | Classical Mythology | Mark P. O. Morford | 2002 | Oxford University Press |
| 1 | 0002005018 | Clara Callan | Richard Bruce Wright | 2001 | HarperFlamingo Canada |
| 2 | 0060973129 | Decision in Normandy | Carlo D'Este | 1991 | HarperPerennial |
| 3 | 0374157065 | Flu: The Story of the Great Influenza Pandemic... | Gina Bari Kolata | 1999 | Farrar Straus Giroux |
| 4 | 0393045218 | The Mummies of Urumchi | E. J. W. Barber | 1999 | W. W. Norton & Company |

## books.dtypes

books.dtypes

ISBN object

bookTitle object

bookAuthor object

yearOfPublication object

publisher object

dtype: object

## Check the unique values of the attributes

books.bookTitle.unique()

array(['Classical Mythology', 'Clara Callan', 'Decision in Normandy', ...,'Lily Dale : The True Story of the Town that Talks to the Dead',"Republic (World's Classics)","A Guided Tour of Rene Descartes' Meditations on First Philosophy with Complete Translations of the Meditations by Ronald Rubin"],dtype=object)

books.yearOfPublication.unique()

array(['2002', '2001', '1991', '1999', '2000', '1993', '1996', '1988','2004', '1998', '1994', '2003', '1997', '1983', '1979', '1995','1982', '1985', '1992', '1986', '1978', '1980', '1952', '1987','1990', '1981', '1989', '1984', '0', '1968', '1961', '1958','1974', '1976', '1971', '1977', '1975', '1965', '1941', '1970','1962', '1973', '1972', '1960', '1966', '1920', '1956', '1959','1953', '1951', '1942', '1963', '1964', '1969', '1954', '1950','1967', '2005', '1957', '1940', '1937', '1955', '1946', '1936','1930', '2011', '1925', '1948', '1943', '1947', '1945', '1923','2020', '1939', '1926', '1938', '2030', '1911', '1904', '1949','1932', '1928', '1929', '1927', '1931', '1914', '2050', '1934','1910', '1933', '1902', '1924', '1921', '1900', '2038', '2026','1944', '1917', '1901', '2010', '1908', '1906', '1935', '1806','2021', '2012', '2006', 'DK Publishing Inc', 'Gallimard', '1909','2008', '1378', '1919', '1922', '1897', '2024', '1376', '2037'],dtype=object)



books.loc[books.yearOfPublication == 'DK Publishing Inc',:]

| | ISBN | bookTitle | bookAuthor | yearOfPublication | publisher |
|---|---|---|---|---|---|
| 209538 | 078946697X | DK Readers: Creating the X-Men, How It All Beg... | 2000 | DK Publishing Inc | http://images.amazon.com/images/P/078946697X.0... |
| 221678 | 0789466953 | DK Readers: Creating the X-Men, How Comic Book... | 2000 | DK Publishing Inc | http://images.amazon.com/images/P/0789466953.0... |

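## As shown above, the fields in these two rows are shifted by one column (bookAuthor holds the year, yearOfPublication holds the publisher and publisher holds an image URL), so the rows are corrected manually.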

# ISBN '0789466953'

books.loc[books.ISBN == '0789466953','yearOfPublication'] = 2000

books.loc[books.ISBN == '0789466953','bookAuthor'] = "James Buckley"

books.loc[books.ISBN == '0789466953','publisher'] = "DK Publishing Inc"

books.loc[books.ISBN == '0789466953','bookTitle'] = "DK Readers: Creating the X-Men, How Comic Books Come to Life (Level 4: Proficient Readers)"

# ISBN '078946697X'

books.loc[books.ISBN == '078946697X','yearOfPublication'] = 2000

books.loc[books.ISBN == '078946697X','bookAuthor'] = "Michael Teitelbaum"

books.loc[books.ISBN == '078946697X','publisher'] = "DK Publishing Inc"

books.loc[books.ISBN == '078946697X','bookTitle'] = "DK Readers: Creating the X-Men, How It All Began (Level 4: Proficient Readers)"

books.loc[(books.ISBN == '0789466953') | (books.ISBN == '078946697X'),:]

| | ISBN | bookTitle | bookAuthor | yearOfPublication | publisher |
|---|---|---|---|---|---|
| 209538 | 078946697X | DK Readers: Creating the X-Men, How It All Beg... | Michael Teitelbaum | 2000 | DK Publishing Inc |
| 221678 | 0789466953 | DK Readers: Creating the X-Men, How Comic Book... | James Buckley | 2000 | DK Publishing Inc |
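The two shifted rows were found by reading through the unique years. A sketch of locating such rows programmatically, assuming that any non-empty year that cannot be parsed as a number signals a misaligned row. The mini DataFrame is hypothetical, with values taken from the tables above and the image URL replaced by a placeholder; on the real data the same check would also flag the row whose year is 'Gallimard'.

```python
import pandas as pd

# Hypothetical mini version of `books`: one correctly parsed row and one shifted row
books_demo = pd.DataFrame({
    "ISBN": ["0195153448", "078946697X"],
    "bookTitle": ["Classical Mythology", "DK Readers: Creating the X-Men, How It All Began"],
    "bookAuthor": ["Mark P. O. Morford", "2000"],
    "yearOfPublication": ["2002", "DK Publishing Inc"],
    "publisher": ["Oxford University Press", "<image URL placeholder>"],
})

# A year that is present but not numeric suggests the columns are shifted
year_numeric = pd.to_numeric(books_demo["yearOfPublication"], errors="coerce")
shifted = year_numeric.isna() & books_demo["yearOfPublication"].notna()
print(books_demo.loc[shifted, ["ISBN", "bookAuthor", "yearOfPublication"]])
```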

## Next, fix the data type of yearOfPublication

books.yearOfPublication=pd.to_numeric(books.yearOfPublication, errors='coerce')

sorted(books['yearOfPublication'].unique())

[0.0,1376.0,1378.0,1806.0,1897.0,1900.0,1901.0,1902.0,1904.0,1906.0,1908.0,1909.0,1910.0,1911.0,1914.0,1917.0,1919.0,1920.0,1921.0,1922.0,1923.0,1924.0,1925.0,1926.0,1927.0,1928.0,1929.0,1930.0,1931.0,1932.0,1933.0,1934.0,1935.0,1936.0,1937.0,1938.0,1939.0,1940.0,1941.0,1942.0,1943.0,1944.0,1945.0,1946.0,1947.0,1948.0,1949.0,1950.0,1951.0,1952.0,1953.0,1954.0,1955.0,1956.0,1957.0,1958.0,1959.0,1960.0,1961.0,1962.0,1963.0,1964.0,1965.0,1966.0,1967.0,1968.0,1969.0,1970.0,1971.0,1972.0,1973.0,1974.0,1975.0,1976.0,1977.0,1978.0,1979.0,1980.0,1981.0,1982.0,1983.0,1984.0,1985.0,1986.0,1987.0,1988.0,1989.0,1990.0,1991.0,1992.0,1993.0,1994.0,1995.0,1996.0,1997.0,1998.0,1999.0,2000.0,2001.0,2002.0,2003.0,2004.0,2005.0,2006.0,2008.0,2010.0,2011.0,2012.0,2020.0,2021.0,2024.0,2026.0,2030.0,2037.0,2038.0,2050.0,nan]

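## yearOfPublication is now numeric (float64, because values that could not be converted, such as 'Gallimard', became NaN), with values ranging from 0 to 2050.

## Since the dataset was compiled in 2004, I assume that all years after 2006 are invalid, keeping a two-year margin in case the dataset has been updated.

## All invalid entries (including 0) are converted to NaN and then replaced with the mean of the remaining years.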

books.loc[(books.yearOfPublication > 2006) | (books.yearOfPublication == 0),'yearOfPublication'] = np.nan

# Replace the NaNs with the (rounded) mean year of publication

books.yearOfPublication = books.yearOfPublication.fillna(round(books.yearOfPublication.mean()))

books.yearOfPublication.isnull().sum()

0

books.yearOfPublication = books.yearOfPublication.astype(np.int32)
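The year-cleaning steps (coerce to numbers, mark 0 and years after 2006 as invalid, fill with the rounded mean, cast to integers) can be checked on a small hypothetical Series. The sketch assigns the result of `fillna` back instead of calling `fillna(..., inplace=True)` on a column, since newer pandas versions warn that such chained in-place calls may not update the original DataFrame (a warning that `warnings.filterwarnings('ignore')` would hide).

```python
import numpy as np
import pandas as pd

# Hypothetical raw values: valid years, 0, a future year and a publisher name
years = pd.Series(["2002", "0", "2050", "1999", "Gallimard", "2001"])

years = pd.to_numeric(years, errors="coerce")        # "Gallimard" -> NaN, dtype float64
years.loc[(years > 2006) | (years == 0)] = np.nan    # 0 and years after 2006 are invalid
years = years.fillna(round(years.mean()))            # rounded mean of the valid years
years = years.astype(np.int32)
print(years.tolist())
```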

## publisher

books.loc[books.publisher.isnull(),:]

| | ISBN | bookTitle | bookAuthor | yearOfPublication | publisher |
|---|---|---|---|---|---|
| 128890 | 193169656X | Tyrant Moon | Elaine Corvidae | 2002 | NaN |
| 129037 | 1931696993 | Finders Keepers | Linnea Sinclair | 2001 | NaN |

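## Check the other rows with the same bookTitle ('Tyrant Moon', 'Finders Keepers') to see whether they give any clue

## 'Tyrant Moon' has no other rows, and the 'Finders Keepers' rows all have different publishers and authors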

books.loc[(books.bookTitle == 'Tyrant Moon'),:]

| | ISBN | bookTitle | bookAuthor | yearOfPublication | publisher |
|---|---|---|---|---|---|
| 128890 | 193169656X | Tyrant Moon | Elaine Corvidae | 2002 | NaN |

books.loc[(books.bookTitle == 'Finders Keepers'),:]

| | ISBN | bookTitle | bookAuthor | yearOfPublication | publisher |
|---|---|---|---|---|---|
| 10799 | 082177364X | Finders Keepers | Fern Michaels | 2002 | Zebra Books |
| 42019 | 0070465037 | Finders Keepers | Barbara Nickolae | 1989 | McGraw-Hill Companies |
| 58264 | 0688118461 | Finders Keepers | Emily Rodda | 1993 | Harpercollins Juvenile Books |
| 66678 | 1575663236 | Finders Keepers | Fern Michaels | 1998 | Kensington Publishing Corporation |
| 129037 | 1931696993 | Finders Keepers | Linnea Sinclair | 2001 | NaN |
| 134309 | 0156309505 | Finders Keepers | Will | 1989 | Voyager Books |
| 173473 | 0973146907 | Finders Keepers | Sean M. Costello | 2002 | Red Tower Publications |
| 195885 | 0061083909 | Finders Keepers | Sharon Sala | 2003 | HarperTorch |
| 211874 | 0373261160 | Finders Keepers | Elizabeth Travis | 1993 | Worldwide Library |

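## Check by bookAuthor to look for a pattern

## Elaine Corvidae's other books all have different publishers, so there is no clue here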

books.loc[(books.bookAuthor == 'Elaine Corvidae'),:]

| | ISBN | bookTitle | bookAuthor | yearOfPublication | publisher |
|---|---|---|---|---|---|
| 126762 | 1931696934 | Winter's Orphans | Elaine Corvidae | 2001 | Novelbooks |
| 128890 | 193169656X | Tyrant Moon | Elaine Corvidae | 2002 | NaN |
| 129001 | 0759901880 | Wolfkin | Elaine Corvidae | 2001 | Hard Shell Word Factory |

## Check by bookAuthor to look for a pattern (Linnea Sinclair has no other books in the dataset)

books.loc[(books.bookAuthor == 'Linnea Sinclair'),:]

| | ISBN | bookTitle | bookAuthor | yearOfPublication | publisher |
|---|---|---|---|---|---|
| 129037 | 1931696993 | Finders Keepers | Linnea Sinclair | 2001 | NaN |

## Since nothing in common can be used to infer the missing publishers, replace the NaNs with 'other'

books.loc[(books.ISBN == '193169656X'),'publisher'] = 'other'

books.loc[(books.ISBN == '1931696993'),'publisher'] = 'other'
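Because the missing publishers are replaced one ISBN at a time, any further missing value would be left untouched. A vectorised alternative (an assumption, not the original code) is to call `fillna('other')` on the whole column, sketched here on hypothetical rows built from the tables above:

```python
import numpy as np
import pandas as pd

# Hypothetical rows: two missing publishers, as found above, and one present
books_demo = pd.DataFrame({
    "ISBN": ["193169656X", "1931696993", "1931696934"],
    "publisher": [np.nan, np.nan, "Novelbooks"],
})

# Replace every missing publisher in one step
books_demo["publisher"] = books_demo["publisher"].fillna("other")
print(books_demo)
```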

## 2. Users dataset

print (users.shape)

users.head()

(278858, 3)

| | userID | Location | Age |
|---|---|---|---|
| 0 | 1 | nyc, new york, usa | NaN |
| 1 | 2 | stockton, california, usa | 18.0 |
| 2 | 3 | moscow, yukon territory, russia | NaN |
| 3 | 4 | porto, v.n.gaia, portugal | 17.0 |
| 4 | 5 | farnborough, hants, united kingdom | NaN |

users.dtypes

userID int64

Location object

Age float64

dtype: object

users.userID.values

array([ 1, 2, 3, ..., 278856, 278857, 278858], dtype=int64)

## Age

sorted(users.Age.unique())

[nan,0.0,1.0,2.0,3.0,4.0,5.0,6.0,7.0,8.0,9.0,10.0,11.0,12.0,13.0,14.0,15.0,16.0,17.0,18.0,19.0,20.0,21.0,22.0,23.0,24.0,25.0,26.0,27.0,28.0,29.0,30.0,31.0,32.0,33.0,34.0,35.0,36.0,37.0,38.0,39.0,40.0,41.0,42.0,43.0,44.0,45.0,46.0,47.0,48.0,49.0,50.0,51.0,52.0,53.0,54.0,55.0,56.0,57.0,58.0,59.0,60.0,61.0,62.0,63.0,64.0,65.0,66.0,67.0,68.0,69.0,70.0,71.0,72.0,73.0,74.0,75.0,76.0,77.0,78.0,79.0,80.0,81.0,82.0,83.0,84.0,85.0,86.0,87.0,88.0,89.0,90.0,91.0,92.0,93.0,94.0,95.0,96.0,97.0,98.0,99.0,100.0,101.0,102.0,103.0,104.0,105.0,106.0,107.0,108.0,109.0,110.0,111.0,113.0,114.0,115.0,116.0,118.0,119.0,123.0,124.0,127.0,128.0,132.0,133.0,136.0,137.0,138.0,140.0,141.0,143.0,146.0,147.0,148.0,151.0,152.0,156.0,157.0,159.0,162.0,168.0,172.0,175.0,183.0,186.0,189.0,199.0,200.0,201.0,204.0,207.0,208.0,209.0,210.0,212.0,219.0,220.0,223.0,226.0,228.0,229.0,230.0,231.0,237.0,239.0,244.0]

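## The Age column contains invalid entries such as NaN, 0 and implausibly high values (100 and above); here, ages below 5 or above 90 are treated as invalid and set to NaN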

users.loc[(users.Age > 90) | (users.Age < 5), 'Age'] = np.nan

## Replace NaN with the mean age

## Set the data type to int

users.Age = users.Age.fillna(users.Age.mean())

users.Age = users.Age.astype(np.int32)

sorted(users.Age.unique())

[5,6,7,8,9,10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62,63,64,65,66,67,68,69,70,71,72,73,74,75,76,77,78,79,80,81,82,83,84,85,86,87,88,89,90]
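The age rule keeps ages between 5 and 90 and replaces everything else with the mean. The same logic can be written with `Series.between` and `Series.where`; the sketch uses hypothetical ages and shows that `astype(np.int32)` truncates the filled-in mean rather than rounding it.

```python
import numpy as np
import pandas as pd

# Hypothetical ages, including NaN, 0 and an implausible 244
ages = pd.Series([np.nan, 18.0, 0.0, 17.0, 244.0, 30.0])

# Keep ages in [5, 90] (inclusive); everything else, including NaN, becomes NaN
ages = ages.where(ages.between(5, 90))
# Fill with the mean of the valid ages; the int cast drops the fractional part
ages = ages.fillna(ages.mean()).astype(np.int32)
print(ages.tolist())
```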

## 3. Ratings dataset

ratings.shape

(1149780, 3)

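## If every user rated every book, the ratings dataset would have n_users * n_books entries; since the actual number of ratings is far smaller, the dataset is very sparse.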

n_users = users.shape[0]

n_books = books.shape[0]

print (n_users * n_books)

75670906880

ratings.head(5)

| | userID | ISBN | bookRating |
|---|---|---|---|
| 0 | 276725 | 034545104X | 0 |
| 1 | 276726 | 0155061224 | 5 |
| 2 | 276727 | 0446520802 | 0 |
| 3 | 276729 | 052165615X | 3 |
| 4 | 276729 | 0521795028 | 6 |

ratings.bookRating.unique()

array([ 0, 5, 3, 6, 8, 7, 10, 9, 4, 1, 2], dtype=int64)

ratings_new = ratings[ratings.ISBN.isin(books.ISBN)]

print (ratings.shape)

print (ratings_new.shape)

(1149780, 3)

(1031136, 3)

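## The filter only drops ratings of ISBNs that are missing from the books dataset and adds no new users, so the filtered ratings_new dataset, of shape (1031136, 3), is used from here on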

print ("number of users: " + str(n_users))

print ("number of books: " + str(n_books))

number of users: 278858

number of books: 271360

sparsity=1.0-len(ratings_new)/float(n_users*n_books)

print('The sparsity level of the Book-Crossing dataset is ' + str(sparsity*100) + ' %')

The sparsity level of the Book-Crossing dataset is 99.99863734155898 %
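The sparsity figure is plain arithmetic: one minus the number of observed ratings divided by the number of possible user-book pairs. Reproducing it from the counts printed above:

```python
# Counts printed above for the Book-Crossing data
n_users = 278858
n_books = 271360
n_ratings = 1031136  # ratings whose ISBN exists in the books table

possible = n_users * n_books            # every user rating every book
sparsity = 1.0 - n_ratings / possible   # share of user-book cells with no rating
print(possible, f"{sparsity * 100:.6f} %")
```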


ratings_explicit = ratings_new[ratings_new.bookRating != 0]

ratings_implicit = ratings_new[ratings_new.bookRating == 0]

print (ratings_new.shape)

print( ratings_explicit.shape)

print (ratings_implicit.shape)

(1031136, 3)

(383842, 3)

(647294, 3)
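The split treats a rating of 0 as an implicit interaction and 1 to 10 as explicit ratings. The sketch applies the same boolean mask to the five rows shown by `ratings.head(5)` above, and computes the share of explicit ratings from the printed shapes (383842 of 1031136); the variable names are illustrative only.

```python
import pandas as pd

# The first five ratings shown by ratings.head(5) above
ratings_demo = pd.DataFrame({
    "userID": [276725, 276726, 276727, 276729, 276729],
    "ISBN": ["034545104X", "0155061224", "0446520802", "052165615X", "0521795028"],
    "bookRating": [0, 5, 0, 3, 6],
})

is_explicit = ratings_demo["bookRating"] != 0  # 1-10: explicit rating
explicit_demo = ratings_demo[is_explicit]
implicit_demo = ratings_demo[~is_explicit]     # 0: implicit interaction

# Share of explicit ratings in ratings_new, from the shapes printed above
explicit_share = 383842 / 1031136
print(len(explicit_demo), len(implicit_demo), f"{explicit_share:.1%}")
```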

## Statistics: distribution of the explicit ratings

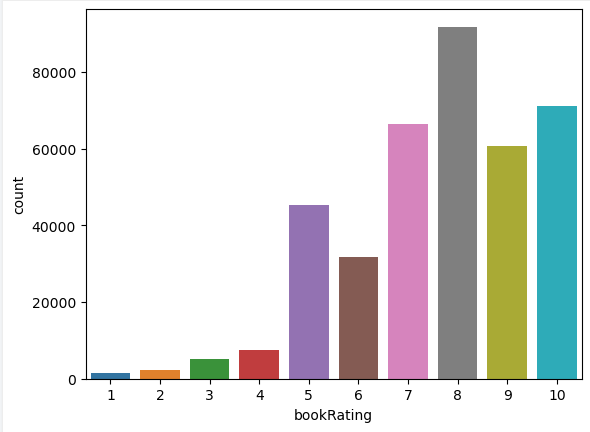

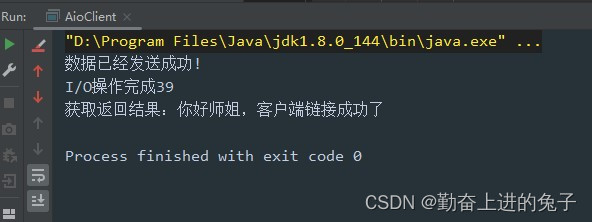

sns.countplot(data=ratings_explicit , x='bookRating')

plt.show()

## Simple popularity-based recommender system (books ranked by the sum of their explicit ratings)

ratings_count = pd.DataFrame(ratings_explicit.groupby(['ISBN'])['bookRating'].sum())

top10 = ratings_count.sort_values('bookRating', ascending = False).head(10)

print ("推荐下列书籍")

top10.merge(books, left_index = True, right_on = 'ISBN')

推荐下列书籍

| | bookRating | ISBN | bookTitle | bookAuthor | yearOfPublication | publisher |
|---|---|---|---|---|---|---|
| 408 | 5787 | 0316666343 | The Lovely Bones: A Novel | Alice Sebold | 2002 | Little, Brown |
| 748 | 4108 | 0385504209 | The Da Vinci Code | Dan Brown | 2003 | Doubleday |
| 522 | 3134 | 0312195516 | The Red Tent (Bestselling Backlist) | Anita Diamant | 1998 | Picador USA |
| 2143 | 2798 | 059035342X | Harry Potter and the Sorcerer's Stone (Harry P... | J. K. Rowling | 1999 | Arthur A. Levine Books |
| 356 | 2595 | 0142001740 | The Secret Life of Bees | Sue Monk Kidd | 2003 | Penguin Books |
| 26 | 2551 | 0971880107 | Wild Animus | Rich Shapero | 2004 | Too Far |
| 1105 | 2524 | 0060928336 | Divine Secrets of the Ya-Ya Sisterhood: A Novel | Rebecca Wells | 1997 | Perennial |
| 706 | 2402 | 0446672211 | Where the Heart Is (Oprah's Book Club (Paperba... | Billie Letts | 1998 | Warner Books |
| 231 | 2219 | 0452282152 | Girl with a Pearl Earring | Tracy Chevalier | 2001 | Plume Books |
| 118 | 2179 | 0671027360 | Angels & Demons | Dan Brown | 2001 | Pocket Star |
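Ranking by the sum of explicit ratings mixes how often a book is rated with how highly it is rated, so a book with many middling ratings can outrank one with a few excellent ones. The sketch below uses hypothetical data to show the same sum-based ranking next to the rating count and mean; the extra columns are an illustration, not part of the original method.

```python
import pandas as pd

# Hypothetical explicit ratings (1-10) for three books
ratings_demo = pd.DataFrame({
    "ISBN": ["A", "A", "A", "B", "B", "C"],
    "bookRating": [6, 7, 8, 10, 10, 9],
})
books_demo = pd.DataFrame({"ISBN": ["A", "B", "C"], "bookTitle": ["Book A", "Book B", "Book C"]})

# Per-book sum (the criterion used above), plus count and mean for comparison
stats = ratings_demo.groupby("ISBN")["bookRating"].agg(["sum", "count", "mean"])
top = stats.sort_values("sum", ascending=False).head(2)
top_books = top.merge(books_demo, left_index=True, right_on="ISBN")
print(top_books[["ISBN", "bookTitle", "sum", "count", "mean"]])
```

Here "Book A" comes first with a sum of 21 even though its mean rating (7) is the lowest of the three.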

users_exp_ratings = users[users.userID.isin(ratings_explicit.userID)]

users_imp_ratings = users[users.userID.isin(ratings_implicit.userID)]

print (users.shape)

print (users_exp_ratings.shape)

print (users_imp_ratings.shape)

(278858, 3)

(68091, 3)

(52451, 3)

## Collaborative-filtering recommender system

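# Keep only users who have given at least 100 explicit ratings
counts1 = ratings_explicit['userID'].value_counts()

ratings_explicit = ratings_explicit[ratings_explicit['userID'].isin(counts1[counts1 >= 100].index)]

# Note: this counts occurrences of each rating *value* (1-10), not ratings per book,
# so it only drops rating values that occur fewer than 100 times
counts = ratings_explicit['bookRating'].value_counts()

ratings_explicit = ratings_explicit[ratings_explicit['bookRating'].isin(counts[counts >= 100].index)]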
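The second filter counts how often each rating value occurs, so it does not select books. If the goal is to keep only books with enough ratings, the count would be taken per `ISBN` instead. A sketch on hypothetical data, with small thresholds standing in for the 100 used above (`MIN_BOOK_RATINGS` is an assumed parameter, not part of the original code):

```python
import pandas as pd

# Hypothetical explicit ratings
ratings_demo = pd.DataFrame({
    "userID": [1, 1, 1, 2, 2, 3],
    "ISBN": ["A", "B", "C", "A", "B", "A"],
    "bookRating": [8, 7, 9, 6, 5, 10],
})

MIN_USER_RATINGS = 2  # the notebook uses 100
MIN_BOOK_RATINGS = 2  # hypothetical threshold on books

# Keep active users first
user_counts = ratings_demo["userID"].value_counts()
active = ratings_demo[ratings_demo["userID"].isin(user_counts[user_counts >= MIN_USER_RATINGS].index)]

# Then count ratings per ISBN (not per rating value) to keep frequently rated books
book_counts = active["ISBN"].value_counts()
filtered = active[active["ISBN"].isin(book_counts[book_counts >= MIN_BOOK_RATINGS].index)]
print(filtered)
```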

ratings_matrix = ratings_explicit.pivot(index='userID', columns='ISBN', values='bookRating')

userID = ratings_matrix.index

ISBN = ratings_matrix.columns

print(ratings_matrix.shape)

ratings_matrix.head()

(449, 66574)

| ISBN | 0000913154 | 0001046438 | 000104687X | 0001047213 | 0001047973 | 000104799X | 0001048082 | 0001053736 | 0001053744 | 0001055607 | ... | B000092Q0A | B00009EF82 | B00009NDAN | B0000DYXID | B0000T6KHI | B0000VZEJQ | B0000X8HIE | B00013AX9E | B0001I1KOG | B000234N3A |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| userID | |||||||||||||||||||||
| 2033 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |
| 2110 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |
| 2276 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |
| 4017 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |
| 4385 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

5 rows × 66574 columns

n_users = ratings_matrix.shape[0]  # only users with explicit ratings (at least 100 of them) remain

n_books = ratings_matrix.shape[1]

print (n_users, n_books)

449 66574

ratings_matrix.fillna(0, inplace = True)

ratings_matrix = ratings_matrix.astype(np.int32)

ratings_matrix.head(5)

| ISBN | 0000913154 | 0001046438 | 000104687X | 0001047213 | 0001047973 | 000104799X | 0001048082 | 0001053736 | 0001053744 | 0001055607 | ... | B000092Q0A | B00009EF82 | B00009NDAN | B0000DYXID | B0000T6KHI | B0000VZEJQ | B0000X8HIE | B00013AX9E | B0001I1KOG | B000234N3A |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| userID | |||||||||||||||||||||
| 2033 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 2110 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 2276 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 4017 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 4385 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

5 rows × 66574 columns
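Pivoting turns the rating list into a user-item matrix with one row per user and one column per ISBN; cells without a rating become NaN and are then filled with 0. The sketch uses some userIDs and ISBNs from the tables above but hypothetical ratings. Note that `pivot` raises an error if a (userID, ISBN) pair occurs more than once, and that the sparsity here is computed from the matrix's own dimensions. In this dense form, the real 449 × 66574 `int32` matrix takes roughly 449 × 66574 × 4 bytes ≈ 120 MB.

```python
import numpy as np
import pandas as pd

# userIDs and ISBNs taken from the tables above; the ratings themselves are hypothetical
ratings_demo = pd.DataFrame({
    "userID": [2033, 2033, 2110, 2276],
    "ISBN": ["0001046438", "000104687X", "0001046438", "0001047213"],
    "bookRating": [8, 5, 9, 7],
})

# One row per user, one column per ISBN; unrated cells start as NaN
matrix = ratings_demo.pivot(index="userID", columns="ISBN", values="bookRating")
matrix = matrix.fillna(0).astype(np.int32)   # 0 now means "not rated"

density = (matrix.to_numpy() > 0).mean()     # share of cells holding a rating
sparsity = 1.0 - density
print(matrix)
print(f"sparsity: {sparsity:.2%}")
```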

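# Note: the denominator uses all users with explicit ratings (users_exp_ratings, 68091 users),
# not the 449 users kept in ratings_matrix
sparsity=1.0-len(ratings_explicit)/float(users_exp_ratings.shape[0]*n_books)

print('The sparsity level of the Book-Crossing dataset is ' + str(sparsity*100) + ' %')

The sparsity level of the Book-Crossing dataset is 99.99772184106935 %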

## User-based collaborative filtering


k=10

metric='cosine'

def findksimilarusers(user_id, ratings, metric=metric, k=k):
    # Brute-force k-nearest-neighbour search over the rows (users) of the rating matrix
    model_knn = NearestNeighbors(metric=metric, algorithm='brute')
    model_knn.fit(ratings)
    loc = ratings.index.get_loc(user_id)
    # Ask for k+1 neighbours because the closest one is normally the user itself
    distances, indices = model_knn.kneighbors(ratings.iloc[loc, :].values.reshape(1, -1), n_neighbors=k+1)
    # For the cosine metric, similarity = 1 - distance
    similarities = 1 - distances.flatten()
    return similarities, indices

def predict_userbased(user_id, item_id, ratings, metric=metric, k=k):
    user_loc = ratings.index.get_loc(user_id)
    item_loc = ratings.columns.get_loc(item_id)
    # k most similar users (cosine similarity), including the user itself
    similarities, indices = findksimilarusers(user_id, ratings, metric, k)
    # Mean rating of the target user (the 0s that mark unrated books are included)
    mean_rating = ratings.iloc[user_loc, :].mean()
    # Total weight of the neighbours, excluding the user's self-similarity of 1
    sum_wt = np.sum(similarities) - 1
    wtd_sum = 0
    for i in range(0, len(indices.flatten())):
        if indices.flatten()[i] == user_loc:
            continue
        # Neighbour's deviation from its own mean rating, weighted by its similarity
        ratings_diff = ratings.iloc[indices.flatten()[i], item_loc] - np.mean(ratings.iloc[indices.flatten()[i], :])
        product = ratings_diff * similarities[i]
        wtd_sum = wtd_sum + product
    prediction = int(round(mean_rating + (wtd_sum / sum_wt)))
    # With very sparse data, correlation-based measures can give predictions outside
    # the 1-10 rating scale, so clamp the result after it has been computed
    if prediction <= 0:
        prediction = 1
    elif prediction > 10:
        prediction = 10
    print('Predicted rating for user {0} -> item {1}: {2}'.format(user_id, item_id, prediction))
    return prediction

## Test

predict_userbased(11676,'0001056107',ratings_matrix)

Predicted rating for user 11676 -> item 0001056107: 10
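The user-based prediction combines the target user's mean rating with the neighbours' mean-centred ratings, weighted by cosine similarity: $\hat r_{u,i} = \bar r_u + \sum_v s(u,v)\,(r_{v,i} - \bar r_v) / \sum_v s(u,v)$, summing over the k nearest users v. Below is a dependency-light NumPy sketch of the same idea on a hypothetical 4 × 4 matrix, with a plain cosine computation standing in for scikit-learn's `NearestNeighbors`; the function names are made up for illustration and assume no all-zero rows.

```python
import numpy as np


def cosine_similarities(matrix, row):
    """Cosine similarity between row `row` and every row of `matrix` (0 = unrated)."""
    norms = np.linalg.norm(matrix, axis=1)
    return matrix @ matrix[row] / (norms * norms[row])


def predict_user_based(matrix, user, item, k=2):
    """Mean-centred, similarity-weighted kNN prediction clamped to the 1-10 scale."""
    sims = cosine_similarities(matrix, user)
    sims[user] = -np.inf                       # exclude the target user
    neighbours = np.argsort(sims)[::-1][:k]    # indices of the k most similar users
    weights = sims[neighbours]
    means = matrix.mean(axis=1)                # as in the notebook, 0s count towards the mean
    deviations = matrix[neighbours, item] - means[neighbours]
    pred = means[user] + weights @ deviations / weights.sum()
    return int(np.clip(np.rint(pred), 1, 10))  # round, then clamp to the rating scale


# Hypothetical 4 users x 4 books rating matrix (0 = not rated)
matrix = np.array([
    [8, 0, 6, 0],
    [7, 9, 5, 0],
    [0, 3, 0, 9],
    [8, 8, 7, 1],
], dtype=float)

print(predict_user_based(matrix, user=0, item=1, k=2))
```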

## Item-based collaborative filtering

def findksimilaritems(item_id, ratings, metric=metric, k=k):
    # Transpose so that each row is an item (book) described by its users' ratings
    ratings = ratings.T
    loc = ratings.index.get_loc(item_id)
    model_knn = NearestNeighbors(metric=metric, algorithm='brute')
    model_knn.fit(ratings)
    # Ask for k+1 neighbours because the closest one is normally the item itself
    distances, indices = model_knn.kneighbors(ratings.iloc[loc, :].values.reshape(1, -1), n_neighbors=k+1)
    # For the cosine metric, similarity = 1 - distance
    similarities = 1 - distances.flatten()
    return similarities, indices

def predict_itembased(user_id, item_id, ratings, metric=metric, k=k):
    wtd_sum = 0
    user_loc = ratings.index.get_loc(user_id)
    item_loc = ratings.columns.get_loc(item_id)
    # k most similar items (cosine similarity), including the item itself
    similarities, indices = findksimilaritems(item_id, ratings, metric, k)
    # Total weight of the neighbours, excluding the item's self-similarity of 1
    sum_wt = np.sum(similarities) - 1
    for i in range(0, len(indices.flatten())):
        if indices.flatten()[i] == item_loc:
            continue
        # The user's rating of a similar item, weighted by that item's similarity
        product = ratings.iloc[user_loc, indices.flatten()[i]] * similarities[i]
        wtd_sum = wtd_sum + product
    prediction = int(round(wtd_sum / sum_wt))
    # With very sparse data, correlation-based measures can give predictions outside
    # the 1-10 rating scale, so clamp the prediction
    if prediction <= 0:
        prediction = 1
    elif prediction > 10:
        prediction = 10
    print('Predicted rating for user {0} -> item {1}: {2}'.format(user_id, item_id, prediction))
    return prediction

## Test

prediction = predict_itembased(11676,'0001056107',ratings_matrix)

Predicted rating for user 11676 -> item 0001056107: 1
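Item-based prediction instead takes the user's own ratings of the k items most similar to the target item and averages them with cosine-similarity weights: $\hat r_{u,i} = \sum_j s(i,j)\, r_{u,j} / \sum_j s(i,j)$. Because unrated cells are 0, neighbouring items the user has not rated pull the estimate down. A NumPy sketch on a hypothetical 4 × 4 matrix (the function name is made up for illustration; it assumes no all-zero columns):

```python
import numpy as np


def predict_item_based(matrix, user, item, k=2):
    """Similarity-weighted average of the user's ratings of the k most similar items."""
    items = matrix.T                                     # one row per item
    norms = np.linalg.norm(items, axis=1)
    sims = items @ items[item] / (norms * norms[item])   # cosine similarity to `item`
    sims[item] = -np.inf                                 # exclude the item itself
    neighbours = np.argsort(sims)[::-1][:k]              # k most similar items
    weights = sims[neighbours]
    pred = matrix[user, neighbours] @ weights / weights.sum()
    return int(np.clip(np.rint(pred), 1, 10))            # round, then clamp to 1-10


# Hypothetical 4 users x 4 books rating matrix (0 = not rated)
matrix = np.array([
    [8, 0, 6, 0],
    [7, 9, 5, 0],
    [0, 3, 0, 9],
    [8, 8, 7, 1],
], dtype=float)

print(predict_item_based(matrix, user=0, item=1, k=2))
```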

相关文章:

数据分析作业四-基于用户及物品数据进行内容推荐

## 导入支持库 import pandas as pd import matplotlib.pyplot as plt import sklearn.metrics as metrics import numpy as np from sklearn.neighbors import NearestNeighbors from scipy.spatial.distance import correlation from sklearn.metrics.pairwise import pairwi…...

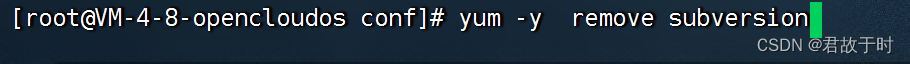

在腾讯云服务器OpenCLoudOS系统中安装svn(有图详解)

1. 安装svn yum -y install subversion 安装成功: 2. 创建数据根目录及仓库 mkdir -p /usr/local/svn/svnrepository 创建test仓库: svnadmin create /usr/local/svn/test test仓库创建成功: 3. 修改配置test仓库 cd /usr/local/svn/te…...

C语言日常刷题5

文章目录 题目答案与解析1234567、 题目 1、以下叙述中正确的是( ) A: 只能在循环体内和switch语句体内使用break语句 B: 当break出现在循环体中的switch语句体内时,其作用是跳出该switch语句体,并中止循环体的执行 C: continue语…...

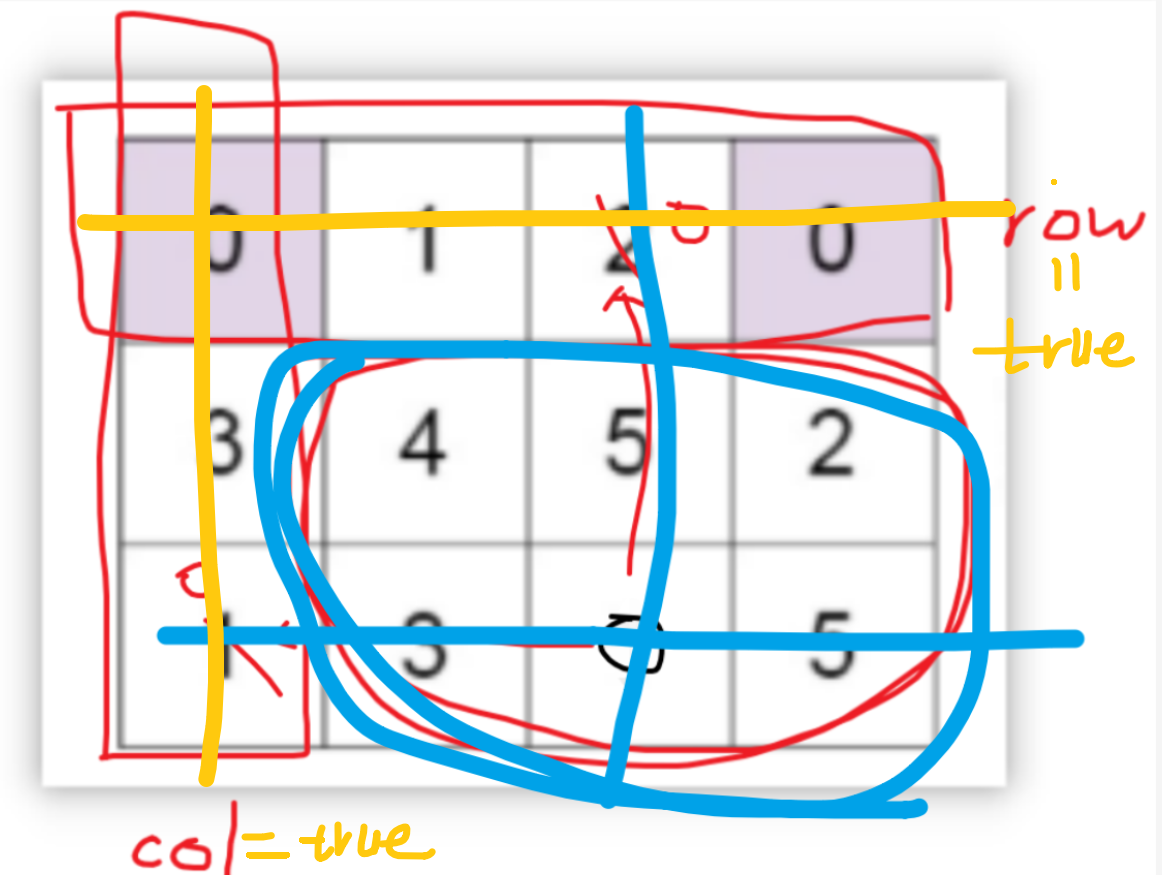

【LeetCode-中等题】73. 矩阵置零

题目 题解一:使用标记数组 public void setZeroes(int[][] matrix) {int m matrix.length;int n matrix[0].length;boolean[] row new boolean[m];boolean[] col new boolean[n];for(int i0; i< m;i){for(int j 0;j<n;j){if (matrix[i][j] 0) row[i]col…...

本地部署 FastGPT

本地部署 FastGPT 1. FastGPT 是什么2. 部署 FastGPT 1. FastGPT 是什么 FastGPT 是一个基于 LLM 大语言模型的知识库问答系统,提供开箱即用的数据处理、模型调用等能力。同时可以通过 Flow 可视化进行工作流编排,从而实现复杂的问答场景! …...

软件工程(十八) 行为型设计模式(四)

1、状态模式 简要说明 允许一个对象在其内部改变时改变它的行为 速记关键字 状态变成类 类图如下 状态模式主要用来解决对象在多种状态转换时,需要对外输出不同的行为的问题。比如订单从待付款到待收货的咋黄台发生变化,执行的逻辑是不一样的。 所以我们将状态抽象为一…...

Socket通信与WebSocket协议

文章目录 目录 文章目录 前言 一、Socket通信 1.1 BIO 1.2 NIO 1.3 AIO 二、WebSocket协议 总结 前言 一、Socket通信 Socket是一种用于网络通信的编程接口(API),它提供了一种机制,使不同主机之间可以通过网络进行数据传输和通信…...

新KG视点 | Jeff Pan、陈矫彦等——大语言模型与知识图谱的机遇与挑战

OpenKG 大模型专辑 导读 知识图谱和大型语言模型都是用来表示和处理知识的手段。大模型补足了理解语言的能力,知识图谱则丰富了表示知识的方式,两者的深度结合必将为人工智能提供更为全面、可靠、可控的知识处理方法。在这一背景下,OpenKG组织…...

详解过滤器Filter和拦截器Interceptor的区别和联系

目录 前言 区别 联系 前言 过滤器(Filter)和拦截器(Interceptor)都是用于在Web应用程序中处理请求和响应的组件,但它们在实现方式和功能上有一些区别。 区别 1. 实现方式: - 过滤器是基于Servlet规范的组件,通过实现javax.servlet.Filt…...

List常用的操作

1、看List里是否存在某个元素 contains //省略建立listboolean contains stringList.contains("上海");System.out.println(contains); 如果存在是true,不存在是false 2、看某个元素在List中的索引号 .indexOf List<String>stringList new Ar…...

Android studio APK切换多个摄像头(Camera2)

1.先设置camera的权限 <uses-permission android:name"android.permission.CAMERA" /> 2.布局 <?xml version"1.0" encoding"utf-8"?> <LinearLayout xmlns:android"http://schemas.android.com/apk/res/android"and…...

ChatGPT 对教育的影响,AI 如何颠覆传统教育

胜量老师 来源:BV1Nv4y1H7kC 由Chat GPT引发的对教育的思考,人类文明发展至今一直靠教育完成文明的传承,一个年轻人要经历若干年的学习,才能进入社会投入对文明的建设,而学习中有大量内容是需要记忆和反复训练的。 无…...

Spring(九)声明式事务

Spring整合Junit4和JdbcTemplater如下所示: 我们所使用的junit的jar包不同,可以整合不同版本的junit。 我们导入的依赖如下所示: <?xml version"1.0" encoding"UTF-8"?> <project xmlns"http://maven.a…...

)

java中用HSSFWorkbook生成xls格式的excel(亲测)

SXSSFWorkbook类是用于生成XLSX格式的Excel文件(基于XML格式),而不是XLS格式的Excel文件(基于二进制格式)。 如果你需要生成XLS格式的Excel文件,可以使用HSSFWorkbook类。以下是一个简单的示例:…...

做平面设计一般电脑可以吗 优漫动游

平面设计常用的软件如下:Photoshop、AutoCAD、AI等。其中对电脑配置要求高的是AutoCAD,可运行AutoCAD的软件均可运行如上软件。 做平面设计一般电脑可以吗 AutoCAD64位版配置要求:AMDAthlon64位处理器、支持SSE2技术的AMDOpteron处理器、…...

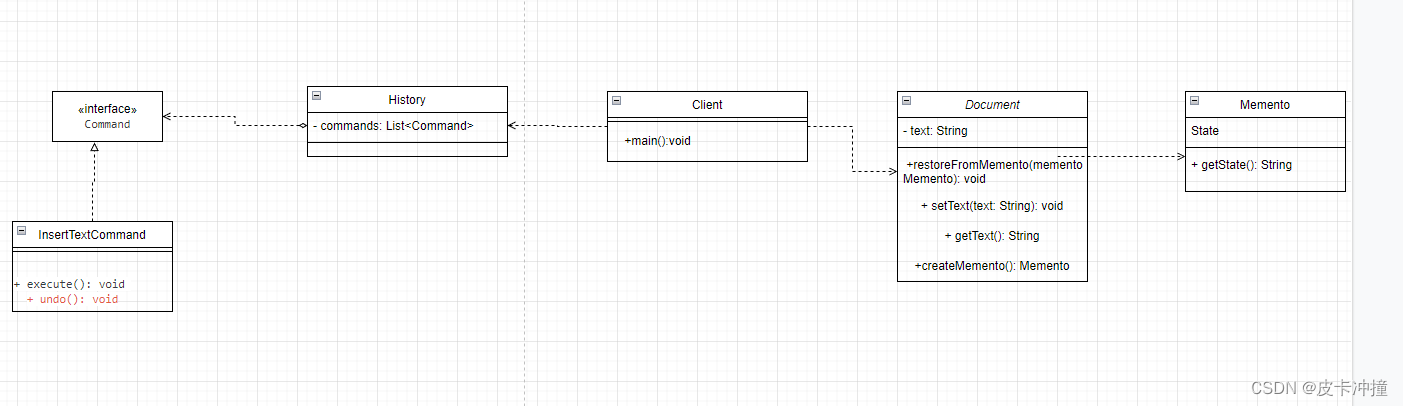

设计模式备忘录+命令模式实现Word撤销恢复操作

文章目录 前言思路代码实现uml类图总结 前言 最近学习设计模式行为型的模式,学到了备忘录模式提到这个模式可以记录一个对象的状态属性值,用于下次复用,于是便想到了我们在Windows系统上使用的撤销操作,于是便想着使用这个模式进…...

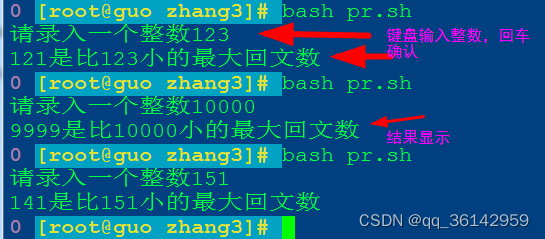

Linux centos7 bash编程小训练

训练要求: 求比一个数小的最大回文数 知识点: 一个数字正读反读都一样,我们称为回文数,如5、11、55、121、222等。 我们训练用bash编写一个小程序,由我们标准输入一个整数,计算机将显示出一个比这个数小…...

创作2周年纪念日-特别篇

创作2周年纪念日-特别篇 1. 与CSDN的机缘2. 收获3. 憧憬 1. 与CSDN的机缘 很荣幸,在大学时候,能够接触到CSDN这样一个平台,当时对嵌入式开发、编程、计算机视觉等内容比较感兴趣。后面一个很偶然的联培实习机会,让我接触到了Pych…...

【UE5】用法简介-使用MAWI高精度树林资产的地形材质与添加风雪效果

首先我们新建一个basic工程 然后点击floor按del键,把floor给删除。 只留下空白场景 点击“地形” 在这个范例里,我只创建一个500X500大小的地形,只为了告诉大家用法,点击创建 创建好之后有一大片空白的地形出现 让我们点左上角…...

兼容AD210 车规级高精度隔离放大器:ISO EM210

车规级高精度隔离放大器:ISO EM210 Pin-Pin兼容AD210的低成本,小体积DIP标准38Pin金属外壳封装模块,能有效屏蔽现场EMC空间干扰。功能设计全面,采用非固定增益方式,输入信号经过输入端的前置放大器(增益为1-100&#x…...

)

进程地址空间(比特课总结)

一、进程地址空间 1. 环境变量 1 )⽤户级环境变量与系统级环境变量 全局属性:环境变量具有全局属性,会被⼦进程继承。例如当bash启动⼦进程时,环 境变量会⾃动传递给⼦进程。 本地变量限制:本地变量只在当前进程(ba…...

DockerHub与私有镜像仓库在容器化中的应用与管理

哈喽,大家好,我是左手python! Docker Hub的应用与管理 Docker Hub的基本概念与使用方法 Docker Hub是Docker官方提供的一个公共镜像仓库,用户可以在其中找到各种操作系统、软件和应用的镜像。开发者可以通过Docker Hub轻松获取所…...

通过Wrangler CLI在worker中创建数据库和表

官方使用文档:Getting started Cloudflare D1 docs 创建数据库 在命令行中执行完成之后,会在本地和远程创建数据库: npx wranglerlatest d1 create prod-d1-tutorial 在cf中就可以看到数据库: 现在,您的Cloudfla…...

第25节 Node.js 断言测试

Node.js的assert模块主要用于编写程序的单元测试时使用,通过断言可以提早发现和排查出错误。 稳定性: 5 - 锁定 这个模块可用于应用的单元测试,通过 require(assert) 可以使用这个模块。 assert.fail(actual, expected, message, operator) 使用参数…...

LLM基础1_语言模型如何处理文本

基于GitHub项目:https://github.com/datawhalechina/llms-from-scratch-cn 工具介绍 tiktoken:OpenAI开发的专业"分词器" torch:Facebook开发的强力计算引擎,相当于超级计算器 理解词嵌入:给词语画"…...

【python异步多线程】异步多线程爬虫代码示例

claude生成的python多线程、异步代码示例,模拟20个网页的爬取,每个网页假设要0.5-2秒完成。 代码 Python多线程爬虫教程 核心概念 多线程:允许程序同时执行多个任务,提高IO密集型任务(如网络请求)的效率…...

MySQL用户和授权

开放MySQL白名单 可以通过iptables-save命令确认对应客户端ip是否可以访问MySQL服务: test: # iptables-save | grep 3306 -A mp_srv_whitelist -s 172.16.14.102/32 -p tcp -m tcp --dport 3306 -j ACCEPT -A mp_srv_whitelist -s 172.16.4.16/32 -p tcp -m tcp -…...

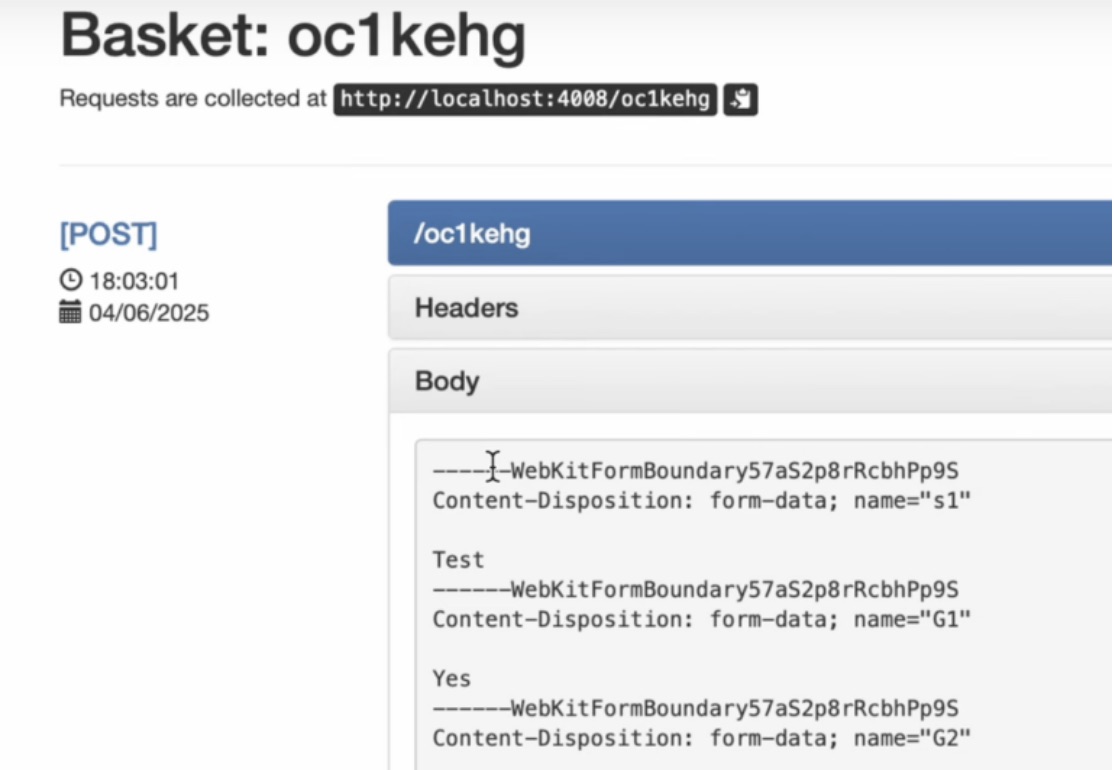

如何在网页里填写 PDF 表格?

有时候,你可能希望用户能在你的网站上填写 PDF 表单。然而,这件事并不简单,因为 PDF 并不是一种原生的网页格式。虽然浏览器可以显示 PDF 文件,但原生并不支持编辑或填写它们。更糟的是,如果你想收集表单数据ÿ…...

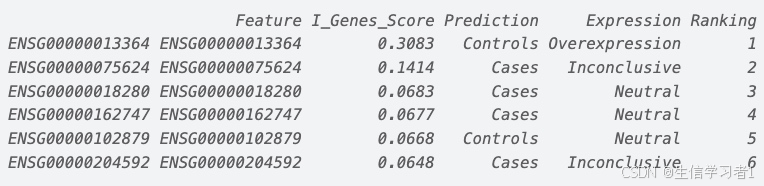

【数据分析】R版IntelliGenes用于生物标志物发现的可解释机器学习

禁止商业或二改转载,仅供自学使用,侵权必究,如需截取部分内容请后台联系作者! 文章目录 介绍流程步骤1. 输入数据2. 特征选择3. 模型训练4. I-Genes 评分计算5. 输出结果 IntelliGenesR 安装包1. 特征选择2. 模型训练和评估3. I-Genes 评分计…...

提供了哪些便利?)

现有的 Redis 分布式锁库(如 Redisson)提供了哪些便利?

现有的 Redis 分布式锁库(如 Redisson)相比于开发者自己基于 Redis 命令(如 SETNX, EXPIRE, DEL)手动实现分布式锁,提供了巨大的便利性和健壮性。主要体现在以下几个方面: 原子性保证 (Atomicity)ÿ…...