Analysis of the Stable Diffusion modules in the diffusers library

In diffusers, Stable Diffusion v1.5 is made up of the following main components:

Out[3]: dict_keys(['vae', 'text_encoder', 'tokenizer', 'unet', 'scheduler', 'safety_checker', 'feature_extractor'])

The detailed structure of each component is given below.
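Before the per-component dumps, here is a rough map of how the components hand tensors to each other during text-to-image generation. This is a pure-Python sketch, not diffusers code: the function name `sd15_tensor_shapes` is hypothetical, and it only hard-codes numbers that appear in the dumps below (77 tokens, 768-dim text features, 4 latent channels, VAE widths 128/256/512/512, 224-pixel crops). To our understanding, `StableDiffusionPipeline` derives `vae_scale_factor` with the same formula. The scheduler and safety_checker do not change these shapes: the scheduler only decides which timesteps the UNet loop visits, and the safety checker consumes the 224x224 `pixel_values`.

```python
def sd15_tensor_shapes(height: int = 512, width: int = 512, batch: int = 1) -> dict:
    """Shapes passed between SD v1.5 components (illustrative sketch only)."""
    vae_block_out_channels = [128, 256, 512, 512]  # widths seen in the VAE encoder dump
    # Every VAE level except the last halves the resolution -> 2**3 = 8.
    vae_scale_factor = 2 ** (len(vae_block_out_channels) - 1)
    max_length = 77      # tokenizer model_max_length / text position_embedding size
    text_hidden = 768    # CLIP text width; also in_features of the UNet attn2 to_k/to_v
    latent_channels = 4  # UNet conv_in input channels and VAE post_quant_conv channels
    latent = (batch, latent_channels, height // vae_scale_factor, width // vae_scale_factor)
    return {
        "tokenizer -> input_ids": (batch, max_length),
        "text_encoder -> last_hidden_state": (batch, max_length, text_hidden),
        "unet latents in / noise prediction out": latent,
        "vae decoder -> image": (batch, 3, height, width),
        "feature_extractor -> pixel_values": (batch, 3, 224, 224),
    }


if __name__ == "__main__":
    for name, shape in sd15_tensor_shapes().items():
        print(f"{name:40s} {shape}")
```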

“text_encoder block”

CLIPTextModel(
  (text_model): CLIPTextTransformer(
    (embeddings): CLIPTextEmbeddings(
      (token_embedding): Embedding(49408, 768)
      (position_embedding): Embedding(77, 768)
    )
    (encoder): CLIPEncoder(
      (layers): ModuleList(
        (0-11): 12 x CLIPEncoderLayer(
          (self_attn): CLIPAttention(
            (k_proj): Linear(in_features=768, out_features=768, bias=True)
            (v_proj): Linear(in_features=768, out_features=768, bias=True)
            (q_proj): Linear(in_features=768, out_features=768, bias=True)
            (out_proj): Linear(in_features=768, out_features=768, bias=True)
          )
          (layer_norm1): LayerNorm((768,), eps=1e-05, elementwise_affine=True)
          (mlp): CLIPMLP(
            (activation_fn): QuickGELUActivation()
            (fc1): Linear(in_features=768, out_features=3072, bias=True)
            (fc2): Linear(in_features=3072, out_features=768, bias=True)
          )
          (layer_norm2): LayerNorm((768,), eps=1e-05, elementwise_affine=True)
        )
      )
    )
    (final_layer_norm): LayerNorm((768,), eps=1e-05, elementwise_affine=True)
  )
)
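The dump is enough to recount the text encoder's size. The sketch below (helper names are hypothetical) adds up the weights and biases of every `Linear`, the two `Embedding` tables and the `LayerNorm` affine parameters shown above. With a loaded pipeline, `sum(p.numel() for p in pipe.text_encoder.parameters())` should give the same total, 123,060,480 (about 123M).

```python
def linear_params(fan_in: int, fan_out: int) -> int:
    return fan_in * fan_out + fan_out  # weight + bias (every Linear here has bias=True)


def layer_norm_params(width: int) -> int:
    return 2 * width  # elementwise_affine=True -> weight and bias


def clip_text_encoder_params(vocab=49408, max_pos=77, d=768, d_ff=3072, n_layers=12) -> int:
    embeddings = vocab * d + max_pos * d  # token_embedding + position_embedding
    per_layer = (
        4 * linear_params(d, d)      # q_proj, k_proj, v_proj, out_proj
        + linear_params(d, d_ff)     # mlp.fc1
        + linear_params(d_ff, d)     # mlp.fc2
        + 2 * layer_norm_params(d)   # layer_norm1, layer_norm2
    )
    return embeddings + n_layers * per_layer + layer_norm_params(d)  # + final_layer_norm


print(f"{clip_text_encoder_params():,}")  # 123,060,480
```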

“vae block”

AutoencoderKL((encoder): Encoder((conv_in): Conv2d(3, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(down_blocks): ModuleList((0): DownEncoderBlock2D((resnets): ModuleList((0-1): 2 x ResnetBlock2D((norm1): GroupNorm(32, 128, eps=1e-06, affine=True)(conv1): LoRACompatibleConv(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(norm2): GroupNorm(32, 128, eps=1e-06, affine=True)(dropout): Dropout(p=0.0, inplace=False)(conv2): LoRACompatibleConv(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(nonlinearity): SiLU()))(downsamplers): ModuleList((0): Downsample2D((conv): LoRACompatibleConv(128, 128, kernel_size=(3, 3), stride=(2, 2)))))(1): DownEncoderBlock2D((resnets): ModuleList((0): ResnetBlock2D((norm1): GroupNorm(32, 128, eps=1e-06, affine=True)(conv1): LoRACompatibleConv(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(norm2): GroupNorm(32, 256, eps=1e-06, affine=True)(dropout): Dropout(p=0.0, inplace=False)(conv2): LoRACompatibleConv(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(nonlinearity): SiLU()(conv_shortcut): LoRACompatibleConv(128, 256, kernel_size=(1, 1), stride=(1, 1)))(1): ResnetBlock2D((norm1): GroupNorm(32, 256, eps=1e-06, affine=True)(conv1): LoRACompatibleConv(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(norm2): GroupNorm(32, 256, eps=1e-06, affine=True)(dropout): Dropout(p=0.0, inplace=False)(conv2): LoRACompatibleConv(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(nonlinearity): SiLU()))(downsamplers): ModuleList((0): Downsample2D((conv): LoRACompatibleConv(256, 256, kernel_size=(3, 3), stride=(2, 2)))))(2): DownEncoderBlock2D((resnets): ModuleList((0): ResnetBlock2D((norm1): GroupNorm(32, 256, eps=1e-06, affine=True)(conv1): LoRACompatibleConv(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(norm2): GroupNorm(32, 512, eps=1e-06, affine=True)(dropout): Dropout(p=0.0, inplace=False)(conv2): LoRACompatibleConv(512, 512, kernel_size=(3, 3), stride=(1, 
1), padding=(1, 1))(nonlinearity): SiLU()(conv_shortcut): LoRACompatibleConv(256, 512, kernel_size=(1, 1), stride=(1, 1)))(1): ResnetBlock2D((norm1): GroupNorm(32, 512, eps=1e-06, affine=True)(conv1): LoRACompatibleConv(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(norm2): GroupNorm(32, 512, eps=1e-06, affine=True)(dropout): Dropout(p=0.0, inplace=False)(conv2): LoRACompatibleConv(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(nonlinearity): SiLU()))(downsamplers): ModuleList((0): Downsample2D((conv): LoRACompatibleConv(512, 512, kernel_size=(3, 3), stride=(2, 2)))))(3): DownEncoderBlock2D((resnets): ModuleList((0-1): 2 x ResnetBlock2D((norm1): GroupNorm(32, 512, eps=1e-06, affine=True)(conv1): LoRACompatibleConv(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(norm2): GroupNorm(32, 512, eps=1e-06, affine=True)(dropout): Dropout(p=0.0, inplace=False)(conv2): LoRACompatibleConv(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(nonlinearity): SiLU()))))(mid_block): UNetMidBlock2D((attentions): ModuleList((0): Attention((group_norm): GroupNorm(32, 512, eps=1e-06, affine=True)(to_q): LoRACompatibleLinear(in_features=512, out_features=512, bias=True)(to_k): LoRACompatibleLinear(in_features=512, out_features=512, bias=True)(to_v): LoRACompatibleLinear(in_features=512, out_features=512, bias=True)(to_out): ModuleList((0): LoRACompatibleLinear(in_features=512, out_features=512, bias=True)(1): Dropout(p=0.0, inplace=False))))(resnets): ModuleList((0-1): 2 x ResnetBlock2D((norm1): GroupNorm(32, 512, eps=1e-06, affine=True)(conv1): LoRACompatibleConv(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(norm2): GroupNorm(32, 512, eps=1e-06, affine=True)(dropout): Dropout(p=0.0, inplace=False)(conv2): LoRACompatibleConv(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(nonlinearity): SiLU())))(conv_norm_out): GroupNorm(32, 512, eps=1e-06, affine=True)(conv_act): SiLU()(conv_out): Conv2d(512, 8, 
kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)))(decoder): Decoder((conv_in): Conv2d(4, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(up_blocks): ModuleList((0-1): 2 x UpDecoderBlock2D((resnets): ModuleList((0-2): 3 x ResnetBlock2D((norm1): GroupNorm(32, 512, eps=1e-06, affine=True)(conv1): LoRACompatibleConv(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(norm2): GroupNorm(32, 512, eps=1e-06, affine=True)(dropout): Dropout(p=0.0, inplace=False)(conv2): LoRACompatibleConv(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(nonlinearity): SiLU()))(upsamplers): ModuleList((0): Upsample2D((conv): LoRACompatibleConv(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)))))(2): UpDecoderBlock2D((resnets): ModuleList((0): ResnetBlock2D((norm1): GroupNorm(32, 512, eps=1e-06, affine=True)(conv1): LoRACompatibleConv(512, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(norm2): GroupNorm(32, 256, eps=1e-06, affine=True)(dropout): Dropout(p=0.0, inplace=False)(conv2): LoRACompatibleConv(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(nonlinearity): SiLU()(conv_shortcut): LoRACompatibleConv(512, 256, kernel_size=(1, 1), stride=(1, 1)))(1-2): 2 x ResnetBlock2D((norm1): GroupNorm(32, 256, eps=1e-06, affine=True)(conv1): LoRACompatibleConv(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(norm2): GroupNorm(32, 256, eps=1e-06, affine=True)(dropout): Dropout(p=0.0, inplace=False)(conv2): LoRACompatibleConv(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(nonlinearity): SiLU()))(upsamplers): ModuleList((0): Upsample2D((conv): LoRACompatibleConv(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)))))(3): UpDecoderBlock2D((resnets): ModuleList((0): ResnetBlock2D((norm1): GroupNorm(32, 256, eps=1e-06, affine=True)(conv1): LoRACompatibleConv(256, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(norm2): GroupNorm(32, 128, eps=1e-06, affine=True)(dropout): Dropout(p=0.0, 
inplace=False)(conv2): LoRACompatibleConv(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(nonlinearity): SiLU()(conv_shortcut): LoRACompatibleConv(256, 128, kernel_size=(1, 1), stride=(1, 1)))(1-2): 2 x ResnetBlock2D((norm1): GroupNorm(32, 128, eps=1e-06, affine=True)(conv1): LoRACompatibleConv(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(norm2): GroupNorm(32, 128, eps=1e-06, affine=True)(dropout): Dropout(p=0.0, inplace=False)(conv2): LoRACompatibleConv(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(nonlinearity): SiLU()))))(mid_block): UNetMidBlock2D((attentions): ModuleList((0): Attention((group_norm): GroupNorm(32, 512, eps=1e-06, affine=True)(to_q): LoRACompatibleLinear(in_features=512, out_features=512, bias=True)(to_k): LoRACompatibleLinear(in_features=512, out_features=512, bias=True)(to_v): LoRACompatibleLinear(in_features=512, out_features=512, bias=True)(to_out): ModuleList((0): LoRACompatibleLinear(in_features=512, out_features=512, bias=True)(1): Dropout(p=0.0, inplace=False))))(resnets): ModuleList((0-1): 2 x ResnetBlock2D((norm1): GroupNorm(32, 512, eps=1e-06, affine=True)(conv1): LoRACompatibleConv(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(norm2): GroupNorm(32, 512, eps=1e-06, affine=True)(dropout): Dropout(p=0.0, inplace=False)(conv2): LoRACompatibleConv(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(nonlinearity): SiLU())))(conv_norm_out): GroupNorm(32, 128, eps=1e-06, affine=True)(conv_act): SiLU()(conv_out): Conv2d(128, 3, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)))(quant_conv): Conv2d(8, 8, kernel_size=(1, 1), stride=(1, 1))(post_quant_conv): Conv2d(4, 4, kernel_size=(1, 1), stride=(1, 1))

)
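The encoder dump also pins down the latent resolution. The sketch below (hypothetical helper names) walks one spatial axis through the encoder: `conv_in` and every 3x3 conv with `padding=(1, 1)` keep the size, and only the three `Downsample2D` layers (down blocks 0-2; block 3 has none) shrink it. Those convs are printed with stride 2 and no padding; our assumption, based on our reading of diffusers' `Downsample2D`, is that the input is first zero-padded by one pixel on the right and bottom, which gives an exact halving. `conv_out` emits 8 channels, which the VAE splits into a mean and a log-variance for 4 latent channels, matching the 4 channels of `post_quant_conv`. On the decoder side, up blocks 0-2 each end in an `Upsample2D` (in diffusers, a x2 interpolation followed by the printed 3x3 conv with padding 1), so the factor of 8 is undone.

```python
def conv_out_size(size: int, kernel: int, stride: int, pad_total: int) -> int:
    """Output length of a convolution along one axis (pad_total = left + right padding)."""
    return (size + pad_total - kernel) // stride + 1


def vae_latent_shape(height: int, width: int, latent_channels: int = 4) -> tuple:
    """Trace an RGB image of size height x width through the printed VAE encoder."""
    sizes = []
    for size in (height, width):
        size = conv_out_size(size, kernel=3, stride=1, pad_total=2)  # conv_in, padding=(1, 1)
        for _ in range(3):  # Downsample2D in down_blocks 0, 1, 2
            # Assumption: one zero pixel is padded on the far side before the stride-2 conv.
            size = conv_out_size(size, kernel=3, stride=2, pad_total=1)
        # down_block 3, mid_block and conv_out use 3x3 convs with padding 1: no change.
        sizes.append(size)
    # conv_out gives 2 * latent_channels = 8 channels (mean and log-variance);
    # a sample from that diagonal Gaussian has latent_channels channels.
    return (latent_channels, sizes[0], sizes[1])


def vae_decoded_size(latent_h: int, latent_w: int, num_upsamplers: int = 3) -> tuple:
    """Upsample2D in up_blocks 0-2 doubles each axis; up_block 3 has no upsampler."""
    factor = 2 ** num_upsamplers
    return (latent_h * factor, latent_w * factor)


print(vae_latent_shape(512, 512))  # (4, 64, 64)
print(vae_decoded_size(64, 64))    # (512, 512)
```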

“unet block”

UNet2DConditionModel((conv_in): Conv2d(4, 320, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(time_proj): Timesteps()(time_embedding): TimestepEmbedding((linear_1): LoRACompatibleLinear(in_features=320, out_features=1280, bias=True)(act): SiLU()(linear_2): LoRACompatibleLinear(in_features=1280, out_features=1280, bias=True))(down_blocks): ModuleList((0): CrossAttnDownBlock2D((attentions): ModuleList((0-1): 2 x Transformer2DModel((norm): GroupNorm(32, 320, eps=1e-06, affine=True)(proj_in): LoRACompatibleConv(320, 320, kernel_size=(1, 1), stride=(1, 1))(transformer_blocks): ModuleList((0): BasicTransformerBlock((norm1): LayerNorm((320,), eps=1e-05, elementwise_affine=True)(attn1): Attention((to_q): LoRACompatibleLinear(in_features=320, out_features=320, bias=False)(to_k): LoRACompatibleLinear(in_features=320, out_features=320, bias=False)(to_v): LoRACompatibleLinear(in_features=320, out_features=320, bias=False)(to_out): ModuleList((0): LoRACompatibleLinear(in_features=320, out_features=320, bias=True)(1): Dropout(p=0.0, inplace=False)))(norm2): LayerNorm((320,), eps=1e-05, elementwise_affine=True)(attn2): Attention((to_q): LoRACompatibleLinear(in_features=320, out_features=320, bias=False)(to_k): LoRACompatibleLinear(in_features=768, out_features=320, bias=False)(to_v): LoRACompatibleLinear(in_features=768, out_features=320, bias=False)(to_out): ModuleList((0): LoRACompatibleLinear(in_features=320, out_features=320, bias=True)(1): Dropout(p=0.0, inplace=False)))(norm3): LayerNorm((320,), eps=1e-05, elementwise_affine=True)(ff): FeedForward((net): ModuleList((0): GEGLU((proj): LoRACompatibleLinear(in_features=320, out_features=2560, bias=True))(1): Dropout(p=0.0, inplace=False)(2): LoRACompatibleLinear(in_features=1280, out_features=320, bias=True)))))(proj_out): LoRACompatibleConv(320, 320, kernel_size=(1, 1), stride=(1, 1))))(resnets): ModuleList((0-1): 2 x ResnetBlock2D((norm1): GroupNorm(32, 320, eps=1e-05, affine=True)(conv1): LoRACompatibleConv(320, 320, 
kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(time_emb_proj): LoRACompatibleLinear(in_features=1280, out_features=320, bias=True)(norm2): GroupNorm(32, 320, eps=1e-05, affine=True)(dropout): Dropout(p=0.0, inplace=False)(conv2): LoRACompatibleConv(320, 320, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(nonlinearity): SiLU()))(downsamplers): ModuleList((0): Downsample2D((conv): LoRACompatibleConv(320, 320, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1)))))(1): CrossAttnDownBlock2D((attentions): ModuleList((0-1): 2 x Transformer2DModel((norm): GroupNorm(32, 640, eps=1e-06, affine=True)(proj_in): LoRACompatibleConv(640, 640, kernel_size=(1, 1), stride=(1, 1))(transformer_blocks): ModuleList((0): BasicTransformerBlock((norm1): LayerNorm((640,), eps=1e-05, elementwise_affine=True)(attn1): Attention((to_q): LoRACompatibleLinear(in_features=640, out_features=640, bias=False)(to_k): LoRACompatibleLinear(in_features=640, out_features=640, bias=False)(to_v): LoRACompatibleLinear(in_features=640, out_features=640, bias=False)(to_out): ModuleList((0): LoRACompatibleLinear(in_features=640, out_features=640, bias=True)(1): Dropout(p=0.0, inplace=False)))(norm2): LayerNorm((640,), eps=1e-05, elementwise_affine=True)(attn2): Attention((to_q): LoRACompatibleLinear(in_features=640, out_features=640, bias=False)(to_k): LoRACompatibleLinear(in_features=768, out_features=640, bias=False)(to_v): LoRACompatibleLinear(in_features=768, out_features=640, bias=False)(to_out): ModuleList((0): LoRACompatibleLinear(in_features=640, out_features=640, bias=True)(1): Dropout(p=0.0, inplace=False)))(norm3): LayerNorm((640,), eps=1e-05, elementwise_affine=True)(ff): FeedForward((net): ModuleList((0): GEGLU((proj): LoRACompatibleLinear(in_features=640, out_features=5120, bias=True))(1): Dropout(p=0.0, inplace=False)(2): LoRACompatibleLinear(in_features=2560, out_features=640, bias=True)))))(proj_out): LoRACompatibleConv(640, 640, kernel_size=(1, 1), stride=(1, 1))))(resnets): 
ModuleList((0): ResnetBlock2D((norm1): GroupNorm(32, 320, eps=1e-05, affine=True)(conv1): LoRACompatibleConv(320, 640, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(time_emb_proj): LoRACompatibleLinear(in_features=1280, out_features=640, bias=True)(norm2): GroupNorm(32, 640, eps=1e-05, affine=True)(dropout): Dropout(p=0.0, inplace=False)(conv2): LoRACompatibleConv(640, 640, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(nonlinearity): SiLU()(conv_shortcut): LoRACompatibleConv(320, 640, kernel_size=(1, 1), stride=(1, 1)))(1): ResnetBlock2D((norm1): GroupNorm(32, 640, eps=1e-05, affine=True)(conv1): LoRACompatibleConv(640, 640, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(time_emb_proj): LoRACompatibleLinear(in_features=1280, out_features=640, bias=True)(norm2): GroupNorm(32, 640, eps=1e-05, affine=True)(dropout): Dropout(p=0.0, inplace=False)(conv2): LoRACompatibleConv(640, 640, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(nonlinearity): SiLU()))(downsamplers): ModuleList((0): Downsample2D((conv): LoRACompatibleConv(640, 640, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1)))))(2): CrossAttnDownBlock2D((attentions): ModuleList((0-1): 2 x Transformer2DModel((norm): GroupNorm(32, 1280, eps=1e-06, affine=True)(proj_in): LoRACompatibleConv(1280, 1280, kernel_size=(1, 1), stride=(1, 1))(transformer_blocks): ModuleList((0): BasicTransformerBlock((norm1): LayerNorm((1280,), eps=1e-05, elementwise_affine=True)(attn1): Attention((to_q): LoRACompatibleLinear(in_features=1280, out_features=1280, bias=False)(to_k): LoRACompatibleLinear(in_features=1280, out_features=1280, bias=False)(to_v): LoRACompatibleLinear(in_features=1280, out_features=1280, bias=False)(to_out): ModuleList((0): LoRACompatibleLinear(in_features=1280, out_features=1280, bias=True)(1): Dropout(p=0.0, inplace=False)))(norm2): LayerNorm((1280,), eps=1e-05, elementwise_affine=True)(attn2): Attention((to_q): LoRACompatibleLinear(in_features=1280, out_features=1280, bias=False)(to_k): 
LoRACompatibleLinear(in_features=768, out_features=1280, bias=False)(to_v): LoRACompatibleLinear(in_features=768, out_features=1280, bias=False)(to_out): ModuleList((0): LoRACompatibleLinear(in_features=1280, out_features=1280, bias=True)(1): Dropout(p=0.0, inplace=False)))(norm3): LayerNorm((1280,), eps=1e-05, elementwise_affine=True)(ff): FeedForward((net): ModuleList((0): GEGLU((proj): LoRACompatibleLinear(in_features=1280, out_features=10240, bias=True))(1): Dropout(p=0.0, inplace=False)(2): LoRACompatibleLinear(in_features=5120, out_features=1280, bias=True)))))(proj_out): LoRACompatibleConv(1280, 1280, kernel_size=(1, 1), stride=(1, 1))))(resnets): ModuleList((0): ResnetBlock2D((norm1): GroupNorm(32, 640, eps=1e-05, affine=True)(conv1): LoRACompatibleConv(640, 1280, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(time_emb_proj): LoRACompatibleLinear(in_features=1280, out_features=1280, bias=True)(norm2): GroupNorm(32, 1280, eps=1e-05, affine=True)(dropout): Dropout(p=0.0, inplace=False)(conv2): LoRACompatibleConv(1280, 1280, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(nonlinearity): SiLU()(conv_shortcut): LoRACompatibleConv(640, 1280, kernel_size=(1, 1), stride=(1, 1)))(1): ResnetBlock2D((norm1): GroupNorm(32, 1280, eps=1e-05, affine=True)(conv1): LoRACompatibleConv(1280, 1280, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(time_emb_proj): LoRACompatibleLinear(in_features=1280, out_features=1280, bias=True)(norm2): GroupNorm(32, 1280, eps=1e-05, affine=True)(dropout): Dropout(p=0.0, inplace=False)(conv2): LoRACompatibleConv(1280, 1280, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(nonlinearity): SiLU()))(downsamplers): ModuleList((0): Downsample2D((conv): LoRACompatibleConv(1280, 1280, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1)))))(3): DownBlock2D((resnets): ModuleList((0-1): 2 x ResnetBlock2D((norm1): GroupNorm(32, 1280, eps=1e-05, affine=True)(conv1): LoRACompatibleConv(1280, 1280, kernel_size=(3, 3), stride=(1, 1), padding=(1, 
1))(time_emb_proj): LoRACompatibleLinear(in_features=1280, out_features=1280, bias=True)(norm2): GroupNorm(32, 1280, eps=1e-05, affine=True)(dropout): Dropout(p=0.0, inplace=False)(conv2): LoRACompatibleConv(1280, 1280, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(nonlinearity): SiLU()))))(up_blocks): ModuleList((0): UpBlock2D((resnets): ModuleList((0-2): 3 x ResnetBlock2D((norm1): GroupNorm(32, 2560, eps=1e-05, affine=True)(conv1): LoRACompatibleConv(2560, 1280, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(time_emb_proj): LoRACompatibleLinear(in_features=1280, out_features=1280, bias=True)(norm2): GroupNorm(32, 1280, eps=1e-05, affine=True)(dropout): Dropout(p=0.0, inplace=False)(conv2): LoRACompatibleConv(1280, 1280, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(nonlinearity): SiLU()(conv_shortcut): LoRACompatibleConv(2560, 1280, kernel_size=(1, 1), stride=(1, 1))))(upsamplers): ModuleList((0): Upsample2D((conv): LoRACompatibleConv(1280, 1280, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)))))(1): CrossAttnUpBlock2D((attentions): ModuleList((0-2): 3 x Transformer2DModel((norm): GroupNorm(32, 1280, eps=1e-06, affine=True)(proj_in): LoRACompatibleConv(1280, 1280, kernel_size=(1, 1), stride=(1, 1))(transformer_blocks): ModuleList((0): BasicTransformerBlock((norm1): LayerNorm((1280,), eps=1e-05, elementwise_affine=True)(attn1): Attention((to_q): LoRACompatibleLinear(in_features=1280, out_features=1280, bias=False)(to_k): LoRACompatibleLinear(in_features=1280, out_features=1280, bias=False)(to_v): LoRACompatibleLinear(in_features=1280, out_features=1280, bias=False)(to_out): ModuleList((0): LoRACompatibleLinear(in_features=1280, out_features=1280, bias=True)(1): Dropout(p=0.0, inplace=False)))(norm2): LayerNorm((1280,), eps=1e-05, elementwise_affine=True)(attn2): Attention((to_q): LoRACompatibleLinear(in_features=1280, out_features=1280, bias=False)(to_k): LoRACompatibleLinear(in_features=768, out_features=1280, bias=False)(to_v): 
LoRACompatibleLinear(in_features=768, out_features=1280, bias=False)(to_out): ModuleList((0): LoRACompatibleLinear(in_features=1280, out_features=1280, bias=True)(1): Dropout(p=0.0, inplace=False)))(norm3): LayerNorm((1280,), eps=1e-05, elementwise_affine=True)(ff): FeedForward((net): ModuleList((0): GEGLU((proj): LoRACompatibleLinear(in_features=1280, out_features=10240, bias=True))(1): Dropout(p=0.0, inplace=False)(2): LoRACompatibleLinear(in_features=5120, out_features=1280, bias=True)))))(proj_out): LoRACompatibleConv(1280, 1280, kernel_size=(1, 1), stride=(1, 1))))(resnets): ModuleList((0-1): 2 x ResnetBlock2D((norm1): GroupNorm(32, 2560, eps=1e-05, affine=True)(conv1): LoRACompatibleConv(2560, 1280, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(time_emb_proj): LoRACompatibleLinear(in_features=1280, out_features=1280, bias=True)(norm2): GroupNorm(32, 1280, eps=1e-05, affine=True)(dropout): Dropout(p=0.0, inplace=False)(conv2): LoRACompatibleConv(1280, 1280, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(nonlinearity): SiLU()(conv_shortcut): LoRACompatibleConv(2560, 1280, kernel_size=(1, 1), stride=(1, 1)))(2): ResnetBlock2D((norm1): GroupNorm(32, 1920, eps=1e-05, affine=True)(conv1): LoRACompatibleConv(1920, 1280, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(time_emb_proj): LoRACompatibleLinear(in_features=1280, out_features=1280, bias=True)(norm2): GroupNorm(32, 1280, eps=1e-05, affine=True)(dropout): Dropout(p=0.0, inplace=False)(conv2): LoRACompatibleConv(1280, 1280, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(nonlinearity): SiLU()(conv_shortcut): LoRACompatibleConv(1920, 1280, kernel_size=(1, 1), stride=(1, 1))))(upsamplers): ModuleList((0): Upsample2D((conv): LoRACompatibleConv(1280, 1280, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)))))(2): CrossAttnUpBlock2D((attentions): ModuleList((0-2): 3 x Transformer2DModel((norm): GroupNorm(32, 640, eps=1e-06, affine=True)(proj_in): LoRACompatibleConv(640, 640, kernel_size=(1, 1), 
stride=(1, 1))(transformer_blocks): ModuleList((0): BasicTransformerBlock((norm1): LayerNorm((640,), eps=1e-05, elementwise_affine=True)(attn1): Attention((to_q): LoRACompatibleLinear(in_features=640, out_features=640, bias=False)(to_k): LoRACompatibleLinear(in_features=640, out_features=640, bias=False)(to_v): LoRACompatibleLinear(in_features=640, out_features=640, bias=False)(to_out): ModuleList((0): LoRACompatibleLinear(in_features=640, out_features=640, bias=True)(1): Dropout(p=0.0, inplace=False)))(norm2): LayerNorm((640,), eps=1e-05, elementwise_affine=True)(attn2): Attention((to_q): LoRACompatibleLinear(in_features=640, out_features=640, bias=False)(to_k): LoRACompatibleLinear(in_features=768, out_features=640, bias=False)(to_v): LoRACompatibleLinear(in_features=768, out_features=640, bias=False)(to_out): ModuleList((0): LoRACompatibleLinear(in_features=640, out_features=640, bias=True)(1): Dropout(p=0.0, inplace=False)))(norm3): LayerNorm((640,), eps=1e-05, elementwise_affine=True)(ff): FeedForward((net): ModuleList((0): GEGLU((proj): LoRACompatibleLinear(in_features=640, out_features=5120, bias=True))(1): Dropout(p=0.0, inplace=False)(2): LoRACompatibleLinear(in_features=2560, out_features=640, bias=True)))))(proj_out): LoRACompatibleConv(640, 640, kernel_size=(1, 1), stride=(1, 1))))(resnets): ModuleList((0): ResnetBlock2D((norm1): GroupNorm(32, 1920, eps=1e-05, affine=True)(conv1): LoRACompatibleConv(1920, 640, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(time_emb_proj): LoRACompatibleLinear(in_features=1280, out_features=640, bias=True)(norm2): GroupNorm(32, 640, eps=1e-05, affine=True)(dropout): Dropout(p=0.0, inplace=False)(conv2): LoRACompatibleConv(640, 640, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(nonlinearity): SiLU()(conv_shortcut): LoRACompatibleConv(1920, 640, kernel_size=(1, 1), stride=(1, 1)))(1): ResnetBlock2D((norm1): GroupNorm(32, 1280, eps=1e-05, affine=True)(conv1): LoRACompatibleConv(1280, 640, kernel_size=(3, 3), 
stride=(1, 1), padding=(1, 1))(time_emb_proj): LoRACompatibleLinear(in_features=1280, out_features=640, bias=True)(norm2): GroupNorm(32, 640, eps=1e-05, affine=True)(dropout): Dropout(p=0.0, inplace=False)(conv2): LoRACompatibleConv(640, 640, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(nonlinearity): SiLU()(conv_shortcut): LoRACompatibleConv(1280, 640, kernel_size=(1, 1), stride=(1, 1)))(2): ResnetBlock2D((norm1): GroupNorm(32, 960, eps=1e-05, affine=True)(conv1): LoRACompatibleConv(960, 640, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(time_emb_proj): LoRACompatibleLinear(in_features=1280, out_features=640, bias=True)(norm2): GroupNorm(32, 640, eps=1e-05, affine=True)(dropout): Dropout(p=0.0, inplace=False)(conv2): LoRACompatibleConv(640, 640, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(nonlinearity): SiLU()(conv_shortcut): LoRACompatibleConv(960, 640, kernel_size=(1, 1), stride=(1, 1))))(upsamplers): ModuleList((0): Upsample2D((conv): LoRACompatibleConv(640, 640, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)))))(3): CrossAttnUpBlock2D((attentions): ModuleList((0-2): 3 x Transformer2DModel((norm): GroupNorm(32, 320, eps=1e-06, affine=True)(proj_in): LoRACompatibleConv(320, 320, kernel_size=(1, 1), stride=(1, 1))(transformer_blocks): ModuleList((0): BasicTransformerBlock((norm1): LayerNorm((320,), eps=1e-05, elementwise_affine=True)(attn1): Attention((to_q): LoRACompatibleLinear(in_features=320, out_features=320, bias=False)(to_k): LoRACompatibleLinear(in_features=320, out_features=320, bias=False)(to_v): LoRACompatibleLinear(in_features=320, out_features=320, bias=False)(to_out): ModuleList((0): LoRACompatibleLinear(in_features=320, out_features=320, bias=True)(1): Dropout(p=0.0, inplace=False)))(norm2): LayerNorm((320,), eps=1e-05, elementwise_affine=True)(attn2): Attention((to_q): LoRACompatibleLinear(in_features=320, out_features=320, bias=False)(to_k): LoRACompatibleLinear(in_features=768, out_features=320, bias=False)(to_v): 
LoRACompatibleLinear(in_features=768, out_features=320, bias=False)(to_out): ModuleList((0): LoRACompatibleLinear(in_features=320, out_features=320, bias=True)(1): Dropout(p=0.0, inplace=False)))(norm3): LayerNorm((320,), eps=1e-05, elementwise_affine=True)(ff): FeedForward((net): ModuleList((0): GEGLU((proj): LoRACompatibleLinear(in_features=320, out_features=2560, bias=True))(1): Dropout(p=0.0, inplace=False)(2): LoRACompatibleLinear(in_features=1280, out_features=320, bias=True)))))(proj_out): LoRACompatibleConv(320, 320, kernel_size=(1, 1), stride=(1, 1))))(resnets): ModuleList((0): ResnetBlock2D((norm1): GroupNorm(32, 960, eps=1e-05, affine=True)(conv1): LoRACompatibleConv(960, 320, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(time_emb_proj): LoRACompatibleLinear(in_features=1280, out_features=320, bias=True)(norm2): GroupNorm(32, 320, eps=1e-05, affine=True)(dropout): Dropout(p=0.0, inplace=False)(conv2): LoRACompatibleConv(320, 320, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(nonlinearity): SiLU()(conv_shortcut): LoRACompatibleConv(960, 320, kernel_size=(1, 1), stride=(1, 1)))(1-2): 2 x ResnetBlock2D((norm1): GroupNorm(32, 640, eps=1e-05, affine=True)(conv1): LoRACompatibleConv(640, 320, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(time_emb_proj): LoRACompatibleLinear(in_features=1280, out_features=320, bias=True)(norm2): GroupNorm(32, 320, eps=1e-05, affine=True)(dropout): Dropout(p=0.0, inplace=False)(conv2): LoRACompatibleConv(320, 320, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(nonlinearity): SiLU()(conv_shortcut): LoRACompatibleConv(640, 320, kernel_size=(1, 1), stride=(1, 1))))))(mid_block): UNetMidBlock2DCrossAttn((attentions): ModuleList((0): Transformer2DModel((norm): GroupNorm(32, 1280, eps=1e-06, affine=True)(proj_in): LoRACompatibleConv(1280, 1280, kernel_size=(1, 1), stride=(1, 1))(transformer_blocks): ModuleList((0): BasicTransformerBlock((norm1): LayerNorm((1280,), eps=1e-05, elementwise_affine=True)(attn1): 
Attention((to_q): LoRACompatibleLinear(in_features=1280, out_features=1280, bias=False)(to_k): LoRACompatibleLinear(in_features=1280, out_features=1280, bias=False)(to_v): LoRACompatibleLinear(in_features=1280, out_features=1280, bias=False)(to_out): ModuleList((0): LoRACompatibleLinear(in_features=1280, out_features=1280, bias=True)(1): Dropout(p=0.0, inplace=False)))(norm2): LayerNorm((1280,), eps=1e-05, elementwise_affine=True)(attn2): Attention((to_q): LoRACompatibleLinear(in_features=1280, out_features=1280, bias=False)(to_k): LoRACompatibleLinear(in_features=768, out_features=1280, bias=False)(to_v): LoRACompatibleLinear(in_features=768, out_features=1280, bias=False)(to_out): ModuleList((0): LoRACompatibleLinear(in_features=1280, out_features=1280, bias=True)(1): Dropout(p=0.0, inplace=False)))(norm3): LayerNorm((1280,), eps=1e-05, elementwise_affine=True)(ff): FeedForward((net): ModuleList((0): GEGLU((proj): LoRACompatibleLinear(in_features=1280, out_features=10240, bias=True))(1): Dropout(p=0.0, inplace=False)(2): LoRACompatibleLinear(in_features=5120, out_features=1280, bias=True)))))(proj_out): LoRACompatibleConv(1280, 1280, kernel_size=(1, 1), stride=(1, 1))))(resnets): ModuleList((0-1): 2 x ResnetBlock2D((norm1): GroupNorm(32, 1280, eps=1e-05, affine=True)(conv1): LoRACompatibleConv(1280, 1280, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(time_emb_proj): LoRACompatibleLinear(in_features=1280, out_features=1280, bias=True)(norm2): GroupNorm(32, 1280, eps=1e-05, affine=True)(dropout): Dropout(p=0.0, inplace=False)(conv2): LoRACompatibleConv(1280, 1280, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(nonlinearity): SiLU())))(conv_norm_out): GroupNorm(32, 320, eps=1e-05, affine=True)(conv_act): SiLU()(conv_out): Conv2d(320, 4, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

)
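Two details of the UNet dump are easy to miss. First, the odd input widths of the up-block ResNets (2560, 1920, 960, ...) come from the skip connections: every down-path output (after `conv_in`, after each down ResNet and after each downsampler) is stored and later concatenated channel-wise to the up-path features, most recent first. The sketch below (hypothetical function names) replays that bookkeeping with `block_out=(320, 640, 1280, 1280)` and two ResNets per down block (three per up block), as read from the dump, and reproduces the printed `norm1` widths. Second, `time_proj: Timesteps()` turns the integer timestep into a 320-dim sinusoidal vector, which `time_embedding` maps 320 -> 1280 and each ResNet's `time_emb_proj` projects to its own width. The `flip_sin_to_cos=True` and `freq_shift=0` defaults below are our assumption about SD v1.5's UNet config; they are not visible in the dump. Note also that every `attn2.to_k`/`to_v` has `in_features=768`: that is where the text encoder's 768-dim hidden states enter as cross-attention keys and values.

```python
import math


def up_resnet_in_channels(block_out=(320, 640, 1280, 1280), layers_per_block=2):
    """Input width of each up-block ResNet = current features + popped skip features."""
    skips = [block_out[0]]                      # output of conv_in
    for i, ch in enumerate(block_out):
        skips += [ch] * layers_per_block        # one skip per down ResNet
        if i < len(block_out) - 1:
            skips.append(ch)                    # output of the block's downsampler
    widths, prev = [], block_out[-1]            # mid_block output width
    for ch in reversed(block_out):
        row = []
        for _ in range(layers_per_block + 1):   # up blocks have one extra ResNet
            row.append(prev + skips.pop())
            prev = ch                           # each ResNet outputs `ch` channels
        widths.append(row)
    assert not skips, "every stored skip should be consumed exactly once"
    return widths


def timestep_embedding(t, dim=320, max_period=10000, flip_sin_to_cos=True, freq_shift=0):
    """Sinusoidal timestep embedding (sketch of what Timesteps() computes)."""
    half = dim // 2
    freqs = [math.exp(-math.log(max_period) * i / (half - freq_shift)) for i in range(half)]
    sin = [math.sin(t * f) for f in freqs]
    cos = [math.cos(t * f) for f in freqs]
    return cos + sin if flip_sin_to_cos else sin + cos


print(up_resnet_in_channels())
# [[2560, 2560, 2560], [2560, 2560, 1920], [1920, 1280, 960], [960, 640, 640]]
print(len(timestep_embedding(981)))  # 320
```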

“feature_extractor block”

CLIPImageProcessor {
  "crop_size": {
    "height": 224,
    "width": 224
  },
  "do_center_crop": true,
  "do_convert_rgb": true,
  "do_normalize": true,
  "do_rescale": true,
  "do_resize": true,
  "feature_extractor_type": "CLIPFeatureExtractor",
  "image_mean": [
    0.48145466,
    0.4578275,
    0.40821073
  ],
  "image_processor_type": "CLIPImageProcessor",
  "image_std": [
    0.26862954,
    0.26130258,
    0.27577711
  ],
  "resample": 3,
  "rescale_factor": 0.00392156862745098,
  "size": {
    "shortest_edge": 224
  },
  "use_square_size": false
}
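Read as a recipe, this config says: resize so the shorter side is 224 (`resample: 3` is PIL's bicubic filter), center-crop to 224x224, multiply pixel values by `rescale_factor` (which is 1/255), then normalize each channel with `image_mean` and `image_std`. Below is a pure-Python sketch of that arithmetic with hypothetical helper names; the rounding in the resize step and the exact crop offsets are our assumptions and may differ slightly from the transformers implementation.

```python
IMAGE_MEAN = (0.48145466, 0.4578275, 0.40821073)
IMAGE_STD = (0.26862954, 0.26130258, 0.27577711)
RESCALE_FACTOR = 0.00392156862745098  # equals 1 / 255


def resize_shortest_edge(height: int, width: int, shortest: int = 224) -> tuple:
    """Scale so that the shorter side equals `shortest`, keeping the aspect ratio."""
    scale = shortest / min(height, width)
    return round(height * scale), round(width * scale)


def center_crop_box(height: int, width: int, crop_h: int = 224, crop_w: int = 224) -> tuple:
    """(top, left, bottom, right) of a centred crop."""
    top, left = (height - crop_h) // 2, (width - crop_w) // 2
    return top, left, top + crop_h, left + crop_w


def normalize_pixel(rgb) -> tuple:
    """uint8 RGB triple -> the float values the safety checker's CLIP model sees."""
    return tuple((v * RESCALE_FACTOR - m) / s for v, m, s in zip(rgb, IMAGE_MEAN, IMAGE_STD))


h, w = resize_shortest_edge(512, 768)  # (224, 336)
print(center_crop_box(h, w))           # (0, 56, 224, 280)
print(normalize_pixel((255, 255, 255)))
```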

“tokenizer block”

CLIPTokenizer(name_or_path='/home/tiger/.cache/huggingface/hub/models--runwayml--stable-diffusion-v1-5/snapshots/1d0c4ebf6ff58a5caecab40fa1406526bca4b5b9/tokenizer', vocab_size=49408, model_max_length=77, is_fast=False, padding_side='right', truncation_side='right', special_tokens={'bos_token': '<|startoftext|>', 'eos_token': '<|endoftext|>', 'unk_token': '<|endoftext|>', 'pad_token': '<|endoftext|>'}, clean_up_tokenization_spaces=True), added_tokens_decoder={
    49406: AddedToken("<|startoftext|>", rstrip=False, lstrip=False, single_word=False, normalized=True, special=True),
    49407: AddedToken("<|endoftext|>", rstrip=False, lstrip=False, single_word=False, normalized=True, special=True),
}
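The special-token table explains what a prompt looks like after tokenization. Stable Diffusion pipelines call the tokenizer with `padding="max_length"`, `max_length=tokenizer.model_max_length` and `truncation=True`, so every prompt becomes exactly 77 ids: `<|startoftext|>` (49406), at most 75 word-piece ids, `<|endoftext|>` (49407), and then padding. Because `pad_token` is also `<|endoftext|>`, the padding id is 49407 too. The sketch below (hypothetical helper name) reproduces only that framing step; the word-piece ids in the usage line are made up, since real ids come from the BPE vocabulary.

```python
BOS_ID = 49406   # <|startoftext|>
EOS_ID = 49407   # <|endoftext|>
PAD_ID = EOS_ID  # pad_token is also <|endoftext|>
MODEL_MAX_LENGTH = 77


def frame_prompt_ids(word_ids, max_length: int = MODEL_MAX_LENGTH) -> list:
    """Add BOS/EOS, truncate on the right, then pad on the right to max_length."""
    body = list(word_ids)[: max_length - 2]  # truncation_side='right', room for BOS/EOS
    ids = [BOS_ID] + body + [EOS_ID]
    return ids + [PAD_ID] * (max_length - len(ids))  # padding_side='right'


ids = frame_prompt_ids([1001, 1002, 1003])  # hypothetical word-piece ids
print(len(ids), ids[:6])  # 77 [49406, 1001, 1002, 1003, 49407, 49407]
```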

“safety_checker block”

StableDiffusionSafetyChecker(
  (vision_model): CLIPVisionModel(
    (vision_model): CLIPVisionTransformer(
      (embeddings): CLIPVisionEmbeddings(
        (patch_embedding): Conv2d(3, 1024, kernel_size=(14, 14), stride=(14, 14), bias=False)
        (position_embedding): Embedding(257, 1024)
      )
      (pre_layrnorm): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)
      (encoder): CLIPEncoder(
        (layers): ModuleList(
          (0-23): 24 x CLIPEncoderLayer(
            (self_attn): CLIPAttention(
              (k_proj): Linear(in_features=1024, out_features=1024, bias=True)
              (v_proj): Linear(in_features=1024, out_features=1024, bias=True)
              (q_proj): Linear(in_features=1024, out_features=1024, bias=True)
              (out_proj): Linear(in_features=1024, out_features=1024, bias=True)
            )
            (layer_norm1): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)
            (mlp): CLIPMLP(
              (activation_fn): QuickGELUActivation()
              (fc1): Linear(in_features=1024, out_features=4096, bias=True)
              (fc2): Linear(in_features=4096, out_features=1024, bias=True)
            )
            (layer_norm2): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)
          )
        )
      )
      (post_layernorm): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)
    )
  )
  (visual_projection): Linear(in_features=1024, out_features=768, bias=False)
)
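The safety checker's CLIP vision tower (24 layers, width 1024, 14-pixel patches, i.e. the ViT-L/14 layout) is sized for the feature extractor's 224x224 crops. The `patch_embedding` conv with kernel and stride 14 cuts the image into non-overlapping 14x14 patches, and one extra class token is prepended, which is why `position_embedding` has 257 rows. A quick check of that arithmetic (hypothetical helper names):

```python
def vit_num_positions(image_size: int = 224, patch_size: int = 14) -> int:
    """Rows needed in position_embedding: one per patch plus one class token."""
    # Conv2d(3, 1024, kernel_size=14, stride=14, no padding) along one axis:
    patches_per_side = (image_size - patch_size) // patch_size + 1
    return patches_per_side ** 2 + 1


def vit_patch_embedding_params(patch_size: int = 14, in_ch: int = 3, width: int = 1024) -> int:
    """Weights of patch_embedding (bias=False in the dump)."""
    return in_ch * patch_size * patch_size * width


print(vit_num_positions())           # 257 = 16 * 16 + 1
print(vit_patch_embedding_params())  # 602112
```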

“scheduler block”

PNDMScheduler {
  "_class_name": "PNDMScheduler",
  "_diffusers_version": "0.22.3",
  "beta_end": 0.012,
  "beta_schedule": "scaled_linear",
  "beta_start": 0.00085,
  "clip_sample": false,
  "num_train_timesteps": 1000,
  "prediction_type": "epsilon",
  "set_alpha_to_one": false,
  "skip_prk_steps": true,
  "steps_offset": 1,
  "timestep_spacing": "leading",
  "trained_betas": null
}
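Two settings in this config are worth unpacking. `beta_schedule: "scaled_linear"` means the noise variances are spaced linearly in square-root space between `beta_start` and `beta_end` and then squared; their cumulative product `alphas_cumprod` says how much signal variance survives at each of the 1000 training timesteps. `timestep_spacing: "leading"` with `steps_offset: 1` means that, for N inference steps, the base grid is every `1000 // N`-th timestep shifted by one. The sketch below computes both in pure Python (helper names are hypothetical). Treat the timestep list as the generic "leading" grid only: to our understanding, `PNDMScheduler` with `skip_prk_steps: true` builds its PLMS step list from this grid but repeats one entry, so its final list can differ slightly.

```python
import math


def scaled_linear_betas(beta_start=0.00085, beta_end=0.012, num_train_timesteps=1000):
    """Linear in sqrt(beta), then squared."""
    lo, hi = math.sqrt(beta_start), math.sqrt(beta_end)
    step = (hi - lo) / (num_train_timesteps - 1)
    return [(lo + i * step) ** 2 for i in range(num_train_timesteps)]


def alphas_cumprod(betas):
    """Running product of (1 - beta_t): fraction of signal variance left at step t."""
    out, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        out.append(prod)
    return out


def leading_timesteps(num_inference_steps, num_train_timesteps=1000, steps_offset=1):
    """'leading' spacing: multiples of the step ratio plus the offset, noisiest first."""
    step_ratio = num_train_timesteps // num_inference_steps
    return [i * step_ratio + steps_offset for i in reversed(range(num_inference_steps))]


betas = scaled_linear_betas()
acp = alphas_cumprod(betas)
print(len(betas), acp[0], acp[-1])  # 1000, about 0.99915, roughly 0.0047
print(leading_timesteps(50)[:3], leading_timesteps(50)[-1])  # [981, 961, 941] 1
```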

相关文章:

diffusers库中stable Diffusion模块的解析

diffusers库中stable Diffusion模块的解析 diffusers中,stable Diffusion v1.5主要由以下几个部分组成 Out[3]: dict_keys([vae, text_encoder, tokenizer, unet, scheduler, safety_checker, feature_extractor])下面给出具体的结构说明。 “text_encoder block…...

智慧城市照明为城市节能降耗提供支持继电器开关钡铼S270

智慧城市照明:为城市节能降耗提供支持——以钡铼技术S270继电器开关为例 随着城市化进程的加速,城市照明系统的需求也日益增长。与此同时,能源消耗和环境污染问题日益严重,使得城市照明的节能减排成为重要议题。智慧城市照明系统…...

固高GTS800控制卡开发数控系统宏程序心得

在对固高GTS800控制卡做数控系统开发时,经过多年的总结与积累,总算是实现了一个数控系统的基本功能。 基本实现宏程序的译码与执行同时执行,虽然不是实时执行,但在充分利用插补缓存区的基础上,实现了相对的实时性。 …...

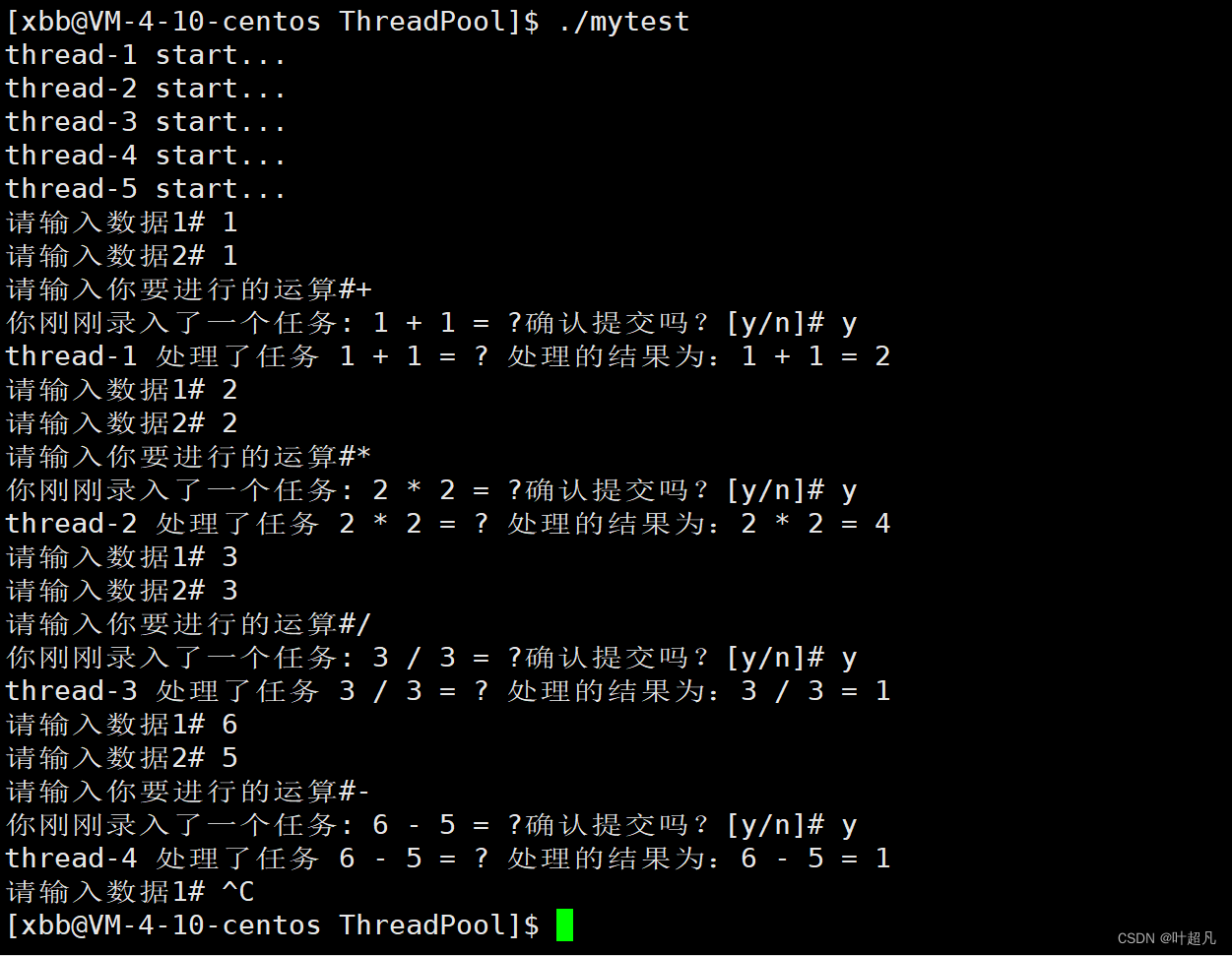

linux入门---线程池的模拟实现

目录标题 什么是线程池线程的封装准备工作构造函数和析构函数start函数join函数threadname函数完整代码 线程池的实现准备工作构造函数和析构函数push函数pop函数run函数完整的代码 测试代码 什么是线程池 在实现线程池之前我们先了解一下什么是线程池,所谓的池大家…...

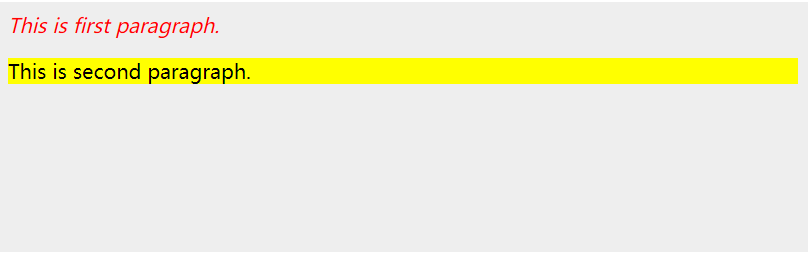

jQuery HTML/CSS 参考文档

jQuery HTML/CSS 参考文档 文章目录 应用样式 示例属性方法示例 jQuery HTML/CSS 参考文档 应用样式 addClass( classes ) 方法可用于将定义好的样式表应用于所有匹配的元素上。可以通过空格分隔指定多个类。 示例 以下是一个简单示例,设置了para标签 <p&g…...

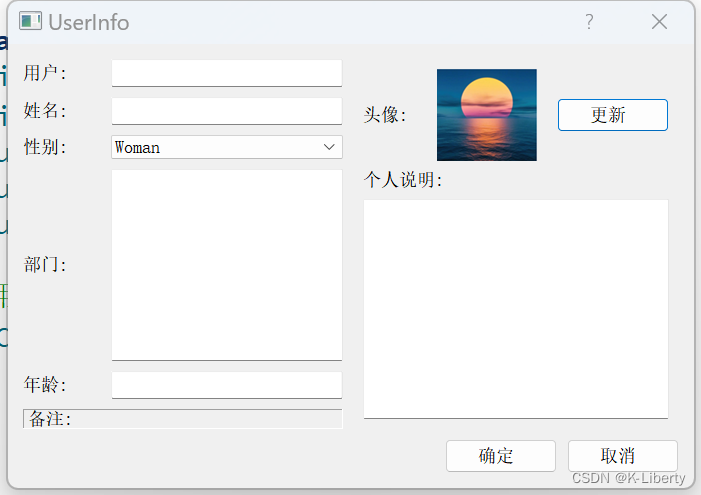

QT 布局管理综合实例

通过一个实例基本布局管理,演示QHBoxLayout类、QVBoxLayout类及QGridLayout类效果 本实例共用到四个布局管理器,分别是 LeftLayout、RightLayout、BottomLayout和MainLayout。 在源文件“dialog.cpp”具体代码如下: 运行效果: Se…...

使用 pubsub-js 进行消息发布订阅

npm 包地址 github 包地址 pubsub-js 是一个轻量级的 JavaScript 基于主题的消息订阅发布库 ,压缩后小于1b。它具有使用简单、性能高效、支持多平台等优点,可以很好地满足各种需求。 功能特点: 无依赖同步解耦ES3 兼容。pubsub-js 能够在…...

TA Shader基础

渲染管线 概念:GPU绘制物体的时候,标准的,流水线一样的操作 游戏引擎如何绘制物体:CPU提供绘制数据(顶点数据,纹理贴图等)给GPU,配置渲染管线(装载Shader代码到GPU&…...

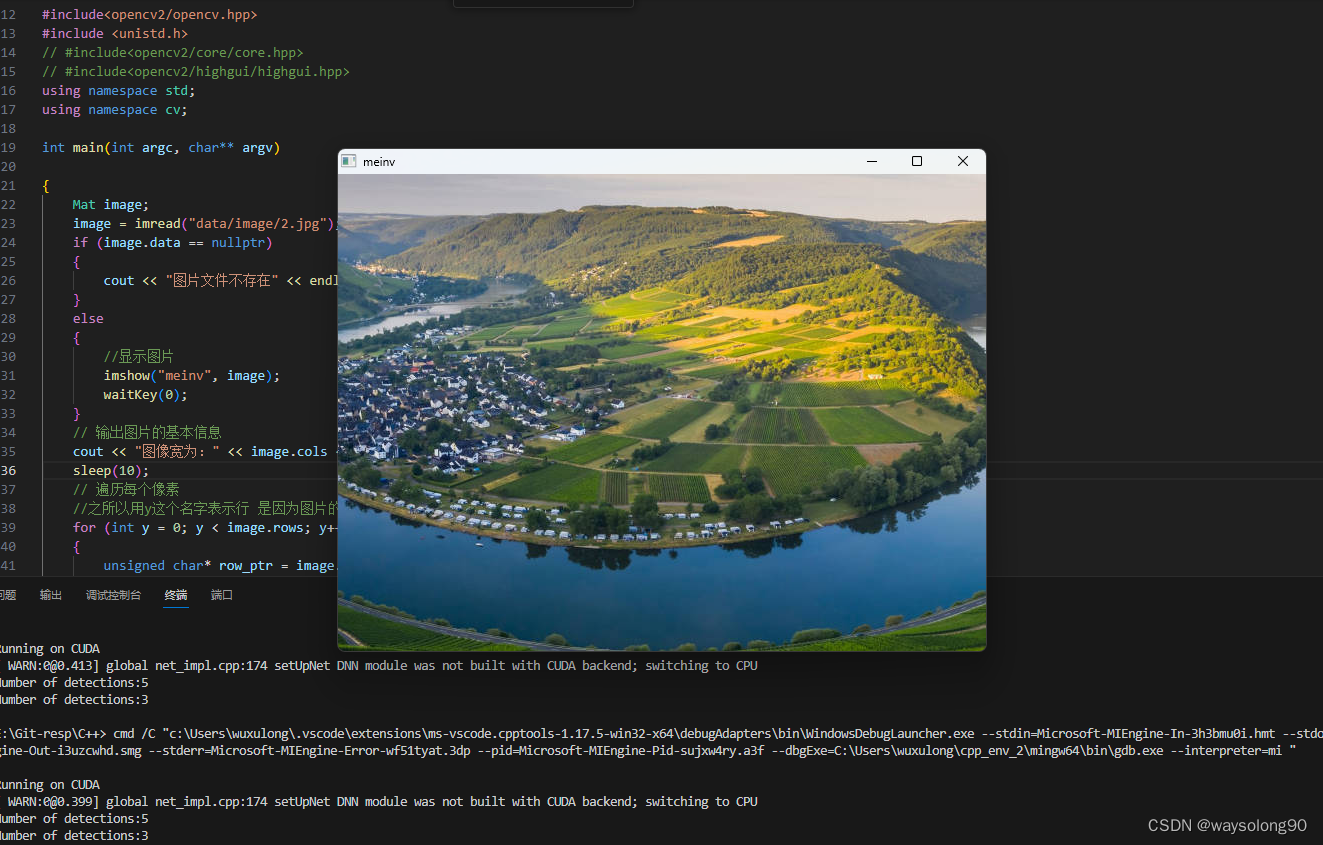

VScode + opencv(cmake编译) + c++ + win配置教程

1、下载opencv 2、下载CMake 3、下载MinGW 放到一个文件夹中 并解压另外两个文件 4、cmake编译opencv 新建文件夹mingw-build 双击cmake-gui 程序会开始自动生成Makefiles等文件配置,需要耐心等待一段时间。 简单总结下:finish->configuring …...

Vue中的常用指令v-html / v-show / v-if / v-else / v-on / v-bind / v-for / v-model

前言 持续学习总结输出中,Vue中的常用指令v-html / v-show / v-if / v-else / v-on / v-bind / v-for / v-model 概念:指令(Directives)是Vue提供的带有 v- 前缀 的特殊标签属性。可以提高操作 DOM 的效率。 vue 中的指令按照不…...

ChatGPT 提问技巧

ChatGPT是由 OpenAI 训练的⼀款⼤型语⾔模型,能够和你进⾏任何领域的对话。 它能够⽣成类似于⼈类写作的⽂本。您只需要给出提示或提出问题,它就可以⽣成你想要的东⻄。 在此⻚⾯中,您将找到可与 ChatGPT ⼀起使⽤的各种提示。 只需按照下…...

)

2023-11-09 LeetCode每日一题(逃离火灾)

2023-11-09每日一题 一、题目编号 2258. 逃离火灾二、题目链接 点击跳转到题目位置 三、题目描述 给你一个下标从 0 开始大小为 m x n 的二维整数数组 grid ,它表示一个网格图。每个格子为下面 3 个值之一: 0 表示草地。1 表示着火的格子。2 表示一…...

阿里云-maven私服idea访问私服与组件上传

1.进入aliyun制品仓库 2. 点击 生产库-release进入 根据以上步骤修改本地 m2/setting.xml文件 3.pom.xml文件 点击设置获取url 4. idea发布组件...

Ubuntu上的TFTP服务软件

2023年11月11日,周六下午 目录 tftpd-hpa atftpd 配置和启动 tftpd-hpa 这是一个TFTP服务器软件包,提供了一个简单的TFTP服务器。 你可以使用以下命令安装它: sudo apt-get install tftpd-hpaatftpd 这是另一个常用的TFTP服务器软件包…...

jedis、lettuce与redis交互分析

概念梳理: redis是缓存服务器,jedis、lettuce都是Java语言下的redis客户端,用于与redis服务器进行交互。springboot项目中一般使用的是spring data redis,spring data redis依赖与jedis或lettuce,可以进行配置&#x…...

C++算法:矩阵中的最长递增路径

涉及知识点 拓扑排序 题目 给定一个 m x n 整数矩阵 matrix ,找出其中 最长递增路径 的长度。 对于每个单元格,你可以往上,下,左,右四个方向移动。 你 不能 在 对角线 方向上移动或移动到 边界外(即不允…...

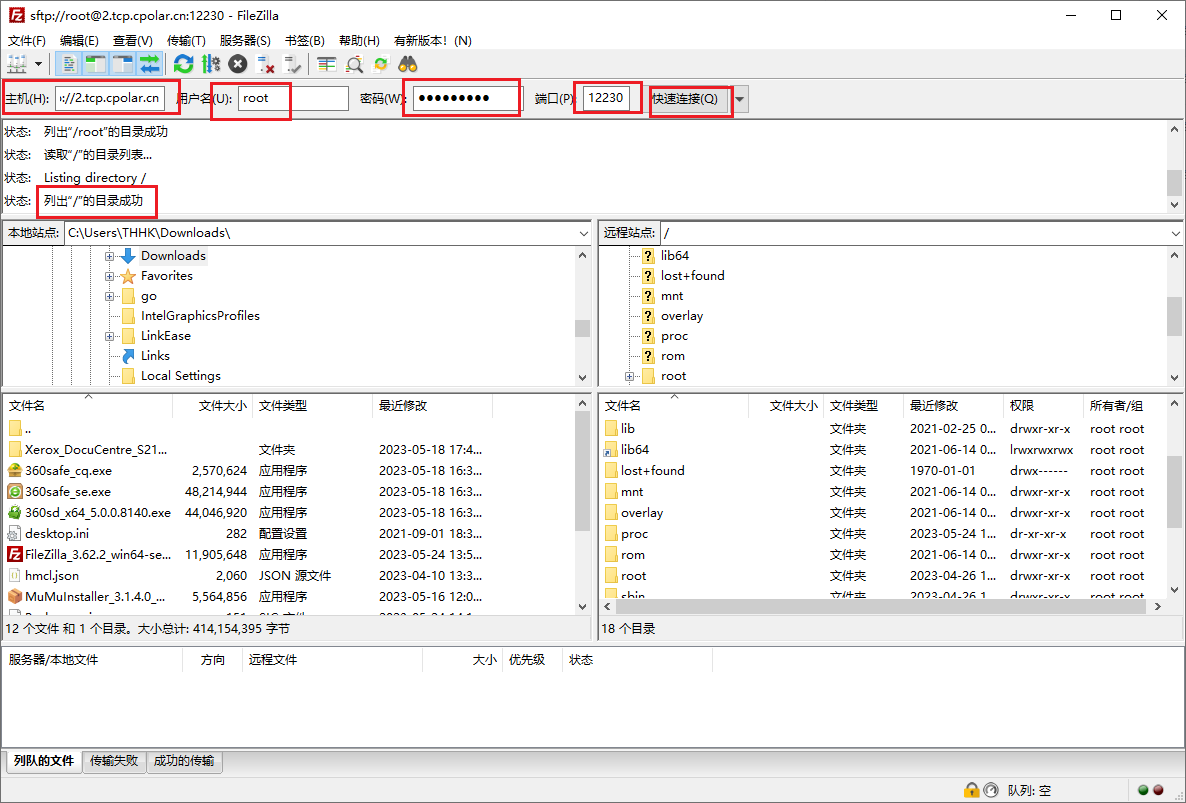

OpenWRT配置SFTP远程文件传输,让数据分享更安全

文章目录 前言 1. openssh-sftp-server 安装2. 安装cpolar工具3.配置SFTP远程访问4.固定远程连接地址 前言 本次教程我们将在OpenWRT上安装SFTP服务,并结合cpolar内网穿透,创建安全隧道映射22端口,实现在公网环境下远程OpenWRT SFTP…...

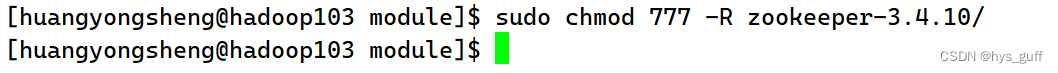

已解决:rm: 无法删除“/opt/module/zookeeper-3.4.10/zkData/zookeeper_server.pid“: 权限不够

解决: ZooKeeper JMX enabled by default Using config: /opt/module/zookeeper-3.4.10/bin/../conf/zoo.cfg Stopping zookeeper ... /opt/module/zookeeper-3.4.10/bin/zkServer.sh: 第 182 行:kill: (4149) - 不允许的操作 rm: 无法删除"/opt/module/zooke…...

【DataStream API - Source算子】)

Flink(四)【DataStream API - Source算子】

前言 今天开始学习 DataStream 的 API ,这一块是 Flink 的核心部分,我们不去学习 DataSet 的 API 了,因为从 Flink 12 开始已经实现了流批一体, DataSet 已然是被抛弃了。忘记提了,从这里开始,我开始换用 F…...

GIS入门,xyz地图瓦片是什么,xyz数据格式详解,如何发布离线XYZ瓦片到nginx或者tomcat中

XYZ介绍 XYZ瓦片是一种在线地图数据格式,由goole公司开发。 与其他瓦片地图类似,XYZ瓦片将地图数据分解为一系列小的图像块,以提高地图显示效率和性能。 XYZ瓦片提供了一种开放的地图平台,使开发者可以轻松地将地图集成到自己的应用程序中。同时,它还提供了高分辨率图像和…...

结构体的进阶应用)

基于算法竞赛的c++编程(28)结构体的进阶应用

结构体的嵌套与复杂数据组织 在C中,结构体可以嵌套使用,形成更复杂的数据结构。例如,可以通过嵌套结构体描述多层级数据关系: struct Address {string city;string street;int zipCode; };struct Employee {string name;int id;…...

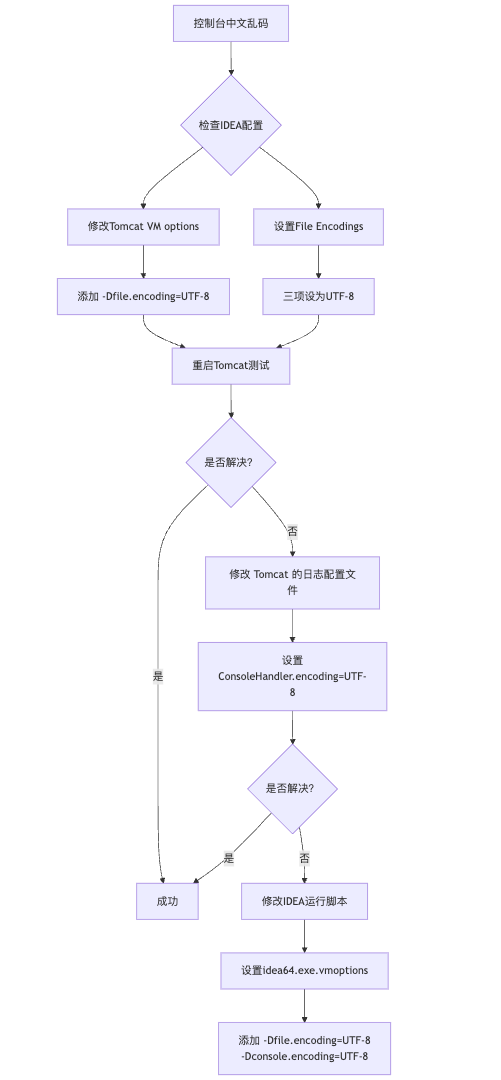

IDEA运行Tomcat出现乱码问题解决汇总

最近正值期末周,有很多同学在写期末Java web作业时,运行tomcat出现乱码问题,经过多次解决与研究,我做了如下整理: 原因: IDEA本身编码与tomcat的编码与Windows编码不同导致,Windows 系统控制台…...

从WWDC看苹果产品发展的规律

WWDC 是苹果公司一年一度面向全球开发者的盛会,其主题演讲展现了苹果在产品设计、技术路线、用户体验和生态系统构建上的核心理念与演进脉络。我们借助 ChatGPT Deep Research 工具,对过去十年 WWDC 主题演讲内容进行了系统化分析,形成了这份…...

智慧工地云平台源码,基于微服务架构+Java+Spring Cloud +UniApp +MySql

智慧工地管理云平台系统,智慧工地全套源码,java版智慧工地源码,支持PC端、大屏端、移动端。 智慧工地聚焦建筑行业的市场需求,提供“平台网络终端”的整体解决方案,提供劳务管理、视频管理、智能监测、绿色施工、安全管…...

Cilium动手实验室: 精通之旅---20.Isovalent Enterprise for Cilium: Zero Trust Visibility

Cilium动手实验室: 精通之旅---20.Isovalent Enterprise for Cilium: Zero Trust Visibility 1. 实验室环境1.1 实验室环境1.2 小测试 2. The Endor System2.1 部署应用2.2 检查现有策略 3. Cilium 策略实体3.1 创建 allow-all 网络策略3.2 在 Hubble CLI 中验证网络策略源3.3 …...

Auto-Coder使用GPT-4o完成:在用TabPFN这个模型构建一个预测未来3天涨跌的分类任务

通过akshare库,获取股票数据,并生成TabPFN这个模型 可以识别、处理的格式,写一个完整的预处理示例,并构建一个预测未来 3 天股价涨跌的分类任务 用TabPFN这个模型构建一个预测未来 3 天股价涨跌的分类任务,进行预测并输…...

渲染学进阶内容——模型

最近在写模组的时候发现渲染器里面离不开模型的定义,在渲染的第二篇文章中简单的讲解了一下关于模型部分的内容,其实不管是方块还是方块实体,都离不开模型的内容 🧱 一、CubeListBuilder 功能解析 CubeListBuilder 是 Minecraft Java 版模型系统的核心构建器,用于动态创…...

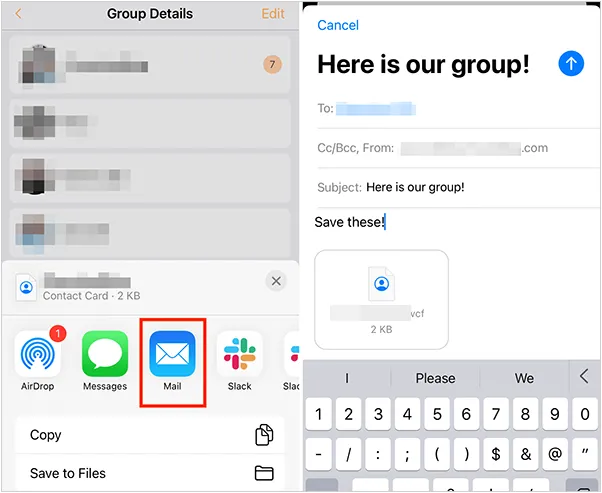

如何将联系人从 iPhone 转移到 Android

从 iPhone 换到 Android 手机时,你可能需要保留重要的数据,例如通讯录。好在,将通讯录从 iPhone 转移到 Android 手机非常简单,你可以从本文中学习 6 种可靠的方法,确保随时保持连接,不错过任何信息。 第 1…...

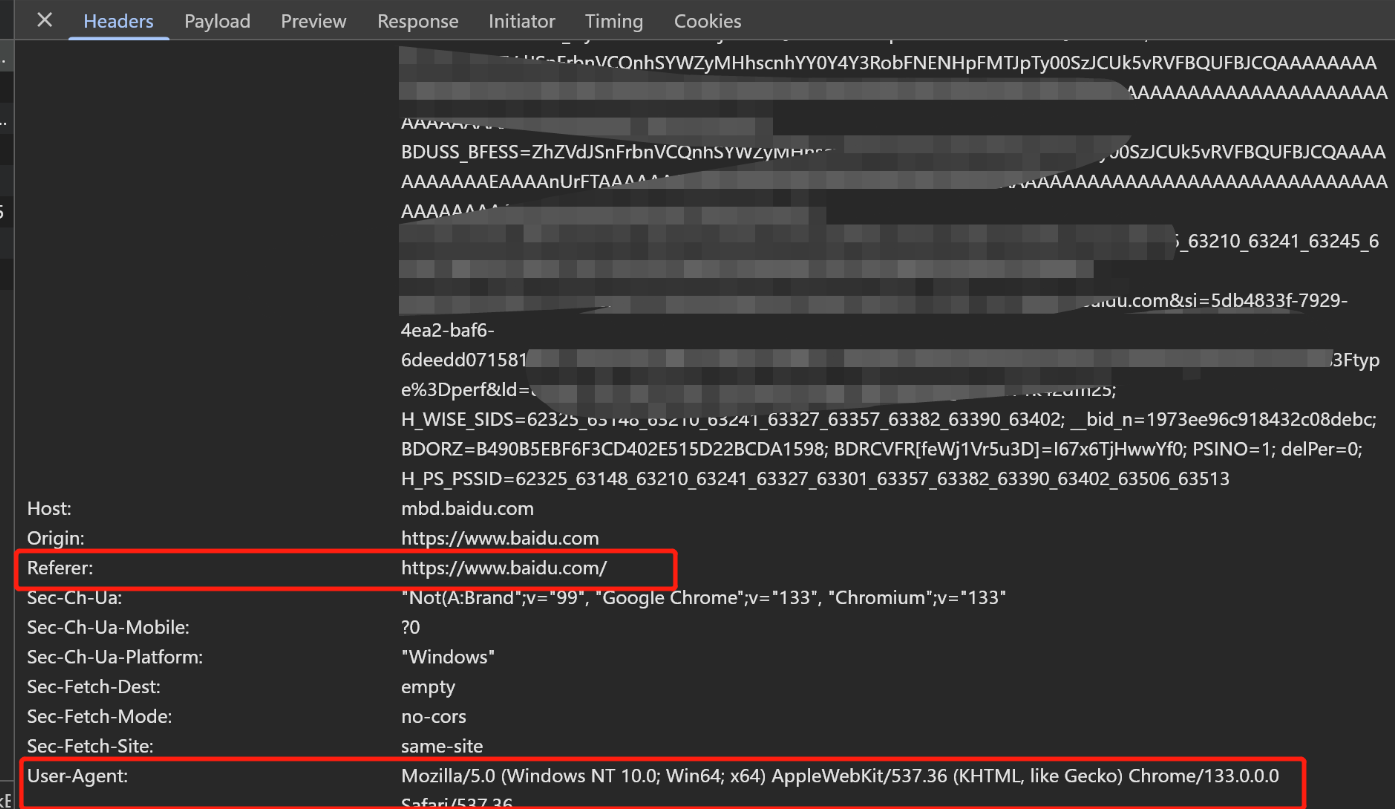

Python爬虫(一):爬虫伪装

一、网站防爬机制概述 在当今互联网环境中,具有一定规模或盈利性质的网站几乎都实施了各种防爬措施。这些措施主要分为两大类: 身份验证机制:直接将未经授权的爬虫阻挡在外反爬技术体系:通过各种技术手段增加爬虫获取数据的难度…...

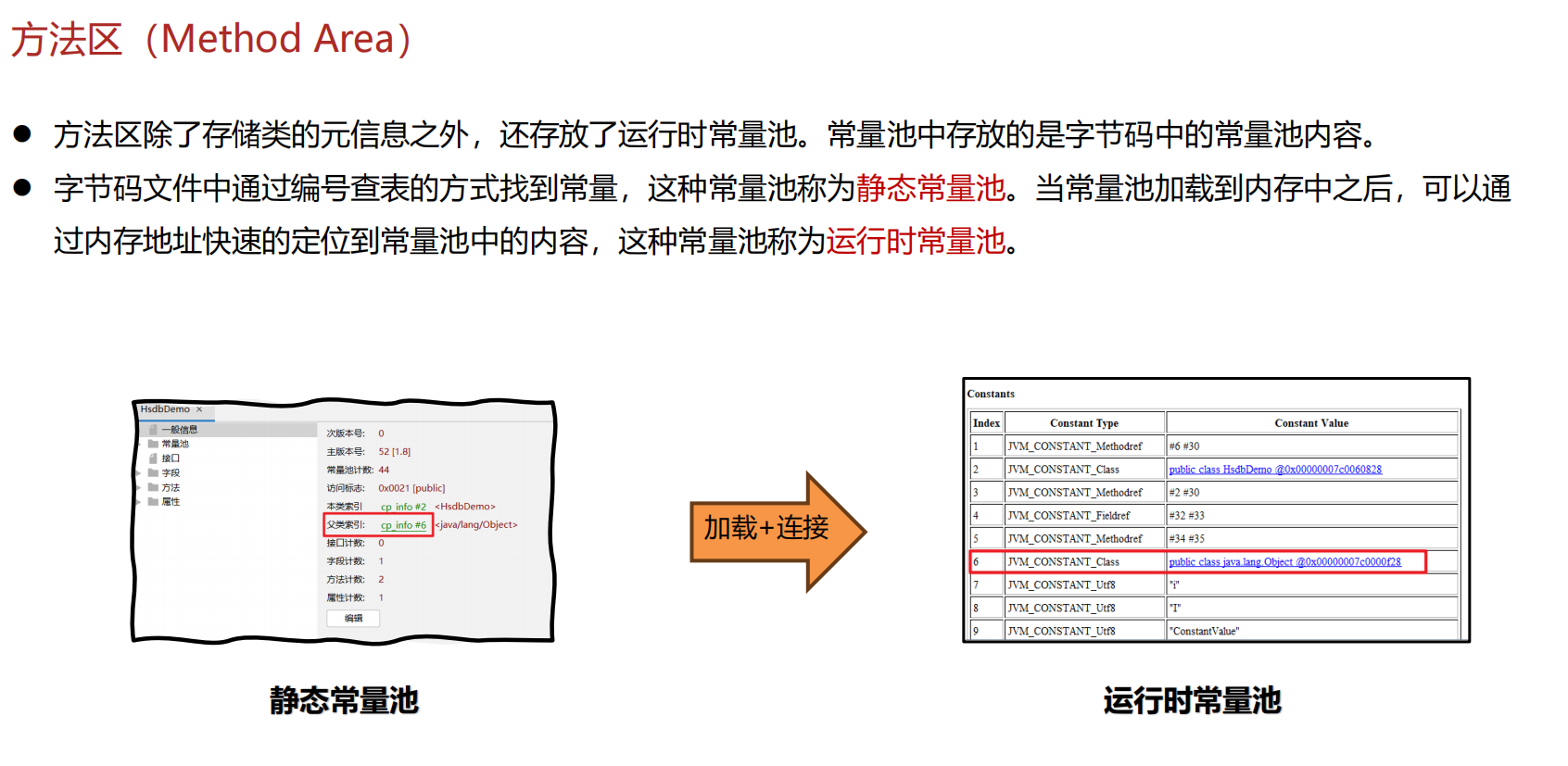

JVM 内存结构 详解

内存结构 运行时数据区: Java虚拟机在运行Java程序过程中管理的内存区域。 程序计数器: 线程私有,程序控制流的指示器,分支、循环、跳转、异常处理、线程恢复等基础功能都依赖这个计数器完成。 每个线程都有一个程序计数…...