Flink会话集群docker-compose一键安装

1、安装docker

参考,本人这篇博客:https://blog.csdn.net/taotao_guiwang/article/details/135508643?spm=1001.2014.3001.5501

2、flink-conf.yaml

flink-conf.yaml放在/home/flink/conf/job、/home/flink/conf/task下面,flink-conf.yaml内容如下:

################################################################################

# Licensed to the Apache Software Foundation (ASF) under one

# or more contributor license agreements. See the NOTICE file

# distributed with this work for additional information

# regarding copyright ownership. The ASF licenses this file

# to you under the Apache License, Version 2.0 (the

# "License"); you may not use this file except in compliance

# with the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

#################################################################################==============================================================================

# Common

#==============================================================================# The external address of the host on which the JobManager runs and can be

# reached by the TaskManagers and any clients which want to connect. This setting

# is only used in Standalone mode and may be overwritten on the JobManager side

# by specifying the --host <hostname> parameter of the bin/jobmanager.sh executable.

# In high availability mode, if you use the bin/start-cluster.sh script and setup

# the conf/masters file, this will be taken care of automatically. Yarn/Mesos

# automatically configure the host name based on the hostname of the node where the

# JobManager runs.jobmanager.rpc.address: jobmanager# The RPC port where the JobManager is reachable.jobmanager.rpc.port: 6123# The total process memory size for the JobManager.

#

# Note this accounts for all memory usage within the JobManager process, including JVM metaspace and other overhead.jobmanager.memory.process.size: 4096m# The total process memory size for the TaskManager.

#

# Note this accounts for all memory usage within the TaskManager process, including JVM metaspace and other overhead.taskmanager.memory.process.size: 16384m# To exclude JVM metaspace and overhead, please, use total Flink memory size instead of 'taskmanager.memory.process.size'.

# It is not recommended to set both 'taskmanager.memory.process.size' and Flink memory.

#

# taskmanager.memory.flink.size: 1280m# The number of task slots that each TaskManager offers. Each slot runs one parallel pipeline.taskmanager.numberOfTaskSlots: 10# The parallelism used for programs that did not specify and other parallelism.parallelism.default: 1# The default file system scheme and authority.

#

# By default file paths without scheme are interpreted relative to the local

# root file system 'file:///'. Use this to override the default and interpret

# relative paths relative to a different file system,

# for example 'hdfs://mynamenode:12345'

#

# fs.default-scheme#==============================================================================

# High Availability

#==============================================================================# The high-availability mode. Possible options are 'NONE' or 'zookeeper'.

#

# high-availability: zookeeper# The path where metadata for master recovery is persisted. While ZooKeeper stores

# the small ground truth for checkpoint and leader election, this location stores

# the larger objects, like persisted dataflow graphs.

#

# Must be a durable file system that is accessible from all nodes

# (like HDFS, S3, Ceph, nfs, ...)

#

# high-availability.storageDir: hdfs:///flink/ha/# The list of ZooKeeper quorum peers that coordinate the high-availability

# setup. This must be a list of the form:

# "host1:clientPort,host2:clientPort,..." (default clientPort: 2181)

#

# high-availability.zookeeper.quorum: localhost:2181# ACL options are based on https://zookeeper.apache.org/doc/r3.1.2/zookeeperProgrammers.html#sc_BuiltinACLSchemes

# It can be either "creator" (ZOO_CREATE_ALL_ACL) or "open" (ZOO_OPEN_ACL_UNSAFE)

# The default value is "open" and it can be changed to "creator" if ZK security is enabled

#

# high-availability.zookeeper.client.acl: open#==============================================================================

# Fault tolerance and checkpointing

#==============================================================================# The backend that will be used to store operator state checkpoints if

# checkpointing is enabled.

#

# Supported backends are 'jobmanager', 'filesystem', 'rocksdb', or the

# <class-name-of-factory>.

#

# state.backend: filesystem# Directory for checkpoints filesystem, when using any of the default bundled

# state backends.

#

# state.checkpoints.dir: hdfs://namenode-host:port/flink-checkpoints# Default target directory for savepoints, optional.

#

#state.savepoints.dir: file:/tmp/savepoint# Flag to enable/disable incremental checkpoints for backends that

# support incremental checkpoints (like the RocksDB state backend).

#

# state.backend.incremental: false# The failover strategy, i.e., how the job computation recovers from task failures.

# Only restart tasks that may have been affected by the task failure, which typically includes

# downstream tasks and potentially upstream tasks if their produced data is no longer available for consumption.jobmanager.execution.failover-strategy: region#==============================================================================

# Rest & web frontend

#==============================================================================# The port to which the REST client connects to. If rest.bind-port has

# not been specified, then the server will bind to this port as well.

#

#rest.port: 8081# The address to which the REST client will connect to

#

#rest.address: 0.0.0.0# Port range for the REST and web server to bind to.

#

#rest.bind-port: 8080-8090# The address that the REST & web server binds to

#

#rest.bind-address: 0.0.0.0# Flag to specify whether job submission is enabled from the web-based

# runtime monitor. Uncomment to disable.#web.submit.enable: false#==============================================================================

# Advanced

#==============================================================================# Override the directories for temporary files. If not specified, the

# system-specific Java temporary directory (java.io.tmpdir property) is taken.

#

# For framework setups on Yarn or Mesos, Flink will automatically pick up the

# containers' temp directories without any need for configuration.

#

# Add a delimited list for multiple directories, using the system directory

# delimiter (colon ':' on unix) or a comma, e.g.:

# /data1/tmp:/data2/tmp:/data3/tmp

#

# Note: Each directory entry is read from and written to by a different I/O

# thread. You can include the same directory multiple times in order to create

# multiple I/O threads against that directory. This is for example relevant for

# high-throughput RAIDs.

#

# io.tmp.dirs: /tmp# The classloading resolve order. Possible values are 'child-first' (Flink's default)

# and 'parent-first' (Java's default).

#

# Child first classloading allows users to use different dependency/library

# versions in their application than those in the classpath. Switching back

# to 'parent-first' may help with debugging dependency issues.

#

# classloader.resolve-order: child-first# The amount of memory going to the network stack. These numbers usually need

# no tuning. Adjusting them may be necessary in case of an "Insufficient number

# of network buffers" error. The default min is 64MB, the default max is 1GB.

#

# taskmanager.memory.network.fraction: 0.1

# taskmanager.memory.network.min: 64mb

# taskmanager.memory.network.max: 1gb#==============================================================================

# Flink Cluster Security Configuration

#==============================================================================# Kerberos authentication for various components - Hadoop, ZooKeeper, and connectors -

# may be enabled in four steps:

# 1. configure the local krb5.conf file

# 2. provide Kerberos credentials (either a keytab or a ticket cache w/ kinit)

# 3. make the credentials available to various JAAS login contexts

# 4. configure the connector to use JAAS/SASL# The below configure how Kerberos credentials are provided. A keytab will be used instead of

# a ticket cache if the keytab path and principal are set.# security.kerberos.login.use-ticket-cache: true

# security.kerberos.login.keytab: /path/to/kerberos/keytab

# security.kerberos.login.principal: flink-user# The configuration below defines which JAAS login contexts# security.kerberos.login.contexts: Client,KafkaClient#==============================================================================

# ZK Security Configuration

#==============================================================================# Below configurations are applicable if ZK ensemble is configured for security# Override below configuration to provide custom ZK service name if configured

# zookeeper.sasl.service-name: zookeeper# The configuration below must match one of the values set in "security.kerberos.login.contexts"

# zookeeper.sasl.login-context-name: Client#==============================================================================

# HistoryServer

#==============================================================================# The HistoryServer is started and stopped via bin/historyserver.sh (start|stop)# Directory to upload completed jobs to. Add this directory to the list of

# monitored directories of the HistoryServer as well (see below).

#jobmanager.archive.fs.dir: hdfs:///completed-jobs/# The address under which the web-based HistoryServer listens.

#historyserver.web.address: 0.0.0.0# The port under which the web-based HistoryServer listens.

#historyserver.web.port: 8082# Comma separated list of directories to monitor for completed jobs.

#historyserver.archive.fs.dir: hdfs:///completed-jobs/# Interval in milliseconds for refreshing the monitored directories.

#historyserver.archive.fs.refresh-interval: 10000blob.server.port: 6124

query.server.port: 61253、docker-compose.yaml

使用如下命令部署集群:

docker-compose -f docker-compose.yml up -d

docker-compose.yaml内容如下:

version: "2.1"

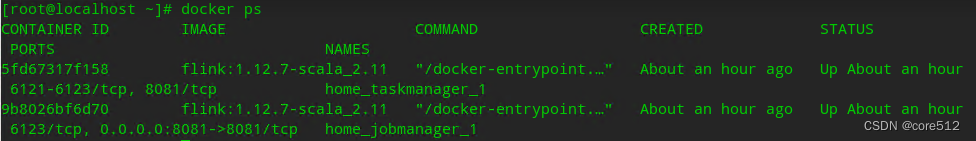

services:jobmanager:image: flink:1.12.7-scala_2.11expose:- "6123"ports:- "8081:8081"command: jobmanagerenvironment:- JOB_MANAGER_RPC_ADDRESS=jobmanagervolumes:- /home/flink/conf/job/flink-conf.yaml:/opt/flink/conf/flink-conf.yamlrestart: alwaystaskmanager:image: flink:1.12.7-scala_2.11expose:- "6121"- "6122"depends_on:- jobmanagercommand: taskmanagerlinks:- "jobmanager:jobmanager"environment:- JOB_MANAGER_RPC_ADDRESS=jobmanagervolumes:- /home/flink/conf/task/flink-conf.yaml:/opt/flink/conf/flink-conf.yamlrestart: always4、启动后

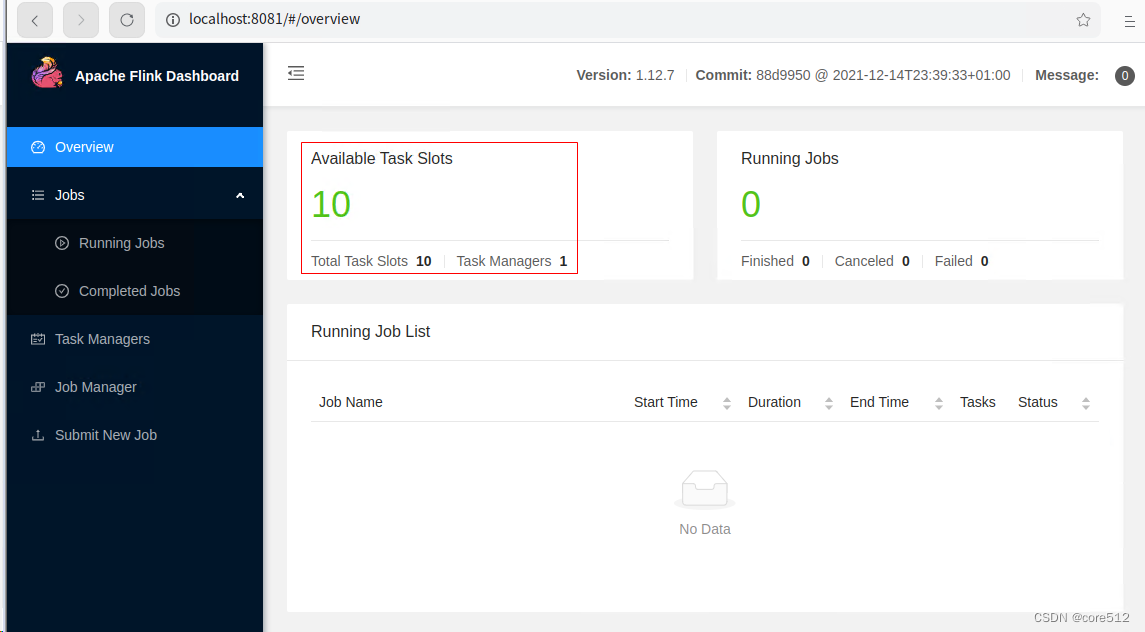

5、页面访问

访问地址:http://IP:8081

相关文章:

Flink会话集群docker-compose一键安装

1、安装docker 参考,本人这篇博客:https://blog.csdn.net/taotao_guiwang/article/details/135508643?spm1001.2014.3001.5501 2、flink-conf.yaml flink-conf.yaml放在/home/flink/conf/job、/home/flink/conf/task下面,flink-conf.yaml…...

qt.qpa.plugin: Could not find the Qt platform plugin “windows“ in ““

系统环境:Win10家庭中文版 Qt : 5.12.9 链接了一些64位的第三方库,程序编译完运行后出现 qt.qpa.plugin: Could not find the Qt platform plugin "windows" in "" 弹窗如下: 网上搜了一些都是关于pyQt的,…...

vue面试题集锦

1. 谈一谈对 MVVM 的理解? MVVM 是 Model-View-ViewModel 的缩写。MVVM 是一种设计思想。 Model 层代表数据模型,也可以在 Model 中定义数据修改和操作的业务逻辑; View 代表 UI 组件,它负责将数据模型转化成 UI 展现出来,View 是…...

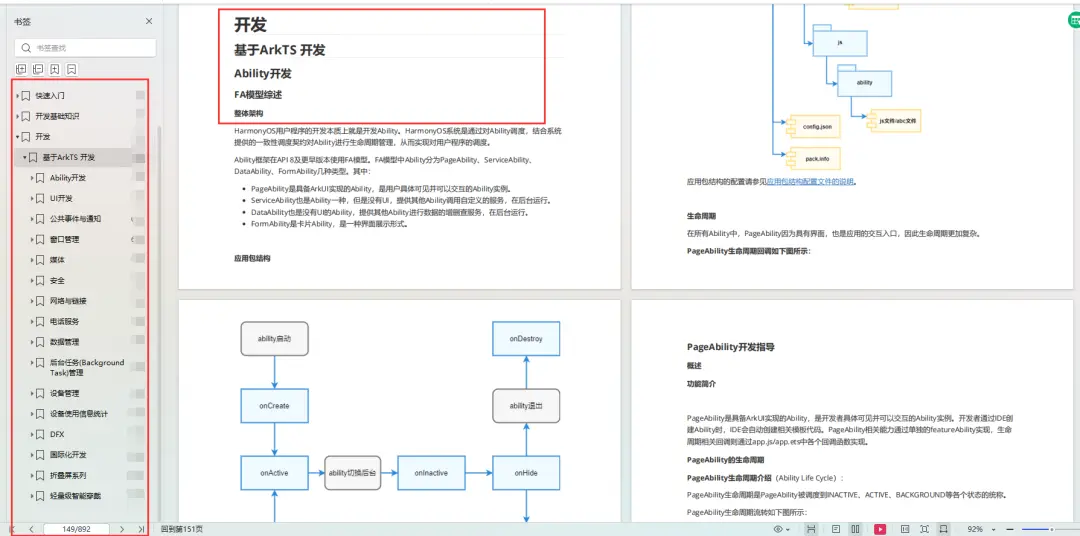

2024年学鸿蒙开发就业前景怎么样?

随着科技的不断进步,鸿蒙系统作为华为自主研发的操作系统,逐渐引起了人们的关注。 2024年,鸿蒙开发就业前景如何? 对于那些对鸿蒙开发感兴趣并希望在这一领域寻找职业发展的人来说,这是一个非常重要的问题。 首先&a…...

Unity网络通讯学习

---部分截图来自 siki学院Unity网络通讯课程 Socket 网络上的两个程序通过一个双向的通信连接实现数据交换,这个连接的一端称为一个 Socket ,Socket 包含了网络通信必须的五种信息 Socket 例子{ 协议: TCP 本地: IP ÿ…...

js入口函数和jQuery入口函数的区别

JS入口函数指的是JavaScript中的主入口函数,用来初始化页面加载完成后的操作。通常情况下,JS入口函数是在HTML页面中的<script>标签中定义的,通过onload事件等方式触发调用。 jQuery入口函数则是指使用jQuery库时的主入口函数…...

Docker-Compose编排Nginx1.25.1+PHP7.4.33+Redis7.0.11环境

实践说明:基于RHEL7(CentOS7.9)部署docker环境(23.0.1、24.0.2),编排也可应用于RHEL7-9(如AlmaLinux9.1),但因为docker的特性,适用场景是不限于此的。 文档形成时期:2017-2023年 因系统或软件版本不同,构建…...

《新课程教学》(电子版)是正规期刊吗?能评职称吗?

《新课程教学》(电子版)主要出版内容为学科教学理论、学科教学实践经验和成果,主要读者对象为中小学教师,期刊设卷首语、名家讲堂、课程与教学、教学实践、考试评价、教育信息化、教学琐谈、教育管理、教师心语、一线课堂、重温经…...

Posgresql macOS安装和基础操作

摘要 本文介绍macOS版本Postgresql的安装,pg常用命令。作为笔记记录,后续方便查看。 Postgresql安装 官网下载postgresql安装包https://www.postgresql.org/download/。官网下载慢时,可以从这里下载我上传的mac版本的pg安装包资源。下载后&am…...

ArkUI-X跨平台已至,何需其它!

运行环境 DevEco Studio:4.0Release OpenHarmony SDK API10 开发板:润和DAYU200 自从写了一篇ArkUI-X跨平台的文章之后,好多人都说对这个项目十分关注。 那么今天我们就来完整的梳理一下这个项目。 1、ArkUI-X 我们之前可能更多接触的…...

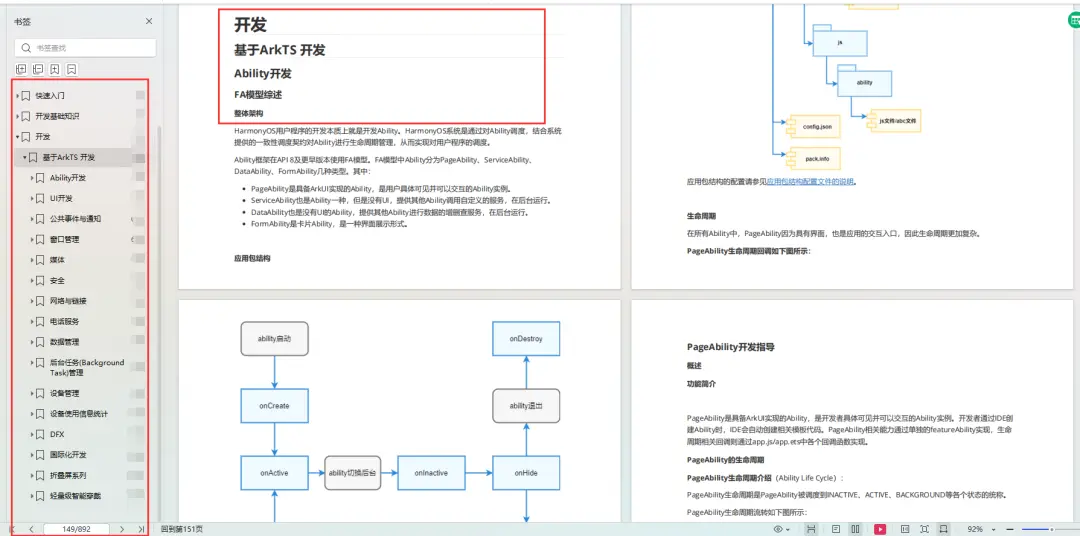

(2024,分数蒸馏抽样,Delta 降噪分数,LoRA)PALP:文本到图像模型的提示对齐个性化

PALP: Prompt Aligned Personalization of Text-to-Image Models 公和众和号:EDPJ(进 Q 交流群:922230617 或加 VX:CV_EDPJ 进 V 交流群) 目录 0. 摘要 4. 提示对齐方法 4.1 概述 4.2 个性化 4.3 提示对齐分数抽…...

近日遇到数据库及其他问题

一、查找备份表和原表不一样数据 select * from A where (select count(1) from A_BAK where A.IDA_BAK.ID) 0 二、在数据量比较大的表中新增有默认值的列速度较慢问题 使用 以下语句,在上亿数据的表中执行速度较慢 alter table TEST add col_a integer DEFA…...

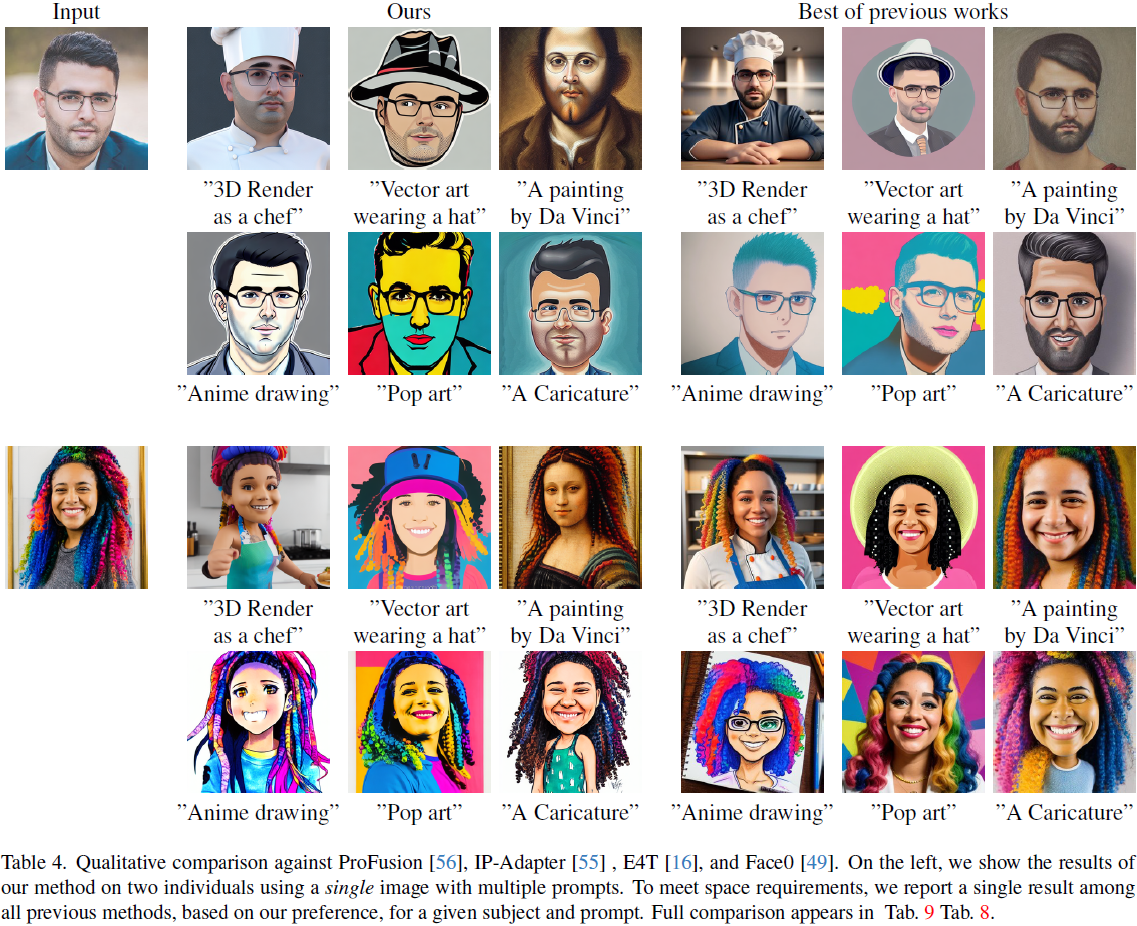

【conda】conda 版本控制和环境迁移/安装conda加速工具mamba /conda常用指令/Anaconda配置

【conda】安装conda加速工具mamba /conda常用指令/Anaconda配置 0. conda 版本控制和环境迁移1. 安装conda加速工具mamba2. conda install version3. [Anaconda 镜像](https://mirrors.tuna.tsinghua.edu.cn/help/anaconda/)使用帮助4. error deal 0. conda 版本控制和环境迁移…...

“/bin/bash“: stat /bin/bash: no such file or directory: unknown

简介:常规情况下,在进入容器时习惯使用 /bin/bash为结尾,如:docker exec -it test-sanic /bin/bash, 但是如果容器本身使用了精简版,只装了sh命令,未安装bash。这时就会抛出"/bin/bash&quo…...

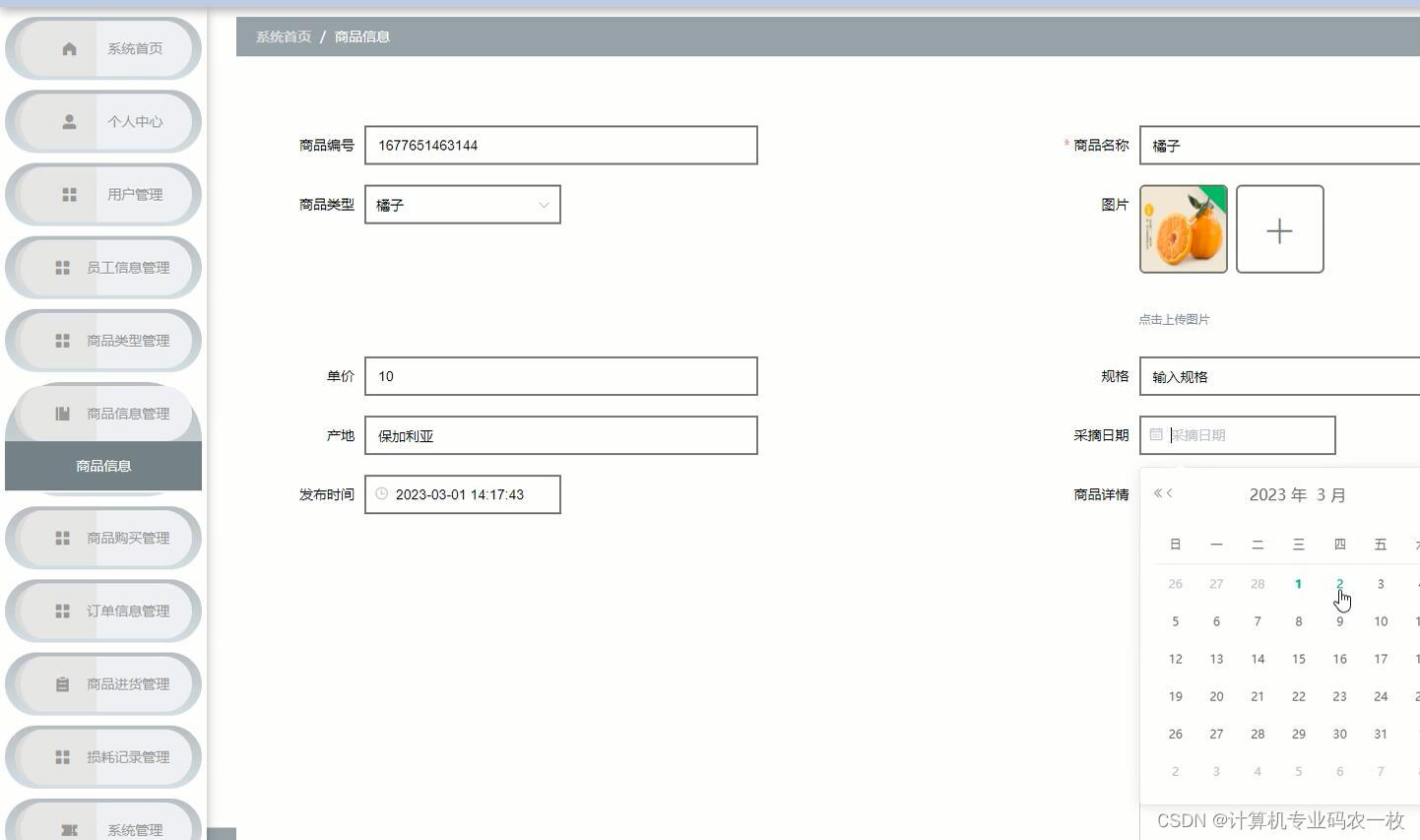

基于Spring Boot+vue的云上新鲜水果超市商城系统

本云上水果超市是为了提高用户查阅信息的效率和管理人员管理信息的工作效率,可以快速存储大量数据,还有信息检索功能,这大大的满足了用户、员工信息和管理员这三者的需求。操作简单易懂,合理分析各个模块的功能,尽可能…...

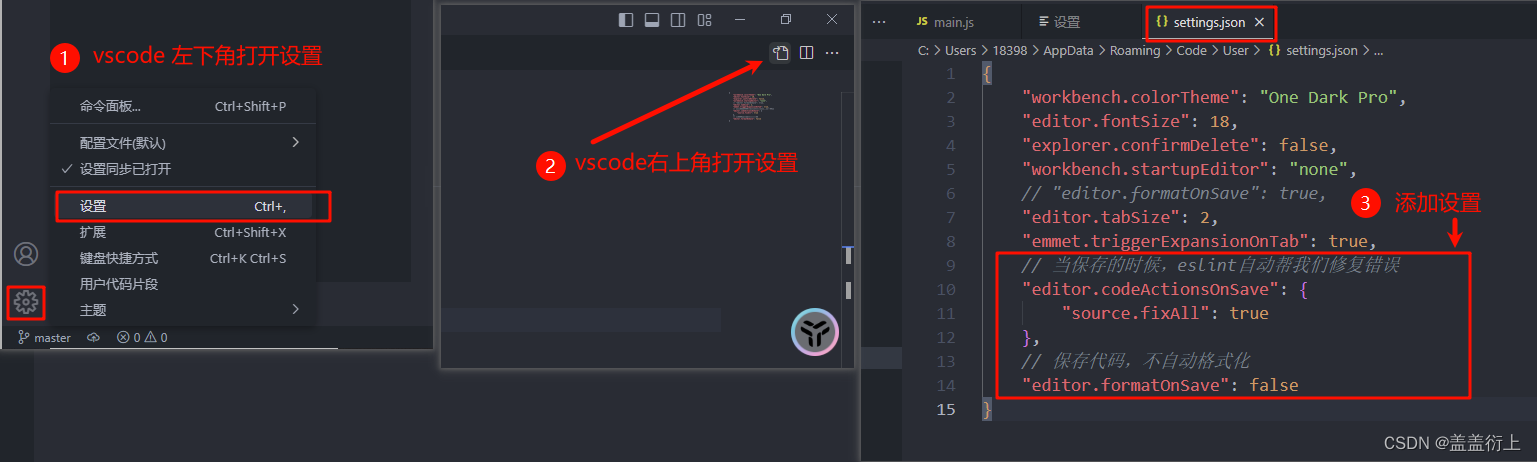

vue-ESlint代码规范及修复

1. 介绍 ESLint:是一个代码检查工具,用来检查你的代码是否符合指定的规则(你和你的团队可以自行约定一套规则)。 在创建项目时,我们使用的是 JavaScript Standard Style 代码风格的规则。 规范网址:https://standardjs.com/rules-zhcn.htm…...

Oracle数据库断电后不能打开的解决

数据库突然断电后,不能打开。或者偶尔能打开,但是很快就关闭。 原因可能很多。但是解决问题只有一种办法:看trace日志,alert错误日志 简单写下我的解决过程: 1,在alert日志中: 错误如下两种:…...

论文复现: In-Loop Filter with Customized Weights For VVC Intra Coding

论文复现: In-Loop Filter with Customized Weights For VVC Intra Coding 这个好难好难。啊啊啊啊。核心:权重预测模块功能快捷键合理的创建标题,有助于目录的生成如何改变文本的样式插入链接与图片如何插入一段漂亮的代码片生成一个适合你的…...

配置华为设备NQA UDP Jitter检测VoIP业务抖动

组网需求 如图1所示,总部和子公司之间需要跨越外部网络进行通信,DeviceA和DeviceD为总部和子公司的网络出口设备,DeviceB和DeviceC为外部网络提供商的边缘设备。 总部和子公司之间经常要通过VoIP进行电话会议,要求双向时延小于2…...

GitHub要求所有贡献代码的用户在2023年底前启用双因素认证

到2023年底,所有向github托管的存储库贡献代码的用户都必须启用一种或多种形式的2FA。 双重身份认证 所谓双重身份认证(Two-Factor Authentication),就是在账号密码以外还额外需要一种方式来确认用户身份。 GitHub正在大力推动双…...

谷歌浏览器插件

项目中有时候会用到插件 sync-cookie-extension1.0.0:开发环境同步测试 cookie 至 localhost,便于本地请求服务携带 cookie 参考地址:https://juejin.cn/post/7139354571712757767 里面有源码下载下来,加在到扩展即可使用FeHelp…...

MySQL 隔离级别:脏读、幻读及不可重复读的原理与示例

一、MySQL 隔离级别 MySQL 提供了四种隔离级别,用于控制事务之间的并发访问以及数据的可见性,不同隔离级别对脏读、幻读、不可重复读这几种并发数据问题有着不同的处理方式,具体如下: 隔离级别脏读不可重复读幻读性能特点及锁机制读未提交(READ UNCOMMITTED)允许出现允许…...

Java 8 Stream API 入门到实践详解

一、告别 for 循环! 传统痛点: Java 8 之前,集合操作离不开冗长的 for 循环和匿名类。例如,过滤列表中的偶数: List<Integer> list Arrays.asList(1, 2, 3, 4, 5); List<Integer> evens new ArrayList…...

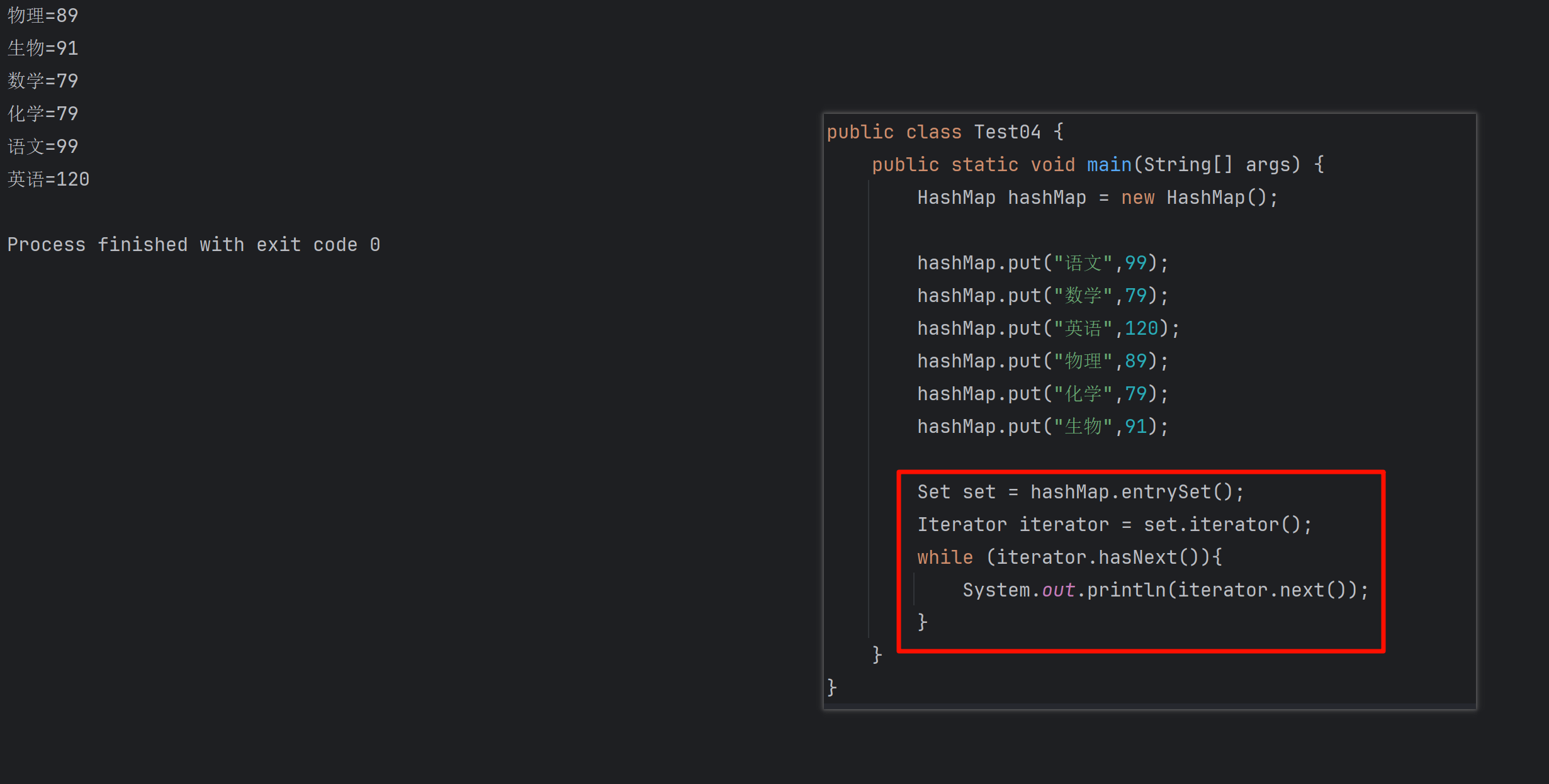

遍历 Map 类型集合的方法汇总

1 方法一 先用方法 keySet() 获取集合中的所有键。再通过 gey(key) 方法用对应键获取值 import java.util.HashMap; import java.util.Set;public class Test {public static void main(String[] args) {HashMap hashMap new HashMap();hashMap.put("语文",99);has…...

python/java环境配置

环境变量放一起 python: 1.首先下载Python Python下载地址:Download Python | Python.org downloads ---windows -- 64 2.安装Python 下面两个,然后自定义,全选 可以把前4个选上 3.环境配置 1)搜高级系统设置 2…...

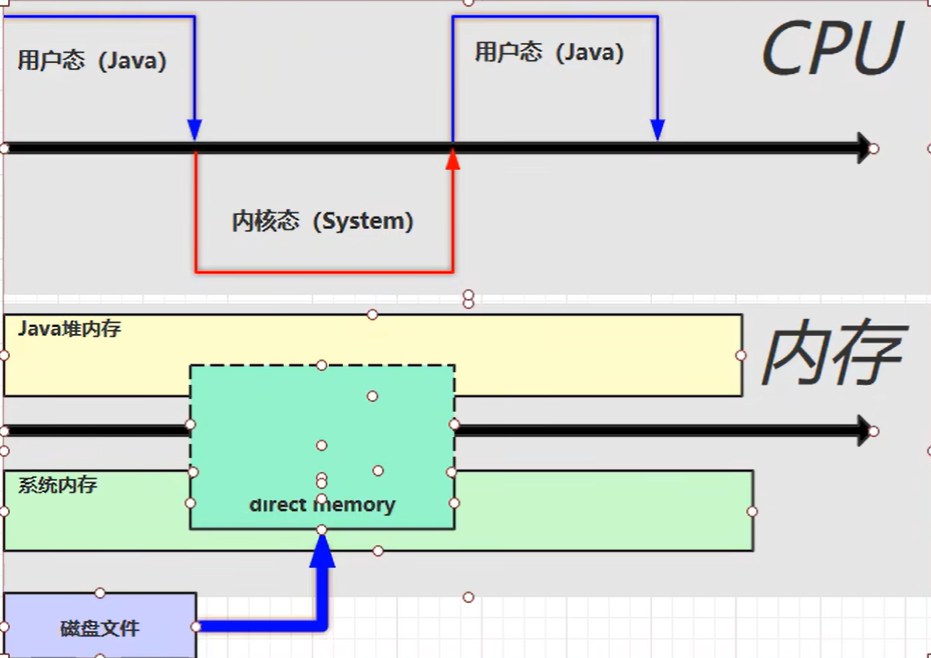

【JVM】- 内存结构

引言 JVM:Java Virtual Machine 定义:Java虚拟机,Java二进制字节码的运行环境好处: 一次编写,到处运行自动内存管理,垃圾回收的功能数组下标越界检查(会抛异常,不会覆盖到其他代码…...

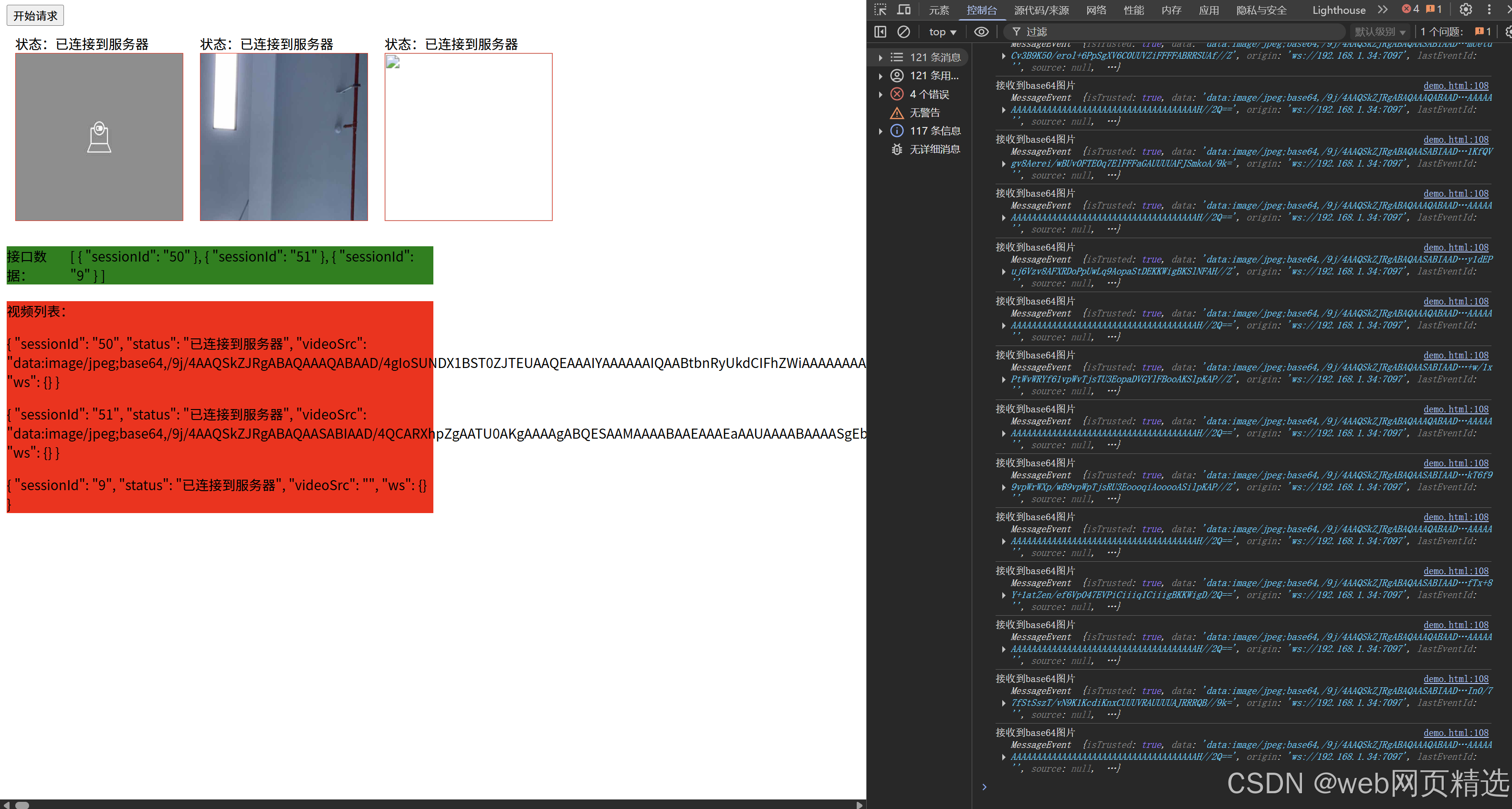

uniapp微信小程序视频实时流+pc端预览方案

方案类型技术实现是否免费优点缺点适用场景延迟范围开发复杂度WebSocket图片帧定时拍照Base64传输✅ 完全免费无需服务器 纯前端实现高延迟高流量 帧率极低个人demo测试 超低频监控500ms-2s⭐⭐RTMP推流TRTC/即构SDK推流❌ 付费方案 (部分有免费额度&#x…...

CRMEB 框架中 PHP 上传扩展开发:涵盖本地上传及阿里云 OSS、腾讯云 COS、七牛云

目前已有本地上传、阿里云OSS上传、腾讯云COS上传、七牛云上传扩展 扩展入口文件 文件目录 crmeb\services\upload\Upload.php namespace crmeb\services\upload;use crmeb\basic\BaseManager; use think\facade\Config;/*** Class Upload* package crmeb\services\upload* …...

Android15默认授权浮窗权限

我们经常有那种需求,客户需要定制的apk集成在ROM中,并且默认授予其【显示在其他应用的上层】权限,也就是我们常说的浮窗权限,那么我们就可以通过以下方法在wms、ams等系统服务的systemReady()方法中调用即可实现预置应用默认授权浮…...

多模态大语言模型arxiv论文略读(108)

CROME: Cross-Modal Adapters for Efficient Multimodal LLM ➡️ 论文标题:CROME: Cross-Modal Adapters for Efficient Multimodal LLM ➡️ 论文作者:Sayna Ebrahimi, Sercan O. Arik, Tejas Nama, Tomas Pfister ➡️ 研究机构: Google Cloud AI Re…...