头歌 (EduCoder): Shared Bicycle Data Analysis

Level 1: Average daily usage time of shared bicycles
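Before the full HBase MapReduce solution, the reducer's arithmetic can be sketched in plain Java: sum the ride durations per day, average those daily totals across days, convert milliseconds to seconds, and round to two decimals. This is only an illustration; the class name `AverageTimeSketch`, the method name, and the sample records are hypothetical, while the `yyyy-MM-dd_durationMillis` record shape mirrors what the mapper emits.

```java
import java.math.BigDecimal;
import java.math.RoundingMode;
import java.util.HashMap;
import java.util.Map;

public class AverageTimeSketch {
    // Each record is "yyyy-MM-dd_durationMillis" (hypothetical sample data).
    static String averageDailySeconds(String[] records) {
        Map<String, Long> totalPerDay = new HashMap<>();
        for (String record : records) {
            String[] parts = record.split("_");
            // Accumulate the total usage time of each day.
            totalPerDay.merge(parts[0], Long.parseLong(parts[1]), Long::sum);
        }
        double sum = 0;
        for (long total : totalPerDay.values()) {
            sum += total;
        }
        // Average of the daily totals, converted from milliseconds to seconds.
        double avgSeconds = sum / totalPerDay.size() / 1000;
        return new BigDecimal(avgSeconds).setScale(2, RoundingMode.HALF_DOWN).toString();
    }

    public static void main(String[] args) {
        String[] records = {"2017-08-01_600000", "2017-08-01_300000", "2017-08-02_900000"};
        System.out.println(averageDailySeconds(records)); // prints 900.00
    }
}
```

Both days total 900,000 ms here, so the average daily usage is 900 seconds.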

    package com.educoder.bigData.sharedbicycle;

    import java.io.IOException;
    import java.math.BigDecimal;
    import java.math.RoundingMode;
    import java.util.HashMap;
    import java.util.Locale;
    import java.util.Map;

    import org.apache.commons.lang3.time.DateFormatUtils;
    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.conf.Configured;
    import org.apache.hadoop.hbase.client.Put;
    import org.apache.hadoop.hbase.client.Result;
    import org.apache.hadoop.hbase.client.Scan;
    import org.apache.hadoop.hbase.io.ImmutableBytesWritable;
    import org.apache.hadoop.hbase.mapreduce.TableMapReduceUtil;
    import org.apache.hadoop.hbase.mapreduce.TableMapper;
    import org.apache.hadoop.hbase.mapreduce.TableReducer;
    import org.apache.hadoop.hbase.util.Bytes;
    import org.apache.hadoop.io.BytesWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.util.Tool;

    import com.educoder.bigData.util.HBaseUtil;

    /**
     * Computes the average daily usage time of shared bicycles.
     */
    public class AveragetTimeMapReduce extends Configured implements Tool {

        public static final byte[] family = "info".getBytes();

        public static class MyMapper extends TableMapper<Text, BytesWritable> {
            @Override
            protected void map(ImmutableBytesWritable rowKey, Result result, Context context)
                    throws IOException, InterruptedException {
                /********** Begin *********/
                long beginTime = Long.parseLong(Bytes.toString(result.getValue(family, "beginTime".getBytes())));
                long endTime = Long.parseLong(Bytes.toString(result.getValue(family, "endTime".getBytes())));
                // Day of the ride, e.g. "2017-08-01"
                String day = DateFormatUtils.format(beginTime, "yyyy-MM-dd", Locale.CHINA);
                long useTime = endTime - beginTime;
                // Emit "day_durationMillis" under a single key so one reducer sees every ride
                BytesWritable value = new BytesWritable(Bytes.toBytes(day + "_" + useTime));
                context.write(new Text("avgTime"), value);
                /********** End *********/
            }
        }

        public static class MyTableReducer extends TableReducer<Text, BytesWritable, ImmutableBytesWritable> {
            @Override
            public void reduce(Text key, Iterable<BytesWritable> values, Context context)
                    throws IOException, InterruptedException {
                /********** Begin *********/
                // Total usage time (ms) per day
                Map<String, Long> totalPerDay = new HashMap<String, Long>();
                for (BytesWritable value : values) {
                    String[] split = Bytes.toString(value.copyBytes()).split("_");
                    totalPerDay.merge(split[0], Long.parseLong(split[1]), Long::sum);
                }
                double sum = 0;
                for (Long total : totalPerDay.values()) {
                    sum += total;
                }
                int length = totalPerDay.size();
                // Average of the daily totals, converted from milliseconds to seconds
                BigDecimal decimal = new BigDecimal(sum / length / 1000);
                BigDecimal setScale = decimal.setScale(2, RoundingMode.HALF_DOWN);
                Put put = new Put(Bytes.toBytes(key.toString()));
                put.addColumn(family, "avgTime".getBytes(), Bytes.toBytes(setScale.toString()));
                context.write(null, put);
                /********** End *********/
            }
        }

        public int run(String[] args) throws Exception {
            // Configure the job
            Configuration conf = HBaseUtil.conf;
            String arg1 = "t_shared_bicycle";
            String arg2 = "t_bicycle_avgtime";
            try {
                HBaseUtil.createTable(arg2, new String[] { "info" });
            } catch (Exception e) {
                // Table creation failed
                e.printStackTrace();
            }
            Job job = configureJob(conf, new String[] { arg1, arg2 });
            return job.waitForCompletion(true) ? 0 : 1;
        }

        private Job configureJob(Configuration conf, String[] args) throws IOException {
            String tablename = args[0];
            String targetTable = args[1];
            Job job = Job.getInstance(conf, tablename);
            Scan scan = new Scan();
            scan.setCaching(300);
            scan.setCacheBlocks(false); // Never enable block caching in a MapReduce job
            // Initialize the mapper
            TableMapReduceUtil.initTableMapperJob(tablename, scan, MyMapper.class, Text.class, BytesWritable.class, job);
            // Initialize the reducer
            TableMapReduceUtil.initTableReducerJob(
                    targetTable,          // output table
                    MyTableReducer.class, // reducer class
                    job);
            job.setNumReduceTasks(1);
            return job;
        }
    }

Level 2: Average daily number of rides at a specified location
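The solution below combines two column filters (departure equals a fixed value, destination contains a substring) and then averages the per-day ride counts. A minimal plain-Java sketch of that logic follows. The class name `AverageVehicleSketch`, its method names, the empty-input guard, and the ASCII place names (stand-ins for the Chinese values used in the real table) are all assumptions for illustration.

```java
import java.math.BigDecimal;
import java.math.RoundingMode;
import java.util.HashMap;
import java.util.Map;

public class AverageVehicleSketch {
    // Hypothetical ASCII stand-ins for the departure and destination values in the table.
    static final String DEPARTURE = "XiongCounty";
    static final String DESTINATION_PART = "Hanzhuang";

    // Both conditions must hold, like a filter list that requires every filter to pass.
    static boolean matches(String departure, String destination) {
        return departure.equals(DEPARTURE) && destination.contains(DESTINATION_PART);
    }

    // Each ride is {departure, destination, day}.
    static String averageRidesPerDay(String[][] rides) {
        Map<String, Integer> ridesPerDay = new HashMap<>();
        for (String[] ride : rides) {
            if (matches(ride[0], ride[1])) {
                ridesPerDay.merge(ride[2], 1, Integer::sum);
            }
        }
        if (ridesPerDay.isEmpty()) {
            return "0.00"; // assumption: report zero when nothing matches
        }
        double total = 0;
        for (int count : ridesPerDay.values()) {
            total += count;
        }
        return new BigDecimal(total / ridesPerDay.size())
                .setScale(2, RoundingMode.HALF_DOWN).toString();
    }

    public static void main(String[] args) {
        String[][] rides = {
            {"XiongCounty", "Hanzhuang Village", "2017-08-01"},
            {"XiongCounty", "East Hanzhuang", "2017-08-01"},
            {"XiongCounty", "Hanzhuang Village", "2017-08-01"},
            {"XiongCounty", "Hanzhuang Village", "2017-08-02"},
            {"XiongCounty", "Other Village", "2017-08-02"},
        };
        System.out.println(averageRidesPerDay(rides)); // prints 2.00
    }
}
```

Three rides match on the first day and one on the second, so the average is two rides per day.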

    package com.educoder.bigData.sharedbicycle;

    import java.io.IOException;
    import java.math.BigDecimal;
    import java.math.RoundingMode;
    import java.util.ArrayList;
    import java.util.HashMap;
    import java.util.Locale;
    import java.util.Map;

    import org.apache.commons.lang3.time.DateFormatUtils;
    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.conf.Configured;
    import org.apache.hadoop.hbase.CompareOperator;
    import org.apache.hadoop.hbase.client.Put;
    import org.apache.hadoop.hbase.client.Result;
    import org.apache.hadoop.hbase.client.Scan;
    import org.apache.hadoop.hbase.filter.Filter;
    import org.apache.hadoop.hbase.filter.FilterList;
    import org.apache.hadoop.hbase.filter.SingleColumnValueFilter;
    import org.apache.hadoop.hbase.filter.SubstringComparator;
    import org.apache.hadoop.hbase.io.ImmutableBytesWritable;
    import org.apache.hadoop.hbase.mapreduce.TableMapReduceUtil;
    import org.apache.hadoop.hbase.mapreduce.TableMapper;
    import org.apache.hadoop.hbase.mapreduce.TableReducer;
    import org.apache.hadoop.hbase.util.Bytes;
    import org.apache.hadoop.io.BytesWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.util.Tool;

    import com.educoder.bigData.util.HBaseUtil;

    /**
     * Computes the average daily number of shared-bicycle rides at 韩庄村.
     */
    public class AverageVehicleMapReduce extends Configured implements Tool {

        public static final byte[] family = "info".getBytes();

        public static class MyMapper extends TableMapper<Text, BytesWritable> {
            @Override
            protected void map(ImmutableBytesWritable rowKey, Result result, Context context)
                    throws IOException, InterruptedException {
                /********** Begin *********/
                String beginTime = Bytes.toString(result.getValue(family, "beginTime".getBytes()));
                // Day of the ride, e.g. "2017-08-01"
                String day = DateFormatUtils.format(Long.parseLong(beginTime), "yyyy-MM-dd", Locale.CHINA);
                BytesWritable value = new BytesWritable(Bytes.toBytes(day));
                context.write(new Text("河北省保定市雄县-韩庄村"), value);
                /********** End *********/
            }
        }

        public static class MyTableReducer extends TableReducer<Text, BytesWritable, ImmutableBytesWritable> {
            @Override
            public void reduce(Text key, Iterable<BytesWritable> values, Context context)
                    throws IOException, InterruptedException {
                /********** Begin *********/
                // Number of rides per day
                Map<String, Integer> ridesPerDay = new HashMap<String, Integer>();
                for (BytesWritable value : values) {
                    String day = Bytes.toString(value.copyBytes());
                    ridesPerDay.merge(day, 1, Integer::sum);
                }
                double sum = 0;
                for (Integer count : ridesPerDay.values()) {
                    sum += count;
                }
                int length = ridesPerDay.size();
                // Average number of rides across the days that had at least one ride
                BigDecimal decimal = new BigDecimal(sum / length);
                BigDecimal setScale = decimal.setScale(2, RoundingMode.HALF_DOWN);
                Put put = new Put(Bytes.toBytes(key.toString()));
                put.addColumn(family, "avgNum".getBytes(), Bytes.toBytes(setScale.toString()));
                context.write(null, put);
                /********** End *********/
            }
        }

        public int run(String[] args) throws Exception {
            // Configure the job
            Configuration conf = HBaseUtil.conf;
            String arg1 = "t_shared_bicycle";
            String arg2 = "t_bicycle_avgnum";
            try {
                HBaseUtil.createTable(arg2, new String[] { "info" });
            } catch (Exception e) {
                // Table creation failed
                e.printStackTrace();
            }
            Job job = configureJob(conf, new String[] { arg1, arg2 });
            return job.waitForCompletion(true) ? 0 : 1;
        }

        private Job configureJob(Configuration conf, String[] args) throws IOException {
            String tablename = args[0];
            String targetTable = args[1];
            Job job = Job.getInstance(conf, tablename);
            Scan scan = new Scan();
            scan.setCaching(300);
            scan.setCacheBlocks(false); // Never enable block caching in a MapReduce job
            /********** Begin *********/
            // Keep only rows whose destination contains "韩庄村" and whose departure is "河北省保定市雄县"
            ArrayList<Filter> listForFilters = new ArrayList<Filter>();
            Filter destinationFilter = new SingleColumnValueFilter(Bytes.toBytes("info"),
                    Bytes.toBytes("destination"), CompareOperator.EQUAL, new SubstringComparator("韩庄村"));
            Filter departureFilter = new SingleColumnValueFilter(Bytes.toBytes("info"),
                    Bytes.toBytes("departure"), CompareOperator.EQUAL, Bytes.toBytes("河北省保定市雄县"));
            listForFilters.add(departureFilter);
            listForFilters.add(destinationFilter);
            Filter filters = new FilterList(listForFilters);
            scan.setFilter(filters);
            /********** End *********/
            // Initialize the mapper
            TableMapReduceUtil.initTableMapperJob(tablename, scan, MyMapper.class, Text.class, BytesWritable.class, job);
            // Initialize the reducer
            TableMapReduceUtil.initTableReducerJob(
                    targetTable,          // output table
                    MyTableReducer.class, // reducer class
                    job);
            job.setNumReduceTasks(1);
            return job;
        }
    }

Level 3: Average idle time between uses of a specified bicycle
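Idle time here means the gap between the end of one ride and the start of the next ride of the same bicycle. The plain-Java sketch below illustrates the idea under a few stated assumptions: it sorts the rides explicitly by start time and uses floating-point division, so it is a variant of, not a copy of, the reducer in the solution below (which relies on the order in which values arrive and uses integer division). `IdleTimeSketch` and its method name are hypothetical.

```java
import java.math.BigDecimal;
import java.math.RoundingMode;
import java.util.Arrays;
import java.util.Comparator;

public class IdleTimeSketch {
    static final double MILLIS_PER_HOUR = 3_600_000.0;

    // Each ride is {beginMillis, endMillis}. Returns the average gap in hours.
    static String averageIdleHours(long[][] rides) {
        if (rides.length < 2) {
            throw new IllegalArgumentException("need at least two rides to measure a gap");
        }
        long[][] sorted = rides.clone();
        // Order rides by start time so consecutive entries are consecutive uses.
        Arrays.sort(sorted, Comparator.comparingLong((long[] r) -> r[0]));
        long totalIdle = 0;
        for (int i = 1; i < sorted.length; i++) {
            // Gap between the previous ride's end and this ride's start.
            totalIdle += sorted[i][0] - sorted[i - 1][1];
        }
        double avgHours = totalIdle / (double) (sorted.length - 1) / MILLIS_PER_HOUR;
        return new BigDecimal(avgHours).setScale(2, RoundingMode.HALF_DOWN).toString();
    }

    public static void main(String[] args) {
        long hour = 3_600_000L;
        long base = 1501516800000L; // arbitrary sample timestamp
        long[][] rides = {
            {base + 3 * hour, base + 4 * hour},
            {base, base + hour},
            {base + 5 * hour, base + 6 * hour},
        };
        System.out.println(averageIdleHours(rides)); // prints 1.50
    }
}
```

The gaps are two hours and one hour, so the average idle time is 1.5 hours.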

    package com.educoder.bigData.sharedbicycle;

    import java.io.IOException;
    import java.math.BigDecimal;
    import java.math.RoundingMode;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.conf.Configured;
    import org.apache.hadoop.hbase.CompareOperator;
    import org.apache.hadoop.hbase.client.Put;
    import org.apache.hadoop.hbase.client.Result;
    import org.apache.hadoop.hbase.client.Scan;
    import org.apache.hadoop.hbase.filter.Filter;
    import org.apache.hadoop.hbase.filter.SingleColumnValueFilter;
    import org.apache.hadoop.hbase.io.ImmutableBytesWritable;
    import org.apache.hadoop.hbase.mapreduce.TableMapReduceUtil;
    import org.apache.hadoop.hbase.mapreduce.TableMapper;
    import org.apache.hadoop.hbase.mapreduce.TableReducer;
    import org.apache.hadoop.hbase.util.Bytes;
    import org.apache.hadoop.io.BytesWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.util.Tool;

    import com.educoder.bigData.util.HBaseUtil;

    /**
     * Computes the average idle time between uses of bicycle 5996.
     */
    public class FreeTimeMapReduce extends Configured implements Tool {

        public static final byte[] family = "info".getBytes();

        public static class MyMapper extends TableMapper<Text, BytesWritable> {
            @Override
            protected void map(ImmutableBytesWritable rowKey, Result result, Context context)
                    throws IOException, InterruptedException {
                /********** Begin *********/
                long beginTime = Long.parseLong(Bytes.toString(result.getValue(family, "beginTime".getBytes())));
                long endTime = Long.parseLong(Bytes.toString(result.getValue(family, "endTime".getBytes())));
                // Emit "beginMillis_endMillis" under the bicycle id
                BytesWritable value = new BytesWritable(Bytes.toBytes(beginTime + "_" + endTime));
                context.write(new Text("5996"), value);
                /********** End *********/
            }
        }

        public static class MyTableReducer extends TableReducer<Text, BytesWritable, ImmutableBytesWritable> {
            @Override
            public void reduce(Text key, Iterable<BytesWritable> values, Context context)
                    throws IOException, InterruptedException {
                /********** Begin *********/
                long freeTime = 0;
                long beginTime = 0; // begin time of the previously seen ride (0 = none yet)
                int length = 0;     // number of gaps accumulated
                for (BytesWritable time : values) {
                    String[] split = Bytes.toString(time.copyBytes()).split("_");
                    if (beginTime == 0) {
                        // First record: remember its begin time only
                        beginTime = Long.parseLong(split[0]);
                        continue;
                    } else {
                        // Gap between the previous record's begin and this record's end
                        freeTime = freeTime + beginTime - Long.parseLong(split[1]);
                        beginTime = Long.parseLong(split[0]);
                        length++;
                    }
                }
                Put put = new Put(Bytes.toBytes(key.toString()));
                // All operands are long, so this is integer division: the result is
                // truncated to whole hours, and length must be at least 1.
                BigDecimal decimal = new BigDecimal(freeTime / length / 1000 / 60 / 60);
                BigDecimal setScale = decimal.setScale(2, RoundingMode.HALF_DOWN);
                put.addColumn(family, "freeTime".getBytes(), Bytes.toBytes(setScale.toString()));
                context.write(null, put);
                /********** End *********/
            }
        }

        public int run(String[] args) throws Exception {
            // Configure the job
            Configuration conf = HBaseUtil.conf;
            String arg1 = "t_shared_bicycle";
            String arg2 = "t_bicycle_freetime";
            try {
                HBaseUtil.createTable(arg2, new String[] { "info" });
            } catch (Exception e) {
                // Table creation failed
                e.printStackTrace();
            }
            Job job = configureJob(conf, new String[] { arg1, arg2 });
            return job.waitForCompletion(true) ? 0 : 1;
        }

        private Job configureJob(Configuration conf, String[] args) throws IOException {
            String tablename = args[0];
            String targetTable = args[1];
            Job job = Job.getInstance(conf, tablename);
            Scan scan = new Scan();
            scan.setCaching(300);
            scan.setCacheBlocks(false); // Never enable block caching in a MapReduce job
            /********** Begin *********/
            // Keep only rows that belong to bicycle 5996
            Filter filter = new SingleColumnValueFilter(Bytes.toBytes("info"), Bytes.toBytes("bicycleId"),
                    CompareOperator.EQUAL, Bytes.toBytes("5996"));
            scan.setFilter(filter);
            /********** End *********/
            // Initialize the mapper
            TableMapReduceUtil.initTableMapperJob(tablename, scan, MyMapper.class, Text.class, BytesWritable.class, job);
            // Initialize the reducer
            TableMapReduceUtil.initTableReducerJob(
                    targetTable,          // output table
                    MyTableReducer.class, // reducer class
                    job);
            job.setNumReduceTasks(1);
            return job;
        }
    }

Level 4: Count shared bicycle uses within a specified time range
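The solution below selects rides between 2017-08-01 and 2017-09-01 by comparing the timestamp columns, which are stored as decimal strings of epoch milliseconds. Comparing them as strings gives the same result as comparing numbers only because all values have the same number of digits (13 for dates in this era). The sketch below illustrates that point in plain Java; `TimeRangeSketch`, its helpers, and the fixed `Asia/Shanghai` zone are assumptions for illustration (the solution itself parses dates in the JVM's default zone).

```java
import java.time.LocalDate;
import java.time.ZoneId;

public class TimeRangeSketch {
    // Assumption: timestamps are interpreted in China Standard Time.
    static final ZoneId ZONE = ZoneId.of("Asia/Shanghai");

    // Epoch milliseconds of midnight on the given ISO date, as a decimal string.
    static String dayStartMillis(String isoDate) {
        long millis = LocalDate.parse(isoDate).atStartOfDay(ZONE).toInstant().toEpochMilli();
        return String.valueOf(millis);
    }

    // Lexicographic comparison, valid here because every string has 13 digits.
    static boolean inRange(String beginTime, String endTime, String lower, String upper) {
        return beginTime.compareTo(lower) >= 0 && endTime.compareTo(upper) <= 0;
    }

    public static void main(String[] args) {
        String lower = dayStartMillis("2017-08-01");
        String upper = dayStartMillis("2017-09-01");
        String begin = dayStartMillis("2017-08-15");
        // A hypothetical 30-minute ride starting at midnight on 2017-08-15.
        String end = String.valueOf(Long.parseLong(begin) + 30 * 60 * 1000L);
        System.out.println(inRange(begin, end, lower, upper)); // prints true
    }
}
```

If the stored values could differ in length, a numeric comparison would be needed instead of a string comparison.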

    package com.educoder.bigData.sharedbicycle;

    import java.io.IOException;
    import java.text.ParseException;
    import java.util.ArrayList;

    import org.apache.commons.lang3.time.FastDateFormat;
    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.conf.Configured;
    import org.apache.hadoop.hbase.CompareOperator;
    import org.apache.hadoop.hbase.client.Put;
    import org.apache.hadoop.hbase.client.Result;
    import org.apache.hadoop.hbase.client.Scan;
    import org.apache.hadoop.hbase.filter.Filter;
    import org.apache.hadoop.hbase.filter.FilterList;
    import org.apache.hadoop.hbase.filter.SingleColumnValueFilter;
    import org.apache.hadoop.hbase.io.ImmutableBytesWritable;
    import org.apache.hadoop.hbase.mapreduce.TableMapReduceUtil;
    import org.apache.hadoop.hbase.mapreduce.TableMapper;
    import org.apache.hadoop.hbase.mapreduce.TableReducer;
    import org.apache.hadoop.hbase.util.Bytes;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.util.Tool;

    import com.educoder.bigData.util.HBaseUtil;

    /**
     * Counts shared-bicycle uses within a time range.
     */
    public class UsageRateMapReduce extends Configured implements Tool {

        public static final byte[] family = "info".getBytes();

        public static class MyMapper extends TableMapper<Text, IntWritable> {
            @Override
            protected void map(ImmutableBytesWritable rowKey, Result result, Context context)
                    throws IOException, InterruptedException {
                /********** Begin *********/
                // Every row that passes the scan filter counts as one use
                IntWritable one = new IntWritable(1);
                context.write(new Text("departure"), one);
                /********** End *********/
            }
        }

        public static class MyTableReducer extends TableReducer<Text, IntWritable, ImmutableBytesWritable> {
            @Override
            public void reduce(Text key, Iterable<IntWritable> values, Context context)
                    throws IOException, InterruptedException {
                /********** Begin *********/
                int totalNum = 0;
                for (IntWritable num : values) {
                    totalNum += num.get();
                }
                Put put = new Put(Bytes.toBytes(key.toString()));
                put.addColumn(family, "usageRate".getBytes(), Bytes.toBytes(String.valueOf(totalNum)));
                context.write(null, put);
                /********** End *********/
            }
        }

        public int run(String[] args) throws Exception {
            // Configure the job
            Configuration conf = HBaseUtil.conf;
            String arg1 = "t_shared_bicycle";
            String arg2 = "t_bicycle_usagerate";
            try {
                HBaseUtil.createTable(arg2, new String[] { "info" });
            } catch (Exception e) {
                // Table creation failed
                e.printStackTrace();
            }
            Job job = configureJob(conf, new String[] { arg1, arg2 });
            return job.waitForCompletion(true) ? 0 : 1;
        }

        private Job configureJob(Configuration conf, String[] args) throws IOException {
            String tablename = args[0];
            String targetTable = args[1];
            Job job = Job.getInstance(conf, tablename);
            ArrayList<Filter> listForFilters = new ArrayList<Filter>();
            FastDateFormat instance = FastDateFormat.getInstance("yyyy-MM-dd");
            Scan scan = new Scan();
            scan.setCaching(300);
            scan.setCacheBlocks(false); // Never enable block caching in a MapReduce job
            /********** Begin *********/
            try {
                // beginTime >= 2017-08-01 and endTime <= 2017-09-01, compared as millisecond strings
                Filter beginFilter = new SingleColumnValueFilter(Bytes.toBytes("info"), Bytes.toBytes("beginTime"),
                        CompareOperator.GREATER_OR_EQUAL,
                        Bytes.toBytes(String.valueOf(instance.parse("2017-08-01").getTime())));
                Filter endFilter = new SingleColumnValueFilter(Bytes.toBytes("info"), Bytes.toBytes("endTime"),
                        CompareOperator.LESS_OR_EQUAL,
                        Bytes.toBytes(String.valueOf(instance.parse("2017-09-01").getTime())));
                listForFilters.add(endFilter);
                listForFilters.add(beginFilter);
            } catch (ParseException e) {
                // Fail here instead of returning null, which would surface later as a NullPointerException in run()
                throw new IOException("Failed to parse the date range", e);
            }
            Filter filters = new FilterList(listForFilters);
            scan.setFilter(filters);
            /********** End *********/
            // Initialize the mapper
            TableMapReduceUtil.initTableMapperJob(tablename, scan, MyMapper.class, Text.class, IntWritable.class, job);
            // Initialize the reducer
            TableMapReduceUtil.initTableReducerJob(
                    targetTable,          // output table
                    MyTableReducer.class, // reducer class
                    job);
            job.setNumReduceTasks(1);
            return job;
        }
    }

Level 5: Count shared bicycle route traffic
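Route traffic is a grouped count: each ride is reduced to a composite key built from its start coordinates, stop coordinates, and place names, and rides with the same key are tallied. The plain-Java sketch below shows that grouping without HBase. The key layout mirrors the one used in the solution below; the class name `LineTotalSketch`, the helper names, and the sample coordinates and place names are hypothetical.

```java
import java.util.Map;
import java.util.TreeMap;

public class LineTotalSketch {
    // Composite route key: "startLat-startLng_stopLat-stopLng_departure-destination".
    static String routeKey(String startLat, String startLng, String stopLat, String stopLng,
                           String departure, String destination) {
        return startLat + "-" + startLng + "_" + stopLat + "-" + stopLng
                + "_" + departure + "-" + destination;
    }

    // Counts how many rides share each route key.
    static Map<String, Integer> countRoutes(String[] routeKeys) {
        Map<String, Integer> totals = new TreeMap<>();
        for (String key : routeKeys) {
            totals.merge(key, 1, Integer::sum);
        }
        return totals;
    }

    public static void main(String[] args) {
        // Hypothetical sample rides: two on route A->B, one on route B->A.
        String ab = routeKey("39.01", "116.10", "39.02", "116.20", "A", "B");
        String ba = routeKey("39.02", "116.20", "39.01", "116.10", "B", "A");
        Map<String, Integer> totals = countRoutes(new String[] {ab, ba, ab});
        for (Map.Entry<String, Integer> entry : totals.entrySet()) {
            System.out.println(entry.getKey() + " -> " + entry.getValue());
        }
    }
}
```

In the MapReduce version the shuffle phase performs this grouping, so the reducer only has to sum the ones emitted for each key.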

    package com.educoder.bigData.sharedbicycle;

    import java.io.IOException;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.conf.Configured;
    import org.apache.hadoop.hbase.client.Put;
    import org.apache.hadoop.hbase.client.Result;
    import org.apache.hadoop.hbase.client.Scan;
    import org.apache.hadoop.hbase.io.ImmutableBytesWritable;
    import org.apache.hadoop.hbase.mapreduce.TableMapReduceUtil;
    import org.apache.hadoop.hbase.mapreduce.TableMapper;
    import org.apache.hadoop.hbase.mapreduce.TableReducer;
    import org.apache.hadoop.hbase.util.Bytes;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.util.Tool;

    import com.educoder.bigData.util.HBaseUtil;

    /**
     * Counts shared-bicycle traffic per route.
     */
    public class LineTotalMapReduce extends Configured implements Tool {

        public static final byte[] family = "info".getBytes();

        public static class MyMapper extends TableMapper<Text, IntWritable> {
            @Override
            protected void map(ImmutableBytesWritable rowKey, Result result, Context context)
                    throws IOException, InterruptedException {
                /********** Begin *********/
                String start_latitude = Bytes.toString(result.getValue(family, "start_latitude".getBytes()));
                String start_longitude = Bytes.toString(result.getValue(family, "start_longitude".getBytes()));
                String stop_latitude = Bytes.toString(result.getValue(family, "stop_latitude".getBytes()));
                String stop_longitude = Bytes.toString(result.getValue(family, "stop_longitude".getBytes()));
                String departure = Bytes.toString(result.getValue(family, "departure".getBytes()));
                String destination = Bytes.toString(result.getValue(family, "destination".getBytes()));
                IntWritable one = new IntWritable(1);
                // Route key: start coordinates, stop coordinates, and place names
                context.write(new Text(start_latitude + "-" + start_longitude + "_" + stop_latitude + "-"
                        + stop_longitude + "_" + departure + "-" + destination), one);
                /********** End *********/
            }
        }

        public static class MyTableReducer extends TableReducer<Text, IntWritable, ImmutableBytesWritable> {
            @Override
            public void reduce(Text key, Iterable<IntWritable> values, Context context)
                    throws IOException, InterruptedException {
                /********** Begin *********/
                int totalNum = 0;
                for (IntWritable num : values) {
                    totalNum += num.get();
                }
                // The row key is the route key with the total appended
                Put put = new Put(Bytes.toBytes(key.toString() + totalNum));
                put.addColumn(family, "lineTotal".getBytes(), Bytes.toBytes(String.valueOf(totalNum)));
                context.write(null, put);
                /********** End *********/
            }
        }

        public int run(String[] args) throws Exception {
            // Configure the job
            Configuration conf = HBaseUtil.conf;
            String arg1 = "t_shared_bicycle";
            String arg2 = "t_bicycle_linetotal";
            try {
                HBaseUtil.createTable(arg2, new String[] { "info" });
            } catch (Exception e) {
                // Table creation failed
                e.printStackTrace();
            }
            Job job = configureJob(conf, new String[] { arg1, arg2 });
            return job.waitForCompletion(true) ? 0 : 1;
        }

        private Job configureJob(Configuration conf, String[] args) throws IOException {
            String tablename = args[0];
            String targetTable = args[1];
            Job job = Job.getInstance(conf, tablename);
            Scan scan = new Scan();
            scan.setCaching(300);
            scan.setCacheBlocks(false); // Never enable block caching in a MapReduce job
            // Initialize the mapper
            TableMapReduceUtil.initTableMapperJob(tablename, scan, MyMapper.class, Text.class, IntWritable.class, job);
            // Initialize the reducer
            TableMapReduceUtil.initTableReducerJob(
                    targetTable,          // output table
                    MyTableReducer.class, // reducer class
                    job);
            job.setNumReduceTasks(1);
            return job;
        }
}相关文章:

头歌:共享单车之数据分析

第1关 统计共享单车每天的平均使用时间 package com.educoder.bigData.sharedbicycle;import java.io.IOException; import java.text.ParseException; import java.util.Collection; import java.util.Date; import java.util.HashMap; import java.util.Locale; import java…...

MySQL的数据类型和细节

1.整型 数值类型字节描述TINYINT[UNSIGNED]1很小的整数,默认有符号 [-128,127]/[0,255]SMALLINT[UNSIGNED]2较小的整数,默认有符号 [-32768,32767]/[0,65535]MEDIUMINT[UNSIGNED]3中等的整数,默认有符号 [-8388608,8388607]/[0,16777215]…...

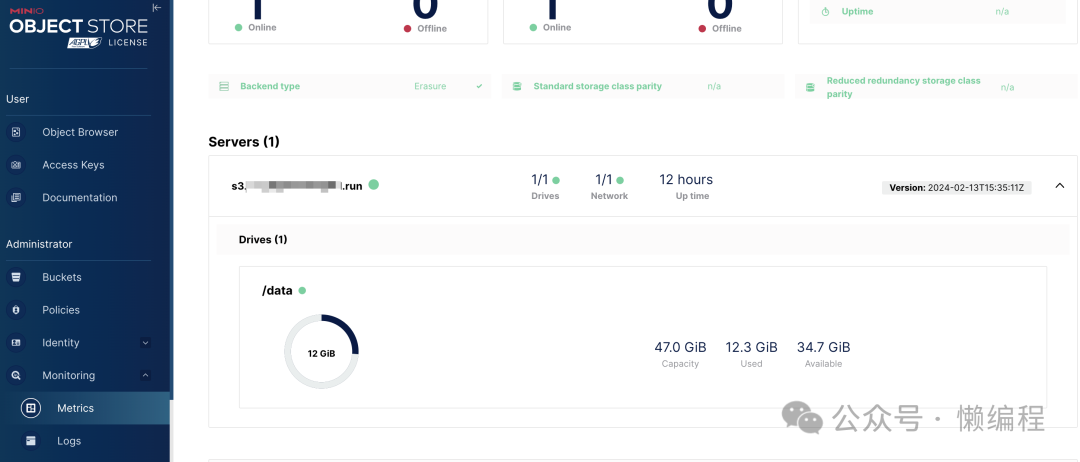

自建AWS S3存储服务

unsetunset前言unsetunset AWS S3(Amazon S3,全名为亚马逊简易存储服务),是亚马逊公司利用其亚马逊网络服务系统所提供的网络在线存储服务。我常用的很多SaaS服务中提供的文件存储功能,底层也都是AWS S3,比…...

『论文阅读|研究用于视障人士户外障碍物检测的 YOLO 模型』

研究用于视障人士户外障碍物检测的 YOLO 模型 摘要1 引言2 相关工作2.1 障碍物检测的相关工作2.2 物体检测和其他基于CNN的模型 3 问题的提出4 方法4.1 YOLO4.2 YOLOv54.3 YOLOv64.4 YOLOv74.5 YOLOv84.6 YOLO-NAS 5 实验和结果5.1 数据集和预处理5.2 训练和实现细节5.3 性能指…...

LeetCode--1445. 苹果和桔子

文章目录 1 题目描述2 测试用例3 解题思路 1 题目描述 表: Sales ------------------------ | Column Name | Type | ------------------------ | sale_date | date | | fruit | enum | | sold_num | int | ------------------------(sale…...

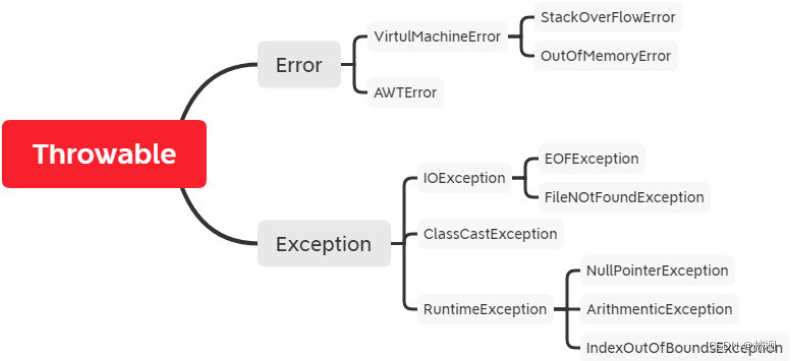

Java基础知识

一、标识符规范 标识符必须以字母(汉字)、下划线、美元符号开头,其他部分可以是字母、下划线、美元符号,数字的任意组合。谨记不能以数字开头。java使用unicode字符集,汉字也可以用该字符集表示。因此汉字也可以用作变量名。 关键字不能用作…...

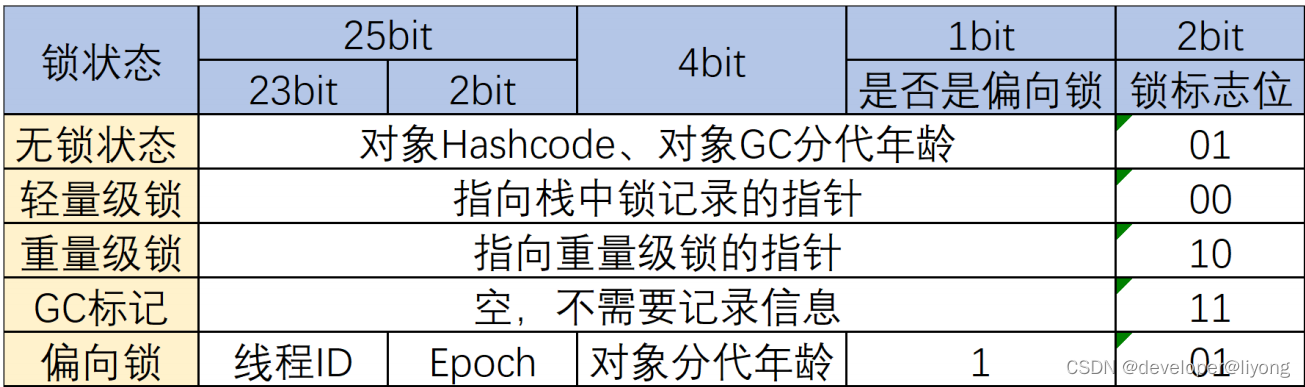

并发编程-Synchronized

什么是Synchronized synchronized是Java提供的一个关键字,Synchronized可以保证并发程序的原子性,可见性,有序性。 我们会把synchronized称为重量级锁。主要原因,是因为JDK1.6之前,synchronized是一个重量级锁相比于J…...

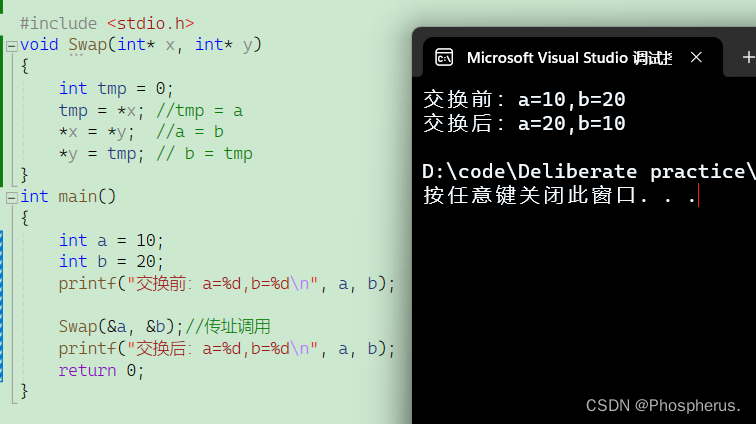

C语言——从头开始——深入理解指针(1)

一.内存和地址 我们知道计算上CPU(中央处理器)在处理数据的时候,是通过地址总线把需要的数据从内存中读取的,后通过数据总线把处理后的数据放回内存中。如下图所示: 计算机把内存划分为⼀个个的内存单元,每…...

微信小程序-绑定数据并在后台获取它

如图 遍历列表的过程中需要绑定数据,点击时候需要绑定数据 这里是源代码 <block wx:for"{{productList}}" wx:key"productId"><view class"product-item" bindtap"handleProductClick" data-product-id"{{i…...

【删除数组用delete和Vue.delete有什么区别】

删除数组用delete和Vue.delete有什么区别? 在 JavaScript 中,delete 和 Vue.js 中的 Vue.delete 是两个完全不同的概念,它们在删除数组元素时的作用和效果也有所不同。 JavaScript 中的 delete 关键字: 在原生 JavaScript 中&a…...

)

【QT+QGIS跨平台编译】之四十二:【QWT+Qt跨平台编译】(一套代码、一套框架,跨平台编译)

文章目录 一、QWT介绍二、QWT下载三、文件分析四、pro文件五、编译实践5.1 Windows下编译4.2 Linux下编译5.3 MacOS下编译一、QWT介绍 QWT是一个基于Qt框架的开源C++库,用于创建交互式的图形用户界面。它提供了丰富的绘图和交互功能,可以用于快速开发图形化应用程序。 QWT包…...

yum方式快速安装mysql

问题描述 使用yum的方式简单安装了一下mysql,对过程进行简单记录。 步骤 ①安装wget和vim sudo yum -y install wget vim②下载mysql的rpm包 sudo wget https://dev.mysql.com/get/mysql80-community-release-el7-3.noarch.rpm③升级和更新rpm包 sudo rpm -Uv…...

基于Java的家政预约管理平台

功能介绍 平台采用B/S结构,后端采用主流的Springboot框架进行开发,前端采用主流的Vue.js进行开发。 整个平台包括前台和后台两个部分。 前台功能包括:首页、家政详情、家政入驻、用户中心模块。后台功能包括:家政管理、分类管理…...

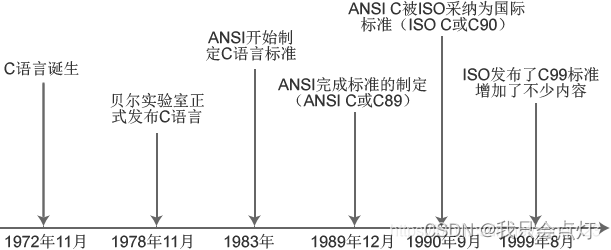

C语言前世今生

C语言前世今生 C语言的发展历史 C语言于1972年11月问世,1978年美国电话电报公司(AT&T)贝尔实验室正式发布C语言,1983年由美国国家标准局(American National Standards Institute,简称ANSI)…...

android aidl进程间通信封装通用实现-用法说明

接上一篇:android aidl进程间通信封装通用实现-CSDN博客 该aar包的使用还是比较方便的 一先看客户端 1 初始化 JsonProtocolManager.getInstance().init(mContext, "com.autoaidl.jsonprotocol"); //客户端监听事件实现 JsonProtocolManager.getInsta…...

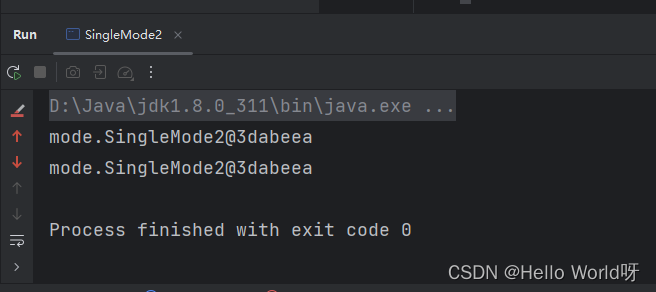

【Java中23种设计模式-单例模式2--懒汉式线程不安全】

加油,新时代打工人! 今天,重新回顾一下设计模式,我们一起变强,变秃。哈哈。 23种设计模式定义介绍 Java中23种设计模式-单例模式 package mode;/*** author wenhao* date 2024/02/19 09:16* description 单例模式--懒…...

【后端高频面试题--Linux篇】

🚀 作者 :“码上有前” 🚀 文章简介 :后端高频面试题 🚀 欢迎小伙伴们 点赞👍、收藏⭐、留言💬 后端高频面试题--Linux篇 往期精彩内容Windows和Linux的区别?Unix和Linux有什么区别…...

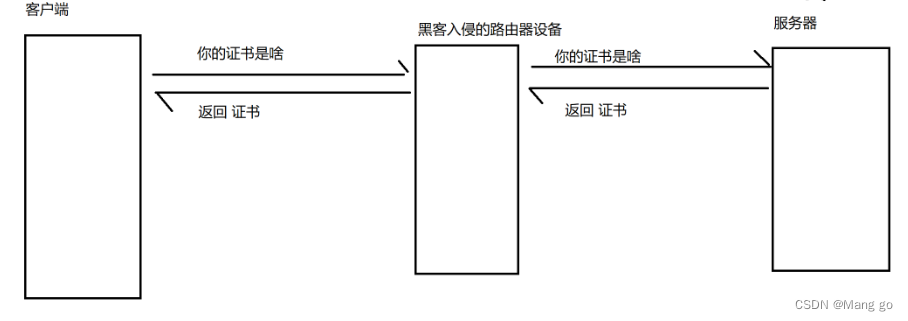

网络原理HTTP/HTTPS(2)

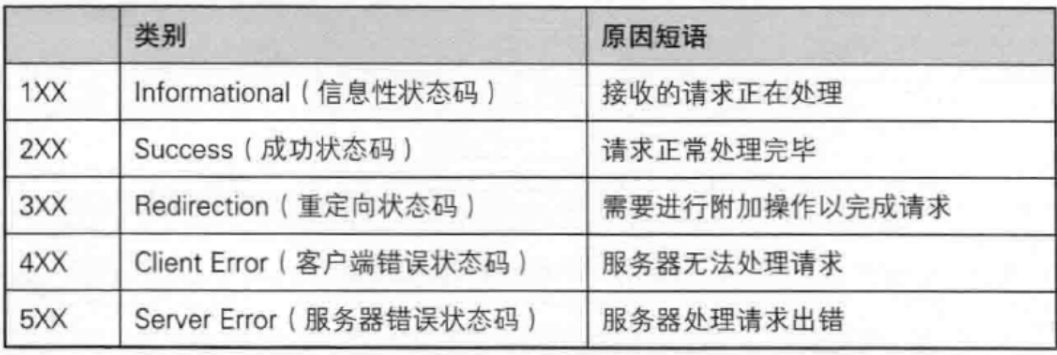

文章目录 HTTP响应状态码200 OK3xx 表示重定向4xx5xx状态码小结 HTTPSHTTPS的加密对称加密非对称加密 HTTP响应状态码 状态码表⽰访问⼀个⻚⾯的结果.(是访问成功,还是失败,还是其他的⼀些情况…).以下为常见的状态码. 200 OK 这是⼀个最常⻅的状态码,表⽰访问成功 2xx都表示…...

【Java中23种设计模式-单例模式2--懒汉式2线程安全】

加油,新时代打工人! 简单粗暴,学习Java设计模式。 23种设计模式定义介绍 Java中23种设计模式-单例模式 Java中23种设计模式-单例模式2–懒汉式线程不安全 package mode;/*** author wenhao* date 2024/02/19 09:38* description 单例模式…...

由LeetCode541引发的java数组和字符串的转换问题

起因是今天在刷下面这个力扣题时的一个报错 541. 反转字符串 II - 力扣(LeetCode) 这个题目本身是比较简单的,所以就不讲具体思路了。问题出在最后方法的返回值处,要将字符数组转化为字符串,第一次写的时候也没思考直…...

[特殊字符] 智能合约中的数据是如何在区块链中保持一致的?

🧠 智能合约中的数据是如何在区块链中保持一致的? 为什么所有区块链节点都能得出相同结果?合约调用这么复杂,状态真能保持一致吗?本篇带你从底层视角理解“状态一致性”的真相。 一、智能合约的数据存储在哪里…...

【JavaEE】-- HTTP

1. HTTP是什么? HTTP(全称为"超文本传输协议")是一种应用非常广泛的应用层协议,HTTP是基于TCP协议的一种应用层协议。 应用层协议:是计算机网络协议栈中最高层的协议,它定义了运行在不同主机上…...

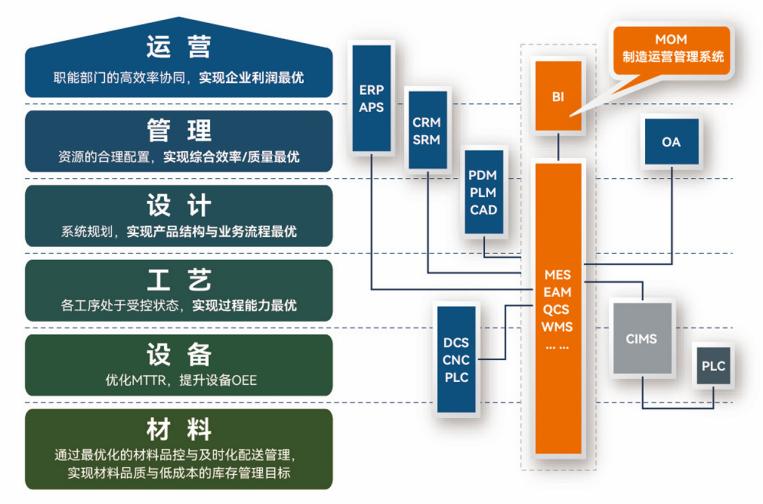

盘古信息PCB行业解决方案:以全域场景重构,激活智造新未来

一、破局:PCB行业的时代之问 在数字经济蓬勃发展的浪潮中,PCB(印制电路板)作为 “电子产品之母”,其重要性愈发凸显。随着 5G、人工智能等新兴技术的加速渗透,PCB行业面临着前所未有的挑战与机遇。产品迭代…...

【磁盘】每天掌握一个Linux命令 - iostat

目录 【磁盘】每天掌握一个Linux命令 - iostat工具概述安装方式核心功能基础用法进阶操作实战案例面试题场景生产场景 注意事项 【磁盘】每天掌握一个Linux命令 - iostat 工具概述 iostat(I/O Statistics)是Linux系统下用于监视系统输入输出设备和CPU使…...

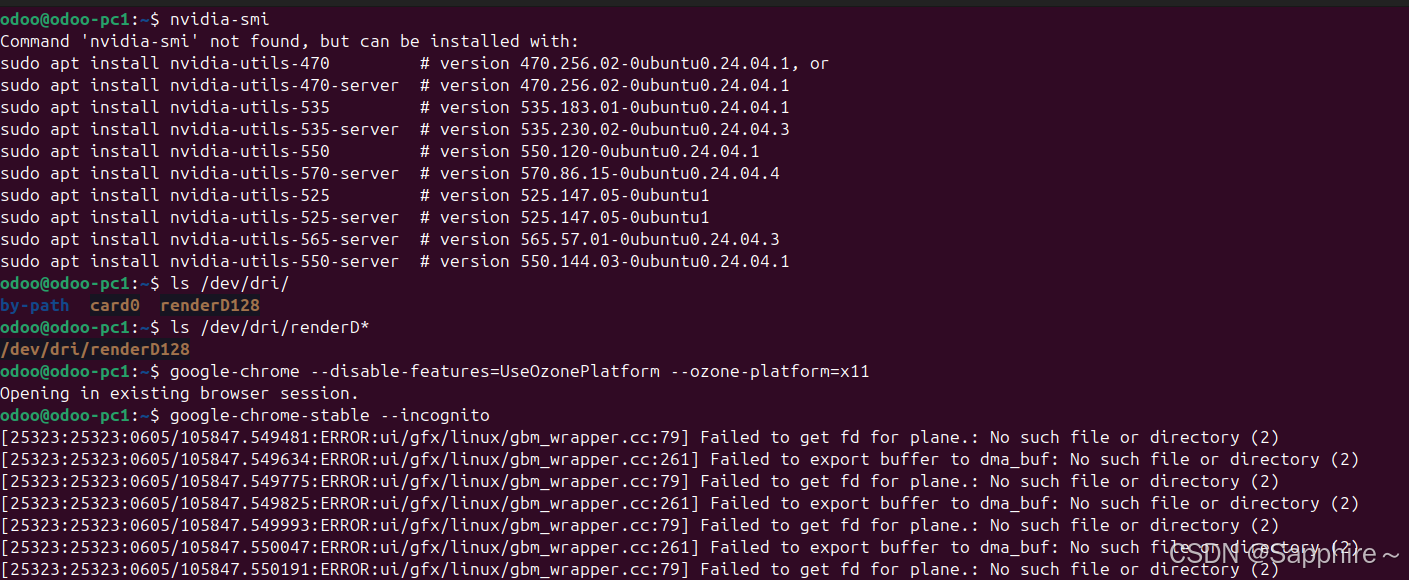

Linux-07 ubuntu 的 chrome 启动不了

文章目录 问题原因解决步骤一、卸载旧版chrome二、重新安装chorme三、启动不了,报错如下四、启动不了,解决如下 总结 问题原因 在应用中可以看到chrome,但是打不开(说明:原来的ubuntu系统出问题了,这个是备用的硬盘&a…...

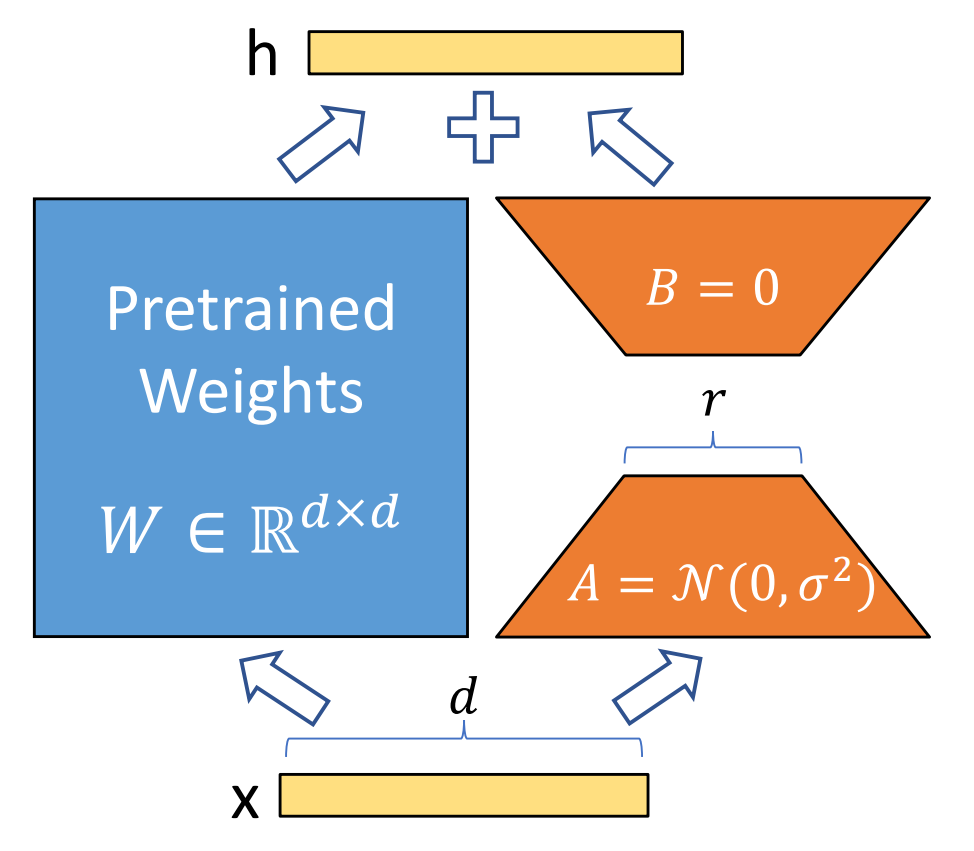

算法岗面试经验分享-大模型篇

文章目录 A 基础语言模型A.1 TransformerA.2 Bert B 大语言模型结构B.1 GPTB.2 LLamaB.3 ChatGLMB.4 Qwen C 大语言模型微调C.1 Fine-tuningC.2 Adapter-tuningC.3 Prefix-tuningC.4 P-tuningC.5 LoRA A 基础语言模型 A.1 Transformer (1)资源 论文&a…...

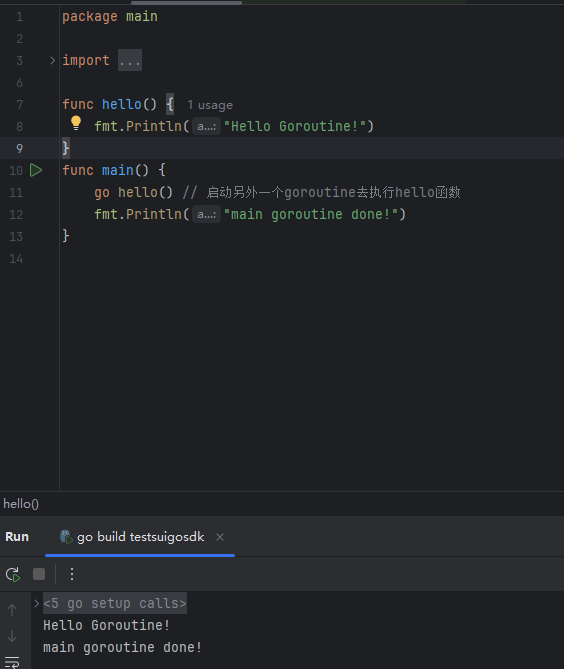

GO协程(Goroutine)问题总结

在使用Go语言来编写代码时,遇到的一些问题总结一下 [参考文档]:https://www.topgoer.com/%E5%B9%B6%E5%8F%91%E7%BC%96%E7%A8%8B/goroutine.html 1. main()函数默认的Goroutine 场景再现: 今天在看到这个教程的时候,在自己的电…...

6️⃣Go 语言中的哈希、加密与序列化:通往区块链世界的钥匙

Go 语言中的哈希、加密与序列化:通往区块链世界的钥匙 一、前言:离区块链还有多远? 区块链听起来可能遥不可及,似乎是只有密码学专家和资深工程师才能涉足的领域。但事实上,构建一个区块链的核心并不复杂,尤其当你已经掌握了一门系统编程语言,比如 Go。 要真正理解区…...

前端高频面试题2:浏览器/计算机网络

本专栏相关链接 前端高频面试题1:HTML/CSS 前端高频面试题2:浏览器/计算机网络 前端高频面试题3:JavaScript 1.什么是强缓存、协商缓存? 强缓存: 当浏览器请求资源时,首先检查本地缓存是否命中。如果命…...

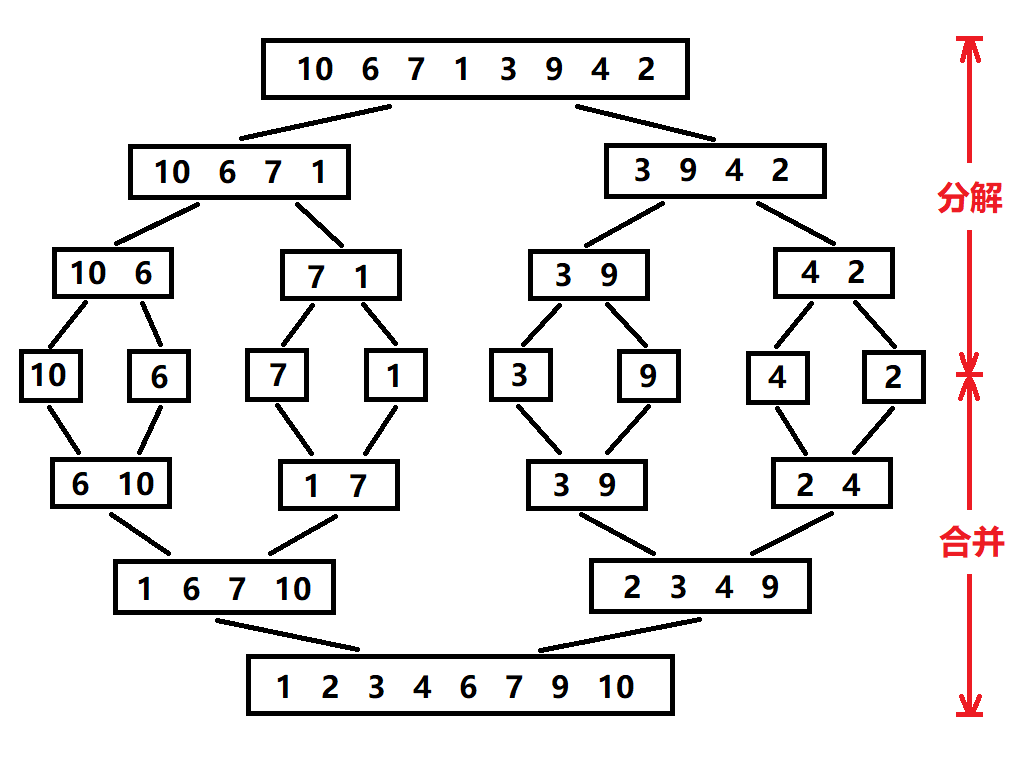

归并排序:分治思想的高效排序

目录 基本原理 流程图解 实现方法 递归实现 非递归实现 演示过程 时间复杂度 基本原理 归并排序(Merge Sort)是一种基于分治思想的排序算法,由约翰冯诺伊曼在1945年提出。其核心思想包括: 分割(Divide):将待排序数组递归地分成两个子…...