DataX,MongoDB数据导入hdfs与mysql

- 【尚硅谷】Alibaba开源数据同步工具DataX技术教程

- 【尚硅谷】Alibaba开源数据同步工具DataX技术教程_哔哩哔哩_bilibili

目录

1、MongoDB

1.1、MongoDB介绍

1.2、MongoDB基本概念解析

1.3、MongoDB中的数据存储结构

1.4、MongoDB启动服务

1.5、MongoDB小案例

2、DataX导入导出案例

2.1、读取MongoDB的数据导入到HDFS

2.1.1、mongodb2hdfs.json

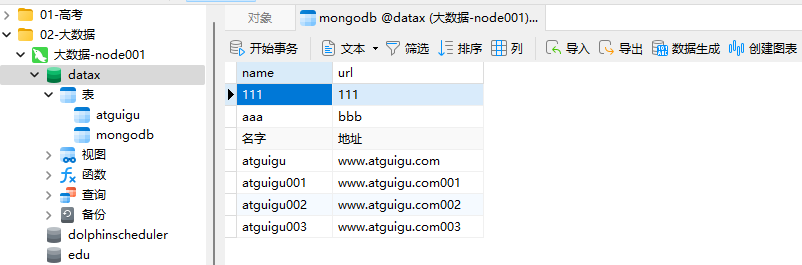

2.2.2、mongodb数据

2.2.3、运行命令

2.2、读取 MongoDB 的数据导入 MySQL

2.2.1、mongodb2mysql.json

2.2.2、MySQL数据

2.2.3、运行命令

1、MongoDB

1.1、MongoDB介绍

- MongoDB:应用程序数据平台 | MongoDB

1.2、MongoDB基本概念解析

1.3、MongoDB中的数据存储结构

通过下图实例,我们也可以更直观的了解 Mongo 中的一些概念:

1.4、MongoDB启动服务

1、启动 MongoDB 服务

[atguigu@node001 mongodb-5.0.2]$ pwd

/opt/module/mongoDB/mongodb-5.0.2

[atguigu@node001 mongodb-5.0.2]$ bin/mongod --bind_ip 0.0.0.02、进入 shell 页面

[atguigu@node001 bin]$ pwd

/opt/module/mongoDB/mongodb-5.0.2/bin

[atguigu@node001 bin]$ /opt/module/mongoDB/mongodb-5.0.2/bin/mongo

5)启动 MongoDB 服务

[atguigu@hadoop102 mongodb]$ bin/mongod

6)进入 shell 页面

[atguigu@hadoop102 mongodb]$ bin/mongo[atguigu@node001 mongodb-5.0.2]$ pwd

/opt/module/mongoDB/mongodb-5.0.2

[atguigu@node001 mongodb-5.0.2]$ sudo mkdir -p /data/db/

[atguigu@node001 mongodb-5.0.2]$ sudo mkdir -p /opt/module/mongoDB/mongodb-5.0.2/data/db/

[atguigu@node001 mongodb-5.0.2]$ sudo chmod 777 -R /data/db/

[atguigu@node001 mongodb-5.0.2]$ sudo chmod 777 -R /opt/module/mongoDB/mongodb-5.0.2/data/db/

[atguigu@node001 mongodb-5.0.2]$ bin/mongod[atguigu@node001 bin]$ /opt/module/mongoDB/mongodb-5.0.2/bin/mongo

MongoDB shell version v5.0.2

connecting to: mongodb://127.0.0.1:27017/?compressors=disabled&gssapiServiceName=mongodb

Implicit session: session { "id" : UUID("b9e6b776-c67d-4a8d-8e31-c0350850065e") }

MongoDB server version: 5.0.2

================

Warning: the "mongo" shell has been superseded by "mongosh",

which delivers improved usability and compatibility.The "mongo" shell has been deprecated and will be removed in

an upcoming release.

We recommend you begin using "mongosh".

For installation instructions, see

https://docs.mongodb.com/mongodb-shell/install/

================

Welcome to the MongoDB shell.

For interactive help, type "help".

For more comprehensive documentation, seehttps://docs.mongodb.com/

Questions? Try the MongoDB Developer Community Forumshttps://community.mongodb.com

---

The server generated these startup warnings when booting: 2024-04-09T15:39:39.728+08:00: Using the XFS filesystem is strongly recommended with the WiredTiger storage engine. See http://dochub.mongodb.org/core/prodnotes-filesystem2024-04-09T15:39:40.744+08:00: Access control is not enabled for the database. Read and write access to data and configuration is unrestricted2024-04-09T15:39:40.744+08:00: This server is bound to localhost. Remote systems will be unable to connect to this server. Start the server with --bind_ip <address> to specify which IP addresses it should serve responses from, or with --bind_ip_all to bind to all interfaces. If this behavior is desired, start the server with --bind_ip 127.0.0.1 to disable this warning2024-04-09T15:39:40.748+08:00: /sys/kernel/mm/transparent_hugepage/enabled is 'always'. We suggest setting it to 'never'2024-04-09T15:39:40.749+08:00: /sys/kernel/mm/transparent_hugepage/defrag is 'always'. We suggest setting it to 'never'

---

---Enable MongoDB's free cloud-based monitoring service, which will then receive and displaymetrics about your deployment (disk utilization, CPU, operation statistics, etc).The monitoring data will be available on a MongoDB website with a unique URL accessible to youand anyone you share the URL with. MongoDB may use this information to make productimprovements and to suggest MongoDB products and deployment options to you.To enable free monitoring, run the following command: db.enableFreeMonitoring()To permanently disable this reminder, run the following command: db.disableFreeMonitoring()

---

>

1.5、MongoDB小案例

> show databases;

admin 0.000GB

config 0.000GB

local 0.000GB

> show tables;

> db

test

> use test

switched to db test

> db.create

db.createCollection( db.createRole( db.createUser( db.createView(

> db.createCollection("atguigu")

{ "ok" : 1 }

> show tables;

atguigu

> db.atguigu.insert({"name":"atguigu","url":"www.atguigu.com"})

WriteResult({ "nInserted" : 1 })

> db.atguigu.find()

{ "_id" : ObjectId("6614f3467fc519a5008ef01a"), "name" : "atguigu", "url" : "www.atguigu.com" }

> db.createCollection("mycol",{ capped : true,autoIndexId : true,size : 6142800, max :

... 1000})

{"note" : "The autoIndexId option is deprecated and will be removed in a future release","ok" : 1

}

> show tables;

atguigu

mycol

> db.mycol2.insert({"name":"atguigu"})

WriteResult({ "nInserted" : 1 })

> show collections

atguigu

mycol

mycol2

> 2、DataX导入导出案例

2.1、读取MongoDB的数据导入到HDFS

2.1.1、mongodb2hdfs.json

[atguigu@node001 ~]$ cd /opt/module/datax

[atguigu@node001 datax]$ bin/datax.py -r mongodbreader -w hdfswriterDataX (DATAX-OPENSOURCE-3.0), From Alibaba !

Copyright (C) 2010-2017, Alibaba Group. All Rights Reserved.Please refer to the mongodbreader document:https://github.com/alibaba/DataX/blob/master/mongodbreader/doc/mongodbreader.md Please refer to the hdfswriter document:https://github.com/alibaba/DataX/blob/master/hdfswriter/doc/hdfswriter.md Please save the following configuration as a json file and usepython {DATAX_HOME}/bin/datax.py {JSON_FILE_NAME}.json

to run the job.{"job": {"content": [{"reader": {"name": "mongodbreader", "parameter": {"address": [], "collectionName": "", "column": [], "dbName": "", "userName": "", "userPassword": ""}}, "writer": {"name": "hdfswriter", "parameter": {"column": [], "compress": "", "defaultFS": "", "fieldDelimiter": "", "fileName": "", "fileType": "", "path": "", "writeMode": ""}}}], "setting": {"speed": {"channel": ""}}}

}

[atguigu@node001 datax]$ {"job": {"content": [{"reader": {"name": "mongodbreader","parameter": {"address": ["node001:27017"],"collectionName": "atguigu","column": [{"name": "name","type": "string"},{"name": "url","type": "string"}],"dbName": "test","userName": "","userPassword": ""}},"writer": {"name": "hdfswriter","parameter": {"column": [{"name": "name","type": "string"},{"name": "url","type": "string"}],"compress": "","defaultFS": "hdfs://node001:8020","fieldDelimiter": "\t","fileName": "mongoDB001.txt","fileType": "text","path": "/mongoDB","writeMode": "append"}}}],"setting": {"speed": {"channel": "1"}}}

}2.2.2、mongodb数据

> db.atguigu.find()

{ "_id" : ObjectId("6614f3467fc519a5008ef01a"), "name" : "atguigu", "url" : "www.atguigu.com" }

> db.atguigu.insert({"name":"atguigu001","url":"www.atguigu.com001"})

WriteResult({ "nInserted" : 1 })

> db.atguigu.insert({"name":"atguigu002","url":"www.atguigu.com002"})

WriteResult({ "nInserted" : 1 })

> db.atguigu.insert({"name":"atguigu003","url":"www.atguigu.com003"})

WriteResult({ "nInserted" : 1 })

> db.atguigu.find()

{ "_id" : ObjectId("6614f3467fc519a5008ef01a"), "name" : "atguigu", "url" : "www.atguigu.com" }

{ "_id" : ObjectId("6614fb187fc519a5008ef01c"), "name" : "atguigu001", "url" : "www.atguigu.com001" }

{ "_id" : ObjectId("6614fb207fc519a5008ef01d"), "name" : "atguigu002", "url" : "www.atguigu.com002" }

{ "_id" : ObjectId("6614fb297fc519a5008ef01e"), "name" : "atguigu003", "url" : "www.atguigu.com003" }

> 2.2.3、运行命令

[atguigu@node001 mongodb-5.0.2]$ bin/mongod --bind_ip 0.0.0.0

{"t":{"$date":"2024-04-09T16:11:21.173+08:00"},"s":"I", "c":"NETWORK", "id":4915701, "ctx":"-","msg":"Initialized wire specification","attr":{"spec":{"incomingExternalClient":{"minWireVersion":0,"maxWireVersion":13},"incomingInternalClient":{"minWireVersion":0,"maxWireVersion":13},"outgoing":{"minWireVersion":0,"maxWireVersion":13},"isInternalClient":true}}}[atguigu@node001 datax]$ bin/datax.py job/mongodb/mongdb2hdfs.json DataX (DATAX-OPENSOURCE-3.0), From Alibaba !

Copyright (C) 2010-2017, Alibaba Group. All Rights Reserved.2024-04-09 16:13:00.914 [main] INFO VMInfo - VMInfo# operatingSystem class => sun.management.OperatingSystemImpl

2024-04-09 16:13:00.930 [main] INFO Engine - the machine info => osInfo: Red Hat, Inc. 1.8 25.372-b07jvmInfo: Linux amd64 3.10.0-862.el7.x86_64cpu num: 4totalPhysicalMemory: -0.00GfreePhysicalMemory: -0.00GmaxFileDescriptorCount: -1currentOpenFileDescriptorCount: -1GC Names [PS MarkSweep, PS Scavenge]MEMORY_NAME | allocation_size | init_size PS Eden Space | 256.00MB | 256.00MB Code Cache | 240.00MB | 2.44MB Compressed Class Space | 1,024.00MB | 0.00MB PS Survivor Space | 42.50MB | 42.50MB PS Old Gen | 683.00MB | 683.00MB Metaspace | -0.00MB | 0.00MB 2024-04-09 16:13:00.959 [main] INFO Engine -

{"content":[{"reader":{"name":"mongodbreader","parameter":{"address":["node001:27017"],"collectionName":"atguigu","column":[{"name":"name","type":"string"},{"name":"url","type":"string"}],"dbName":"test","userName":"","userPassword":""}},"writer":{"name":"hdfswriter","parameter":{"column":[{"name":"name","type":"string"},{"name":"url","type":"string"}],"compress":"","defaultFS":"hdfs://node001:8020","fieldDelimiter":"\t","fileName":"mongo.txt","fileType":"text","path":"/","writeMode":"append"}}}],"setting":{"speed":{"channel":"1"}}

}2024-04-09 16:13:00.986 [main] WARN Engine - prioriy set to 0, because NumberFormatException, the value is: null

2024-04-09 16:13:00.990 [main] INFO PerfTrace - PerfTrace traceId=job_-1, isEnable=false, priority=0

2024-04-09 16:13:00.990 [main] INFO JobContainer - DataX jobContainer starts job.

2024-04-09 16:13:00.993 [main] INFO JobContainer - Set jobId = 0

2024-04-09 16:13:01.175 [job-0] INFO cluster - Cluster created with settings {hosts=[node001:27017], mode=MULTIPLE, requiredClusterType=UNKNOWN, serverSelectionTimeout='30000 ms', maxWaitQueueSize=500}

2024-04-09 16:13:01.175 [job-0] INFO cluster - Adding discovered server node001:27017 to client view of cluster

2024-04-09 16:13:01.405 [cluster-ClusterId{value='6614f88d21b10d1b9122f208', description='null'}-node001:27017] INFO connection - Opened connection [connectionId{localValue:1, serverValue:2}] to node001:27017

2024-04-09 16:13:01.408 [cluster-ClusterId{value='6614f88d21b10d1b9122f208', description='null'}-node001:27017] INFO cluster - Monitor thread successfully connected to server with description ServerDescription{address=node001:27017, type=STANDALONE, state=CONNECTED, ok=true, version=ServerVersion{versionList=[5, 0, 2]}, minWireVersion=0, maxWireVersion=13, maxDocumentSize=16777216, roundTripTimeNanos=1825826}

2024-04-09 16:13:01.410 [cluster-ClusterId{value='6614f88d21b10d1b9122f208', description='null'}-node001:27017] INFO cluster - Discovered cluster type of STANDALONE

四月 09, 2024 4:13:02 下午 org.apache.hadoop.util.NativeCodeLoader <clinit>

警告: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2024-04-09 16:13:02.883 [job-0] INFO JobContainer - jobContainer starts to do prepare ...

2024-04-09 16:13:02.886 [job-0] INFO JobContainer - DataX Reader.Job [mongodbreader] do prepare work .

2024-04-09 16:13:02.887 [job-0] INFO JobContainer - DataX Writer.Job [hdfswriter] do prepare work .

2024-04-09 16:13:03.426 [job-0] INFO HdfsWriter$Job - 由于您配置了writeMode append, 写入前不做清理工作, [/] 目录下写入相应文件名前缀 [mongo.txt] 的文件

2024-04-09 16:13:03.429 [job-0] INFO JobContainer - jobContainer starts to do split ...

2024-04-09 16:13:03.430 [job-0] INFO JobContainer - Job set Channel-Number to 1 channels.

2024-04-09 16:13:03.565 [job-0] INFO connection - Opened connection [connectionId{localValue:2, serverValue:3}] to node001:27017

2024-04-09 16:13:03.591 [job-0] INFO JobContainer - DataX Reader.Job [mongodbreader] splits to [1] tasks.

2024-04-09 16:13:03.592 [job-0] INFO HdfsWriter$Job - begin do split...

2024-04-09 16:13:03.628 [job-0] INFO HdfsWriter$Job - splited write file name:[hdfs://node001:8020/__8ea8878b_9c2e_4b6a_95b7_dcacb6f05d49/mongo.txt__355376ce_ce5d_40be_911e_e884dfac15bd]

2024-04-09 16:13:03.628 [job-0] INFO HdfsWriter$Job - end do split.

2024-04-09 16:13:03.629 [job-0] INFO JobContainer - DataX Writer.Job [hdfswriter] splits to [1] tasks.

2024-04-09 16:13:03.709 [job-0] INFO JobContainer - jobContainer starts to do schedule ...

2024-04-09 16:13:03.731 [job-0] INFO JobContainer - Scheduler starts [1] taskGroups.

2024-04-09 16:13:03.741 [job-0] INFO JobContainer - Running by standalone Mode.

2024-04-09 16:13:03.774 [taskGroup-0] INFO TaskGroupContainer - taskGroupId=[0] start [1] channels for [1] tasks.

2024-04-09 16:13:03.823 [taskGroup-0] INFO Channel - Channel set byte_speed_limit to -1, No bps activated.

2024-04-09 16:13:03.824 [taskGroup-0] INFO Channel - Channel set record_speed_limit to -1, No tps activated.

2024-04-09 16:13:03.942 [0-0-0-reader] INFO cluster - Cluster created with settings {hosts=[node001:27017], mode=MULTIPLE, requiredClusterType=UNKNOWN, serverSelectionTimeout='30000 ms', maxWaitQueueSize=500}

2024-04-09 16:13:03.942 [0-0-0-reader] INFO cluster - Adding discovered server node001:27017 to client view of cluster

2024-04-09 16:13:03.943 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[0] attemptCount[1] is started

2024-04-09 16:13:03.986 [cluster-ClusterId{value='6614f88f21b10d1b9122f209', description='null'}-node001:27017] INFO connection - Opened connection [connectionId{localValue:3, serverValue:4}] to node001:27017

2024-04-09 16:13:03.988 [cluster-ClusterId{value='6614f88f21b10d1b9122f209', description='null'}-node001:27017] INFO cluster - Monitor thread successfully connected to server with description ServerDescription{address=node001:27017, type=STANDALONE, state=CONNECTED, ok=true, version=ServerVersion{versionList=[5, 0, 2]}, minWireVersion=0, maxWireVersion=13, maxDocumentSize=16777216, roundTripTimeNanos=1293606}

2024-04-09 16:13:03.988 [cluster-ClusterId{value='6614f88f21b10d1b9122f209', description='null'}-node001:27017] INFO cluster - Discovered cluster type of STANDALONE

2024-04-09 16:13:04.029 [0-0-0-reader] INFO connection - Opened connection [connectionId{localValue:4, serverValue:5}] to node001:27017

2024-04-09 16:13:04.061 [0-0-0-writer] INFO HdfsWriter$Task - begin do write...

2024-04-09 16:13:04.062 [0-0-0-writer] INFO HdfsWriter$Task - write to file : [hdfs://node001:8020/__8ea8878b_9c2e_4b6a_95b7_dcacb6f05d49/mongo.txt__355376ce_ce5d_40be_911e_e884dfac15bd]

2024-04-09 16:13:08.157 [0-0-0-writer] INFO HdfsWriter$Task - end do write

2024-04-09 16:13:08.185 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[0] is successed, used[4268]ms

2024-04-09 16:13:08.185 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] completed it's tasks.

2024-04-09 16:13:13.866 [job-0] INFO StandAloneJobContainerCommunicator - Total 1 records, 22 bytes | Speed 2B/s, 0 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 0.000s | All Task WaitReaderTime 0.000s | Percentage 100.00%

2024-04-09 16:13:13.866 [job-0] INFO AbstractScheduler - Scheduler accomplished all tasks.

2024-04-09 16:13:13.866 [job-0] INFO JobContainer - DataX Writer.Job [hdfswriter] do post work.

2024-04-09 16:13:13.867 [job-0] INFO HdfsWriter$Job - start rename file [hdfs://node001:8020/__8ea8878b_9c2e_4b6a_95b7_dcacb6f05d49/mongo.txt__355376ce_ce5d_40be_911e_e884dfac15bd] to file [hdfs://node001:8020/mongo.txt__355376ce_ce5d_40be_911e_e884dfac15bd].

2024-04-09 16:13:13.964 [job-0] INFO HdfsWriter$Job - finish rename file [hdfs://node001:8020/__8ea8878b_9c2e_4b6a_95b7_dcacb6f05d49/mongo.txt__355376ce_ce5d_40be_911e_e884dfac15bd] to file [hdfs://node001:8020/mongo.txt__355376ce_ce5d_40be_911e_e884dfac15bd].

2024-04-09 16:13:13.965 [job-0] INFO HdfsWriter$Job - start delete tmp dir [hdfs://node001:8020/__8ea8878b_9c2e_4b6a_95b7_dcacb6f05d49] .

2024-04-09 16:13:13.995 [job-0] INFO HdfsWriter$Job - finish delete tmp dir [hdfs://node001:8020/__8ea8878b_9c2e_4b6a_95b7_dcacb6f05d49] .

2024-04-09 16:13:13.996 [job-0] INFO JobContainer - DataX Reader.Job [mongodbreader] do post work.

2024-04-09 16:13:13.996 [job-0] INFO JobContainer - DataX jobId [0] completed successfully.

2024-04-09 16:13:13.997 [job-0] INFO HookInvoker - No hook invoked, because base dir not exists or is a file: /opt/module/datax/hook

2024-04-09 16:13:14.102 [job-0] INFO JobContainer - [total cpu info] => averageCpu | maxDeltaCpu | minDeltaCpu -1.00% | -1.00% | -1.00%[total gc info] => NAME | totalGCCount | maxDeltaGCCount | minDeltaGCCount | totalGCTime | maxDeltaGCTime | minDeltaGCTime PS MarkSweep | 1 | 1 | 1 | 0.042s | 0.042s | 0.042s PS Scavenge | 1 | 1 | 1 | 0.020s | 0.020s | 0.020s 2024-04-09 16:13:14.102 [job-0] INFO JobContainer - PerfTrace not enable!

2024-04-09 16:13:14.103 [job-0] INFO StandAloneJobContainerCommunicator - Total 1 records, 22 bytes | Speed 2B/s, 0 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 0.000s | All Task WaitReaderTime 0.000s | Percentage 100.00%

2024-04-09 16:13:14.111 [job-0] INFO JobContainer -

任务启动时刻 : 2024-04-09 16:13:00

任务结束时刻 : 2024-04-09 16:13:14

任务总计耗时 : 13s

任务平均流量 : 2B/s

记录写入速度 : 0rec/s

读出记录总数 : 1

读写失败总数 : 0[atguigu@node001 datax]$ 2.2、读取 MongoDB 的数据导入 MySQL

2.2.1、mongodb2mysql.json

[atguigu@node001 datax]$ bin/datax.py -r mongodbreader -w mysqlwriterDataX (DATAX-OPENSOURCE-3.0), From Alibaba !

Copyright (C) 2010-2017, Alibaba Group. All Rights Reserved.Please refer to the mongodbreader document:https://github.com/alibaba/DataX/blob/master/mongodbreader/doc/mongodbreader.md Please refer to the mysqlwriter document:https://github.com/alibaba/DataX/blob/master/mysqlwriter/doc/mysqlwriter.md Please save the following configuration as a json file and usepython {DATAX_HOME}/bin/datax.py {JSON_FILE_NAME}.json

to run the job.{"job": {"content": [{"reader": {"name": "mongodbreader", "parameter": {"address": [], "collectionName": "", "column": [], "dbName": "", "userName": "", "userPassword": ""}}, "writer": {"name": "mysqlwriter", "parameter": {"column": [], "connection": [{"jdbcUrl": "", "table": []}], "password": "", "preSql": [], "session": [], "username": "", "writeMode": ""}}}], "setting": {"speed": {"channel": ""}}}

}

[atguigu@node001 datax]$ {"job": {"content": [{"reader": {"name": "mongodbreader","parameter": {"address": ["node001:27017"],"collectionName": "atguigu","column": [{"name": "name","type": "string"},{"name": "url","type": "string"}],"dbName": "test","userName": "","userPassword": ""}},"writer": {"name": "mysqlwriter","parameter": {"column": ["*"],"connection": [{"jdbcUrl": "jdbc:mysql://node001:3306/datax","table": ["mongodb"]}],"username": "root","password": "123456","preSql": [],"session": [],"writeMode": "insert"}}}],"setting": {"speed": {"channel": "1"}}}

}2.2.2、MySQL数据

/*Navicat Premium Data TransferSource Server : localhostSource Server Type : MySQLSource Server Version : 50735 (5.7.35-log)Source Host : localhost:3306Source Schema : dataxTarget Server Type : MySQLTarget Server Version : 50735 (5.7.35-log)File Encoding : 65001Date: 09/04/2024 16:40:39

*/SET NAMES utf8mb4;

SET FOREIGN_KEY_CHECKS = 0;-- ----------------------------

-- Table structure for atguigu

-- ----------------------------

DROP TABLE IF EXISTS `atguigu`;

CREATE TABLE `atguigu` (`name` varchar(20) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NULL DEFAULT NULL,`url` varchar(20) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NULL DEFAULT NULL

) ENGINE = InnoDB CHARACTER SET = utf8mb4 COLLATE = utf8mb4_general_ci ROW_FORMAT = Dynamic;-- ----------------------------

-- Records of atguigu

-- ----------------------------

INSERT INTO `atguigu` VALUES ('111', '111');

INSERT INTO `atguigu` VALUES ('名字', '地址');-- ----------------------------

-- Table structure for mongodb

-- ----------------------------

DROP TABLE IF EXISTS `mongodb`;

CREATE TABLE `mongodb` (`name` varchar(20) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NULL DEFAULT NULL,`url` varchar(20) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NULL DEFAULT NULL

) ENGINE = InnoDB CHARACTER SET = utf8mb4 COLLATE = utf8mb4_general_ci ROW_FORMAT = Dynamic;-- ----------------------------

-- Records of mongodb

-- ----------------------------

INSERT INTO `mongodb` VALUES ('111', '111');

INSERT INTO `mongodb` VALUES ('aaa', 'bbb');

INSERT INTO `mongodb` VALUES ('名字', '地址');SET FOREIGN_KEY_CHECKS = 1;2.2.3、运行命令

[atguigu@node001 datax]$ bin/datax.py job/mongodb/mongodb2mysql.json DataX (DATAX-OPENSOURCE-3.0), From Alibaba !

Copyright (C) 2010-2017, Alibaba Group. All Rights Reserved.2024-04-09 16:42:09.299 [main] INFO VMInfo - VMInfo# operatingSystem class => sun.management.OperatingSystemImpl

2024-04-09 16:42:09.319 [main] INFO Engine - the machine info => osInfo: Red Hat, Inc. 1.8 25.372-b07jvmInfo: Linux amd64 3.10.0-862.el7.x86_64cpu num: 4totalPhysicalMemory: -0.00GfreePhysicalMemory: -0.00GmaxFileDescriptorCount: -1currentOpenFileDescriptorCount: -1GC Names [PS MarkSweep, PS Scavenge]MEMORY_NAME | allocation_size | init_size PS Eden Space | 256.00MB | 256.00MB Code Cache | 240.00MB | 2.44MB Compressed Class Space | 1,024.00MB | 0.00MB PS Survivor Space | 42.50MB | 42.50MB PS Old Gen | 683.00MB | 683.00MB Metaspace | -0.00MB | 0.00MB 2024-04-09 16:42:09.366 [main] INFO Engine -

{"content":[{"reader":{"name":"mongodbreader","parameter":{"address":["node001:27017"],"collectionName":"atguigu","column":[{"name":"name","type":"string"},{"name":"url","type":"string"}],"dbName":"test","userName":"","userPassword":""}},"writer":{"name":"mysqlwriter","parameter":{"column":["*"],"connection":[{"jdbcUrl":"jdbc:mysql://node001:3306/datax","table":["mongodb"]}],"password":"******","preSql":[],"session":[],"username":"root","writeMode":"insert"}}}],"setting":{"speed":{"channel":"1"}}

}2024-04-09 16:42:09.401 [main] WARN Engine - prioriy set to 0, because NumberFormatException, the value is: null

2024-04-09 16:42:09.405 [main] INFO PerfTrace - PerfTrace traceId=job_-1, isEnable=false, priority=0

2024-04-09 16:42:09.405 [main] INFO JobContainer - DataX jobContainer starts job.

2024-04-09 16:42:09.411 [main] INFO JobContainer - Set jobId = 0

2024-04-09 16:42:09.681 [job-0] INFO cluster - Cluster created with settings {hosts=[node001:27017], mode=MULTIPLE, requiredClusterType=UNKNOWN, serverSelectionTimeout='30000 ms', maxWaitQueueSize=500}

2024-04-09 16:42:09.681 [job-0] INFO cluster - Adding discovered server node001:27017 to client view of cluster

2024-04-09 16:42:09.951 [cluster-ClusterId{value='6614ff6121b10d1da8148cac', description='null'}-node001:27017] INFO connection - Opened connection [connectionId{localValue:1, serverValue:17}] to node001:27017

2024-04-09 16:42:09.954 [cluster-ClusterId{value='6614ff6121b10d1da8148cac', description='null'}-node001:27017] INFO cluster - Monitor thread successfully connected to server with description ServerDescription{address=node001:27017, type=STANDALONE, state=CONNECTED, ok=true, version=ServerVersion{versionList=[5, 0, 2]}, minWireVersion=0, maxWireVersion=13, maxDocumentSize=16777216, roundTripTimeNanos=1877048}

2024-04-09 16:42:09.960 [cluster-ClusterId{value='6614ff6121b10d1da8148cac', description='null'}-node001:27017] INFO cluster - Discovered cluster type of STANDALONE

2024-04-09 16:42:11.020 [job-0] INFO OriginalConfPretreatmentUtil - table:[mongodb] all columns:[

name,url

].

2024-04-09 16:42:11.021 [job-0] WARN OriginalConfPretreatmentUtil - 您的配置文件中的列配置信息存在风险. 因为您配置的写入数据库表的列为*,当您的表字段个数、类型有变动时,可能影响任务正确性甚至会运行出错。请检查您的配置并作出修改.

2024-04-09 16:42:11.024 [job-0] INFO OriginalConfPretreatmentUtil - Write data [

insert INTO %s (name,url) VALUES(?,?)

], which jdbcUrl like:[jdbc:mysql://node001:3306/datax?yearIsDateType=false&zeroDateTimeBehavior=convertToNull&tinyInt1isBit=false&rewriteBatchedStatements=true]

2024-04-09 16:42:11.025 [job-0] INFO JobContainer - jobContainer starts to do prepare ...

2024-04-09 16:42:11.027 [job-0] INFO JobContainer - DataX Reader.Job [mongodbreader] do prepare work .

2024-04-09 16:42:11.031 [job-0] INFO JobContainer - DataX Writer.Job [mysqlwriter] do prepare work .

2024-04-09 16:42:11.032 [job-0] INFO JobContainer - jobContainer starts to do split ...

2024-04-09 16:42:11.032 [job-0] INFO JobContainer - Job set Channel-Number to 1 channels.

2024-04-09 16:42:11.094 [job-0] INFO connection - Opened connection [connectionId{localValue:2, serverValue:18}] to node001:27017

2024-04-09 16:42:11.123 [job-0] INFO JobContainer - DataX Reader.Job [mongodbreader] splits to [1] tasks.

2024-04-09 16:42:11.125 [job-0] INFO JobContainer - DataX Writer.Job [mysqlwriter] splits to [1] tasks.

2024-04-09 16:42:11.172 [job-0] INFO JobContainer - jobContainer starts to do schedule ...

2024-04-09 16:42:11.183 [job-0] INFO JobContainer - Scheduler starts [1] taskGroups.

2024-04-09 16:42:11.189 [job-0] INFO JobContainer - Running by standalone Mode.

2024-04-09 16:42:11.204 [taskGroup-0] INFO TaskGroupContainer - taskGroupId=[0] start [1] channels for [1] tasks.

2024-04-09 16:42:11.217 [taskGroup-0] INFO Channel - Channel set byte_speed_limit to -1, No bps activated.

2024-04-09 16:42:11.218 [taskGroup-0] INFO Channel - Channel set record_speed_limit to -1, No tps activated.

2024-04-09 16:42:11.244 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[0] attemptCount[1] is started

2024-04-09 16:42:11.246 [0-0-0-reader] INFO cluster - Cluster created with settings {hosts=[node001:27017], mode=MULTIPLE, requiredClusterType=UNKNOWN, serverSelectionTimeout='30000 ms', maxWaitQueueSize=500}

2024-04-09 16:42:11.247 [0-0-0-reader] INFO cluster - Adding discovered server node001:27017 to client view of cluster

2024-04-09 16:42:11.292 [cluster-ClusterId{value='6614ff6321b10d1da8148cad', description='null'}-node001:27017] INFO connection - Opened connection [connectionId{localValue:3, serverValue:19}] to node001:27017

2024-04-09 16:42:11.295 [cluster-ClusterId{value='6614ff6321b10d1da8148cad', description='null'}-node001:27017] INFO cluster - Monitor thread successfully connected to server with description ServerDescription{address=node001:27017, type=STANDALONE, state=CONNECTED, ok=true, version=ServerVersion{versionList=[5, 0, 2]}, minWireVersion=0, maxWireVersion=13, maxDocumentSize=16777216, roundTripTimeNanos=1154664}

2024-04-09 16:42:11.295 [cluster-ClusterId{value='6614ff6321b10d1da8148cad', description='null'}-node001:27017] INFO cluster - Discovered cluster type of STANDALONE

2024-04-09 16:42:11.311 [0-0-0-reader] INFO connection - Opened connection [connectionId{localValue:4, serverValue:20}] to node001:27017

2024-04-09 16:42:11.447 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[0] is successed, used[207]ms

2024-04-09 16:42:11.449 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] completed it's tasks.

2024-04-09 16:42:21.236 [job-0] INFO StandAloneJobContainerCommunicator - Total 4 records, 106 bytes | Speed 10B/s, 0 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 0.000s | All Task WaitReaderTime 0.000s | Percentage 100.00%

2024-04-09 16:42:21.236 [job-0] INFO AbstractScheduler - Scheduler accomplished all tasks.

2024-04-09 16:42:21.237 [job-0] INFO JobContainer - DataX Writer.Job [mysqlwriter] do post work.

2024-04-09 16:42:21.238 [job-0] INFO JobContainer - DataX Reader.Job [mongodbreader] do post work.

2024-04-09 16:42:21.241 [job-0] INFO JobContainer - DataX jobId [0] completed successfully.

2024-04-09 16:42:21.242 [job-0] INFO HookInvoker - No hook invoked, because base dir not exists or is a file: /opt/module/datax/hook

2024-04-09 16:42:21.244 [job-0] INFO JobContainer - [total cpu info] => averageCpu | maxDeltaCpu | minDeltaCpu -1.00% | -1.00% | -1.00%[total gc info] => NAME | totalGCCount | maxDeltaGCCount | minDeltaGCCount | totalGCTime | maxDeltaGCTime | minDeltaGCTime PS MarkSweep | 1 | 1 | 1 | 0.057s | 0.057s | 0.057s PS Scavenge | 1 | 1 | 1 | 0.021s | 0.021s | 0.021s 2024-04-09 16:42:21.244 [job-0] INFO JobContainer - PerfTrace not enable!

2024-04-09 16:42:21.245 [job-0] INFO StandAloneJobContainerCommunicator - Total 4 records, 106 bytes | Speed 10B/s, 0 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 0.000s | All Task WaitReaderTime 0.000s | Percentage 100.00%

2024-04-09 16:42:21.248 [job-0] INFO JobContainer -

任务启动时刻 : 2024-04-09 16:42:09

任务结束时刻 : 2024-04-09 16:42:21

任务总计耗时 : 11s

任务平均流量 : 10B/s

记录写入速度 : 0rec/s

读出记录总数 : 4

读写失败总数 : 0[atguigu@node001 datax]$ 相关文章:

DataX,MongoDB数据导入hdfs与mysql

【尚硅谷】Alibaba开源数据同步工具DataX技术教程【尚硅谷】Alibaba开源数据同步工具DataX技术教程_哔哩哔哩_bilibili 目录 1、MongoDB 1.1、MongoDB介绍 1.2、MongoDB基本概念解析 1.3、MongoDB中的数据存储结构 1.4、MongoDB启动服务 1.5、MongoDB小案例 2、DataX导入…...

【OpenCV-颜色空间】

OpenCV-颜色空间 ■ RGB■ BGR■ HSV■ HSL■ HUE■ YUV ■ RGB ■ BGR BGR 就是RGB R和B调换位置。 OpenCV 默认使用BGR ■ HSV ■ HSL ■ HUE ■ YUV...

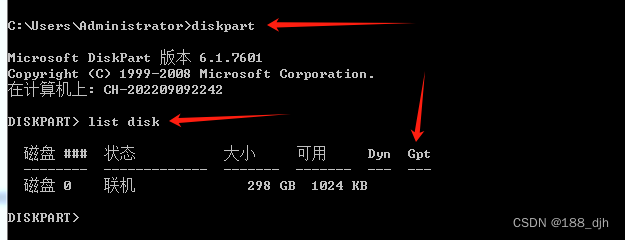

电脑硬盘分区表的两种格式:MBR 和 GPT

电脑硬盘分区表的两种格式:MBR 和 GPT 段子手168 2024-4-5 电脑硬盘分区表有两种格式:MBR 和 GPT: 一、MBR 分区表 1.MBR 是主引导记录 (Master Boot Record) 的英文缩写 在传统(Legacy)硬盘分区模式中,…...

kafka 常用非基础的核心设置项

在测试的过程中,心血来潮,想要测试下新topic中还没被消费的消息。专门查了下ai,奈何一本正经的胡说八道,浪费了点时间。现在记录下: 解决topic缺失时项目无法启动 , 报错: Topic(s) [……] is/a…...

杂谈 EV之我见

每周至少更新一片博文,没有目的的看代码是没有效率的,带着目的去看代码才会有所得, 目前车载行业火爆,得益于EV和AI技术的发展,汽车从一个传统工业产品,摇身一变成为了前沿科技产品。 小米su7的发布会我看…...

白色磨砂质感html5页源码

白色磨砂质感html5页源码,简约的基础上加上了团队成员,自动打字特效音乐播放器存活时间 源码下载 https://www.qqmu.com/2980.html...

sqlite建立数据库

在做一些简单的实验项目的时候,sqlite比较好用(MacOS验视环境)。相关包下载网页:https://www.sqlite.org/download.html 1 创建数据文件目录 cd /<project_path> mkdir database cd /database2 创建数据库 在当前目录&am…...

)

HTML5标签(网页编程)

一、常用标签 HTML5(或HTML)中有很多常用的标签,这些标签用于构建网页的结构和内容。以下是一些常用的HTML5标签: 1. 标题标签 <h1> 到 <h6>:定义六个级别的标题,<h1> 级别最高&#…...

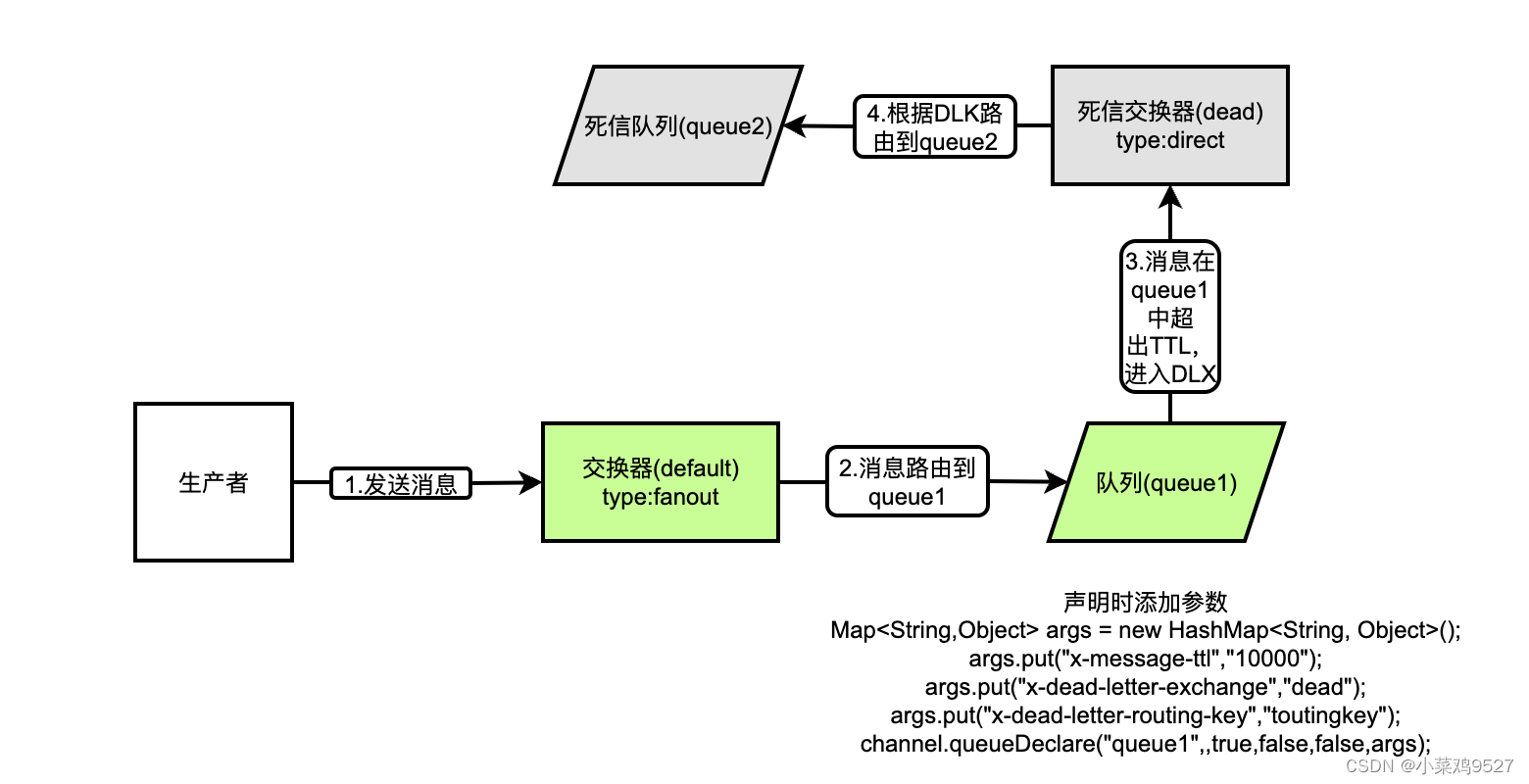

RabbitMQ小记

参考书籍:朱忠华的《RabbitMQ实战指南》 一、基础概念 1.Exchange 1.1 创建方法的参数,exchangeDeclare() exchange:交换器的名称type:交换器的类型durable:是否持久化,true代表持久化。(持…...

【备忘录】docker-maven-plugin 使用

在使用docker-maven-plugin 插件时,经常会碰到一些奇怪的问题: 比如: 1、docker远程访问时,认证安全问题? 2、dockerHost 访问地址准确性? 3、需要多个tag时如何处理? 4、push 到仓库时…...

一起学习python——基础篇(10)

前言,Python 是一种面向对象的编程语言。以前大学读书的时候经常开玩笑说的一句话“如果没有对象,就new一个”。起因就是编程老师上课时经常说一句“首先,我们new一个对象”。 今天讲一下python的类和对象。 类是什么?它是一种用…...

LoRa自组网络设计 6

1 深入了解LoRaWan 1.1 LoRaWan概述 LoRaWAN采用星型无线拓扑 End Nodes 节点 Gateway 网关 Network Server 网络服务器 Application Server 应用服务器 LoRa联盟是2015年3月Semtech牵头成立的一个开放的、非盈利的组织,发起成员还有法国Actility,中国…...

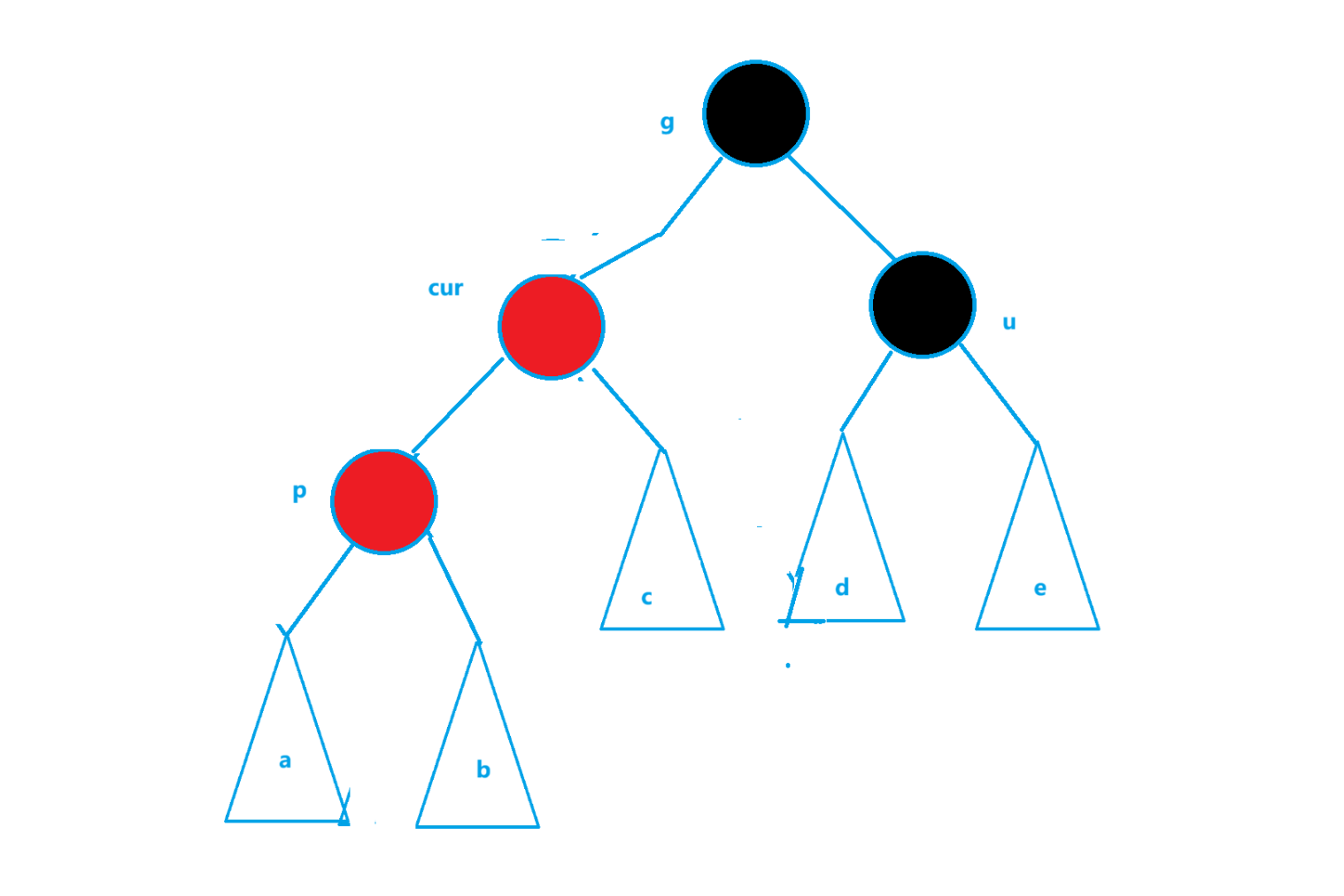

C++手撕红黑树

文章目录 红黑树概念性质(条件限制)节点的定义红黑树的结构红黑树的插入cur为红,p为红,g为黑,u存在且为红cur为红,p为红,g为黑,u不存在或u为黑,插入到p对应的一边cur为红…...

计算机中,逻辑端口

计算机中,端口是什么 在计算机领域中,端口(Port)是一个逻辑概念,用于标识计算机与外部设备或另一台计算机通信时的出入口。它是计算机与外部通信的途径,分为物理端口和逻辑端口两种。 物理端口:物理端口也被称为接口,是计算机主板上或其他设备上的硬件接口,如USB接口…...

SV学习笔记(一)

SV:SystemVerilog 开启SV之路 数据类型 內建数据类型 四状态与双状态 : 四状态指0、1、X、Z,包括logic、integer、 reg、 wire。双状态指0、1,包括bit、byte、 shortint、int、longint。 有符号与无符号 : 有符号&am…...

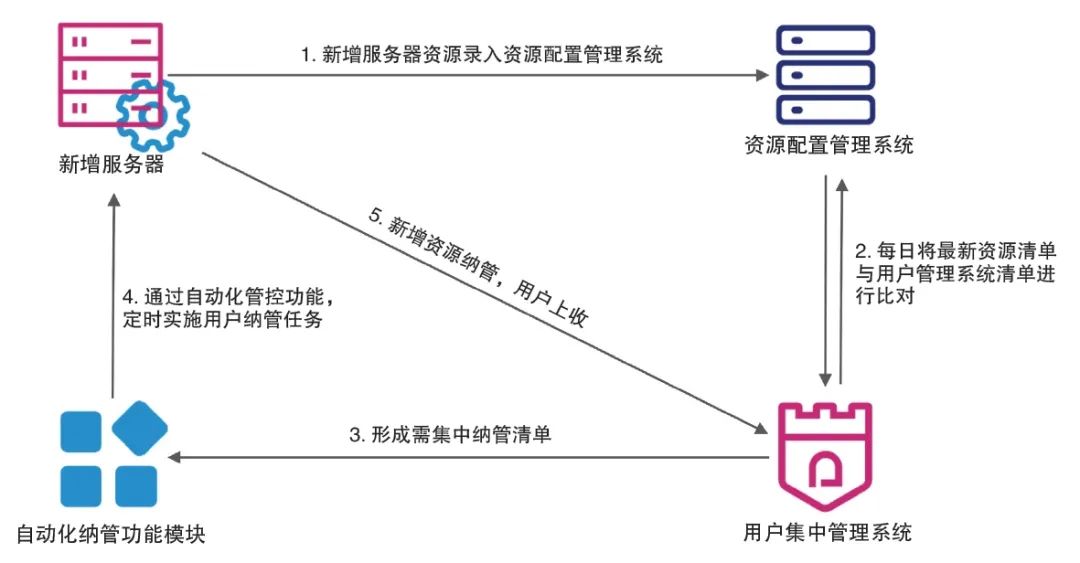

大型商业银行基础设施的用户安全管理创新与实践

文丨中国工商银行数据中心安全运营部 陈茜倩 王喆 基础设施的用户安全一直是银行业信息系统稳定运行的重要保障,近年来,随着科技的快速发展,针对用户安全的攻击方式层出不穷,如网络钓鱼、恶意软件、身份盗用、弱密码攻击、社交工程…...

数据库入门-----SQL基础知识

目录 📖前言: 📑SQL概述&&通用语法: 🐳DDL: 🐻操作数据库: 🐞数据类型: 🦉操作表: 🦦DML: 语法规则&#x…...

本地代码第一次提交到远程仓库gitee

1.在gitee新建仓库 2.新建一个空文件夹 打开黑窗口,执行命令 克隆仓库地址 执行命令 git clone https://gitee.com/llncomms/test.git打开隐藏的项目 复制全部内容到需要提交的代码中 3.在提交的代码中执行命令 $ git add .git commit -m 首次提交$ git push提交成功...

)

蓝桥杯刷题 深度优先搜索-[178]全球变暖(C++)

题目描述 你有一张某海域 NN 像素的照片,”.”表示海洋、”#”表示陆地,如下所示: … .##… .##… …##. …####. …###. … 其中”上下左右”四个方向上连在一起的一片陆地组成一座岛屿,例如上图就有 2 座岛屿。 由于全球变暖…...

C语言-函数指针-快速排序算法(书籍示例-入门)

概述 使用C语言,实现结构体多元素,排序算法(冒泡排序),这里使用示例:书籍示例讲解 函数简介 函数声明 void qsort(void *base, size_t nitems, size_t size, int (*compar)(const void *, const void*)) 参…...

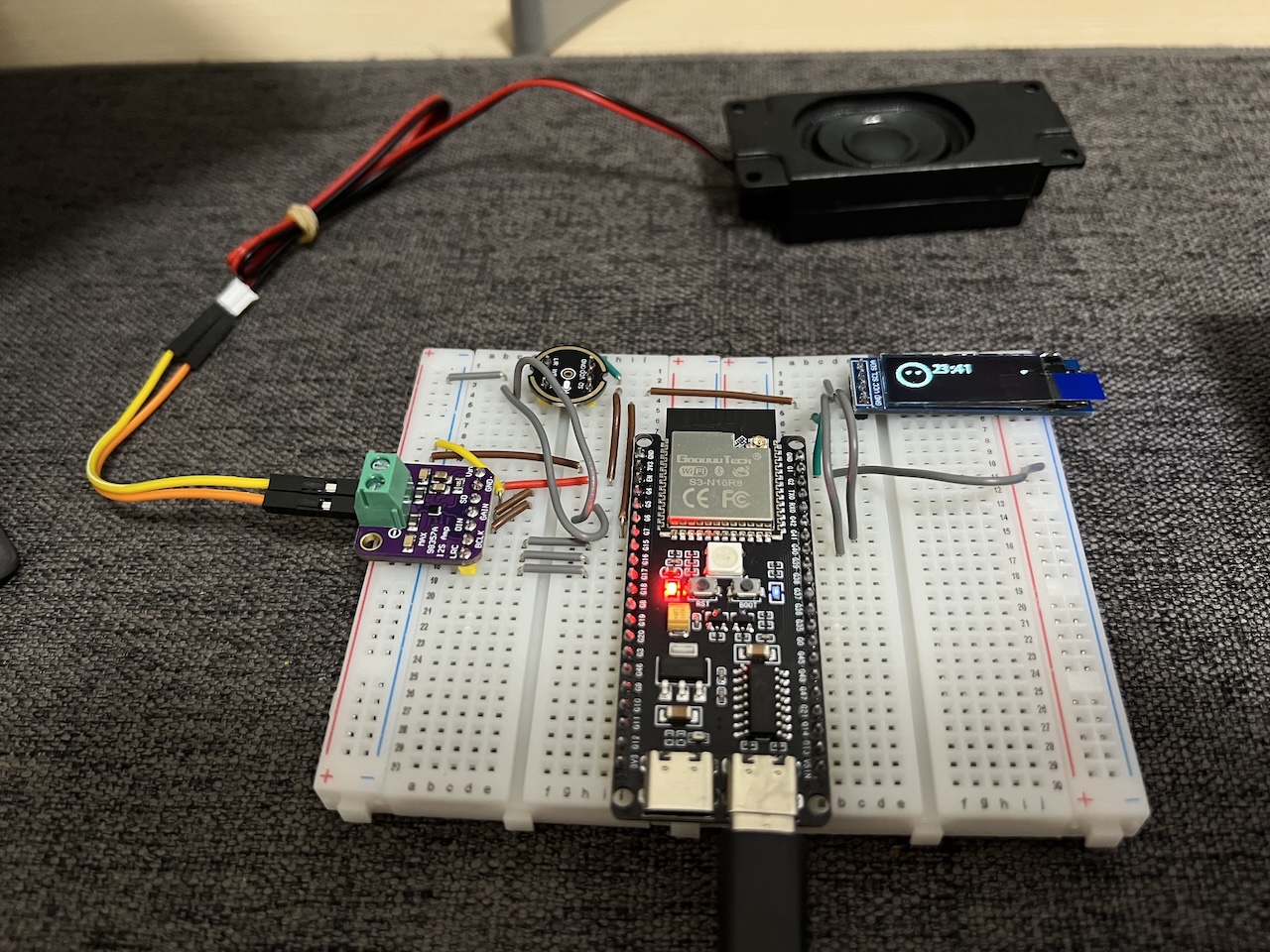

网络编程(Modbus进阶)

思维导图 Modbus RTU(先学一点理论) 概念 Modbus RTU 是工业自动化领域 最广泛应用的串行通信协议,由 Modicon 公司(现施耐德电气)于 1979 年推出。它以 高效率、强健性、易实现的特点成为工业控制系统的通信标准。 包…...

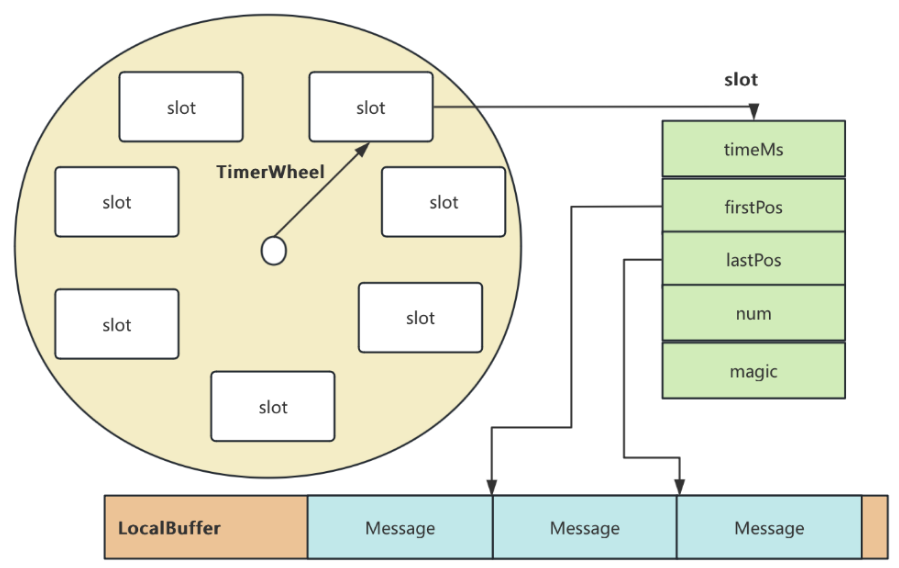

RocketMQ延迟消息机制

两种延迟消息 RocketMQ中提供了两种延迟消息机制 指定固定的延迟级别 通过在Message中设定一个MessageDelayLevel参数,对应18个预设的延迟级别指定时间点的延迟级别 通过在Message中设定一个DeliverTimeMS指定一个Long类型表示的具体时间点。到了时间点后…...

对WWDC 2025 Keynote 内容的预测

借助我们以往对苹果公司发展路径的深入研究经验,以及大语言模型的分析能力,我们系统梳理了多年来苹果 WWDC 主题演讲的规律。在 WWDC 2025 即将揭幕之际,我们让 ChatGPT 对今年的 Keynote 内容进行了一个初步预测,聊作存档。等到明…...

Cinnamon修改面板小工具图标

Cinnamon开始菜单-CSDN博客 设置模块都是做好的,比GNOME简单得多! 在 applet.js 里增加 const Settings imports.ui.settings;this.settings new Settings.AppletSettings(this, HTYMenusonichy, instance_id); this.settings.bind(menu-icon, menu…...

DIY|Mac 搭建 ESP-IDF 开发环境及编译小智 AI

前一阵子在百度 AI 开发者大会上,看到基于小智 AI DIY 玩具的演示,感觉有点意思,想着自己也来试试。 如果只是想烧录现成的固件,乐鑫官方除了提供了 Windows 版本的 Flash 下载工具 之外,还提供了基于网页版的 ESP LA…...

Device Mapper 机制

Device Mapper 机制详解 Device Mapper(简称 DM)是 Linux 内核中的一套通用块设备映射框架,为 LVM、加密磁盘、RAID 等提供底层支持。本文将详细介绍 Device Mapper 的原理、实现、内核配置、常用工具、操作测试流程,并配以详细的…...

相比,优缺点是什么?适用于哪些场景?)

Redis的发布订阅模式与专业的 MQ(如 Kafka, RabbitMQ)相比,优缺点是什么?适用于哪些场景?

Redis 的发布订阅(Pub/Sub)模式与专业的 MQ(Message Queue)如 Kafka、RabbitMQ 进行比较,核心的权衡点在于:简单与速度 vs. 可靠与功能。 下面我们详细展开对比。 Redis Pub/Sub 的核心特点 它是一个发后…...

React---day11

14.4 react-redux第三方库 提供connect、thunk之类的函数 以获取一个banner数据为例子 store: 我们在使用异步的时候理应是要使用中间件的,但是configureStore 已经自动集成了 redux-thunk,注意action里面要返回函数 import { configureS…...

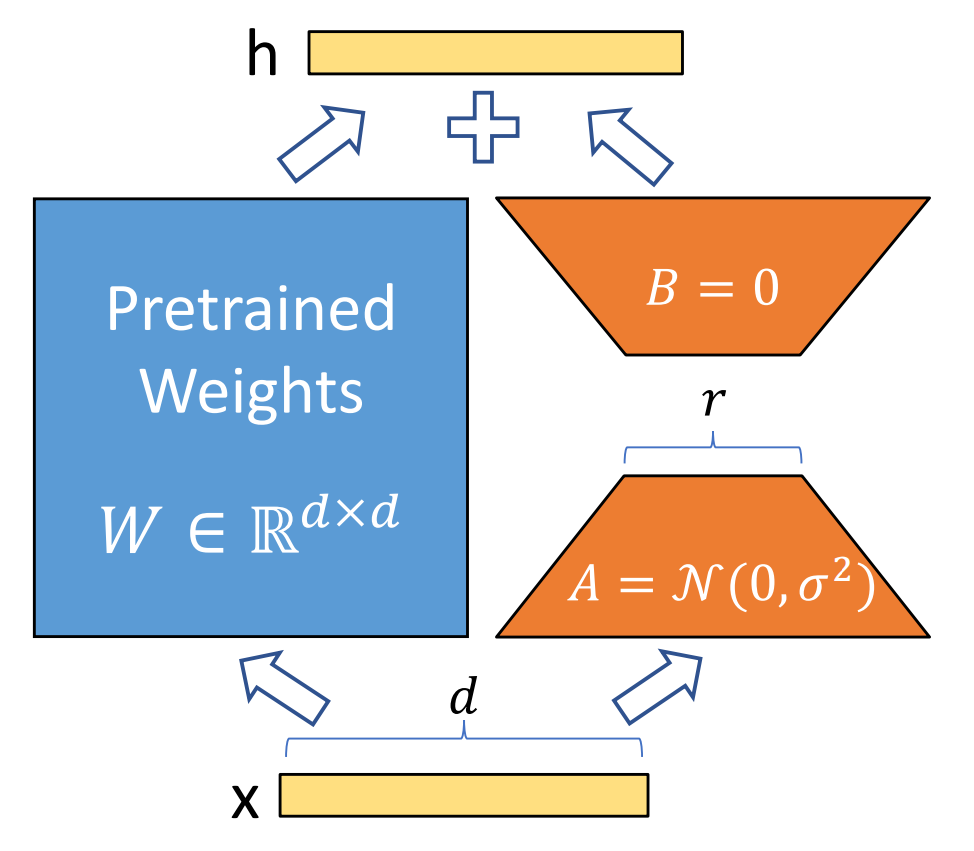

算法岗面试经验分享-大模型篇

文章目录 A 基础语言模型A.1 TransformerA.2 Bert B 大语言模型结构B.1 GPTB.2 LLamaB.3 ChatGLMB.4 Qwen C 大语言模型微调C.1 Fine-tuningC.2 Adapter-tuningC.3 Prefix-tuningC.4 P-tuningC.5 LoRA A 基础语言模型 A.1 Transformer (1)资源 论文&a…...

音视频——I2S 协议详解

I2S 协议详解 I2S (Inter-IC Sound) 协议是一种串行总线协议,专门用于在数字音频设备之间传输数字音频数据。它由飞利浦(Philips)公司开发,以其简单、高效和广泛的兼容性而闻名。 1. 信号线 I2S 协议通常使用三根或四根信号线&a…...