Speech Synthesis (LASC11062)

Outline

- Module 1 – introduction

- Module 2 - unit selection

- Module 3 - unit selection target cost functions

- Module 4 - the database

- Module 5 - evaluation

- Module 6 - speech signal analysis & modelling

- Module 7 - Statistical Parametric Speech Synthesis (SPSS)

- Module 7 bonus - hybrid speech synthesis

- Module 8 - Deep Neural Networks (DNNs)

- Module 9 - sequence-to-sequence models

Module 1 – introduction

We can identify four main challenges for any builder of a TTS system.

1. Semiotic classification of text (text normalisation): e.g., Festival

2. Decoding natural-language text: homographs (POS); shallow (“syntactic”) structure

3. Creating natural, human-sounding speech: low-level signal quality (vocoders); segmental quality (pronunciation, stress, connected speech processes); augmentative prosody (text-related); affective prosody (not necessarily text-related), i.e., generating ‘affective’ or ‘emotional’ speech

4. Creating intelligible speech: closer to a solved problem than naturalness (interestingly, the most natural-sounding systems are not always the most intelligible); human levels of intelligibility can be achieved (straightforward with good statistical parametric systems); unit selection systems are generally less intelligible than natural speech, but this is measured in lab conditions with semantically unpredictable sentences

We can also identify two main current and future challenges:

1. Generating affective and augmentative prosody

2. Speaking in a way that takes the listener’s situation and needs into account

Current issues:

What is currently possible in commercial systems

• neutral reading-out-loud speaking style

• some languages only (fewer than 50)

• a few expressive ‘tricks’ but not true expressive speech

What is currently possible in research systems

• more varied speaking style, some expressivity (e.g., acted ‘emotions’)

• adapted and personalised voices

• use of ‘found data’

• more languages

Module 2 – unit selection

Interactive unit selection

1. Key concepts

The context in which a sound is produced affects that sound:

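- articulatory trajectories (e.g., the tongue moving up and down, coordinated with the opening of the lips, the opening/closing of the velum, or the vocal folds coming together)

- phonological effects, such as assimilation, where entire sounds change category depending on their environment

- prosodic environment changes the way sounds are produced. For example, their fundamental frequency and their duration are strongly influenced by their position in the phrase (and by where the stress falls in the phrase).

The speech signal (waveform) that we observe is the product of interacting processes operating on different time scales. Context is complex: it is not just the preceding/following sound. We want to simultaneously

- model speech as a string of units (so that we can concatenate waveform fragments)

- account for the effects of context before/during/after the current moment in time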

Context-dependent units offer a solution:

• engineer the system in terms of a simple linear string of units

• account for context with a different version of each unit for every different context

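But how do we know what all the different contexts are?

- if we enumerate all possible contexts, there are effectively infinitely many of them

- a language has an unbounded number of different sentences, and context may span the whole sentence (or further)

Fortunately, what matters is the effect that context has on the current sound. So we can consider reducing the number of effectively different contexts.

First solution: diphones

Assume that the only context affecting the current sound is the preceding phone and the following phone. Then we get: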

- diphone units

- number of unit types O(N^2), for N phone types

Problems with diphone synthesis

Signal processing is required to manipulate:

- F0 & duration: fairly easy, within a limited range

- Spectrum: not so easy, can do simple smoothing at the joins but otherwise it’s not obvious what aspects to modify

But, this extensive signal processing:

- introduces artefacts and degrades the signal

- cannot faithfully replicate every detail of natural variation in speech (don’t know what to replicate; don’t have powerful enough techniques to manipulate every aspect of speech)

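We cannot reduce the need for manipulation simply by increasing the number of unit types, because the number of unit types grows exponentially with the number of context factors: adding stressed and unstressed versions doubles the inventory (e.g., from 2,000 to 4,000 types), and adding phrase-final / non-final versions doubles it again (from 4,000 to 8,000).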
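The doubling can be checked with a few lines of arithmetic. This is a minimal sketch assuming a hypothetical inventory of 45 phones (the phone count is illustrative, not taken from the notes); the function names are made up for this example.

```python
def diphone_types(n_phones: int) -> int:
    """Number of diphone types: every ordered pair of phones, i.e. O(N^2)."""
    return n_phones * n_phones


def types_with_binary_factors(base_types: int, n_factors: int) -> int:
    """Each independent two-valued context factor (e.g. stressed/unstressed,
    phrase-final/non-final) doubles the number of unit types."""
    return base_types * 2 ** n_factors


if __name__ == "__main__":
    base = diphone_types(45)  # hypothetical 45-phone inventory
    for k in range(4):
        print(f"{k} binary factor(s): {types_with_binary_factors(base, k)} types")
```

With 45 phones this prints 2025, 4050, 8100 and 16200 types for zero to three binary factors: the same doubling as in the 2,000 → 4,000 → 8,000 example.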

Why unit selection synthesis / statistical parametric synthesis is feasible:

Some contexts are (nearly) equivalent (don't need every speech sound in every possible context; just each speech sound in a sufficient variety of different contexts)

In diphone synthesis, there is just one recorded copy of each diphone (one copy of each unit type in a carrier phrase to ensure that the diphones were in a “neutral” context). The F0 and duration of that recorded copy will be arbitrary. If we simply concatenated these recordings, we would get an arbitrary and very probably discontinuous F0 contour. We must manipulate the recording in order to impose the predicted F0 (e.g., to get gradual declination over a phrase), and to impose predicted duration.

In unit selection synthesis, we have many recordings of each diphone to choose from. In some versions of unit selection, we will use the front end’s predictions of F0 and duration to help us choose the most appropriate one.

But now we want the effects of context (for lots of different contexts)

The key concept of unit selection speech synthesis:

- record a database of speech containing natural variation caused by context

- at synthesis time, search for the most appropriate sequence of units

Several unit sizes (half phone, diphone, …) are possible - the principles are the same

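Summary: diphone synthesis records each diphone only once, and that recording must be manipulated at synthesis time. Unit selection has many recordings of each unit (a unit can be of various sizes, e.g., diphone, phone, half phone) in different contexts, and we select the most suitable units to concatenate.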

2. Target and candidate units

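The linguistic specification is flattened to a linear string of context-dependent units.

The target unit sequence: the sequence in the figure above, in which each unit carries its context information, i.e., each unit is annotated with linguistic features.

Next, we need to find candidate waveform fragments (from the database) to render the utterance.

Importantly, the linguistic features are local to each target and each candidate unit.

Candidate units from the database:

Next, we find the best-sounding sequence of candidates. To do this, we need to:

1. Quantify “best sounding”

2. Search for the best sequence

When comparing a candidate with its target, we do not need to look at the neighbouring units; we only check whether the (local) linguistic features are the same.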

3. Target cost and join cost

3.1. Target cost function

The target cost function measures similarity: how closely the candidate sequence matches the target sequence.

The ideal candidate unit sequence might comprise units taken from identical linguistic contexts to those in the target unit sequence but this will not be possible in general. So we must use less-than-ideal units from non-identical (i.e., mismatched) contexts. We need to quantify how mismatched each candidate is, so we can choose amongst them.

The mismatch ‘distance’ or ‘cost’ between a candidate unit and the ideal (i.e., target) unit is measured by the target cost function

Taylor describes two possible formulations of the target cost function

- independent feature formulation (IFF)

  - assume that units from similar linguistic contexts will sound similar

  - target cost function measures linguistic feature mismatch

- acoustic-space formulation (ASF)

  - acoustic properties of the candidates are known

  - make a prediction of the acoustic properties of the target units

  - target cost function measures acoustic distance between candidates and targets

3.2. Join cost function

The join cost function (joins, not joints!) measures concatenation quality: the acoustic mismatch between two consecutive candidate units.

After candidate units are taken from the database, they will be joined (concatenated). There will be mismatches in acoustic properties around the join point, including the spectral envelope, F0 and energy.

The acoustic mismatch between consecutive candidates is measured by the join cost function

- A typical join cost quantifies the acoustic mismatch across the concatenation point

  - e.g., spectral characteristics (parameterised as MFCCs, perhaps), F0, energy

  - Festival’s multisyn uses a weighted sum of normalised sub-costs (weights tuned by ear)

- It is also common to inject some (rule-based) knowledge into the join cost; e.g., some phones are easier to splice than others
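A join cost of this kind can be sketched as a weighted sum of normalised sub-costs. This is an illustrative sketch, not Festival's actual code: the `BoundaryFrame` layout, the Euclidean spectral distance, and the default scales and weights are all assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class BoundaryFrame:
    """Acoustic parameters of one frame at a unit boundary (hypothetical layout)."""
    mfcc: list
    f0: float      # Hz
    energy: float  # e.g. log energy


def join_cost(left_last, right_first, scales=(1.0, 1.0, 1.0), weights=(1.0, 1.0, 1.0)):
    """Weighted sum of normalised sub-costs across one concatenation point.

    Only the last frame of the left unit and the first frame of the right
    unit are compared, i.e. a very local join cost.
    scales:  per-sub-cost normalisers (e.g. standard deviations over the database)
    weights: per-sub-cost weights (in practice tuned by ear)
    """
    spectral = math.dist(left_last.mfcc, right_first.mfcc) / scales[0]
    f0 = abs(left_last.f0 - right_first.f0) / scales[1]
    energy = abs(left_last.energy - right_first.energy) / scales[2]
    w_spec, w_f0, w_energy = weights
    return w_spec * spectral + w_f0 * f0 + w_energy * energy
```

Comparing only one frame per side keeps the cost cheap but very local; using several frames or deltas around the join would be natural extensions.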

A typical join cost function (e.g., Festival’s multisyn) uses one frame from each side of the join (very local, so it may miss important information).

To improve:

- consider several frames around the join, or the entire sequence of frames

- consider deltas (the rate of change)

- (probabilistic) model of the sequence of frames. e.g., hybrid synthesis, which typically involves predicting acoustic parameter trajectories, addresses this

4. Search (for the best sequence)

The total cost of a particular candidate unit sequence under consideration is the sum of

- the target cost for every individual candidate unit in the sequence

- the join cost between every pair of consecutive candidate units in the sequence

There is a single globally-optimal (lowest total cost) sequence; a search is required, to find this sequence
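The lowest-cost sequence can be found with dynamic programming (a Viterbi-style search) over the lattice of candidates. A minimal sketch for a homogeneous system, assuming `target_cost` and `join_cost` are supplied as functions; the pruning that real systems add is omitted.

```python
def viterbi_search(candidates, target_cost, join_cost):
    """Lowest total-cost path through a lattice of candidate units.

    candidates[t]     : list of candidate units for target position t
    target_cost(t, c) : cost of using candidate c for target position t
    join_cost(p, c)   : cost of concatenating candidate p followed by c
    Returns (total_cost, best_sequence).
    """
    # best[t][i]: lowest cost of any partial path ending in candidates[t][i]
    best = [[target_cost(0, c) for c in candidates[0]]]
    back = [[None] * len(candidates[0])]  # back-pointers for the traceback
    for t in range(1, len(candidates)):
        row, ptr = [], []
        for c in candidates[t]:
            # cheapest way to arrive at c from any candidate at position t-1
            arrive = [best[t - 1][j] + join_cost(p, c)
                      for j, p in enumerate(candidates[t - 1])]
            j_best = min(range(len(arrive)), key=arrive.__getitem__)
            row.append(arrive[j_best] + target_cost(t, c))
            ptr.append(j_best)
        best.append(row)
        back.append(ptr)
    # trace back from the cheapest candidate at the final position
    i = min(range(len(best[-1])), key=best[-1].__getitem__)
    total = best[-1][i]
    path = []
    for t in range(len(candidates) - 1, -1, -1):
        path.append(candidates[t][i])
        if t > 0:
            i = back[t][i]
    return total, path[::-1]
```

Having `join_cost` return 0 whenever two candidates were consecutive in the original recording is one way to model the zero join cost trick in this search.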

What base unit type is really used? Homogeneous or heterogeneous units?

A homogeneous system (all units are of the same type) will be easier to implement in software:

- the start and end points of all candidate units align

- the search lattice is simple

A heterogeneous system (multiple unit types) will be a little more complex:

- start and end points will not all align

- number of target costs and join costs to sum up will vary for different paths through the lattice

- some normalisation may be needed to correct for this

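With the “zero join cost trick” (consecutive units taken from the same place in the database incur no join cost), a homogeneous system effectively uses heterogeneous units!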

ASF will eventually lead us to hybrid methods which use statistical models to make predictions about the acoustic properties of the target unit sequence

We will take a closer look at IFF and ASF in the next module (these concern the target cost only).

Module 3 - unit selection target cost functions

1. Independent Feature Formulation (IFF)

- Independent Feature Formulation (IFF) target cost function: no prediction of any acoustic properties is involved

- count the number of linguistic features in the context of the candidate that do not match those of the corresponding target unit

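(Figure: the IFF target cost for two competing candidates.)

We observe that:

- co-articulation: the left context has a stronger effect on the current sound than the right context, so the left context should be weighted a little more heavily than the right

- features (including those that affect F0) only take Boolean match/mismatch values; there is no notion of a “near match”. For example, as a left phonetic context, [v] and [b] are similar (both voiced), unlike radically different phones such as a liquid. We should therefore apply a reduced penalty for a near match, rather than the full weight.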
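Both observations can be folded into a weighted IFF target cost. A sketch under assumptions: the feature names, the weights, the phone classes and the 0.5 near-match penalty are all hypothetical values chosen for illustration.

```python
# Hypothetical weights: left phonetic context weighted more than right context.
WEIGHTS = {"left_phone": 2.0, "right_phone": 1.0, "stress": 3.0, "phrase_final": 3.0}

# Hypothetical coarse phone classes, used to give partial credit for near matches.
PHONE_CLASS = {
    **{p: "voiced_obstruent" for p in "bdgvz"},
    **{p: "voiceless_obstruent" for p in "ptkfs"},
    **{p: "liquid" for p in "lr"},
}

NEAR_MATCH_PENALTY = 0.5


def feature_penalty(name, target_value, candidate_value):
    """0 for an exact match, a reduced penalty for a near match, 1 otherwise."""
    if target_value == candidate_value:
        return 0.0
    if name in ("left_phone", "right_phone"):
        target_class = PHONE_CLASS.get(target_value)
        if target_class is not None and target_class == PHONE_CLASS.get(candidate_value):
            return NEAR_MATCH_PENALTY
    return 1.0


def iff_target_cost(target, candidate, weights=WEIGHTS):
    """IFF target cost: weighted sum of linguistic-feature mismatches."""
    return sum(w * feature_penalty(name, target[name], candidate[name])
               for name, w in weights.items())
```

With a target whose left context is [v], a candidate with left context [b] costs 1.0, while one with left context [l] costs the full 2.0.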

How is prosody “created” using an IFF target cost function (with no explicit predictions of any acoustic properties)?

- candidates from appropriate contexts, when selected, will have appropriate prosody

- the join cost will ensure that F0 is continuous

- so, we simply need to make sure the linguistic features capture sufficient contextual information that is relevant to prosody, e.g., stress status, position in phrase

- optional: if our front end predicts symbolic prosodic features (e.g., ToBI accents and boundary tones), then we can use them in the target cost function

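The obvious drawback: two units whose linguistic features differ may nevertheless sound very similar. So we could compare perceptual similarity (acoustic properties) instead, which is the ASF described next.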

2. Acoustic Space Formulation (ASF) target cost function

What are the acoustic features?

- simple acoustic properties such as F0, duration and energy

- a more detailed specification such as the spectral envelope (e.g., as cepstral coefficients)

It will only work if we can accurately predict these properties from the linguistic features.

How about predicting a complete acoustic specification? We use a regression method, such as a regression tree, which predicts F0 from linguistic features (e.g., voiced? stop? stressed?).
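A toy version of the ASF idea: predict an acoustic target from linguistic features with a regression tree, then score candidates by their acoustic distance to that prediction. The tree below is hand-written with invented questions and F0 values; a real one would be trained on the database.

```python
def predict_f0(features):
    """A tiny hand-written regression tree (questions and leaf values invented).

    Each internal node asks a yes/no question about the linguistic features;
    each leaf holds a predicted F0 in Hz. A real tree would be learned from
    the database (e.g. with CART).
    """
    if not features["voiced"]:
        return 0.0  # unvoiced: no F0 target
    if features["stressed"]:
        return 115.0 if features["phrase_final"] else 140.0
    return 100.0 if features["phrase_final"] else 120.0


def asf_target_cost(target_features, candidate_f0):
    """ASF target cost: distance between the predicted target F0 and the
    candidate's F0, which is known because the candidate is recorded speech."""
    return abs(predict_f0(target_features) - candidate_f0)
```

In practice the acoustic target would also cover properties such as duration and energy, with each sub-distance normalised and weighted.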

3. Mixed IFF + ASF

- a weighted sum of sub-costs: some computed from linguistic features, some from acoustic properties

- reasons:

  - ASF escapes some of the sparsity problems inherent in IFF

  - but our acoustic properties do not capture all possible acoustic variation (e.g., voice quality, such as phrase-final creaky voice)

  - and, of course, our predictions of acoustic properties will contain errors

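4. Unit selection design choices

- unit types: usually diphones or half-phones. The “zero join cost trick” allows larger units to be used (consecutive units from the database need no join cost).

- target cost: Festival’s is almost entirely IFF, with a small acoustic component

- join cost: usually includes F0, energy and spectral envelope. Some systems also apply smoothing at the joins.

- search: dynamic programming + pruning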

- database

Module 4 – the database

1. Key concepts

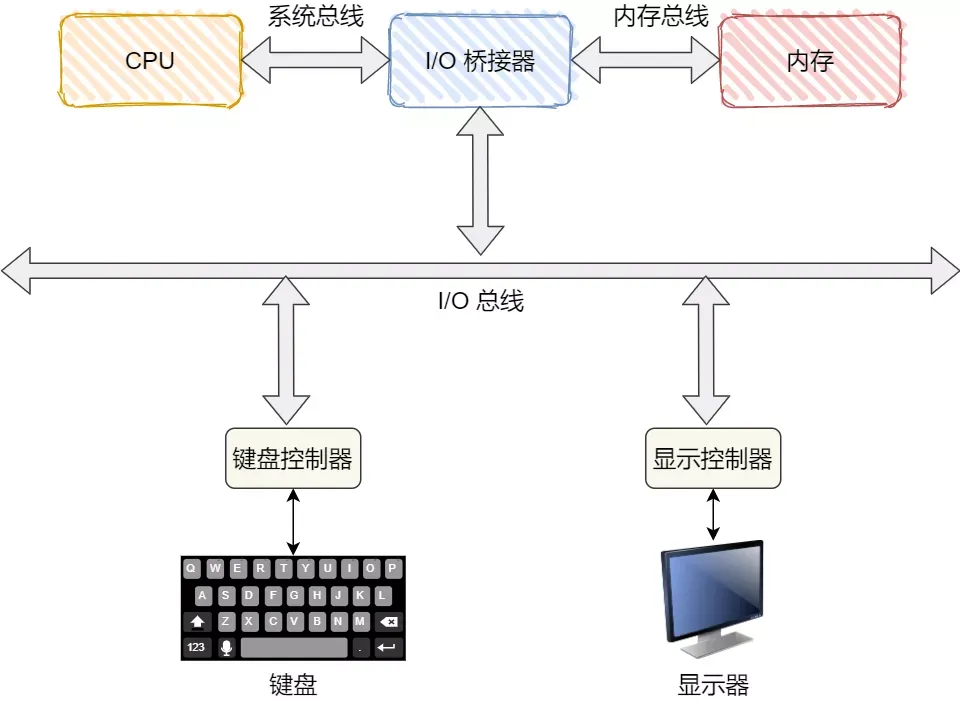

- basic ASR: Hidden Markov Models, finite-state language model, decoding

- base unit type: a relatively small number of types (e.g., diphone)

  - in unit selection, the base unit type is strictly matched between target and candidate, unless the database is badly designed, in which case we would have to back off to a similar type

  - therefore, the target cost does not need to query the base unit type, only its context

- context: the linguistic and acoustic environment in which a base unit occurs

  - phonetic context: the sounds before and after it

  - prosodic environment: stress, prosody, …

  - position: in the syllable, word, phrase, …

- coverage: we would like a database of speech that contains every possible base unit type in every possible context (i.e., every unit-in-context)

But the Zipf distribution problem applies to phonemes (and units in context) too: a few types are very frequent, while most types are rare.

2. Script design

- Why design a script: in practice, it will be impossible to find a set of sentences that includes at least one token of every unit-in-context type

- Goals

  - cover as many types (in context) as possible

  - with as few tokens as possible, i.e., in as few sentences as possible

Typical approach to script design: a greedy algorithm for text selection

1. Find a very large text corpus (e.g., as used in the ARCTIC corpora), such as newspaper text, out-of-copyright novels, or web scraping

2. Make an exhaustive ‘wish list’ of all possible types (in context) that we would like

3. Find the sentence in the corpus which provides the largest number of different types that we don’t already have

4. Add that sentence to our recording script

5. Remove those types from the ‘wish list’

6. If the recording script is long enough, stop. Otherwise, go to 3
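The greedy loop above might look like this in Python. The `diphones` helper is a hypothetical stand-in for the TTS front end (letter pairs play the role of diphones); a real system would obtain unit-in-context types from the front end.

```python
def greedy_select(corpus, units_of, wish_list, max_sentences):
    """Greedy text selection for script design.

    corpus:     candidate sentences (already cleaned)
    units_of:   maps a sentence to the set of unit-in-context types it contains
    wish_list:  all unit-in-context types we would like to cover
    Returns the selected sentences, in the order they were chosen.
    """
    wanted = set(wish_list)
    remaining = list(corpus)
    script = []
    while wanted and remaining and len(script) < max_sentences:
        # step 3: the sentence giving the most types we don't already have
        best = max(remaining, key=lambda s: len(units_of(s) & wanted))
        gained = units_of(best) & wanted
        if not gained:
            break  # nothing left in the corpus improves coverage
        script.append(best)   # step 4
        wanted -= gained      # step 5
        remaining.remove(best)
    return script


def diphones(sentence):
    """Toy stand-in for the front end: letter pairs play the role of diphones."""
    return {sentence[i:i + 2] for i in range(len(sentence) - 1)}
```

Here `max_sentences` plays the role of "long enough"; a real script budget might instead be measured in total recording time.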

Example of text selection

We’ll assume that we have a large corpus of text to start from.

- Corpus cleaning

  - Define the vocabulary (e.g., only words in our dictionary, or the most frequent words in the corpus)

  - Discard all sentences that contain out-of-vocabulary (OOV) words

  - Discard all sentences that are too long (hard to read out loud) or too short (atypical prosody)

  - Optional: discard hard-to-read sentences

- Front-end processing

  - Pass the text through the TTS front end to obtain, for each sentence:

    - the base unit sequence (e.g., diphones)

    - the linguistic context of each unit (e.g., stress)

The wish list (with 'stress' as context)

e.g., aa_aa_unstressed, aa_aa_stressed, aa_ae_unstressed, aa_ae_stressed...

Optional improvements:

- Guarantee at least one token of every base unit type

- Try to cover the rarest units first

  - count occurrences in the original large corpus to find the rarest ones

  - how to implement: include weights in the “richness” measure that reward rarer units in inverse proportion to their frequency
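One way to realise the inverse-frequency weighting is sketched below. The function names are hypothetical, and the counts are assumed to come from the original large corpus. This score can replace a plain count of new types when the greedy loop picks the next sentence.

```python
from collections import Counter


def type_frequencies(corpus_units):
    """Token counts of each unit type over the large corpus.
    corpus_units: one list of unit tokens per sentence."""
    counts = Counter()
    for units in corpus_units:
        counts.update(units)
    return counts


def richness(sentence_units, wanted, counts):
    """Score a sentence by the wanted types it covers, each weighted by the
    inverse of its corpus frequency so that rarer types count for more."""
    return sum(1.0 / counts[u] for u in set(sentence_units) & wanted if counts[u] > 0)
```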

Optional: domain-specific script

- Select (or manually design, or automatically generate) in-domain sentences

- Measure coverage obtained so far

- Fill in the gaps in coverage, using sentences selected from the large text corpus

3. Annotating the database

Now we have a script composed of sentences, and a recording of each sentence.

What needs to be done: create a time-aligned phonetic transcription of the speech, and annotate the speech with supra-segmental linguistic information.

Analytical labelling

Forced alignment

Pronunciation model = dictionary + optional vowel reduction

flat start training

Module 5 – evaluation

Cross-system comparisons: optionally, control certain components, such as

- a common database (as in the Blizzard Challenge)

- fixed annotation and label alignments

- common front end

Subjective evaluation

- ask listeners to perform some task

- test design

- materials used

Listener task

- a simple, obvious task: “choose the version you prefer”; 5-point scales; “type in the words you heard”

- or a task that asks listeners to pay attention to specific aspects of speech, e.g., prosody (time-consuming!)

- then perform a more sophisticated analysis of the outcome, e.g., a pairwise task followed by multi-dimensional scaling analysis

Test design

- absolute vs. relative judgements

  - Absolute: listeners rate a single, isolated stimulus, e.g., Mean Opinion Score (MOS)

  - Relative: listeners compare multiple stimuli

    - more than two stimuli, optionally including references (lower and/or upper): rating (e.g., multiple MOS), ranking, sorting

- interface

  - MUSHRA (MUltiple Stimuli with Hidden Reference and Anchor): Method for the subjective assessment of intermediate quality levels of coding systems

- test / sample size: number of listeners, test duration per listener, number of stimuli per listener and in total

  - maximum test duration: 45 minutes

  - at least 20 listeners, and preferably more

  - as many different sentences as possible, to mitigate the effects of any atypical ones

- the listeners (“subjects”): type of listener, how to recruit them, quality control of their responses

- within vs. between subjects designs

  - within subjects: every listener hears all of the stimuli (which may be too many!); risk of priming or ordering effects

  - between subjects: a “virtual subject” (different listeners hear different stimuli); no memory carry-over effect

Materials

Two potentially opposing requirements

- expected usage (domain) of the system

- goals of the evaluation and the type of analysis we plan to do

e.g. for intelligibility testing we might choose between:

- isolated words

  - can narrow down the range of possible errors the listener can make

  - might even design around minimal pairs (e.g., DRT (Diagnostic Rhyme Test), MRT (Modified Rhyme Test))

- full sentences

  - errors will be more variable & harder to analyse

  - a much more natural task for the listener, perhaps closer to the target domain

Materials: intelligibility

- ‘normal’ material, e.g., sentences from a newspaper: tends to produce a ceiling effect, due to interference from semantics (predictability)

- Semantically Unpredictable Sentences (SUS): e.g., “The unsure steaks overcame the zippy rudder”

- Diagnostic Rhyme Test (DRT) or Modified Rhyme Test (MRT): uses minimal pairs

  - e.g., “Now we will say cold again.” “Now we will say gold again.”

  - specific to individual phonemes: a diagnostic unit test

  - very time consuming and therefore rarely used

- other ways to avoid a ceiling effect:

  - add noise

  - induce additional cognitive load with another task in parallel

Materials: naturalness

- “Randomly” selected text: which domain? Newspapers or novels?

- Carefully designed text: e.g., Harvard (IEEE) sentences, in phonetically balanced lists

Objective evaluation

- simple distances to reference samples

  - compare acoustic properties to a natural reference sample (‘gold standard’)

  - time-align natural and synthetic speech: frame-by-frame comparison, summing up the local differences

  - does not account for natural variation (could use multiple natural examples)

- or perhaps more sophisticated auditory models

- based only on properties of the signal

  - spectral envelope: Mel-Cepstral Distortion (MCD)

  - F0 contour: Root Mean Square Error of F0 (RMSE F0) and/or correlation

- complex objective measures

  - from telecommunications (for distorted natural speech): e.g., PESQ (P.862) or POLQA (P.863)

  - PESQ (Perceptual Evaluation of Speech Quality) is based on a weighted combination of differences in many properties of speech, such as the higher-order statistical properties of various spectral coefficients
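Minimal sketches of the two signal-based distances, assuming the natural and synthetic frames have already been time-aligned (e.g., by dynamic time warping). The MCD constant 10/ln(10)·√2 and the exclusion of c0 follow a common convention; implementations differ in such details, so treat this as illustrative.

```python
import math

MCD_CONST = 10.0 / math.log(10.0) * math.sqrt(2.0)


def mel_cepstral_distortion(ref_frames, syn_frames):
    """Mean Mel-Cepstral Distortion in dB over time-aligned frame pairs.

    Each frame is a list of mel-cepstral coefficients; coefficient 0
    (overall energy) is excluded, as is conventional.
    """
    per_frame = [MCD_CONST * math.dist(r[1:], s[1:]) for r, s in zip(ref_frames, syn_frames)]
    return sum(per_frame) / len(per_frame)


def rmse_f0(ref_f0, syn_f0):
    """RMSE of F0 in Hz over frames voiced in both tracks (0 = unvoiced)."""
    pairs = [(r, s) for r, s in zip(ref_f0, syn_f0) if r > 0 and s > 0]
    return math.sqrt(sum((r - s) ** 2 for r, s in pairs) / len(pairs))
```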


Module 6 – speech signal analysis & modelling

Two parts:

speech signal analysis: generalising source+filter to excitation+spectral envelope

- epoch detection (‘pitch marking’): one point in each pitch period

- F0 estimation (‘pitch tracking’): the average rate of vocal fold vibration in a local region

- spectral envelope estimation

speech signal modelling: representing speech parameters in a form suitable for statistical modelling

- speech parameters

- representations suitable for modelling

- converting back to a waveform

1. Speech signal analysis

Often, we don’t really need the ‘true’ source and filter. We just need to work with the speech signal, so that we can

- measure individual properties: e.g., F0 for use in the join cost

- modify: phonetic identity, prosody

- manipulate waveforms: e.g., to smoothly concatenate candidates from the database

Epoch detection vs F0 estimation

Epoch detection (also known as pitch marking, Glottal Closure Instant (GCI) detection)

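- pitch-synchronous signal processing

  - TD-PSOLA (Time-Domain Pitch-Synchronous Overlap-Add)

  - or simply overlap-add joining of units: align the epochs/pitchmarks of the two units on either side of the join

  - a few vocoders operate pitch-synchronously

The principle of the PSOLA algorithm: multiply the original speech signal by a series of pitch-synchronous windows to obtain a series of short-time analysis signals; modify the short-time analysis signals to obtain short-time synthesis signals; use the pitch contours and durations of the original and target waveforms to determine a mapping between their pitch periods, and hence the required sequence of short-time pitch segments; finally, place the synthesised short-time segments synchronously with the target pitch periods and overlap-add them. The resulting waveform has the desired pitch contour and duration.

Time-Domain Pitch-Synchronous Overlap-Add (TD-PSOLA): the TD-PSOLA algorithm processes the waveform only in the time domain and makes no frequency-domain adjustments. Pitch (F0) is modified by changing the time interval between successive pitch periods, and duration is modified by repeating or omitting some speech segments.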
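A much-simplified TD-PSOLA sketch for pitch modification only (duration is kept unchanged, so no periods are repeated or omitted). It assumes the pitchmarks are already known and the signal is voiced throughout; two-period Hann windows and nearest-pitchmark selection are simplifying choices, not a definitive implementation.

```python
import math


def hann(length):
    """Symmetric Hann window; its centre sample is 1.0 for odd lengths."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * n / (length - 1)) for n in range(length)]


def td_psola_pitch(x, marks, pitch_factor):
    """Change pitch by pitch_factor (>1 raises F0) while keeping duration.

    x:     input samples
    marks: sorted analysis pitchmarks (epochs) in samples, at least two
    """
    y = [0.0] * len(x)
    t = float(marks[0])
    while t <= marks[-1]:
        # analysis pitchmark nearest to the current synthesis pitchmark
        i = min(range(len(marks)), key=lambda k: abs(marks[k] - t))
        # local pitch period, taken from a neighbouring pitchmark
        period = marks[i + 1] - marks[i] if i + 1 < len(marks) else marks[i] - marks[i - 1]
        window = hann(2 * period + 1)  # two periods long, centred on the epoch
        centre = int(round(t))
        for n in range(-period, period + 1):
            src, dst = marks[i] + n, centre + n
            if 0 <= src < len(x) and 0 <= dst < len(y):
                y[dst] += window[n + period] * x[src]  # overlap-add
        # next synthesis pitchmark: spacing scaled by 1 / pitch_factor
        t += period / pitch_factor
    return y
```

For an impulse train with one impulse every 80 samples, `pitch_factor=2.0` yields one impulse every 40 samples over the same time span.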

F0 estimation (also known as pitch determination, pitch tracking, F0 tracking, …)

- a component of the join cost in all unit selection systems

- used in the target cost, for systems that predict F0 targets (ASF)

- a parameter for most (probably all) vocoders

1.1 Epoch detection

What we need epoch detection for: PSOLA (Pitch Synchronous Overlap and Add); when joining two pieces of audio, we need to find the epochs in order to synchronise them.

A simple algorithm for epoch detection:

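- pre-process: remove unwanted frequencies with a low-pass filter (once the frequencies above F0 are removed, what is left is close to a simple sinusoid)

- peak picking: find the peaks of that sinusoid (i.e., the zeros of its derivative)

  - differentiate

  - smooth, to remove spurious low-amplitude variations

  - find zero crossings (from positive to negative)

- post-process: correct for time offset, e.g., to align the pitchmark with the largest peak in each period (the algorithm is simple, so some post-processing is needed for this alignment, and also to deal with noise)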
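The simple algorithm above (minus the post-processing) can be sketched as follows. A centred moving average stands in for a proper low-pass filter, and the window widths are arbitrary assumptions rather than recommended values.

```python
import math


def moving_average(x, width):
    """Centred moving average: a crude FIR low-pass filter / smoother."""
    half = width // 2
    out = []
    for n in range(len(x)):
        window = x[max(0, n - half): n + half + 1]
        out.append(sum(window) / len(window))
    return out


def simple_epochs(signal, lp_width=15, smooth_width=5):
    """Candidate epochs: one positive peak per period of the low-passed signal."""
    low = moving_average(signal, lp_width)                      # pre-process: low-pass
    slope = [low[n + 1] - low[n] for n in range(len(low) - 1)]  # differentiate
    slope = moving_average(slope, smooth_width)                 # smooth
    # zero crossings of the slope from positive to negative are peaks
    return [n for n in range(len(slope) - 1) if slope[n] > 0 >= slope[n + 1]]
```

On a clean 100 Hz sinusoid sampled at 8 kHz this finds one peak per 80-sample period; real speech would need the post-processing step.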

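Epoch detection (pitch marking / Glottal Closure Instant (GCI) detection) is used by signal processing algorithms, whereas F0 estimation (pitch tracking), described next, is used for parameterising the speech signal.

1.2 F0 estimation

We could estimate F0 as 1/T, where T is the interval between consecutive epochs found by epoch detection, but this gives many local errors (which can be reduced by averaging over several epochs). A smarter approach introduces a lag and looks for where the signal best overlaps with a shifted copy of itself.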

Cross-correlation (also known as “modified autocorrelation”)

We search for a peak in the (modified) autocorrelation function.

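There will be a large peak at a lag of 0, another at the pitch period, and then at every exact multiple of the pitch period. For example, in the second figure, the peak at zero lag (where the signal overlaps itself exactly) is the largest; the next largest is the one we are looking for.

The autocorrelation method (figure above, right)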

Pick the highest non-zero-lag peak over some search range

- the corresponding lag = the pitch period (measured in samples)

Over a whole utterance, we can repeat this with the analysis time t advanced every 10 ms, and so obtain a sequence of F0 values.
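A bare-bones version of the autocorrelation method: compute the autocorrelation of one frame over the lag range allowed by the chosen F0 limits and pick the highest peak; `f0_track` then repeats this every 10 ms. The F0 limits and the 40 ms frame length are assumptions, and none of the pre- or post-processing is included.

```python
import math


def autocorr_f0(frame, fs, f0_min=60.0, f0_max=400.0):
    """F0 in Hz of one voiced frame: highest autocorrelation peak within
    the lag range implied by the F0 limits [f0_min, f0_max]."""
    lag_min = int(fs / f0_max)                       # lower limit: skips the zero-lag peak
    lag_max = min(int(fs / f0_min), len(frame) - 1)  # upper limit: limits pitch halving

    def r(lag):
        # (biased) autocorrelation at this lag
        return sum(frame[n] * frame[n + lag] for n in range(len(frame) - lag))

    best_lag = max(range(lag_min, lag_max + 1), key=r)  # lag = pitch period in samples
    return fs / best_lag


def f0_track(signal, fs, frame_dur=0.04, hop_dur=0.01):
    """Apply autocorr_f0 to frames taken every hop_dur seconds (10 ms by default)."""
    frame_len, hop = int(frame_dur * fs), int(hop_dur * fs)
    return [autocorr_f0(signal[s:s + frame_len], fs)
            for s in range(0, len(signal) - frame_len + 1, hop)]
```

Note that the resolution is limited to whole-sample lags; this sketch also treats every frame as voiced.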

Not always as easy as that sounds; there are many difficulties:

- real signals are not perfectly periodic

- formants will lead to some waveform self-similarity at lags other than exact multiples of the pitch period

- choose the search range carefully:

  - if the upper limit is too high, we may choose a peak at too great a lag: overestimate the pitch period = underestimate F0 by a factor (e.g., pitch halving)

  - if the lower limit is too low, we may choose the zero-lag peak

Therefore, pitch estimation methods are essentially autocorrelation or cross-correlation, plus a variety of pre-processing and post-processing mechanisms.

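1.3 Pre-processing

- low-pass filtering: removes vocal tract information (e.g., formants) and unvoiced sounds; usually combined with downsampling (to reduce computational cost)

- spectral flattening: flatten the spectral envelope, e.g., by inverse filtering

1.4 Post-processing

There may be several F0 candidates (per frame), so we need to choose the best sequence of candidates.

- dynamic programming: e.g., YAAPT

So the general approach is pre-processing + autocorrelation + post-processing, which usually involves a large number of parameters.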

Alternatives to auto-correlation:

- cepstral domain methods

- comb filtering: an adaptive filter that eliminates the harmonics (at multiples of F0)

- probabilistic methods: supervised training

The cepstrum can be viewed as describing how quickly the (log) spectrum varies across frequency.

1.5 Evaluation

Ground truth: hand-labelled F0 contours; laryngograph (EGG) recordings; some public datasets

Error types: voicing status errors (voiced vs. unvoiced decisions); F0 errors (in voiced speech)
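Both error types can be computed frame by frame against a ground-truth track. A sketch assuming 0 marks unvoiced frames; the 20% threshold for a gross F0 error is a commonly used convention, not something specified in these notes.

```python
def f0_errors(ref, est, gross_threshold=0.2):
    """Compare an estimated F0 track with a ground-truth track (0 = unvoiced).

    Returns (voicing_error_rate, gross_f0_error_rate):
    - voicing errors: frames where the voiced/unvoiced decision differs
    - gross F0 errors: among frames voiced in both tracks, the fraction whose
      estimate deviates from the reference by more than gross_threshold
      (catches e.g. pitch halving or doubling)
    """
    voicing_errors = sum((r > 0) != (e > 0) for r, e in zip(ref, est))
    both = [(r, e) for r, e in zip(ref, est) if r > 0 and e > 0]
    gross = sum(abs(e - r) > gross_threshold * r for r, e in both)
    return voicing_errors / len(ref), (gross / len(both) if both else 0.0)
```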

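F0 estimation algorithms generally assume periodicity, so they perform poorly on creaky voice, whereas the performance of epoch detection (pitch marking) is more consistent.

2. Spectral envelope estimation

Remove the fine detail (harmonics) from the spectrum to obtain its envelope.

When the analysis window is comparable in length to the pitch period T0, the power spectrum varies periodically in time.

When the analysis window spans several pitch periods, the power spectrum is periodic in frequency (the harmonics).

As shown in the spectrogram (time-frequency representation) above.

The STRAIGHT vocoder needs an envelope estimation method like the one above to reduce the influence of the harmonics (using a self-adjusting window).

In the STRAIGHT analysis stage, an F0-adaptive window minimises the interference of the harmonics in the envelope, and the envelope is smoothed by interpolating across frequency.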
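A simple way to remove the harmonic detail is cepstral smoothing: take the log magnitude spectrum, transform to the cepstrum, keep only the low-quefrency coefficients and transform back. This is a plain linear-frequency sketch, not STRAIGHT's F0-adaptive method and without mel warping; the naive O(N^2) DFT is used only to keep the example self-contained.

```python
import cmath
import math


def dft(x):
    """Plain O(N^2) discrete Fourier transform (fine for a short demo frame)."""
    n = len(x)
    return [sum(x[k] * cmath.exp(-2j * math.pi * f * k / n) for k in range(n))
            for f in range(n)]


def log_magnitude_spectrum(frame):
    return [math.log(abs(X) + 1e-12) for X in dft(frame)]


def cepstrum(log_mag):
    """Real cepstrum: inverse DFT of the (real, even) log magnitude spectrum."""
    n = len(log_mag)
    return [sum(log_mag[f] * math.cos(2 * math.pi * f * q / n) for f in range(n)) / n
            for q in range(n)]


def cepstral_envelope(frame, n_coeffs):
    """Smoothed log-magnitude envelope keeping only the first n_coeffs cepstral
    coefficients (and their mirror images), i.e. low-quefrency liftering."""
    c = cepstrum(log_magnitude_spectrum(frame))
    n = len(c)
    kept = [c[q] if q < n_coeffs or q > n - n_coeffs else 0.0 for q in range(n)]
    return [sum(kept[q] * math.cos(2 * math.pi * f * q / n) for q in range(n))
            for f in range(n)]
```

Keeping every coefficient reproduces the original log spectrum; keeping only a few gives a smooth envelope. The same truncation idea underlies keeping about 12 coefficients for ASR and 40 to 60 for synthesis.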

3. Speech signal modelling (parametric speech synthesis)

speech parameters representation + regression function -> waveform

Speech parameters include F0, the spectral envelope and aperiodic energy.

The parameters need to be converted into a representation that is suitable for modelling, i.e.:

- fixed in number (per frame), and low-dimensional

- at a fixed frame rate

- a good separation of the prosodic and segmental identity aspects of speech, so that they can be modelled independently

- well behaved and stable when we perturb them (e.g., by averaging)

- for statistical modelling, uncorrelated parameters (so we can avoid having to model covariance)

How is the STRAIGHT vocoder implemented? First, the analysis stage, which extracts three sets of parameters:

1. smooth spectral envelope: high resolution (same as the FFT); highly correlated parameters (adjacent FFT bins, acting like a bank of overlapping filters, are highly correlated)

To make the envelope more suitable for statistical modelling, we represent it as a Mel-cepstrum (the same motivation as for MFCCs, but not the same thing). Specifically:

- warp the frequency scale: rather than a lossy discrete filterbank, use a continuous function (an all-pass filter)

- decorrelate: convert the spectrum to a cepstrum

- reduce dimensionality: truncate the cepstrum; ASR typically keeps 12 coefficients, whereas synthesis keeps more (40 to 60)

2. aperiodic energy: the ratio of periodic to aperiodic energy at each frequency. The periodic energy is the envelope through the peaks of the harmonics; the aperiodic energy is the envelope through the troughs between them. Again, this is high resolution (same as the FFT), with highly correlated parameters.

reduce dimensionality: reduce resolution by averaging across broad frequency bands (5 to 25 bands on a mel scale)

3. F0 analysis, plus a voiced/unvoiced decision
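The band-averaging step for aperiodicity can be sketched as below. The mel formula 2595·log10(1 + f/700) is a common convention, and the equal-width mel bands and the default of 5 bands are assumptions; real vocoders define their bands in their own ways.

```python
import math


def hz_to_mel(f):
    return 2595.0 * math.log10(1.0 + f / 700.0)


def band_aperiodicity(ap, fs, n_bands=5):
    """Average a high-resolution aperiodicity spectrum into n_bands bands
    of equal width on the mel scale.

    ap: per-FFT-bin aperiodicity values for bins 0..N/2 (0 Hz to fs/2).
    """
    n_bins = len(ap)
    top = hz_to_mel(fs / 2)
    sums, counts = [0.0] * n_bands, [0] * n_bands
    for k, value in enumerate(ap):
        f = k * (fs / 2) / (n_bins - 1)  # centre frequency of bin k
        b = min(int(hz_to_mel(f) / top * n_bands), n_bands - 1)
        sums[b] += value
        counts[b] += 1
    return [s / c if c else 0.0 for s, c in zip(sums, counts)]
```

A 257-bin aperiodicity spectrum (a 512-point FFT) is reduced to 5 numbers per frame.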

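In the STRAIGHT synthesis stage, the analysed F0 is used to generate a periodic pulse train (the voiced energy, i.e., the excitation); the analysed aperiodic energy is used to generate a non-periodic component (e.g., noise); and the analysed envelope is used as the filter.

The missing middle part is SPSS (next module), which synthesises new, unseen speech from predicted parameters.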

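Module 7 – Statistical Parametric Speech Synthesis (SPSS)

Comparison of the three approaches:

- unit selection: concatenates units using a target cost (over the linguistic specification) and a join cost (acoustic mismatch at the joins)

- Speech signal modelling: source-filter model; first separate the excitation and the spectral envelope, then reconstruct the waveform

- Statistical Parametric Synthesis: predicts the parameters from the linguistic specification; this is a regression problem

1. TTS as a sequence-to-sequence regression problem

Solution: regression tree + Hidden Markov Model (HMM): the HMM generates the sequence, and the regression tree makes the predictions.

- As a regression task, two sub-tasks must be completed. The first processes the phonetic sequence, decides on durations and creates the sequence of frames (an HMM can be used). The second is prediction (regression): predicting each frame from its features (a regression tree such as CART can be used).

- Implemented with context-dependent models: this deals with types that have few samples and types that have no samples, and enables parameter sharing between similar models. Method: group contexts according to phonetic features.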

Linguistic processing:

- from text to linguistic features using the front end (same as in unit selection)

- attach linguistic features to phonemes: “flatten” the linguistic structures

- we then create one context-dependent HMM for every unique combination of linguistic features

Training the HMMs:

- need labelled speech data, just as for ASR (supervised learning)

- need models for all combinations of linguistic features, including those unseen in the training data (by parameterising the models using a regression tree)

Synthesising from the HMMs:

- use the front end to predict required sequence of context-dependent models (the regression tree provides the parameters for these models)

- use those models to generate speech parameters

- use a vocoder to convert those to a waveform

Module 7+ Hybrid speech synthesis

Comparison:

- SPSS (with HMMs or DNNs): flexible, robust to labelling errors, but naturalness is limited by the vocoder

- Unit selection: potentially excellent naturalness, but strongly affected by labelling errors; hard to optimise (both the target cost and the join cost) for new data

- Hybrid synthesis: robust statistical model + waveform concatenation; uses an ASF target cost function!

Recap:

- Signal processing: methods for parameterising the speech signal, e.g., MFCCs

- Unit selection: suffers from sparsity in the linguistic and/or acoustic space

- SPSS: sequence-to-sequence regression using HMMs/DNNs

Case study: Trajectory Tiling


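Module 8 – Deep Neural Networks (DNNs)

The first paper to use DNNs: Statistical parametric speech synthesis using deep neural networks (2013)

DNNs can be applied to acoustic modelling in speech synthesis to overcome the limitations mentioned earlier, achieving a better mapping from inputs to clusters and/or from clusters to features.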

Module 9 – sequence-to-sequence models (encoder-decoder)

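These models solve three problems:

- regression from input to output

- alignment during training (similar to the ASR task)

- duration prediction during inference (synthesis)

All models solve problem 1 in a similar way; they differ mainly in how they solve 2 and 3.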

One class of models (e.g., Tacotron 2) attempts to jointly solve 2 and 3 using a single neural architecture. Another class of models (e.g., FastPitch) uses separate mechanisms for 2 and 3.

Whilst it appears elegant to solve two problems with a single architecture, we know that the problem of alignment is actually very different from the problem of duration prediction. Alignment is very similar to Automatic Speech Recognition (ASR), so we might want to take advantage of the best available ASR models to do that. In contrast, duration prediction is a straightforward regression task.
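The separation of the two problems can be illustrated with a pair of hypothetical helpers: during training, durations are read off an alignment (e.g., one obtained ASR-style); at inference time, predicted durations are used to upsample phone-level encodings to frame level. This is a sketch in the spirit of models like FastPitch, not their actual code.

```python
from collections import Counter


def durations_from_alignment(frame_to_token, n_tokens):
    """Training: turn a frame-level alignment (the index of the input token
    each output frame is aligned to) into one duration (frame count) per token."""
    counts = Counter(frame_to_token)
    return [counts[i] for i in range(n_tokens)]


def upsample_by_duration(token_encodings, durations):
    """Inference: repeat each token-level encoding durations[i] times, giving a
    frame-level sequence from which a decoder can regress acoustic frames."""
    if len(token_encodings) != len(durations):
        raise ValueError("need exactly one duration per input token")
    frames = []
    for enc, d in zip(token_encodings, durations):
        frames.extend([enc] * d)
    return frames
```

A duration predictor would be trained as a plain regressor onto the durations extracted by the first function.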

Reading

- Digital Processing of Speech Signals (L. R. Rabiner), 403 pages

- Speech Signal Processing (162 pages)

相关文章:

Speech Synthesis (LASC11062)

大纲 Module 1 – introductionModule 2 - unit selectionModule 3 - unit selection target cost functionsModule 4 - the databaseModule 5 - evaluationModule 6 - speech signal analysis & modellingModule 7 - Statistical Parametric Speech Synthesis (SPSS)Modu…...

拟合与插值|线性最小二乘拟合|非线性最小二乘拟合|一维插值|二维插值

挖掘数据背后的规律是数学建模的重要任务,拟合与插值是常用的分析方法 掌握拟合与插值的基本概念和方法熟悉Matlab相关程序实现能够从数据中挖掘数学规律 拟合问题的基本提法 拟合问题的概念 已知一组数据(以二维为例),即平面上n个点 ( x i , y i ) …...

《python语言程序设计》2018版第7章第05题几何:正n边形,一个正n边形的边都有同样的长度。角度同样 设计RegularPolygon类

结果和代码 这里只涉及一个办法 方法部分 def main():rX, rY eval(input("Enter regular polygon x and y axis:"))regular_num eval(input("Enter regular number: "))side_long eval(input("Enter side number: "))a exCode07.RegularPol…...

使用Virtio Driver实现一个计算阶乘的小程序——QEMU平台

目录 一、概述 二、代码部分 1、Virtio 前端 (1) User Space (2) Kernel Space 2、Virtio 后端 三、运行 QEMU Version:qemu-7.2.0 Linux Version:linux-5.4.239 一、概述 本篇文章的主要内容是使用Virtio前后端数据传输的机制实现一个计算阶乘的…...

【PyCharm】配置“清华镜像”地址

文章目录 前言一、清华镜像是什么?二、pip是什么?三、具体步骤1.复制镜像地址2.打开PyCharm,然后点击下图红框的选项3.在弹出的新窗口点击下图红框的选项进行添加4.在URL输入框中粘贴第一步复制的地址,名字可以不更改,…...

IO器件性能评估

整体逻辑:需要先了解到读写速率的差异,在明确使用场景。比如应用启动过程中的IO主要是属于随机读的io 评估逻辑: UFS 与 eMMC主要差别在io读写能力: 1,对比UFS、eMMC的规格书标注的io读写能力 ufs spec : sequentia…...

在js中判断对象是空对象的几种方法

使用 Object.keys() 方法 Object.keys() 方法返回对象自身的可枚举属性名称组成的数组。如果数组的长度为 0,那么对象是空的。 function isEmptyObject(obj) {return Object.keys(obj).length 0 && obj.constructor Object; }const obj1 {}; const obj2…...

【整理】后端接口设计和优化相关思路汇总

文章目录 明确的接口定义和文档化使用RESTful设计规范分页和过滤合理使用缓存限流与熔断机制安全性设计异步处理与后台任务接口参数校验(入参和出参)接口扩展性考虑核心接口,线程池隔离关键接口,日志打印接口功能单一性原则接口查…...

docker 部署 sql server

众所周知,sql server不好装,本人之前装了两次,这个数据库简直是恶心。 突然想到,用docker容器吧 果然可以 记得放开1433端口 还有 记得docker加速,不然拉不到镜像的最后工具还是要装的,这个就自己研究吧。 …...

微信云开发云存储 下载全部文件

一、安装 首先按照这个按照好依赖,打开cmd 安装 | 云开发 CloudBase - 一站式后端云服务 npm i -g cloudbase/cli 安装可能遇到的问题 ‘tcb‘ 不是内部或外部命令,也不是可运行的程序或批处理文件。-CSDN博客 二、登录 在cmd输入 tcb login 三、…...

1、巡线功能实现(7路数字循迹)

一、小车运行 1.PWM初始化函数 (pwm.c中编写) 包括四个轮子PWM通道使用的GPIO接口初始化、定时器初始化、PWM通道初始化。 void PWM_Init(uint16_t arr,uint16_t psc); 2.PWM占空比设置函数 (pwm.c中编写) 此函数调用了四个通道设置占空比的函数,作用是方便修改四…...

来了...腾讯内推的软件测试面试PDF 文档(共107页)

不多说,直接上干货(展示部分以腾讯面试纲要为例)完整版文末领取 通过大数据总结发现,其实软件测试岗的面试都是差不多的。常问的有下面这几块知识点: 全网首发-涵盖16个技术栈 第一部分,测试理论&#x…...

Android大脑--systemserver进程

用心坚持输出易读、有趣、有深度、高质量、体系化的技术文章,技术文章也可以有温度。 本文摘要 系统native进程的文章就先告一段落了,从这篇文章开始写Java层的文章,本文同样延续自述的方式来介绍systemserver进程,通过本文您将…...

python项目部署:Nginx和UWSGI认识

Nginx: HTTP服务器,反向代理,静态资源转发,负载均衡,SSL终端,缓存,高并发处理。 UWSGI: Python应用程序服务器,WSGI兼容,多进程管理,快速应用部署,多种协议支…...

【区块链+金融服务】农业大宗供应链线上融资平台 | FISCO BCOS应用案例

释放数据要素价值,FISCO BCOS 2024 应用案例征集 粮食贸易受季节性影响显著。每年的粮收季节,粮食收储企业会根据下游订单需求,从上游粮食贸易商或粮农手 里大量采购粮食,并分批销售给下游粮食加工企业(面粉厂、饲料厂…...

2025ICASSP Author Guidelines

Part I: General Information Procedure ICASSP 2025 论文提交与评审过程将与往届会议类似: 有意参加会议的作者需提交一份完整描述其创意和相关研究成果的文件,技术内容(包括图表和可能的参考文献)最多为4页&…...

Openstack 所需要的共享服务组件及核心组件

openstack 共享服务组件: 数据库服务(Database service):MariaDB及MongoDB 消息传输服务(messages queues):RabbitMQ 缓存(cache):Memcache 时间同步(time sync)&…...

解密Linux中的通用块层:加速存储系统,提升系统性能

通用块层 通用块层是Linux中的一个重要组件,用于管理不同块设备的统一接口,减少不同块设备的差异带来的影响。它位于文件系统和磁盘驱动之间,类似于Java中的适配器模式,让我们无需关注底层实现,只需提供固定接口即可。…...

浅析国有商业银行人力资源数字化平台建设

近年来,在复杂的国际经济金融环境下,中国金融市场整体运行保持稳定。然而,随着国内金融机构改革的不断深化,国有商业银行全面完成股改上市,金融市场规模逐步扩大,体系日益完善,同时行业的竞争也…...

微信h5跳转消息页关注公众号,关注按钮闪一下消失

一、需求背景 在微信里访问h5页面,在页面里跳转到微信公众号消息页关注公众号。如下图: 二、实现跳转消息页关注公众号 跳转链接是通过 https://mp.weixin.qq.com/mp/profile_ext?actionhome&__bizxxxxx&scene110#wechat_redirect 来实现。…...

设计模式和设计原则回顾

设计模式和设计原则回顾 23种设计模式是设计原则的完美体现,设计原则设计原则是设计模式的理论基石, 设计模式 在经典的设计模式分类中(如《设计模式:可复用面向对象软件的基础》一书中),总共有23种设计模式,分为三大类: 一、创建型模式(5种) 1. 单例模式(Sing…...

蓝桥杯 2024 15届国赛 A组 儿童节快乐

P10576 [蓝桥杯 2024 国 A] 儿童节快乐 题目描述 五彩斑斓的气球在蓝天下悠然飘荡,轻快的音乐在耳边持续回荡,小朋友们手牵着手一同畅快欢笑。在这样一片安乐祥和的氛围下,六一来了。 今天是六一儿童节,小蓝老师为了让大家在节…...

《通信之道——从微积分到 5G》读书总结

第1章 绪 论 1.1 这是一本什么样的书 通信技术,说到底就是数学。 那些最基础、最本质的部分。 1.2 什么是通信 通信 发送方 接收方 承载信息的信号 解调出其中承载的信息 信息在发送方那里被加工成信号(调制) 把信息从信号中抽取出来&am…...

C# SqlSugar:依赖注入与仓储模式实践

C# SqlSugar:依赖注入与仓储模式实践 在 C# 的应用开发中,数据库操作是必不可少的环节。为了让数据访问层更加简洁、高效且易于维护,许多开发者会选择成熟的 ORM(对象关系映射)框架,SqlSugar 就是其中备受…...

浅谈不同二分算法的查找情况

二分算法原理比较简单,但是实际的算法模板却有很多,这一切都源于二分查找问题中的复杂情况和二分算法的边界处理,以下是博主对一些二分算法查找的情况分析。 需要说明的是,以下二分算法都是基于有序序列为升序有序的情况…...

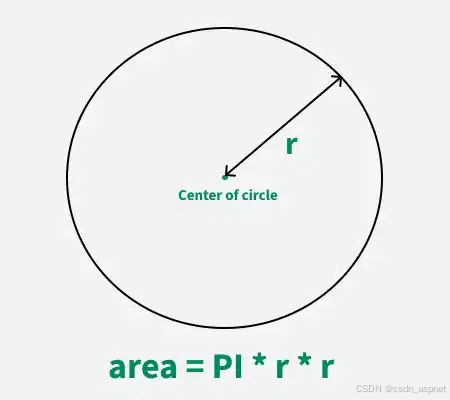

C# 求圆面积的程序(Program to find area of a circle)

给定半径r,求圆的面积。圆的面积应精确到小数点后5位。 例子: 输入:r 5 输出:78.53982 解释:由于面积 PI * r * r 3.14159265358979323846 * 5 * 5 78.53982,因为我们只保留小数点后 5 位数字。 输…...

SQL慢可能是触发了ring buffer

简介 最近在进行 postgresql 性能排查的时候,发现 PG 在某一个时间并行执行的 SQL 变得特别慢。最后通过监控监观察到并行发起得时间 buffers_alloc 就急速上升,且低水位伴随在整个慢 SQL,一直是 buferIO 的等待事件,此时也没有其他会话的争抢。SQL 虽然不是高效 SQL ,但…...

混合(Blending))

C++.OpenGL (20/64)混合(Blending)

混合(Blending) 透明效果核心原理 #mermaid-svg-SWG0UzVfJms7Sm3e {font-family:"trebuchet ms",verdana,arial,sans-serif;font-size:16px;fill:#333;}#mermaid-svg-SWG0UzVfJms7Sm3e .error-icon{fill:#552222;}#mermaid-svg-SWG0UzVfJms7Sm3e .error-text{fill…...

【Nginx】使用 Nginx+Lua 实现基于 IP 的访问频率限制

使用 NginxLua 实现基于 IP 的访问频率限制 在高并发场景下,限制某个 IP 的访问频率是非常重要的,可以有效防止恶意攻击或错误配置导致的服务宕机。以下是一个详细的实现方案,使用 Nginx 和 Lua 脚本结合 Redis 来实现基于 IP 的访问频率限制…...

代码规范和架构【立芯理论一】(2025.06.08)

1、代码规范的目标 代码简洁精炼、美观,可持续性好高效率高复用,可移植性好高内聚,低耦合没有冗余规范性,代码有规可循,可以看出自己当时的思考过程特殊排版,特殊语法,特殊指令,必须…...