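Rust: Using Polars, a Powerful Tool for Data Analysis

Even a first, small trial of Polars shows how broad and deep the library is; it is likely to hold an important place in data analysis.

The sections below list some common usage patterns.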

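1. Cargo.toml

Note that in Rust many capabilities have to be switched on through the corresponding Cargo features. Pay close attention to this, otherwise the code will not compile.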

The Polars version used below is 0.41.3.

The dependencies are as follows:

[dependencies]

polars = { version = "0.41.3", features = ["lazy","dtype-struct","polars-io","dtype-datetime","dtype-date","range","temporal","rank","serde","csv","ndarray","parquet","strings"] }

rand = "0.8.5"

chrono = "0.4.38"

serde_json = "1.0.124"

itertools = "0.13"
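The feature list above matters because Cargo features are compile-time switches: a library marks optional items with `#[cfg(feature = "...")]`, and an item whose feature is off is not compiled at all, so any call to it fails to build. The sketch below imitates that mechanism with the standard library only. The names `rank_demo`, `rank_demo_enabled` and the feature `rank_demo_feature` are hypothetical stand-ins for illustration, not Polars items.

```rust
// Hypothetical feature-gated item: it only exists when the crate is built
// with the (assumed) feature `rank_demo_feature` switched on.
#[allow(unexpected_cfgs, dead_code)]
#[cfg(feature = "rank_demo_feature")]
fn rank_demo(values: &[i32]) -> Vec<usize> {
    // Ordinal rank: position of each value in ascending order, starting at 1.
    let mut order: Vec<usize> = (0..values.len()).collect();
    order.sort_by_key(|&i| values[i]);
    let mut ranks = vec![0; values.len()];
    for (rank, &i) in order.iter().enumerate() {
        ranks[i] = rank + 1;
    }
    ranks
}

// `cfg!` evaluates the same condition to a plain bool at compile time.
#[allow(unexpected_cfgs)]
fn rank_demo_enabled() -> bool {
    cfg!(feature = "rank_demo_feature")
}

fn main() {
    if rank_demo_enabled() {
        println!("feature on: rank_demo() is compiled in");
    } else {
        // An ungated call to rank_demo() would not compile in this build,
        // which mirrors what a missing Polars feature looks like.
        println!("feature off: rank_demo() does not exist in this build");
    }
}
```

Built without the feature, only the second branch is reachable; adding the feature to the build brings `rank_demo` into existence.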

2. main.rs

Some functions are not finished yet; they are marked with todo, so please keep that in mind.
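One piece of the listing can be tried without Polars: the `structs_to_dataframe!` macro further down first splits a `Vec` of structs into one `Vec` per field and then hands those vectors to `df!`. The sketch below keeps only the splitting step and returns the vectors as a tuple. `structs_to_columns!` and the two-field `Bar` are hypothetical names used for illustration; they are not part of Polars.

```rust
// Hypothetical two-field record, standing in for the Bar struct of the listing.
struct Bar {
    close: f64,
    open: f64,
}

// Splits a collection of structs into one Vec per named field
// (the same expansion as structs_to_dataframe!, minus the df! call).
macro_rules! structs_to_columns {
    ($input:expr, [$($field:ident),+]) => {{
        // One growable vector per field, named after the field.
        $(let mut $field = Vec::new();)+
        for e in $input.into_iter() {
            $($field.push(e.$field);)+
        }
        // Return the per-field vectors as a tuple, in the order given.
        ($($field),+)
    }};
}

fn main() {
    let bars = vec![
        Bar { close: 10.1, open: 10.12 },
        Bar { close: 10.2, open: 10.22 },
    ];
    let (close, open) = structs_to_columns!(bars, [close, open]);
    println!("close: {:?}", close);
    println!("open: {:?}", open);
}
```

The unfinished `dataframe_to_structs_todo!` macro would have to do the reverse: walk the per-field columns in lockstep and build one struct per row.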

#![allow(warnings,dead_code, unused,unused_imports, unused_variables, unused_mut)]

use polars::prelude::*;

use std::time::Instant;

use serde_json::*;

use chrono::{NaiveDate};

#[allow(dead_code)]

fn main() {
    // Put whichever function you want to run here; not all of them are listed.
    //create_df_by_series();
    //create_df_by_df_macro();
    //df_apply();
    //df_to_vec_tuples_by_izip();
    //write_read_parquet_files();
    //date_to_str_in_column();
    str_to_datetime_date_cast_in_df();
    //create_list_in_df_by_apply();
    //unnest_struct_in_df();
    //as_struct_in_df();
    //struct_apply_in_df();
    //test();

}

fn create_df_by_series() {
    println!("------------- create_df_by_series test ---------------- ");
    let s1 = Series::new("from vec", vec![4, 3, 2]);
    let s2 = Series::new("from slice", &[true, false, true]);
    let s3 = Series::new("from array", ["rust", "go", "julia"]);
    let df = DataFrame::new(vec![s1, s2, s3]).unwrap();
    println!("{:?}", &df);

}

fn create_df_by_df_macro() {
    println!("------------- create_df_by_macro test ---------------- ");
    let df1: DataFrame = df!(
        "D1" => &[1, 3, 1, 5, 6],
        "D2" => &[3, 2, 3, 5, 3]
    )
    .unwrap();
    let df2 = df1
        .lazy()
        .select(&[
            col("D1").count().alias("total"),
            // The filter keeps values greater than 2, so the alias says "D1 > 2".
            col("D1").filter(col("D1").gt(lit(2))).count().alias("D1 > 2"),
        ])
        .collect()
        .unwrap();
    println!("{}", df2);

}

fn rank() {
    println!("------------- rank test ---------------- ");
    // Note: requires the `rank` feature in Cargo.toml
    let mut df = df!(
        "scores" => ["A", "A", "A", "B", "C", "B"],
        "class" => [1, 2, 3, 4, 2, 2]
    )
    .unwrap();
    let df = df
        .clone()
        .lazy()
        .with_column(
            col("class")
                .rank(RankOptions { method: RankMethod::Ordinal, descending: false }, None)
                .over([col("scores")])
                .alias("rank_"),
        )
        .sort_by_exprs([col("scores"), col("class"), col("rank_")], Default::default());
    println!("{:?}", df.collect().unwrap().head(Some(3)));

}

fn head_tail_sort() {
    println!("------------------head_tail_sort test-------------------");
    let df = df!(
        "scores" => ["A", "B", "C", "B", "A", "B"],
        "class" => [1, 3, 1, 1, 2, 3]
    )
    .unwrap();
    let head = df.head(Some(3));
    let tail = df.tail(Some(3));
    // Sort the "class" column; select() yields a new frame holding only the sorted column
    let sort = df.lazy().select([col("class").sort(Default::default())]).collect();
    println!("df head :{:?}", head);
    println!("df tail:{:?}", tail);
    println!("df sort:{:?}", sort);

}

fn filter_group_by_agg() {
    println!("----------filter_group_by_agg test--------------");
    use rand::{thread_rng, Rng};
    let mut arr = [0f64; 5];
    thread_rng().fill(&mut arr);
    let df = df!(
        "nrs" => &[Some(1), Some(2), Some(3), None, Some(5)],
        "names" => &[Some("foo"), Some("ham"), Some("spam"), Some("eggs"), None],
        "random" => &arr,
        "groups" => &["A", "A", "B", "C", "B"],
    )
    .unwrap();
    let df2 = df.clone().lazy().filter(col("groups").eq(lit("A"))).collect().unwrap();
    println!("df2 :{:?}", df2);
    println!("{}", &df);
    let out = df
        .lazy()
        .group_by([col("groups")])
        .agg([
            sum("nrs"), // sum nrs by groups
            col("random").count().alias("count"), // count group members
            // sum random where name != null
            col("random").filter(col("names").is_not_null()).sum().name().suffix("_sum"),
            col("names").reverse().alias("reversed names"),
        ])
        .collect()
        .unwrap();
    println!("{}", out);
}

fn filter_by_exclude() {
    println!("----------filter_by_exclude----------------------");
    let df = df!(
        "code" => &["600036.SH".to_string(), "600036.SH".to_string(), "600036.SH".to_string()],
        "date" => &[
            NaiveDate::from_ymd_opt(2015, 3, 14).unwrap(),
            NaiveDate::from_ymd_opt(2015, 3, 15).unwrap(),
            NaiveDate::from_ymd_opt(2015, 3, 16).unwrap(),
        ],
        "close" => &[1.21, 1.22, 1.23],
        "open" => &[1.22, 1.21, 1.23],
        "high" => &[1.22, 1.25, 1.24],
        "low" => &[1.19, 1.20, 1.21],
    )
    .unwrap();
    let lst = df["date"].as_list().slice(1, 1);
    println!("s :{:?}", lst);
    // all() below can be replaced by col("*")
    let df_filter = df.lazy().select([all().exclude(["code", "date"])]).collect().unwrap();
    println!("df_filter :{}", df_filter);
}

fn windows_over() {
    println!("------------- windows_over test ---------------- ");
    let df = df!(
        "key" => ["a", "a", "a", "a", "b", "c"],
        "value" => [1, 2, 1, 3, 3, 3]
    )
    .unwrap();
    // over(): col("value").min().over([col("key")]) groups the rows by col("key")
    // and takes the minimum within each group.
    let df = df
        .clone()
        .lazy()
        .with_column(
            col("value")
                .min() // or .max(), .mean()
                .over([col("key")])
                .alias("over_min"),
        )
        .with_column(col("value").max().over([col("key")]).alias("over_max"));
    println!("{:?}", df.collect().unwrap().head(Some(10)));

}

// read_csv
fn lazy_read_csv() {
    println!("------------- lazy_read_csv test ---------------- ");
    // features => lazy and csv
    // Adjust the path to your own file
    let filepath = "../my_duckdb/src/test.csv";
    // CSV data format:
    // 600036.XSHG,2079/7/24,3345.9,3357.8,3326.7,3357,33589,69181710.57,1
    // 600036.XSHG,2079/7/25,3346,3357.9,3326.8,3357.1,33590,69184251.47,1
    let polars_lazy_csv_time = Instant::now();
    let p = LazyCsvReader::new(filepath)
        .with_try_parse_dates(true) // only available with the `temporal` crate feature
        .with_has_header(true)
        .finish()
        .unwrap();
    let df = p.collect().expect("error to dataframe!");
    println!("polars lazy: csv shape (rows, columns): {:?}", df.shape());
    println!("polars lazy: reading the csv took {:?} seconds!", polars_lazy_csv_time.elapsed().as_secs_f32());

}

fn read_csv() {
    println!("------------- read_csv test ---------------- ");
    // features => polars-io
    use std::fs::File;
    let csv_time = Instant::now();
    let filepath = "../my_duckdb/src/test.csv";
    // CSV data format:
    // 600036.XSHG,2079/7/24,3345.9,3357.8,3326.7,3357,33589,69181710.57,1
    // 600036.XSHG,2079/7/25,3346,3357.9,3326.8,3357.1,33590,69184251.47,1
    let file = File::open(filepath).expect("could not read file");
    let df = CsvReader::new(file).finish().unwrap();
    //println!("df:{:?}",df);
    println!("csv shape (rows, columns): {:?}", df.shape());
    println!("reading the csv took {:?} seconds!", csv_time.elapsed().as_secs_f32());

}

fn read_csv2() {
    println!("------------- read_csv2 test ---------------- ");
    // features => polars-io
    // Use a file under your own directory
    let filepath = "../my_duckdb/src/test.csv"; // adjust to your own file
    // CSV data format:
    // 600036.XSHG,2079/7/24,3345.9,3357.8,3326.7,3357,33589,69181710.57,1
    // 600036.XSHG,2079/7/25,3346,3357.9,3326.8,3357.1,33590,69184251.47,1
    let df = CsvReadOptions::default()
        .with_has_header(true)
        .try_into_reader_with_file_path(Some(filepath.into()))
        .unwrap()
        .finish()
        .unwrap();
    println!("read_csv2 => df {:?}", df);

}

fn parse_date_csv() {
    println!("------------- parse_date_csv test ---------------- ");
    // features => polars-io
    let filepath = "../my_duckdb/src/test.csv";
    // Read the csv and parse its date column into a date type
    // CSV data format:
    // 600036.XSHG,2019/7/24,3345.9,3357.8,3326.7,3357,33589,69181710.57,1
    // 600036.XSHG,2019/7/25,3346,3357.9,3326.8,3357.1,33590,69184251.47,1
    let df = CsvReadOptions::default()
        .map_parse_options(|parse_options| parse_options.with_try_parse_dates(true))
        .try_into_reader_with_file_path(Some(filepath.into()))
        .unwrap()
        .finish()
        .unwrap();
    println!("{}", &df);

}

fn write_csv_df() {
    println!("----------- write_csv_df test -------------------------");
    // Cargo.toml features => csv
    // features => polars-io
    let mut df = df!(
        "code" => &["600036.SH".to_string(), "600036.SH".to_string(), "600036.SH".to_string()],
        "date" => &[
            NaiveDate::from_ymd_opt(2015, 3, 14).unwrap(),
            NaiveDate::from_ymd_opt(2015, 3, 15).unwrap(),
            NaiveDate::from_ymd_opt(2015, 3, 16).unwrap(),
        ],
        "close" => &[1.21, 1.22, 1.23],
        "open" => &[1.22, 1.21, 1.23],
        "high" => &[1.22, 1.25, 1.24],
        "low" => &[1.19, 1.20, 1.21],
    )
    .unwrap();
    let mut file = std::fs::File::create("600036SH.csv").unwrap();
    CsvWriter::new(&mut file).finish(&mut df).unwrap();

}

fn iter_dataframe_as_row() {
    println!("------------- iter_dataframe_as_row test ---------------- ");
    let starttime = Instant::now();
    let df: DataFrame = df!(
        "D1" => &[1, 3, 1, 5, 6],
        "D2" => &[3, 2, 3, 5, 3]
    )
    .unwrap();
    let (_row, _col) = df.shape();
    for i in 0.._row {
        // Collect the values of row i across all columns
        let mut rows = Vec::new();
        for j in 0.._col {
            let value = df[j].get(i).unwrap();
            rows.push(value);
        }
    }
    println!("row-wise iteration over the dataframe, cost time :{:?} seconds!", starttime.elapsed().as_secs_f32());

}

fn join_concat() {
    println!("------------- join_concat test ---------------- ");
    // Build the tables; they contain null values
    let df = df! [
        // column name => column data
        "Model" => ["iPhone XS", "iPhone 12", "iPhone 13", "iPhone 14", "Samsung S11", "Samsung S12", "Mi A1", "Mi A2"],
        "Company" => ["Apple", "Apple", "Apple", "Apple", "Samsung", "Samsung", "Xiao Mi", "Xiao Mi"],
        "Sales" => [80, 170, 130, 205, 400, 30, 14, 8],
        "Comment" => [None, None, Some("Sold Out"), Some("New Arrival"), None, Some("Sold Out"), None, None],
    ]
    .unwrap();
    let df_price = df! [
        "Model" => ["iPhone XS", "iPhone 12", "iPhone 13", "iPhone 14", "Samsung S11", "Samsung S12", "Mi A1", "Mi A2"],
        "Price" => [2430, 3550, 5700, 8750, 2315, 3560, 980, 1420],
        "Discount" => [Some(0.85), Some(0.85), Some(0.8), None, Some(0.87), None, Some(0.66), Some(0.8)],
    ]
    .unwrap();
    // Join
    // join() takes four arguments: the DataFrame to join with, the left key(s),
    // the right key(s), and the join arguments (join type)
    let df_join = df.join(&df_price, ["Model"], ["Model"], JoinArgs::from(JoinType::Inner)).unwrap();
    println!("{:?}", &df_join);
    let df_v1 = df!(
        "a" => &[1],
        "b" => &[3],
    )
    .unwrap();
    let df_v2 = df!(
        "a" => &[2],
        "b" => &[4],
    )
    .unwrap();
    let df_vertical_concat = concat(
        [df_v1.clone().lazy(), df_v2.clone().lazy()],
        UnionArgs::default(),
    )
    .unwrap()
    .collect()
    .unwrap();
    println!("{}", &df_vertical_concat);
}

fn get_slice_scalar_from_df() {
    println!("------------- get_slice_scalar_from_df test ---------------- ");
    let df: DataFrame = df!(
        "D1" => &[1, 2, 3, 4, 5],
        "D2" => &[3, 2, 3, 5, 3]
    )
    .unwrap();
    // slice(1, 4): starting at the 2nd row (inclusive), take 4 rows of every column
    let slice = &df.slice(1, 4);
    println!("slice :{:?}", &slice);
    // Scalar in the 2nd column (index 1) at index 3, i.e. its 4th value
    let scalar = df[1].get(3).unwrap();
    println!("scalar :{:?}", scalar);

}

fn replace_drop_col() {
    println!("------------- replace_drop_col test ---------------- ");
    // Cargo.toml: features => replace
    let mut df: DataFrame = df!(
        "D1" => &[1, 2, 3, 4, 5],
        "D2" => &[3, 2, 3, 5, 3]
    )
    .unwrap();
    let new_s1 = Series::new("", &[2, 3, 4, 5, 6]); // "" leaves the column name unchanged
    // Replace column D1
    let df2 = df.replace("D1", new_s1).unwrap();
    // Drop column D2
    let df3 = df2.drop_many(&["D2"]);
    println!("df3:{:?}", df3);

}

fn drop_null_fill_null() {
    println!("------------- drop_null_fill_null test ---------------- ");
    let df: DataFrame = df!(
        "D1" => &[None, Some(2), Some(3), Some(4), None],
        "D2" => &[3, 2, 3, 5, 3]
    )
    .unwrap();
    // Fill each null with the last non-null value before it in the same column;
    // a leading null has no predecessor and stays null
    let df2 = df.fill_null(FillNullStrategy::Forward(None)).unwrap();
    // Forward(Option): fill with the previous non-null value; the Option limits how many consecutive nulls are filled
    // Backward(Option): fill with the next non-null value; the Option limits how many consecutive nulls are filled
    // Mean: fill with the arithmetic mean
    // Min: fill with the minimum
    // Max: fill with the maximum
    // Zero: fill with 0
    // One: fill with 1
    // MaxBound: fill with the upper bound of the data type's range
    // MinBound: fill with the lower bound of the data type's range
    println!("fill_null :{:?}", df2);
    // Drop the rows where D1 is still null
    let df3 = df2.drop_nulls(Some(&["D1"])).unwrap();
    println!("drop_nulls :{:?}", df3);
}

fn compute_return() {
    println!("-----------compute_return test -----------------------");
    let df = df!(
        "code" => &["600036.SH".to_string(), "600036.SH".to_string(), "600036.SH".to_string()],
        "date" => &[
            NaiveDate::from_ymd_opt(2015, 3, 14).unwrap(),
            NaiveDate::from_ymd_opt(2015, 3, 15).unwrap(),
            NaiveDate::from_ymd_opt(2015, 3, 16).unwrap(),
        ],
        "close" => &[1.21, 1.22, 1.23],
        "open" => &[1.22, 1.21, 1.23],
        "high" => &[1.22, 1.25, 1.24],
        "low" => &[1.19, 1.20, 1.21],
    )
    .unwrap();
    // Return relative to the first close: close / first(close) - 1
    let _df = df
        .clone()
        .lazy()
        .with_columns([(col("close") / col("close").first() - lit(1.0)).alias("ret")])
        .collect()
        .unwrap();
    println!("_df :{}", _df);

}

fn standardlize_center() {
    println!("------------- standardlize_center test ---------------- ");
    let df: DataFrame = df!(
        "D1" => &[1, 2, 3, 4, 5],
        "D2" => &[3, 2, 3, 5, 3]
    )
    .unwrap();
    // Scaling (called standardization here): divide every value by its column's maximum
    // cast(): int => Float64
    let standardization = df
        .lazy()
        .select([col("*").cast(DataType::Float64) / col("*").cast(DataType::Float64).max()]);
    // Center the scaled columns by subtracting the column mean
    let center = standardization.select([col("*") - col("*").mean()]).collect().unwrap();
    println!("standardlize : {:?}", center);

}

fn create_list_in_df_by_apply() {
    println!("----------creat_list_in_df_by_apply test ------------------------");
    let df = df!(
        "lang" => &["go", "rust", "go", "julia", "julia", "rust", "rust"],
        "users" => &[223, 1032, 222, 42, 1222, 3213, 4445],
        "year" => &["2020", "2021", "2022", "2023", "2024", "2025", "2026"]
    )
    .unwrap();
    println!("df :{}", df);
    let out = df
        .clone()
        .lazy()
        .group_by([col("lang")])
        .agg([col("users")
            .apply(
                |s| {
                    let v = s.i32().unwrap();
                    // Replace nulls by 0 and collect the group's values
                    let out = v
                        .into_iter()
                        .map(|v| match v {
                            Some(v_) => v_,
                            _ => 0,
                        })
                        .collect::<Vec<i32>>();
                    Ok(Some(Series::new("_", out)))
                },
                GetOutput::default(),
            )
            .alias("aggr_vec")])
        //.with_column(col("aggr_sum").list().alias("aggr_sum_first"))
        .collect()
        .unwrap();
    println!("{}", out);

}

fn create_struct_in_df_by_apply() {
    println!("-----------------create_struct_in_df_by_apply test -------------------------");
    // Cargo.toml features => "dtype-struct"
    use polars::prelude::*;
    let df = df!(
        "keys" => &["a", "a", "b"],
        "values" => &[10, 7, 1],
    )
    .unwrap();
    let out = df
        .clone()
        .lazy()
        .with_column(col("values").apply(
            |s| {
                let s = s.i32()?;
                let out_1: Vec<Option<i32>> = s
                    .into_iter()
                    .map(|v| match v {
                        Some(v_) => Some(v_ * 10),
                        _ => None,
                    })
                    .collect();
                let out_2: Vec<Option<i32>> = s
                    .into_iter()
                    .map(|v| match v {
                        Some(v_) => Some(v_ * 20),
                        _ => None,
                    })
                    .collect();
                // Pack the two derived vectors into one struct column
                let out = df!(
                    "v1" => &out_1,
                    "v2" => &out_2,
                )
                .unwrap()
                .into_struct("vals")
                .into_series();
                Ok(Some(out))
            },
            GetOutput::default(),
        ))
        .collect()
        .unwrap();
    println!("{}", out);

}

fn field_value_counts() {
    println!("--------------field_value_counts test---------------");
    let ratings = df!(
        "Movie" => &["Cars", "IT", "ET", "Cars", "Up", "IT", "Cars", "ET", "Up", "ET"],
        "Theatre" => &["NE", "ME", "IL", "ND", "NE", "SD", "NE", "IL", "IL", "SD"],
        "Avg_Rating" => &[4.5, 4.4, 4.6, 4.3, 4.8, 4.7, 4.7, 4.9, 4.7, 4.6],
        "Count" => &[30, 27, 26, 29, 31, 28, 28, 26, 33, 26],
    )
    .unwrap();
    println!("{}", &ratings);
    let out = ratings
        .clone()
        .lazy()
        .select([col("Theatre").value_counts(true, true, "count".to_string(), false)])
        .collect()
        .unwrap();
    println!("{}", &out);
}

// Macros
macro_rules! structs_to_dataframe {
    ($input:expr, [$($field:ident),+]) => {{
        // Extract the field values into separate vectors
        $(let mut $field = Vec::new();)*
        for e in $input.into_iter() {
            $($field.push(e.$field);)*
        }
        df! {
            $(stringify!($field) => $field,)*
        }
    }};

}

macro_rules! dataframe_to_structs_todo {
    ($df:expr, $StructName:ident, [$($field:ident),+]) => {{
        // TODO: convert the listed fields of df into Vec<StructName>
        let mut vec: Vec<$StructName> = Vec::new();
        vec
    }};

}

fn df_to_structs_by_macro_todo() {
    println!("---------------df_to_structs_by_macro_todo test -------------------");
    let df = df!(
        "date" => &[
            NaiveDate::from_ymd_opt(2015, 3, 14).unwrap(),
            NaiveDate::from_ymd_opt(2015, 3, 15).unwrap(),
            NaiveDate::from_ymd_opt(2015, 3, 16).unwrap(),
        ],
        "close" => &[1.21, 1.22, 1.23],
        "open" => &[1.22, 1.21, 1.23],
        "high" => &[1.22, 1.25, 1.24],
        "low" => &[1.19, 1.20, 1.21],
    )
    .unwrap();
    // Convert df => Vec<Bar> (the macro is still a stub and returns an empty Vec)
    struct Bar {
        date: NaiveDate,
        close: f64,
        open: f64,
        high: f64,
        low: f64,
    }
    impl Bar {
        fn bar(date: NaiveDate, close: f64, open: f64, high: f64, low: f64) -> Self {
            Bar { date, close, open, high, low }
        }
    }
    let bars: Vec<Bar> = dataframe_to_structs_todo!(df, Bar, [date, close, open, high, low]);
    println!("df:{:?}", df);

}

fn structs_to_df_by_macro() {
    println!(" ---------------- structs_to_df_by_macro test -----------------------");
    struct Bar {
        date: NaiveDate,
        close: f64,
        open: f64,
        high: f64,
        low: f64,
    }
    impl Bar {
        fn new(date: NaiveDate, close: f64, open: f64, high: f64, low: f64) -> Self {
            Bar { date, close, open, high, low }
        }
    }
    let test_bars: Vec<Bar> = vec![
        Bar::new(NaiveDate::from_ymd_opt(2024, 1, 1).unwrap(), 10.1, 10.12, 10.2, 9.99),
        Bar::new(NaiveDate::from_ymd_opt(2024, 1, 2).unwrap(), 10.2, 10.22, 10.3, 10.1),
    ];
    let df = structs_to_dataframe!(test_bars, [date, close, open, high, low]).unwrap();
    println!("df:{:?}", df);

}

fn df_to_structs_by_iter() {
    println!("---------------df_to_structs_by_iter test----------------");
    // Cargo.toml: features => "dtype-struct"
    let now = Instant::now();
    #[derive(Debug, Clone)]
    struct Bar {
        code: String,
        date: NaiveDate,
        close: f64,
        open: f64,
        high: f64,
        low: f64,
    }
    impl Bar {
        fn new(code: String, date: NaiveDate, close: f64, open: f64, high: f64, low: f64) -> Self {
            Bar { code, date, close, open, high, low }
        }
    }
    let df = df!(
        "code" => &["600036.SH".to_string(), "600036.SH".to_string(), "600036.SH".to_string()],
        "date" => &[
            NaiveDate::from_ymd_opt(2015, 3, 14).unwrap(),
            NaiveDate::from_ymd_opt(2015, 3, 15).unwrap(),
            NaiveDate::from_ymd_opt(2015, 3, 16).unwrap(),
        ],
        "close" => &[1.21, 1.22, 1.23],
        "open" => &[1.22, 1.21, 1.23],
        "high" => &[1.22, 1.25, 1.24],
        "low" => &[1.19, 1.20, 1.21],
    )
    .unwrap();
    let mut bars: Vec<Bar> = Vec::new();
    let rows_data = df.into_struct("bars");
    let start_date = NaiveDate::from_ymd_opt(1970, 1, 2).unwrap();
    for row_data in &rows_data {
        let code = row_data.get(0).unwrap();
        let mut new_code = "".to_string();
        if let &AnyValue::String(value) = code {
            new_code = value.to_string();
        }
        let mut new_date = NaiveDate::from_ymd_opt(2000, 1, 1).unwrap();
        // AnyValue::Date holds days since 1970-01-01, while from_num_days_from_ce_opt
        // counts from 0001-01-01 (day 1), so the offset between the two is added back.
        let since_days = start_date.signed_duration_since(NaiveDate::from_ymd_opt(1, 1, 1).unwrap());
        let date = row_data.get(1).unwrap();
        if let &AnyValue::Date(dt) = date {
            let tmp_date = NaiveDate::from_num_days_from_ce_opt(dt).unwrap();
            new_date = tmp_date.checked_add_signed(since_days).unwrap();
        }
        let open = row_data[3].extract::<f64>().unwrap();
        let high = row_data[4].extract::<f64>().unwrap();
        let close = row_data[2].extract::<f64>().unwrap();
        let low = row_data[5].extract::<f64>().unwrap();
        bars.push(Bar::new(new_code, new_date, close, open, high, low));
    }
    println!("df_to_structs2 => structchunk : cost time :{:?}", now.elapsed().as_secs_f32());
    println!("bars :{:?}", bars);

}

fn df_to_structs_by_zip() {
    println!("-----------df_to_structs_by_zip test --------------------");
    // The same approach works for df -> struct, tuple, hashmap, etc.
    let now = Instant::now();
    #[derive(Debug, Clone)]
    struct Bar {
        code: String,
        date: NaiveDate,
        close: f64,
        open: f64,
        high: f64,
        low: f64,
    }
    impl Bar {
        fn new(code: String, date: NaiveDate, close: f64, open: f64, high: f64, low: f64) -> Self {
            Bar { code, date, close, open, high, low }
        }
    }
    let df = df!(
        "code" => &["600036.SH".to_string(), "600036.SH".to_string(), "600036.SH".to_string()],
        "date" => &[
            NaiveDate::from_ymd_opt(2015, 3, 14).unwrap(),
            NaiveDate::from_ymd_opt(2015, 3, 15).unwrap(),
            NaiveDate::from_ymd_opt(2015, 3, 16).unwrap(),
        ],
        "close" => &[1.21, 1.22, 1.23],
        "open" => &[1.22, 1.21, 1.23],
        "high" => &[1.22, 1.25, 1.24],
        "low" => &[1.19, 1.20, 1.21],
    )
    .unwrap();
    let bars: Vec<Bar> = df["code"]
        .str()
        .unwrap()
        .iter()
        .zip(df["date"].date().unwrap().as_date_iter())
        .zip(df["close"].f64().unwrap().iter())
        .zip(df["open"].f64().unwrap().iter())
        .zip(df["high"].f64().unwrap().iter())
        .zip(df["low"].f64().unwrap().iter())
        .map(|(((((code, date), close), open), high), low)| {
            Bar::new(
                code.unwrap().to_string(),
                date.unwrap(),
                close.unwrap(),
                open.unwrap(),
                high.unwrap(),
                low.unwrap(),
            )
        })
        .collect();
    println!("df_to_struct3 => zip : cost time :{:?} seconds!", now.elapsed().as_secs_f32());
    println!("bars :{:?}", bars);
    // For reference: izip! from itertools avoids the nested tuple parentheses.
    //use itertools::izip;
    //izip!(code, date, close, open,high,low).collect::<Vec<_>>() // Vec of 6-tuples
}

fn df_to_vec_tuples_by_izip() {
    println!("-------------df_to_tuple_by_izip test---------------");
    use itertools::izip;
    // In my real code this is generated from two joined DFs.
    let df = df!(
        "code" => &["600036.sh".to_string(), "600036.sh".to_string(), "600036.sh".to_string()],
        "date" => &[
            NaiveDate::from_ymd_opt(2015, 3, 14).unwrap(),
            NaiveDate::from_ymd_opt(2015, 3, 15).unwrap(),
            NaiveDate::from_ymd_opt(2015, 3, 16).unwrap(),
        ],
        "close" => &[1.21, 1.22, 1.23],
        "open" => &[1.22, 1.21, 1.23],
        "high" => &[1.22, 1.25, 1.24],
        "low" => &[1.19, 1.20, 1.21],
    )
    .unwrap();
    let mut dates = df.column("date").unwrap().date().unwrap().as_date_iter();
    let mut codes = df.column("code").unwrap().str().unwrap().iter();
    let mut closes = df.column("close").unwrap().f64().unwrap().iter();
    let mut tuples = Vec::new();
    for (date, code, close) in izip!(&mut dates, &mut codes, &mut closes) {
        //println!("{:?} {:?} {:?}", date.unwrap(), code.unwrap(), close.unwrap());
        tuples.push((date.unwrap(), code.unwrap(), close.unwrap()));
    }
    // Or collect directly. The loop above has drained the three iterators,
    // so fresh ones are built here; reusing them would give an empty Vec.
    let tuples2 = izip!(
        df.column("date").unwrap().date().unwrap().as_date_iter(),
        df.column("code").unwrap().str().unwrap().iter(),
        df.column("close").unwrap().f64().unwrap().iter()
    )
    .collect::<Vec<_>>();
    println!("tuples :{:?}", tuples);
    println!("tuples2 :{:?}", tuples2);

}

fn series_to_vec() {
    println!("------------series_to_vec test-----------------------");
    let df = df!(
        "date" => &[
            NaiveDate::from_ymd_opt(2015, 3, 14).unwrap(),
            NaiveDate::from_ymd_opt(2015, 3, 15).unwrap(),
            NaiveDate::from_ymd_opt(2015, 3, 16).unwrap(),
        ],
    )
    .unwrap();
    let vec: Vec<Option<NaiveDate>> = df["date"].date().unwrap().as_date_iter().collect();
    println!("vec :{:?}", vec);

}

fn series_to_vec2() {
    println!("------------series_to_vec2 test----------------------");
    let df = df!(
        "lang" => &["rust", "go", "julia"],
    )
    .unwrap();
    // The frame only has a "lang" column, so that is the one to read
    let vec: Vec<Option<&str>> = df["lang"]
        .str()
        .unwrap()
        .into_iter()
        .map(|s| match s {
            Some(v_) => Some(v_),
            _ => None,
        })
        .collect();
    println!("vec:{:?}", vec);
}

fn structs_in_df() {
    println!("-----------structs_in_df test -----------------");
    // feature => dtype-struct
    let df = df!(
        "code" => &["600036.SH".to_string(), "600036.SH".to_string(), "600036.SH".to_string()],
        "date" => &[
            NaiveDate::from_ymd_opt(2015, 3, 14).unwrap(),
            NaiveDate::from_ymd_opt(2015, 3, 15).unwrap(),
            NaiveDate::from_ymd_opt(2015, 3, 16).unwrap(),
        ],
        "close" => &[1.21, 1.22, 1.23],
        "open" => &[1.22, 1.21, 1.23],
        "high" => &[1.22, 1.25, 1.24],
        "low" => &[1.19, 1.20, 1.21],
    )
    .unwrap()
    .into_struct("bars")
    .into_series();
    println!("{}", &df);
    // how to get series from struct column?
    let out = df.struct_().unwrap().field_by_name("close").unwrap();
    println!("out :{}", out);
    // how to get struct value in df
    let _ = df
        .struct_()
        .unwrap()
        .into_iter()
        .map(|rows| {
            println!(
                "code :{} date :{} close:{},open:{},high:{},low:{}",
                rows[0], rows[1], rows[2], rows[3], rows[4], rows[5]
            );
        })
        .collect::<Vec<_>>();
}

fn list_in_df() {
    println!("-------------list_in_df test ------------------------------");
    let df = df!(
        "code" => &["600036.SH".to_string(), "600036.SH".to_string(), "600036.SH".to_string()],
        "date" => &[
            NaiveDate::from_ymd_opt(2015, 3, 14).unwrap(),
            NaiveDate::from_ymd_opt(2015, 3, 15).unwrap(),
            NaiveDate::from_ymd_opt(2015, 3, 16).unwrap(),
        ],
        "close" => &[1.21, 1.22, 1.23],
        "open" => &[1.22, 1.21, 1.23],
        "high" => &[1.22, 1.25, 1.24],
        "low" => &[1.19, 1.20, 1.21],
    )
    .unwrap();
    let lst = df["close"].as_list().get(0).unwrap();
    println!("lst :{:?}", lst);
}

fn serialize_df_to_json() {
    println!("--------------- serialize_df_to_json test -----------------------");
    // Cargo.toml features => serde
    let df = df!(
        "code" => &["600036.SH".to_string(), "600036.SH".to_string(), "600036.SH".to_string()],
        "date" => &[
            NaiveDate::from_ymd_opt(2015, 3, 14).unwrap(),
            NaiveDate::from_ymd_opt(2015, 3, 15).unwrap(),
            NaiveDate::from_ymd_opt(2015, 3, 16).unwrap(),
        ],
        "close" => &[1.21, 1.22, 1.23],
        "open" => &[1.22, 1.21, 1.23],
        "high" => &[1.22, 1.25, 1.24],
        "low" => &[1.19, 1.20, 1.21],
    )
    .unwrap();
    let df_json = serde_json::to_value(&df).unwrap();
    println!("df_json {df_json}");

}

fn serialize_df_to_binary_todo() {
    println!("---------serialize_df_to_binary_todo test -------------");
    // Cargo.toml features => serde
    let df = df!(
        "code" => &["600036.SH".to_string(), "600036.SH".to_string(), "600036.SH".to_string()],
        "date" => &[
            NaiveDate::from_ymd_opt(2015, 3, 14).unwrap(),
            NaiveDate::from_ymd_opt(2015, 3, 15).unwrap(),
            NaiveDate::from_ymd_opt(2015, 3, 16).unwrap(),
        ],
        "close" => &[1.21, 1.22, 1.23],
        "open" => &[1.22, 1.21, 1.23],
        "high" => &[1.22, 1.25, 1.24],
        "low" => &[1.19, 1.20, 1.21],
    )
    .unwrap();
    // todo
    //let df_binary = serde_json::to_value(&df).unwrap();
    //println!("df_json {df_binary}");

}

fn df_to_ndarray() {
    println!("-------------- df_to_ndarray test ------------------------");
    // Cargo.toml features => ndarray
    let df = df!(
        "code" => &["600036.SH".to_string(), "600036.SH".to_string(), "600036.SH".to_string()],
        "date" => &[
            NaiveDate::from_ymd_opt(2015, 3, 14).unwrap(),
            NaiveDate::from_ymd_opt(2015, 3, 15).unwrap(),
            NaiveDate::from_ymd_opt(2015, 3, 16).unwrap(),
        ],
        "close" => &[1.21, 1.22, 1.23],
        "open" => &[1.22, 1.21, 1.23],
        "high" => &[1.22, 1.25, 1.24],
        "low" => &[1.19, 1.20, 1.21],
    )
    .unwrap();
    // Before converting to ndarray, drop the non-f64 columns
    let df_filter = df.lazy().select([all().exclude(["code", "date"])]).collect().unwrap();
    let ndarray = df_filter.to_ndarray::<Float64Type>(IndexOrder::Fortran).unwrap();
    println!("ndarray :{}", ndarray);

}

fn df_apply() {
    println!("--------------df_apply--------------------");
    // df_apply: apply a function to one column of the df
    // Here the lowercase "code" column is turned into uppercase
    // Note the `mut`!
    let mut df = df!(
        "code" => &["600036.sh".to_string(), "600036.sh".to_string(), "600036.sh".to_string()],
        "date" => &[
            NaiveDate::from_ymd_opt(2015, 3, 14).unwrap(),
            NaiveDate::from_ymd_opt(2015, 3, 15).unwrap(),
            NaiveDate::from_ymd_opt(2015, 3, 16).unwrap(),
        ],
        "close" => &[1.21, 1.22, 1.23],
        "open" => &[1.22, 1.21, 1.23],
        "high" => &[1.22, 1.25, 1.24],
        "low" => &[1.19, 1.20, 1.21],
    )
    .unwrap();
    fn code_to_uppercase(code_val: &Series) -> Series {
        code_val
            .str()
            .unwrap()
            .into_iter()
            .map(|opt_code: Option<&str>| opt_code.map(|code: &str| code.to_uppercase()))
            .collect::<StringChunked>()
            .into_series()
    }
    // Uppercase the "code" column; there are two ways to do it
    // method 1
    //df.apply("code", code_to_uppercase).unwrap();
    // method 2
    df.apply_at_idx(0, code_to_uppercase).unwrap(); // operate on column 0, the first column
    println!("df {}", df);
}

fn write_read_parquet_files() {
    println!("------------ write_read_parquet_files test -------------------------");
    // features => parquet
    let mut df = df!(
        "code" => &["600036.sh".to_string(), "600036.sh".to_string(), "600036.sh".to_string()],
        "date" => &[
            NaiveDate::from_ymd_opt(2015, 3, 14).unwrap(),
            NaiveDate::from_ymd_opt(2015, 3, 15).unwrap(),
            NaiveDate::from_ymd_opt(2015, 3, 16).unwrap(),
        ],
        "close" => &[1.21, 1.22, 1.23],
        "open" => &[1.22, 1.21, 1.23],
        "high" => &[1.22, 1.25, 1.24],
        "low" => &[1.19, 1.20, 1.21],
    )
    .unwrap();
    write_parquet(&mut df);
    let df_ = read_parquet("600036SH.parquet");
    let _df_ = scan_parquet("600036SH.parquet").select([all()]).collect().unwrap();
    assert_eq!(df, df_);
    assert_eq!(df, _df_);
    println!("pass write_read parquet test!");
    fn write_parquet(df: &mut DataFrame) {
        let mut file = std::fs::File::create("600036SH.parquet").unwrap();
        ParquetWriter::new(&mut file).finish(df).unwrap();
    }
    fn read_parquet(filepath: &str) -> DataFrame {
        let mut file = std::fs::File::open(filepath).unwrap();
        let df = ParquetReader::new(&mut file).finish().unwrap();
        df
    }
    fn scan_parquet(filepath: &str) -> LazyFrame {
        let args = ScanArgsParquet::default();
        let lf = LazyFrame::scan_parquet(filepath, args).unwrap();
        lf
    }
}

fn date_to_str_in_column() {
    println!("---------------date_to_str test----------------------");
    // feature => temporal
    let mut df = df!(
        "code" => &["600036.sh".to_string(), "600036.sh".to_string(), "600036.sh".to_string()],
        "date" => &[
            NaiveDate::from_ymd_opt(2015, 3, 14).unwrap(),
            NaiveDate::from_ymd_opt(2015, 3, 15).unwrap(),
            NaiveDate::from_ymd_opt(2015, 3, 16).unwrap(),
        ],
        "close" => &[1.21, 1.22, 1.23],
        "open" => &[1.22, 1.21, 1.23],
        "high" => &[1.22, 1.25, 1.24],
        "low" => &[1.19, 1.20, 1.21],
    )
    .unwrap();
    // Add a column: date -> date_str (%h is the abbreviated month name, e.g. Mar)
    let df = df
        .clone()
        .lazy()
        .with_columns([cols(["date"]).dt().to_string("%Y-%h-%d").alias("date_str")])
        .collect()
        .unwrap();
    println!("df:{}", df);

}

fn when_logicial_in_df() {
    println!("------------------when_condition_in_df test----------------------");
    let df = df!(
        "name" => &["c", "julia", "go", "python", "rust", "c#", "matlab"],
        "run-time" => &[1.0, 1.11, 1.51, 3.987, 1.01, 1.65, 2.11]
    )
    .unwrap();
    // true when the run time lies within [1.0, 1.5], false otherwise
    let df_conditional = df
        .clone()
        .lazy()
        .select([
            col("run-time"),
            when(col("run-time").lt_eq(1.50).and(col("run-time").gt_eq(1.0)))
                .then(lit(true))
                .otherwise(lit(false))
                .alias("speed_conditional"),
        ])
        .collect()
        .unwrap();
    println!("{}", &df_conditional);

}

fn str_to_datetime_date_cast_in_df() {
    println!("--------------date_cast_in_df test---------------------------");
    // features => strings, otherwise str() does not work!
    let df = df!(
        "custom" => &["Tom", "Jack", "Rose"],
        "login" => &["2024-08-14", "2024-08-12", "2023-08-09"], // first login date
        "order" => &["2024-08-14 10:15:32", "2024-08-14 11:22:32", "2024-08-14 14:12:52"], // order time
        "send" => &["2024-08-15 10:25:38", "2024-08-15 14:28:38", "2024-08-16 09:07:32"], // shipping time
    )
    .unwrap();
    let out = df
        .lazy()
        .with_columns([col("login").str().to_date(StrptimeOptions::default()).alias("login_dt")])
        .with_columns([col("login")
            .str()
            .to_datetime(Some(TimeUnit::Microseconds), None, StrptimeOptions::default(), lit("raise"))
            .alias("login_dtime")])
        .with_columns([
            col("order")
                .str()
                .strptime(
                    DataType::Datetime(TimeUnit::Milliseconds, None),
                    StrptimeOptions::default(),
                    lit("raise"),
                )
                .alias("order_dtime"),
            col("send")
                .str()
                .strptime(
                    DataType::Datetime(TimeUnit::Milliseconds, None),
                    StrptimeOptions::default(),
                    lit("raise"), // raise an error if the parsing fails
                )
                .alias("send_dtime"),
        ])
        .with_columns([(col("send_dtime") - col("order_dtime"))
            .alias("duration(seconds)")
            .dt()
            .total_seconds()])
        .collect()
        .unwrap();
    println!("out :{}", out);

}

fn unnest_struct_in_df() {
    // unnest() expands a struct column of a dataframe into separate columns
    // Build a dataframe that contains a struct column
    let mut df: DataFrame = df!(
        "company" => &["ailibaba", "baidu"],
        "profit" => &[777277778.0, 86555555.9]
    )
    .unwrap();
    let series = df.clone().into_struct("info").into_series();
    let mut _df = df.insert_column(0, series).unwrap();
    println!("_df :{}", df);
    // unnest() is the inverse of into_struct
    let out = df
        .lazy()
        .with_column(col("info").struct_().rename_fields(vec!["co.".to_string(), "pl".to_string()]))
        // expand all fields of the struct
        .unnest(["info"])
        .collect()
        .unwrap();
    println!("out :{}", out);

    // _df :shape: (2, 3)
    // ┌───────────────────────────┬──────────┬──────────────┐
    // │ info                      ┆ company  ┆ profit       │
    // │ ---                       ┆ ---      ┆ ---          │
    // │ struct[2]                 ┆ str      ┆ f64          │
    // ╞═══════════════════════════╪══════════╪══════════════╡
    // │ {"ailibaba",7.77277778e8} ┆ ailibaba ┆ 7.77277778e8 │
    // │ {"baidu",8.6556e7}        ┆ baidu    ┆ 8.6556e7     │
    // └───────────────────────────┴──────────┴──────────────┘
    // out :shape: (2, 4)
    // ┌──────────┬──────────────┬──────────┬──────────────┐
    // │ co.      ┆ pl           ┆ company  ┆ profit       │
    // │ ---      ┆ ---          ┆ ---      ┆ ---          │
    // │ str      ┆ f64          ┆ str      ┆ f64          │
    // ╞══════════╪══════════════╪══════════╪══════════════╡
    // │ ailibaba ┆ 7.77277778e8 ┆ ailibaba ┆ 7.77277778e8 │
    // │ baidu    ┆ 8.6556e7     ┆ baidu    ┆ 8.6556e7     │
    // └──────────┴──────────────┴──────────┴──────────────┘

}

fn as_struct_in_df() {
    // features => lazy
    let df: DataFrame = df!(
        "company" => &["ailibaba", "baidu"],
        "profit" => &[777277778.0, 86555555.9]
    )
    .unwrap();
    // as_struct: build a struct column from the given columns
    let _df = df
        .clone()
        .lazy()
        .with_columns([as_struct(vec![col("company"), col("profit")]).alias("info")])
        .collect()
        .unwrap();
    let df_ = df
        .clone()
        .lazy()
        .with_columns([as_struct(vec![col("*")]).alias("info")])
        .collect()
        .unwrap();
    assert_eq!(_df, df_);
    println!("df :{}", _df);
    // df :shape: (2, 3)
    // ┌──────────┬──────────────┬───────────────────────────┐
    // │ company  ┆ profit       ┆ info                      │
    // │ ---      ┆ ---          ┆ ---                       │
    // │ str      ┆ f64          ┆ struct[2]                 │
    // ╞══════════╪══════════════╪═══════════════════════════╡
    // │ ailibaba ┆ 7.77277778e8 ┆ {"ailibaba",7.77277778e8} │
    // │ baidu    ┆ 8.6556e7     ┆ {"baidu",8.6556e7}        │
    // └──────────┴──────────────┴───────────────────────────┘
}

fn struct_apply_in_df() {
    let df = df!(
        "lang" => &["julia", "go", "rust", "c", "c++"],
        "ratings" => &["AAAA", "AAA", "AAAAA", "AAAA", "AAA"],
        "users" => &[201, 303, 278, 99, 87],
        "references" => &[5, 6, 9, 4, 1]
    )
    .unwrap();
    // Goal: build a struct column {lang, ratings, users} and run apply on it;
    // the result is shown in the table at the end
    let out = df
        .lazy()
        .with_columns([
            // build the struct column
            as_struct(vec![col("lang"), col("ratings"), col("users")])
                // run apply on it
                .apply(
                    |s| {
                        // get the struct from the series
                        let ss = s.struct_().unwrap();
                        // pull out the field Series
                        let s_lang = ss.field_by_name("lang").unwrap();
                        let s_ratings = ss.field_by_name("ratings").unwrap();
                        let s_users = ss.field_by_name("users").unwrap();
                        // downcast the `Series` to their known type
                        let _s_lang = s_lang.str().unwrap();
                        let _s_ratings = s_ratings.str().unwrap();
                        let _s_users = s_users.i32().unwrap();
                        // zip the series
                        let out: StringChunked = _s_lang
                            .into_iter()
                            .zip(_s_ratings)
                            .zip(_s_users)
                            .map(|((opt_lang, opt_rating), opt_user)| match (opt_lang, opt_rating, opt_user) {
                                (Some(la), Some(ra), Some(us)) => Some(format!("{}-{}-{}", la, ra, us)),
                                _ => None,
                            })
                            .collect();
                        Ok(Some(out.into_series()))
                    },
                    GetOutput::from_type(DataType::String),
                )
                .alias("links-three"),
        ])
        .collect()
        .unwrap();
    println!("{}", out);
    // shape: (5, 5)

    // ┌───────┬─────────┬───────┬────────────┬────────────────┐
    // │ lang  ┆ ratings ┆ users ┆ references ┆ links-three    │
    // │ ---   ┆ ---     ┆ ---   ┆ ---        ┆ ---            │
    // │ str   ┆ str     ┆ i32   ┆ i32        ┆ str            │
    // ╞═══════╪═════════╪═══════╪════════════╪════════════════╡
    // │ julia ┆ AAAA    ┆ 201   ┆ 5          ┆ julia-AAAA-201 │
    // │ go    ┆ AAA     ┆ 303   ┆ 6          ┆ go-AAA-303     │
    // │ rust  ┆ AAAAA   ┆ 278   ┆ 9          ┆ rust-AAAAA-278 │
    // │ c     ┆ AAAA    ┆ 99    ┆ 4          ┆ c-AAAA-99      │
    // │ c++   ┆ AAA     ┆ 87    ┆ 1          ┆ c++-AAA-87     │

    // └───────┴─────────┴───────┴────────────┴────────────────┘
}
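
The closure in `struct_apply_in_df` follows a simple row-wise rule: a row yields a string only when all three fields are present, and a null otherwise. The sketch below reproduces that rule in plain Rust without polars, so it can be run on its own. `combine_row` and `combine_columns` are hypothetical helper names introduced here for illustration; they are not part of the polars API.

```rust
/// Hypothetical helper: joins one row's fields with '-',
/// or returns None if any field is missing (null).
fn combine_row(lang: Option<&str>, rating: Option<&str>, users: Option<i32>) -> Option<String> {
    match (lang, rating, users) {
        (Some(la), Some(ra), Some(us)) => Some(format!("{}-{}-{}", la, ra, us)),
        _ => None,
    }
}

/// Hypothetical helper: zips three columns row by row,
/// mirroring the zip/map/collect chain used inside the apply closure.
fn combine_columns(
    langs: &[Option<&str>],
    ratings: &[Option<&str>],
    users: &[Option<i32>],
) -> Vec<Option<String>> {
    langs
        .iter()
        .zip(ratings)
        .zip(users)
        .map(|((la, ra), us)| combine_row(*la, *ra, *us))
        .collect()
}

fn main() {
    // The third row has a missing lang, so its result is None.
    let langs = [Some("julia"), Some("go"), None];
    let ratings = [Some("AAAA"), Some("AAA"), Some("AAAAA")];
    let users = [Some(201), Some(303), Some(278)];
    let out = combine_columns(&langs, &ratings, &users);
    println!("{:?}", out);
    // prints [Some("julia-AAAA-201"), Some("go-AAA-303"), None]
}
```

In the polars version the same logic runs over `StringChunked` and `Int32Chunked` iterators, which also yield `Option` values per row, so nulls propagate in the same way.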

Rust : 数据分析利器polars用法

Polars虽牛刀小试,就显博大精深,在数据分析上,未来有重要一席。 下面主要列举一些常见用法。 一、toml 需要说明的是,在Rust中,不少的功能都需要对应features引入设置,这些需要特别注意,否则编译…...

Qt第一课

作者前言 🎂 ✨✨✨✨✨✨🍧🍧🍧🍧🍧🍧🍧🎂 🎂 作者介绍: 🎂🎂 🎂 🎉🎉🎉…...

论“graphics.h”库,easyx

前言 别人十步我则百,别人百步我则千 你是否有这样的想法,把图片到入进c里,亦或者能实时根据你发出的信息而做出回应的程序,graphics.h这个库完美满足了你的需求,那今天作者就给大家介绍一下这个库,并做一些…...

如何在寂静中用电脑找回失踪的手机?远程控制了解一下

经过一番努力,我终于成功地将孩子哄睡了。夜深人静,好不容易有了一点自己的时间,就想刷手机放松放松,顺便看看有没有重要信息。但刚才专心哄孩子去了,一时就忘记哄孩子之前,顺手把手机放哪里去了。 但找过手…...

Android 实现动态换行显示的 TextView 列表

在开发 Android 应用程序时,我们经常需要在标题栏中显示多个 TextView,而这些 TextView 的内容长度可能不一致。如果一行内容过长,我们希望它们能自动换行;如果一行占不满屏幕宽度,则保持在一行内。本文将带我们一步步…...

Golang | Leetcode Golang题解之第352题将数据流变为多个不相交区间

题目: 题解: type SummaryRanges struct {*redblacktree.Tree }func Constructor() SummaryRanges {return SummaryRanges{redblacktree.NewWithIntComparator()} }func (ranges *SummaryRanges) AddNum(val int) {// 找到 l0 最大的且满足 l0 < val…...

Ubuntu安装mysql 以及远程连接mysql Windows—适合初学者的讲解(详细)

目录 准备工作 一.Xshell中操作 (1)在虚拟机中安装mysql (2)连接Windows数据库 (3)进入linux数据库。 (4)修改mysql配置文件 二.Windows命令窗口操作 需要软件虚拟机,Xsh…...

【数学建模】MATLAB快速入门

文章目录 1. MATLAB界面与基本操作1.1 MATLAB的基本操作 2. MATLAB字符串和文本2.1 string变量2.2 char变量 3. MATLAB的矩阵运算 1. MATLAB界面与基本操作 初始界面: 刚开始的界面只要一个命令行窗口,为了使编辑界面出现我们需要新建一个文件ÿ…...

【ubuntu24.04】k8s 部署5:配置calico 镜像拉取

kubeadm - 中国大陆版建议:初始化Kubeadm –apiserver-advertise-address 这个地址是本地用于和其他节点通信的IP地址 –pod-network-cidr pod network 地址空间 sudo kubeadm init --image-repository registry.aliyuncs.com/google_containers --apiserver-advertise-add…...

Elasticsearch 的数据备份与恢复

在生产环境中,数据的安全性和可靠性至关重要。对于基于 Elasticsearch 的系统而言,数据备份与恢复是确保数据完整性、应对灾难恢复的关键操作。本文将详细介绍 Elasticsearch 中如何进行数据备份与恢复,帮助管理员构建一个可靠的数据保护策略…...

Ps:首选项 - 暂存盘

Ps菜单:编辑/首选项 Edit/Preferences 快捷键:Ctrl K Photoshop 首选项中的“暂存盘” Scratch Disks选项卡通过合理配置和管理暂存盘,可以显著提高 Photoshop 的运行性能,特别是在处理复杂的设计项目或大型图像文件时。选择合适…...

力扣217题详解:存在重复元素的多种解法与复杂度分析

在本篇文章中,我们将详细解读力扣第217题“存在重复元素”。通过学习本篇文章,读者将掌握如何使用多种方法来解决这一问题,并了解相关的复杂度分析和模拟面试问答。每种方法都将配以详细的解释,以便于理解。 问题描述 力扣第217…...

享元模式:轻量级对象共享,高效利用内存

享元模式(Flyweight Pattern)是一种结构型设计模式,用于减少对象数量、降低内存消耗和提高系统性能。它通过共享相似对象的内部状态,减少重复创建的对象。下面将具体介绍享元模式的各个方面: 组成 抽象享元࿰…...

人工智能-自然语言处理(NLP)

人工智能-自然语言处理(NLP) 1. NLP的基础理论1.1 语言模型(Language Models)1.1.1 N-gram模型1.1.2 词嵌入(Word Embeddings)1.1.2.1 词袋模型(Bag of Words, BoW)1.1.2.2 TF-IDF&a…...

基于UE5和ROS2的激光雷达+深度RGBD相机小车的仿真指南(三)---创建自定义激光雷达Componet组件

前言 本系列教程旨在使用UE5配置一个具备激光雷达深度摄像机的仿真小车,并使用通过跨平台的方式进行ROS2和UE5仿真的通讯,达到小车自主导航的目的。本教程默认有ROS2导航及其gazebo仿真相关方面基础,Nav2相关的学习教程可以参考本人的其他博…...

C++ 设计模式——策略模式

策略模式 策略模式主要组成部分例一:逐步重构并引入策略模式第一步:初始实现第二步:提取共性并实现策略接口第三步:实现具体策略类第四步:实现上下文类策略模式 UML 图策略模式的 UML 图解析 例二:逐步重构…...

【书生大模型实战营(暑假场)闯关材料】基础岛:第3关 浦语提示词工程实践

1.配置环境时遇到的问题 注意要使用terminal,而不是jupyter。 否则退出TMUX会话时,会出问题。 退出TMUX会话命令如下: ctrlB D # 先按CTRLB 随后按D另外一个是,端口转发命令 ssh -p XXXX rootssh.intern-ai.org.cn -CNg -L …...

C++ | Leetcode C++题解之第350题两个数组的交集II

题目: 题解: class Solution { public:vector<int> intersect(vector<int>& nums1, vector<int>& nums2) {sort(nums1.begin(), nums1.end());sort(nums2.begin(), nums2.end());int length1 nums1.size(), length2 nums2…...

遗传算法原理与实战(python、matlab)

遗传算法 1.什么是遗传算法 遗传算法(Genetic Algorithm,简称GA)是一种基于生物进化论和遗传学原理的全局优化搜索算法。它通过模拟自然界中生物种群的遗传机制和进化过程来解决复杂问题,如函数优化、组合优化、机器学习等。遗传…...

《黑神话:悟空》媒体评分解禁 M站均分82

《黑神话:悟空》媒体评分现已解禁,截止发稿时,M站共有43家媒体评测,均分为82分。 部分媒体评测: God is a Geek 100: 毫无疑问,《黑神话:悟空》是今年最好的动作游戏之一ÿ…...

2024年赣州旅游投资集团社会招聘笔试真

2024年赣州旅游投资集团社会招聘笔试真 题 ( 满 分 1 0 0 分 时 间 1 2 0 分 钟 ) 一、单选题(每题只有一个正确答案,答错、不答或多答均不得分) 1.纪要的特点不包括()。 A.概括重点 B.指导传达 C. 客观纪实 D.有言必录 【答案】: D 2.1864年,()预言了电磁波的存在,并指出…...

深入理解JavaScript设计模式之单例模式

目录 什么是单例模式为什么需要单例模式常见应用场景包括 单例模式实现透明单例模式实现不透明单例模式用代理实现单例模式javaScript中的单例模式使用命名空间使用闭包封装私有变量 惰性单例通用的惰性单例 结语 什么是单例模式 单例模式(Singleton Pattern&#…...

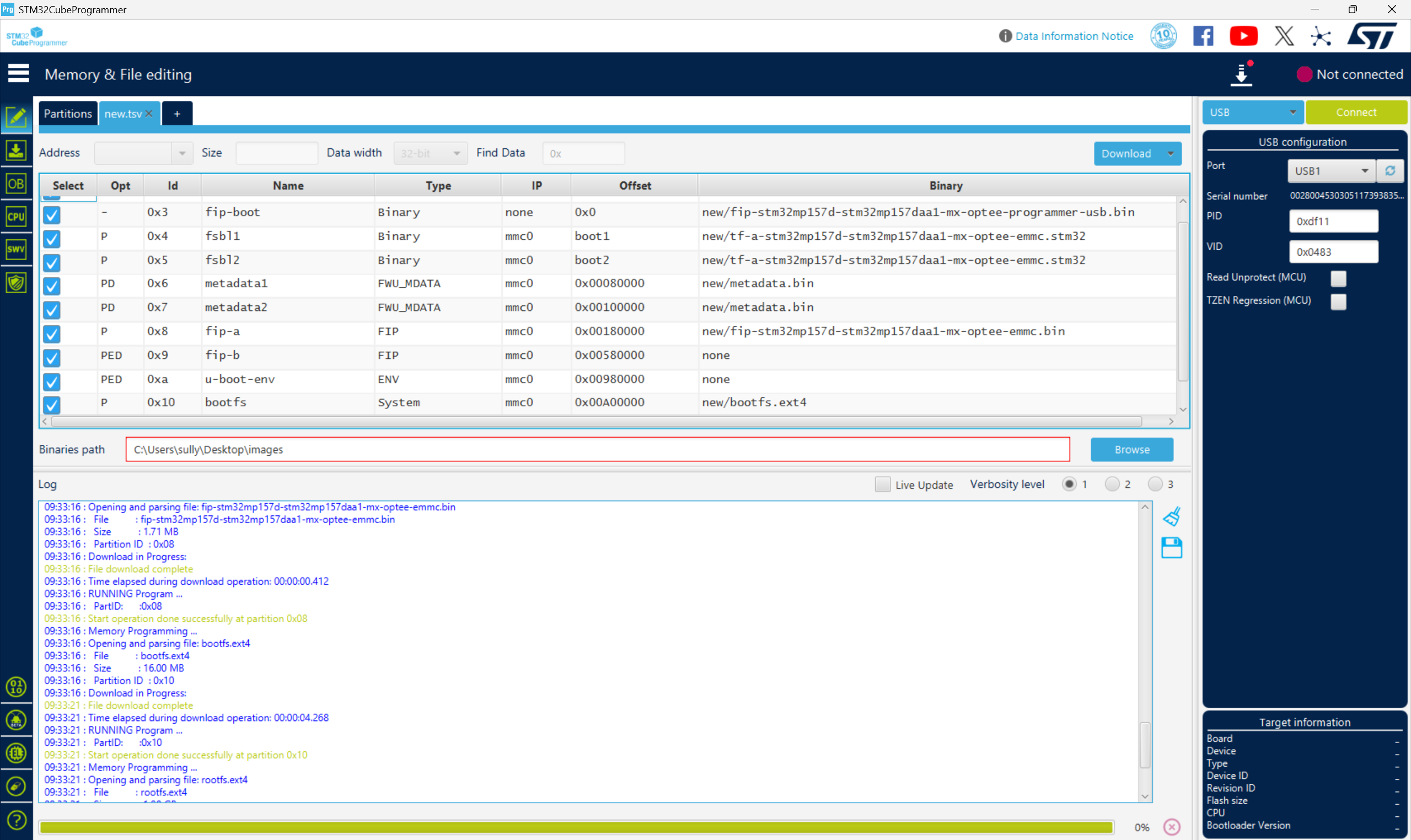

从零开始打造 OpenSTLinux 6.6 Yocto 系统(基于STM32CubeMX)(九)

设备树移植 和uboot设备树修改的内容同步到kernel将设备树stm32mp157d-stm32mp157daa1-mx.dts复制到内核源码目录下 源码修改及编译 修改arch/arm/boot/dts/st/Makefile,新增设备树编译 stm32mp157f-ev1-m4-examples.dtb \stm32mp157d-stm32mp157daa1-mx.dtb修改…...

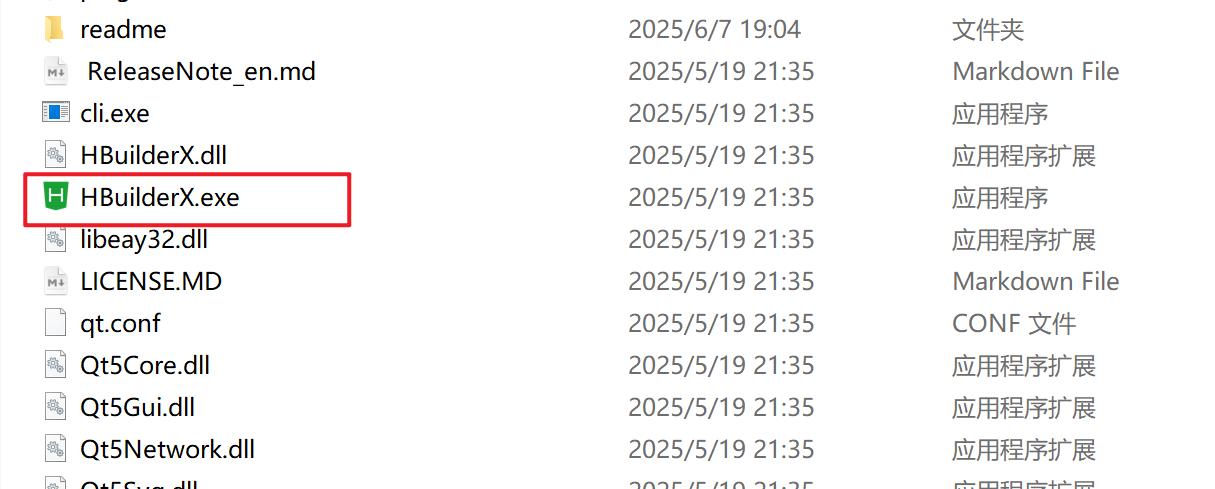

HBuilderX安装(uni-app和小程序开发)

下载HBuilderX 访问官方网站:https://www.dcloud.io/hbuilderx.html 根据您的操作系统选择合适版本: Windows版(推荐下载标准版) Windows系统安装步骤 运行安装程序: 双击下载的.exe安装文件 如果出现安全提示&…...

Map相关知识

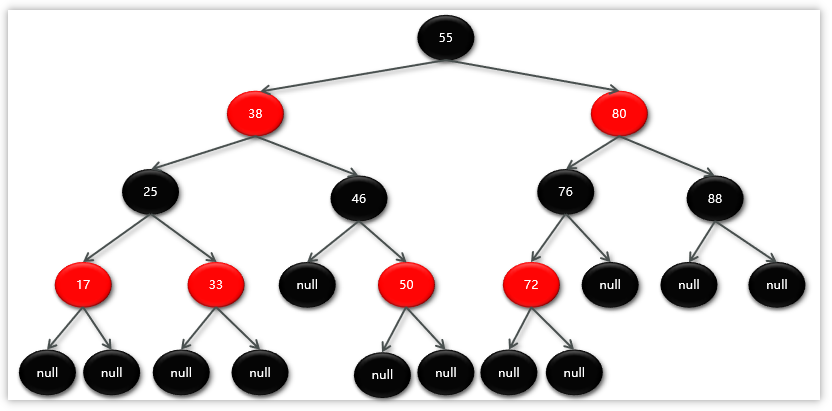

数据结构 二叉树 二叉树,顾名思义,每个节点最多有两个“叉”,也就是两个子节点,分别是左子 节点和右子节点。不过,二叉树并不要求每个节点都有两个子节点,有的节点只 有左子节点,有的节点只有…...

)

Typeerror: cannot read properties of undefined (reading ‘XXX‘)

最近需要在离线机器上运行软件,所以得把软件用docker打包起来,大部分功能都没问题,出了一个奇怪的事情。同样的代码,在本机上用vscode可以运行起来,但是打包之后在docker里出现了问题。使用的是dialog组件,…...

Python 包管理器 uv 介绍

Python 包管理器 uv 全面介绍 uv 是由 Astral(热门工具 Ruff 的开发者)推出的下一代高性能 Python 包管理器和构建工具,用 Rust 编写。它旨在解决传统工具(如 pip、virtualenv、pip-tools)的性能瓶颈,同时…...

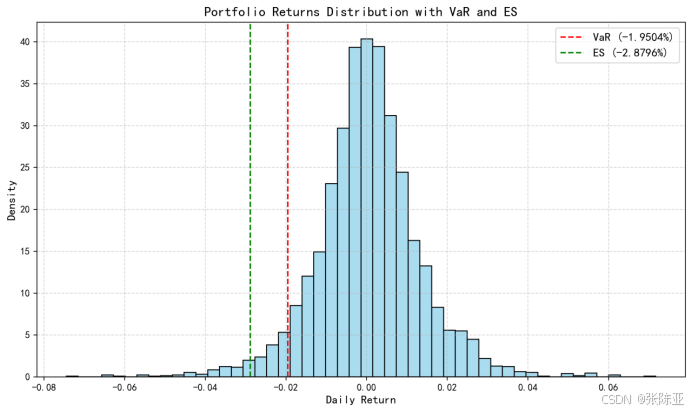

Python基于历史模拟方法实现投资组合风险管理的VaR与ES模型项目实战

说明:这是一个机器学习实战项目(附带数据代码文档),如需数据代码文档可以直接到文章最后关注获取。 1.项目背景 在金融市场日益复杂和波动加剧的背景下,风险管理成为金融机构和个人投资者关注的核心议题之一。VaR&…...

十九、【用户管理与权限 - 篇一】后端基础:用户列表与角色模型的初步构建

【用户管理与权限 - 篇一】后端基础:用户列表与角色模型的初步构建 前言准备工作第一部分:回顾 Django 内置的 `User` 模型第二部分:设计并创建 `Role` 和 `UserProfile` 模型第三部分:创建 Serializers第四部分:创建 ViewSets第五部分:注册 API 路由第六部分:后端初步测…...

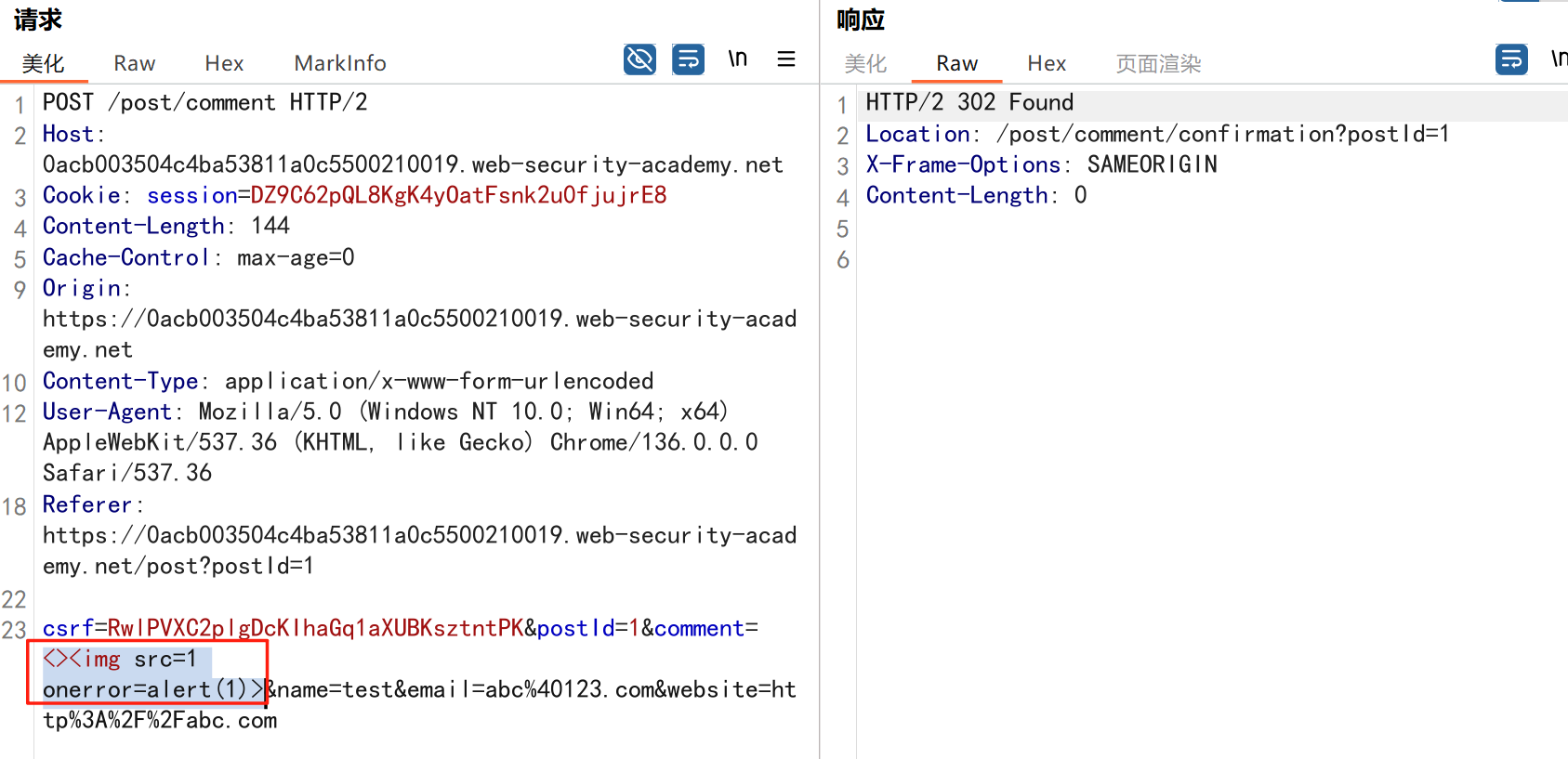

渗透实战PortSwigger靶场:lab13存储型DOM XSS详解

进来是需要留言的,先用做简单的 html 标签测试 发现面的</h1>不见了 数据包中找到了一个loadCommentsWithVulnerableEscapeHtml.js 他是把用户输入的<>进行 html 编码,输入的<>当成字符串处理回显到页面中,看来只是把用户输…...