OpenGL Texture C++ Camera Filters

This article uses OpenGL textures and GLSL code in the fragment shader to transform YUV camera data. The result is the kind of camera filter you find in video-editing apps.

This post follows the previous article, OpenGL Texture C++ Camera Video Preview. It implements camera filter effects and shows how to switch between filters.

Project on GitHub: GitHub - wangyongyao1989/WyFFmpeg: basic audio/video implementations

Demo:

(Animated demo: filter_switch_show1)

I. Creating and Switching Shader Programs:

Shader compilation, linking and use build on the `OpenglesTexureVideoRender` class from the previous article. This post extends its createProgram() -> createTextures() -> draw() -> render() flow. The code is as follows:

```cpp
//  Author : wangyongyao https://github.com/wangyongyao1989
// Created by MMM on 2024/9/5.
//

#include "OpenglesTexureVideoRender.h"
#include "OpenGLShader.h"

void OpenglesTexureVideoRender::init(ANativeWindow *window, AAssetManager *assetManager,
                                     size_t width, size_t height) {
    LOGI("OpenglesTexureVideoRender init==%zu, %zu", width, height);
    m_backingWidth = width;
    m_backingHeight = height;
}

void OpenglesTexureVideoRender::render() {
    // LOGI("OpenglesTexureVideoRender render");
    // Set the clear color before clearing so it applies to this frame.
    glClearColor(0.0f, 0.0f, 0.0f, 1.0f);
    glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);
    // Upload new YUV planes and bind the program; skip the draw if either fails.
    if (!updateTextures() || !useProgram()) return;
    // Full-screen quad: 4 vertices as a triangle strip.
    glDrawArrays(GL_TRIANGLE_STRIP, 0, 4);
}

void OpenglesTexureVideoRender::updateFrame(const video_frame &frame) {
    // I420: full-size Y plane, then quarter-size U and V planes.
    m_sizeY = frame.width * frame.height;
    m_sizeU = frame.width * frame.height / 4;
    m_sizeV = frame.width * frame.height / 4;
    // (Re)allocate one contiguous buffer when the frame size changes.
    if (m_pDataY == nullptr || m_width != frame.width || m_height != frame.height) {
        m_pDataY = std::make_unique<uint8_t[]>(m_sizeY + m_sizeU + m_sizeV);
        m_pDataU = m_pDataY.get() + m_sizeY;
        m_pDataV = m_pDataU + m_sizeU;
        isProgramChanged = true;
    }
    m_width = frame.width;
    m_height = frame.height;
    // Y: one copy if rows are tightly packed, otherwise row by row, dropping stride padding.
    if (m_width == frame.stride_y) {
        memcpy(m_pDataY.get(), frame.y, m_sizeY);
    } else {
        uint8_t *pSrcY = frame.y;
        uint8_t *pDstY = m_pDataY.get();
        for (int h = 0; h < m_height; h++) {
            memcpy(pDstY, pSrcY, m_width);
            pSrcY += frame.stride_y;
            pDstY += m_width;
        }
    }
    // U and V: same idea at half width and half height.
    if (m_width / 2 == frame.stride_uv) {
        memcpy(m_pDataU, frame.u, m_sizeU);
        memcpy(m_pDataV, frame.v, m_sizeV);
    } else {
        uint8_t *pSrcU = frame.u;
        uint8_t *pSrcV = frame.v;
        uint8_t *pDstU = m_pDataU;
        uint8_t *pDstV = m_pDataV;
        for (int h = 0; h < m_height / 2; h++) {
            memcpy(pDstU, pSrcU, m_width / 2);
            memcpy(pDstV, pSrcV, m_width / 2);
            pDstU += m_width / 2;
            pDstV += m_width / 2;
            pSrcU += frame.stride_uv;
            pSrcV += frame.stride_uv;
        }
    }
    isDirty = true;  // CPU copy is newer than the textures
}

void OpenglesTexureVideoRender::draw(uint8_t *buffer, size_t length, size_t width, size_t height,
                                     float rotation) {
    m_length = length;
    m_rotation = rotation;
    // Describe the packed I420 buffer: Y at 0, U at w*h, V at w*h*5/4.
    video_frame frame{};
    frame.width = width;
    frame.height = height;
    frame.stride_y = width;
    frame.stride_uv = width / 2;
    frame.y = buffer;
    frame.u = buffer + width * height;
    frame.v = buffer + width * height * 5 / 4;
    updateFrame(frame);
}

void OpenglesTexureVideoRender::setParameters(uint32_t params) {
    m_params = params;
}

uint32_t OpenglesTexureVideoRender::getParameters() {
    return m_params;
}

bool OpenglesTexureVideoRender::createTextures() {
    // Y plane: full resolution on texture unit 0.
    auto widthY = (GLsizei) m_width;
    auto heightY = (GLsizei) m_height;
    glActiveTexture(GL_TEXTURE0);
    glGenTextures(1, &m_textureIdY);
    glBindTexture(GL_TEXTURE_2D, m_textureIdY);
    glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);
    glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);
    glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);
    glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);
    glTexImage2D(GL_TEXTURE_2D, 0, GL_LUMINANCE, widthY, heightY, 0, GL_LUMINANCE,
                 GL_UNSIGNED_BYTE, nullptr);
    if (!m_textureIdY) {
        LOGE("OpenGL Error Create Y texture");
        return false;
    }
    // U plane: half width and height on texture unit 1.
    GLsizei widthU = (GLsizei) m_width / 2;
    GLsizei heightU = (GLsizei) m_height / 2;
    glActiveTexture(GL_TEXTURE1);
    glGenTextures(1, &m_textureIdU);
    glBindTexture(GL_TEXTURE_2D, m_textureIdU);
    glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);
    glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);
    glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);
    glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);
    glTexImage2D(GL_TEXTURE_2D, 0, GL_LUMINANCE, widthU, heightU, 0, GL_LUMINANCE,
                 GL_UNSIGNED_BYTE, nullptr);
    if (!m_textureIdU) {
        LOGE("OpenGL Error Create U texture");
        return false;
    }
    // V plane: half width and height on texture unit 2.
    GLsizei widthV = (GLsizei) m_width / 2;
    GLsizei heightV = (GLsizei) m_height / 2;
    glActiveTexture(GL_TEXTURE2);
    glGenTextures(1, &m_textureIdV);
    glBindTexture(GL_TEXTURE_2D, m_textureIdV);
    glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);
    glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);
    glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);
    glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);
    glTexImage2D(GL_TEXTURE_2D, 0, GL_LUMINANCE, widthV, heightV, 0, GL_LUMINANCE,
                 GL_UNSIGNED_BYTE, nullptr);
    if (!m_textureIdV) {
        LOGE("OpenGL Error Create V texture");
        return false;
    }
    return true;
}

bool OpenglesTexureVideoRender::updateTextures() {
    // Create the textures lazily on first use.
    if (!m_textureIdY && !m_textureIdU && !m_textureIdV && !createTextures()) return false;
    LOGI("OpenglesTexureVideoRender updateTextures");
    // Re-upload only when updateFrame() delivered a new frame.
    if (isDirty) {
        glActiveTexture(GL_TEXTURE0);
        glBindTexture(GL_TEXTURE_2D, m_textureIdY);
        glTexImage2D(GL_TEXTURE_2D, 0, GL_LUMINANCE, (GLsizei) m_width, (GLsizei) m_height, 0,
                     GL_LUMINANCE, GL_UNSIGNED_BYTE, m_pDataY.get());
        glActiveTexture(GL_TEXTURE1);
        glBindTexture(GL_TEXTURE_2D, m_textureIdU);
        glTexImage2D(GL_TEXTURE_2D, 0, GL_LUMINANCE, (GLsizei) m_width / 2, (GLsizei) m_height / 2,
                     0, GL_LUMINANCE, GL_UNSIGNED_BYTE, m_pDataU);
        glActiveTexture(GL_TEXTURE2);
        glBindTexture(GL_TEXTURE_2D, m_textureIdV);
        glTexImage2D(GL_TEXTURE_2D, 0, GL_LUMINANCE, (GLsizei) m_width / 2, (GLsizei) m_height / 2,
                     0, GL_LUMINANCE, GL_UNSIGNED_BYTE, m_pDataV);
        isDirty = false;
        return true;
    }
    return false;
}

int OpenglesTexureVideoRender::createProgram() {
    m_program = openGlShader->createProgram();
    m_vertexShader = openGlShader->vertexShader;
    m_pixelShader = openGlShader->fraShader;
    LOGI("OpenglesTexureVideoRender createProgram m_program:%d", m_program);
    if (!m_program) {
        LOGE("Could not create program.");
        return 0;
    }
    // Get uniform and attribute locations
    m_vertexPos = (GLuint) glGetAttribLocation(m_program, "position");
    m_textureYLoc = glGetUniformLocation(m_program, "s_textureY");
    m_textureULoc = glGetUniformLocation(m_program, "s_textureU");
    m_textureVLoc = glGetUniformLocation(m_program, "s_textureV");
    m_textureLoc = (GLuint) glGetAttribLocation(m_program, "texcoord");
    return m_program;
}

GLuint OpenglesTexureVideoRender::useProgram() {
    if (!m_program && !createProgram()) {
        LOGE("Could not use program.");
        return 0;
    }
    // Re-bind the program and its inputs only after the program or frame size changed.
    if (isProgramChanged) {
        glUseProgram(m_program);
        glVertexAttribPointer(m_vertexPos, 2, GL_FLOAT, GL_FALSE, 0, kVerticek);
        glEnableVertexAttribArray(m_vertexPos);
        // The Y/U/V samplers read from texture units 0/1/2.
        glUniform1i(m_textureYLoc, 0);
        glUniform1i(m_textureULoc, 1);
        glUniform1i(m_textureVLoc, 2);
        glVertexAttribPointer(m_textureLoc, 2, GL_FLOAT, GL_FALSE, 0, kTextureCoordk);
        glEnableVertexAttribArray(m_textureLoc);
        isProgramChanged = false;
    }
    return m_program;
}

bool OpenglesTexureVideoRender::setSharderPath(const char *vertexPath, const char *fragmentPath) {
    openGlShader->getSharderPath(vertexPath, fragmentPath);
    return 0;
}

bool OpenglesTexureVideoRender::setSharderStringPath(string vertexPath, string fragmentPath) {
    openGlShader->getSharderStringPath(vertexPath, fragmentPath);
    return 0;
}

OpenglesTexureVideoRender::OpenglesTexureVideoRender() {
    openGlShader = new OpenGLShader();
}

OpenglesTexureVideoRender::~OpenglesTexureVideoRender() {
    deleteTextures();
    delete_program(m_program);
}

void OpenglesTexureVideoRender::delete_program(GLuint &program) {
    if (program) {
        glUseProgram(0);
        glDeleteProgram(program);
        program = 0;
    }
}

void OpenglesTexureVideoRender::deleteTextures() {
    if (m_textureIdY) {
        glActiveTexture(GL_TEXTURE0);
        glBindTexture(GL_TEXTURE_2D, 0);
        glDeleteTextures(1, &m_textureIdY);
        m_textureIdY = 0;
    }
    if (m_textureIdU) {
        glActiveTexture(GL_TEXTURE1);
        glBindTexture(GL_TEXTURE_2D, 0);
        glDeleteTextures(1, &m_textureIdU);
        m_textureIdU = 0;
    }
    if (m_textureIdV) {
        glActiveTexture(GL_TEXTURE2);
        glBindTexture(GL_TEXTURE_2D, 0);
        glDeleteTextures(1, &m_textureIdV);
        m_textureIdV = 0;
    }
}

void OpenglesTexureVideoRender::printGLString(const char *name, GLenum s) {
    const char *v = (const char *) glGetString(s);
    LOGI("OpenGL %s = %s\n", name, v);
}

void OpenglesTexureVideoRender::checkGlError(const char *op) {
    for (GLint error = glGetError(); error; error = glGetError()) {
        LOGI("after %s() glError (0x%x)\n", op, error);
    }
}
```

Using the textures:

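The filter fragment shaders keep the same three YUV sampler uniforms: s_textureY, s_textureU and s_textureV. useProgram() binds them to texture units 0, 1 and 2, which hold the Y, U and V planes uploaded into the textures.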
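The texture sizes above follow from the I420 layout that `draw()` assumes. The Y plane is width × height, and U and V are each (width/2) × (height/2), stored back to back. The sketch below reproduces on the CPU the plane offsets used in `draw()` and the stride-skipping copy in `updateFrame()`, so you can check the layout without a GL context. It assumes even width and height. `PlaneLayout`, `i420Layout` and `packPlane` are hypothetical names and do not come from the project:

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>

// Hypothetical helper: sizes and offsets of the three planes in a packed I420 buffer.
struct PlaneLayout {
    std::size_t ySize, uvSize;     // bytes in the Y plane and in each chroma plane
    std::size_t uOffset, vOffset;  // where U and V start inside the buffer
    std::size_t total;             // whole frame: width * height * 3 / 2
};

PlaneLayout i420Layout(std::size_t width, std::size_t height) {
    assert(width % 2 == 0 && height % 2 == 0);  // chroma is subsampled 2x2
    PlaneLayout l{};
    l.ySize = width * height;
    l.uvSize = width * height / 4;
    l.uOffset = l.ySize;             // matches buffer + width * height in draw()
    l.vOffset = l.ySize + l.uvSize;  // matches buffer + width * height * 5 / 4
    l.total = l.ySize + 2 * l.uvSize;
    return l;
}

// Copy `rows` rows of `rowBytes` bytes from a source whose rows are `stride` bytes apart,
// dropping the padding, like the row-by-row branch of updateFrame().
void packPlane(std::uint8_t *dst, const std::uint8_t *src, std::size_t rowBytes,
               std::size_t rows, std::size_t stride) {
    assert(stride >= rowBytes);
    if (stride == rowBytes) {  // tightly packed: one copy is enough
        std::memcpy(dst, src, rowBytes * rows);
        return;
    }
    for (std::size_t r = 0; r < rows; ++r) {
        std::memcpy(dst + r * rowBytes, src + r * stride, rowBytes);
    }
}
```

For example, a 4 × 2 frame has U at byte 8 and V at byte 10, for 12 bytes in total. Two 3-byte rows with a stride of 4 pack down to 6 contiguous bytes.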

```cpp
// Get uniform and attribute locations
m_vertexPos = (GLuint) glGetAttribLocation(m_program, "position");
m_textureYLoc = glGetUniformLocation(m_program, "s_textureY");
m_textureULoc = glGetUniformLocation(m_program, "s_textureU");
m_textureVLoc = glGetUniformLocation(m_program, "s_textureV");
m_textureLoc = (GLuint) glGetAttribLocation(m_program, "texcoord");
```

Passing in multiple fragment shaders:

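Each filter is a fragment shader that transforms the YUV data in its own way. This means several fragment shader sources have to be passed in up front, so they are available when the user switches filters.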

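```cpp
bool OpenglesTextureFilterRender::setSharderStringPathes(string vertexPath,
                                                         vector<string> fragmentPathes) {
    if (fragmentPathes.empty()) return false;  // front() below needs at least one shader
    // Keep every fragment shader so render() can switch between them later.
    m_fragmentStringPathes = fragmentPathes;
    m_vertexStringPath = vertexPath;
    // Start with the first fragment shader (filter 0).
    return OpenglesTexureVideoRender::setSharderStringPath(vertexPath,
                                                           m_fragmentStringPathes.front());
}
```

Switching fragment shaders: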

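For a filter, the vertex positions and texture coordinates stay the same. This article therefore only covers the case where the vertex shader stays fixed. The vertex shader could also change to create dynamic effects. Once I have more ideas for dynamic filters, I will share them in later posts.

The fragment shader is switched in render(). To switch, delete the previous shader program, pass in the new fragment shader, and then run the whole compile/link/use process again.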

```cpp
void OpenglesTextureFilterRender::render() {
    if (m_filter != m_prevFilter) {
        m_prevFilter = m_filter;
        if (m_filter >= 0 && m_filter < m_fragmentStringPathes.size()) {
            isProgramChanged = true;    // makes useProgram() re-bind attributes and uniforms
            delete_program(m_program);  // drop the old program
            setSharderStringPath(m_vertexStringPath, m_fragmentStringPathes.at(m_filter));
            createProgram();            // compile and link with the new fragment shader
        }
    }
    OpenglesTexureVideoRender::render();
}
```
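The switching logic in render() rebuilds the program only when the selected index changes and is in range. The GL-free sketch below models that bookkeeping so it can be unit-tested. `FilterSwitcher` and its `rebuild` callback are hypothetical stand-ins for delete_program() + setSharderStringPath() + createProgram(). It assumes both indices start at 0, because filter 0 is loaded up front by setSharderStringPathes(). One more caveat, and it is an assumption: the shaders from the swirl filter onward declare `uniform vec2 texSize`, and the code shown here does not set it. In OpenGL ES this is normally done after `glUseProgram` with `glGetUniformLocation(program, "texSize")` and `glUniform2f(location, width, height)`.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <string>
#include <utility>
#include <vector>

// Hypothetical GL-free model of the filter switch in OpenglesTextureFilterRender::render().
class FilterSwitcher {
public:
    using Rebuild = std::function<void(const std::string &vertex, const std::string &fragment)>;

    FilterSwitcher(std::string vertex, std::vector<std::string> fragments, Rebuild rebuild)
        : m_vertex(std::move(vertex)), m_fragments(std::move(fragments)),
          m_rebuild(std::move(rebuild)) {
        assert(!m_fragments.empty());  // the renderer starts from fragments.front()
    }

    void setFilter(int filter) { m_filter = filter; }

    // Called once per frame before drawing; returns true if the program was rebuilt.
    bool onRender() {
        if (m_filter == m_prevFilter) return false;  // nothing changed since the last frame
        m_prevFilter = m_filter;                     // remembered even if out of range
        if (m_filter < 0 || static_cast<std::size_t>(m_filter) >= m_fragments.size()) return false;
        // Real renderer: delete_program(), setSharderStringPath(), createProgram().
        m_rebuild(m_vertex, m_fragments[static_cast<std::size_t>(m_filter)]);
        return true;
    }

private:
    std::string m_vertex;
    std::vector<std::string> m_fragments;
    Rebuild m_rebuild;
    int m_filter = 0;      // assumption: filter 0 is active initially
    int m_prevFilter = 0;
};
```

With this design, an out-of-range index is recorded but ignored, so the previous filter stays on screen. That matches the guard in the original render().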

II. GLSL Fragment Shader Programs:
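Every shader below repeats the same YUV-to-RGB step. It re-centers U and V by subtracting 0.5, then mixes them with fixed coefficients close to the full-range BT.601 matrix (1.402, 0.344, 0.714, 1.772). A CPU copy of the conversion, such as the hypothetical `yuvToRgb` sketched here, is handy for checking expected colors in tests. It takes normalized [0, 1] samples, as `texture(...).r` returns them, and like the shaders it does not clamp the result:

```cpp
#include <cassert>
#include <cmath>

struct Rgb { float r, g, b; };

// Same coefficients as the GLSL; inputs are normalized samples in [0, 1].
Rgb yuvToRgb(float y, float u, float v) {
    assert(y >= 0.0f && y <= 1.0f && u >= 0.0f && u <= 1.0f && v >= 0.0f && v <= 1.0f);
    u -= 0.5f;  // chroma is stored with a +0.5 offset
    v -= 0.5f;
    return Rgb{
        y + 1.403f * v,
        y - 0.344f * u - 0.714f * v,
        y + 1.770f * u,
    };
}

// Tolerance comparison for float results.
bool approxEqual(float a, float b, float eps = 1e-4f) { return std::fabs(a - b) <= eps; }
```

Neutral chroma (U = V = 0.5) gives a gray with R = G = B = Y. A high V pushes the color toward red.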

1. Displaying the raw YUV data:

With no filter applied, the shader shows the camera image as captured:

```glsl
#version 320 es
precision mediump float;

in vec2 v_texcoord;
uniform lowp sampler2D s_textureY;
uniform lowp sampler2D s_textureU;
uniform lowp sampler2D s_textureV;

// https://stackoverflow.com/questions/26695253/when-switching-to-glsl-300-met-the-following-error
// The predefined variable gl_FragColor does not exist anymore in GLSL ES 3.00.
// out vec4 gl_FragColor;
out vec4 FragColor;

void main() {
    float y, u, v, r, g, b;
    y = texture(s_textureY, v_texcoord).r;
    u = texture(s_textureU, v_texcoord).r;
    v = texture(s_textureV, v_texcoord).r;
    u = u - 0.5;
    v = v - 0.5;
    r = y + 1.403 * v;
    g = y - 0.344 * u - 0.714 * v;
    b = y + 1.770 * u;
    FragColor = vec4(r, g, b, 1.0f);
    // gl_FragColor = vec4(r, g, b, 1.0);
}
```

2. Blur filter:

```glsl
#version 320 es
precision mediump float;

in vec2 v_texcoord;
uniform lowp sampler2D s_textureY;
uniform lowp sampler2D s_textureU;
uniform lowp sampler2D s_textureV;
out vec4 FragColor;

vec4 YuvToRgb(vec2 uv) {
    float y, u, v, r, g, b;
    y = texture(s_textureY, uv).r;
    u = texture(s_textureU, uv).r;
    v = texture(s_textureV, uv).r;
    u = u - 0.5;
    v = v - 0.5;
    r = y + 1.403 * v;
    g = y - 0.344 * u - 0.714 * v;
    b = y + 1.770 * u;
    return vec4(r, g, b, 1.0);
}

void main() {
    vec4 sample0, sample1, sample2, sample3;
    float blurStep = 0.5;
    // Renamed from "step" so it does not hide the built-in step() function.
    float blurOffset = blurStep / 100.0f;
    sample0 = YuvToRgb(vec2(v_texcoord.x - blurOffset, v_texcoord.y - blurOffset));
    sample1 = YuvToRgb(vec2(v_texcoord.x + blurOffset, v_texcoord.y + blurOffset));
    sample2 = YuvToRgb(vec2(v_texcoord.x + blurOffset, v_texcoord.y - blurOffset));
    sample3 = YuvToRgb(vec2(v_texcoord.x - blurOffset, v_texcoord.y + blurOffset));
    FragColor = (sample0 + sample1 + sample2 + sample3) / 4.0;
}
```
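The blur shader averages four samples taken diagonally around the fragment. Each sample is offset by blurStep / 100 = 0.005 in normalized texture coordinates, that is, half a percent of the image size. The CPU sketch below applies the same four-tap average to a single grayscale plane. The `Plane` type is hypothetical, and nearest-neighbor sampling with clamp-to-edge is an assumption chosen to mimic GL_CLAMP_TO_EDGE. The real shader samples with GL_LINEAR filtering:

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical single-channel image with normalized-coordinate sampling.
struct Plane {
    int w, h;
    std::vector<float> px;  // row-major, w * h values

    // Nearest-neighbor lookup at normalized (u, v), clamped to the edge.
    float sample(float u, float v) const {
        int x = std::min(std::max(static_cast<int>(std::floor(u * w)), 0), w - 1);
        int y = std::min(std::max(static_cast<int>(std::floor(v * h)), 0), h - 1);
        return px[static_cast<std::size_t>(y * w + x)];
    }
};

// Four diagonal taps averaged together, as in the blur shader's main().
float diagonalBlur(const Plane &p, float u, float v, float offset) {
    assert(offset >= 0.0f);
    return (p.sample(u - offset, v - offset) + p.sample(u + offset, v + offset) +
            p.sample(u + offset, v - offset) + p.sample(u - offset, v + offset)) / 4.0f;
}
```

Each output pixel gets a quarter of each diagonal neighbor. A single bright pixel therefore spreads into four dimmer ones, while flat areas stay unchanged.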

3. Fisheye filter:

```glsl
#version 320 es
precision mediump float;

const float PI = 3.1415926535;

in vec2 v_texcoord;
uniform lowp sampler2D s_textureY;
uniform lowp sampler2D s_textureU;
uniform lowp sampler2D s_textureV;
out vec4 FragColor;

// Fisheye filter
void main() {
    float aperture = 158.0;
    float apertureHalf = 0.5 * aperture * (PI / 180.0);
    float maxFactor = sin(apertureHalf);
    vec2 uv;
    vec2 xy = 2.0 * v_texcoord.xy - 1.0;
    float d = length(xy);
    if (d < (2.0 - maxFactor)) {
        d = length(xy * maxFactor);
        float z = sqrt(1.0 - d * d);
        float r = atan(d, z) / PI;
        float phi = atan(xy.y, xy.x);
        uv.x = r * cos(phi) + 0.5;
        uv.y = r * sin(phi) + 0.5;
    } else {
        uv = v_texcoord.xy;
    }
    float y, u, v, r, g, b;
    y = texture(s_textureY, uv).r;
    u = texture(s_textureU, uv).r;
    v = texture(s_textureV, uv).r;
    u = u - 0.5;
    v = v - 0.5;
    r = y + 1.403 * v;
    g = y - 0.344 * u - 0.714 * v;
    b = y + 1.770 * u;
    FragColor = vec4(r, g, b, 1.0);
}
```
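The fisheye shader only moves the texture coordinate; the color conversion is unchanged. The hypothetical `fisheyeCoord` below reproduces that remapping on the CPU. It maps uv to [-1, 1] around the center, and inside the lens it replaces the radius with asin-like compression, computed as atan(d, z) / PI. This pulls samples toward the center, which magnifies the middle of the image, and it keeps the angle phi:

```cpp
#include <cassert>
#include <cmath>

struct Vec2 { float x, y; };

// Fisheye remap from the shader; returns the texture coordinate to sample for output uv.
// The shader uses apertureDeg = 158.
Vec2 fisheyeCoord(Vec2 uv, float apertureDeg = 158.0f) {
    assert(apertureDeg > 0.0f && apertureDeg < 180.0f);
    const float kPi = 3.1415926535f;
    float apertureHalf = 0.5f * apertureDeg * (kPi / 180.0f);
    float maxFactor = std::sin(apertureHalf);
    Vec2 xy{2.0f * uv.x - 1.0f, 2.0f * uv.y - 1.0f};  // [0,1] -> [-1,1], center at 0
    float d = std::hypot(xy.x, xy.y);
    if (d >= 2.0f - maxFactor) return uv;              // outside the lens: unchanged
    d = std::hypot(xy.x * maxFactor, xy.y * maxFactor);
    float z = std::sqrt(1.0f - d * d);
    float r = std::atan2(d, z) / kPi;                  // compressed radius
    float phi = std::atan2(xy.y, xy.x);                // direction is preserved
    return Vec2{r * std::cos(phi) + 0.5f, r * std::sin(phi) + 0.5f};
}
```

The center maps to itself and the far corners fall outside the lens. A point halfway to the right edge samples from closer to the center (about x = 0.66 instead of 0.75).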

4. Swirl filter:

```glsl
#version 320 es
precision mediump float;

const float PI = 3.1415926535;

in vec2 v_texcoord;
uniform lowp sampler2D s_textureY;
uniform lowp sampler2D s_textureU;
uniform lowp sampler2D s_textureV;
uniform vec2 texSize;
out vec4 FragColor;

// Swirl filter
void main() {
    float radius = 200.0;
    float angle = 0.8;
    vec2 center = vec2(texSize.x / 2.0, texSize.y / 2.0);
    vec2 tc = v_texcoord * texSize;
    tc -= center;
    float dist = length(tc);
    if (dist < radius) {
        float percent = (radius - dist) / radius;
        float theta = percent * percent * angle * 8.0;
        float s = sin(theta);
        float c = cos(theta);
        tc = vec2(dot(tc, vec2(c, -s)), dot(tc, vec2(s, c)));
    }
    tc += center;
    float y, u, v, r, g, b;
    y = texture(s_textureY, tc / texSize).r;
    u = texture(s_textureU, tc / texSize).r;
    v = texture(s_textureV, tc / texSize).r;
    u = u - 0.5;
    v = v - 0.5;
    r = y + 1.403 * v;
    g = y - 0.344 * u - 0.714 * v;
    b = y + 1.770 * u;
    FragColor = vec4(r, g, b, 1.0);
}
```
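The swirl works in pixel units. Within a 200 px radius of the center, each point is rotated by an angle that grows quadratically toward the center, and points outside the radius are untouched. The hypothetical `swirlCoord` below expands the two `dot()` calls into an explicit 2D rotation, which makes it easy to check that the distance to the center is preserved:

```cpp
#include <cassert>
#include <cmath>

struct Vec2 { float x, y; };

// Swirl remap from the shader (radius 200 px, angle 0.8).
// texSize is the frame size in pixels; returns the normalized coordinate to sample.
Vec2 swirlCoord(Vec2 uv, Vec2 texSize, float radius = 200.0f, float angle = 0.8f) {
    assert(texSize.x > 0.0f && texSize.y > 0.0f);
    Vec2 center{texSize.x / 2.0f, texSize.y / 2.0f};
    Vec2 tc{uv.x * texSize.x - center.x, uv.y * texSize.y - center.y};  // pixels from center
    float dist = std::hypot(tc.x, tc.y);
    if (dist < radius) {
        float percent = (radius - dist) / radius;        // 1 at the center, 0 at the rim
        float theta = percent * percent * angle * 8.0f;  // stronger twist near the center
        float s = std::sin(theta), c = std::cos(theta);
        tc = Vec2{tc.x * c - tc.y * s, tc.x * s + tc.y * c};  // same as the two dot() calls
    }
    return Vec2{(tc.x + center.x) / texSize.x, (tc.y + center.y) / texSize.y};
}
```

Because the radius is fixed in pixels, the swirled area covers a larger share of the picture on low-resolution frames.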

5. Magnifier filter:

```glsl
#version 320 es
precision mediump float;

const float PI = 3.1415926535;

in vec2 v_texcoord;
uniform lowp sampler2D s_textureY;
uniform lowp sampler2D s_textureU;
uniform lowp sampler2D s_textureV;
uniform vec2 texSize;
out vec4 FragColor;

vec4 YuvToRgb(vec2 uv) {
    float y, u, v, r, g, b;
    y = texture(s_textureY, uv).r;
    u = texture(s_textureU, uv).r;
    v = texture(s_textureV, uv).r;
    u = u - 0.5;
    v = v - 0.5;
    r = y + 1.403 * v;
    g = y - 0.344 * u - 0.714 * v;
    b = y + 1.770 * u;
    return vec4(r, g, b, 1.0);
}

// Magnifier filter
void main() {
    float circleRadius = float(0.5);
    float minZoom = 0.4;
    float maxZoom = 0.6;
    vec2 center = vec2(texSize.x / 2.0, texSize.y / 2.0);
    vec2 uv = v_texcoord;
    uv.x *= (texSize.x / texSize.y);
    vec2 realCenter = vec2(0.0, 0.0);
    realCenter.x = (center.x / texSize.x) * (texSize.x / texSize.y);
    realCenter.y = center.y / texSize.y;
    float maxX = realCenter.x + circleRadius;
    float minX = realCenter.x - circleRadius;
    float maxY = realCenter.y + circleRadius;
    float minY = realCenter.y - circleRadius;
    if (uv.x > minX && uv.x < maxX && uv.y > minY && uv.y < maxY) {
        float relX = uv.x - realCenter.x;
        float relY = uv.y - realCenter.y;
        float ang = atan(relY, relX);
        float dist = sqrt(relX * relX + relY * relY);
        if (dist <= circleRadius) {
            float newRad = dist * ((maxZoom * dist / circleRadius) + minZoom);
            float newX = realCenter.x + cos(ang) * newRad;
            newX *= (texSize.y / texSize.x);
            float newY = realCenter.y + sin(ang) * newRad;
            FragColor = YuvToRgb(vec2(newX, newY));
        } else {
            FragColor = YuvToRgb(v_texcoord);
        }
    } else {
        FragColor = YuvToRgb(v_texcoord);
    }
}
```
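The magnifier first stretches x by the aspect ratio so the lens is round. Inside the circle it then samples at radius dist · (maxZoom · dist / circleRadius + minZoom). That factor runs from 0.4 at the center to 1.0 at the rim, so the middle is enlarged and the edge of the lens joins the unmagnified image seamlessly. The hypothetical `magnifierCoord` below is a CPU sketch of that mapping. It folds the shader's bounding-box pre-check into the circle test, which only differs for points exactly on the rim, and those map to themselves anyway:

```cpp
#include <cassert>
#include <cmath>

struct Vec2 { float x, y; };

// Magnifier remap from the shader; returns the normalized coordinate to sample.
Vec2 magnifierCoord(Vec2 uv, Vec2 texSize, float circleRadius = 0.5f,
                    float minZoom = 0.4f, float maxZoom = 0.6f) {
    assert(texSize.x > 0.0f && texSize.y > 0.0f);
    float aspect = texSize.x / texSize.y;
    Vec2 p{uv.x * aspect, uv.y};       // aspect-corrected so the lens is round
    Vec2 center{0.5f * aspect, 0.5f};  // image center in the same space
    float relX = p.x - center.x, relY = p.y - center.y;
    float dist = std::hypot(relX, relY);
    if (dist > circleRadius) return uv;  // outside the lens: unchanged
    float ang = std::atan2(relY, relX);
    float newRad = dist * ((maxZoom * dist / circleRadius) + minZoom);  // newRad <= dist
    return Vec2{(center.x + std::cos(ang) * newRad) / aspect, center.y + std::sin(ang) * newRad};
}
```

On a square frame, the point a quarter of the width right of center (x = 0.75) samples from x = 0.675.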

6. Lichtenstein filter:

```glsl
#version 320 es
precision mediump float;

const float PI = 3.1415926535;

in vec2 v_texcoord;
uniform lowp sampler2D s_textureY;
uniform lowp sampler2D s_textureU;
uniform lowp sampler2D s_textureV;
uniform vec2 texSize;
out vec4 FragColor;

// Lichtenstein-style filter
void main() {
    float size = texSize.x / 75.0;
    float radius = size * 0.5;
    vec2 fragCoord = v_texcoord * texSize.xy;
    vec2 quadPos = floor(fragCoord.xy / size) * size;
    vec2 quad = quadPos / texSize.xy;
    vec2 quadCenter = (quadPos + size / 2.0);
    float dist = length(quadCenter - fragCoord.xy);
    float y, u, v, r, g, b;
    y = texture(s_textureY, quad).r;
    u = texture(s_textureU, quad).r;
    v = texture(s_textureV, quad).r;
    u = u - 0.5;
    v = v - 0.5;
    r = y + 1.403 * v;
    g = y - 0.344 * u - 0.714 * v;
    b = y + 1.770 * u;
    if (dist > radius) {
        FragColor = vec4(0.25);
    } else {
        FragColor = vec4(r, g, b, 1.0);
    }
}
```
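The Lichtenstein filter is a halftone effect. The frame is split into square cells texSize.x / 75 pixels wide. Each cell is colored with the sample at its corner, but only inside a centered dot whose radius is half the cell; everything else becomes vec4(0.25). The hypothetical `lichtensteinCell` below reproduces that per-pixel decision on the CPU, taking the fragment position in pixels as the shader does:

```cpp
#include <cassert>
#include <cmath>

struct Vec2 { float x, y; };

// Result of the halftone test for one pixel.
struct HalftoneSample {
    bool insideDot;  // true: draw the cell color; false: draw vec4(0.25)
    Vec2 sampleUv;   // normalized coordinate of the cell corner, used for the color
};

HalftoneSample lichtensteinCell(Vec2 fragCoord, Vec2 texSize, float cellsAcross = 75.0f) {
    assert(texSize.x > 0.0f && texSize.y > 0.0f && cellsAcross > 0.0f);
    float size = texSize.x / cellsAcross;  // cell edge in pixels
    float radius = size * 0.5f;            // dot radius
    Vec2 quadPos{std::floor(fragCoord.x / size) * size, std::floor(fragCoord.y / size) * size};
    Vec2 quadCenter{quadPos.x + size / 2.0f, quadPos.y + size / 2.0f};
    float dist = std::hypot(quadCenter.x - fragCoord.x, quadCenter.y - fragCoord.y);
    return HalftoneSample{dist <= radius, Vec2{quadPos.x / texSize.x, quadPos.y / texSize.y}};
}
```

At 750 px wide, each cell is 10 px. The pixel at a cell center is inside the dot, while a pixel near the cell corner falls into the gray background.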

7. Triangle mosaic filter:

```glsl
#version 320 es
precision mediump float;

const float PI = 3.1415926535;

in vec2 v_texcoord;
uniform lowp sampler2D s_textureY;
uniform lowp sampler2D s_textureU;
uniform lowp sampler2D s_textureV;
uniform vec2 texSize;
out vec4 FragColor;

vec4 YuvToRgb(vec2 uv) {
    float y, u, v, r, g, b;
    y = texture(s_textureY, uv).r;
    u = texture(s_textureU, uv).r;
    v = texture(s_textureV, uv).r;
    u = u - 0.5;
    v = v - 0.5;
    r = y + 1.403 * v;
    g = y - 0.344 * u - 0.714 * v;
    b = y + 1.770 * u;
    return vec4(r, g, b, 1.0);
}

// Triangle mosaic filter
void main() {
    vec2 tileNum = vec2(40.0, 20.0);
    vec2 uv = v_texcoord;
    vec2 uv2 = floor(uv * tileNum) / tileNum;
    uv -= uv2;
    uv *= tileNum;
    vec3 color = YuvToRgb(uv2 + vec2(step(1.0 - uv.y, uv.x) / (2.0 * tileNum.x),
                                     step(uv.x, uv.y) / (2.0 * tileNum.y))).rgb;
    FragColor = vec4(color, 1.0);
}
```
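In the triangle mosaic, the frame is cut into 40 × 20 tiles, and each tile is divided by both of its diagonals into four triangles. The two `step()` calls test which side of each diagonal the fragment lies on. Each triangle therefore reads one of four fixed points in the tile: the tile corner, or the corner shifted by half a tile in x, in y, or in both. The sketch below writes out GLSL's step(edge, x), which returns 0 when x < edge and 1 otherwise. `glslStep` and `triangleMosaicCoord` are hypothetical names:

```cpp
#include <cassert>
#include <cmath>

struct Vec2 { float x, y; };

// GLSL step(edge, x): 0.0 if x < edge, otherwise 1.0.
float glslStep(float edge, float x) { return x < edge ? 0.0f : 1.0f; }

// Triangle-mosaic lookup: returns the coordinate whose color the whole triangle takes.
Vec2 triangleMosaicCoord(Vec2 uv, Vec2 tileNum) {
    assert(tileNum.x > 0.0f && tileNum.y > 0.0f);
    Vec2 origin{std::floor(uv.x * tileNum.x) / tileNum.x, std::floor(uv.y * tileNum.y) / tileNum.y};
    // Position inside the tile, scaled to [0, 1).
    Vec2 local{(uv.x - origin.x) * tileNum.x, (uv.y - origin.y) * tileNum.y};
    return Vec2{origin.x + glslStep(1.0f - local.y, local.x) / (2.0f * tileNum.x),  // x + y >= 1 ?
                origin.y + glslStep(local.x, local.y) / (2.0f * tileNum.y)};        // y >= x ?
}
```

For instance, with 4 × 2 tiles, a fragment at local position (0.75, 0.25) reads half a tile to the right, and one at (0.25, 0.25) reads half a tile up.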

8. Pixelate filter:

```glsl
#version 320 es
precision mediump float;

const float PI = 3.1415926535;

in vec2 v_texcoord;
uniform lowp sampler2D s_textureY;
uniform lowp sampler2D s_textureU;
uniform lowp sampler2D s_textureV;
uniform vec2 texSize;
out vec4 FragColor;

// Pixelate filter
void main() {
    vec2 pixelSize = vec2(texSize.x / 100.0, texSize.y / 100.0);
    vec2 uv = v_texcoord.xy;
    float dx = pixelSize.x * (1. / texSize.x);
    float dy = pixelSize.y * (1. / texSize.y);
    vec2 coord = vec2(dx * floor(uv.x / dx), dy * floor(uv.y / dy));
    float y, u, v, r, g, b;
    y = texture(s_textureY, coord).r;
    u = texture(s_textureU, coord).r;
    v = texture(s_textureV, coord).r;
    u = u - 0.5;
    v = v - 0.5;
    r = y + 1.403 * v;
    g = y - 0.344 * u - 0.714 * v;
    b = y + 1.770 * u;
    FragColor = vec4(r, g, b, 1.0);
}
```
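In the pixelate shader, pixelSize is texSize / 100, so dx and dy simplify to 1/100. The frame is therefore always divided into a 100 × 100 grid of blocks, whatever its resolution, and every fragment samples the lower corner of its block. A minimal sketch of that snapping follows; `pixelateCoord` and its `blocks` parameter are hypothetical, with 100 matching the shader:

```cpp
#include <cassert>
#include <cmath>

struct Vec2 { float x, y; };

// Snap uv to the lower corner of its block in a blocks x blocks grid.
Vec2 pixelateCoord(Vec2 uv, float blocks = 100.0f) {
    assert(blocks > 0.0f);
    float d = 1.0f / blocks;  // block size in normalized coordinates (dx = dy in the shader)
    return Vec2{d * std::floor(uv.x / d), d * std::floor(uv.y / d)};
}
```

To get square blocks on a non-square frame, the grid would need different x and y block counts. That would be a variation on the shader, not what it does now.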

9. Cross-stitch filter:

```glsl
#version 320 es
precision mediump float;

const float PI = 3.1415926535;

in vec2 v_texcoord;
uniform lowp sampler2D s_textureY;
uniform lowp sampler2D s_textureU;
uniform lowp sampler2D s_textureV;
uniform vec2 texSize;
out vec4 FragColor;

vec4 YuvToRgb(vec2 uv) {
    float y, u, v, r, g, b;
    y = texture(s_textureY, uv).r;
    u = texture(s_textureU, uv).r;
    v = texture(s_textureV, uv).r;
    u = u - 0.5;
    v = v - 0.5;
    r = y + 1.403 * v;
    g = y - 0.344 * u - 0.714 * v;
    b = y + 1.770 * u;
    return vec4(r, g, b, 1.0);
}

vec4 CrossStitching(vec2 uv) {
    float stitchSize = texSize.x / 35.0;
    int invert = 0;
    vec4 color = vec4(0.0);
    float size = stitchSize;
    vec2 cPos = uv * texSize.xy;
    vec2 tlPos = floor(cPos / vec2(size, size));
    tlPos *= size;
    int remX = int(mod(cPos.x, size));
    int remY = int(mod(cPos.y, size));
    if (remX == 0 && remY == 0)
        tlPos = cPos;
    vec2 blPos = tlPos;
    blPos.y += (size - 1.0);
    if ((remX == remY) || (((int(cPos.x) - int(blPos.x)) == (int(blPos.y) - int(cPos.y))))) {
        if (invert == 1)
            color = vec4(0.2, 0.15, 0.05, 1.0);
        else
            color = YuvToRgb(tlPos * vec2(1.0 / texSize.x, 1.0 / texSize.y)) * 1.4;
    } else {
        if (invert == 1)
            color = YuvToRgb(tlPos * vec2(1.0 / texSize.x, 1.0 / texSize.y)) * 1.4;
        else
            color = vec4(0.0, 0.0, 0.0, 1.0);
    }
    return color;
}

// Cross-stitch filter
void main() {
    FragColor = CrossStitching(v_texcoord);
}
```
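The cross-stitch shader divides the frame into cells texSize.x / 35 pixels wide. A pixel belongs to the "X" stitch if it lies on the cell's main diagonal (remX == remY) or on the anti-diagonal measured from the cell's opposite corner (blPos). Stitch pixels get the cell color brightened by 1.4 and the rest turn black; `invert` swaps the two. The hypothetical `onCrossStitch` below reproduces only that membership test. It uses `std::fmod`, which matches GLSL `mod` here because pixel positions are never negative:

```cpp
#include <cassert>
#include <cmath>

struct Vec2 { float x, y; };

// True if pixel position cPos (in pixels) is drawn as part of a stitch.
bool onCrossStitch(Vec2 cPos, float size) {
    assert(size >= 1.0f && cPos.x >= 0.0f && cPos.y >= 0.0f);
    Vec2 tlPos{std::floor(cPos.x / size) * size, std::floor(cPos.y / size) * size};
    int remX = static_cast<int>(std::fmod(cPos.x, size));  // offset inside the cell
    int remY = static_cast<int>(std::fmod(cPos.y, size));
    if (remX == 0 && remY == 0) tlPos = cPos;  // kept as in the shader
    Vec2 blPos{tlPos.x, tlPos.y + (size - 1.0f)};
    return remX == remY ||
           (static_cast<int>(cPos.x) - static_cast<int>(blPos.x)) ==
               (static_cast<int>(blPos.y) - static_cast<int>(cPos.y));
}
```

With 10-pixel cells, (3.5, 3.5) is on the main diagonal and (2.5, 7.5) is on the anti-diagonal. (3.5, 5.5) is on neither, so it would be black.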

10. Toonify filter:

```glsl
#version 320 es
precision mediump float;

const float PI = 3.1415926535;

in vec2 v_texcoord;
uniform lowp sampler2D s_textureY;
uniform lowp sampler2D s_textureU;
uniform lowp sampler2D s_textureV;
uniform vec2 texSize;

const int kHueLevCount = 6;
const int kSatLevCount = 7;
const int kValLevCount = 4;
float hueLevels[kHueLevCount];
float satLevels[kSatLevCount];
float valLevels[kValLevCount];
float edge_thres = 0.2;
float edge_thres2 = 5.0;
out vec4 FragColor;

vec4 YuvToRgb(vec2 uv) {
    float y, u, v, r, g, b;
    y = texture(s_textureY, uv).r;
    u = texture(s_textureU, uv).r;
    v = texture(s_textureV, uv).r;
    u = u - 0.5;
    v = v - 0.5;
    r = y + 1.403 * v;
    g = y - 0.344 * u - 0.714 * v;
    b = y + 1.770 * u;
    return vec4(r, g, b, 1.0);
}

vec3 RGBtoHSV(float r, float g, float b) {
    float minv, maxv, delta;
    vec3 res;
    minv = min(min(r, g), b);
    maxv = max(max(r, g), b);
    res.z = maxv;
    delta = maxv - minv;
    if (maxv != 0.0)
        res.y = delta / maxv;
    else {
        res.y = 0.0;
        res.x = -1.0;
        return res;
    }
    // Gray pixel: hue is undefined, so avoid dividing by zero below.
    if (delta == 0.0) {
        res.x = 0.0;
        return res;
    }
    if (r == maxv)
        res.x = (g - b) / delta;
    else if (g == maxv)
        res.x = 2.0 + (b - r) / delta;
    else
        res.x = 4.0 + (r - g) / delta;
    res.x = res.x * 60.0;
    if (res.x < 0.0)
        res.x = res.x + 360.0;
    return res;
}

vec3 HSVtoRGB(float h, float s, float v) {
    int i;
    float f, p, q, t;
    vec3 res;
    if (s == 0.0) {
        res.x = v;
        res.y = v;
        res.z = v;
        return res;
    }
    // Wrap 360 back to 0 so i stays in 0..5 (nearestLevel() can return 360.0).
    h = mod(h, 360.0) / 60.0;
    i = int(floor(h));
    f = h - float(i);
    p = v * (1.0 - s);
    q = v * (1.0 - s * f);
    t = v * (1.0 - s * (1.0 - f));
    if (i == 0) {
        res.x = v; res.y = t; res.z = p;
    } else if (i == 1) {
        res.x = q; res.y = v; res.z = p;
    } else if (i == 2) {
        res.x = p; res.y = v; res.z = t;
    } else if (i == 3) {
        res.x = p; res.y = q; res.z = v;
    } else if (i == 4) {
        res.x = t; res.y = p; res.z = v;
    } else {  // i == 5
        res.x = v; res.y = p; res.z = q;
    }
    return res;
}

float nearestLevel(float col, int mode) {
    int levCount = kValLevCount;  // mode 2
    if (mode == 0) levCount = kHueLevCount;
    if (mode == 1) levCount = kSatLevCount;
    for (int i = 0; i < levCount - 1; i++) {
        if (mode == 0) {
            if (col >= hueLevels[i] && col <= hueLevels[i + 1]) {
                return hueLevels[i + 1];
            }
        }
        if (mode == 1) {
            if (col >= satLevels[i] && col <= satLevels[i + 1]) {
                return satLevels[i + 1];
            }
        }
        if (mode == 2) {
            if (col >= valLevels[i] && col <= valLevels[i + 1]) {
                return valLevels[i + 1];
            }
        }
    }
    // No band matched: keep the value unchanged.
    return col;
}

float avgIntensity(vec4 pix) {
    return (pix.r + pix.g + pix.b) / 3.;
}

vec4 getPixel(vec2 coords, float dx, float dy) {
    return YuvToRgb(coords + vec2(dx, dy));
}

float IsEdge(in vec2 coords) {
    float dxtex = 1.0 / float(texSize.x);
    float dytex = 1.0 / float(texSize.y);
    float pix[9];
    int k = -1;
    float delta;
    for (int i = -1; i < 2; i++) {
        for (int j = -1; j < 2; j++) {
            k++;
            pix[k] = avgIntensity(getPixel(coords, float(i) * dxtex, float(j) * dytex));
        }
    }
    delta = (abs(pix[1] - pix[7]) + abs(pix[5] - pix[3]) + abs(pix[0] - pix[8])
             + abs(pix[2] - pix[6])) / 4.;
    return clamp(edge_thres2 * delta, 0.0, 1.0);
}

// Toonify filter
void main() {
    hueLevels[0] = 0.0;
    hueLevels[1] = 140.0;
    hueLevels[2] = 160.0;
    hueLevels[3] = 240.0;
    hueLevels[4] = 240.0;
    hueLevels[5] = 360.0;
    satLevels[0] = 0.0;
    satLevels[1] = 0.15;
    satLevels[2] = 0.3;
    satLevels[3] = 0.45;
    satLevels[4] = 0.6;
    satLevels[5] = 0.8;
    satLevels[6] = 1.0;
    valLevels[0] = 0.0;
    valLevels[1] = 0.3;
    valLevels[2] = 0.6;
    valLevels[3] = 1.0;
    vec2 uv = v_texcoord;
    vec3 color = YuvToRgb(uv).rgb;
    vec3 vHSV = RGBtoHSV(color.r, color.g, color.b);
    vHSV.x = nearestLevel(vHSV.x, 0);
    vHSV.y = nearestLevel(vHSV.y, 1);
    vHSV.z = nearestLevel(vHSV.z, 2);
    float edg = IsEdge(uv);
    vec3 vRGB = (edg >= edge_thres) ? vec3(0.0, 0.0, 0.0) : HSVtoRGB(vHSV.x, vHSV.y, vHSV.z);
    FragColor = vec4(vRGB.x, vRGB.y, vRGB.z, 1.0);
}
```
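Toonify converts each pixel to HSV and snaps hue, saturation and value up to the top of the band they fall in, which flattens the colors into a few levels. It then paints strong edges black. The color part can be checked on the CPU with the sketch below. `rgbToHsv`, `hsvToRgb` and `nearestLevel` are hypothetical ports of the shader functions: hue is in degrees, gray pixels get hue 0, and a hue of 360 wraps to 0. The edge detection is left out:

```cpp
#include <cassert>
#include <cmath>

struct Hsv { float h, s, v; };  // h in degrees [0, 360), s and v in [0, 1]
struct Rgb { float r, g, b; };

Hsv rgbToHsv(float r, float g, float b) {
    float minv = std::fmin(std::fmin(r, g), b);
    float maxv = std::fmax(std::fmax(r, g), b);
    float delta = maxv - minv;
    if (maxv == 0.0f || delta == 0.0f) return Hsv{0.0f, 0.0f, maxv};  // gray: no hue
    float h;
    if (r == maxv) h = (g - b) / delta;
    else if (g == maxv) h = 2.0f + (b - r) / delta;
    else h = 4.0f + (r - g) / delta;
    h *= 60.0f;
    if (h < 0.0f) h += 360.0f;
    return Hsv{h, delta / maxv, maxv};
}

Rgb hsvToRgb(float h, float s, float v) {
    if (s == 0.0f) return Rgb{v, v, v};
    h = std::fmod(h, 360.0f) / 60.0f;  // 360 wraps to 0, so the sector index is 0..5
    int i = static_cast<int>(std::floor(h));
    float f = h - static_cast<float>(i);
    float p = v * (1.0f - s), q = v * (1.0f - s * f), t = v * (1.0f - s * (1.0f - f));
    switch (i) {
        case 0: return Rgb{v, t, p};
        case 1: return Rgb{q, v, p};
        case 2: return Rgb{p, v, t};
        case 3: return Rgb{p, q, v};
        case 4: return Rgb{t, p, v};
        default: return Rgb{v, p, q};  // sector 5
    }
}

// Snap value up to the upper bound of the band [levels[k], levels[k + 1]] it falls in.
float nearestLevel(float value, const float *levels, int count) {
    assert(count >= 2);
    for (int k = 0; k < count - 1; ++k) {
        if (value >= levels[k] && value <= levels[k + 1]) return levels[k + 1];
    }
    return value;  // outside every band: unchanged
}
```

With the shader's value levels {0, 0.3, 0.6, 1}, a brightness of 0.45 becomes 0.6. Even pure black is lifted to 0.3, which is part of the flat, poster-like look.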

11. Predator thermal-vision filter:

```glsl
#version 320 es
precision mediump float;

const float PI = 3.1415926535;

in vec2 v_texcoord;
uniform lowp sampler2D s_textureY;
uniform lowp sampler2D s_textureU;
uniform lowp sampler2D s_textureV;
uniform vec2 texSize;
out vec4 FragColor;

// Predator thermal-vision filter
void main() {
    float y, u, v, r, g, b;
    y = texture(s_textureY, v_texcoord).r;
    u = texture(s_textureU, v_texcoord).r;
    v = texture(s_textureV, v_texcoord).r;
    u = u - 0.5;
    v = v - 0.5;
    r = y + 1.403 * v;
    g = y - 0.344 * u - 0.714 * v;
    b = y + 1.770 * u;
    vec3 color = vec3(r, g, b);
    vec2 uv = v_texcoord.xy;
    vec3 colors[3];
    colors[0] = vec3(0., 0., 1.);
    colors[1] = vec3(1., 1., 0.);
    colors[2] = vec3(1., 0., 0.);
    float lum = (color.r + color.g + color.b) / 3.;
    int idx = (lum < 0.5) ? 0 : 1;
    vec3 rgb = mix(colors[idx], colors[idx + 1], (lum - float(idx) * 0.5) / 0.5);
    FragColor = vec4(rgb, 1.0);
}
```
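The thermal look comes from a three-stop palette. The average of R, G and B picks a position on a blue -> yellow -> red gradient: the lower half of the brightness range blends blue into yellow, and the upper half blends yellow into red. The hypothetical `thermal` function below is a CPU version of that mapping. It writes out GLSL's `mix(a, b, t) = a * (1 - t) + b * t` by hand and, like the shader, does not clamp `t`:

```cpp
#include <cassert>

struct Rgb { float r, g, b; };

// Thermal palette from the "Predator" shader.
Rgb thermal(Rgb c) {
    const Rgb colors[3] = {{0.f, 0.f, 1.f}, {1.f, 1.f, 0.f}, {1.f, 0.f, 0.f}};  // blue, yellow, red
    float lum = (c.r + c.g + c.b) / 3.0f;
    int idx = lum < 0.5f ? 0 : 1;                             // which half of the gradient
    float t = (lum - static_cast<float>(idx) * 0.5f) / 0.5f;  // position within that half
    const Rgb &a = colors[idx];
    const Rgb &b = colors[idx + 1];
    return Rgb{a.r * (1 - t) + b.r * t, a.g * (1 - t) + b.g * t, a.b * (1 - t) + b.b * t};
}
```

Because the YUV conversion can produce values slightly outside [0, 1], `t` can overshoot a little. The GPU clamps the final color when it writes to the framebuffer.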

12. Emboss filter:

```glsl
#version 320 es
precision mediump float;

const float PI = 3.1415926535;

in vec2 v_texcoord;
uniform lowp sampler2D s_textureY;
uniform lowp sampler2D s_textureU;
uniform lowp sampler2D s_textureV;
uniform vec2 texSize;
out vec4 FragColor;

vec4 YuvToRgb(vec2 uv) {
    float y, u, v, r, g, b;
    y = texture(s_textureY, uv).r;
    u = texture(s_textureU, uv).r;
    v = texture(s_textureV, uv).r;
    u = u - 0.5;
    v = v - 0.5;
    r = y + 1.403 * v;
    g = y - 0.344 * u - 0.714 * v;
    b = y + 1.770 * u;
    return vec4(r, g, b, 1.0);
}

// Emboss filter
void main() {
    vec4 color = vec4(0.5, 0.5, 0.5, 1.0);  // start from mid-gray (alpha initialized too)
    vec2 onePixel = vec2(1.0 / texSize.x, 1.0 / texSize.y);
    color -= YuvToRgb(v_texcoord - onePixel) * 5.0;
    color += YuvToRgb(v_texcoord + onePixel) * 5.0;
    color.rgb = vec3((color.r + color.g + color.b) / 3.0);
    FragColor = vec4(color.rgb, 1.0);
}
```
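The emboss effect is a directional difference. It starts from mid-gray 0.5, subtracts five times the neighbor at (-1, -1) and adds five times the neighbor at (+1, +1). Flat areas cancel out to gray, and brightness changes along that diagonal turn lighter or darker, which reads as relief. The hypothetical `embossAt` below applies the same formula to a single grayscale plane. For gray input, the shader's final channel average equals this value. Clamping to the edge is an assumption made to mimic GL_CLAMP_TO_EDGE:

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Emboss one pixel of a row-major grayscale image of size w x h.
float embossAt(const std::vector<float> &img, int w, int h, int x, int y) {
    assert(static_cast<int>(img.size()) == w * h);
    auto at = [&](int px, int py) {  // clamp-to-edge lookup
        px = px < 0 ? 0 : (px >= w ? w - 1 : px);
        py = py < 0 ? 0 : (py >= h ? h - 1 : py);
        return img[static_cast<std::size_t>(py * w + px)];
    };
    return 0.5f - 5.0f * at(x - 1, y - 1) + 5.0f * at(x + 1, y + 1);
}
```

The factor of 5 makes the effect strong: even a small gradient pushes the result far past 0.5, and the GPU clamps it on output.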

13. Edge detection filter:

```glsl
#version 320 es
precision mediump float;

const float PI = 3.1415926535;

in vec2 v_texcoord;
uniform lowp sampler2D s_textureY;
uniform lowp sampler2D s_textureU;
uniform lowp sampler2D s_textureV;
uniform vec2 texSize;
out vec4 FragColor;

vec4 YuvToRgb(vec2 uv) {
    float y, u, v, r, g, b;
    y = texture(s_textureY, uv).r;
    u = texture(s_textureU, uv).r;
    v = texture(s_textureV, uv).r;
    u = u - 0.5;
    v = v - 0.5;
    r = y + 1.403 * v;
    g = y - 0.344 * u - 0.714 * v;
    b = y + 1.770 * u;
    return vec4(r, g, b, 1.0);
}

// Edge detection filter
void main() {
    vec2 pos = v_texcoord.xy;
    vec2 onePixel = vec2(1, 1) / texSize;
    vec4 color = vec4(0);
    mat3 edgeDetectionKernel = mat3(-1, -1, -1,
                                    -1,  8, -1,
                                    -1, -1, -1);
    for (int i = 0; i < 3; i++) {
        for (int j = 0; j < 3; j++) {
            vec2 samplePos = pos + vec2(i - 1, j - 1) * onePixel;
            vec4 sampleColor = YuvToRgb(samplePos);
            sampleColor *= edgeDetectionKernel[i][j];
            color += sampleColor;
        }
    }
    FragColor = vec4(color.rgb, 1.0);
}
```
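The edge detector convolves each pixel with a 3 × 3 kernel that has 8 in the center and -1 around it, a Laplacian-style filter. The weights sum to 0, so uniform areas come out black and only changes in brightness produce a response. The kernel is symmetric, so the [i][j] indexing order in the shader does not matter. The hypothetical `edgeAt` below runs the same convolution on a grayscale plane, with clamp-to-edge sampling assumed:

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// 3x3 edge-detection kernel applied at (x, y) of a row-major w x h grayscale image.
float edgeAt(const std::vector<float> &img, int w, int h, int x, int y) {
    assert(static_cast<int>(img.size()) == w * h);
    static const float kKernel[3][3] = {{-1, -1, -1}, {-1, 8, -1}, {-1, -1, -1}};
    float sum = 0.0f;
    for (int i = 0; i < 3; ++i) {
        for (int j = 0; j < 3; ++j) {
            int px = x + i - 1, py = y + j - 1;
            px = px < 0 ? 0 : (px >= w ? w - 1 : px);  // clamp to edge
            py = py < 0 ? 0 : (py >= h ? h - 1 : py);
            sum += kKernel[i][j] * img[static_cast<std::size_t>(py * w + px)];
        }
    }
    return sum;
}
```

A single bright pixel gives a response of 8 at its own position and -1 at each neighbor. On screen, the negative values are clamped to black.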

相关文章:

OpenGL Texture C++ Camera Filter滤镜

基于OpenGL Texture纹理的强大功能,在片段着色器(Shader)中编写GLSL代码,对YUV的数据进行数据转换从而实现视频编辑软件中的相机滤镜功能。 接上一篇OpenGL Texture C 预览Camera视频的功能实现,本篇来实现Camera滤镜效…...

基于Sobel算法的边缘检测设计与实现

1、边缘检测 针对的时灰度图像,顾名思义,检测图像的边缘,是针对图像像素点的一种计算,目的时标识数字图像中灰度变化明显的点,图像的边缘检测,在保留了图像的重要结构信息的同时,剔除了可以认为…...

java:练习

编写一个 Java 程序,计算并输出从 1 到用户指定的数字 n 中,所有“幸运数字”。幸运数字的定义如下:条件 1:数字的所有位数(如个位、十位)加起来的和是 7 的倍数。条件 2:数字本身是一个质数&am…...

大数据中一些常用的集群启停命令

文章目录 一、HDFS二、MapReduce && YARN三、Hive 一、HDFS 格式化namenode # 确保以hadoop用户执行 su - hadoop # 格式化namenode hadoop namenode -format启动 # 一键启动hdfs集群 start-dfs.sh # 一键关闭hdfs集群 stop-dfs.sh# 如果遇到命令未找到的错误&#…...

Golang、Python、C语言、Java的圆桌会议

一天,Golang、C语言、Java 和 Python 四位老朋友坐在编程领域的“圆桌会议”上,讨论如何一起完成一个任务:实现一个简单的高并发服务器,用于处理成千上万的请求。大家各抒己见,而 Golang 则是这次会议的主角。 1. Pyth…...

C语言编译原理

目录 一、C语言的编译过程 二、预处理 三、编译阶段 3.1 词法分析(Lexical Analysis) 3.2 语法分析(Syntax Analysis) 语法分析的主要步骤: 语法分析的关键技术: 构建AST: 符号表的维护…...

【c++】类和对象(下)(取地址运算符重载、深究构造函数、类型转换、static修饰成员、友元、内部类、匿名对象)

🌟🌟作者主页:ephemerals__ 🌟🌟所属专栏:C 目录 前言 一、取地址运算符重载 1. const修饰成员函数 2. 取地址运算符重载 二、深究构造函数 三、类型转换 四、static修饰成员 1. static修饰成员变…...

Apache POI 学习

Apache POI 学习 1. 引言2. 环境搭建MavenGradle 3. 基础概念4. 基本操作4.1 创建 Excel 文件4.2 读取 Excel 文件 5. 进阶操作5.1 设置单元格样式5.2 数据验证5.3 图表创建5.4 合并单元格5.5 居中对齐5.6 设置边框和字体颜色 6. 性能优化7. 总结 1. 引言 Apache POI 是一个用…...

福建科立讯通信 指挥调度管理平台 SQL注入漏洞

北峰通信-福建科立讯通信 指挥调度管理平台 SQL注入漏洞 厂商域名和信息收集 域名: 工具sqlmap python sqlmap.py -u "http://ip:端口/api/client/down_file.php?uuid1" --batch 数据包 GET /api/client/down_file.php?uuid1%27%20AND%20(SELECT%20…...

4.qml单例模式

这里写目录标题 js文件单例模式qml文件单例模式 js文件单例模式 直接添加一个js文件到qml中 修改内容 TestA.qml import QtQuick 2.0 import QtQuick.Controls 2.12 import "./MyWork.js" as MWItem {Row{TextField {onEditingFinished: {MW.setA(text)}}Button…...

CACTI 0.8.7 迁移并升级到 1.2.7记录

升级前后环境 升级前: CactiEZ 中文版 V10 升级后: Ubuntu 2204 Cacti 1.2.7 升级原因:风险漏洞太多,升不尽,补不完. 升级流程 Created with Raphal 2.3.0 开始 DST:安装Ububtu/Mariadb/apache/php SRC:备份 DB/RRA 数据导入 结束 Cacti 依赖包 注意:UBUNTU下有些包,它非另外…...

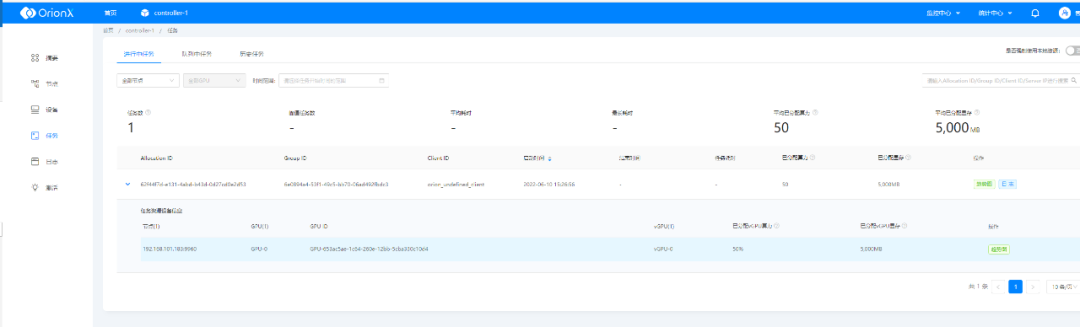

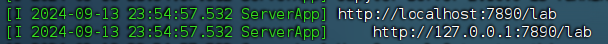

OrionX vGPU 研发测试场景下最佳实践之Jupyter模式

在上周的文章中,我们讲述了OrionX vGPU研发测试场景下最佳实践之SSH模式,今天,让我们走进 Jupyter模式下的最佳实践。 • Jupyter模式:Jupyter是最近几年算法人员使用比较多的一种工具,很多企业已经将其改造集成开发工…...

国风编曲:了解国风 民族调式 五声音阶 作/编曲思路 变化音 六声、七声调式

中国风 以流行为基础加入中国特色乐器、调式、和声融为一体的风格 如:青花瓷、菊花台、绝代风华、江南等等等等 省流:中国风=流行民族乐 两者结合,民族元素越多越中国风 流行民族/摇滚民族/电子民族 注意:中国风≠…...

HTTP 响应状态码详解

HTTP状态码详解:HTTP状态码,是用以表示WEB服务器 HTTP响应状态的3位数字代码 小技巧: CtrlF 快速查找 Http状态码状态码含义100客户端应当继续发送请求。这个临时响应是用来通知客户端它的部分请求已经被服务器接收,且仍未被拒绝。客户端应当…...

在服务器上开Juypter Lab教程(远程访问)

在服务器上开Juypter Lab教程(远程访问) 文章目录 在服务器上开Juypter Lab教程(远程访问)一、安装anaconda1、安装anaconda2、提权限3、运行4、同意协议5、安装6、是否要自动初始化 conda7、结束8、检查 二、Anaconda安装Pytorch…...

【硬件模块】SHT20温湿度传感器

SHT20是一个用IIC通信的温湿度传感器。我们知道这个就可以了。 它支持的电压范围是2.1~3.6V,推荐是3V,所以如果我们的MCU是5V的,那么就得转个电压才能用了。 IIC常见的速率有100k,400k,而SHT20是支持400k的(…...

Redhat 8,9系(复刻系列) 一键部署Oracle23ai rpm

Oracle23ai前言 Oracle Database 23ai Free 让您可以充分体验 Oracle Database 的能力,世界各地的企业都依赖它来处理关键任务工作负载。 Oracle Database Free 的资源限制为 2 个 CPU(前台进程)、2 GB 的 RAM 和 12 GB 的磁盘用户数据。该软件包不仅易于使用,还可轻松下载…...

SIPp uac.xml 之我见

https://sipp.sourceforge.net/doc/uac.xml.html 这个 uac.xml 有没有问题呢? 有! 问题之一是: <recv response"200" rtd"true" rrs"true"> 要加 rrs, 仔细看注释就能看到 问题之二是࿱…...

引领智能家居新风尚,WTN6040F门铃解决方案——让家的呼唤更动听

在追求高效与便捷的智能家居时代,每一个细节都承载着我们对美好生活的向往。WTN6040F,作为一款专为现代家庭设计的低成本、高性能门铃解决方案,正以其独特的魅力,悄然改变着我们的居家生活体验。 芯片功能特点: 1.2.4…...

Android 蓝牙服务启动

蓝牙是Android设备中非常常见的一个feature,设备厂家可以用BT来做RC、连接音箱、设备本身做Sink等常见功能。如果一些设备不需要BT功能,Android也可以通过配置来disable此模块,方便厂家为自己的设备做客制化。APP操作设备的蓝牙功能ÿ…...

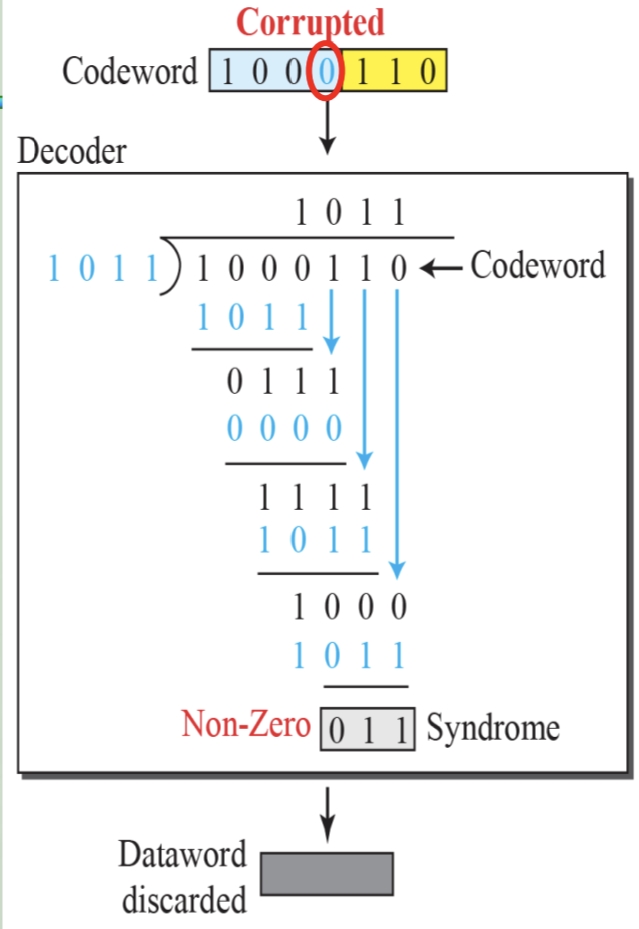

循环冗余码校验CRC码 算法步骤+详细实例计算

通信过程:(白话解释) 我们将原始待发送的消息称为 M M M,依据发送接收消息双方约定的生成多项式 G ( x ) G(x) G(x)(意思就是 G ( x ) G(x) G(x) 是已知的)࿰…...

服务器硬防的应用场景都有哪些?

服务器硬防是指一种通过硬件设备层面的安全措施来防御服务器系统受到网络攻击的方式,避免服务器受到各种恶意攻击和网络威胁,那么,服务器硬防通常都会应用在哪些场景当中呢? 硬防服务器中一般会配备入侵检测系统和预防系统&#x…...

JavaScript 数据类型详解

JavaScript 数据类型详解 JavaScript 数据类型分为 原始类型(Primitive) 和 对象类型(Object) 两大类,共 8 种(ES11): 一、原始类型(7种) 1. undefined 定…...

Proxmox Mail Gateway安装指南:从零开始配置高效邮件过滤系统

💝💝💝欢迎莅临我的博客,很高兴能够在这里和您见面!希望您在这里可以感受到一份轻松愉快的氛围,不仅可以获得有趣的内容和知识,也可以畅所欲言、分享您的想法和见解。 推荐:「storms…...

提升移动端网页调试效率:WebDebugX 与常见工具组合实践

在日常移动端开发中,网页调试始终是一个高频但又极具挑战的环节。尤其在面对 iOS 与 Android 的混合技术栈、各种设备差异化行为时,开发者迫切需要一套高效、可靠且跨平台的调试方案。过去,我们或多或少使用过 Chrome DevTools、Remote Debug…...

HybridVLA——让单一LLM同时具备扩散和自回归动作预测能力:训练时既扩散也回归,但推理时则扩散

前言 如上一篇文章《dexcap升级版之DexWild》中的前言部分所说,在叠衣服的过程中,我会带着团队对比各种模型、方法、策略,毕竟针对各个场景始终寻找更优的解决方案,是我个人和我司「七月在线」的职责之一 且个人认为,…...

k8s从入门到放弃之HPA控制器

k8s从入门到放弃之HPA控制器 Kubernetes中的Horizontal Pod Autoscaler (HPA)控制器是一种用于自动扩展部署、副本集或复制控制器中Pod数量的机制。它可以根据观察到的CPU利用率(或其他自定义指标)来调整这些对象的规模,从而帮助应用程序在负…...

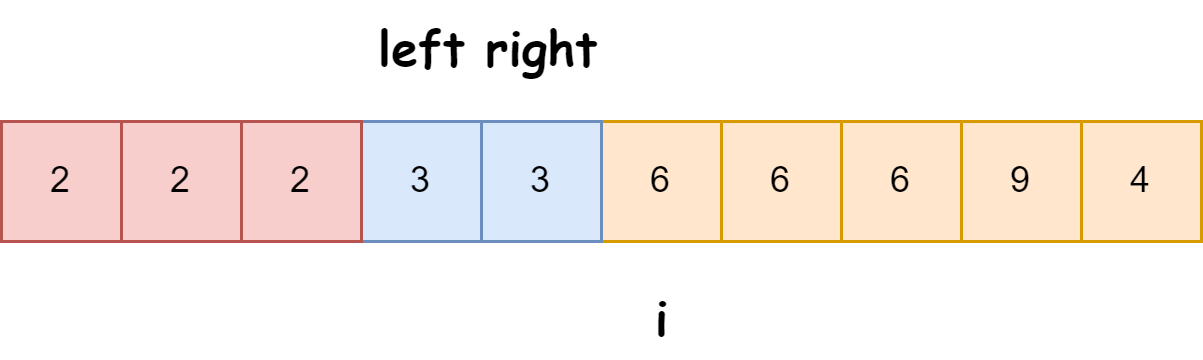

快速排序算法改进:随机快排-荷兰国旗划分详解

随机快速排序-荷兰国旗划分算法详解 一、基础知识回顾1.1 快速排序简介1.2 荷兰国旗问题 二、随机快排 - 荷兰国旗划分原理2.1 随机化枢轴选择2.2 荷兰国旗划分过程2.3 结合随机快排与荷兰国旗划分 三、代码实现3.1 Python实现3.2 Java实现3.3 C实现 四、性能分析4.1 时间复杂度…...

与文本切分器(Splitter)详解《二》)

LangChain 中的文档加载器(Loader)与文本切分器(Splitter)详解《二》

🧠 LangChain 中 TextSplitter 的使用详解:从基础到进阶(附代码) 一、前言 在处理大规模文本数据时,特别是在构建知识库或进行大模型训练与推理时,文本切分(Text Splitting) 是一个…...

车载诊断架构 --- ZEVonUDS(J1979-3)简介第一篇

我是穿拖鞋的汉子,魔都中坚持长期主义的汽车电子工程师。 老规矩,分享一段喜欢的文字,避免自己成为高知识低文化的工程师: 做到欲望极简,了解自己的真实欲望,不受外在潮流的影响,不盲从,不跟风。把自己的精力全部用在自己。一是去掉多余,凡事找规律,基础是诚信;二是…...