GlusterFS for Kubernetes Storage

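1. GlusterFS Overview

1.1 Introduction to GlusterFS

GlusterFS is an open-source, scalable distributed file system. It aggregates disk storage from multiple servers into a single global namespace and exposes it as shared file storage. It is designed for large distributed applications that access large amounts of data, runs on inexpensive commodity hardware, and provides high availability and fault tolerance. A GlusterFS deployment (abbreviated "gfs" in the rest of this post) consists of storage servers, clients, and NFS/Samba storage gateways. There is no metadata server component, which helps the performance, reliability, and stability of the whole system.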

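1.2 GlusterFS Features

- Scales to several petabytes

- Handles thousands of clients

- POSIX-compatible

- Runs on commodity hardware; ordinary servers are enough

- Works on any backing file system that supports extended attributes, such as ext4 or XFS

- Accessible over industry-standard protocols such as NFS and SMB

- Provides advanced features such as replication, quotas, geo-replication, snapshots, and bitrot detection

- Can be tuned for different workloads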

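1.3 GlusterFS Volume Types

GlusterFS supports several volume types:

- Distributed (the default), i.e. DHT: each file is stored whole on one server node, chosen by a hash algorithm.

- Replicated, i.e. AFR: created with replica x; every file is copied to x nodes.

- Striped: created with stripe x; each file is split into chunks that are spread across x nodes (similar to RAID 0).

- Distributed striped: needs at least 4 servers. Created with stripe 2 on 4 nodes; a combination of DHT and Striped.

- Distributed replicated: needs at least 4 servers. Created with replica 2 on 4 nodes; a combination of DHT and AFR.

- Striped replicated: needs at least 4 servers. Created with stripe 2 replica 2 on 4 nodes; a combination of Striped and AFR.

- All three combined: needs at least 8 servers. Created with stripe 2 replica 2; every 4 nodes form one group.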
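The server counts in this list follow from simple multiplication. As a rough sketch (the function names are made up for illustration and are not part of any GlusterFS tool), the minimum brick count and the usable capacity of a layout can be estimated like this, assuming one brick per server and equally sized bricks:

```python
def min_bricks(distribute=1, stripe=1, replica=1):
    """Smallest brick count for a layout: one brick per (subvolume, stripe, replica) slot."""
    for name, value in (("distribute", distribute), ("stripe", stripe), ("replica", replica)):
        if value < 1:
            raise ValueError(f"{name} must be >= 1, got {value}")
    return distribute * stripe * replica


def usable_capacity_gib(brick_size_gib, bricks, replica=1):
    """Usable space with equally sized bricks: replication stores every byte `replica` times."""
    if replica < 1 or bricks % replica != 0:
        raise ValueError("brick count must be a positive multiple of the replica count")
    return brick_size_gib * bricks / replica


if __name__ == "__main__":
    print(min_bricks(distribute=2, replica=2))            # distributed replicated
    print(min_bricks(stripe=2, replica=2))                # striped replicated
    print(min_bricks(distribute=2, stripe=2, replica=2))  # all three combined
    print(usable_capacity_gib(10, bricks=3, replica=3))   # three 10 GiB bricks, replica 3
```

With replica 3 on three 10 GiB disks, as used later in this post, the estimate is 10 GiB before LVM and file system overhead.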

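2. Heketi Overview

Heketi is a framework that exposes a RESTful API for managing GlusterFS volumes. It enables dynamic storage provisioning on cloud platforms such as Kubernetes, OpenShift, and OpenStack, and it can manage multiple GlusterFS clusters, which simplifies administration. In a Kubernetes cluster, the storage request is forwarded to Heketi, and Heketi then drives the GlusterFS cluster to create the matching volume. Heketi selects the bricks for each volume dynamically, so that replicas are spread across different failure domains of the cluster. Because it supports any number of GlusterFS clusters, the cloud servers it serves are not tied to a single GlusterFS cluster.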

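3. Deploying Heketi + GlusterFS

Environment: Kubernetes 1.16.2 (the latest release at the time of writing) installed with kubeadm, consisting of 1 master and 2 worker nodes, with flannel as the network plugin. kubeadm taints the master by default; because this setup needs all three nodes to run GlusterFS, the taint is removed manually first, as the commands below show.

The GlusterFS volume type used in this post is replicated.

GlusterFS must also run privileged in the Kubernetes cluster, which requires kube-apiserver to be started with --allow-privileged=true. This kubeadm version enables it by default.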

[root@k8s-master-01 ~]# kubectl describe nodes k8s-master-01 |grep Taint

Taints: node-role.kubernetes.io/master:NoSchedule

[root@k8s-master-01 ~]# kubectl taint node k8s-master-01 node-role.kubernetes.io/master-

node/k8s-master-01 untainted

[root@k8s-master-01 ~]# kubectl describe nodes k8s-master-01 |grep Taint

Taints: <none>

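3.1 Preparation

For pods to use GlusterFS as their back-end storage, the GlusterFS client tools must be installed in advance on every node that runs such pods. Other storage back ends have similar requirements.

3.1.1 Install the GlusterFS client on all nodes

$ yum install -y glusterfs glusterfs-fuse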

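3.1.2 Label the nodes

The Kubernetes nodes that will run GlusterFS need a label, because GlusterFS is deployed as a DaemonSet. By default a DaemonSet runs a pod on every node; with a node selector, only nodes carrying the matching label get one.

The DaemonSet in the installation manifests selects nodes labeled storagenode=glusterfs, so label the nodes before deploying.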

[root@k8s-master-01 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master-01 Ready master 5d v1.16.2

k8s-node-01 Ready <none> 4d23h v1.16.2

k8s-node-02 Ready <none> 4d23h v1.16.2

[root@k8s-master-01 ~]# kubectl label node k8s-master-01 storagenode=glusterfs

node/k8s-master-01 labeled

[root@k8s-master-01 ~]# kubectl label node k8s-node-01 storagenode=glusterfs

node/k8s-node-01 labeled

[root@k8s-master-01 ~]# kubectl label node k8s-node-02 storagenode=glusterfs

node/k8s-node-02 labeled

[root@k8s-master-01 ~]# kubectl get nodes --show-labels

NAME STATUS ROLES AGE VERSION LABELS

k8s-master-01 Ready master 5d v1.16.2 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-master-01,kubernetes.io/os=linux,node-role.kubernetes.io/master=,storagenode=glusterfs

k8s-node-01 Ready <none> 4d23h v1.16.2 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-node-01,kubernetes.io/os=linux,storagenode=glusterfs

k8s-node-02 Ready <none> 4d23h v1.16.2 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-node-02,kubernetes.io/os=linux,storagenode=glusterfs

3.1.3 Load the required kernel modules on all nodes

$ modprobe dm_snapshot

$ modprobe dm_mirror

$ modprobe dm_thin_pool

Check that they are loaded:

$ lsmod | grep dm_snapshot

$ lsmod | grep dm_mirror

$ lsmod | grep dm_thin_pool
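The three lsmod checks can also be done in one pass. The sketch below is a hypothetical helper, not part of the original setup. It reads /proc/modules, the file lsmod itself reads, and it assumes the modules are built as loadable modules, since modules compiled into the kernel do not appear there:

```python
REQUIRED = ("dm_snapshot", "dm_mirror", "dm_thin_pool")


def missing_modules(proc_modules_text, required=REQUIRED):
    """Return the required modules that are absent from /proc/modules-style text."""
    # The first whitespace-separated field of each line is the module name.
    loaded = {line.split()[0] for line in proc_modules_text.splitlines() if line.strip()}
    return [name for name in required if name not in loaded]


if __name__ == "__main__":
    try:
        with open("/proc/modules") as handle:
            text = handle.read()
    except OSError:
        text = ""  # not on Linux, or /proc is unavailable
    print("missing:", missing_modules(text))
```

Note that modprobe only loads a module until the next reboot. On systemd-based distributions the usual way to make it persistent is to list the module names in a file under /etc/modules-load.d/.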

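3.2 Create the GlusterFS cluster

GlusterFS is deployed in containers here, although a traditional (non-containerized) installation works too. In production it is better to run the GlusterFS cluster outside the Kubernetes cluster and then simply create the corresponding Endpoints for it. This post uses a DaemonSet, so that every labeled node runs one GlusterFS pod, and each of those nodes has a disk to contribute as storage.

3.2.1 Download the installation files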

[root@k8s-master-01 glusterfs]# pwd

/root/manifests/glusterfs

[root@k8s-master-01 glusterfs]# wget https://github.com/heketi/heketi/releases/download/v7.0.0/heketi-client-v7.0.0.linux.amd64.tar.gz

[root@k8s-master-01 glusterfs]# tar xf heketi-client-v7.0.0.linux.amd64.tar.gz

[root@k8s-master-01 glusterfs]# cd heketi-client/share/heketi/kubernetes/

[root@k8s-master-01 kubernetes]# pwd

/root/manifests/glusterfs/heketi-client/share/heketi/kubernetes

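On this cluster, the DaemonSet used below and the Deployments used later are served from the apps/v1 API, so the downloaded JSON files must be edited by hand before they are applied: change the apiVersion, and add the selector that apps/v1 requires. Otherwise kubectl fails as follows: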

[root@k8s-master-01 kubernetes]# kubectl apply -f glusterfs-daemonset.json

error: unable to recognize "glusterfs-daemonset.json": no matches for kind "DaemonSet" in version "extensions/v1beta1"

Change the API version from

"apiVersion": "extensions/v1beta1"

to apps/v1:

"apiVersion": "apps/v1",

Then add a selector. Without it, validation fails:

[root@k8s-master-01 kubernetes]# kubectl apply -f glusterfs-daemonset.json

error: error validating "glusterfs-daemonset.json": error validating data: ValidationError(DaemonSet.spec): missing required field "selector" in io.k8s.api.apps.v1.DaemonSetSpec; if you choose to ignore these errors, turn validation off with --validate=false

The selector must match the pod template labels defined further down in the file; link them with matchLabels:

"spec": {"selector": {"matchLabels": {"glusterfs-node": "daemonset"}},

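The two manual edits (apiVersion and selector) can also be scripted. The following is a minimal sketch, not part of the Heketi distribution: it assumes a manifest is either a single object or a `kind: List` wrapper with `items`, and it copies all pod template labels into `spec.selector.matchLabels`, which is a stricter selector than the single label used in this post. The function names are hypothetical.

```python
import json

WORKLOAD_KINDS = {"DaemonSet", "Deployment"}


def upgrade_workload(obj):
    """Rewrite one DaemonSet/Deployment dict in place for the apps/v1 API."""
    if obj.get("kind") not in WORKLOAD_KINDS:
        return obj  # Services, Secrets, etc. are left untouched
    if obj.get("apiVersion") == "extensions/v1beta1":
        obj["apiVersion"] = "apps/v1"
    spec = obj.setdefault("spec", {})
    if "selector" not in spec:
        labels = spec.get("template", {}).get("metadata", {}).get("labels", {})
        if not labels:
            raise ValueError(f"{obj.get('kind')} has no template labels to select on")
        # apps/v1 requires spec.selector, and it must match the template labels.
        spec["selector"] = {"matchLabels": dict(labels)}
    return obj


def upgrade_manifest(doc):
    """Handle both a single object and a `kind: List` wrapper."""
    if doc.get("kind") == "List":
        for item in doc.get("items", []):
            upgrade_workload(item)
    else:
        upgrade_workload(doc)
    return doc


if __name__ == "__main__":
    # Trimmed-down stand-in for glusterfs-daemonset.json.
    sample = {
        "kind": "DaemonSet",
        "apiVersion": "extensions/v1beta1",
        "metadata": {"name": "glusterfs"},
        "spec": {"template": {"metadata": {"labels": {"glusterfs-node": "daemonset"}}}},
    }
    print(json.dumps(upgrade_manifest(sample), indent=2))
```

To use it on a real file, load the file with json.load, pass the result through upgrade_manifest, and write it back with json.dump before running kubectl apply.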
3.2.2 Create the cluster

[root@k8s-master-01 kubernetes]# kubectl apply -f glusterfs-daemonset.json

daemonset.apps/glusterfs created

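Notes:

- The default mount configuration is used here; a different disk can be used as the GlusterFS working directory.

- The resources are created in the default namespace; a different namespace can be specified manually.

3.2.3 Check the GlusterFS pods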

[root@k8s-master-01 kubernetes]# kubectl get pods

NAME READY STATUS RESTARTS AGE

glusterfs-9tttf 1/1 Running 0 1m10s

glusterfs-gnrnr 1/1 Running 0 1m10s

glusterfs-v92j5 1/1 Running 0 1m10s

3.3 Create the Heketi service

3.3.1 Create the Heketi service account

[root@k8s-master-01 kubernetes]# cat heketi-service-account.json

{
  "apiVersion": "v1",
  "kind": "ServiceAccount",
  "metadata": {
    "name": "heketi-service-account"
  }
}

[root@k8s-master-01 kubernetes]# kubectl apply -f heketi-service-account.json

serviceaccount/heketi-service-account created

[root@k8s-master-01 kubernetes]# kubectl get sa

NAME SECRETS AGE

default 1 71m

heketi-service-account 1 5s

3.3.2 Create the role binding and secret for Heketi

[root@k8s-master-01 kubernetes]# kubectl create clusterrolebinding heketi-gluster-admin --clusterrole=edit --serviceaccount=default:heketi-service-account

clusterrolebinding.rbac.authorization.k8s.io/heketi-gluster-admin created

[root@k8s-master-01 kubernetes]# kubectl create secret generic heketi-config-secret --from-file=./heketi.json

secret/heketi-config-secret created

3.3.3 Bootstrap Heketi

As before, change the API version and add the selector.

[root@k8s-master-01 kubernetes]# vim heketi-bootstrap.json

..."kind": "Deployment","apiVersion": "apps/v1"

..."spec": {"selector": {"matchLabels": {"name": "deploy-heketi" }},

...

[root@k8s-master-01 kubernetes]# kubectl create -f heketi-bootstrap.json

service/deploy-heketi created

deployment.apps/deploy-heketi created

[root@k8s-master-01 kubernetes]# vim heketi-deployment.json

..."kind": "Deployment","apiVersion": "apps/v1",

..."spec": {"selector": {"matchLabels": {"name": "heketi"}},"replicas": 1,

...

[root@k8s-master-01 kubernetes]# kubectl apply -f heketi-deployment.json

secret/heketi-db-backup created

service/heketi created

deployment.apps/heketi created

[root@k8s-master-01 kubernetes]# kubectl get pods

NAME READY STATUS RESTARTS AGE

deploy-heketi-6c687b4b84-p7mcr 1/1 Running 0 72s

heketi-68795ccd8-9726s 0/1 ContainerCreating 0 50s

glusterfs-9tttf 1/1 Running 0 48m

glusterfs-gnrnr 1/1 Running 0 48m

glusterfs-v92j5 1/1 Running 0 48m

3.4 Create the GlusterFS cluster through Heketi

3.4.1 Copy the binary

Copy heketi-cli to /usr/local/bin:

[root@k8s-master-01 heketi-client]# pwd

/root/manifests/glusterfs/heketi-client

[root@k8s-master-01 heketi-client]# cp bin/heketi-cli /usr/local/bin/

[root@k8s-master-01 heketi-client]# heketi-cli -v

heketi-cli v7.0.0

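3.4.2 Configure topology-sample

Edit topology-sample.json: manage is the hostname of the node running the GlusterFS management service, storage is the node's IP address, and devices lists the raw block devices on the node. The disks that provide storage should preferably be raw, unpartitioned devices.

Each GlusterFS node therefore needs a fresh disk prepared in advance. Here a 10G /dev/sdb was added to each of the three nodes.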

[root@k8s-master-01 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 50G 0 disk

├─sda1 8:1 0 2G 0 part /boot

└─sda2 8:2 0 48G 0 part

  ├─centos-root 253:0 0 44G 0 lvm /

  └─centos-swap 253:1 0 4G 0 lvm

sdb 8:16 0 10G 0 disk

sr0 11:0 1 1024M 0 rom

[root@k8s-node-01 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 50G 0 disk

├─sda1 8:1 0 2G 0 part /boot

└─sda2 8:2 0 48G 0 part

  ├─centos-root 253:0 0 44G 0 lvm /

  └─centos-swap 253:1 0 4G 0 lvm

sdb 8:16 0 10G 0 disk

sr0 11:0 1 1024M 0 rom

[root@k8s-node-02 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 50G 0 disk

├─sda1 8:1 0 2G 0 part /boot

└─sda2 8:2 0 48G 0 part

  ├─centos-root 253:0 0 44G 0 lvm /

  └─centos-swap 253:1 0 4G 0 lvm

sdb 8:16 0 10G 0 disk

sr0 11:0 1 1024M 0 rom

The topology-sample.json used here:

{
  "clusters": [
    {
      "nodes": [
        {
          "node": {
            "hostnames": {
              "manage": ["k8s-master-01"],
              "storage": ["192.168.2.10"]
            },
            "zone": 1
          },
          "devices": [
            {"name": "/dev/sdb", "destroydata": false}
          ]
        },
        {
          "node": {
            "hostnames": {
              "manage": ["k8s-node-01"],
              "storage": ["192.168.2.11"]
            },
            "zone": 1
          },
          "devices": [
            {"name": "/dev/sdb", "destroydata": false}
          ]
        },
        {
          "node": {
            "hostnames": {
              "manage": ["k8s-node-02"],
              "storage": ["192.168.2.12"]
            },
            "zone": 1
          },
          "devices": [
            {"name": "/dev/sdb", "destroydata": false}
          ]
        }
      ]
    }
  ]
}
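Because the topology file repeats the same structure for every node, it can be generated instead of edited by hand. A minimal sketch, assuming a single cluster and the same zone for all nodes; build_topology is a hypothetical helper, and the node list is the one from this post:

```python
import json


def build_topology(nodes, zone=1):
    """Build a Heketi topology dict from (manage_hostname, storage_ip, [devices]) tuples."""
    entries = []
    for hostname, ip, devices in nodes:
        if not devices:
            raise ValueError(f"{hostname}: at least one device is required")
        entries.append({
            "node": {
                "hostnames": {"manage": [hostname], "storage": [ip]},
                "zone": zone,
            },
            # destroydata stays false so existing data on a device is never wiped silently.
            "devices": [{"name": dev, "destroydata": False} for dev in devices],
        })
    return {"clusters": [{"nodes": entries}]}


if __name__ == "__main__":
    nodes = [
        ("k8s-master-01", "192.168.2.10", ["/dev/sdb"]),
        ("k8s-node-01", "192.168.2.11", ["/dev/sdb"]),
        ("k8s-node-02", "192.168.2.12", ["/dev/sdb"]),
    ]
    print(json.dumps(build_topology(nodes), indent=2))
```

Redirecting the output to topology-sample.json gives a file equivalent to the one shown above.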

3.4.3 Get the current Heketi ClusterIP

Look up the ClusterIP of the Heketi service and export it as an environment variable:

[root@k8s-master-01 kubernetes]# kubectl get svc|grep heketi

deploy-heketi ClusterIP 10.1.241.99 <none> 8080/TCP 3m18s

[root@k8s-master-01 kubernetes]# curl http://10.1.241.99:8080/hello

Hello from Heketi

[root@k8s-master-01 kubernetes]# export HEKETI_CLI_SERVER=http://10.1.241.99:8080

[root@k8s-master-01 kubernetes]# echo $HEKETI_CLI_SERVER

http://10.1.241.99:8080

3.4.4 Create the GlusterFS cluster with Heketi

Running the following command fails with Invalid JWT token: Token missing iss claim:

[root@k8s-master-01 kubernetes]# heketi-cli topology load --json=topology-sample.json

Error: Unable to get topology information: Invalid JWT token: Token missing iss claim

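This is because newer versions of Heketi require the user name and secret to be passed explicitly when creating the cluster; the values are the ones configured in heketi.json: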

[root@k8s-master-01 kubernetes]# heketi-cli -s $HEKETI_CLI_SERVER --user admin --secret 'My Secret' topology load --json=topology-sample.json

Creating cluster ... ID: 1c5ffbd86847e5fc1562ef70c033292e
    Allowing file volumes on cluster.
    Allowing block volumes on cluster.
    Creating node k8s-master-01 ... ID: b6100a5af9b47d8c1f19be0b2b4d8276
        Adding device /dev/sdb ... OK
    Creating node k8s-node-01 ... ID: 04740cac8d42f56e354c94bdbb7b8e34
        Adding device /dev/sdb ... OK
    Creating node k8s-node-02 ... ID: 1b33ad0dba20eaf23b5e3a4845e7cdb4
        Adding device /dev/sdb ... OK
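Some background on the "missing iss claim" error: as far as the Heketi API documentation describes it, every request carries a JWT signed with HS256 using the user's secret, with the claims iss (the user name), iat, exp, and qsh (a SHA-256 hash of the HTTP method and path joined by "&"). heketi-cli builds this token from --user and --secret. The standard-library sketch below illustrates that scheme; the function name and the 300-second lifetime are my own choices, and the exact claim set should be checked against the Heketi version in use.

```python
import base64
import hashlib
import hmac
import json
import time


def _b64url(data: bytes) -> str:
    """Base64url without padding, as used by JWT."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def heketi_token(user, secret, method, path, now=None, ttl=300):
    """Build an HS256-signed JWT with the claims Heketi is documented to expect."""
    now = int(time.time()) if now is None else int(now)
    header = {"alg": "HS256", "typ": "JWT"}
    claims = {
        "iss": user,        # the claim the error message complains about
        "iat": now,
        "exp": now + ttl,
        "qsh": hashlib.sha256(f"{method}&{path}".encode()).hexdigest(),
    }
    signing_input = ".".join(
        _b64url(json.dumps(part, separators=(",", ":")).encode())
        for part in (header, claims)
    )
    signature = hmac.new(secret.encode(), signing_input.encode(), hashlib.sha256).digest()
    return f"{signing_input}.{_b64url(signature)}"


if __name__ == "__main__":
    token = heketi_token("admin", "My Secret", "GET", "/clusters")
    print("Authorization: bearer " + token)
```

A client without --user and --secret has no way to produce the iss claim, which matches the error shown above.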

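After heketi-cli topology load runs, Heketi does roughly the following on the servers:

- The nodes are joined into one trusted storage pool (TSP): running gluster peer status inside any glusterfs pod shows that the other peers have been added.

- On each node running a glusterfs pod, a VG is created automatically from the raw disk device listed in topology-sample.json.

- Each disk device becomes one VG; PVCs created later are carved out of that VG as LVs.

- heketi-cli topology info shows the topology: the ID of each disk device, the ID of the corresponding VG, and the total, used, and free space.

Part of the Heketi log shows these steps: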

[root@k8s-master-01 manifests]# kubectl logs -f deploy-heketi-6c687b4b84-l5b6j

...

[kubeexec] DEBUG 2019/10/23 02:17:44 heketi/pkg/remoteexec/log/commandlog.go:46:log.(*CommandLogger).Success: Ran command [pvs -o pv_name,pv_uuid,vg_name --reportformat=json /dev/sdb] on [pod:glusterfs-lrdz7 c:glusterfs ns:default (from host:k8s-node-01 selector:glusterfs-node)]: Stdout [ {"report": [{"pv": [{"pv_name":"/dev/sdb", "pv_uuid":"1UkSIV-RYt1-QBNw-KyAR-Drm5-T9NG-UmO313", "vg_name":"vg_398329cc70361dfd4baa011d811de94a"}]}]}

]: Stderr [ WARNING: Device /dev/sdb not initialized in udev database even after waiting 10000000 microseconds.
  WARNING: Device /dev/centos/root not initialized in udev database even after waiting 10000000 microseconds.
  WARNING: Device /dev/sda1 not initialized in udev database even after waiting 10000000 microseconds.
  WARNING: Device /dev/centos/swap not initialized in udev database even after waiting 10000000 microseconds.
  WARNING: Device /dev/sda2 not initialized in udev database even after waiting 10000000 microseconds.
  WARNING: Device /dev/sdb not initialized in udev database even after waiting 10000000 microseconds.

]

[kubeexec] DEBUG 2019/10/23 02:17:44 heketi/pkg/remoteexec/log/commandlog.go:34:log.(*CommandLogger).Before: Will run command [udevadm info --query=symlink --name=/dev/sdb] on [pod:glusterfs-lrdz7 c:glusterfs ns:default (from host:k8s-node-01 selector:glusterfs-node)]

[kubeexec] DEBUG 2019/10/23 02:17:44 heketi/pkg/remoteexec/kube/exec.go:72:kube.ExecCommands: Current kube connection count: 0

[kubeexec] DEBUG 2019/10/23 02:17:44 heketi/pkg/remoteexec/log/commandlog.go:46:log.(*CommandLogger).Success: Ran command [udevadm info --query=symlink --name=/dev/sdb] on [pod:glusterfs-lrdz7 c:glusterfs ns:default (from host:k8s-node-01 selector:glusterfs-node)]: Stdout [

]: Stderr []

[kubeexec] DEBUG 2019/10/23 02:17:44 heketi/pkg/remoteexec/log/commandlog.go:34:log.(*CommandLogger).Before: Will run command [vgdisplay -c vg_398329cc70361dfd4baa011d811de94a] on [pod:glusterfs-lrdz7 c:glusterfs ns:default (from host:k8s-node-01 selector:glusterfs-node)]

[kubeexec] DEBUG 2019/10/23 02:17:44 heketi/pkg/remoteexec/kube/exec.go:72:kube.ExecCommands: Current kube connection count: 0

[negroni] 2019-10-23T02:17:44Z | 200 | 93.868µs | 10.1.241.99:8080 | GET /queue/3d0b6edb0faa67e8efd752397f314a6f

[kubeexec] DEBUG 2019/10/23 02:17:44 heketi/pkg/remoteexec/log/commandlog.go:46:log.(*CommandLogger).Success: Ran command [vgdisplay -c vg_398329cc70361dfd4baa011d811de94a] on [pod:glusterfs-lrdz7 c:glusterfs ns:default (from host:k8s-node-01 selector:glusterfs-node)]: Stdout [ vg_398329cc70361dfd4baa011d811de94a:r/w:772:-1:0:0:0:-1:0:1:1:10350592:4096:2527:0:2527:YCPG9X-b270-1jf2-VwKX-ycpZ-OI9u-7ZidOc

]: Stderr []

[cmdexec] DEBUG 2019/10/23 02:17:44 heketi/executors/cmdexec/device.go:273:cmdexec.(*CmdExecutor).getVgSizeFromNode: /dev/sdb in k8s-node-01 has TotalSize:10350592, FreeSize:10350592, UsedSize:0

[heketi] INFO 2019/10/23 02:17:44 Added device /dev/sdb

[asynchttp] INFO 2019/10/23 02:17:44 Completed job 3d0b6edb0faa67e8efd752397f314a6f in 3m2.694238221s

[negroni] 2019-10-23T02:17:45Z | 204 | 105.23µs | 10.1.241.99:8080 | GET /queue/3d0b6edb0faa67e8efd752397f314a6f

[cmdexec] INFO 2019/10/23 02:17:45 Check Glusterd service status in node k8s-node-01

[kubeexec] DEBUG 2019/10/23 02:17:45 heketi/pkg/remoteexec/log/commandlog.go:34:log.(*CommandLogger).Before: Will run command [systemctl status glusterd] on [pod:glusterfs-lrdz7 c:glusterfs ns:default (from host:k8s-node-01 selector:glusterfs-node)]

[kubeexec] DEBUG 2019/10/23 02:17:45 heketi/pkg/remoteexec/kube/exec.go:72:kube.ExecCommands: Current kube connection count: 0

[kubeexec] DEBUG 2019/10/23 02:17:45 heketi/pkg/remoteexec/log/commandlog.go:41:log.(*CommandLogger).Success: Ran command [systemctl status glusterd] on [pod:glusterfs-lrdz7 c:glusterfs ns:default (from host:k8s-node-01 selector:glusterfs-node)]: Stdout filtered, Stderr filtered

[heketi] INFO 2019/10/23 02:17:45 Adding node k8s-node-02

[negroni] 2019-10-23T02:17:45Z | 202 | 146.998544ms | 10.1.241.99:8080 | POST /nodes

[asynchttp] INFO 2019/10/23 02:17:45 Started job 8da70b6fd6fec1d61c4ba1cd0fe27fe5

[cmdexec] INFO 2019/10/23 02:17:45 Probing: k8s-node-01 -> 192.168.2.12

[negroni] 2019-10-23T02:17:45Z | 200 | 74.577µs | 10.1.241.99:8080 | GET /queue/8da70b6fd6fec1d61c4ba1cd0fe27fe5

[kubeexec] DEBUG 2019/10/23 02:17:45 heketi/pkg/remoteexec/log/commandlog.go:34:log.(*CommandLogger).Before: Will run command [gluster --mode=script --timeout=600 peer probe 192.168.2.12] on [pod:glusterfs-lrdz7 c:glusterfs ns:default (from host:k8s-node-01 selector:glusterfs-node)]

[kubeexec] DEBUG 2019/10/23 02:17:45 heketi/pkg/remoteexec/kube/exec.go:72:kube.ExecCommands: Current kube connection count: 0

[negroni] 2019-10-23T02:17:46Z | 200 | 79.893µs | 10.1.241.99:8080 | GET /queue/8da70b6fd6fec1d61c4ba1cd0fe27fe5

[kubeexec] DEBUG 2019/10/23 02:17:46 heketi/pkg/remoteexec/log/commandlog.go:46:log.(*CommandLogger).Success: Ran command [gluster --mode=script --timeout=600 peer probe 192.168.2.12] on [pod:glusterfs-lrdz7 c:glusterfs ns:default (from host:k8s-node-01 selector:glusterfs-node)]: Stdout [peer probe: success.

]: Stderr []

[cmdexec] INFO 2019/10/23 02:17:46 Setting snapshot limit

[kubeexec] DEBUG 2019/10/23 02:17:46 heketi/pkg/remoteexec/log/commandlog.go:34:log.(*CommandLogger).Before: Will run command [gluster --mode=script --timeout=600 snapshot config snap-max-hard-limit 14] on [pod:glusterfs-lrdz7 c:glusterfs ns:default (from host:k8s-node-01 selector:glusterfs-node)]

[kubeexec] DEBUG 2019/10/23 02:17:46 heketi/pkg/remoteexec/kube/exec.go:72:kube.ExecCommands: Current kube connection count: 0

[kubeexec] DEBUG 2019/10/23 02:17:46 heketi/pkg/remoteexec/log/commandlog.go:46:log.(*CommandLogger).Success: Ran command [gluster --mode=script --timeout=600 snapshot config snap-max-hard-limit 14] on [pod:glusterfs-lrdz7 c:glusterfs ns:default (from host:k8s-node-01 selector:glusterfs-node)]: Stdout [snapshot config: snap-max-hard-limit for System set successfully

]: Stderr []

[heketi] INFO 2019/10/23 02:17:46 Added node 1b33ad0dba20eaf23b5e3a4845e7cdb4

[asynchttp] INFO 2019/10/23 02:17:46 Completed job 8da70b6fd6fec1d61c4ba1cd0fe27fe5 in 488.404011ms

[negroni] 2019-10-23T02:17:46Z | 303 | 80.712µs | 10.1.241.99:8080 | GET /queue/8da70b6fd6fec1d61c4ba1cd0fe27fe5

[negroni] 2019-10-23T02:17:46Z | 200 | 242.595µs | 10.1.241.99:8080 | GET /nodes/1b33ad0dba20eaf23b5e3a4845e7cdb4

[heketi] INFO 2019/10/23 02:17:46 Adding device /dev/sdb to node 1b33ad0dba20eaf23b5e3a4845e7cdb4

[negroni] 2019-10-23T02:17:46Z | 202 | 696.018µs | 10.1.241.99:8080 | POST /devices

[asynchttp] INFO 2019/10/23 02:17:46 Started job 21af2069b74762a5521a46e2b52e7d6a

[negroni] 2019-10-23T02:17:46Z | 200 | 82.354µs | 10.1.241.99:8080 | GET /queue/21af2069b74762a5521a46e2b52e7d6a

[kubeexec] DEBUG 2019/10/23 02:17:46 heketi/pkg/remoteexec/log/commandlog.go:34:log.(*CommandLogger).Before: Will run command [pvcreate -qq --metadatasize=128M --dataalignment=256K '/dev/sdb'] on [pod:glusterfs-l2lsv c:glusterfs ns:default (from host:k8s-node-02 selector:glusterfs-node)]

[kubeexec] DEBUG 2019/10/23 02:17:46 heketi/pkg/remoteexec/kube/exec.go:72:kube.ExecCommands: Current kube connection count: 0

...
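The vgdisplay -c line in the log above is how Heketi learns the device size. Its colon-separated fields are described in the vgdisplay man page; the sketch below decodes them and reproduces the TotalSize and FreeSize values that Heketi logs. The field names in VG_FIELDS are my own shorthand, not LVM identifiers.

```python
# Field order of `vgdisplay -c`, following the vgdisplay man page.
VG_FIELDS = (
    "name", "access", "status", "number", "max_lv", "cur_lv", "open_lv",
    "max_lv_size", "max_pv", "cur_pv", "act_pv", "size_kib",
    "pe_size_kib", "total_pe", "alloc_pe", "free_pe", "uuid",
)


def parse_vgdisplay_c(line):
    """Parse one `vgdisplay -c` line into a dict with derived used/free sizes in KiB."""
    parts = line.strip().split(":")
    if len(parts) != len(VG_FIELDS):
        raise ValueError(f"expected {len(VG_FIELDS)} fields, got {len(parts)}")
    vg = dict(zip(VG_FIELDS, parts))
    for key in ("size_kib", "pe_size_kib", "total_pe", "alloc_pe", "free_pe"):
        vg[key] = int(vg[key])
    # Sizes are counted in physical extents (PEs) of pe_size_kib each.
    vg["free_kib"] = vg["free_pe"] * vg["pe_size_kib"]
    vg["used_kib"] = vg["alloc_pe"] * vg["pe_size_kib"]
    return vg


if __name__ == "__main__":
    line = ("vg_398329cc70361dfd4baa011d811de94a:r/w:772:-1:0:0:0:-1:0:1:1:"
            "10350592:4096:2527:0:2527:YCPG9X-b270-1jf2-VwKX-ycpZ-OI9u-7ZidOc")
    vg = parse_vgdisplay_c(line)
    print(vg["name"], vg["size_kib"], vg["free_kib"], vg["used_kib"])
```

Here 2527 extents of 4096 KiB give 10350592 KiB, slightly less than the raw 10G disk because LVM metadata takes some space.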

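3.4.5 Persist the Heketi configuration

The Heketi instance created above has no persistent volume, so its configuration could be lost if the Heketi pod restarts. The next step creates a persistent volume for the Heketi data. It uses the dynamic storage provided by GlusterFS itself, although other persistence methods work as well.

Install device-mapper* on all nodes:

yum install -y device-mapper*

Save the configuration to a file and create the persistence resources: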

[root@k8s-master-01 kubernetes]# heketi-cli -s $HEKETI_CLI_SERVER --user admin --secret 'My Secret' setup-openshift-heketi-storage

Saving heketi-storage.json

[root@k8s-master-01 kubernetes]# kubectl apply -f heketi-storage.json

secret/heketi-storage-secret created

endpoints/heketi-storage-endpoints created

service/heketi-storage-endpoints created

job.batch/heketi-storage-copy-job created

Delete the intermediate resources:

[root@k8s-master-01 kubernetes]# kubectl delete all,svc,jobs,deployment,secret --selector="deploy-heketi"

pod "deploy-heketi-6c687b4b84-l5b6j" deleted

service "deploy-heketi" deleted

deployment.apps "deploy-heketi" deleted

replicaset.apps "deploy-heketi-6c687b4b84" deleted

job.batch "heketi-storage-copy-job" deleted

secret "heketi-storage-secret" deleted

Create the persistent Heketi:

[root@k8s-master-01 kubernetes]# kubectl apply -f heketi-deployment.json

secret/heketi-db-backup created

service/heketi created

deployment.apps/heketi created

[root@k8s-master-01 kubernetes]# kubectl get pods

NAME READY STATUS RESTARTS AGE

glusterfs-cqw5d 1/1 Running 0 41m

glusterfs-l2lsv 1/1 Running 0 41m

glusterfs-lrdz7 1/1 Running 0 41m

heketi-68795ccd8-m8x55 1/1 Running 0 32s

Check the service of the persistent Heketi and re-export the environment variable:

[root@k8s-master-01 kubernetes]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

heketi ClusterIP 10.1.45.61 <none> 8080/TCP 2m9s

heketi-storage-endpoints ClusterIP 10.1.26.73 <none> 1/TCP 4m58s

kubernetes ClusterIP 10.1.0.1 <none> 443/TCP 14h

[root@k8s-master-01 kubernetes]# export HEKETI_CLI_SERVER=http://10.1.45.61:8080

[root@k8s-master-01 kubernetes]# curl http://10.1.45.61:8080/hello

Hello from Heketi

View the GlusterFS cluster information; see the official documentation for more operations:

[root@k8s-master-01 kubernetes]# heketi-cli -s $HEKETI_CLI_SERVER --user admin --secret 'My Secret' topology info

Cluster Id: 1c5ffbd86847e5fc1562ef70c033292e

    File: true
    Block: true

    Volumes:

    Name: heketidbstorage
    Size: 2
    Id: b25f4b627cf66279bfe19e8a01e9e85d
    Cluster Id: 1c5ffbd86847e5fc1562ef70c033292e
    Mount: 192.168.2.11:heketidbstorage
    Mount Options: backup-volfile-servers=192.168.2.12,192.168.2.10
    Durability Type: replicate
    Replica: 3
    Snapshot: Disabled

        Bricks:
            Id: 3ab6c19b8fe0112575ba04d58573a404
            Path: /var/lib/heketi/mounts/vg_703e3662cbd8ffb24a6401bb3c3c41fa/brick_3ab6c19b8fe0112575ba04d58573a404/brick
            Size (GiB): 2
            Node: b6100a5af9b47d8c1f19be0b2b4d8276
            Device: 703e3662cbd8ffb24a6401bb3c3c41fa

            Id: d1fa386f2ec9954f4517431163f67dea
            Path: /var/lib/heketi/mounts/vg_398329cc70361dfd4baa011d811de94a/brick_d1fa386f2ec9954f4517431163f67dea/brick
            Size (GiB): 2
            Node: 04740cac8d42f56e354c94bdbb7b8e34
            Device: 398329cc70361dfd4baa011d811de94a

            Id: d2b0ae26fa3f0eafba407b637ca0d06b
            Path: /var/lib/heketi/mounts/vg_7c791bbb90f710123ba431a7cdde8d0b/brick_d2b0ae26fa3f0eafba407b637ca0d06b/brick
            Size (GiB): 2
            Node: 1b33ad0dba20eaf23b5e3a4845e7cdb4
            Device: 7c791bbb90f710123ba431a7cdde8d0b

    Nodes:

    Node Id: 04740cac8d42f56e354c94bdbb7b8e34
    State: online
    Cluster Id: 1c5ffbd86847e5fc1562ef70c033292e
    Zone: 1
    Management Hostnames: k8s-node-01
    Storage Hostnames: 192.168.2.11
    Devices:
        Id:398329cc70361dfd4baa011d811de94a Name:/dev/sdb State:online Size (GiB):9 Used (GiB):2 Free (GiB):7
            Bricks:
                Id:d1fa386f2ec9954f4517431163f67dea Size (GiB):2 Path: /var/lib/heketi/mounts/vg_398329cc70361dfd4baa011d811de94a/brick_d1fa386f2ec9954f4517431163f67dea/brick

    Node Id: 1b33ad0dba20eaf23b5e3a4845e7cdb4
    State: online
    Cluster Id: 1c5ffbd86847e5fc1562ef70c033292e
    Zone: 1
    Management Hostnames: k8s-node-02
    Storage Hostnames: 192.168.2.12
    Devices:
        Id:7c791bbb90f710123ba431a7cdde8d0b Name:/dev/sdb State:online Size (GiB):9 Used (GiB):2 Free (GiB):7
            Bricks:
                Id:d2b0ae26fa3f0eafba407b637ca0d06b Size (GiB):2 Path: /var/lib/heketi/mounts/vg_7c791bbb90f710123ba431a7cdde8d0b/brick_d2b0ae26fa3f0eafba407b637ca0d06b/brick

    Node Id: b6100a5af9b47d8c1f19be0b2b4d8276
    State: online
    Cluster Id: 1c5ffbd86847e5fc1562ef70c033292e
    Zone: 1
    Management Hostnames: k8s-master-01
    Storage Hostnames: 192.168.2.10
    Devices:
        Id:703e3662cbd8ffb24a6401bb3c3c41fa Name:/dev/sdb State:online Size (GiB):9 Used (GiB):2 Free (GiB):7
            Bricks:
                Id:3ab6c19b8fe0112575ba04d58573a404 Size (GiB):2 Path: /var/lib/heketi/mounts/vg_703e3662cbd8ffb24a6401bb3c3c41fa/brick_3ab6c19b8fe0112575ba04d58573a404/brick

4. Create the StorageClass

[root@k8s-master-01 kubernetes]# vim storageclass-gfs-heketi.yaml

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: gluster-heketi
provisioner: kubernetes.io/glusterfs
reclaimPolicy: Retain
parameters:
  resturl: "http://10.1.45.61:8080"
  restauthenabled: "true"
  restuser: "admin"
  restuserkey: "My Secret"
  gidMin: "40000"
  gidMax: "50000"
  volumetype: "replicate:3"
allowVolumeExpansion: true

[root@k8s-master-01 kubernetes]# kubectl apply -f storageclass-gfs-heketi.yaml

storageclass.storage.k8s.io/gluster-heketi created

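Parameters:

- reclaimPolicy: the reclaim policy. The default is Delete; with Retain, deleting the PVC leaves the PV and the back-end volume and bricks (LVM) in place.

- gidMin and gidMax: the lowest and highest GID that may be used.

- volumetype: the volume type and count. A replicated volume is used here, and the count must be greater than 1.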
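Putting restuserkey directly in the StorageClass leaves the Heketi secret readable by anyone who can view StorageClasses. The in-tree kubernetes.io/glusterfs provisioner also accepts secretName and secretNamespace parameters that point to a Secret of type kubernetes.io/glusterfs whose key field holds the password. Below is a minimal sketch that builds both objects as JSON; the Secret name heketi-secret and the helper function names are assumptions for illustration, and the remaining values are copied from the StorageClass above.

```python
import base64
import json


def heketi_secret(name, namespace, key):
    """Secret in the shape the in-tree GlusterFS provisioner expects."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "kubernetes.io/glusterfs",
        # Values under `data` must be base64-encoded.
        "data": {"key": base64.b64encode(key.encode()).decode("ascii")},
    }


def storage_class(name, resturl, user, secret_name, secret_namespace):
    """StorageClass that references the Secret instead of embedding restuserkey."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": "kubernetes.io/glusterfs",
        "reclaimPolicy": "Retain",
        "allowVolumeExpansion": True,
        "parameters": {
            "resturl": resturl,
            "restauthenabled": "true",
            "restuser": user,
            "secretName": secret_name,
            "secretNamespace": secret_namespace,
            "gidMin": "40000",
            "gidMax": "50000",
            "volumetype": "replicate:3",
        },
    }


if __name__ == "__main__":
    bundle = {
        "apiVersion": "v1",
        "kind": "List",
        "items": [
            heketi_secret("heketi-secret", "default", "My Secret"),
            storage_class("gluster-heketi", "http://10.1.45.61:8080",
                          "admin", "heketi-secret", "default"),
        ],
    }
    print(json.dumps(bundle, indent=2))
```

The printed JSON can be piped into kubectl apply -f -, since kubectl accepts JSON as well as YAML.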

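5. Test dynamic provisioning with GlusterFS

Create a pod that uses a dynamically provisioned PV. The PVC's storageClassName is set to the name of the StorageClass created earlier, gluster-heketi: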

[root@k8s-master-01 kubernetes]# vim pod-use-pvc.yaml

apiVersion: v1
kind: Pod
metadata:
  name: pod-use-pvc
spec:
  containers:
  - name: pod-use-pvc
    image: busybox
    command:
    - sleep
    - "3600"
    volumeMounts:
    - name: gluster-volume
      mountPath: "/pv-data"
      readOnly: false
  volumes:
  - name: gluster-volume
    persistentVolumeClaim:
      claimName: pvc-gluster-heketi
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: pvc-gluster-heketi
spec:
  accessModes: [ "ReadWriteOnce" ]
  storageClassName: "gluster-heketi"
  resources:
    requests:
      storage: 1Gi

Create the pod and check the resulting PV and PVC:

[root@k8s-master-01 kubernetes]# kubectl apply -f pod-use-pvc.yaml

pod/pod-use-pvc created

persistentvolumeclaim/pvc-gluster-heketi created

[root@k8s-master-01 kubernetes]# kubectl get pv,pvc

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

persistentvolume/pvc-0fb9b246-4da4-491c-b6a2-4f38489ab11c 1Gi RWO Retain Bound default/pvc-gluster-heketi gluster-heketi 57s

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/pvc-gluster-heketi Bound pvc-0fb9b246-4da4-491c-b6a2-4f38489ab11c 1Gi RWO gluster-heketi 62s

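6. How Kubernetes creates the PV and PVC through Heketi

The PVC asks the StorageClass to create a matching PV. The details can be followed in the log of the Heketi pod.

After receiving the request, Heketi first starts a job that creates three bricks, with a directory for each on the three GlusterFS nodes: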

[heketi] INFO 2019/10/23 03:08:36 Allocating brick set #0

[negroni] 2019-10-23T03:08:36Z | 202 | 56.193603ms | 10.1.45.61:8080 | POST /volumes

[asynchttp] INFO 2019/10/23 03:08:36 Started job 3ec932315085609bc54ead6e3f6851e8

[heketi] INFO 2019/10/23 03:08:36 Started async operation: Create Volume

[heketi] INFO 2019/10/23 03:08:36 Trying Create Volume (attempt #1/5)

[heketi] INFO 2019/10/23 03:08:36 Creating brick 289fe032c1f4f9f211480e24c5d74a44

[heketi] INFO 2019/10/23 03:08:36 Creating brick a3172661ba1b849d67b500c93c3dd652

[heketi] INFO 2019/10/23 03:08:36 Creating brick 917e27a9dbc5395ebf08dff8d3401b43

[negroni] 2019-10-23T03:08:36Z | 200 | 72.083µs | 10.1.45.61:8080 | GET /queue/3ec932315085609bc54ead6e3f6851e8

[kubeexec] DEBUG 2019/10/23 03:08:36 heketi/pkg/remoteexec/log/commandlog.go:34:log.(*CommandLogger).Before: Will run command [mkdir -p /var/lib/heketi/mounts/vg_703e3662cbd8ffb24a6401bb3c3c41fa/brick_a3172661ba1b849d67b500c93c3dd652] on [pod:glusterfs-cqw5d c:glusterfs ns:default (from host:k8s-master-01 selector:glusterfs-node)]

[kubeexec] DEBUG 2019/10/23 03:08:36 heketi/pkg/remoteexec/kube/exec.go:72:kube.ExecCommands: Current kube connection count: 0

[kubeexec] DEBUG 2019/10/23 03:08:36 heketi/pkg/remoteexec/log/commandlog.go:34:log.(*CommandLogger).Before: Will run command [mkdir -p /var/lib/heketi/mounts/vg_398329cc70361dfd4baa011d811de94a/brick_289fe032c1f4f9f211480e24c5d74a44] on [pod:glusterfs-lrdz7 c:glusterfs ns:default (from host:k8s-node-01 selector:glusterfs-node)]

[kubeexec] DEBUG 2019/10/23 03:08:36 heketi/pkg/remoteexec/kube/exec.go:72:kube.ExecCommands: Current kube connection count: 1

[kubeexec] DEBUG 2019/10/23 03:08:36 heketi/pkg/remoteexec/log/commandlog.go:34:log.(*CommandLogger).Before: Will run command [mkdir -p /var/lib/heketi/mounts/vg_7c791bbb90f710123ba431a7cdde8d0b/brick_917e27a9dbc5395ebf08dff8d3401b43] on [pod:glusterfs-l2lsv c:glusterfs ns:default (from host:k8s-node-02 selector:glusterfs-node)]

[kubeexec] DEBUG 2019/10/23 03:08:36 heketi/pkg/remoteexec/kube/exec.go:72:kube.ExecCommands: Current kube connection count: 2
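The kubeexec lines in these logs follow a fixed pattern: "Will run command [...] on [pod:... (from host:... ...)]". To see at a glance what Heketi ran on each node, the commands can be grouped by host. This is a small sketch based only on the log format visible in this post; the regular expression and function name are my own and may need adjusting for other Heketi versions.

```python
import re

# Matches the "Will run command [...] on [pod:... c:... ns:... (from host:... " part.
RUN_PATTERN = re.compile(
    r"Will run command \[(?P<cmd>.*)\] on "
    r"\[pod:(?P<pod>\S+) c:\S+ ns:(?P<ns>\S+) \(from host:(?P<host>\S+) "
)


def commands_by_host(log_lines):
    """Group the commands Heketi announced, in log order, by the host they ran on."""
    result = {}
    for line in log_lines:
        match = RUN_PATTERN.search(line)
        if match:
            result.setdefault(match["host"], []).append(match["cmd"])
    return result


if __name__ == "__main__":
    sample = [
        "[kubeexec] DEBUG 2019/10/23 03:08:36 heketi/pkg/remoteexec/log/commandlog.go:34:"
        "log.(*CommandLogger).Before: Will run command [mkdir -p /var/lib/heketi/mounts/"
        "vg_703e3662cbd8ffb24a6401bb3c3c41fa/brick_a3172661ba1b849d67b500c93c3dd652] on "
        "[pod:glusterfs-cqw5d c:glusterfs ns:default (from host:k8s-master-01 "
        "selector:glusterfs-node)]",
        "[negroni] 2019-10-23T03:08:36Z | 200 | 72.083µs | 10.1.45.61:8080 | GET /queue/x",
    ]
    for host, commands in commands_by_host(sample).items():
        print(host, commands)
```

Feeding it the output of kubectl logs for the Heketi pod gives a per-node list of the mkdir, mkfs.xfs, mount, and gluster commands behind one PVC.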

Create the LVs and add fstab entries so they are mounted automatically:

[kubeexec] DEBUG 2019/10/23 03:08:37 heketi/pkg/remoteexec/kube/exec.go:72:kube.ExecCommands: Current kube connection count: 2

[kubeexec] DEBUG 2019/10/23 03:08:37 heketi/pkg/remoteexec/log/commandlog.go:46:log.(*CommandLogger).Success: Ran command [mkfs.xfs -i size=512 -n size=8192 /dev/mapper/vg_703e3662cbd8ffb24a6401bb3c3c41fa-brick_a3172661ba1b849d67b500c93c3dd652] on [pod:glusterfs-cqw5d c:glusterfs ns:default (from host:k8s-master-01 selector:glusterfs-node)]: Stdout [meta-data=/dev/mapper/vg_703e3662cbd8ffb24a6401bb3c3c41fa-brick_a3172661ba1b849d67b500c93c3dd652 isize=512 agcount=8, agsize=32768 blks
         = sectsz=512 attr=2, projid32bit=1
         = crc=1 finobt=0, sparse=0
data     = bsize=4096 blocks=262144, imaxpct=25
         = sunit=64 swidth=64 blks
naming   =version 2 bsize=8192 ascii-ci=0 ftype=1
log      =internal log bsize=4096 blocks=2560, version=2
         = sectsz=512 sunit=64 blks, lazy-count=1
realtime =none extsz=4096 blocks=0, rtextents=0
]: Stderr []

[kubeexec] DEBUG 2019/10/23 03:08:37 heketi/pkg/remoteexec/log/commandlog.go:34:log.(*CommandLogger).Before: Will run command [awk "BEGIN {print \"/dev/mapper/vg_703e3662cbd8ffb24a6401bb3c3c41fa-brick_a3172661ba1b849d67b500c93c3dd652 /var/lib/heketi/mounts/vg_703e3662cbd8ffb24a6401bb3c3c41fa/brick_a3172661ba1b849d67b500c93c3dd652 xfs rw,inode64,noatime,nouuid 1 2\" >> \"/var/lib/heketi/fstab\"}"] on [pod:glusterfs-cqw5d c:glusterfs ns:default (from host:k8s-master-01 selector:glusterfs-node)]

Create the brick directories and set their permissions:

[kubeexec] DEBUG 2019/10/23 03:08:38 heketi/pkg/remoteexec/log/commandlog.go:34:log.(*CommandLogger).Before: Will run command [chmod 2775 /var/lib/heketi/mounts/vg_703e3662cbd8ffb24a6401bb3c3c41fa/brick_a3172661ba1b849d67b500c93c3dd652/brick] on [pod:glusterfs-cqw5d c:glusterfs ns:default (from host:k8s-master-01 selector:glusterfs-node)]

[kubeexec] DEBUG 2019/10/23 03:08:38 heketi/pkg/remoteexec/kube/exec.go:72:kube.ExecCommands: Current kube connection count: 2

[kubeexec] DEBUG 2019/10/23 03:08:38 heketi/pkg/remoteexec/log/commandlog.go:46:log.(*CommandLogger).Success: Ran command [chown :40000 /var/lib/heketi/mounts/vg_398329cc70361dfd4baa011d811de94a/brick_289fe032c1f4f9f211480e24c5d74a44/brick] on [pod:glusterfs-lrdz7 c:glusterfs ns:default (from host:k8s-node-01 selector:glusterfs-node)]: Stdout []: Stderr []

[kubeexec] DEBUG 2019/10/23 03:08:38 heketi/pkg/remoteexec/log/commandlog.go:34:log.(*CommandLogger).Before: Will run command [chmod 2775 /var/lib/heketi/mounts/vg_398329cc70361dfd4baa011d811de94a/brick_289fe032c1f4f9f211480e24c5d74a44/brick] on [pod:glusterfs-lrdz7 c:glusterfs ns:default (from host:k8s-node-01 selector:glusterfs-node)]

[kubeexec] DEBUG 2019/10/23 03:08:38 heketi/pkg/remoteexec/kube/exec.go:72:kube.ExecCommands: Current kube connection count: 2

[negroni] 2019-10-23T03:08:38Z | 200 | 83.159µs | 10.1.45.61:8080 | GET /queue/3ec932315085609bc54ead6e3f6851e8

[kubeexec] DEBUG 2019/10/23 03:08:38 heketi/pkg/remoteexec/log/commandlog.go:46:log.(*CommandLogger).Success: Ran command [chmod 2775 /var/lib/heketi/mounts/vg_7c791bbb90f710123ba431a7cdde8d0b/brick_917e27a9dbc5395ebf08dff8d3401b43/brick] on [pod:glusterfs-l2lsv c:glusterfs ns:default (from host:k8s-node-02 selector:glusterfs-node)]: Stdout []: Stderr []

[kubeexec] DEBUG 2019/10/23 03:08:38 heketi/pkg/remoteexec/log/commandlog.go:46:log.(*CommandLogger).Success: Ran command [chmod 2775 /var/lib/heketi/mounts/vg_703e3662cbd8ffb24a6401bb3c3c41fa/brick_a3172661ba1b849d67b500c93c3dd652/brick] on [pod:glusterfs-cqw5d c:glusterfs ns:default (from host:k8s-master-01 selector:glusterfs-node)]: Stdout []: Stderr []

[kubeexec] DEBUG 2019/10/23 03:08:38 heketi/pkg/remoteexec/log/commandlog.go:46:log.(*CommandLogger).Success: Ran command [chmod 2775 /var/lib/heketi/mounts/vg_398329cc70361dfd4baa011d811de94a/brick_289fe032c1f4f9f211480e24c5d74a44/brick] on [pod:glusterfs-lrdz7 c:glusterfs ns:default (from host:k8s-node-01 selector:glusterfs-node)]: Stdout []: Stderr []

[cmdexec] INFO 2019/10/23 03:08:38 Creating volume vol_08e8447256de2598952dcb240e615d0f replica 3

Create the corresponding volume:

[asynchttp] INFO 2019/10/23 03:08:41 Completed job 3ec932315085609bc54ead6e3f6851e8 in 5.007631648s

[negroni] 2019-10-23T03:08:41Z | 303 | 78.335µs | 10.1.45.61:8080 | GET /queue/3ec932315085609bc54ead6e3f6851e8

[negroni] 2019-10-23T03:08:41Z | 200 | 5.751689ms | 10.1.45.61:8080 | GET /volumes/08e8447256de2598952dcb240e615d0f

[negroni] 2019-10-23T03:08:41Z | 200 | 139.05µs | 10.1.45.61:8080 | GET /clusters/1c5ffbd86847e5fc1562ef70c033292e

[negroni] 2019-10-23T03:08:41Z | 200 | 660.249µs | 10.1.45.61:8080 | GET /nodes/04740cac8d42f56e354c94bdbb7b8e34

[negroni] 2019-10-23T03:08:41Z | 200 | 270.334µs | 10.1.45.61:8080 | GET /nodes/1b33ad0dba20eaf23b5e3a4845e7cdb4

[negroni] 2019-10-23T03:08:41Z | 200 | 345.528µs | 10.1.45.61:8080 | GET /nodes/b6100a5af9b47d8c1f19be0b2b4d8276

[heketi] INFO 2019/10/23 03:09:39 Starting Node Health Status refresh

[cmdexec] INFO 2019/10/23 03:09:39 Check Glusterd service status in node k8s-node-01

[kubeexec] DEBUG 2019/10/23 03:09:39 heketi/pkg/remoteexec/log/commandlog.go:34:log.(*CommandLogger).Before: Will run command [systemctl status glusterd] on [pod:glusterfs-lrdz7 c:glusterfs ns:default (from host:k8s-node-01 selector:glusterfs-node)]

[kubeexec] DEBUG 2019/10/23 03:09:39 heketi/pkg/remoteexec/kube/exec.go:72:kube.ExecCommands: Current kube connection count: 0

[kubeexec] DEBUG 2019/10/23 03:09:39 heketi/pkg/remoteexec/log/commandlog.go:41:log.(*CommandLogger).Success: Ran command [systemctl status glusterd] on [pod:glusterfs-lrdz7 c:glusterfs ns:default (from host:k8s-node-01 selector:glusterfs-node)]: Stdout filtered, Stderr filtered

[heketi] INFO 2019/10/23 03:09:39 Periodic health check status: node 04740cac8d42f56e354c94bdbb7b8e34 up=true

[cmdexec] INFO 2019/10/23 03:09:39 Check Glusterd service status in node k8s-node-02

[kubeexec] DEBUG 2019/10/23 03:09:39 heketi/pkg/remoteexec/log/commandlog.go:34:log.(*CommandLogger).Before: Will run command [systemctl status glusterd] on [pod:glusterfs-l2lsv c:glusterfs ns:default (from host:k8s-node-02 selector:glusterfs-node)]

[kubeexec] DEBUG 2019/10/23 03:09:39 heketi/pkg/remoteexec/kube/exec.go:72:kube.ExecCommands: Current kube connection count: 0

[kubeexec] DEBUG 2019/10/23 03:09:39 heketi/pkg/remoteexec/log/commandlog.go:41:log.(*CommandLogger).Success: Ran command [systemctl status glusterd] on [pod:glusterfs-l2lsv c:glusterfs ns:default (from host:k8s-node-02 selector:glusterfs-node)]: Stdout filtered, Stderr filtered

[heketi] INFO 2019/10/23 03:09:39 Periodic health check status: node 1b33ad0dba20eaf23b5e3a4845e7cdb4 up=true

[cmdexec] INFO 2019/10/23 03:09:39 Check Glusterd service status in node k8s-master-01

[kubeexec] DEBUG 2019/10/23 03:09:39 heketi/pkg/remoteexec/log/commandlog.go:34:log.(*CommandLogger).Before: Will run command [systemctl status glusterd] on [pod:glusterfs-cqw5d c:glusterfs ns:default (from host:k8s-master-01 selector:glusterfs-node)]

[kubeexec] DEBUG 2019/10/23 03:09:39 heketi/pkg/remoteexec/kube/exec.go:72:kube.ExecCommands: Current kube connection count: 0

[kubeexec] DEBUG 2019/10/23 03:09:39 heketi/pkg/remoteexec/log/commandlog.go:41:log.(*CommandLogger).Success: Ran command [systemctl status glusterd] on [pod:glusterfs-cqw5d c:glusterfs ns:default (from host:k8s-master-01 selector:glusterfs-node)]: Stdout filtered, Stderr filtered

[heketi] INFO 2019/10/23 03:09:39 Periodic health check status: node b6100a5af9b47d8c1f19be0b2b4d8276 up=true

[heketi] INFO 2019/10/23 03:09:39 Cleaned 0 nodes from health cache
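The periodic health check above logs one `up=true` or `up=false` line per node. As a minimal sketch, assuming the heketi log text arrives on stdin, those lines could be reduced to `node-id status` pairs. The function name `heketi_node_health` is hypothetical and not part of heketi.

```shell
# Hypothetical helper: read heketi log text on stdin and print
# "<node-id> <up=...>" for every periodic health-check line.
heketi_node_health() {
  awk '/Periodic health check status: node/ {
         # The node id and the up=... flag are the last two fields.
         print $(NF-1), $NF
       }'
}
```

In this walkthrough it could be fed with something like `kubectl logs heketi-68795ccd8-m8x55 | heketi_node_health`, using the heketi pod name shown in the pod listing.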

7. Testing data

Test whether pods using this PV can share data: enter the pod manually and create a file.

[root@k8s-master-01 kubernetes]# kubectl get pods

NAME READY STATUS RESTARTS AGE

glusterfs-cqw5d 1/1 Running 0 90m

glusterfs-l2lsv 1/1 Running 0 90m

glusterfs-lrdz7 1/1 Running 0 90m

heketi-68795ccd8-m8x55 1/1 Running 0 49m

pod-use-pvc 1/1 Running 0 20m

[root@k8s-master-01 kubernetes]# kubectl exec -it pod-use-pvc -- /bin/sh

/ # cd /pv-data/

/pv-data # echo "hello world">a.txt

/pv-data # cat a.txt

hello world

List the volumes that were created.

[root@k8s-master-01 kubernetes]# heketi-cli -s $HEKETI_CLI_SERVER --user admin --secret 'My Secret' volume list

Id:08e8447256de2598952dcb240e615d0f Cluster:1c5ffbd86847e5fc1562ef70c033292e Name:vol_08e8447256de2598952dcb240e615d0f

Id:b25f4b627cf66279bfe19e8a01e9e85d Cluster:1c5ffbd86847e5fc1562ef70c033292e Name:heketidbstorage
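To look at a single volume from this list in more detail, `heketi-cli volume info` can be given the volume Id. The wrapper below is only a sketch: the function name `heketi_volume_info` is hypothetical, and the credentials are the ones already used in this walkthrough.

```shell
# Sketch: show the details heketi stores for one volume.
# $1 is a volume Id as printed by "volume list".
heketi_volume_info() {
  heketi-cli -s "$HEKETI_CLI_SERVER" --user admin --secret 'My Secret' \
    volume info "$1"
}
```

For example, `heketi_volume_info 08e8447256de2598952dcb240e615d0f` would query the volume backing the test PVC.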

Mount the volume on the host to inspect its data; vol_08e8447256de2598952dcb240e615d0f is the volume name.

[root@k8s-master-01 kubernetes]# mount -t glusterfs 192.168.2.10:vol_08e8447256de2598952dcb240e615d0f /mnt

[root@k8s-master-01 kubernetes]# ll /mnt/

total 1

-rw-r--r-- 1 root 40000 12 Oct 23 11:29 a.txt

[root@k8s-master-01 kubernetes]# cat /mnt/a.txt

hello world

8. Testing a Deployment

Test whether a workload deployed through the Deployment controller can use the StorageClass by creating an nginx Deployment.

[root@k8s-master-01 kubernetes]# vim nginx-deployment-gluster.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-gfs
spec:
  selector:
    matchLabels:
      name: nginx
  replicas: 2
  template:
    metadata:
      labels:
        name: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
        volumeMounts:
        - name: nginx-gfs-html
          mountPath: "/usr/share/nginx/html"
        - name: nginx-gfs-conf
          mountPath: "/etc/nginx/conf.d"
      volumes:
      - name: nginx-gfs-html
        persistentVolumeClaim:
          claimName: glusterfs-nginx-html
      - name: nginx-gfs-conf
        persistentVolumeClaim:
          claimName: glusterfs-nginx-conf
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: glusterfs-nginx-html
spec:
  accessModes: [ "ReadWriteMany" ]
  storageClassName: "gluster-heketi"
  resources:
    requests:
      storage: 500Mi
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: glusterfs-nginx-conf
spec:
  accessModes: [ "ReadWriteMany" ]
  storageClassName: "gluster-heketi"
  resources:
    requests:
      storage: 10Mi
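The transcript does not show the manifest being applied. A minimal sketch of that step, assuming the file name and Deployment name used above, is to apply the manifest and then wait for the rollout to finish. The function name `deploy_nginx_gfs` and the 120s timeout are arbitrary choices for illustration.

```shell
# Sketch: apply the manifest, then block until the Deployment has rolled out.
# The rollout only completes once both PVCs are bound and mounted.
deploy_nginx_gfs() {
  kubectl apply -f nginx-deployment-gluster.yaml &&
    kubectl rollout status deployment/nginx-gfs --timeout=120s
}
```

If the rollout times out, `kubectl describe pvc glusterfs-nginx-html` is a reasonable first place to look for provisioning errors.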

Check the resulting resources.

[root@k8s-master-01 kubernetes]# kubectl get pod,pv,pvc|grep nginx

pod/nginx-gfs-7d66cccf76-mkc76 1/1 Running 0 2m45s

pod/nginx-gfs-7d66cccf76-zc8n2 1/1 Running 0 2m45s

persistentvolume/pvc-87481e3a-9b7e-43aa-a0b9-4028ce0a1abb 1Gi RWX Retain Bound default/glusterfs-nginx-conf gluster-heketi 2m34s

persistentvolume/pvc-f954a4ca-ea1c-458d-8490-a49a0a001ab5 1Gi RWX Retain Bound default/glusterfs-nginx-html gluster-heketi 2m34s

persistentvolumeclaim/glusterfs-nginx-conf Bound pvc-87481e3a-9b7e-43aa-a0b9-4028ce0a1abb 1Gi RWX gluster-heketi 2m45s

persistentvolumeclaim/glusterfs-nginx-html Bound pvc-f954a4ca-ea1c-458d-8490-a49a0a001ab5 1Gi RWX gluster-heketi 2m45s
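Both PVs report 1Gi even though the claims asked for 500Mi and 10Mi. That is consistent with the provisioner rounding each request up to a whole GiB. The output itself does not prove this, so treat it as an assumption. The arithmetic can be sketched as follows, with `round_up_gib` being a hypothetical helper name.

```shell
# Hypothetical helper: round a request given in MiB up to whole GiB,
# mirroring the rounding that the PV sizes above suggest.
round_up_gib() {
  mib=$1
  echo $(( (mib + 1023) / 1024 ))
}

round_up_gib 500   # request of glusterfs-nginx-html
round_up_gib 10    # request of glusterfs-nginx-conf
```

Under that assumption, any request up to 1024Mi ends up as a 1Gi volume, which also fits the roughly 1014M filesystems reported inside the pod.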

Check the mounts inside a pod.

[root@k8s-master-01 kubernetes]# kubectl exec -it nginx-gfs-7d66cccf76-mkc76 -- df -Th

Filesystem Type Size Used Avail Use% Mounted on

overlay overlay 44G 3.2G 41G 8% /

tmpfs tmpfs 64M 0 64M 0% /dev

tmpfs tmpfs 2.0G 0 2.0G 0% /sys/fs/cgroup

/dev/mapper/centos-root xfs 44G 3.2G 41G 8% /etc/hosts

shm tmpfs 64M 0 64M 0% /dev/shm

192.168.2.10:vol_adf6fc08c8828fdda27c8aa5ce99b50c fuse.glusterfs 1014M 43M 972M 5% /etc/nginx/conf.d

192.168.2.10:vol_454e14ae3184122ff9a14d77e02b10b9 fuse.glusterfs 1014M 43M 972M 5% /usr/share/nginx/html

tmpfs tmpfs 2.0G 12K 2.0G 1% /run/secrets/kubernetes.io/serviceaccount

tmpfs tmpfs 2.0G 0 2.0G 0% /proc/acpi

tmpfs tmpfs 2.0G 0 2.0G 0% /proc/scsi

tmpfs tmpfs 2.0G 0 2.0G 0% /sys/firmware

Mount the volume on the host and create a file.

[root@k8s-master-01 kubernetes]# mount -t glusterfs 192.168.2.10:vol_454e14ae3184122ff9a14d77e02b10b9 /mnt/

[root@k8s-master-01 kubernetes]# cd /mnt/

[root@k8s-master-01 mnt]# echo "hello world">index.html

[root@k8s-master-01 mnt]# kubectl exec -it nginx-gfs-7d66cccf76-mkc76 -- cat /usr/share/nginx/html/index.html

hello world

Scale up the nginx replicas and check that the new pod mounts the volumes correctly.

[root@k8s-master-01 mnt]# kubectl scale deployment nginx-gfs --replicas=3

deployment.apps/nginx-gfs scaled

[root@k8s-master-01 mnt]# kubectl get pods

NAME READY STATUS RESTARTS AGE

glusterfs-cqw5d 1/1 Running 0 129m

glusterfs-l2lsv 1/1 Running 0 129m

glusterfs-lrdz7 1/1 Running 0 129m

heketi-68795ccd8-m8x55 1/1 Running 0 88m

nginx-gfs-7d66cccf76-mkc76 1/1 Running 0 8m55s

nginx-gfs-7d66cccf76-qzqnv 1/1 Running 0 23s

nginx-gfs-7d66cccf76-zc8n2 1/1 Running 0 8m55s

[root@k8s-master-01 mnt]# kubectl exec -it nginx-gfs-7d66cccf76-qzqnv -- cat /usr/share/nginx/html/index.html

hello world
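The check above reads the file from a single replica. As a sketch, the same check could be run against every pod carrying the Deployment's `name=nginx` label, so a replica with a missing mount stands out. The function name `check_nginx_gfs_replicas` is hypothetical.

```shell
# Sketch: print index.html from every pod labelled name=nginx.
check_nginx_gfs_replicas() {
  for pod in $(kubectl get pods -l name=nginx \
                 -o jsonpath='{.items[*].metadata.name}'); do
    printf '%s: ' "$pod"
    kubectl exec "$pod" -- cat /usr/share/nginx/html/index.html
  done
}
```

Every replica should print the same content, since all of them mount the same ReadWriteMany volume.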

This completes the deployment of heketi + glusterfs as dynamic storage in the k8s cluster.

相关文章:

kubernetes存储之GlusterFS(GlusterFS for Kubernetes Storage)

💝💝💝欢迎来到我的博客,很高兴能够在这里和您见面!希望您在这里可以感受到一份轻松愉快的氛围,不仅可以获得有趣的内容和知识,也可以畅所欲言、分享您的想法和见解。 推荐:Linux运维老纪的首页…...

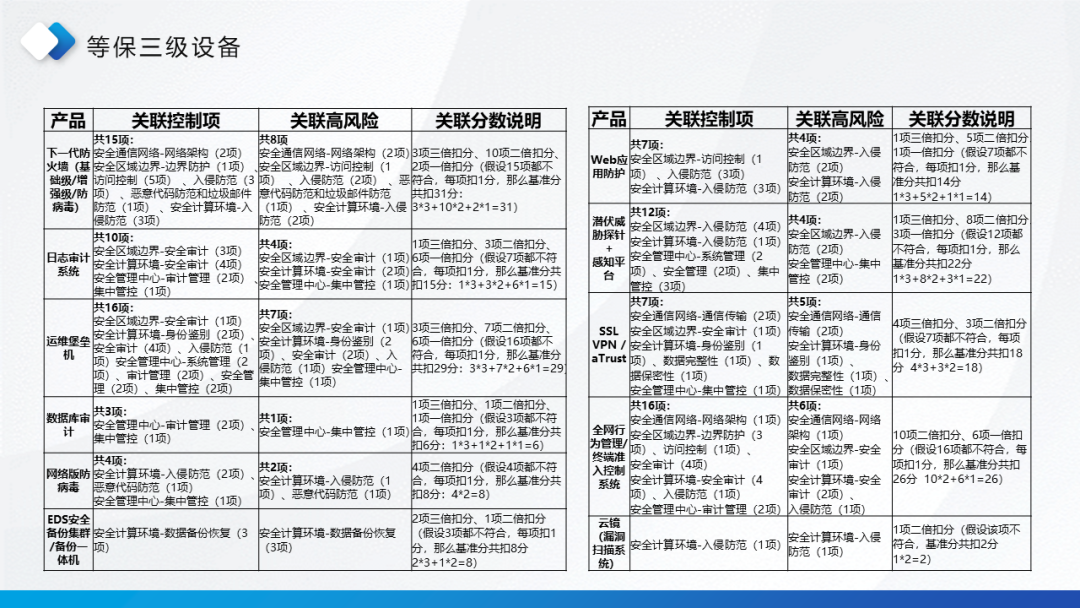

网络安全等保培训 ppt

网络安全等级保护怎么做?...

开关磁阻电机(SRM)系统的matlab性能仿真与分析

目录 1.课题概述 2.系统仿真结果 3.核心程序与模型 4.系统原理简介 4.1 SRM的基本结构 4.2 SRM的电磁关系 4.3 SRM的输出力矩 5.完整工程文件 1.课题概述 开关磁阻电机(SRM)系统的matlab性能仿真与分析,对比平均转矩vs相电流,转矩脉动vs相电流&a…...

最新动态一致的文生视频大模型FancyVideo部署

FancyVideo是一个由360AI团队和中山大学联合开发并开源的视频生成模型。 FancyVideo的创新之处在于它能够实现帧特定的文本指导,使得生成的视频既动态又具有一致性。 FancyVideo模型通过精心设计的跨帧文本引导模块(Cross-frame Textual Guidance Modu…...

茴香豆:企业级知识问答工具实践闯关任务

基础任务 在 InternStudio 中利用 Internlm2-7b 搭建标准版茴香豆知识助手,并使用 Gradio 界面完成 2 轮问答(问题不可与教程重复,作业截图需包括 gradio 界面问题和茴香豆回答)。知识库可根据根据自己工作、学习或感兴趣的内容调…...

英飞凌 PSoC6 RT-Thread 评估板简介

概述 2023年,英飞凌(Infineon)联合 RT-Thread 发布了一款 PSoC™ 62 with CAPSENSE™ evaluation kit 开发板 (以下简称 PSoC 6 RTT 开发板),该开发套件默认内置 RT-Thread 物联网操作系统。PSoC 6 RTT 开…...

深度学习笔记(8)预训练模型

深度学习笔记(8)预训练模型 文章目录 深度学习笔记(8)预训练模型一、预训练模型构建一、微调模型,训练自己的数据1.导入数据集2.数据集处理方法3.完形填空训练 使用分词器将文本转换为模型的输入格式参数 return_tenso…...

C#事件的用法

前言 在C#中,事件(Event)可以实现当类内部发生某些特定的事情时,它可以通知其他类或对象。事件是基于委托(Delegate)的,委托是一种类型安全的函数指针,它定义了方法的类型ÿ…...

金砖软件测试赛项之Jmeter如何录制脚本!

一、简介 Apache JMeter 是一款开源的性能测试工具,用于测试各种服务的负载能力,包括Web应用、数据库、FTP服务器等。它可以模拟多种用户行为,生成负载以评估系统的性能和稳定性。 JMeter 的主要特点: 图形用户界面:…...

docker-squash镜像压缩

docker-squash 和 docker export docker load 的原理和效果有一些相似之处,但它们的工作方式和适用场景有所不同。 docker-squash docker-squash 是一个工具,它通过分析 Docker 镜像的层(layers)并将其压缩成更少的层来减小镜像…...

)

Vue3快速入门+axios的异步请求(基础使用)

学习Vue之前先要学习htmlcssjs的基础使用 Vue其实是js的框架 常用到的Vue指令包括vue-on,vue-for,vue-blind,vue-if&vue-show,v-modul vue的基础模板: <!DOCTYPE html> <html lang"zh-CN"> <head><meta charset"UTF-8&…...

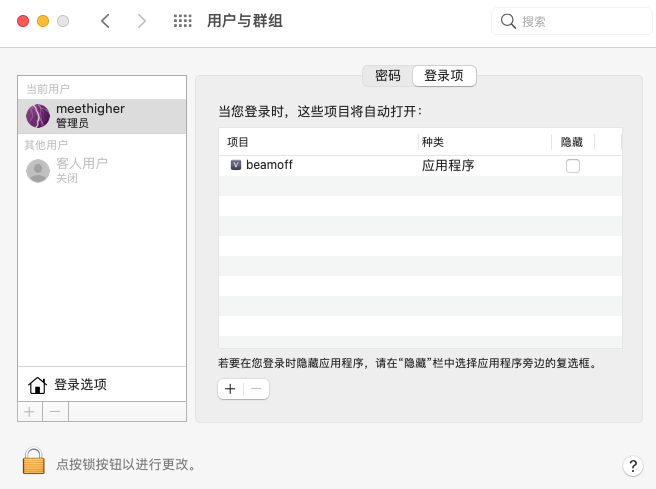

VM16安装macOS11

注意: 本文内容于 2024-09-17 12:08:24 创建,可能不会在此平台上进行更新。如果您希望查看最新版本或更多相关内容,请访问原文地址:VM16安装macOS11。感谢您的关注与支持! 使用 Vmware Workstation Pro 16 安装 macOS…...

自定义复杂AntV/G6案例

一、效果图 二、源码 /** * * Author: me * CreatDate: 2024-08-22 * * Description: 复杂G6案例 * */ <template><div class"moreG6-wapper"><div id"graphContainer" ref"graphRef" class"graph-content"></d…...

Golang | Leetcode Golang题解之第419题棋盘上的战舰

题目: 题解: func countBattleships(board [][]byte) (ans int) {for i, row : range board {for j, ch : range row {if ch X && !(i > 0 && board[i-1][j] X || j > 0 && board[i][j-1] X) {ans}}}return }...

)

CCF刷题计划——LDAP(交集、并集 how to go)

LDAP 计算机软件能力认证考试系统 不知道为什么,直接给我报一个运行错误,得了0分。但是我在Dev里,VS里面都跑的好好的,奇奇怪怪。如果有大佬路过,请帮小弟看看QWQ。本题学到的:交集set_intersection、并集…...

谷歌论文提前揭示o1模型原理:AI大模型竞争或转向硬件

Open AI最强模型o1的护城河已经没有了?仅在OpenAI发布最新推理模型o1几日之后,海外社交平台 Reddit 上有网友发帖称谷歌Deepmind在 8 月发表的一篇论文内容与o1模型原理几乎一致,OpenAI的护城河不复存在。 谷歌DeepMind团队于今年8月6日发布…...

【ShuQiHere】 探索数据挖掘的世界:从概念到应用

🌐 【ShuQiHere】 数据挖掘(Data Mining, DM) 是一种从大型数据集中提取有用信息的技术,无论是在商业分析、金融预测,还是医学研究中,数据挖掘都扮演着至关重要的角色。本文将带您深入了解数据挖掘的核心概…...

LabVIEW提高开发效率技巧----使用事件结构优化用户界面响应

事件结构(Event Structure) 是 LabVIEW 中用于处理用户界面事件的强大工具。通过事件驱动的编程方式,程序可以在用户操作时动态执行特定代码,而不是通过轮询(Polling)的方式不断检查界面控件状态。这种方式…...

【前端】ES6:Set与Map

文章目录 1 Set结构1.1 初识Set1.2 实例的属性和方法1.3 遍历1.4 复杂数据结构去重 2 Map结构2.1 初识Map2.2 实例的属性和方法2.3 遍历 1 Set结构 它类似于数组,但成员的值都是唯一的,没有重复的值。 1.1 初识Set let s1 new Set([1, 2, 3, 2, 3]) …...

Java 之网络编程小案例

1. 多发多收 描述: 编写一个简单的聊天程序,客户端可以向服务器发送多条消息,服务器可以接收所有消息并回复。 代码示例: 服务器端 (Server.java): import java.io.*; import java.net.*; import java.util.concurrent.Execut…...

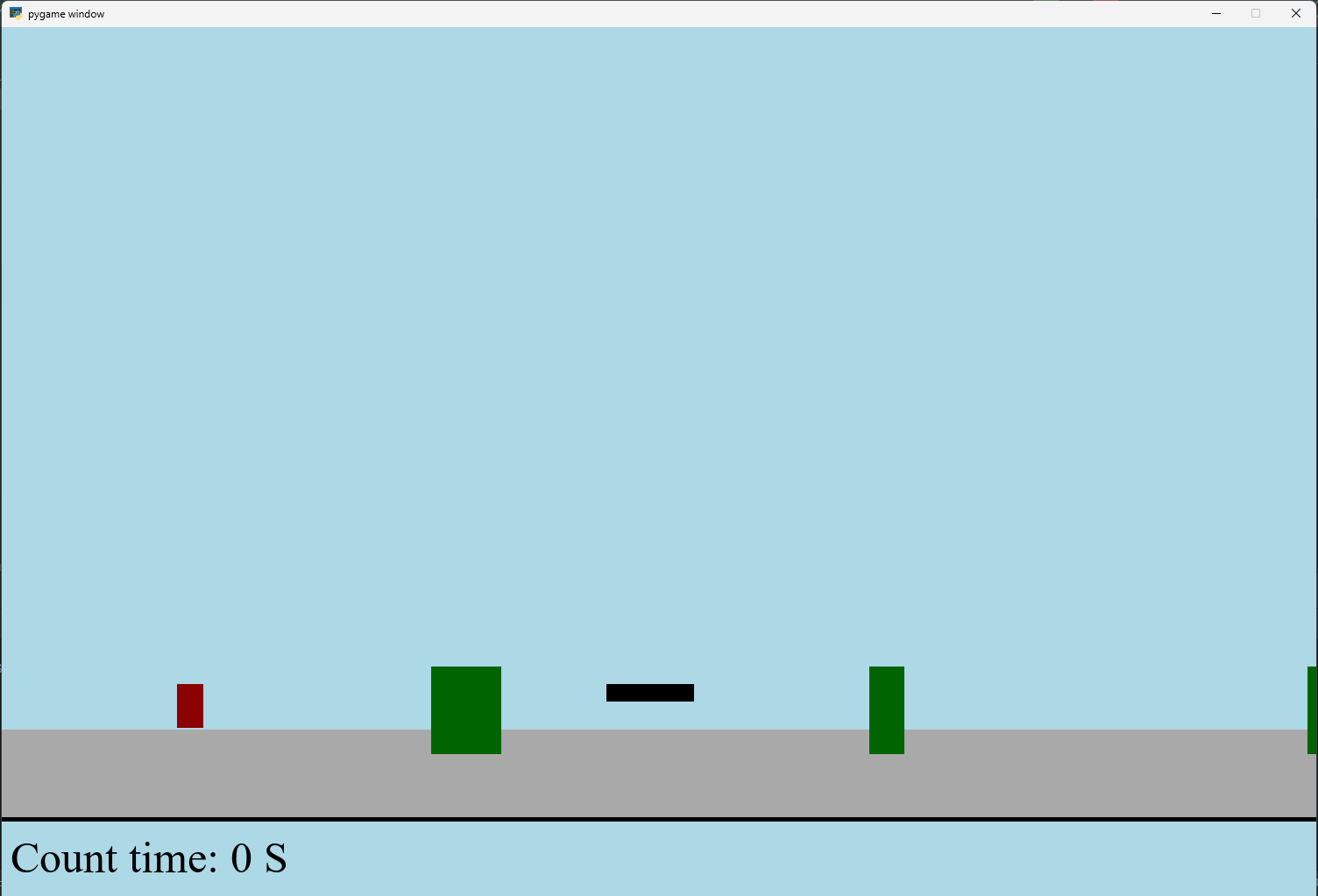

【Python】 -- 趣味代码 - 小恐龙游戏

文章目录 文章目录 00 小恐龙游戏程序设计框架代码结构和功能游戏流程总结01 小恐龙游戏程序设计02 百度网盘地址00 小恐龙游戏程序设计框架 这段代码是一个基于 Pygame 的简易跑酷游戏的完整实现,玩家控制一个角色(龙)躲避障碍物(仙人掌和乌鸦)。以下是代码的详细介绍:…...

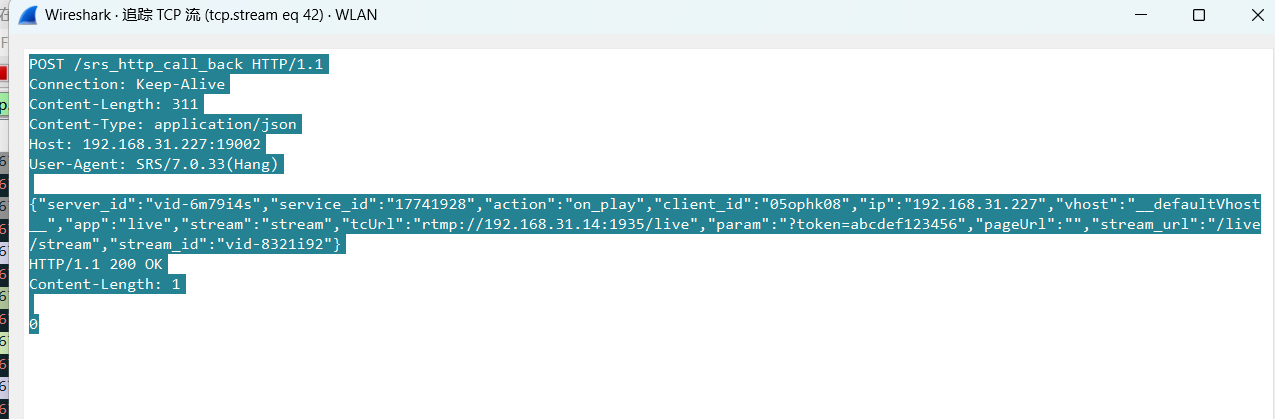

srs linux

下载编译运行 git clone https:///ossrs/srs.git ./configure --h265on make 编译完成后即可启动SRS # 启动 ./objs/srs -c conf/srs.conf # 查看日志 tail -n 30 -f ./objs/srs.log 开放端口 默认RTMP接收推流端口是1935,SRS管理页面端口是8080,可…...

Nginx server_name 配置说明

Nginx 是一个高性能的反向代理和负载均衡服务器,其核心配置之一是 server 块中的 server_name 指令。server_name 决定了 Nginx 如何根据客户端请求的 Host 头匹配对应的虚拟主机(Virtual Host)。 1. 简介 Nginx 使用 server_name 指令来确定…...

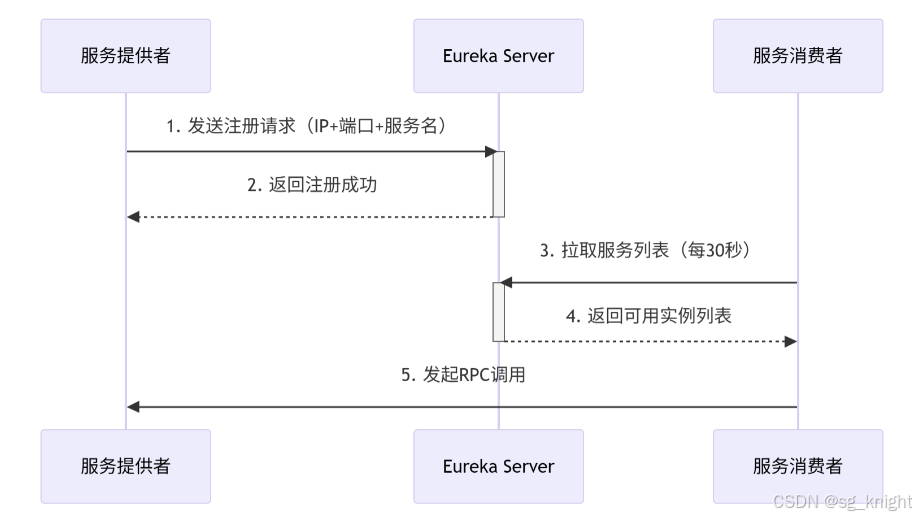

Springcloud:Eureka 高可用集群搭建实战(服务注册与发现的底层原理与避坑指南)

引言:为什么 Eureka 依然是存量系统的核心? 尽管 Nacos 等新注册中心崛起,但金融、电力等保守行业仍有大量系统运行在 Eureka 上。理解其高可用设计与自我保护机制,是保障分布式系统稳定的必修课。本文将手把手带你搭建生产级 Eur…...

)

相机Camera日志分析之三十一:高通Camx HAL十种流程基础分析关键字汇总(后续持续更新中)

【关注我,后续持续新增专题博文,谢谢!!!】 上一篇我们讲了:有对最普通的场景进行各个日志注释讲解,但相机场景太多,日志差异也巨大。后面将展示各种场景下的日志。 通过notepad++打开场景下的日志,通过下列分类关键字搜索,即可清晰的分析不同场景的相机运行流程差异…...

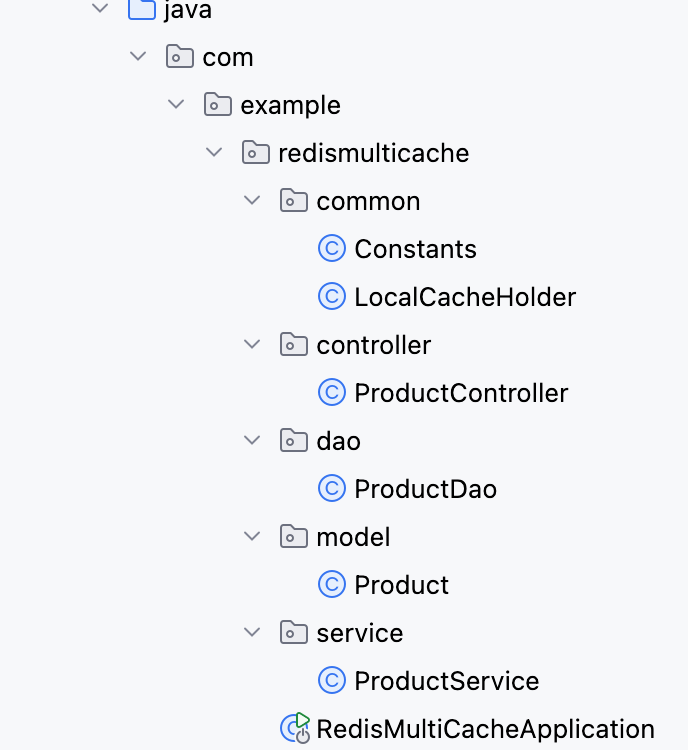

【Redis】笔记|第8节|大厂高并发缓存架构实战与优化

缓存架构 代码结构 代码详情 功能点: 多级缓存,先查本地缓存,再查Redis,最后才查数据库热点数据重建逻辑使用分布式锁,二次查询更新缓存采用读写锁提升性能采用Redis的发布订阅机制通知所有实例更新本地缓存适用读多…...

DingDing机器人群消息推送

文章目录 1 新建机器人2 API文档说明3 代码编写 1 新建机器人 点击群设置 下滑到群管理的机器人,点击进入 添加机器人 选择自定义Webhook服务 点击添加 设置安全设置,详见说明文档 成功后,记录Webhook 2 API文档说明 点击设置说明 查看自…...

华为OD机考-机房布局

import java.util.*;public class DemoTest5 {public static void main(String[] args) {Scanner in new Scanner(System.in);// 注意 hasNext 和 hasNextLine 的区别while (in.hasNextLine()) { // 注意 while 处理多个 caseSystem.out.println(solve(in.nextLine()));}}priv…...

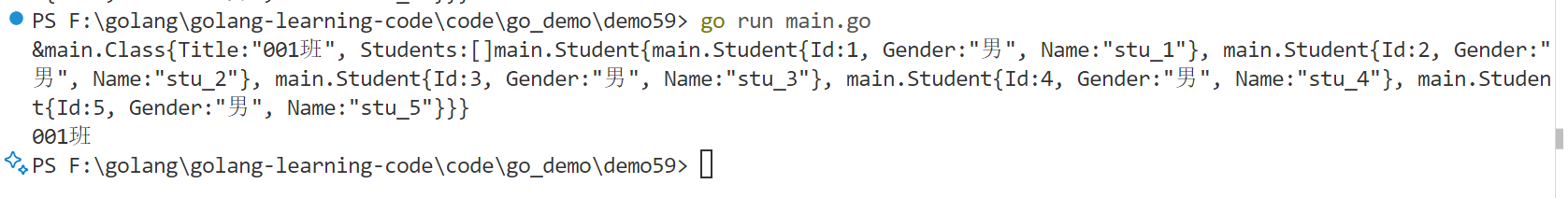

Golang——6、指针和结构体

指针和结构体 1、指针1.1、指针地址和指针类型1.2、指针取值1.3、new和make 2、结构体2.1、type关键字的使用2.2、结构体的定义和初始化2.3、结构体方法和接收者2.4、给任意类型添加方法2.5、结构体的匿名字段2.6、嵌套结构体2.7、嵌套匿名结构体2.8、结构体的继承 3、结构体与…...

:LeetCode 142. 环形链表 II(Linked List Cycle II)详解)

Java详解LeetCode 热题 100(26):LeetCode 142. 环形链表 II(Linked List Cycle II)详解

文章目录 1. 题目描述1.1 链表节点定义 2. 理解题目2.1 问题可视化2.2 核心挑战 3. 解法一:HashSet 标记访问法3.1 算法思路3.2 Java代码实现3.3 详细执行过程演示3.4 执行结果示例3.5 复杂度分析3.6 优缺点分析 4. 解法二:Floyd 快慢指针法(…...