J8学习打卡笔记

- 🍨 本文为🔗365天深度学习训练营 中的学习记录博客

- 🍖 原作者:K同学啊

Inception v1算法实战与解析

- 导入数据

- 数据预处理

- 划分数据集

- 搭建模型

- 训练模型

- 正式训练

- 结果可视化

- 详细网络结构图

- 个人总结

import os, PIL, random, pathlib

import torch

import torch.nn as nn

import torchvision.transforms as transforms

import torchvision

from torchvision import transforms, datasets

import torch.nn.functional as Fdevice = torch.device("cuda" if torch.cuda.is_available() else "cpu")device

device(type='cuda')

导入数据

data_dir = r'C:\Users\11054\Desktop\kLearning\p4_learning\data'

data_dir = pathlib.Path(data_dir)data_paths = list(data_dir.glob('*'))

classeNames = [str(path).split("\\")[-1] for path in data_paths]

print(classeNames)image_count = len(list(data_dir.glob('*/*')))

print("图片总数为:", image_count)

['Monkeypox', 'Others']

图片总数为: 2142

数据预处理

train_transforms = transforms.Compose([transforms.Resize([224, 224]), # 将输入图片resize成统一尺寸# transforms.RandomHorizontalFlip(), # 随机水平翻转transforms.ToTensor(), # 将PIL Image或numpy.ndarray转换为tensor,并归一化到[0,1]之间transforms.Normalize( # 标准化处理-->转换为标准正太分布(高斯分布),使模型更容易收敛mean=[0.485, 0.456, 0.406],std=[0.229, 0.224, 0.225]) # 其中 mean=[0.485,0.456,0.406]与std=[0.229,0.224,0.225] 从数据集中随机抽样计算得到的。

])test_transform = transforms.Compose([transforms.Resize([224, 224]), # 将输入图片resize成统一尺寸transforms.ToTensor(), # 将PIL Image或numpy.ndarray转换为tensor,并归一化到[0,1]之间transforms.Normalize( # 标准化处理-->转换为标准正太分布(高斯分布),使模型更容易收敛mean=[0.485, 0.456, 0.406],std=[0.229, 0.224, 0.225]) # 其中 mean=[0.485,0.456,0.406]与std=[0.229,0.224,0.225] 从数据集中随机抽样计算得到的。

])total_data = datasets.ImageFolder(data_dir, transform=train_transforms)

print(total_data.class_to_idx){'Monkeypox': 0, 'Others': 1}

划分数据集

train_size = int(0.8 * len(total_data))

test_size = len(total_data) - train_size

train_dataset, test_dataset = torch.utils.data.random_split(total_data, [train_size, test_size])batch_size = 8 #根据自己的显卡,选择合适的batch_size大小

train_dl = torch.utils.data.DataLoader(train_dataset,batch_size=batch_size,shuffle=True,num_workers=0)

test_dl = torch.utils.data.DataLoader(test_dataset,batch_size=batch_size,shuffle=True,num_workers=0)

for X, y in test_dl:print("Shape of X [N, C, H, W]: ", X.shape)print("Shape of y: ", y.shape, y.dtype)breakShape of X [N, C, H, W]: torch.Size([8, 3, 224, 224])

Shape of y: torch.Size([8]) torch.int64

搭建模型

class inception_block(nn.Module):def __init__(self, in_channels, ch1x1, ch3x3red, ch3x3, ch5x5red, ch5x5, pool_proj):super(inception_block, self).__init__()# 1x1 conv branchself.branch1 = nn.Sequential(nn.Conv2d(in_channels, ch1x1, kernel_size=1),nn.BatchNorm2d(ch1x1),nn.ReLU(inplace=True))# 1x1 conv -> 3x3 conv branchself.branch2 = nn.Sequential(nn.Conv2d(in_channels, ch3x3red, kernel_size=1),nn.BatchNorm2d(ch3x3red),nn.ReLU(inplace=True),nn.Conv2d(ch3x3red, ch3x3, kernel_size=3, padding=1),nn.BatchNorm2d(ch3x3),nn.ReLU(inplace=True))# 1x1 conv -> 5x5 conv branchself.branch3 = nn.Sequential(nn.Conv2d(in_channels, ch5x5red, kernel_size=1),nn.BatchNorm2d(ch5x5red),nn.ReLU(inplace=True),nn.Conv2d(ch5x5red, ch5x5, kernel_size=5, padding=2),nn.BatchNorm2d(ch5x5),nn.ReLU(inplace=True))# 3x3 max pooling -> 1x1 conv branchself.branch4 = nn.Sequential(nn.MaxPool2d(kernel_size=3, stride=1, padding=1),nn.Conv2d(in_channels, pool_proj, kernel_size=1),nn.BatchNorm2d(pool_proj),nn.ReLU(inplace=True))def forward(self, x):# Compute forward pass through all branches and concatenate the output feature mapsbranch1_output = self.branch1(x)branch2_output = self.branch2(x)branch3_output = self.branch3(x)branch4_output = self.branch4(x)outputs = [branch1_output, branch2_output, branch3_output, branch4_output]return torch.cat(outputs, 1)class InceptionV1(nn.Module):def __init__(self, num_classes=1000):super(InceptionV1, self).__init__()self.conv1 = nn.Conv2d(3, 64, kernel_size=7, stride=2, padding=3)self.maxpool1 = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)self.conv2 = nn.Conv2d(64, 64, kernel_size=1, stride=1, padding=0)self.conv3 = nn.Conv2d(64, 192, kernel_size=3, stride=1, padding=1)self.maxpool2 = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)self.inception3a = inception_block(192, 64, 96, 128, 16, 32, 32)self.inception3b = inception_block(256, 128, 128, 192, 32, 96, 64)self.maxpool3 = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)self.inception4a = inception_block(480, 192, 96, 208, 16, 48, 64)self.inception4b = inception_block(512, 160, 112, 224, 24, 64, 64)self.inception4c = inception_block(512, 128, 128, 256, 24, 64, 64)self.inception4d = inception_block(512, 112, 144, 288, 32, 64, 64)self.inception4e = inception_block(528, 256, 160, 320, 32, 128, 128)self.maxpool4 = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)self.inception5a = inception_block(832, 256, 160, 320, 32, 128, 128)self.inception5b=nn.Sequential(inception_block(832, 384, 192, 384, 48, 128, 128),nn.AvgPool2d(kernel_size=7,stride=1,padding=0),nn.Dropout(0.4))# 全连接网络层,用于分类self.classifier = nn.Sequential(nn.Linear(in_features=1024, out_features=1024),nn.ReLU(),nn.Linear(in_features=1024, out_features=num_classes),nn.Softmax(dim=1))def forward(self, x):x = self.conv1(x)x = F.relu(x)x = self.maxpool1(x)x = self.conv2(x)x = F.relu(x)x = self.conv3(x)x = F.relu(x)x = self.maxpool2(x)x = self.inception3a(x)x = self.inception3b(x)x = self.maxpool3(x)x = self.inception4a(x)x = self.inception4b(x)x = self.inception4c(x)x = self.inception4d(x)x = self.inception4e(x)x = self.maxpool4(x)x = self.inception5a(x)x = self.inception5b(x)x = torch.flatten(x, start_dim=1)x = self.classifier(x)return x

# 统计模型参数量以及其他指标

import torchsummary# 调用并将模型转移到GPU中

model = InceptionV1(num_classes=2).to(device)# 显示网络结构

torchsummary.summary(model, (3, 224, 224))

print(model)----------------------------------------------------------------Layer (type) Output Shape Param #

================================================================Conv2d-1 [-1, 64, 112, 112] 9,472MaxPool2d-2 [-1, 64, 56, 56] 0Conv2d-3 [-1, 64, 56, 56] 4,160Conv2d-4 [-1, 192, 56, 56] 110,784MaxPool2d-5 [-1, 192, 28, 28] 0Conv2d-6 [-1, 64, 28, 28] 12,352BatchNorm2d-7 [-1, 64, 28, 28] 128ReLU-8 [-1, 64, 28, 28] 0Conv2d-9 [-1, 96, 28, 28] 18,528BatchNorm2d-10 [-1, 96, 28, 28] 192ReLU-11 [-1, 96, 28, 28] 0Conv2d-12 [-1, 128, 28, 28] 110,720BatchNorm2d-13 [-1, 128, 28, 28] 256ReLU-14 [-1, 128, 28, 28] 0Conv2d-15 [-1, 16, 28, 28] 3,088BatchNorm2d-16 [-1, 16, 28, 28] 32ReLU-17 [-1, 16, 28, 28] 0Conv2d-18 [-1, 32, 28, 28] 12,832BatchNorm2d-19 [-1, 32, 28, 28] 64ReLU-20 [-1, 32, 28, 28] 0MaxPool2d-21 [-1, 192, 28, 28] 0Conv2d-22 [-1, 32, 28, 28] 6,176BatchNorm2d-23 [-1, 32, 28, 28] 64ReLU-24 [-1, 32, 28, 28] 0inception_block-25 [-1, 256, 28, 28] 0Conv2d-26 [-1, 128, 28, 28] 32,896BatchNorm2d-27 [-1, 128, 28, 28] 256ReLU-28 [-1, 128, 28, 28] 0Conv2d-29 [-1, 128, 28, 28] 32,896BatchNorm2d-30 [-1, 128, 28, 28] 256ReLU-31 [-1, 128, 28, 28] 0Conv2d-32 [-1, 192, 28, 28] 221,376BatchNorm2d-33 [-1, 192, 28, 28] 384ReLU-34 [-1, 192, 28, 28] 0Conv2d-35 [-1, 32, 28, 28] 8,224BatchNorm2d-36 [-1, 32, 28, 28] 64ReLU-37 [-1, 32, 28, 28] 0Conv2d-38 [-1, 96, 28, 28] 76,896BatchNorm2d-39 [-1, 96, 28, 28] 192ReLU-40 [-1, 96, 28, 28] 0MaxPool2d-41 [-1, 256, 28, 28] 0Conv2d-42 [-1, 64, 28, 28] 16,448BatchNorm2d-43 [-1, 64, 28, 28] 128ReLU-44 [-1, 64, 28, 28] 0inception_block-45 [-1, 480, 28, 28] 0MaxPool2d-46 [-1, 480, 14, 14] 0Conv2d-47 [-1, 192, 14, 14] 92,352BatchNorm2d-48 [-1, 192, 14, 14] 384ReLU-49 [-1, 192, 14, 14] 0Conv2d-50 [-1, 96, 14, 14] 46,176BatchNorm2d-51 [-1, 96, 14, 14] 192ReLU-52 [-1, 96, 14, 14] 0Conv2d-53 [-1, 208, 14, 14] 179,920BatchNorm2d-54 [-1, 208, 14, 14] 416ReLU-55 [-1, 208, 14, 14] 0Conv2d-56 [-1, 16, 14, 14] 7,696BatchNorm2d-57 [-1, 16, 14, 14] 32ReLU-58 [-1, 16, 14, 14] 0Conv2d-59 [-1, 48, 14, 14] 19,248BatchNorm2d-60 [-1, 48, 14, 14] 96ReLU-61 [-1, 48, 14, 14] 0MaxPool2d-62 [-1, 480, 14, 14] 0Conv2d-63 [-1, 64, 14, 14] 30,784BatchNorm2d-64 [-1, 64, 14, 14] 128ReLU-65 [-1, 64, 14, 14] 0inception_block-66 [-1, 512, 14, 14] 0Conv2d-67 [-1, 160, 14, 14] 82,080BatchNorm2d-68 [-1, 160, 14, 14] 320ReLU-69 [-1, 160, 14, 14] 0Conv2d-70 [-1, 112, 14, 14] 57,456BatchNorm2d-71 [-1, 112, 14, 14] 224ReLU-72 [-1, 112, 14, 14] 0Conv2d-73 [-1, 224, 14, 14] 226,016BatchNorm2d-74 [-1, 224, 14, 14] 448ReLU-75 [-1, 224, 14, 14] 0Conv2d-76 [-1, 24, 14, 14] 12,312BatchNorm2d-77 [-1, 24, 14, 14] 48ReLU-78 [-1, 24, 14, 14] 0Conv2d-79 [-1, 64, 14, 14] 38,464BatchNorm2d-80 [-1, 64, 14, 14] 128ReLU-81 [-1, 64, 14, 14] 0MaxPool2d-82 [-1, 512, 14, 14] 0Conv2d-83 [-1, 64, 14, 14] 32,832BatchNorm2d-84 [-1, 64, 14, 14] 128ReLU-85 [-1, 64, 14, 14] 0inception_block-86 [-1, 512, 14, 14] 0Conv2d-87 [-1, 128, 14, 14] 65,664BatchNorm2d-88 [-1, 128, 14, 14] 256ReLU-89 [-1, 128, 14, 14] 0Conv2d-90 [-1, 128, 14, 14] 65,664BatchNorm2d-91 [-1, 128, 14, 14] 256ReLU-92 [-1, 128, 14, 14] 0Conv2d-93 [-1, 256, 14, 14] 295,168BatchNorm2d-94 [-1, 256, 14, 14] 512ReLU-95 [-1, 256, 14, 14] 0Conv2d-96 [-1, 24, 14, 14] 12,312BatchNorm2d-97 [-1, 24, 14, 14] 48ReLU-98 [-1, 24, 14, 14] 0Conv2d-99 [-1, 64, 14, 14] 38,464BatchNorm2d-100 [-1, 64, 14, 14] 128ReLU-101 [-1, 64, 14, 14] 0MaxPool2d-102 [-1, 512, 14, 14] 0Conv2d-103 [-1, 64, 14, 14] 32,832BatchNorm2d-104 [-1, 64, 14, 14] 128ReLU-105 [-1, 64, 14, 14] 0inception_block-106 [-1, 512, 14, 14] 0Conv2d-107 [-1, 112, 14, 14] 57,456BatchNorm2d-108 [-1, 112, 14, 14] 224ReLU-109 [-1, 112, 14, 14] 0Conv2d-110 [-1, 144, 14, 14] 73,872BatchNorm2d-111 [-1, 144, 14, 14] 288ReLU-112 [-1, 144, 14, 14] 0Conv2d-113 [-1, 288, 14, 14] 373,536BatchNorm2d-114 [-1, 288, 14, 14] 576ReLU-115 [-1, 288, 14, 14] 0Conv2d-116 [-1, 32, 14, 14] 16,416BatchNorm2d-117 [-1, 32, 14, 14] 64ReLU-118 [-1, 32, 14, 14] 0Conv2d-119 [-1, 64, 14, 14] 51,264BatchNorm2d-120 [-1, 64, 14, 14] 128ReLU-121 [-1, 64, 14, 14] 0MaxPool2d-122 [-1, 512, 14, 14] 0Conv2d-123 [-1, 64, 14, 14] 32,832BatchNorm2d-124 [-1, 64, 14, 14] 128ReLU-125 [-1, 64, 14, 14] 0inception_block-126 [-1, 528, 14, 14] 0Conv2d-127 [-1, 256, 14, 14] 135,424BatchNorm2d-128 [-1, 256, 14, 14] 512ReLU-129 [-1, 256, 14, 14] 0Conv2d-130 [-1, 160, 14, 14] 84,640BatchNorm2d-131 [-1, 160, 14, 14] 320ReLU-132 [-1, 160, 14, 14] 0Conv2d-133 [-1, 320, 14, 14] 461,120BatchNorm2d-134 [-1, 320, 14, 14] 640ReLU-135 [-1, 320, 14, 14] 0Conv2d-136 [-1, 32, 14, 14] 16,928BatchNorm2d-137 [-1, 32, 14, 14] 64ReLU-138 [-1, 32, 14, 14] 0Conv2d-139 [-1, 128, 14, 14] 102,528BatchNorm2d-140 [-1, 128, 14, 14] 256ReLU-141 [-1, 128, 14, 14] 0MaxPool2d-142 [-1, 528, 14, 14] 0Conv2d-143 [-1, 128, 14, 14] 67,712BatchNorm2d-144 [-1, 128, 14, 14] 256ReLU-145 [-1, 128, 14, 14] 0inception_block-146 [-1, 832, 14, 14] 0MaxPool2d-147 [-1, 832, 7, 7] 0Conv2d-148 [-1, 256, 7, 7] 213,248BatchNorm2d-149 [-1, 256, 7, 7] 512ReLU-150 [-1, 256, 7, 7] 0Conv2d-151 [-1, 160, 7, 7] 133,280BatchNorm2d-152 [-1, 160, 7, 7] 320ReLU-153 [-1, 160, 7, 7] 0Conv2d-154 [-1, 320, 7, 7] 461,120BatchNorm2d-155 [-1, 320, 7, 7] 640ReLU-156 [-1, 320, 7, 7] 0Conv2d-157 [-1, 32, 7, 7] 26,656BatchNorm2d-158 [-1, 32, 7, 7] 64ReLU-159 [-1, 32, 7, 7] 0Conv2d-160 [-1, 128, 7, 7] 102,528BatchNorm2d-161 [-1, 128, 7, 7] 256ReLU-162 [-1, 128, 7, 7] 0MaxPool2d-163 [-1, 832, 7, 7] 0Conv2d-164 [-1, 128, 7, 7] 106,624BatchNorm2d-165 [-1, 128, 7, 7] 256ReLU-166 [-1, 128, 7, 7] 0inception_block-167 [-1, 832, 7, 7] 0Conv2d-168 [-1, 384, 7, 7] 319,872BatchNorm2d-169 [-1, 384, 7, 7] 768ReLU-170 [-1, 384, 7, 7] 0Conv2d-171 [-1, 192, 7, 7] 159,936BatchNorm2d-172 [-1, 192, 7, 7] 384ReLU-173 [-1, 192, 7, 7] 0Conv2d-174 [-1, 384, 7, 7] 663,936BatchNorm2d-175 [-1, 384, 7, 7] 768ReLU-176 [-1, 384, 7, 7] 0Conv2d-177 [-1, 48, 7, 7] 39,984BatchNorm2d-178 [-1, 48, 7, 7] 96ReLU-179 [-1, 48, 7, 7] 0Conv2d-180 [-1, 128, 7, 7] 153,728BatchNorm2d-181 [-1, 128, 7, 7] 256ReLU-182 [-1, 128, 7, 7] 0MaxPool2d-183 [-1, 832, 7, 7] 0Conv2d-184 [-1, 128, 7, 7] 106,624BatchNorm2d-185 [-1, 128, 7, 7] 256ReLU-186 [-1, 128, 7, 7] 0inception_block-187 [-1, 1024, 7, 7] 0AvgPool2d-188 [-1, 1024, 1, 1] 0Dropout-189 [-1, 1024, 1, 1] 0Linear-190 [-1, 1024] 1,049,600ReLU-191 [-1, 1024] 0Linear-192 [-1, 2] 2,050Softmax-193 [-1, 2] 0

================================================================

Total params: 7,039,122

Trainable params: 7,039,122

Non-trainable params: 0

----------------------------------------------------------------

Input size (MB): 0.57

Forward/backward pass size (MB): 69.61

Params size (MB): 26.85

Estimated Total Size (MB): 97.04

----------------------------------------------------------------

InceptionV1((conv1): Conv2d(3, 64, kernel_size=(7, 7), stride=(2, 2), padding=(3, 3))(maxpool1): MaxPool2d(kernel_size=3, stride=2, padding=1, dilation=1, ceil_mode=False)(conv2): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1))(conv3): Conv2d(64, 192, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(maxpool2): MaxPool2d(kernel_size=3, stride=2, padding=1, dilation=1, ceil_mode=False)(inception3a): inception_block((branch1): Sequential((0): Conv2d(192, 64, kernel_size=(1, 1), stride=(1, 1))(1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU(inplace=True))(branch2): Sequential((0): Conv2d(192, 96, kernel_size=(1, 1), stride=(1, 1))(1): BatchNorm2d(96, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU(inplace=True)(3): Conv2d(96, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(4): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(5): ReLU(inplace=True))(branch3): Sequential((0): Conv2d(192, 16, kernel_size=(1, 1), stride=(1, 1))(1): BatchNorm2d(16, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU(inplace=True)(3): Conv2d(16, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))(4): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(5): ReLU(inplace=True))(branch4): Sequential((0): MaxPool2d(kernel_size=3, stride=1, padding=1, dilation=1, ceil_mode=False)(1): Conv2d(192, 32, kernel_size=(1, 1), stride=(1, 1))(2): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(3): ReLU(inplace=True)))(inception3b): inception_block((branch1): Sequential((0): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1))(1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU(inplace=True))(branch2): Sequential((0): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1))(1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU(inplace=True)(3): Conv2d(128, 192, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(4): BatchNorm2d(192, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(5): ReLU(inplace=True))(branch3): Sequential((0): Conv2d(256, 32, kernel_size=(1, 1), stride=(1, 1))(1): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU(inplace=True)(3): Conv2d(32, 96, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))(4): BatchNorm2d(96, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(5): ReLU(inplace=True))(branch4): Sequential((0): MaxPool2d(kernel_size=3, stride=1, padding=1, dilation=1, ceil_mode=False)(1): Conv2d(256, 64, kernel_size=(1, 1), stride=(1, 1))(2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(3): ReLU(inplace=True)))(maxpool3): MaxPool2d(kernel_size=3, stride=2, padding=1, dilation=1, ceil_mode=False)(inception4a): inception_block((branch1): Sequential((0): Conv2d(480, 192, kernel_size=(1, 1), stride=(1, 1))(1): BatchNorm2d(192, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU(inplace=True))(branch2): Sequential((0): Conv2d(480, 96, kernel_size=(1, 1), stride=(1, 1))(1): BatchNorm2d(96, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU(inplace=True)(3): Conv2d(96, 208, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(4): BatchNorm2d(208, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(5): ReLU(inplace=True))(branch3): Sequential((0): Conv2d(480, 16, kernel_size=(1, 1), stride=(1, 1))(1): BatchNorm2d(16, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU(inplace=True)(3): Conv2d(16, 48, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))(4): BatchNorm2d(48, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(5): ReLU(inplace=True))(branch4): Sequential((0): MaxPool2d(kernel_size=3, stride=1, padding=1, dilation=1, ceil_mode=False)(1): Conv2d(480, 64, kernel_size=(1, 1), stride=(1, 1))(2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(3): ReLU(inplace=True)))(inception4b): inception_block((branch1): Sequential((0): Conv2d(512, 160, kernel_size=(1, 1), stride=(1, 1))(1): BatchNorm2d(160, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU(inplace=True))(branch2): Sequential((0): Conv2d(512, 112, kernel_size=(1, 1), stride=(1, 1))(1): BatchNorm2d(112, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU(inplace=True)(3): Conv2d(112, 224, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(4): BatchNorm2d(224, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(5): ReLU(inplace=True))(branch3): Sequential((0): Conv2d(512, 24, kernel_size=(1, 1), stride=(1, 1))(1): BatchNorm2d(24, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU(inplace=True)(3): Conv2d(24, 64, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))(4): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(5): ReLU(inplace=True))(branch4): Sequential((0): MaxPool2d(kernel_size=3, stride=1, padding=1, dilation=1, ceil_mode=False)(1): Conv2d(512, 64, kernel_size=(1, 1), stride=(1, 1))(2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(3): ReLU(inplace=True)))(inception4c): inception_block((branch1): Sequential((0): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1))(1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU(inplace=True))(branch2): Sequential((0): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1))(1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU(inplace=True)(3): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(4): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(5): ReLU(inplace=True))(branch3): Sequential((0): Conv2d(512, 24, kernel_size=(1, 1), stride=(1, 1))(1): BatchNorm2d(24, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU(inplace=True)(3): Conv2d(24, 64, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))(4): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(5): ReLU(inplace=True))(branch4): Sequential((0): MaxPool2d(kernel_size=3, stride=1, padding=1, dilation=1, ceil_mode=False)(1): Conv2d(512, 64, kernel_size=(1, 1), stride=(1, 1))(2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(3): ReLU(inplace=True)))(inception4d): inception_block((branch1): Sequential((0): Conv2d(512, 112, kernel_size=(1, 1), stride=(1, 1))(1): BatchNorm2d(112, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU(inplace=True))(branch2): Sequential((0): Conv2d(512, 144, kernel_size=(1, 1), stride=(1, 1))(1): BatchNorm2d(144, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU(inplace=True)(3): Conv2d(144, 288, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(4): BatchNorm2d(288, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(5): ReLU(inplace=True))(branch3): Sequential((0): Conv2d(512, 32, kernel_size=(1, 1), stride=(1, 1))(1): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU(inplace=True)(3): Conv2d(32, 64, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))(4): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(5): ReLU(inplace=True))(branch4): Sequential((0): MaxPool2d(kernel_size=3, stride=1, padding=1, dilation=1, ceil_mode=False)(1): Conv2d(512, 64, kernel_size=(1, 1), stride=(1, 1))(2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(3): ReLU(inplace=True)))(inception4e): inception_block((branch1): Sequential((0): Conv2d(528, 256, kernel_size=(1, 1), stride=(1, 1))(1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU(inplace=True))(branch2): Sequential((0): Conv2d(528, 160, kernel_size=(1, 1), stride=(1, 1))(1): BatchNorm2d(160, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU(inplace=True)(3): Conv2d(160, 320, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(4): BatchNorm2d(320, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(5): ReLU(inplace=True))(branch3): Sequential((0): Conv2d(528, 32, kernel_size=(1, 1), stride=(1, 1))(1): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU(inplace=True)(3): Conv2d(32, 128, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))(4): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(5): ReLU(inplace=True))(branch4): Sequential((0): MaxPool2d(kernel_size=3, stride=1, padding=1, dilation=1, ceil_mode=False)(1): Conv2d(528, 128, kernel_size=(1, 1), stride=(1, 1))(2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(3): ReLU(inplace=True)))(maxpool4): MaxPool2d(kernel_size=3, stride=2, padding=1, dilation=1, ceil_mode=False)(inception5a): inception_block((branch1): Sequential((0): Conv2d(832, 256, kernel_size=(1, 1), stride=(1, 1))(1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU(inplace=True))(branch2): Sequential((0): Conv2d(832, 160, kernel_size=(1, 1), stride=(1, 1))(1): BatchNorm2d(160, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU(inplace=True)(3): Conv2d(160, 320, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(4): BatchNorm2d(320, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(5): ReLU(inplace=True))(branch3): Sequential((0): Conv2d(832, 32, kernel_size=(1, 1), stride=(1, 1))(1): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU(inplace=True)(3): Conv2d(32, 128, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))(4): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(5): ReLU(inplace=True))(branch4): Sequential((0): MaxPool2d(kernel_size=3, stride=1, padding=1, dilation=1, ceil_mode=False)(1): Conv2d(832, 128, kernel_size=(1, 1), stride=(1, 1))(2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(3): ReLU(inplace=True)))(inception5b): Sequential((0): inception_block((branch1): Sequential((0): Conv2d(832, 384, kernel_size=(1, 1), stride=(1, 1))(1): BatchNorm2d(384, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU(inplace=True))(branch2): Sequential((0): Conv2d(832, 192, kernel_size=(1, 1), stride=(1, 1))(1): BatchNorm2d(192, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU(inplace=True)(3): Conv2d(192, 384, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(4): BatchNorm2d(384, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(5): ReLU(inplace=True))(branch3): Sequential((0): Conv2d(832, 48, kernel_size=(1, 1), stride=(1, 1))(1): BatchNorm2d(48, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU(inplace=True)(3): Conv2d(48, 128, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))(4): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(5): ReLU(inplace=True))(branch4): Sequential((0): MaxPool2d(kernel_size=3, stride=1, padding=1, dilation=1, ceil_mode=False)(1): Conv2d(832, 128, kernel_size=(1, 1), stride=(1, 1))(2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(3): ReLU(inplace=True)))(1): AvgPool2d(kernel_size=7, stride=1, padding=0)(2): Dropout(p=0.4, inplace=False))(classifier): Sequential((0): Linear(in_features=1024, out_features=1024, bias=True)(1): ReLU()(2): Linear(in_features=1024, out_features=2, bias=True)(3): Softmax(dim=1))

)

训练模型

def train(dataloader, model, loss_fn, optimizer):size = len(dataloader.dataset) # 训练集的大小num_batches = len(dataloader) # 批次数目, (size/batch_size,向上取整)train_loss, train_acc = 0, 0 # 初始化训练损失和正确率for X, y in dataloader: # 获取图片及其标签X, y = X.to(device), y.to(device)# 计算预测误差pred = model(X) # 网络输出loss = loss_fn(pred, y) # 计算网络输出和真实值之间的差距,targets为真实值,计算二者差值即为损失# 反向传播optimizer.zero_grad() # grad属性归零loss.backward() # 反向传播optimizer.step() # 每一步自动更新# 记录acc与losstrain_acc += (pred.argmax(1) == y).type(torch.float).sum().item()train_loss += loss.item()train_acc /= sizetrain_loss /= num_batchesreturn train_acc, train_loss

def test(dataloader, model, loss_fn):size = len(dataloader.dataset) # 测试集的大小num_batches = len(dataloader) # 批次数目test_loss, test_acc = 0, 0# 当不进行训练时,停止梯度更新,节省计算内存消耗with torch.no_grad():for imgs, target in dataloader:imgs, target = imgs.to(device), target.to(device)# 计算losstarget_pred = model(imgs)loss = loss_fn(target_pred, target)test_loss += loss.item()test_acc += (target_pred.argmax(1) == target).type(torch.float).sum().item()test_acc /= sizetest_loss /= num_batchesreturn test_acc, test_loss

正式训练

import copyoptimizer = torch.optim.Adam(model.parameters(), lr=1e-4)

loss_fn = nn.CrossEntropyLoss() # 创建损失函数epochs = 50train_loss = []

train_acc = []

test_loss = []

test_acc = []best_acc = 0 # 设置一个最佳准确率,作为最佳模型的判别指标for epoch in range(epochs):# 更新学习率(使用自定义学习率时使用)# adjust_learning_rate(optimizer, epoch, learn_rate)model.train()epoch_train_acc, epoch_train_loss = train(train_dl, model, loss_fn, optimizer)# scheduler.step() # 更新学习率(调用官方动态学习率接口时使用)model.eval()epoch_test_acc, epoch_test_loss = test(test_dl, model, loss_fn)# 保存最佳模型到 best_modelif epoch_test_acc > best_acc:best_acc = epoch_test_accbest_model = copy.deepcopy(model)train_acc.append(epoch_train_acc)train_loss.append(epoch_train_loss)test_acc.append(epoch_test_acc)test_loss.append(epoch_test_loss)# 获取当前的学习率lr = optimizer.state_dict()['param_groups'][0]['lr']template = ('Epoch:{:2d}, Train_acc:{:.1f}%, Train_loss:{:.3f}, Test_acc:{:.1f}%, Test_loss:{:.3f}, Lr:{:.2E}')print(template.format(epoch + 1, epoch_train_acc * 100, epoch_train_loss,epoch_test_acc * 100, epoch_test_loss, lr))# 保存最佳模型到文件中

PATH = r'C:/Users/11054/Desktop/kLearning/J8_learning/best_model.pth' # 保存的参数文件名

torch.save(model.state_dict(), PATH)print('Done')

Epoch: 1, Train_acc:62.8%, Train_loss:0.650, Test_acc:66.9%, Test_loss:0.622, Lr:1.00E-04

Epoch: 2, Train_acc:65.6%, Train_loss:0.635, Test_acc:66.2%, Test_loss:0.612, Lr:1.00E-04

Epoch: 3, Train_acc:67.7%, Train_loss:0.612, Test_acc:68.5%, Test_loss:0.621, Lr:1.00E-04

Epoch: 4, Train_acc:71.7%, Train_loss:0.582, Test_acc:73.0%, Test_loss:0.576, Lr:1.00E-04

Epoch: 5, Train_acc:72.1%, Train_loss:0.575, Test_acc:74.4%, Test_loss:0.562, Lr:1.00E-04

Epoch: 6, Train_acc:74.2%, Train_loss:0.556, Test_acc:75.1%, Test_loss:0.548, Lr:1.00E-04

Epoch: 7, Train_acc:75.8%, Train_loss:0.549, Test_acc:78.1%, Test_loss:0.517, Lr:1.00E-04

Epoch: 8, Train_acc:76.9%, Train_loss:0.531, Test_acc:79.5%, Test_loss:0.510, Lr:1.00E-04

Epoch: 9, Train_acc:81.2%, Train_loss:0.498, Test_acc:83.7%, Test_loss:0.478, Lr:1.00E-04

Epoch:10, Train_acc:81.1%, Train_loss:0.497, Test_acc:82.3%, Test_loss:0.486, Lr:1.00E-04

Epoch:11, Train_acc:81.7%, Train_loss:0.490, Test_acc:83.0%, Test_loss:0.476, Lr:1.00E-04

Epoch:12, Train_acc:83.9%, Train_loss:0.472, Test_acc:85.5%, Test_loss:0.454, Lr:1.00E-04

Epoch:13, Train_acc:83.7%, Train_loss:0.474, Test_acc:83.9%, Test_loss:0.467, Lr:1.00E-04

Epoch:14, Train_acc:84.4%, Train_loss:0.462, Test_acc:86.0%, Test_loss:0.444, Lr:1.00E-04

Epoch:15, Train_acc:86.2%, Train_loss:0.446, Test_acc:80.7%, Test_loss:0.490, Lr:1.00E-04

Epoch:16, Train_acc:85.9%, Train_loss:0.449, Test_acc:86.0%, Test_loss:0.445, Lr:1.00E-04

Epoch:17, Train_acc:86.6%, Train_loss:0.444, Test_acc:80.9%, Test_loss:0.501, Lr:1.00E-04

Epoch:18, Train_acc:86.6%, Train_loss:0.446, Test_acc:83.4%, Test_loss:0.468, Lr:1.00E-04

Epoch:19, Train_acc:89.1%, Train_loss:0.417, Test_acc:85.8%, Test_loss:0.453, Lr:1.00E-04

Epoch:20, Train_acc:88.2%, Train_loss:0.425, Test_acc:90.4%, Test_loss:0.404, Lr:1.00E-04

Epoch:21, Train_acc:90.4%, Train_loss:0.407, Test_acc:87.9%, Test_loss:0.428, Lr:1.00E-04

Epoch:22, Train_acc:90.3%, Train_loss:0.411, Test_acc:89.0%, Test_loss:0.422, Lr:1.00E-04

Epoch:23, Train_acc:89.5%, Train_loss:0.415, Test_acc:85.3%, Test_loss:0.449, Lr:1.00E-04

Epoch:24, Train_acc:89.8%, Train_loss:0.412, Test_acc:89.0%, Test_loss:0.416, Lr:1.00E-04

Epoch:25, Train_acc:88.5%, Train_loss:0.428, Test_acc:90.2%, Test_loss:0.411, Lr:1.00E-04

Epoch:26, Train_acc:90.4%, Train_loss:0.406, Test_acc:89.5%, Test_loss:0.413, Lr:1.00E-04

Epoch:27, Train_acc:91.9%, Train_loss:0.395, Test_acc:89.3%, Test_loss:0.418, Lr:1.00E-04

Epoch:28, Train_acc:92.9%, Train_loss:0.381, Test_acc:91.6%, Test_loss:0.388, Lr:1.00E-04

Epoch:29, Train_acc:92.9%, Train_loss:0.383, Test_acc:90.0%, Test_loss:0.409, Lr:1.00E-04

Epoch:30, Train_acc:91.5%, Train_loss:0.397, Test_acc:89.0%, Test_loss:0.420, Lr:1.00E-04

Epoch:31, Train_acc:91.9%, Train_loss:0.392, Test_acc:91.6%, Test_loss:0.396, Lr:1.00E-04

Epoch:32, Train_acc:89.2%, Train_loss:0.421, Test_acc:89.7%, Test_loss:0.411, Lr:1.00E-04

Epoch:33, Train_acc:92.3%, Train_loss:0.392, Test_acc:90.0%, Test_loss:0.409, Lr:1.00E-04

Epoch:34, Train_acc:92.2%, Train_loss:0.386, Test_acc:92.3%, Test_loss:0.387, Lr:1.00E-04

Epoch:35, Train_acc:92.2%, Train_loss:0.393, Test_acc:92.5%, Test_loss:0.387, Lr:1.00E-04

Epoch:36, Train_acc:95.0%, Train_loss:0.362, Test_acc:91.8%, Test_loss:0.395, Lr:1.00E-04

Epoch:37, Train_acc:93.3%, Train_loss:0.383, Test_acc:90.7%, Test_loss:0.409, Lr:1.00E-04

Epoch:38, Train_acc:93.8%, Train_loss:0.378, Test_acc:91.6%, Test_loss:0.399, Lr:1.00E-04

Epoch:39, Train_acc:93.3%, Train_loss:0.384, Test_acc:91.4%, Test_loss:0.392, Lr:1.00E-04

Epoch:40, Train_acc:94.5%, Train_loss:0.371, Test_acc:90.4%, Test_loss:0.405, Lr:1.00E-04

Epoch:41, Train_acc:95.6%, Train_loss:0.360, Test_acc:91.8%, Test_loss:0.397, Lr:1.00E-04

Epoch:42, Train_acc:91.2%, Train_loss:0.401, Test_acc:85.1%, Test_loss:0.450, Lr:1.00E-04

Epoch:43, Train_acc:92.2%, Train_loss:0.391, Test_acc:88.3%, Test_loss:0.425, Lr:1.00E-04

Epoch:44, Train_acc:93.9%, Train_loss:0.375, Test_acc:89.5%, Test_loss:0.413, Lr:1.00E-04

Epoch:45, Train_acc:95.4%, Train_loss:0.359, Test_acc:93.2%, Test_loss:0.381, Lr:1.00E-04

Epoch:46, Train_acc:93.5%, Train_loss:0.381, Test_acc:91.6%, Test_loss:0.395, Lr:1.00E-04

Epoch:47, Train_acc:95.7%, Train_loss:0.354, Test_acc:92.8%, Test_loss:0.382, Lr:1.00E-04

Epoch:48, Train_acc:95.9%, Train_loss:0.356, Test_acc:93.7%, Test_loss:0.373, Lr:1.00E-04

Epoch:49, Train_acc:95.9%, Train_loss:0.354, Test_acc:94.4%, Test_loss:0.367, Lr:1.00E-04

Epoch:50, Train_acc:95.1%, Train_loss:0.362, Test_acc:92.3%, Test_loss:0.391, Lr:1.00E-04

Done

结果可视化

import matplotlib.pyplot as plt

# 隐藏警告

import warningswarnings.filterwarnings("ignore") # 忽略警告信息

plt.rcParams['font.sans-serif'] = ['SimHei'] # 用来正常显示中文标签

plt.rcParams['axes.unicode_minus'] = False # 用来正常显示负号

plt.rcParams['figure.dpi'] = 100 # 分辨率epochs_range = range(epochs)plt.figure(figsize=(12, 3))

plt.subplot(1, 2, 1)plt.plot(epochs_range, train_acc, label='Training Accuracy')

plt.plot(epochs_range, test_acc, label='Test Accuracy')

plt.legend(loc='lower right')

plt.title('Training and Validation Accuracy')plt.subplot(1, 2, 2)

plt.plot(epochs_range, train_loss, label='Training Loss')

plt.plot(epochs_range, test_loss, label='Test Loss')

plt.legend(loc='upper right')

plt.title('Training and Validation Loss')

plt.show()from PIL import Imageclasses = list(total_data.class_to_idx)

print(classes)

print(total_data.class_to_idx)def predict_one_image(image_path, model, transform, classes):test_img = Image.open(image_path).convert('RGB')plt.imshow(test_img) # 展示预测的图片test_img = transform(test_img)img = test_img.to(device).unsqueeze(0)model.eval()output = model(img)_, pred = torch.max(output, 1)pred_class = classes[pred]print(f'预测结果是:{pred_class}')# 预测训练集中的某张照片

predict_one_image(image_path=r'C:\Users\11054\Desktop\kLearning\p4_learning\data\Monkeypox\M01_01_02.jpg',model=model,transform=train_transforms,classes=classes)

['Monkeypox', 'Others']

{'Monkeypox': 0, 'Others': 1}

预测结果是:Monkeypox

详细网络结构图

个人总结

- 主要特点和创新点(Inception模块)

- 其设计理念是,在同一层网络中使用多种不同尺寸的卷积核(如1x1, 3x3, 5x5等)和池化层,然后将它们的输出拼接在一起。这种设计允许网络在同一空间维度上捕获多尺度特征,从而提高了网络的表达能力。

- 1x1卷积核的使用不仅减少了计算量,还起到了降维的作用,帮助减少模型的参数数量和计算复杂度。

- 辅助分类器:

Inception v1在网络的中间层添加了两个辅助分类器。这些分类器通过添加额外的损失函数来帮助训练时的梯度传播,防止梯度消失问题,特别是在深层网络中。在测试时,这些辅助分类器的输出会被忽略。 - 参数效率:

- 通过使用1x1卷积核和特殊的模块设计,Inception v1在保持高性能的同时,有效地减少了模型的参数数量,这使得网络更加高效,能够更好地推广到更大的数据集上。

一个典型的Inception模块包括以下几个部分:

1x1卷积层:用于降维和减少计算量。

3x3卷积层:用于捕获局部细节特征。

5x5卷积层:用于捕获更大范围的特征。

3x3最大池化层:用于捕获空间信息。

拼接层:将所有上述卷积层和池化层的输出在通道维度上拼接在一起。

相关文章:

J8学习打卡笔记

🍨 本文为🔗365天深度学习训练营 中的学习记录博客🍖 原作者:K同学啊 Inception v1算法实战与解析 导入数据数据预处理划分数据集搭建模型训练模型正式训练结果可视化详细网络结构图个人总结 import os, PIL, random, pathlib imp…...

)

前端学习-操作元素内容(二十二)

目录 前言 目标 对象.innerText 属性 对象.innerHTML属性 案例 年会抽奖 需求 方法一 方法二 总结 前言 曾经沧海难为水,除却巫山不是云。 目标 能够修改元素的文本更换内容 DOM对象都是根据标签生成的,所以操作标签,本质上就是操作DOM对象,…...

【踩坑】pip离线+在线在虚拟环境中安装指定版本cudnn攻略

pip离线在线在虚拟环境中安装指定版本cudnn攻略 在线安装离线安装Windows环境:Linux环境: 清华源官方帮助文档 https://mirrors.tuna.tsinghua.edu.cn/help/pypi/ 标题的离线的意思是先下载whl文件再安装到虚拟环境,在线的意思是直接在当前虚…...

golang操作sqlite3加速本地结构化数据查询

目录 摘要Sqlite3SQLite 命令SQLite 语法SQLite 数据类型列亲和类型——优先选择机制 SQLite 创建数据库SQLite 附加数据库SQLite 分离数据库 SQLite 创建表SQLite 删除表 SQLite Insert 语句SQLite Select 语句SQLite 运算符SQLite 算术运算符SQLite 比较运算符SQLite 逻辑运算…...

与流式响应)

vllm加速(以Qwen2.5-7B-instruction为例)与流式响应

1. vllm介绍 什么是vllm? vLLM 是一个高性能的大型语言模型推理引擎,采用创新的内存管理和执行架构,显著提升了大模型推理的速度和效率。它支持高度并发的请求处理,能够同时服务数千名用户,并且兼容多种深度学习框架,…...

WordPress弹窗公告插件-ts小陈

使用效果 使用后网站所有页面弹出窗口 插件特色功能 设置弹窗公告样式:这款插件可展示弹窗样式公告,用户点击完之后不再弹出,不会频繁打扰用户。可设置弹窗中间的logo图:这款插件针对公告图片进行独立设置,你可以在设…...

【ELK】容器化部署Elasticsearch1.14.3集群【亲测可用】

提示:文章写完后,目录可以自动生成,如何生成可参考右边的帮助文档 文章目录 1. 部署1.1 单节点1.2 新节点加入集群1.3 docker-compose部署集群 1. 部署 按照官网流程进行部署 使用 Docker 安装 Elasticsearch |Elasticsearch 指南 [8.14] |…...

[SAP ABAP] ALV状态栏GUI STATUS的快速创建

使用事务码SE38进入到指定程序,点击"显示对象列表"按钮 鼠标右键,选择"GUI状态" 弹出【创建状态】窗口,填写状态以及短文本描述以后,点击按钮 点击"调整模板",复制已有程序的状态栏 填…...

【Linux】NET9运行时移植到低版本GLIBC的Linux纯内核板卡上

背景介绍 自制了一块Linux板卡(基于全志T113i) 厂家给的SDK和根文件系统能够提供的GLIBC的版本比较低 V2.25/GCC 7.3.1 这个版本是无法运行dotnet以及dotnet生成的AOT应用的 我用另一块同Cortex-A7的板子运行dotnet的报错 版本不够,运行不了 而我的板子是根本就识…...

)

深入浅出支持向量机(SVM)

1. 引言 支持向量机(SVM, Support Vector Machine)是一种常见的监督学习算法,广泛应用于分类、回归和异常检测等任务。自1990年代初期由Vapnik等人提出以来,SVM已成为机器学习领域的核心方法之一,尤其在模式识别、文本…...

Vue脚手架相关记录

脚手架 安装与配置 安装node node -> 16.20.2 切换淘宝镜像 npm install -g cnpm -registryhttp://registry.npm.taobao.orgnpm config set registry http://registry.npm.taobao.org/使用了第二个,下一步才有用 安装vue npm install -g vue/clivscode中不给运行vue解…...

基于Docker的Minio分布式集群实践

目录 1. 说明 2. 配置表 3. 步骤 3.1 放行服务端口 3.2 docker-compose 编排 4. 入口反向代理与负载均衡配置 4.1 api入口 4.2 管理入口 5. 用例 6. 参考 1. 说明 以多节点的Docker容器方式实现minio存储集群,并配以nginx反向代理及负载均衡作为访问入口。…...

Scala 的迭代器

迭代器定义:迭代器不是一种集合,它是一种用于访问集合的方法。 迭代器需要通过集合对应的迭代器调用迭代器的方法来访问。 支持函数式编程风格,便于链式操作。 创建一个迭代器,相关代码如下: object Test {def mai…...

vue实现文件流形式的导出下载

文章目录 Vue 项目中下载返回的文件流操作步骤一、使用 Axios 请求文件流数据二、设置响应类型为 ‘blob’三、创建下载链接并触发下载四、在 Vue 组件中集成下载功能五、解释与实例说明1、使用 Axios 请求文件流数据:设置响应类型为 blob:创建下载链接并…...

【DIY飞控板PX4移植】深入理解NuttX下PX4串口配置:ttyS设备编号与USARTUART对应关系解析

深入理解NuttX下PX4串口配置:ttyS设备编号与USART&UART对应关系解析 引言问题描述原因分析结论 引言 在嵌入式系统开发中,串口(USART/UART)的配置是一个常见但关键的任务。对于使用 NuttX 作为底层操作系统的飞控系统&#x…...

【报错解决】vsvars32.bat 不是内部或外部命令,也不是可运行的程序或批处理文件

报错信息: 背景问题:Boost提示 “cl” 不是内部或外部命令,也不是可运行的程序或批处理文件时, 按照这篇博客的方法【传送】添加了环境变量后,仍然报错: 报错原因: vsvars32.bat 的路径不正…...

CTFshow-文件上传(Web151-170)

CTFshow-文件上传(Web151-170) 参考了CTF show 文件上传篇(web151-170,看这一篇就够啦)-CSDN博客 Web151 要求png,然后上传带有一句话木马的a.png,burp抓包后改后缀为a.php,然后蚁剑连接,找fl…...

深度学习基础--将yolov5的backbone模块用于目标识别会出现怎么效果呢??

🍨 本文为🔗365天深度学习训练营 中的学习记录博客🍖 原作者:K同学啊 前言 yolov5网络结构比较复杂,上次我们简要介绍了yolov5网络模块,并且复现了C3模块,深度学习基础–yolov5网络结构简介&a…...

操作系统(16)I/O软件

前言 操作系统I/O软件是负责管理和控制计算机系统与外围设备(例如键盘、鼠标、打印机、存储设备等)之间交互的软件。 一、I/O软件的定义与功能 定义:I/O软件,也称为输入/输出软件,是计算机系统中用于管理和控制设备与主…...

leetcode437.路径总和III

标签:前缀和 问题:给定一个二叉树的根节点 root ,和一个整数 targetSum ,求该二叉树里节点值之和等于 targetSum 的 路径 的数目。路径 不需要从根节点开始,也不需要在叶子节点结束,但是路径方向必须是向下…...

)

椭圆曲线密码学(ECC)

一、ECC算法概述 椭圆曲线密码学(Elliptic Curve Cryptography)是基于椭圆曲线数学理论的公钥密码系统,由Neal Koblitz和Victor Miller在1985年独立提出。相比RSA,ECC在相同安全强度下密钥更短(256位ECC ≈ 3072位RSA…...

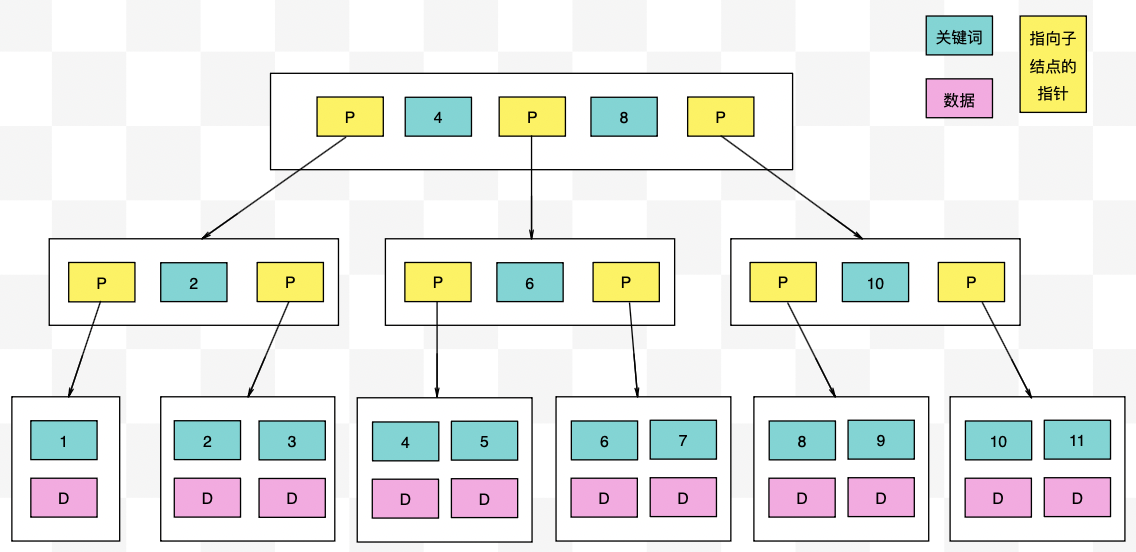

【力扣数据库知识手册笔记】索引

索引 索引的优缺点 优点1. 通过创建唯一性索引,可以保证数据库表中每一行数据的唯一性。2. 可以加快数据的检索速度(创建索引的主要原因)。3. 可以加速表和表之间的连接,实现数据的参考完整性。4. 可以在查询过程中,…...

FastAPI 教程:从入门到实践

FastAPI 是一个现代、快速(高性能)的 Web 框架,用于构建 API,支持 Python 3.6。它基于标准 Python 类型提示,易于学习且功能强大。以下是一个完整的 FastAPI 入门教程,涵盖从环境搭建到创建并运行一个简单的…...

页面渲染流程与性能优化

页面渲染流程与性能优化详解(完整版) 一、现代浏览器渲染流程(详细说明) 1. 构建DOM树 浏览器接收到HTML文档后,会逐步解析并构建DOM(Document Object Model)树。具体过程如下: (…...

什么是EULA和DPA

文章目录 EULA(End User License Agreement)DPA(Data Protection Agreement)一、定义与背景二、核心内容三、法律效力与责任四、实际应用与意义 EULA(End User License Agreement) 定义: EULA即…...

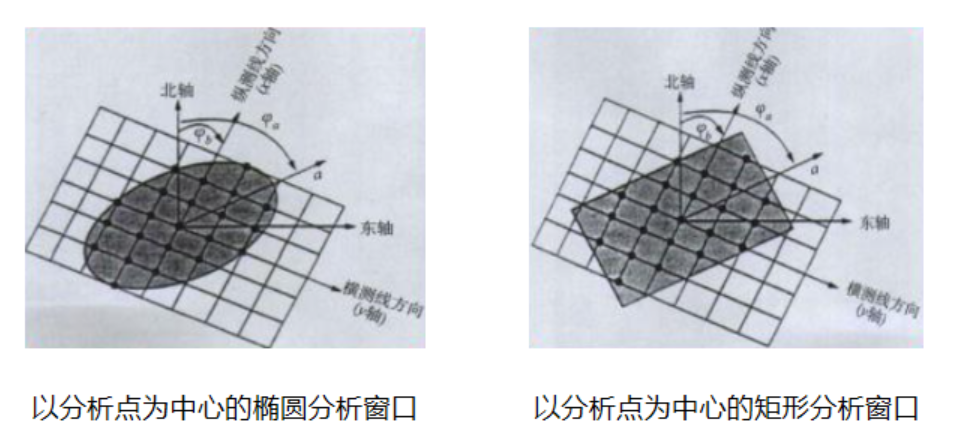

论文笔记——相干体技术在裂缝预测中的应用研究

目录 相关地震知识补充地震数据的认识地震几何属性 相干体算法定义基本原理第一代相干体技术:基于互相关的相干体技术(Correlation)第二代相干体技术:基于相似的相干体技术(Semblance)基于多道相似的相干体…...

在QWebEngineView上实现鼠标、触摸等事件捕获的解决方案

这个问题我看其他博主也写了,要么要会员、要么写的乱七八糟。这里我整理一下,把问题说清楚并且给出代码,拿去用就行,照着葫芦画瓢。 问题 在继承QWebEngineView后,重写mousePressEvent或event函数无法捕获鼠标按下事…...

Java求职者面试指南:计算机基础与源码原理深度解析

Java求职者面试指南:计算机基础与源码原理深度解析 第一轮提问:基础概念问题 1. 请解释什么是进程和线程的区别? 面试官:进程是程序的一次执行过程,是系统进行资源分配和调度的基本单位;而线程是进程中的…...

DingDing机器人群消息推送

文章目录 1 新建机器人2 API文档说明3 代码编写 1 新建机器人 点击群设置 下滑到群管理的机器人,点击进入 添加机器人 选择自定义Webhook服务 点击添加 设置安全设置,详见说明文档 成功后,记录Webhook 2 API文档说明 点击设置说明 查看自…...

[免费]微信小程序问卷调查系统(SpringBoot后端+Vue管理端)【论文+源码+SQL脚本】

大家好,我是java1234_小锋老师,看到一个不错的微信小程序问卷调查系统(SpringBoot后端Vue管理端)【论文源码SQL脚本】,分享下哈。 项目视频演示 【免费】微信小程序问卷调查系统(SpringBoot后端Vue管理端) Java毕业设计_哔哩哔哩_bilibili 项…...