Deep Learning in Practice: Super-Resolution in TensorFlow with ESPCN

For the principles behind ESPCN, please refer to the previous post on the paper; this post focuses on the implementation.
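As a quick refresher on the idea the code relies on: ESPCN's last layer outputs `ratio²` channels at low resolution, and a periodic shuffle (sub-pixel rearrangement) turns them into an image `ratio` times larger. The `shuffle` and `my_anti_shuffle` functions in prepare_data.py do this with explicit loops. The following is a minimal vectorized NumPy sketch of the same rearrangement, assuming the channel ordering used there (channel `c*r*r + x*r + y` holds row offset `x` and column offset `y` of colour channel `c`). The names `pixel_shuffle` and `pixel_unshuffle` are illustrative and not part of the original code.

```python
import numpy as np


def pixel_shuffle(x, r):
    """(H, W, C*r*r) -> (H*r, W*r, C), same ordering as `shuffle` in prepare_data.py."""
    h, w, cr2 = x.shape
    c = cr2 // (r * r)
    # split the channel axis into (colour channel, row offset, column offset)
    x = x.reshape(h, w, c, r, r)
    # interleave the offsets with the spatial axes: (h, row_off, w, col_off, c)
    x = x.transpose(0, 3, 1, 4, 2)
    return x.reshape(h * r, w * r, c)


def pixel_unshuffle(x, r):
    """(H*r, W*r, C) -> (H, W, C*r*r), same ordering as `my_anti_shuffle`."""
    hr, wr, c = x.shape
    h, w = hr // r, wr // r
    # split each spatial axis into (block index, offset inside the block)
    x = x.reshape(h, r, w, r, c)
    # move both offsets behind the colour channel: (h, w, c, row_off, col_off)
    x = x.transpose(0, 2, 4, 1, 3)
    return x.reshape(h, w, c * r * r)


if __name__ == "__main__":
    r = 3
    hr = np.arange(6 * 9 * 3, dtype=np.uint8).reshape(6, 9, 3)
    lr = pixel_unshuffle(hr, r)
    print(lr.shape)  # (2, 3, 27)
    assert np.array_equal(pixel_shuffle(lr, r), hr)
```

Because reshape and transpose only move data, shuffling after unshuffling returns the original array exactly; the per-pixel loops in prepare_data.py give the same result but are much slower on large images.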

Dataset: the images only need to be of equal size.
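Every script below reads its settings from `./params.json`, which the post does not show. The keys in this sketch are the ones the code actually reads; all values, including the directory names, are hypothetical placeholders. A `{}` in a path is filled with `ratio` via `str.format`. The helper also checks two constraints the network code relies on: `filters_size` must have one more entry than `channels` (the same test as `check_params`), and since every convolution uses VALID padding with stride 1, each `k × k` layer trims `k - 1` pixels, so `edge` should equal the sum of `k - 1` over all filter sizes (otherwise the network output and the label placeholder have different shapes).

```python
import json

# Hypothetical example values; adjust them to your dataset.
PARAMS = {
    "ratio": 3,
    "lr_size": 17,             # LR patch size
    "lr_stride": 14,           # LR patch stride
    "edge": 8,                 # pixels trimmed by the VALID convolutions
    "filters_size": [5, 3, 3],
    "channels": [64, 32],
    "training_num": 80,        # number of source images per split
    "validation_num": 10,
    "test_num": 10,
    "image_dir": "./images",   # folder with the source JPEGs
    # a trailing '/' is safe for both string concatenation and os.path.join
    "training_image_dir": "./images_{}x/training/",
    "validation_image_dir": "./images_{}x/validation/",
    "test_image_dir": "./images_{}x/test/",
    "training_dir": "./data_{}x/training",
    "validation_dir": "./data_{}x/validation",
    "test_dir": "./data_{}x/test",
}


def check(params):
    """Sanity checks implied by the network code (a sketch, not exhaustive)."""
    assert len(params["filters_size"]) == len(params["channels"]) + 1
    trimmed = sum(k - 1 for k in params["filters_size"])
    assert params["edge"] == trimmed, "edge must match the VALID-conv trimming"
    assert params["lr_size"] > params["edge"]
    return True


if __name__ == "__main__":
    check(PARAMS)
    with open("params.json", "w") as f:
        json.dump(PARAMS, f, indent=2)
```

With these values a 17×17 LR patch shrinks to 17 - 8 = 9 pixels per side, and the HR label covers the matching 27×27 region.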

- Code that generates training samples from the dataset

- prepare_data.py

```python
import imageio
from scipy import ndimage
import numpy as np
import shutil
import os
import json

# BT.601 RGB -> YCbCr matrix (RGB scaled to [0, 1])
mat = np.array([[ 65.481, 128.553,  24.966],
                [-37.797, -74.203, 112.0  ],
                [ 112.0,  -93.786, -18.214]])
mat_inv = np.linalg.inv(mat)
offset = np.array([16, 128, 128])


def rgb2ycbcr(rgb_img):
    # apply the matrix to every pixel at once
    rgb = rgb_img[:, :, :3].astype(np.float64) / 255.0
    ycbcr = np.round(rgb @ mat.T + offset)
    return ycbcr.astype(np.uint8)


def ycbcr2rgb(ycbcr_img):
    ycbcr = ycbcr_img.astype(np.float64) - offset
    rgb = np.round((ycbcr @ mat_inv.T) * 255.0)
    return np.clip(rgb, 0, 255).astype(np.uint8)


def my_anti_shuffle(input_image, ratio):
    # (H*r, W*r, C) -> (H, W, C*r*r): inverse of the sub-pixel shuffle
    ori_height, ori_width, ori_channels = (int(s) for s in input_image.shape)
    if ori_height % ratio != 0 or ori_width % ratio != 0:
        raise ValueError("Height and width must be divisible by ratio!")
    height = ori_height // ratio
    width = ori_width // ratio
    channels = ori_channels * ratio * ratio
    anti_shuffle = np.zeros((height, width, channels), dtype=np.uint8)
    for c in range(0, ori_channels):
        for x in range(0, ratio):
            for y in range(0, ratio):
                anti_shuffle[:, :, c * ratio * ratio + x * ratio + y] = input_image[x::ratio, y::ratio, c]
    return anti_shuffle


def shuffle(input_image, ratio):
    # (H, W, C*r*r) -> (H*r, W*r, C): the sub-pixel shuffle
    height = int(input_image.shape[0]) * ratio
    width = int(input_image.shape[1]) * ratio
    channels = int(input_image.shape[2]) // ratio // ratio
    shuffled = np.zeros((height, width, channels), dtype=np.uint8)
    for i in range(0, height):
        for j in range(0, width):
            for k in range(0, channels):
                shuffled[i, j, k] = input_image[i // ratio, j // ratio,
                                                k * ratio * ratio + (i % ratio) * ratio + (j % ratio)]
    return shuffled


def prepare_images(params):
    ratio, training_num, lr_stride, lr_size = (params['ratio'], params['training_num'],
                                               params['lr_stride'], params['lr_size'])
    hr_stride = lr_stride * ratio
    hr_size = lr_size * ratio

    # first clear old images and create new directories
    for ele in ['training', 'validation', 'test']:
        new_dir = params[ele + '_image_dir'].format(ratio)
        if os.path.isdir(new_dir):
            shutil.rmtree(new_dir)
        for sub_dir in ['hr', 'lr']:
            os.makedirs(os.path.join(new_dir, sub_dir))

    image_num = 0
    folder = params['training_image_dir'].format(ratio)
    for root, dirnames, filenames in os.walk(params['image_dir']):
        for filename in filenames:
            path = os.path.join(root, filename)
            # select JPEG files by extension (imghdr was removed in Python 3.13)
            if os.path.splitext(filename)[1].lower() not in ('.jpg', '.jpeg'):
                continue
            hr_image = imageio.imread(path)
            if hr_image.ndim != 3:  # skip grayscale images
                continue
            # crop so that height and width are multiples of ratio
            height = hr_image.shape[0] - hr_image.shape[0] % ratio
            width = hr_image.shape[1] - hr_image.shape[1] % ratio
            hr_image = hr_image[0:height, 0:width]
            # blur, then downsample by ratio to get the LR image
            blurred = ndimage.gaussian_filter(hr_image, sigma=(1, 1, 0))
            lr_image = blurred[::ratio, ::ratio, :]
            # number of full-size patches that fit along each axis
            vertical_number = (height - hr_size) // hr_stride + 1
            horizontal_number = (width - hr_size) // hr_stride + 1

            image_num += 1
            if image_num % 10 == 0:
                print("Finished image: {}".format(image_num))
            if training_num < image_num <= training_num + params['validation_num']:
                folder = params['validation_image_dir'].format(ratio)
            elif image_num > training_num + params['validation_num']:
                folder = params['test_image_dir'].format(ratio)

            stem = os.path.splitext(filename)[0]
            for x in range(horizontal_number):
                for y in range(vertical_number):
                    hr_sub_image = hr_image[y * hr_stride: y * hr_stride + hr_size,
                                            x * hr_stride: x * hr_stride + hr_size]
                    lr_sub_image = lr_image[y * lr_stride: y * lr_stride + lr_size,
                                            x * lr_stride: x * lr_stride + lr_size]
                    name = "{}_{}_{}.png".format(stem, y, x)
                    imageio.imwrite(os.path.join(folder, 'hr', name), hr_sub_image)
                    imageio.imwrite(os.path.join(folder, 'lr', name), lr_sub_image)

            if image_num >= training_num + params['validation_num'] + params['test_num']:
                break
        else:
            continue
        break


def prepare_data(params):
    ratio, lr_size, edge = params['ratio'], params['lr_size'], params['edge']
    params['hr_stride'] = params['lr_stride'] * ratio
    params['hr_size'] = params['lr_size'] * ratio
    for ele in ['training', 'validation', 'test']:
        new_dir = params[ele + '_dir'].format(ratio)
        if os.path.isdir(new_dir):
            shutil.rmtree(new_dir)
        os.makedirs(new_dir)

    splits = ['training', 'validation', 'test']
    image_dirs = [params[ele + '_image_dir'].format(ratio) for ele in splits]
    data_dirs = [params[ele + '_dir'].format(ratio) for ele in splits]
    # the network trims `edge` LR pixels, so keep only the matching centre of the HR patch
    hr_start_idx = ratio * edge // 2
    sub_hr_size = (lr_size - edge) * ratio
    hr_end_idx = hr_start_idx + sub_hr_size

    for image_dir, data_dir in zip(image_dirs, data_dirs):
        print("Creating {}".format(data_dir))
        for root, dirnames, filenames in os.walk(os.path.join(image_dir, 'lr')):
            for filename in filenames:
                lr_image = imageio.imread(os.path.join(root, filename))
                hr_image = imageio.imread(os.path.join(image_dir, 'hr', filename))
                # convert to YCbCr color space
                lr_image_y = rgb2ycbcr(lr_image)
                hr_image_y = rgb2ycbcr(hr_image)
                lr_data = lr_image_y.reshape(lr_size * lr_size * 3)
                sub_hr_image_y = hr_image_y[hr_start_idx:hr_end_idx, hr_start_idx:hr_end_idx]
                hr_data = my_anti_shuffle(sub_hr_image_y, ratio).reshape(sub_hr_size * sub_hr_size * 3)
                # one record = flattened LR patch followed by the anti-shuffled HR label
                data = np.concatenate([lr_data, hr_data])
                data.astype('uint8').tofile(os.path.join(data_dir, os.path.splitext(filename)[0]))


def remove_images(params):
    # Don't need old image folders
    for ele in ['training', 'validation', 'test']:
        rm_dir = params[ele + '_image_dir'].format(params['ratio'])
        if os.path.isdir(rm_dir):
            shutil.rmtree(rm_dir)


if __name__ == '__main__':
    with open("./params.json", 'r') as f:
        params = json.load(f)

    print("Preparing images with scaling ratio: {}".format(params['ratio']))
    print("If you want a different ratio change 'ratio' in params.json")
    print("Splitting images (1/3)")
    prepare_images(params)

    print("Preparing data, this may take a while (2/3)")
    prepare_data(params)

    print("Cleaning up split images (3/3)")
    remove_images(params)

    print("Done, you can now train the model!")
```

- generate.py

```python
import argparse
import json

import imageio
import numpy as np
import tensorflow as tf  # TensorFlow 1.x API (tf.Session, tf.placeholder)
from PIL import Image

from prepare_data import rgb2ycbcr, ycbcr2rgb, shuffle
from psnr import psnr
from espcn import ESPCN


def get_arguments():
    parser = argparse.ArgumentParser(description='EspcnNet generation script')
    parser.add_argument('--checkpoint', type=str,
                        help='Which model checkpoint to generate from',
                        default="logdir_2x/train")
    parser.add_argument('--lr_image', type=str,
                        help='The low-resolution image to be processed.',
                        default="images/butterfly_GT.jpg")
    parser.add_argument('--hr_image', type=str,
                        help='The high-resolution image which is used to calculate PSNR.')
    parser.add_argument('--out_path', type=str,
                        help='The output path for the super-resolution image',
                        default="result/butterfly_HR")
    return parser.parse_args()


def check_params(args, params):
    if len(params['filters_size']) - len(params['channels']) != 1:
        print("The length of 'filters_size' must be greater than the length of 'channels' by 1.")
        return False
    return True


def generate():
    args = get_arguments()

    with open("./params.json", 'r') as f:
        params = json.load(f)

    if not check_params(args, params):
        return

    ratio = params['ratio']
    sess = tf.Session()

    net = ESPCN(filters_size=params['filters_size'],
                channels=params['channels'],
                ratio=ratio,
                batch_size=1,
                lr_size=params['lr_size'],
                edge=params['edge'])

    loss, images, labels = net.build_model()

    # only the Y channel goes through the network
    lr_image = tf.placeholder(tf.uint8)
    lr_image_data = imageio.imread(args.lr_image)
    lr_image_ycbcr_data = rgb2ycbcr(lr_image_data)
    lr_image_y_data = lr_image_ycbcr_data[:, :, 0:1]
    lr_image_batch = lr_image_y_data[np.newaxis].astype(np.uint8)

    sr_image = net.generate(lr_image)

    saver = tf.train.Saver()
    try:
        model_loaded = net.load(sess, saver, args.checkpoint)
    except Exception:
        raise Exception("Failed to load model, does the ratio in params.json match the ratio you trained your checkpoint with?")

    if model_loaded:
        print("[*] Checkpoint load success!")
    else:
        print("[*] Checkpoint load failed/no checkpoint found")
        return

    sr_image_y_data = sess.run(sr_image, feed_dict={lr_image: lr_image_batch})
    sr_image_y_data = shuffle(sr_image_y_data[0], ratio)

    # upscale Cb/Cr with bicubic interpolation; PIL sizes are (width, height)
    lr_h, lr_w = lr_image_data.shape[0:2]
    sr_image_ycbcr_data = np.array(Image.fromarray(lr_image_ycbcr_data).resize(
        (lr_w * ratio, lr_h * ratio), Image.BICUBIC))

    # the network output is `edge` LR pixels smaller, so crop the bicubic Cb/Cr to match
    edge = params['edge'] * ratio // 2
    sr_image_ycbcr_data = np.concatenate(
        (sr_image_y_data, sr_image_ycbcr_data[edge:-edge, edge:-edge, 1:3]), axis=2)
    sr_image_data = ycbcr2rgb(sr_image_ycbcr_data)

    imageio.imwrite(args.out_path + '.png', sr_image_data)

    if args.hr_image is not None:
        hr_image_data = imageio.imread(args.hr_image)
        model_psnr = psnr(hr_image_data, sr_image_data, edge)
        print('PSNR of the model: {:.2f}dB'.format(model_psnr))

        # bicubic baseline for comparison
        sr_image_bicubic_data = np.array(Image.fromarray(lr_image_data).resize(
            (lr_w * ratio, lr_h * ratio), Image.BICUBIC))
        imageio.imwrite(args.out_path + '_bicubic.png', sr_image_bicubic_data)
        bicubic_psnr = psnr(hr_image_data, sr_image_bicubic_data, 0)
        print('PSNR of Bicubic: {:.2f}dB'.format(bicubic_psnr))


if __name__ == '__main__':
    generate()
```

- train.py

```python
from __future__ import print_function

import argparse
import json
import os
import time

import tensorflow as tf  # TensorFlow 1.x API

from reader import create_inputs
from espcn import ESPCN

try:
    xrange
except NameError:
    xrange = range

# batch size
BATCH_SIZE = 32
# epochs
NUM_EPOCHS = 100
# learning rate
LEARNING_RATE = 0.0001
# logdir
LOGDIR_ROOT = './logdir_{}x'


def get_arguments():
    parser = argparse.ArgumentParser(description='EspcnNet example network')
    # checkpoint (weights) to resume from
    parser.add_argument('--checkpoint', type=str,
                        help='Which model checkpoint to load from', default=None)
    # batch_size
    parser.add_argument('--batch_size', type=int, default=BATCH_SIZE,
                        help='How many image files to process at once.')
    # epochs
    parser.add_argument('--epochs', type=int, default=NUM_EPOCHS,
                        help='Number of epochs.')
    # learning rate
    parser.add_argument('--learning_rate', type=float, default=LEARNING_RATE,
                        help='Learning rate for training.')
    # logdir_root
    parser.add_argument('--logdir_root', type=str, default=LOGDIR_ROOT,
                        help='Root directory to place the logging '
                        'output and generated model. These are stored '
                        'under the train subdirectory of --logdir_root.')
    # return the parsed arguments
    return parser.parse_args()


def check_params(args, params):
    if len(params['filters_size']) - len(params['channels']) != 1:
        print("The length of 'filters_size' must be greater than the length of 'channels' by 1.")
        return False
    return True


def train():
    args = get_arguments()

    # load json
    with open("./params.json", 'r') as f:
        params = json.load(f)

    # validate params
    if not check_params(args, params):
        return

    logdir_root = args.logdir_root  # ./logdir
    if logdir_root == LOGDIR_ROOT:
        logdir_root = logdir_root.format(params['ratio'])  # ./logdir_{RATIO}x
    logdir = os.path.join(logdir_root, 'train')  # ./logdir_{RATIO}x/train

    # Load training data as np arrays
    lr_images, hr_labels = create_inputs(params)
    batch_idxs = len(lr_images) // args.batch_size
    if batch_idxs == 0:
        raise ValueError("Not enough training samples for a single batch")

    # network model
    net = ESPCN(filters_size=params['filters_size'],
                channels=params['channels'],
                ratio=params['ratio'],
                batch_size=args.batch_size,
                lr_size=params['lr_size'],
                edge=params['edge'])

    loss, images, labels = net.build_model()
    optimizer = tf.train.AdamOptimizer(learning_rate=args.learning_rate)
    trainable = tf.trainable_variables()
    optim = optimizer.minimize(loss, var_list=trainable)

    # set up logging for tensorboard
    writer = tf.summary.FileWriter(logdir)
    writer.add_graph(tf.get_default_graph())
    summaries = tf.summary.merge_all()

    # set up session
    sess = tf.Session()

    # saver for storing/restoring checkpoints of the model
    saver = tf.train.Saver()

    # initialize all variables
    sess.run(tf.global_variables_initializer())

    # resume from --checkpoint if given, otherwise from logdir
    if net.load(sess, saver, args.checkpoint or logdir):
        print("[*] Checkpoint load success!")
    else:
        print("[*] Checkpoint load failed/no checkpoint found")

    # average loss over the first and the last 20% of epochs
    start_average, end_average = 0.0, 0.0
    start_count, end_count = 0, 0
    try:
        steps = 0
        start_time = time.time()
        for ep in xrange(1, args.epochs + 1):
            batch_average = 0
            for idx in xrange(0, batch_idxs):
                # On the fly batch generation instead of Queue to optimize GPU usage
                batch_images = lr_images[idx * args.batch_size: (idx + 1) * args.batch_size]
                batch_labels = hr_labels[idx * args.batch_size: (idx + 1) * args.batch_size]

                steps += 1
                summary, loss_value, _ = sess.run([summaries, loss, optim],
                                                  feed_dict={images: batch_images, labels: batch_labels})
                writer.add_summary(summary, steps)
                batch_average += loss_value

            # Compare loss of first 20% and last 20%
            batch_average = float(batch_average) / batch_idxs
            if ep <= args.epochs * 0.2:
                start_average += batch_average
                start_count += 1
            elif ep > args.epochs * 0.8:
                end_average += batch_average
                end_count += 1

            duration = time.time() - start_time
            print('Epoch: {}, step: {:d}, loss: {:.9f}, ({:.3f} sec/epoch)'.format(ep, steps, batch_average, duration))
            start_time = time.time()
            net.save(sess, saver, logdir, steps)
    except KeyboardInterrupt:
        print()
    finally:
        if start_count and end_count:
            start_average /= start_count
            end_average /= end_count
            print("Start Average: [%.6f], End Average: [%.6f], Improved: [%.2f%%]"
                  % (start_average, end_average, 100 - (100 * end_average / start_average)))


if __name__ == '__main__':
    train()
```

- Model implementation, TensorFlow version (espcn.py)

```python
import os
import sys

import tensorflow as tf  # TensorFlow 1.x API (tf.contrib is not available in TF 2.x)


def create_variable(name, shape):
    '''Create a convolution filter variable with the specified name and shape,
    and initialize it using Xavier initialization.'''
    initializer = tf.contrib.layers.xavier_initializer_conv2d()
    variable = tf.Variable(initializer(shape=shape), name=name)
    return variable


def create_bias_variable(name, shape):
    '''Create a bias variable with the specified name and shape and initialize
    it to zero.'''
    initializer = tf.constant_initializer(value=0.0, dtype=tf.float32)
    # pass name as a keyword: the second positional argument of tf.Variable is `trainable`
    return tf.Variable(initializer(shape=shape), name=name)


class ESPCN:
    def __init__(self, filters_size, channels, ratio, batch_size, lr_size, edge):
        self.filters_size = filters_size
        self.channels = channels
        self.ratio = ratio
        self.batch_size = batch_size
        self.lr_size = lr_size
        self.edge = edge
        self.variables = self.create_variables()

    def create_variables(self):
        var = dict()
        var['filters'] = list()
        # the input layer (1 input channel: Y)
        var['filters'].append(create_variable('filter',
            [self.filters_size[0], self.filters_size[0], 1, self.channels[0]]))
        # the hidden layers
        for idx in range(1, len(self.filters_size) - 1):
            var['filters'].append(create_variable('filter',
                [self.filters_size[idx], self.filters_size[idx], self.channels[idx - 1], self.channels[idx]]))
        # the output layer (ratio^2 channels, rearranged into the HR image later)
        var['filters'].append(create_variable('filter',
            [self.filters_size[-1], self.filters_size[-1], self.channels[-1], self.ratio ** 2]))

        var['biases'] = list()
        for channel in self.channels:
            var['biases'].append(create_bias_variable('bias', [channel]))
        # bias shapes must be integers
        var['biases'].append(create_bias_variable('bias', [self.ratio ** 2]))

        image_shape = (self.batch_size, self.lr_size, self.lr_size, 3)
        var['images'] = tf.placeholder(tf.uint8, shape=image_shape, name='images')
        label_shape = (self.batch_size, self.lr_size - self.edge, self.lr_size - self.edge, 3 * self.ratio ** 2)
        var['labels'] = tf.placeholder(tf.uint8, shape=label_shape, name='labels')
        return var

    def build_model(self):
        images, labels = self.variables['images'], self.variables['labels']
        input_images, input_labels = self.preprocess([images, labels])
        output = self.create_network(input_images)
        reduced_loss = self.loss(output, input_labels)
        return reduced_loss, images, labels

    def save(self, sess, saver, logdir, step):
        sys.stdout.flush()
        if not os.path.exists(logdir):
            os.makedirs(logdir)
        checkpoint = os.path.join(logdir, "model.ckpt")
        saver.save(sess, checkpoint, global_step=step)

    def load(self, sess, saver, logdir):
        print("[*] Reading checkpoints...")
        ckpt = tf.train.get_checkpoint_state(logdir)
        if ckpt and ckpt.model_checkpoint_path:
            ckpt_name = os.path.basename(ckpt.model_checkpoint_path)
            saver.restore(sess, os.path.join(logdir, ckpt_name))
            return True
        return False

    def preprocess(self, input_data):
        # cast to float32 and normalize the data to [0, 1]
        input_list = list()
        for ele in input_data:
            if ele is None:
                continue
            input_list.append(tf.cast(ele, tf.float32) / 255.0)

        # keep only the Y channel of the input
        input_images, input_labels = input_list[0][:, :, :, 0:1], None
        # Generate doesn't use input_labels
        ratio_square = self.ratio * self.ratio
        if input_data[1] is not None:
            # the first ratio^2 channels of the anti-shuffled label hold the Y channel
            input_labels = input_list[1][:, :, :, 0:ratio_square]
        return input_images, input_labels

    def create_network(self, input_images):
        '''The default structure of the network is:

        input (1 channel, Y) ---> 5 * 5 conv (64 channels) ---> 3 * 3 conv (32 channels) ---> 3 * 3 conv (r^2 channels)

        Every conv uses VALID padding; all but the last layer are followed by tanh.
        '''
        current_layer = input_images
        for idx in range(len(self.filters_size)):
            conv = tf.nn.conv2d(current_layer, self.variables['filters'][idx], [1, 1, 1, 1], padding='VALID')
            with_bias = tf.nn.bias_add(conv, self.variables['biases'][idx])
            if idx == len(self.filters_size) - 1:
                current_layer = with_bias
            else:
                current_layer = tf.nn.tanh(with_bias)
        return current_layer

    def loss(self, output, input_labels):
        residual = output - input_labels
        reduced_loss = tf.reduce_mean(tf.square(residual))
        tf.summary.scalar('loss', reduced_loss)
        return reduced_loss

    def generate(self, lr_image):
        lr_image = self.preprocess([lr_image, None])[0]
        sr_image = self.create_network(lr_image)
        # back to [0, 255], clipped before casting to uint8
        sr_image = sr_image * 255.0
        sr_image = tf.cast(sr_image, tf.int32)
        sr_image = tf.maximum(sr_image, 0)
        sr_image = tf.minimum(sr_image, 255)
        sr_image = tf.cast(sr_image, tf.uint8)
        return sr_image
```

- Reading the prepared files (reader.py)

```python
import os

import numpy as np


def create_inputs(params):
    """Loads prepared training files and appends them as np arrays to a list.
    This approach is better because a FIFOQueue with a reader can't utilize
    the GPU while this approach can."""
    lr_images, hr_labels = [], []
    training_dir = params['training_dir'].format(params['ratio'])

    # Raise exception if user has not run prepare_data.py yet
    if not os.path.isdir(training_dir):
        raise Exception("You must first run prepare_data.py before you can train")

    lr_shape = (params['lr_size'], params['lr_size'], 3)
    hr_shape = (params['lr_size'] - params['edge'], params['lr_size'] - params['edge'], 3 * params['ratio'] ** 2)
    # each record is the flattened LR patch followed by the flattened HR label
    lr_len = params['lr_size'] * params['lr_size'] * 3
    for file in os.listdir(training_dir):
        train_data = np.fromfile(os.path.join(training_dir, file), dtype=np.uint8)
        lr_images.append(train_data[:lr_len].reshape(lr_shape))
        hr_labels.append(train_data[lr_len:].reshape(hr_shape))
    return lr_images, hr_labels
```
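Each file written by `prepare_data` is a flat `uint8` record: the LR patch (`lr_size × lr_size × 3` bytes, YCbCr) followed by the anti-shuffled HR label (`(lr_size - edge)² × 3·ratio²` bytes). The sketch below works through that arithmetic and splits one record; the helper names `record_sizes` and `split_record` are hypothetical. With the example values `lr_size = 17`, `edge = 8`, `ratio = 3`, a record is 867 + 2187 = 3054 bytes. Computing the split point from `lr_size` rather than a fixed 17 keeps the reader consistent with whatever params.json specifies.

```python
import numpy as np


def record_sizes(lr_size, edge, ratio):
    """Byte counts of the LR part and the HR-label part of one record."""
    lr_bytes = lr_size * lr_size * 3
    out = lr_size - edge                      # spatial size after the VALID convs
    hr_bytes = out * out * 3 * ratio ** 2     # anti-shuffled label, all 3 channels
    return lr_bytes, hr_bytes


def split_record(data, lr_size, edge, ratio):
    """Split one flat uint8 record into (LR patch, HR label)."""
    lr_bytes, hr_bytes = record_sizes(lr_size, edge, ratio)
    if data.size != lr_bytes + hr_bytes:
        raise ValueError("unexpected record size: {}".format(data.size))
    out = lr_size - edge
    lr = data[:lr_bytes].reshape(lr_size, lr_size, 3)
    hr = data[lr_bytes:].reshape(out, out, 3 * ratio ** 2)
    return lr, hr


if __name__ == "__main__":
    print(record_sizes(17, 8, 3))  # (867, 2187)
    record = np.zeros(sum(record_sizes(17, 8, 3)), dtype=np.uint8)
    lr, hr = split_record(record, 17, 8, 3)
    print(lr.shape, hr.shape)  # (17, 17, 3) (9, 9, 27)
```

Checking the record size before reshaping turns a mismatch between params.json and the prepared data into a clear error instead of a confusing reshape failure.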

- PSNR calculation (psnr.py)

```python
import math

import numpy as np


def psnr(hr_image, sr_image, hr_edge):
    # assume 8-bit RGB images; crop hr_edge pixels from each side of the HR image
    hr_edge = int(hr_edge)
    hr_image_data = np.array(hr_image).astype('float32')
    if hr_edge > 0:
        hr_image_data = hr_image_data[hr_edge:-hr_edge, hr_edge:-hr_edge]
    sr_image_data = np.array(sr_image).astype('float32')

    diff = (sr_image_data - hr_image_data).flatten('C')
    rmse = math.sqrt(np.mean(diff ** 2.))
    if rmse == 0:
        return float('inf')
    return 20 * math.log10(255.0 / rmse)
```

Note: training reports a bug ("bias is not unsupportd"), but the network still learns.
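`psnr` computes PSNR = 20·log10(255 / RMSE) for 8-bit images. As a quick sanity check of that formula (not part of the original code): if every pixel of the output is off by exactly 10 gray levels, RMSE = 10 and PSNR = 20·log10(25.5) ≈ 28.13 dB. The standalone function below follows the same formula so it can be run without the rest of the project; `psnr_db` is a hypothetical name.

```python
import math

import numpy as np


def psnr_db(reference, test, max_val=255.0):
    """PSNR in dB between two equally sized 8-bit images."""
    diff = reference.astype(np.float64) - test.astype(np.float64)
    rmse = math.sqrt(np.mean(diff ** 2))
    return float("inf") if rmse == 0 else 20 * math.log10(max_val / rmse)


hr = np.full((4, 4, 3), 100, dtype=np.uint8)
sr = np.full((4, 4, 3), 110, dtype=np.uint8)
print(round(psnr_db(hr, sr), 2))  # 28.13
```

Casting to float before subtracting matters: subtracting two `uint8` arrays directly would wrap around instead of producing negative differences.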

相关文章:

深度学习实战之超分辨率算法(tensorflow)——ESPCN

espcn原理算法请参考上一篇论文,这里主要给实现。 数据集如下:尺寸相等即可 针对数据集,生成样本代码preeate_data.py import imageio from scipy import misc, ndimage import numpy as np import imghdr import shutil import os import…...

Android unitTest 单元测试用例编写(初始)

文章目录 了解测试相关库导入依赖库新建测试文件示例执行查看结果网页结果其他 本片讲解的重点是unitTest,而不是androidTest哦 了解测试相关库 androidx.compose.ui:ui-test-junit4: 用于Compose UI的JUnit 4测试库。 它提供了测试Compose UI组件的工具和API。 and…...

(初识类))

C++简明教程(10)(初识类)

类的教程 C 类的完整教程 C 中,类(class)是面向对象编程的核心概念,用于定义对象的属性(数据成员)和行为(成员函数)。本教程将带你从零开始,循序渐进地学习如何定义和使…...

光谱相机的工作原理

光谱相机的工作原理主要基于不同物质对不同波长光的吸收、反射和透射特性存在差异,以下是其具体工作过程: 一、光的收集 目标物体在光源照射下,其表面会对光产生吸收、反射和透射等相互作用。光谱相机的光学系统(如透镜、反射镜…...

【Linux进程】基于管道实现进程池

目录 前言 1. 进程池 1.1 基本结构: 1.2. 池化技术 1.3. 思路分析 1.4. 代码实现 总结 前言 上篇文章介绍了管道及其使用,本文在管道的基础上,通过匿名管道来实现一个进程池; 1. 进程池 父进程创建一组子进程,子进…...

软件测试之单元测试

🍅 点击文末小卡片,免费获取软件测试全套资料,资料在手,涨薪更快 一、何为单测 测试有黑盒测试和白盒测试之分,黑盒测试顾名思义就是我们不了解盒子的内部结构,我们通过文档或者对该功能的理解,…...

vscode+编程AI配置、使用说明

文章目录 [toc]1、概述2、github copilot2.1 配置2.2 使用文档2.3 使用说明 3、文心快码(Baidu Comate)3.1 配置3.2 使用文档3.3 使用说明 4、豆包(MarsCode)4.1 配置4.2 使用文档4.3 使用说明 5、通义灵码(TONGYI Lin…...

007-spring-bean的相关配置(重要)

spring-bean的相关配置...

【唐叔学算法】第19天:交换排序-冒泡排序与快速排序的深度解析及Java实现

引言 排序算法是计算机科学中的基础问题,而交换排序作为其中一类经典的排序方法,因其简单直观的思想和易于实现的特点,在初学者中广受欢迎。交换排序的核心思想是通过不断交换相邻元素来达到排序的目的。本文将深入探讨两种典型的交换排序算…...

合并 Python 中的字典

合并 Python 中的字典 如何在 Python 中合并字典? 这取决于你对“合并”一词的具体定义。 在 Python 中使用 | 操作符合并字典 首先,让我们讨论合并字典的最简单方法,这通常已经足够满足你的需求。 以下是两个字典: >>…...

使用Python实现自动化文档生成工具:提升文档编写效率的利器

友友们好! 我的新专栏《Python进阶》正式启动啦!这是一个专为那些渴望提升Python技能的朋友们量身打造的专栏,无论你是已经有一定基础的开发者,还是希望深入挖掘Python潜力的爱好者,这里都将是你不可错过的宝藏。 在这个专栏中,你将会找到: ● 深入解析:每一篇文章都将…...

uniapp使用live-pusher实现模拟人脸识别效果

需求: 1、前端实现模拟用户人脸识别,识别成功后抓取视频流或认证的一张静态图给服务端。 2、服务端调用第三方活体认证接口,验证前端传递的人脸是否存在,把认证结果反馈给前端。 3、前端根据服务端返回的状态,显示在…...

【JavaSE】【网络原理】初识网络

目录 一、网络互联二、局域网与广域网三、网络通信基础3.1 IP地址3.2 端口号3.3 网络协议3.4 五元组 四、协议分层4.1 OSI七层网络模型4.2 TCP/IP五层(四层)网络模型4.3 网络设备 五、网络数据通信基本流程。5.1 封装和分用5.2 简述过程 一、网络互联 网络互联: 网…...

鸿蒙之路的坑

1、系统 Windows 10 家庭版不可用模拟器 对应的解决方案【坑】 升级系统版本 直接更改密钥可自动升级系统 密钥找对应系统的(例:windows 10专业版) 升级完之后要激活 坑1、升级完后事先创建好的模拟器还是无法启动 解决:删除模拟…...

Python生日祝福烟花

1. 实现效果 2. 素材加载 2个图片和3个音频 shoot_image pygame.image.load(shoot(已去底).jpg) # 加载拼接的发射图像 flower_image pygame.image.load(flower.jpg) # 加载拼接的烟花图 烟花不好去底 # 调整图像的像素为原图的1/2 因为图像相对于界面来说有些大 shoo…...

)

Ubuntu环境 nginx.conf详解(二)

1、nginx.conf 结构详解: http 块:用于配置 HTTP 服务器的相关设置,包括处理 HTTP 和 HTTPS。 stream 块:用于配置 TCP/UDP 代理服务器,适用于需要进行四层负载均衡的情况。 ... # 全局块 events {...} …...

shardingsphere分库分表项目实践4-sql解析sql改写

为什么要sql解析重写? 如果我们的系统数据库实现了分表,那么我们的sql中表名需要根据参数动态确定,那么代码怎么写? 方案1: 自己手动拼接, 比如 update t_user_${suffix} , ${suffix} 作为一个变量传递…...

)

mysql数据库中,一棵3层的B+树,假如数据节点大小是1k,那这棵B+可以存多少条记录(2100万的由来)

在MySQL中,3层的B树可以存储的数据量取决于多个因素,包括页大小、每行数据的大小以及索引项的大小。以下是一个详细的计算过程: 一、假设条件 页大小:在InnoDB存储引擎中,B树的每个节点(页)大…...

Git 操作全解:从基础命令到高级操作的实用指南

文章目录 1.基本命令1.初始化仓库2.克隆远程仓库3.查看当前仓库状态4.查看提交日志5.添加文件到暂存区6.提交更改7.查看仓库的配置信息 2.分支操作1.查看所有分支2.创建新分支3.切换名称4.创建并切换到新分支5.删除分支6.查看当前分支 3.合并分支1.合并分支2.解决合并冲突 4.远…...

华院计算参与项目再次被《新闻联播》报道

12月17日,央视《新闻联播》播出我国推进乡村振兴取得积极进展。其中,华院计算参与的江西省防止返贫监测帮扶大数据系统被报道,该系统实现了由原来的“人找人”向“数据找人”的转变,有效提升监测帮扶及时性和有效性,守…...

RestClient

什么是RestClient RestClient 是 Elasticsearch 官方提供的 Java 低级 REST 客户端,它允许HTTP与Elasticsearch 集群通信,而无需处理 JSON 序列化/反序列化等底层细节。它是 Elasticsearch Java API 客户端的基础。 RestClient 主要特点 轻量级ÿ…...

Ubuntu系统下交叉编译openssl

一、参考资料 OpenSSL&&libcurl库的交叉编译 - hesetone - 博客园 二、准备工作 1. 编译环境 宿主机:Ubuntu 20.04.6 LTSHost:ARM32位交叉编译器:arm-linux-gnueabihf-gcc-11.1.0 2. 设置交叉编译工具链 在交叉编译之前&#x…...

K8S认证|CKS题库+答案| 11. AppArmor

目录 11. AppArmor 免费获取并激活 CKA_v1.31_模拟系统 题目 开始操作: 1)、切换集群 2)、切换节点 3)、切换到 apparmor 的目录 4)、执行 apparmor 策略模块 5)、修改 pod 文件 6)、…...

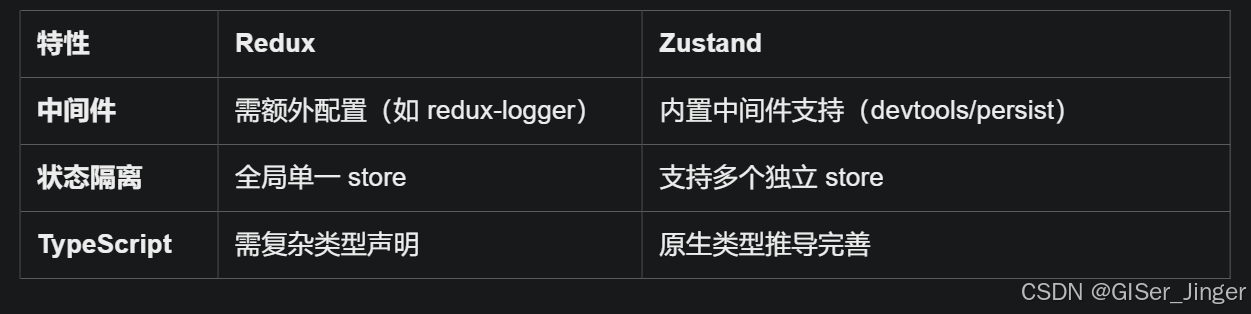

Zustand 状态管理库:极简而强大的解决方案

Zustand 是一个轻量级、快速和可扩展的状态管理库,特别适合 React 应用。它以简洁的 API 和高效的性能解决了 Redux 等状态管理方案中的繁琐问题。 核心优势对比 基本使用指南 1. 创建 Store // store.js import create from zustandconst useStore create((set)…...

FFmpeg 低延迟同屏方案

引言 在实时互动需求激增的当下,无论是在线教育中的师生同屏演示、远程办公的屏幕共享协作,还是游戏直播的画面实时传输,低延迟同屏已成为保障用户体验的核心指标。FFmpeg 作为一款功能强大的多媒体框架,凭借其灵活的编解码、数据…...

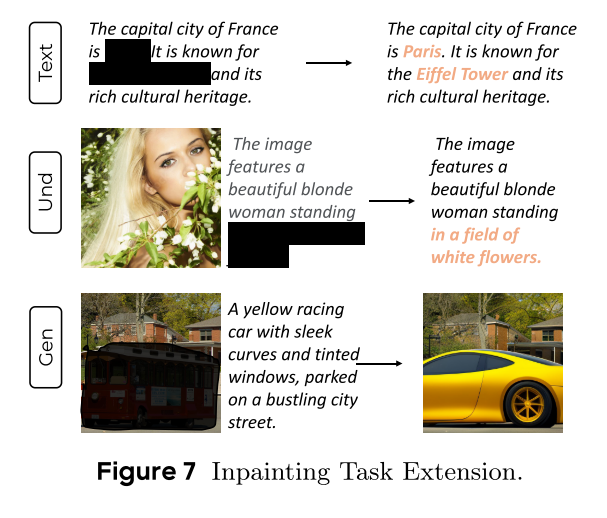

MMaDA: Multimodal Large Diffusion Language Models

CODE : https://github.com/Gen-Verse/MMaDA Abstract 我们介绍了一种新型的多模态扩散基础模型MMaDA,它被设计用于在文本推理、多模态理解和文本到图像生成等不同领域实现卓越的性能。该方法的特点是三个关键创新:(i) MMaDA采用统一的扩散架构…...

cf2117E

原题链接:https://codeforces.com/contest/2117/problem/E 题目背景: 给定两个数组a,b,可以执行多次以下操作:选择 i (1 < i < n - 1),并设置 或,也可以在执行上述操作前执行一次删除任意 和 。求…...

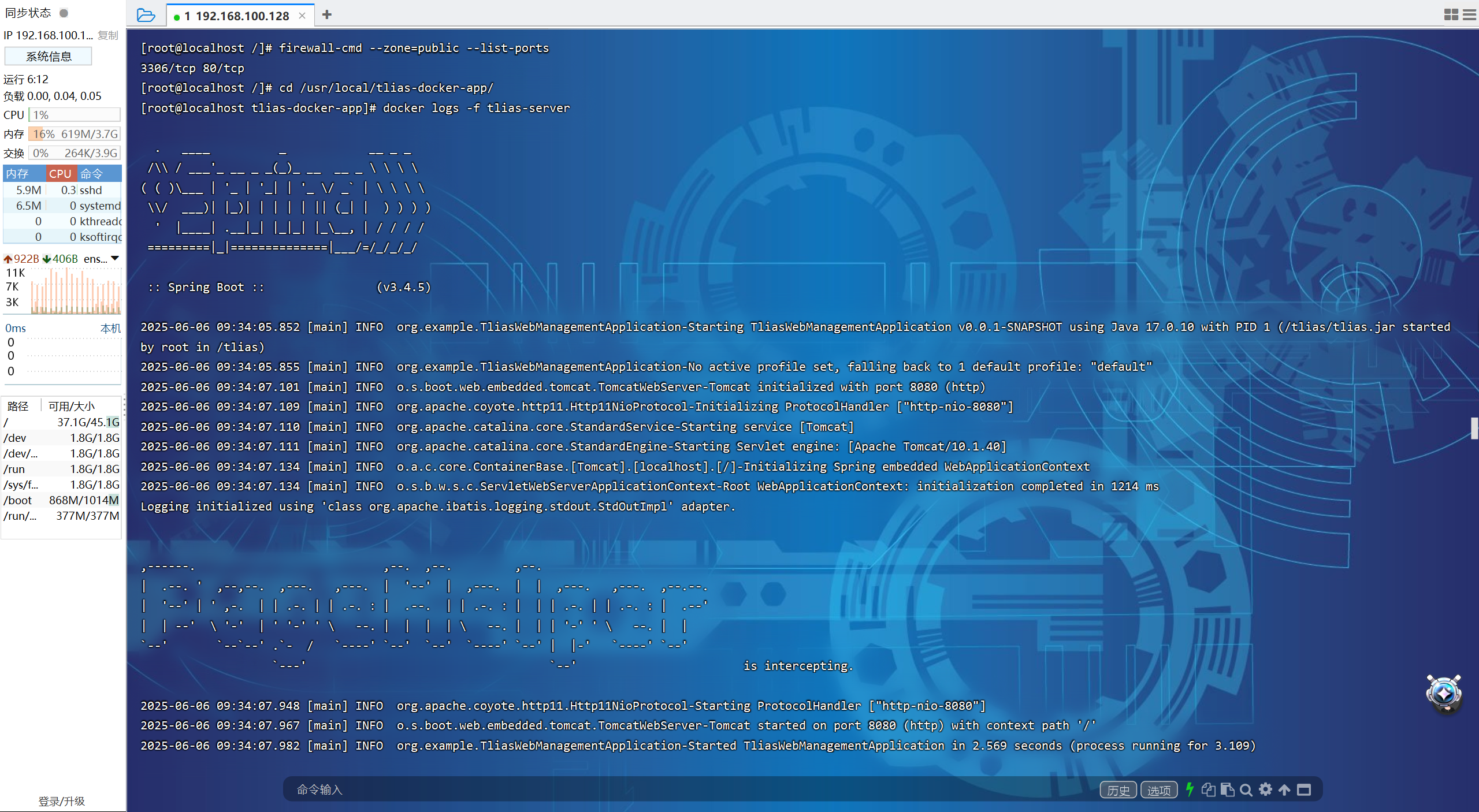

【JavaWeb】Docker项目部署

引言 之前学习了Linux操作系统的常见命令,在Linux上安装软件,以及如何在Linux上部署一个单体项目,大多数同学都会有相同的感受,那就是麻烦。 核心体现在三点: 命令太多了,记不住 软件安装包名字复杂&…...

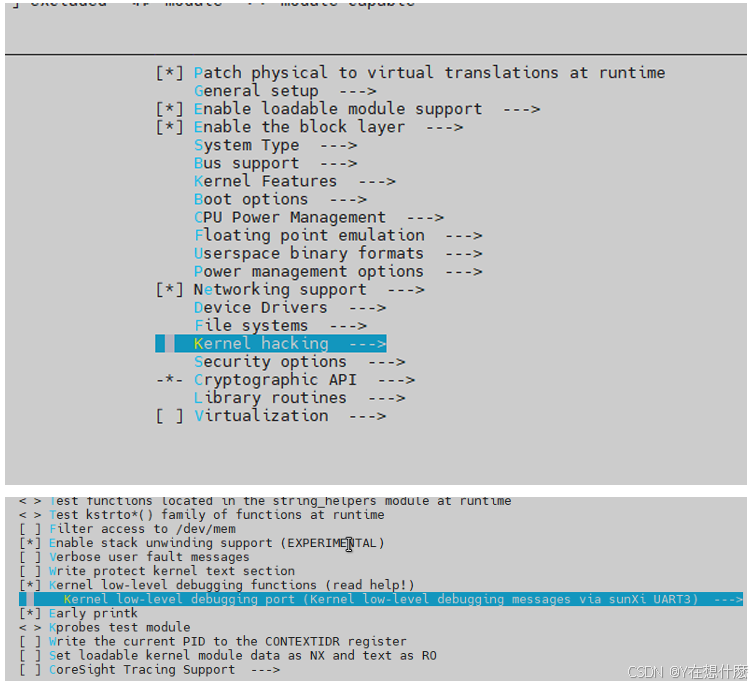

全志A40i android7.1 调试信息打印串口由uart0改为uart3

一,概述 1. 目的 将调试信息打印串口由uart0改为uart3。 2. 版本信息 Uboot版本:2014.07; Kernel版本:Linux-3.10; 二,Uboot 1. sys_config.fex改动 使能uart3(TX:PH00 RX:PH01),并让boo…...

中的KV缓存压缩与动态稀疏注意力机制设计)

大语言模型(LLM)中的KV缓存压缩与动态稀疏注意力机制设计

随着大语言模型(LLM)参数规模的增长,推理阶段的内存占用和计算复杂度成为核心挑战。传统注意力机制的计算复杂度随序列长度呈二次方增长,而KV缓存的内存消耗可能高达数十GB(例如Llama2-7B处理100K token时需50GB内存&a…...