Multi-task Video Enhancement for Dental Interventions

MICCAI 2022

Abstract

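A microcamera firmly attached to a dental handpiece lets dentists continuously monitor the progress of conservative dental procedures. Video enhancement in video-assisted dental interventions alleviates low light, noise, blur, and camera handshake, which together degrade visual comfort. To this end, the authors introduce a novel deep network for multi-task video enhancement that enables macro-visualization of dental scenes. In particular, the network jointly leverages video restoration and temporal alignment in a multi-scale manner to enhance video effectively. Experiments on videos of natural teeth in phantom scenes show that the proposed network achieves state-of-the-art results in multiple tasks with near real-time processing. The authors release Vident-lab at https://doi.org/10.34808/1jby-ay90, the first dataset of dental videos with multi-task labels, to facilitate further research in related video processing applications.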

Related Work

UberNet [9] and cross-stitch networks [16] are encoder-focused architectures that propagate task outputs across scales in the encoder.

PAD-Net [27] and PAP-Net [29] are decoder-focused networks that use multi-modal distillation to fuse the outputs of task heads into the final dense predictions, but only at a single scale.

MTI-Net [24], which is most similar to our architecture, extends the decoder fusion by propagating task-specific features bottom-up across multiple scales through the encoder.

Instead of propagating the task features in scale-specific distillation modules across scales to the encoder, our network simultaneously propagates task outputs to the encoder and to the task heads in the decoder. Furthermore, these networks make dense task predictions in static images, whereas we extend our network to videos.

Contribution

(i) a novel application of a microcamera in computer-aided dental intervention for continuous tooth macro-visualization during drilling (so the first contribution turns out to be a hardware innovation; I walked away a little deflated),

(ii) a new, asymmetrically annotated dataset of natural teeth in phantom scenes with pairs of frames of compromised and good quality using a beam splitter,

(iii) a novel deep network for video processing that propagates task outputs to encoder and decoder across multiple scales to model task interactions, and

(iv) demonstration that an instantiated model effectively addresses multi-task video enhancement in our application by matching and surpassing state-of-the-art results of single task networks in near real-time.

Method

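Video processing is improved through interactions between the different tasks.

The video enhancement tasks are interrelated. For example:

-- Aligning video frames helps deblurring.

-- Denoising and deblurring can reveal image features that help motion estimation.

This interdependence can be exploited by designing a multi-task model.

MOST-Net is a multi-output, multi-scale, multi-task network architecture. Its goal is to model the interactions between tasks through multi-scale features in the encoder and the decoder. The network outputs cover several tasks (T in total), and every task produces an output at each scale s.

Propagation modes:

- Within-scale propagation: task outputs are propagated within the current scale.

- Cross-scale propagation: task outputs from a lower scale are upsampled and then propagated to the decoder layers and task branches of the higher scale.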

Constraint:

u_i denotes some operator, for instance, the upsampling operator for segmentation or the scaling operator for homography estimation.
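To make the two operators concrete, here is a minimal sketch in plain Python. It assumes a factor of 2 between neighbouring scales (consistent with the stride-2 downsampling described in the Encoders part), nearest-neighbour upsampling for masks, and the standard change of pixel coordinates H' = S H S⁻¹ with S = diag(2, 2, 1) for homographies. The function names are hypothetical and none of this is taken from the authors' code.

```python
def upsample_mask(mask, factor=2):
    """Nearest-neighbour upsampling of a 2D mask given as a list of rows."""
    out = []
    for row in mask:
        wide = [v for v in row for _ in range(factor)]
        out.extend([wide[:] for _ in range(factor)])
    return out


def matmul3(a, b):
    """Product of two 3x3 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def upscale_homography(h, factor=2.0):
    """Re-express a homography in the pixel coordinates of a finer scale."""
    s = [[factor, 0.0, 0.0], [0.0, factor, 0.0], [0.0, 0.0, 1.0]]
    s_inv = [[1.0 / factor, 0.0, 0.0], [0.0, 1.0 / factor, 0.0], [0.0, 0.0, 1.0]]
    return matmul3(matmul3(s, h), s_inv)


def apply_homography(h, x, y):
    """Map the point (x, y) through h, including the projective division."""
    u = h[0][0] * x + h[0][1] * y + h[0][2]
    v = h[1][0] * x + h[1][1] * y + h[1][2]
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return u / w, v / w
```

With this convention, a point that moves to (x', y') at the coarse scale moves to (2x', 2y') at the next finer scale, which is the consistency one would expect between the scale-wise homography outputs.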

Problem Statement

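The model has to solve video restoration, teeth segmentation and motion estimation simultaneously, and it is trained and optimized under the assumption of a degraded-image generation model.

T = 3, with O1: video restoration, O2: segmentation, O3: homography estimation.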

The video stream generates observations ![]() , where t is the time index and P > 0 is a scalar value referring to the number of past frames.

The problem is to (1) estimate a clean frame, (2) estimate a binary teeth segmentation mask and (3) approximate the inter-frame motion by a homography matrix, denoted by the following triplet (the joint output of the three tasks at scale s = 1 is written as a triplet):

![]()

Let x denote a pixel location. Given per-pixel blur kernels k_{x,t} of size K, the degraded image at s = 1 (this models the degradation of the input video, such as blur and noise) is generated as:

We assume multiple independently moving objects present in the considered scenes, while our task is to estimate only the motion related to the object of interest (i.e. teeth), which is present in the region indicated by non-zero values of mask M:

![]()

∀t ∀x means "for all t and all x".

Training

This part defines the loss function and the optimization objective for the multi-task, multi-scale deep model.

Dataset

Loss Function

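The loss is summed over N (samples), T (tasks) and S (scales), i.e. N × T × S terms in total.

Loss function types

The model learns its parameters Θ by minimizing the total loss, so that the output predictions of all tasks at all scales are optimized at the same time. The optimization has to account for the relationships between tasks and the cooperation between scales, which is the core idea of multi-task, multi-scale learning.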
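The triple sum can be sketched as follows. This is only an illustration of how the terms are counted and weighted: the per-task losses are placeholders (a squared error on scalars), the weights reuse the λ values quoted in the Setup part in task order, and whether the paper averages or sums over samples is not stated in these notes, so a plain sum is assumed.

```python
def total_loss(preds, targets, task_weights, task_losses):
    """Sum weighted per-task losses over samples, tasks and scales.

    preds[n][i][s] is the prediction for sample n, task i, scale s;
    targets has the same layout. Returns (loss value, number of terms).
    """
    total = 0.0
    terms = 0
    for pred_n, target_n in zip(preds, targets):
        for i, (pred_task, target_task) in enumerate(zip(pred_n, target_n)):
            for pred, target in zip(pred_task, target_task):
                total += task_weights[i] * task_losses[i](pred, target)
                terms += 1
    return total, terms


def squared_error(pred, target):
    """Placeholder per-task loss on scalar stand-ins for real outputs."""
    return (pred - target) ** 2


# Hypothetical toy setup: N = 2 samples, T = 3 tasks, S = 3 scales.
N, T, S = 2, 3, 3
preds = [[[1.0] * S for _ in range(T)] for _ in range(N)]
targets = [[[0.0] * S for _ in range(T)] for _ in range(N)]
weights = [2e-4, 5e-5, 1.0]  # lambda_1..lambda_3 as quoted in the Setup part
loss, num_terms = total_loss(preds, targets, weights, [squared_error] * T)
```

In a real model each task would have its own loss type and the predictions would be tensors, but the bookkeeping (one weighted term per sample, task and scale) stays the same.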

I still feel I have not fully understood the multi-task learning part, so I will read a few other papers on it.

Structure

MOST-Net enables refinement of lower scale segmentations by upsampling and inputting them at the task-specific branches of higher scales.

Encoders

MOST-Net extracts features ![]() from two input frames Bt−1 and Bt independently at three scales. In other words, the model processes the input at several scales at the same time.

U-shaped downsampling: features are extracted via 3 × 3 convolutions with strides of 1, 2, 2 for s = 1, 2, 3, followed by ReLU activations and 5 residual blocks [4] at each scale. The residual connections are augmented with an additional branch of convolutions in the Fast Fourier domain.

Output channel dimension: 2^(s+4)
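As a quick sanity check of these numbers, the small sketch below tabulates the channel width and the relative resolution per scale. It assumes the strides are applied cumulatively from scale to scale, which is my reading of the U-shaped design; the helper names are made up for illustration.

```python
STRIDES = {1: 1, 2: 2, 3: 2}  # convolution stride used when entering scale s


def out_channels(s):
    """Encoder output channels at scale s, following 2^(s+4)."""
    return 2 ** (s + 4)


def relative_resolution(s):
    """Spatial size at scale s relative to the input, with cumulative strides."""
    factor = 1
    for scale in range(1, s + 1):
        factor *= STRIDES[scale]
    return 1.0 / factor


for s in (1, 2, 3):
    print(s, out_channels(s), relative_resolution(s))
```

This gives 32, 64 and 128 channels at full, half and quarter resolution, which matches the 128, 64 and 32 channel residual blocks mentioned for the decoder.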

At each scale, features ![]() and ![]() are concatenated, and a channel attention mechanism [30] follows to fuse them into ![]()

MOST-Net uses homography outputs from lower scales to warp encoder features from the previous time step as

Decoders

Encoder features ![]() are passed on to the expanding blocks scale-wise via the skip connections.

At the lowest scale (s = 3), ![]() are passed directly to a stack of two residual blocks with 128 output channels. Transposed convolutions with strides of 2 are used twice to recover the resolution scale.

At higher scales (s < 3), features ![]() are first concatenated with the upsampled decoder features ![]() and convolved by 3 × 3 kernels to halve the number of channels. (Why halve them?) Subsequently, they are propagated onto two residual blocks with 64 and 32 output channels each. The residual block outputs constitute scale-specific shared backbones. Lightweight task-specific branches follow to estimate the dense outputs. Specifically, one 3 × 3 convolution estimates ![]() and two 3 × 3 convolutions, separated by ReLU, yield ![]() at each scale.

At each scale, homography estimation modules estimate 4 offsets, related 1-1 to homographies via the Direct Linear Transformation (DLT) as in [5,12]. The motion gated attention modules multiply features ![]() with segmentations ![]() to filter out context irrelevant to the motion of the teeth. The channel dimensionality is then halved by a 3 × 3 convolution while a second one extracts features from the restored output ![]() . The concatenation of the two streams forms features ![]()
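The gating step alone can be sketched in a few lines. This covers only the element-wise multiplication of a feature map with the segmentation mask, not the convolutions that follow, and it uses nested lists instead of tensors so that it runs without dependencies; the function name and the data layout are assumptions.

```python
def motion_gated_attention(features, mask):
    """Multiply every channel of a C x H x W feature map by an H x W mask.

    Values of the mask near 0 suppress context outside the teeth region,
    values near 1 keep the features unchanged.
    """
    return [
        [[value * gate for value, gate in zip(feat_row, mask_row)]
         for feat_row, mask_row in zip(channel, mask)]
        for channel in features
    ]


# Hypothetical toy input: 2 channels, 2 x 2 pixels.
features = [[[1.0, 2.0], [3.0, 4.0]],
            [[5.0, 6.0], [7.0, 8.0]]]
mask = [[1.0, 0.0],
        [0.0, 1.0]]
gated = motion_gated_attention(features, mask)
```

In a tensor framework the same operation is a broadcasted product of a (C, H, W) tensor with a (1, H, W) mask.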

Homography Estimation Module: At each scale, ![]() and ![]() are employed to predict the offsets with shallow downstream networks. Predicted offsets at lower scales are transformed back to homographies and cascaded bottom-up [12] to refine the higher scale ones.
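The step from offsets back to a homography can be illustrated with a small DLT solver. The sketch assumes the usual four-point parameterization, i.e. one 2D offset per image corner, and fixes the bottom-right entry of the matrix to 1; it uses plain Gaussian elimination so that it needs no libraries. The function names are hypothetical and this is not the authors' implementation.

```python
def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(map(float, row)) + [float(rhs)] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


def homography_from_offsets(corners, offsets):
    """DLT: recover a 3x3 homography from four corners and their 2D offsets."""
    a, b = [], []
    for (x, y), (dx, dy) in zip(corners, offsets):
        u, v = x + dx, y + dy
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b)
    return [h[0:3], h[3:6], [h[6], h[7], 1.0]]


def warp_point(h, x, y):
    """Map (x, y) through the homography h."""
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)


# Hypothetical 64 x 48 image; the offsets are made-up numbers.
corners = [(0, 0), (63, 0), (63, 47), (0, 47)]
offsets = [(1.0, 2.0), (-2.0, 1.0), (3.0, -1.0), (0.0, -2.0)]
H = homography_from_offsets(corners, offsets)
```

Four corner correspondences give eight equations for the eight free entries, which is why the offsets and the homography are in one-to-one correspondence.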

Similarly to [5], we use blocks of 3 × 3 convolutions coupled with ReLU, batch normalization and max-pooling to reduce the spatial size of the features. Before the regression layer, a 0.2 dropout is applied. For s = 1, the convolution output channels are 64, 128, 256, 256 and 256. For s = 2, 3 the network depth is cropped from the second and third layers onwards respectively.

Task-Specific Branches

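I added this part myself with the help of GPT to make things easier to understand, since I had not worked with multi-task learning before.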

Each task (video restoration, segmentation, motion estimation) is handled by a separate branch of the network. In the architecture figure, these branches are the paths along which F1, F2, F3 (the features at different scales) pass through different processing stages (e.g., motion gated attention, channel attention, homography estimation) to produce task-specific outputs, such as the restored frame Rt, the mask Mt, and the homography Ht.

The network is optimized for multiple tasks by sharing features across the task-specific branches, while each branch focuses on the output of one task (video restoration, segmentation, motion estimation). The losses of the individual tasks are computed separately and combined in the final objective function, which lets the model learn all tasks simultaneously while sharing common feature representations.

Experiment

Dataset

Vident-lab: a dataset for multi-task video processing of phantom dental scenes (Open Research Data, Bridge of Knowledge)

Frame-to-Frame (F2F) Training:

- An image denoiser is trained frame-to-frame on static video fragments recorded with camera C1. The trained denoiser is then applied to the noisy frames to obtain denoised frames and their noise maps.

Denoising Process:

- The noisy frames are first denoised with the trained model. The denoised frames are then temporally interpolated and averaged over a window of 17 frames, which produces blur that simulates realistic motion blur.

Adding Noise:

- After the blur synthesis (the denoised frames are temporally interpolated [19] 8 times and averaged over a temporal window of 17 frames to synthesize realistic blur), the noise maps are added to the blurry frames to form the input video frames (B). The noise maps represent the original noise that was present in the actual noisy frames.

Colorization: registration of frames between two different modalities C1 and C2

- To generate the output video frames (R), frames from camera C1 are colorized with colors mapped from the frames of a second camera (C2), which yields the ground truth frames.

- Colorizing the C1 frames with C2 data avoids the difficulty of aligning the frames of the two cameras and gives accurate pixel-to-pixel correspondences.

Color Mapping Network:

- A color mapping (CM) network is learned to predict the parameters of 3D functions that map the dental scene colors of camera C2 to camera C1. This network gives precise color mapping and ensures accurate spatial correspondence between frames B and R.
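The blur synthesis and noise steps described above can be sketched as a sliding-window average followed by adding the noise map back. The sketch assumes that the clean frames have already been temporally interpolated (the 8× interpolation of [19] is not implemented here), that frames are plain 2D lists of intensities, and that the window is centred on the target frame; the function name is made up.

```python
def synthesize_input_frame(clean_frames, center, noise_map, window=17):
    """Average `window` consecutive frames around `center`, then add noise."""
    half = window // 2
    start, stop = center - half, center + half + 1
    if start < 0 or stop > len(clean_frames):
        raise ValueError("temporal window does not fit inside the clip")
    stack = clean_frames[start:stop]
    height, width = len(stack[0]), len(stack[0][0])
    return [
        [sum(frame[y][x] for frame in stack) / window + noise_map[y][x]
         for x in range(width)]
        for y in range(height)
    ]


# Hypothetical toy clip: 17 frames of 1 x 2 pixels with rising intensity.
clip = [[[float(i), 2.0 * i]] for i in range(17)]
noise = [[0.5, -0.5]]
blurry_noisy = synthesize_input_frame(clip, center=8, noise_map=noise)
```

Averaging many closely spaced frames approximates the integration a sensor performs during exposure, which is why interpolating before averaging gives smoother, more realistic blur.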

Segmentation masks and homographies

HRNet48 [22], pretrained on ImageNet, is fine-tuned on our annotations to automatically segment the teeth in the remaining frames in all three sets. We compute optical flows between consecutive clean frames with RAFT [23]. Motion fields are cropped with teeth masks Mt to discard other moving objects, such as the dental bur or the suction tube, as we are interested in stabilizing the videos with respect to the teeth. Subsequently, a partial affine homography H is fitted by RANSAC to the segmented motion field.
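To illustrate how a masked motion field turns into a partial affine transform, here is a simplified sketch. It swaps RANSAC for a plain closed-form least-squares fit, so it has no outlier rejection, and it takes "partial affine" to mean rotation, uniform scale and translation (4 degrees of freedom). The flow layout (`flow[y][x] = (dx, dy)`) and the function name are assumptions.

```python
def fit_partial_affine(flow, mask):
    """Least-squares similarity transform from flow vectors inside the mask.

    Returns a 3x3 matrix [[a, -b, tx], [b, a, ty], [0, 0, 1]].
    """
    pts = []
    for y, row in enumerate(mask):
        for x, keep in enumerate(row):
            if keep:  # pixels outside the teeth mask are ignored
                dx, dy = flow[y][x]
                pts.append((float(x), float(y), x + dx, y + dy))
    if len(pts) < 2:
        raise ValueError("need at least two masked pixels")
    n = len(pts)
    px = sum(p[0] for p in pts) / n
    py = sum(p[1] for p in pts) / n
    qx = sum(p[2] for p in pts) / n
    qy = sum(p[3] for p in pts) / n
    num_a = num_b = den = 0.0
    for x, y, u, v in pts:
        cx, cy, cu, cv = x - px, y - py, u - qx, v - qy
        num_a += cx * cu + cy * cv
        num_b += cx * cv - cy * cu
        den += cx * cx + cy * cy
    a, b = num_a / den, num_b / den
    tx = qx - (a * px - b * py)
    ty = qy - (b * px + a * py)
    return [[a, -b, tx], [b, a, ty], [0.0, 0.0, 1.0]]
```

Because the flow of the bur or the suction tube never enters the fit, the estimated transform follows the teeth only; a RANSAC loop around the same fit would additionally tolerate wrong flow vectors inside the mask.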

Setup

We train, validate, and test all methods on our dataset (Tab. 1). In all MOST-Net training runs, we set λ1, λ2, λ3 to 2 × 10^−4, 5 × 10^−5 and 1 to balance the tasks in Eq. 4.

Training data are augmented by horizontal and vertical flips with 0.5 probability, random channel perturbations, and color jittering, after [31].
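The flip part of the augmentation could look like the sketch below. It assumes that the same flips are applied to every frame of a training sample (inputs, targets and masks) so that they stay aligned, which the notes do not state explicitly; homography labels would also have to be transformed accordingly, which is not shown. The function name is hypothetical.

```python
import random


def random_flip(frames, rng, p=0.5):
    """Flip all frames of a sample together, each direction with probability p."""
    if rng.random() < p:  # horizontal flip: reverse every row
        frames = [[row[::-1] for row in frame] for frame in frames]
    if rng.random() < p:  # vertical flip: reverse the row order
        frames = [frame[::-1] for frame in frames]
    return frames


rng = random.Random(0)
sample = [[[1, 2], [3, 4]]]
augmented = random_flip(sample, rng)
```

Passing an explicit `random.Random` instance keeps the augmentation reproducible across runs.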

Batch size 16, Adam optimizer, learning rate 1e−4, decayed to 1e−6 with cosine annealing.
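For reference, the cosine annealing schedule between these two learning rates has a simple closed form. The sketch below uses that standard formula; the total number of steps is a made-up value, since the notes do not give the training length.

```python
import math


def cosine_annealed_lr(step, total_steps, lr_max=1e-4, lr_min=1e-6):
    """Learning rate after `step` of `total_steps` under cosine annealing."""
    progress = step / total_steps
    return lr_min + 0.5 * (lr_max - lr_min) * (1.0 + math.cos(math.pi * progress))


TOTAL_STEPS = 1000  # hypothetical; not taken from the paper
schedule = [cosine_annealed_lr(s, TOTAL_STEPS) for s in (0, 500, 1000)]
```

The rate starts at 1e−4, passes through the midpoint of the two values halfway through training, and ends at 1e−6.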

Implementation: PyTorch 1.10 (FP32). Inference speed is reported in frames per second (FPS) on an NVIDIA RTX 5000 GPU.

Results

相关文章:

用于牙科的多任务视频增强

Multi-task Video Enhancement for Dental Interventions 2022 miccai Abstract 微型照相机牢牢地固定在牙科手机上,这样牙医就可以持续地监测保守牙科手术的进展情况。但视频辅助牙科干预中的视频增强减轻了低光、噪音、模糊和相机握手等降低视觉舒适度的问题。…...

【Node.js]

一、概述 Node.js 是一个基于 Chrome V8 引擎的 JavaScript 运行环境 ,使用了一个事件驱动、非阻塞式I/O模型, 让JavaScript 运行在服务端的开发平台,它让JavaScript成为与PHP、Python、Perl、Ruby等服务端语言平起平坐的脚本语言。 官网地…...

【Elasticsearch】腾讯云安装Elasticsearch

Elasticsearch 认识Elasticsearch安装Elasticsearch安装Kibana安装IK分词器分词器的作用是什么?IK分词器有几种模式?IK分词器如何拓展词条?如何停用词条? 认识Elasticsearch Elasticsearch的官方网站如下 Elasticsearch官网 Ela…...

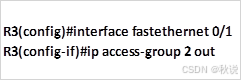

【网络协议】ACL(访问控制列表)第一部分

概述 网络安全在网络中的重要性不言而喻。本文(即第一部分)将介绍ACL的基本概念以及标准ACL的配置。第二部分将重点讨论扩展ACL、其他相关概念以及ACL的故障排除。 文章目录 概述ACL定义数据包过滤ACLACL配置指导原则配置ACL的三条规则ACL功能ACL工作原…...

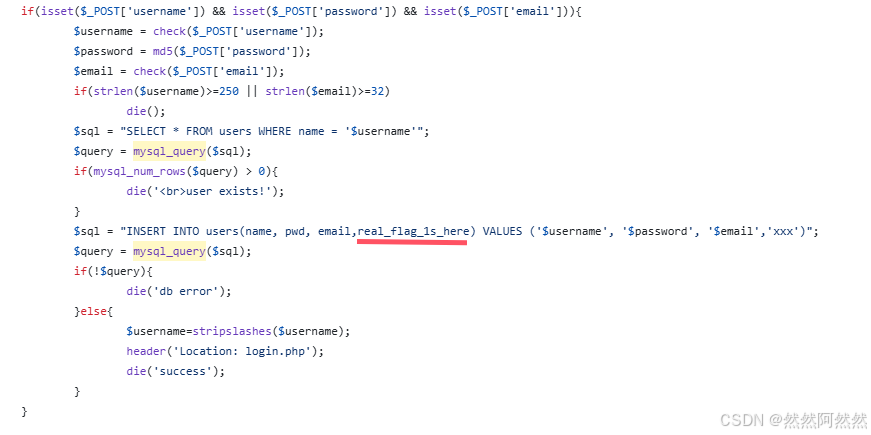

2025.1.20——一、[RCTF2015]EasySQL1 二次注入|报错注入|代码审计

题目来源:buuctf [RCTF2015]EasySQL1 目录 一、打开靶机,整理信息 二、解题思路 step 1:初步思路为二次注入,在页面进行操作 step 2:尝试二次注入 step 3:已知双引号类型的字符型注入,构造…...

Spring Boot 整合 Knife4j:打造更优雅的 API 文档

在现代 Web 应用开发中,API 文档的重要性不言而喻。清晰、准确、易用的 API 文档不仅可以方便开发者理解和使用 API,还能提高团队协作效率。Knife4j 是一个基于 Swagger 的增强型 API 文档工具,它可以为 Spring Boot 项目生成美观、易于交互的…...

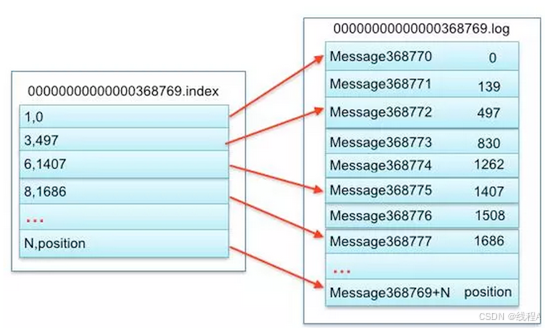

Kafka 源码分析(一) 日志段

首先我们的 kafka 的消息本身是存储在日志段中的, 对应的源码是下面这段代码: class LogSegment private[log] (val log: FileRecords,val lazyOffsetIndex: LazyIndex[OffsetIndex],val lazyTimeIndex: LazyIndex[TimeIndex],val txnIndex: TransactionIndex,val baseOffset:…...

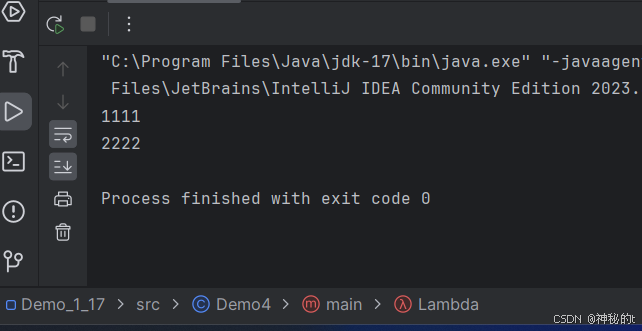

javaEE初阶————多线程初阶(2)

今天给大家带来第二期啦,保证给大家讲懂嗷; 1,线程状态 NEW安排了工作还未开始行动RUNNABLE可工作的,或者即将工作,正在工作BLOCKED排队等待WAITING排队等待其他事TIMED_WAITING排队等待其他事TERMINATED工作完成了 …...

Redis学习笔记1【数据类型和常用命令】

Redis学习笔记 基础语法 1.数据类型 String: 最基本的类型,可以存储任何数据,例如文本或数字。示例值为 hello world。Hash: 用于存储键值对,适合存储对象或结构体。示例值为 {"name": "Jack", "age": 21}。…...

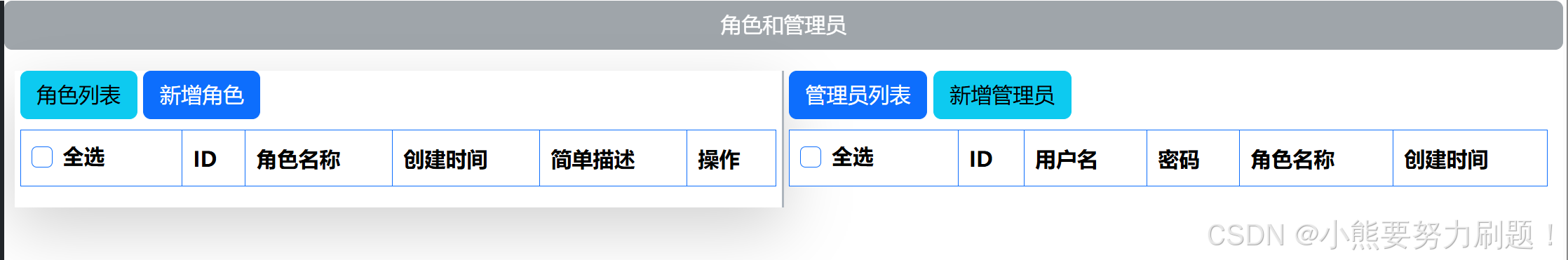

JavaWeb项目——查询角色列表到页面中——转发模式

一、知识点 1、req.getRequestDispatch与resp.sendRedirect跳转方式的比较 一、实现原理 1、req.getRequestDispatcher: 属于服务器端跳转,在服务器内部将请求转发给另一个资源(如另一个 Servlet 或 JSP 页面)。调用 getReques…...

feign调用跳过HTTPS的SSL证书校验配置详解

一、问题抛出 如果不配置跳过SSL证书校验,当Feign客户端尝试连接到一个使用自签名证书的服务器时,可能会抛出类似以下的异常: javax.net.ssl.SSLHandshakeException: sun.security.validator.ValidatorException: PKIX path building faile…...

今天也是记录小程序进展的一天(破晓时8)

嗨嗨嗨朋友们,今天又来记录一下小程序的进展啦!真是太激动了,项目又迈出了重要的一步,231啦!感觉每一步的努力都在积累,功能逐渐完善,离最终上线的目标越来越近了。大家一直支持着这个项目&…...

SQL-leetcode—1084. 销售分析 III

1084. 销售分析 III 表: Product --------------------- | Column Name | Type | --------------------- | product_id | int | | product_name | varchar | | unit_price | int | --------------------- product_id 是该表的主键(具有唯一值的列&…...

Linux C\C++编程-文件位置指针与读写文件数据块

【图书推荐】《Linux C与C一线开发实践(第2版)》_linux c与c一线开发实践pdf-CSDN博客 《Linux C与C一线开发实践(第2版)(Linux技术丛书)》(朱文伟,李建英)【摘要 书评 试读】- 京东图书 Linu…...

Flask简介与安装以及实现一个糕点店的简单流程

目录 1. Flask简介 1.1 Flask的核心特点 1.2 Flask的基本结构 1.3 Flask的常见用法 1.3.1 创建Flask应用 1.3.2 路由和视图函数 1.3.3 动态URL参数 1.3.4 使用模板 1.4 Flask的优点 1.5 总结 2. Flask 环境创建 2.1 创建虚拟环境 2.2 激活虚拟环境 1.3 安装Flask…...

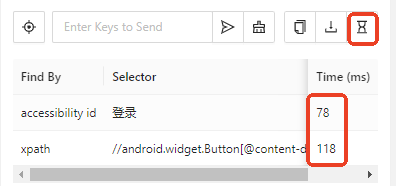

【自动化测试】—— Appium使用保姆教程

目录 一. 连接手机 1. 授权 2. 调试 3. 获取参数 二. 启动APP 1. 启动Appium服务 2. 启动Appium Inspector 3. 配置Appium Inspector 三. 功能说明 1. 主菜单功能 2. 快照视图菜单 3. 元素视图菜单 四. 常见问题 1. appPackage有多个设备时 一. 连接手机 1. 授权 首先将手机的开…...

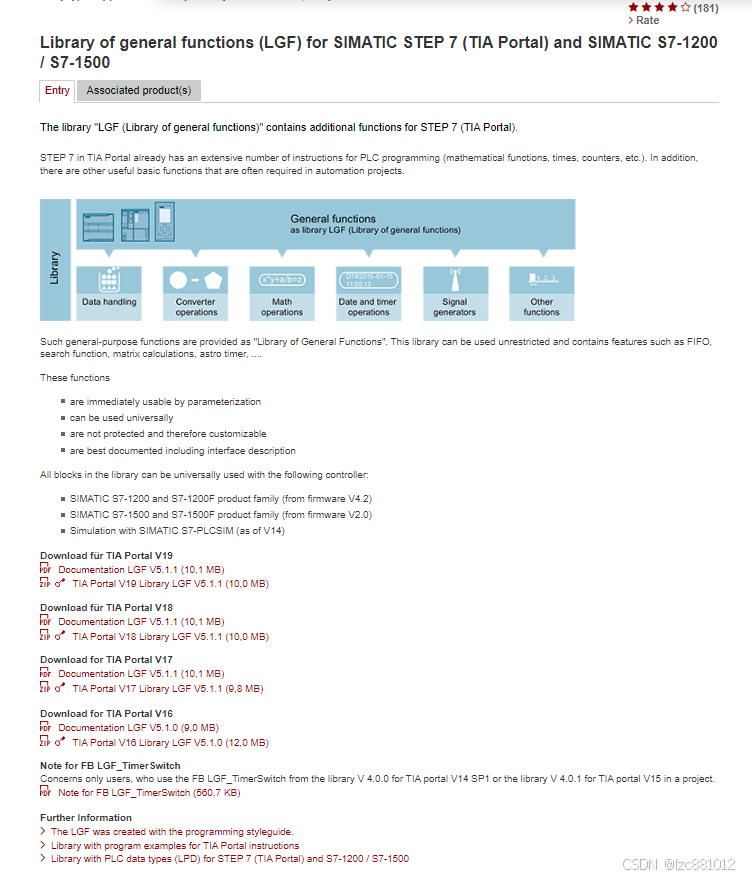

西门子【Library of General Functions (LGF) for SIMATIC S7-1200 / S7-1500】

文章目录 概要整体架构流程技术名词解释技术细节小结 概要 通用函数库 (LGF) 扩展了 TIA Portal 中用于 PLC 编程的 STEP 7 指令(数学函数、时间、计数器 等)。该库可以不受限制地使用,并包含 FIFO 、搜索功能、矩阵计算、 astro 计…...

IntelliJ IDEA 2023.3 中配置 Spring Boot 项目的热加载

IntelliJ IDEA 2023.3 中配置 Spring Boot 项目的热加载 在 IntelliJ IDEA 2023.3 中配置 Spring Boot 项目的热加载,可以让你在不重启应用的情况下看到代码修改的效果。以下是详细的配置步骤: 添加 spring-boot-devtools 依赖 在 pom.xml 文件中添加 …...

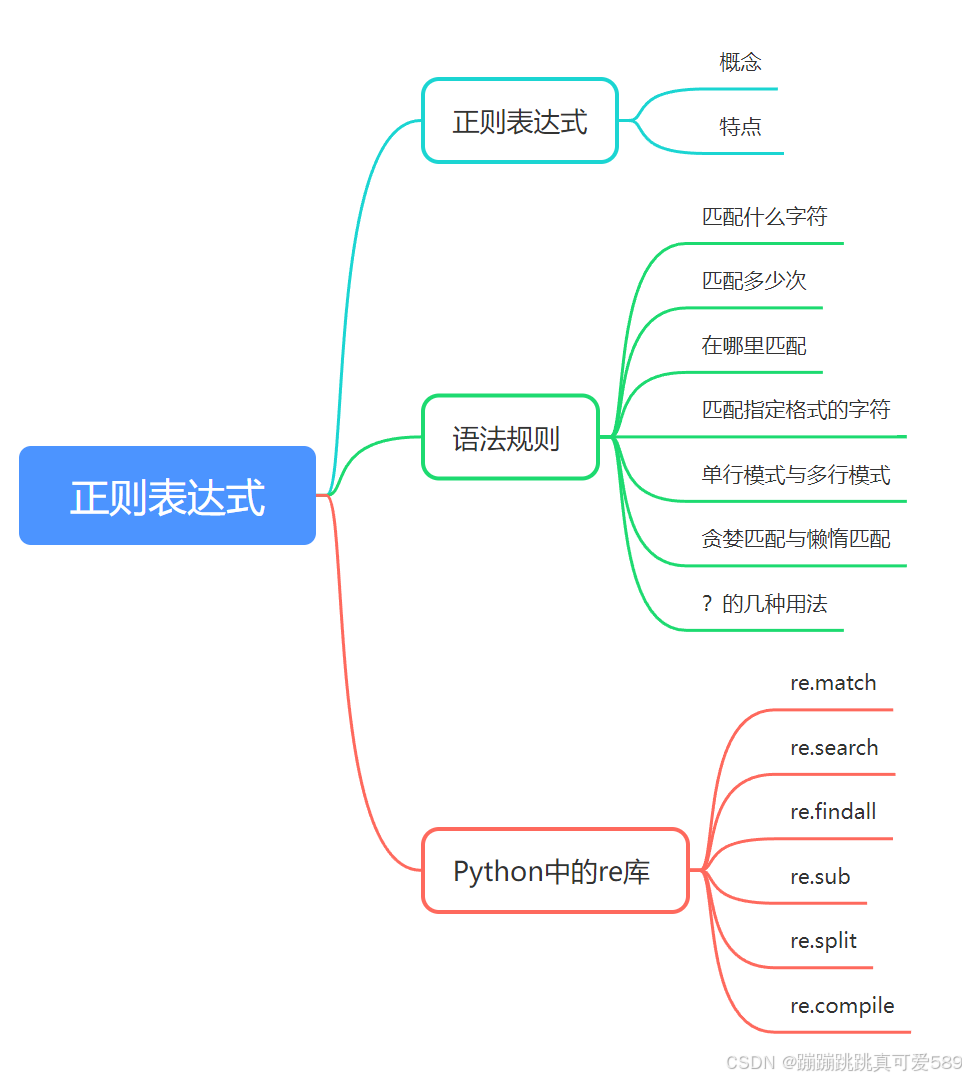

Python----Python高级(正则表达式:语法规则,re库)

一、正则表达式 1.1、概念 正则表达式,又称规则表达式,(Regular Expression,在代码中常简写为regex、 regexp或RE),是一种文本模式,包括普通字符(例如,a 到 z 之间的字母࿰…...

通过Ukey或者OTP动态口令实现windows安全登录

通过 安当SLA(System Login Agent)实现Windows安全登录认证,是一种基于双因素认证(2FA)的解决方案,旨在提升 Windows 系统的登录安全性。以下是详细的实现方法和步骤: 1. 安当SLA的核心功能 安…...

C++初阶-list的底层

目录 1.std::list实现的所有代码 2.list的简单介绍 2.1实现list的类 2.2_list_iterator的实现 2.2.1_list_iterator实现的原因和好处 2.2.2_list_iterator实现 2.3_list_node的实现 2.3.1. 避免递归的模板依赖 2.3.2. 内存布局一致性 2.3.3. 类型安全的替代方案 2.3.…...

)

椭圆曲线密码学(ECC)

一、ECC算法概述 椭圆曲线密码学(Elliptic Curve Cryptography)是基于椭圆曲线数学理论的公钥密码系统,由Neal Koblitz和Victor Miller在1985年独立提出。相比RSA,ECC在相同安全强度下密钥更短(256位ECC ≈ 3072位RSA…...

【机器视觉】单目测距——运动结构恢复

ps:图是随便找的,为了凑个封面 前言 在前面对光流法进行进一步改进,希望将2D光流推广至3D场景流时,发现2D转3D过程中存在尺度歧义问题,需要补全摄像头拍摄图像中缺失的深度信息,否则解空间不收敛…...

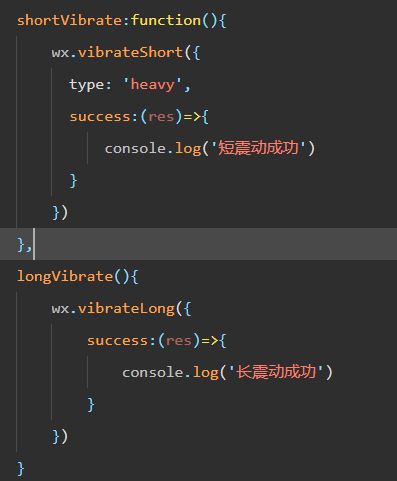

微信小程序 - 手机震动

一、界面 <button type"primary" bindtap"shortVibrate">短震动</button> <button type"primary" bindtap"longVibrate">长震动</button> 二、js逻辑代码 注:文档 https://developers.weixin.qq…...

HBuilderX安装(uni-app和小程序开发)

下载HBuilderX 访问官方网站:https://www.dcloud.io/hbuilderx.html 根据您的操作系统选择合适版本: Windows版(推荐下载标准版) Windows系统安装步骤 运行安装程序: 双击下载的.exe安装文件 如果出现安全提示&…...

Redis数据倾斜问题解决

Redis 数据倾斜问题解析与解决方案 什么是 Redis 数据倾斜 Redis 数据倾斜指的是在 Redis 集群中,部分节点存储的数据量或访问量远高于其他节点,导致这些节点负载过高,影响整体性能。 数据倾斜的主要表现 部分节点内存使用率远高于其他节…...

关键领域软件测试的突围之路:如何破解安全与效率的平衡难题

在数字化浪潮席卷全球的今天,软件系统已成为国家关键领域的核心战斗力。不同于普通商业软件,这些承载着国家安全使命的软件系统面临着前所未有的质量挑战——如何在确保绝对安全的前提下,实现高效测试与快速迭代?这一命题正考验着…...

NXP S32K146 T-Box 携手 SD NAND(贴片式TF卡):驱动汽车智能革新的黄金组合

在汽车智能化的汹涌浪潮中,车辆不再仅仅是传统的交通工具,而是逐步演变为高度智能的移动终端。这一转变的核心支撑,来自于车内关键技术的深度融合与协同创新。车载远程信息处理盒(T-Box)方案:NXP S32K146 与…...

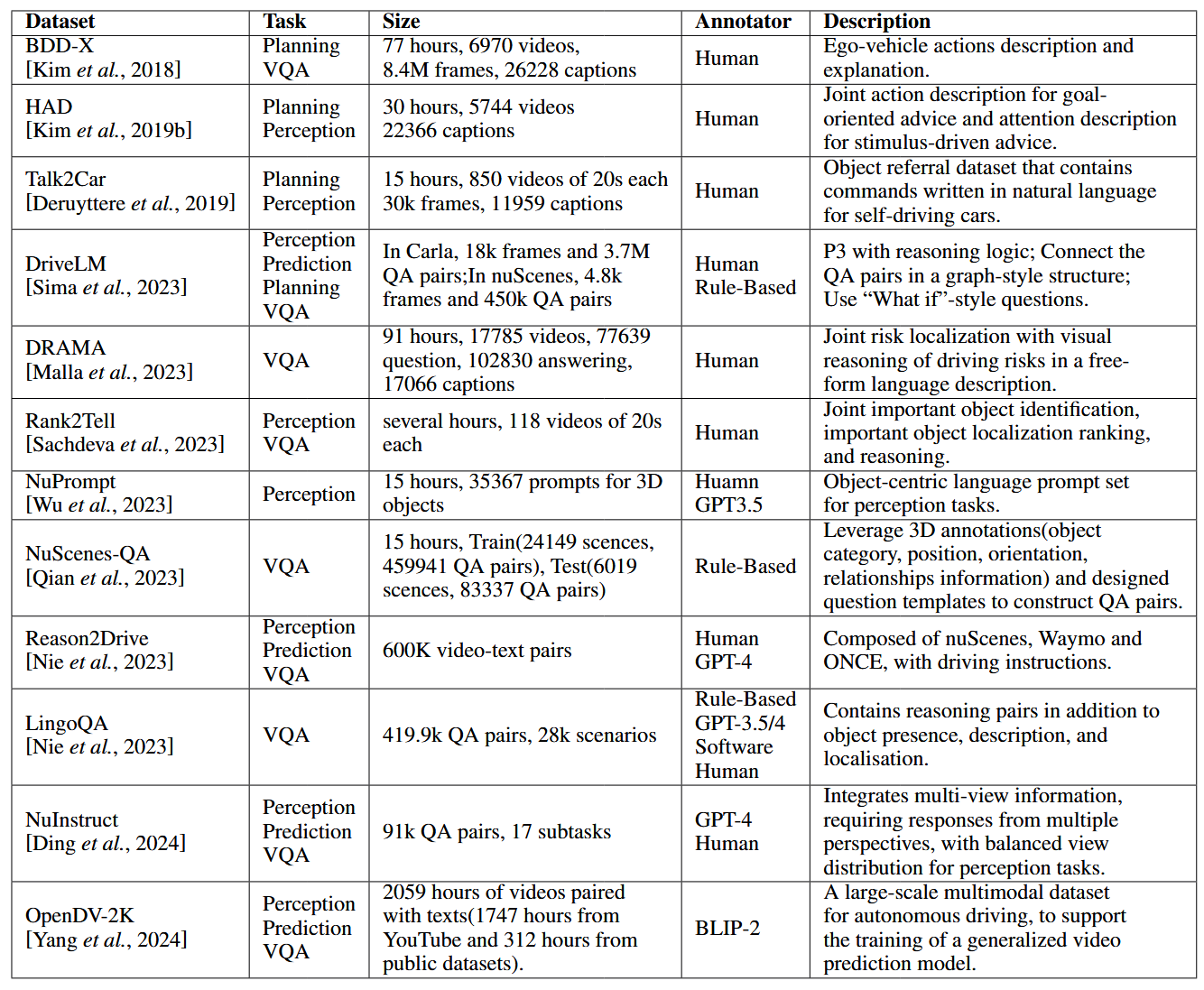

论文阅读:LLM4Drive: A Survey of Large Language Models for Autonomous Driving

地址:LLM4Drive: A Survey of Large Language Models for Autonomous Driving 摘要翻译 自动驾驶技术作为推动交通和城市出行变革的催化剂,正从基于规则的系统向数据驱动策略转变。传统的模块化系统受限于级联模块间的累积误差和缺乏灵活性的预设规则。…...

comfyui 工作流中 图生视频 如何增加视频的长度到5秒

comfyUI 工作流怎么可以生成更长的视频。除了硬件显存要求之外还有别的方法吗? 在ComfyUI中实现图生视频并延长到5秒,需要结合多个扩展和技巧。以下是完整解决方案: 核心工作流配置(24fps下5秒120帧) #mermaid-svg-yP…...