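LangChain Tutorial 2: Prompt

Preface

Code for this tutorial series: https://github.com/shar-pen/Langchain-MiniTutorial

I mainly followed the official LangChain tutorials and selectively recorded what I learned.

Tutorial list:

- 1. Getting started with LangChain

- 2. Prompt

- 3. OutputParser / output parsing

- 4. Model / deploying models with vLLM and calling them from LangChain

- 5. DocumentLoader / document loaders

- 6. TextSplitter / document splitting

- 7. Embedding / text vectorization

- 8. VectorStore / vector database storage and retrieval

- 9. Retriever

- 10. Reranker / document reranking

- 11. RAG pipeline / multi-turn conversational RAG

- 12. Agent / tool definition / agent tool calling / Agentic RAG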

Prompt Template

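Prompt templates are essential for building dynamic, flexible prompts. They cover scenarios such as conversation history, structured output, and task-specific queries.

In this tutorial we look at how to create PromptTemplate objects, apply partial variables, manage templates through YAML files, and use higher-level tools such as ChatPromptTemplate and MessagesPlaceholder.

from langchain_openai import ChatOpenAI

# OpenAI-compatible endpoint served locally
llm = ChatOpenAI(
    base_url='http://localhost:5551/v1',
    api_key='EMPTY',
    model_name='Qwen2.5-7B-Instruct',
    temperature=0.2,
)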

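Creating a PromptTemplate object

There are two ways to create a PromptTemplate object:

- 1. Use the from_template() method.

- 2. Create a PromptTemplate object directly and generate the prompt in one step.

Method 1. Using the from_template() method

- Define the template with {variable} syntax, where variable is the name of a replaceable variable.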

from langchain_core.prompts import PromptTemplate

# Names inside {} are variables
template = "What is the capital of {country}?"

# Create the template with `from_template`
prompt = PromptTemplate.from_template(template)
prompt

PromptTemplate(input_variables=['country'], input_types={}, partial_variables={}, template='What is the capital of {country}?')

The class has already detected the country variable, so the prompt can be completed by assigning a value to country.

# Fill in the template, much like str.format

prompt.format(country="United States of America")

'What is the capital of United States of America?'
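The variable detection described above can be reproduced with the standard library alone. The following is a minimal sketch, not LangChain's own code: `find_placeholders` is a hypothetical helper that uses `string.Formatter` to list the names inside `{}` and then fills the template with `str.format`.

```python
from string import Formatter


def find_placeholders(template: str) -> list[str]:
    """Return the sorted, de-duplicated placeholder names in a str.format-style template."""
    names = {field for _, field, _, _ in Formatter().parse(template) if field}
    return sorted(names)


template = "What is the capital of {country}?"
variables = find_placeholders(template)
filled = template.format(country="United States of America")

print(variables)
print(filled)
```

The same parsing idea explains why a template with a repeated placeholder still reports that name only once.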

A chain simplifies the flow further: the prompt is piped straight into the model.

template = "What is the capital of {country}?"

prompt = PromptTemplate.from_template(template)

chain = prompt | llm

chain.invoke("United States of America").content

'The capital of the United States of America is Washington, D.C.'
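The `|` operator composes two steps so that the output of the first becomes the input of the second. As a rough illustration of that idea (a toy, not LangChain's Runnable implementation), the sketch below defines a hypothetical `Step` class with `__or__` and chains a prompt-filling step with a fake model. In the original snippet, `invoke` receives a bare string rather than a dict; LangChain accepts this when the prompt has exactly one input variable.

```python
class Step:
    """A toy pipeline stage: wraps a function and supports `|` composition."""

    def __init__(self, fn):
        self.fn = fn

    def invoke(self, value):
        return self.fn(value)

    def __or__(self, other):
        # `a | b` builds a new stage that feeds a's output into b.
        return Step(lambda value: other.invoke(self.invoke(value)))


template = "What is the capital of {country}?"

# Hypothetical stand-ins for the prompt and the model.
fill_prompt = Step(lambda country: template.format(country=country))
fake_llm = Step(lambda text: f"[model received] {text}")

chain = fill_prompt | fake_llm
result = chain.invoke("United States of America")
print(result)
```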

Method 2. Creating a PromptTemplate object directly and generating the prompt in one step

- Specify input_variables explicitly to get additional validation.

- Otherwise, if input_variables does not match the variables in the template string, instantiation may raise an exception.

from langchain_core.prompts import PromptTemplate

# Define template
template = "What is the capital of {country}?"

# Create a prompt template with `PromptTemplate` object
prompt = PromptTemplate(
    template=template,
    input_variables=["country"],
)

prompt

PromptTemplate(input_variables=['country'], input_types={}, partial_variables={}, template='What is the capital of {country}?')
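The kind of validation that an explicit `input_variables` list enables can be sketched as follows. This is a standalone illustration under my own assumptions: `check_declared_variables` is a hypothetical helper, and whether LangChain itself raises in a given situation depends on its version and settings.

```python
from string import Formatter


def check_declared_variables(template: str, declared: list[str]) -> None:
    """Raise ValueError if `declared` does not match the placeholders in `template`."""
    found = {field for _, field, _, _ in Formatter().parse(template) if field}
    missing = found - set(declared)
    unused = set(declared) - found
    if missing or unused:
        raise ValueError(
            f"missing from declaration: {sorted(missing)}, "
            f"declared but unused: {sorted(unused)}"
        )


# Matching declaration: no exception.
check_declared_variables("What is the capital of {country}?", ["country"])

# Mismatched declaration: the helper raises.
try:
    check_declared_variables("What is the capital of {country}?", ["city"])
    mismatch_detected = False
except ValueError:
    mismatch_detected = True
```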

partial variables

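Partial variables are parameters that can be fixed ahead of time. They are a special kind of input variable: each one supplies the default used when the corresponding input is not passed.

With partial_variables you can partially apply a template, much like partially applying a function. This is especially useful when a common variable is shared across prompts.

A typical example:

- A date or time is the classic use case.

Suppose you want the prompt to state the current date:

- Hard-coding the date, or passing it by hand every time, is inflexible.

- A better approach is to write a function that returns the current date and partially apply it to the prompt template, so the date variable is filled in dynamically.

from langchain_core.prompts import PromptTemplate

# Define template
template = "What are the capitals of {country1} and {country2}, respectively?"

# Create a prompt template with `PromptTemplate` object
prompt = PromptTemplate(
    template=template,
    input_variables=["country1"],
    partial_variables={
        "country2": "United States of America"  # Pass `partial_variables` in dictionary form
    },
)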

prompt

PromptTemplate(input_variables=['country1'], input_types={}, partial_variables={'country2': 'United States of America'}, template='What are the capitals of {country1} and {country2}, respectively?')

prompt.format(country1="South Korea")

'What are the capitals of South Korea and United States of America, respectively?'

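Use the partial() method to change or add partial variables, or assign to PromptTemplate.partial_variables directly:

- prompt_partial = prompt.partial(country2="India") creates a new instance and leaves the original unchanged.

- prompt.partial_variables = {'country2': 'china'} modifies the original instance in place.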

prompt_partial = prompt.partial(country2="India")

prompt_partial.format(country1="South Korea")

'What are the capitals of South Korea and India, respectively?'

prompt.partial_variables = {'country2':'china'}

prompt.format(country1="South Korea")

'What are the capitals of South Korea and china, respectively?'

A value passed at format time overrides the partial variable for that call only; the stored default is unaffected.

print(prompt_partial.format(country1="South Korea", country2="Canada"))

print(prompt_partial.format(country1="South Korea"))

What are the capitals of South Korea and Canada, respectively?

What are the capitals of South Korea and India, respectively?
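The precedence rule can be pictured as "defaults first, explicit values on top". The sketch below is a stdlib-only approximation, not LangChain's implementation; `format_with_partials` is a hypothetical helper. It also calls any function-valued default at format time, which is the mechanism that lets a partial variable be a function such as a date provider.

```python
from datetime import datetime


def format_with_partials(template: str, partials: dict, **values) -> str:
    """Fill `template`, using `partials` as defaults that explicit `values` override."""
    # Callables are invoked at format time, so values such as dates stay current.
    resolved = {k: (v() if callable(v) else v) for k, v in partials.items()}
    resolved.update(values)  # explicit values win over defaults
    return template.format(**resolved)


def get_today() -> str:
    return datetime.now().strftime("%B %d")


template = "What are the capitals of {country1} and {country2}, respectively?"
defaults = {"country2": "India"}

with_default = format_with_partials(template, defaults, country1="South Korea")
overridden = format_with_partials(
    template, defaults, country1="South Korea", country2="Canada"
)
dated = format_with_partials(
    "Today's date is {today}. List {n} items.", {"today": get_today}, n=3
)
```

Because the defaults dictionary is never mutated, a later call without `country2` falls back to "India" again.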

Partial variables can also be supplied as a function, so the value does not have to be set by hand.

from datetime import datetime

def get_today():
    return datetime.now().strftime("%B %d")

prompt = PromptTemplate(
    template="Today's date is {today}. Please list {n} celebrities whose birthday is today. Please specify their date of birth.",
    input_variables=["n"],
    partial_variables={
        "today": get_today  # Pass `partial_variables` in dictionary form
    },
)

prompt.format(n=3)

"Today's date is January 30. Please list 3 celebrities whose birthday is today. Please specify their date of birth."

Loading a prompt template from a YAML file

You can store prompt templates in separate YAML files and load and manage them with load_prompt.

Here is an example YAML file:

_type: "prompt"

template: "What is the color of {fruit}?"

input_variables: ["fruit"]

from langchain_core.prompts import load_prompt

prompt = load_prompt("prompts/fruit_color.yaml", encoding="utf-8")

prompt
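The `load_prompt` call assumes that `prompts/fruit_color.yaml` already exists relative to the working directory. One way to create it is sketched below; `write_prompt_file` is a hypothetical helper, and the temporary directory is used only to keep the demo free of side effects.

```python
import tempfile
from pathlib import Path

PROMPT_YAML = '''_type: "prompt"
template: "What is the color of {fruit}?"
input_variables: ["fruit"]
'''


def write_prompt_file(base_dir: Path) -> Path:
    """Create prompts/fruit_color.yaml under `base_dir` and return its path."""
    path = base_dir / "prompts" / "fruit_color.yaml"
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(PROMPT_YAML, encoding="utf-8")
    return path


# In a real project, pass Path(".") instead of a temporary directory.
with tempfile.TemporaryDirectory() as tmp:
    yaml_path = write_prompt_file(Path(tmp))
    written = yaml_path.read_text(encoding="utf-8")
    relative = yaml_path.relative_to(tmp).as_posix()
```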

ChatPromptTemplate

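ChatPromptTemplate can include conversation history in the prompt to provide context. Messages are organized as (role, message) tuples stored in a list.

Roles:

- "system": system-level settings, typically global instructions or the AI's persona.

- "human": messages entered by the user.

- "ai": responses generated by the AI.

from langchain_core.prompts import ChatPromptTemplate

chat_prompt = ChatPromptTemplate.from_template("What is the capital of {country}?")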

chat_prompt

ChatPromptTemplate(input_variables=['country'], input_types={}, partial_variables={}, messages=[HumanMessagePromptTemplate(prompt=PromptTemplate(input_variables=['country'], input_types={}, partial_variables={}, template='What is the capital of {country}?'), additional_kwargs={})])

Note that the prompt is wrapped in a HumanMessagePromptTemplate and sits inside a list.

chat_prompt.format(country="United States of America")

'Human: What is the capital of United States of America?'

Multiple roles

Use ChatPromptTemplate.from_messages to define the template. It takes a list of chat messages, each written as a (role, message) tuple.

from langchain_core.prompts import ChatPromptTemplate

chat_template = ChatPromptTemplate.from_messages(
    [
        # role, message
        ("system", "You are a friendly AI assistant. Your name is {name}."),
        ("human", "Nice to meet you!"),
        ("ai", "Hello! How can I assist you?"),
        ("human", "{user_input}"),
    ]
)

# Create chat messages

messages = chat_template.format_messages(name="Teddy", user_input="What is your name?")

messages

[SystemMessage(content='You are a friendly AI assistant. Your name is Teddy.', additional_kwargs={}, response_metadata={}),
 HumanMessage(content='Nice to meet you!', additional_kwargs={}, response_metadata={}),
 AIMessage(content='Hello! How can I assist you?', additional_kwargs={}, response_metadata={}),
 HumanMessage(content='What is your name?', additional_kwargs={}, response_metadata={})]
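Conceptually, formatting a chat template means filling the placeholders in each (role, message) pair while keeping the roles and order intact. The following is a stdlib-only sketch of that idea with hypothetical helper names; it uses plain tuples rather than LangChain message objects.

```python
ROLE_LABELS = {"system": "System", "human": "Human", "ai": "AI"}


def format_messages(template: list[tuple[str, str]], **values) -> list[tuple[str, str]]:
    """Fill the placeholders in every (role, message) pair."""
    return [(role, text.format(**values)) for role, text in template]


def to_transcript(messages: list[tuple[str, str]]) -> str:
    """Render messages as 'Role: text' lines, one per message."""
    return "\n".join(f"{ROLE_LABELS[role]}: {text}" for role, text in messages)


chat_template = [
    ("system", "You are a friendly AI assistant. Your name is {name}."),
    ("human", "Nice to meet you!"),
    ("ai", "Hello! How can I assist you?"),
    ("human", "{user_input}"),
]

messages = format_messages(chat_template, name="Teddy", user_input="What is your name?")
transcript = to_transcript(messages)
print(transcript)
```

The transcript form mirrors the "Human: ..." string that `format` returned earlier, while the list form corresponds to what `format_messages` produces.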

The message list above can be passed to the model directly.

from langchain_openai import ChatOpenAI

llm = ChatOpenAI(
    base_url='http://localhost:5551/v1',
    api_key='EMPTY',
    model_name='Qwen2.5-7B-Instruct',
    temperature=0.2,
)

llm.invoke(messages).content

"My name is Teddy. It's nice to meet you! How can I help you today?"

MessagesPlaceholder

LangChain provides MessagesPlaceholder, which is useful for:

- Adding flexibility when you are not sure which roles will be part of the message prompt template.

- Inserting a list of messages at format time, which suits dynamic conversation history.

from langchain_core.output_parsers import StrOutputParser
from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder

chat_prompt = ChatPromptTemplate.from_messages(
    [
        (
            "system",
            "You are a summarization specialist AI assistant. Your mission is to summarize conversations using key points.",
        ),
        MessagesPlaceholder(variable_name="conversation"),
        ("human", "Summarize the conversation so far in {word_count} words."),
    ]
)

chat_prompt

ChatPromptTemplate(input_variables=['conversation', 'word_count'], input_types={'conversation': list[typing.Annotated[typing.Union[typing.Annotated[langchain_core.messages.ai.AIMessage, Tag(tag='ai')], typing.Annotated[langchain_core.messages.human.HumanMessage, Tag(tag='human')], typing.Annotated[langchain_core.messages.chat.ChatMessage, Tag(tag='chat')], typing.Annotated[langchain_core.messages.system.SystemMessage, Tag(tag='system')], typing.Annotated[langchain_core.messages.function.FunctionMessage, Tag(tag='function')], typing.Annotated[langchain_core.messages.tool.ToolMessage, Tag(tag='tool')], typing.Annotated[langchain_core.messages.ai.AIMessageChunk, Tag(tag='AIMessageChunk')], typing.Annotated[langchain_core.messages.human.HumanMessageChunk, Tag(tag='HumanMessageChunk')], typing.Annotated[langchain_core.messages.chat.ChatMessageChunk, Tag(tag='ChatMessageChunk')], typing.Annotated[langchain_core.messages.system.SystemMessageChunk, Tag(tag='SystemMessageChunk')], typing.Annotated[langchain_core.messages.function.FunctionMessageChunk, Tag(tag='FunctionMessageChunk')], typing.Annotated[langchain_core.messages.tool.ToolMessageChunk, Tag(tag='ToolMessageChunk')]], FieldInfo(annotation=NoneType, required=True, discriminator=Discriminator(discriminator=<function _get_type at 0x7ff1a966cfe0>, custom_error_type=None, custom_error_message=None, custom_error_context=None))]]}, partial_variables={}, messages=[SystemMessagePromptTemplate(prompt=PromptTemplate(input_variables=[], input_types={}, partial_variables={}, template='You are a summarization specialist AI assistant. Your mission is to summarize conversations using key points.'), additional_kwargs={}), MessagesPlaceholder(variable_name='conversation'), HumanMessagePromptTemplate(prompt=PromptTemplate(input_variables=['word_count'], input_types={}, partial_variables={}, template='Summarize the conversation so far in {word_count} words.'), additional_kwargs={})])

formatted_chat_prompt = chat_prompt.format(
    word_count=5,
    conversation=[
        ("human", "Hello! I’m Teddy. Nice to meet you."),
        ("ai", "Nice to meet you! I look forward to working with you."),
    ],
)

print(formatted_chat_prompt)

System: You are a summarization specialist AI assistant. Your mission is to summarize conversations using key points.

Human: Hello! I’m Teddy. Nice to meet you.

AI: Nice to meet you! I look forward to working with you.

Human: Summarize the conversation so far in 5 words.
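The placeholder behaves like a slot that is replaced, at format time, by however many messages the caller supplies. Below is a minimal sketch of that splicing logic using only the standard library; `Placeholder` and `expand` are hypothetical names for illustration and do not mirror LangChain internals.

```python
from dataclasses import dataclass


@dataclass
class Placeholder:
    """Marks the slot where a caller-supplied list of messages is spliced in."""
    variable_name: str


def expand(template: list, **values) -> list[tuple[str, str]]:
    """Fill (role, text) pairs and replace each Placeholder with its message list."""
    # Only non-list values are used for text substitution.
    scalars = {k: v for k, v in values.items() if not isinstance(v, list)}
    messages = []
    for item in template:
        if isinstance(item, Placeholder):
            messages.extend(values[item.variable_name])
        else:
            role, text = item
            messages.append((role, text.format(**scalars)))
    return messages


template = [
    ("system", "You are a summarization specialist AI assistant."),
    Placeholder("conversation"),
    ("human", "Summarize the conversation so far in {word_count} words."),
]

history = [
    ("human", "Hello! I'm Teddy. Nice to meet you."),
    ("ai", "Nice to meet you! I look forward to working with you."),
]

messages = expand(template, word_count=5, conversation=history)
```

Passing a longer or shorter `history` changes the number of resulting messages without any change to the template.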

Few-Shot Prompting

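LangChain's few-shot prompting support gives you a framework for steering a language model toward high-quality output by supplying carefully chosen examples. The technique reduces the need for extensive fine-tuning while still producing accurate, context-appropriate results across many applications.

- Few-shot prompt templates:

  - Define the structure and format of the prompt by embedding examples, guiding the model toward consistent output.

- Example selection strategies:

  - Dynamically pick the examples most relevant to a given query, improving the model's grasp of context and the accuracy of its responses.

- Chroma vector store:

  - Stores and retrieves examples by semantic similarity, giving a scalable and efficient way to build prompts.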

FewShotPromptTemplate

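Few-shot prompting guides a language model toward accurate, context-appropriate output by giving it a small number of well-designed examples. LangChain's FewShotPromptTemplate streamlines this, letting you build flexible, reusable prompts for tasks such as question answering, summarization, and text correction.

1. Designing few-shot prompts

- Define examples that show the desired output structure and style.

- Make sure the examples cover edge cases, to improve the model's understanding and performance.

2. Dynamic example selection

- Use semantic similarity or vector search to select the examples most relevant to the input query.

3. Integrating few-shot prompts

- Combine prompt templates with a language model into a chain that generates high-quality responses.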
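Dynamic example selection can be illustrated without a vector database. The sketch below is a toy: it replaces real embeddings with bag-of-words counts and ranks examples by cosine similarity. The helper names are hypothetical, and a production setup would typically use an embedding model and a vector store instead.

```python
import math
import re
from collections import Counter


def bag_of_words(text: str) -> Counter:
    """Lower-case word counts; a crude stand-in for a real embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def select_examples(query: str, examples: list[dict], k: int = 1) -> list[dict]:
    """Return the k examples whose question is most similar to the query."""
    q = bag_of_words(query)
    ranked = sorted(
        examples,
        key=lambda ex: cosine(q, bag_of_words(ex["question"])),
        reverse=True,
    )
    return ranked[:k]


examples = [
    {"question": "Who lived longer, Steve Jobs or Einstein?"},
    {"question": "When was the founder of Naver born?"},
    {"question": "Are the directors of Oldboy and Parasite from the same country?"},
]

best = select_examples("Which country is the director of Parasite from?", examples, k=1)
print(best[0]["question"])
```

Word overlap is a weak proxy for meaning, which is exactly why embedding-based similarity is preferred in practice.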

from langchain_openai import ChatOpenAI

llm = ChatOpenAI(
    base_url='http://localhost:5551/v1',
    api_key='EMPTY',
    model_name='Qwen2.5-7B-Instruct',
    temperature=0.2,
)

# User query
question = "What is the capital of United States of America?"

# Query the model
response = llm.invoke(question)

# Print the response
print(response.content)

The capital of the United States of America is Washington, D.C.

Below is an example chain-of-thought (CoT) prompt.

from langchain_core.prompts import PromptTemplate, FewShotPromptTemplate

# Define examples for the few-shot prompt
examples = [
    {
        "question": "Who lived longer, Steve Jobs or Einstein?",
        "answer": """Does this question require additional questions: Yes.
Additional Question: At what age did Steve Jobs die?
Intermediate Answer: Steve Jobs died at the age of 56.
Additional Question: At what age did Einstein die?
Intermediate Answer: Einstein died at the age of 76.
The final answer is: Einstein
""",
    },
    {
        "question": "When was the founder of Naver born?",
        "answer": """Does this question require additional questions: Yes.
Additional Question: Who is the founder of Naver?
Intermediate Answer: Naver was founded by Lee Hae-jin.
Additional Question: When was Lee Hae-jin born?
Intermediate Answer: Lee Hae-jin was born on June 22, 1967.
The final answer is: June 22, 1967
""",
    },
    {
        "question": "Who was the reigning king when Yulgok Yi's mother was born?",
        "answer": """Does this question require additional questions: Yes.
Additional Question: Who is Yulgok Yi's mother?
Intermediate Answer: Yulgok Yi's mother is Shin Saimdang.
Additional Question: When was Shin Saimdang born?
Intermediate Answer: Shin Saimdang was born in 1504.
Additional Question: Who was the king of Joseon in 1504?
Intermediate Answer: The king of Joseon in 1504 was Yeonsangun.
The final answer is: Yeonsangun
""",
    },
    {
        "question": "Are the directors of Oldboy and Parasite from the same country?",
        "answer": """Does this question require additional questions: Yes.
Additional Question: Who is the director of Oldboy?
Intermediate Answer: The director of Oldboy is Park Chan-wook.
Additional Question: Which country is Park Chan-wook from?
Intermediate Answer: Park Chan-wook is from South Korea.
Additional Question: Who is the director of Parasite?
Intermediate Answer: The director of Parasite is Bong Joon-ho.
Additional Question: Which country is Bong Joon-ho from?
Intermediate Answer: Bong Joon-ho is from South Korea.
The final answer is: Yes
""",
    },
]

# Template used to render each example
example_prompt = PromptTemplate.from_template(
    "Question:\n{question}\nAnswer:\n{answer}"
)

# Print the first formatted example
print(example_prompt.format(**examples[0]))

Question:

Who lived longer, Steve Jobs or Einstein?

Answer:

Does this question require additional questions: Yes.

Additional Question: At what age did Steve Jobs die?

Intermediate Answer: Steve Jobs died at the age of 56.

Additional Question: At what age did Einstein die?

Intermediate Answer: Einstein died at the age of 76.

The final answer is: Einstein

The FewShotPromptTemplate below formats each entry in examples with example_prompt and places them ahead of the actual question. The actual question is rendered using the suffix format.

# Initialize the FewShotPromptTemplate
few_shot_prompt = FewShotPromptTemplate(
    examples=examples,
    example_prompt=example_prompt,
    suffix="Question:\n{question}\nAnswer:",
    input_variables=["question"],
)

# Example question
question = "How old was Bill Gates when Google was founded?"

# Generate the final prompt

final_prompt = few_shot_prompt.format(question=question)

print(final_prompt)

Question:

Who lived longer, Steve Jobs or Einstein?

Answer:

Does this question require additional questions: Yes.

Additional Question: At what age did Steve Jobs die?

Intermediate Answer: Steve Jobs died at the age of 56.

Additional Question: At what age did Einstein die?

Intermediate Answer: Einstein died at the age of 76.

The final answer is: Einstein

Question:

When was the founder of Naver born?

Answer:

Does this question require additional questions: Yes.

Additional Question: Who is the founder of Naver?

Intermediate Answer: Naver was founded by Lee Hae-jin.

Additional Question: When was Lee Hae-jin born?

Intermediate Answer: Lee Hae-jin was born on June 22, 1967.

The final answer is: June 22, 1967

Question:

Who was the reigning king when Yulgok Yi's mother was born?

Answer:

Does this question require additional questions: Yes.

Additional Question: Who is Yulgok Yi's mother?

Intermediate Answer: Yulgok Yi's mother is Shin Saimdang.

Additional Question: When was Shin Saimdang born?

Intermediate Answer: Shin Saimdang was born in 1504.

Additional Question: Who was the king of Joseon in 1504?

Intermediate Answer: The king of Joseon in 1504 was Yeonsangun.

The final answer is: Yeonsangun

Question:

Are the directors of Oldboy and Parasite from the same country?

Answer:

Does this question require additional questions: Yes.

Additional Question: Who is the director of Oldboy?

Intermediate Answer: The director of Oldboy is Park Chan-wook.

Additional Question: Which country is Park Chan-wook from?

Intermediate Answer: Park Chan-wook is from South Korea.

Additional Question: Who is the director of Parasite?

Intermediate Answer: The director of Parasite is Bong Joon-ho.

Additional Question: Which country is Bong Joon-ho from?

Intermediate Answer: Bong Joon-ho is from South Korea.

The final answer is: Yes

Question:

How old was Bill Gates when Google was founded?

Answer:
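The assembled prompt above is essentially "formatted examples, then the formatted suffix, joined by a separator". A stdlib-only sketch of that assembly follows; `build_few_shot_prompt` is a hypothetical helper, the answers are shortened for brevity, and the blank-line separator is chosen to match FewShotPromptTemplate's default.

```python
def build_few_shot_prompt(examples, example_template, suffix, separator="\n\n", **values):
    """Join formatted examples and the formatted suffix with `separator`."""
    parts = [example_template.format(**ex) for ex in examples]
    parts.append(suffix.format(**values))
    return separator.join(parts)


examples = [
    {
        "question": "Who lived longer, Steve Jobs or Einstein?",
        "answer": "The final answer is: Einstein",
    },
    {
        "question": "When was the founder of Naver born?",
        "answer": "The final answer is: June 22, 1967",
    },
]

prompt_text = build_few_shot_prompt(
    examples,
    example_template="Question:\n{question}\nAnswer:\n{answer}",
    suffix="Question:\n{question}\nAnswer:",
    question="How old was Bill Gates when Google was founded?",
)
print(prompt_text)
```

Ending the prompt with a bare "Answer:" is what nudges the model to continue in the same format as the examples.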

response = llm.invoke(final_prompt)

print(response.content)

Does this question require additional questions: Yes.

Additional Question: When was Google founded?

Intermediate Answer: Google was founded in 1998.

Additional Question: When was Bill Gates born?

Intermediate Answer: Bill Gates was born on October 28, 1955.

The final answer is: Bill Gates was 43 years old when Google was founded.
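The model's final answer appears to come from subtracting the two years. A quick check with exact dates shows how the result depends on the day used. This sketch assumes Google's incorporation date of September 4, 1998, which is not stated in the original; the birth date is taken from the model's intermediate answer.

```python
from datetime import date


def age_on(birth: date, day: date) -> int:
    """Whole years elapsed between `birth` and `day`."""
    had_birthday = (day.month, day.day) >= (birth.month, birth.day)
    return day.year - birth.year - (0 if had_birthday else 1)


gates_birth = date(1955, 10, 28)    # from the model's intermediate answer
google_founded = date(1998, 9, 4)   # assumption: incorporation date, not in the original

year_difference = google_founded.year - gates_birth.year
exact_age = age_on(gates_birth, google_founded)

print(year_difference, exact_age)
```

Under that assumption the year difference is 43 but the exact age is 42, so intermediate answers in a CoT response are worth verifying when precision matters.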

Special-purpose prompts

RAG document analysis

Processes and answers questions based on retrieved document context, aiming for high accuracy and relevance.

from langchain_core.prompts import ChatPromptTemplate

system = """You are a precise and helpful AI assistant specializing in question-answering tasks based on provided context.
Your primary task is to:
1. Analyze the provided context thoroughly
2. Answer questions using ONLY the information from the context
3. Preserve technical terms and proper nouns exactly as they appear
4. If the answer cannot be found in the context, respond with: 'The provided context does not contain information to answer this question.'
5. Format responses in clear, readable paragraphs with relevant examples when available
6. Focus on accuracy and clarity in your responses
"""

human = """#Question:
{question}

#Context:
{context}

#Answer:
Please provide a focused, accurate response that directly addresses the question using only the information from the provided context."""

prompt = ChatPromptTemplate.from_messages([("system", system), ("human", human)])

prompt

ChatPromptTemplate(input_variables=['context', 'question'], input_types={}, partial_variables={}, messages=[SystemMessagePromptTemplate(prompt=PromptTemplate(input_variables=[], input_types={}, partial_variables={}, template="You are a precise and helpful AI assistant specializing in question-answering tasks based on provided context.\nYour primary task is to:\n1. Analyze the provided context thoroughly\n2. Answer questions using ONLY the information from the context\n3. Preserve technical terms and proper nouns exactly as they appear\n4. If the answer cannot be found in the context, respond with: 'The provided context does not contain information to answer this question.'\n5. Format responses in clear, readable paragraphs with relevant examples when available\n6. Focus on accuracy and clarity in your responses\n"), additional_kwargs={}), HumanMessagePromptTemplate(prompt=PromptTemplate(input_variables=['context', 'question'], input_types={}, partial_variables={}, template='#Question:\n{question}\n\n#Context:\n{context}\n\n#Answer:\nPlease provide a focused, accurate response that directly addresses the question using only the information from the provided context.'), additional_kwargs={})])
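To show how the {question} and {context} slots might be filled in practice, here is a small stdlib-only sketch. The retrieved passages are made up for illustration, and `build_human_message` is a hypothetical helper; in a real pipeline the passages would come from a retriever.

```python
HUMAN_TEMPLATE = """#Question:
{question}

#Context:
{context}

#Answer:
Please provide a focused, accurate response that directly addresses the question using only the information from the provided context."""


def build_human_message(question: str, documents: list[str]) -> str:
    """Join retrieved passages into one context block and fill the template."""
    context = "\n\n".join(doc.strip() for doc in documents)
    return HUMAN_TEMPLATE.format(question=question, context=context)


# Hypothetical retrieved passages, for illustration only.
docs = [
    "Partial variables act as defaults for missing inputs.  ",
    "Explicit values passed at format time override partial variables.",
]
message = build_human_message("What do partial variables do?", docs)
print(message)
```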

RAG with Source Attribution

An enhanced RAG prompt that supports detailed source tracking and citation, improving traceability and making answers easier to verify.

from langchain import hub
from langchain_core.prompts import ChatPromptTemplate

system = """You are a precise and thorough AI assistant that provides well-documented answers with source attribution.
Your responsibilities include:
1. Analyzing provided context thoroughly
2. Generating accurate answers based solely on the given context
3. Including specific source references for each key point
4. Preserving technical terminology exactly as presented
5. Maintaining clear citation format [source: page/document]
6. If information is not found in the context, state: 'The provided context does not contain information to answer this question.'

Format your response as:
1. Main Answer
2. Sources Used (with specific locations)
3. Confidence Level (High/Medium/Low)"""

human = """#Question:
{question}

#Context:
{context}

#Answer:
Please provide a detailed response with source citations using only information from the provided context."""

prompt = ChatPromptTemplate.from_messages([("system", system), ("human", human)])

# Optional: publish the prompt to LangChain Hub
PROMPT_OWNER = "eun"
prompt_title = "rag-with-source-attribution"  # hypothetical name: `prompt_title` is not defined in the original

hub.push(f"{PROMPT_OWNER}/{prompt_title}", prompt, new_repo_is_public=True)

In effect, this simply adds a source-citation requirement to the answer instructions.
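For the model to cite sources in the `[source: page/document]` format, the context it receives has to carry that information. One possible way to prepare such a context is sketched below; the field names (`source`, `page`, `text`) and the sample chunks are assumptions made for this example.

```python
def format_context_with_sources(documents: list[dict]) -> str:
    """Prefix each passage with a [source: ...] tag the model can copy into citations."""
    blocks = []
    for doc in documents:
        tag = f"[source: {doc['source']}, page {doc['page']}]"
        blocks.append(f"{tag}\n{doc['text'].strip()}")
    return "\n\n".join(blocks)


# Hypothetical retrieved chunks; the field names are assumptions for this sketch.
documents = [
    {"source": "handbook.pdf", "page": 3, "text": "Partial variables act as defaults."},
    {"source": "notes.md", "page": 1, "text": "Explicit values override partial variables."},
]
context = format_context_with_sources(documents)
print(context)
```

The resulting string can be passed as the `context` variable of the prompt defined above.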

LLM Response Evaluation

Evaluates an LLM response against several quality criteria and spells out the scoring method in detail.

from langchain_core.prompts import PromptTemplate

# Literal braces in the JSON sample are doubled ({{ }}) so they are not parsed as template variables.
evaluation_prompt = """Evaluate the LLM's response based on the following criteria:

INPUT:
Question: {question}
Context: {context}
LLM Response: {answer}

EVALUATION CRITERIA:
1. Accuracy (0-10)
- Perfect (10): Completely accurate, perfectly aligned with context
- Good (7-9): Minor inaccuracies
- Fair (4-6): Some significant inaccuracies
- Poor (0-3): Major inaccuracies or misalignment

2. Completeness (0-10)
- Perfect (10): Comprehensive coverage of all relevant points
- Good (7-9): Covers most important points
- Fair (4-6): Missing several key points
- Poor (0-3): Critically incomplete

3. Context Relevance (0-10)
- Perfect (10): Optimal use of context
- Good (7-9): Good use with minor omissions
- Fair (4-6): Partial use of relevant context
- Poor (0-3): Poor context utilization

4. Clarity (0-10)
- Perfect (10): Exceptionally clear and well-structured
- Good (7-9): Clear with minor issues
- Fair (4-6): Somewhat unclear
- Poor (0-3): Confusing or poorly structured

SCORING METHOD:
1. Calculate individual scores
2. Compute weighted average:
   - Accuracy: 40%
   - Completeness: 25%
   - Context Relevance: 25%
   - Clarity: 10%
3. Normalize to 0-1 scale

OUTPUT FORMAT:
{{
    "individual_scores": {{
        "accuracy": float,
        "completeness": float,
        "context_relevance": float,
        "clarity": float
    }},
    "weighted_score": float,
    "normalized_score": float,
    "evaluation_notes": string
}}

Return ONLY the normalized_score as a decimal between 0 and 1."""

prompt = PromptTemplate.from_template(evaluation_prompt)
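The scoring method in the prompt is a plain weighted average followed by a division by 10. The sketch below works through it in code, which can serve as a sanity check on the number the model returns. The function name and the sample scores are made up for illustration.

```python
WEIGHTS = {
    "accuracy": 0.40,
    "completeness": 0.25,
    "context_relevance": 0.25,
    "clarity": 0.10,
}


def score(individual: dict[str, float]) -> dict[str, float]:
    """Combine 0-10 criterion scores into a weighted score and a 0-1 normalized score."""
    for name, value in individual.items():
        if not 0 <= value <= 10:
            raise ValueError(f"{name} must be between 0 and 10, got {value}")
    weighted = sum(WEIGHTS[name] * individual[name] for name in WEIGHTS)
    return {"weighted_score": weighted, "normalized_score": weighted / 10}


# Hypothetical criterion scores:
# 0.40*8 + 0.25*7 + 0.25*9 + 0.10*6 = 3.2 + 1.75 + 2.25 + 0.6 = 7.8
result = score({"accuracy": 8, "completeness": 7, "context_relevance": 9, "clarity": 6})
print(result)
```

With these sample scores the weighted score is about 7.8 and the normalized score about 0.78.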

相关文章:

langchain教程-2.prompt

前言 该系列教程的代码: https://github.com/shar-pen/Langchain-MiniTutorial 我主要参考 langchain 官方教程, 有选择性的记录了一下学习内容 这是教程清单 1.初试langchain2.prompt3.OutputParser/输出解析4.model/vllm模型部署和langchain调用5.DocumentLoader/多种文档…...

GGML、GGUF、GPTQ 都是啥?

GGML、GGUF和GPTQ是三种与大型语言模型(LLM)量化和优化相关的技术和格式。它们各自有不同的特点和应用场景,下面将详细解释: 1. GGML(GPT-Generated Model Language) 定义:GGML是一种专为机器学习设计的张量库,由Georgi Gerganov创建。它最初的目标是通过单一文件格式…...

C++ 原码、反码、补码和位操作符

目录 一、原码、反码、补码 二、位操作符 1、左移操作符是双⽬操作符 移位规则:左边抛弃、右边补 0 2、右移操作符是双⽬操作符 逻辑右移:左边⽤ 0 填充,右边丢弃算术右移:左边⽤原该值的符号位填充,右边丢弃 3、…...

idea中git版本回退

idea中git版本回退 将dev分支代码合并到master分支执行回退步骤 将dev分支代码合并到master分支 #合并成功之后 执行回退步骤 #在指定的版本上 右键 #这里选择【Hard】彻底回退 #本地的master分支回退成功 #将本地的master强制推送到远程,需要执行命令 git p…...

)】)

【PostgreSQL内核学习 —— (WindowAgg(三))】

WindowAgg set_subquery_pathlist 部分函数解读check_and_push_window_quals 函数find_window_run_conditions 函数执行案例总结 计划器模块(set_plan_refs函数)set_windowagg_runcondition_references 函数执行案例 fix_windowagg_condition_expr 函数f…...

redis教程

Redis 教程 Redis 是一个开源的内存数据结构存储系统,用作数据库、缓存和消息代理。以下是一些基础知识和常用操作。 一、简介 Redis 支持多种数据结构,如字符串、哈希、列表、集合、有序集合等。它具有高性能、高可用性和数据持久化的特性。 二、安…...

Python aiortc API

本研究的主要目的是基于Python aiortc api实现抓取本地设备媒体流(摄像机、麦克风)并与Web端实现P2P通话。本文章仅仅描述实现思路,索要源码请私信我。 1 demo-server解耦 1.1 原始代码解析 1.1.1 http服务器端 import argparse import …...

Transaction rolled back because it has been marked as rollback-only问题解决

1、背景 在我们的日常开发中,经常会存在在一个Service层中调用另外一个Service层的方法。比如:我们有一个TaskService,里面有一个execTask方法,且这个方法存在事物,这个方法在执行完之后,需要调用LogServi…...

深入浅出 DeepSeek V2 高效的MoE语言模型

今天,我们来聊聊 DeepSeek V2 高效的 MoE 语言模型,带大家一起深入理解这篇论文的精髓,同时,告诉大家如何将这些概念应用到实际中。 🌟 什么是 MoE?——Mixture of Experts(专家混合模型&#x…...

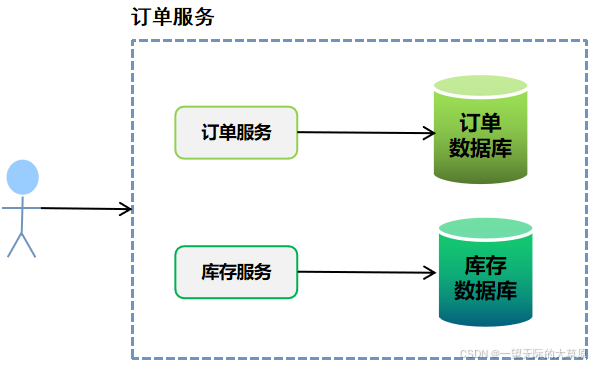

读书笔记--分布式架构的异步化和缓存技术原理及应用场景

本篇是在上一篇的基础上,主要对分布式应用架构下的异步化机制和缓存技术进行学习,主要记录和思考如下,供大家学习参考。大家知道原来传统的单一WAR应用中,由于所有数据都在同一个数据库中,因此事务问题一般借助数据库事…...

售后板子HDMI无输出分析

问题: 某产品售后有1例HDMI无输出。 分析: 1、测试HDMI的HPD脚(HDMI座子的19pin),测试电压4.5V,属于正常。 2、用万用表直流电压档,测试HDMI的3对数据脚和1对时钟脚(板子通过HDM…...

python3处理表格常用操作

使用pandas库读取excel文件 import pandas as pd data pd.read_excel(D:\\飞书\\近一年用量.xlsx)指定工作表 import pandas as pd data pd.read_excel(D:\\飞书\\近一年用量.xlsx, sheet_nameSheet1)读取日期格式 data pd.read_excel(example.xlsx, parse_dates[Date])添…...

)

AUX接口(Auxiliary Port)

AUX接口(Auxiliary Port)是网络设备(如路由器、交换机等)上的一个辅助端口,主要用于设备的配置、管理和维护。以下是关于AUX接口的一些关键点: ### 1. **功能** - **设备配置**:通过AUX接口连接…...

计算机毕业设计Python+Vue.js游戏推荐系统 Steam游戏推荐系统 Django Flask 游 戏可视化 游戏数据分析 游戏大数据 爬虫

温馨提示:文末有 CSDN 平台官方提供的学长联系方式的名片! 温馨提示:文末有 CSDN 平台官方提供的学长联系方式的名片! 温馨提示:文末有 CSDN 平台官方提供的学长联系方式的名片! 作者简介:Java领…...

【梦想终会实现】Linux驱动学习5

加油加油坚持住! 1、 Linux驱动模型:驱动模型即将各模型中共有的部分抽象成C结构体。Linux2.4版本前无驱动模型的概念,每个驱动写的代码因人而异,随后为规范书写方式,发明了驱动模型,即提取公共信息组成一…...

Spring 核心技术解析【纯干货版】-Spring 数据访问模块 Spring-Jdbc

在 Spring 框架中,有一个重要的子项目叫做 spring-jdbc。这个模块提供了一种方 便的编程方式来访问基于 JDBC(Java数据库连接)的数据源。本篇博客将详细解析 Spring JDBC 的主要组件和用法,以帮助你更好地理解并使用这个强大的工具…...

Docker 安装详细教程(适用于CentOS 7 系统)

目录 步骤如下: 1. 卸载旧版 Docker 2. 配置 Docker 的 YUM 仓库 3. 安装 Docker 4. 启动 Docker 并验证安装 5. 配置 Docker 镜像加速 总结 前言 Docker 分为 CE 和 EE 两大版本。CE即社区版(免费,支持周期7个月)…...

Mac本地部署DeekSeek-R1下载太慢怎么办?

Ubuntu 24 本地安装DeekSeek-R1 在命令行先安装ollama curl -fsSL https://ollama.com/install.sh | sh 下载太慢,使用讯雷,mac版下载链接 https://ollama.com/download/Ollama-darwin.zip 进入网站 deepseek-r1:8b,看内存大小4G就8B模型 …...

《Angular之image loading 404》

前言: 千锤万凿出深山,烈火焚烧若等闲。 正文: 一。问题描述 页面加载图片,报错404 二。问题定位 页面需要加载图片,本地开发写成硬编码的形式请求图片资源: 然而部署到服务器上报错404 三。解决方案 正确…...

JavaScript前后端交互-AJAX/fetch

摘自千峰教育kerwin的js教程 AJAX 1、AJAX 的优势 不需要插件的支持,原生 js 就可以使用用户体验好(不需要刷新页面就可以更新数据)减轻服务端和带宽的负担缺点: 搜索引擎的支持度不够,因为数据都不在页面上…...

)

React Native 导航系统实战(React Navigation)

导航系统实战(React Navigation) React Navigation 是 React Native 应用中最常用的导航库之一,它提供了多种导航模式,如堆栈导航(Stack Navigator)、标签导航(Tab Navigator)和抽屉…...

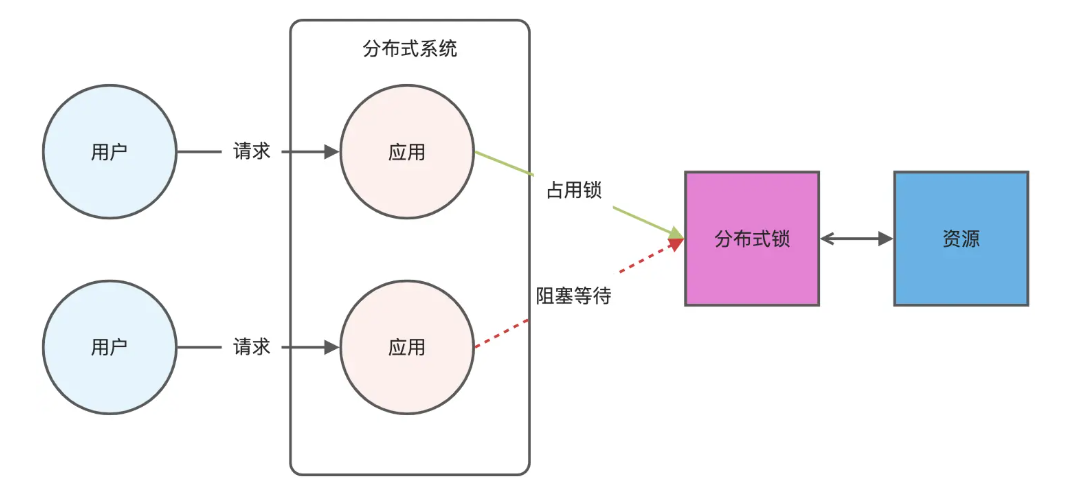

Redis相关知识总结(缓存雪崩,缓存穿透,缓存击穿,Redis实现分布式锁,如何保持数据库和缓存一致)

文章目录 1.什么是Redis?2.为什么要使用redis作为mysql的缓存?3.什么是缓存雪崩、缓存穿透、缓存击穿?3.1缓存雪崩3.1.1 大量缓存同时过期3.1.2 Redis宕机 3.2 缓存击穿3.3 缓存穿透3.4 总结 4. 数据库和缓存如何保持一致性5. Redis实现分布式…...

Swift 协议扩展精进之路:解决 CoreData 托管实体子类的类型不匹配问题(下)

概述 在 Swift 开发语言中,各位秃头小码农们可以充分利用语法本身所带来的便利去劈荆斩棘。我们还可以恣意利用泛型、协议关联类型和协议扩展来进一步简化和优化我们复杂的代码需求。 不过,在涉及到多个子类派生于基类进行多态模拟的场景下,…...

【配置 YOLOX 用于按目录分类的图片数据集】

现在的图标点选越来越多,如何一步解决,采用 YOLOX 目标检测模式则可以轻松解决 要在 YOLOX 中使用按目录分类的图片数据集(每个目录代表一个类别,目录下是该类别的所有图片),你需要进行以下配置步骤&#x…...

)

GitHub 趋势日报 (2025年06月08日)

📊 由 TrendForge 系统生成 | 🌐 https://trendforge.devlive.org/ 🌐 本日报中的项目描述已自动翻译为中文 📈 今日获星趋势图 今日获星趋势图 884 cognee 566 dify 414 HumanSystemOptimization 414 omni-tools 321 note-gen …...

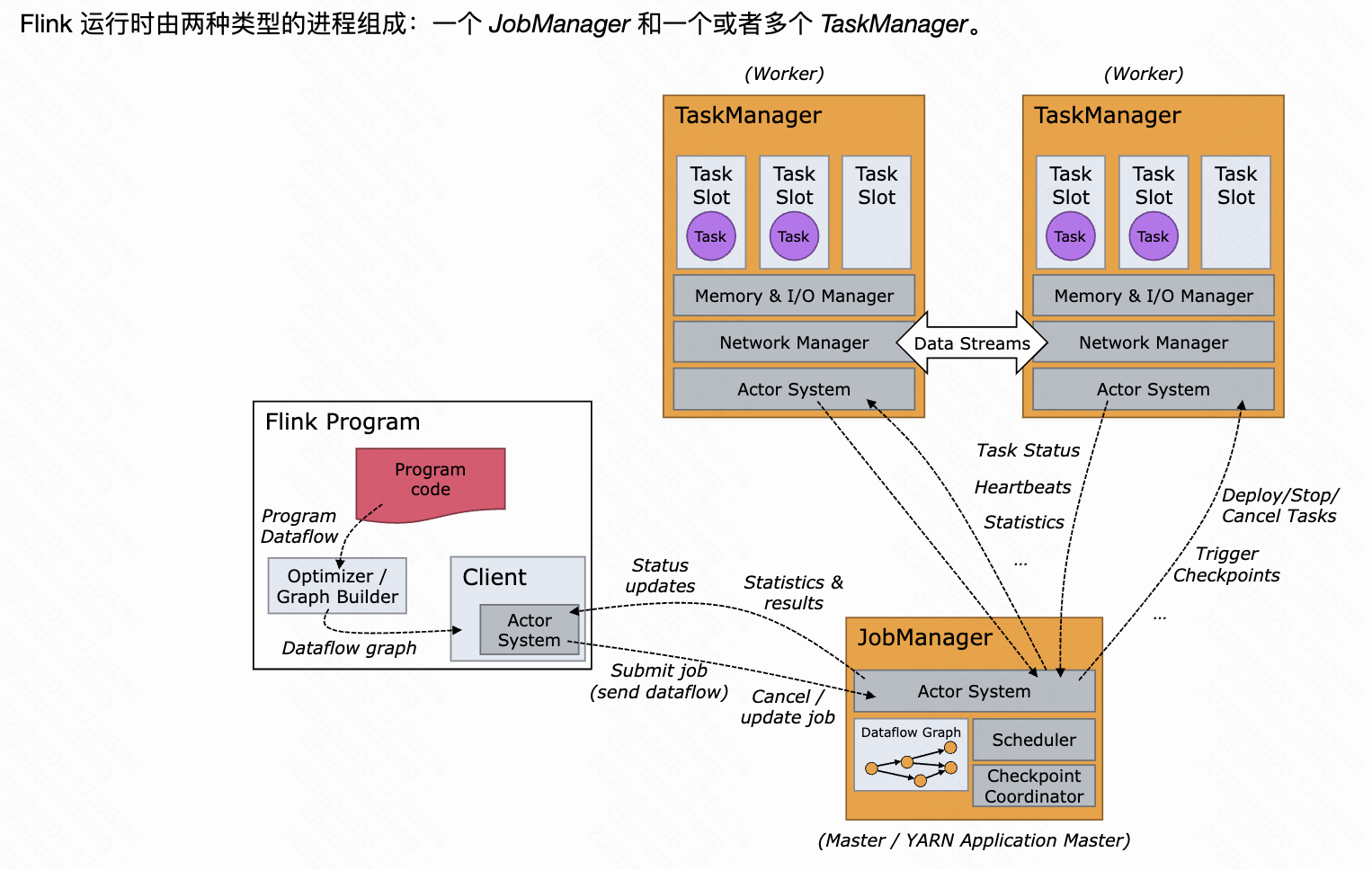

《基于Apache Flink的流处理》笔记

思维导图 1-3 章 4-7章 8-11 章 参考资料 源码: https://github.com/streaming-with-flink 博客 https://flink.apache.org/bloghttps://www.ververica.com/blog 聚会及会议 https://flink-forward.orghttps://www.meetup.com/topics/apache-flink https://n…...

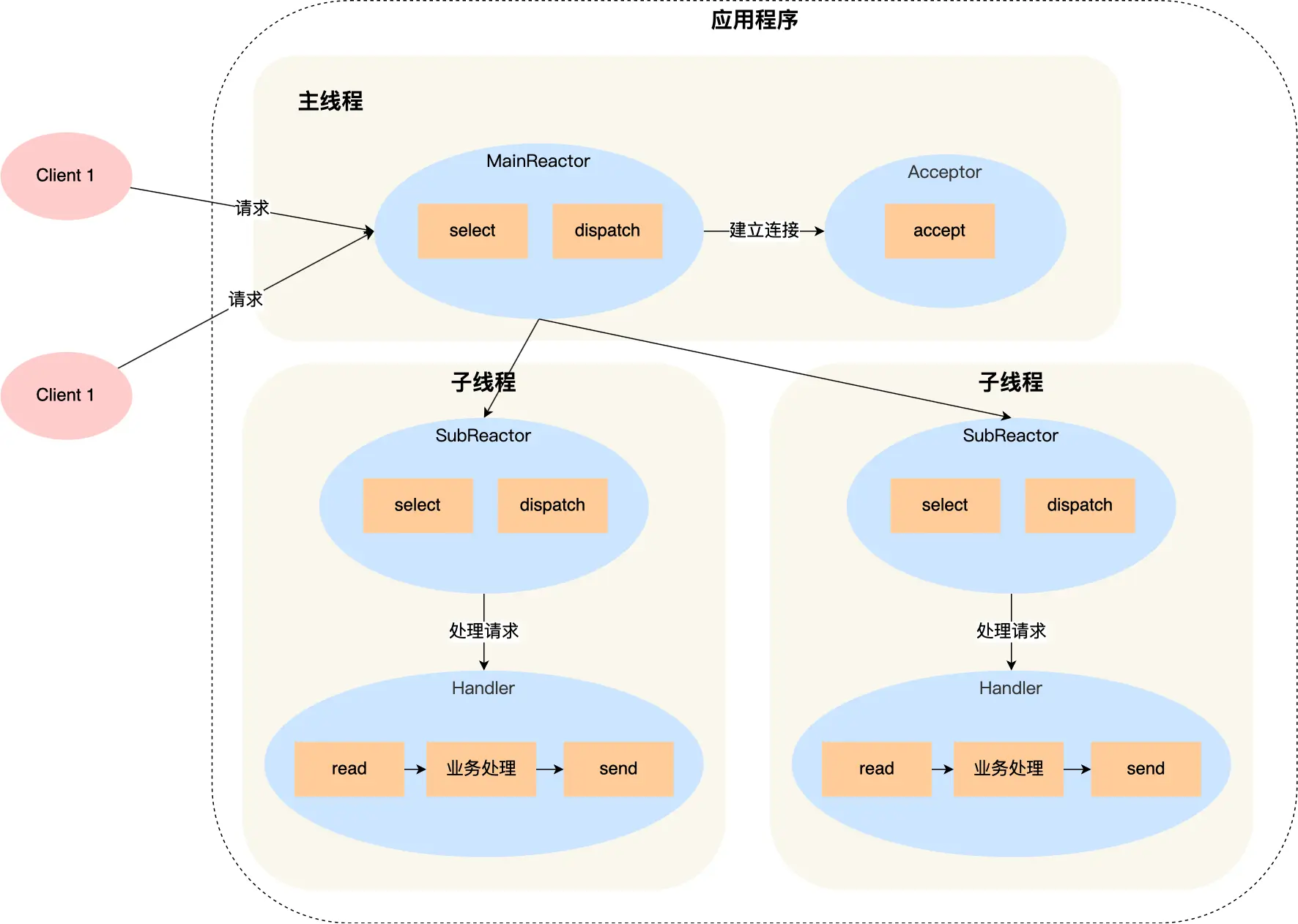

select、poll、epoll 与 Reactor 模式

在高并发网络编程领域,高效处理大量连接和 I/O 事件是系统性能的关键。select、poll、epoll 作为 I/O 多路复用技术的代表,以及基于它们实现的 Reactor 模式,为开发者提供了强大的工具。本文将深入探讨这些技术的底层原理、优缺点。 一、I…...

Hive 存储格式深度解析:从 TextFile 到 ORC,如何选对数据存储方案?

在大数据处理领域,Hive 作为 Hadoop 生态中重要的数据仓库工具,其存储格式的选择直接影响数据存储成本、查询效率和计算资源消耗。面对 TextFile、SequenceFile、Parquet、RCFile、ORC 等多种存储格式,很多开发者常常陷入选择困境。本文将从底…...

RSS 2025|从说明书学习复杂机器人操作任务:NUS邵林团队提出全新机器人装配技能学习框架Manual2Skill

视觉语言模型(Vision-Language Models, VLMs),为真实环境中的机器人操作任务提供了极具潜力的解决方案。 尽管 VLMs 取得了显著进展,机器人仍难以胜任复杂的长时程任务(如家具装配),主要受限于人…...

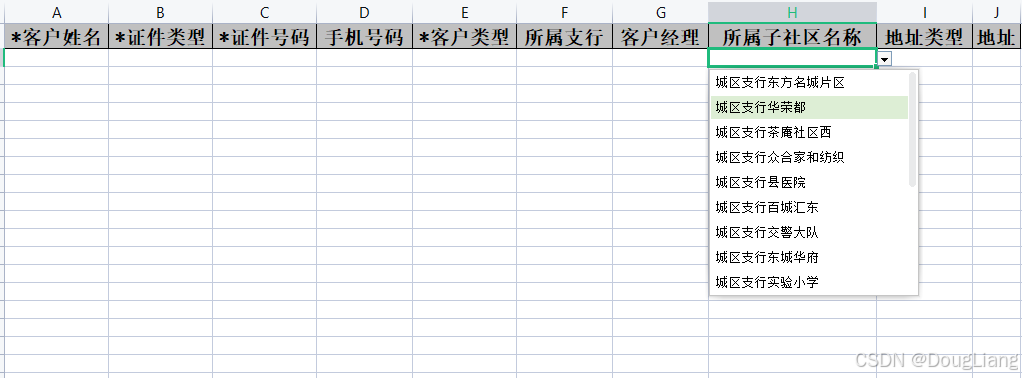

关于easyexcel动态下拉选问题处理

前些日子突然碰到一个问题,说是客户的导入文件模版想支持部分导入内容的下拉选,于是我就找了easyexcel官网寻找解决方案,并没有找到合适的方案,没办法只能自己动手并分享出来,针对Java生成Excel下拉菜单时因选项过多导…...