python环境的yolov11.rknn物体检测

1.首先是我手里生成的一个yolo11的.rknn模型:

2.比对一下yolov5的模型:

2.1 yolov5模型的后期处理:

outputs = rknn.inference(inputs=[img2], data_format=['nhwc'])np.save('./onnx_yolov5_0.npy', outputs[0])np.save('./onnx_yolov5_1.npy', outputs[1])np.save('./onnx_yolov5_2.npy', outputs[2])print('done')# post processinput0_data = outputs[0]input1_data = outputs[1]input2_data = outputs[2]input0_data = input0_data.reshape([3, -1]+list(input0_data.shape[-2:]))input1_data = input1_data.reshape([3, -1]+list(input1_data.shape[-2:]))input2_data = input2_data.reshape([3, -1]+list(input2_data.shape[-2:]))input_data = list()input_data.append(np.transpose(input0_data, (2, 3, 0, 1)))input_data.append(np.transpose(input1_data, (2, 3, 0, 1)))input_data.append(np.transpose(input2_data, (2, 3, 0, 1)))然后:

def yolov5_post_process(input_data):masks = [[0, 1, 2], [3, 4, 5], [6, 7, 8]]anchors = [[10, 13], [16, 30], [33, 23], [30, 61], [62, 45],[59, 119], [116, 90], [156, 198], [373, 326]]boxes, classes, scores = [], [], []for input, mask in zip(input_data, masks):b, c, s = process(input, mask, anchors)b, c, s = filter_boxes(b, c, s)boxes.append(b)classes.append(c)scores.append(s)然后:

def process(input, mask, anchors):anchors = [anchors[i] for i in mask]grid_h, grid_w = map(int, input.shape[0:2])box_confidence = input[..., 4]box_confidence = np.expand_dims(box_confidence, axis=-1)box_class_probs = input[..., 5:]box_xy = input[..., :2]*2 - 0.5col = np.tile(np.arange(0, grid_w), grid_w).reshape(-1, grid_w)row = np.tile(np.arange(0, grid_h).reshape(-1, 1), grid_h)col = col.reshape(grid_h, grid_w, 1, 1).repeat(3, axis=-2)row = row.reshape(grid_h, grid_w, 1, 1).repeat(3, axis=-2)grid = np.concatenate((col, row), axis=-1)box_xy += gridbox_xy *= int(IMG_SIZE/grid_h)box_wh = pow(input[..., 2:4]*2, 2)box_wh = box_wh * anchorsbox = np.concatenate((box_xy, box_wh), axis=-1)return box, box_confidence, box_class_probs3.修改1 - 基于昨天在宿主机上成功执行的onnx代码:

#!/usr/bin/env python3

# -*- coding:utf-8 -*-

import os

import sys

from math import exp

import cv2

import numpy as npROOT = os.getcwd()

if str(ROOT) not in sys.path:sys.path.append(str(ROOT))RKNN_MODEL = r'/home/firefly/app/models/sim_moonpie-640-640_rk3588.rknn'

IMG_PATH = '/home/firefly/app/images/cake26.jpg'

QUANTIZE_ON = FalseCLASSES = ['moonpie', 'bicycle', 'car', 'motorcycle', 'airplane', 'bus', 'train', 'truck', 'boat', 'traffic light','fire hydrant', 'stop sign', 'parking meter', 'bench', 'bird', 'cat', 'dog', 'horse', 'sheep', 'cow','elephant', 'bear', 'zebra', 'giraffe', 'backpack', 'umbrella', 'handbag', 'tie', 'suitcase', 'frisbee','skis', 'snowboard', 'sports ball', 'kite', 'baseball bat', 'baseball glove', 'skateboard', 'surfboard','tennis racket', 'bottle', 'wine glass', 'cup', 'fork', 'knife', 'spoon', 'bowl', 'banana', 'apple','sandwich', 'orange', 'broccoli', 'carrot', 'hot dog', 'pizza', 'donut', 'cake', 'chair', 'couch','potted plant', 'bed', 'dining table', 'toilet', 'tv', 'laptop', 'mouse', 'remote', 'keyboard', 'cell phone','microwave', 'oven', 'toaster', 'sink', 'refrigerator', 'book', 'clock', 'vase', 'scissors', 'teddy bear','hair drier', 'toothbrush', 'moonpie']meshgrid = []class_num = len(CLASSES)

headNum = 3

strides = [8, 16, 32]

mapSize = [[80, 80], [40, 40], [20, 20]]

nmsThresh = 0.45

objectThresh = 0.5input_imgH = 640

input_imgW = 640from rknn.api import RKNN

def rk3588_detect(model, pic, classes):rknn = RKNN(verbose=True)'''# model configrknn.config(mean_values=[[0, 0, 0]],std_values=[[255,255,255]],quant_img_RGB2BGR=False,target_platform='rk3588')'''rknn.load_rknn(path=model)rknn.init_runtime(target="rk3588", core_mask=RKNN.NPU_CORE_AUTO)outputs = rknn.inference(inputs=[pic], data_format=['nhwc'])return outputsclass DetectBox:def __init__(self, classId, score, xmin, ymin, xmax, ymax):self.classId = classIdself.score = scoreself.xmin = xminself.ymin = yminself.xmax = xmaxself.ymax = ymaxclass YOLOV11DetectObj:def __init__(self):passdef GenerateMeshgrid(self):for index in range(headNum):for i in range(mapSize[index][0]):for j in range(mapSize[index][1]):meshgrid.append(j + 0.5)meshgrid.append(i + 0.5)def IOU(self, xmin1, ymin1, xmax1, ymax1, xmin2, ymin2, xmax2, ymax2):xmin = max(xmin1, xmin2)ymin = max(ymin1, ymin2)xmax = min(xmax1, xmax2)ymax = min(ymax1, ymax2)innerWidth = xmax - xmininnerHeight = ymax - ymininnerWidth = innerWidth if innerWidth > 0 else 0innerHeight = innerHeight if innerHeight > 0 else 0innerArea = innerWidth * innerHeightarea1 = (xmax1 - xmin1) * (ymax1 - ymin1)area2 = (xmax2 - xmin2) * (ymax2 - ymin2)total = area1 + area2 - innerAreareturn innerArea / totaldef NMS(self, detectResult):predBoxs = []sort_detectboxs = sorted(detectResult, key=lambda x: x.score, reverse=True)for i in range(len(sort_detectboxs)):xmin1 = sort_detectboxs[i].xminymin1 = sort_detectboxs[i].yminxmax1 = sort_detectboxs[i].xmaxymax1 = sort_detectboxs[i].ymaxclassId = sort_detectboxs[i].classIdif sort_detectboxs[i].classId != -1:predBoxs.append(sort_detectboxs[i])for j in range(i + 1, len(sort_detectboxs), 1):if classId == sort_detectboxs[j].classId:xmin2 = sort_detectboxs[j].xminymin2 = sort_detectboxs[j].yminxmax2 = sort_detectboxs[j].xmaxymax2 = sort_detectboxs[j].ymaxiou = IOU(xmin1, ymin1, xmax1, ymax1, xmin2, ymin2, xmax2, ymax2)if iou > nmsThresh:sort_detectboxs[j].classId = -1return predBoxsdef sigmoid(self, x):return 1 / (1 + exp(-x))def postprocess(self, out, img_h, img_w):print('postprocess ... ')detectResult = []output = []for i in range(len(out)):print(out[i].shape)output.append(out[i].reshape((-1)))scale_h = img_h / input_imgHscale_w = img_w / input_imgWgridIndex = -2cls_index = 0cls_max = 0for index in range(headNum):reg = output[index * 2 + 0]cls = output[index * 2 + 1]for h in range(mapSize[index][0]):for w in range(mapSize[index][1]):gridIndex += 2if 1 == class_num:cls_max = sigmoid(cls[0 * mapSize[index][0] * mapSize[index][1] + h * mapSize[index][1] + w])cls_index = 0else:for cl in range(class_num):cls_val = cls[cl * mapSize[index][0] * mapSize[index][1] + h * mapSize[index][1] + w]if 0 == cl:cls_max = cls_valcls_index = clelse:if cls_val > cls_max:cls_max = cls_valcls_index = clcls_max = self.sigmoid(cls_max)if cls_max > objectThresh:regdfl = []for lc in range(4):sfsum = 0locval = 0for df in range(16):temp = exp(reg[((lc * 16) + df) * mapSize[index][0] * mapSize[index][1] + h * mapSize[index][1] + w])reg[((lc * 16) + df) * mapSize[index][0] * mapSize[index][1] + h * mapSize[index][1] + w] = tempsfsum += tempfor df in range(16):sfval = reg[((lc * 16) + df) * mapSize[index][0] * mapSize[index][1] + h * mapSize[index][1] + w] / sfsumlocval += sfval * dfregdfl.append(locval)x1 = (meshgrid[gridIndex + 0] - regdfl[0]) * strides[index]y1 = (meshgrid[gridIndex + 1] - regdfl[1]) * strides[index]x2 = (meshgrid[gridIndex + 0] + regdfl[2]) * strides[index]y2 = (meshgrid[gridIndex + 1] + regdfl[3]) * strides[index]xmin = x1 * scale_wymin = y1 * scale_hxmax = x2 * scale_wymax = y2 * scale_hxmin = xmin if xmin > 0 else 0ymin = ymin if ymin > 0 else 0xmax = xmax if xmax < img_w else img_wymax = ymax if ymax < img_h else img_hbox = DetectBox(cls_index, cls_max, xmin, ymin, xmax, ymax)detectResult.append(box)# NMSprint('detectResult:', len(detectResult))predBox = self.NMS(detectResult)return predBoxdef precess_image(self, img_src, resize_w, resize_h):print(f'{type(img_src)}')image = cv2.resize(img_src, (resize_w, resize_h), interpolation=cv2.INTER_LINEAR)image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)image = image.astype(np.float32)image /= 255.0return imagedef detect(self, img_path):self.GenerateMeshgrid()orig = cv2.imread(img_path)if orig is None:print(f"无法读取图像: {img_path}")returnimg_h, img_w = orig.shape[:2]image = self.precess_image(orig, input_imgW, input_imgH)image = image.transpose((2, 0, 1))image = np.expand_dims(image, axis=0)#image = np.ones((1, 3, 640, 640), dtype=np.uint8)# print(image.shape)#ort_session = ort.InferenceSession(ONNX_MODEL)#pred_results = (ort_session.run(None, {'data': image}))pred_results = rk3588_detect(RKNN_MODEL, image, CLASSES)out = []for i in range(len(pred_results)):out.append(pred_results[i])predbox = self.postprocess(out, img_h, img_w)print('obj num is :', len(predbox))for i in range(len(predbox)):xmin = int(predbox[i].xmin)ymin = int(predbox[i].ymin)xmax = int(predbox[i].xmax)ymax = int(predbox[i].ymax)classId = predbox[i].classIdscore = predbox[i].scorecv2.rectangle(orig, (xmin, ymin), (xmax, ymax), (0, 255, 0), 2)ptext = (xmin, ymin)title = CLASSES[classId] + "%.2f" % scorecv2.putText(orig, title, ptext, cv2.FONT_HERSHEY_SIMPLEX, 0.6, (0, 0, 255), 2, cv2.LINE_AA)cv2.imwrite('./test_onnx_result.jpg', orig)if __name__ == '__main__':print('This is main ....')img_path = IMG_PATHobj = YOLOV11DetectObj()obj.detect(img_path)输出不对:

firefly@firefly:~/app/test$ python3 ./detect_rk3588.py

This is main ....

<class 'numpy.ndarray'>

I rknn-toolkit2 version: 2.3.0

I target set by user is: rk3588

postprocess ...

(1, 64, 80, 80)

(1, 81, 80, 80)

(1, 64, 40, 40)

(1, 81, 40, 40)

(1, 64, 20, 20)

(1, 81, 20, 20)

detectResult: 0

obj num is : 0

4.修改2 - 基于yolov5.rknn代码

4.1 似乎有如下映射关系:

似乎:yolo11.output5 == yolo5.output2,

yolo11.output3 == yolo5.output1,

yolo11.output1 == yolo5.output

#!/usr/bin/env python3

# -*- coding:utf-8 -*-

import os

import sys

import urllib

import traceback

import time

import numpy as np

import cv2

from rknn.api import RKNNROOT = os.getcwd()

if str(ROOT) not in sys.path:sys.path.append(str(ROOT))# Model from https://github.com/airockchip/rknn_model_zoo yolov11->selftrained. yolo11s?

ONNX_MODEL = r'/home/firefly/app/models/yolo11_selfgen.onnx'

RKNN_MODEL = r'/home/firefly/app/models/new_moonpie_yolo11_640x640.rknn'

IMG_PATH = r'/home/firefly/app/images/cake26.jpg'

QUANTIZE_ON = True

DATASET=r'./dataset.txt'CLASSES = ['moonpie', 'bicycle', 'car', 'motorcycle', 'airplane', 'bus', 'train', 'truck', 'boat', 'traffic light','fire hydrant', 'stop sign', 'parking meter', 'bench', 'bird', 'cat', 'dog', 'horse', 'sheep', 'cow','elephant', 'bear', 'zebra', 'giraffe', 'backpack', 'umbrella', 'handbag', 'tie', 'suitcase', 'frisbee','skis', 'snowboard', 'sports ball', 'kite', 'baseball bat', 'baseball glove', 'skateboard', 'surfboard','tennis racket', 'bottle', 'wine glass', 'cup', 'fork', 'knife', 'spoon', 'bowl', 'banana', 'apple','sandwich', 'orange', 'broccoli', 'carrot', 'hot dog', 'pizza', 'donut', 'cake', 'chair', 'couch','potted plant', 'bed', 'dining table', 'toilet', 'tv', 'laptop', 'mouse', 'remote', 'keyboard', 'cell phone','microwave', 'oven', 'toaster', 'sink', 'refrigerator', 'book', 'clock', 'vase', 'scissors', 'teddy bear','hair drier', 'toothbrush']meshgrid = []class_num = len(CLASSES)

headNum = 3

strides = [8, 16, 32]

mapSize = [[80, 80], [40, 40], [20, 20]]

input_imgH = 640

input_imgW = 640

IMG_SIZE = input_imgH

QUANTIZE_ON = TrueOBJ_THRESH = 0.25

NMS_THRESH = 0.45def xywh2xyxy(x):# Convert [x, y, w, h] to [x1, y1, x2, y2]y = np.copy(x)y[:, 0] = x[:, 0] - x[:, 2] / 2 # top left xy[:, 1] = x[:, 1] - x[:, 3] / 2 # top left yy[:, 2] = x[:, 0] + x[:, 2] / 2 # bottom right xy[:, 3] = x[:, 1] + x[:, 3] / 2 # bottom right yreturn ydef process(input, mask, anchors):anchors = [anchors[i] for i in mask]grid_h, grid_w = map(int, input.shape[0:2])box_confidence = input[..., 4]box_confidence = np.expand_dims(box_confidence, axis=-1)box_class_probs = input[..., 5:]box_xy = input[..., :2]*2 - 0.5col = np.tile(np.arange(0, grid_w), grid_w).reshape(-1, grid_w)row = np.tile(np.arange(0, grid_h).reshape(-1, 1), grid_h)col = col.reshape(grid_h, grid_w, 1, 1).repeat(3, axis=-2)row = row.reshape(grid_h, grid_w, 1, 1).repeat(3, axis=-2)grid = np.concatenate((col, row), axis=-1)box_xy += gridbox_xy *= int(IMG_SIZE/grid_h)box_wh = pow(input[..., 2:4]*2, 2)box_wh = box_wh * anchorsbox = np.concatenate((box_xy, box_wh), axis=-1)return box, box_confidence, box_class_probsdef filter_boxes(boxes, box_confidences, box_class_probs):"""Filter boxes with box threshold. It's a bit different with origin yolov5 post process!# Argumentsboxes: ndarray, boxes of objects.box_confidences: ndarray, confidences of objects.box_class_probs: ndarray, class_probs of objects.# Returnsboxes: ndarray, filtered boxes.classes: ndarray, classes for boxes.scores: ndarray, scores for boxes."""boxes = boxes.reshape(-1, 4)box_confidences = box_confidences.reshape(-1)box_class_probs = box_class_probs.reshape(-1, box_class_probs.shape[-1])_box_pos = np.where(box_confidences >= OBJ_THRESH)boxes = boxes[_box_pos]box_confidences = box_confidences[_box_pos]box_class_probs = box_class_probs[_box_pos]class_max_score = np.max(box_class_probs, axis=-1)classes = np.argmax(box_class_probs, axis=-1)_class_pos = np.where(class_max_score >= OBJ_THRESH)boxes = boxes[_class_pos]classes = classes[_class_pos]scores = (class_max_score* box_confidences)[_class_pos]return boxes, classes, scoresdef nms_boxes(boxes, scores):"""Suppress non-maximal boxes.# Argumentsboxes: ndarray, boxes of objects.scores: ndarray, scores of objects.# Returnskeep: ndarray, index of effective boxes."""x = boxes[:, 0]y = boxes[:, 1]w = boxes[:, 2] - boxes[:, 0]h = boxes[:, 3] - boxes[:, 1]areas = w * horder = scores.argsort()[::-1]keep = []while order.size > 0:i = order[0]keep.append(i)xx1 = np.maximum(x[i], x[order[1:]])yy1 = np.maximum(y[i], y[order[1:]])xx2 = np.minimum(x[i] + w[i], x[order[1:]] + w[order[1:]])yy2 = np.minimum(y[i] + h[i], y[order[1:]] + h[order[1:]])w1 = np.maximum(0.0, xx2 - xx1 + 0.00001)h1 = np.maximum(0.0, yy2 - yy1 + 0.00001)inter = w1 * h1ovr = inter / (areas[i] + areas[order[1:]] - inter)inds = np.where(ovr <= NMS_THRESH)[0]order = order[inds + 1]keep = np.array(keep)return keepdef yolov5_post_process(input_data):masks = [[0, 1, 2], [3, 4, 5], [6, 7, 8]]anchors = [[10, 13], [16, 30], [33, 23], [30, 61], [62, 45],[59, 119], [116, 90], [156, 198], [373, 326]]boxes, classes, scores = [], [], []for input, mask in zip(input_data, masks):b, c, s = process(input, mask, anchors)b, c, s = filter_boxes(b, c, s)boxes.append(b)classes.append(c)scores.append(s)boxes = np.concatenate(boxes)boxes = xywh2xyxy(boxes)classes = np.concatenate(classes)scores = np.concatenate(scores)nboxes, nclasses, nscores = [], [], []for c in set(classes):inds = np.where(classes == c)b = boxes[inds]c = classes[inds]s = scores[inds]keep = nms_boxes(b, s)nboxes.append(b[keep])nclasses.append(c[keep])nscores.append(s[keep])if not nclasses and not nscores:return None, None, Noneboxes = np.concatenate(nboxes)classes = np.concatenate(nclasses)scores = np.concatenate(nscores)return boxes, classes, scoresdef draw(image, boxes, scores, classes):"""Draw the boxes on the image.# Argument:image: original image.boxes: ndarray, boxes of objects.classes: ndarray, classes of objects.scores: ndarray, scores of objects.all_classes: all classes name."""print("{:^12} {:^12} {}".format('class', 'score', 'xmin, ymin, xmax, ymax'))print('-' * 50)for box, score, cl in zip(boxes, scores, classes):top, left, right, bottom = boxtop = int(top)left = int(left)right = int(right)bottom = int(bottom)cv2.rectangle(image, (top, left), (right, bottom), (255, 0, 0), 2)cv2.putText(image, '{0} {1:.2f}'.format(CLASSES[cl], score),(top, left - 6),cv2.FONT_HERSHEY_SIMPLEX,0.6, (0, 0, 255), 2)print("{:^12} {:^12.3f} [{:>4}, {:>4}, {:>4}, {:>4}]".format(CLASSES[cl], score, top, left, right, bottom))def letterbox(im, new_shape=(640, 640), color=(0, 0, 0)):# Resize and pad image while meeting stride-multiple constraintsshape = im.shape[:2] # current shape [height, width]if isinstance(new_shape, int):new_shape = (new_shape, new_shape)# Scale ratio (new / old)r = min(new_shape[0] / shape[0], new_shape[1] / shape[1])# Compute paddingratio = r, r # width, height ratiosnew_unpad = int(round(shape[1] * r)), int(round(shape[0] * r))dw, dh = new_shape[1] - new_unpad[0], new_shape[0] - new_unpad[1] # wh paddingdw /= 2 # divide padding into 2 sidesdh /= 2if shape[::-1] != new_unpad: # resizeim = cv2.resize(im, new_unpad, interpolation=cv2.INTER_LINEAR)top, bottom = int(round(dh - 0.1)), int(round(dh + 0.1))left, right = int(round(dw - 0.1)), int(round(dw + 0.1))im = cv2.copyMakeBorder(im, top, bottom, left, right, cv2.BORDER_CONSTANT, value=color) # add borderreturn im, ratio, (dw, dh)def detect_on_ubuntu(onnx_model_path=ONNX_MODEL, rknn_path=RKNN_MODEL, image_path=IMG_PATH, classes=CLASSES):# Create RKNN objectrknn = RKNN(verbose=True)# pre-process configprint('--> Config model')rknn.config(mean_values=[[0, 0, 0]], std_values=[[255, 255, 255]], target_platform='rk3588')print('done')# Load ONNX modelprint('--> Loading model')ret = rknn.load_onnx(model=onnx_model_path)if ret != 0:print('Load model failed!')exit(ret)print('done')# Build modelprint('--> Building model')ret = rknn.build(do_quantization=QUANTIZE_ON, dataset=DATASET)if ret != 0:print('Build model failed!')exit(ret)print('done')# Export RKNN modelprint('--> Export rknn model')ret = rknn.export_rknn(rknn_path)if ret != 0:print('Export rknn model failed!')exit(ret)print('done')# Init runtime environmentprint('--> Init runtime environment')ret = rknn.init_runtime()if ret != 0:print('Init runtime environment failed!')exit(ret)print('done')# Set inputsimg = cv2.imread(image_path)# img, ratio, (dw, dh) = letterbox(img, new_shape=(IMG_SIZE, IMG_SIZE))img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)img = cv2.resize(img, (IMG_SIZE, IMG_SIZE))# Inferenceprint('--> Running model')img2 = np.expand_dims(img, 0)np.save('./raw_yolov11_in.npy', [img2])outputs = rknn.inference(inputs=[img2], data_format=['nhwc'])np.save('./onnx_yolov11_0_raw.npy', outputs[1])np.save('./onnx_yolov11_1_raw.npy', outputs[3])np.save('./onnx_yolov11_2_raw.npy', outputs[5])print('done')# post processinput0_data = outputs[1]input1_data = outputs[3]input2_data = outputs[5]# 创建一个全零数组,用于填充# 计算需要填充的通道数pad_channels = 255 - len(classes)padding = np.zeros((1, pad_channels, 80, 80), dtype=np.float32)input0_data = np.concatenate((input0_data, padding), axis=1)padding = np.zeros((1, pad_channels, 40, 40), dtype=np.float32)input1_data = np.concatenate((input1_data, padding), axis=1)padding = np.zeros((1, pad_channels, 20, 20), dtype=np.float32)input2_data = np.concatenate((input2_data, padding), axis=1)np.save('./onnx_yolov11_0.npy', input0_data)np.save('./onnx_yolov11_1.npy', input1_data)np.save('./onnx_yolov11_2.npy', input2_data)input0_data = input0_data.reshape([3, -1]+list(input0_data.shape[-2:]))input1_data = input1_data.reshape([3, -1]+list(input1_data.shape[-2:]))input2_data = input2_data.reshape([3, -1]+list(input2_data.shape[-2:]))input_data = list()input_data.append(np.transpose(input0_data, (2, 3, 0, 1)))input_data.append(np.transpose(input1_data, (2, 3, 0, 1)))input_data.append(np.transpose(input2_data, (2, 3, 0, 1)))boxes, classes, scores = yolov5_post_process(input_data)img_1 = cv2.cvtColor(img, cv2.COLOR_RGB2BGR)if boxes is not None:draw(img_1, boxes, scores, classes)cv2.imwrite('result.jpg', img_1)print('Save results to result.jpg!')rknn.release()def precess_image(img_src, resize_w, resize_h):orig = cv2.imread(img_src)if orig is None:print(f"无法读取图像: {img_path}")returnimg_h, img_w = orig.shape[:2]print(f'{type(orig)}')image = cv2.resize(orig, (resize_w, resize_h), interpolation=cv2.INTER_LINEAR)image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)image = image.astype(np.float32)image /= 255.0'''# Set inputsimg = cv2.imread(IMG_PATH)# img, ratio, (dw, dh) = letterbox(img, new_shape=(IMG_SIZE, IMG_SIZE))img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)img = cv2.resize(img, (IMG_SIZE, IMG_SIZE))# Inferenceprint('--> Running model')img2 = np.expand_dims(img, 0)'''return imagedef detect_on_rk3588(rknn_path=RKNN_MODEL, image_path=IMG_PATH, classes=CLASSES):# Create RKNN objectrknn = RKNN(verbose=True)# pre-process configprint('--> Config model')rknn.config(mean_values=[[0, 0, 0]], std_values=[[255, 255, 255]], target_platform='rk3588')print('done')# Load ONNX modelret = rknn.load_rknn(rknn_path)if ret != 0:print('Export rknn model failed!')exit(ret)print('done')# Init runtime environmentprint('--> Init runtime environment')ret = rknn.init_runtime(target="rk3588", core_mask=RKNN.NPU_CORE_AUTO)if ret != 0:print('Init runtime environment failed!')exit(ret)print('done')img2 = precess_image(image_path, input_imgW, input_imgH)np.save('./raw_yolov11_in.npy', [img2])outputs = rknn.inference(inputs=[img2], data_format=['nhwc'])np.save('./raw_yolov11_0.npy', outputs[1])np.save('./raw_yolov11_1.npy', outputs[3])np.save('./raw_yolov11_2.npy', outputs[5])print('done')# 创建一个全零数组,用于填充# 计算需要填充的通道数pad_channels = 255 - len(classes)padding = np.zeros((1, pad_channels, 80, 80), dtype=np.float32)input0_data = np.concatenate((input0_data, padding), axis=1)padding = np.zeros((1, pad_channels, 40, 40), dtype=np.float32)input1_data = np.concatenate((input1_data, padding), axis=1)padding = np.zeros((1, pad_channels, 20, 20), dtype=np.float32)input2_data = np.concatenate((input2_data, padding), axis=1)np.save('./modified_yolov11_0.npy', input0_data)np.save('./modified_yolov11_1.npy', input1_data)np.save('./modified_yolov11_2.npy', input2_data)input0_data = input0_data.reshape([3, -1]+list(input0_data.shape[-2:]))input1_data = input1_data.reshape([3, -1]+list(input1_data.shape[-2:]))input2_data = input2_data.reshape([3, -1]+list(input2_data.shape[-2:]))input_data = list()input_data.append(np.transpose(input0_data, (2, 3, 0, 1)))input_data.append(np.transpose(input1_data, (2, 3, 0, 1)))input_data.append(np.transpose(input2_data, (2, 3, 0, 1)))boxes, classes, scores = yolov5_post_process(input_data)img = cv2.imread(image_path)img_1 = cv2.cvtColor(img, cv2.COLOR_RGB2BGR)if boxes is not None:draw(img_1, boxes, scores, classes)cv2.imwrite('rknn_detect_result.jpg', img_1)print('Save results to rknn_detect_result.jpg!')else:print('target can not be found!')rknn.release()if __name__ == '__main__':detect_on_ubuntu(ONNX_MODEL, RKNN_MODEL, IMG_PATH, CLASSES)#detect_on_rk3588(RKNN_MODEL, IMG_PATH, CLASSES)5.结论:

最终通过仔细核对yolov5, yolo11, onnx几个模型的输入输出参数,发现了这样的事情:

- yolov11 的输出参数有6个,大概率,按照上面的结论是,1,3,5相当于原来的输出参数0,1,2

- yolov11的输出的6个参数,第二维尺寸,现在不是255(-1)个,而是len(classes)个。

- 我的问题在于我在训练时把classes设置为81,而在导出.rknn时,仍然导出为80.所以,结果就是onnx模式访问正常,而.rknn方式访问错误。

- yolo detect代码中的.save是瑞芯微的那些同志在调试接口时留下的一些调试语句,它们不必存在。

- rknn代码输入输出参数建议u8化,在simluation环境传递的是float32。这个修改结束,应该速度会快不少。

- 还有,yolo detect的matrix pack in pack out时,效率很低,他还在进行float转换。这个我没有仔细看代码,理论上,onnx的识别信息析取是更快的。

还在测试。如果确认最终稿的结论成立。我会在这个帖子里留下标记。上面的两端代码没有大问题,我在测试成功后会更新,现在就是对的。

相关文章:

python环境的yolov11.rknn物体检测

1.首先是我手里生成的一个yolo11的.rknn模型: 2.比对一下yolov5的模型: 2.1 yolov5模型的后期处理: outputs rknn.inference(inputs[img2], data_format[nhwc])np.save(./onnx_yolov5_0.npy, outputs[0])np.save(./onnx_yolov5_1.npy, outpu…...

I2C、SPI、UART

I2C:串口通信,同步,半双工,双线(数据线SDA时钟线SCL),最大距离1米到几米 SPI(串行外设接口):串口通信,同步,全双工,四线&…...

如何监控和优化 MySQL 中的慢 SQL

如何监控和优化 MySQL 中的慢 SQL 前言一、什么是慢 SQL?二、如何监控慢 SQL?1. 启用慢查询日志启用方法:日志内容: 2. 使用 mysqldumpslow 分析日志 三、如何分析慢 SQL?1. 使用 EXPLAIN 分析执行计划使用方法&#x…...

)

13-二叉树最小深度-深度优先(DFS)

一、定义 什么是二叉树的最小深度? 二叉树的最小深度是指从根节点到最近的叶子节点的最短路径上的节点数。叶子节点是指没有子节点的节点。 举个例子: 1/ \2 3/ 4 这棵树的最小深度是 2,因为从根节点 1 到叶子节点 3 的路径最短&#x…...

51单片机入门_10_数码管动态显示(数字的使用;简单动态显示;指定值的数码管动态显示)

接上篇的数码管静态显示,以下是接上篇介绍到的动态显示的原理。 动态显示的特点是将所有位数码管的段选线并联在一起,由位选线控制是哪一位数码管有效。选亮数码管采用动态扫描显示。所谓动态扫描显示即轮流向各位数码管送出字形码和相应的位选ÿ…...

代码补全『三重奏』:EverEdit如何用上下文识别+语法感知+智能片段重构你的编码效率!

1 代码自动完成 1.1 应用场景 在编辑文档时,为了提高编辑效率,编辑器一般都会带有自动完成功能,比如:输入括号时自动补全另一半,输入文字时,自动补全剩下的部分。 1.2 使用方法 1.2.1 自动缩进 单击主菜…...

电脑系统损坏,备份文件

一、工具准备 1.U盘:8G以上就够用,注意会格式化U盘,提前备份U盘内容 2.电脑:下载Windows系统并进行启动盘制作 二、Windows启动盘制作 1.微软官网下载启动盘制作工具微软官网下载启动盘制作工具https://www.microsoft.com/zh-c…...

Token Statistics Transformer:线性注意力革命,重新定义Transformer效率天花板

“TOKEN STATISTICS TRANSFORMER: LINEAR-TIME ATTENTION VIA VARIATIONAL RATE REDUCTION” 由Ziyang Wu等人撰写。文章提出一种新型Transformer注意力算子,通过对最大编码率降低( M C R 2 MCR^{2} MCR2)目标的变分形式进行展开优化得到&…...

项目结构与管理)

Django 5实用指南(二)项目结构与管理

2.1 Django5项目结构概述 当你创建一个新的 Django 项目时,Django 会自动生成一个默认的项目结构。这个结构是根据 Django 的最佳实践来设计的,以便开发者能够清晰地管理和维护项目中的各种组件。理解并管理好这些文件和目录结构是 Django 开发的基础。…...

)

JAVA监听器(学习自用)

一、什么是监听器 servlet监听器是一种特殊的接口,用于监听特定的事件(如请求创建和销毁、会话创建和销毁、上下文的初始化和销毁)。 当Web应用程序中反生特定事件时,Servlet容器就会自动调用监听器中相应的方法来处理这些事件。…...

Ubuntu下mysql主从复制搭建

本文介绍mysql 8.4主从集群的搭建,从单个机器安装到集群的配置,整体走了一遍,希望对大家有帮助。mysql 8.4和之前的版本命令上有些变化,大家用来参考。 0、环境 ubuntu: 22.04mysql:8.4 1、安装mysql 1…...

VirtualBox 中使用 桥接网卡 并设置 MAC 地址

在 VirtualBox 中使用 桥接网卡 并设置 MAC 地址,可以按照以下步骤操作: 步骤 1:设置桥接网卡 打开 VirtualBox,选择你的虚拟机,点击 “设置” (Settings)。进入 “网络” (Network) 选项卡。在 “适配器 1” (Adapt…...

Ubuntu 20 掉显卡驱动的解决办法

目录 问题背景解决办法Step1:首先查看当前linux内核Step2:重启Step3:进入ubuntu advanced (即高级选项)Step4:查看有哪些linux内核Step5:如果滚回老板kernel还是没有驱动,就找到驱动…...

EasyPoi系列之框架集成及基础使用

EasyPoi系列之框架集成及基础使用 1 EasyPoi1.1 gitee仓库地址 2 EasyPoi集成至SpringBoot2.1 maven引入jar包 3 EasyPoi Excel导出3.1 基于实体对象导出3.1.1 Excel 注解3.1.2 编写实体3.1.3 编写导出方法3.1.4 导出效果 3.2 基于模板导出3.2.1 编写模板文件3.2.2 编写导出方法…...

Web后端 Tomcat服务器

一 Tomcat Web 服务器 介绍: Tomcat是一个开源的Java Servlet容器和Web服务器,由Apache软件基金会开发。它实现了Java Servlet和JavaServer Pages (JSP) 技术,用于运行Java Web应用程序。Tomcat轻量、易于配置,常作为开发和部署…...

【RK3588嵌入式图形编程】-SDL2-构建模块化UI

构建模块化UI 文章目录 构建模块化UI1、概述2、创建UI管理器3、嵌套组件4、继承5、多态子组件6、总结在本文中,将介绍如何使用C++和SDL创建一个灵活且可扩展的UI系统,重点关注组件层次结构和多态性。 1、概述 在前面的文章中,我们介绍了应用程序循环和事件循环,这为我们的…...

面向机器学习的Java库与平台简介、适用场景、官方网站、社区网址

Java机器学习的库与平台 最近听到有的人说要做机器学习就一定要学Python,我想他们掌握的知道还不够系统全面。本文作者给大家介绍几种常用Java实现的机器学习库,快快收藏加关注吧~ Java机器学习库表格 Java机器学习库整理库/平台概念适合场…...

基于YOLO11深度学习的心脏超声图像间隔壁检测分割与分析系统【python源码+Pyqt5界面+数据集+训练代码】深度学习实战、目标分割、人工智能

《------往期经典推荐------》 一、AI应用软件开发实战专栏【链接】 项目名称项目名称1.【人脸识别与管理系统开发】2.【车牌识别与自动收费管理系统开发】3.【手势识别系统开发】4.【人脸面部活体检测系统开发】5.【图片风格快速迁移软件开发】6.【人脸表表情识别系统】7.【…...

ubuntu24基于虚拟机无法从主机拖拽文件夹

以下是解决问题的精简步骤: 安装 open-vm-tools-desktop: bash复制 sudo apt-get install open-vm-tools-desktop 重启虚拟机后,文字复制粘贴功能可正常工作。 禁用 Wayland: 编辑 /etc/gdm3/custom.conf 文件: bash复…...

常用Webpack Loader汇总介绍

引言 在前端项目开发中,Webpack 作为强大的模块打包工具,能够将各种资源进行打包处理。而其中的 Loader 则是 Webpack 处理不同类型文件的关键,它允许 Webpack 不仅仅局限于处理 JavaScript 文件,还能处理 CSS、图片、字体等多种…...

未来机器人的大脑:如何用神经网络模拟器实现更智能的决策?

编辑:陈萍萍的公主一点人工一点智能 未来机器人的大脑:如何用神经网络模拟器实现更智能的决策?RWM通过双自回归机制有效解决了复合误差、部分可观测性和随机动力学等关键挑战,在不依赖领域特定归纳偏见的条件下实现了卓越的预测准…...

Chapter03-Authentication vulnerabilities

文章目录 1. 身份验证简介1.1 What is authentication1.2 difference between authentication and authorization1.3 身份验证机制失效的原因1.4 身份验证机制失效的影响 2. 基于登录功能的漏洞2.1 密码爆破2.2 用户名枚举2.3 有缺陷的暴力破解防护2.3.1 如果用户登录尝试失败次…...

OpenLayers 可视化之热力图

注:当前使用的是 ol 5.3.0 版本,天地图使用的key请到天地图官网申请,并替换为自己的key 热力图(Heatmap)又叫热点图,是一种通过特殊高亮显示事物密度分布、变化趋势的数据可视化技术。采用颜色的深浅来显示…...

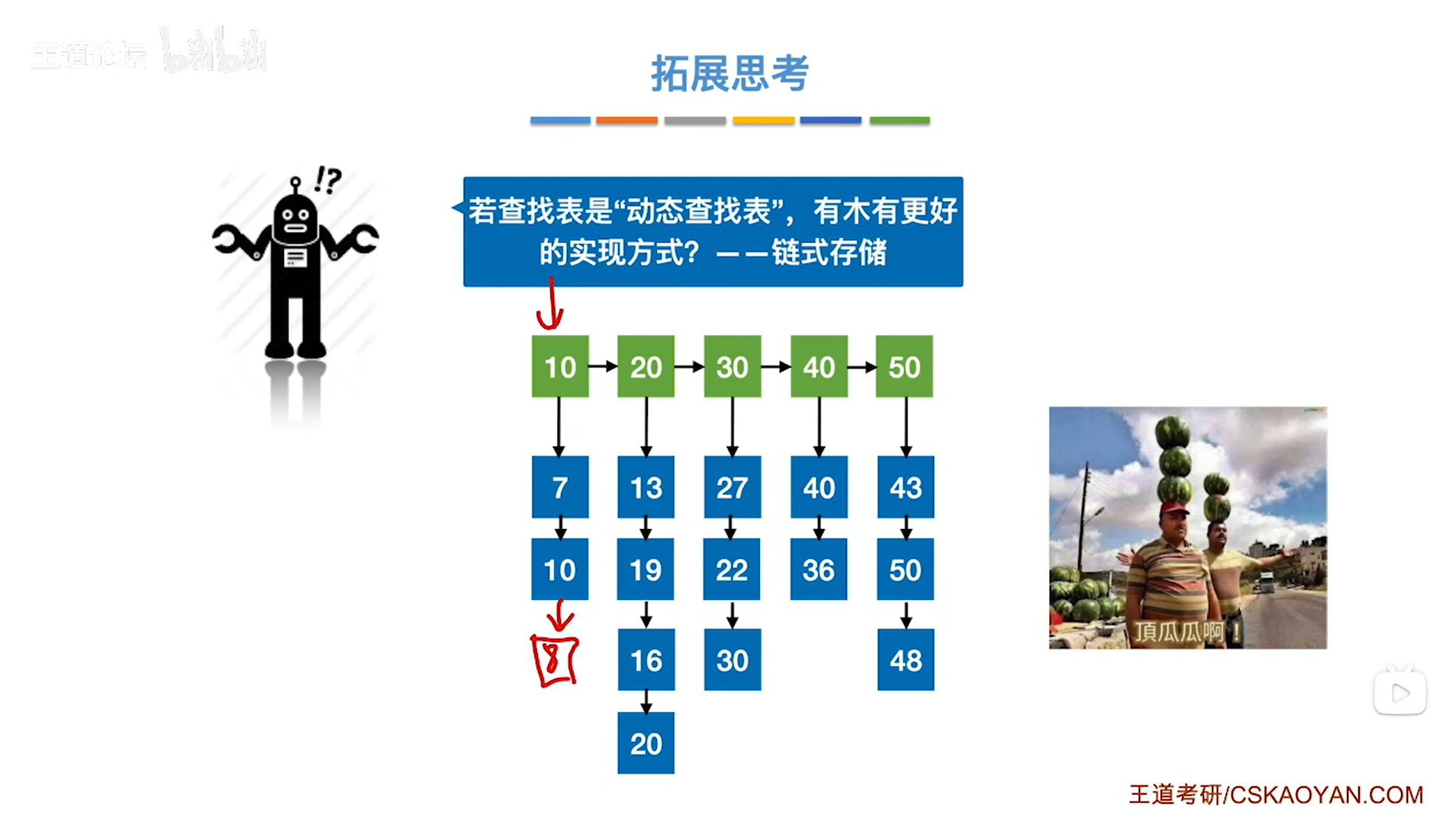

7.4.分块查找

一.分块查找的算法思想: 1.实例: 以上述图片的顺序表为例, 该顺序表的数据元素从整体来看是乱序的,但如果把这些数据元素分成一块一块的小区间, 第一个区间[0,1]索引上的数据元素都是小于等于10的, 第二…...

)

Java 语言特性(面试系列1)

一、面向对象编程 1. 封装(Encapsulation) 定义:将数据(属性)和操作数据的方法绑定在一起,通过访问控制符(private、protected、public)隐藏内部实现细节。示例: public …...

对WWDC 2025 Keynote 内容的预测

借助我们以往对苹果公司发展路径的深入研究经验,以及大语言模型的分析能力,我们系统梳理了多年来苹果 WWDC 主题演讲的规律。在 WWDC 2025 即将揭幕之际,我们让 ChatGPT 对今年的 Keynote 内容进行了一个初步预测,聊作存档。等到明…...

Mac软件卸载指南,简单易懂!

刚和Adobe分手,它却总在Library里给你写"回忆录"?卸载的Final Cut Pro像电子幽灵般阴魂不散?总是会有残留文件,别慌!这份Mac软件卸载指南,将用最硬核的方式教你"数字分手术"࿰…...

Axios请求超时重发机制

Axios 超时重新请求实现方案 在 Axios 中实现超时重新请求可以通过以下几种方式: 1. 使用拦截器实现自动重试 import axios from axios;// 创建axios实例 const instance axios.create();// 设置超时时间 instance.defaults.timeout 5000;// 最大重试次数 cons…...

数据库分批入库

今天在工作中,遇到一个问题,就是分批查询的时候,由于批次过大导致出现了一些问题,一下是问题描述和解决方案: 示例: // 假设已有数据列表 dataList 和 PreparedStatement pstmt int batchSize 1000; // …...

Java编程之桥接模式

定义 桥接模式(Bridge Pattern)属于结构型设计模式,它的核心意图是将抽象部分与实现部分分离,使它们可以独立地变化。这种模式通过组合关系来替代继承关系,从而降低了抽象和实现这两个可变维度之间的耦合度。 用例子…...