[K8S: Using the Calico network plugin]: Fixing cluster nodes stuck in NotReady

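Contents

- 1: Install Calico

- 1.1: Download the Calico network plugin manifest with wget

- 1.2: Edit the network interface settings in calico.yaml

- 1.2.1: Check the local IP and network interface information

- 1.2.2: Set the interface in calico.yaml

- 1.3: Run kubectl apply -f calico.yaml to create the Calico pods

- 1.3.1: Errors thrown

- 1.3.2: Scenario 1: replace the kubeconfig with the K8S admin config

- 1.3.2: Scenario 2: export KUBECONFIG so kubectl uses the admin config

- 2: After installing the Calico pods: fixing pods that are not running properly

- 2.1: Check the status of the Calico pods

- 2.2: Inspect the failing (Init:Error) calico pod: run kubectl describe pod <pod-name>

- 2.3: Try re-pulling the coredns image and re-running the docker tag commands for coredns

- 2.4: Check the coredns and calico pod status again

- 2.4.1: Run kubectl get pod -A to check the coredns and calico pod status

- 2.5: Check the logs of the failing calico-node pod

- 2.5.1: master: command: kubectl logs -f calico-node-cwpt8 -n kube-system

- 2.5.2: master: the error log

- 2.5.3: master: telnet to the ip:port from the error message

- 2.5.3.1: Install telnet

- 2.5.3.2: telnet to the ip:port from the error message: telnet 192.168.56.102 10250

- 2.5.3.3: Open port 10250 on the unreachable machine

- 2.5.3.4: Success: telnet 192.168.56.102 10250

- 2.6: master: check the failing calico-node pod logs again: still failing

- 2.7: master: check the coredns error log: it appears related to the worker node's network

- 2.8: cluster (worker node): check the error logs: they appear related to the worker node's network

- 2.8.1: cluster: check the error log: journalctl -f -u kubelet

- 2.8.1.1 Key point: the CNI configuration cannot be found: "Unable to update cni config" err="no networks found in /etc/cni/net.d"

- 2.8.2: master: check the configuration in /etc/cni/net.d

- 2.8.3: Copy the /etc/cni/net.d configuration from the master to the cluster (worker) node

- 2.9: Restart kubelet and check the status of all nodes

- 3: Follow-up issues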

1: Install Calico

1.1: Download the Calico network plugin manifest with wget

Run: wget --no-check-certificate https://projectcalico.docs.tigera.io/archive/v3.25/manifests/calico.yaml

[root@vboxnode3ccccccttttttchenyang kubernetes]# wget --no-check-certificate https://projectcalico.docs.tigera.io/archive/v3.25/manifests/calico.yaml

--2023-05-03 02:23:02-- https://projectcalico.docs.tigera.io/archive/v3.25/manifests/calico.yaml

Resolving projectcalico.docs.tigera.io (projectcalico.docs.tigera.io)... 13.228.199.255, 18.139.194.139, 2406:da18:880:3800::c8, ...

Connecting to projectcalico.docs.tigera.io (projectcalico.docs.tigera.io)|13.228.199.255|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 238089 (233K) [text/yaml]

Saving to: 'calico.yaml'

100%[=====================================================================================>] 238,089 392KB/s in 0.6s

2023-05-03 02:23:03 (392 KB/s) - 'calico.yaml' saved [238089/238089]

1.2:修改calico.yaml网卡相关配置:

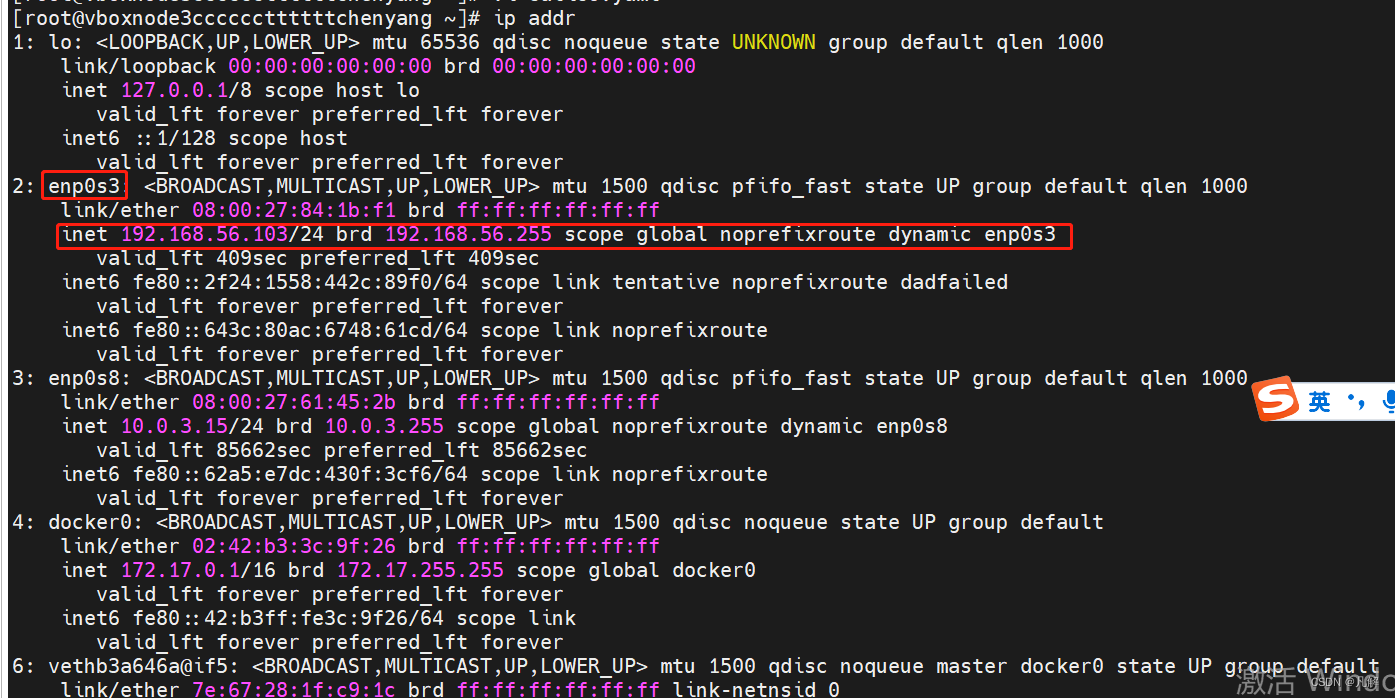

1.2.1:查看本机ip 网卡相关信息:

[root@vboxnode3ccccccttttttchenyang ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever

2: enp0s3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
    link/ether 08:00:27:84:1b:f1 brd ff:ff:ff:ff:ff:ff
    inet 192.168.56.103/24 brd 192.168.56.255 scope global noprefixroute dynamic enp0s3
       valid_lft 409sec preferred_lft 409sec
    inet6 fe80::2f24:1558:442c:89f0/64 scope link tentative noprefixroute dadfailed
       valid_lft forever preferred_lft forever
    inet6 fe80::643c:80ac:6748:61cd/64 scope link noprefixroute
       valid_lft forever preferred_lft forever

3: enp0s8: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
    link/ether 08:00:27:61:45:2b brd ff:ff:ff:ff:ff:ff
    inet 10.0.3.15/24 brd 10.0.3.255 scope global noprefixroute dynamic enp0s8
       valid_lft 85662sec preferred_lft 85662sec
    inet6 fe80::62a5:e7dc:430f:3cf6/64 scope link noprefixroute
       valid_lft forever preferred_lft forever

4: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 02:42:b3:3c:9f:26 brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
       valid_lft forever preferred_lft forever
    inet6 fe80::42:b3ff:fe3c:9f26/64 scope link
       valid_lft forever preferred_lft forever

6: vethb3a646a@if5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default
    link/ether 7e:67:28:1f:c9:1c brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet6 fe80::7c67:28ff:fe1f:c91c/64 scope link
       valid_lft forever preferred_lft forever

8: veth87a3698@if7: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default
    link/ether de:5c:0b:87:e1:9c brd ff:ff:ff:ff:ff:ff link-netnsid 1
    inet6 fe80::dc5c:bff:fe87:e19c/64 scope link
       valid_lft forever preferred_lft forever

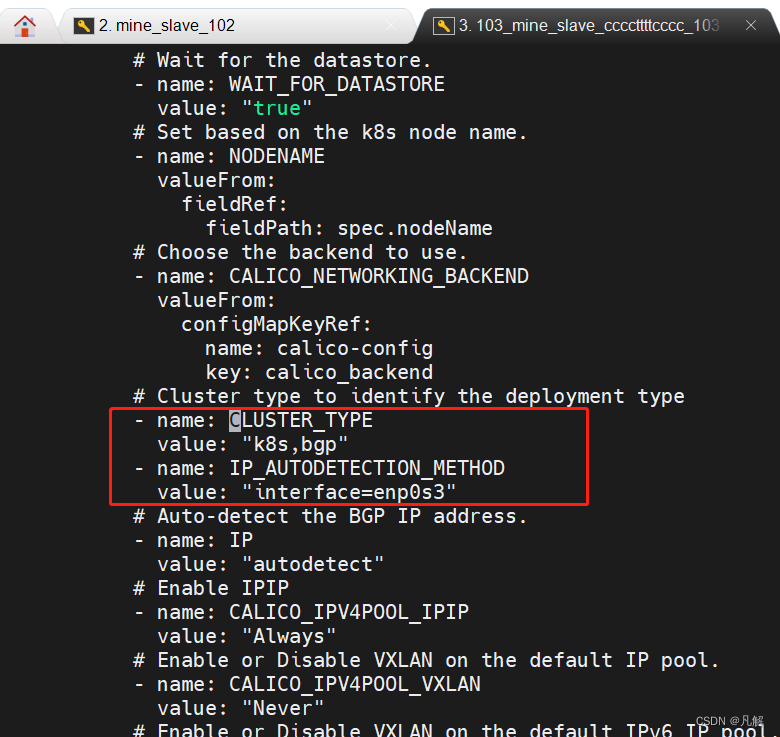

1.2.2: Set the interface in calico.yaml

            # Cluster type to identify the deployment type
            - name: CLUSTER_TYPE
              value: "k8s,bgp"
            - name: IP_AUTODETECTION_METHOD
              value: "interface=enp0s3"
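The edit above can also be scripted rather than made by hand. The following is a minimal sketch, not the author's method: `add_autodetect` is a hypothetical helper that prints a copy of the manifest with an `IP_AUTODETECTION_METHOD` entry placed right after the `CLUSTER_TYPE` value line. It assumes the downloaded manifest does not already contain that entry (otherwise it would be duplicated) and that `enp0s3` is the interface carrying the node IP, as in the `ip addr` output above.

```shell
#!/bin/sh
# Hypothetical helper: print the manifest with an IP_AUTODETECTION_METHOD
# env entry inserted after the CLUSTER_TYPE value line.
# Usage: add_autodetect calico.yaml enp0s3 > calico-patched.yaml
add_autodetect() {
    manifest=$1
    iface=$2
    awk -v iface="$iface" '
        { print }                                   # copy every input line
        /- name: CLUSTER_TYPE/ { seen = 1; next }   # the next value: line belongs to CLUSTER_TYPE
        seen && /value:/ {
            indent = $0
            sub(/value:.*/, "", indent)             # leading spaces of the value line
            name_indent = substr(indent, 1, length(indent) - 2)
            printf "%s- name: IP_AUTODETECTION_METHOD\n", name_indent
            printf "%svalue: \"interface=%s\"\n", indent, iface
            seen = 0
        }
    ' "$manifest"
}
```

Writing to a new file and comparing it with the original using diff before applying keeps the change easy to review.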

1.3:kubectl apply -f calico.yaml 生成calico pod 对象:

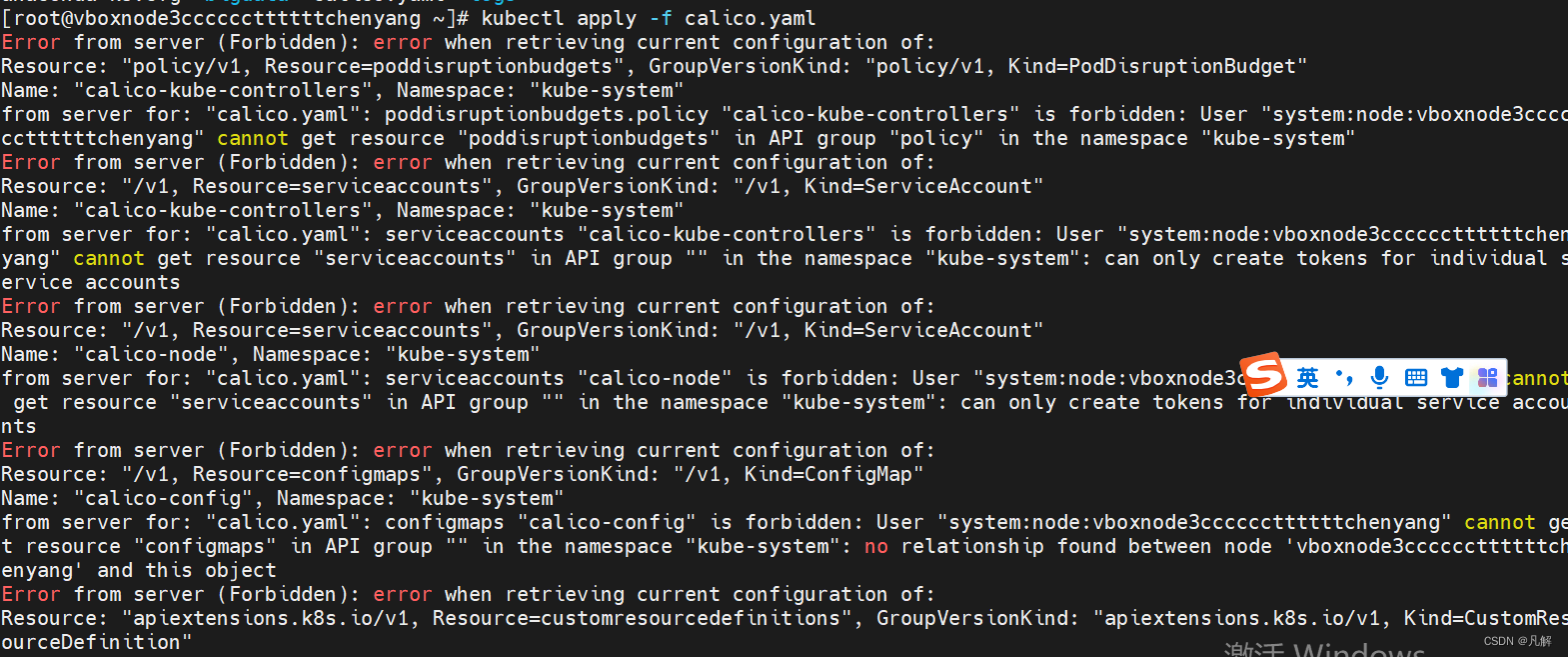

1.3.1:异常日志抛出:

[root@vboxnode3ccccccttttttchenyang ~]# kubectl apply -f calico.yaml

Error from server (Forbidden): error when retrieving current configuration of:

Resource: "policy/v1, Resource=poddisruptionbudgets", GroupVersionKind: "policy/v1, Kind=PodDisruptionBudget"

Name: "calico-kube-controllers", Namespace: "kube-system"

from server for: "calico.yaml": poddisruptionbudgets.policy "calico-kube-controllers" is forbidden: User "system:node:vboxnode3ccccccttttttchenyang" cannot get resource "poddisruptionbudgets" in API group "policy" in the namespace "kube-system"

Error from server (Forbidden): error when retrieving current configuration of:

Resource: "/v1, Resource=serviceaccounts", GroupVersionKind: "/v1, Kind=ServiceAccount"

Name: "calico-kube-controllers", Namespace: "kube-system"

from server for: "calico.yaml": serviceaccounts "calico-kube-controllers" is forbidden: User "system:node:vboxnode3ccccccttttttchenyang" cannot get resource "serviceaccounts" in API group "" in the namespace "kube-system": can only create tokens for individual service accounts

Error from server (Forbidden): error when retrieving current configuration of:

Resource: "/v1, Resource=serviceaccounts", GroupVersionKind: "/v1, Kind=ServiceAccount"

Name: "calico-node", Namespace: "kube-system"

from server for: "calico.yaml": serviceaccounts "calico-node" is forbidden: User "system:node:vboxnode3ccccccttttttchenyang" cannot get resource "serviceaccounts" in API group "" in the namespace "kube-system": can only create tokens for individual service accounts

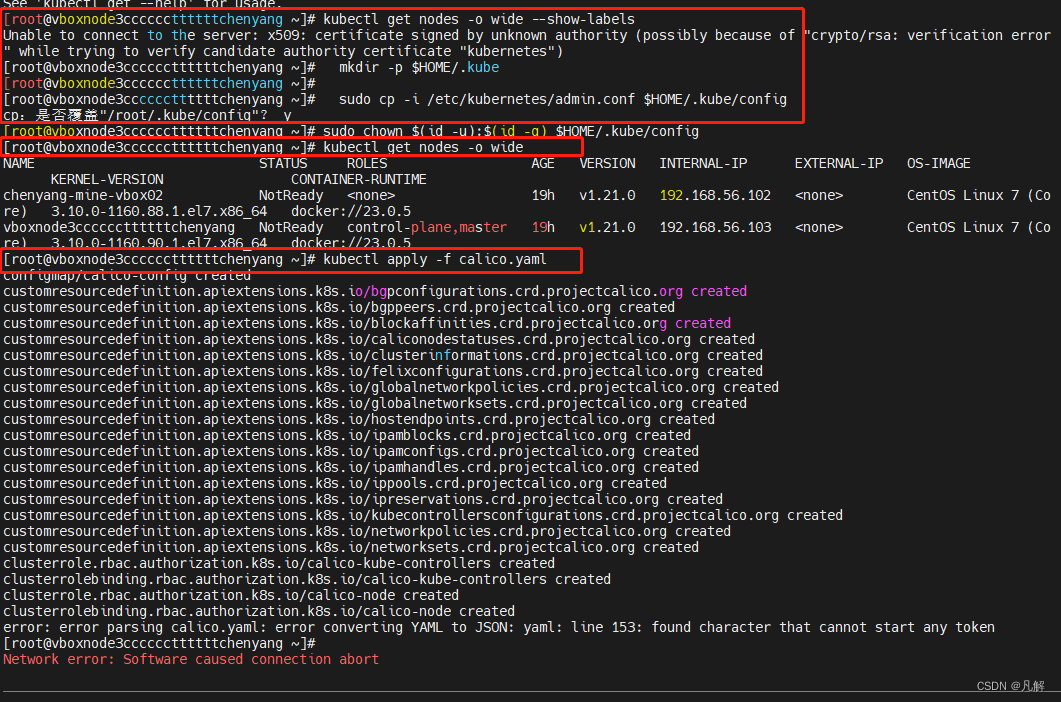
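The `User "system:node:vboxnode3ccccccttttttchenyang"` in these errors shows that the API server saw kubectl authenticate as the node (kubelet) identity rather than as a cluster administrator, which is why reading ServiceAccounts and PodDisruptionBudgets is forbidden. The post does not show which kubeconfig was active at this point; one plausible cause, stated here as an assumption, is that kubectl was picking up kubeadm's `/etc/kubernetes/kubelet.conf`. A small sketch for checking a kubeconfig file; both function names are hypothetical:

```shell
#!/bin/sh
# Return 0 if the kubeconfig file references a node (kubelet) identity.
uses_node_identity() {
    grep -q 'system:node:' "$1"
}

# Report which kind of identity a kubeconfig carries.
# With no argument, falls back to $KUBECONFIG (single path only, not a
# colon-separated list), then to ~/.kube/config.
check_kubeconfig() {
    cfg=${1:-${KUBECONFIG:-$HOME/.kube/config}}
    if uses_node_identity "$cfg"; then
        echo "node identity: $cfg"
    else
        echo "admin or other identity: $cfg"
    fi
}
```

Running `check_kubeconfig` with no argument before `kubectl apply` gives a quick hint whether the admin.conf replacement in the next section is needed.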

1.3.2: Scenario 1: replace the kubeconfig with the K8S admin config

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

[root@vboxnode3ccccccttttttchenyang ~]# kubectl get nodes -o wide --show-labels

Unable to connect to the server: x509: certificate signed by unknown authority (possibly because of "crypto/rsa: verification error " while trying to verify candidate authority certificate "kubernetes")

[root@vboxnode3ccccccttttttchenyang ~]# mkdir -p $HOME/.kube

[root@vboxnode3ccccccttttttchenyang ~]#

[root@vboxnode3ccccccttttttchenyang ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

cp: overwrite '/root/.kube/config'? y

[root@vboxnode3ccccccttttttchenyang ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

[root@vboxnode3ccccccttttttchenyang ~]# kubectl get nodes -o wide

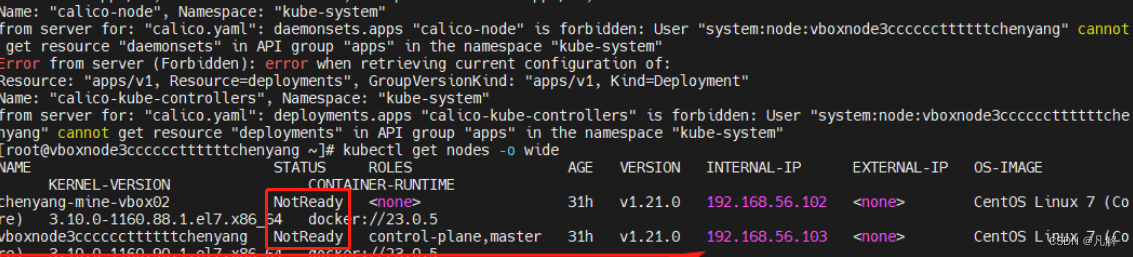

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

chenyang-mine-vbox02 NotReady <none> 19h v1.21.0 192.168.56.102 <none> CentOS Linux 7 (Core) 3.10.0-1160.88.1.el7.x86_64 docker://23.0.5

vboxnode3ccccccttttttchenyang NotReady control-plane,master 19h v1.21.0 192.168.56.103 <none> CentOS Linux 7 (Core) 3.10.0-1160.90.1.el7.x86_64 docker://23.0.5

[root@vboxnode3ccccccttttttchenyang ~]# kubectl apply -f calico.yaml

configmap/calico-config created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/caliconodestatuses.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipreservations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

error: error parsing calico.yaml: error converting YAML to JSON: yaml: line 153: found character that cannot start any token
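The parse error points at line 153 of the edited calico.yaml. The post does not show that line, so the cause is unknown here; a frequent trigger for "found character that cannot start any token" is a tab character used for indentation, which YAML does not allow. Under that assumption, the sketch below locates tabs and prints a given line with non-printing characters made visible. Both helper names are hypothetical.

```shell
#!/bin/sh
# Print "line-number:content" for every line that contains a tab character.
find_tabs() {
    tab=$(printf '\t')
    grep -n "$tab" "$1"
}

# Print one line of a file unambiguously (tabs appear as \t, line end as $),
# using the POSIX sed "l" command.
# Usage: show_line calico.yaml 153
show_line() {
    sed -n "${2}l" "$1"
}
```

If `show_line calico.yaml 153` shows `\t` or another stray character, replacing it with spaces that match the surrounding indentation should let the manifest parse again.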

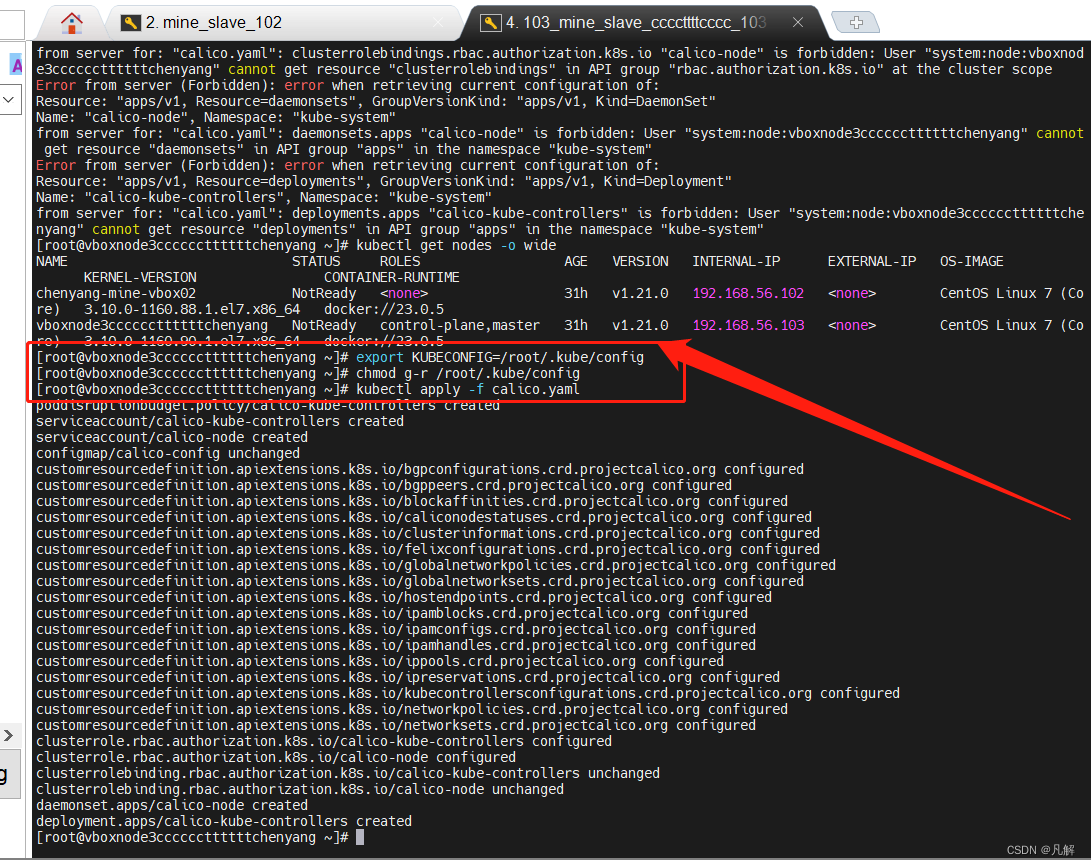

1.3.2: Scenario 2: export KUBECONFIG so kubectl uses the admin config

Run the following commands:

export KUBECONFIG=/root/.kube/config

chmod g-r /root/.kube/config

kubectl apply -f calico.yaml

[root@vboxnode3ccccccttttttchenyang ~]# export KUBECONFIG=/root/.kube/config

[root@vboxnode3ccccccttttttchenyang ~]# chmod g-r /root/.kube/config

[root@vboxnode3ccccccttttttchenyang ~]# kubectl apply -f calico.yaml

from server for: "calico.yaml": clusterrolebindings.rbac.authorization.k8s.io "calico-node" is forbidden: User "system:node:vboxnode3ccccccttttttchenyang" cannot get resource "clusterrolebindings" in API group "rbac.authorization.k8s.io" at the cluster scope

Error from server (Forbidden): error when retrieving current configuration of:

Resource: "apps/v1, Resource=daemonsets", GroupVersionKind: "apps/v1, Kind=DaemonSet"

Name: "calico-node", Namespace: "kube-system"

from server for: "calico.yaml": daemonsets.apps "calico-node" is forbidden: User "system:node:vboxnode3ccccccttttttchenyang" cannot get resource "daemonsets" in API group "apps" in the namespace "kube-system"

Error from server (Forbidden): error when retrieving current configuration of:

Resource: "apps/v1, Resource=deployments", GroupVersionKind: "apps/v1, Kind=Deployment"

Name: "calico-kube-controllers", Namespace: "kube-system"

from server for: "calico.yaml": deployments.apps "calico-kube-controllers" is forbidden: User "system:node:vboxnode3ccccccttttttchenyang" cannot get resource "deployments" in API group "apps" in the namespace "kube-system"

[root@vboxnode3ccccccttttttchenyang ~]# kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

chenyang-mine-vbox02 NotReady <none> 31h v1.21.0 192.168.56.102 <none> CentOS Linux 7 (Core) 3.10.0-1160.88.1.el7.x86_64 docker://23.0.5

vboxnode3ccccccttttttchenyang NotReady control-plane,master 31h v1.21.0 192.168.56.103 <none> CentOS Linux 7 (Core) 3.10.0-1160.90.1.el7.x86_64 docker://23.0.5

[root@vboxnode3ccccccttttttchenyang ~]# export KUBECONFIG=/root/.kube/config

[root@vboxnode3ccccccttttttchenyang ~]# chmod g-r /root/.kube/config

[root@vboxnode3ccccccttttttchenyang ~]# kubectl apply -f calico.yaml

poddisruptionbudget.policy/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

serviceaccount/calico-node created

configmap/calico-config unchanged

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/caliconodestatuses.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/ipreservations.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org configured

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers configured

clusterrole.rbac.authorization.k8s.io/calico-node configured

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers unchanged

clusterrolebinding.rbac.authorization.k8s.io/calico-node unchanged

daemonset.apps/calico-node created

deployment.apps/calico-kube-controllers created

[root@vboxnode3ccccccttttttchenyang ~]#

二:安装完成calico pod:解决没用正常运行问题:

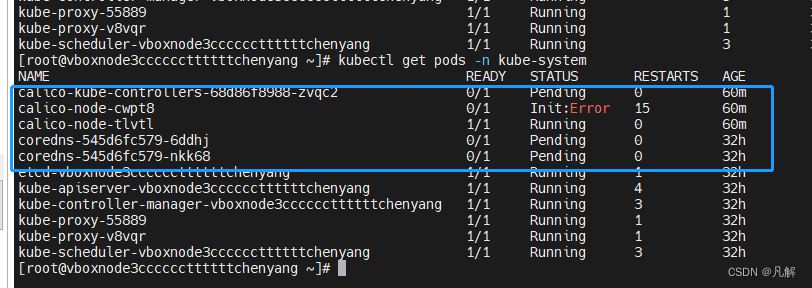

2.1:查看calico pod 运行状态:

查看所有命名空间

kubectl get ns -o wide

查看所有pod在kube-system命名空间

kubectl get pods -n kube-system

[root@vboxnode3ccccccttttttchenyang ~]# kubectl get ns -o wide

NAME STATUS AGE

default Active 31h

kube-node-lease Active 31h

kube-public Active 31h

kube-system Active 31h

[root@vboxnode3ccccccttttttchenyang ~]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-68d86f8988-zvqc2 0/1 Pending 0 30m

calico-node-cwpt8 0/1 Init:CrashLoopBackOff 9 30m

calico-node-tlvtl 1/1 Running 0 30m

coredns-545d6fc579-6ddhj 0/1 Pending 0 31h

coredns-545d6fc579-nkk68 0/1 Pending 0 31h

etcd-vboxnode3ccccccttttttchenyang 1/1 Running 1 31h

kube-apiserver-vboxnode3ccccccttttttchenyang 1/1 Running 4 31h

kube-controller-manager-vboxnode3ccccccttttttchenyang 1/1 Running 3 31h

kube-proxy-55889 1/1 Running 1 31h

kube-proxy-v8vqr 1/1 Running 1 31h

kube-scheduler-vboxnode3ccccccttttttchenyang 1/1 Running 3 31h
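With more pods, scanning this table by eye gets tedious. A minimal sketch that filters the listing down to pods that are not fully ready; `not_ready_pods` is a hypothetical helper and assumes the column layout of `kubectl get pods -n <namespace>` shown above (NAME, READY, STATUS, ...). Pods in the `Completed` state would also be listed, which is acceptable for this cluster since it runs no Jobs.

```shell
#!/bin/sh
# Read `kubectl get pods -n <ns>` output on stdin and print NAME, READY and
# STATUS for every pod whose READY count is incomplete or whose STATUS is
# not Running.
not_ready_pods() {
    awk 'NR > 1 {
        split($2, r, "/")                    # READY column, e.g. 0/1
        if (r[1] != r[2] || $3 != "Running")
            print $1, $2, $3
    }'
}
```

Usage: `kubectl get pods -n kube-system | not_ready_pods`. For the listing above this would print the Pending calico-kube-controllers and coredns pods and the calico-node-cwpt8 pod in Init:CrashLoopBackOff.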

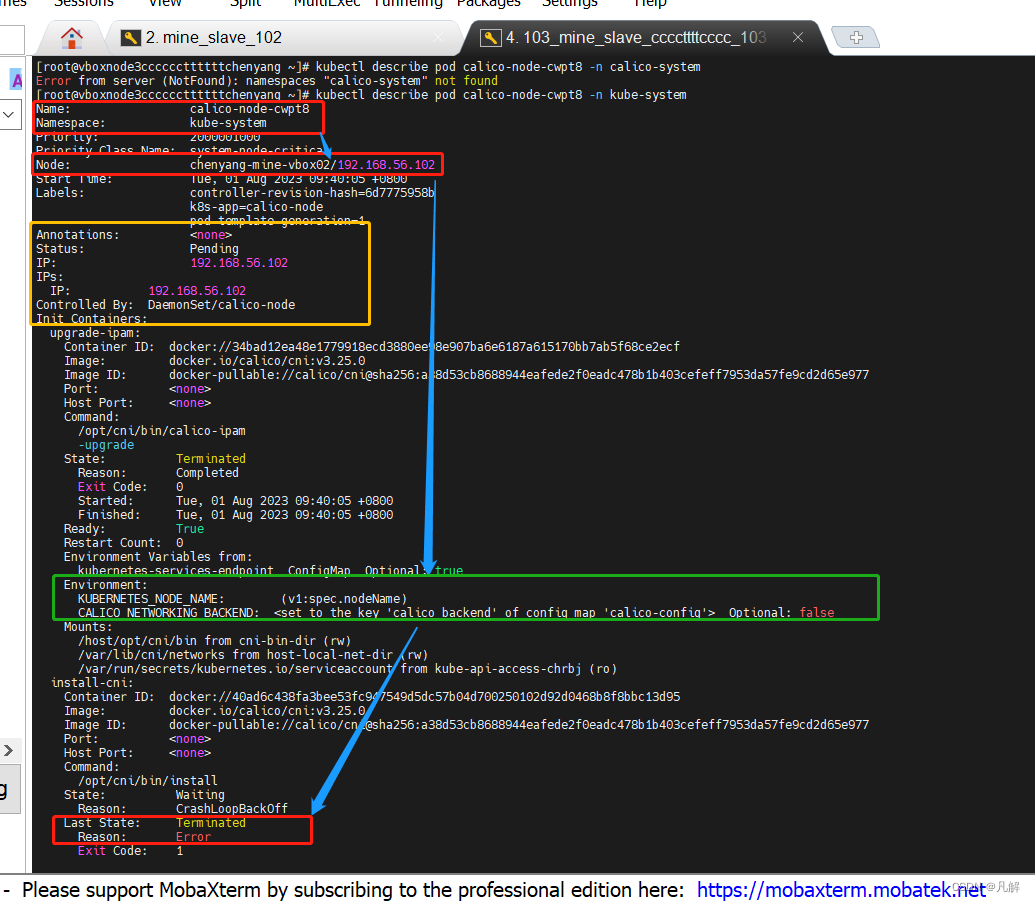

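2.2: Inspect the failing (Init:Error) calico pod: run kubectl describe pod <pod-name>

Command: kubectl describe pod calico-node-cwpt8 -n kube-system (the first attempt below used -n calico-system, a namespace that does not exist in this cluster)

Key parts of the output: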

[root@vboxnode3ccccccttttttchenyang ~]# kubectl describe pod calico-node-cwpt8 -n calico-system

Error from server (NotFound): namespaces "calico-system" not found

[root@vboxnode3ccccccttttttchenyang ~]# kubectl describe pod calico-node-cwpt8 -n kube-system

Name: calico-node-cwpt8

Namespace: kube-system

Priority: 2000001000

Priority Class Name: system-node-critical

Node: chenyang-mine-vbox02/192.168.56.102

Start Time: Tue, 01 Aug 2023 09:40:05 +0800

Labels: controller-revision-hash=6d7775958b
        k8s-app=calico-node
        pod-template-generation=1

Annotations: <none>

Status: Pending

IP: 192.168.56.102

IPs:
  IP: 192.168.56.102

Controlled By: DaemonSet/calico-node

Init Containers:
  upgrade-ipam:
    Container ID: docker://34bad12ea48e1779918ecd3880ee98e907ba6e6187a615170bb7ab5f68ce2ecf
    Image: docker.io/calico/cni:v3.25.0
    Image ID: docker-pullable://calico/cni@sha256:a38d53cb8688944eafede2f0eadc478b1b403cefeff7953da57fe9cd2d65e977
    Port: <none>
    Host Port: <none>
    Command:
      /opt/cni/bin/calico-ipam-upgrade
    State: Terminated
      Reason: Completed
      Exit Code: 0
      Started: Tue, 01 Aug 2023 09:40:05 +0800
      Finished: Tue, 01 Aug 2023 09:40:05 +0800
    Ready: True
    Restart Count: 0
    Environment Variables from:
      kubernetes-services-endpoint ConfigMap Optional: true
    Environment:
      KUBERNETES_NODE_NAME: (v1:spec.nodeName)
      CALICO_NETWORKING_BACKEND: <set to the key 'calico_backend' of config map 'calico-config'> Optional: false
    Mounts:
      /host/opt/cni/bin from cni-bin-dir (rw)
      /var/lib/cni/networks from host-local-net-dir (rw)
      /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-chrbj (ro)
  install-cni:
    Container ID: docker://40ad6c438fa3bee53fc947549d5dc57b04d700250102d92d0468b8f8bbc13d95
    Image: docker.io/calico/cni:v3.25.0
    Image ID: docker-pullable://calico/cni@sha256:a38d53cb8688944eafede2f0eadc478b1b403cefeff7953da57fe9cd2d65e977
    Port: <none>
    Host Port: <none>
    Command:
      /opt/cni/bin/install
    State: Waiting
      Reason: CrashLoopBackOff
    Last State: Terminated
      Reason: Error
      Exit Code: 1
      Started: Tue, 01 Aug 2023 11:18:31 +0800
      Finished: Tue, 01 Aug 2023 11:19:02 +0800
    Ready: False
    Restart Count: 22
    Environment Variables from:
      kubernetes-services-endpoint ConfigMap Optional: true
    Environment:
      CNI_CONF_NAME: 10-calico.conflist
      CNI_NETWORK_CONFIG: <set to the key 'cni_network_config' of config map 'calico-config'> Optional: false
      KUBERNETES_NODE_NAME: (v1:spec.nodeName)
      CNI_MTU: <set to the key 'veth_mtu' of config map 'calico-config'> Optional: false
      SLEEP: false
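The NotFound error at the start of this transcript comes from guessing the namespace: the calico.yaml manifest used here creates its resources in kube-system, whereas calico-system is the namespace used by operator-based Calico installs. Rather than guessing, the namespace can be looked up from the cluster-wide pod listing. A minimal sketch; `pod_namespace` is a hypothetical helper that assumes the column layout of `kubectl get pods -A` (NAMESPACE first, NAME second):

```shell
#!/bin/sh
# Read `kubectl get pods -A` output on stdin and print the namespace of the
# pod named in $1. Exit status is 1 if no such pod is listed.
pod_namespace() {
    awk -v pod="$1" '
        NR > 1 && $2 == pod { print $1; found = 1 }
        END { exit !found }
    '
}
```

Usage: `kubectl describe pod calico-node-cwpt8 -n "$(kubectl get pods -A | pod_namespace calico-node-cwpt8)"`.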

2.3: Try re-pulling the coredns image and re-running the docker tag commands for coredns

2.4: Check the coredns and calico pod status again

2.4.1: Run kubectl get pod -A to check the coredns and calico pod status

[root@vboxnode3ccccccttttttchenyang ~]# kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-68d86f8988-zvqc2 1/1 Running 1 24h

kube-system calico-node-cwpt8 0/1 Init:CrashLoopBackOff 9 24h

kube-system calico-node-tlvtl 1/1 Running 1 24h

kube-system coredns-545d6fc579-nggnz 0/1 Pending 0 22h

kube-system coredns-545d6fc579-rbd8c 0/1 Pending 0 22h

kube-system etcd-vboxnode3ccccccttttttchenyang 1/1 Running 2 2d7h

kube-system kube-apiserver-vboxnode3ccccccttttttchenyang 1/1 Running 5 2d7h

kube-system kube-controller-manager-vboxnode3ccccccttttttchenyang 1/1 Running 6 2d7h

kube-system kube-proxy-55889 1/1 Running 2 2d7h

kube-system kube-proxy-v8vqr 1/1 Running 2 2d7h

kube-system kube-scheduler-vboxnode3ccccccttttttchenyang 1/1 Running 6 2d7h

2.5: Check the logs of the failing calico-node pod

2.5.1: master: command: kubectl logs -f calico-node-cwpt8 -n kube-system

[root@vboxnode3ccccccttttttchenyang ~]# kubectl logs -f calico-node-cwpt8 -n kube-system

2.5.2: master: the error log

[root@vboxnode3ccccccttttttchenyang ~]# kubectl logs -f calico-node-cwpt8 -n kube-system

Error from server: Get "https://192.168.56.102:10250/containerLogs/kube-system/calico-node-cwpt8/calico-node?follow=true": dial tcp 192.168.56.102:10250: connect: no route to host

2.5.3: master: telnet to the ip:port from the error message

2.5.3.1: Install telnet

[root@vboxnode3ccccccttttttchenyang ~]# telnet 192.168.56.102:10250

-bash: telnet: command not found

[root@vboxnode3ccccccttttttchenyang ~]# rpm -q telnet

package telnet is not installed

[root@vboxnode3ccccccttttttchenyang ~]# rpm -q telnet-server

package telnet-server is not installed

[root@vboxnode3ccccccttttttchenyang ~]# yum list telnet*

Loaded plugins: fastestmirror

Loading mirror speeds from cached hostfile
 * base: ftp.sjtu.edu.cn
 * extras: ftp.sjtu.edu.cn
 * updates: mirrors.bfsu.edu.cn

Available Packages

telnet.x86_64 1:0.17-66.el7 updates

telnet-server.x86_64 1:0.17-66.el7 updates

[root@vboxnode3ccccccttttttchenyang ~]# yum install telnet-server

Loaded plugins: fastestmirror

Loading mirror speeds from cached hostfile
 * base: ftp.sjtu.edu.cn
 * extras: ftp.sjtu.edu.cn
 * updates: mirrors.bfsu.edu.cn

base | 3.6 kB 00:00:00

docker-ce-stable | 3.5 kB 00:00:00

extras | 2.9 kB 00:00:00

kubernetes | 1.4 kB 00:00:00

updates | 2.9 kB 00:00:00

docker-ce-stable/7/x86_64/primary_db | 116 kB 00:00:01

Resolving Dependencies

--> Running transaction check

---> Package telnet-server.x86_64 1:0.17-66.el7 will be installed

--> Finished Dependency Resolution

Dependencies Resolved

===========================================================================================================================

Package Arch Version Repository Size

===========================================================================================================================

Installing:

telnet-server x86_64 1:0.17-66.el7 updates 41 k

Transaction Summary

===========================================================================================================================

Install 1 Package

Total download size: 41 k

Installed size: 55 k

Is this ok [y/d/N]: y

Downloading packages:

telnet-server-0.17-66.el7.x86_64.rpm | 41 kB 00:00:00

Running transaction check

Running transaction test

Transaction test succeeded

Running transaction
  Installing : 1:telnet-server-0.17-66.el7.x86_64 1/1
  Verifying : 1:telnet-server-0.17-66.el7.x86_64 1/1

Installed:
  telnet-server.x86_64 1:0.17-66.el7

Complete!

2.5.3.2: telnet to the ip:port from the error message: telnet 192.168.56.102 10250

[root@vboxnode3ccccccttttttchenyang ~]# telnet 192.168.56.102:10250

telnet: 192.168.56.102:10250: Name or service not known

192.168.56.102:10250: Unknown host

[root@vboxnode3ccccccttttttchenyang ~]# telnet 192.168.56.102 10250

Trying 192.168.56.102...

telnet: connect to address 192.168.56.102: No route to host

[root@vboxnode3ccccccttttttchenyang ~]#
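Two details from the transcript are worth spelling out. telnet takes the host and port as separate arguments (`telnet 192.168.56.102 10250`), not `host:port`. And on CentOS the client program comes from the `telnet` package, while `telnet-server` only provides the daemon. The same reachability check can also be made without installing anything. The sketch below is one way to do it, assuming bash and the coreutils `timeout` command are available; `port_open` is a hypothetical helper name.

```shell
#!/bin/sh
# Return 0 if a TCP connection to host $1, port $2 can be opened within
# 3 seconds. Uses bash's /dev/tcp pseudo-device; error messages such as
# "No route to host" are suppressed and only the exit status is reported.
port_open() {
    timeout 3 bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$1" "$2" 2>/dev/null
}
```

Usage: `port_open 192.168.56.102 10250 && echo reachable || echo unreachable`. A firewall that rejects or drops the connection yields "unreachable", matching the "No route to host" result above.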

2.5.3.3: Open port 10250 on the unreachable machine

systemctl status firewalld

If firewalld is not running -> systemctl start firewalld.service

firewall-cmd --permanent --zone=public --add-port=10250/tcp

firewall-cmd --reload

firewall-cmd --permanent --zone=public --list-port

[root@chenyang-mine-vbox02 ~]# firewall-cmd --permanent --zone=public --list-port

3306/tcp 8848/tcp 6443/tcp 8080/tcp 8083/tcp 8086/tcp 9200/tcp 9300/tcp 10250/tcp

[root@chenyang-mine-vbox02 ~]#

2.5.3.4: Success: telnet 192.168.56.102 10250

[root@vboxnode3ccccccttttttchenyang ~]# telnet 192.168.56.102 10250

Trying 192.168.56.102...

Connected to 192.168.56.102.

Escape character is '^]'.

2.6: master: check the failing calico-node pod logs again: still failing

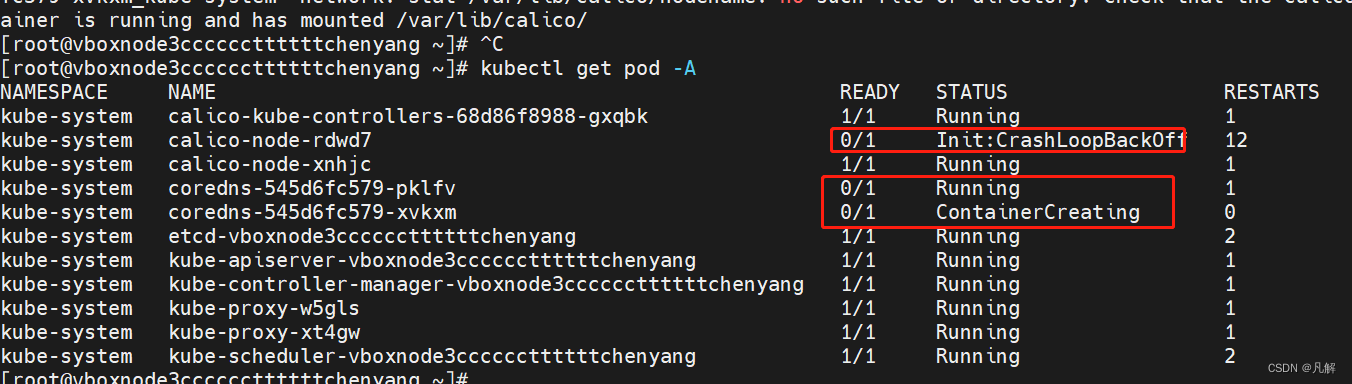

[root@vboxnode3ccccccttttttchenyang ~]# kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-68d86f8988-gxqbk 1/1 Running 0 46m

kube-system calico-node-rdwd7 0/1 Init:CrashLoopBackOff 12 46m

kube-system calico-node-xnhjc 1/1 Running 0 46m

kube-system coredns-545d6fc579-dmjsp 0/1 Running 0 48m

kube-system coredns-545d6fc579-pklfv 0/1 Running 0 48m

kube-system etcd-vboxnode3ccccccttttttchenyang 1/1 Running 0 48m

kube-system kube-apiserver-vboxnode3ccccccttttttchenyang 1/1 Running 0 48m

kube-system kube-controller-manager-vboxnode3ccccccttttttchenyang 1/1 Running 0 48m

kube-system kube-proxy-w5gls 1/1 Running 0 48m

kube-system kube-proxy-xt4gw 1/1 Running 0 47m

kube-system kube-scheduler-vboxnode3ccccccttttttchenyang 1/1 Running 0 48m

[root@vboxnode3ccccccttttttchenyang ~]# kubectl logs calico-node-rdwd7 -f --tail=50 -n kube-system

Error from server (BadRequest): container "calico-node" in pod "calico-node-rdwd7" is waiting to start: PodInitializing

[root@vboxnode3ccccccttttttchenyang ~]#

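2.7: master: check the coredns error log: it appears related to the worker node's network

Key point: I first read this as the master being unable to find network resources on the worker node. Taken literally, though, the message is an RBAC denial: the coredns service account is not allowed to list endpointslices.

User "system:serviceaccount:kube-system:coredns" cannot list resource "endpointslices" in API group "discovery.k8s.io" at the cluster scope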

[root@vboxnode3ccccccttttttchenyang ~]# kubectl logs coredns-545d6fc579-dmjsp -f --tail=50 -n kube-system

E0805 18:36:01.342345 1 reflector.go:138] pkg/mod/k8s.io/client-go@v0.22.2/tools/cache/reflector.go:167: Failed to watch *v1.EndpointSlice: failed to list *v1.EndpointSlice: endpointslices.discovery.k8s.io is forbidden: User "system:serviceaccount:kube-system:coredns" cannot list resource "endpointslices" in API group "discovery.k8s.io" at the cluster scope

[INFO] plugin/ready: Still waiting on: "kubernetes"

E0805 18:36:02.676563 1 reflector.go:138] pkg/mod/k8s.io/client-go@v0.22.2/tools/cache/reflector.go:167: Failed to watch *v1.EndpointSlice: failed to list *v1.EndpointSlice: endpointslices.discovery.k8s.io is forbidden: User "system:serviceaccount:kube-system:coredns" cannot list resource "endpointslices" in API group "discovery.k8s.io" at the cluster scope

E0805 18:36:04.964823 1 reflector.go:138] pkg/mod/k8s.io/client-go@v0.22.2/tools/cache/reflector.go:167: Failed to watch *v1.EndpointSlice: failed to list *v1.EndpointSlice: endpointslices.discovery.k8s.io is forbidden: User "system:serviceaccount:kube-system:coredns" cannot list resource "endpointslices" in API group "discovery.k8s.io" at the cluster scope

[WARNING] plugin/kubernetes: starting server with unsynced Kubernetes API
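The repeated reflector errors all carry the same denial, so it helps to reduce them to the distinct (user, verb, resource, API group) tuples before deciding what to fix. A minimal sketch; `rbac_denials` is a hypothetical helper that relies only on the message format visible in the log above:

```shell
#!/bin/sh
# Read log lines on stdin and print each distinct RBAC denial as
# "user verb resource api-group".
rbac_denials() {
    sed -n 's/.*User "\([^"]*\)" cannot \([a-z]*\) resource "\([^"]*\)" in API group "\([^"]*\)".*/\1 \2 \3 \4/p' | sort -u
}
```

Usage: `kubectl logs coredns-545d6fc579-dmjsp -n kube-system | rbac_denials`. The denial can then be confirmed with `kubectl auth can-i list endpointslices.discovery.k8s.io --as=system:serviceaccount:kube-system:coredns`. If the answer is "no", one common remedy (not verified in this post) is to add list and watch on endpointslices in the discovery.k8s.io API group to the system:coredns ClusterRole.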

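2.8: cluster (worker node): check the error logs: they appear related to the worker node's network

2.8.1: cluster: check the error log: journalctl -f -u kubelet

2.8.1.1 Key point: the CNI configuration cannot be found: "Unable to update cni config" err="no networks found in /etc/cni/net.d"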

[root@chenyang-mine-vbox02 ~]# cd /etc/cni/net.d/

[root@chenyang-mine-vbox02 net.d]# ls

[root@chenyang-mine-vbox02 net.d]#

[root@chenyang-mine-vbox02 ~]# journalctl -f -u kubelet

-- Logs begin at Sun 2023-08-06 02:16:53 CST. --

Aug 06 02:43:22 chenyang-mine-vbox02 kubelet[6109]: I0806 02:43:22.030642 6109 cni.go:239] "Unable to update cni config" err="no networks found in /etc/cni/net.d"

Aug 06 02:43:23 chenyang-mine-vbox02 kubelet[6109]: E0806 02:43:23.138847 6109 pod_workers.go:190] "Error syncing pod, skipping" err="failed to \"StartContainer\" for \"install-cni\" with CrashLoopBackOff: \"back-off 2m40s restarting failed container=install-cni pod=calico-node-rdwd7_kube-system(940cdb9e-c99b-46d3-a1f5-92ee1f175299)\"" pod="kube-system/calico-node-rdwd7" podUID=940cdb9e-c99b-46d3-a1f5-92ee1f175299

Aug 06 02:43:23 chenyang-mine-vbox02 kubelet[6109]: E0806 02:43:23.215196 6109 kubelet.go:2218] "Container runtime network not ready" networkReady="NetworkReady=false reason:NetworkPluginNotReady message:docker: network plugin is not ready: cni config uninitialized"

Aug 06 02:43:27 chenyang-mine-vbox02 kubelet[6109]: I0806 02:43:27.032036 6109 cni.go:239] "Unable to update cni config" err="no networks found in /etc/cni/net.d"
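The kubelet keeps the node NotReady until it finds a network configuration in /etc/cni/net.d. The check behind that log line can be approximated with a short script. This is a sketch under one assumption: that, as in the reference CNI library, only files ending in .conf, .conflist or .json count as network configurations, so a lone calico-kubeconfig would not be enough. `cni_config_present` is a hypothetical helper name.

```shell
#!/bin/sh
# Return 0 if the directory (default /etc/cni/net.d) holds at least one
# CNI network configuration file, 1 otherwise.
cni_config_present() {
    dir=${1:-/etc/cni/net.d}
    for f in "$dir"/*.conf "$dir"/*.conflist "$dir"/*.json; do
        [ -f "$f" ] && return 0
    done
    return 1
}
```

Usage: `cni_config_present || echo "no CNI config on this node"`. On a Calico node these files are normally written by the install-cni init container, which is the container shown in CrashLoopBackOff in the same log. Its own output can be read with `kubectl logs calico-node-rdwd7 -c install-cni -n kube-system`, which may explain why the files were never created.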

2.8.2: master: check the configuration in /etc/cni/net.d

[root@vboxnode3ccccccttttttchenyang ~]# cd /etc/cni/net.d/

[root@vboxnode3ccccccttttttchenyang net.d]# ls

10-calico.conflist calico-kubeconfig

[root@vboxnode3ccccccttttttchenyang net.d]#

2.8.3: Copy the /etc/cni/net.d configuration from the master to the cluster (worker) node

[root@chenyang-mine-vbox02 net.d]# touch calico-kubeconfig

[root@chenyang-mine-vbox02 net.d]# touch 10-calico.conflist

[root@chenyang-mine-vbox02 net.d]# vi 10-calico.conflist

[root@chenyang-mine-vbox02 net.d]# vi calico-kubeconfig

[root@chenyang-mine-vbox02 net.d]# ls

10-calico.conflist calico-kubeconfig

[root@chenyang-mine-vbox02 net.d]#

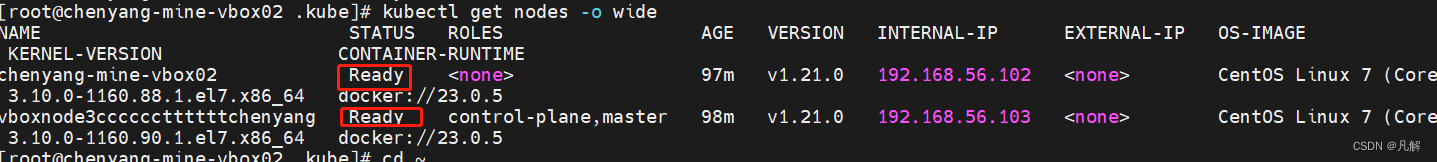

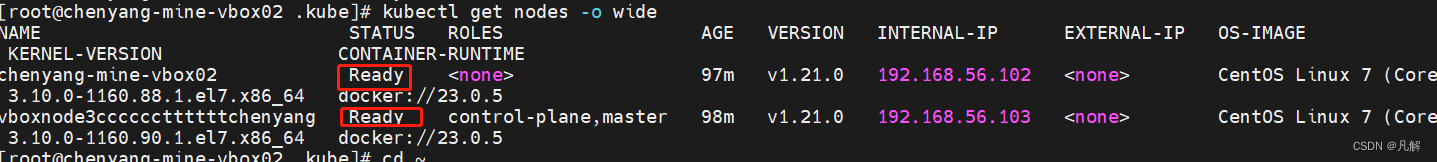

2.9: Restart kubelet and check the status of all nodes

systemctl restart kubelet

kubectl get nodes -o wide

[root@chenyang-mine-vbox02 .kube]# systemctl restart kubelet

[root@chenyang-mine-vbox02 .kube]# kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

chenyang-mine-vbox02 Ready <none> 97m v1.21.0 192.168.56.102 <none> CentOS Linux 7 (Core) 3.10.0-1160.88.1.el7.x86_64 docker://23.0.5

vboxnode3ccccccttttttchenyang Ready control-plane,master 98m v1.21.0 192.168.56.103 <none> CentOS Linux 7 (Core) 3.10.0-1160.90.1.el7.x86_64 docker://23.0.5

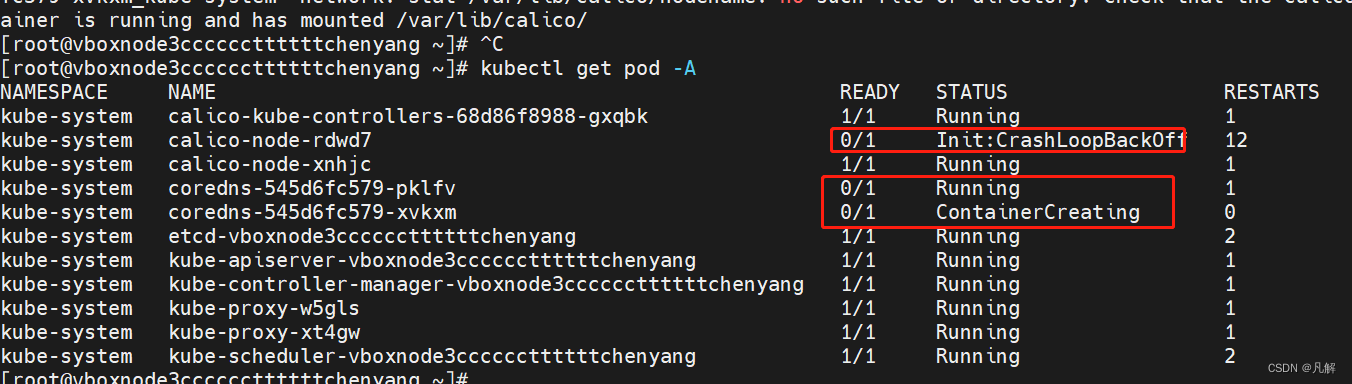

3: Follow-up issues

Although the nodes went from NotReady to Ready, coredns and calico are still not Ready. I will keep following up on this.

[root@vboxnode3ccccccttttttchenyang ~]# kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-68d86f8988-gxqbk 1/1 Running 1 111m

kube-system calico-node-rdwd7 0/1 Init:CrashLoopBackOff 12 111m

kube-system calico-node-xnhjc 1/1 Running 1 111m

kube-system coredns-545d6fc579-pklfv 0/1 Running 1 113m

kube-system coredns-545d6fc579-xvkxm 0/1 ContainerCreating 0 13m

kube-system etcd-vboxnode3ccccccttttttchenyang 1/1 Running 2 114m

kube-system kube-apiserver-vboxnode3ccccccttttttchenyang 1/1 Running 1 114m

kube-system kube-controller-manager-vboxnode3ccccccttttttchenyang 1/1 Running 1 114m

kube-system kube-proxy-w5gls 1/1 Running 1 113m

kube-system kube-proxy-xt4gw 1/1 Running 1 113m

kube-system kube-scheduler-vboxnode3ccccccttttttchenyang 1/1 Running 2 114m

[root@vboxnode3ccccccttttttchenyang ~]#

相关文章:

·[K8S:使用calico网络插件]:解决集群节点NotReady问题

文章目录 一:安装calico:1.1:weget安装Colico网络通信插件:1.2:修改calico.yaml网卡相关配置:1.2.1:查看本机ip 网卡相关信息:1.2.2:修改calico.yaml网卡interface相关信…...

泊松损坏图像的快速尺度间小波去噪研究(Matlab代码实现)

💥💥💞💞欢迎来到本博客❤️❤️💥💥 🏆博主优势:🌞🌞🌞博客内容尽量做到思维缜密,逻辑清晰,为了方便读者。 ⛳️座右铭&a…...

服务器端开发-golang dlv 远程调试

1。需要root权限的服务器代码调试 sudo ./appps to get piddlv attach pid --headless --listen:40000 --api-version2 --accept-multiclientattach the golang IDE or other IDE 2。不需要root权限的服务器代码调试,另一种选择 dlv --listen:40000 --headlesstr…...

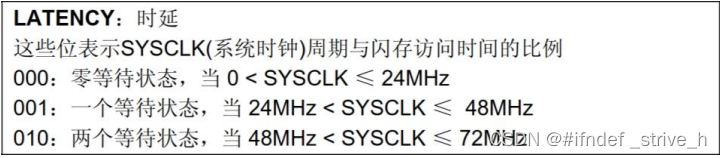

STM32F103——时钟配置

目录 1、认识时钟树 1.1 什么是时钟树 1.2 时钟系统解析 1.2.1 时钟源 1.2.2 锁相环PLL 1.2.3 系统时钟SYSCLK 1.2.4 时钟信号输出MCO 2、如何修改主频 2.1 STM32F1时钟系统配置 2.2 STM32F1 时钟使能和配置 下列进行举例的开发板是原子哥的战舰开发板STM32F103ZET…...

【Linux】信号捕捉

目录 信号捕捉1.用户态与内核态1.1关于内核空间与内核态:1.2关于用户态与内核态的表征: 2.信号捕捉过程 信号捕捉 1.用户态与内核态 用户态:执行用户代码时,进程的状态 内核态:执行OS代码时,进程的状态 …...

超详情的开源知识库管理系统- mm-wiki的安装和使用

背景:最近公司需要一款可以记录公司内部文档信息,一些只是累计等,通过之前的经验积累,立马想到了 mm-wiki,然后就给公司搭建了一套,分享一下安装和使用说明: 当前市场上众多的优秀的文档系统百…...

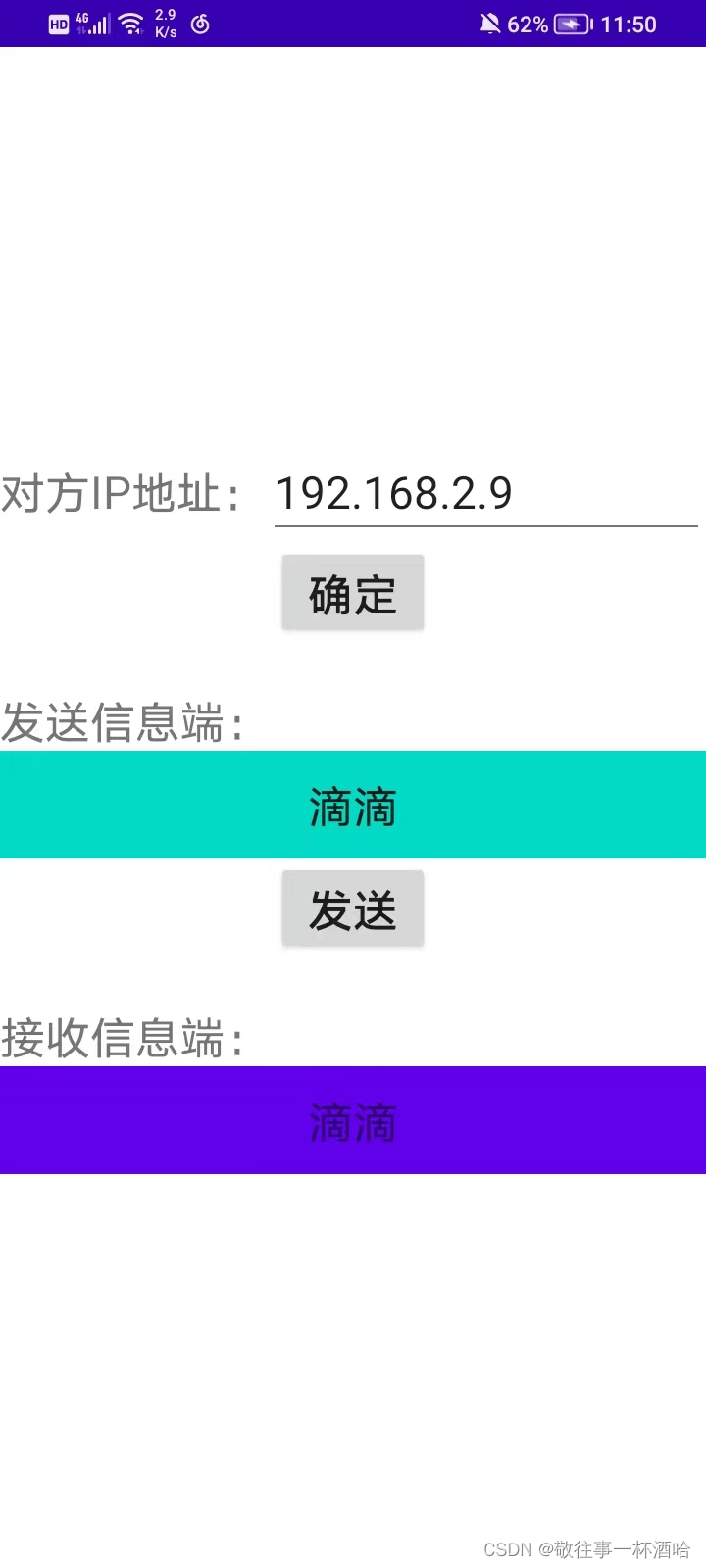

安卓:UDP通信

目录 一、介绍 网络通信的三要素: (1)、IP地址: IPv4: IPv6: IP地址形式: IP常用命令: IP地址操作类: (2)、端口: (3)、协议: UDP协…...

clickhouse安装

clickhouse安装 在线安装和离线安装 一、环境准备: 1.检查系统是否支持clickhouse安装 (向量化支持) grep -q sse4_2 /proc/cpuinfo && echo “SSE 4.2 supported” || echo “SSE 4.2 not supported.” 2.下载对应的clickhouse包 复制运行之后,就会将对应的包加入…...

Cpp学习——string(2)

目录 编辑 容器string中的一些函数 1.capacity() 2.reserve() 3.resize() 4.push_back()与append() 5.find系列函数 容器string中的一些函数 1.capacity() capacity是string当中表示容量大小的函数。但是string开空间时是如何开的呢?现在就来看一下。先写…...

python进阶编程

lambda匿名函数 python使用lambda表达式来创建匿名函数 语法 // lambda 参数们:对参数的处理 lambda x : 2 * x // x 是参数, 2*x 是返回值 //使用lambda实现求和 sum lambda arg1, arg2 : agr1 arg2 print(sum(10,20)) // 将匿名函数封装在一…...

算法练习--leetcode 链表

文章目录 合并两个有序链表删除排序链表中的重复元素 1删除排序链表中的重复元素 2环形链表1环形链表2相交链表反转链表 合并两个有序链表 将两个升序链表合并为一个新的 升序 链表并返回。 新链表是通过拼接给定的两个链表的所有节点组成的。 示例 1: 输入&…...

Android性能优化—Apk瘦身优化

随着业务迭代,apk体积逐渐变大。项目中积累的无用资源,未压缩的图片资源等,都为apk带来了不必要的体积 增加。而APK 的大小会影响应用加载速度、使用的内存量以及消耗的电量。在讨论如何缩减应用的大小之前,有必要了解下应用 APK …...

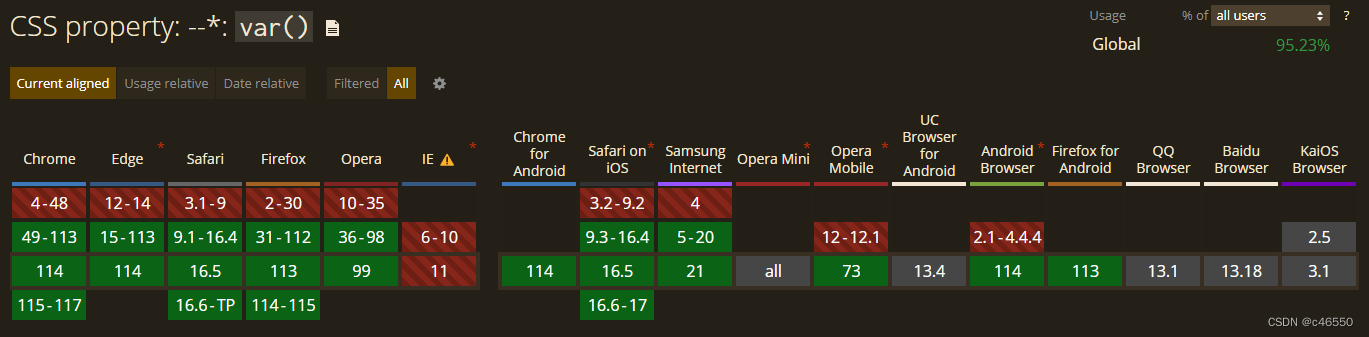

前端主题切换方案——CSS变量

前言 主题切换是前端开发中老生常谈的问题,本文将介绍主流的前端主题切换实现方案——CSS变量 CSS变量 简介 编写CSS样式时,为了避免代码冗余,降低维护成本,一些CSS预编译工具(Sass/Less/Stylus)等都支…...

Java8 list多属性去重

大家好,我是三叔,很高兴这期又和大家见面了,一个奋斗在互联网的打工人。 在 Java 开发中,我们经常会面临对 List 中的对象属性去重的需求。然而,当需要根据多个属性来进行去重时,情况会稍微复杂一些。本篇…...

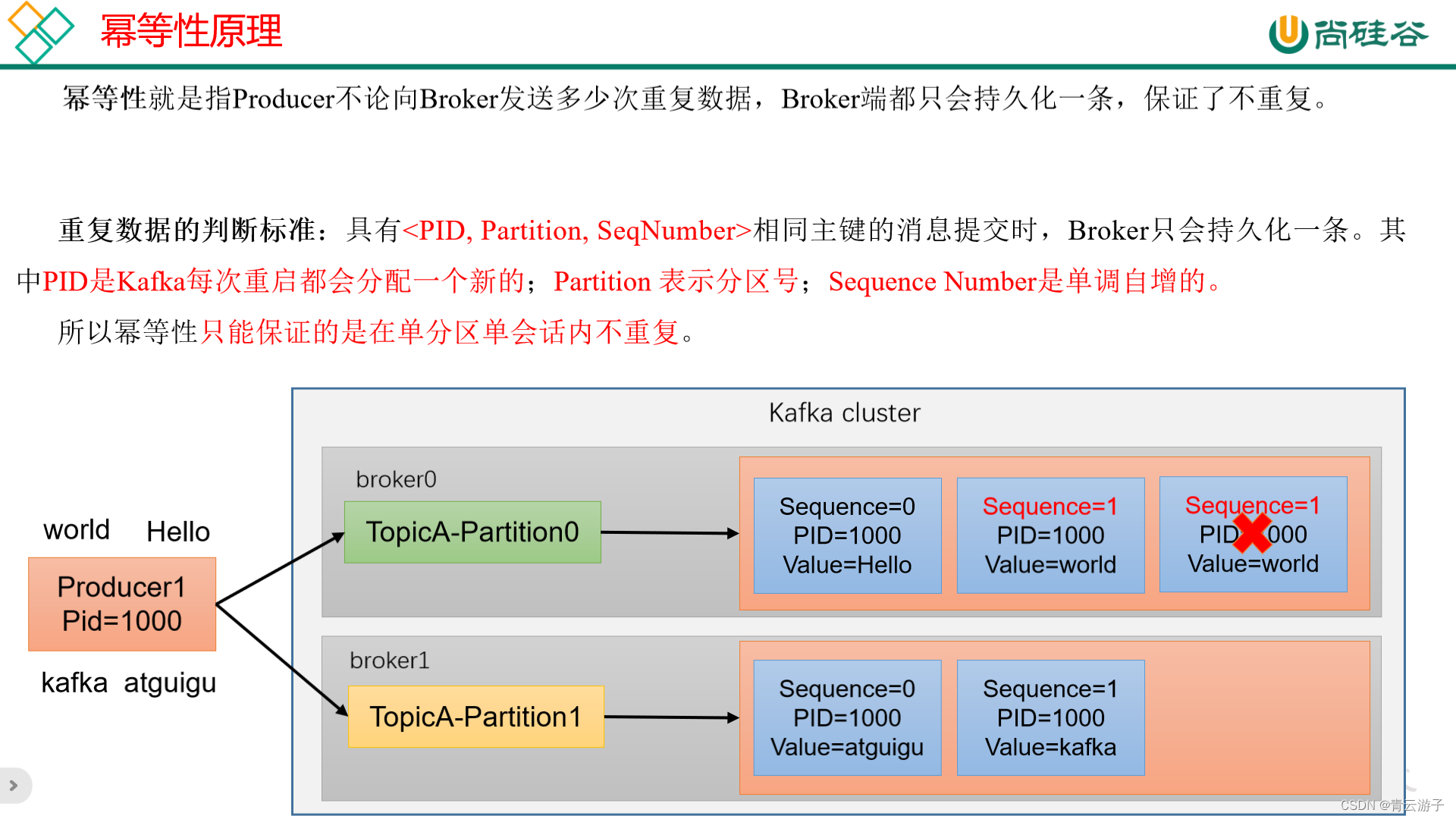

kafka-保证数据不重复-生产者开启幂等性和事务的作用?

1. 生产者开启幂等性为什么能去重? 1.1 场景 适用于消息在写入到服务器日志后,由于网络故障,生产者没有及时收到服务端的ACK消息,生产者误以为消息没有持久化到服务端,导致生产者重复发送该消息,造成了消…...

[AI in security]-214 网络安全威胁情报的建设

文章目录 1.什么是威胁情报2. 威胁情报3. 智能威胁情报3.1 智能威胁情报的组成3.2 整合威胁情报3.3 最佳实践4. 威胁情报的作用5.威胁情报模型6.反杀链模型7.基于TI的局部优势模型参考文献相关的研究1.什么是威胁情报 威胁情报是循证知识,包括环境、机制、指标、意义和可行性…...

Javaweb学习(2)

Javaweb学习 一、Maven1.1 Maven概述1.2 Maven简介1.3、Maven基本使用1.4、IDEA配置Maven1.6、依赖管理&依赖范围 二、MyBatis2.1 MyBatis简介2.2 Mybatis快速入门2.3、解决SQL映射文件的警告提示2.4、Mapper代理开发 三、MyBaits核心配置文件四、 配置文件的增删改查4.1 M…...

leetcode410. 分割数组的最大值 动态规划

hard:https://leetcode.cn/problems/split-array-largest-sum/ 给定一个非负整数数组 nums 和一个整数 m ,你需要将这个数组分成 m 个非空的连续子数组。 设计一个算法使得这 m 个子数组各自和的最大值最小。 示例 1:输入:nums [7,2,5,1…...

C函数指针与类型定义

#include <stdio.h> #define PI 3.14 typedef int uint32_t; /* pfun is a pointer and its type is void (*)(void) */ void (*pfun)(void); /* afer typedef like this we can use “pfun1” as a data type to a function that has form like: / -------…...

最新2024届【海康威视】内推码【GTK3B6】

最新2024届【海康威视】内推码【GTK3B6】 【内推码使用方法】 1.请学弟学妹们登录校招官网,选择岗位投递简历; 2.投递过程中填写内推码完成内推步骤,即可获得内推特权。 内推码:GTK3B6 内推码:GTK3B6 内推码&…...

【网络】每天掌握一个Linux命令 - iftop

在Linux系统中,iftop是网络管理的得力助手,能实时监控网络流量、连接情况等,帮助排查网络异常。接下来从多方面详细介绍它。 目录 【网络】每天掌握一个Linux命令 - iftop工具概述安装方式核心功能基础用法进阶操作实战案例面试题场景生产场景…...

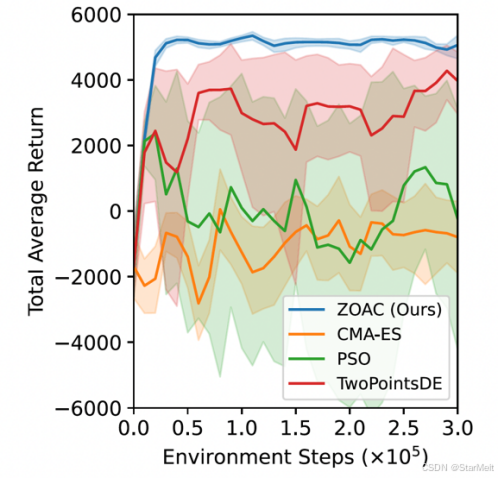

突破不可导策略的训练难题:零阶优化与强化学习的深度嵌合

强化学习(Reinforcement Learning, RL)是工业领域智能控制的重要方法。它的基本原理是将最优控制问题建模为马尔可夫决策过程,然后使用强化学习的Actor-Critic机制(中文译作“知行互动”机制),逐步迭代求解…...

ssc377d修改flash分区大小

1、flash的分区默认分配16M、 / # df -h Filesystem Size Used Available Use% Mounted on /dev/root 1.9M 1.9M 0 100% / /dev/mtdblock4 3.0M...

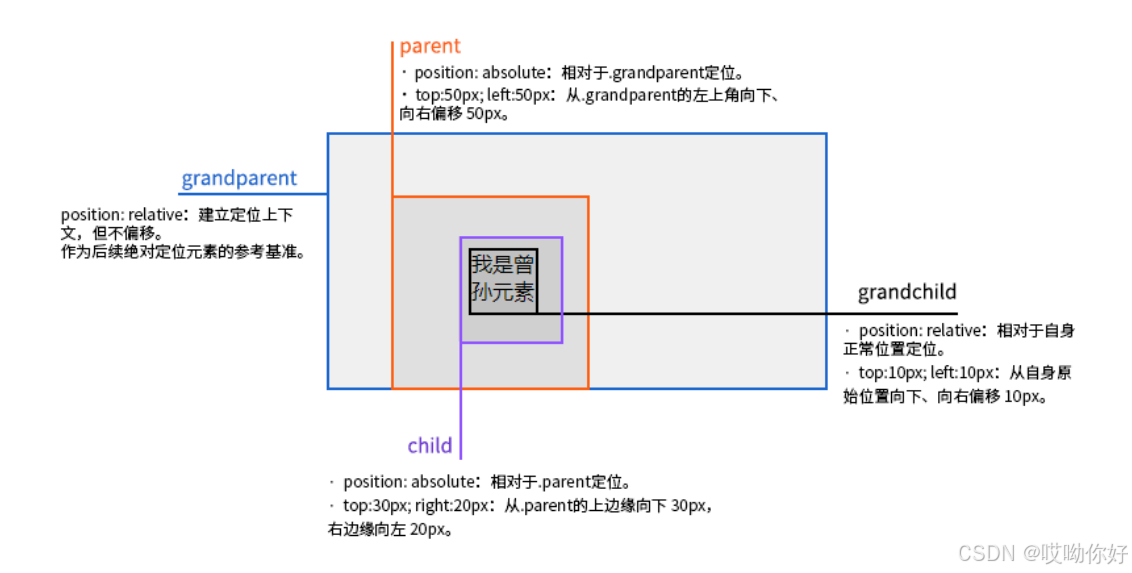

【CSS position 属性】static、relative、fixed、absolute 、sticky详细介绍,多层嵌套定位示例

文章目录 ★ position 的五种类型及基本用法 ★ 一、position 属性概述 二、position 的五种类型详解(初学者版) 1. static(默认值) 2. relative(相对定位) 3. absolute(绝对定位) 4. fixed(固定定位) 5. sticky(粘性定位) 三、定位元素的层级关系(z-i…...

(转)什么是DockerCompose?它有什么作用?

一、什么是DockerCompose? DockerCompose可以基于Compose文件帮我们快速的部署分布式应用,而无需手动一个个创建和运行容器。 Compose文件是一个文本文件,通过指令定义集群中的每个容器如何运行。 DockerCompose就是把DockerFile转换成指令去运行。 …...

06 Deep learning神经网络编程基础 激活函数 --吴恩达

深度学习激活函数详解 一、核心作用 引入非线性:使神经网络可学习复杂模式控制输出范围:如Sigmoid将输出限制在(0,1)梯度传递:影响反向传播的稳定性二、常见类型及数学表达 Sigmoid σ ( x ) = 1 1 +...

安宝特方案丨船舶智造的“AR+AI+作业标准化管理解决方案”(装配)

船舶制造装配管理现状:装配工作依赖人工经验,装配工人凭借长期实践积累的操作技巧完成零部件组装。企业通常制定了装配作业指导书,但在实际执行中,工人对指导书的理解和遵循程度参差不齐。 船舶装配过程中的挑战与需求 挑战 (1…...

QT3D学习笔记——圆台、圆锥

类名作用Qt3DWindow3D渲染窗口容器QEntity场景中的实体(对象或容器)QCamera控制观察视角QPointLight点光源QConeMesh圆锥几何网格QTransform控制实体的位置/旋转/缩放QPhongMaterialPhong光照材质(定义颜色、反光等)QFirstPersonC…...

DAY 26 函数专题1

函数定义与参数知识点回顾:1. 函数的定义2. 变量作用域:局部变量和全局变量3. 函数的参数类型:位置参数、默认参数、不定参数4. 传递参数的手段:关键词参数5 题目1:计算圆的面积 任务: 编写一…...

鸿蒙HarmonyOS 5军旗小游戏实现指南

1. 项目概述 本军旗小游戏基于鸿蒙HarmonyOS 5开发,采用DevEco Studio实现,包含完整的游戏逻辑和UI界面。 2. 项目结构 /src/main/java/com/example/militarychess/├── MainAbilitySlice.java // 主界面├── GameView.java // 游戏核…...