62. Running mmpose HRNet model inference in Python/C++ on the Huawei Ascend Atlas 200I DK A2 development board

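Basic idea: adapt an mmpose model to the board and keep a running log of the steps. For the environment setup and the origin of the model, see the reference links at the end.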

Link: https://pan.baidu.com/s/1IkiwuZf1anyKX1sZkYmD1g?pwd=i51s (extraction code: i51s)

1. Convert the model
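If you convert several models, it can help to assemble the `atc` call programmatically. The sketch below rebuilds the same command used in this post from a few parameters. `build_atc_command` is a hypothetical helper; the input name `input` and the shape `1,3,256,256` are simply the values from the command that follows, and they must match the input of your own ONNX export. `--framework=5` tells ATC that the source model is ONNX.

```python
import shlex


def build_atc_command(onnx_path, output_name, input_name="input",
                      shape=(1, 3, 256, 256), soc_version="Ascend310B1"):
    """Hypothetical helper: return the atc argument list used in this post.

    The name before ':' in --input_shape must equal the ONNX graph input name
    (assumed to be "input" here, as in the command below).
    """
    dims = ",".join(str(d) for d in shape)
    return [
        "atc",
        f"--model={onnx_path}",
        "--framework=5",              # 5 = ONNX source model
        f"--output={output_name}",    # produces <output_name>.om
        "--input_format=NCHW",
        f"--input_shape={input_name}:{dims}",
        "--log=error",
        f"--soc_version={soc_version}",
    ]


if __name__ == "__main__":
    cmd = build_atc_command("end2end.onnx", "end2end")
    print(shlex.join(cmd))
    # On a machine with the CANN toolkit installed you could run it with:
    # import subprocess; subprocess.run(cmd, check=True)
```

Returning a list instead of a single string avoids shell-quoting problems with the `--input_shape` value when the command is passed to `subprocess`.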

```bash
(base) root@davinci-mini:~/sxj731533730# atc --model=end2end.onnx --framework=5 --output=end2end --input_format=NCHW --input_shape="input:1,3,256,256" --log=error --soc_version=Ascend310B1
ATC start working now, please wait for a moment.
...
ATC run success, welcome to the next use.
```

Python code:

```python
import time

import cv2
import numpy as np
from ais_bench.infer.interface import InferSession

model_path = "end2end.om"
IMG_PATH = "ca110.jpeg"


def bbox_xywh2cs(bbox, aspect_ratio, padding=1., pixel_std=200.):
    """Transform the bbox format from (x, y, w, h) into (center, scale).

    Args:
        bbox (ndarray): Single bbox in (x, y, w, h).
        aspect_ratio (float): The expected bbox aspect ratio (w over h).
        padding (float): Bbox padding factor that will be multiplied to scale.
            Default: 1.0
        pixel_std (float): The scale normalization factor. Default: 200.0

    Returns:
        tuple: A tuple containing center and scale.
        - np.ndarray[float32](2,): Center of the bbox (x, y).
        - np.ndarray[float32](2,): Scale of the bbox w & h.
    """
    x, y, w, h = bbox[:4]
    center = np.array([x + w * 0.5, y + h * 0.5], dtype=np.float32)
    if w > aspect_ratio * h:
        h = w * 1.0 / aspect_ratio
    elif w < aspect_ratio * h:
        w = h * aspect_ratio
    scale = np.array([w, h], dtype=np.float32) / pixel_std
    scale = scale * padding
    return center, scale


def rotate_point(pt, angle_rad):
    """Rotate a 2D point (list[float]) by angle_rad radians and return it."""
    assert len(pt) == 2
    sn, cs = np.sin(angle_rad), np.cos(angle_rad)
    new_x = pt[0] * cs - pt[1] * sn
    new_y = pt[0] * sn + pt[1] * cs
    rotated_pt = [new_x, new_y]
    return rotated_pt


def _get_3rd_point(a, b):
    """To calculate the affine matrix, three pairs of points are required.
    This function returns the 3rd point, given 2D points a & b: the vector
    `a - b` rotated by 90 degrees anticlockwise around b.
    """
    assert len(a) == 2
    assert len(b) == 2
    direction = a - b
    third_pt = b + np.array([-direction[1], direction[0]], dtype=np.float32)
    return third_pt


def get_affine_transform(center, scale, rot, output_size, shift=(0., 0.), inv=False):
    """Get the affine transform matrix, given the center/scale/rot/output_size.

    Args:
        center (np.ndarray[2, ]): Center of the bounding box (x, y).
        scale (np.ndarray[2, ]): Scale of the bounding box wrt [width, height].
        rot (float): Rotation angle (degree).
        output_size (np.ndarray[2, ] | list(2,)): Size of the destination heatmaps.
        shift (0-100%): Shift translation ratio wrt the width/height. Default (0., 0.).
        inv (bool): Inverse the transform direction
            (inv=False: src->dst, inv=True: dst->src).

    Returns:
        np.ndarray: The transform matrix.
    """
    assert len(center) == 2
    assert len(scale) == 2
    assert len(output_size) == 2
    assert len(shift) == 2

    # pixel_std is 200.
    scale_tmp = scale * 200.0
    shift = np.array(shift)
    src_w = scale_tmp[0]
    dst_w = output_size[0]
    dst_h = output_size[1]

    rot_rad = np.pi * rot / 180
    src_dir = rotate_point([0., src_w * -0.5], rot_rad)
    dst_dir = np.array([0., dst_w * -0.5])

    src = np.zeros((3, 2), dtype=np.float32)
    src[0, :] = center + scale_tmp * shift
    src[1, :] = center + src_dir + scale_tmp * shift
    src[2, :] = _get_3rd_point(src[0, :], src[1, :])

    dst = np.zeros((3, 2), dtype=np.float32)
    dst[0, :] = [dst_w * 0.5, dst_h * 0.5]
    dst[1, :] = np.array([dst_w * 0.5, dst_h * 0.5]) + dst_dir
    dst[2, :] = _get_3rd_point(dst[0, :], dst[1, :])

    if inv:
        trans = cv2.getAffineTransform(np.float32(dst), np.float32(src))
    else:
        trans = cv2.getAffineTransform(np.float32(src), np.float32(dst))
    return trans


def bbox_xyxy2xywh(bbox_xyxy):
    """Convert bboxes shaped (n, 4) or (n, 5) from (left, top, right, bottom, [score])
    to (left, top, width, height, [score])."""
    bbox_xywh = bbox_xyxy.copy()
    bbox_xywh[:, 2] = bbox_xywh[:, 2] - bbox_xywh[:, 0]
    bbox_xywh[:, 3] = bbox_xywh[:, 3] - bbox_xywh[:, 1]
    return bbox_xywh


def _get_max_preds(heatmaps):
    """Get keypoint predictions from score maps.

    Args:
        heatmaps (np.ndarray[N, K, H, W]): model predicted heatmaps.

    Returns:
        - preds (np.ndarray[N, K, 2]): Predicted keypoint location.
        - maxvals (np.ndarray[N, K, 1]): Scores (confidence) of the keypoints.
    """
    assert isinstance(heatmaps, np.ndarray), 'heatmaps should be numpy.ndarray'
    assert heatmaps.ndim == 4, 'batch_images should be 4-ndim'
    N, K, _, W = heatmaps.shape
    heatmaps_reshaped = heatmaps.reshape((N, K, -1))
    idx = np.argmax(heatmaps_reshaped, 2).reshape((N, K, 1))
    maxvals = np.amax(heatmaps_reshaped, 2).reshape((N, K, 1))
    preds = np.tile(idx, (1, 1, 2)).astype(np.float32)
    preds[:, :, 0] = preds[:, :, 0] % W
    preds[:, :, 1] = preds[:, :, 1] // W
    preds = np.where(np.tile(maxvals, (1, 1, 2)) > 0.0, preds, -1)
    return preds, maxvals


def transform_preds(coords, center, scale, output_size, use_udp=False):
    """Map keypoints predicted on the heatmap back to image coordinates.

    Args:
        coords (np.ndarray[K, ndims]): ndims is 2 (x, y), 4 (x, y, scores, tags)
            or 5 (x, y, scores, tags, flipped_tags).
        center (np.ndarray[2, ]): Center of the bounding box (x, y).
        scale (np.ndarray[2, ]): Scale of the bounding box wrt [width, height].
        output_size (np.ndarray[2, ] | list(2,)): Size of the destination heatmaps.
        use_udp (bool): Use unbiased data processing.

    Returns:
        np.ndarray: Predicted coordinates in the images.
    """
    assert coords.shape[1] in (2, 4, 5)
    assert len(center) == 2
    assert len(scale) == 2
    assert len(output_size) == 2

    # Recover the scale which is normalized by a factor of 200.
    scale = scale * 200.0
    if use_udp:
        scale_x = scale[0] / (output_size[0] - 1.0)
        scale_y = scale[1] / (output_size[1] - 1.0)
    else:
        scale_x = scale[0] / output_size[0]
        scale_y = scale[1] / output_size[1]

    target_coords = np.ones_like(coords)
    target_coords[:, 0] = coords[:, 0] * scale_x + center[0] - scale[0] * 0.5
    target_coords[:, 1] = coords[:, 1] * scale_y + center[1] - scale[1] * 0.5
    return target_coords


def keypoints_from_heatmaps(heatmaps, center, scale, unbiased=False, post_process='default',
                            kernel=11, valid_radius_factor=0.0546875, use_udp=False,
                            target_type='GaussianHeatmap'):
    # Only the default path is implemented; unbiased/kernel/valid_radius_factor/
    # target_type are kept for signature compatibility with mmpose.
    # Copy so the caller's heatmaps are not modified.
    heatmaps = heatmaps.copy()
    N, K, H, W = heatmaps.shape
    preds, maxvals = _get_max_preds(heatmaps)
    # Add a +/-0.25 shift to the predicted locations for higher accuracy.
    for n in range(N):
        for k in range(K):
            heatmap = heatmaps[n][k]
            px = int(preds[n][k][0])
            py = int(preds[n][k][1])
            if 1 < px < W - 1 and 1 < py < H - 1:
                diff = np.array([heatmap[py][px + 1] - heatmap[py][px - 1],
                                 heatmap[py + 1][px] - heatmap[py - 1][px]])
                preds[n][k] += np.sign(diff) * .25
                if post_process == 'megvii':
                    preds[n][k] += 0.5
    # Transform back to the image
    for i in range(N):
        preds[i] = transform_preds(preds[i], center[i], scale[i], [W, H], use_udp=use_udp)
    if post_process == 'megvii':
        maxvals = maxvals / 255.0 + 0.5
    return preds, maxvals


def decode(output, center, scale, score_, batch_size=1):
    c = np.zeros((batch_size, 2), dtype=np.float32)
    s = np.zeros((batch_size, 2), dtype=np.float32)
    score = np.ones(batch_size)
    for i in range(batch_size):
        c[i, :] = center
        s[i, :] = scale
        # score_ is the detector score of the box (a scalar here)
        score[i] = np.asarray(score_, dtype=np.float32).reshape(-1)[0]

    preds, maxvals = keypoints_from_heatmaps(output, c, s, False, 'default', 11,
                                             0.0546875, False, 'GaussianHeatmap')

    all_preds = np.zeros((batch_size, preds.shape[1], 3), dtype=np.float32)
    all_boxes = np.zeros((batch_size, 6), dtype=np.float32)
    all_preds[:, :, 0:2] = preds[:, :, 0:2]
    all_preds[:, :, 2:3] = maxvals
    all_boxes[:, 0:2] = c[:, 0:2]
    all_boxes[:, 2:4] = s[:, 0:2]
    all_boxes[:, 4] = np.prod(s * 200.0, axis=1)
    all_boxes[:, 5] = score

    result = {}
    result['preds'] = all_preds
    result['boxes'] = all_boxes
    print(result)
    return result


def draw(bgr, predict_dict, skeleton, box):
    # box is (x, y, w, h, score)
    cv2.rectangle(bgr, (int(box[0]), int(box[1])),
                  (int(box[0]) + int(box[2]), int(box[1]) + int(box[3])), (255, 0, 0))
    all_preds = predict_dict["preds"]
    for all_pred in all_preds:
        for x, y, s in all_pred:
            cv2.circle(bgr, (int(x), int(y)), 3, (0, 255, 120), -1)
        for sk in skeleton:
            x0 = int(all_pred[sk[0]][0])
            y0 = int(all_pred[sk[0]][1])
            x1 = int(all_pred[sk[1]][0])
            y1 = int(all_pred[sk[1]][1])
            cv2.line(bgr, (x0, y0), (x1, y1), (0, 255, 0), 1)
    cv2.imwrite("sxj731533730_sxj.jpg", bgr)


if __name__ == "__main__":
    # Create the ais_bench inference session on device 0
    model = InferSession(0, model_path)
    print("done")
    # Detector box (x, y, w, h, score); x2/y2 are converted to w/h inline
    bbox = [13.711652, 26.188112, 293.61298 - 13.711652, 227.78246 - 26.188112, 9.995332e-01]
    image_size = [256, 256]
    src_img = cv2.imread(IMG_PATH)
    img = cv2.cvtColor(src_img, cv2.COLOR_BGR2RGB)  # HWC, RGB
    aspect_ratio = image_size[0] / image_size[1]
    padding = 1.25
    pixel_std = 200
    center, scale = bbox_xywh2cs(bbox, aspect_ratio, padding, pixel_std)
    trans = get_affine_transform(center, scale, 0, image_size)
    img = cv2.warpAffine(img, trans, (int(image_size[0]), int(image_size[1])),
                         flags=cv2.INTER_LINEAR)
    print(trans)
    img = img / 255.0  # normalize to 0~1
    img = img.transpose(2, 0, 1)  # HWC -> CHW
    img = np.ascontiguousarray(img, dtype=np.float32)
    # Inference
    print("--> Running model")
    outputs = model.infer([img])[0]
    print(outputs)
    predict_dict = decode(outputs, center, scale, bbox[-1])
    skeleton = [[0, 1], [0, 2], [1, 3], [0, 4], [1, 4], [4, 5], [5, 7], [5, 8], [5, 9], [6, 7],
                [6, 10], [6, 11], [8, 12], [9, 13], [10, 14], [11, 15], [12, 16], [13, 17],
                [14, 18], [15, 19]]
    draw(src_img, predict_dict, skeleton, bbox)
```
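The post-processing above boils down to three steps per keypoint: take the argmax of its 64×64 heatmap, nudge that location a quarter pixel toward the higher neighbour, then map it back into the original image with the same center/scale used for the crop. The NumPy sketch below condenses those steps for a single image. `decode_heatmaps` is a hypothetical helper; it assumes heatmaps shaped (K, H, W) and the default (non-UDP, non-megvii) path, and it skips the special case in `_get_max_preds` that marks keypoints with a non-positive maximum as -1.

```python
import numpy as np


def decode_heatmaps(heatmaps, center, scale, pixel_std=200.0):
    """Hypothetical helper: decode (K, H, W) heatmaps into a (K, 3) array of
    [x, y, score] in original-image coordinates (default post-processing only)."""
    K, H, W = heatmaps.shape
    flat = heatmaps.reshape(K, -1)
    idx = flat.argmax(axis=1)          # flat index of each heatmap's peak
    scores = flat.max(axis=1)          # peak value used as keypoint confidence
    xs = (idx % W).astype(np.float32)
    ys = (idx // W).astype(np.float32)

    # Quarter-pixel shift toward the higher neighbour, skipped near the border
    # (same 1 < p < size - 1 condition as keypoints_from_heatmaps).
    for k in range(K):
        px, py = int(xs[k]), int(ys[k])
        if 1 < px < W - 1 and 1 < py < H - 1:
            hm = heatmaps[k]
            xs[k] += 0.25 * np.sign(hm[py, px + 1] - hm[py, px - 1])
            ys[k] += 0.25 * np.sign(hm[py + 1, px] - hm[py - 1, px])

    # Non-UDP branch of transform_preds: heatmap pixel -> image pixel.
    box_w, box_h = scale[0] * pixel_std, scale[1] * pixel_std
    img_x = xs * (box_w / W) + center[0] - box_w * 0.5
    img_y = ys * (box_h / H) + center[1] - box_h * 0.5
    return np.stack([img_x, img_y, scores], axis=1)
```

The argmax is vectorized over all keypoints; only the refinement keeps a short loop, which stays readable and avoids the nested batch/keypoint loops of the original.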

CMakeLists.txt:

```cmake
cmake_minimum_required(VERSION 3.16)
project(untitled10)

set(CMAKE_CXX_FLAGS "-std=c++11")
set(CMAKE_CXX_STANDARD 11)

add_definitions(-DENABLE_DVPP_INTERFACE)

include_directories(/usr/local/samples/cplusplus/common/acllite/include)
include_directories(/usr/local/Ascend/ascend-toolkit/latest/aarch64-linux/include)

find_package(OpenCV REQUIRED)
#message(STATUS ${OpenCV_INCLUDE_DIRS})
# Add the OpenCV header directories
include_directories(${OpenCV_INCLUDE_DIRS})

# Import the AscendCL and acllite shared libraries
add_library(libascendcl SHARED IMPORTED)
set_target_properties(libascendcl PROPERTIES IMPORTED_LOCATION /usr/local/Ascend/ascend-toolkit/latest/aarch64-linux/lib64/libascendcl.so)
add_library(libacllite SHARED IMPORTED)
set_target_properties(libacllite PROPERTIES IMPORTED_LOCATION /usr/local/samples/cplusplus/common/acllite/out/aarch64/libacllite.so)

add_executable(untitled10 main.cpp)
# Link OpenCV, AscendCL and acllite
target_link_libraries(untitled10 ${OpenCV_LIBS} libascendcl libacllite)
```

C++ code:

#include <opencv2/opencv.hpp>

#include "AclLiteUtils.h"

#include "AclLiteImageProc.h"

#include "AclLiteResource.h"

#include "AclLiteError.h"

#include "AclLiteModel.h"using namespace std;

using namespace cv;

typedef enum Result {SUCCESS = 0,FAILED = 1

} Result;struct Keypoints {float x;float y;float score;Keypoints() : x(0), y(0), score(0) {}Keypoints(float x, float y, float score) : x(x), y(y), score(score) {}

};struct Box {float center_x;float center_y;float scale_x;float scale_y;float scale_prob;float score;Box() : center_x(0), center_y(0), scale_x(0), scale_y(0), scale_prob(0), score(0) {}Box(float center_x, float center_y, float scale_x, float scale_y, float scale_prob, float score) :center_x(center_x), center_y(center_y), scale_x(scale_x), scale_y(scale_y), scale_prob(scale_prob),score(score) {}

};void bbox_xywh2cs(float bbox[], float aspect_ratio, float padding, float pixel_std, float *center, float *scale) {float x = bbox[0];float y = bbox[1];float w = bbox[2];float h = bbox[3];*center = x + w * 0.5;*(center + 1) = y + h * 0.5;if (w > aspect_ratio * h)h = w * 1.0 / aspect_ratio;else if (w < aspect_ratio * h)w = h * aspect_ratio;*scale = (w / pixel_std) * padding;*(scale + 1) = (h / pixel_std) * padding;

}void rotate_point(float *pt, float angle_rad, float *rotated_pt) {float sn = sin(angle_rad);float cs = cos(angle_rad);float new_x = pt[0] * cs - pt[1] * sn;float new_y = pt[0] * sn + pt[1] * cs;rotated_pt[0] = new_x;rotated_pt[1] = new_y;}void _get_3rd_point(cv::Point2f a, cv::Point2f b, float *direction) {float direction_0 = a.x - b.x;float direction_1 = a.y - b.y;direction[0] = b.x - direction_1;direction[1] = b.y + direction_0;}void get_affine_transform(float *center, float *scale, float rot, float *output_size, float *shift, bool inv,cv::Mat &trans) {float scale_tmp[] = {0, 0};scale_tmp[0] = scale[0] * 200.0;scale_tmp[1] = scale[1] * 200.0;float src_w = scale_tmp[0];float dst_w = output_size[0];float dst_h = output_size[1];float rot_rad = M_PI * rot / 180;float pt[] = {0, 0};pt[0] = 0;pt[1] = src_w * (-0.5);float src_dir[] = {0, 0};rotate_point(pt, rot_rad, src_dir);float dst_dir[] = {0, 0};dst_dir[0] = 0;dst_dir[1] = dst_w * (-0.5);cv::Point2f src[3] = {cv::Point2f(0, 0), cv::Point2f(0, 0), cv::Point2f(0, 0)};src[0] = cv::Point2f(center[0] + scale_tmp[0] * shift[0], center[1] + scale_tmp[1] * shift[1]);src[1] = cv::Point2f(center[0] + src_dir[0] + scale_tmp[0] * shift[0],center[1] + src_dir[1] + scale_tmp[1] * shift[1]);float direction_src[] = {0, 0};_get_3rd_point(src[0], src[1], direction_src);src[2] = cv::Point2f(direction_src[0], direction_src[1]);cv::Point2f dst[3] = {cv::Point2f(0, 0), cv::Point2f(0, 0), cv::Point2f(0, 0)};dst[0] = cv::Point2f(dst_w * 0.5, dst_h * 0.5);dst[1] = cv::Point2f(dst_w * 0.5 + dst_dir[0], dst_h * 0.5 + dst_dir[1]);float direction_dst[] = {0, 0};_get_3rd_point(dst[0], dst[1], direction_dst);dst[2] = cv::Point2f(direction_dst[0], direction_dst[1]);if (inv) {trans = cv::getAffineTransform(dst, src);} else {trans = cv::getAffineTransform(src, dst);}}void

transform_preds(std::vector <cv::Point2f> coords, std::vector <Keypoints> &target_coords, float *center, float *scale,int w, int h, bool use_udp = false) {float scale_x[] = {0, 0};float temp_scale[] = {scale[0] * 200, scale[1] * 200};if (use_udp) {scale_x[0] = temp_scale[0] / (w - 1);scale_x[1] = temp_scale[1] / (h - 1);} else {scale_x[0] = temp_scale[0] / w;scale_x[1] = temp_scale[1] / h;}for (int i = 0; i < coords.size(); i++) {target_coords[i].x = coords[i].x * scale_x[0] + center[0] - temp_scale[0] * 0.5;target_coords[i].y = coords[i].y * scale_x[1] + center[1] - temp_scale[1] * 0.5;}}int main() {const char *modelPath = "../end2end.om";bool flip_test = true;bool heap_map = false;float keypoint_score = 0.3f;cv::Mat bgr = cv::imread("../ca110.jpeg");cv::Mat rgb;cv::cvtColor(bgr, rgb, cv::COLOR_BGR2RGB);float image_target_w = 256;float image_target_h = 256;float padding = 1.25;float pixel_std = 200;float aspect_ratio = image_target_h / image_target_w;float bbox[] = {13.711652, 26.188112, 293.61298, 227.78246, 9.995332e-01};// 需要检测框架 这个矩形框来自检测框架的坐标 x y w h scorebbox[2] = bbox[2] - bbox[0];bbox[3] = bbox[3] - bbox[1];float center[2] = {0, 0};float scale[2] = {0, 0};bbox_xywh2cs(bbox, aspect_ratio, padding, pixel_std, center, scale);float rot = 0;float shift[] = {0, 0};bool inv = false;float output_size[] = {image_target_h, image_target_w};cv::Mat trans;get_affine_transform(center, scale, rot, output_size, shift, inv, trans);std::cout << trans << std::endl;std::cout << center[0] << " " << center[1] << " " << scale[0] << " " << scale[1] << std::endl;cv::Mat detect_image;//= cv::Mat::zeros(image_target_w ,image_target_h, CV_8UC3);cv::warpAffine(rgb, detect_image, trans, cv::Size(image_target_h, image_target_w), cv::INTER_LINEAR);//cv::imwrite("te.jpg",detect_image);std::cout << detect_image.cols << " " << detect_image.rows << std::endl;// inferencebool release = false;//SampleYOLOV7 sampleYOLO(modelPath, target_width, target_height);float *imageBytes;AclLiteResource 
aclResource_;AclLiteImageProc imageProcess_;AclLiteModel model_;aclrtRunMode runMode_;ImageData resizedImage_;const char *modelPath_;int32_t modelWidth_;int32_t modelHeight_;AclLiteError ret = aclResource_.Init();if (ret == FAILED) {ACLLITE_LOG_ERROR("resource init failed, errorCode is %d", ret);return FAILED;}ret = aclrtGetRunMode(&runMode_);if (ret == FAILED) {ACLLITE_LOG_ERROR("get runMode failed, errorCode is %d", ret);return FAILED;}// init dvpp resourceret = imageProcess_.Init();if (ret == FAILED) {ACLLITE_LOG_ERROR("imageProcess init failed, errorCode is %d", ret);return FAILED;}// load model from fileret = model_.Init(modelPath);if (ret == FAILED) {ACLLITE_LOG_ERROR("model init failed, errorCode is %d", ret);return FAILED;}// data standardizationfloat meanRgb[3] = {0, 0, 0};float stdRgb[3] = {1 / 255.0f, 1 / 255.0f, 1 / 255.0f};// create malloc of image, which is shape with NCHW//const float meanRgb[3] = {0.485f * 255.f, 0.456f * 255.f, 0.406f * 255.f};//const float stdRgb[3] = {(1 / 0.229f / 255.f), (1 / 0.224f / 255.f), (1 / 0.225f / 255.f)};int32_t channel = detect_image.channels();int32_t resizeHeight = detect_image.rows;int32_t resizeWeight = detect_image.cols;imageBytes = (float *) malloc(channel * image_target_w * image_target_h * sizeof(float));memset(imageBytes, 0, channel * image_target_h * image_target_w * sizeof(float));// image to bytes with shape HWC to CHW, and switch channel BGR to RGBfor (int c = 0; c < channel; ++c) {for (int h = 0; h < resizeHeight; ++h) {for (int w = 0; w < resizeWeight; ++w) {int dstIdx = c * resizeHeight * resizeWeight + h * resizeWeight + w;imageBytes[dstIdx] = static_cast<float>((detect_image.at<cv::Vec3b>(h, w)[c] -1.0f * meanRgb[c]) * 1.0f * stdRgb[c] );}}}std::vector <InferenceOutput> inferOutputs;ret = model_.CreateInput(static_cast<void *>(imageBytes),channel * image_target_w * image_target_h * sizeof(float));if (ret == FAILED) {ACLLITE_LOG_ERROR("CreateInput failed, errorCode is %d", ret);return FAILED;}// 
inferenceret = model_.Execute(inferOutputs);if (ret != ACL_SUCCESS) {ACLLITE_LOG_ERROR("execute model failed, errorCode is %d", ret);return FAILED;}// for()float *data = static_cast<float *>(inferOutputs[0].data.get());//输出维度int shape_d =1;int shape_c = 20;int shape_w = 64;int shape_h = 64;std::vector<float> vec_heap;for (int i = 0; i < shape_c * shape_h * shape_w; i++) {vec_heap.push_back(data[i]);}std::vector <Keypoints> all_preds;std::vector<int> idx;for (int i = 0; i < shape_c; i++) {auto begin = vec_heap.begin() + i * shape_w * shape_h;auto end = vec_heap.begin() + (i + 1) * shape_w * shape_h;float maxValue = *max_element(begin, end);int maxPosition = max_element(begin, end) - begin;all_preds.emplace_back(Keypoints(0, 0, maxValue));idx.emplace_back(maxPosition);}std::vector <cv::Point2f> vec_point;for (int i = 0; i < idx.size(); i++) {int x = idx[i] % shape_w;int y = idx[i] / shape_w;vec_point.emplace_back(cv::Point2f(x, y));}for (int i = 0; i < shape_c; i++) {int px = vec_point[i].x;int py = vec_point[i].y;if (px > 1 && px < shape_w - 1 && py > 1 && py < shape_h - 1) {float diff_0 = vec_heap[py * shape_w + px + 1] - vec_heap[py * shape_w + px - 1];float diff_1 = vec_heap[(py + 1) * shape_w + px] - vec_heap[(py - 1) * shape_w + px];vec_point[i].x += diff_0 == 0 ? 0 : (diff_0 > 0) ? 0.25 : -0.25;vec_point[i].y += diff_1 == 0 ? 0 : (diff_1 > 0) ? 
0.25 : -0.25;}}std::vector <Box> all_boxes;if (heap_map) {all_boxes.emplace_back(Box(center[0], center[1], scale[0], scale[1], scale[0] * scale[1] * 400, bbox[4]));}transform_preds(vec_point, all_preds, center, scale, shape_w, shape_h);//0 L_Eye 1 R_Eye 2 L_EarBase 3 R_EarBase 4 Nose 5 Throat 6 TailBase 7 Withers 8 L_F_Elbow 9 R_F_Elbow 10 L_B_Elbow 11 R_B_Elbow// 12 L_F_Knee 13 R_F_Knee 14 L_B_Knee 15 R_B_Knee 16 L_F_Paw 17 R_F_Paw 18 L_B_Paw 19 R_B_Pawint skeleton[][2] = {{0, 1},{0, 2},{1, 3},{0, 4},{1, 4},{4, 5},{5, 7},{5, 8},{5, 9},{6, 7},{6, 10},{6, 11},{8, 12},{9, 13},{10, 14},{11, 15},{12, 16},{13, 17},{14, 18},{15, 19}};cv::rectangle(bgr, cv::Point(bbox[0], bbox[1]), cv::Point(bbox[0] + bbox[2], bbox[1] + bbox[3]),cv::Scalar(255, 0, 0));for (int i = 0; i < all_preds.size(); i++) {if (all_preds[i].score > keypoint_score) {cv::circle(bgr, cv::Point(all_preds[i].x, all_preds[i].y), 3, cv::Scalar(0, 255, 120), -1);//画点,其实就是实心圆}}for (int i = 0; i < sizeof(skeleton) / sizeof(sizeof(skeleton[1])); i++) {int x0 = all_preds[skeleton[i][0]].x;int y0 = all_preds[skeleton[i][0]].y;int x1 = all_preds[skeleton[i][1]].x;int y1 = all_preds[skeleton[i][1]].y;cv::line(bgr, cv::Point(x0, y0), cv::Point(x1, y1),cv::Scalar(0, 255, 0), 1);}cv::imwrite("../image.jpg", bgr);model_.DestroyResource();imageProcess_.DestroyResource();aclResource_.Release();return SUCCESS;

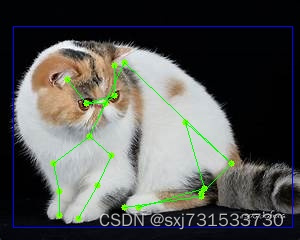

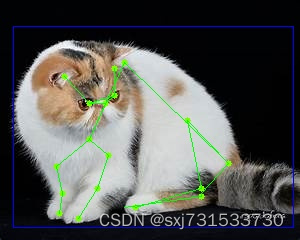

}测试结果

```text
/root/sxj731533730/cmake-build-debug/untitled10
[0.7316863791031282, -0, 15.56737128098375;-4.62405306581973e-17, 0.7316863791031282, 35.08659815701316]
153.662 126.985 1.74938 1.74938
256 256
[INFO] Acl init ok
[INFO] Open device 0 ok
[INFO] Use default context currently
[INFO] dvpp init resource ok
[INFO] Load model ../end2end.om success
[INFO] Create model description success
[INFO] Create model(../end2end.om) output success
[INFO] Init model ../end2end.om success
[INFO] Unload model ../end2end.om success
[INFO] destroy context ok
[INFO] Reset device 0 ok
[INFO] Finalize acl ok

Process finished with exit code 0
```
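The first lines of this output can be checked by hand. With `rot=0` and `shift=(0, 0)`, `get_affine_transform` reduces to a uniform zoom `k = output_w / (scale[0] * 200)` (it derives the source size from `scale[0]` only) plus a translation that moves the box center to the center of the 256×256 input. The NumPy sketch below recomputes center, scale and the matrix from the detector box hard-coded in both programs; `closed_form_affine` is a hypothetical helper that is only valid for this zero-rotation, zero-shift case.

```python
import numpy as np


def bbox_xywh2cs(bbox, aspect_ratio, padding=1.25, pixel_std=200.0):
    # Same arithmetic as the bbox_xywh2cs used in this post: pad the box to the model aspect ratio.
    x, y, w, h = bbox[:4]
    center = np.array([x + w * 0.5, y + h * 0.5])
    if w > aspect_ratio * h:
        h = w / aspect_ratio
    elif w < aspect_ratio * h:
        w = h * aspect_ratio
    scale = np.array([w, h]) / pixel_std * padding
    return center, scale


def closed_form_affine(center, scale, output_size=(256, 256), pixel_std=200.0):
    """Hypothetical helper: the 2x3 matrix get_affine_transform returns for rot=0, shift=(0, 0)."""
    out_w, out_h = output_size
    k = out_w / (scale[0] * pixel_std)  # uniform zoom factor for both axes
    return np.array([
        [k, 0.0, out_w * 0.5 - k * center[0]],
        [0.0, k, out_h * 0.5 - k * center[1]],
    ])


# Detector box from the code above: (x1, y1, x2, y2) converted to (x, y, w, h).
x1, y1, x2, y2 = 13.711652, 26.188112, 293.61298, 227.78246
center, scale = bbox_xywh2cs([x1, y1, x2 - x1, y2 - y1], aspect_ratio=1.0)
trans = closed_form_affine(center, scale)
print(center, scale)
print(trans)
```

Because the box is wider than it is tall, the height is padded up to the width, so both scale entries are equal and the crop is an isotropic zoom of about 0.7317.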

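References (my own earlier posts):

48. Converting mmpose's HRNet keypoint-detection model to ncnn and MNN, with training and deployment (sxj731533730's blog, CSDN)

61. First tests on the Huawei Ascend Atlas 200I DK A2: Python/C++ inference with yolov7 at batch size 1 and batch size 3 (sxj731533730's blog, CSDN)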

相关文章:

62、华为昇腾开发板Atlas 200I DK A2配置mmpose的hrnet模型推理python/c++

基本思想:适配mmpose模型,记录一下流水帐,环境配置和模型来自,请查看参考链接。 链接: https://pan.baidu.com/s/1IkiwuZf1anyKX1sZkYmD1g?pwdi51s 提取码: i51s 一、转模型 (base) rootdavinci-mini:~/sxj731533730# atc --mo…...

【数据结构】双链表

大家好!今天我们来学习数据结构中的双链表。(我们这里讲解的是带头(哨兵位)双向循环链表哦~) 目录 1.双链表的概念 2. 双链表的逻辑结构 3. 双链表的定义 4. 双链表的接口实现 4.1 动态申请一个新结点 4.2 双链表…...

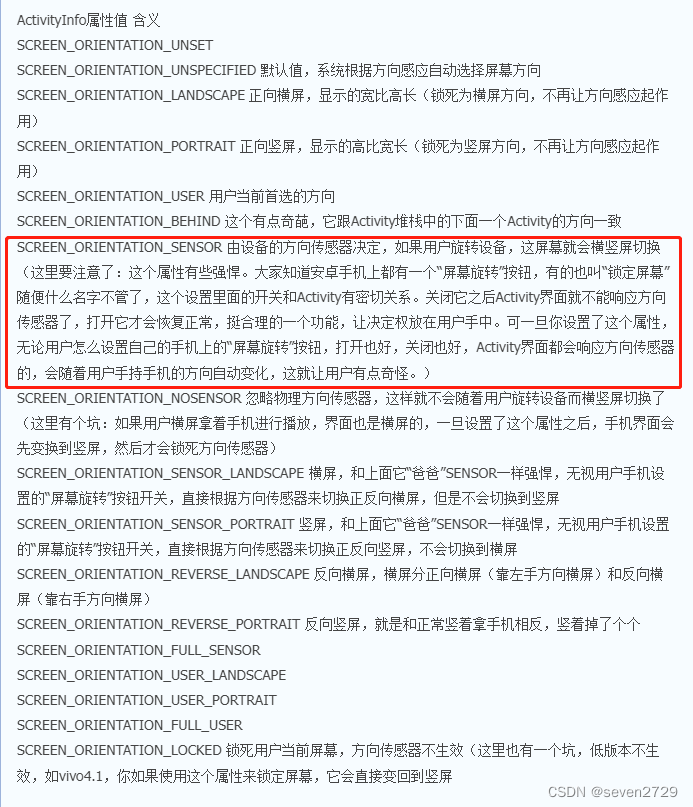

android设置竖屏仍然跟随屏幕旋转怎么办

如题所问,我最近遇到一个bug,就是设置了摇感,然后有用户反馈说设置了手机下拉的系统设置-屏幕旋转-关闭。然后屏幕还是会旋转的问题。 首先,我们先从如何设置横竖屏了解下好了 设置横屏和竖屏的方法: 方法一&#x…...

java spring cloud 企业电子招标采购系统源码:营造全面规范安全的电子招投标环境,促进招投标市场健康可持续发展 tbms

项目说明 随着公司的快速发展,企业人员和经营规模不断壮大,公司对内部招采管理的提升提出了更高的要求。在企业里建立一个公平、公开、公正的采购环境,最大限度控制采购成本至关重要。符合国家电子招投标法律法规及相关规范,以…...

【Java】2021 RoboCom 机器人开发者大赛-高职组(初赛)题解

7-1 机器人打招呼 机器人小白要来 RoboCom 参赛了,在赛场中遇到人要打个招呼。请你帮它设置好打招呼的这句话:“ni ye lai can jia RoboCom a?”。 输入格式: 本题没有输入。 输出格式: 在一行中输出 ni ye lai can jia Robo…...

汽车制造业上下游协作时 外发数据如何防泄露?

数据文件是制造业企业的核心竞争力,一旦发生数据外泄,就会给企业造成经济损失,严重的,可能会带来知识产权剽窃损害、名誉伤害等。汽车制造业,会涉及到重要的汽车设计图纸,像小米发送汽车设计图纸外泄事件并…...

H13-922题库 HCIP-GaussDB-OLAP V1.5

**H13-922 V1.5 GaussDB(DWS) OLAP题库 华为认证GaussDB OLAP数据库高级工程师HCIP-GaussDB-OLAP V1.0自2019年10月18日起,正式在中国区发布。当前版本V1.5 考试前提: 掌握基本的数据库基础知识、掌握数据仓库运维的基础知识、掌握基本Linux运维知识、…...

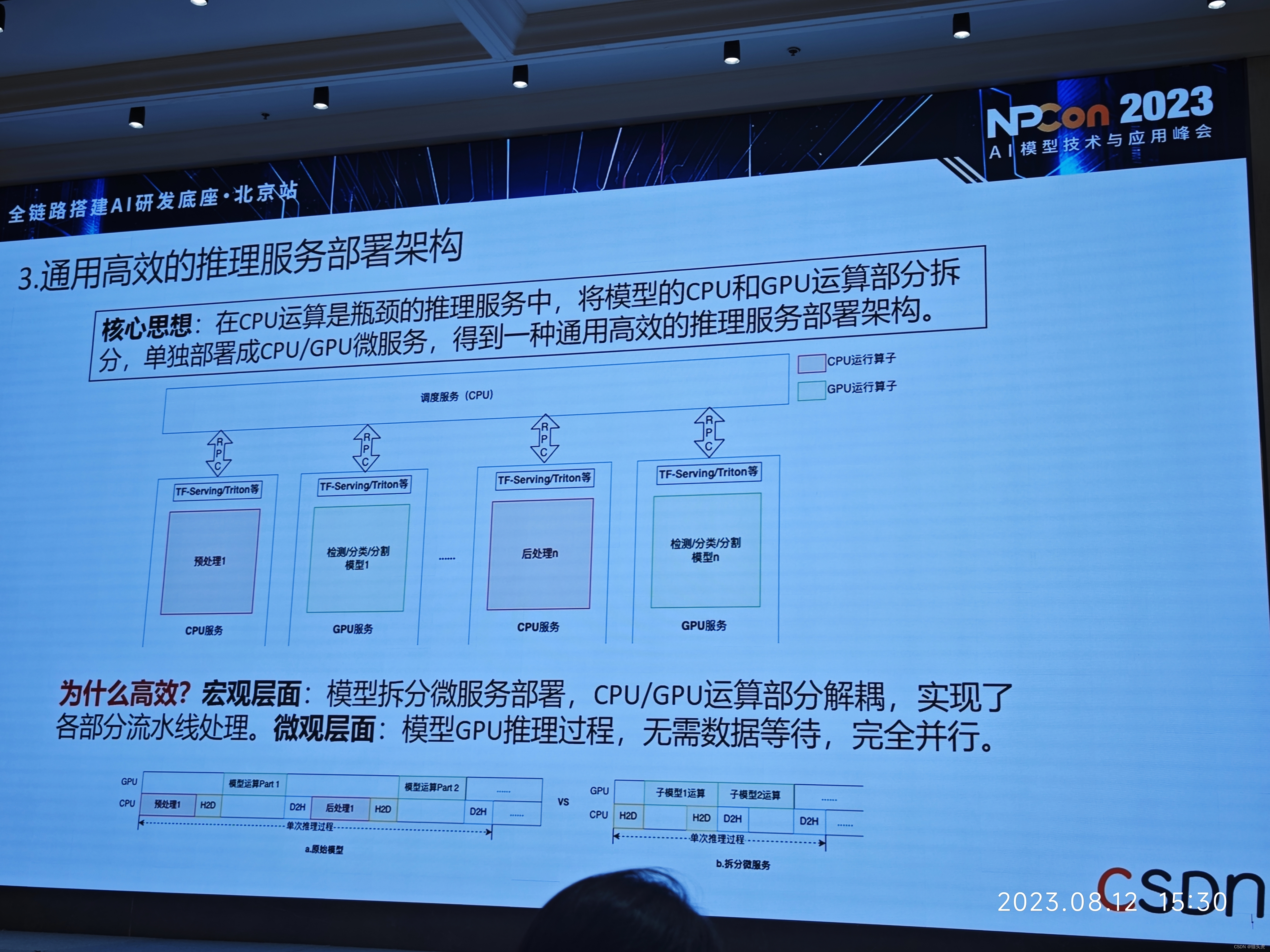

美团视觉GPU推理服务部署架构优化实战

🌷🍁 博主 libin9iOak带您 Go to New World.✨🍁 🦄 个人主页——libin9iOak的博客🎐 🐳 《面试题大全》 文章图文并茂🦕生动形象🦖简单易学!欢迎大家来踩踩~ἳ…...

什么是前端框架?怎么学习? - 易智编译EaseEditing

前端框架是一种用于开发Web应用程序界面的工具集合,它提供了一系列预定义的代码和结构,以简化开发过程并提高效率。 前端框架通常包括HTML、CSS和JavaScript的库和工具,用于构建交互式、动态和响应式的用户界面。 学习前端框架可以让您更高效…...

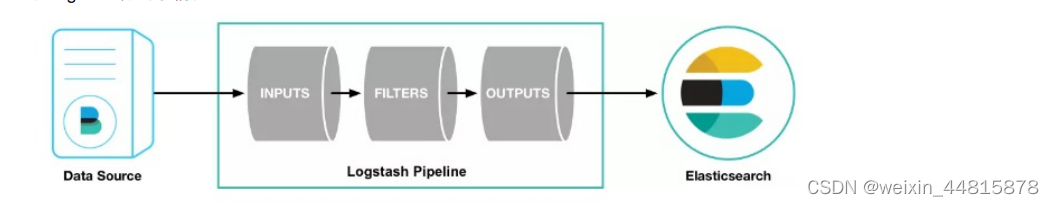

logstash 原理(含部署)

1、ES原理 原理 使⽤filebeat来上传⽇志数据,logstash进⾏⽇志收集与处理,elasticsearch作为⽇志存储与搜索引擎,最后使⽤kibana展现⽇志的可视化输出。所以不难发现,⽇志解析主要还 是logstash做的事情 从上图中可以看到&#x…...

CSS中的position属性有哪些值,并分别描述它们的作用。

聚沙成塔每天进步一点点 ⭐ 专栏简介⭐ static⭐ relative⭐ absolute⭐ fixed⭐ sticky⭐ 写在最后 ⭐ 专栏简介 前端入门之旅:探索Web开发的奇妙世界 记得点击上方或者右侧链接订阅本专栏哦 几何带你启航前端之旅 欢迎来到前端入门之旅!这个专栏是为那…...

视频联网报警厂家怎么找?

视频联网报警厂家怎么找?要找到联网报警设备厂家,可以按照以下步骤进行: 1. 在互联网上搜索:可以使用搜索引擎,如谷歌或百度,搜索关键词,如“联网报警设备厂家”、“安防设备厂家”等ÿ…...

配置文件优先级解读

目录 概述 同级目录application配置文件优先级 application 以及bootstrap 优先级 不同级目录配置文件优先级 外部配置加载顺序 概述 SpringBoot除了支持properties格式的配置文件,还支持另外两种格式的配置文件。三种配置文件格式分别如下: properties格式…...

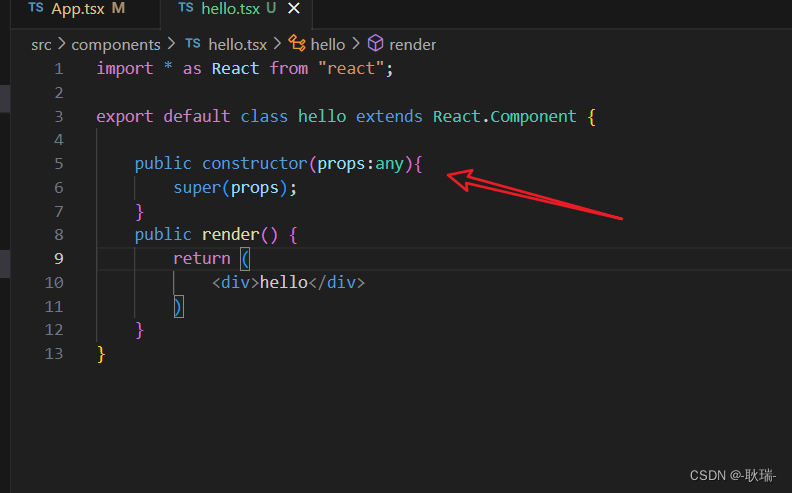

在 React+Typescript 项目环境中创建并使用组件

上文 ReactTypescript清理项目环境 我们将自己创建的项目环境 好好清理了一下 下面 我们来看组件的创建 组件化在这种数据响应式开发中肯定是非常重要的。 我们现在src下创建一个文件夹 叫 components 就用他专门来处理组件业务 然后 我们在下面创建一个 hello.tsx 注意 是t…...

UNIAPP中开发企业微信小程序

概述 需求为使用uni-app开发企业微信小程序。希望可以借助现成的uni-app框架,快速开发。遇到的问题是uni-app引入jweixin-1.2.0.js提示异常: Reason: TypeError: Cannot read properties of undefined (reading ‘title’)。本文中描述了如何解决该问题,…...

NGINX负载均衡及LVS-DR负载均衡集群

目录 LVS-DR原理搭建过程nginx 负载均衡 LVS-DR原理 原理: 1. 当用户向负载均衡调度器(Director Server)发起请求,调度器将请求发往至内核空间 2. PREROUTING链首先会接收到用户请求,判断目标IP确定是本机IPÿ…...

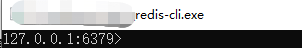

由于目标计算机积极拒绝,无法连接。 Could not connect to Redis at 127.0.0.1:6379

项目在启动时候报出redis连接异常 然后查看是redis 连接被计算机拒绝 解决方法 打开redis安装文件夹 先打开redis-servce.exe挂着,再打开redis-cli.exe 也不会弹出被拒接的问题了。而且此方法不用每次都去cmd里输入命令。...

电脑提示数据错误循环冗余检查怎么办?

有些时候,我们尝试在磁盘上创建分区或清理硬盘时,还可能会遇到这个问题:数据错误循环冗余检查。这是如何导致的呢?我们又该如何解决这个问题呢?下面我们就来了解一下。 导致冗余检查错误的原因有哪些? 数据…...

剑指offer62.圆圈中最后剩下的数字

这道题在算法课上的一个小故事上有一个类似的,就是一个军官打了败仗,带着他的几个兵逃到一个山洞,他们不想当俘虏想自杀,但是军官不想自杀但是又不好意思走,于是军官想了个办法,他们几个人围成一个圈&#…...

Python分享之 Spider

一、网络爬虫 网络爬虫又被称为网络蜘蛛,我们可以把互联网想象成一个蜘蛛网,每一个网站都是一个节点,我们可以使用一只蜘蛛去各个网页抓取我们想要的资源。举一个最简单的例子,你在百度和谷歌中输入‘Python,会有大量和…...

Lombok 的 @Data 注解失效,未生成 getter/setter 方法引发的HTTP 406 错误

HTTP 状态码 406 (Not Acceptable) 和 500 (Internal Server Error) 是两类完全不同的错误,它们的含义、原因和解决方法都有显著区别。以下是详细对比: 1. HTTP 406 (Not Acceptable) 含义: 客户端请求的内容类型与服务器支持的内容类型不匹…...

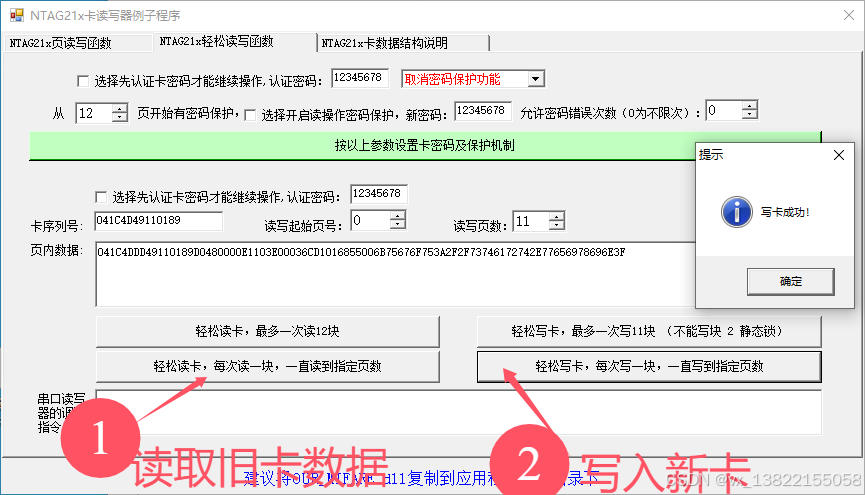

VB.net复制Ntag213卡写入UID

本示例使用的发卡器:https://item.taobao.com/item.htm?ftt&id615391857885 一、读取旧Ntag卡的UID和数据 Private Sub Button15_Click(sender As Object, e As EventArgs) Handles Button15.Click轻松读卡技术支持:网站:Dim i, j As IntegerDim cardidhex, …...

8k长序列建模,蛋白质语言模型Prot42仅利用目标蛋白序列即可生成高亲和力结合剂

蛋白质结合剂(如抗体、抑制肽)在疾病诊断、成像分析及靶向药物递送等关键场景中发挥着不可替代的作用。传统上,高特异性蛋白质结合剂的开发高度依赖噬菌体展示、定向进化等实验技术,但这类方法普遍面临资源消耗巨大、研发周期冗长…...

STM32+rt-thread判断是否联网

一、根据NETDEV_FLAG_INTERNET_UP位判断 static bool is_conncected(void) {struct netdev *dev RT_NULL;dev netdev_get_first_by_flags(NETDEV_FLAG_INTERNET_UP);if (dev RT_NULL){printf("wait netdev internet up...");return false;}else{printf("loc…...

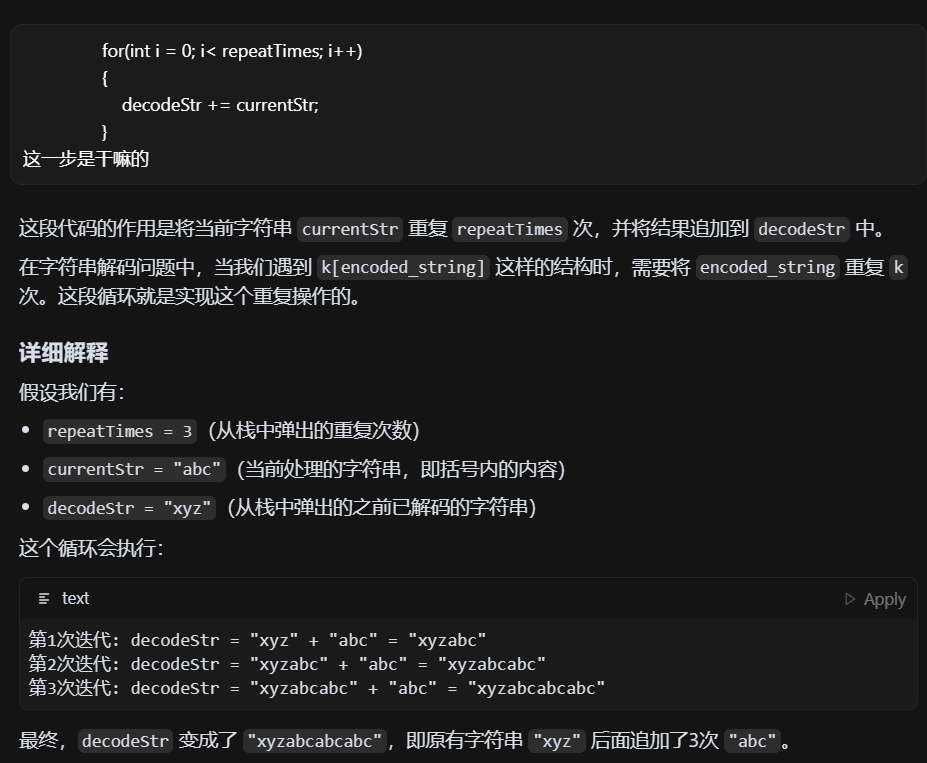

LeetCode - 394. 字符串解码

题目 394. 字符串解码 - 力扣(LeetCode) 思路 使用两个栈:一个存储重复次数,一个存储字符串 遍历输入字符串: 数字处理:遇到数字时,累积计算重复次数左括号处理:保存当前状态&a…...

Java 二维码

Java 二维码 **技术:**谷歌 ZXing 实现 首先添加依赖 <!-- 二维码依赖 --><dependency><groupId>com.google.zxing</groupId><artifactId>core</artifactId><version>3.5.1</version></dependency><de…...

)

【LeetCode】3309. 连接二进制表示可形成的最大数值(递归|回溯|位运算)

LeetCode 3309. 连接二进制表示可形成的最大数值(中等) 题目描述解题思路Java代码 题目描述 题目链接:LeetCode 3309. 连接二进制表示可形成的最大数值(中等) 给你一个长度为 3 的整数数组 nums。 现以某种顺序 连接…...

关于uniapp展示PDF的解决方案

在 UniApp 的 H5 环境中使用 pdf-vue3 组件可以实现完整的 PDF 预览功能。以下是详细实现步骤和注意事项: 一、安装依赖 安装 pdf-vue3 和 PDF.js 核心库: npm install pdf-vue3 pdfjs-dist二、基本使用示例 <template><view class"con…...

WebRTC从入门到实践 - 零基础教程

WebRTC从入门到实践 - 零基础教程 目录 WebRTC简介 基础概念 工作原理 开发环境搭建 基础实践 三个实战案例 常见问题解答 1. WebRTC简介 1.1 什么是WebRTC? WebRTC(Web Real-Time Communication)是一个支持网页浏览器进行实时语音…...

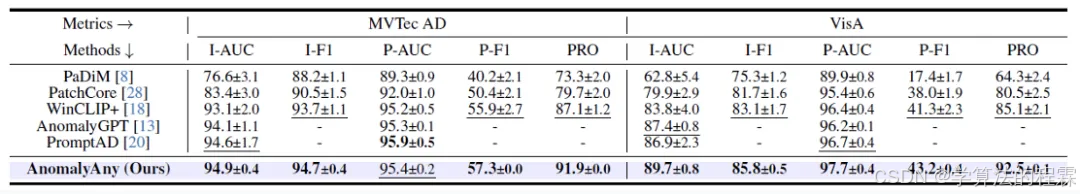

CVPR2025重磅突破:AnomalyAny框架实现单样本生成逼真异常数据,破解视觉检测瓶颈!

本文介绍了一种名为AnomalyAny的创新框架,该方法利用Stable Diffusion的强大生成能力,仅需单个正常样本和文本描述,即可生成逼真且多样化的异常样本,有效解决了视觉异常检测中异常样本稀缺的难题,为工业质检、医疗影像…...