open clip paper: reading notes

A look at the open clip paper:

Learning Transferable Visual Models From Natural Language Supervision

These results suggest that the aggregate supervision accessible to modern pre-training methods within web-scale collections of text surpasses that of high-quality crowd-labeled NLP datasets.

CNNs trained to predict words in image captions learn useful image representations

learn image representations from text

I'm curious: how was it evaluated on OCR?

How are CLIP's training samples prepared? For 400 million (image, text) pairs, how do you even assemble a dataset of that size?

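The paper says that with this kind of pre-training, zero-shot CLIP can rival models built with supervised learning. I'd put a question mark on that: on domain-specific business data it doesn't seem to be enough.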

Learning from natural language has several potential strengths over other training methods. It’s much easier to scale natural language supervision compared to standard crowd-sourced labeling for image classification since it does not require annotations to be in a classic “machine learning compatible format” such as the canonical 1-of-N majority vote “gold label”

While MS-COCO and Visual Genome are high quality crowd-labeled datasets, they are small by modern standards, with approximately 100,000 training photos each.

YFCC100M, at 100 million photos, is a possible alternative, but the metadata for each image is sparse and of varying quality

Many images use automatically generated filenames like 20160716_113957.JPG as “titles” or contain “descriptions” of camera exposure settings. After filtering to keep only images with natural language titles and/or descriptions in English, the dataset shrunk by a factor of 6 to only 15 million photos. This is approximately the same size as ImageNet.
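The paper does not publish its filtering rules, so the following is only a minimal sketch of the kind of heuristic that could flag camera-generated filenames like the example quoted above. The regex, the `min_words` threshold, and the function name `looks_like_natural_title` are assumptions made for illustration, and counting alphabetic words is a crude stand-in for a real English-language check.

```python
import re

# Hypothetical pattern (not the paper's filter) for camera-style filenames such
# as "20160716_113957.JPG", "IMG_0042.jpg" or "DSCN0001.JPG".
AUTO_FILENAME = re.compile(
    r"^(IMG|DSC|DSCN|PXL)?[_\- ]?\d[\d_\- ]*\.(jpe?g|png|heic)$", re.IGNORECASE
)
# A "word" here is any run of two or more ASCII letters.
WORD = re.compile(r"[A-Za-z]{2,}")


def looks_like_natural_title(title: str, min_words: int = 2) -> bool:
    """Return True if the title looks like natural-language text, not a filename."""
    title = title.strip()
    if not title or AUTO_FILENAME.match(title):
        return False
    # Crude proxy for "natural language in English": require a few alphabetic words.
    return len(WORD.findall(title)) >= min_words
```

Note that this only catches filename-like titles; descriptions of exposure settings (shutter speed, ISO and so on) would need rules of their own.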

A major motivation for natural language supervision is the large quantities of data of this form available publicly on the internet.

we constructed a new dataset of 400 million (image, text) pairs collected from a variety of publicly available sources on the Internet

We approximately class balance the results by including up to 20,000 (image, text) pairs per query. The resulting dataset has a similar total word count as the WebText dataset used to train GPT-2. We refer to this dataset as WIT for WebImageText

Note the emphasis on class balance in the samples.
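One way to read "up to 20,000 (image, text) pairs per query" is as a simple per-query cap applied while collecting search results. The sketch below assumes a hypothetical stream of `(query, (image_url, text))` records; the record layout and the function name `cap_pairs_per_query` are illustrative, not taken from the paper.

```python
from collections import defaultdict
from typing import Iterable, Iterator, Tuple

# Hypothetical record format: (image_url, text).
Pair = Tuple[str, str]


def cap_pairs_per_query(
    records: Iterable[Tuple[str, Pair]], max_per_query: int = 20_000
) -> Iterator[Tuple[str, Pair]]:
    """Yield (query, pair) records, keeping at most max_per_query pairs per query."""
    counts = defaultdict(int)
    for query, pair in records:
        # Frequent queries stop contributing once they hit the cap, which keeps
        # head concepts from dominating the dataset.
        if counts[query] < max_per_query:
            counts[query] += 1
            yield query, pair
```

Because it is a generator, it can filter a very large stream without holding the whole dataset in memory.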

we found training efficiency was key to successfully scaling natural language supervision and we selected our final pre-training method based on this metric

Yes: training on a dataset of this size takes a daunting amount of time, so training efficiency is the key.

Recent work in contrastive representation learning for images has found that contrastive objectives can learn better representations than their equivalent predictive objective.

This finding is interesting: it means we don't need to predict the exact text caption of every image, which is very hard.
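To make the contrastive idea concrete, here is a minimal NumPy sketch of a symmetric contrastive (InfoNCE-style) loss of the kind CLIP uses: within a batch, each image has to pick out its own caption among all captions, and each caption its own image, instead of generating the caption word by word. The fixed `temperature=0.07` is a simplification (CLIP learns this value, starting from 0.07), and the function names are hypothetical.

```python
import numpy as np


def l2_normalize(x: np.ndarray) -> np.ndarray:
    """Scale each row to unit length."""
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def log_softmax(logits: np.ndarray, axis: int) -> np.ndarray:
    """Numerically stable log-softmax along the given axis."""
    shifted = logits - logits.max(axis=axis, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))


def clip_contrastive_loss(image_emb, text_emb, temperature=0.07):
    """Symmetric cross-entropy over an N x N image-text similarity matrix.

    Row i of image_emb and row i of text_emb are a matched pair; every other
    pairing in the batch acts as a negative.
    """
    logits = l2_normalize(image_emb) @ l2_normalize(text_emb).T / temperature
    idx = np.arange(logits.shape[0])
    loss_i2t = -log_softmax(logits, axis=1)[idx, idx].mean()  # image -> text
    loss_t2i = -log_softmax(logits, axis=0)[idx, idx].mean()  # text -> image
    return (loss_i2t + loss_t2i) / 2
```

The model only has to rank the correct pair above the others in the batch, which is exactly the relaxation the note above points out.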

Other work has found that although generative models of images can learn high quality image representations, they require over an order of magnitude more compute than contrastive models with the same performance

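Generative models come up here as well. For representation learning we now have supervised learning (CNNs), contrastive learning (CLIP), and generative models (e.g. Stable Diffusion).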

We train CLIP from scratch without initializing the image encoder with ImageNet weights or the text encoder with pre-trained weights.

With a dataset this large, even larger than ImageNet, there is indeed no need to load pre-trained ImageNet weights or a pre-trained text encoder.

CLIP is pre-trained to predict if an image and a text snippet are paired together in its dataset. To perform zero-shot classification, we reuse this capability. For each dataset, we use the names of all the classes in the dataset as the set of potential text pairings and predict the most probable (image, text) pair according to CLIP. In a bit more detail, we first compute the feature embedding of the image and the feature embedding of the set of possible texts by their respective encoders. The cosine similarity of these embeddings is then calculated, scaled by a temperature parameter τ, and normalized into a probability distribution via a softmax. Note that this prediction layer is a multinomial logistic regression classifier with L2-normalized inputs, L2-normalized weights, no bias, and temperature scaling.
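The prediction layer described above can be sketched in a few lines of NumPy. This assumes the image and class-prompt embeddings have already been produced by the two encoders (toy arrays stand in for them here), and the default `temperature=0.01` is an illustrative value, not one taken from the quote.

```python
import numpy as np


def zero_shot_probs(image_emb, class_text_emb, temperature=0.01):
    """Zero-shot class probabilities from image and class-prompt embeddings.

    image_emb: (n_images, d); class_text_emb: (n_classes, d), one row per
    class prompt such as "A photo of a {label}."
    """
    img = image_emb / np.linalg.norm(image_emb, axis=1, keepdims=True)
    txt = class_text_emb / np.linalg.norm(class_text_emb, axis=1, keepdims=True)
    # Cosine similarities: a linear layer whose weights are the L2-normalized
    # text embeddings, with no bias, scaled by 1 / temperature.
    logits = img @ txt.T / temperature
    logits -= logits.max(axis=1, keepdims=True)  # numerical stability
    probs = np.exp(logits)
    return probs / probs.sum(axis=1, keepdims=True)  # softmax over classes
```

For example, with three classes whose text embeddings are the unit vectors `np.eye(3)`, an image embedding of `[0.9, 0.1, 0.0]` puts almost all of its probability on class 0. Because the text embeddings act as the weight matrix, they can be computed once per dataset and reused for every image.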

Another issue we encountered is that it’s relatively rare in our pre-training dataset for the text paired with the image to be just a single word. Usually the text is a full sentence describing the image in some way. To help bridge this distribution gap, we found that using the prompt template “A photo of a {label}.” to be a good default that helps specify the text is about the content of the image. This often improves performance over the baseline of using only the label text

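The reason for this problem is that the model fails to understand language. GPT-4 can do that now, though, so maybe there will be some kind of breakthrough?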

Similar to the “prompt engineering” discussion around GPT3 (Brown et al., 2020; Gao et al., 2020), we have also observed that zero-shot performance can be significantly improved by customizing the prompt text to each task. A few, non exhaustive, examples follow. We found on several fine-grained image classification datasets that it helped to specify the category. For example on Oxford-IIIT Pets, using “A photo of a {label}, a type of pet.” to help provide context worked well. Likewise, on Food101 specifying a type of food and on FGVC Aircraft a type of aircraft helped too. For OCR datasets, we found that putting quotes around the text or number to be recognized improved performance. Finally, we found that on satellite image classification datasets it helped to specify that the images were of this form and we use variants of “a satellite photo of a {label}.”.

Prompts like these clearly do improve performance.
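The per-dataset prompts quoted above amount to a template lookup with a generic fallback. In this sketch the dataset keys are hypothetical; the default, Pets, and satellite wordings follow the quoted examples, while the Aircraft, Food101, and OCR wordings are assumed paraphrases of "a type of aircraft", "specifying a type of food", and "putting quotes around the text".

```python
# Generic fallback template quoted in the paper.
DEFAULT_TEMPLATE = "A photo of a {label}."

# Hypothetical dataset keys. Pets and satellite wordings follow the quoted
# examples; aircraft, food and OCR wordings are assumed paraphrases.
TEMPLATES = {
    "oxford_pets": "A photo of a {label}, a type of pet.",
    "fgvc_aircraft": "A photo of a {label}, a type of aircraft.",
    "food101": "A photo of {label}, a type of food.",
    "satellite": "A satellite photo of a {label}.",
    "ocr": 'A photo of the text "{label}".',
}


def build_prompts(dataset: str, labels: list[str]) -> list[str]:
    """Fill the dataset's template (or the default) with each class label."""
    template = TEMPLATES.get(dataset, DEFAULT_TEMPLATE)
    return [template.format(label=label) for label in labels]
```

For example, `build_prompts("oxford_pets", ["beagle"])` returns `["A photo of a beagle, a type of pet."]`, and any unknown dataset falls back to "A photo of a {label}.".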

We also experimented with ensembling over multiple zero-shot classifiers as another way of improving performance. These classifiers are computed by using different context prompts such as “A photo of a big {label}” and “A photo of a small {label}”. We construct the ensemble over the embedding space instead of probability space. This allows us to cache a single set of averaged text embeddings so that the compute cost of the ensemble is the same as using a single classifier when amortized over many predictions.

Here ensembling is used to improve performance.
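Ensembling "over the embedding space" can be sketched as averaging each class's text embedding across prompt templates and caching the result. Normalizing each embedding before averaging and re-normalizing afterwards is an assumption on our part (a common choice), and the array layout and function name are hypothetical.

```python
import numpy as np


def ensemble_class_embeddings(embeddings_per_prompt: np.ndarray) -> np.ndarray:
    """Average per-class text embeddings across prompt templates.

    embeddings_per_prompt: (n_prompts, n_classes, d), e.g. encodings of
    "A photo of a big {label}" and "A photo of a small {label}" for each class.
    Returns (n_classes, d) unit vectors that can be cached and reused.
    """
    # Normalize each prompt embedding so no single template dominates the mean.
    e = embeddings_per_prompt / np.linalg.norm(
        embeddings_per_prompt, axis=-1, keepdims=True
    )
    mean = e.mean(axis=0)
    # Re-normalize so the result is again a valid cosine-similarity weight.
    return mean / np.linalg.norm(mean, axis=-1, keepdims=True)
```

The cached `(n_classes, d)` matrix then replaces the single-prompt class embeddings, so classifying each image costs one matrix product regardless of how many prompts went into the ensemble.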

Put plainly, where it beats supervised learning:

1. More data

2. More kinds of tasks

3. Text adds semantic information, not just spatial information

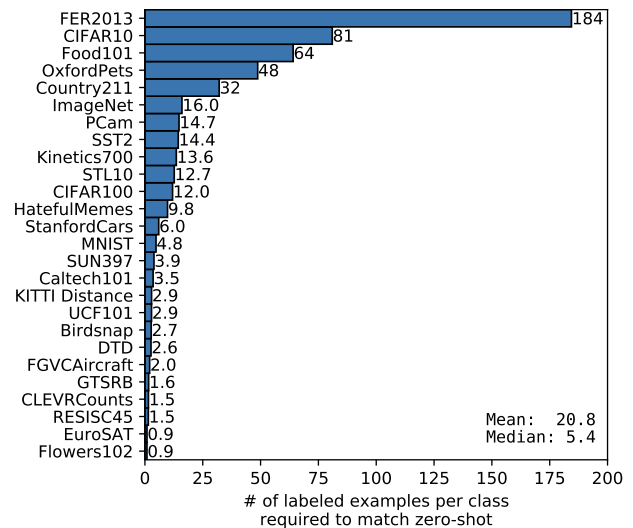

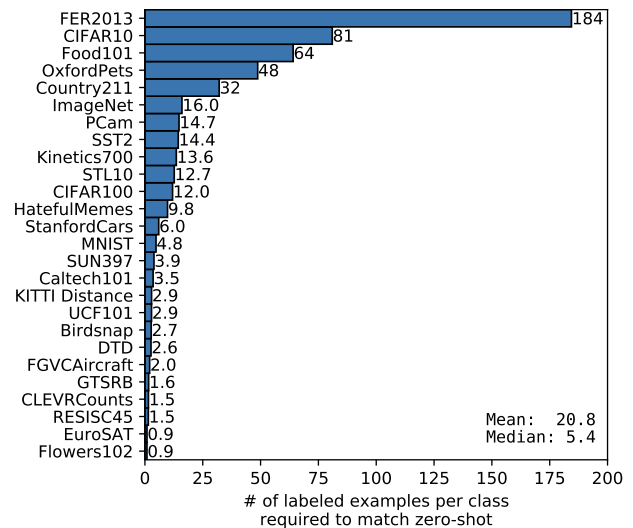

we see that zero-shot CLIP is quite weak on several specialized, complex, or abstract tasks such as satellite image classification (EuroSAT and RESISC45), lymph node tumor detection (PatchCamelyon), counting objects in synthetic scenes (CLEVRCounts), self-driving related tasks such as German traffic sign recognition (GTSRB), recognizing distance to the nearest car (KITTI Distance). These results highlight the poor capability of zero-shot CLIP on more complex tasks.

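Doesn't this resemble GPT-4 a bit? It generalizes well but can't do everything, especially complex tasks. My feeling is that it's still a data problem, though it may also be that current model architectures can't handle complex tasks, so those tasks need to be broken down into simpler subtasks. Still, there's no denying that it can speed up labeling of business data.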

First, CLIP’s zero-shot classifier is generated via natural language which allows for visual concepts to be directly specified (“communicated”). By contrast, “normal” supervised learning must infer concepts indirectly from training examples. Context-less example-based learning has the drawback that many different hypotheses can be consistent with the data, especially in the one-shot case. A single image often contains many different visual concepts. Although a capable learner is able to exploit visual cues and heuristics, such as assuming that the concept being demonstrated is the primary object in an image, there is no guarantee...

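Right. The main difficulty with few-shot learning is that you don't know which features the model has linked to the final label, so you need more samples before the model reliably finds the right path.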

Zero-shot relies mainly on pre-training over a large amount of data, so it is still different from few-shot.

I'm fairly optimistic about large models pre-trained on big data.

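Isn't there a problem with this evaluation, actually? How do you judge stability? These are only results on the test sets that were built, not an evaluation over large amounts of data, and even less an analysis in real business scenarios. I think more data per class is not a bad thing: it strengthens the mapping from features to labels and, in particular, helps the model capture the right features.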

If we assume that evaluation datasets are large enough that the parameters of linear classifiers trained on them are well estimated, then, because CLIP’s zero-shot classifier is also a linear classifier, the performance of the fully supervised classifiers roughly sets an upper bound for what zero-shot transfer can achieve

In terms of fitting capacity, the performance ceiling that supervised learning can reach is also the ceiling for zero-shot transfer.

Learning general-purpose representations on big data and doing supervised training on a specific dataset are not in conflict.

Over the past few years, empirical studies of deep learning systems have documented that performance is predictable as a function of important quantities such as training compute and dataset size.

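This points out that the performance of recent deep learning systems has become predictable in advance, which is genuinely interesting.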
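A common way to use this predictability is to fit a power law to a few smaller runs and extrapolate. The sketch below fits `loss ≈ a * compute**(-b)` as a straight line in log-log space; the pure power-law form (with no irreducible-loss term), the function names, and the synthetic numbers in the usage note are assumptions for illustration, not results from the paper.

```python
import numpy as np


def fit_power_law(compute, loss):
    """Fit loss ≈ a * compute**(-b) by least squares in log-log space.

    Taking logs gives log(loss) = log(a) - b * log(compute), a straight line.
    """
    slope, intercept = np.polyfit(np.log(compute), np.log(loss), deg=1)
    return np.exp(intercept), -slope


def predict_loss(a, b, compute):
    """Extrapolate the fitted power law to new compute budgets."""
    return a * np.asarray(compute, dtype=float) ** (-b)
```

For example, points generated exactly from `5 * c**-0.1` for `c` in `[10, 100, 1000, 10000]` recover `a ≈ 5` and `b ≈ 0.1`; on real runs the fit is only as good as the assumed functional form.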

相关文章:

open clip论文阅读摘要

看下open clip论文 Learning Transferable Visual Models From Natural Language Supervision These results suggest that the aggregate supervision accessible to modern pre-training methods within web-scale collections of text surpasses that of high-quality crowd…...

上挂载数据)

Vue3像Vue2一样在prototype(原型)上挂载数据

Vue2的写法 import App from ./App import Vue from vue import ./uni.promisify.adaptor Vue.config.productionTip false App.mpType app import config from "./static/js/config/config.js" Vue.prototype.$configconfig; const app new Vue({...App }) app.…...

API接口自动化测试

本节介绍,使用python实现接口自动化实现。 思路:讲接口数据存放在excel文档中,读取excel数据,将每一行数据存放在一个个列表当中。然后获取URL,header,请求体等数据,进行请求发送。 结构如下 excel文档内容如下&#x…...

基于springboot实现驾校管理系统项目【项目源码】

基于springboot实现驾校管理系统演示 JAVA简介 JavaScript是一种网络脚本语言,广泛运用于web应用开发,可以用来添加网页的格式动态效果,该语言不用进行预编译就直接运行,可以直接嵌入HTML语言中,写成js语言࿰…...

)

稀疏数组的保存优化(java版本)

什么是稀疏矩阵? 矩阵中,若数值为 0 的元素数目远远多于非 0 元素的数目,并且非 0 元素分布没有规律时,则称该矩阵为稀疏矩阵;与之相反,若非 0 元素数目占大多数时,则称该矩阵为稠密矩阵。 …...

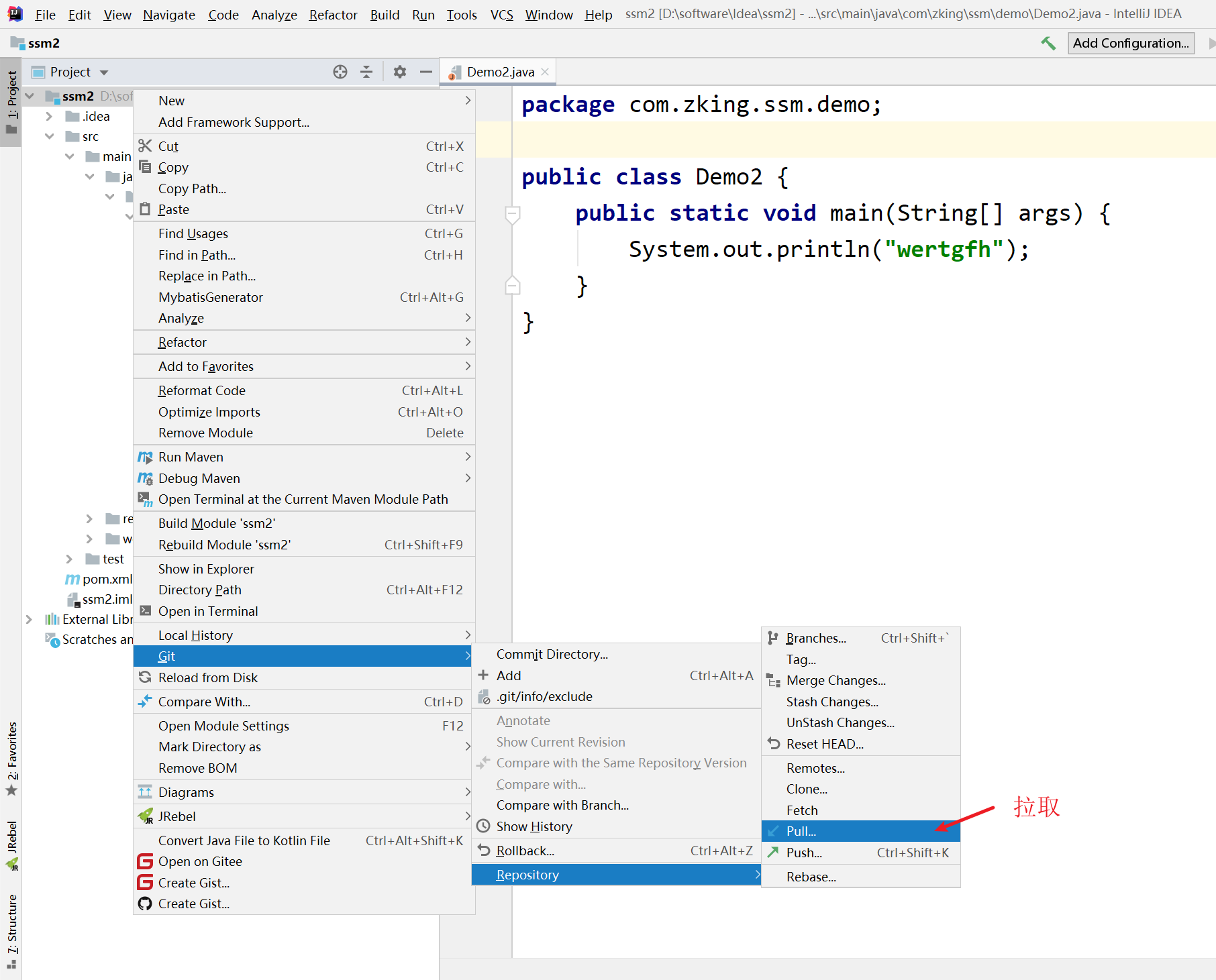

Git GUI、SSH协议和IDEA中的Git使用详解

目录 前言 一、Git GUI的使用 1. 什么是Git GUI 2. 常见的Git GUI工具 3.使用 4.使用Git GUI工具的优缺点 优点: 缺点: 二、SSH协议 1.什么是SSH协议 2.SSH的主要特点和作用 3.SSH密钥认证的原理和流程 4. SSH协议的使用 三、IEDA使用git …...

Linux下C++调用python脚本实现LDAP协议通过TNLM认证连接到AD服务器

1.前言 首先要实现这个功能,必须先搞懂如何通过C调用python脚本文件最为关键,因为两者的环境不同。本质上是在 c 中启动了一个 python 解释器,由解释器对 python 相关的代码进行执行,执行完毕后释放资源。 2 模块功能 2.1python…...

计算机毕业设计选题推荐-校园交流平台微信小程序/安卓APP-项目实战

✨作者主页:IT研究室✨ 个人简介:曾从事计算机专业培训教学,擅长Java、Python、微信小程序、Golang、安卓Android等项目实战。接项目定制开发、代码讲解、答辩教学、文档编写、降重等。 ☑文末获取源码☑ 精彩专栏推荐⬇⬇⬇ Java项目 Python…...

FlinK之检查点与保存点机制

检查点与保存点 检查点Checkpoint概述保存时机保存与恢复检查点算法 检查点配置启用检查点指定存储位置其它配置通用增量 保存点Savepoint概述使用保存点切换状态后端 SQL客户端中操作提交作业触发恢复 检查点Checkpoint 概述 在 Flink 中,检查点是用于实现状态一致…...

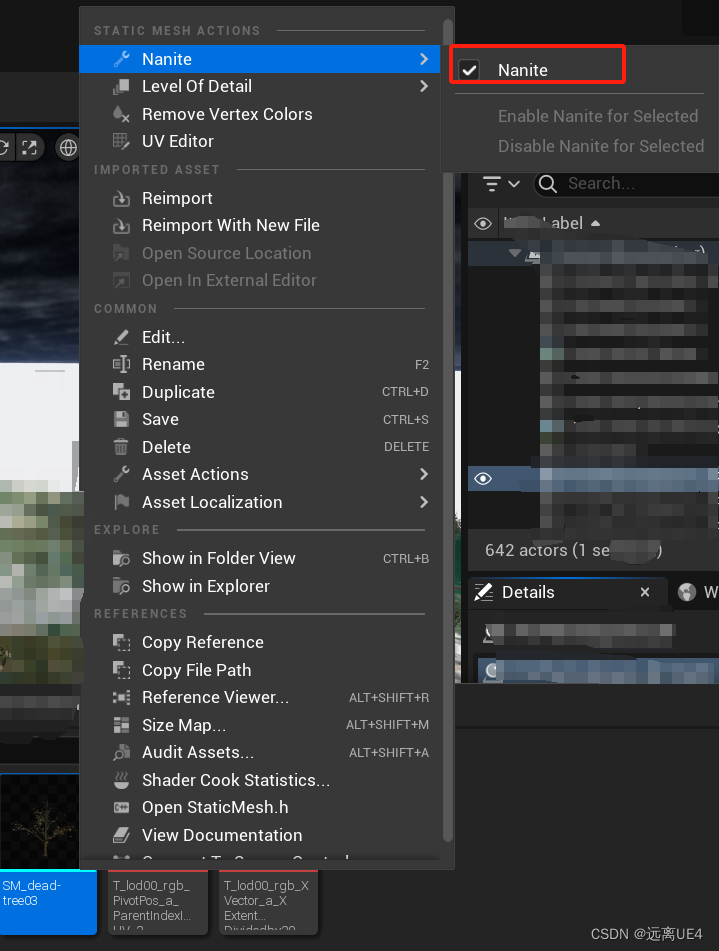

UE5 新特性 Nanite 开启

啥也不说,只能说,真的牛,在自己的项目上,从10几20的帧数,直接彪到了70 适用场景: 大场景,三角面足够多 在Project Setting里面 将这几个勾未true 勾上这个,放入场景即可...

仿写知乎日报第四周

本周主要修改了以往的一些bug,实现了一些遗漏的新功能。 无限右滑 无限右滑我听了学长的思路,首先在scrollView的画布大小设置多一个宽度的画布,然后每当滑到那个画布的时候,就调用一个通知,该通知会触发在首页的vie…...

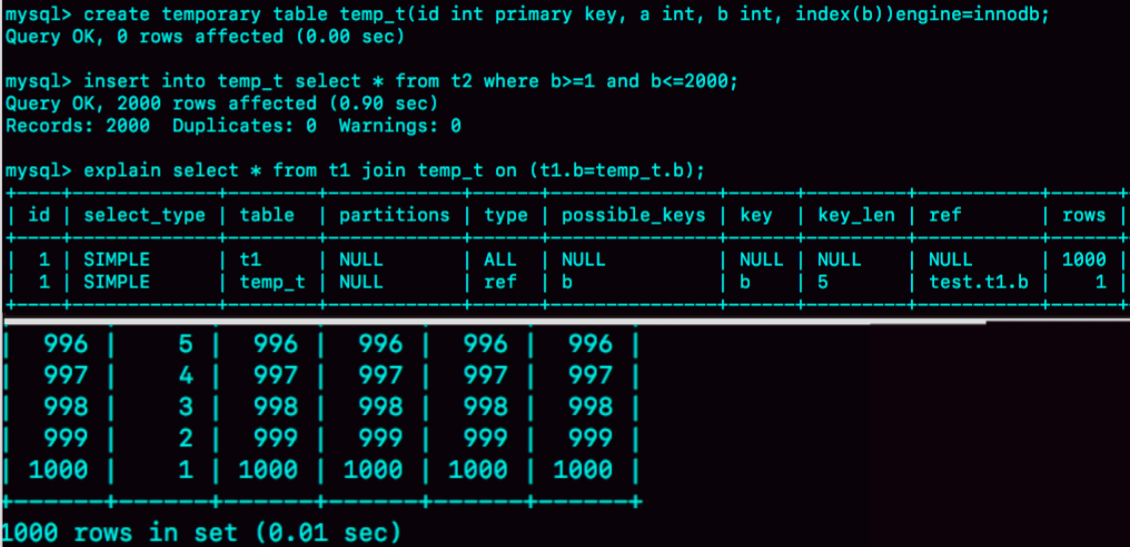

MySQL join原理及优化

MySQL的JOIN原理是基于索引和算法的。在执行JOIN查询时,MySQL会根据连接字段上的索引来查找匹配的记录。 这种算法在链接查询的时候,驱动表会根据关联字段的索引进行查找,当在索引上找到了符合的值,再回表进行查询,也就…...

js案例:打地鼠游戏(打灰太狼)

效果预览图 游戏规则 当灰太狼出现的时候鼠标左键点击灰太狼加10分,小灰灰出现的时候鼠标左键点小灰灰击减10分,不点击不减分不加分。 整体思路 1.把获取背景图片中每个地洞的位置,把所有位置放到一个数组中。 2.封装随机数函数,随…...

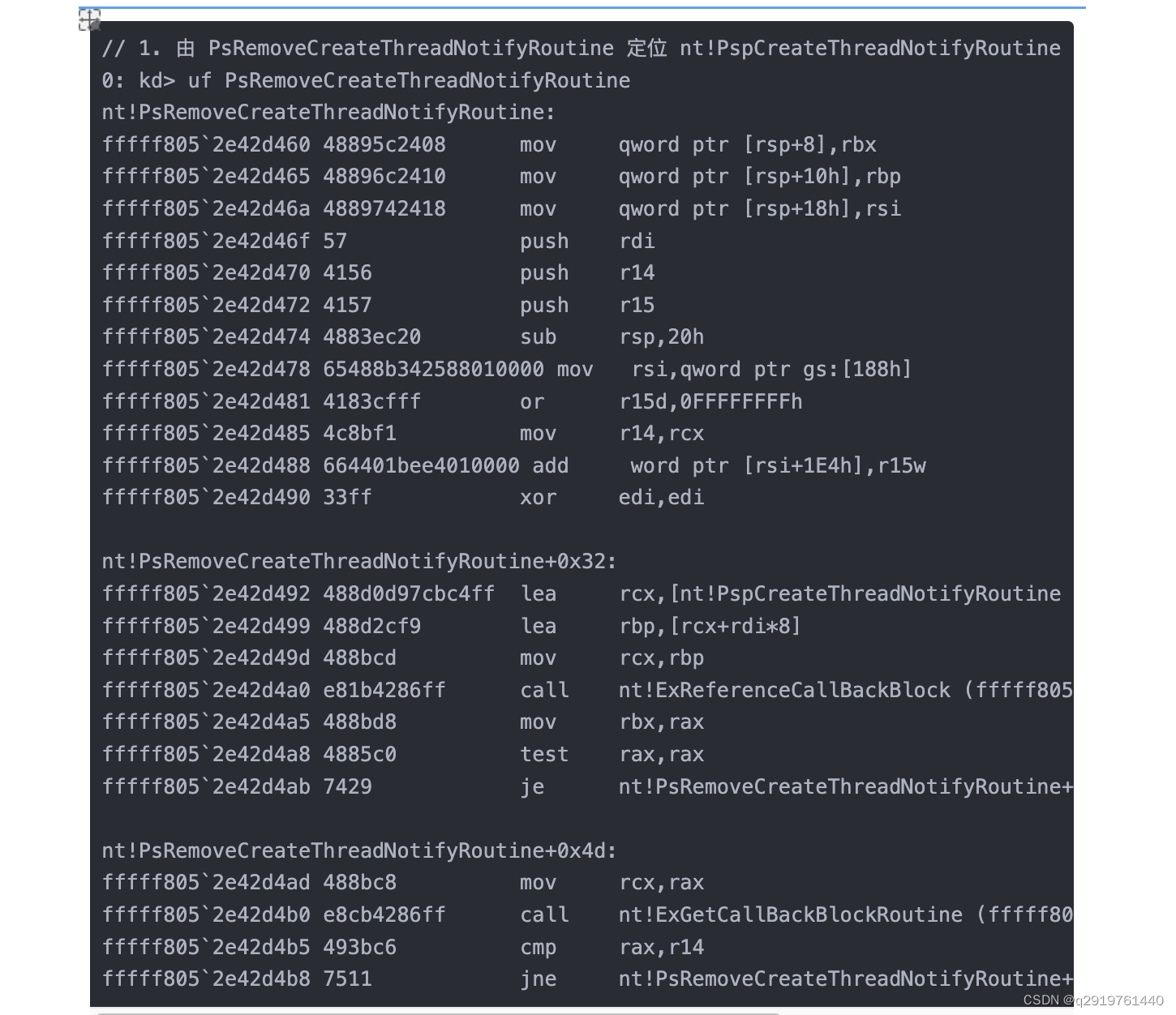

删除杀软回调 bypass EDR 研究

01 — 杀软或EDR内核回调简介 Windows x64 系统中,由于 PatchGuard 的限制,杀软或EDR正常情况下,几乎不能通过 hook 的方式,完成其对恶意软件的监控和查杀。那怎么办呢?别急,微软为我们提供了其他的方法&a…...

Ansible自动化部署工具-组件及语法介绍

大家好,我是蓝胖子,我认为自动化运维要做的事情就是把运维过程中的某些步骤流程化,代码化,这样在以后执行类似的操作的时候就可以解放双手了,让程序自动完成。避免出错,Ansible就是这方面非常好用的工具。它…...

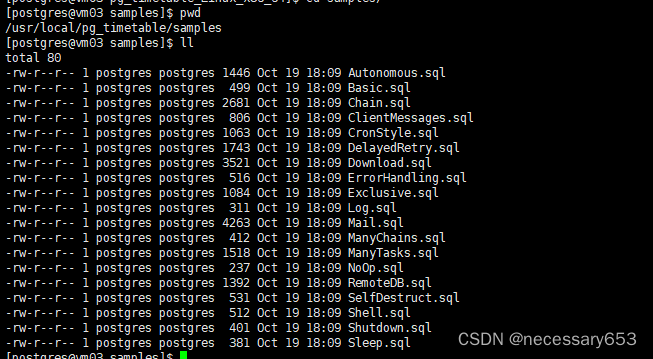

postgresql实现job的六种方法

简介 在postgresql数据库中并没有想oracle那样的job功能,要想实现job调度,就需要借助于第三方。本人更为推荐kettle,pgagent这样的图形化界面,对于开发更为友好 优势劣势Linux 定时任务(crontab) 简单易用…...

layui 表格(table)合计 取整数

第一步 开启合计行 是否开启合计行区域 table.render({elem: #myTable, url: ../baidui/, page: true, cellMinWidth: 100,totalRow:true,cols: [[ //表头//{ type: checkbox },{ type: checkbox,totalRowText: "合计" },//合计行区域{ field: id, align: center,…...

深入理解 TCP;场景复现,掌握鲜为人知的细节

握手失败 第一次握手丢失了,会发生什么? 当客户端想和服务端建立 TCP 连接的时候,首先第一个发的就是 SYN 报文,然后进入到 SYN_SENT 状态。 在这之后,如果客户端迟迟收不到服务端的 SYN-ACK 报文(第二次…...

【MySQL系列】 第二章 · SQL(中)

写在前面 Hello大家好, 我是【麟-小白】,一位软件工程专业的学生,喜好计算机知识。希望大家能够一起学习进步呀!本人是一名在读大学生,专业水平有限,如发现错误或不足之处,请多多指正࿰…...

IBM Qiskit量子机器学习速成(一)

声明:本篇笔记基于IBM Qiskit量子机器学习教程的第一节,中文版译文详见:https://blog.csdn.net/qq_33943772/article/details/129860346?spm1001.2014.3001.5501 概述 首先导入关键的包 from qiskit import QuantumCircuit from qiskit.u…...

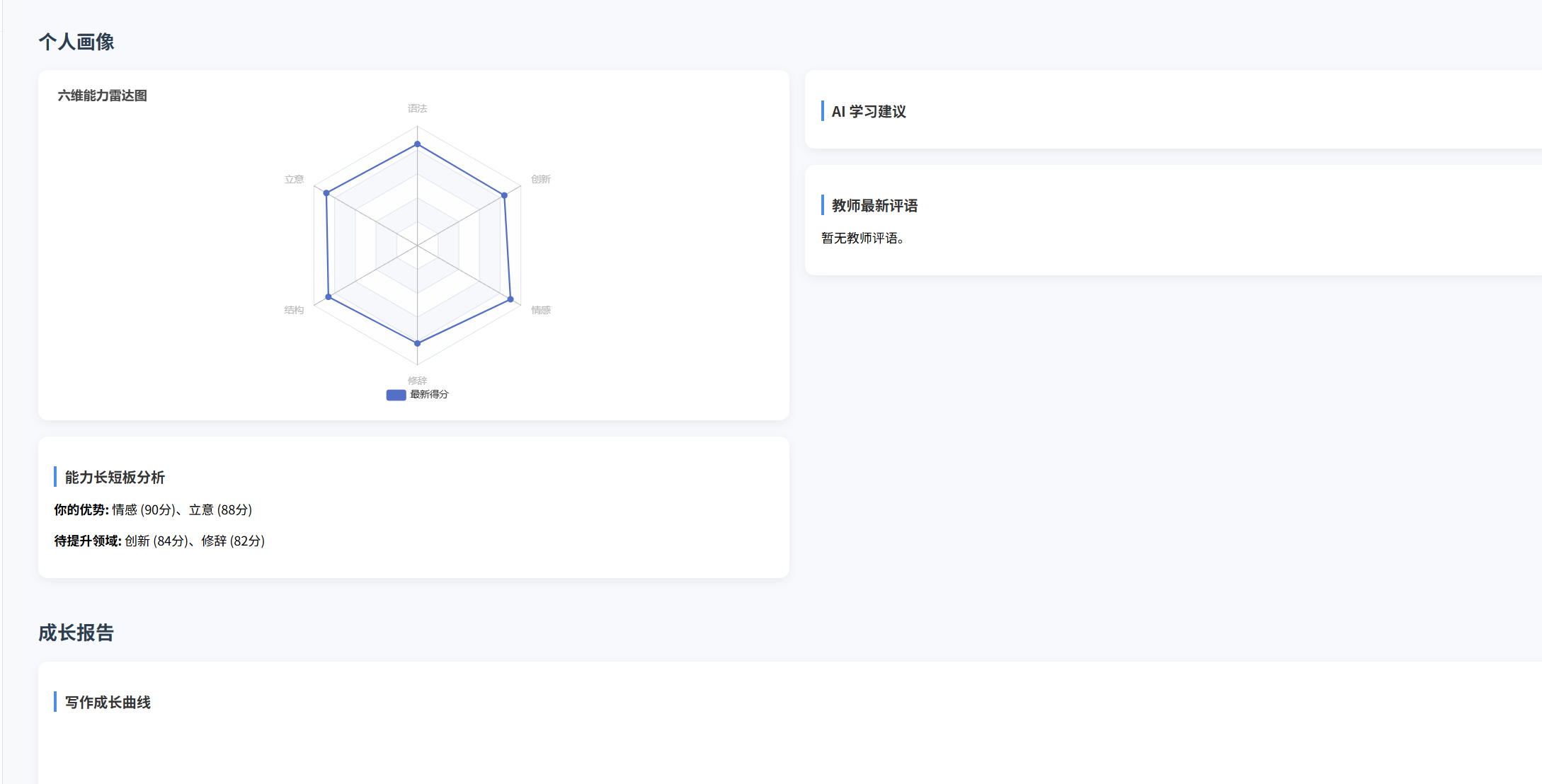

(十)学生端搭建

本次旨在将之前的已完成的部分功能进行拼装到学生端,同时完善学生端的构建。本次工作主要包括: 1.学生端整体界面布局 2.模拟考场与部分个人画像流程的串联 3.整体学生端逻辑 一、学生端 在主界面可以选择自己的用户角色 选择学生则进入学生登录界面…...

【OSG学习笔记】Day 18: 碰撞检测与物理交互

物理引擎(Physics Engine) 物理引擎 是一种通过计算机模拟物理规律(如力学、碰撞、重力、流体动力学等)的软件工具或库。 它的核心目标是在虚拟环境中逼真地模拟物体的运动和交互,广泛应用于 游戏开发、动画制作、虚…...

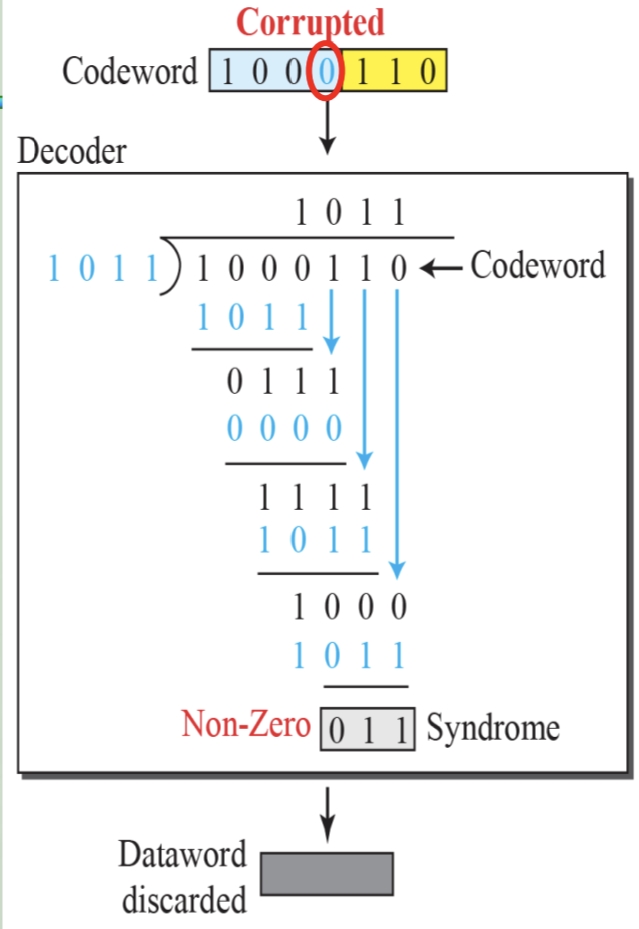

循环冗余码校验CRC码 算法步骤+详细实例计算

通信过程:(白话解释) 我们将原始待发送的消息称为 M M M,依据发送接收消息双方约定的生成多项式 G ( x ) G(x) G(x)(意思就是 G ( x ) G(x) G(x) 是已知的)࿰…...

多模态商品数据接口:融合图像、语音与文字的下一代商品详情体验

一、多模态商品数据接口的技术架构 (一)多模态数据融合引擎 跨模态语义对齐 通过Transformer架构实现图像、语音、文字的语义关联。例如,当用户上传一张“蓝色连衣裙”的图片时,接口可自动提取图像中的颜色(RGB值&…...

华硕a豆14 Air香氛版,美学与科技的馨香融合

在快节奏的现代生活中,我们渴望一个能激发创想、愉悦感官的工作与生活伙伴,它不仅是冰冷的科技工具,更能触动我们内心深处的细腻情感。正是在这样的期许下,华硕a豆14 Air香氛版翩然而至,它以一种前所未有的方式&#x…...

JAVA后端开发——多租户

数据隔离是多租户系统中的核心概念,确保一个租户(在这个系统中可能是一个公司或一个独立的客户)的数据对其他租户是不可见的。在 RuoYi 框架(您当前项目所使用的基础框架)中,这通常是通过在数据表中增加一个…...

IP如何挑?2025年海外专线IP如何购买?

你花了时间和预算买了IP,结果IP质量不佳,项目效率低下不说,还可能带来莫名的网络问题,是不是太闹心了?尤其是在面对海外专线IP时,到底怎么才能买到适合自己的呢?所以,挑IP绝对是个技…...

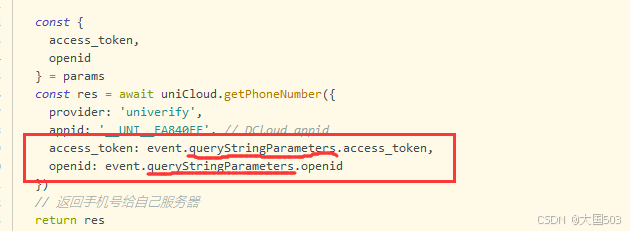

uniapp手机号一键登录保姆级教程(包含前端和后端)

目录 前置条件创建uniapp项目并关联uniClound云空间开启一键登录模块并开通一键登录服务编写云函数并上传部署获取手机号流程(第一种) 前端直接调用云函数获取手机号(第三种)后台调用云函数获取手机号 错误码常见问题 前置条件 手机安装有sim卡手机开启…...

实战三:开发网页端界面完成黑白视频转为彩色视频

一、需求描述 设计一个简单的视频上色应用,用户可以通过网页界面上传黑白视频,系统会自动将其转换为彩色视频。整个过程对用户来说非常简单直观,不需要了解技术细节。 效果图 二、实现思路 总体思路: 用户通过Gradio界面上…...

离线语音识别方案分析

随着人工智能技术的不断发展,语音识别技术也得到了广泛的应用,从智能家居到车载系统,语音识别正在改变我们与设备的交互方式。尤其是离线语音识别,由于其在没有网络连接的情况下仍然能提供稳定、准确的语音处理能力,广…...