Installing and Using nn-Meter

nn-Meter: Towards Accurate Latency Prediction of Deep-Learning Model Inference on Diverse Edge Devices

nn-Meter: Accurately Predicting the Inference Latency of Deep-Learning Models on Edge Devices

Li Lyna Zhang, Shihao Han, Jianyu Wei, Ningxin Zheng, Ting Cao, Yuqing Yang, Yunxin Liu

1. nn-Meter

https://github.com/microsoft/nn-Meter

nn-Meter is a novel and efficient system to accurately predict the inference latency of DNN models on diverse edge devices. The key idea is dividing a whole model inference into kernels, i.e., the execution units of fused operators on a device, and conducting kernel-level prediction. It is currently evaluated on four popular platforms with a large dataset of 26k models, and achieves 99.0% (mobile CPU), 99.1% (mobile Adreno 640 GPU), 99.0% (mobile Adreno 630 GPU), and 83.4% (Intel VPU) prediction accuracy.

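To predict the inference latency of deep neural network models on different edge devices efficiently and accurately, the authors propose and develop nn-Meter, a kernel-based latency prediction system for model inference. It introduces kernel detection to discover operator-fusion behavior, and it builds the kernel latency predictors efficiently by sampling the most valuable data.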
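The kernel-level idea can be illustrated with a small sketch. It assumes, as described in the nn-Meter paper, that the model latency is estimated as the sum of the per-kernel predictions. The kernel names, the toy formulas and the numbers below are made up for illustration and are not nn-Meter's real predictors.

```python
from typing import Callable, Dict, List

# Hypothetical per-kernel latency predictors (ms). nn-Meter trains a regression
# model per kernel type; toy formulas are used here only for illustration.
KERNEL_PREDICTORS: Dict[str, Callable[[dict], float]] = {
    "conv-bn-relu": lambda cfg: 0.002 * cfg["cin"] * cfg["cout"] / 64,
    "dwconv-bn-relu": lambda cfg: 0.001 * cfg["cin"],
    "fc": lambda cfg: 0.0005 * cfg["cin"],
}


def predict_model_latency(kernels: List[dict]) -> float:
    """Sum the predicted latency of every kernel in the model."""
    return sum(KERNEL_PREDICTORS[k["type"]](k) for k in kernels)


# A model described as a list of fused kernels (made-up configuration).
model_kernels = [
    {"type": "conv-bn-relu", "cin": 32, "cout": 64},
    {"type": "dwconv-bn-relu", "cin": 64},
    {"type": "fc", "cin": 1000},
]
total_ms = predict_model_latency(model_kernels)
print(f"{total_ms:.3f} ms")
```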

The current supported hardware and inference frameworks:

| Device | Framework | Processor | ±10% Accuracy | Hardware name |
|---|---|---|---|---|
| Pixel4 | TFLite v2.1 | CortexA76 CPU | 99.0% | cortexA76cpu_tflite21 |
| Mi9 | TFLite v2.1 | Adreno 640 GPU | 99.1% | adreno640gpu_tflite21 |
| Pixel3XL | TFLite v2.1 | Adreno 630 GPU | 99.0% | adreno630gpu_tflite21 |
| Intel Movidius NCS2 | OpenVINO2019R2 | Myriad VPU | 83.4% | myriadvpu_openvino2019r2 |
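As a rough illustration of the "±10% Accuracy" column, the sketch below assumes the metric is the share of models whose predicted latency falls within ±10% of the measured latency. The function name and the sample numbers are hypothetical and are not taken from the nn-Meter code base.

```python
def within_tolerance_accuracy(predicted, measured, tolerance=0.10):
    """Fraction of models whose relative prediction error is within `tolerance`."""
    if len(predicted) != len(measured) or not measured:
        raise ValueError("need two non-empty sequences of equal length")
    hits = sum(abs(p - m) <= tolerance * m for p, m in zip(predicted, measured))
    return hits / len(measured)


# Made-up latencies in ms: the second model misses the +/-10% band.
predicted_ms = [10.2, 25.0, 7.9, 40.0]
measured_ms = [10.0, 22.0, 8.0, 41.0]
accuracy = within_tolerance_accuracy(predicted_ms, measured_ms)
print(accuracy)  # 0.75
```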

Tags

https://github.com/microsoft/nn-Meter/tags

Releases

https://github.com/microsoft/nn-Meter/releases

https://github.com/microsoft/nn-Meter/releases/tag/v1.0-data

adreno630gpu_tflite21.zip

adreno640gpu_tflite21.zip

cortexA76cpu_tflite21.zip

datasets.zip

ir_graphs.zip

myriadvpu_openvino2019r2.zip

onnx_models.zip

pb_models.zip

Source code (zip)

Source code (tar.gz)

https://github.com/microsoft/nn-Meter/releases/tag/v2.0-data

tflite_benchmark_tools_v2.1.zip

tflite_benchmark_tools_v2.7.zip

Source code (zip)

Source code (tar.gz)

nn-Meter Builder

https://github.com/microsoft/nn-Meter/blob/main/docs/builder/overview.md

Neural Network Intelligence (NNI)

https://github.com/microsoft/nni

https://www.microsoft.com/en-us/research/project/neural-network-intelligence/

https://nni.readthedocs.io/zh/stable/

NNI (Neural Network Intelligence) is a toolkit to help users run automated machine learning (AutoML) experiments.

26k latency benchmark dataset

https://github.com/microsoft/nn-Meter/releases/download/v1.0-data/datasets.zip

2. Installation

nn-Meter has currently been tested on Linux and Windows. Windows 10 and Ubuntu 16.04 and 20.04 with Python 3.6.10 are tested and supported. Install Python 3 first; nn-Meter can then be installed by running:

pip install nn-meter

pip install nn-meter --default-timeout=1000 -i https://pypi.tuna.tsinghua.edu.cn/simple

nn-meter==2.0 has been released now.

(base) yongqiang@yongqiang:~/yongqiang_work$ pip install nn-meter --default-timeout=1000 -i https://pypi.tuna.tsinghua.edu.cn/simple

Looking in indexes: https://pypi.tuna.tsinghua.edu.cn/simple

Requirement already satisfied: nn-meter in /home/yongqiang/miniconda3/lib/python3.11/site-packages (2.0)

Requirement already satisfied: numpy in /home/yongqiang/miniconda3/lib/python3.11/site-packages (from nn-meter) (1.25.2)

Requirement already satisfied: pandas in /home/yongqiang/miniconda3/lib/python3.11/site-packages (from nn-meter) (1.5.3)

Requirement already satisfied: tqdm in /home/yongqiang/miniconda3/lib/python3.11/site-packages (from nn-meter) (4.65.0)

Requirement already satisfied: networkx in /home/yongqiang/miniconda3/lib/python3.11/site-packages (from nn-meter) (3.2.1)

Requirement already satisfied: requests in /home/yongqiang/miniconda3/lib/python3.11/site-packages (from nn-meter) (2.31.0)

Requirement already satisfied: protobuf in /home/yongqiang/miniconda3/lib/python3.11/site-packages (from nn-meter) (4.25.0)

Requirement already satisfied: PyYAML in /home/yongqiang/miniconda3/lib/python3.11/site-packages (from nn-meter) (6.0.1)

Requirement already satisfied: scikit-learn in /home/yongqiang/miniconda3/lib/python3.11/site-packages (from nn-meter) (1.3.2)

Requirement already satisfied: packaging in /home/yongqiang/miniconda3/lib/python3.11/site-packages (from nn-meter) (23.0)

Requirement already satisfied: jsonlines in /home/yongqiang/miniconda3/lib/python3.11/site-packages (from nn-meter) (4.0.0)

Requirement already satisfied: attrs>=19.2.0 in /home/yongqiang/miniconda3/lib/python3.11/site-packages (from jsonlines->nn-meter) (23.1.0)

Requirement already satisfied: python-dateutil>=2.8.1 in /home/yongqiang/miniconda3/lib/python3.11/site-packages (from pandas->nn-meter) (2.8.2)

Requirement already satisfied: pytz>=2020.1 in /home/yongqiang/miniconda3/lib/python3.11/site-packages (from pandas->nn-meter) (2022.7)

Requirement already satisfied: charset-normalizer<4,>=2 in /home/yongqiang/miniconda3/lib/python3.11/site-packages (from requests->nn-meter) (2.0.4)

Requirement already satisfied: idna<4,>=2.5 in /home/yongqiang/miniconda3/lib/python3.11/site-packages (from requests->nn-meter) (3.4)

Requirement already satisfied: urllib3<3,>=1.21.1 in /home/yongqiang/miniconda3/lib/python3.11/site-packages (from requests->nn-meter) (1.26.16)

Requirement already satisfied: certifi>=2017.4.17 in /home/yongqiang/miniconda3/lib/python3.11/site-packages (from requests->nn-meter) (2023.7.22)

Requirement already satisfied: scipy>=1.5.0 in /home/yongqiang/miniconda3/lib/python3.11/site-packages (from scikit-learn->nn-meter) (1.11.3)

Requirement already satisfied: joblib>=1.1.1 in /home/yongqiang/miniconda3/lib/python3.11/site-packages (from scikit-learn->nn-meter) (1.3.2)

Requirement already satisfied: threadpoolctl>=2.0.0 in /home/yongqiang/miniconda3/lib/python3.11/site-packages (from scikit-learn->nn-meter) (3.2.0)

Requirement already satisfied: six>=1.5 in /home/yongqiang/miniconda3/lib/python3.11/site-packages (from python-dateutil>=2.8.1->pandas->nn-meter) (1.16.0)

(base) yongqiang@yongqiang:~/yongqiang_work$

If you want to try the latest code, install nn-Meter from source. First clone the nn-Meter repository locally:

git clone git@github.com:microsoft/nn-Meter.git

cd nn-Meter

Then simply run the following pip install in an environment that has Python >= 3.6. The command automatically installs nn-Meter and all necessary dependencies.

pip install .

nn-Meter is a latency predictor for models of the following types: Tensorflow, PyTorch, Onnx, nn-Meter IR graph and NNI IR graph (https://github.com/microsoft/nni). To use nn-Meter with a specific model type, you also need to install the corresponding packages. The well-tested versions are listed below:

| Testing Model Type | Requirements |
|---|---|
| Tensorflow | tensorflow==2.6.0 |
| Torch | torch==1.9.0, torchvision==0.10.0, (alternative) [onnx>=1.9.0, onnx-simplifier==0.3.6] or [nni>=2.4] |
| Onnx | onnx==1.9.0 |
| nn-Meter IR graph | - |
| NNI IR graph | nni>=2.4 |

Please also check the versions of numpy and scikit-learn. Different versions may change the prediction accuracy of the kernel predictors.
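One way to check the installed versions is the standard-library importlib.metadata module (Python 3.8 or later). This is a minimal sketch; the helper name installed_versions is hypothetical.

```python
from importlib import metadata


def installed_versions(packages):
    """Return {distribution name: version string, or None if not installed}."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None  # not installed in this environment
    return versions


report = installed_versions(["nn-meter", "numpy", "scikit-learn"])
for name, version in report.items():
    print(f"{name}: {version or 'not installed'}")
```

Comparing this report with the table of well-tested versions above is a quick sanity check before trusting predicted latencies.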

The stable version of wheel binary package will be released soon.

3. Usage

For hardware latency prediction, nn-Meter provides two types of interfaces:

- the command line tool nn-meter, available after nn-meter installation

- the Python binding provided by the module nn_meter

Here is a summary of supported inputs of the two methods.

| Testing Model Type | Command Support | Python Binding |
|---|---|---|
| Tensorflow | Checkpoint file dumped by tf.saved_model() and end with .pb | Checkpoint file dumped by tf.saved_model and end with .pb |
| Torch | Models in torchvision.models | Object of torch.nn.Module |
| Onnx | Checkpoint file dumped by torch.onnx.export() or onnx.save() and end with .onnx | Checkpoint file dumped by onnx.save() or model loaded by onnx.load() |
| nn-Meter IR graph | Json file in the format of nn-Meter IR Graph | dict object following the format of nn-Meter IR Graph |
| NNI IR graph | - | NNI IR graph object |

In both methods, users can specify the predictor name and version to target a specific hardware platform (device). Currently, nn-Meter supports prediction on the following four configurations:

| Predictor (device_inference_framework) | Processor Category | Version |
|---|---|---|
| cortexA76cpu_tflite21 | CPU | 1.0 |
| adreno640gpu_tflite21 | GPU | 1.0 |
| adreno630gpu_tflite21 | GPU | 1.0 |
| myriadvpu_openvino2019r2 | VPU | 1.0 |

Users can get all predefined predictors and versions by running

# all predefined predictors

nn-meter --list-predictors

(base) yongqiang@yongqiang:~/yongqiang_work$ nn-meter --list-predictors

(nn-Meter) Supported latency predictors:

(nn-Meter) [Predictor] cortexA76cpu_tflite21: version=1.0

(nn-Meter) [Predictor] adreno640gpu_tflite21: version=1.0

(nn-Meter) [Predictor] adreno630gpu_tflite21: version=1.0

(nn-Meter) [Predictor] myriadvpu_openvino2019r2: version=1.0

(base) yongqiang@yongqiang:~/yongqiang_work$
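If the listing is needed in a script, the console output shown above can be parsed with a regular expression. This is only a sketch that assumes the line format stays exactly as in the log above; the helper name parse_predictor_listing is hypothetical.

```python
import re

# Matches lines such as "(nn-Meter) [Predictor] cortexA76cpu_tflite21: version=1.0".
PREDICTOR_LINE = re.compile(r"\[Predictor\]\s+(?P<name>[^\s:]+):\s+version=(?P<version>\S+)")


def parse_predictor_listing(text):
    """Map predictor name -> version string from `nn-meter --list-predictors` output."""
    return {m["name"]: m["version"] for m in PREDICTOR_LINE.finditer(text)}


sample_output = """\
(nn-Meter) Supported latency predictors:
(nn-Meter) [Predictor] cortexA76cpu_tflite21: version=1.0
(nn-Meter) [Predictor] adreno640gpu_tflite21: version=1.0
(nn-Meter) [Predictor] adreno630gpu_tflite21: version=1.0
(nn-Meter) [Predictor] myriadvpu_openvino2019r2: version=1.0
"""
predictors = parse_predictor_listing(sample_output)
print(predictors)
```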

(base) yongqiang@yongqiang:~/yongqiang_work$ nn-meter -h

usage: nn-meter [-h] [-v] [--list-predictors] [--list-backends] [--list-kernels] [--list-operators] [--list-testcases] {predict,lat_pred,get_ir,create,connect,register,unregister} ...

please run "nn-meter {positional argument} --help" to see nn-meter guidance

positional arguments:

{predict,lat_pred,get_ir,create,connect,register,unregister}

predict (lat_pred) apply latency predictor for testing model

get_ir specify a model type to convert to nn-meter ir graph

create create a workspace folder for nn-Meter builder

connect connect to backend

register register customized module to nn-Meter, supporting type: predictor, backend, operator, testcase, operator

unregister unregister customized module from nn-Meter, supporting type: predictor, backend, operator, testcase, operator

options:

-h, --help show this help message and exit

-v, --verbose increase output verbosity

--list-predictors list all supported predictors

--list-backends list all supported backends

--list-kernels list all supported kernels when building kernel predictors

--list-operators list all supported operators when building fusion rule test cases

--list-testcases list all supported special test cases when building fusion rule test cases

(base) yongqiang@yongqiang:~/yongqiang_work$

3.1. Predict latency of saved CNN model

After installation, a command named nn-meter is enabled. To predict the latency of a CNN model with a predefined predictor on the command line, users can run the following commands (sample models are provided in the material/testmodels folder of the repository):

# for Tensorflow (*.pb) file

nn-meter predict --predictor <hardware> [--predictor-version <version>] --tensorflow <pb-file_or_folder>

# Example Usage

nn-meter predict --predictor cortexA76cpu_tflite21 --predictor-version 1.0 --tensorflow mobilenetv3small_0.pb

# for ONNX (*.onnx) file

nn-meter predict --predictor <hardware> [--predictor-version <version>] --onnx <onnx-file_or_folder>

# Example Usage

nn-meter predict --predictor cortexA76cpu_tflite21 --predictor-version 1.0 --onnx mobilenetv3small_0.onnx

# for torch model from torchvision model zoo

nn-meter predict --predictor <hardware> [--predictor-version <version>] --torchvision <model-name> <model-name>...

# Example Usage

nn-meter predict --predictor cortexA76cpu_tflite21 --predictor-version 1.0 --torchvision mobilenet_v2

# for nn-Meter IR (*.json) file

nn-meter predict --predictor <hardware> [--predictor-version <version>] --nn-meter-ir <json-file_or_folder>

# Example Usage

nn-meter predict --predictor cortexA76cpu_tflite21 --predictor-version 1.0 --nn-meter-ir mobilenetv3small_0.json

The --predictor-version <version> argument is optional. When the predictor version is not specified, nn-meter uses the latest version of the predictor.

(base) yongqiang@yongqiang:~/yongqiang_work/nn-Meter/material/testmodels$ pwd

/home/yongqiang/yongqiang_work/nn-Meter/material/testmodels

(base) yongqiang@yongqiang:~/yongqiang_work/nn-Meter/material/testmodels$ ls -l

total 18080

-rw-r--r-- 1 yongqiang yongqiang 239602 Nov 12 10:13 mobilenetv3small_0.json

-rw-r--r-- 1 yongqiang yongqiang 10169165 Nov 12 10:13 mobilenetv3small_0.onnx

-rw-r--r-- 1 yongqiang yongqiang 8098911 Nov 12 10:13 mobilenetv3small_0.pb

(base) yongqiang@yongqiang:~/yongqiang_work/nn-Meter/material/testmodels$

nn-meter predict --predictor cortexA76cpu_tflite21 --predictor-version 1.0 --tensorflow /home/yongqiang/yongqiang_work/nn-Meter/material/testmodels/mobilenetv3small_0.pb

nn-Meter supports batch mode prediction. To predict the latency of multiple models of the same type at once, collect all the models in one folder and pass that folder after the corresponding --[model-type] argument.

Note that for PyTorch models, the nn-meter command only supports existing models in the torchvision model zoo. The strings following --torchvision must be exactly the names of one or more existing torchvision models. Latency prediction for torchvision models on the command line also requires the onnx and onnx-simplifier packages.
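When predictions are launched from a script, the command line can be assembled as an argument list. The sketch below only uses the flags shown in this section; the helper name build_predict_command and the folder ./onnx_models are hypothetical.

```python
# Model-type flags of `nn-meter predict` as listed in this section.
MODEL_FLAGS = {"--tensorflow", "--onnx", "--nn-meter-ir", "--torchvision"}


def build_predict_command(predictor, model_flag, targets, version=None):
    """Assemble the argument list for `nn-meter predict`."""
    if model_flag not in MODEL_FLAGS:
        raise ValueError(f"unsupported model flag: {model_flag}")
    cmd = ["nn-meter", "predict", "--predictor", predictor]
    if version is not None:  # optional; the latest version is used when omitted
        cmd += ["--predictor-version", str(version)]
    cmd += [model_flag, *targets]
    return cmd


# Batch mode: pass a folder that contains several .onnx files (hypothetical path).
batch_cmd = build_predict_command("cortexA76cpu_tflite21", "--onnx", ["./onnx_models"], version=1.0)
# Several torchvision model names can follow --torchvision.
zoo_cmd = build_predict_command("adreno640gpu_tflite21", "--torchvision", ["mobilenet_v2", "resnet18"])
print(" ".join(batch_cmd))
print(" ".join(zoo_cmd))
```

The resulting list can be handed to subprocess.run(cmd) on a machine where nn-meter is installed.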

3.2. Convert to nn-Meter IR Graph

Furthermore, users may want to convert a Tensorflow pb file or an Onnx file to an nn-Meter IR graph. The model can be converted and saved to a .json file by running:

# for Tensorflow (*.pb) file

nn-meter get_ir --tensorflow <pb-file> [--output <output-name>]

# for ONNX (*.onnx) file

nn-meter get_ir --onnx <onnx-file> [--output <output-name>]

If not specified, the output name defaults to /path/to/input/file/<input_file_name>_<model-type>_ir.json.

3.3. Use nn-Meter in your Python code

After installation, users can import nn-Meter in python code

from nn_meter import load_latency_predictor

predictor = load_latency_predictor(hardware_name, hardware_predictor_version) # case insensitive in backend

# build your model (e.g., model instance of torch.nn.Module)

model = ...

lat = predictor.predict(model, model_type) # the resulting latency is in unit of ms

By calling load_latency_predictor, the user selects the target hardware and loads the corresponding predictor. nn-Meter first looks for the predictor file in ~/.nn_meter/data. If the predictor file does not exist, it is downloaded from the GitHub release.

In predictor.predict(), the allowed items of the parameter model_type include ["pb", "torch", "onnx", "nnmeter-ir", "nni-ir"], representing model types of tensorflow, torch, onnx, nn-meter IR graph and NNI IR graph, respectively.
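For models stored on disk, the model_type string can be derived from the file suffix. The mapping below is an assumption based on the file types described in this post (.pb for Tensorflow, .onnx for Onnx, .json for nn-Meter IR); the helper infer_model_type is hypothetical and not part of nn_meter.

```python
from pathlib import Path

# Assumed mapping from file suffix to the model_type strings listed above.
SUFFIX_TO_MODEL_TYPE = {".pb": "pb", ".onnx": "onnx", ".json": "nnmeter-ir"}


def infer_model_type(model_path):
    """Return the model_type string for a saved model file, based on its suffix."""
    suffix = Path(model_path).suffix.lower()
    try:
        return SUFFIX_TO_MODEL_TYPE[suffix]
    except KeyError:
        raise ValueError(f"cannot infer model_type from suffix {suffix!r}") from None


for name in ["mobilenetv3small_0.pb", "mobilenetv3small_0.onnx", "mobilenetv3small_0.json"]:
    print(name, "->", infer_model_type(name))
```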

For Torch models, the shapes of the feature maps cannot be determined from the network structure alone, yet they are significant parameters in latency prediction. Therefore, a Torch model requires the shape of an input tensor as an input of predictor.predict(). A random tensor of the given shape is generated and used for inference. In addition, users can install the onnx and onnx-simplifier packages for latency prediction (referred to as Onnx-based latency prediction for torch model), or alternatively install the nni package (referred to as NNI-based latency prediction for torch model). Note that the nni option does not support command line calls. If users use nni for latency prediction, the PyTorch modules should be defined with the nn interface from NNI, import nni.retiarii.nn.pytorch as nn (see the NNI Documentation (https://nni.readthedocs.io/en/stable/) for more information), and the parameter apply_nni should be set to True in predictor.predict(). Here is an example of NNI-based latency prediction for a Torch model:

import nni.retiarii.nn.pytorch as nn

from nn_meter import load_latency_predictor

predictor = load_latency_predictor(...)

# build your model using nni.retiarii.nn.pytorch as nn

model = nn.Module ...

input_shape = (1, 3, 224, 224)

lat = predictor.predict(model, model_type='torch', input_shape=input_shape, apply_nni=True)

The Onnx-based latency prediction for torch model is stable but slower, while the NNI-based latency prediction is much faster but less stable, as it can fail in some cases. The Onnx-based approach is the default for Torch model latency prediction in nn-Meter. Users can choose whichever suits their needs.
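For comparison, the default Onnx-based path could look roughly like the sketch below. It reuses only the calls shown in this section (load_latency_predictor and predictor.predict with model_type and input_shape); the wrapper predict_torchvision_latency is hypothetical, and running it requires torch, torchvision, onnx and onnx-simplifier in addition to nn-meter.

```python
def predict_torchvision_latency(model_name, hardware_name, predictor_version,
                                input_shape=(1, 3, 224, 224)):
    """Predict the latency (ms) of a torchvision model with the default Onnx-based path."""
    # Imported lazily so that defining this helper does not require the packages.
    import torchvision.models as models
    from nn_meter import load_latency_predictor

    model = getattr(models, model_name)()  # randomly initialised weights are sufficient
    predictor = load_latency_predictor(hardware_name, predictor_version)
    # apply_nni is left at its default, so the Onnx-based conversion is used.
    return predictor.predict(model, model_type="torch", input_shape=input_shape)


# Example call (not executed here because it needs the packages listed above):
# lat_ms = predict_torchvision_latency("mobilenet_v2", "cortexA76cpu_tflite21", 1.0)
```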

Users can view the information of all built-in predictors with list_latency_predictors, or look at the config file nn_meter/configs/predictors.yaml.

Users can get an nn-Meter IR graph by calling model_file_to_graph with a model file name, or model_to_graph with a model object, and specifying the model type. The model types supported by model_file_to_graph include "onnx", "pb", "torch", "nnmeter-ir" and "nni-ir", while those supported by model_to_graph include "onnx", "torch" and "nni-ir".

3.4. nn-Meter Builder

nn-Meter builder is an open source tool for users to build latency predictors for their own devices. There are three main parts in nn-Meter builder:

backend: the module of connecting backends;

backend_meta: the meta tools related to backend. Here we provide the fusion rule tester to detect fusion rules for users’ backend;

kernel_predictor_builder: the tool to build different kernel latency predictors.

Users can access nn-Meter builder through nn_meter.builder. For more details on how to use nn-Meter builder, please check the nn-Meter builder documentation.

3.5. Hardware-aware NAS by nn-Meter and NNI

To empower affordable DNNs on edge and mobile devices, hardware-aware NAS searches for models with both high accuracy and low latency. In particular, the search algorithm only considers models within the target latency constraints during the search process.

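empower [ɪmˈpaʊə(r)]: v. to authorize, to give (someone) the power to do something, to increase (someone's) autonomy, to put in control of a situation

affordable [əˈfɔː(r)dəb(ə)l]: adj. able to be afforded, reasonably priced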

Currently we provide two examples of hardware-aware NAS, including end-to-end multi-trial NAS (https://nni.readthedocs.io/en/stable/) which is a random search algorithm (https://arxiv.org/abs/1902.07638) on SPOS NAS (Single Path One-Shot Neural Architecture Search with Uniform Sampling) search space, and the popular ProxylessNAS (https://nni.readthedocs.io/en/stable/), which is a one-shot NAS algorithm with hardware-efficient loss function. More examples of other widely-used hardware-aware NAS and model compression algorithms are coming soon.

3.5.1. Multi-trial SPOS Demo

To run the multi-trial SPOS demo, NNI should be installed from source code by following the NNI Documentation (https://nni.readthedocs.io/en/stable/):

python setup.py develop

Then run the multi-trial SPOS demo:

python ${NNI_ROOT}/examples/nas/oneshot/spos/multi_trial.py

- How the demo works

Refer to NNI Documentation (https://nni.readthedocs.io/en/stable/) for how to perform NAS by NNI.

To support hardware-aware NAS, you first need a Strategy that supports filtering the models by latency. We provide such a filter named LatencyFilter in NNI and initialize a Random strategy with the filter:

simple_strategy = strategy.Random(model_filter=LatencyFilter(threshold=100, predictor=base_predictor))

LatencyFilter will predict the models’ latency by using nn-Meter and filter out the models whose latency with the given predictor are larger than the threshold (i.e., 100 in this example).

You can also build your own strategies and filters to support more flexible NAS such as sorting the models according to latency.
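The filtering and sorting ideas can be sketched without NNI. The class below is not NNI's LatencyFilter; it is a hypothetical stand-in that follows the description above (candidates whose predicted latency is larger than the threshold are filtered out), with made-up latencies in place of nn-Meter predictions.

```python
class SimpleLatencyFilter:
    """Accept a candidate only if its predicted latency does not exceed the threshold."""

    def __init__(self, threshold, predict_fn):
        self.threshold = threshold    # latency budget in ms
        self.predict_fn = predict_fn  # callable: model -> predicted latency in ms

    def __call__(self, model):
        return self.predict_fn(model) <= self.threshold


# Hypothetical candidates with made-up latencies standing in for nn-Meter predictions.
fake_latency_ms = {"net_a": 99.9, "net_b": 130.5, "net_c": 85.0}
latency_filter = SimpleLatencyFilter(threshold=100, predict_fn=fake_latency_ms.get)

accepted = [name for name in fake_latency_ms if latency_filter(name)]
ranked = sorted(accepted, key=fake_latency_ms.get)  # fastest candidate first
print(accepted)
print(ranked)
```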

Then, pass this strategy to RetiariiExperiment:

exp = RetiariiExperiment(base_model, trainer, strategy=simple_strategy)

exp_config = RetiariiExeConfig('local')

...

exp_config.dummy_input = [1, 3, 32, 32]

exp.run(exp_config, port)

In exp_config, dummy_input is required for tracing shape info.

3.5.2. ProxylessNAS Demo

To run the one-shot ProxylessNAS demo, users can run the NNI ProxylessNAS training demo:

python ${NNI_ROOT}/examples/nas/oneshot/proxylessnas/main.py --applied_hardware <hardware> --reference_latency <reference latency (ms)>

- How the demo works

Refer to NNI Documentation (https://nni.readthedocs.io/en/stable/) for how to perform NAS by NNI.

ProxylessNAS currently builds a lookup table that stores the measured latency of each candidate building block in the search space. The sum of the latencies of all building blocks in a candidate model is treated as the model inference latency. By leveraging nn-Meter in NNI, users can apply ProxylessNAS to search for efficient DNN models on more types of edge devices. In the NNI implementation, a HardwareLatencyEstimator predicts the expected latency of the mixed operation based on the path weights of ProxylessLayerChoice. To call nn-Meter in NNI ProxylessNAS, users can add the arguments "--applied_hardware <hardware> --reference_latency <reference latency (ms)>" in the example (https://github.com/microsoft/nni/blob/master/examples/nas/legacy/oneshot/proxylessnas/main.py).
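The latency estimate described above can be sketched in a few lines. This is not NNI's HardwareLatencyEstimator; it assumes that the path weights are a softmax over per-candidate architecture parameters, as in the ProxylessNAS paper, and all names and numbers are made up for illustration.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    shift = max(logits)
    exps = [math.exp(x - shift) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def expected_block_latency(arch_logits, candidate_latencies_ms):
    """Expected latency of one mixed operation: sum of path weight * candidate latency."""
    weights = softmax(arch_logits)
    return sum(w * lat for w, lat in zip(weights, candidate_latencies_ms))


def expected_model_latency(blocks):
    """Sum the expected latency over all mixed operations in the model."""
    return sum(expected_block_latency(logits, lats) for logits, lats in blocks)


# Each block: (architecture parameters, per-candidate latency in ms from a lookup table).
blocks = [
    ([0.0, 0.0], [2.0, 4.0]),            # equal weights, expected 3.0 ms
    ([0.0, 0.0, 0.0], [1.0, 2.0, 3.0]),  # equal weights, expected 2.0 ms
]
total_expected_ms = expected_model_latency(blocks)
print(round(total_expected_ms, 6))
```

Because the expected latency is a smooth function of the architecture parameters, it can be added to the training loss and compared with the reference latency during the search.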

3.6. Benchmark Dataset

To evaluate the effectiveness of a prediction model on an arbitrary DNN model, we need a representative dataset that covers a large prediction scope. nn-Meter collects and generates 26k CNN models. (Please refer to the paper for the dataset generation method.)

We release the dataset, and provide an interface of nn_meter.dataset for users to get access to the dataset. Users can also download the data from the Download Link (https://github.com/microsoft/nn-Meter/releases/download/v1.0-data/datasets.zip) on their own.

4. Contributing

This project welcomes contributions and suggestions. Most contributions require you to agree to a Contributor License Agreement (CLA) declaring that you have the right to, and actually do, grant us the rights to use your contribution. For details, visit https://cla.opensource.microsoft.com.

When you submit a pull request, a CLA bot will automatically determine whether you need to provide a CLA and decorate the PR appropriately (e.g., status check, comment). Simply follow the instructions provided by the bot. You will only need to do this once across all repos using our CLA.

This project has adopted the Microsoft Open Source Code of Conduct (https://opensource.microsoft.com/codeofconduct/). For more information see the Code of Conduct FAQ (https://opensource.microsoft.com/codeofconduct/faq/) or contact opencode@microsoft.com with any additional questions or comments.

5. License

The entire codebase is under MIT license (https://github.com/microsoft/nn-Meter/blob/main/LICENSE)

The dataset is under Open Use of Data Agreement (https://github.com/Community-Data-License-Agreements/Releases/blob/main/O-UDA-1.0.md)

6. Citation

If you find that nn-Meter helps your research, please consider citing it:

@inproceedings{nnmeter,

author = {Zhang, Li Lyna and Han, Shihao and Wei, Jianyu and Zheng, Ningxin and Cao, Ting and Yang, Yuqing and Liu, Yunxin},

title = {nn-Meter: Towards Accurate Latency Prediction of Deep-Learning Model Inference on Diverse Edge Devices},

year = {2021},

publisher = {ACM},

address = {New York, NY, USA},

url = {https://doi.org/10.1145/3458864.3467882},

doi = {10.1145/3458864.3467882},

booktitle = {Proceedings of the 19th Annual International Conference on Mobile Systems, Applications, and Services},

pages = {81–93},

}

@misc{nnmetercode,

author = {Microsoft Research nn-Meter Team},

title = {nn-Meter: Towards Accurate Latency Prediction of Deep-Learning Model Inference on Diverse Edge Devices},

year = {2021},

url = {https://github.com/microsoft/nn-Meter},

}

References

[1] Yongqiang Cheng, https://yongqiang.blog.csdn.net/

[2] microsoft / nn-Meter, https://github.com/microsoft/nn-Meter

[3] nn-meter 2.0, https://pypi.org/project/nn-meter/

[4] nn-Meter: Towards Accurate Latency Prediction of Deep-Learning Model Inference on Diverse Edge Devices, https://air.tsinghua.edu.cn/pdf/nn-Meter-Towards-Accurate-Latency-Prediction-of-Deep-Learning-Model-Inference-on-Diverse-Edge-Devices.pdf

相关文章:

安装和使用 nn-Meter

安装和使用 nn-Meter nn-Meter: Towards Accurate Latency Prediction of Deep-Learning Model Inference on Diverse Edge Devices nn-Meter:精准预测深度学习模型在边缘设备上的推理延迟 Li Lyna Zhang, Shihao Han, Jianyu Wei, Ningxin Zheng, Ting Cao, Yuqin…...

PHP原生类总结利用

再SPL介绍 SPL就是Standard PHP Library的缩写。据手册显示,SPL是用于解决典型问题(standard problems)的一组接口与类的集合。打开手册,正如上面的定义一样,有许多封装好的类。因为是要解决典型问题,免不了有一些处理文…...

C/C++满足条件的数累加 2021年9月电子学会青少年软件编程(C/C++)等级考试一级真题答案解析

目录 C/C满足条件的数累加 一、题目要求 1、编程实现 2、输入输出 二、算法分析 三、程序编写 四、程序说明 五、运行结果 六、考点分析 C/C满足条件的数累加 2021年9月 C/C编程等级考试一级编程题 一、题目要求 1、编程实现 现有n个整数,将其中个位数…...

zookeeper:服务器有几种状态?

四种: looking(选举中)、leading(leader)、following( follower)、 observer(观察者角色)...

)

大数据-之LibrA数据库系统告警处理(ALM-12040 系统熵值不足)

告警解释 每天零点系统检查熵值,每次检查都连续检查五次,首先检查是否启用并正确配置了rng-tools工具或者haveged工具,如果没有配置,则继续检查当前熵值,如果五次均小于500,则上报故障告警。 当检查到真随…...

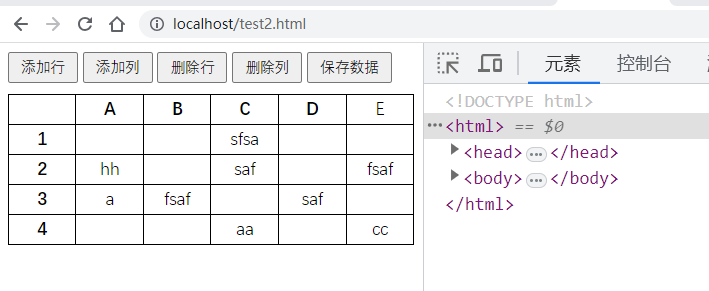

HTML页面模拟了一个类似Excel的表格在线diy修改表格内容

html实现在线表格编辑,可以修改每个表格内容,并且可以添加行和列 这个HTML页面模拟了一个类似Excel的表格,可以添加和删除行和列,并且可以编辑每个表格的内容。通过点击按钮可以添加新的行和列,通过按钮可以删除最后一…...

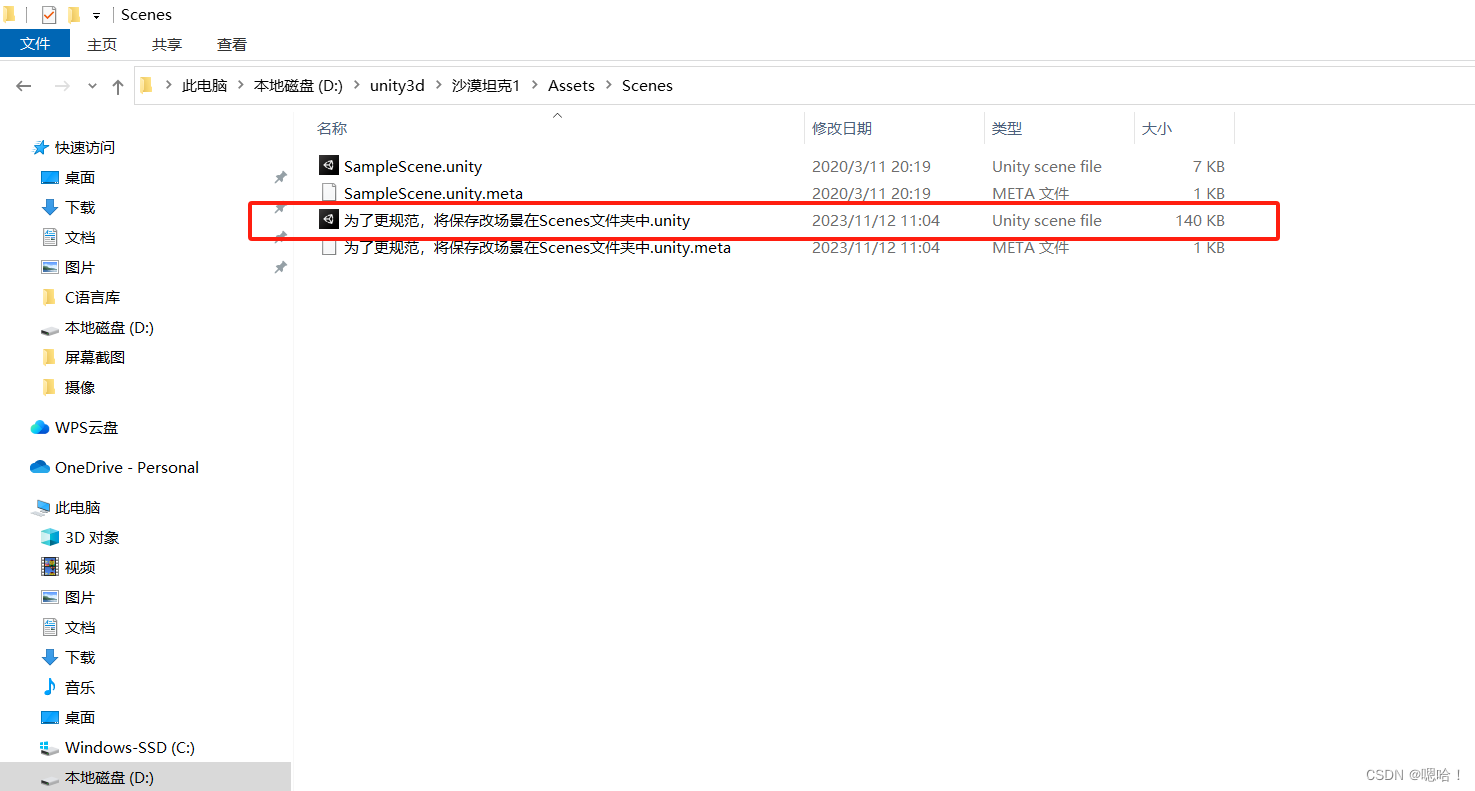

Unity如何保存场景,如何导出工程文件/如何查看保存位置?【各版本通用】

如何保存场景? 在unity中CtrlS 或者File—>Save 输入你要保存的场景名【建议保存在Scenes文件夹下】 下图,保存场景不在Scenes文件夹下: 下图,保存在Scenes文件夹下: 下图,保存完成 如何导出工程文…...

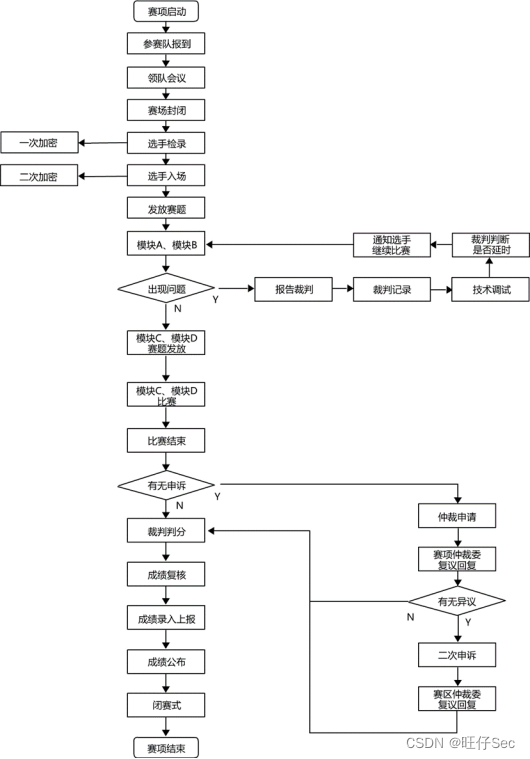

2023年第十六届山东省职业院校技能大赛中职组“网络安全”赛项规程

第十六届山东省职业院校技能大赛 中职组“网络安全”赛项规程 一、赛项名称 赛项名称:网络安全 英文名称:Cyber Security 赛项组别:中职组 专业大类:电子与信息大类 二、竞赛目的 网络空间已经成为陆、海、空、天之后的第…...

html菜单的基本制作

前面写过一点网页菜单的博文;下面再复习一些技术要点; <!DOCTYPE html PUBLIC "-//W3C//DTD XHTML 1.0 Transitional//EN" "http://www.w3.org/TR/xhtml1/DTD/xhtml1-transitional.dtd"> <html xmlns"http://www.w3.…...

Spark Job优化

1 Map端优化 1.1 Map端聚合 map-side预聚合,就是在每个节点本地对相同的key进行一次聚合操作,类似于MapReduce中的本地combiner。map-side预聚合之后,每个节点本地就只会有一条相同的key,因为多条相同的key都被聚合起来了。其他节…...

CSS花边001:无衬线字体和有衬线字体

网站中我们看到过很多字体,样子各有千秋。通常针对结构,区分为有衬字体(serif) 和无衬字体(sans-serif)。今天我们聊一下这个话题。 什么是有衬字体,什么是无衬字体? 衬线字体&…...

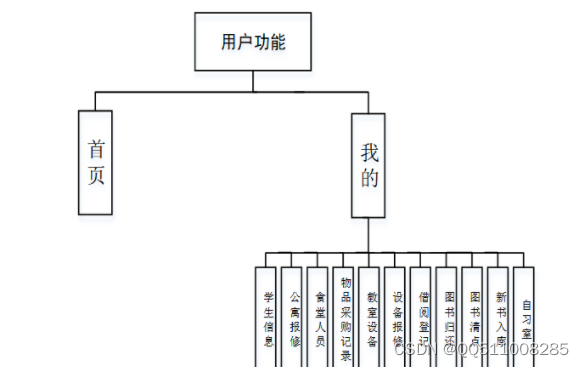

nodejs+vue+python+PHP+微信小程序-安卓- 基于小程序的高校后勤管理系统-计算机毕业设计

考虑到实际生活中在高校后勤管理小程序管理方面的需要以及对该系统认真的分析,将系统权限按管理员和用户这两类涉及用户划分。任何系统都要遵循系统设计的基本流程,本系统也不例外,同样需要经过市场调研,需求分析,概要设计&#x…...

Leetcode153. Find Minimum in Rotated Sorted Array

旋转数组找最小值 其中数组中的值唯一 你可以顺序遍历,当然一般会让你用二分来搞 方法1 数组可以分成两部分,左边是 ≥ n u m s [ 0 ] \ge nums[0] ≥nums[0], 右边是 < n u m s [ 0 ] <nums[0] <nums[0] 换句话说就是找第一个 < n u m s…...

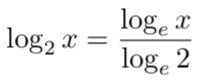

为什么要用“交叉熵”做损失函数

大家好啊,我是董董灿。 今天看一个在深度学习中很枯燥但很重要的概念——交叉熵损失函数。 作为一种损失函数,它的重要作用便是可以将“预测值”和“真实值(标签)”进行对比,从而输出 loss 值,直到 loss 值收敛,可以…...

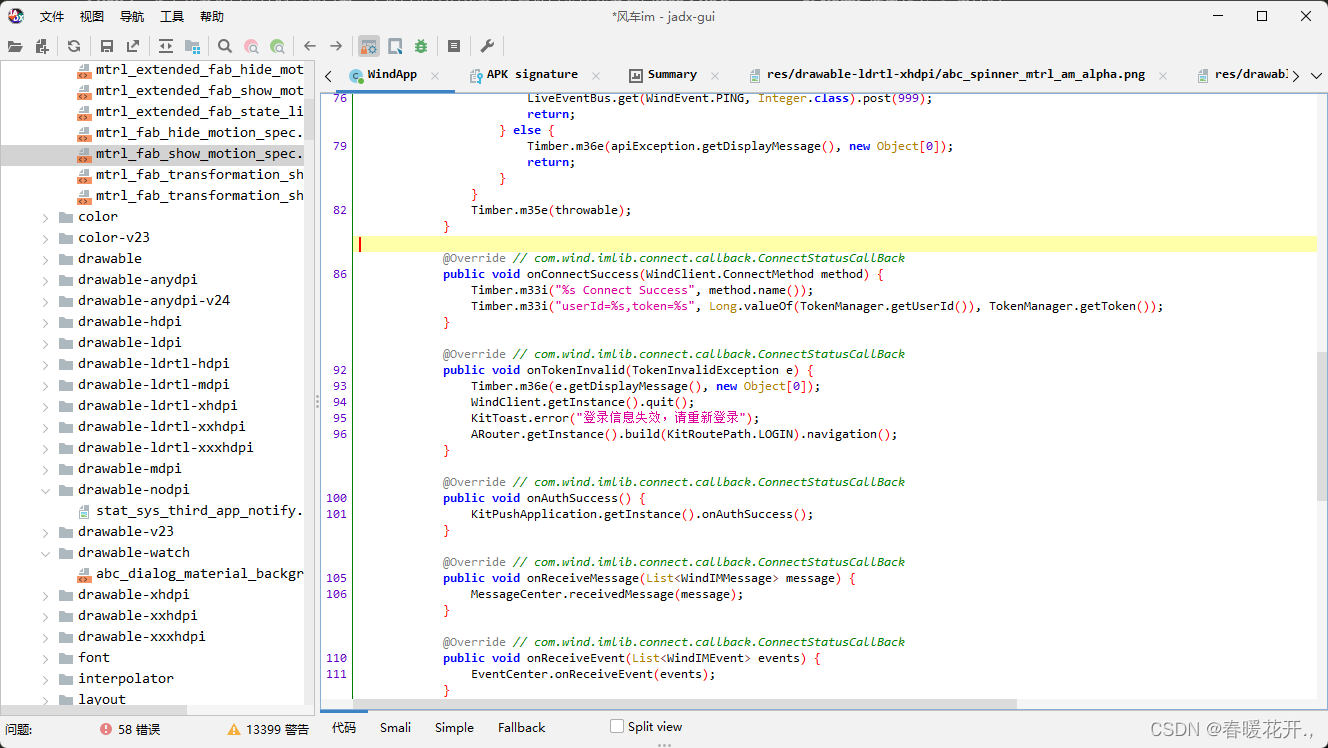

【Android】Android apk 逆向编译

链接:https://pan.baidu.com/s/14r5s9EJwQgeLK5cCb1Gq1Q 提取码:qdqt 解压jadx 在 lib 文件内找到 jadx-gui-1.4.7.jar 打开cmd 执行 :java -jar jadx-gui-1.4.7.jar示列:...

04-详解SpringBoot自动装配的原理,依赖属性配置的实现,源码分析

自动装配原理 依赖属性配置 提供Bean用来封装配置文件中对应属性的值 Data public class Cat {private String name;private Integer age; }Data public class Mouse {private String name;private Integer age; }cartoon:cat:name: "图多盖洛"age: 5mouse:name: …...

)

[100天算法】-不同路径 III(day 73)

题目描述 在二维网格 grid 上,有 4 种类型的方格:1 表示起始方格。且只有一个起始方格。 2 表示结束方格,且只有一个结束方格。 0 表示我们可以走过的空方格。 -1 表示我们无法跨越的障碍。 返回在四个方向(上、下、左、右&#…...

【c++随笔12】继承

【c随笔12】继承 一、继承1、继承的概念2、3种继承方式3、父类和子类对象赋值转换4、继承中的作用域——隐藏5、继承与友元6、继承与静态成员 二、继承和子类默认成员函数1、子类构造函数 二、子类拷贝构造函数3、子类的赋值重载4、子类析构函数 三、单继承、多继承、菱形继承1…...

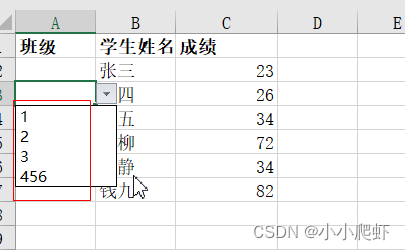

Excel中使用数据验证、OFFSET实现自动更新式下拉选项

在excel工作簿中,有两个Sheet工作表。 Sheet1: Sheet2(数据源表): 要实现Sheet1中的“班级”内容,从数据源Sheet2中获取并形成下拉选项,且Sheet2中“班级”内容更新后,Sheet1中“班…...

Android修行手册 - 可变参数中星号什么作用(冷知识)

点击跳转>Unity3D特效百例点击跳转>案例项目实战源码点击跳转>游戏脚本-辅助自动化点击跳转>Android控件全解手册点击跳转>Scratch编程案例点击跳转>软考全系列 👉关于作者 专注于Android/Unity和各种游戏开发技巧,以及各种资源分享&…...

[特殊字符] 智能合约中的数据是如何在区块链中保持一致的?

🧠 智能合约中的数据是如何在区块链中保持一致的? 为什么所有区块链节点都能得出相同结果?合约调用这么复杂,状态真能保持一致吗?本篇带你从底层视角理解“状态一致性”的真相。 一、智能合约的数据存储在哪里…...

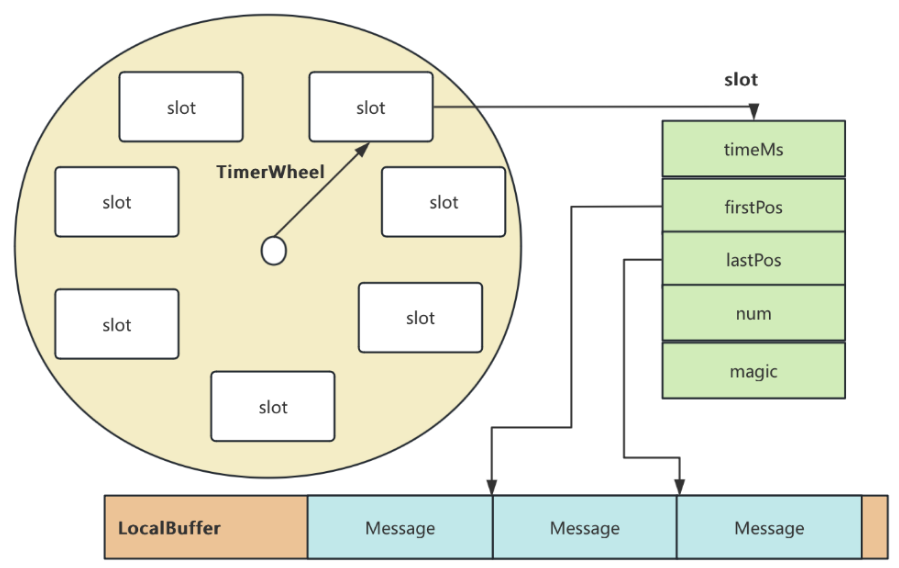

RocketMQ延迟消息机制

两种延迟消息 RocketMQ中提供了两种延迟消息机制 指定固定的延迟级别 通过在Message中设定一个MessageDelayLevel参数,对应18个预设的延迟级别指定时间点的延迟级别 通过在Message中设定一个DeliverTimeMS指定一个Long类型表示的具体时间点。到了时间点后…...

大型活动交通拥堵治理的视觉算法应用

大型活动下智慧交通的视觉分析应用 一、背景与挑战 大型活动(如演唱会、马拉松赛事、高考中考等)期间,城市交通面临瞬时人流车流激增、传统摄像头模糊、交通拥堵识别滞后等问题。以演唱会为例,暖城商圈曾因观众集中离场导致周边…...

基于服务器使用 apt 安装、配置 Nginx

🧾 一、查看可安装的 Nginx 版本 首先,你可以运行以下命令查看可用版本: apt-cache madison nginx-core输出示例: nginx-core | 1.18.0-6ubuntu14.6 | http://archive.ubuntu.com/ubuntu focal-updates/main amd64 Packages ng…...

JVM垃圾回收机制全解析

Java虚拟机(JVM)中的垃圾收集器(Garbage Collector,简称GC)是用于自动管理内存的机制。它负责识别和清除不再被程序使用的对象,从而释放内存空间,避免内存泄漏和内存溢出等问题。垃圾收集器在Ja…...

)

postgresql|数据库|只读用户的创建和删除(备忘)

CREATE USER read_only WITH PASSWORD 密码 -- 连接到xxx数据库 \c xxx -- 授予对xxx数据库的只读权限 GRANT CONNECT ON DATABASE xxx TO read_only; GRANT USAGE ON SCHEMA public TO read_only; GRANT SELECT ON ALL TABLES IN SCHEMA public TO read_only; GRANT EXECUTE O…...

MODBUS TCP转CANopen 技术赋能高效协同作业

在现代工业自动化领域,MODBUS TCP和CANopen两种通讯协议因其稳定性和高效性被广泛应用于各种设备和系统中。而随着科技的不断进步,这两种通讯协议也正在被逐步融合,形成了一种新型的通讯方式——开疆智能MODBUS TCP转CANopen网关KJ-TCPC-CANP…...

Nginx server_name 配置说明

Nginx 是一个高性能的反向代理和负载均衡服务器,其核心配置之一是 server 块中的 server_name 指令。server_name 决定了 Nginx 如何根据客户端请求的 Host 头匹配对应的虚拟主机(Virtual Host)。 1. 简介 Nginx 使用 server_name 指令来确定…...

Spring Boot+Neo4j知识图谱实战:3步搭建智能关系网络!

一、引言 在数据驱动的背景下,知识图谱凭借其高效的信息组织能力,正逐步成为各行业应用的关键技术。本文聚焦 Spring Boot与Neo4j图数据库的技术结合,探讨知识图谱开发的实现细节,帮助读者掌握该技术栈在实际项目中的落地方法。 …...

)

相机Camera日志分析之三十一:高通Camx HAL十种流程基础分析关键字汇总(后续持续更新中)

【关注我,后续持续新增专题博文,谢谢!!!】 上一篇我们讲了:有对最普通的场景进行各个日志注释讲解,但相机场景太多,日志差异也巨大。后面将展示各种场景下的日志。 通过notepad++打开场景下的日志,通过下列分类关键字搜索,即可清晰的分析不同场景的相机运行流程差异…...