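Grafana Promtail Configuration Explained

Since most projects today are deployed on Kubernetes, this article covers only the Kubernetes-related parts of the Promtail configuration and is meant as a reference. For everything else, consult the official documentation.

Printing the configuration at runtime

-print-config-stderr: when Promtail is run directly (for example with ./promtail), this flag quickly dumps the loaded configuration to stderr.

-log-config-reverse-order: logs the configuration entries in reverse order, so that they read correctly from top to bottom when viewed in Grafana.

Configuration file reference

-config.file: specifies the configuration file to load.

-config.expand-env=true: allows the configuration file to reference environment variables, written as ${VAR}, where VAR is the name of the environment variable. Each reference is replaced with the value of the variable at startup. The replacement is case-sensitive and happens before the YAML file is parsed. References to undefined variables are replaced with empty strings unless you specify a default value or custom error text. To specify a default value, use ${VAR:-default_value}.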
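As a minimal sketch of environment variable expansion, the fragment below assumes two hypothetical variables, LOKI_HOST and CLUSTER_NAME, that are not part of Promtail itself. Both carry a default value, so the file should still parse when they are unset.

```yaml
# Hypothetical variable names: LOKI_HOST and CLUSTER_NAME are illustrative only.
clients:
  - url: http://${LOKI_HOST:-localhost}:3100/loki/api/v1/push
    external_labels:
      # Falls back to "dev" when CLUSTER_NAME is not defined.
      cluster: ${CLUSTER_NAME:-dev}
```

Expansion only takes effect when Promtail is started with -config.expand-env=true alongside -config.file.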

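Placeholders

- <boolean>: either true or false

- <int>: any integer matching the regular expression [1-9]+[0-9]*

- <duration>: a duration matching the regular expression [0-9]+(ms|[smhdwy])

- <labelname>: a string matching the regular expression [a-zA-Z_][a-zA-Z0-9_]*

- <labelvalue>: a string of Unicode characters

- <filename>: a valid path, either absolute or relative to the current working directory

- <host>: a string consisting of a hostname or IP, optionally followed by a port number

- <string>: a string

- <secret>: a string that represents a secret, such as a password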

config.yml

# Configures global settings which impact all targets.
[global: <global_config>]

# Configures the server for Promtail.
[server: <server_config>]

# Describes how Promtail connects to multiple instances
# of Grafana Loki, sending logs to each.
# WARNING: If one of the remote Loki servers fails to respond or responds
# with any error which is retryable, this will impact sending logs to any
# other configured remote Loki servers. Sending is done on a single thread!
# It is generally recommended to run multiple Promtail clients in parallel
# if you want to send to multiple remote Loki instances.
clients:
  - [<client_config>]

# Describes how to save read file offsets to disk
[positions: <position_config>]

scrape_configs:
  - [<scrape_config>]

# Configures global limits for this instance of Promtail
[limits_config: <limits_config>]

# Configures how targets are watched
[target_config: <target_config>]

# Other Promtail options
[options: <options_config>]

# Configures tracing support
[tracing: <tracing_config>]
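To show how the top-level blocks fit together, here is a minimal sketch of a complete config.yml. The host name loki, the port numbers, the job name, and the file paths are assumptions chosen for illustration, not values taken from this article.

```yaml
server:
  http_listen_port: 9080   # assumed port; the documented default is 80
  grpc_listen_port: 0      # 0 means a random port

positions:
  filename: /tmp/positions.yaml   # assumed location of the offsets file

clients:
  # "loki" is a hypothetical host name for the Loki instance.
  - url: http://loki:3100/loki/api/v1/push

scrape_configs:
  - job_name: system
    static_configs:
      - targets:
          - localhost
        labels:
          job: varlogs
          __path__: /var/log/*.log
```

Blocks that are left out, such as global, limits_config, and tracing, fall back to their defaults.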

global

# Configure how frequently log files from disk get polled for changes.
[file_watch_config: <file_watch_config>]

file_watch_config

# Minimum frequency to poll for files. Any time file changes are detected, the
# poll frequency gets reset to this duration.
[min_poll_frequency: <duration> | default = "250ms"]

# Maximum frequency to poll for files. Any time no file changes are detected,
# the poll frequency doubles in value up to the maximum duration specified by
# this value.
#
# The default is set to the same as min_poll_frequency.
[max_poll_frequency: <duration> | default = "250ms"]
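The backoff between the two settings can be sketched as follows. The 2s upper bound is an assumed value chosen for illustration.

```yaml
global:
  file_watch_config:
    # Polling restarts at this interval whenever a file change is detected.
    min_poll_frequency: 250ms
    # While nothing changes the interval doubles: 250ms, 500ms, 1s, 2s,
    # and then stays at 2s (assumed value) until the next change.
    max_poll_frequency: 2s
```

With the defaults, both values are 250ms, so the interval never grows.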

server

# Disable the HTTP and GRPC server.
[disable: <boolean> | default = false]

# Enable the /debug/fgprof and /debug/pprof endpoints for profiling.
[profiling_enabled: <boolean> | default = false]

# HTTP server listen host
[http_listen_address: <string>]

# HTTP server listen port (0 means random port)
[http_listen_port: <int> | default = 80]

# gRPC server listen host
[grpc_listen_address: <string>]

# gRPC server listen port (0 means random port)
[grpc_listen_port: <int> | default = 9095]

# Register instrumentation handlers (/metrics, etc.)
[register_instrumentation: <boolean> | default = true]

# Timeout for graceful shutdowns
[graceful_shutdown_timeout: <duration> | default = 30s]

# Read timeout for HTTP server
[http_server_read_timeout: <duration> | default = 30s]

# Write timeout for HTTP server
[http_server_write_timeout: <duration> | default = 30s]

# Idle timeout for HTTP server
[http_server_idle_timeout: <duration> | default = 120s]

# Max gRPC message size that can be received
[grpc_server_max_recv_msg_size: <int> | default = 4194304]

# Max gRPC message size that can be sent
[grpc_server_max_send_msg_size: <int> | default = 4194304]

# Limit on the number of concurrent streams for gRPC calls (0 = unlimited)
[grpc_server_max_concurrent_streams: <int> | default = 100]

# Log only messages with the given severity or above. Supported values [debug,
# info, warn, error]
[log_level: <string> | default = "info"]

# Base path to serve all API routes from (e.g., /v1/).
[http_path_prefix: <string>]

# Target managers check flag for Promtail readiness, if set to false the check is ignored
[health_check_target: <boolean> | default = true]

# Enable reload via HTTP request.
[enable_runtime_reload: <boolean> | default = false]
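A minimal sketch of a server block, assuming Promtail should listen on all interfaces on port 9080. Both values are illustrative choices rather than defaults.

```yaml
server:
  http_listen_address: 0.0.0.0   # assumed: listen on all interfaces
  http_listen_port: 9080         # assumed port; the default is 80
  grpc_listen_port: 0            # 0 picks a random port
  log_level: info
  # Allows the configuration to be reloaded through an HTTP request.
  enable_runtime_reload: true
```

Options that are omitted, such as the timeouts and gRPC message sizes, keep the defaults listed above.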

clients

Configures how Promtail connects to instances of Loki.

# The URL where Loki is listening, denoted in Loki as http_listen_address and
# http_listen_port. If Loki is running in microservices mode, this is the HTTP
# URL for the Distributor. Path to the push API needs to be included.
# Example: http://example.com:3100/loki/api/v1/push
url: <string>

# Custom HTTP headers to be sent along with each push request.
# Be aware that headers that are set by Promtail itself (e.g. X-Scope-OrgID) can't be overwritten.
headers:
  # Example: CF-Access-Client-Id: xxx
  [ <labelname>: <labelvalue> ... ]

# The tenant ID used by default to push logs to Loki. If omitted or empty
# it assumes Loki is running in single-tenant mode and no X-Scope-OrgID header
# is sent.
[tenant_id: <string>]

# Maximum amount of time to wait before sending a batch, even if that
# batch isn't full.
[batchwait: <duration> | default = 1s]

# Maximum batch size (in bytes) of logs to accumulate before sending
# the batch to Loki.
[batchsize: <int> | default = 1048576]

# If using basic auth, configures the username and password
# sent.
basic_auth:
  # The username to use for basic auth
  [username: <string>]
  # The password to use for basic auth
  [password: <string>]
  # The file containing the password for basic auth
  [password_file: <filename>]

# Optional OAuth 2.0 configuration
# Cannot be used at the same time as basic_auth or authorization
oauth2:
  # Client id and secret for oauth2
  [client_id: <string>]
  [client_secret: <secret>]
  # Read the client secret from a file
  # It is mutually exclusive with `client_secret`
  [client_secret_file: <filename>]
  # Optional scopes for the token request
  scopes:
    [ - <string> ... ]
  # The URL to fetch the token from
  token_url: <string>
  # Optional parameters to append to the token URL
  endpoint_params:
    [ <string>: <string> ... ]

# Bearer token to send to the server.
[bearer_token: <secret>]

# File containing bearer token to send to the server.
[bearer_token_file: <filename>]

# HTTP proxy server to use to connect to the server.
[proxy_url: <string>]

# If connecting to a TLS server, configures how the TLS
# authentication handshake will operate.
tls_config:
  # The CA file to use to verify the server
  [ca_file: <string>]
  # The cert file to send to the server for client auth
  [cert_file: <filename>]
  # The key file to send to the server for client auth
  [key_file: <filename>]
  # Validates that the server name in the server's certificate
  # is this value.
  [server_name: <string>]
  # If true, ignores the server certificate being signed by an
  # unknown CA.
  [insecure_skip_verify: <boolean> | default = false]

# Configures how to retry requests to Loki when a request
# fails.
# Default backoff schedule:
# 0.5s, 1s, 2s, 4s, 8s, 16s, 32s, 64s, 128s, 256s (4.267m)
# For a total time of 511.5s (8.5m) before logs are lost
backoff_config:
  # Initial backoff time between retries
  [min_period: <duration> | default = 500ms]
  # Maximum backoff time between retries
  [max_period: <duration> | default = 5m]
  # Maximum number of retries to do
  [max_retries: <int> | default = 10]

# Disable retries of batches that Loki responds to with a 429 status code (TooManyRequests). This reduces
# impacts on batches from other tenants, which could end up being delayed or dropped due to exponential backoff.
[drop_rate_limited_batches: <boolean> | default = false]

# Static labels to add to all logs being sent to Loki.
# Use map like {"foo": "bar"} to add a label foo with
# value bar.
# These can also be specified from command line:
# -client.external-labels=k1=v1,k2=v2
# (or --client.external-labels depending on your OS)
# labels supplied by the command line are applied
# to all clients configured in the `clients` section.
# NOTE: values defined in the config file will replace values
# defined on the command line for a given client if the
# label keys are the same.
external_labels:
  [ <labelname>: <labelvalue> ... ]

# Maximum time to wait for a server to respond to a request
[timeout: <duration> | default = 10s]
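A minimal sketch of a single client entry. The host name loki-gateway.example.internal, the tenant ID team-a, and the cluster label are hypothetical. The batching and backoff values simply restate the defaults, to show where they live in the file.

```yaml
clients:
  # Hypothetical Loki endpoint; the push API path must be included.
  - url: http://loki-gateway.example.internal:3100/loki/api/v1/push
    tenant_id: team-a        # hypothetical tenant; omit for single-tenant Loki
    batchwait: 1s
    batchsize: 1048576
    timeout: 10s
    backoff_config:
      # Retries start at min_period and back off exponentially, capped at
      # max_period, for at most max_retries attempts.
      min_period: 500ms
      max_period: 5m
      max_retries: 10
    external_labels:
      cluster: dev           # hypothetical static label added to every log line
```

Because sending is done on a single thread, listing several clients here means one slow Loki instance can delay delivery to the others.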

positions

The positions block configures where Promtail saves the file that records how far it has read into each log file. Promtail needs this file after a restart so that it can continue reading from where it left off.

# Location of positions file
[filename: <string> | default = "/var/log/positions.yaml"]

# How often to update the positions file
[sync_period: <duration> | default = 10s]

# Whether to ignore & later overwrite positions files that are corrupted
[ignore_invalid_yaml: <boolean> | default = false]
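A minimal sketch of a positions block. The path /run/promtail/positions.yaml is an assumption; on Kubernetes it would typically sit on a volume that survives pod restarts.

```yaml
positions:
  filename: /run/promtail/positions.yaml   # assumed path on a persistent volume
  sync_period: 10s
  # Start from a fresh file instead of failing if the stored YAML is corrupted.
  ignore_invalid_yaml: true
```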

scrape_configs

The scrape_configs block configures how Promtail scrapes logs from a series of targets using a specified discovery method. Promtail uses the same scrape_configs as Prometheus, so if you already run a Prometheus instance the configuration will look very similar.

# Name to identify this scrape config in the Promtail UI.
job_name: <string>

# Describes how to transform logs from targets.
[pipeline_stages: <pipeline_stages>]

# Defines decompression behavior for the given scrape target.
decompression:
  # Whether decompression should be tried or not.
  [enabled: <boolean> | default = false]
  # Initial delay to wait before starting the decompression.
  # Especially useful in scenarios where compressed files are found before the compression is finished.
  [initial_delay: <duration> | default = 0s]
  # Compression format. Supported formats are: 'gz', 'bz2' and 'z'.
  [format: <string> | default = ""]

# Describes how to scrape logs from the journal.
[journal: <journal_config>]

# Describes from which encoding a scraped file should be converted.
[encoding: <iana_encoding_name>]

# Describes how to receive logs from syslog.
[syslog: <syslog_config>]

# Describes how to receive logs via the Loki push API, (e.g. from other Promtails or the Docker Logging Driver)
[loki_push_api: <loki_push_api_config>]

# Describes how to scrape logs from the Windows event logs.
[windows_events: <windows_events_config>]

# Configuration describing how to pull/receive Google Cloud Platform (GCP) logs.
[gcplog: <gcplog_config>]

# Configuration describing how to get Azure Event Hubs messages.
[azure_event_hub: <azure_event_hub_config>]

# Describes how to fetch logs from Kafka via a Consumer group.
[kafka: <kafka_config>]

# Describes how to receive logs from gelf client.
[gelf: <gelf_config>]

# Configuration describing how to pull logs from Cloudflare.
[cloudflare: <cloudflare>]

# Configuration describing how to pull logs from a Heroku LogPlex drain.
[heroku_drain: <heroku_drain>]

# Describes how to relabel targets to determine if they should
# be processed.
relabel_configs:
  - [<relabel_config>]

# Static targets to scrape.
static_configs:
  - [<static_config>]

# Files containing targets to scrape.
file_sd_configs:
  - [<file_sd_configs>]

# Describes how to discover Kubernetes services running on the
# same host.
kubernetes_sd_configs:
  - [<kubernetes_sd_config>]

# Describes how to use the Consul Catalog API to discover services registered with the
# consul cluster.
consul_sd_configs:
  [ - <consul_sd_config> ... ]

# Describes how to use the Consul Agent API to discover services registered with the consul agent
# running on the same host as Promtail.
consulagent_sd_configs:
  [ - <consulagent_sd_config> ... ]

# Describes how to use the Docker daemon API to discover containers running on
# the same host as Promtail.
docker_sd_configs:
  [ - <docker_sd_config> ... ]
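As one illustration of the options above, this sketch enables decompression for rotated gzip files. The job name, the archive path, and the 10s delay are assumptions.

```yaml
scrape_configs:
  - job_name: archived-logs          # hypothetical job name
    decompression:
      enabled: true
      # Wait before reading, in case the file is still being compressed.
      initial_delay: 10s
      format: gz
    static_configs:
      - targets:
          - localhost
        labels:
          job: archived
          __path__: /var/log/archive/*.gz   # assumed location of rotated logs
```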

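pipeline_stages

Pipeline stages are used to transform log entries and their labels. The pipeline is executed after the discovery process finishes. The pipeline_stages object consists of a list of stages which correspond to the items listed below.

In most cases, you extract data from logs with the regex or json stages. The extracted data is transformed into a temporary map object. The data can then be used by Promtail, for example as values for labels or as the output. Additionally, any stage other than docker and cri can access the extracted data.

- [<docker> | <cri> | <regex> | <json> | <template> | <match> | <timestamp> | <output> | <labels> | <metrics> | <tenant> | <replace>]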

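docker

The docker stage parses the contents of logs from Docker containers, and is defined by name with an empty object:

docker: {}

The docker stage will match and parse log lines of this format:

`{"log":"level=info ts=2019-04-30T02:12:41.844179Z caller=filetargetmanager.go:180 msg=\"Adding target\"\n","stream":"stderr","time":"2019-04-30T02:12:41.8443515Z"}`

It automatically extracts time into the log's timestamp, stream into a label, and the log field into the output. This is very helpful because Docker wraps application logs in this way, and unwrapping them lets further pipeline stages work on the log content only.

The docker stage is just a convenience wrapper for this definition:

- json:
    expressions:
      output: log
      stream: stream
      timestamp: time
- labels:
    stream:
- timestamp:
    source: timestamp
    format: RFC3339Nano
- output:
    source: output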

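CRI

The cri stage parses the contents of logs from CRI containers, and is defined by name with an empty object:

cri: {}

The cri stage will match and parse log lines of this format:

2019-01-01T01:00:00.000000001Z stderr P some log message

The time is extracted as the log timestamp, stream is extracted as a label, and the remaining message is placed in the output.

The cri stage is a convenience wrapper for this definition:

- regex:
    expression: "^(?s)(?P<time>\\S+?) (?P<stream>stdout|stderr) (?P<flags>\\S+?) (?P<content>.*)$"
- labels:
    stream:
- timestamp:
    source: time
    format: RFC3339Nano
- output:
    source: content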

regex

The regex stage extracts data from a log line using a regular expression.

regex:
  # The RE2 regular expression. Each capture group must be named.
  expression: <string>
  # Name from extracted data to parse. If empty, uses the log message.
  [source: <string>]
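A minimal sketch of the regex stage, assuming log lines that start with a level word followed by the message, such as "ERROR disk full". The group names level and msg are illustrative.

```yaml
pipeline_stages:
  - regex:
      # Every capture group is named, as RE2 expressions in this stage require.
      expression: '^(?P<level>\w+) (?P<msg>.*)$'
  # Promote only the low-cardinality "level" value to a label.
  - labels:
      level:
```

The msg value stays in the extracted map and is not sent as a label unless a later stage uses it.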

json

The json stage parses a log line as JSON and uses JMESPath expressions to extract data from it.

json:
  # Set of key/value pairs of JMESPath expressions. The key will be
  # the key in the extracted data while the expression will be the value,
  # evaluated as a JMESPath from the source data.
  expressions:
    [ <string>: <string> ... ]
  # Name from extracted data to parse. If empty, uses the log message.
  [source: <string>]
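A minimal sketch of the json stage, assuming log lines shaped like {"level":"info","request":{"user":"alice"}}. The field names are hypothetical.

```yaml
pipeline_stages:
  - json:
      expressions:
        # key in the extracted map: JMESPath expression evaluated on the line
        level: level
        user: request.user
  - labels:
      level:
```

Here request.user is a JMESPath sub-expression that reaches into the nested object.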

template

The template stage manipulates values using Go's text/template language.

template:
  # Name from extracted data to parse. If key in extract data doesn't exist, an
  # entry for it will be created.
  source: <string>
  # Go template string to use. In additional to normal template
  # functions, ToLower, ToUpper, Replace, Trim, TrimLeft, TrimRight,
  # TrimPrefix, TrimSuffix, and TrimSpace are available as functions.
  template: <string>

For example:

template:
  source: level
  template: '{{ if eq .Value "WARN" }}{{ Replace .Value "WARN" "OK" -1 }}{{ else }}{{ .Value }}{{ end }}'

match

The match stage conditionally executes a set of stages when a log entry matches a configurable LogQL stream selector.

match:
  # LogQL stream selector.
  selector: <string>
  # Names the pipeline. When defined, creates an additional label in
  # the pipeline_duration_seconds histogram, where the value is
  # concatenated with job_name using an underscore.
  [pipeline_name: <string>]
  # Nested set of pipeline stages only if the selector
  # matches the labels of the log entries:
  stages:
    - [<docker> | <cri> | <regex> | <json> | <template> | <match> | <timestamp> | <output> | <labels> | <metrics>]
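A minimal sketch of a conditional pipeline, assuming streams labeled app="nginx" whose lines look like "GET /index.html 200". The label, the pipeline name, and the line format are assumptions.

```yaml
pipeline_stages:
  - match:
      # Only entries whose labels satisfy this LogQL stream selector
      # run through the nested stages.
      selector: '{app="nginx"}'
      pipeline_name: nginx
      stages:
        - regex:
            expression: '^(?P<method>\S+) (?P<path>\S+) (?P<status>\d{3})$'
        - labels:
            status:
```

Entries from other applications skip the nested stages and continue with whatever follows the match stage.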

timestamp

The timestamp stage parses data from the extracted map and overrides the final time value of the log that is stored by Loki. If this stage is not present, Promtail associates the timestamp of the log entry with the time at which the entry was read.

timestamp:
  # Name from extracted data to use for the timestamp.
  source: <string>
  # Determines how to parse the time string. Can use
  # pre-defined formats by name: [ANSIC UnixDate RubyDate RFC822
  # RFC822Z RFC850 RFC1123 RFC1123Z RFC3339 RFC3339Nano Unix
  # UnixMs UnixUs UnixNs].
  format: <string>
  # IANA Timezone Database string.
  [location: <string>]
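A minimal sketch of the timestamp stage, assuming JSON log lines that carry an RFC 3339 timestamp in a field named time. The field name and the extracted key ts are illustrative.

```yaml
pipeline_stages:
  - json:
      expressions:
        ts: time          # copy the JSON "time" field into the extracted map
  - timestamp:
      source: ts
      format: RFC3339Nano
```

Without the timestamp stage, the entry would be stored with the time at which Promtail read the line.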

output

The output stage takes data from the extracted map and sets the contents of the log entry that will be stored by Loki.

output:
  # Name from extracted data to use for the log entry.
  source: <string>

labels

The labels stage takes data from the extracted map and sets additional labels on the log entry that will be sent to Loki.

labels:
  # Key is REQUIRED and the name for the label that will be created.
  # Value is optional and will be the name from extracted data whose value
  # will be used for the value of the label. If empty, the value will be
  # inferred to be the same as the key.
  [ <string>: [<string>] ... ]

metrics

The metrics stage allows metrics to be defined from the extracted data.

# A map where the key is the name of the metric and the value is a specific
# metric type.
metrics:
  [<string>: [ <counter> | <gauge> | <histogram> ] ...]

counter

Defines a counter metric whose value only goes up.

# The metric type. Must be Counter.
type: Counter

# Describes the metric.
[description: <string>]

# Key from the extracted data map to use for the metric,
# defaulting to the metric's name if not present.
[source: <string>]

config:
  # Filters down source data and only changes the metric
  # if the targeted value exactly matches the provided string.
  # If not present, all data will match.
  [value: <string>]
  # Must be either "inc" or "add" (case insensitive). If
  # inc is chosen, the metric value will increase by 1 for each
  # log line received that passed the filter. If add is chosen,
  # the extracted value must be convertible to a positive float
  # and its value will be added to the metric.
  action: <string>
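A minimal sketch of a counter, assuming log lines that begin with a level word. The metric name error_lines_total and the regex are hypothetical.

```yaml
pipeline_stages:
  - regex:
      expression: '^(?P<level>\w+) '
  - metrics:
      error_lines_total:          # hypothetical metric name
        type: Counter
        description: "number of log lines whose level is ERROR"
        source: level
        config:
          # Only lines whose extracted level is exactly ERROR are counted.
          value: ERROR
          action: inc
```

Using add instead of inc would require the extracted value itself to be a positive number.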

gauge

Defines a gauge metric whose value can go up or down.

# The metric type. Must be Gauge.
type: Gauge

# Describes the metric.
[description: <string>]

# Key from the extracted data map to use for the metric,
# defaulting to the metric's name if not present.
[source: <string>]

config:
  # Filters down source data and only changes the metric
  # if the targeted value exactly matches the provided string.
  # If not present, all data will match.
  [value: <string>]
  # Must be either "set", "inc", "dec", "add", or "sub". If
  # add, set, or sub is chosen, the extracted value must be
  # convertible to a positive float. inc and dec will increment
  # or decrement the metric's value by 1 respectively.
  action: <string>

histogram

Defines a histogram metric whose values are sorted into buckets.

# The metric type. Must be Histogram.
type: Histogram

# Describes the metric.
[description: <string>]

# Key from the extracted data map to use for the metric,
# defaulting to the metric's name if not present.
[source: <string>]

config:
  # Filters down source data and only changes the metric
  # if the targeted value exactly matches the provided string.
  # If not present, all data will match.
  [value: <string>]
  # Must be either "inc" or "add" (case insensitive). If
  # inc is chosen, the metric value will increase by 1 for each
  # log line received that passed the filter. If add is chosen,
  # the extracted value must be convertible to a positive float
  # and its value will be added to the metric.
  action: <string>
  # Holds all the numbers in which to bucket the metric.
  buckets:
    - <int>

tenant

The tenant stage is an action stage that sets the tenant ID for the log entry, picking it from a field in the extracted data map.

tenant:
  # Either label, source or value config option is required, but not all (they
  # are mutually exclusive).

  # Name from labels to whose value should be set as tenant ID.
  [ label: <string> ]
  # Name from extracted data to whose value should be set as tenant ID.
  [ source: <string> ]
  # Value to use to set the tenant ID when this stage is executed. Useful
  # when this stage is included within a conditional pipeline with "match".
  [ value: <string> ]
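A minimal sketch of the tenant stage, assuming JSON log lines with a customer_id field that should decide which Loki tenant receives the entry. The field name is hypothetical.

```yaml
pipeline_stages:
  - json:
      expressions:
        customer_id: customer_id
  # Use the extracted value as the tenant ID for this entry.
  # Only one of label, source, or value may be set.
  - tenant:
      source: customer_id
```

Inside a match stage, the value option could instead pin a fixed tenant for every entry that matches the selector.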

replace

The replace stage is a parsing stage that parses a log line using a regular expression and replaces parts of the log line.

replace:
  # The RE2 regular expression. Each named capture group will be added to extracted.
  # Each capture group and named capture group will be replaced with the value given in
  # `replace`
  expression: <string>
  # Name from extracted data to parse. If empty, uses the log message.
  # The replaced value will be assigned back to source key
  [source: <string>]
  # Value to which the captured group will be replaced. The captured group or the named
  # captured group will be replaced with this value and the log line will be replaced with
  # new replaced values. An empty value will remove the captured group from the log line.
  [replace: <string>]
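A minimal sketch of the replace stage used for masking, assuming log lines that contain a password=<value> pair. The line format is an assumption.

```yaml
pipeline_stages:
  - replace:
      # Only the captured group (the password value) is replaced,
      # not the whole match.
      expression: 'password=(\S+)'
      replace: '****'
```

Under these assumptions, a line such as "user=alice password=hunter2" would be expected to reach Loki as "user=alice password=****".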

static_configs

A static_configs block allows specifying a list of targets and a common label set for them. It is the canonical way to specify static targets in a scrape config.

# Configures the discovery to look on the current machine.
# This is required by the prometheus service discovery code but doesn't
# really apply to Promtail which can ONLY look at files on the local machine
# As such it should only have the value of localhost, OR it can be excluded
# entirely and a default value of localhost will be applied by Promtail.
targets:
  - localhost

# Defines a file to scrape and an optional set of additional labels to apply to
# all streams defined by the files from __path__.
labels:
  # The path to load logs from. Can use glob patterns (e.g., /var/log/*.log).
  __path__: <string>
  # Used to exclude files from being loaded. Can also use glob patterns.
  __path_exclude__: <string>
  # Additional labels to assign to the logs
  [ <labelname>: <labelvalue> ... ]
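A minimal sketch of a static target, assuming nginx logs under /var/log/nginx with rotated files compressed as .gz. The job name and paths are illustrative.

```yaml
scrape_configs:
  - job_name: nginx
    static_configs:
      - targets:
          - localhost                 # the only meaningful value for Promtail
        labels:
          job: nginx
          # Tail everything in the directory ...
          __path__: /var/log/nginx/*
          # ... except rotated, compressed files.
          __path_exclude__: /var/log/nginx/*.gz
```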

kubernetes_sd_config

Kubernetes SD configurations allow retrieving scrape targets from Kubernetes’ REST API and always staying synchronized with the cluster state.

One of the following role types can be configured to discover targets:

node

The node role discovers one target per cluster node with the address defaulting to the Kubelet’s HTTP port.

The target address defaults to the first existing address of the Kubernetes node object in the address type order of NodeInternalIP, NodeExternalIP, NodeLegacyHostIP, and NodeHostName.

Available meta labels:

- __meta_kubernetes_node_name: The name of the node object.

- __meta_kubernetes_node_label_<labelname>: Each label from the node object.

- __meta_kubernetes_node_labelpresent_<labelname>: true for each label from the node object.

- __meta_kubernetes_node_annotation_<annotationname>: Each annotation from the node object.

- __meta_kubernetes_node_annotationpresent_<annotationname>: true for each annotation from the node object.

- __meta_kubernetes_node_address_<address_type>: The first address for each node address type, if it exists.

In addition, the instance label for the node will be set to the node name as retrieved from the API server.

service

The service role discovers a target for each service port of each service. This is generally useful for blackbox monitoring of a service. The address will be set to the Kubernetes DNS name of the service and respective service port.

Available meta labels:

- __meta_kubernetes_namespace: The namespace of the service object.

- __meta_kubernetes_service_annotation_<annotationname>: Each annotation from the service object.

- __meta_kubernetes_service_annotationpresent_<annotationname>: true for each annotation of the service object.

- __meta_kubernetes_service_cluster_ip: The cluster IP address of the service. (Does not apply to services of type ExternalName)

- __meta_kubernetes_service_external_name: The DNS name of the service. (Applies to services of type ExternalName)

- __meta_kubernetes_service_label_<labelname>: Each label from the service object.

- __meta_kubernetes_service_labelpresent_<labelname>: true for each label of the service object.

- __meta_kubernetes_service_name: The name of the service object.

- __meta_kubernetes_service_port_name: Name of the service port for the target.

- __meta_kubernetes_service_port_protocol: Protocol of the service port for the target.

pod

The pod role discovers all pods and exposes their containers as targets. For each declared port of a container, a single target is generated. If a container has no specified ports, a port-free target per container is created for manually adding a port via relabeling.

Available meta labels:

- __meta_kubernetes_namespace: The namespace of the pod object.

- __meta_kubernetes_pod_name: The name of the pod object.

- __meta_kubernetes_pod_ip: The pod IP of the pod object.

- __meta_kubernetes_pod_label_<labelname>: Each label from the pod object.

- __meta_kubernetes_pod_labelpresent_<labelname>: true for each label from the pod object.

- __meta_kubernetes_pod_annotation_<annotationname>: Each annotation from the pod object.

- __meta_kubernetes_pod_annotationpresent_<annotationname>: true for each annotation from the pod object.

- __meta_kubernetes_pod_container_init: true if the container is an InitContainer

- __meta_kubernetes_pod_container_name: Name of the container the target address points to.

- __meta_kubernetes_pod_container_port_name: Name of the container port.

- __meta_kubernetes_pod_container_port_number: Number of the container port.

- __meta_kubernetes_pod_container_port_protocol: Protocol of the container port.

- __meta_kubernetes_pod_ready: Set to true or false for the pod’s ready state.

- __meta_kubernetes_pod_phase: Set to Pending, Running, Succeeded, Failed or Unknown in the lifecycle.

- __meta_kubernetes_pod_node_name: The name of the node the pod is scheduled onto.

- __meta_kubernetes_pod_host_ip: The current host IP of the pod object.

- __meta_kubernetes_pod_uid: The UID of the pod object.

- __meta_kubernetes_pod_controller_kind: Object kind of the pod controller.

- __meta_kubernetes_pod_controller_name: Name of the pod controller.

endpoints

The endpoints role discovers targets from listed endpoints of a service. For each endpoint address one target is discovered per port. If the endpoint is backed by a pod, all additional container ports of the pod, not bound to an endpoint port, are discovered as targets as well.

Available meta labels:

- __meta_kubernetes_namespace: The namespace of the endpoints object.

- __meta_kubernetes_endpoints_name: The names of the endpoints object.

- For all targets discovered directly from the endpoints list (those not additionally inferred from underlying pods), the following labels are attached:

  - __meta_kubernetes_endpoint_hostname: Hostname of the endpoint.

  - __meta_kubernetes_endpoint_node_name: Name of the node hosting the endpoint.

  - __meta_kubernetes_endpoint_ready: Set to true or false for the endpoint’s ready state.

  - __meta_kubernetes_endpoint_port_name: Name of the endpoint port.

  - __meta_kubernetes_endpoint_port_protocol: Protocol of the endpoint port.

  - __meta_kubernetes_endpoint_address_target_kind: Kind of the endpoint address target.

  - __meta_kubernetes_endpoint_address_target_name: Name of the endpoint address target.

- If the endpoints belong to a service, all labels of the role: service discovery are attached.

- For all targets backed by a pod, all labels of the role: pod discovery are attached.

ingress

The ingress role discovers a target for each path of each ingress. This is generally useful for blackbox monitoring of an ingress. The address will be set to the host specified in the ingress spec.

Available meta labels:

- __meta_kubernetes_namespace: The namespace of the ingress object.

- __meta_kubernetes_ingress_name: The name of the ingress object.

- __meta_kubernetes_ingress_label_<labelname>: Each label from the ingress object.

- __meta_kubernetes_ingress_labelpresent_<labelname>: true for each label from the ingress object.

- __meta_kubernetes_ingress_annotation_<annotationname>: Each annotation from the ingress object.

- __meta_kubernetes_ingress_annotationpresent_<annotationname>: true for each annotation from the ingress object.

- __meta_kubernetes_ingress_scheme: Protocol scheme of ingress, https if TLS config is set. Defaults to http.

- __meta_kubernetes_ingress_path: Path from ingress spec. Defaults to /.

See below for the configuration options for Kubernetes discovery:

# The information to access the Kubernetes API.

# The API server addresses. If left empty, Prometheus is assumed to run inside
# of the cluster and will discover API servers automatically and use the pod's
# CA certificate and bearer token file at /var/run/secrets/kubernetes.io/serviceaccount/.
[ api_server: <host> ]

# The Kubernetes role of entities that should be discovered.
role: <role>

# Optional authentication information used to authenticate to the API server.
# Note that `basic_auth`, `bearer_token` and `bearer_token_file` options are
# mutually exclusive.
# password and password_file are mutually exclusive.

# Optional HTTP basic authentication information.
basic_auth:
  [ username: <string> ]
  [ password: <secret> ]
  [ password_file: <string> ]

# Optional bearer token authentication information.
[ bearer_token: <secret> ]

# Optional bearer token file authentication information.
[ bearer_token_file: <filename> ]

# Optional proxy URL.
[ proxy_url: <string> ]

# TLS configuration.
tls_config:
  [ <tls_config> ]

# Optional namespace discovery. If omitted, all namespaces are used.
namespaces:
  names:
    [ - <string> ]

# Optional label and field selectors to limit the discovery process to a subset of available
# resources. See
# https://kubernetes.io/docs/concepts/overview/working-with-objects/field-selectors/
# and https://kubernetes.io/docs/concepts/overview/working-with-objects/labels/ to learn
# more about the possible filters that can be used. The endpoints role supports pod,
# service, and endpoint selectors. Roles only support selectors matching the role itself;
# for example, the node role can only contain node selectors.
# Note: When making decisions about using field/label selectors, make sure that this
# is the best approach. It will prevent Promtail from reusing single list/watch
# for all scrape configurations. This might result in a bigger load on the Kubernetes API,
# because for each selector combination, there will be additional LIST/WATCH.
# On the other hand, if you want to monitor a small subset of pods of a large cluster,
# we recommend using selectors. The decision on the use of selectors or not depends
# on the particular situation.
[ selectors:
  [ - role: <string>
    [ label: <string> ]
    [ field: <string> ] ]]

Where <role> must be endpoints, service, pod, node, or ingress.

See this example Prometheus configuration file for a detailed example of configuring Prometheus for Kubernetes.

You may wish to check out the 3rd party Prometheus Operator, which automates the Prometheus setup on top of Kubernetes.
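Putting the pod role, the meta labels, and the cri stage together, the following is a sketch of a typical scrape config for Promtail running as a DaemonSet. It assumes container logs are available under /var/log/pods on each node and that only a namespace named production is of interest. The job name, namespace, and target label names are illustrative, and the relabel_config fields (source_labels, separator, target_label, replacement) follow the Prometheus relabeling syntax, which this article does not cover in detail.

```yaml
scrape_configs:
  - job_name: kubernetes-pods          # hypothetical job name
    kubernetes_sd_configs:
      - role: pod
        namespaces:
          names:
            - production               # assumed namespace
    pipeline_stages:
      # Container runtimes that follow CRI write lines in the cri format.
      - cri: {}
    relabel_configs:
      # Let each Promtail instance keep only the pods on its own node.
      - source_labels: [__meta_kubernetes_pod_node_name]
        target_label: __host__
      # Copy selected discovery metadata into labels kept on the stream.
      - source_labels: [__meta_kubernetes_namespace]
        target_label: namespace
      - source_labels: [__meta_kubernetes_pod_name]
        target_label: pod
      - source_labels: [__meta_kubernetes_pod_container_name]
        target_label: container
      # Build the file path to tail from the pod UID and container name.
      - source_labels: [__meta_kubernetes_pod_uid, __meta_kubernetes_pod_container_name]
        separator: /
        target_label: __path__
        replacement: /var/log/pods/*$1/*.log
```

Labels that start with a double underscore, such as the __meta_kubernetes_* labels, are dropped after relabeling, which is why the useful ones are copied to plain label names first.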

Original source: https://grafana.com/docs/loki/latest/send-data/promtail/configuration/

相关文章:

Grafana Promtail 配置解析

由于目前项目一般都是部署在k8s上,因此这篇文章中的配置只摘录k8s相关的配置,仅供参考,其他的配置建议上官网查询。 运行时打印配置 -print-config-stderr 通过 ./promtail 直接运行Promtail时能够快速输出配置 -log-config-reverse-order 配…...

电脑DIY-主板参数

电脑主板参数 主板系列芯片组主板支持的CPU系列主板支持CPU的第几代主板的尺寸主板支持的内存主板是否支持专用WIFI模块插槽主板规格主板供电规格M.2插槽(固态硬盘插槽)规格USB接口规格质保方式 华硕TUF GAMING B650M-PLUS WIFI DDR5重炮手主板 华硕&…...

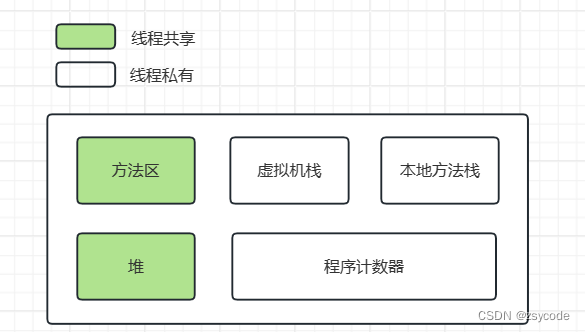

JVM知识总结(持续更新)

这里写目录标题 java内存区域程序计数器虚拟机栈本地方法栈堆方法区 java内存区域 Java 虚拟机在执行 Java 程序的过程中会把它管理的内存划分成若干个不同的数据区域: 程序计数器虚拟机栈本地方法栈堆方法区 程序计数器 记录下一条需要执行的虚拟机字节码指令…...

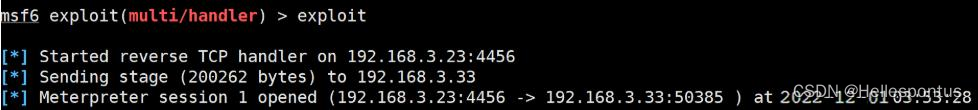

信息系统安全——基于 KALI 和 Metasploit 的渗透测试

实验 2 基于 KALI 和 Metasploit 的渗透测试 2.1 实验名称 《基于 KALI 和 Metasploit 的渗透测试》 2.2 实验目的 1 、熟悉渗透测试方法 2 、熟悉渗透测试工具 Kali 及 Metasploit 的使用 2.3 实验步骤及内容 1 、安装 Kali 系统 2 、选择 Kali 中 1-2 种攻击工具,…...

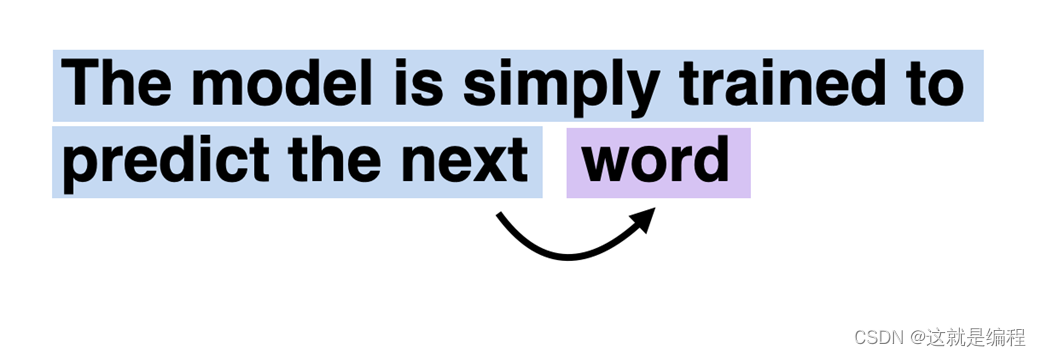

05. 深入理解 GPT 架构

在本章的前面,我们提到了类 GPT 模型、GPT-3 和 ChatGPT 等术语。现在让我们仔细看看一般的 GPT 架构。首先,GPT 代表生成式预训练转换器,最初是在以下论文中引入的: 通过生成式预训练提高语言理解 (2018) 作者:Radford 等人,来自 OpenAI,http://cdn.openai.com/rese…...

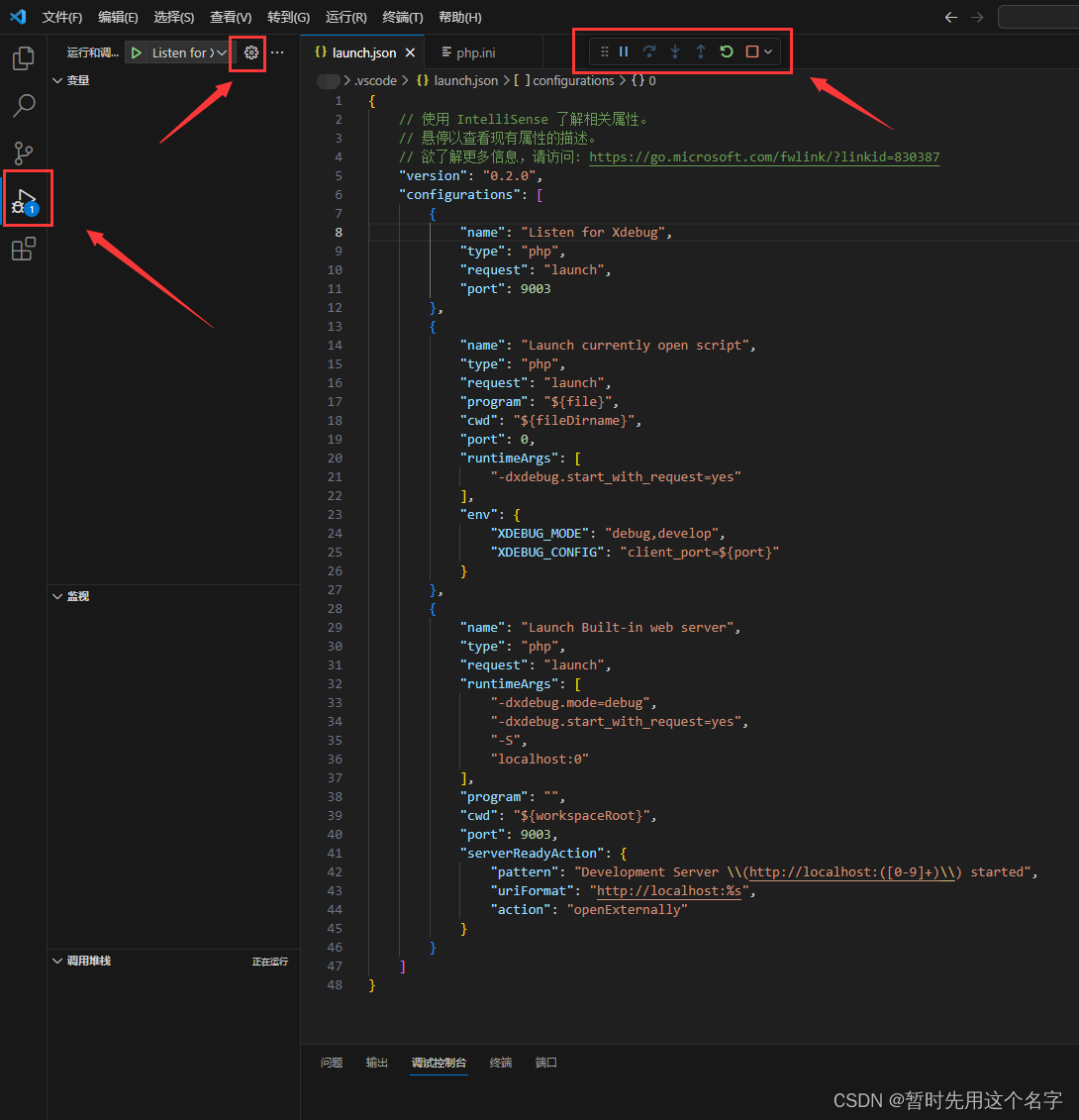

PHP开发日志 ━━ php8.3安装与使用组件Xdebug

今天开头写点历史: 二十年前流行asp,当时用vb整合常用函数库写了一个dll给asp调用,并在此基础上开发一套仿windows界面的后台管理系统;后来asp逐渐没落,于是在十多年前转投php,不久后用php写了一套mvc框架&…...

Python - 深夜数据结构与算法之 Two-Ended BFS

目录 一.引言 二.双向 BFS 简介 1.双向遍历示例 2.搜索模版回顾 三.经典算法实战 1.Word-Ladder [127] 2.Min-Gen-Mutation [433] 四.总结 一.引言 DFS、BFS 是常见的初级搜索方式,为了提高搜索效率,衍生了剪枝、双向 BFS 以及 A* 即启发式搜索…...

langchain-Agent-工具检索

有时会定义很多工具,而定义Agent的时候只想使用与问题相关的工具,这是可以通过向量数据库来检索相关的工具,传递给Agent # Define which tools the agent can use to answer user queries search SerpAPIWrapper() search_tool Tool(name …...

猫头虎分享:探索TypeScript的世界 — TS基础入门

博主猫头虎的技术世界 🌟 欢迎来到猫头虎的博客 — 探索技术的无限可能! 专栏链接: 🔗 精选专栏: 《面试题大全》 — 面试准备的宝典!《IDEA开发秘籍》 — 提升你的IDEA技能!《100天精通Golang》…...

Unity-生命周期函数

目录 生命周期函数是什么? 生命周期函数有哪些? Awake() OnEnable() Start() FixedUpdate() Update() Late Update() OnDisable() OnDestroy() Unity中生命周期函数支持继承多态吗? 生命周期函数是什么? 在Unity中&…...

SQL概述及SQL分类

SQL由IBM上世纪70年代开发出来,是使用关系模型的数据库应用型语言,与数据直接打交道。 SQL标准 SQL92,SQL99,他们分别代表了92年和99年颁布的SQL标准,我们今天使用的SQL语言依旧遵循这些标准。 SQL的分类 DDL:数据定…...

[VSCode] VSCode 常用快捷键

文章目录 VSCode 源代码编辑器VSCode 常用快捷键分类汇总01 编辑02 导航03 调试04 其他05 重构06 测试07 扩展08 选择09 搜索10 书签11 多光标12 代码片段13 其他 VSCode 源代码编辑器 官网:https://code.visualstudio.com/ 下载地址:https://code.visua…...

函数指针和回调函数 以及指针函数

函数指针(Function Pointer): 定义: 函数指针是指向函数的指针,它存储了函数的地址。函数的二制制代码存放在内存四区中的代码段,函数的地址它在内存中的开始地址。如果把函数的地址作为参数,就…...

京东年度数据报告-2023全年度游戏本十大热门品牌销量(销额)榜单

同笔记本市场类似,2023年度游戏本市场的整体销售也呈下滑态势。根据鲸参谋电商数据分析平台的相关数据显示,京东平台上游戏本的年度销量累计超过350万,同比下滑约6%;销售额将近270亿,同比下滑约11%。 鲸参谋综合了京东…...

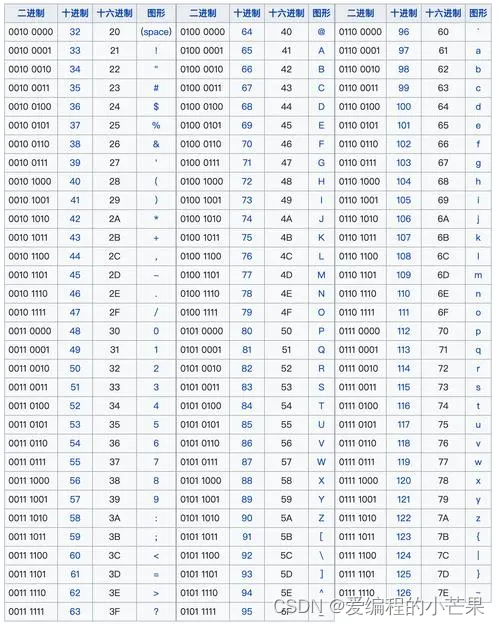

秒懂百科,C++如此简单丨第十二天:ASCLL码

目录 必看信息 Everyday English 📝ASCLL码是什么? 📝ASCLL码表 📝利用ASCLL码实现大写转小写 📝小试牛刀 总结 必看信息 ▶本篇文章由爱编程的小芒果原创,未经许可,严禁转载。 ▶本篇文…...

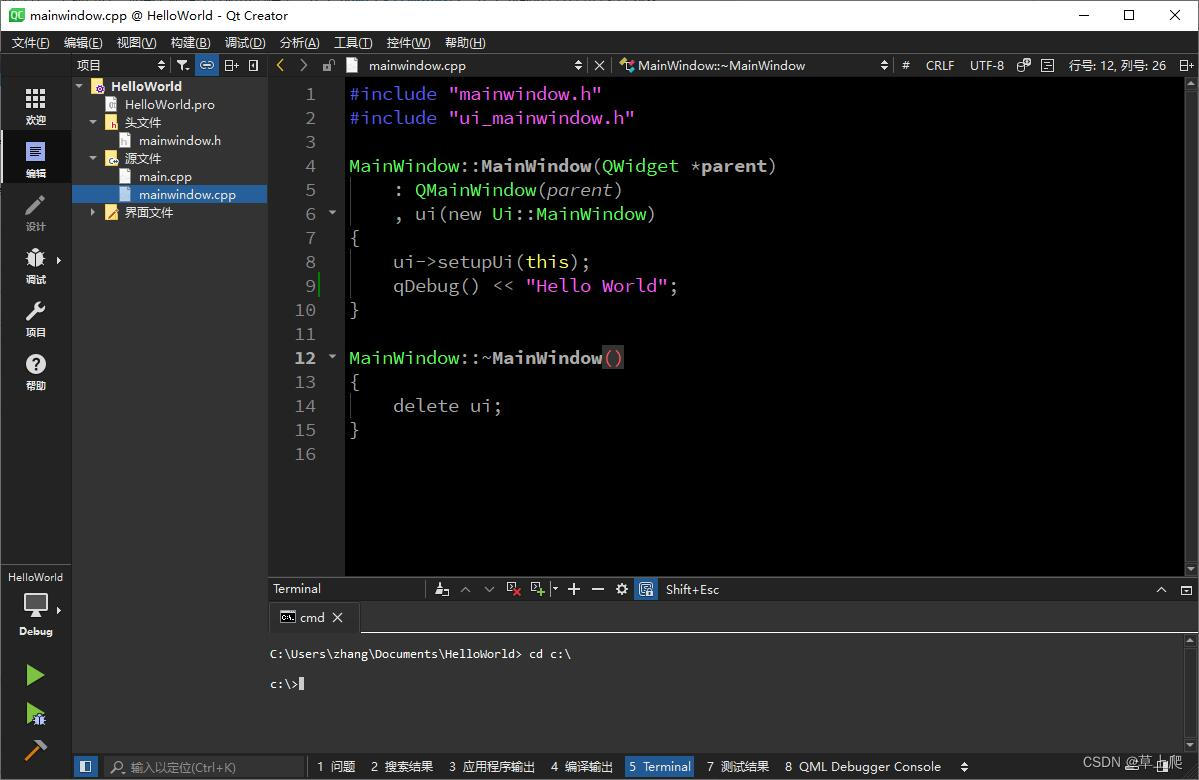

Qt6入门教程 4:Qt Creator常用技巧

在上一篇Qt6入门教程 3:创建Hello World项目中,通过创建一个Qt项目,对Qt Creator已经有了比较直观的认识,本文将介绍它的一些常用技巧。 Qt Creator启动后默认显示欢迎页面 创建项目已经用过了,打开项目也很简单&#…...

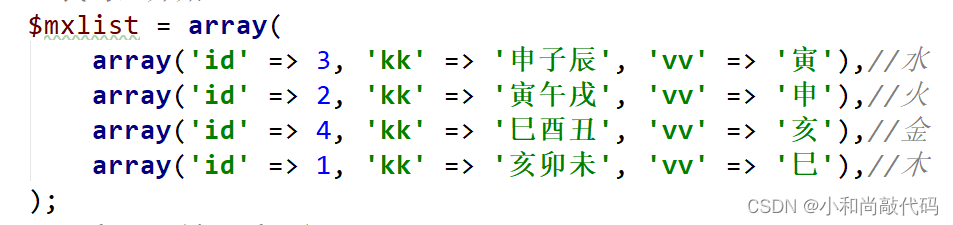

阴盘奇门八字排盘马星位置计算方法php代码

如下位置,马星的四个位置。 计算方法: 1。先根据出生年月日,计算得八字四柱。比如 2024年01月09日,四柱为 其中时柱地支为“申” 2。然后根据以下对应的数组,来找到id号,即马星位置。 根据下表来找到&am…...

vue3 使用 jsoneditor

vue3 使用 jsoneditor 在main.js中引入 样式文件 import jsoneditor/dist/jsoneditor.css复制代码放到文件中就能用了 jsoneditor.vue <template><div ref"jsonDom" style"width: 100%; height: 460px"></div> </template> <…...

若依前后端分离版使用mybatis-plus实践教程

1、根目录得pom加入依赖 <properties><mybatis-plus.version>3.5.1</mybatis-plus.version> </properties> <dependencies><!-- mp配置--><dependency><groupId>com.baomidou</groupId><artifactId>mybatis-plus…...

SpringBoot-Dubbo-Zookeeper

Apache Dubbo:https://cn.dubbo.apache.org/zh-cn/overview/home/ 依赖 <!--dubbo--> <dependency><groupId>org.apache.dubbo</groupId><artifactId>dubbo-spring-boot-starter</artifactId><version>2.7.3</versio…...

华为云AI开发平台ModelArts

华为云ModelArts:重塑AI开发流程的“智能引擎”与“创新加速器”! 在人工智能浪潮席卷全球的2025年,企业拥抱AI的意愿空前高涨,但技术门槛高、流程复杂、资源投入巨大的现实,却让许多创新构想止步于实验室。数据科学家…...

Docker 离线安装指南

参考文章 1、确认操作系统类型及内核版本 Docker依赖于Linux内核的一些特性,不同版本的Docker对内核版本有不同要求。例如,Docker 17.06及之后的版本通常需要Linux内核3.10及以上版本,Docker17.09及更高版本对应Linux内核4.9.x及更高版本。…...

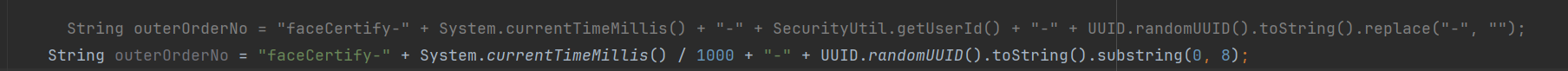

调用支付宝接口响应40004 SYSTEM_ERROR问题排查

在对接支付宝API的时候,遇到了一些问题,记录一下排查过程。 Body:{"datadigital_fincloud_generalsaas_face_certify_initialize_response":{"msg":"Business Failed","code":"40004","sub_msg…...

【Oracle APEX开发小技巧12】

有如下需求: 有一个问题反馈页面,要实现在apex页面展示能直观看到反馈时间超过7天未处理的数据,方便管理员及时处理反馈。 我的方法:直接将逻辑写在SQL中,这样可以直接在页面展示 完整代码: SELECTSF.FE…...

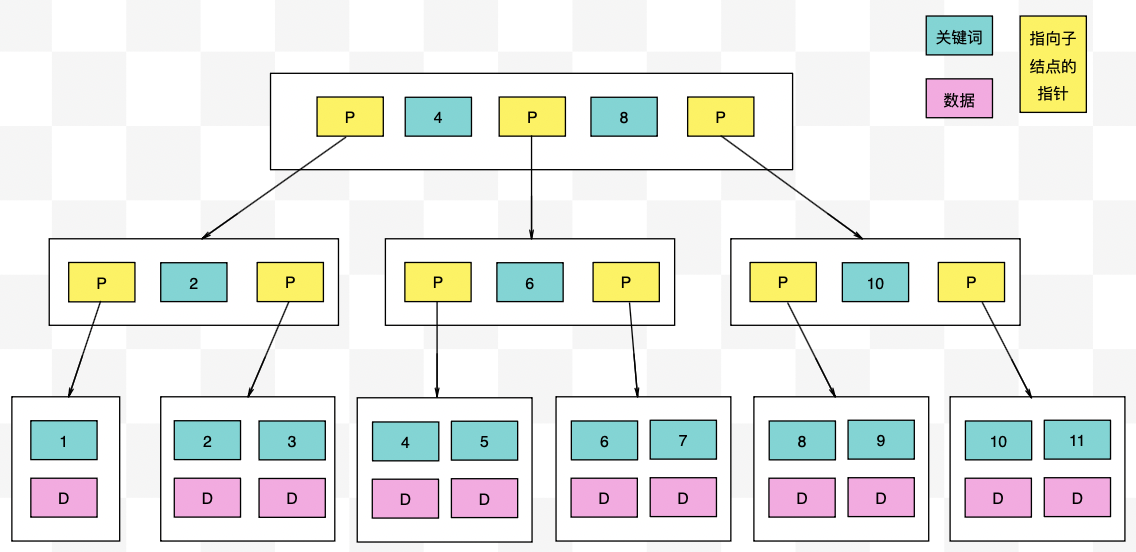

【力扣数据库知识手册笔记】索引

索引 索引的优缺点 优点1. 通过创建唯一性索引,可以保证数据库表中每一行数据的唯一性。2. 可以加快数据的检索速度(创建索引的主要原因)。3. 可以加速表和表之间的连接,实现数据的参考完整性。4. 可以在查询过程中,…...

关于iview组件中使用 table , 绑定序号分页后序号从1开始的解决方案

问题描述:iview使用table 中type: "index",分页之后 ,索引还是从1开始,试过绑定后台返回数据的id, 这种方法可行,就是后台返回数据的每个页面id都不完全是按照从1开始的升序,因此百度了下,找到了…...

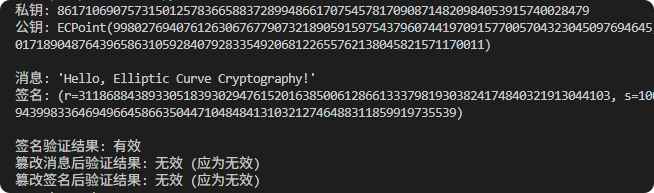

现代密码学 | 椭圆曲线密码学—附py代码

Elliptic Curve Cryptography 椭圆曲线密码学(ECC)是一种基于有限域上椭圆曲线数学特性的公钥加密技术。其核心原理涉及椭圆曲线的代数性质、离散对数问题以及有限域上的运算。 椭圆曲线密码学是多种数字签名算法的基础,例如椭圆曲线数字签…...

06 Deep learning神经网络编程基础 激活函数 --吴恩达

深度学习激活函数详解 一、核心作用 引入非线性:使神经网络可学习复杂模式控制输出范围:如Sigmoid将输出限制在(0,1)梯度传递:影响反向传播的稳定性二、常见类型及数学表达 Sigmoid σ ( x ) = 1 1 +...

用机器学习破解新能源领域的“弃风”难题

音乐发烧友深有体会,玩音乐的本质就是玩电网。火电声音偏暖,水电偏冷,风电偏空旷。至于太阳能发的电,则略显朦胧和单薄。 不知你是否有感觉,近两年家里的音响声音越来越冷,听起来越来越单薄? —…...

AI病理诊断七剑下天山,医疗未来触手可及

一、病理诊断困局:刀尖上的医学艺术 1.1 金标准背后的隐痛 病理诊断被誉为"诊断的诊断",医生需通过显微镜观察组织切片,在细胞迷宫中捕捉癌变信号。某省病理质控报告显示,基层医院误诊率达12%-15%,专家会诊…...