Docker container stop flow

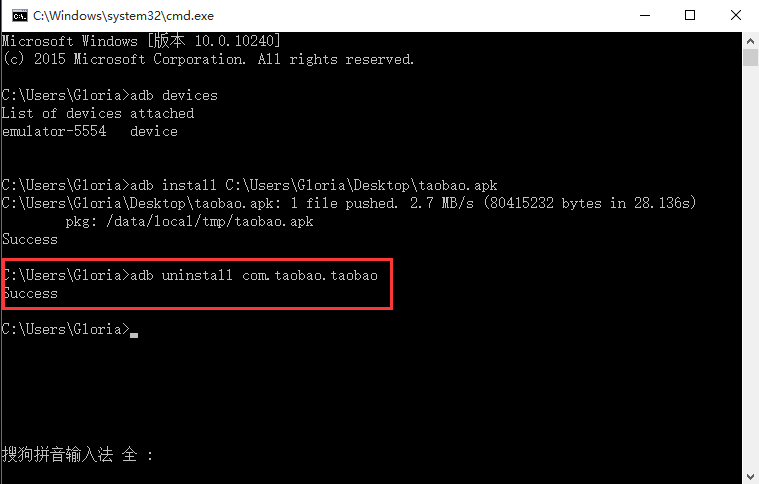

Let's trace the call path of the StopContainer API, starting from the API route.

    // NewRouter initializes a new container router
    func NewRouter(b Backend, decoder httputils.ContainerDecoder) router.Router {
        r := &containerRouter{
            backend: b,
            decoder: decoder,
        }
        r.initRoutes()
        return r
    }

    ...

    // initRoutes initializes the routes in container router
    func (r *containerRouter) initRoutes() {
        r.routes = []router.Route{
            ...
            router.NewPostRoute("/containers/{name:.*}/stop", r.postContainersStop),
            ...
        }
    }

    func (s *containerRouter) postContainersStop(ctx context.Context, w http.ResponseWriter, r *http.Request, vars map[string]string) error {
        ...
        if err := s.backend.ContainerStop(vars["name"], seconds); err != nil {
            return err
        }
        w.WriteHeader(http.StatusNoContent)
        return nil
    }

    func (cli *DaemonCli) start(opts *daemonOptions) (err error) {
        ...
        d, err := daemon.NewDaemon(ctx, cli.Config, pluginStore)
        ...
    }

    // ContainerStop looks for the given container and stops it.
    // In case the container fails to stop gracefully within a time duration
    // specified by the timeout argument, in seconds, it is forcefully
    // terminated (killed).
    //
    // If the timeout is nil, the container's StopTimeout value is used, if set,
    // otherwise the engine default. A negative timeout value can be specified,
    // meaning no timeout, i.e. no forceful termination is performed.
    func (daemon *Daemon) ContainerStop(name string, timeout *int) error {
        container, err := daemon.GetContainer(name)
        if err != nil {
            return err
        }
        if !container.IsRunning() {
            return containerNotModifiedError{running: false}
        }
        if timeout == nil {
            stopTimeout := container.StopTimeout()
            timeout = &stopTimeout
        }
        if err := daemon.containerStop(container, *timeout); err != nil {
            return errdefs.System(errors.Wrapf(err, "cannot stop container: %s", name))
        }
        return nil
    }

    // containerStop sends a stop signal, waits, sends a kill signal.
    func (daemon *Daemon) containerStop(container *containerpkg.Container, seconds int) error {
        if !container.IsRunning() {
            return nil
        }

        stopSignal := container.StopSignal()
        // 1. Send a stop signal
        if err := daemon.killPossiblyDeadProcess(container, stopSignal); err != nil {
            // While normally we might "return err" here we're not going to
            // because if we can't stop the container by this point then
            // it's probably because it's already stopped. Meaning, between
            // the time of the IsRunning() call above and now it stopped.
            // Also, since the err return will be environment specific we can't
            // look for any particular (common) error that would indicate
            // that the process is already dead vs something else going wrong.
            // So, instead we'll give it up to 2 more seconds to complete and if
            // by that time the container is still running, then the error
            // we got is probably valid and so we force kill it.
            ctx, cancel := context.WithTimeout(context.Background(), 2*time.Second)
            defer cancel()

            if status := <-container.Wait(ctx, containerpkg.WaitConditionNotRunning); status.Err() != nil {
                logrus.Infof("Container failed to stop after sending signal %d to the process, force killing", stopSignal)
                if err := daemon.killPossiblyDeadProcess(container, 9); err != nil {
                    return err
                }
            }
        }

        // 2. Wait for the process to exit on its own
        ctx := context.Background()
        if seconds >= 0 {
            var cancel context.CancelFunc
            ctx, cancel = context.WithTimeout(ctx, time.Duration(seconds)*time.Second)
            defer cancel()
        }

        if status := <-container.Wait(ctx, containerpkg.WaitConditionNotRunning); status.Err() != nil {
            logrus.Infof("Container %v failed to exit within %d seconds of signal %d - using the force", container.ID, seconds, stopSignal)
            // 3. If it doesn't, then send SIGKILL
            if err := daemon.Kill(container); err != nil {
                // Wait without a timeout, ignore result.
                <-container.Wait(context.Background(), containerpkg.WaitConditionNotRunning)
                logrus.Warn(err) // Don't return error because we only care that container is stopped, not what function stopped it
            }
        }

        daemon.LogContainerEvent(container, "stop")
        return nil
    }

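container.StopSignal() returns the signal configured for the container if there is one, and SIGTERM otherwise. If sending that signal fails, the daemon gives the container up to 2 s more. If it is still running after that, the daemon sends SIGKILL (signal 9). It then waits for the container to exit within the timeout supplied by the caller, for example kubelet or the -t option of docker stop. A negative timeout means waiting indefinitely.

If the container still has not exited when that timeout expires, daemon.Kill(container) sends SIGKILL to force it to exit.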

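This is where the two forms of a container's start command matter:

- Shell form: PID 1 is /bin/sh -c. Because /bin/sh does not forward signals to its child processes, our application typically never receives SIGTERM. As PID 1, the shell itself also ignores SIGTERM unless it has installed a handler, so the container only stops once the timeout expires and SIGKILL is sent. The fix is to run our process itself as PID 1.

- Exec form: PID 1 is the application's own executable (a script or a binary), so our program receives the SIGTERM sent by docker stop directly and can catch it.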

Let's look at the forced-kill path first.

    // Kill forcefully terminates a container.
    func (daemon *Daemon) Kill(container *containerpkg.Container) error {
        if !container.IsRunning() {
            return errNotRunning(container.ID)
        }

        // 1. Send SIGKILL
        if err := daemon.killPossiblyDeadProcess(container, int(syscall.SIGKILL)); err != nil {
            // While normally we might "return err" here we're not going to
            // because if we can't stop the container by this point then
            // it's probably because it's already stopped. Meaning, between
            // the time of the IsRunning() call above and now it stopped.
            // Also, since the err return will be environment specific we can't
            // look for any particular (common) error that would indicate
            // that the process is already dead vs something else going wrong.
            // So, instead we'll give it up to 2 more seconds to complete and if
            // by that time the container is still running, then the error
            // we got is probably valid and so we return it to the caller.
            if isErrNoSuchProcess(err) {
                return nil
            }

            ctx, cancel := context.WithTimeout(context.Background(), 2*time.Second)
            defer cancel()

            if status := <-container.Wait(ctx, containerpkg.WaitConditionNotRunning); status.Err() != nil {
                return err
            }
        }

        // 2. Wait for the process to die, in last resort, try to kill the process directly
        if err := killProcessDirectly(container); err != nil {
            if isErrNoSuchProcess(err) {
                return nil
            }
            return err
        }

        // Wait for exit with no timeout.
        // Ignore returned status.
        <-container.Wait(context.Background(), containerpkg.WaitConditionNotRunning)

        return nil
    }

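killWithSignal() first acts at the container level. For SIGKILL it calls container.ExitOnNext(). If the container is in the Restarting state, it returns without sending any signal, because telling the monitor to exit on its next event loop is enough.

If the container is Paused, the SIGKILL is sent first and the container is then resumed with daemon.containerd.Resume(). The pending signal takes effect as soon as the container resumes.

Back in Kill(): if sending SIGKILL fails with anything other than "no such process", Kill() waits up to 2 s. If the container has still not reached NotRunning by then, it returns the error. Otherwise it calls killProcessDirectly(), which waits up to another 10 s. If the container still has not exited, it looks up the container's PID 1 on the host and sends it SIGKILL directly.

After that, <-container.Wait(...) blocks with no timeout at all, i.e. it waits indefinitely. At this point the current goroutine is holding a container-level lock (state.Lock()).

TODO: daemon.containerd.Resume()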

    // killWithSignal sends the container the given signal. This wrapper for the
    // host specific kill command prepares the container before attempting
    // to send the signal. An error is returned if the container is paused
    // or not running, or if there is a problem returned from the
    // underlying kill command.
    func (daemon *Daemon) killWithSignal(container *containerpkg.Container, sig int) error {
        logrus.Debugf("Sending kill signal %d to container %s", sig, container.ID)
        container.Lock()
        defer container.Unlock()

        if !container.Running {
            return errNotRunning(container.ID)
        }

        var unpause bool
        if container.Config.StopSignal != "" && syscall.Signal(sig) != syscall.SIGKILL {
            ...
        } else {
            container.ExitOnNext()
            unpause = container.Paused
        }

        if !daemon.IsShuttingDown() {
            container.HasBeenManuallyStopped = true
            container.CheckpointTo(daemon.containersReplica)
        }

        // if the container is currently restarting we do not need to send the signal
        // to the process. Telling the monitor that it should exit on its next event
        // loop is enough
        if container.Restarting {
            return nil
        }

        if err := daemon.kill(container, sig); err != nil {
            if errdefs.IsNotFound(err) {
                unpause = false
                logrus.WithError(err).WithField("container", container.ID).WithField("action", "kill").Debug("container kill failed because of 'container not found' or 'no such process'")
            } else {
                return errors.Wrapf(err, "Cannot kill container %s", container.ID)
            }
        }

        if unpause {
            // above kill signal will be sent once resume is finished
            if err := daemon.containerd.Resume(context.Background(), container.ID); err != nil {
                logrus.Warnf("Cannot unpause container %s: %s", container.ID, err)
            }
        }

        attributes := map[string]string{
            "signal": fmt.Sprintf("%d", sig),
        }
        daemon.LogContainerEventWithAttributes(container, "kill", attributes)
        return nil
    }

    func killProcessDirectly(cntr *container.Container) error {
        ctx, cancel := context.WithTimeout(context.Background(), 10*time.Second)
        defer cancel()

        // Block until the container to stops or timeout.
        status := <-cntr.Wait(ctx, container.WaitConditionNotRunning)
        if status.Err() != nil {
            // Ensure that we don't kill ourselves
            if pid := cntr.GetPID(); pid != 0 {
                logrus.Infof("Container %s failed to exit within 10 seconds of kill - trying direct SIGKILL", stringid.TruncateID(cntr.ID))
                if err := unix.Kill(pid, 9); err != nil {
                    if err != unix.ESRCH {
                        return err
                    }
                    e := errNoSuchProcess{pid, 9}
                    logrus.Debug(e)
                    return e
                }
            }
        }
        return nil
    }

    // Wait waits until the container is in a certain state indicated by the given
    // condition. A context must be used for cancelling the request, controlling
    // timeouts, and avoiding goroutine leaks. Wait must be called without holding
    // the state lock. Returns a channel from which the caller will receive the
    // result. If the container exited on its own, the result's Err() method will
    // be nil and its ExitCode() method will return the container's exit code,
    // otherwise, the results Err() method will return an error indicating why the
    // wait operation failed.
    func (s *State) Wait(ctx context.Context, condition WaitCondition) <-chan StateStatus {
        s.Lock()
        defer s.Unlock()

        if condition == WaitConditionNotRunning && !s.Running {
            // Buffer so we can put it in the channel now.
            resultC := make(chan StateStatus, 1)

            // Send the current status.
            resultC <- StateStatus{
                exitCode: s.ExitCode(),
                err:      s.Err(),
            }

            return resultC
        }

        // If we are waiting only for removal, the waitStop channel should
        // remain nil and block forever.
        var waitStop chan struct{}
        if condition < WaitConditionRemoved {
            waitStop = s.waitStop
        }

        // Always wait for removal, just in case the container gets removed
        // while it is still in a "created" state, in which case it is never
        // actually stopped.
        waitRemove := s.waitRemove

        resultC := make(chan StateStatus)

        go func() {
            select {
            case <-ctx.Done():
                // Context timeout or cancellation.
                resultC <- StateStatus{
                    exitCode: -1,
                    err:      ctx.Err(),
                }
                return
            case <-waitStop:
            case <-waitRemove:
            }

            s.Lock()
            result := StateStatus{
                exitCode: s.ExitCode(),
                err:      s.Err(),
            }
            s.Unlock()

            resultC <- result
        }()

        return resultC
    }

Kill() waits indefinitely for one of two things: the container's waitStop channel being closed, or its waitRemove channel being closed.

    // SetStopped sets the container state to "stopped" without locking.
    func (s *State) SetStopped(exitStatus *ExitStatus) {
        s.Running = false
        s.Paused = false
        s.Restarting = false
        s.Pid = 0
        if exitStatus.ExitedAt.IsZero() {
            s.FinishedAt = time.Now().UTC()
        } else {
            s.FinishedAt = exitStatus.ExitedAt
        }
        s.ExitCodeValue = exitStatus.ExitCode
        s.OOMKilled = exitStatus.OOMKilled
        close(s.waitStop) // fire waiters for stop
        s.waitStop = make(chan struct{})
    }

    ...

    // SetRestarting sets the container state to "restarting" without locking.
    // It also sets the container PID to 0.
    func (s *State) SetRestarting(exitStatus *ExitStatus) {
        // we should consider the container running when it is restarting because of
        // all the checks in docker around rm/stop/etc
        s.Running = true
        s.Restarting = true
        s.Paused = false
        s.Pid = 0
        s.FinishedAt = time.Now().UTC()
        s.ExitCodeValue = exitStatus.ExitCode
        s.OOMKilled = exitStatus.OOMKilled
        close(s.waitStop) // fire waiters for stop
        s.waitStop = make(chan struct{})
    }

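Start with waitStop. SetStopped and SetRestarting both close it, which wakes every goroutine waiting on it, and then replace it with a fresh channel. This is what lets Kill() stop waiting and release that lock.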

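- When dockerd restarts and restores its state, it processes all containers in one batch. For each container it asks containerd for the container's state. If containerd reports the container as dead, dockerd calls SetStopped() once.

Note that if the container is alive but dockerd is not running with --live-restore, dockerd calls daemon.kill() once. That call sends the container's stop signal (c.StopSignal()) straight to its PID 1.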

    func (daemon *Daemon) restore() error {
        ...
        for _, c := range containers {
            group.Add(1)
            go func(c *container.Container) {
                ...
                alive, _, process, err = daemon.containerd.Restore(context.Background(), c.ID, c.InitializeStdio)
                ...
                if !alive && process != nil {
                    ec, exitedAt, err = process.Delete(context.Background())
                    if err != nil && !errdefs.IsNotFound(err) {
                        logrus.WithError(err).Errorf("Failed to delete container %s from containerd", c.ID)
                        return
                    }
                } else if !daemon.configStore.LiveRestoreEnabled {
                    if err := daemon.kill(c, c.StopSignal()); err != nil && !errdefs.IsNotFound(err) {
                        logrus.WithError(err).WithField("container", c.ID).Error("error shutting down container")
                        return
                    }
                }
                ...
                if !alive {
                    c.Lock()
                    c.SetStopped(&container.ExitStatus{ExitCode: int(ec), ExitedAt: exitedAt})
                    daemon.Cleanup(c)
                    if err := c.CheckpointTo(daemon.containersReplica); err != nil {
                        logrus.Errorf("Failed to update stopped container %s state: %v", c.ID, err)
                    }
                    c.Unlock()
                }
                ...

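- In dockerd's event handling, SetStopped is called in two places.

When dockerd receives an exit event, it takes the container-level lock (container.Lock()) and asks containerd to delete the corresponding task. It then waits up to 2 seconds for the container's I/O streams to finish, and carries on.

If it decides the container does not need to be restarted, it calls SetStopped once.

If the container does need to be restarted but the restart fails, SetStopped is called as well. By then the handler has already released the lock it was holding; the restart goroutine re-acquires the lock only around the SetStopped call.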

    // ProcessEvent is called by libcontainerd whenever an event occurs
    func (daemon *Daemon) ProcessEvent(id string, e libcontainerdtypes.EventType, ei libcontainerdtypes.EventInfo) error {
        c, err := daemon.GetContainer(id)
        if err != nil {
            return errors.Wrapf(err, "could not find container %s", id)
        }

        switch e {
        ...
        case libcontainerdtypes.EventExit:
            if int(ei.Pid) == c.Pid {
                c.Lock()
                _, _, err := daemon.containerd.DeleteTask(context.Background(), c.ID)
                ...
                ctx, cancel := context.WithTimeout(context.Background(), 2*time.Second)
                c.StreamConfig.Wait(ctx)
                cancel()
                c.Reset(false)

                exitStatus := container.ExitStatus{
                    ExitCode:  int(ei.ExitCode),
                    ExitedAt:  ei.ExitedAt,
                    OOMKilled: ei.OOMKilled,
                }
                restart, wait, err := c.RestartManager().ShouldRestart(ei.ExitCode, daemon.IsShuttingDown() || c.HasBeenManuallyStopped, time.Since(c.StartedAt))
                if err == nil && restart {
                    c.RestartCount++
                    c.SetRestarting(&exitStatus)
                } else {
                    if ei.Error != nil {
                        c.SetError(ei.Error)
                    }
                    c.SetStopped(&exitStatus)
                    defer daemon.autoRemove(c)
                }
                defer c.Unlock()
                ...
                if err == nil && restart {
                    go func() {
                        err := <-wait
                        if err == nil {
                            // daemon.netController is initialized when daemon is restoring containers.
                            // But containerStart will use daemon.netController segment.
                            // So to avoid panic at startup process, here must wait util daemon restore done.
                            daemon.waitForStartupDone()
                            if err = daemon.containerStart(c, "", "", false); err != nil {
                                logrus.Debugf("failed to restart container: %+v", err)
                            }
                        }
                        if err != nil {
                            c.Lock()
                            c.SetStopped(&exitStatus)
                            daemon.setStateCounter(c)
                            c.CheckpointTo(daemon.containersReplica)
                            c.Unlock()
                            defer daemon.autoRemove(c)
                            if err != restartmanager.ErrRestartCanceled {
                                logrus.Errorf("restartmanger wait error: %+v", err)
                            }
                        }
                    }()
                }
                ...

    func (c *client) processEventStream(ctx context.Context, ns string) {
        ...
        // Filter on both namespace *and* topic. To create an "and" filter,
        // this must be a single, comma-separated string
        eventStream, errC := c.client.EventService().Subscribe(ctx, "namespace=="+ns+",topic~=|^/tasks/|")
        ...
        for {
            var oomKilled bool
            select {
            ...
            case ev = <-eventStream:
                ...
                switch t := v.(type) {
                ...
                case *apievents.TaskExit:
                    et = libcontainerdtypes.EventExit
                    ei = libcontainerdtypes.EventInfo{
                        ContainerID: t.ContainerID,
                        ProcessID:   t.ID,
                        Pid:         t.Pid,
                        ExitCode:    t.ExitStatus,
                        ExitedAt:    t.ExitedAt,
                    }
                ...
                }
                ...
                c.processEvent(ctx, et, ei)
            }
        }
    }

    // libcontainerd/remote/client.go
    func (c *client) processEvent(ctx context.Context, et libcontainerdtypes.EventType, ei libcontainerdtypes.EventInfo) {
        c.eventQ.Append(ei.ContainerID, func() {
            err := c.backend.ProcessEvent(ei.ContainerID, et, ei)
            ...
            if et == libcontainerdtypes.EventExit && ei.ProcessID != ei.ContainerID {
                p, err := c.getProcess(ctx, ei.ContainerID, ei.ProcessID)
                ...
                ctr, err := c.getContainer(ctx, ei.ContainerID)
                if err != nil {
                    c.logger.WithFields(logrus.Fields{
                        "container": ei.ContainerID,
                        "error":     err,
                    }).Error("failed to find container")
                } else {
                    labels, err := ctr.Labels(ctx)
                    if err != nil {
                        c.logger.WithFields(logrus.Fields{
                            "container": ei.ContainerID,
                            "error":     err,
                        }).Error("failed to get container labels")
                        return
                    }
                    newFIFOSet(labels[DockerContainerBundlePath], ei.ProcessID, true, false).Close()
                }
                _, err = p.Delete(context.Background())
                ...
            }
        })
    }

    // plugin/executor/containerd/containerd.go
    // deleteTaskAndContainer deletes plugin task and then plugin container from containerd
    func deleteTaskAndContainer(ctx context.Context, cli libcontainerdtypes.Client, id string, p libcontainerdtypes.Process) {
        if p != nil {
            if _, _, err := p.Delete(ctx); err != nil && !errdefs.IsNotFound(err) {
                logrus.WithError(err).WithField("id", id).Error("failed to delete plugin task from containerd")
            }
        } else {
            if _, _, err := cli.DeleteTask(ctx, id); err != nil && !errdefs.IsNotFound(err) {
                logrus.WithError(err).WithField("id", id).Error("failed to delete plugin task from containerd")
            }
        }

        if err := cli.Delete(ctx, id); err != nil && !errdefs.IsNotFound(err) {
            logrus.WithError(err).WithField("id", id).Error("failed to delete plugin container from containerd")
        }
    }

    ...

    // ProcessEvent handles events from containerd
    // All events are ignored except the exit event, which is sent of to the stored handler
    func (e *Executor) ProcessEvent(id string, et libcontainerdtypes.EventType, ei libcontainerdtypes.EventInfo) error {
        switch et {
        case libcontainerdtypes.EventExit:
            deleteTaskAndContainer(context.Background(), e.client, id, nil)
            return e.exitHandler.HandleExitEvent(ei.ContainerID)
        }
        return nil
    }

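dockerd subscribes to containerd's task events (the filter topic~=|^/tasks/| covers /tasks/exit), so the baton now passes to containerd.

Places in containerd that publish the TaskExit event:

- containerd itself publishes an exit event on behalf of a shim that has died (cleanupAfterDeadShim), reporting an exit status of 128 + SIGKILL: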

    func (r *Runtime) cleanupAfterDeadShim(ctx context.Context, bundle *bundle, ns, id string) error {
        ...
        // Notify Client
        exitedAt := time.Now().UTC()
        r.events.Publish(ctx, runtime.TaskExitEventTopic, &eventstypes.TaskExit{
            ContainerID: id,
            ID:          id,
            Pid:         uint32(pid),
            ExitStatus:  128 + uint32(unix.SIGKILL),
            ExitedAt:    exitedAt,
        })

        r.tasks.Delete(ctx, id)
        ...
    }

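- The containerd-shim service publishes an exit event after it receives SIGCHLD and finds that the reaped PID belongs to one of its processes. When that process is the init process, the shim may first kill all of init's remaining children: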

    func (s *Service) checkProcesses(e runc.Exit) {
        for _, p := range s.allProcesses() {
            if p.Pid() != e.Pid {
                continue
            }

            if ip, ok := p.(*process.Init); ok {
                shouldKillAll, err := shouldKillAllOnExit(s.bundle)
                if err != nil {
                    log.G(s.context).WithError(err).Error("failed to check shouldKillAll")
                }

                // Ensure all children are killed
                if shouldKillAll {
                    if err := ip.KillAll(s.context); err != nil {
                        log.G(s.context).WithError(err).WithField("id", ip.ID()).Error("failed to kill init's children")
                    }
                }
            }

            p.SetExited(e.Status)
            s.events <- &eventstypes.TaskExit{
                ContainerID: s.id,
                ID:          p.ID(),
                Pid:         uint32(e.Pid),
                ExitStatus:  uint32(e.Status),
                ExitedAt:    p.ExitedAt(),
            }
            return
        }
    }

Callers of cleanupAfterDeadShim():

- When a task is created, inside the exitHandler set up for the shim:

    // Create a new task
    func (r *Runtime) Create(ctx context.Context, id string, opts runtime.CreateOpts) (_ runtime.Task, err error) {
        namespace, err := namespaces.NamespaceRequired(ctx)
        ...
        ropts, err := r.getRuncOptions(ctx, id)
        if err != nil {
            return nil, err
        }

        bundle, err := newBundle(id,
            filepath.Join(r.state, namespace),
            filepath.Join(r.root, namespace),
            opts.Spec.Value)
        ...
        shimopt := ShimLocal(r.config, r.events)
        if !r.config.NoShim {
            ...
            exitHandler := func() {
                log.G(ctx).WithField("id", id).Info("shim reaped")

                if _, err := r.tasks.Get(ctx, id); err != nil {
                    // Task was never started or was already successfully deleted
                    return
                }

                if err = r.cleanupAfterDeadShim(context.Background(), bundle, namespace, id); err != nil {
                    log.G(ctx).WithError(err).WithFields(logrus.Fields{
                        "id":        id,
                        "namespace": namespace,
                    }).Warn("failed to clean up after killed shim")
                }
            }
            shimopt = ShimRemote(r.config, r.address, cgroup, exitHandler)
        }

        s, err := bundle.NewShimClient(ctx, namespace, shimopt, ropts)
        if err != nil {
            return nil, err
        }
        defer func() {
            if err != nil {
                deferCtx, deferCancel := context.WithTimeout(
                    namespaces.WithNamespace(context.TODO(), namespace), cleanupTimeout)
                defer deferCancel()
                if kerr := s.KillShim(deferCtx); kerr != nil {
                    log.G(ctx).WithError(err).Error("failed to kill shim")
                }
            }
        }()

        rt := r.config.Runtime
        if ropts != nil && ropts.Runtime != "" {
            rt = ropts.Runtime
        }
        ...
        cr, err := s.Create(ctx, sopts)
        ...
        t, err := newTask(id, namespace, int(cr.Pid), s, r.events, r.tasks, bundle)
        ...
        r.events.Publish(ctx, runtime.TaskCreateEventTopic, &eventstypes.TaskCreate{
            ContainerID: sopts.ID,
            Bundle:      sopts.Bundle,
            Rootfs:      sopts.Rootfs,
            IO: &eventstypes.TaskIO{
                Stdin:    sopts.Stdin,
                Stdout:   sopts.Stdout,
                Stderr:   sopts.Stderr,
                Terminal: sopts.Terminal,
            },
            Checkpoint: sopts.Checkpoint,
            Pid:        uint32(t.pid),
        })

        return t, nil
    }

- When containerd restarts and reloads all tasks:

    func (r *Runtime) loadTasks(ctx context.Context, ns string) ([]*Task, error) {
        dir, err := ioutil.ReadDir(filepath.Join(r.state, ns))
        if err != nil {
            return nil, err
        }
        var o []*Task
        for _, path := range dir {
            ctx = namespaces.WithNamespace(ctx, ns)
            ...
            pid, _ := runc.ReadPidFile(filepath.Join(bundle.path, process.InitPidFile))
            shimExit := make(chan struct{})
            s, err := bundle.NewShimClient(ctx, ns, ShimConnect(r.config, func() {
                defer close(shimExit)
                if _, err := r.tasks.Get(ctx, id); err != nil {
                    // Task was never started or was already successfully deleted
                    return
                }

                if err := r.cleanupAfterDeadShim(ctx, bundle, ns, id); err != nil {
                    ...
                }
            }), nil)
            if err != nil {
                log.G(ctx).WithError(err).WithFields(logrus.Fields{
                    "id":        id,
                    "namespace": ns,
                }).Error("connecting to shim")
                err := r.cleanupAfterDeadShim(ctx, bundle, ns, id)
                if err != nil {
                    log.G(ctx).WithError(err).WithField("bundle", bundle.path).Error("cleaning up after dead shim")
                }
                continue
            }
            ...
        }
        ...
    }

    func (r *Runtime) restoreTasks(ctx context.Context) ([]*Task, error) {
        dir, err := ioutil.ReadDir(r.state)
        ...
        for _, namespace := range dir {
            ...
            log.G(ctx).WithField("namespace", name).Debug("loading tasks in namespace")
            tasks, err := r.loadTasks(ctx, name)
            if err != nil {
                return nil, err
            }
            o = append(o, tasks...)
        }
        return o, nil
    }

    // New returns a configured runtime
    func New(ic *plugin.InitContext) (interface{}, error) {
        ...
        tasks, err := r.restoreTasks(ic.Context)
        if err != nil {
            return nil, err
        }
        ...

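When containerd-shim receives SIGCHLD, it reaps the exited processes and turns each exit into a runc.Exit event pushed to all subscribers. Here the subscriber is essentially containerd-shim itself. The processExits goroutine calls checkProcesses for each event, and checkProcesses then pushes a TaskExit event to containerd.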

    func handleSignals(logger *logrus.Entry, signals chan os.Signal, server *ttrpc.Server, sv *shim.Service) error {
        var (
            termOnce sync.Once
            done     = make(chan struct{})
        )

        for {
            select {
            case <-done:
                return nil
            case s := <-signals:
                switch s {
                case unix.SIGCHLD:
                    if err := reaper.Reap(); err != nil {
                        logger.WithError(err).Error("reap exit status")
                    }
                ...

    // Reap should be called when the process receives an SIGCHLD. Reap will reap
    // all exited processes and close their wait channels
    func Reap() error {
        now := time.Now()
        exits, err := sys.Reap(false)
        for _, e := range exits {
            done := Default.notify(runc.Exit{
                Timestamp: now,
                Pid:       e.Pid,
                Status:    e.Status,
            })

            select {
            case <-done:
            case <-time.After(1 * time.Second):
            }
        }
        return err
    }

...

    func (m *Monitor) notify(e runc.Exit) chan struct{} {
        const timeout = 1 * time.Millisecond
        var (
            done    = make(chan struct{}, 1)
            timer   = time.NewTimer(timeout)
            success = make(map[chan runc.Exit]struct{})
        )
        stop(timer, true)

        go func() {
            defer close(done)

            for {
                var (
                    failed      int
                    subscribers = m.getSubscribers()
                )
                for _, s := range subscribers {
                    s.do(func() {
                        if s.closed {
                            return
                        }
                        if _, ok := success[s.c]; ok {
                            return
                        }
                        timer.Reset(timeout)
                        recv := true
                        select {
                        case s.c <- e:
                            success[s.c] = struct{}{}
                        case <-timer.C:
                            recv = false
                            failed++
                        }
                        stop(timer, recv)
                    })
                }
                // all subscribers received the message
                if failed == 0 {
                    return
                }
            }
        }()
        return done
    }

相关文章:

docker容器stop流程

从API route开始看StopContainer接口的调用过程。 // NewRouter initializes a new container router func NewRouter(b Backend, decoder httputils.ContainerDecoder) router.Router {r : &containerRouter{backend: b,decoder: decoder,}r.initRoutes()return r } ... …...

生产环境_Spark接收传入的sql并替换sql中的表名与解析_非常NB

背景 开发时遇到一个较为复杂的周期需求,为了适配读取各种数据库中的数据并将数据库数据转换为DataFrame并进行后续的开发分析工作,做了如下代码。 在爷们开发这段生产中的代码,可适配mysql,hive,hbase,gbase等等…...

【issue-YOLO】自定义数据集训练YOLO-v7 Segmentation

1. 拉取代码创建环境 执行nvidia-smi验证cuda环境是否可用;拉取官方代码; clone官方代码仓库 git clone https://github.com/WongKinYiu/yolov7;从main分支切换到u7分支 cd yolov7 && git checkout 44f30af0daccb1a3baecc5d80eae229…...

【八大排序】选择排序 | 堆排序 + 图文详解!!

📷 江池俊: 个人主页 🔥个人专栏: ✅数据结构冒险记 ✅C语言进阶之路 🌅 有航道的人,再渺小也不会迷途。 文章目录 一、选择排序1.1 基本思想1.2 算法步骤 动图演示1.3 代码实现1.4 选择排序特性总结 二…...

C语言贪吃蛇详解

个人简介:双非大二学生 个人博客:Monodye 今日鸡汤:人生就像一盒巧克力,你永远不知道下一块是什么味的 C语言基础刷题:牛客网在线编程_语法篇_基础语法 (nowcoder.com) 一.贪吃蛇游戏背景 贪吃蛇是久负盛名的游戏&…...

go使用gopprof分析内存泄露

假设我们使用的是比如beego这样的网络框架,我们可以这样加代码来使用gopprof来进行内存泄露分析: beego框架加gopprof分析代码: 1.先在router.go里添加路由信息: beego.Router("/debug/pprof", &controllers.ProfController{}) beego.Router("/debug…...

uniapp中组件库Mask 遮罩层 的使用方法

目录 #平台差异说明 #基本使用 #嵌入内容 #遮罩样式 #API #Props #Events #Slot 创建一个遮罩层,用于强调特定的页面元素,并阻止用户对遮罩下层的内容进行操作,一般用于弹窗场景 #平台差异说明 AppH5微信小程序支付宝小程序百度小程…...

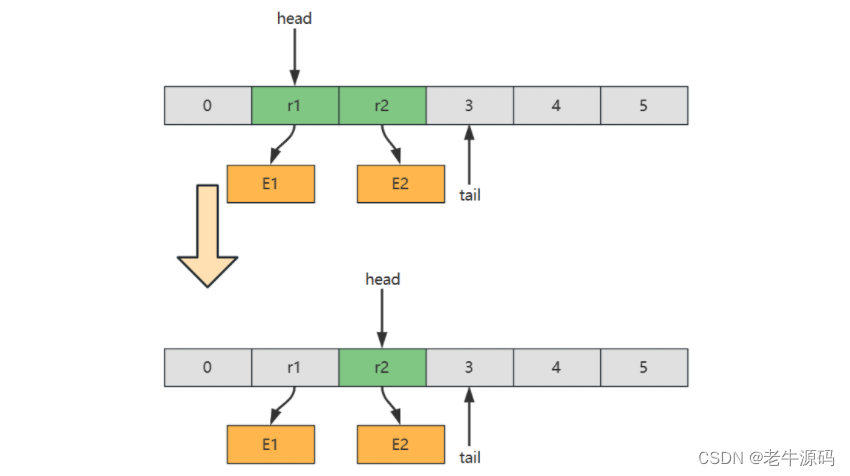

【数据结构与算法】(7)基础数据结构之双端队列的链表实现、环形数组实现示例讲解

目录 2.6 双端队列1) 概述2) 链表实现3) 数组实现习题E01. 二叉树 Z 字层序遍历-Leetcode 103 2.6 双端队列 1) 概述 双端队列、队列、栈对比 定义特点队列一端删除(头)另一端添加(尾)First In First Out栈一端删除和添加&…...

2024 高级前端面试题之 前端工程相关 「精选篇」

该内容主要整理关于 前端工程相关模块的相关面试题,其他内容面试题请移步至 「最新最全的前端面试题集锦」 查看。 前端工程相关模块精选篇 1. webpack的基本配置2. webpack高级配置3. webpack性能优化-构建速度4. webpack性能优化-产出代码(线上运行&am…...

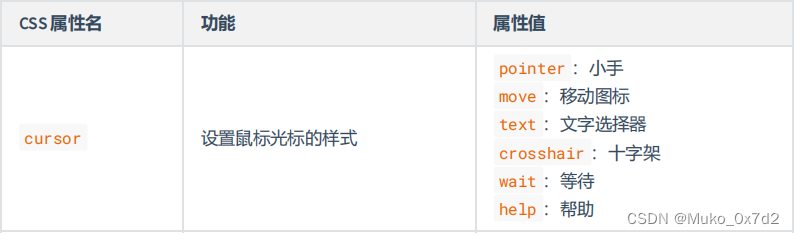

CSS常用属性

CSS常用属性 1. 像素的概念 概念:我们的电脑屏幕是,是由一个一个“小点”组成的,每个“小点”,就是一个像素(px)。规律:像素点越小,呈现的内容就越清晰、越细腻。 注意点ÿ…...

AI新宠Arc浏览器真可以取代Chrome吗?

每周跟踪AI热点新闻动向和震撼发展 想要探索生成式人工智能的前沿进展吗?订阅我们的简报,深入解析最新的技术突破、实际应用案例和未来的趋势。与全球数同行一同,从行业内部的深度分析和实用指南中受益。不要错过这个机会,成为AI领…...

基于Java (spring-boot)的实验室管理系统

一、项目介绍 普通用户: 1.登录,注册 2.查看实验室列表信息 3.实验室预约 4.查看预约进度并取消 5.查看公告 6.订阅课程 7.实验室报修 8.修改个人信息 教师登录: 1.查看并审核预约申请 2.查看已审核预约并导出到excel 3.实验室设备管理,报修 …...

Android用setRectToRect实现Bitmap基于Matrix矩阵scale缩放RectF动画,Kotlin(一)

Android用setRectToRect实现Bitmap基于Matrix矩阵scale缩放RectF动画,Kotlin(一) 基于Matrix,控制Bitmap的setRectToRect的目标RectF的宽高。从很小的宽高开始,不断迭代增加setRectToRect的目标RectF的宽高,…...

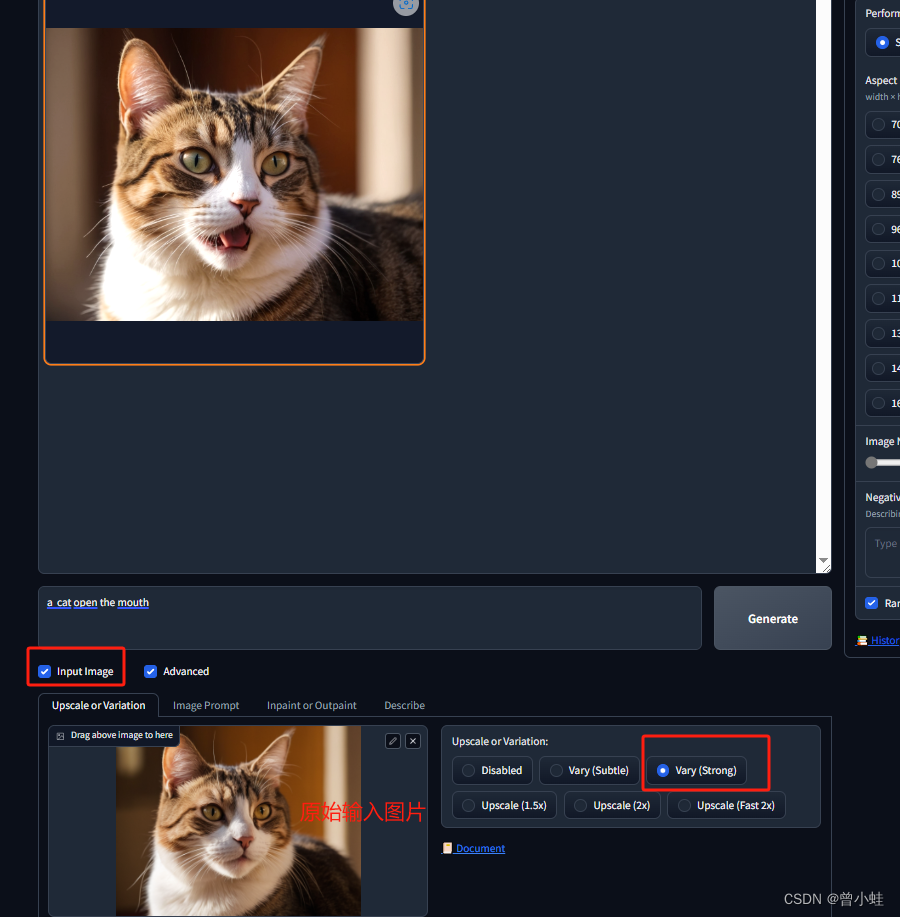

【AI绘画+Midjourney平替】Fooocus:图像生成、修改软件(Controlnet原作者重新设计的UI+Windows一键部署)

代码:https://github.com/lllyasviel/Fooocus windows一键启动包下载:https://github.com/lllyasviel/Fooocus/releases/download/release/Fooocus_win64_2-1-831.7z B站视频教程:AI绘画入门神器:Fooocus | 简化SD流程,…...

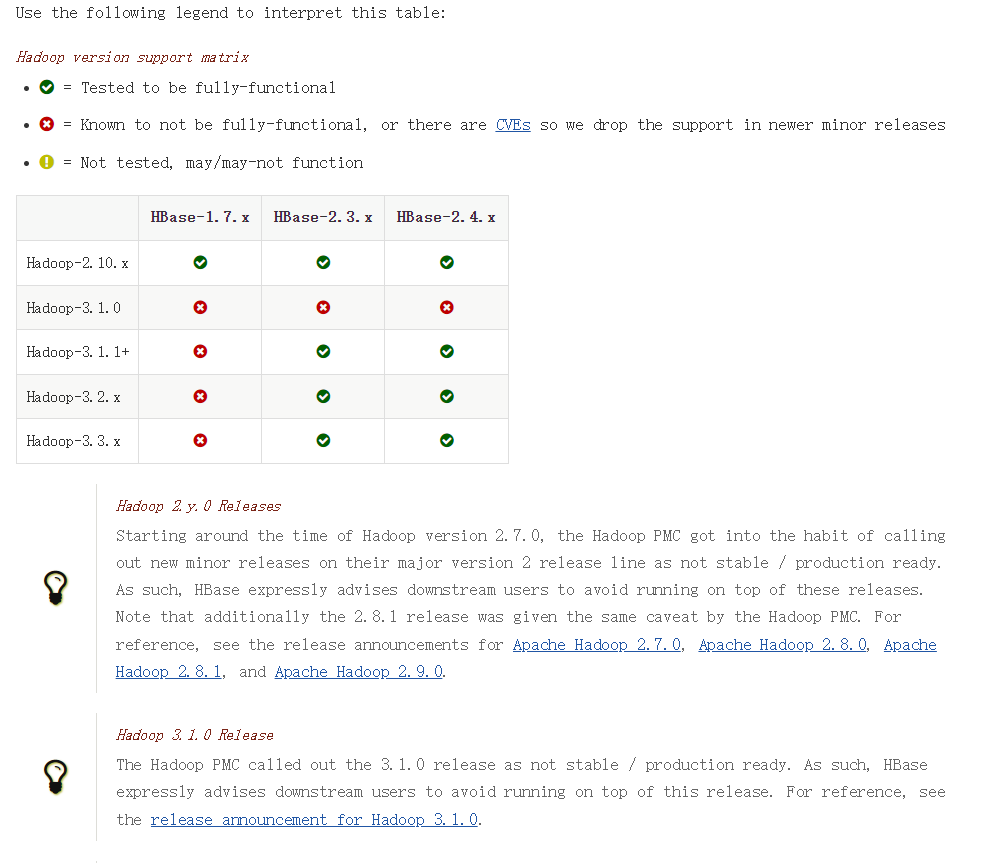

Java技术栈 —— Hive与HBase

Java技术栈 —— Hive与HBase 一、 什么是Hive与HBase二、如何使用Hive与HBase?2.1 Hive2.1.1 安装2.1.2 使用2.1.2.1 使用前准备2.1.2.2 开始使用hive 2.2 HBase2.2.1 安装2.2.2 使用 三、Apache基金会 一、 什么是Hive与HBase 见参考文章。 一、参考文章或视频链…...

【代码随想录-哈希表】有效的字母异位词

💝💝💝欢迎来到我的博客,很高兴能够在这里和您见面!希望您在这里可以感受到一份轻松愉快的氛围,不仅可以获得有趣的内容和知识,也可以畅所欲言、分享您的想法和见解。 推荐:kwan 的首页,持续学习,不断总结,共同进步,活到老学到老导航 檀越剑指大厂系列:全面总结 jav…...

SQL Server之DML触发器

一、如何创建一个触发器呢 触发器的定义语言如下: CREATE [ OR ALTER ] TRIGGER trigger_nameon {table_name | view_name}{for | After | Instead of }[ insert, update,delete ]assql_statement从这个定义语言我们可以知道如下信息: trigger_name&…...

04. 【Linux教程】安装 Linux 操作系统

通过前面的小节学习,我们已经对 Linux 操作系统有了简单的了解,同时也在 Windows 下安装了虚拟机软件 VMware ,那么本节课我们就介绍下如何使用虚拟机软件安装 Linux 操作系统。 通过第一小节的学习我们知道 Linux 有很多的发行版本…...

Facebook群控:利用IP代理提高聊单效率

在当今社交媒体竞争激烈的环境中,Facebook已经成为广告营销和推广的重要平台,为了更好地利用Facebook进行推广活动,群控技术应运而生。 本文将深入探讨Facebook群控的定义、作用以及如何利用IP代理来提升群控效率,为你提供全面的…...

香港倾斜模型3DTiles数据漫游

谷歌地球全香港地区倾斜摄影数据,通过工具转换成3DTiles格式,将这份数据完美加载到三维数字地球Cesium上进行完美呈现,打造香港地区三维倾斜数据覆盖,完美呈现香港城市壮美以及维多利亚港繁荣景象。再由12.5米高分辨率地形数据&am…...

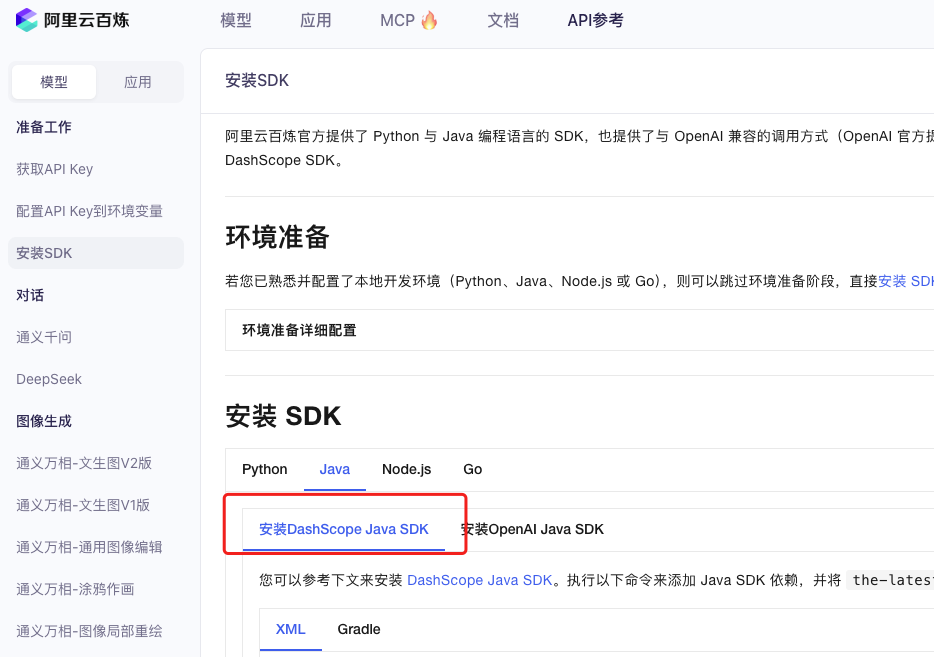

业务系统对接大模型的基础方案:架构设计与关键步骤

业务系统对接大模型:架构设计与关键步骤 在当今数字化转型的浪潮中,大语言模型(LLM)已成为企业提升业务效率和创新能力的关键技术之一。将大模型集成到业务系统中,不仅可以优化用户体验,还能为业务决策提供…...

Appium+python自动化(十六)- ADB命令

简介 Android 调试桥(adb)是多种用途的工具,该工具可以帮助你你管理设备或模拟器 的状态。 adb ( Android Debug Bridge)是一个通用命令行工具,其允许您与模拟器实例或连接的 Android 设备进行通信。它可为各种设备操作提供便利,如安装和调试…...

)

Typeerror: cannot read properties of undefined (reading ‘XXX‘)

最近需要在离线机器上运行软件,所以得把软件用docker打包起来,大部分功能都没问题,出了一个奇怪的事情。同样的代码,在本机上用vscode可以运行起来,但是打包之后在docker里出现了问题。使用的是dialog组件,…...

佰力博科技与您探讨热释电测量的几种方法

热释电的测量主要涉及热释电系数的测定,这是表征热释电材料性能的重要参数。热释电系数的测量方法主要包括静态法、动态法和积分电荷法。其中,积分电荷法最为常用,其原理是通过测量在电容器上积累的热释电电荷,从而确定热释电系数…...

Caliper 配置文件解析:fisco-bcos.json

config.yaml 文件 config.yaml 是 Caliper 的主配置文件,通常包含以下内容: test:name: fisco-bcos-test # 测试名称description: Performance test of FISCO-BCOS # 测试描述workers:type: local # 工作进程类型number: 5 # 工作进程数量monitor:type: - docker- pro…...

【SpringBoot自动化部署】

SpringBoot自动化部署方法 使用Jenkins进行持续集成与部署 Jenkins是最常用的自动化部署工具之一,能够实现代码拉取、构建、测试和部署的全流程自动化。 配置Jenkins任务时,需要添加Git仓库地址和凭证,设置构建触发器(如GitHub…...

医疗AI模型可解释性编程研究:基于SHAP、LIME与Anchor

1 医疗树模型与可解释人工智能基础 医疗领域的人工智能应用正迅速从理论研究转向临床实践,在这一过程中,模型可解释性已成为确保AI系统被医疗专业人员接受和信任的关键因素。基于树模型的集成算法(如RandomForest、XGBoost、LightGBM)因其卓越的预测性能和相对良好的解释性…...

[QMT量化交易小白入门]-六十二、ETF轮动中简单的评分算法如何获取历史年化收益32.7%

本专栏主要是介绍QMT的基础用法,常见函数,写策略的方法,也会分享一些量化交易的思路,大概会写100篇左右。 QMT的相关资料较少,在使用过程中不断的摸索,遇到了一些问题,记录下来和大家一起沟通,共同进步。 文章目录 相关阅读1. 策略概述2. 趋势评分模块3 代码解析4 木头…...

LeetCode - 148. 排序链表

目录 题目 思路 基本情况检查 复杂度分析 执行示例 读者可能出的错误 正确的写法 题目 148. 排序链表 - 力扣(LeetCode) 思路 链表归并排序采用"分治"的策略,主要分为三个步骤: 分割:将链表从中间…...

dvwa11——XSS(Reflected)

LOW 分析源码:无过滤 和上一关一样,这一关在输入框内输入,成功回显 <script>alert(relee);</script> MEDIUM 分析源码,是把<script>替换成了空格,但没有禁用大写 改大写即可,注意函数…...