kafka2.x版本配置SSL进行加密和身份验证

背景:找了一圈资料,都是东讲讲西讲讲,最后我还没搞好,最终决定参考官网说明。

官网指导手册地址:Apache Kafka

需要预备的知识,keytool和openssl

关于keytool的参考:keytool的使用-CSDN博客

关于openssl的参考:openssl常用命令大全_openssl命令参数大全-CSDN博客

先只看SSL安全机制方式。

Apache Kafka 允许客户端通过 SSL 进行连接。默认情况下,SSL 处于禁用状态,但可以根据需要打开。

- 1为每个 Kafka 代理生成 SSL 密钥和证书

部署一个或多个支持 SSL 的代理的第一步是为集群中的每台计算机生成密钥和证书。您可以使用 Java 的 keytool 实用程序来完成此任务。我们最初会将密钥生成到临时密钥库中,以便稍后使用 CA 导出和签名。

keytool -keystore server.keystore.jks -alias localhost -validity 700 -genkey -keyalg RSA您需要在上面的命令中指定两个参数:

- 密钥库:存储证书的密钥库文件。密钥库文件包含证书的私钥;因此,它需要安全保存。这里是server.keystore.jks

- 有效期:证书的有效时间,单位为天。这里是700天。

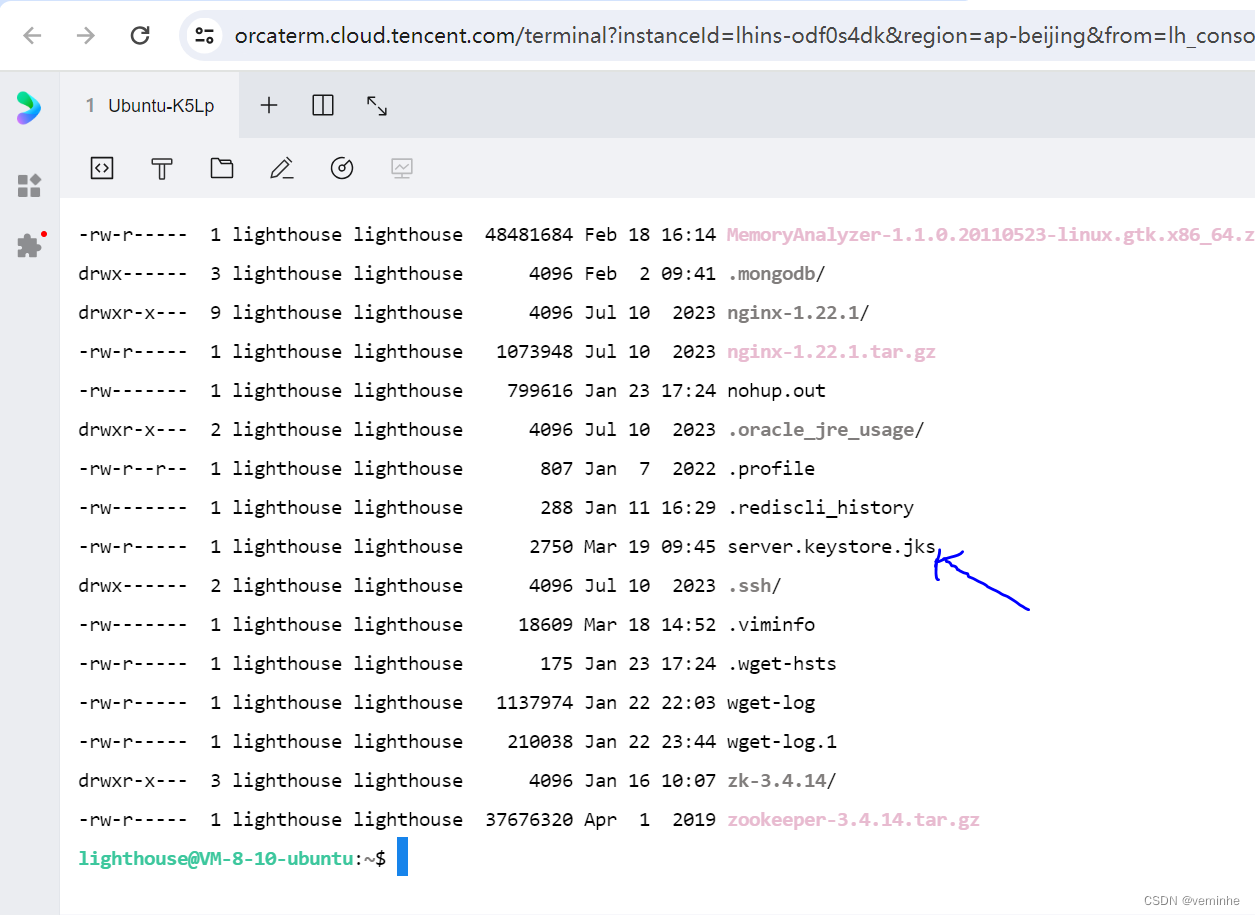

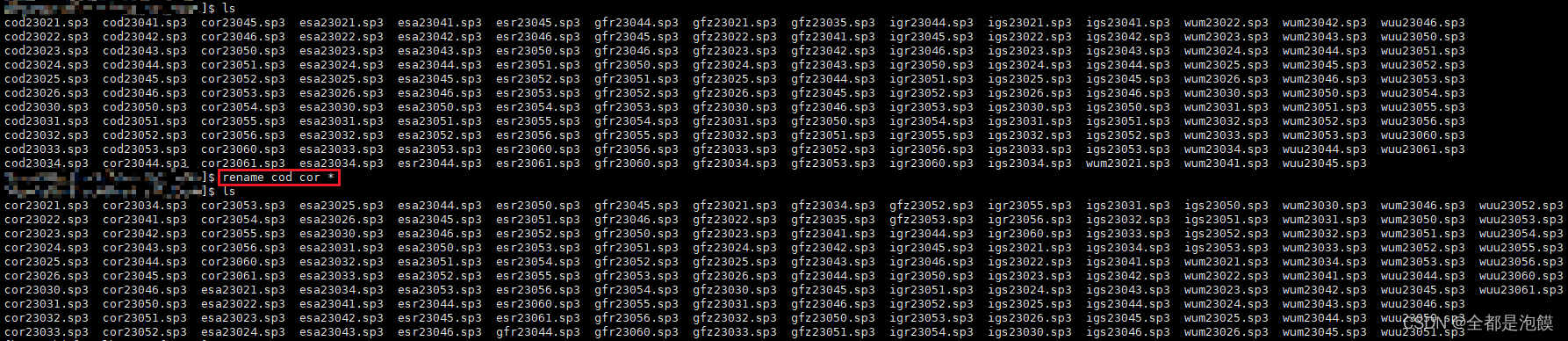

可以看到,目录下生成了对应文件

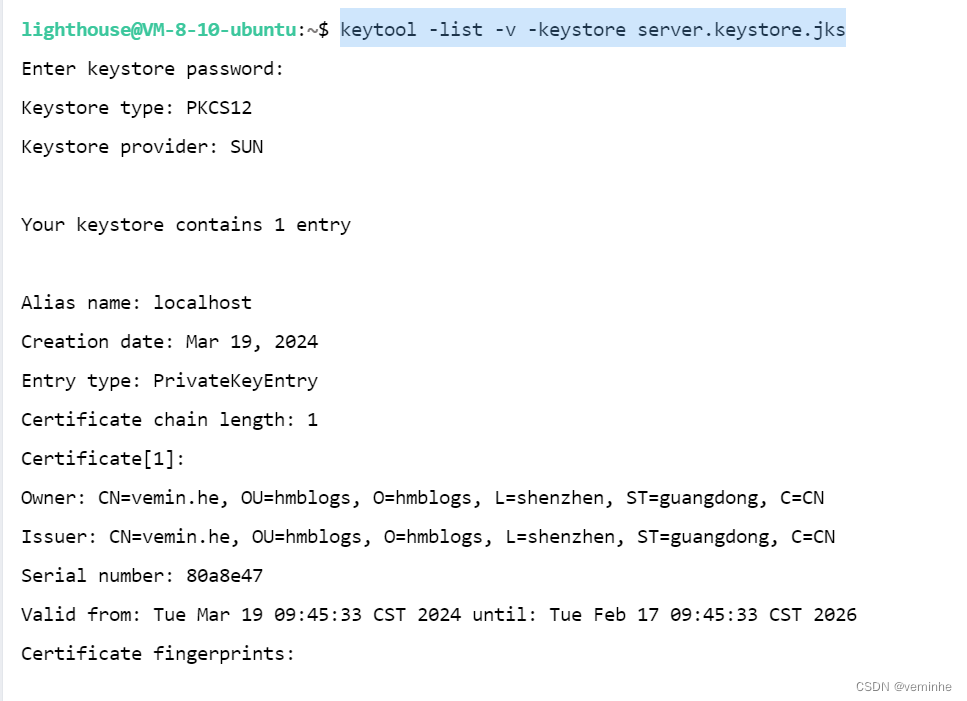

之后可以运行以下命令来验证生成的证书的内容:

keytool -list -v -keystore server.keystore.jks

- 2创建您自己的 CA

完成第一步后,群集中的每台计算机都有一个公钥-私钥对,以及一个用于标识计算机的证书。但是,该证书是未签名的,这意味着攻击者可以创建此类证书来伪装成任何计算机。

因此,通过为群集中的每台计算机对证书进行签名来防止伪造证书非常重要。证书颁发机构 (CA) 负责对证书进行签名。CA的工作方式类似于签发护照的政府——政府在每本护照上盖章(签名),使护照变得难以伪造。其他政府会验证印章以确保护照的真实性。同样,CA 对证书进行签名,而加密技术保证签名证书在计算上难以伪造。因此,只要 CA 是真实且受信任的颁发机构,客户端就可以高度保证它们连接到真实的计算机。

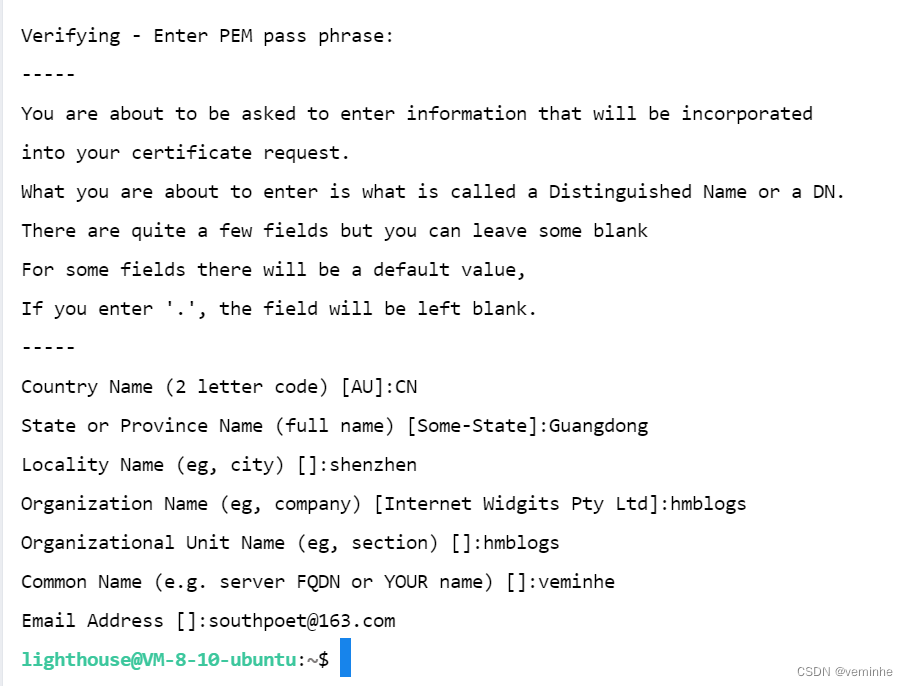

openssl req -new -x509 -keyout ca-key -out ca-cert -days 365

生成的 CA 只是一个公钥-私钥对和证书,它旨在对其他证书进行签名。

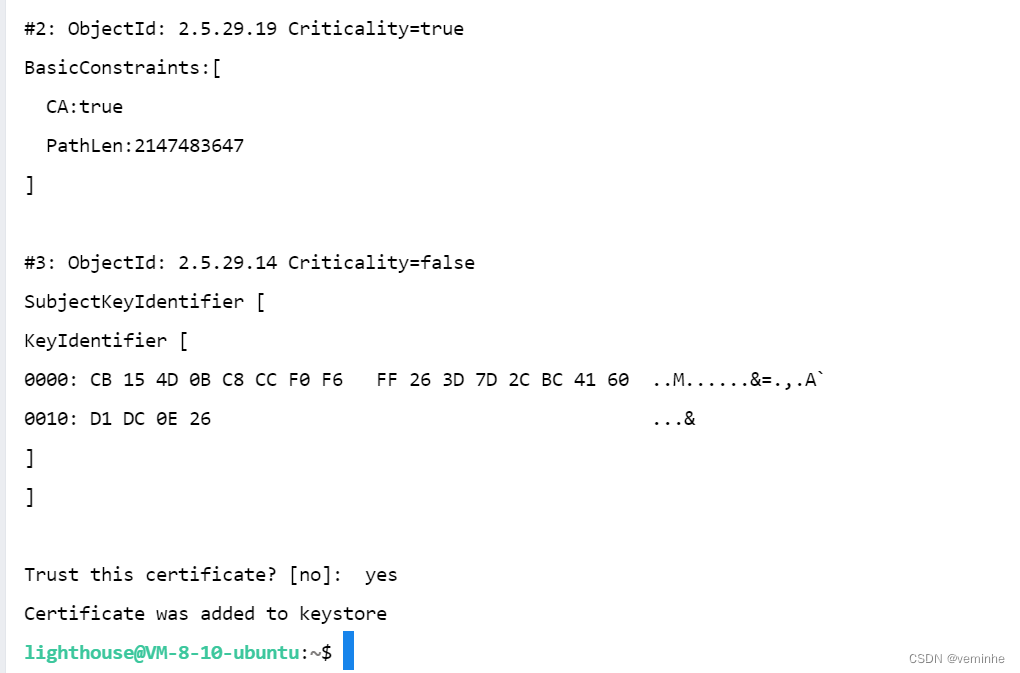

下一步是将生成的 CA 添加到客户端的信任库中,以便客户端可以信任此 CA:

keytool -keystore server.truststore.jks -alias CARoot -import -file ca-cert与步骤 1 中存储每台机器自己的身份的密钥库不同,客户机的信任库存储客户机应信任的所有证书。将证书导入到信任库中还意味着信任由该证书签名的所有证书。如上所述,信任政府 (CA) 也意味着信任它签发的所有护照(证书)。此属性称为信任链,在大型 Kafka 集群上部署 SSL 时特别有用。您可以使用单个 CA 对集群中的所有证书进行签名,并让所有计算机共享信任该 CA 的同一信任库。这样,所有计算机都可以对所有其他计算机进行身份验证。

- 3对证书进行签名

下一步是使用步骤 2 中生成的 CA 对步骤 1 生成的所有证书进行签名。首先,您需要从密钥库中导出证书:

keytool -密钥库 client.truststore.jks -alias CARoot -import -file ca-certkeytool -keystore server.keystore.jks -alias localhost -certreq -file cert-file

然后与 CA 一起签名:

openssl x509 -req -CA ca-cert -CAkey ca-key -in cert-file -out cert-signed -days 700 -CAcreateserial -passin pass:{ca-password}

最后,您需要将 CA 的证书和签名的证书都导入到密钥库中:

keytool -keystore server.keystore.jks -alias CARoot -import -file ca-certkeytool -keystore server.keystore.jks -alias localhost -import -file cert-signed

参数的定义如下:

- 密钥库:密钥库的位置

- ca-cert:CA的证书

- ca-key:CA的私钥

- ca-password:CA的密码

- cert-file:导出的服务器未签名证书

- cert-signed:服务器的签名证书

- 4配置 Kafka 代理

Kafka Broker 支持侦听多个端口上的连接。我们需要在 server.properties 中配置以下属性,该属性必须具有一个或多个逗号分隔值:

如果未为代理间通信启用 SSL(请参阅下文了解如何启用它),则需要 PLAINTEXT 和 SSL 端口。

listeners=PLAINTEXT://localhost:9092,SSL://localhost:9092代理端需要以下 SSL 配置

ssl.keystore.location=/home/lighthouse/server.keystore.jksssl.keystore.password=test1234ssl.key.password=test1234ssl.truststore.location=/home/lighthouse/server.truststore.jksssl.truststore.password=测试1234注意:ssl.truststore.password 在技术上是可选的,但强烈建议使用。如果未设置密码,则对信任库的访问仍然可用,但完整性检查将被禁用。值得考虑的可选设置:

- ssl.client.auth=none(“required” => 需要客户端身份验证,“requested” =>请求客户端身份验证,没有证书的客户端仍然可以连接。不建议使用“requested”,因为它提供了错误的安全感,并且配置错误的客户端仍将成功连接。

- ssl.cipher.suites(可选)。密码套件是身份验证、加密、MAC 和密钥交换算法的命名组合,用于协商使用 TLS 或 SSL 网络协议的网络连接的安全设置。(默认值为空列表)

- ssl.enabled.protocols=TLSv1.2,TLSv1.1,TLSv1 (列出要从客户端接受的 SSL 协议。请注意,SSL 已被弃用,取而代之的是 TLS,不建议在生产中使用 SSL)

- ssl.keystore.type=JKS

- ssl.truststore.type=JKS

- ssl.secure.random.implementation=SHA1PRNG

如果要为代理之间的通信启用 SSL,请将以下内容添加到 server.properties 文件(默认为 PLAINTEXT)

security.inter.broker.protocol=SSL- 5配置 Kafka 客户端

SSL 仅支持新的 Kafka 生产者和使用者,不支持较旧的 API。对于生产者和使用者,SSL 的配置是相同的。

如果代理中不需要客户机认证,那么下面是一个最小配置示例:

security.protocol=SSL协议ssl.truststore.location=/var/private/ssl/client.truststore.jksssl.truststore.password=测试1234注意:ssl.truststore.password 在技术上是可选的,但强烈建议使用。如果未设置密码,则对信任库的访问仍然可用,但完整性检查将被禁用。如果需要客户机认证,那么必须像步骤 1 中一样创建密钥库,并且还必须配置以下内容:

ssl.keystore.location=/var/private/ssl/client.keystore.jksssl.keystore.password=test1234ssl.key.password=test1234根据我们的要求和代理配置,可能还需要其他配置设置:

- ssl.provider(可选)。用于 SSL 连接的安全提供程序的名称。缺省值是 JVM 的缺省安全提供程序。

- ssl.cipher.suites(可选)。密码套件是身份验证、加密、MAC 和密钥交换算法的命名组合,用于协商使用 TLS 或 SSL 网络协议的网络连接的安全设置。

- ssl.enabled.protocols=TLSv1.2,TLSv1.1,TLSv1。它应该列出至少一个在代理端配置的协议

- ssl.truststore.type=JKS

- ssl.keystore.type=JKS

生产者和消费者共同使用到的client-ssl.properties文件内容如下:

使用 console-producer 和 console-consumer 的示例:

./bin/kafka-console-producer.sh --broker-list localhost:9092 --topic test --producer.config ./config/client-ssl.properties

./bin/kafka-console-consumer.sh --bootstrap-server localhost:9092 --topic test --consumer.config ./config/client-ssl.properties报错了:

还要在用户目录下执行如下命令,信任客户端:

keytool -keystore client.truststore.jks -alias CARoot -import -file ca-cert

keytool -keystore client.keystore.jks -alias CARoot -import -file ca-cert如果密码错了,还会报如下错误:

lighthouse@VM-8-10-ubuntu:~/kafkaWithZk/kafka_2.12-2.2.1$ ./bin/kafka-console-producer.sh --broker-list localhost:9092 --topic test --producer.config ./config/client-ssl.properties

org.apache.kafka.common.KafkaException: Failed to construct kafka producerat org.apache.kafka.clients.producer.KafkaProducer.<init>(KafkaProducer.java:431)at org.apache.kafka.clients.producer.KafkaProducer.<init>(KafkaProducer.java:299)at kafka.tools.ConsoleProducer$.main(ConsoleProducer.scala:44)at kafka.tools.ConsoleProducer.main(ConsoleProducer.scala)

Caused by: org.apache.kafka.common.KafkaException: org.apache.kafka.common.KafkaException: org.apache.kafka.common.KafkaException: Failed to load SSL keystore /home/lighthouse/client.truststore.jks of type JKSat org.apache.kafka.common.network.SslChannelBuilder.configure(SslChannelBuilder.java:73)at org.apache.kafka.common.network.ChannelBuilders.create(ChannelBuilders.java:146)at org.apache.kafka.common.network.ChannelBuilders.clientChannelBuilder(ChannelBuilders.java:67)at org.apache.kafka.clients.ClientUtils.createChannelBuilder(ClientUtils.java:99)at org.apache.kafka.clients.producer.KafkaProducer.newSender(KafkaProducer.java:439)at org.apache.kafka.clients.producer.KafkaProducer.<init>(KafkaProducer.java:420)... 3 more

Caused by: org.apache.kafka.common.KafkaException: org.apache.kafka.common.KafkaException: Failed to load SSL keystore /home/lighthouse/client.truststore.jks of type JKSat org.apache.kafka.common.security.ssl.SslFactory.configure(SslFactory.java:144)at org.apache.kafka.common.network.SslChannelBuilder.configure(SslChannelBuilder.java:71)... 8 more

Caused by: org.apache.kafka.common.KafkaException: Failed to load SSL keystore /home/lighthouse/client.truststore.jks of type JKSat org.apache.kafka.common.security.ssl.SslFactory$SecurityStore.load(SslFactory.java:357)at org.apache.kafka.common.security.ssl.SslFactory.createSSLContext(SslFactory.java:248)at org.apache.kafka.common.security.ssl.SslFactory.configure(SslFactory.java:141)... 9 more

Caused by: java.io.IOException: keystore password was incorrectat java.base/sun.security.pkcs12.PKCS12KeyStore.engineLoad(PKCS12KeyStore.java:2092)at java.base/sun.security.util.KeyStoreDelegator.engineLoad(KeyStoreDelegator.java:243)at java.base/java.security.KeyStore.load(KeyStore.java:1479)at org.apache.kafka.common.security.ssl.SslFactory$SecurityStore.load(SslFactory.java:354)... 11 more

Caused by: java.security.UnrecoverableKeyException: failed to decrypt safe contents entry: javax.crypto.BadPaddingException: Given final block not properly padded. Such issues can arise if a bad key is used during decryption.... 15 more然后我核对了client-ssl.properties文件中的配置(包含密码),再次启动producer,会报如下错:

lighthouse@VM-8-10-ubuntu:~/kafkaWithZk/kafka_2.12-2.2.1$ ./bin/kafka-console-producer.sh --broker-list localhost:9092 --topic test --producer.config ./config/client-ssl.properties

>[2024-03-19 13:42:49,783] WARN [Producer clientId=console-producer] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2024-03-19 13:42:49,835] WARN [Producer clientId=console-producer] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2024-03-19 13:42:49,937] WARN [Producer clientId=console-producer] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2024-03-19 13:42:50,140] WARN [Producer clientId=console-producer] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2024-03-19 13:42:50,543] WARN [Producer clientId=console-producer] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2024-03-19 13:42:51,298] WARN [Producer clientId=console-producer] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2024-03-19 13:42:52,203] WARN [Producer clientId=console-producer] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2024-03-19 13:42:53,158] WARN [Producer clientId=console-producer] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2024-03-19 13:42:54,264] WARN [Producer clientId=console-producer] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2024-03-19 13:42:55,220] WARN [Producer clientId=console-producer] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2024-03-19 13:42:56,376] WARN [Producer clientId=console-producer] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

^C^Clighthouse@VM-8-10-ubuntu:~/kafkaWithZk/kafka_2.12-2.2.1$核对了server.properties文件的密码后,启动kafka还是报错,报的错关键信息如下:

[2024-03-19 14:34:31,955] INFO [SocketServer brokerId=0] Failed authentication with /127.0.0.1 (SSL handshake failed) (org.apache.kafka.common.network.Selector)

[2024-03-19 14:34:31,957] WARN SSL handshake failed (kafka.utils.CoreUtils$)

org.apache.kafka.common.errors.SslAuthenticationException: SSL handshake failed

Caused by: javax.net.ssl.SSLHandshakeException: No name matching localhost foundat java.base/sun.security.ssl.Alert.createSSLException(Alert.java:131)at java.base/sun.security.ssl.TransportContext.fatal(TransportContext.java:360)at java.base/sun.security.ssl.TransportContext.fatal(TransportContext.java:303)at java.base/sun.security.ssl.TransportContext.fatal(TransportContext.java:298)at java.base/sun.security.ssl.CertificateMessage$T12CertificateConsumer.checkServerCerts(CertificateMessage.java:654)at java.base/sun.security.ssl.CertificateMessage$T12CertificateConsumer.onCertificate(CertificateMessage.java:473)at java.base/sun.security.ssl.CertificateMessage$T12CertificateConsumer.consume(CertificateMessage.java:369)at java.base/sun.security.ssl.SSLHandshake.consume(SSLHandshake.java:392)at java.base/sun.security.ssl.HandshakeContext.dispatch(HandshakeContext.java:443)at java.base/sun.security.ssl.SSLEngineImpl$DelegatedTask$DelegatedAction.run(SSLEngineImpl.java:1076)at java.base/sun.security.ssl.SSLEngineImpl$DelegatedTask$DelegatedAction.run(SSLEngineImpl.java:1063)at java.base/java.security.AccessController.doPrivileged(Native Method)at java.base/sun.security.ssl.SSLEngineImpl$DelegatedTask.run(SSLEngineImpl.java:1010)at org.apache.kafka.common.network.SslTransportLayer.runDelegatedTasks(SslTransportLayer.java:402)at org.apache.kafka.common.network.SslTransportLayer.handshakeUnwrap(SslTransportLayer.java:484)at org.apache.kafka.common.network.SslTransportLayer.doHandshake(SslTransportLayer.java:340)at org.apache.kafka.common.network.SslTransportLayer.handshake(SslTransportLayer.java:265)at org.apache.kafka.common.network.KafkaChannel.prepare(KafkaChannel.java:170)at org.apache.kafka.common.network.Selector.pollSelectionKeys(Selector.java:547)at org.apache.kafka.common.network.Selector.poll(Selector.java:483)at org.apache.kafka.clients.NetworkClient.poll(NetworkClient.java:535)at org.apache.kafka.clients.NetworkClientUtils.awaitReady(NetworkClientUtils.java:74)at kafka.server.KafkaServer.doControlledShutdown$1(KafkaServer.scala:510)at kafka.server.KafkaServer.controlledShutdown(KafkaServer.scala:563)at kafka.server.KafkaServer.$anonfun$shutdown$2(KafkaServer.scala:585)at kafka.utils.CoreUtils$.swallow(CoreUtils.scala:86)at kafka.server.KafkaServer.shutdown(KafkaServer.scala:585)at kafka.server.KafkaServerStartable.shutdown(KafkaServerStartable.scala:48)at kafka.Kafka$$anon$1.run(Kafka.scala:72)

Caused by: java.security.cert.CertificateException: No name matching localhost foundat java.base/sun.security.util.HostnameChecker.matchDNS(HostnameChecker.java:234)at java.base/sun.security.util.HostnameChecker.match(HostnameChecker.java:103)at java.base/sun.security.ssl.X509TrustManagerImpl.checkIdentity(X509TrustManagerImpl.java:461)at java.base/sun.security.ssl.X509TrustManagerImpl.checkIdentity(X509TrustManagerImpl.java:435)at java.base/sun.security.ssl.X509TrustManagerImpl.checkTrusted(X509TrustManagerImpl.java:283)at java.base/sun.security.ssl.X509TrustManagerImpl.checkServerTrusted(X509TrustManagerImpl.java:141)at java.base/sun.security.ssl.CertificateMessage$T12CertificateConsumer.checkServerCerts(CertificateMessage.java:632)... 24 more

[2024-03-19 14:34:31,957] ERROR [Controller id=0, targetBrokerId=0] Connection to node 0 (localhost/127.0.0.1:9092) failed authentication due to: SSL handshake failed (org.apache.kafka.clients.NetworkClient)

[2024-03-19 14:34:31,960] INFO [/config/changes-event-process-thread]: Shutting down (kafka.common.ZkNodeChangeNotificationListener$ChangeEventProcessThread)

[2024-03-19 14:34:31,961] INFO [/config/changes-event-process-thread]: Shutdown completed (kafka.common.ZkNodeChangeNotificationListener$ChangeEventProcessThread)

[2024-03-19 14:34:31,961] INFO [/config/changes-event-process-thread]: Stopped (kafka.common.ZkNodeChangeNotificationListener$ChangeEventProcessThread)

[2024-03-19 14:34:31,962] INFO [SocketServer brokerId=0] Stopping socket server request processors (kafka.network.SocketServer)

[2024-03-19 14:34:31,979] INFO [SocketServer brokerId=0] Stopped socket server request processors (kafka.network.SocketServer)

[2024-03-19 14:34:31,980] INFO [data-plane Kafka Request Handler on Broker 0], shutting down (kafka.server.KafkaRequestHandlerPool)

[2024-03-19 14:34:31,988] INFO [data-plane Kafka Request Handler on Broker 0], shut down completely (kafka.server.KafkaRequestHandlerPool)

[2024-03-19 14:34:31,995] INFO [KafkaApi-0] Shutdown complete. (kafka.server.KafkaApis)

[2024-03-19 14:34:31,997] INFO [ExpirationReaper-0-topic]: Shutting down (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2024-03-19 14:34:32,059] WARN [Controller id=0, targetBrokerId=0] Connection to node 0 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

^C[2024-03-19 14:34:32,114] INFO Terminating process due to signal SIGINT (org.apache.kafka.common.utils.LoggingSignalHandler)

[2024-03-19 14:34:32,132] INFO [ExpirationReaper-0-topic]: Stopped (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2024-03-19 14:34:32,132] INFO [ExpirationReaper-0-topic]: Shutdown completed (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2024-03-19 14:34:32,134] INFO [TransactionCoordinator id=0] Shutting down. (kafka.coordinator.transaction.TransactionCoordinator)

[2024-03-19 14:34:32,135] INFO [ProducerId Manager 0]: Shutdown complete: last producerId assigned 1000 (kafka.coordinator.transaction.ProducerIdManager)

[2024-03-19 14:34:32,136] INFO [Transaction State Manager 0]: Shutdown complete (kafka.coordinator.transaction.TransactionStateManager)

[2024-03-19 14:34:32,136] INFO [Transaction Marker Channel Manager 0]: Shutting down (kafka.coordinator.transaction.TransactionMarkerChannelManager)

[2024-03-19 14:34:32,139] INFO [Transaction Marker Channel Manager 0]: Stopped (kafka.coordinator.transaction.TransactionMarkerChannelManager)

[2024-03-19 14:34:32,140] INFO [Transaction Marker Channel Manager 0]: Shutdown completed (kafka.coordinator.transaction.TransactionMarkerChannelManager)

[2024-03-19 14:34:32,141] INFO [TransactionCoordinator id=0] Shutdown complete. (kafka.coordinator.transaction.TransactionCoordinator)

[2024-03-19 14:34:32,141] INFO [GroupCoordinator 0]: Shutting down. (kafka.coordinator.group.GroupCoordinator)

[2024-03-19 14:34:32,144] INFO [ExpirationReaper-0-Heartbeat]: Shutting down (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2024-03-19 14:34:32,160] WARN [Controller id=0, targetBrokerId=0] Connection to node 0 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2024-03-19 14:34:32,261] WARN [Controller id=0, targetBrokerId=0] Connection to node 0 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2024-03-19 14:34:32,344] INFO [ExpirationReaper-0-Heartbeat]: Shutdown completed (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2024-03-19 14:34:32,344] INFO [ExpirationReaper-0-Heartbeat]: Stopped (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2024-03-19 14:34:32,344] INFO [ExpirationReaper-0-Rebalance]: Shutting down (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2024-03-19 14:34:32,362] INFO [ExpirationReaper-0-Rebalance]: Stopped (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2024-03-19 14:34:32,362] WARN [Controller id=0, targetBrokerId=0] Connection to node 0 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2024-03-19 14:34:32,363] INFO [ExpirationReaper-0-Rebalance]: Shutdown completed (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2024-03-19 14:34:32,363] INFO [GroupCoordinator 0]: Shutdown complete. (kafka.coordinator.group.GroupCoordinator)

[2024-03-19 14:34:32,364] INFO [ReplicaManager broker=0] Shutting down (kafka.server.ReplicaManager)

[2024-03-19 14:34:32,364] INFO [LogDirFailureHandler]: Shutting down (kafka.server.ReplicaManager$LogDirFailureHandler)

[2024-03-19 14:34:32,366] INFO [LogDirFailureHandler]: Stopped (kafka.server.ReplicaManager$LogDirFailureHandler)

[2024-03-19 14:34:32,366] INFO [LogDirFailureHandler]: Shutdown completed (kafka.server.ReplicaManager$LogDirFailureHandler)

[2024-03-19 14:34:32,368] INFO [ReplicaFetcherManager on broker 0] shutting down (kafka.server.ReplicaFetcherManager)

[2024-03-19 14:34:32,369] INFO [ReplicaFetcherManager on broker 0] shutdown completed (kafka.server.ReplicaFetcherManager)

[2024-03-19 14:34:32,370] INFO [ReplicaAlterLogDirsManager on broker 0] shutting down (kafka.server.ReplicaAlterLogDirsManager)

[2024-03-19 14:34:32,370] INFO [ReplicaAlterLogDirsManager on broker 0] shutdown completed (kafka.server.ReplicaAlterLogDirsManager)

[2024-03-19 14:34:32,370] INFO [ExpirationReaper-0-Fetch]: Shutting down (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2024-03-19 14:34:32,463] WARN [Controller id=0, targetBrokerId=0] Connection to node 0 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2024-03-19 14:34:32,492] INFO [ExpirationReaper-0-Fetch]: Shutdown completed (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2024-03-19 14:34:32,492] INFO [ExpirationReaper-0-Fetch]: Stopped (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2024-03-19 14:34:32,492] INFO [ExpirationReaper-0-Produce]: Shutting down (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2024-03-19 14:34:32,564] WARN [Controller id=0, targetBrokerId=0] Connection to node 0 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2024-03-19 14:34:32,666] WARN [Controller id=0, targetBrokerId=0] Connection to node 0 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2024-03-19 14:34:32,674] INFO [ExpirationReaper-0-Produce]: Stopped (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2024-03-19 14:34:32,674] INFO [ExpirationReaper-0-Produce]: Shutdown completed (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2024-03-19 14:34:32,674] INFO [ExpirationReaper-0-DeleteRecords]: Shutting down (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2024-03-19 14:34:32,692] INFO [ExpirationReaper-0-DeleteRecords]: Stopped (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2024-03-19 14:34:32,692] INFO [ExpirationReaper-0-DeleteRecords]: Shutdown completed (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2024-03-19 14:34:32,693] INFO [ExpirationReaper-0-ElectPreferredLeader]: Shutting down (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2024-03-19 14:34:32,768] WARN [Controller id=0, targetBrokerId=0] Connection to node 0 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2024-03-19 14:34:32,870] WARN [Controller id=0, targetBrokerId=0] Connection to node 0 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2024-03-19 14:34:32,893] INFO [ExpirationReaper-0-ElectPreferredLeader]: Shutdown completed (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2024-03-19 14:34:32,893] INFO [ExpirationReaper-0-ElectPreferredLeader]: Stopped (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2024-03-19 14:34:32,897] INFO [ReplicaManager broker=0] Shut down completely (kafka.server.ReplicaManager)

[2024-03-19 14:34:32,898] INFO Shutting down. (kafka.log.LogManager)

[2024-03-19 14:34:32,934] INFO Shutdown complete. (kafka.log.LogManager)

[2024-03-19 14:34:32,960] INFO [ZooKeeperClient] Closing. (kafka.zookeeper.ZooKeeperClient)

[2024-03-19 14:34:32,964] INFO Session: 0x100cd6124170002 closed (org.apache.zookeeper.ZooKeeper)

[2024-03-19 14:34:32,966] INFO EventThread shut down for session: 0x100cd6124170002 (org.apache.zookeeper.ClientCnxn)

[2024-03-19 14:34:32,966] INFO [ZooKeeperClient] Closed. (kafka.zookeeper.ZooKeeperClient)

[2024-03-19 14:34:32,968] INFO [ThrottledChannelReaper-Fetch]: Shutting down (kafka.server.ClientQuotaManager$ThrottledChannelReaper)

[2024-03-19 14:34:33,168] INFO [ThrottledChannelReaper-Fetch]: Stopped (kafka.server.ClientQuotaManager$ThrottledChannelReaper)

[2024-03-19 14:34:33,168] INFO [ThrottledChannelReaper-Fetch]: Shutdown completed (kafka.server.ClientQuotaManager$ThrottledChannelReaper)

[2024-03-19 14:34:33,168] INFO [ThrottledChannelReaper-Produce]: Shutting down (kafka.server.ClientQuotaManager$ThrottledChannelReaper)

[2024-03-19 14:34:33,170] INFO [ThrottledChannelReaper-Produce]: Stopped (kafka.server.ClientQuotaManager$ThrottledChannelReaper)

[2024-03-19 14:34:33,170] INFO [ThrottledChannelReaper-Produce]: Shutdown completed (kafka.server.ClientQuotaManager$ThrottledChannelReaper)

[2024-03-19 14:34:33,170] INFO [ThrottledChannelReaper-Request]: Shutting down (kafka.server.ClientQuotaManager$ThrottledChannelReaper)

^C[2024-03-19 14:34:33,740] INFO Terminating process due to signal SIGINT (org.apache.kafka.common.utils.LoggingSignalHandler)

^C[2024-03-19 14:34:33,972] INFO Terminating process due to signal SIGINT (org.apache.kafka.common.utils.LoggingSignalHandler)

[2024-03-19 14:34:34,170] INFO [ThrottledChannelReaper-Request]: Stopped (kafka.server.ClientQuotaManager$ThrottledChannelReaper)

[2024-03-19 14:34:34,170] INFO [ThrottledChannelReaper-Request]: Shutdown completed (kafka.server.ClientQuotaManager$ThrottledChannelReaper)

[2024-03-19 14:34:34,172] INFO [SocketServer brokerId=0] Shutting down socket server (kafka.network.SocketServer)

^C[2024-03-19 14:34:34,204] INFO [SocketServer brokerId=0] Shutdown completed (kafka.network.SocketServer)

[2024-03-19 14:34:34,204] INFO Terminating process due to signal SIGINT (org.apache.kafka.common.utils.LoggingSignalHandler)

[2024-03-19 14:34:34,206] INFO [KafkaServer id=0] shut down completed (kafka.server.KafkaServer)经过一番清理ca-*,cert-*,client-*,server-*文件后,然后重新生成秘钥证书和CA、签名,步骤如下:

一、生成 SSL 密钥和证书

keytool -keystore server.keystore.jks -alias localhost -validity 700 -genkey -keyalg RSA

keytool -keystore server.truststore.jks -alias localhost -validity 700 -genkey -keyalg RSA

keytool -keystore client.keystore.jks -alias localhost -validity 700 -genkey -keyalg RSA

keytool -keystore client.truststore.jks -alias localhost -validity 700 -genkey -keyalg RSA2、创建我自己的CA

openssl req -new -x509 -keyout ca-key -out ca-cert -days 700

keytool -keystore server.keystore.jks -alias localhost -certreq -file cert-file

openssl x509 -req -CA ca-cert -CAkey ca-key -in cert-file -out cert-signed -days 700 -CAcreateserial -passin pass:1234563、对证书进行签名

keytool -keystore server.keystore.jks -alias CARoot -import -file ca-cert

keytool -keystore server.keystore.jks -alias localhost -import -file cert-signedkeytool -keystore server.truststore.jks -alias CARoot -import -file ca-certkeytool -keystore client.keystore.jks -alias CARoot -import -file ca-certkeytool -keystore client.truststore.jks -alias CARoot -import -file ca-cert再次启动zookeeper和kafka,然后执行生产producer命令,发现还是报错:

[2024-03-19 17:52:38,773] INFO [SocketServer brokerId=0] Failed authentication with /127.0.0.1 (SSL handshake failed) (org.apache.kafka.common.network.Selector)

[2024-03-19 17:52:38,876] INFO [Controller id=0, targetBrokerId=0] Failed authentication with localhost/127.0.0.1 (SSL handshake failed) (org.apache.kafka.common.network.Selector)

[2024-03-19 17:52:38,876] ERROR [Controller id=0, targetBrokerId=0] Connection to node 0 (localhost/127.0.0.1:9092) failed authentication due to: SSL handshake failed (org.apache.kafka.clients.NetworkClient)

[2024-03-19 17:52:38,876] INFO [SocketServer brokerId=0] Failed authentication with /127.0.0.1 (SSL handshake failed) (org.apache.kafka.common.network.Selector)

[2024-03-19 17:52:38,979] INFO [Controller id=0, targetBrokerId=0] Failed authentication with localhost/127.0.0.1 (SSL handshake failed) (org.apache.kafka.common.netw

ork.Selector)

[2024-03-19 17:52:38,980] ERROR [Controller id=0, targetBrokerId=0] Connection to node 0 (localhost/127.0.0.1:9092) failed authentication due to: SSL handshake failed(org.apache.kafka.clients.NetworkClient)

[2024-03-19 17:52:38,980] INFO [SocketServer brokerId=0] Failed authentication with /127.0.0.1 (SSL handshake failed) (org.apache.kafka.common.network.Selector)

[2024-03-19 17:52:39,083] INFO [Controller id=0, targetBrokerId=0] Failed authentication with localhost/127.0.0.1 (SSL handshake failed) (org.apache.kafka.common.netw

ork.Selector)

[2024-03-19 17:52:39,083] INFO [SocketServer brokerId=0] Failed authentication with /127.0.0.1 (SSL handshake failed) (org.apache.kafka.common.network.Selector)

[2024-03-19 17:52:39,083] ERROR [Controller id=0, targetBrokerId=0] Connection to node 0 (localhost/127.0.0.1:9092) failed authentication due to: SSL handshake failed(org.apache.kafka.clients.NetworkClient)相关文章:

kafka2.x版本配置SSL进行加密和身份验证

背景:找了一圈资料,都是东讲讲西讲讲,最后我还没搞好,最终决定参考官网说明。 官网指导手册地址:Apache Kafka 需要预备的知识,keytool和openssl 关于keytool的参考:keytool的使用-CSDN博客 …...

Linux和Windows下的文件批量重命名

一、Linux下文件批量重命名 rename命令说明: Usage: rename [options] … Rename files. Options: -v, --verbose explain what is being done -s, --symlink act on the target of symlinks -n, --no-act do not make any changes -o, --no-overwrite don’t overw…...

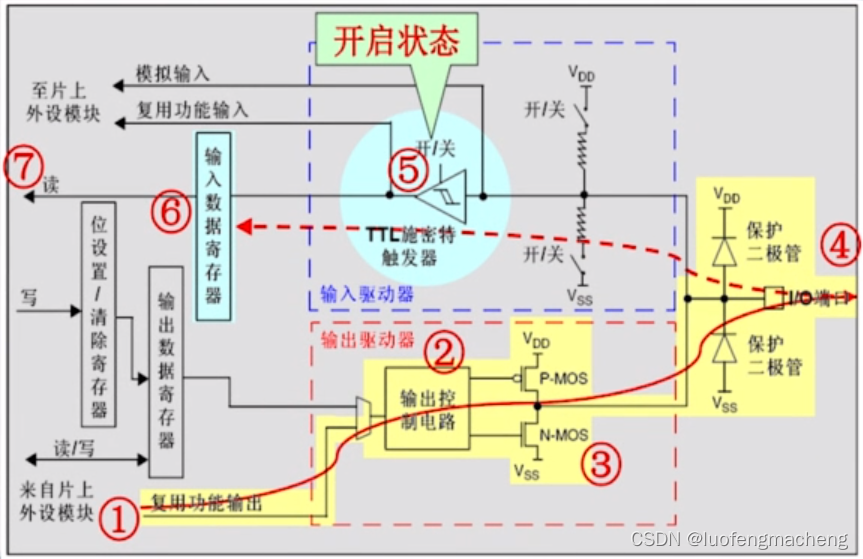

stm32之GPIO电路介绍

文章目录 1 GPIO介绍2 GPIO的工作模式2.1 浮空输入2.2 上拉输入2.3 下拉输入2.4 模拟输入2.5 开漏输出2.6 推挽输出2.7 复用开漏输出2.8 复用推挽输出2.9 其他 3 应用方式4 常用库函数 1 GPIO介绍 保护二极管:保护引脚,让引脚的电压位于正常的范围施密特…...

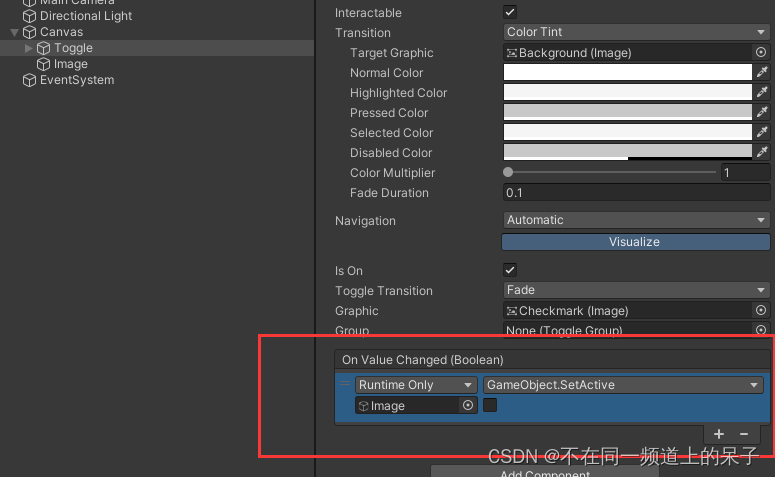

Unity Toggle处理状态变化事件

Toggle处理状态变化事件,有两个方法。 法一、通过Inspector面板设置 实现步骤: 在Inspector面板中找到Toggle组件的"On Value Changed"事件。单击""按钮添加一个新的监听器。拖动一个目标对象到"None (Object)"字段&am…...

UE5.1 iClone8 正确导入角色骨骼与动作

使用iClone8插件Auto Setup 附录下载链接 里面有两个文件夹,使用Auto Setup C:\Program Files\Reallusion\Shared Plugins 在UE内新建Plugins,把插件复制进去 在工具栏出现这三个人物的图标就安装成功了 iClone选择角色,导入动作 选择导出FBX UE内直接导入 会出现是否启动插件…...

FFmpeg-- c++实现:pcm和yuv编码

文章目录 流程音频视频 api核心代码audioencoder.haudioencoder.cppvideoencoder.hvideoencoder.cpp pcm和yuv编码为aac和h264,封装为c的AudioEncoder类和VideoEncoder类 流程 音频 初始化音频参数 int InitAAC(int channels, int sample_rate, int bit_rate); 音…...

图解CodeWhisperer的安装使用

🎬 江城开朗的豌豆:个人主页 🔥 个人专栏 :《 VUE 》 《 javaScript 》 📝 个人网站 :《 江城开朗的豌豆🫛 》 ⛺️ 生活的理想,就是为了理想的生活 ! 目录 📘 CodeWhisperer简介 &#…...

Python内置对象

Python是一种强大的、动态类型的高级编程语言,其内置对象是构成程序的基础元素。Python的内置对象包括数字、字符串、列表、元组、字典、集合、布尔值和None等,每种对象都有特定的类型和用途。 01 什么是内置对象 这些对象是编程语言的基础构建块&…...

开源数据集 nuScenes 之 3D Occupancy Prediction

数据总体结构 Nuscenes 数据结构 可以看一下我的blog如何下载完整版 mmdetection3d ├── mmdet3d ├── tools ├── configs ├── data │ ├── nuscenes │ │ ├── maps │ │ ├── samples │ │ ├── sweeps │ │ ├── lidarseg (o…...

物联网竞赛板CubMx全部功能简洁配置汇总

目录 前言:1、按键&LED灯配置:2、OLED配置:3、继电器配置:4、LORA模块配置:5、矩阵模块:6、串口模块:7、RTC配置:8、ADC模块配置:9、温度传感器模块:后续…...

使用Redis做缓存的小案例

如果不了解Redis,可以查看本人博客:Redis入门 Redis基于内存,因此查询速度快,常常可以用来作为缓存使用,缓存就是我们在内存中开辟一段区域来存储我们查询比较频繁的数据,这样,我们在下一次查询…...

剧本杀小程序功能介绍

剧本杀功能介绍 剧本杀,一种融合了角色扮演与推理解谜的社交游戏,近年来在年轻人中越来越受欢迎。它不仅可以锻炼参与者的逻辑推理能力,还能增进朋友间的感情,提升团队协作能力。下面,我们将详细介绍剧本杀的核心功能…...

)

C#基础语法学习笔记(传智播客学习)

最近在学习C#开发知识,跟着传智播客的视频学习了一下,感觉还不错,整理一下学习笔记。 C#基础语法学习笔记 1.背景知识2.Visual Studio使用3.基础知识4.变量5.运算符与表达式6.程序调试7.判断结构8.循环结构9.常量、枚举类型10.结构体类型11.数…...

)

图论01-DFS和BFS(深搜和广搜邻接矩阵和邻接表/Java)

1.深度优先理论基础(dfs) dfs的两个关键操作 搜索方向,是认准一个方向搜,直到碰壁之后再换方向 换方向是撤销原路径,改为节点链接的下一个路径,回溯的过程。dfs解题模板 void dfs(参数) {if (终止条件) {存放结果;return;}for …...

【Python】Miniconda+Vscode+Jupyter 环境搭建

1.安装 Miniconda Conda 是一个开源的包管理和环境管理系统,可在 Windows、macOS 和 Linux 上运行,它可以快速安装、运行和更新软件包及其依赖项。使用 Conda,我们可以轻松在本地计算机上创建、保存、加载和切换不同的环境 Conda 分为 Anaco…...

Redis消息队列与thinkphp/queue操作

业务场景 场景一 用户完成注册后需要发送欢迎注册的问候邮件、同时后台要发送实时消息给用户对应的业务员有新的客户注册、最后将用户的注册数据通过接口推送到一个营销用的第三方平台。 遇到两个问题: 由于代码是串行方式,流程大致为:开…...

【Ubuntu】常用命令

一般操作 pwd(present working directory) 显示当前的工作目录/路径。 cd (change directory) 改变目录,用于输入需要前往的路径/目录。 有一些特殊命令也很常用 : 解释 前往同一级的另一个目录 cd ../directory name cd .. 表示进入上…...

稀碎从零算法笔记Day22-LeetCode:

题型:链表 链接:2. 两数相加 - 力扣(LeetCode) 来源:Leet 题目描述 给你两个 非空 的链表,表示两个非负的整数。它们每位数字都是按照 逆序 的方式存储的,并且每个节点只能存储 一位 数字。 …...

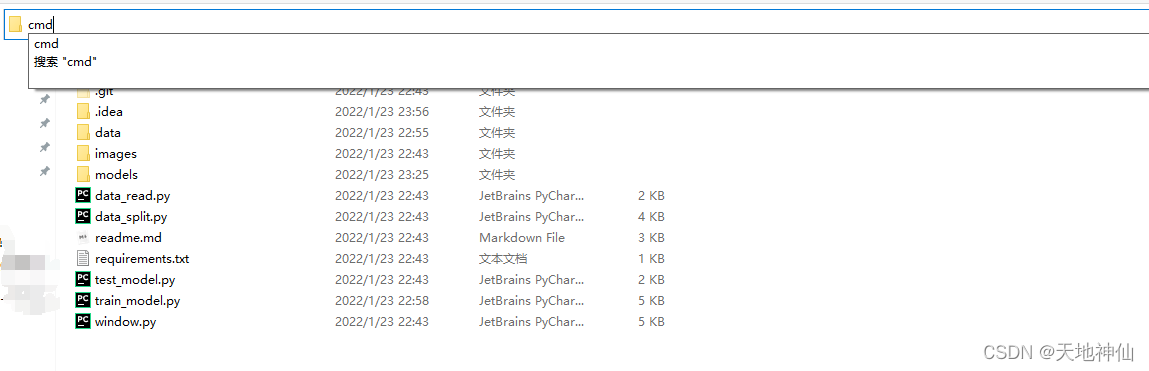

Nacos下载和安装

(1)下载地址和版本 下载地址:Releases alibaba/nacos GitHub 解压在没有中文及空格的文件夹 (2)启动nacos服务 在bin目录下,打开命令行,输入 启动命令:sh startup.sh -m standalone - Linux/Unix/Mac …...

)

pandas简介(python)

pandas是什么 Pandas 是一个开源的第三方 Python 库,从 Numpy 和 Matplotlib 的基础上构建而来,享有数据分析“三剑客之一”的盛名(NumPy、Matplotlib、Pandas)。Pandas 已经成为 Python 数据分析的必备高级工具,它的…...

docker详细操作--未完待续

docker介绍 docker官网: Docker:加速容器应用程序开发 harbor官网:Harbor - Harbor 中文 使用docker加速器: Docker镜像极速下载服务 - 毫秒镜像 是什么 Docker 是一种开源的容器化平台,用于将应用程序及其依赖项(如库、运行时环…...

)

rknn优化教程(二)

文章目录 1. 前述2. 三方库的封装2.1 xrepo中的库2.2 xrepo之外的库2.2.1 opencv2.2.2 rknnrt2.2.3 spdlog 3. rknn_engine库 1. 前述 OK,开始写第二篇的内容了。这篇博客主要能写一下: 如何给一些三方库按照xmake方式进行封装,供调用如何按…...

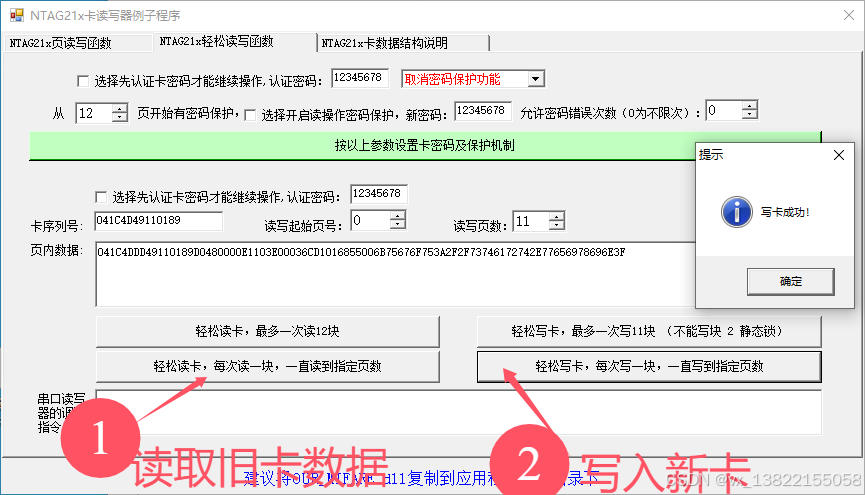

VB.net复制Ntag213卡写入UID

本示例使用的发卡器:https://item.taobao.com/item.htm?ftt&id615391857885 一、读取旧Ntag卡的UID和数据 Private Sub Button15_Click(sender As Object, e As EventArgs) Handles Button15.Click轻松读卡技术支持:网站:Dim i, j As IntegerDim cardidhex, …...

LeetCode - 394. 字符串解码

题目 394. 字符串解码 - 力扣(LeetCode) 思路 使用两个栈:一个存储重复次数,一个存储字符串 遍历输入字符串: 数字处理:遇到数字时,累积计算重复次数左括号处理:保存当前状态&a…...

2.Vue编写一个app

1.src中重要的组成 1.1main.ts // 引入createApp用于创建应用 import { createApp } from "vue"; // 引用App根组件 import App from ./App.vue;createApp(App).mount(#app)1.2 App.vue 其中要写三种标签 <template> <!--html--> </template>…...

uniapp微信小程序视频实时流+pc端预览方案

方案类型技术实现是否免费优点缺点适用场景延迟范围开发复杂度WebSocket图片帧定时拍照Base64传输✅ 完全免费无需服务器 纯前端实现高延迟高流量 帧率极低个人demo测试 超低频监控500ms-2s⭐⭐RTMP推流TRTC/即构SDK推流❌ 付费方案 (部分有免费额度&#x…...

html-<abbr> 缩写或首字母缩略词

定义与作用 <abbr> 标签用于表示缩写或首字母缩略词,它可以帮助用户更好地理解缩写的含义,尤其是对于那些不熟悉该缩写的用户。 title 属性的内容提供了缩写的详细说明。当用户将鼠标悬停在缩写上时,会显示一个提示框。 示例&#x…...

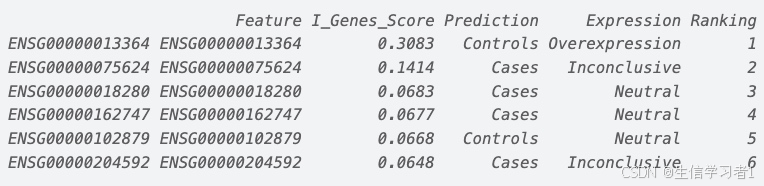

【数据分析】R版IntelliGenes用于生物标志物发现的可解释机器学习

禁止商业或二改转载,仅供自学使用,侵权必究,如需截取部分内容请后台联系作者! 文章目录 介绍流程步骤1. 输入数据2. 特征选择3. 模型训练4. I-Genes 评分计算5. 输出结果 IntelliGenesR 安装包1. 特征选择2. 模型训练和评估3. I-Genes 评分计…...

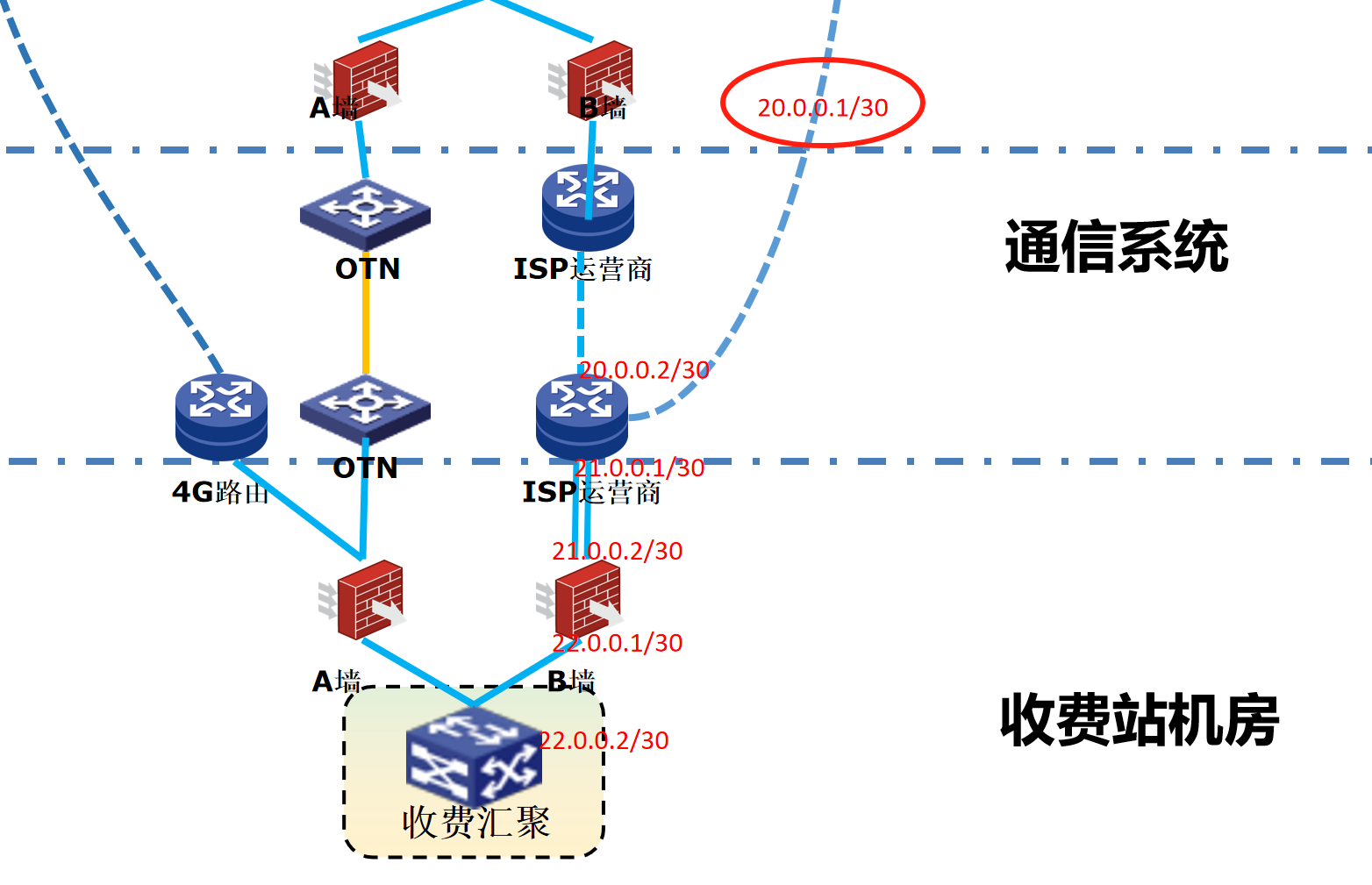

浪潮交换机配置track检测实现高速公路收费网络主备切换NQA

浪潮交换机track配置 项目背景高速网络拓扑网络情况分析通信线路收费网络路由 收费汇聚交换机相应配置收费汇聚track配置 项目背景 在实施省内一条高速公路时遇到的需求,本次涉及的主要是收费汇聚交换机的配置,浪潮网络设备在高速项目很少,通…...

比较数据迁移后MySQL数据库和OceanBase数据仓库中的表

设计一个MySQL数据库和OceanBase数据仓库的表数据比较的详细程序流程,两张表是相同的结构,都有整型主键id字段,需要每次从数据库分批取得2000条数据,用于比较,比较操作的同时可以再取2000条数据,等上一次比较完成之后,开始比较,直到比较完所有的数据。比较操作需要比较…...