DataX案例,MongoDB数据导入HDFS与MySQL

- 【尚硅谷】Alibaba开源数据同步工具DataX技术教程_哔哩哔哩_bilibili

目录

1、MongoDB

1.1、MongoDB介绍

1.2、MongoDB基本概念解析

1.3、MongoDB中的数据存储结构

1.4、MongoDB启动服务

1.5、MongoDB小案例

2、DataX导入导出案例

2.1、读取MongoDB的数据导入到HDFS

2.1.1、mongodb2hdfs.json

2.2.2、mongodb数据

2.2.3、运行命令

2.2、读取MongoDB的数据导入 MySQL

2.2.1、mongodb2mysql.json

2.2.2、MySQL数据

2.2.3、运行命令

1、MongoDB

1.1、MongoDB介绍

- MongoDB:应用程序数据平台 | MongoDB

1.2、MongoDB基本概念解析

1.3、MongoDB中的数据存储结构

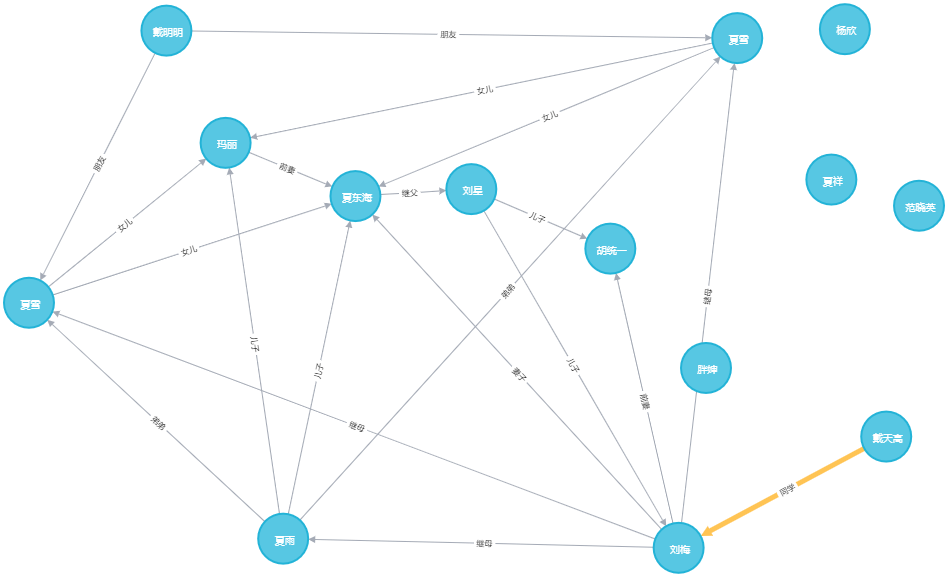

通过下图实例,我们也可以更直观的了解 Mongo 中的一些概念:

1.4、MongoDB启动服务

1、启动 MongoDB 服务

[atguigu@node001 mongodb-5.0.2]$ pwd

/opt/module/mongoDB/mongodb-5.0.2

[atguigu@node001 mongodb-5.0.2]$ bin/mongod --bind_ip 0.0.0.02、进入 shell 页面

[atguigu@node001 bin]$ pwd

/opt/module/mongoDB/mongodb-5.0.2/bin

[atguigu@node001 bin]$ /opt/module/mongoDB/mongodb-5.0.2/bin/mongo

5)启动 MongoDB 服务

[atguigu@hadoop102 mongodb]$ bin/mongod

6)进入 shell 页面

[atguigu@hadoop102 mongodb]$ bin/mongo[atguigu@node001 mongodb-5.0.2]$ pwd

/opt/module/mongoDB/mongodb-5.0.2

[atguigu@node001 mongodb-5.0.2]$ sudo mkdir -p /data/db/

[atguigu@node001 mongodb-5.0.2]$ sudo mkdir -p /opt/module/mongoDB/mongodb-5.0.2/data/db/

[atguigu@node001 mongodb-5.0.2]$ sudo chmod 777 -R /data/db/

[atguigu@node001 mongodb-5.0.2]$ sudo chmod 777 -R /opt/module/mongoDB/mongodb-5.0.2/data/db/

[atguigu@node001 mongodb-5.0.2]$ bin/mongod[atguigu@node001 bin]$ /opt/module/mongoDB/mongodb-5.0.2/bin/mongo

MongoDB shell version v5.0.2

connecting to: mongodb://127.0.0.1:27017/?compressors=disabled&gssapiServiceName=mongodb

Implicit session: session { "id" : UUID("b9e6b776-c67d-4a8d-8e31-c0350850065e") }

MongoDB server version: 5.0.2

================

Warning: the "mongo" shell has been superseded by "mongosh",

which delivers improved usability and compatibility.The "mongo" shell has been deprecated and will be removed in

an upcoming release.

We recommend you begin using "mongosh".

For installation instructions, see

https://docs.mongodb.com/mongodb-shell/install/

================

Welcome to the MongoDB shell.

For interactive help, type "help".

For more comprehensive documentation, seehttps://docs.mongodb.com/

Questions? Try the MongoDB Developer Community Forumshttps://community.mongodb.com

---

The server generated these startup warnings when booting: 2024-04-09T15:39:39.728+08:00: Using the XFS filesystem is strongly recommended with the WiredTiger storage engine. See http://dochub.mongodb.org/core/prodnotes-filesystem2024-04-09T15:39:40.744+08:00: Access control is not enabled for the database. Read and write access to data and configuration is unrestricted2024-04-09T15:39:40.744+08:00: This server is bound to localhost. Remote systems will be unable to connect to this server. Start the server with --bind_ip <address> to specify which IP addresses it should serve responses from, or with --bind_ip_all to bind to all interfaces. If this behavior is desired, start the server with --bind_ip 127.0.0.1 to disable this warning2024-04-09T15:39:40.748+08:00: /sys/kernel/mm/transparent_hugepage/enabled is 'always'. We suggest setting it to 'never'2024-04-09T15:39:40.749+08:00: /sys/kernel/mm/transparent_hugepage/defrag is 'always'. We suggest setting it to 'never'

---

---Enable MongoDB's free cloud-based monitoring service, which will then receive and displaymetrics about your deployment (disk utilization, CPU, operation statistics, etc).The monitoring data will be available on a MongoDB website with a unique URL accessible to youand anyone you share the URL with. MongoDB may use this information to make productimprovements and to suggest MongoDB products and deployment options to you.To enable free monitoring, run the following command: db.enableFreeMonitoring()To permanently disable this reminder, run the following command: db.disableFreeMonitoring()

---

>

1.5、MongoDB小案例

> show databases;

admin 0.000GB

config 0.000GB

local 0.000GB

> show tables;

> db

test

> use test

switched to db test

> db.create

db.createCollection( db.createRole( db.createUser( db.createView(

> db.createCollection("atguigu")

{ "ok" : 1 }

> show tables;

atguigu

> db.atguigu.insert({"name":"atguigu","url":"www.atguigu.com"})

WriteResult({ "nInserted" : 1 })

> db.atguigu.find()

{ "_id" : ObjectId("6614f3467fc519a5008ef01a"), "name" : "atguigu", "url" : "www.atguigu.com" }

> db.createCollection("mycol",{ capped : true,autoIndexId : true,size : 6142800, max :

... 1000})

{"note" : "The autoIndexId option is deprecated and will be removed in a future release","ok" : 1

}

> show tables;

atguigu

mycol

> db.mycol2.insert({"name":"atguigu"})

WriteResult({ "nInserted" : 1 })

> show collections

atguigu

mycol

mycol2

> 2、DataX导入导出案例

2.1、读取MongoDB的数据导入到HDFS

2.1.1、mongodb2hdfs.json

[atguigu@node001 ~]$ cd /opt/module/datax

[atguigu@node001 datax]$ bin/datax.py -r mongodbreader -w hdfswriterDataX (DATAX-OPENSOURCE-3.0), From Alibaba !

Copyright (C) 2010-2017, Alibaba Group. All Rights Reserved.Please refer to the mongodbreader document:https://github.com/alibaba/DataX/blob/master/mongodbreader/doc/mongodbreader.md Please refer to the hdfswriter document:https://github.com/alibaba/DataX/blob/master/hdfswriter/doc/hdfswriter.md Please save the following configuration as a json file and usepython {DATAX_HOME}/bin/datax.py {JSON_FILE_NAME}.json

to run the job.{"job": {"content": [{"reader": {"name": "mongodbreader", "parameter": {"address": [], "collectionName": "", "column": [], "dbName": "", "userName": "", "userPassword": ""}}, "writer": {"name": "hdfswriter", "parameter": {"column": [], "compress": "", "defaultFS": "", "fieldDelimiter": "", "fileName": "", "fileType": "", "path": "", "writeMode": ""}}}], "setting": {"speed": {"channel": ""}}}

}

[atguigu@node001 datax]$ {"job": {"content": [{"reader": {"name": "mongodbreader","parameter": {"address": ["node001:27017"],"collectionName": "atguigu","column": [{"name": "name","type": "string"},{"name": "url","type": "string"}],"dbName": "test","userName": "","userPassword": ""}},"writer": {"name": "hdfswriter","parameter": {"column": [{"name": "name","type": "string"},{"name": "url","type": "string"}],"compress": "","defaultFS": "hdfs://node001:8020","fieldDelimiter": "\t","fileName": "mongoDB001.txt","fileType": "text","path": "/mongoDB","writeMode": "append"}}}],"setting": {"speed": {"channel": "1"}}}

}2.2.2、mongodb数据

> db.atguigu.find()

{ "_id" : ObjectId("6614f3467fc519a5008ef01a"), "name" : "atguigu", "url" : "www.atguigu.com" }

> db.atguigu.insert({"name":"atguigu001","url":"www.atguigu.com001"})

WriteResult({ "nInserted" : 1 })

> db.atguigu.insert({"name":"atguigu002","url":"www.atguigu.com002"})

WriteResult({ "nInserted" : 1 })

> db.atguigu.insert({"name":"atguigu003","url":"www.atguigu.com003"})

WriteResult({ "nInserted" : 1 })

> db.atguigu.find()

{ "_id" : ObjectId("6614f3467fc519a5008ef01a"), "name" : "atguigu", "url" : "www.atguigu.com" }

{ "_id" : ObjectId("6614fb187fc519a5008ef01c"), "name" : "atguigu001", "url" : "www.atguigu.com001" }

{ "_id" : ObjectId("6614fb207fc519a5008ef01d"), "name" : "atguigu002", "url" : "www.atguigu.com002" }

{ "_id" : ObjectId("6614fb297fc519a5008ef01e"), "name" : "atguigu003", "url" : "www.atguigu.com003" }

> 2.2.3、运行命令

[atguigu@node001 mongodb-5.0.2]$ bin/mongod --bind_ip 0.0.0.0

{"t":{"$date":"2024-04-09T16:11:21.173+08:00"},"s":"I", "c":"NETWORK", "id":4915701, "ctx":"-","msg":"Initialized wire specification","attr":{"spec":{"incomingExternalClient":{"minWireVersion":0,"maxWireVersion":13},"incomingInternalClient":{"minWireVersion":0,"maxWireVersion":13},"outgoing":{"minWireVersion":0,"maxWireVersion":13},"isInternalClient":true}}}[atguigu@node001 datax]$ bin/datax.py job/mongodb/mongdb2hdfs.json DataX (DATAX-OPENSOURCE-3.0), From Alibaba !

Copyright (C) 2010-2017, Alibaba Group. All Rights Reserved.2024-04-09 16:13:00.914 [main] INFO VMInfo - VMInfo# operatingSystem class => sun.management.OperatingSystemImpl

2024-04-09 16:13:00.930 [main] INFO Engine - the machine info => osInfo: Red Hat, Inc. 1.8 25.372-b07jvmInfo: Linux amd64 3.10.0-862.el7.x86_64cpu num: 4totalPhysicalMemory: -0.00GfreePhysicalMemory: -0.00GmaxFileDescriptorCount: -1currentOpenFileDescriptorCount: -1GC Names [PS MarkSweep, PS Scavenge]MEMORY_NAME | allocation_size | init_size PS Eden Space | 256.00MB | 256.00MB Code Cache | 240.00MB | 2.44MB Compressed Class Space | 1,024.00MB | 0.00MB PS Survivor Space | 42.50MB | 42.50MB PS Old Gen | 683.00MB | 683.00MB Metaspace | -0.00MB | 0.00MB 2024-04-09 16:13:00.959 [main] INFO Engine -

{"content":[{"reader":{"name":"mongodbreader","parameter":{"address":["node001:27017"],"collectionName":"atguigu","column":[{"name":"name","type":"string"},{"name":"url","type":"string"}],"dbName":"test","userName":"","userPassword":""}},"writer":{"name":"hdfswriter","parameter":{"column":[{"name":"name","type":"string"},{"name":"url","type":"string"}],"compress":"","defaultFS":"hdfs://node001:8020","fieldDelimiter":"\t","fileName":"mongo.txt","fileType":"text","path":"/","writeMode":"append"}}}],"setting":{"speed":{"channel":"1"}}

}2024-04-09 16:13:00.986 [main] WARN Engine - prioriy set to 0, because NumberFormatException, the value is: null

2024-04-09 16:13:00.990 [main] INFO PerfTrace - PerfTrace traceId=job_-1, isEnable=false, priority=0

2024-04-09 16:13:00.990 [main] INFO JobContainer - DataX jobContainer starts job.

2024-04-09 16:13:00.993 [main] INFO JobContainer - Set jobId = 0

2024-04-09 16:13:01.175 [job-0] INFO cluster - Cluster created with settings {hosts=[node001:27017], mode=MULTIPLE, requiredClusterType=UNKNOWN, serverSelectionTimeout='30000 ms', maxWaitQueueSize=500}

2024-04-09 16:13:01.175 [job-0] INFO cluster - Adding discovered server node001:27017 to client view of cluster

2024-04-09 16:13:01.405 [cluster-ClusterId{value='6614f88d21b10d1b9122f208', description='null'}-node001:27017] INFO connection - Opened connection [connectionId{localValue:1, serverValue:2}] to node001:27017

2024-04-09 16:13:01.408 [cluster-ClusterId{value='6614f88d21b10d1b9122f208', description='null'}-node001:27017] INFO cluster - Monitor thread successfully connected to server with description ServerDescription{address=node001:27017, type=STANDALONE, state=CONNECTED, ok=true, version=ServerVersion{versionList=[5, 0, 2]}, minWireVersion=0, maxWireVersion=13, maxDocumentSize=16777216, roundTripTimeNanos=1825826}

2024-04-09 16:13:01.410 [cluster-ClusterId{value='6614f88d21b10d1b9122f208', description='null'}-node001:27017] INFO cluster - Discovered cluster type of STANDALONE

四月 09, 2024 4:13:02 下午 org.apache.hadoop.util.NativeCodeLoader <clinit>

警告: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2024-04-09 16:13:02.883 [job-0] INFO JobContainer - jobContainer starts to do prepare ...

2024-04-09 16:13:02.886 [job-0] INFO JobContainer - DataX Reader.Job [mongodbreader] do prepare work .

2024-04-09 16:13:02.887 [job-0] INFO JobContainer - DataX Writer.Job [hdfswriter] do prepare work .

2024-04-09 16:13:03.426 [job-0] INFO HdfsWriter$Job - 由于您配置了writeMode append, 写入前不做清理工作, [/] 目录下写入相应文件名前缀 [mongo.txt] 的文件

2024-04-09 16:13:03.429 [job-0] INFO JobContainer - jobContainer starts to do split ...

2024-04-09 16:13:03.430 [job-0] INFO JobContainer - Job set Channel-Number to 1 channels.

2024-04-09 16:13:03.565 [job-0] INFO connection - Opened connection [connectionId{localValue:2, serverValue:3}] to node001:27017

2024-04-09 16:13:03.591 [job-0] INFO JobContainer - DataX Reader.Job [mongodbreader] splits to [1] tasks.

2024-04-09 16:13:03.592 [job-0] INFO HdfsWriter$Job - begin do split...

2024-04-09 16:13:03.628 [job-0] INFO HdfsWriter$Job - splited write file name:[hdfs://node001:8020/__8ea8878b_9c2e_4b6a_95b7_dcacb6f05d49/mongo.txt__355376ce_ce5d_40be_911e_e884dfac15bd]

2024-04-09 16:13:03.628 [job-0] INFO HdfsWriter$Job - end do split.

2024-04-09 16:13:03.629 [job-0] INFO JobContainer - DataX Writer.Job [hdfswriter] splits to [1] tasks.

2024-04-09 16:13:03.709 [job-0] INFO JobContainer - jobContainer starts to do schedule ...

2024-04-09 16:13:03.731 [job-0] INFO JobContainer - Scheduler starts [1] taskGroups.

2024-04-09 16:13:03.741 [job-0] INFO JobContainer - Running by standalone Mode.

2024-04-09 16:13:03.774 [taskGroup-0] INFO TaskGroupContainer - taskGroupId=[0] start [1] channels for [1] tasks.

2024-04-09 16:13:03.823 [taskGroup-0] INFO Channel - Channel set byte_speed_limit to -1, No bps activated.

2024-04-09 16:13:03.824 [taskGroup-0] INFO Channel - Channel set record_speed_limit to -1, No tps activated.

2024-04-09 16:13:03.942 [0-0-0-reader] INFO cluster - Cluster created with settings {hosts=[node001:27017], mode=MULTIPLE, requiredClusterType=UNKNOWN, serverSelectionTimeout='30000 ms', maxWaitQueueSize=500}

2024-04-09 16:13:03.942 [0-0-0-reader] INFO cluster - Adding discovered server node001:27017 to client view of cluster

2024-04-09 16:13:03.943 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[0] attemptCount[1] is started

2024-04-09 16:13:03.986 [cluster-ClusterId{value='6614f88f21b10d1b9122f209', description='null'}-node001:27017] INFO connection - Opened connection [connectionId{localValue:3, serverValue:4}] to node001:27017

2024-04-09 16:13:03.988 [cluster-ClusterId{value='6614f88f21b10d1b9122f209', description='null'}-node001:27017] INFO cluster - Monitor thread successfully connected to server with description ServerDescription{address=node001:27017, type=STANDALONE, state=CONNECTED, ok=true, version=ServerVersion{versionList=[5, 0, 2]}, minWireVersion=0, maxWireVersion=13, maxDocumentSize=16777216, roundTripTimeNanos=1293606}

2024-04-09 16:13:03.988 [cluster-ClusterId{value='6614f88f21b10d1b9122f209', description='null'}-node001:27017] INFO cluster - Discovered cluster type of STANDALONE

2024-04-09 16:13:04.029 [0-0-0-reader] INFO connection - Opened connection [connectionId{localValue:4, serverValue:5}] to node001:27017

2024-04-09 16:13:04.061 [0-0-0-writer] INFO HdfsWriter$Task - begin do write...

2024-04-09 16:13:04.062 [0-0-0-writer] INFO HdfsWriter$Task - write to file : [hdfs://node001:8020/__8ea8878b_9c2e_4b6a_95b7_dcacb6f05d49/mongo.txt__355376ce_ce5d_40be_911e_e884dfac15bd]

2024-04-09 16:13:08.157 [0-0-0-writer] INFO HdfsWriter$Task - end do write

2024-04-09 16:13:08.185 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[0] is successed, used[4268]ms

2024-04-09 16:13:08.185 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] completed it's tasks.

2024-04-09 16:13:13.866 [job-0] INFO StandAloneJobContainerCommunicator - Total 1 records, 22 bytes | Speed 2B/s, 0 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 0.000s | All Task WaitReaderTime 0.000s | Percentage 100.00%

2024-04-09 16:13:13.866 [job-0] INFO AbstractScheduler - Scheduler accomplished all tasks.

2024-04-09 16:13:13.866 [job-0] INFO JobContainer - DataX Writer.Job [hdfswriter] do post work.

2024-04-09 16:13:13.867 [job-0] INFO HdfsWriter$Job - start rename file [hdfs://node001:8020/__8ea8878b_9c2e_4b6a_95b7_dcacb6f05d49/mongo.txt__355376ce_ce5d_40be_911e_e884dfac15bd] to file [hdfs://node001:8020/mongo.txt__355376ce_ce5d_40be_911e_e884dfac15bd].

2024-04-09 16:13:13.964 [job-0] INFO HdfsWriter$Job - finish rename file [hdfs://node001:8020/__8ea8878b_9c2e_4b6a_95b7_dcacb6f05d49/mongo.txt__355376ce_ce5d_40be_911e_e884dfac15bd] to file [hdfs://node001:8020/mongo.txt__355376ce_ce5d_40be_911e_e884dfac15bd].

2024-04-09 16:13:13.965 [job-0] INFO HdfsWriter$Job - start delete tmp dir [hdfs://node001:8020/__8ea8878b_9c2e_4b6a_95b7_dcacb6f05d49] .

2024-04-09 16:13:13.995 [job-0] INFO HdfsWriter$Job - finish delete tmp dir [hdfs://node001:8020/__8ea8878b_9c2e_4b6a_95b7_dcacb6f05d49] .

2024-04-09 16:13:13.996 [job-0] INFO JobContainer - DataX Reader.Job [mongodbreader] do post work.

2024-04-09 16:13:13.996 [job-0] INFO JobContainer - DataX jobId [0] completed successfully.

2024-04-09 16:13:13.997 [job-0] INFO HookInvoker - No hook invoked, because base dir not exists or is a file: /opt/module/datax/hook

2024-04-09 16:13:14.102 [job-0] INFO JobContainer - [total cpu info] => averageCpu | maxDeltaCpu | minDeltaCpu -1.00% | -1.00% | -1.00%[total gc info] => NAME | totalGCCount | maxDeltaGCCount | minDeltaGCCount | totalGCTime | maxDeltaGCTime | minDeltaGCTime PS MarkSweep | 1 | 1 | 1 | 0.042s | 0.042s | 0.042s PS Scavenge | 1 | 1 | 1 | 0.020s | 0.020s | 0.020s 2024-04-09 16:13:14.102 [job-0] INFO JobContainer - PerfTrace not enable!

2024-04-09 16:13:14.103 [job-0] INFO StandAloneJobContainerCommunicator - Total 1 records, 22 bytes | Speed 2B/s, 0 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 0.000s | All Task WaitReaderTime 0.000s | Percentage 100.00%

2024-04-09 16:13:14.111 [job-0] INFO JobContainer -

任务启动时刻 : 2024-04-09 16:13:00

任务结束时刻 : 2024-04-09 16:13:14

任务总计耗时 : 13s

任务平均流量 : 2B/s

记录写入速度 : 0rec/s

读出记录总数 : 1

读写失败总数 : 0[atguigu@node001 datax]$ 2.2、读取MongoDB的数据导入 MySQL

2.2.1、mongodb2mysql.json

[atguigu@node001 datax]$ bin/datax.py -r mongodbreader -w mysqlwriterDataX (DATAX-OPENSOURCE-3.0), From Alibaba !

Copyright (C) 2010-2017, Alibaba Group. All Rights Reserved.Please refer to the mongodbreader document:https://github.com/alibaba/DataX/blob/master/mongodbreader/doc/mongodbreader.md Please refer to the mysqlwriter document:https://github.com/alibaba/DataX/blob/master/mysqlwriter/doc/mysqlwriter.md Please save the following configuration as a json file and usepython {DATAX_HOME}/bin/datax.py {JSON_FILE_NAME}.json

to run the job.{"job": {"content": [{"reader": {"name": "mongodbreader", "parameter": {"address": [], "collectionName": "", "column": [], "dbName": "", "userName": "", "userPassword": ""}}, "writer": {"name": "mysqlwriter", "parameter": {"column": [], "connection": [{"jdbcUrl": "", "table": []}], "password": "", "preSql": [], "session": [], "username": "", "writeMode": ""}}}], "setting": {"speed": {"channel": ""}}}

}

[atguigu@node001 datax]$ {"job": {"content": [{"reader": {"name": "mongodbreader","parameter": {"address": ["node001:27017"],"collectionName": "atguigu","column": [{"name": "name","type": "string"},{"name": "url","type": "string"}],"dbName": "test","userName": "","userPassword": ""}},"writer": {"name": "mysqlwriter","parameter": {"column": ["*"],"connection": [{"jdbcUrl": "jdbc:mysql://node001:3306/datax","table": ["mongodb"]}],"username": "root","password": "123456","preSql": [],"session": [],"writeMode": "insert"}}}],"setting": {"speed": {"channel": "1"}}}

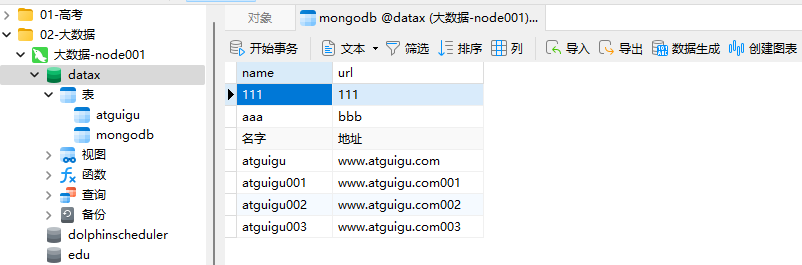

}2.2.2、MySQL数据

/*Navicat Premium Data TransferSource Server : localhostSource Server Type : MySQLSource Server Version : 50735 (5.7.35-log)Source Host : localhost:3306Source Schema : dataxTarget Server Type : MySQLTarget Server Version : 50735 (5.7.35-log)File Encoding : 65001Date: 09/04/2024 16:40:39

*/SET NAMES utf8mb4;

SET FOREIGN_KEY_CHECKS = 0;-- ----------------------------

-- Table structure for atguigu

-- ----------------------------

DROP TABLE IF EXISTS `atguigu`;

CREATE TABLE `atguigu` (`name` varchar(20) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NULL DEFAULT NULL,`url` varchar(20) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NULL DEFAULT NULL

) ENGINE = InnoDB CHARACTER SET = utf8mb4 COLLATE = utf8mb4_general_ci ROW_FORMAT = Dynamic;-- ----------------------------

-- Records of atguigu

-- ----------------------------

INSERT INTO `atguigu` VALUES ('111', '111');

INSERT INTO `atguigu` VALUES ('名字', '地址');-- ----------------------------

-- Table structure for mongodb

-- ----------------------------

DROP TABLE IF EXISTS `mongodb`;

CREATE TABLE `mongodb` (`name` varchar(20) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NULL DEFAULT NULL,`url` varchar(20) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NULL DEFAULT NULL

) ENGINE = InnoDB CHARACTER SET = utf8mb4 COLLATE = utf8mb4_general_ci ROW_FORMAT = Dynamic;-- ----------------------------

-- Records of mongodb

-- ----------------------------

INSERT INTO `mongodb` VALUES ('111', '111');

INSERT INTO `mongodb` VALUES ('aaa', 'bbb');

INSERT INTO `mongodb` VALUES ('名字', '地址');SET FOREIGN_KEY_CHECKS = 1;2.2.3、运行命令

[atguigu@node001 datax]$ bin/datax.py job/mongodb/mongodb2mysql.json DataX (DATAX-OPENSOURCE-3.0), From Alibaba !

Copyright (C) 2010-2017, Alibaba Group. All Rights Reserved.2024-04-09 16:42:09.299 [main] INFO VMInfo - VMInfo# operatingSystem class => sun.management.OperatingSystemImpl

2024-04-09 16:42:09.319 [main] INFO Engine - the machine info => osInfo: Red Hat, Inc. 1.8 25.372-b07jvmInfo: Linux amd64 3.10.0-862.el7.x86_64cpu num: 4totalPhysicalMemory: -0.00GfreePhysicalMemory: -0.00GmaxFileDescriptorCount: -1currentOpenFileDescriptorCount: -1GC Names [PS MarkSweep, PS Scavenge]MEMORY_NAME | allocation_size | init_size PS Eden Space | 256.00MB | 256.00MB Code Cache | 240.00MB | 2.44MB Compressed Class Space | 1,024.00MB | 0.00MB PS Survivor Space | 42.50MB | 42.50MB PS Old Gen | 683.00MB | 683.00MB Metaspace | -0.00MB | 0.00MB 2024-04-09 16:42:09.366 [main] INFO Engine -

{"content":[{"reader":{"name":"mongodbreader","parameter":{"address":["node001:27017"],"collectionName":"atguigu","column":[{"name":"name","type":"string"},{"name":"url","type":"string"}],"dbName":"test","userName":"","userPassword":""}},"writer":{"name":"mysqlwriter","parameter":{"column":["*"],"connection":[{"jdbcUrl":"jdbc:mysql://node001:3306/datax","table":["mongodb"]}],"password":"******","preSql":[],"session":[],"username":"root","writeMode":"insert"}}}],"setting":{"speed":{"channel":"1"}}

}2024-04-09 16:42:09.401 [main] WARN Engine - prioriy set to 0, because NumberFormatException, the value is: null

2024-04-09 16:42:09.405 [main] INFO PerfTrace - PerfTrace traceId=job_-1, isEnable=false, priority=0

2024-04-09 16:42:09.405 [main] INFO JobContainer - DataX jobContainer starts job.

2024-04-09 16:42:09.411 [main] INFO JobContainer - Set jobId = 0

2024-04-09 16:42:09.681 [job-0] INFO cluster - Cluster created with settings {hosts=[node001:27017], mode=MULTIPLE, requiredClusterType=UNKNOWN, serverSelectionTimeout='30000 ms', maxWaitQueueSize=500}

2024-04-09 16:42:09.681 [job-0] INFO cluster - Adding discovered server node001:27017 to client view of cluster

2024-04-09 16:42:09.951 [cluster-ClusterId{value='6614ff6121b10d1da8148cac', description='null'}-node001:27017] INFO connection - Opened connection [connectionId{localValue:1, serverValue:17}] to node001:27017

2024-04-09 16:42:09.954 [cluster-ClusterId{value='6614ff6121b10d1da8148cac', description='null'}-node001:27017] INFO cluster - Monitor thread successfully connected to server with description ServerDescription{address=node001:27017, type=STANDALONE, state=CONNECTED, ok=true, version=ServerVersion{versionList=[5, 0, 2]}, minWireVersion=0, maxWireVersion=13, maxDocumentSize=16777216, roundTripTimeNanos=1877048}

2024-04-09 16:42:09.960 [cluster-ClusterId{value='6614ff6121b10d1da8148cac', description='null'}-node001:27017] INFO cluster - Discovered cluster type of STANDALONE

2024-04-09 16:42:11.020 [job-0] INFO OriginalConfPretreatmentUtil - table:[mongodb] all columns:[

name,url

].

2024-04-09 16:42:11.021 [job-0] WARN OriginalConfPretreatmentUtil - 您的配置文件中的列配置信息存在风险. 因为您配置的写入数据库表的列为*,当您的表字段个数、类型有变动时,可能影响任务正确性甚至会运行出错。请检查您的配置并作出修改.

2024-04-09 16:42:11.024 [job-0] INFO OriginalConfPretreatmentUtil - Write data [

insert INTO %s (name,url) VALUES(?,?)

], which jdbcUrl like:[jdbc:mysql://node001:3306/datax?yearIsDateType=false&zeroDateTimeBehavior=convertToNull&tinyInt1isBit=false&rewriteBatchedStatements=true]

2024-04-09 16:42:11.025 [job-0] INFO JobContainer - jobContainer starts to do prepare ...

2024-04-09 16:42:11.027 [job-0] INFO JobContainer - DataX Reader.Job [mongodbreader] do prepare work .

2024-04-09 16:42:11.031 [job-0] INFO JobContainer - DataX Writer.Job [mysqlwriter] do prepare work .

2024-04-09 16:42:11.032 [job-0] INFO JobContainer - jobContainer starts to do split ...

2024-04-09 16:42:11.032 [job-0] INFO JobContainer - Job set Channel-Number to 1 channels.

2024-04-09 16:42:11.094 [job-0] INFO connection - Opened connection [connectionId{localValue:2, serverValue:18}] to node001:27017

2024-04-09 16:42:11.123 [job-0] INFO JobContainer - DataX Reader.Job [mongodbreader] splits to [1] tasks.

2024-04-09 16:42:11.125 [job-0] INFO JobContainer - DataX Writer.Job [mysqlwriter] splits to [1] tasks.

2024-04-09 16:42:11.172 [job-0] INFO JobContainer - jobContainer starts to do schedule ...

2024-04-09 16:42:11.183 [job-0] INFO JobContainer - Scheduler starts [1] taskGroups.

2024-04-09 16:42:11.189 [job-0] INFO JobContainer - Running by standalone Mode.

2024-04-09 16:42:11.204 [taskGroup-0] INFO TaskGroupContainer - taskGroupId=[0] start [1] channels for [1] tasks.

2024-04-09 16:42:11.217 [taskGroup-0] INFO Channel - Channel set byte_speed_limit to -1, No bps activated.

2024-04-09 16:42:11.218 [taskGroup-0] INFO Channel - Channel set record_speed_limit to -1, No tps activated.

2024-04-09 16:42:11.244 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[0] attemptCount[1] is started

2024-04-09 16:42:11.246 [0-0-0-reader] INFO cluster - Cluster created with settings {hosts=[node001:27017], mode=MULTIPLE, requiredClusterType=UNKNOWN, serverSelectionTimeout='30000 ms', maxWaitQueueSize=500}

2024-04-09 16:42:11.247 [0-0-0-reader] INFO cluster - Adding discovered server node001:27017 to client view of cluster

2024-04-09 16:42:11.292 [cluster-ClusterId{value='6614ff6321b10d1da8148cad', description='null'}-node001:27017] INFO connection - Opened connection [connectionId{localValue:3, serverValue:19}] to node001:27017

2024-04-09 16:42:11.295 [cluster-ClusterId{value='6614ff6321b10d1da8148cad', description='null'}-node001:27017] INFO cluster - Monitor thread successfully connected to server with description ServerDescription{address=node001:27017, type=STANDALONE, state=CONNECTED, ok=true, version=ServerVersion{versionList=[5, 0, 2]}, minWireVersion=0, maxWireVersion=13, maxDocumentSize=16777216, roundTripTimeNanos=1154664}

2024-04-09 16:42:11.295 [cluster-ClusterId{value='6614ff6321b10d1da8148cad', description='null'}-node001:27017] INFO cluster - Discovered cluster type of STANDALONE

2024-04-09 16:42:11.311 [0-0-0-reader] INFO connection - Opened connection [connectionId{localValue:4, serverValue:20}] to node001:27017

2024-04-09 16:42:11.447 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[0] is successed, used[207]ms

2024-04-09 16:42:11.449 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] completed it's tasks.

2024-04-09 16:42:21.236 [job-0] INFO StandAloneJobContainerCommunicator - Total 4 records, 106 bytes | Speed 10B/s, 0 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 0.000s | All Task WaitReaderTime 0.000s | Percentage 100.00%

2024-04-09 16:42:21.236 [job-0] INFO AbstractScheduler - Scheduler accomplished all tasks.

2024-04-09 16:42:21.237 [job-0] INFO JobContainer - DataX Writer.Job [mysqlwriter] do post work.

2024-04-09 16:42:21.238 [job-0] INFO JobContainer - DataX Reader.Job [mongodbreader] do post work.

2024-04-09 16:42:21.241 [job-0] INFO JobContainer - DataX jobId [0] completed successfully.

2024-04-09 16:42:21.242 [job-0] INFO HookInvoker - No hook invoked, because base dir not exists or is a file: /opt/module/datax/hook

2024-04-09 16:42:21.244 [job-0] INFO JobContainer - [total cpu info] => averageCpu | maxDeltaCpu | minDeltaCpu -1.00% | -1.00% | -1.00%[total gc info] => NAME | totalGCCount | maxDeltaGCCount | minDeltaGCCount | totalGCTime | maxDeltaGCTime | minDeltaGCTime PS MarkSweep | 1 | 1 | 1 | 0.057s | 0.057s | 0.057s PS Scavenge | 1 | 1 | 1 | 0.021s | 0.021s | 0.021s 2024-04-09 16:42:21.244 [job-0] INFO JobContainer - PerfTrace not enable!

2024-04-09 16:42:21.245 [job-0] INFO StandAloneJobContainerCommunicator - Total 4 records, 106 bytes | Speed 10B/s, 0 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 0.000s | All Task WaitReaderTime 0.000s | Percentage 100.00%

2024-04-09 16:42:21.248 [job-0] INFO JobContainer -

任务启动时刻 : 2024-04-09 16:42:09

任务结束时刻 : 2024-04-09 16:42:21

任务总计耗时 : 11s

任务平均流量 : 10B/s

记录写入速度 : 0rec/s

读出记录总数 : 4

读写失败总数 : 0[atguigu@node001 datax]$ 相关文章:

DataX案例,MongoDB数据导入HDFS与MySQL

【尚硅谷】Alibaba开源数据同步工具DataX技术教程_哔哩哔哩_bilibili 目录 1、MongoDB 1.1、MongoDB介绍 1.2、MongoDB基本概念解析 1.3、MongoDB中的数据存储结构 1.4、MongoDB启动服务 1.5、MongoDB小案例 2、DataX导入导出案例 2.1、读取MongoDB的数据导入到HDFS 2…...

HarmonyOS鸿蒙端云一体化开发--适合小白体制

端云一体化 什么是“端”,什么是“云”? 答:“端“:手机APP端 “云”:后端服务端 什么是端云一体化? 端云一体化开发支持开发者在 DevEco Studio 内使用一种语言同时完成 HarmonyOS 应用的端侧与云侧开发。 …...

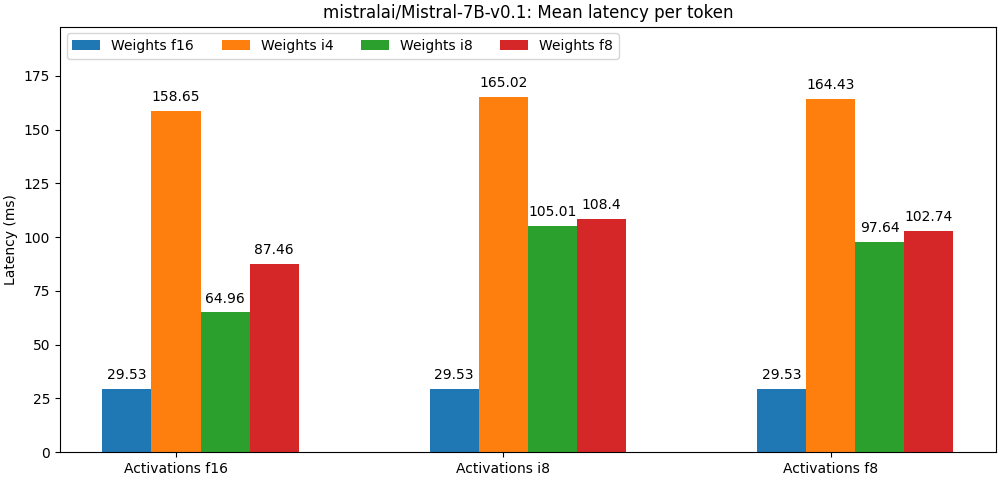

Quanto: PyTorch 量化工具包

量化技术通过用低精度数据类型 (如 8 位整型 (int8)) 来表示深度学习模型的权重和激活,以减少传统深度学习模型使用 32 位浮点 (float32) 表示权重和激活所带来的计算和内存开销。 减少位宽意味着模型的内存占用更低,这对在消费设备上部署大语言模型至关…...

宝塔面板Docker+Uwsgi+Nginx+SSL部署Django项目

这次为大家带来的是从零开始搭建一个django项目并将它部署到linux服务器上。大家可以按照我的步骤一步步操作,最终可以完成部署。 步骤1:在某个文件夹中创建一个django项目 安装django pip install django创建一个django项目将其命名为djangoProject …...

Android 无线调试 adb connect ip:port 失败

1. 在手机打开 无线调试 使用 adb connect 连接 adb connect 192.168.14.164:39511如果连接成功, 查看连接的设备, 忽略 配对下面的步骤. adb devices如果连接失败: failed to connect to 192.168.14.164:39511如果失败了, 可以杀死一下进程, 然后执行后面的操作 adb kill…...

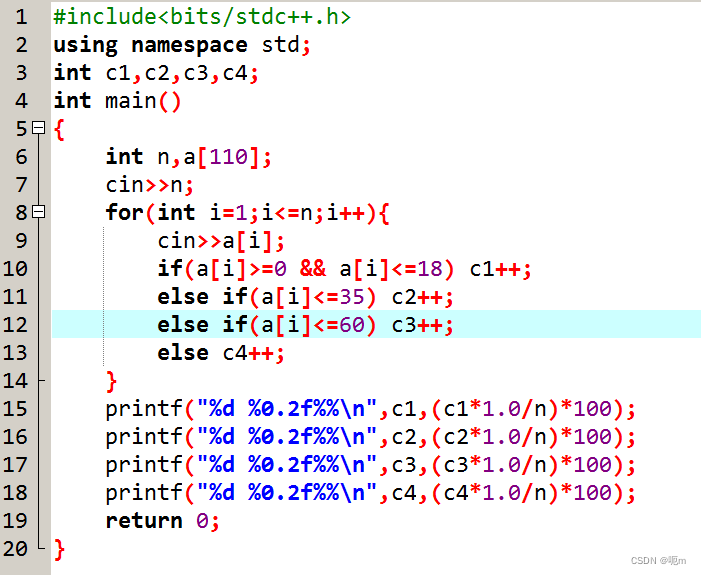

年龄与疾病c++

题目描述 某医院想统计一下某项疾病的获得与否与年龄是否有关,需要对以前的诊断记录进行整理,按照0-18岁、19-35岁、36-60岁、61以上(含61)四个年龄段统计的患病人数以及占总患病人数的比例。 输入 共2行,第一行为过…...

neo4j-01

Neo4j是: 开源的(社区版开源免费)无模式(不用预设数据的格式,数据更加灵活)noSQL(非关系型数据库,数据更易拓展)图数据库(使用图这种数据结构作为数据存储方…...

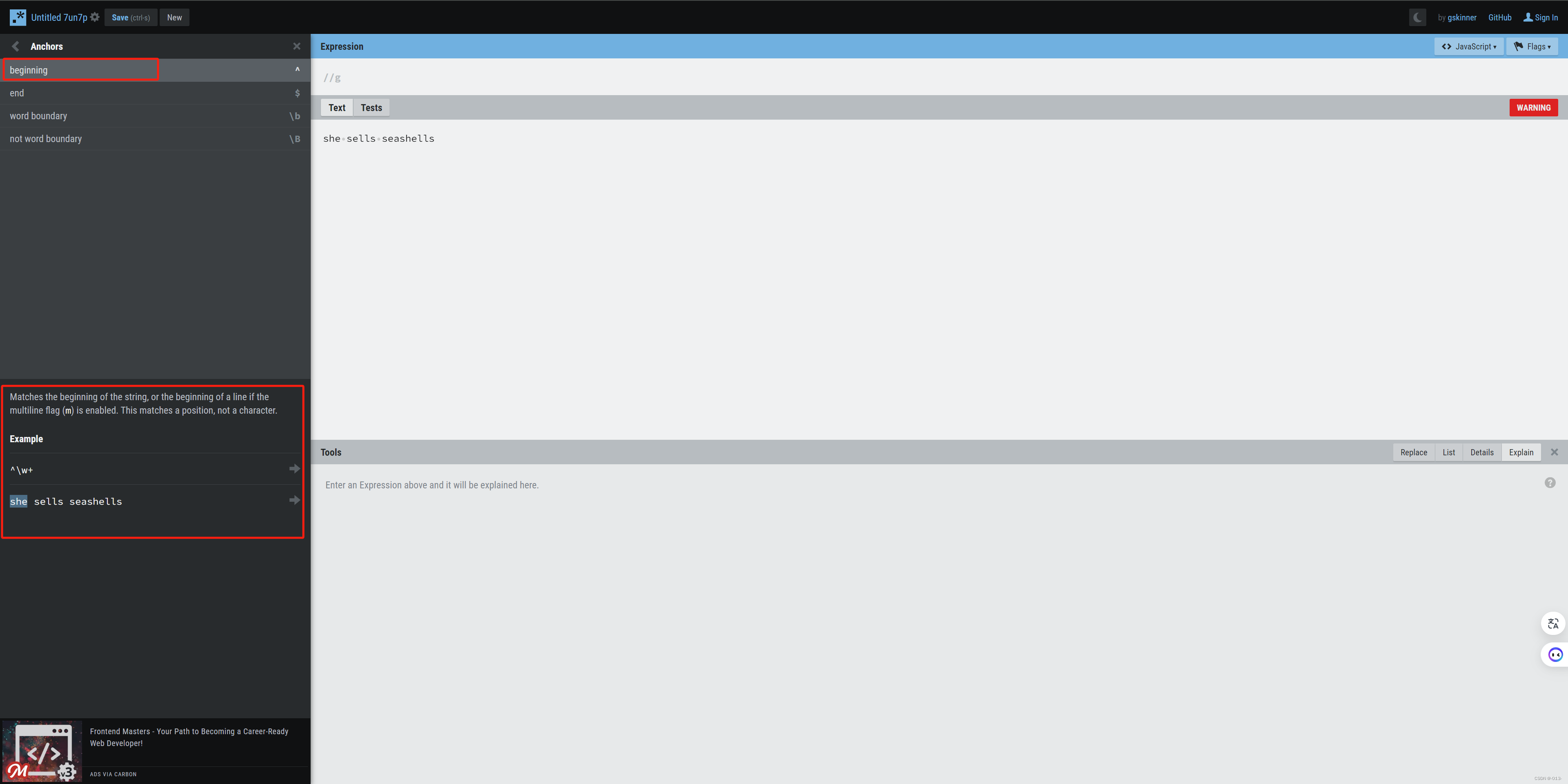

正则表达式 速成

正则表达式的作用 正则表达式,又称规则表达式,(Regular Expression,在代码中常简写为regex、regexp或RE),是一种文本模式,包括普通字符(例如,a 到 z 之间的字母)和特殊字…...

21、Lua 面向对象

Lua 面向对象 Lua 面向对象面向对象特征Lua 中面向对象一个简单实例创建对象访问属性访问成员函数完整实例 Lua 继承完整实例 函数重写 Lua 面向对象 面向对象编程(Object Oriented Programming,OOP)是一种非常流行的计算机编程架构。 以下…...

openssl3.2 - exp - class warp for sha3-512

文章目录 openssl3.2 - exp - class warp for sha3-512概述笔记调用方代码子类 - cipher_sha3_512.h子类 - cipher_sha3_512.cpp基类 - cipher_md_base.h基类 - cipher_md_base.cpp备注END openssl3.2 - exp - class warp for sha3-512 概述 前面实验整了一个对buffer进行sha…...

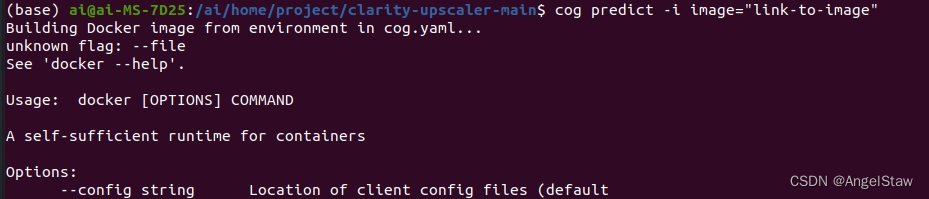

cog predict docker unknown flag: --file

如图: 使用cog predict -i image“link-to-image” 出现docker unknown flag: --file的问题。 解决方法(对我可行):切换cog版本。 这个是我一开始的cog安装命令(大概是下的最新版?)࿱…...

SpringMVC接收参数方式讲解

PathVariable 该注解用于接收具有Restful风格的参数,如/api/v1/1001,最终userId的值为1001。 如下代码中,使用name属性可以指定GetMapping中的id名称与之对应,从而可以自定义参数名称userId,而不是使用默认名称id G…...

JavaScript 中arguments 对象详细解析与案例

在JavaScript中,每个函数都有一个内部对象arguments,它包含了函数调用时传递的所有参数。arguments对象类似一个数组,但是它并不是真正的数组,它没有数组的方法,只有length属性和索引访问元素的能力。 以下是对argume…...

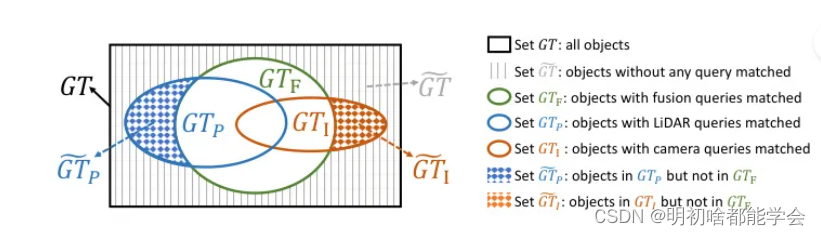

消除 BEV 空间中的跨模态冲突,实现 LiDAR 相机 3D 目标检测

Eliminating Cross-modal Conflicts in BEV Space for LiDAR-Camera 3D Object Detection 消除 BEV 空间中的跨模态冲突,实现 LiDAR 相机 3D 目标检测 摘要Introduction本文方法Single-Modal BEV Feature ExtractionSemantic-guided Flow-based AlignmentDissolved…...

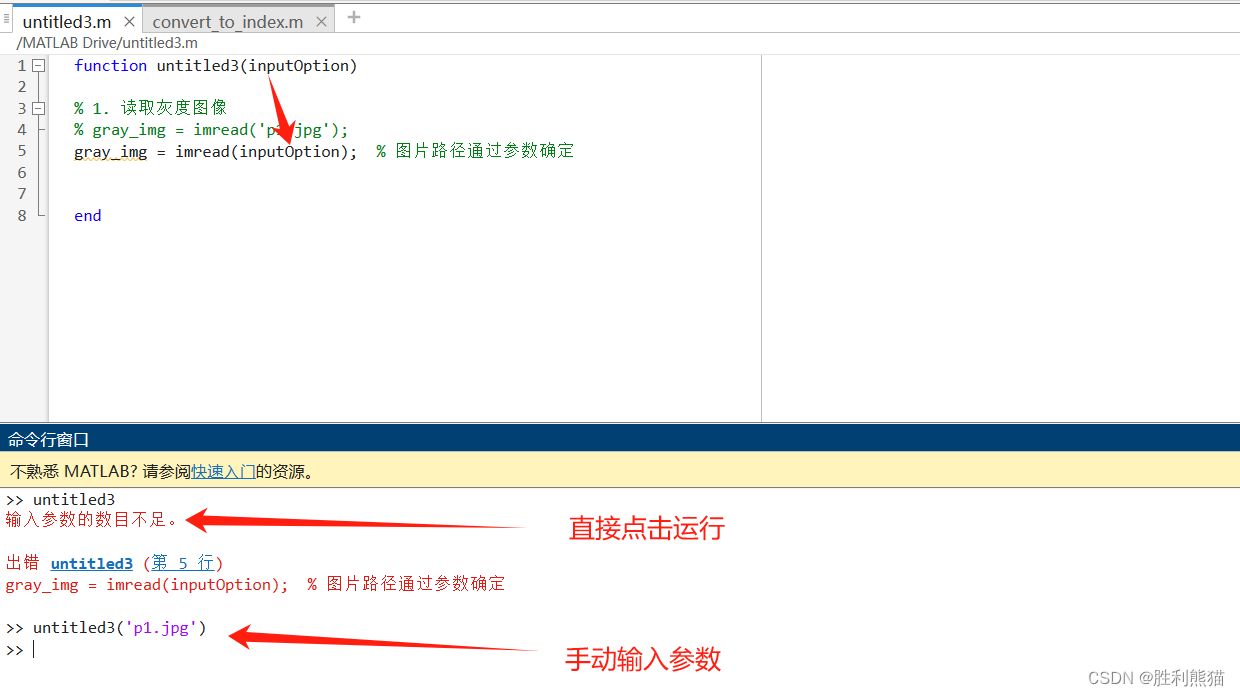

【免安装的MATLAB--MATLAB online】

目录: 前言账号的注册图片处理的示例准备图片脚本函数 总结 前言 在计算机、数学等相关专业中,或多或少都会与MATLAB产生藕断丝连的联系,如果你需要使用MATLAB,但是又不想要安装到自己的电脑上(它实在是太大了啊&#…...

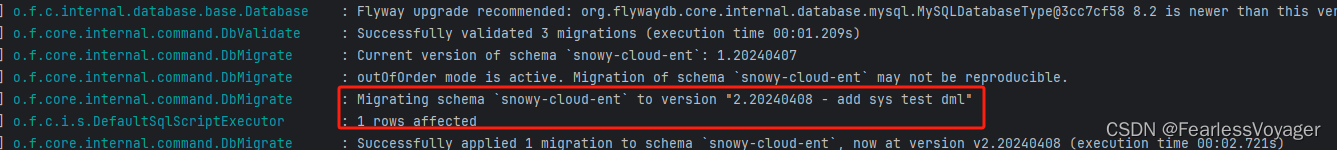

Flyway 数据库版本管理

一、Flyway简介 Flyway是一款开源的数据库迁移工具,可以管理和版本化数据库架构。通过Flyway,可以跟踪数据库的变化,并将这些变化作为版本控制的一部分。Flyway支持SQL和NoSQL数据库,并且可以与现有的开发流程无缝集成࿰…...

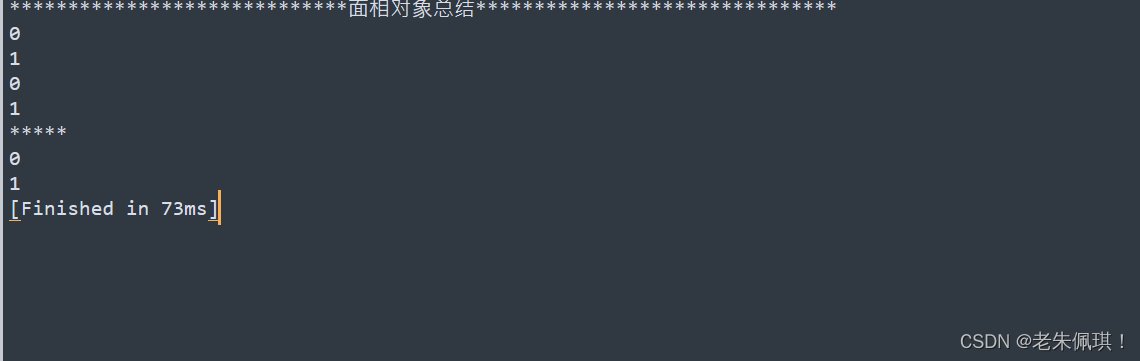

lua学习笔记19(面相对象学习的一点总结)

print("*****************************面相对象总结*******************************") object{} --实例化方法 function object:new()local obj{}self.__indexselfsetmetatable(obj,self)return obj end-------------------------如何new一个对象 function object:…...

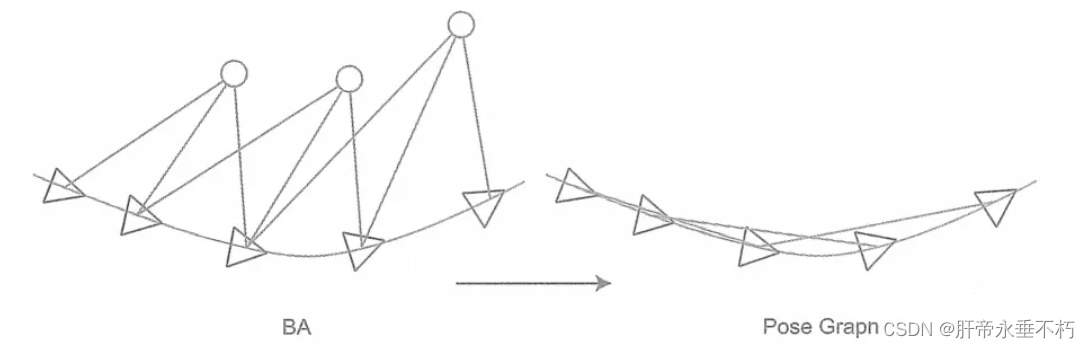

视觉SLAM学习打卡【10】-后端·滑动窗口法位姿图

本节是对上一节BA的进一步简化,旨在提高优化实时性.难点在于位姿图部分的雅可比矩阵求解(涉及李代数扰动模型求导),书中的相关推导存在跳步(可能数学功底强的人认为过渡的理所当然),笔者参考了知…...

【动态规划 区间dp 位运算】100259. 划分数组得到最小的值之和

本文涉及知识点 动态规划 区间dp 位运算 LeetCode100259. 划分数组得到最小的值之和 给你两个数组 nums 和 andValues,长度分别为 n 和 m。 数组的 值 等于该数组的 最后一个 元素。 你需要将 nums 划分为 m 个 不相交的连续 子数组,对于第 ith 个子数…...

CSS核心样式-02-盒模型属性及扩展应用

目录 三、盒模型属性 常见盒模型区域 盒模型图 盒模型五大属性 1. 宽度 width 2. 高度 height 3. 内边距 padding 四值法 三值法 二值法 单值法 案例 4. 边框 border 按照属性值的类型划分为三个单一属性 ①线宽 border-width ②线型 border-style ③边框颜色 bo…...

智慧医疗能源事业线深度画像分析(上)

引言 医疗行业作为现代社会的关键基础设施,其能源消耗与环境影响正日益受到关注。随着全球"双碳"目标的推进和可持续发展理念的深入,智慧医疗能源事业线应运而生,致力于通过创新技术与管理方案,重构医疗领域的能源使用模式。这一事业线融合了能源管理、可持续发…...

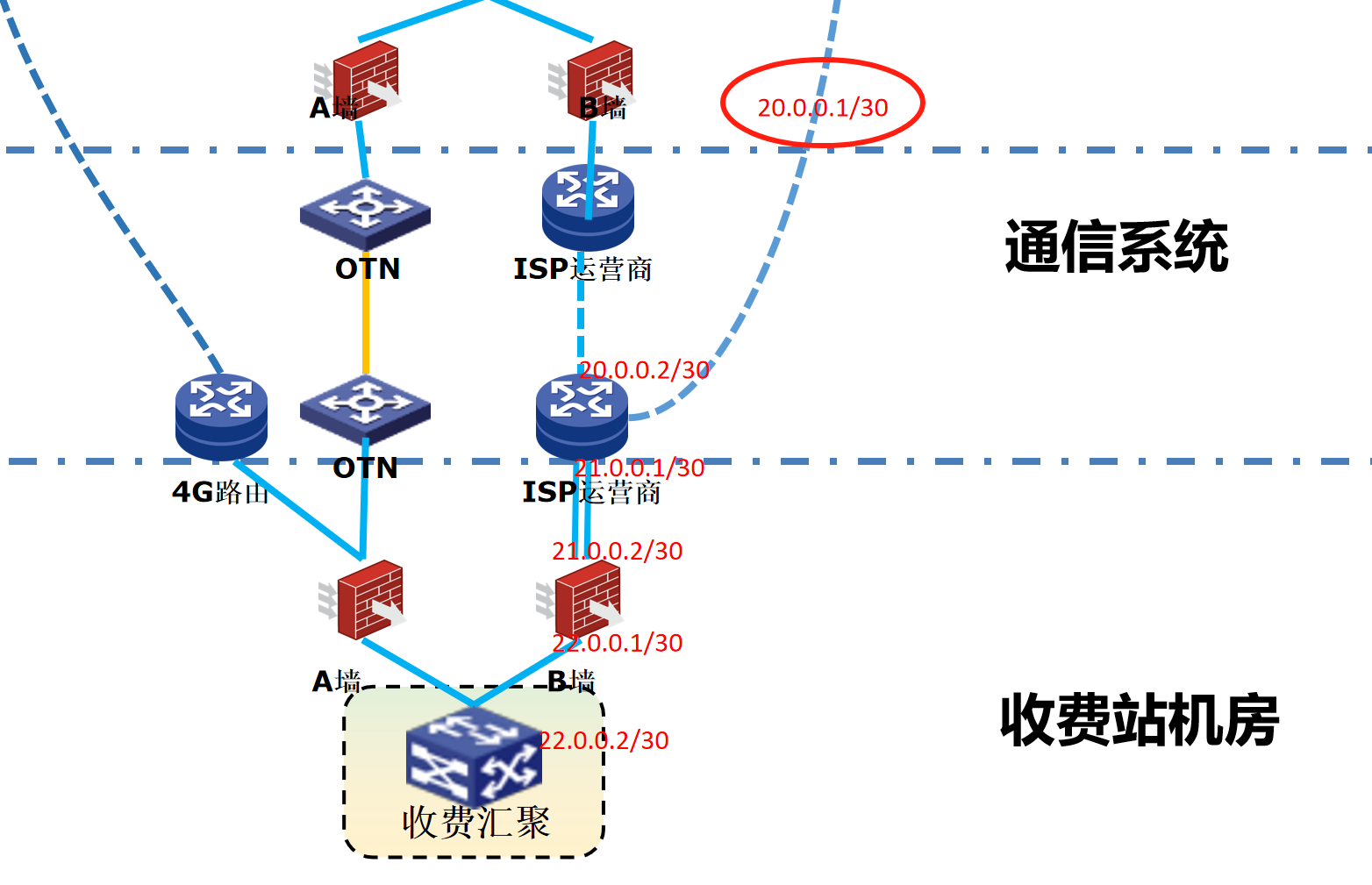

浪潮交换机配置track检测实现高速公路收费网络主备切换NQA

浪潮交换机track配置 项目背景高速网络拓扑网络情况分析通信线路收费网络路由 收费汇聚交换机相应配置收费汇聚track配置 项目背景 在实施省内一条高速公路时遇到的需求,本次涉及的主要是收费汇聚交换机的配置,浪潮网络设备在高速项目很少,通…...

招商蛇口 | 执笔CID,启幕低密生活新境

作为中国城市生长的力量,招商蛇口以“美好生活承载者”为使命,深耕全球111座城市,以央企担当匠造时代理想人居。从深圳湾的开拓基因到西安高新CID的战略落子,招商蛇口始终与城市发展同频共振,以建筑诠释对土地与生活的…...

uniapp 实现腾讯云IM群文件上传下载功能

UniApp 集成腾讯云IM实现群文件上传下载功能全攻略 一、功能背景与技术选型 在团队协作场景中,群文件共享是核心需求之一。本文将介绍如何基于腾讯云IMCOS,在uniapp中实现: 群内文件上传/下载文件元数据管理下载进度追踪跨平台文件预览 二…...

第八部分:阶段项目 6:构建 React 前端应用

现在,是时候将你学到的 React 基础知识付诸实践,构建一个简单的前端应用来模拟与后端 API 的交互了。在这个阶段,你可以先使用模拟数据,或者如果你的后端 API(阶段项目 5)已经搭建好,可以直接连…...

【Qt】控件 QWidget

控件 QWidget 一. 控件概述二. QWidget 的核心属性可用状态:enabled几何:geometrywindows frame 窗口框架的影响 窗口标题:windowTitle窗口图标:windowIconqrc 机制 窗口不透明度:windowOpacity光标:cursor…...

开疆智能Ethernet/IP转Modbus网关连接鸣志步进电机驱动器配置案例

在工业自动化控制系统中,常常会遇到不同品牌和通信协议的设备需要协同工作的情况。本案例中,客户现场采用了 罗克韦尔PLC,但需要控制的变频器仅支持 ModbusRTU 协议。为了实现PLC 对变频器的有效控制与监控,引入了开疆智能Etherne…...

claude3.7高阶玩法,生成系统架构图,国内直接使用

文章目录 零、前言一、操作指南操作指导 二、提示词模板三、实战图书管理系统通过4o模型生成系统描述通过claude3.7生成系统架构图svg代码转换成图片 在线考试系统通过4o模型生成系统描述通过claude3.7生成系统架构图svg代码转换成图片 四、感受 零、前言 现在很多AI大模型可以…...

【Ragflow】26.RagflowPlus(v0.4.0):完善解析逻辑/文档撰写模式全新升级

概述 在历经半个月的间歇性开发后,RagflowPlus再次迎来一轮升级,正式发布v0.4.0。 开源地址:https://github.com/zstar1003/ragflow-plus 更新方法 下载仓库最新代码: git clone https://github.com/zstar1003/ragflow-plus.…...

CCF 开源发展委员会 “开源高校行“ 暨红山开源 + OpenAtom openKylin 高校行活动在西安四所高校成功举办

点击蓝字 关注我们 CCF Opensource Development Committee CCF开源高校行 暨红山开源 openKylin 高校行 西安站 5 月 26 日至 28 日,CCF 开源发展委员会 "开源高校行" 暨红山开源 OpenAtom openKylin 高校行活动在西安四所高校(西安交通大学…...