KubeSphere errors

1. Installation fails with: unable to sign certificate: must specify a CommonName

[root@node1 ~]# ./kk init registry -f config-sample.yaml -a kubesphere.tar.gz

(KubeKey ASCII-art banner)

11:28:47 CST [GreetingsModule] Greetings

11:28:47 CST message: [node1]

Greetings, KubeKey!

11:28:47 CST success: [node1]

11:28:47 CST [UnArchiveArtifactModule] Check the KubeKey artifact md5 value

11:28:49 CST success: [LocalHost]

11:28:49 CST [UnArchiveArtifactModule] UnArchive the KubeKey artifact

11:28:49 CST skipped: [LocalHost]

11:28:49 CST [UnArchiveArtifactModule] Create the KubeKey artifact Md5 file

11:28:49 CST skipped: [LocalHost]

11:28:49 CST [RegistryPackageModule] Download registry package

11:28:49 CST message: [localhost]

downloading amd64 harbor v2.5.3 ...

11:28:56 CST message: [localhost]

downloading amd64 docker 24.0.6 ...

11:28:56 CST message: [localhost]

downloading amd64 compose v2.2.2 ...

11:28:56 CST success: [LocalHost]

11:28:56 CST [ConfigureOSModule] Get OS release

11:28:56 CST success: [node1]

11:28:56 CST [ConfigureOSModule] Prepare to init OS

11:28:57 CST success: [node1]

11:28:57 CST [ConfigureOSModule] Generate init os script

11:28:57 CST success: [node1]

11:28:57 CST [ConfigureOSModule] Exec init os script

11:28:58 CST stdout: [node1]

setenforce: SELinux is disabled

Disabled

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-arptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_local_reserved_ports = 30000-32767

net.core.netdev_max_backlog = 65535

net.core.rmem_max = 33554432

net.core.wmem_max = 33554432

net.core.somaxconn = 32768

net.ipv4.tcp_max_syn_backlog = 1048576

net.ipv4.neigh.default.gc_thresh1 = 512

net.ipv4.neigh.default.gc_thresh2 = 2048

net.ipv4.neigh.default.gc_thresh3 = 4096

net.ipv4.tcp_retries2 = 15

net.ipv4.tcp_max_tw_buckets = 1048576

net.ipv4.tcp_max_orphans = 65535

net.ipv4.udp_rmem_min = 131072

net.ipv4.udp_wmem_min = 131072

net.ipv4.conf.all.rp_filter = 1

net.ipv4.conf.default.rp_filter = 1

net.ipv4.conf.all.arp_accept = 1

net.ipv4.conf.default.arp_accept = 1

net.ipv4.conf.all.arp_ignore = 1

net.ipv4.conf.default.arp_ignore = 1

vm.max_map_count = 262144

vm.swappiness = 0

vm.overcommit_memory = 0

fs.inotify.max_user_instances = 524288

fs.inotify.max_user_watches = 524288

fs.pipe-max-size = 4194304

fs.aio-max-nr = 262144

kernel.pid_max = 65535

kernel.watchdog_thresh = 5

kernel.hung_task_timeout_secs = 5

11:28:58 CST success: [node1]

11:28:58 CST [ConfigureOSModule] configure the ntp server for each node

11:28:58 CST skipped: [node1]

11:28:58 CST [InitRegistryModule] Fetch registry certs

11:28:58 CST success: [node1]

11:28:58 CST [InitRegistryModule] Generate registry Certs

[certs] Using existing ca certificate authority

11:28:59 CST message: [LocalHost]

unable to sign certificate: must specify a CommonName

11:28:59 CST failed: [LocalHost]

error: Pipeline[InitRegistryPipeline] execute failed: Module[InitRegistryModule] exec failed:

failed: [LocalHost] [GenerateRegistryCerts] exec failed after 1 retries: unable to sign certificate: must specify a CommonName

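Solution

The cause is the configuration file. Edit it and uncomment the registry settings.

In the official documentation they are commented out.

Excerpt from the official documentation:

registry:
  # To deploy Harbor with kk, set this parameter to harbor. If it is not set and kk is used to create the image registry, docker registry is used by default.
  type: harbor
  # For a Harbor deployed with kk, or any other registry that requires login, set the auths for that registry. A docker registry created with kk does not need this parameter.
  # Note: when deploying Harbor with kk, set this parameter after Harbor has started.
  #auths:
  #  "dockerhub.kubekey.local":
  #    username: admin
  #    password: Harbor12345
  # Private registry used during cluster deployment
  privateRegistry: ""

Locally modified version: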

apiVersion: kubekey.kubesphere.io/v1alpha2
kind: Cluster
metadata:
  name: sample
spec:
  hosts:
  - {name: node1, address: 10.1.1.1, internalAddress: 10.1.1.1, user: root, password: "123456"}
  roleGroups:
    etcd:
    - node1
    control-plane:
    - node1
    worker:
    - node1
    registry:
    - node1
  controlPlaneEndpoint:
    ## Internal loadbalancer for apiservers
    # internalLoadbalancer: haproxy
    domain: lb.kubesphere.local
    address: ""
    port: 6443
  kubernetes:
    version: v1.22.12
    clusterName: cluster.local
    autoRenewCerts: true
    containerManager: docker
  etcd:
    type: kubekey
  network:
    plugin: calico
    kubePodsCIDR: 10.233.64.0/18
    kubeServiceCIDR: 10.233.0.0/18
    ## multus support. https://github.com/k8snetworkplumbingwg/multus-cni
    multusCNI:
      enabled: false
  registry:
    type: harbor
    domain: dockerhub.kubekey.local
    tls:
      selfSigned: true
      certCommonName: dockerhub.kubekey.local
    auths:
      "dockerhub.kubekey.local":
        username: admin
        password: Harbor12345
    privateRegistry: "dockerhub.kubekey.local"
    namespaceOverride: "kubesphereio"
    # privateRegistry: ""
    # namespaceOverride: ""
    registryMirrors: []
    insecureRegistries: []
  addons: []
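Before rerunning `./kk init registry`, it can help to confirm that the setting is really active in the file. The following is a minimal sketch, assuming the error comes from a missing `certCommonName` under `registry.tls`, as the modified configuration suggests. The function name `has_cert_common_name` is hypothetical, and the check is a plain text match rather than a YAML parser.

```shell
# Hypothetical helper: succeeds when the file contains an uncommented
# certCommonName entry with a non-blank value.
has_cert_common_name() {
  grep -Eq '^[[:space:]]*certCommonName:[[:space:]]*[^[:space:]#]' "$1"
}

# Example (assumes config-sample.yaml is in the current directory):
#   has_cert_common_name config-sample.yaml && echo "certCommonName is set"
```

A commented-out line such as `# certCommonName: ...` does not match, which is the situation that produced the error here.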

2. Error reports a missing package (image)

pull image failed: Failed to exec command: sudo -E /bin/bash -c "env PATH=$PATH docker pull dockerhub.kubekey.local/kubesphereio/pod2daemon-flexvol:v3.26.1 --platform amd64"

downloading image: dockerhub.kubekey.local/kubesphereio/pod2daemon-flexvol:v3.26.1

14:10:52 CST message: [node1]

pull image failed: Failed to exec command: sudo -E /bin/bash -c "env PATH=$PATH docker pull dockerhub.kubekey.local/kubesphereio/pod2daemon-flexvol:v3.26.1 --platform amd64"

Error response from daemon: unknown: repository kubesphereio/pod2daemon-flexvol not found: Process exited with status 1

14:10:52 CST retry: [node1]

14:10:57 CST message: [node1]

downloading image: dockerhub.kubekey.local/kubesphereio/pause:3.5

14:10:57 CST message: [node1]

downloading image: dockerhub.kubekey.local/kubesphereio/kube-apiserver:v1.22.12

14:10:57 CST message: [node1]

downloading image: dockerhub.kubekey.local/kubesphereio/kube-controller-manager:v1.22.12

14:10:57 CST message: [node1]

downloading image: dockerhub.kubekey.local/kubesphereio/kube-scheduler:v1.22.12

14:10:57 CST message: [node1]

downloading image: dockerhub.kubekey.local/kubesphereio/kube-proxy:v1.22.12

14:10:57 CST message: [node1]

downloading image: dockerhub.kubekey.local/kubesphereio/coredns:1.8.0

14:10:57 CST message: [node1]

downloading image: dockerhub.kubekey.local/kubesphereio/k8s-dns-node-cache:1.15.12

14:10:57 CST message: [node1]

downloading image: dockerhub.kubekey.local/kubesphereio/kube-controllers:v3.26.1

14:10:58 CST message: [node1]

downloading image: dockerhub.kubekey.local/kubesphereio/cni:v3.26.1

14:10:58 CST message: [node1]

downloading image: dockerhub.kubekey.local/kubesphereio/node:v3.26.1

14:10:58 CST message: [node1]

downloading image: dockerhub.kubekey.local/kubesphereio/pod2daemon-flexvol:v3.26.1

14:10:58 CST message: [node1]

pull image failed: Failed to exec command: sudo -E /bin/bash -c "env PATH=$PATH docker pull dockerhub.kubekey.local/kubesphereio/pod2daemon-flexvol:v3.26.1 --platform amd64"

Error response from daemon: unknown: repository kubesphereio/pod2daemon-flexvol not found: Process exited with status 1

14:10:58 CST failed: [node1]

error: Pipeline[CreateClusterPipeline] execute failed: Module[PullModule] exec failed:

failed: [node1] [PullImages] exec failed after 3 retries: pull image failed: Failed to exec command: sudo -E /bin/bash -c "env PATH=$PATH docker pull dockerhub.kubekey.local/kubesphereio/pod2daemon-flexvol:v3.26.1 --platform amd64"

Error response from daemon: unknown: repository kubesphereio/pod2daemon-flexvol not found: Process exited with status 1

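The image is missing from the private registry. Download it on a machine with Internet access, then import it locally.

docker save -o pod2daemon-flexvol.tar registry.cn-beijing.aliyuncs.com/kubesphereio/pod2daemon-flexvol:v3.26.1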
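The full round trip (pull, save, copy, load, retag, push) can be sketched as follows. This is a sketch under assumptions, not the author's exact procedure: `print_mirror_steps` is a hypothetical helper that only prints the docker commands so they can be reviewed first, and it assumes that the `kubesphereio` project already exists in Harbor and that `docker login dockerhub.kubekey.local` has been done on the offline node.

```shell
# Hypothetical helper: print the commands that move one image from a
# public registry into the private one. Nothing is executed here.
#   $1 = source image reference, $2 = target image reference, $3 = archive file
print_mirror_steps() {
  src="$1"; dst="$2"; tar="$3"
  echo "# on a machine with Internet access"
  echo "docker pull $src"
  echo "docker save -o $tar $src"
  echo "# on the offline node, after copying $tar over"
  echo "docker load -i $tar"
  echo "docker tag $src $dst"
  echo "docker push $dst"
}

# Example:
#   print_mirror_steps \
#     registry.cn-beijing.aliyuncs.com/kubesphereio/pod2daemon-flexvol:v3.26.1 \
#     dockerhub.kubekey.local/kubesphereio/pod2daemon-flexvol:v3.26.1 \
#     pod2daemon-flexvol.tar
```

Retagging matters because kk pulls `dockerhub.kubekey.local/kubesphereio/pod2daemon-flexvol:v3.26.1`, so the image has to be pushed under exactly that name.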

3. etcd error: x509: certificate is valid for 127.0.0.1, ::1, 155.1.94.77, not 10.1.1.1

remote error: tls: bad certificate (ServerName)

13:10:44 CST [CertsModule] Generate etcd Certs

[certs] Using existing ca certificate authority

[certs] Using existing admin-node1 certificate and key on disk

[certs] Using existing member-node1 certificate and key on disk

[certs] Using existing node-node1 certificate and key on disk

13:10:44 CST success: [LocalHost]

13:10:44 CST [CertsModule] Synchronize certs file

13:10:46 CST success: [node1]

13:10:46 CST [CertsModule] Synchronize certs file to master

13:10:46 CST skipped: [node1]

13:10:46 CST [InstallETCDBinaryModule] Install etcd using binary

13:10:47 CST success: [node1]

13:10:47 CST [InstallETCDBinaryModule] Generate etcd service

13:10:47 CST success: [node1]

13:10:47 CST [InstallETCDBinaryModule] Generate access address

13:10:47 CST success: [node1]

13:10:47 CST [ETCDConfigureModule] Health check on exist etcd

13:10:47 CST skipped: [node1]

13:10:47 CST [ETCDConfigureModule] Generate etcd.env config on new etcd

13:10:48 CST success: [node1]

13:10:48 CST [ETCDConfigureModule] Refresh etcd.env config on all etcd

13:10:48 CST success: [node1]

13:10:48 CST [ETCDConfigureModule] Restart etcd

13:10:52 CST success: [node1]

13:10:52 CST [ETCDConfigureModule] Health check on all etcd

13:10:52 CST message: [node1]

etcd health check failed: Failed to exec command: sudo -E /bin/bash -c "export ETCDCTL_API=2;export ETCDCTL_CERT_FILE='/etc/ssl/etcd/ssl/admin-node1.pem';export ETCDCTL_KEY_FILE='/etc/ssl/etcd/ssl/admin-node1-key.pem';export ETCDCTL_CA_FILE='/etc/ssl/etcd/ssl/ca.pem';/usr/local/bin/etcdctl --endpoints=https://10.1.1.1:2379 cluster-health | grep -q 'cluster is healthy'"

Error: client: etcd cluster is unavailable or misconfigured; error #0: x509: certificate is valid for 127.0.0.1, ::1, 155.1.94.77, not 10.1.1.247error #0: x509: certificate is valid for 127.0.0.1, ::1, 155.1.94.77., not 10.1.1.1: Process exited with status 1

13:10:52 CST retry: [node1]

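This happens because certificates generated by an earlier run are reused (the "Using existing ..." lines in the log) and do not cover the node's current address. The previously generated certificates have to be deleted.

Delete the certificates already generated under /etc/ssl/etcd/ssl.

Run ./kk delete cluster and delete the other generated files, but keep the images folder. Back everything up before deleting.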
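Since a backup is recommended before deleting, the two steps can be combined. A minimal sketch, assuming `tar` is available; `backup_then_remove` is a hypothetical helper and the backup location in the example is an arbitrary choice.

```shell
# Hypothetical helper: archive a directory, then delete it only if the
# archive was written successfully. Prints the archive path.
#   $1 = directory to remove, $2 = directory that receives the backup
backup_then_remove() {
  dir="$1"; backup_dir="$2"
  [ -d "$dir" ] || { echo "not a directory: $dir" >&2; return 1; }
  mkdir -p "$backup_dir" || return 1
  archive="$backup_dir/$(basename "$dir")-$(date +%Y%m%d%H%M%S).tar.gz"
  tar -czf "$archive" -C "$(dirname "$dir")" "$(basename "$dir")" || return 1
  rm -rf "$dir"
  echo "$archive"
}

# Example (backup path is an assumption):
#   backup_then_remove /etc/ssl/etcd/ssl /root/etcd-cert-backup
```

After the stale certificates are gone, rerunning the installation lets kk generate new ones instead of printing "Using existing ...".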

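4. etcd fails to start: listen tcp 155.1.94.77:2380: bind: cannot assign requested address

etcd cannot bind to this address because of the network segment. The node's actual IP is 10.1.1.1, while 155.1.94.77 is only a mapped address in the 155.x range. Only the 10.x address is assigned to the node, so only that address can be bound.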

May 31 15:48:14 node1 etcd[43663]: peerTLS: cert = /etc/ssl/etcd/ssl/member-node1.pem, key = /etc/ssl/etcd/ssl/member-node1-key.pem, trusted-ca = /etc/ssl/etcd/ssl/ca.pem, client-cert-auth = true, crl-file =

May 31 15:48:14 node1 etcd[43663]: listen tcp 155.1.94.77:2380: bind: cannot assign requested address

May 31 15:48:14 node1 systemd[1]: etcd.service: main process exited, code=exited, status=1/FAILURE

May 31 15:48:14 node1 systemd[1]: Failed to start etcd.

-- Subject: Unit etcd.service has failed

-- Defined-By: systemd

-- Support: http://lists.freedesktop.org/mailman/listinfo/systemd-devel

--

-- Unit etcd.service has failed.

--

-- The result is failed.
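Whether an address can be bound is easy to check on the node itself. A minimal sketch, assuming the iproute2 `ip` command is available; `has_ipv4` is a hypothetical helper that reads the output of `ip -o -4 addr show` on standard input and succeeds only if the given address is assigned to a local interface.

```shell
# Hypothetical helper: reads `ip -o -4 addr show` output on stdin and
# succeeds if the address given as $1 is assigned to an interface.
has_ipv4() {
  awk -v want="$1" '
    { split($4, a, "/"); if (a[1] == want) found = 1 }  # $4 looks like 10.1.1.1/24
    END { exit found ? 0 : 1 }
  '
}

# Example:
#   ip -o -4 addr show | has_ipv4 155.1.94.77 || echo "etcd cannot bind to this address"
```

An address that fails this check should not be used as `address` or `internalAddress` for the host in config-sample.yaml.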

5. Offline installation: the Harbor projects (repository names) must be created

Run the script:

[root@node1 ~]# ./create_project_harbor.sh

bash: ./create_project_harbor.sh: /bin/bash^M: bad interpreter: No such file or directory

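This is a file-format problem. The script has Windows (CRLF) line endings, so the trailing carriage return (shown as ^M) becomes part of the interpreter path and /bin/bash cannot be found. Strip the carriage returns: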

sed -i "s/\r//" create_project_harbor.sh
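A slightly more careful variant checks for carriage returns first and removes them only at the end of each line. This is a sketch, not a replacement for tools such as dos2unix; `fix_crlf` is a hypothetical helper name.

```shell
# Hypothetical helper: convert CRLF line endings to LF in place,
# keeping the file's permissions. Reports what it did.
fix_crlf() {
  file="$1"
  cr=$(printf '\r')
  if grep -q "$cr" "$file"; then
    tmp=$(mktemp)
    # Remove a carriage return only when it is the last character of a line.
    sed "s/${cr}\$//" "$file" > "$tmp"
    cat "$tmp" > "$file"   # overwrite contents so the file mode is preserved
    rm -f "$tmp"
    echo "converted: $file"
  else
    echo "already LF: $file"
  fi
}

# Example:
#   fix_crlf create_project_harbor.sh && ./create_project_harbor.sh
```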

5. Cluster installation fails because rhel-7.5-amd64.iso is required

./kk create cluster -f config-sample.yaml -a kubesphere.tar.gz --with-packages

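--with-packages: specify this option if operating system dependencies need to be installed.

The error says that rhel-7.5-amd64.iso is required.

RHEL is a commercial operating system, so install the dependencies yourself. conntrack and socat are the only two needed. Then run the installation without --with-packages.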

6. Cluster creation fails

W0603 11:26:02.921549 60122 utils.go:69] The recommended value for "clusterDNS" in "KubeletConfiguration" is: [10.233.0.10]; the provided value is: [169.254.25.10]

[init] Using Kubernetes version: v1.22.12

[preflight] Running pre-flight checks

[WARNING FileExisting-socat]: socat not found in system path

[WARNING SystemVerification]: this Docker version is not on the list of validated versions: 24.0.6. Latest validated version: 20.10

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR FileExisting-conntrack]: conntrack not found in system path # missing component

[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

To see the stack trace of this error execute with --v=5 or higher

11:26:03 CST stdout: [node1]

[preflight] Running pre-flight checks

W0603 11:26:03.194656 60202 removeetcdmember.go:80] [reset] No kubeadm config, using etcd pod spec to get data directory

[reset] No etcd config found. Assuming external etcd

[reset] Please, manually reset etcd to prevent further issues

[reset] Stopping the kubelet service

[reset] Unmounting mounted directories in "/var/lib/kubelet"

W0603 11:26:03.198708 60202 cleanupnode.go:109] [reset] Failed to evaluate the "/var/lib/kubelet" directory. Skipping its unmount and cleanup: lstat /var/lib/kubelet: no such file or directory

[reset] Deleting contents of config directories: [/etc/kubernetes/manifests /etc/kubernetes/pki]

[reset] Deleting files: [/etc/kubernetes/admin.conf /etc/kubernetes/kubelet.conf /etc/kubernetes/bootstrap-kubelet.conf /etc/kubernetes/controller-manager.conf /etc/kubernetes/scheduler.conf]

[reset] Deleting contents of stateful directories: [/var/lib/dockershim /var/run/kubernetes /var/lib/cni]

The reset process does not clean CNI configuration. To do so, you must remove /etc/cni/net.d

The reset process does not reset or clean up iptables rules or IPVS tables.

If you wish to reset iptables, you must do so manually by using the "iptables" command.

If your cluster was setup to utilize IPVS, run ipvsadm --clear (or similar)

to reset your system's IPVS tables.

The reset process does not clean your kubeconfig files and you must remove them manually.

Please, check the contents of the $HOME/.kube/config file.

11:26:03 CST message: [node1]

init kubernetes cluster failed: Failed to exec command: sudo -E /bin/bash -c "/usr/local/bin/kubeadm init --config=/etc/kubernetes/kubeadm-config.yaml --ignore-preflight-errors=FileExisting-crictl,ImagePull"

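The log reports missing packages.

socat not found in system path

- This is a warning: the socat tool was not found on the system. socat is a multipurpose network utility. kubeadm's preflight checks do not strictly require it, but installing it is recommended because some operations may use it.

Docker version is not on the list of validated versions

- This is also a warning: the Docker version in use (24.0.6) is not on the list of versions validated by kubeadm. The warning does not necessarily block kubeadm, but using a validated version (such as 20.10) avoids potential problems.

conntrack not found in system path

- This is a fatal error: kubeadm's preflight checks could not find the conntrack command, the tool for working with the Linux kernel's connection tracking. Install the conntrack-tools package, or check that it is installed correctly.

kubeadm reset output

- During the reset, kubeadm tries to stop the kubelet service, unmount mounted directories, and delete the Kubernetes configuration files and state directories. According to the log, kubeadm deleted some files and directories but could not evaluate /var/lib/kubelet because that directory does not exist.

CNI configuration and iptables/IPVS tables are not cleaned

- The reset does not clean the CNI (Container Network Interface) configuration or the iptables/IPVS tables. To clean them, run the relevant commands manually.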

yum -y install conntrack-tools

yum -y install socat
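To catch this before kubeadm's preflight does, the required commands can be checked up front. A minimal sketch; `missing_cmds` is a hypothetical helper that prints every command from its argument list that is not found in PATH.

```shell
# Hypothetical helper: print each named command that is not in PATH.
# Prints nothing when everything is available.
missing_cmds() {
  for cmd in "$@"; do
    command -v "$cmd" >/dev/null 2>&1 || echo "$cmd"
  done
}

# Example:
#   missing=$(missing_cmds conntrack socat)
#   [ -z "$missing" ] || echo "install first: $missing"
```

Running this on every node before `./kk create cluster` avoids a failed `kubeadm init` followed by an automatic reset.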

7. Calico initialization hangs during cluster creation and times out because a mount path cannot be found. Error log:

kubelet MountVolume.SetUp failed for volume "bpffs" : hostPath type check failed: /sys/fs/bpf is not a directory

14:17:31 CST message: [node1]

Default storageClass in cluster is not unique!

14:17:31 CST skipped: [node1]

14:17:31 CST [DeployStorageClassModule] Deploy OpenEBS as cluster default StorageClass

14:17:31 CST message: [node1]

Default storageClass in cluster is not unique!

14:17:31 CST skipped: [node1]

# List the pods

[root@node1 logs]# kubectl get po -A -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-system calico-kube-controllers-769bbc4c9-2smqd 0/1 Pending 0 4h39m <none> <none> <none> <none>

kube-system calico-node-hsj57 0/1 Init:0/3 0 4h39m 10.1.1.1 node1 <none> <none>

kube-system coredns-558b97598-d6v2c 0/1 Pending 0 4h39m <none> <none> <none> <none>

kube-system coredns-558b97598-gqwh4 0/1 Pending 0 4h39m <none> <none> <none> <none>

kube-system kube-apiserver-node1 1/1 Running 0 4h39m 10.1.1.1 node1 <none> <none>

kube-system kube-controller-manager-node1 1/1 Running 0 4h39m 10.1.1.1 node1 <none> <none>

kube-system kube-proxy-c4tg9 1/1 Running 0 4h39m 10.1.1.1 node1 <none> <none>

kube-system kube-scheduler-node1 1/1 Running 0 4h39m 10.1.1.1 node1 <none> <none>

kube-system nodelocaldns-kcz4p 1/1 Running 0 4h39m 10.1.1.1 node1 <none> <none>

kube-system openebs-localpv-provisioner-7869648cbc-cls8s 0/1 Pending 0 4h39m <none> <none> <none> <none>

kubesphere-system ks-installer-6c6c47d8f8-jnzj9 0/1 Pending 0 4h39m <none> <none> <none> <none>

# Inspect the pod events

kubectl describe pod calico-node-hsj57 -n kube-system

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedMount 7m6s (x117 over 4h27m) kubelet MountVolume.SetUp failed for volume "bpffs" : hostPath type check failed: /sys/fs/bpf is not a directory

Warning FailedMount 2m33s (x128 over 4h7m) kubelet (combined from similar events): Unable to attach or mount volumes: unmounted volumes=[bpffs], unattached volumes=[policysync kube-api-access-xqn6h var-run-calico bpffs host-local-net-dir lib-modules cni-log-dir cni-bin-dir xtables-lock var-lib-calico cni-net-dir sys-fs nodeproc]: timed out waiting for the condition

[root@node1 logs]# ls -ld /sys/fs/bpf

ls: cannot access /sys/fs/bpf: No such file or directory

[root@node1 logs]# cat /boot/config/-$(uname -r)| grep CONFIG_BPF

cat: /boot/config/-3.10.0-862.el7.x86_64: No such file or directory

[root@node1 logs]# cat /boot/config-$(uname -r)| grep CONFIG_BPF

CONFIG_BPF_JIT=y

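The kernel does not support this mount. This depends on the kernel version: the node runs CentOS 7.5 (kernel 3.10.0-862.el7), which only has CONFIG_BPF_JIT enabled.

For comparison, the same check on CentOS 7.8: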

[root@node1 cert]# cat /boot/config-$(uname -r) | grep CONFIG_BPF

CONFIG_BPF=y

CONFIG_BPF_SYSCALL=y

CONFIG_BPF_JIT_ALWAYS_ON=y

CONFIG_BPF_JIT=y

CONFIG_BPF_EVENTS=y

CONFIG_BPF_KPROBE_OVERRIDE=y

The system kernel has to be reinstalled with a version that has BPF support enabled.
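The comparison between the two kernels can be turned into a quick pre-installation check. A minimal sketch, assuming that the bpf filesystem mounted at /sys/fs/bpf depends on `CONFIG_BPF_SYSCALL` (this dependency is an assumption, not stated in the notes above); `kernel_has_bpf_syscall` is a hypothetical helper.

```shell
# Hypothetical helper: succeeds if the given kernel config file has
# CONFIG_BPF_SYSCALL built in.
kernel_has_bpf_syscall() {
  grep -q '^CONFIG_BPF_SYSCALL=y$' "$1"
}

# Example:
#   kernel_has_bpf_syscall "/boot/config-$(uname -r)" \
#     || echo "kernel lacks CONFIG_BPF_SYSCALL; calico-node may not be able to mount bpffs"
```

With the two outputs shown above, the CentOS 7.5 kernel fails this check and the CentOS 7.8 kernel passes it.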

8. Error: failed to create network harbor_harbor: Error response from daemon

Failed to Setup IP tables: Unable to enable SKIP DNAT rule. Restarting Docker resolves it.

systemctl restart docker

相关文章:

kubesphere报错

1.安装过程报错unable to sign certificate: must specify a CommonName [rootnode1 ~]# ./kk init registry -f config-sample.yaml -a kubesphere.tar.gz _ __ _ _ __ | | / / | | | | / / | |/ / _ _| |__ ___| |/…...

【QT5】<总览二> QT信号槽、对象树及样式表

文章目录 前言 一、QT信号与槽 1. 信号槽连接模型 2. 信号槽介绍 3. 自定义信号槽 二、不使用UI文件编程 三、QT的对象树 四、添加资源文件 五、样式表的使用 六、QSS文件的使用 前言 承接【QT5】<总览一> QT环境搭建、快捷键及编程规范。若存…...

2024.05.24 校招 实习 内推 面经

绿*泡*泡VX: neituijunsir 交流*裙 ,内推/实习/校招汇总表格 1、实习丨蔚来2025届实习生招募计划开启(内推) 实习丨蔚来2025届实习生招募计划开启(内推) 2、校招&实习丨联芯集成电路2025届暑期实习…...

如何理解 Java 8 引入的 Lambda 表达式及其使用场景

Lambda表达式是Java 8引入的一项重要特性,它使得编写简洁、可读和高效的代码成为可能。Lambda表达式本质上是一种匿名函数,能够更简洁地表示可传递的代码块,用于简化函数式编程的实现。 一、Lambda表达式概述 1. 什么是Lambda表达式 Lambd…...

GPT-4与GPT-4O的区别详解:面向小白用户

1. 模型介绍 在人工智能的语言模型领域,OpenAI的GPT-4和GPT-4O是最新的成员。这两个模型虽然来源于相同的基础技术,但在功能和应用上有着明显的区别。 GPT-4:这是一个通用型语言模型,可以理解和生成自然语言。无论是写作、对话还…...

使用throttle防止按钮多次点击

背景:如上图所示,点击按钮,防止按钮点击多次 <div class"footer"><el-button type"primary" click"submitThrottle">发起咨询 </el-button> </div>import { throttle } from loda…...

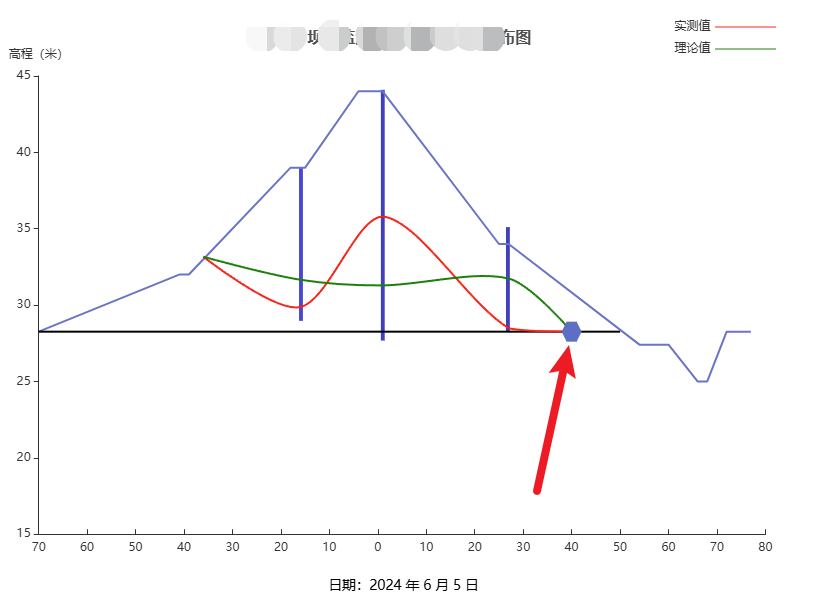

Echarts 在折线图的指定位置绘制一个图标展示

文章目录 需求分析需求 在线段交汇处用一个六边形图标展示 分析 可以使用 markPoint 和 symbol 属性来实现。这是一个更简单和更标准的方法来添加标记点在运行下述代码后,你将在浏览器中看到一个折线图,其中在 [3, 35] (即图表中第四个数据点 Thu 的 y 值为 35 的位置)处…...

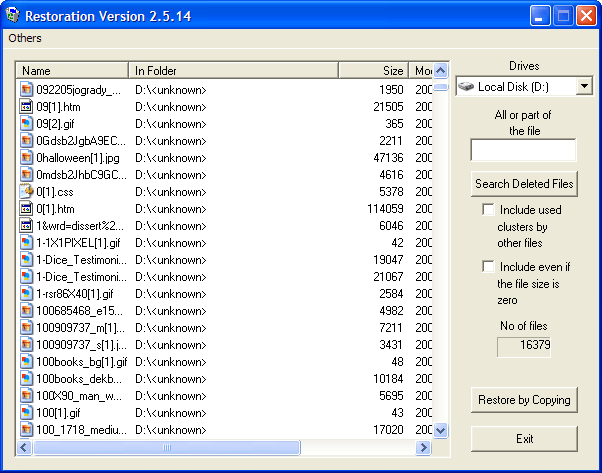

适用于 Windows 的 8 大数据恢复软件

数据恢复软件可帮助您恢复因意外删除或由于某些技术故障(如硬盘损坏等)而丢失的数据。这些工具可帮助您从硬盘驱动器 (HDD) 中高效地恢复丢失的数据,因为这些工具不支持从 SSD 恢复数据。重要的是要了解,您删除的数据不会被系统永…...

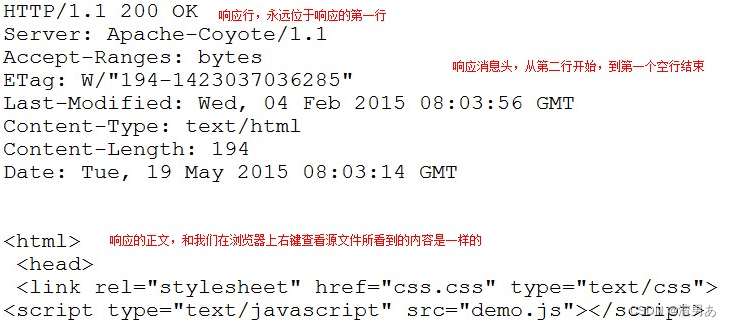

HTTP基础

一、HTTP协议 1、HTTP协议概念 HTTP的全称是:Hyper Text Transfer Protocol,意为 超文本传输协议。它指的是服务器和客户端之间交互必须遵循的一问一答的规则。形容这个规则:问答机制、握手机制。 它规范了请求和响应内容的类型和格式, 是基于…...

深入了解Linux命令:visudo

深入了解Linux命令:visudo 在Linux系统中,sudo(superuser do)是一个允许用户以其他用户身份(通常是超级用户或其他用户)执行命令的程序。sudo的配置文件/etc/sudoers存储了哪些用户可以执行哪些命令的权限…...

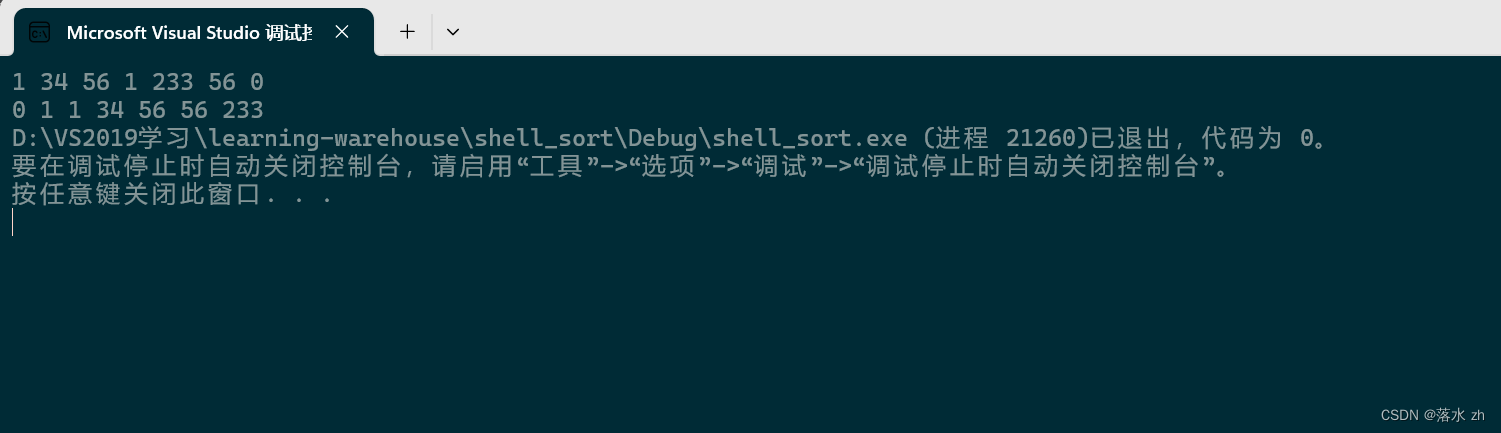

十大排序 —— 希尔排序

十大排序 —— 希尔排序 什么是希尔排序插入排序希尔排序递归版本 我们今天来看另一个很有名的排序——希尔排序 什么是希尔排序 希尔排序(Shell Sort)是插入排序的一种更高效的改进版本,由Donald Shell于1959年提出。它通过比较相距一定间…...

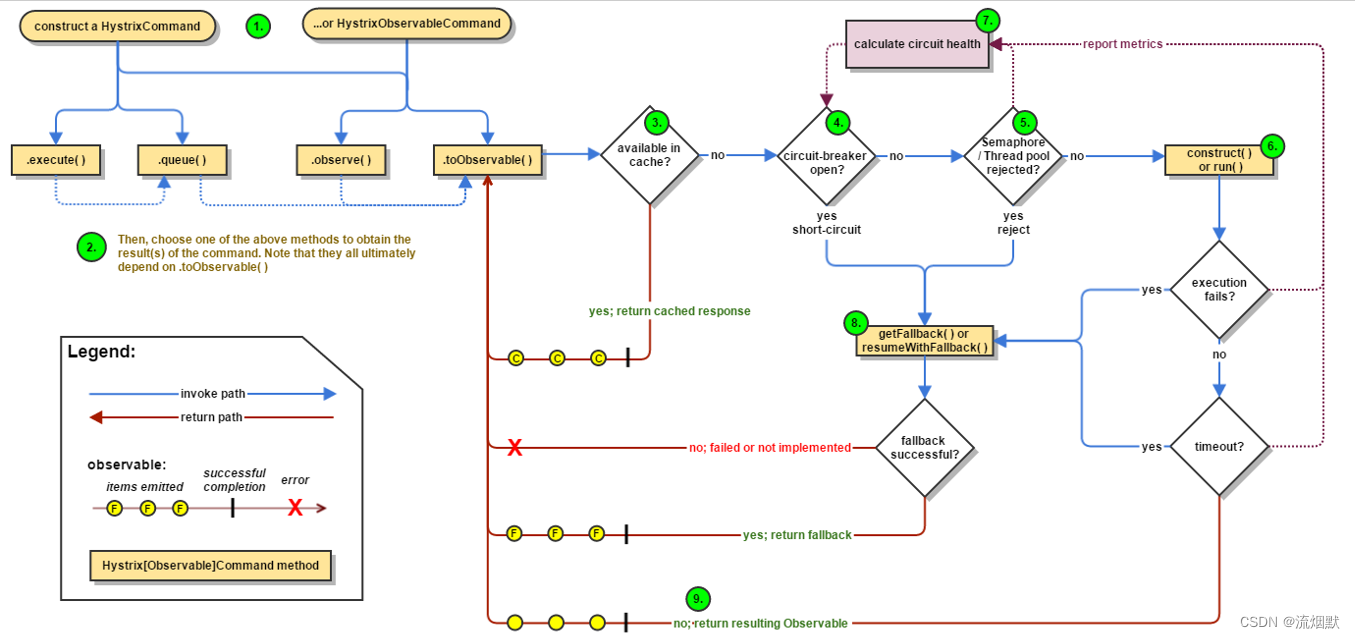

SpringCloud Hystrix服务熔断实例总结

SpringCloud Hystrix断路器-服务熔断与降级和HystrixDashboard SpringCloud Hystrix服务降级实例总结 本文采用版本为Hoxton.SR1系列,SpringBoot为2.2.2.RELEASE <dependency><groupId>org.springframework.cloud</groupId><artifactId>s…...

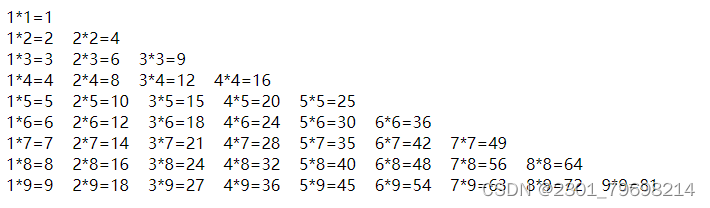

为什么没有输出九九乘法表?

下面的程序本来想输出九九乘法表到屏幕上,为什么没有输出呢?怎样修改? <!DOCTYPE html> <html> <head> <meta charset"utf-8" /> <title>我的HTML练习</title> …...

EasyRecovery5步轻松恢复电脑手机数据,EasyRecovery带你探索!

在当今的数字化时代,数据已经成为我们生活和工作中不可或缺的一部分。无论是个人照片、工作文件还是重要的商业信息,数据的安全存储和恢复都显得尤为重要。EasyRecovery作为一款广受欢迎的数据恢复软件,为用户提供了强大的数据恢复功能&#…...

904. 水果成篮

904. 水果成篮 原题链接:完成情况:解题思路:参考代码:_904水果成篮_滑动窗口 错误经验吸取 原题链接: 904. 水果成篮 https://leetcode.cn/problems/fruit-into-baskets/description/ 完成情况: 解题思…...

在618集中上新,蕉下、VVC们为何押注拼多多?

编辑|Ray 自前两年崛起的防晒产品,今年依旧热度不减。 头部品牌蕉下,2020年入驻拼多多,如今年销售额已过亿元。而自去年起重点押注拼多多的时尚防晒品牌VVC,很快销量翻番。这两家公司,不约而同在618之前上…...

Maximo Attachments配置

以下内容以 Windows 上 Maximo 为例,并假定设置 DOCLINKS 的根路径为 “C:\DOCLINKS”。 HTTP Server配置 修改C:\Program Files\IBM\HTTPServer\conf\httpd.conf文件 查找 “DocumentRoot” 并修改成如下配置 DocumentRoot "C:\DOCLINKS"查找 “<…...

一分钟了解香港的场外期权报价

香港的场外期权报价 在香港这个国际金融中心,场外期权交易是金融市场不可或缺的一部分。场外期权,作为一种非标准化的金融衍生品,为投资者提供了在特定时间以约定价格买入或卖出某种资产的机会。对于希望参与这一市场的投资者来说࿰…...

专业开放式耳机什么牌子更好?六大技巧教你不踩坑!

相信很多入坑的朋友再最开始挑选耳机的时候都会矛盾,现在市面上这么多耳机,我该怎么选择?其实对于开放式耳机,大家都没有一个明确的概念,可能会为了音质的一小点提升而耗费大量的资金,毕竟这是一个无底洞。…...

注意!!24软考系统集成有变化,第三版考试一定要看这个!

系统集成在今年年初改版之后,上半年的考试也取消了,留给大家充足的时间来学习新的教材和考纲。但11月也将是第三版考纲的第1次考试,重点到底有什么?今天带大家详细的了解一下最新版中项考试大纲。 一、考试说明 1.考试目标 通过…...

Vue记事本应用实现教程

文章目录 1. 项目介绍2. 开发环境准备3. 设计应用界面4. 创建Vue实例和数据模型5. 实现记事本功能5.1 添加新记事项5.2 删除记事项5.3 清空所有记事 6. 添加样式7. 功能扩展:显示创建时间8. 功能扩展:记事项搜索9. 完整代码10. Vue知识点解析10.1 数据绑…...

springboot 百货中心供应链管理系统小程序

一、前言 随着我国经济迅速发展,人们对手机的需求越来越大,各种手机软件也都在被广泛应用,但是对于手机进行数据信息管理,对于手机的各种软件也是备受用户的喜爱,百货中心供应链管理系统被用户普遍使用,为方…...

R语言AI模型部署方案:精准离线运行详解

R语言AI模型部署方案:精准离线运行详解 一、项目概述 本文将构建一个完整的R语言AI部署解决方案,实现鸢尾花分类模型的训练、保存、离线部署和预测功能。核心特点: 100%离线运行能力自包含环境依赖生产级错误处理跨平台兼容性模型版本管理# 文件结构说明 Iris_AI_Deployme…...

《Playwright:微软的自动化测试工具详解》

Playwright 简介:声明内容来自网络,将内容拼接整理出来的文档 Playwright 是微软开发的自动化测试工具,支持 Chrome、Firefox、Safari 等主流浏览器,提供多语言 API(Python、JavaScript、Java、.NET)。它的特点包括&a…...

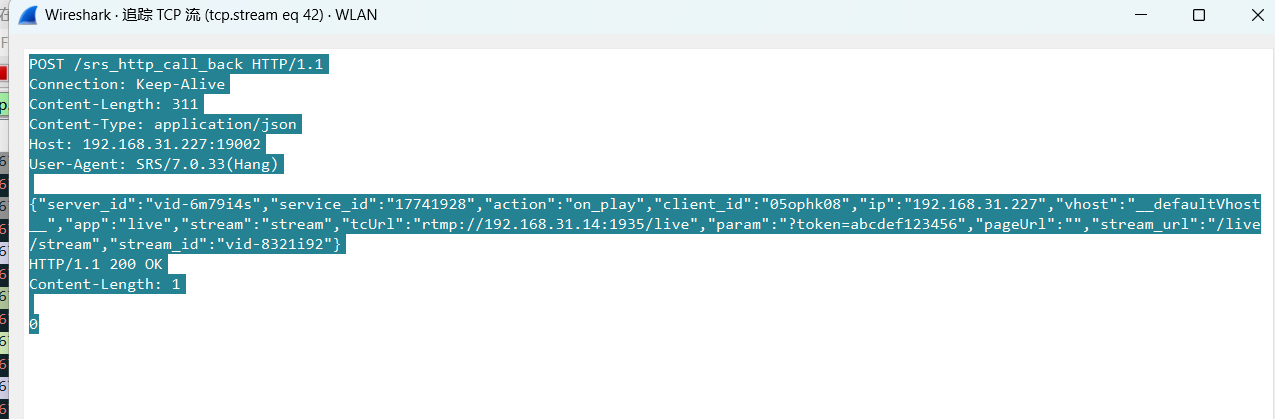

srs linux

下载编译运行 git clone https:///ossrs/srs.git ./configure --h265on make 编译完成后即可启动SRS # 启动 ./objs/srs -c conf/srs.conf # 查看日志 tail -n 30 -f ./objs/srs.log 开放端口 默认RTMP接收推流端口是1935,SRS管理页面端口是8080,可…...

镜像里切换为普通用户

如果你登录远程虚拟机默认就是 root 用户,但你不希望用 root 权限运行 ns-3(这是对的,ns3 工具会拒绝 root),你可以按以下方法创建一个 非 root 用户账号 并切换到它运行 ns-3。 一次性解决方案:创建非 roo…...

解决本地部署 SmolVLM2 大语言模型运行 flash-attn 报错

出现的问题 安装 flash-attn 会一直卡在 build 那一步或者运行报错 解决办法 是因为你安装的 flash-attn 版本没有对应上,所以报错,到 https://github.com/Dao-AILab/flash-attention/releases 下载对应版本,cu、torch、cp 的版本一定要对…...

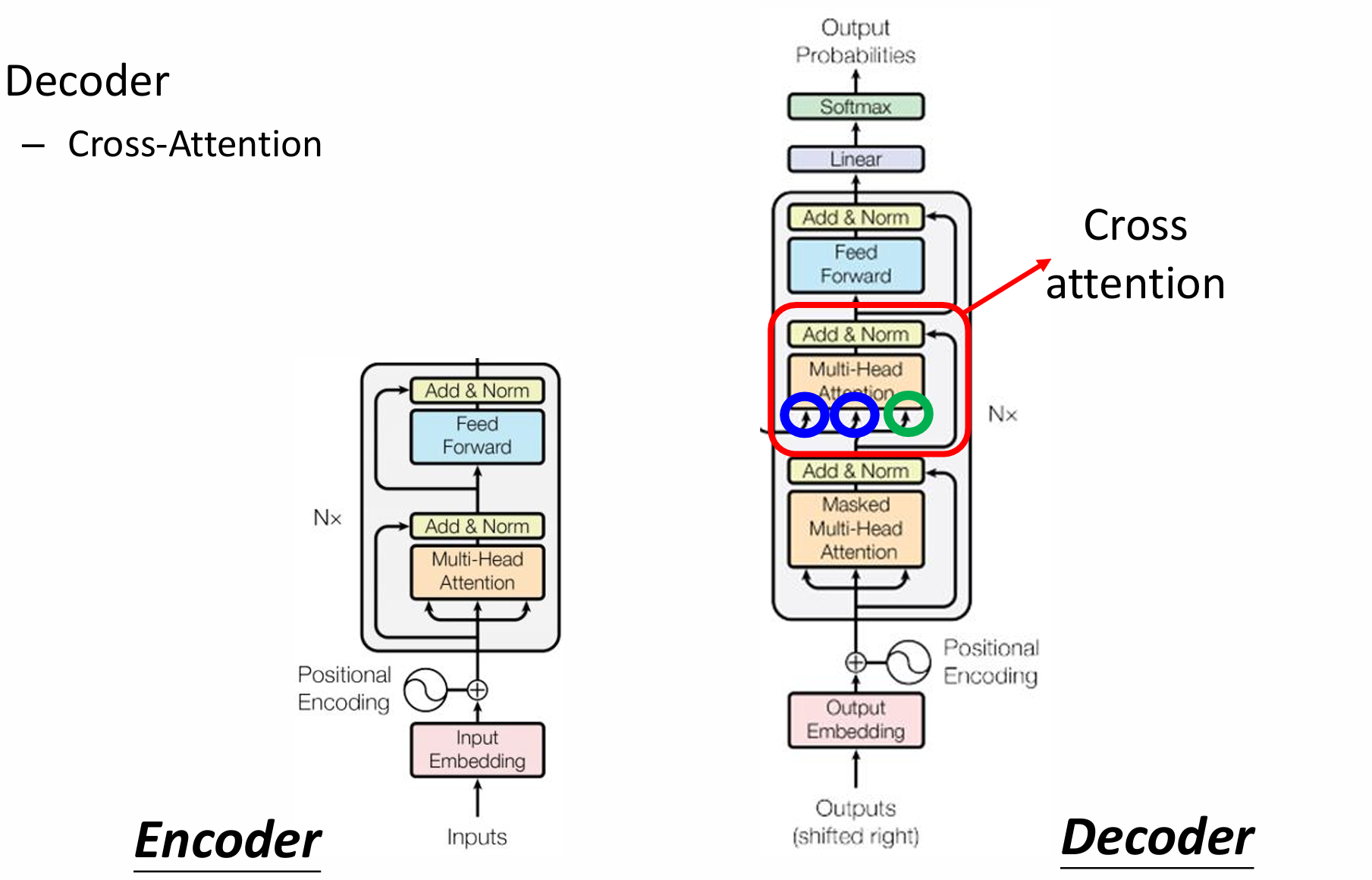

自然语言处理——Transformer

自然语言处理——Transformer 自注意力机制多头注意力机制Transformer 虽然循环神经网络可以对具有序列特性的数据非常有效,它能挖掘数据中的时序信息以及语义信息,但是它有一个很大的缺陷——很难并行化。 我们可以考虑用CNN来替代RNN,但是…...

LangChain知识库管理后端接口:数据库操作详解—— 构建本地知识库系统的基础《二》

这段 Python 代码是一个完整的 知识库数据库操作模块,用于对本地知识库系统中的知识库进行增删改查(CRUD)操作。它基于 SQLAlchemy ORM 框架 和一个自定义的装饰器 with_session 实现数据库会话管理。 📘 一、整体功能概述 该模块…...

从面试角度回答Android中ContentProvider启动原理

Android中ContentProvider原理的面试角度解析,分为已启动和未启动两种场景: 一、ContentProvider已启动的情况 1. 核心流程 触发条件:当其他组件(如Activity、Service)通过ContentR…...