深度学习每周学习总结J1(ResNet-50算法实战与解析 - 鸟类识别)

- 🍨 本文为🔗365天深度学习训练营 中的学习记录博客

- 🍖 原作者:K同学啊 | 接辅导、项目定制

目录

- 0. 总结

- 1. 设置GPU

- 2. 导入数据及处理部分

- 3. 划分数据集

- 4. 模型构建部分

- 5. 设置超参数:定义损失函数,学习率,以及根据学习率定义优化器等

- 6. 训练函数

- 7. 测试函数

- 7. 正式训练

- 9. 结果可视化

- 10. 模型的保存

- 11. 使用训练好的模型进行预测

0. 总结

数据导入及处理部分:本次数据导入没有使用torchvision自带的数据集,需要将原始数据进行处理包括数据导入,查看数据分类情况,定义transforms,进行数据类型转换等操作。

划分数据集:划定训练集测试集后,再使用torch.utils.data中的DataLoader()分别加载上一步处理好的训练及测试数据,查看批处理维度.

模型构建部分:resnet-50

设置超参数:在这之前需要定义损失函数,学习率(动态学习率),以及根据学习率定义优化器(例如SGD随机梯度下降),用来在训练中更新参数,最小化损失函数。

定义训练函数:函数的传入的参数有四个,分别是设置好的DataLoader(),定义好的模型,损失函数,优化器。函数内部初始化损失准确率为0,接着开始循环,使用DataLoader()获取一个批次的数据,对这个批次的数据带入模型得到预测值,然后使用损失函数计算得到损失值。接下来就是进行反向传播以及使用优化器优化参数,梯度清零放在反向传播之前或者是使用优化器优化之后都是可以的,一般是默认放在反向传播之前。

定义测试函数:函数传入的参数相比训练函数少了优化器,只需传入设置好的DataLoader(),定义好的模型,损失函数。此外除了处理批次数据时无需再设置梯度清零、返向传播以及优化器优化参数,其余部分均和训练函数保持一致。

训练过程:定义训练次数,有几次就使用整个数据集进行几次训练,初始化四个空list分别存储每次训练及测试的准确率及损失。使用model.train()开启训练模式,调用训练函数得到准确率及损失。使用model.eval()将模型设置为评估模式,调用测试函数得到准确率及损失。接着就是将得到的训练及测试的准确率及损失存储到相应list中并合并打印出来,得到每一次整体训练后的准确率及损失。

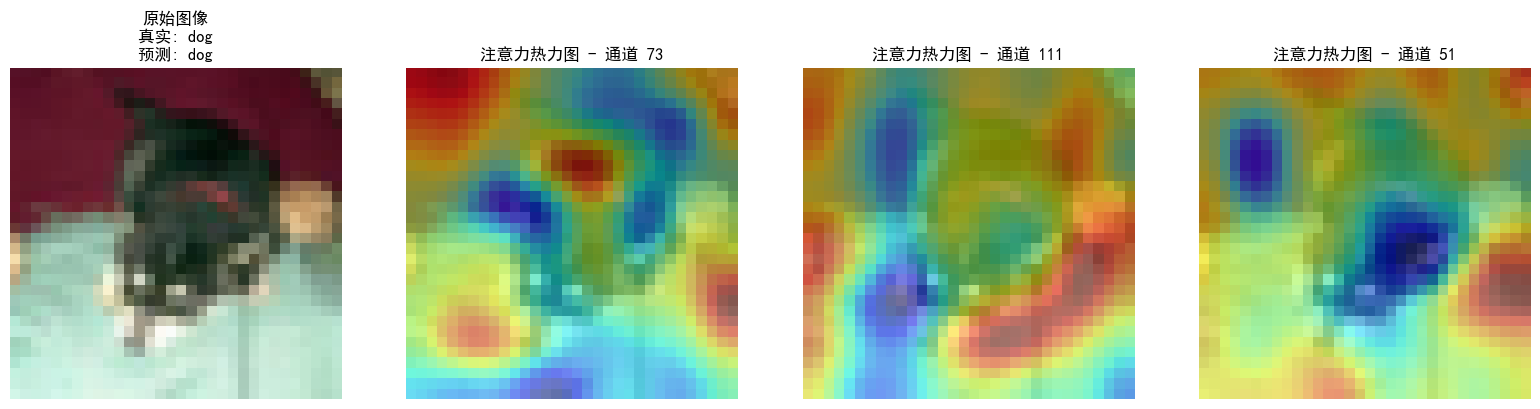

结果可视化

模型的保存,调取及使用。在PyTorch中,通常使用 torch.save(model.state_dict(), ‘model.pth’) 保存模型的参数,使用 model.load_state_dict(torch.load(‘model.pth’)) 加载参数。

需要改进优化的地方:在保证整体流程没有问题的情况下,继续细化细节研究,比如一些函数的原理及作用,如何提升训练集准确率等问题。

import torch

import torch.nn as nn

import torchvision

from torchvision import datasets,transforms

from torch.utils.data import DataLoader

import torchvision.models as models

import torch.nn.functional as Fimport os,PIL,pathlib

import matplotlib.pyplot as plt

import warningswarnings.filterwarnings('ignore') # 忽略警告信息plt.rcParams['font.sans-serif'] = ['SimHei'] # 用来正常显示中文标签

plt.rcParams['axes.unicode_minus'] = False # 用来正常显示负号

plt.rcParams['figure.dpi'] = 100 # 分辨率

1. 设置GPU

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

device

device(type='cuda')

2. 导入数据及处理部分

# 获取数据分布情况

path_dir = './data/bird_photos/'

path_dir = pathlib.Path(path_dir)paths = list(path_dir.glob('*'))

# classNames = [str(path).split("\\")[-1] for path in paths] # ['Bananaquit', 'Black Skimmer', 'Black Throated Bushtiti', 'Cockatoo']

classNames = [path.parts[-1] for path in paths]

classNames

['Bananaquit', 'Black Skimmer', 'Black Throated Bushtiti', 'Cockatoo']

# 定义transforms 并处理数据

train_transforms = transforms.Compose([transforms.Resize([224,224]), # 将输入图片resize成统一尺寸transforms.RandomHorizontalFlip(), # 随机水平翻转transforms.ToTensor(), # 将PIL Image 或 numpy.ndarray 装换为tensor,并归一化到[0,1]之间transforms.Normalize( # 标准化处理 --> 转换为标准正太分布(高斯分布),使模型更容易收敛mean = [0.485,0.456,0.406], # 其中 mean=[0.485,0.456,0.406]与std=[0.229,0.224,0.225] 从数据集中随机抽样计算得到的。std = [0.229,0.224,0.225])

])

test_transforms = transforms.Compose([transforms.Resize([224,224]),transforms.ToTensor(),transforms.Normalize(mean = [0.485,0.456,0.406],std = [0.229,0.224,0.225])

])

total_data = datasets.ImageFolder('./data/bird_photos/',transform = train_transforms)

total_data

Dataset ImageFolderNumber of datapoints: 565Root location: ./data/bird_photos/StandardTransform

Transform: Compose(Resize(size=[224, 224], interpolation=bilinear, max_size=None, antialias=True)RandomHorizontalFlip(p=0.5)ToTensor()Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]))

total_data.class_to_idx

{'Bananaquit': 0,'Black Skimmer': 1,'Black Throated Bushtiti': 2,'Cockatoo': 3}

3. 划分数据集

# 划分数据集

train_size = int(len(total_data) * 0.8)

test_size = len(total_data) - train_sizetrain_dataset,test_dataset = torch.utils.data.random_split(total_data,[train_size,test_size])

train_dataset,test_dataset

(<torch.utils.data.dataset.Subset at 0x23b545994b0>,<torch.utils.data.dataset.Subset at 0x23b54599300>)

# 定义DataLoader用于数据集的加载batch_size = 32train_dl = torch.utils.data.DataLoader(train_dataset,batch_size = batch_size,shuffle = True,num_workers = 1

)

test_dl = torch.utils.data.DataLoader(test_dataset,batch_size = batch_size,shuffle = True,num_workers = 1

)

# 观察数据维度

for X,y in test_dl:print("Shape of X [N,C,H,W]: ",X.shape)print("Shape of y: ", y.shape,y.dtype)break

Shape of X [N,C,H,W]: torch.Size([32, 3, 224, 224])

Shape of y: torch.Size([32]) torch.int64

4. 模型构建部分

import torch

import torch.nn as nn

import torch.nn.functional as Fclass ConvBlock(nn.Module):def __init__(self, in_channels, filters, kernel_size, strides=1):super(ConvBlock, self).__init__()filters1, filters2, filters3 = filtersself.conv1 = nn.Conv2d(in_channels, filters1, kernel_size=1, stride=strides)self.bn1 = nn.BatchNorm2d(filters1)self.conv2 = nn.Conv2d(filters1, filters2, kernel_size=kernel_size, padding=1)self.bn2 = nn.BatchNorm2d(filters2)self.conv3 = nn.Conv2d(filters2, filters3, kernel_size=1)self.bn3 = nn.BatchNorm2d(filters3)self.shortcut = nn.Sequential()if in_channels != filters3 or strides != 1:self.shortcut = nn.Sequential(nn.Conv2d(in_channels, filters3, kernel_size=1, stride=strides),nn.BatchNorm2d(filters3))def forward(self, x):shortcut = self.shortcut(x)x = F.relu(self.bn1(self.conv1(x)))x = F.relu(self.bn2(self.conv2(x)))x = self.bn3(self.conv3(x))x += shortcutx = F.relu(x)return xclass ResNet50(nn.Module):def __init__(self, num_classes=1000):super(ResNet50, self).__init__()self.pad = nn.ZeroPad2d(3)self.conv1 = nn.Conv2d(3, 64, kernel_size=7, stride=2, padding=3)self.bn1 = nn.BatchNorm2d(64)self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)# Define block layers with appropriate strides and filter sizesself.layer1 = self._build_layer(64, [64, 64, 256], blocks=3, strides=1)self.layer2 = self._build_layer(256, [128, 128, 512], blocks=4, strides=2)self.layer3 = self._build_layer(512, [256, 256, 1024], blocks=6, strides=2)self.layer4 = self._build_layer(1024, [512, 512, 2048], blocks=3, strides=2)self.avgpool = nn.AdaptiveAvgPool2d((1, 1))self.fc = nn.Linear(2048, num_classes)def _build_layer(self, in_channels, filters, blocks, strides=1):layers = []# Add the first block with potential downsampling (by strides)layers.append(ConvBlock(in_channels, filters, kernel_size=3, strides=strides))in_channels = filters[-1]# Add the additional blocks in this layer without downsamplingfor _ in range(1, blocks):layers.append(ConvBlock(in_channels, filters, kernel_size=3, strides=1))return nn.Sequential(*layers)def forward(self, x):x = self.pad(x)x = F.relu(self.bn1(self.conv1(x)))x = self.maxpool(x)x = self.layer1(x)x = self.layer2(x)x = self.layer3(x)x = self.layer4(x)x = self.avgpool(x)x = torch.flatten(x, 1)x = self.fc(x)return x# Ensure the model uses the correct device

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

model = ResNet50()

model.to(device)

ResNet50((pad): ZeroPad2d((3, 3, 3, 3))(conv1): Conv2d(3, 64, kernel_size=(7, 7), stride=(2, 2), padding=(3, 3))(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(maxpool): MaxPool2d(kernel_size=3, stride=2, padding=1, dilation=1, ceil_mode=False)(layer1): Sequential((0): ConvBlock((conv1): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1))(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1))(bn3): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(shortcut): Sequential((0): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1))(1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)))(1): ConvBlock((conv1): Conv2d(256, 64, kernel_size=(1, 1), stride=(1, 1))(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1))(bn3): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(shortcut): Sequential())(2): ConvBlock((conv1): Conv2d(256, 64, kernel_size=(1, 1), stride=(1, 1))(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1))(bn3): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(shortcut): Sequential()))(layer2): Sequential((0): ConvBlock((conv1): Conv2d(256, 128, kernel_size=(1, 1), stride=(2, 2))(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1))(bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(shortcut): Sequential((0): Conv2d(256, 512, kernel_size=(1, 1), stride=(2, 2))(1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)))(1): ConvBlock((conv1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1))(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1))(bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(shortcut): Sequential())(2): ConvBlock((conv1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1))(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1))(bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(shortcut): Sequential())(3): ConvBlock((conv1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1))(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1))(bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(shortcut): Sequential()))(layer3): Sequential((0): ConvBlock((conv1): Conv2d(512, 256, kernel_size=(1, 1), stride=(2, 2))(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1))(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(shortcut): Sequential((0): Conv2d(512, 1024, kernel_size=(1, 1), stride=(2, 2))(1): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)))(1): ConvBlock((conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1))(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1))(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(shortcut): Sequential())(2): ConvBlock((conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1))(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1))(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(shortcut): Sequential())(3): ConvBlock((conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1))(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1))(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(shortcut): Sequential())(4): ConvBlock((conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1))(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1))(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(shortcut): Sequential())(5): ConvBlock((conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1))(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1))(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(shortcut): Sequential()))(layer4): Sequential((0): ConvBlock((conv1): Conv2d(1024, 512, kernel_size=(1, 1), stride=(2, 2))(bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv3): Conv2d(512, 2048, kernel_size=(1, 1), stride=(1, 1))(bn3): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(shortcut): Sequential((0): Conv2d(1024, 2048, kernel_size=(1, 1), stride=(2, 2))(1): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)))(1): ConvBlock((conv1): Conv2d(2048, 512, kernel_size=(1, 1), stride=(1, 1))(bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv3): Conv2d(512, 2048, kernel_size=(1, 1), stride=(1, 1))(bn3): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(shortcut): Sequential())(2): ConvBlock((conv1): Conv2d(2048, 512, kernel_size=(1, 1), stride=(1, 1))(bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv3): Conv2d(512, 2048, kernel_size=(1, 1), stride=(1, 1))(bn3): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(shortcut): Sequential()))(avgpool): AdaptiveAvgPool2d(output_size=(1, 1))(fc): Linear(in_features=2048, out_features=1000, bias=True)

)

5. 设置超参数:定义损失函数,学习率,以及根据学习率定义优化器等

# loss_fn = nn.CrossEntropyLoss() # 创建损失函数# learn_rate = 1e-3 # 初始学习率

# def adjust_learning_rate(optimizer,epoch,start_lr):

# # 每两个epoch 衰减到原来的0.98

# lr = start_lr * (0.92 ** (epoch//2))

# for param_group in optimizer.param_groups:

# param_group['lr'] = lr# optimizer = torch.optim.Adam(model.parameters(),lr=learn_rate)

# 调用官方接口示例

loss_fn = nn.CrossEntropyLoss()learn_rate = 1e-4

lambda1 = lambda epoch:(0.92**(epoch//2))optimizer = torch.optim.Adam(model.parameters(),lr = learn_rate)

scheduler = torch.optim.lr_scheduler.LambdaLR(optimizer,lr_lambda=lambda1) # 选定调整方法

6. 训练函数

# 训练函数

def train(dataloader,model,loss_fn,optimizer):size = len(dataloader.dataset) # 训练集大小num_batches = len(dataloader) # 批次数目train_loss,train_acc = 0,0for X,y in dataloader:X,y = X.to(device),y.to(device)# 计算预测误差pred = model(X)loss = loss_fn(pred,y)# 反向传播optimizer.zero_grad()loss.backward()optimizer.step()# 记录acc与losstrain_acc += (pred.argmax(1)==y).type(torch.float).sum().item()train_loss += loss.item()train_acc /= sizetrain_loss /= num_batchesreturn train_acc,train_loss

7. 测试函数

# 测试函数

def test(dataloader,model,loss_fn):size = len(dataloader.dataset)num_batches = len(dataloader)test_acc,test_loss = 0,0with torch.no_grad():for X,y in dataloader:X,y = X.to(device),y.to(device)# 计算losspred = model(X)loss = loss_fn(pred,y)test_acc += (pred.argmax(1)==y).type(torch.float).sum().item()test_loss += loss.item()test_acc /= sizetest_loss /= num_batchesreturn test_acc,test_loss

7. 正式训练

import copyepochs = 40train_acc = []

train_loss = []

test_acc = []

test_loss = []best_acc = 0.0for epoch in range(epochs):# 更新学习率——使用自定义学习率时使用# adjust_learning_rate(optimizer,epoch,learn_rate)model.train()epoch_train_acc,epoch_train_loss = train(train_dl,model,loss_fn,optimizer)scheduler.step() # 更新学习率——调用官方动态学习率时使用model.eval()epoch_test_acc,epoch_test_loss = test(test_dl,model,loss_fn)# 保存最佳模型到 best_modelif epoch_test_acc > best_acc:best_acc = epoch_test_accbest_model = copy.deepcopy(model)train_acc.append(epoch_train_acc)train_loss.append(epoch_train_loss)test_acc.append(epoch_test_acc)test_loss.append(epoch_test_loss)# 获取当前学习率lr = optimizer.state_dict()['param_groups'][0]['lr']template = ('Epoch:{:2d},Train_acc:{:.1f}%,Train_loss:{:.3f},Test_acc:{:.1f}%,Test_loss:{:.3f},Lr:{:.2E}')print(template.format(epoch+1,epoch_train_acc*100,epoch_train_loss,epoch_test_acc*100,epoch_test_loss,lr))print('Done')

Epoch: 1,Train_acc:35.4%,Train_loss:3.431,Test_acc:23.9%,Test_loss:3.033,Lr:1.00E-04

Epoch: 2,Train_acc:69.2%,Train_loss:0.899,Test_acc:26.5%,Test_loss:3.017,Lr:9.20E-05

Epoch: 3,Train_acc:74.1%,Train_loss:0.652,Test_acc:59.3%,Test_loss:1.278,Lr:9.20E-05

Epoch: 4,Train_acc:78.3%,Train_loss:0.587,Test_acc:67.3%,Test_loss:1.353,Lr:8.46E-05

Epoch: 5,Train_acc:82.5%,Train_loss:0.521,Test_acc:75.2%,Test_loss:0.829,Lr:8.46E-05

Epoch: 6,Train_acc:86.3%,Train_loss:0.433,Test_acc:66.4%,Test_loss:1.308,Lr:7.79E-05

Epoch: 7,Train_acc:90.3%,Train_loss:0.340,Test_acc:67.3%,Test_loss:1.600,Lr:7.79E-05

Epoch: 8,Train_acc:89.2%,Train_loss:0.374,Test_acc:71.7%,Test_loss:1.014,Lr:7.16E-05

Epoch: 9,Train_acc:88.7%,Train_loss:0.305,Test_acc:77.0%,Test_loss:0.841,Lr:7.16E-05

Epoch:10,Train_acc:90.7%,Train_loss:0.309,Test_acc:79.6%,Test_loss:1.094,Lr:6.59E-05

Epoch:11,Train_acc:91.8%,Train_loss:0.318,Test_acc:72.6%,Test_loss:0.976,Lr:6.59E-05

Epoch:12,Train_acc:93.6%,Train_loss:0.243,Test_acc:73.5%,Test_loss:1.209,Lr:6.06E-05

Epoch:13,Train_acc:94.7%,Train_loss:0.159,Test_acc:71.7%,Test_loss:0.947,Lr:6.06E-05

Epoch:14,Train_acc:98.0%,Train_loss:0.076,Test_acc:80.5%,Test_loss:0.707,Lr:5.58E-05

Epoch:15,Train_acc:97.8%,Train_loss:0.083,Test_acc:79.6%,Test_loss:0.923,Lr:5.58E-05

Epoch:16,Train_acc:98.2%,Train_loss:0.059,Test_acc:82.3%,Test_loss:0.650,Lr:5.13E-05

Epoch:17,Train_acc:98.5%,Train_loss:0.072,Test_acc:76.1%,Test_loss:0.828,Lr:5.13E-05

Epoch:18,Train_acc:98.0%,Train_loss:0.175,Test_acc:78.8%,Test_loss:0.834,Lr:4.72E-05

Epoch:19,Train_acc:94.2%,Train_loss:0.173,Test_acc:61.9%,Test_loss:2.606,Lr:4.72E-05

Epoch:20,Train_acc:96.2%,Train_loss:0.123,Test_acc:77.9%,Test_loss:0.959,Lr:4.34E-05

Epoch:21,Train_acc:96.5%,Train_loss:0.166,Test_acc:76.1%,Test_loss:1.266,Lr:4.34E-05

Epoch:22,Train_acc:96.9%,Train_loss:0.196,Test_acc:85.0%,Test_loss:0.698,Lr:4.00E-05

Epoch:23,Train_acc:98.7%,Train_loss:0.082,Test_acc:82.3%,Test_loss:0.626,Lr:4.00E-05

Epoch:24,Train_acc:96.0%,Train_loss:0.136,Test_acc:81.4%,Test_loss:0.805,Lr:3.68E-05

Epoch:25,Train_acc:98.5%,Train_loss:0.101,Test_acc:83.2%,Test_loss:0.576,Lr:3.68E-05

Epoch:26,Train_acc:98.5%,Train_loss:0.062,Test_acc:80.5%,Test_loss:0.597,Lr:3.38E-05

Epoch:27,Train_acc:99.6%,Train_loss:0.039,Test_acc:83.2%,Test_loss:0.574,Lr:3.38E-05

Epoch:28,Train_acc:99.3%,Train_loss:0.080,Test_acc:85.0%,Test_loss:0.758,Lr:3.11E-05

Epoch:29,Train_acc:99.6%,Train_loss:0.059,Test_acc:84.1%,Test_loss:0.608,Lr:3.11E-05

Epoch:30,Train_acc:98.9%,Train_loss:0.054,Test_acc:82.3%,Test_loss:0.753,Lr:2.86E-05

Epoch:31,Train_acc:98.9%,Train_loss:0.035,Test_acc:83.2%,Test_loss:0.617,Lr:2.86E-05

Epoch:32,Train_acc:98.7%,Train_loss:0.046,Test_acc:78.8%,Test_loss:0.847,Lr:2.63E-05

Epoch:33,Train_acc:98.9%,Train_loss:0.028,Test_acc:82.3%,Test_loss:0.746,Lr:2.63E-05

Epoch:34,Train_acc:99.6%,Train_loss:0.032,Test_acc:79.6%,Test_loss:0.629,Lr:2.42E-05

Epoch:35,Train_acc:99.3%,Train_loss:0.027,Test_acc:82.3%,Test_loss:0.597,Lr:2.42E-05

Epoch:36,Train_acc:99.6%,Train_loss:0.029,Test_acc:87.6%,Test_loss:0.488,Lr:2.23E-05

Epoch:37,Train_acc:99.6%,Train_loss:0.026,Test_acc:87.6%,Test_loss:0.552,Lr:2.23E-05

Epoch:38,Train_acc:99.1%,Train_loss:0.029,Test_acc:79.6%,Test_loss:0.572,Lr:2.05E-05

Epoch:39,Train_acc:99.8%,Train_loss:0.107,Test_acc:84.1%,Test_loss:0.704,Lr:2.05E-05

Epoch:40,Train_acc:99.1%,Train_loss:0.094,Test_acc:76.1%,Test_loss:0.772,Lr:1.89E-05

Done

9. 结果可视化

epochs_range = range(epochs)plt.figure(figsize = (12,3))plt.subplot(1,2,1)

plt.plot(epochs_range,train_acc,label = 'Training Accuracy')

plt.plot(epochs_range,test_acc,label = 'Test Accuracy')

plt.legend(loc = 'lower right')

plt.title('Training and Validation Accuracy')plt.subplot(1,2,2)

plt.plot(epochs_range,train_loss,label = 'Test Accuracy')

plt.plot(epochs_range,test_loss,label = 'Test Loss')

plt.legend(loc = 'lower right')

plt.title('Training and validation Loss')

plt.show()

10. 模型的保存

# 自定义模型保存

# 状态字典保存

torch.save(model.state_dict(),'./模型参数/J1_resnet50_model_state_dict.pth') # 仅保存状态字典# 加载状态字典到模型

best_model = ResNet50().to(device) # 定义官方vgg16模型用来加载参数best_model.load_state_dict(torch.load('./模型参数/J1_resnet50_model_state_dict.pth')) # 加载状态字典到模型

<All keys matched successfully>

11. 使用训练好的模型进行预测

# 指定路径图片预测

from PIL import Image

import torchvision.transforms as transformsclasses = list(total_data.class_to_idx) # classes = list(total_data.class_to_idx)def predict_one_image(image_path,model,transform,classes):test_img = Image.open(image_path).convert('RGB')# plt.imshow(test_img) # 展示待预测的图片test_img = transform(test_img)img = test_img.to(device).unsqueeze(0)model.eval()output = model(img)print(output) # 观察模型预测结果的输出数据_,pred = torch.max(output,1)pred_class = classes[pred]print(f'预测结果是:{pred_class}')

# 预测训练集中的某张照片

predict_one_image(image_path='./data/bird_photos/Bananaquit/007.jpg',model = model,transform = test_transforms,classes = classes)

tensor([[13.8683, 4.9143, 4.8488, 0.3971, -4.3269, -6.6820, -5.4773, -4.8104,-6.3061, -6.0159, -4.1786, -5.2478, -4.7984, -4.5901, -5.0879, -4.8318,-5.1802, -4.8213, -4.1029, -4.8857, -5.7887, -4.4304, -5.2544, -4.4558,-3.9174, -4.3528, -5.6769, -5.0493, -4.5999, -6.1482, -4.0200, -3.2741,-4.6080, -6.3622, -3.1413, -4.3438, -5.2021, -4.2925, -5.5243, -4.7640,-4.9537, -5.5491, -5.6634, -3.9548, -4.3976, -5.2634, -5.1851, -4.0045,-6.0956, -4.2346, -4.1718, -4.4907, -4.1201, -4.3656, -4.0707, -5.3282,-5.3788, -4.4176, -4.8130, -5.5919, -5.5178, -5.1131, -4.6648, -4.9041,-5.3978, -5.4855, -5.0189, -4.8627, -5.7097, -4.2608, -5.3921, -4.4241,-5.8152, -5.2379, -5.2549, -5.9455, -4.4937, -5.2863, -5.2548, -5.5048,-4.9770, -5.0247, -4.8115, -5.1978, -5.0874, -4.8657, -4.7004, -6.1652,-7.1067, -5.3773, -5.5511, -4.6870, -5.7534, -4.9669, -4.2131, -5.3542,-4.9979, -5.4464, -5.2461, -4.8821, -5.5314, -5.8627, -4.4703, -4.0505,-4.3990, -6.0654, -4.9767, -4.8933, -5.0749, -5.0969, -4.7121, -4.7877,-5.9138, -5.0609, -5.6325, -4.4758, -5.3832, -5.3308, -5.1663, -5.3744,-4.6568, -5.5547, -4.7015, -4.5332, -4.7048, -4.0575, -4.9354, -5.9719,-4.3700, -4.3375, -4.3835, -5.3360, -4.6949, -4.7043, -3.0128, -5.4335,-4.1490, -5.5024, -5.1213, -4.5514, -4.3888, -5.1336, -4.9035, -2.9031,-4.2921, -5.1654, -4.5989, -5.7990, -5.1267, -4.8723, -5.2425, -5.2648,-4.7031, -4.4419, -4.9500, -5.4625, -4.4614, -6.0281, -4.5982, -5.2243,-5.2080, -4.5609, -4.2382, -5.6643, -5.3306, -4.9993, -5.4132, -5.1988,-4.8110, -4.3645, -4.7883, -4.4945, -4.1371, -6.1615, -4.3348, -6.0694,-5.0832, -5.7544, -7.4370, -5.0871, -4.8265, -4.9912, -5.4170, -4.7337,-4.8869, -4.2867, -6.0734, -5.3458, -4.6136, -4.6427, -5.0670, -5.2674,-5.3138, -6.1891, -4.5351, -5.4272, -4.0822, -3.8868, -5.3524, -4.5716,-5.2943, -5.4524, -5.8091, -4.6113, -6.2336, -3.9732, -5.5085, -4.9551,-5.8190, -4.7829, -6.0425, -4.4670, -5.0961, -4.8832, -4.9884, -5.3502,-4.7928, -4.6363, -5.2002, -5.2305, -6.3564, -4.8475, -5.7222, -4.9972,-4.8803, -4.9031, -4.7486, -5.1633, -4.4830, -5.8250, -4.9781, -6.4707,-5.1635, -6.2186, -4.6911, -6.1466, -4.3560, -4.0647, -5.6964, -4.6971,-4.5650, -5.2661, -4.4342, -6.5263, -5.0083, -4.1184, -4.4144, -4.3142,-3.9152, -4.6833, -3.9448, -4.7297, -4.7736, -4.5994, -5.2097, -3.7666,-4.4968, -4.7199, -3.7594, -4.8945, -4.8153, -4.3126, -5.4928, -4.2669,-4.4810, -5.1798, -6.5569, -6.4180, -6.2858, -5.0941, -4.5702, -5.1153,-5.6136, -5.2451, -4.9205, -5.2890, -4.1699, -4.1866, -4.2302, -4.9986,-4.8075, -5.6134, -3.6785, -5.4610, -5.5478, -6.9582, -4.6375, -5.1448,-4.6614, -5.0536, -4.4895, -5.3882, -4.4433, -5.9130, -5.5548, -5.1081,-5.9129, -5.2355, -5.0405, -4.8093, -4.5147, -6.5717, -4.7978, -6.3876,-4.6591, -6.3384, -5.6406, -5.2991, -3.7750, -4.6432, -5.0256, -5.9266,-4.7254, -5.3511, -4.1279, -5.8108, -5.6086, -4.8933, -4.4658, -4.2604,-4.2203, -6.0904, -4.4941, -4.3299, -4.4338, -4.5120, -5.5362, -5.6916,-4.3631, -5.7946, -4.5226, -5.0334, -5.3108, -4.6992, -5.8546, -5.3448,-4.7411, -4.6165, -5.0561, -3.7938, -4.5349, -6.3409, -5.6717, -5.2238,-5.4950, -5.6322, -5.9336, -4.7374, -5.2193, -4.7341, -5.5894, -4.9538,-4.9265, -4.3776, -5.9501, -4.6434, -4.7603, -3.4144, -4.9672, -4.5454,-4.5984, -4.3427, -5.4971, -4.6948, -4.5390, -4.1352, -5.3147, -5.4603,-5.7149, -4.4007, -5.0655, -5.0953, -3.7071, -5.5659, -4.5470, -4.9357,-4.5709, -4.9261, -4.6197, -4.9192, -5.8589, -5.4089, -4.1649, -5.2944,-5.5576, -5.7486, -4.8553, -4.2282, -4.0010, -6.0522, -5.6085, -5.3450,-5.6549, -4.8746, -4.5044, -5.1996, -5.0918, -4.1159, -4.2053, -4.7821,-5.6509, -6.5732, -4.7520, -5.1714, -5.1409, -5.1455, -6.2418, -5.0210,-5.5984, -3.9693, -5.4372, -5.1220, -5.2193, -4.5396, -4.4132, -5.4393,-4.9878, -5.6195, -4.7220, -5.4855, -4.8570, -4.3293, -5.4886, -5.1216,-6.0905, -5.6525, -4.9218, -5.5533, -4.9065, -5.2826, -4.3511, -4.3716,-4.7577, -5.2228, -4.3568, -5.2613, -7.0981, -4.7413, -4.1612, -5.1176,-5.5265, -4.8816, -5.5066, -3.1811, -5.5949, -5.1209, -5.3330, -4.5807,-5.0738, -6.2544, -5.7920, -4.8863, -5.4842, -4.1021, -4.2780, -5.6989,-4.9062, -4.9241, -4.6026, -3.8766, -4.2304, -5.3913, -3.9213, -4.8042,-3.8525, -4.7163, -5.0682, -5.0261, -5.7655, -6.6659, -5.0076, -4.4935,-4.9612, -5.2125, -5.8215, -5.3559, -4.8470, -4.5751, -5.2267, -5.7606,-5.1162, -5.7072, -4.0790, -5.3081, -4.5741, -5.2311, -5.0682, -3.9425,-4.0050, -5.5297, -4.4969, -4.0187, -5.7901, -4.9107, -5.2692, -4.6438,-5.0574, -5.8231, -5.3713, -5.0068, -4.3541, -4.4603, -4.4939, -4.8495,-5.5300, -4.9960, -3.5204, -4.3364, -6.1883, -4.1293, -5.0230, -4.7546,-5.8216, -4.1616, -5.9442, -5.3490, -3.8164, -5.5285, -4.4659, -3.8073,-4.0800, -4.3338, -4.8140, -5.4170, -5.5654, -3.5721, -5.4222, -5.4133,-5.3061, -5.6210, -5.0620, -4.9480, -5.4236, -5.0383, -4.5365, -6.5556,-4.9221, -5.6572, -5.3458, -4.0751, -3.5474, -5.1584, -5.4831, -5.0386,-5.1952, -5.7538, -5.2630, -4.6680, -5.3158, -4.4897, -5.2653, -5.6185,-5.2754, -4.9732, -3.6142, -3.3807, -5.9308, -3.3762, -4.5909, -4.8592,-5.4307, -5.8186, -3.1528, -5.8814, -4.3251, -5.4645, -5.7290, -5.5545,-6.9818, -4.6826, -5.2847, -5.2316, -5.3749, -5.5513, -5.0912, -6.0087,-5.0094, -5.2834, -5.9248, -4.2985, -5.6460, -4.0210, -5.0835, -5.0239,-4.2798, -4.0711, -3.0299, -4.4249, -3.8580, -5.4938, -6.1500, -6.2026,-5.5107, -4.6297, -5.2490, -4.1693, -5.5621, -5.3462, -4.4608, -5.4131,-4.8175, -4.7294, -5.3795, -4.7759, -4.9308, -4.6114, -4.8512, -5.6910,-4.5780, -6.0506, -4.5082, -5.0415, -5.1643, -4.3208, -5.7007, -5.4402,-5.0097, -3.7647, -5.9034, -4.9685, -4.2927, -3.3002, -6.0967, -4.7784,-5.0862, -4.9967, -5.5023, -4.9356, -5.8725, -4.7621, -4.8660, -5.6542,-4.1783, -5.8236, -4.4004, -5.2087, -6.2577, -5.9747, -3.9065, -4.9602,-4.9865, -6.0940, -5.1763, -4.1279, -4.7915, -4.5835, -4.4909, -5.0032,-4.4306, -5.1279, -5.9237, -5.6016, -4.1580, -5.8993, -5.5826, -5.1257,-5.2317, -5.4553, -5.0349, -5.3762, -4.8476, -5.7886, -4.9892, -5.3761,-6.2784, -4.3499, -5.6478, -5.4893, -3.0633, -4.5597, -5.5055, -5.4887,-5.8181, -5.6683, -6.3172, -4.5253, -5.2920, -4.5857, -5.5727, -4.5398,-4.7678, -5.5095, -3.8584, -5.2461, -5.6084, -4.6665, -5.4107, -4.8152,-5.4975, -5.0608, -4.3795, -6.9169, -5.0226, -5.4908, -5.5358, -5.0029,-5.5313, -4.9354, -4.8460, -4.9901, -4.7630, -4.8377, -5.0652, -5.9628,-5.9703, -5.4385, -4.6380, -5.6674, -5.3192, -4.4605, -3.6204, -5.3540,-7.2942, -4.0140, -5.5212, -4.0053, -3.5999, -5.2319, -5.9446, -5.6247,-4.3006, -5.1588, -4.5104, -3.9837, -5.1592, -4.2998, -6.2257, -5.3139,-4.5336, -5.6970, -4.5432, -5.0471, -5.1169, -5.7729, -5.3915, -3.4912,-4.6369, -5.2365, -6.0601, -5.3651, -5.0499, -5.1211, -4.7336, -6.7476,-5.6456, -5.0389, -4.7289, -5.5121, -2.9521, -4.6852, -5.8838, -5.6147,-4.9222, -4.7334, -5.0473, -5.3684, -4.3785, -4.4092, -5.7249, -5.1093,-4.9247, -4.0973, -5.2757, -5.0103, -5.3298, -5.2246, -5.2376, -5.0409,-5.3682, -4.4103, -5.2739, -5.7753, -5.3121, -5.3229, -4.7973, -5.3419,-4.0869, -5.5489, -6.0253, -4.6384, -4.7001, -5.3798, -5.4072, -4.6274,-5.2741, -6.1363, -4.6067, -5.3099, -5.0059, -5.1699, -4.9767, -5.2467,-5.0942, -4.4623, -5.6662, -4.6116, -5.3887, -4.7994, -4.1454, -2.2531,-5.6628, -5.2896, -4.7683, -6.1704, -5.5401, -7.0299, -5.1669, -5.5821,-4.7684, -5.2925, -4.6315, -4.8803, -5.1687, -5.2941, -4.9299, -4.8190,-5.4344, -4.7551, -4.7198, -5.1019, -5.7492, -6.1550, -5.6996, -4.4132,-6.2275, -5.4198, -4.8401, -5.1504, -5.1971, -5.7264, -4.6849, -5.0585,-3.8531, -3.6110, -4.3900, -4.0146, -5.6333, -5.7491, -4.2836, -4.9333,-5.2104, -4.4378, -4.6586, -6.6317, -5.3482, -4.9060, -4.0507, -4.3850,-4.3232, -4.4060, -4.6808, -6.1481, -5.0341, -5.0642, -4.2104, -4.4937,-5.8942, -5.7710, -5.0782, -4.6604, -6.3730, -7.0023, -5.4708, -6.7699,-4.6094, -5.3579, -6.3511, -5.2445, -3.5320, -4.9982, -5.1565, -5.1640,-5.4871, -3.6589, -3.9923, -5.0592, -4.5019, -5.9001, -4.1611, -4.6703,-5.1029, -2.9716, -5.3578, -5.1678, -4.0690, -6.2209, -4.3308, -4.6219,-5.7228, -3.6582, -4.6460, -5.5739, -4.9900, -5.2182, -5.1610, -4.2914,-3.4296, -4.7841, -5.4836, -4.0942, -5.3494, -4.3294, -6.1656, -5.4277,-5.9730, -3.6229, -5.0981, -5.5474, -5.6498, -4.2508, -3.6384, -4.9230,-5.5150, -3.9948, -5.5786, -4.2315, -4.3435, -5.1986, -4.8257, -4.2392,-4.6649, -5.5955, -5.2190, -4.8896, -5.6084, -5.1639, -5.6644, -4.2302,-4.6478, -5.2653, -5.4640, -4.5797, -6.1926, -4.3489, -5.2554, -4.2098,-5.5954, -4.5482, -4.8853, -5.3986, -4.9047, -4.2353, -4.8603, -5.6714,-5.5819, -5.5323, -4.5244, -3.7890, -5.6494, -5.6447, -4.6866, -4.5489,-5.4129, -5.2079, -5.7100, -5.2953, -4.0829, -5.3427, -4.6995, -5.5088,-5.3798, -5.3735, -4.4773, -5.0795, -5.4732, -5.3964, -5.0798, -4.6644,-5.7256, -6.7874, -4.4255, -4.7243, -4.0838, -4.5000, -5.7769, -5.8769,-5.8356, -5.6986, -4.8260, -4.9483, -3.8793, -4.4843, -4.7780, -2.8808,-5.0341, -5.5861, -4.7410, -4.6428, -6.2251, -4.2188, -3.2221, -5.4640,-5.5350, -3.3220, -5.3559, -5.2414, -5.0133, -3.9686, -5.3160, -4.1124]],device='cuda:0', grad_fn=<AddmmBackward0>)

预测结果是:Bananaquit

classes

['Bananaquit', 'Black Skimmer', 'Black Throated Bushtiti', 'Cockatoo']

相关文章:

深度学习每周学习总结J1(ResNet-50算法实战与解析 - 鸟类识别)

🍨 本文为🔗365天深度学习训练营 中的学习记录博客🍖 原作者:K同学啊 | 接辅导、项目定制 目录 0. 总结1. 设置GPU2. 导入数据及处理部分3. 划分数据集4. 模型构建部分5. 设置超参数:定义损失函数,学习率&a…...

商家营销工具架构升级总结

今年以来,商家营销工具业务需求井喷,需求数量多且耗时都比较长,技术侧面临很大的压力。因此这篇文章主要讨论营销工具前端要如何应对这样大规模的业务需求。 问题拆解 我们核心面对的问题主要如下: 1. 人力有限 我们除了要支撑存量…...

移动硬盘无法读取:问题解析与高效数据恢复实战

一、移动硬盘无法读取的困扰 在数字化时代,移动硬盘作为数据存储和传输的重要媒介,承载着大量珍贵的数据资源。然而,当移动硬盘突然无法读取时,我们往往会陷入深深的困扰之中。这种无法读取的现象可能表现为插入电脑后毫无反应、…...

20241005给荣品RD-RK3588-AHD开发板刷Rockchip原厂的Android12时使用iperf3测网速

20241005给荣品RD-RK3588-AHD开发板刷Rockchip原厂的Android12时使用iperf3测网速 2024/10/5 14:06 对于荣品RD-RK3588-AHD开发板,eth1位置上的PCIE转RJ458的以太网卡是默认好用的! PCIE TO RJ45:RTL8111HS 被识别成为eth0了。inet addr:192.…...

node配置swagger

安装swagger npm install swagger-jsdoc swagger-ui-express 创建 swagger.js 配置文件 const path require(path); const express require(express); const swaggerUI require(swagger-ui-express); const swaggerJsDoc require(swagger-jsdoc); // 修改 swaggerDoc…...

MATLAB plot画线的颜色 形状

文章目录 前言一、MATLAB plot画线的颜色 形状?颜色选项标记选项示例代码详细说明 总结 前言 提示:这里可以添加本文要记录的大概内容: 项目需要: 提示:以下是本篇文章正文内容,下面案例可供参考 一、MA…...

Goland使用SSH远程Linux进行断点调试 (兼容私有库)

① 前置需求 ssh远程的 Linux 服务器必须安装 高于本地的 Go推荐golang 安装方式使用 apt yum snap 等系统自管理方式,(要安装最新版本的可以找找第三方源),如无特殊需求不要自行编译安装golang ② Goland设置 2.1、设置项处理…...

)

LLM | Ollama WebUI 安装使用(pip 版)

Open WebUI (Formerly Ollama WebUI) 也可以通过 docker 来安装使用 1. 详细步骤 1.1 安装 Open WebUI # 官方建议使用 python3.11(2024.09.27),conda 的使用参考其他文章 conda create -n open-webui python3.11 conda activate open-web…...

Three.js基础内容(一)

目录 一、几何体顶点和模型 1.1、点模型对象(Points)渲染顶点数据 1.2、线模型(Line)渲染顶点数据(画个心) 1.3、网格模型(Mesh)渲染顶点数据(三角形概念) 1.4、构建一个矩形平面几何体 1.5、几何顶点索引数据 1.6、顶点法线数据 1.7、查看three…...

网站建设制作需要注意

网站建设制作不仅仅是简单的技术活,更是一个企业或个人在互联网上展示自己形象和实力的重要手段。本文将探讨网站建设制作的重要性、步骤和关键要素。 1. 网站建设的重要性 1.1 品牌形象与宣传 一个精心设计的网站能够突显企业或个人的品牌形象,传递清晰…...

【Python】Uvicorn:Python 异步 ASGI 服务器详解

Uvicorn 是一个为 Python 设计的 ASGI(异步服务器网关接口)Web 服务器。它填补了 Python 在异步框架中缺乏一个最小化低层次服务器/应用接口的空白。Uvicorn 支持 HTTP/1.1 和 WebSockets,是构建现代异步Web应用的强大工具。 ⭕️宇宙起点 &a…...

类型转换【C++提升】(隐式转换、显式转换、自定义转换、转换构造函数、转换运算符重载......你想知道的全都有)

更多精彩内容..... 🎉❤️播主の主页✨😘 Stark、-CSDN博客 本文所在专栏: C系列语法知识_Stark、的博客-CSDN博客 座右铭:梦想是一盏明灯,照亮我们前行的路,无论风雨多大,我们都要坚持不懈。 一…...

微信小程序hbuilderx+uniapp+Android 新农村综合风貌旅游展示平台

目录 项目介绍支持以下技术栈:具体实现截图HBuilderXuniappmysql数据库与主流编程语言java类核心代码部分展示登录的业务流程的顺序是:数据库设计性能分析操作可行性技术可行性系统安全性数据完整性软件测试详细视频演示源码获取方式 项目介绍 小程序端…...

【AI大模型】使用Embedding API

一、使用OpenAI API 目前GPT embedding mode有三种,性能如下所示: 模型每美元页数MTEB得分MIRACL得分text-embedding-3-large9,61554.964.6text-embedding-3-small62,50062.344.0text-embedding-ada-00212,50061.031.4 MTEB得分为embedding model分类…...

面试速通宝典——11

188. 总结static的应用和作用 函数体内static变量的作用范围为该函数体,不同于auto变量,该变量的内存只被分配一次,因此其值在下次调用时仍维持上次的值。在模块内的static全局变量可以被模块内所用函数访问,但不能被模块外其他函…...

python:reportlab 将多个图片合并成一个PDF文件

承上一篇:java:pdfbox 3.0 去除扫描版PDF中文本水印 # 导出扫描版PDF文件中每页的图片文件 java -jar pdfbox-app-3.0.3.jar export:images -prefixtest -i your_book.pdf 导出 Writing image: test-1.jpg Writing image: test-2.jpg Writing image: t…...

决策树:机器学习中的强大工具

什么是决策树? 决策树是一种通过树状结构进行决策的模型。它的每个节点代表一个特征(或属性),每个分支代表特征的可能值,而每个叶子节点则代表最终的决策结果或分类。想象一下,在选择晚餐时,你…...

平面电磁波(解麦克斯韦方程)电场相位是复数的积分常数,电场矢量每个分量都有一个相位。磁场相位和电场一样,这是因为无损介质中实数的波阻抗

注意无源代表你立方程那个点xyzt处没有源,电场磁场也是这个点的。 j电流面密度,电流除以单位面积,ρ电荷体密度,电荷除以单位体积。 j方程组有16个未知数,每个矢量有三个xyz分量,即三个未知数,…...

复习HTML(进阶)

前言 上一篇的最后我介绍了在表单中,上传文件需要使用到 method属性 和enctype属性。本篇博客主要是详细的介绍这些知识 <form action"http://localhost:8080/test" method"post" enctype"multipart/form-data"> method属性…...

Qt 每日面试题 -7

61、如何安全的在另外一个线程中调用QObject对象的接口 QObject被设计成在一个单线程中创建与使用,因此,在一个线程中创建一个对象,而在另外的线程中调用它的函数,这样的行为不能保证工作良好。使用信号槽的队列连接或者QT的反射…...

设计模式和设计原则回顾

设计模式和设计原则回顾 23种设计模式是设计原则的完美体现,设计原则设计原则是设计模式的理论基石, 设计模式 在经典的设计模式分类中(如《设计模式:可复用面向对象软件的基础》一书中),总共有23种设计模式,分为三大类: 一、创建型模式(5种) 1. 单例模式(Sing…...

利用ngx_stream_return_module构建简易 TCP/UDP 响应网关

一、模块概述 ngx_stream_return_module 提供了一个极简的指令: return <value>;在收到客户端连接后,立即将 <value> 写回并关闭连接。<value> 支持内嵌文本和内置变量(如 $time_iso8601、$remote_addr 等)&a…...

day52 ResNet18 CBAM

在深度学习的旅程中,我们不断探索如何提升模型的性能。今天,我将分享我在 ResNet18 模型中插入 CBAM(Convolutional Block Attention Module)模块,并采用分阶段微调策略的实践过程。通过这个过程,我不仅提升…...

解决Ubuntu22.04 VMware失败的问题 ubuntu入门之二十八

现象1 打开VMware失败 Ubuntu升级之后打开VMware上报需要安装vmmon和vmnet,点击确认后如下提示 最终上报fail 解决方法 内核升级导致,需要在新内核下重新下载编译安装 查看版本 $ vmware -v VMware Workstation 17.5.1 build-23298084$ lsb_release…...

关于nvm与node.js

1 安装nvm 安装过程中手动修改 nvm的安装路径, 以及修改 通过nvm安装node后正在使用的node的存放目录【这句话可能难以理解,但接着往下看你就了然了】 2 修改nvm中settings.txt文件配置 nvm安装成功后,通常在该文件中会出现以下配置&…...

DAY 47

三、通道注意力 3.1 通道注意力的定义 # 新增:通道注意力模块(SE模块) class ChannelAttention(nn.Module):"""通道注意力模块(Squeeze-and-Excitation)"""def __init__(self, in_channels, reduction_rat…...

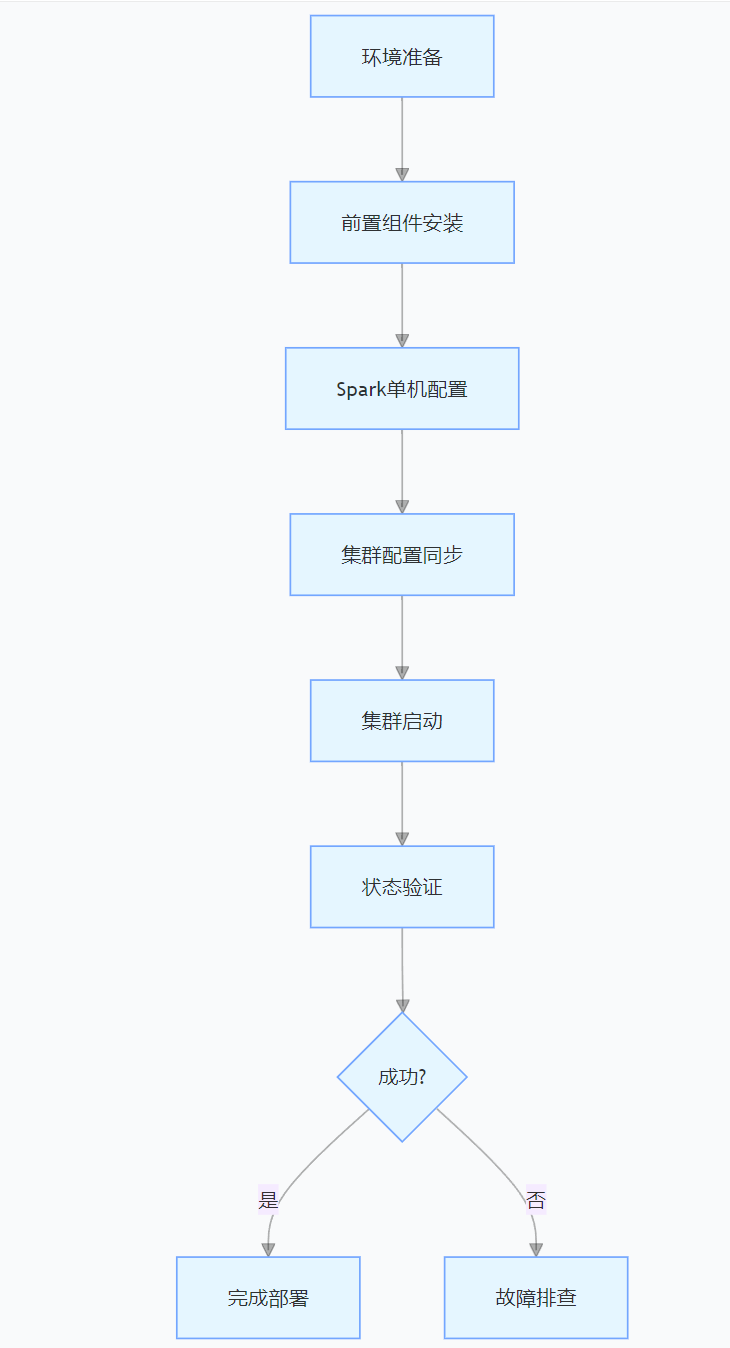

CentOS下的分布式内存计算Spark环境部署

一、Spark 核心架构与应用场景 1.1 分布式计算引擎的核心优势 Spark 是基于内存的分布式计算框架,相比 MapReduce 具有以下核心优势: 内存计算:数据可常驻内存,迭代计算性能提升 10-100 倍(文档段落:3-79…...

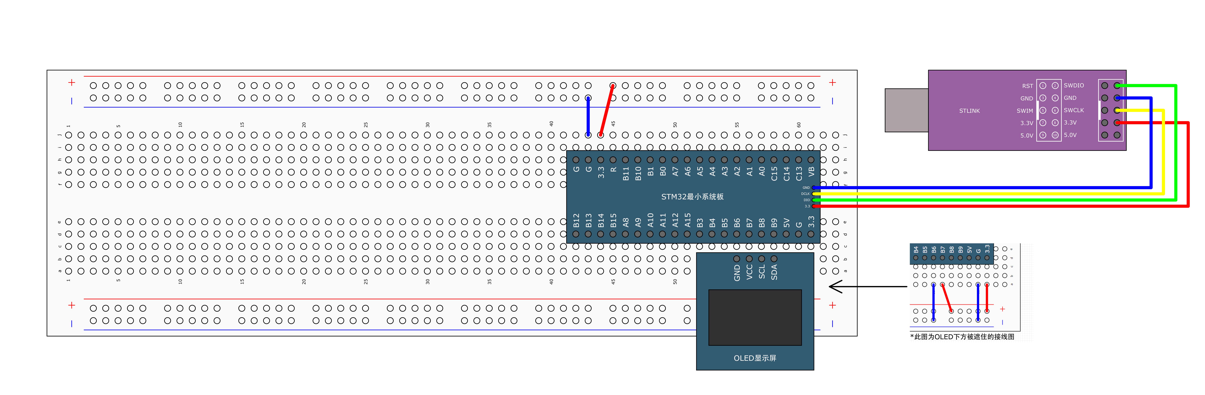

STM32标准库-DMA直接存储器存取

文章目录 一、DMA1.1简介1.2存储器映像1.3DMA框图1.4DMA基本结构1.5DMA请求1.6数据宽度与对齐1.7数据转运DMA1.8ADC扫描模式DMA 二、数据转运DMA2.1接线图2.2代码2.3相关API 一、DMA 1.1简介 DMA(Direct Memory Access)直接存储器存取 DMA可以提供外设…...

学校招生小程序源码介绍

基于ThinkPHPFastAdminUniApp开发的学校招生小程序源码,专为学校招生场景量身打造,功能实用且操作便捷。 从技术架构来看,ThinkPHP提供稳定可靠的后台服务,FastAdmin加速开发流程,UniApp则保障小程序在多端有良好的兼…...

【HTML-16】深入理解HTML中的块元素与行内元素

HTML元素根据其显示特性可以分为两大类:块元素(Block-level Elements)和行内元素(Inline Elements)。理解这两者的区别对于构建良好的网页布局至关重要。本文将全面解析这两种元素的特性、区别以及实际应用场景。 1. 块元素(Block-level Elements) 1.1 基本特性 …...