Kylin Server V10 下自动安装并配置Kafka

Kafka是一个分布式的、分区的、多副本的消息发布-订阅系统,它提供了类似于JMS的特性,但在设计上完全不同,它具有消息持久化、高吞吐、分布式、多客户端支持、实时等特性,适用于离线和在线的消息消费,如常规的消息收集、网站活性采集、聚合统计系统运营数据(监测数据)、日志收集等大量数据的互联网服务的数据收集场景。

1. 查看操作系统信息

[root@localhost ~]# cat /etc/.kyinfo

[dist]

name=Kylin

milestone=Server-V10-GFB-Release-ZF9_01-2204-Build03

arch=arm64

beta=False

time=2023-01-09 11:04:36

dist_id=Kylin-Server-V10-GFB-Release-ZF9_01-2204-Build03-arm64-2023-01-09 11:04:36

[servicekey]

key=0080176

[os]

to=

term=2024-05-16

2. 编辑setup.sh安装脚本

#!/bin/bash

###########################################################################################

# @programe : setup.sh

# @version : 3.8.1

# @function@ :

# @campany :

# @dep. :

# @writer : Liu Cheng ji

# @phone : 18037139992

# @date : 2024-09-24

############################################################################################getent group kafka >/dev/null || groupadd -r kafka

getent passwd kafka >/dev/null || useradd -r -g kafka -d /var/lib/kafka \-s /sbin/nologin -c "Kafka user" kafkatar -zxvf kafka.tar.gz -C /usr/local/

mkdir /usr/local/kafka/logs -p

cp -f ./config/server.properties /usr/local/kafka/config/

chown -R kafka:kafka /usr/local/kafkacp ./config/kafka.sh /etc/profile.d/

chown root:root /etc/profile.d/kafka.sh

chmod 644 /etc/profile.d/kafka.sh

source /etc/profilecp ./config/kafka.service /usr/lib/systemd/system/

chown root:root /usr/lib/systemd/system/kafka.service

chmod 644 /usr/lib/systemd/system/kafka.servicecp ./config/kafka.conf /usr/lib/tmpfiles.d/

chown root:root /usr/lib/tmpfiles.d/kafka.conf

chmod 0644 /usr/lib/tmpfiles.d/kafka.conf

systemd-tmpfiles --create /usr/lib/tmpfiles.d/kafka.confsystemctl unmask kafka.service

systemctl daemon-reload

service_power_on_status=`systemctl is-enabled kafka`

if [ $service_power_on_status != 'enabled' ]; thensystemctl enable kafka.service

fiecho "+--------------------------------------------------------------------------------------------------------------+"

echo "| Kafka 3.8.1 Install Sucesses |"

echo "+--------------------------------------------------------------------------------------------------------------+"3. 设置环境变量配置文件kafka.sh

export KAFKA_HOME=/usr/local/kafka

export PATH=$KAFKA_HOME/bin:$PATH

4. 设置kafka.service文件

[Unit]

Requires=zookeeper.service

After=zookeeper.service[Service]

Type=simple

LimitNOFILE=1048576

ExecStart=/usr/local/kafka/bin/kafka-server-start.sh /usr/local/kafka/config/server.properties

ExecStop=/usr/local/kafka/bin/kafka-server-stop.sh

Restart=Always[Install]

WantedBy=multi-user.target

5. 编写 kafka.conf 文件

d /var/lib/kafka 0775 kafka kafka -

6. 根据需要优化设置server.properties文件

# Licensed to the Apache Software Foundation (ASF) under one or more

# contributor license agreements. See the NOTICE file distributed with

# this work for additional information regarding copyright ownership.

# The ASF licenses this file to You under the Apache License, Version 2.0

# (the "License"); you may not use this file except in compliance with

# the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.#

# This configuration file is intended for use in ZK-based mode, where Apache ZooKeeper is required.

# See kafka.server.KafkaConfig for additional details and defaults

############################## Server Basics ############################## The id of the broker. This must be set to a unique integer for each broker.

broker.id=0############################# Socket Server Settings ############################## The address the socket server listens on. If not configured, the host name will be equal to the value of

# java.net.InetAddress.getCanonicalHostName(), with PLAINTEXT listener name, and port 9092.

# FORMAT:

# listeners = listener_name://host_name:port

# EXAMPLE:

# listeners = PLAINTEXT://your.host.name:9092

listeners=PLAINTEXT://:15903# Listener name, hostname and port the broker will advertise to clients.

# If not set, it uses the value for "listeners".

advertised.listeners=PLAINTEXT://your.host.name:15903# Maps listener names to security protocols, the default is for them to be the same. See the config documentation for more details

#listener.security.protocol.map=PLAINTEXT:PLAINTEXT,SSL:SSL,SASL_PLAINTEXT:SASL_PLAINTEXT,SASL_SSL:SASL_SSL# The number of threads that the server uses for receiving requests from the network and sending responses to the network

num.network.threads=128# The number of threads that the server uses for processing requests, which may include disk I/O

num.io.threads=65# The send buffer (SO_SNDBUF) used by the socket server

socket.send.buffer.bytes=102400# The receive buffer (SO_RCVBUF) used by the socket server

socket.receive.buffer.bytes=102400# The maximum size of a request that the socket server will accept (protection against OOM)

socket.request.max.bytes=104857600############################# Log Basics ############################## A comma separated list of directories under which to store log files

log.dirs=/usr/local/kafka/logs# The default number of log partitions per topic. More partitions allow greater

# parallelism for consumption, but this will also result in more files across

# the brokers.

num.partitions=1# The number of threads per data directory to be used for log recovery at startup and flushing at shutdown.

# This value is recommended to be increased for installations with data dirs located in RAID array.

num.recovery.threads.per.data.dir=1############################# Internal Topic Settings #############################

# The replication factor for the group metadata internal topics "__consumer_offsets" and "__transaction_state"

# For anything other than development testing, a value greater than 1 is recommended to ensure availability such as 3.

offsets.topic.replication.factor=1

transaction.state.log.replication.factor=1

transaction.state.log.min.isr=1############################# Log Flush Policy ############################## Messages are immediately written to the filesystem but by default we only fsync() to sync

# the OS cache lazily. The following configurations control the flush of data to disk.

# There are a few important trade-offs here:

# 1. Durability: Unflushed data may be lost if you are not using replication.

# 2. Latency: Very large flush intervals may lead to latency spikes when the flush does occur as there will be a lot of data to flush.

# 3. Throughput: The flush is generally the most expensive operation, and a small flush interval may lead to excessive seeks.

# The settings below allow one to configure the flush policy to flush data after a period of time or

# every N messages (or both). This can be done globally and overridden on a per-topic basis.# The number of messages to accept before forcing a flush of data to disk

#log.flush.interval.messages=10000# The maximum amount of time a message can sit in a log before we force a flush

#log.flush.interval.ms=1000############################# Log Retention Policy ############################## The following configurations control the disposal of log segments. The policy can

# be set to delete segments after a period of time, or after a given size has accumulated.

# A segment will be deleted whenever *either* of these criteria are met. Deletion always happens

# from the end of the log.# The minimum age of a log file to be eligible for deletion due to age

log.retention.hours=168# A size-based retention policy for logs. Segments are pruned from the log unless the remaining

# segments drop below log.retention.bytes. Functions independently of log.retention.hours.

#log.retention.bytes=1073741824# The maximum size of a log segment file. When this size is reached a new log segment will be created.

#log.segment.bytes=1073741824# The interval at which log segments are checked to see if they can be deleted according

# to the retention policies

log.retention.check.interval.ms=300000############################# Zookeeper ############################## Zookeeper connection string (see zookeeper docs for details).

# This is a comma separated host:port pairs, each corresponding to a zk

# server. e.g. "127.0.0.1:3000,127.0.0.1:3001,127.0.0.1:3002".

# You can also append an optional chroot string to the urls to specify the

# root directory for all kafka znodes.

zookeeper.connect=localhost:15902# Timeout in ms for connecting to zookeeper

zookeeper.connection.timeout.ms=18000############################# Group Coordinator Settings ############################## The following configuration specifies the time, in milliseconds, that the GroupCoordinator will delay the initial consumer rebalance.

# The rebalance will be further delayed by the value of group.initial.rebalance.delay.ms as new members join the group, up to a maximum of max.poll.interval.ms.

# The default value for this is 3 seconds.

# We override this to 0 here as it makes for a better out-of-the-box experience for development and testing.

# However, in production environments the default value of 3 seconds is more suitable as this will help to avoid unnecessary, and potentially expensive, rebalances during application startup.

group.initial.rebalance.delay.ms=0

7. 配置说明

相关文章:

Kylin Server V10 下自动安装并配置Kafka

Kafka是一个分布式的、分区的、多副本的消息发布-订阅系统,它提供了类似于JMS的特性,但在设计上完全不同,它具有消息持久化、高吞吐、分布式、多客户端支持、实时等特性,适用于离线和在线的消息消费,如常规的消息收集、…...

windows环境下cmd窗口打开就进入到对应目录,一般人都不知道~

前言 很久以前,我还在上一家公司的时候,有一次我看到我同事打开cmd窗口的方式,瞬间把我惊呆了。原来他打开cmd窗口的方式,不是一般的在开始里面输入cmd,然后打开cmd窗口。而是另外一种方式。 我这个同事是个技术控&a…...

企微SCRM价格解析及其性价比分析

内容概要 在如今的数字化时代,企业对于客户关系管理的需求日益增长,而企微SCRM(Social Customer Relationship Management)作为一款新兴的客户管理工具,正好满足了这一需求。本文旨在为大家深入解析企微SCRM的价格体系…...

【SpringMVC】记录一次Bug——mvc:resources设置静态资源不过滤导致WEB-INF下的资源无法访问

SpringMVC 记录一次bug 其实都是小毛病,但是为了以后再出毛病,记录一下: mvc:resources设置静态资源不过滤问题 SpringMVC中配置的核心Servlet——DispatcherServlet,为了可以拦截到所有的请求(JSP页面除外…...

【React】React 生命周期完全指南

🌈个人主页: 鑫宝Code 🔥热门专栏: 闲话杂谈| 炫酷HTML | JavaScript基础 💫个人格言: "如无必要,勿增实体" 文章目录 React 生命周期完全指南一、生命周期概述二、生命周期的三个阶段2.1 挂载阶段&a…...

【NLP】使用 SpaCy、ollama 创建用于命名实体识别的合成数据集

命名实体识别 (NER) 是自然语言处理 (NLP) 中的一项重要任务,用于自动识别和分类文本中的实体,例如人物、位置、组织等。尽管它很重要,但手动注释大型数据集以进行 NER 既耗时又费钱。受本文 ( https://huggingface.co/blog/synthetic-data-s…...

【C++练习】二进制到十进制的转换器

题目:二进制到十进制的转换器 描述 编写一个程序,将用户输入的8位二进制数转换成对应的十进制数并输出。如果用户输入的二进制数不是8位,则程序应提示用户输入无效,并终止运行。 要求 程序应首先提示用户输入一个8位二进制数。…...

Vue功能菜单的异步加载、动态渲染

实际的Vue应用中,常常需要提供功能菜单,例如:文件下载、用户注册、数据采集、信息查询等等。每个功能菜单项,对应某个.vue组件。下面的代码,提供了一种独特的异步加载、动态渲染功能菜单的构建方法: <s…...

)

云技术基础学习(一)

内容预览 ≧∀≦ゞ 声明导语云技术历史 云服务概述云服务商与部署模式1. 公有云服务商2. 私有云部署3. 混合云模式 云服务分类1. 基础设施即服务(IaaS)2. 平台即服务(PaaS)3. 软件即服务(SaaS) 云架构云架构…...

【优选算法篇】微位至简,数之恢宏——解构 C++ 位运算中的理与美

文章目录 C 位运算详解:基础题解与思维分析前言第一章:位运算基础应用1.1 判断字符是否唯一(easy)解法(位图的思想)C 代码实现易错点提示时间复杂度和空间复杂度 1.2 丢失的数字(easy࿰…...

MFC工控项目实例二十九主对话框调用子对话框设定参数值

在主对话框调用子对话框设定参数值,使用theApp变量实现。 子对话框各参数变量 CString m_strTypeName; CString m_strBrand; CString m_strRemark; double m_edit_min; double m_edit_max; double m_edit_time2; double …...

Java | Leetcode Java题解之第546题移除盒子

题目: 题解: class Solution {int[][][] dp;public int removeBoxes(int[] boxes) {int length boxes.length;dp new int[length][length][length];return calculatePoints(boxes, 0, length - 1, 0);}public int calculatePoints(int[] boxes, int l…...

【前端】Svelte:响应性声明

Svelte 的响应性声明机制简化了动态更新 UI 的过程,让开发者不需要手动追踪数据变化。通过 $ 前缀与响应式声明语法,Svelte 能够自动追踪依赖关系,实现数据变化时的自动重新渲染。在本教程中,我们将详细探讨 Svelte 的响应性声明机…...

PostgreSQL 性能优化全方位指南:深度提升数据库效率

PostgreSQL 性能优化全方位指南:深度提升数据库效率 别忘了请点个赞收藏关注支持一下博主喵!!! 在现代互联网应用中,数据库性能优化是系统优化中至关重要的一环,尤其对于数据密集型和高并发的应用而言&am…...

Flutter鸿蒙next 使用 BLoC 模式进行状态管理详解

1. 引言 在 Flutter 中,随着应用规模的扩大,管理应用中的状态变得越来越复杂。为了处理这种复杂性,许多开发者选择使用不同的状态管理方案。其中,BLoC(Business Logic Component)模式作为一种流行的状态管…...

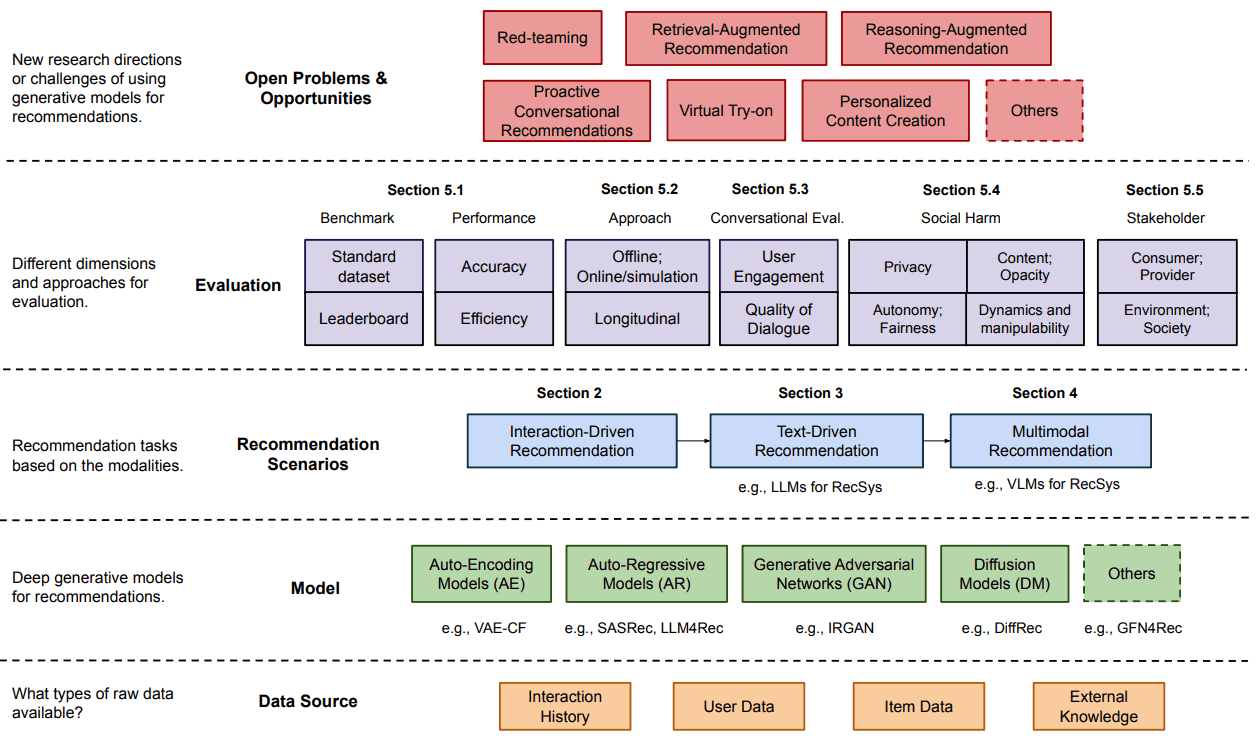

Gen-RecSys——一个通过生成和大规模语言模型发展起来的推荐系统

概述 生成模型的进步对推荐系统的发展产生了重大影响。传统的推荐系统是 “狭隘的专家”,只能捕捉特定领域内的用户偏好和项目特征,而现在生成模型增强了这些系统的功能,据报道,其性能优于传统方法。这些模型为推荐的概念和实施带…...

Android 重新定义一个广播修改系统时间,避免系统时间混乱

有时候,搞不懂为什么手机设备无法准确定义系统时间,出现混乱或显示与实际不符,需要重置或重新设定一次才行,也是真的够无语的!! vendor/mediatek/proprietary/packages/apps/MtkSettings/AndroidManifest.…...

第3章:角色扮演提示-Claude应用开发教程

更多教程,请访问claude应用开发教程 设置 运行以下设置单元以加载您的 API 密钥并建立 get_completion 辅助函数。 !pip install anthropic# Import pythons built-in regular expression library import re import anthropic# Retrieve the API_KEY & MODEL…...

【FAQ】HarmonyOS SDK 闭源开放能力 —Vision Kit

1.问题描述: 人脸活体检测页面会有声音提示,如何控制声音开关? 解决方案: 活体检测暂无声音控制开关,但可通过其他能力控制系统音量,从而控制音量。 活体检测页面固定音频流设置的是8(无障碍…...

【问题解决】Tomcat由低于8版本升级到高版本使用Tomcat自带连接池报错无法找到表空间的问题

问题复现 项目上历史项目为解决漏洞扫描从Tomcat 6.0升级到了9.0版本,服务启动的日志显示如下警告,数据源是通过JNDI方式在server.xml中配置的,控制台上狂刷无法找到表空间的错误(没截图) 报错: 06-Nov-…...

测试微信模版消息推送

进入“开发接口管理”--“公众平台测试账号”,无需申请公众账号、可在测试账号中体验并测试微信公众平台所有高级接口。 获取access_token: 自定义模版消息: 关注测试号:扫二维码关注测试号。 发送模版消息: import requests da…...

vscode里如何用git

打开vs终端执行如下: 1 初始化 Git 仓库(如果尚未初始化) git init 2 添加文件到 Git 仓库 git add . 3 使用 git commit 命令来提交你的更改。确保在提交时加上一个有用的消息。 git commit -m "备注信息" 4 …...

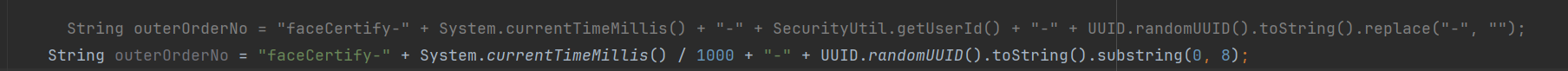

调用支付宝接口响应40004 SYSTEM_ERROR问题排查

在对接支付宝API的时候,遇到了一些问题,记录一下排查过程。 Body:{"datadigital_fincloud_generalsaas_face_certify_initialize_response":{"msg":"Business Failed","code":"40004","sub_msg…...

质量体系的重要

质量体系是为确保产品、服务或过程质量满足规定要求,由相互关联的要素构成的有机整体。其核心内容可归纳为以下五个方面: 🏛️ 一、组织架构与职责 质量体系明确组织内各部门、岗位的职责与权限,形成层级清晰的管理网络…...

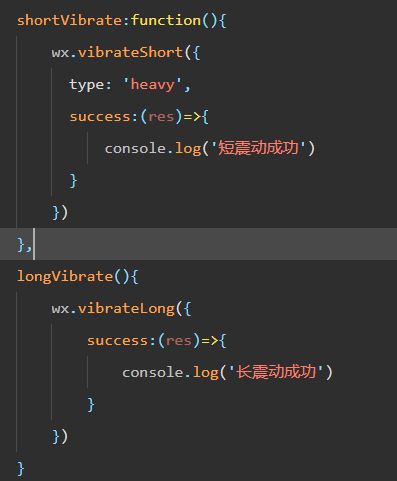

微信小程序 - 手机震动

一、界面 <button type"primary" bindtap"shortVibrate">短震动</button> <button type"primary" bindtap"longVibrate">长震动</button> 二、js逻辑代码 注:文档 https://developers.weixin.qq…...

【Go】3、Go语言进阶与依赖管理

前言 本系列文章参考自稀土掘金上的 【字节内部课】公开课,做自我学习总结整理。 Go语言并发编程 Go语言原生支持并发编程,它的核心机制是 Goroutine 协程、Channel 通道,并基于CSP(Communicating Sequential Processes࿰…...

鱼香ros docker配置镜像报错:https://registry-1.docker.io/v2/

使用鱼香ros一件安装docker时的https://registry-1.docker.io/v2/问题 一键安装指令 wget http://fishros.com/install -O fishros && . fishros出现问题:docker pull 失败 网络不同,需要使用镜像源 按照如下步骤操作 sudo vi /etc/docker/dae…...

2025季度云服务器排行榜

在全球云服务器市场,各厂商的排名和地位并非一成不变,而是由其独特的优势、战略布局和市场适应性共同决定的。以下是根据2025年市场趋势,对主要云服务器厂商在排行榜中占据重要位置的原因和优势进行深度分析: 一、全球“三巨头”…...

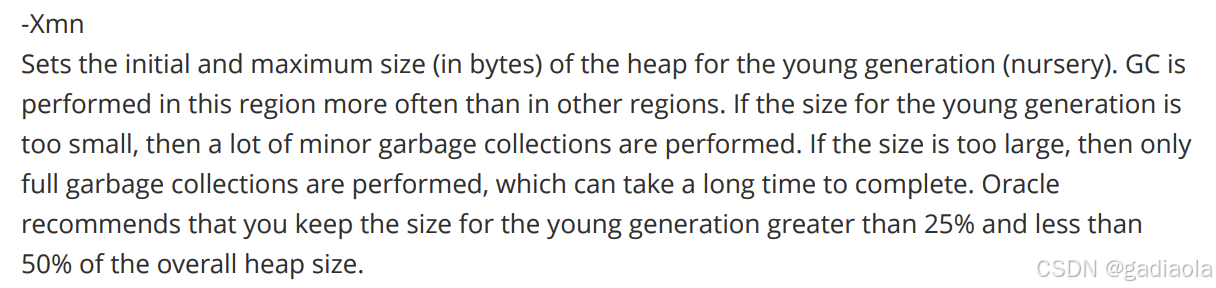

【JVM】Java虚拟机(二)——垃圾回收

目录 一、如何判断对象可以回收 (一)引用计数法 (二)可达性分析算法 二、垃圾回收算法 (一)标记清除 (二)标记整理 (三)复制 (四ÿ…...

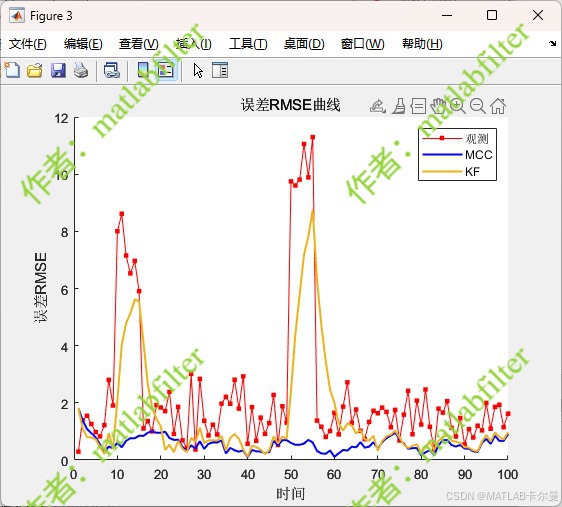

【MATLAB代码】基于最大相关熵准则(MCC)的三维鲁棒卡尔曼滤波算法(MCC-KF),附源代码|订阅专栏后可直接查看

文章所述的代码实现了基于最大相关熵准则(MCC)的三维鲁棒卡尔曼滤波算法(MCC-KF),针对传感器观测数据中存在的脉冲型异常噪声问题,通过非线性加权机制提升滤波器的抗干扰能力。代码通过对比传统KF与MCC-KF在含异常值场景下的表现,验证了后者在状态估计鲁棒性方面的显著优…...